Abstract

Time-series anomaly detection is essential for ensuring reliability and safety in intelligent manufacturing systems. However, real-world environments typically provide only normal operating data and exhibit significant periodicity, noise, class imbalance, and domain variability. This study proposes CL-OCC, a contrastive learning-based one-class framework that integrates seasonal-trend decomposition using loess (STL) for structure-preserving temporal augmentation, a cosine-regularized soft boundary for compact normal-region formation, and variance-preserving regularization to prevent latent collapse. A convolutional-recurrent encoder is first pretrained with an autoencoder objective and then optimized through a unified loss that balances contrastive invariance, the soft-boundary constraint, and variance dispersion. Experiments on semiconductor equipment data and three public benchmarks demonstrate that CL-OCC achieves competitive or superior performance relative to reconstruction-, prediction-, and contrastive-based baselines. CL-OCC also produces smoother anomaly trajectories, detects gradual drifts earlier, and is robust to noise, window-length variation, and extreme class imbalance. Ablation and interaction studies indicate that STL-based augmentation, boundary shaping, and variance regularization contribute complementary benefits to representation stability. Although the qualitative results reveal limited sensitivity to extremely short impulsive disturbances, the proposed framework offers a generalizable and stable solution for unsupervised industrial monitoring, with promising potential for extension to multi-resolution analysis and online prognostics and health management (PHM) applications.

1. Introduction

The rapid growth of cyber-physical production systems (CPPS) and the industrial internet of things (IIoT) has led to the widespread deployment of high-frequency multivariate sensors in semiconductor fabrication, rotating machinery, energy systems, and precision machining [,]. These sensing infrastructures continuously record complex temporal patterns that reflect equipment conditions, process variations, and emerging degradation. Identifying anomalies in such environments is essential for ensuring reliability and minimizing downtime. In practice, however, industrial anomaly detection remains highly challenging for three reasons: labeled faults are scarce, classes are severely imbalanced, and early-stage deviations are often so subtle that they resemble normal fluctuations [,,].

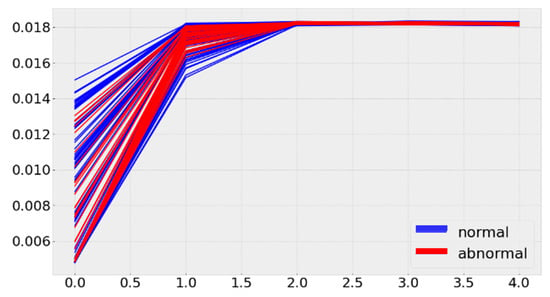

In real manufacturing systems, labeled fault data are rarely available because failures are sporadic and accurate annotation is costly or impractical []. Consequently, supervised approaches struggle to generalize beyond the failure modes observed during training. As illustrated in Figure 1, normal time-series signals often exhibit tightly aligned temporal patterns, whereas abnormal sequences deviate subtly and inconsistently, which makes unsupervised anomaly detection particularly challenging. Statistical process control (SPC) methods are widely employed; however, they frequently fail under dynamic operating conditions, sensor noise, or drifting baselines, all of which blur the distinction between normal and abnormal behavior []. These limitations have motivated growing interest in unsupervised and self-supervised time-series anomaly detection (TSAD), in which models learn the intrinsic temporal structure of unlabeled data. Despite recent advances, several problems in unsupervised industrial TSAD remain unresolved: (i) existing augmentations often distort periodic and seasonal temporal structures; (ii) one-class boundaries are prone to collapse when trained without negative samples; and (iii) many self-supervised learning (SSL)-based approaches provide invariance but lack explicit mechanisms for forming a compact normal region. These gaps call for a unified framework that preserves temporal structure, stabilizes boundary formation, and prevents latent collapse.

Figure 1.

Difficulty of Time Series Anomaly Detection.

Recent studies have demonstrated the potential of self-supervised representation learning for capturing expressive time-series embeddings. Contrastive learning methods such as the simple framework for contrastive learning of visual representations (SimCLR) [], momentum contrast (MoCo) v3 [], bootstrap your own latent (BYOL) [], and variance-invariance-covariance regularization (VICReg) [] maximize agreement between augmented views, which enables invariant and discriminative features to be learned without labels. In parallel, one-class classification (OCC) methods, including deep support vector data description (Deep SVDD) and its derivatives [,], learn a compact hypersphere that encloses normal data in latent space. Despite their effectiveness, applying contrastive learning and OCC directly to industrial TSAD raises three critical challenges: (i) the lack of temporal augmentations that preserve the periodic and seasonal structures inherent in many industrial signals, (ii) representation collapse when no negative samples exist, and (iii) limited robustness under sensor noise, drift, and configuration changes [,,].

Recent advances in intelligent fault diagnosis underscore the need for domain-aware feature learning. Combining wavelet coherent analysis with deep networks for centrifugal pump diagnosis [] and hybrid deep-learning frameworks for milling-machine fault detection [] have been shown to significantly improve detection reliability by incorporating signal-specific structures. These findings highlight the importance of designing TSAD models that respect industrial signal characteristics, particularly periodic cycles, trend variations, and localized perturbations, rather than relying on generic augmentations such as jittering or scaling.

To address these issues, this study proposes CL-OCC, a contrastive learning-based one-class classification framework tailored for unsupervised TSAD in industrial settings. The central idea is to use seasonal-trend decomposition using loess (STL) as a temporal augmentation technique that generates physically consistent positive pairs: intrinsic periodicity and trend behavior are preserved while controlled stochastic variations are introduced. Contrastive learning therefore operates on semantically meaningful transformations rather than on distortive generic augmentations. Furthermore, a contrastive soft-boundary objective with variance regularization jointly enforces invariance, compactness, and dispersion in latent space, which mitigates collapse and improves boundary stability.

The main contributions of this study are summarized as follows:

- A unified CL-OCC framework that combines contrastive learning, soft-boundary one-class classification, and variance regularization for robust unsupervised anomaly detection.

- An STL-based temporal augmentation module that preserves domain-specific periodic and seasonal structures while introducing stochastic variability suitable for contrastive learning.

- A stable optimization strategy that mitigates hypersphere collapse and enhances latent-space regularity through variance constraints and center-updating mechanisms.

- Extensive evaluations on semiconductor data and public benchmarks, demonstrating competitive or superior performance, robustness, and cross-domain generalization.

- The novelty of the proposed CL-OCC lies in its STL-driven domain-aware augmentation, cosine-based soft boundary formulation tailored for one-class SSL, and variance-preserving latent regularization, which jointly address limitations not resolved by existing TSAD frameworks.

The remainder of this paper is organized as follows. Section 2 reviews related work on unsupervised anomaly detection, OCC, and contrastive learning. Section 3 introduces the proposed CL-OCC framework and its optimization design. Section 4 presents the experimental setup, datasets, evaluation metrics, and results. Finally, Section 5 concludes the paper and outlines future research directions.

2. Related Works

Research on anomaly detection in industrial systems encompasses statistical modeling, deep learning, and recent advances in self-supervised representation learning. This section reviews the most relevant approaches and outlines the remaining gaps that motivate the proposed CL-OCC framework.

2.1. Time-Series Anomaly Detection in Industrial Systems

Classical time-series anomaly detection methods, such as SPC, autoregressive integrated moving average (ARIMA)-based forecasting, and principal component analysis (PCA)-based monitoring, have been widely used in manufacturing environments [,,]. These approaches are computationally efficient; however, they assume linearity, stationarity, and fixed operating conditions. Consequently, they often perform poorly under dynamic conditions involving drift, multimodal behavior, or sensor noise.

Deep learning has substantially advanced TSAD by modeling nonlinear and long-range temporal dependencies. Long short-term memory (LSTM)-autoencoder methods [,] detect anomalies by reconstructing expected sequences, while forecasting models such as DeepAR, a probabilistic forecasting method based on autoregressive recurrent neural networks [], and temporal convolutional network (TCN)-based predictors [] identify deviations from predicted trajectories. However, reconstruction and prediction losses are not directly aligned with anomaly relevance. These approaches also tend to overfit normal patterns, which diminishes their capacity to detect subtle degradations in complex industrial processes.

Recent studies in intelligent fault diagnosis further emphasize the importance of domain-specific temporal structures. Integrating wavelet coherent analysis with deep architectures for centrifugal pump monitoring, and hybrid models for milling-machine fault detection, has been shown to markedly enhance detection robustness by incorporating periodic, seasonal, and harmonic characteristics [,]. These findings indicate the need for augmentation and representation strategies that preserve the physical characteristics of industrial signals. In addition, recent work has demonstrated the effectiveness of combining acoustic emission sensing with transfer learning for milling-machine fault diagnosis, achieving strong performance through time-frequency representations and pretrained deep models []. Such approaches complement the present study: they address supervised diagnosis of transient mechanical faults, whereas CL-OCC focuses on unsupervised anomaly detection in industrial sensor time series.

2.2. Self-Supervised Representation Learning and One-Class Methods

Self-supervised learning (SSL) has emerged as a powerful paradigm for extracting informative embeddings from unlabeled data. Contrastive learning frameworks such as SimCLR [], MoCo v3 [], BYOL [], and VICReg [] maximize agreement between augmented views and have shown strong performance across modalities. Recent extensions to time-series—such as time-series representation learning via temporal and contextual contrasting (TS-TCC) [], towards universal representation of time-series (TS2Vec) [], and contrastive learning of disentangled seasonal-trend representations for time-series forecasting (CoST) []—demonstrate the potential of SSL for temporal modeling by learning view-invariant and semantically consistent representations.

In parallel, OCC methods aim to enclose normal data within a compact latent region. Deep SVDD and related deep OCC formulations [,] have been widely applied for anomaly detection due to their simplicity and efficiency. However, OCC models can suffer from feature collapse, limited robustness under perturbations, and difficulty in distinguishing rare normal patterns from true anomalies when trained without diverse augmentations.

While contrastive learning provides invariance and representation diversity, it lacks an explicit mechanism for forming a boundary around normal data. Conversely, OCC provides boundary modeling but lacks strong representation learning capabilities. Combining SSL-based invariance with OCC-based compactness has therefore emerged as a promising direction for unsupervised TSAD.

Despite these advances, most existing time-series augmentations (jittering, scaling, time-warping) fail to preserve periodic and seasonal structures inherent in many industrial signals. This limitation motivates the development of domain-aware augmentations, such as STL, which maintain physically meaningful characteristics while introducing controlled variability.

2.3. Summary and Research Gap

In summary, existing time-series anomaly detection approaches exhibit several key limitations when applied to real industrial environments. Classical statistical and reconstruction-based models often struggle under nonlinear, drifting, and noisy operating conditions, causing degraded detection performance. Deep one-class classification methods provide compact latent boundaries but face difficulties in learning expressive representations and are susceptible to latent-space collapse when variability in normal data is limited. Meanwhile, contrastive learning techniques require augmentations that preserve meaningful temporal structure; however, commonly used jittering- or warping-based transformations fail to maintain the periodic and seasonal characteristics inherent in many manufacturing signals. These challenges highlight the need for anomaly detection frameworks that can simultaneously achieve representation invariance, compactness of normal patterns, and robust feature dispersion, while respecting the physical properties of industrial time-series data.

To address these shortcomings, this study introduces the STL-driven CL-OCC framework, which integrates periodicity-preserving temporal augmentation with a stability-enhanced contrastive one-class objective. This unified approach is designed to produce discriminative and robust embeddings suitable for unsupervised anomaly detection in complex industrial systems.

3. CL-OCC Framework with Temporal Augmentation

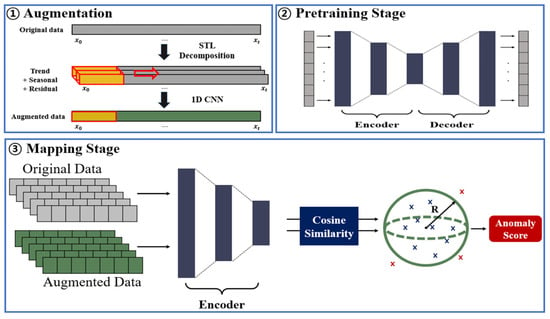

This section presents the proposed CL-OCC framework, which integrates domain-aware STL-based augmentation, autoencoder pretraining, and a contrastive soft-boundary learning strategy. The pipeline follows the process flow illustrated in Figure 2 and consists of three stages: augmentation, pretraining, and mapping with one-class learning.

Figure 2.

Overview of contrastive learning-based one-class classification.

3.1. Problem Definition

Let an unlabeled multivariate time-series dataset be denoted as

$$\mathcal{D} = \{\mathbf{X}_i\}_{i=1}^{N}, \qquad \mathbf{X}_i \in \mathbb{R}^{L \times d}, \tag{1}$$

where $L$ is the sequence length, $d$ the feature dimension, and $N$ the number of samples. In typical industrial monitoring scenarios, the vast majority of observations correspond to normal operating conditions, while abnormal events are rare, heterogeneous, and usually unlabeled. This study considers the unsupervised setting, in which only unlabeled sequences are available during training. The task is to detect anomalous sequences (or segments) at test time.

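The goal of CL-OCC is to learn an encoder $f_\theta: \mathbb{R}^{L \times d} \rightarrow \mathbb{R}^{m}$ that maps each input sequence $\mathbf{X}_i$ to a latent representation $\mathbf{z}_i = f_\theta(\mathbf{X}_i)$, such that normal samples form a compact region in the latent space and potential anomalies are located outside this region.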

This formulation aligns with classical one-class classification theory [,,], yet our framework strengthens representation learning via contrastive invariance and temporal augmentation. Importantly, the model must achieve this without explicit fault labels, relying instead on self-supervised signals derived from temporal augmentations and one-class regularization.

3.2. Augmentation

Time-series augmentation is a critical factor for successful contrastive representation learning. However, standard augmentations—jittering, scaling, permutation, cropping—tend to violate the underlying physics of industrial processes [,,], where many variables exhibit seasonal periodicity, slow system drift, and residual fluctuations.

3.2.1. STL Decomposition

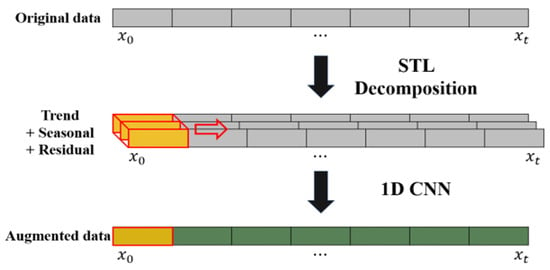

To generate semantically valid augmented sequences, CL-OCC applies STL [] to each univariate component $x_t$ of the input, as shown in Figure 3:

$$x_t = T_t + S_t + R_t, \tag{2}$$

where $T_t$, $S_t$, and $R_t$ denote the trend, seasonal, and residual components, respectively. Unlike Fourier, wavelet, or variational mode decomposition (VMD) [,], STL is non-parametric, interpretable, and robust to local fluctuations, making it particularly suitable for industrial signals that deviate from strict stationarity.
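As a rough illustration of the additive split in the decomposition above, the sketch below uses a classical moving-average decomposition in NumPy as a stand-in for STL. It is not loess-based and lacks STL's robustness to outliers; the function name `classical_decompose` and its `period` argument are hypothetical. Where statsmodels is available, `statsmodels.tsa.seasonal.STL(x, period=...).fit()` returns `trend`, `seasonal`, and `resid` attributes that play the same roles.

```python
import numpy as np


def classical_decompose(x, period):
    """Split a 1-D signal into trend + seasonal + residual (additive).

    Hypothetical stand-in for STL: the trend is a moving average over one
    period and the seasonal part is the mean detrended value at each phase.
    """
    x = np.asarray(x, dtype=float)
    # Trend: moving average of width `period`, with edge padding so the
    # output has the same length as the input.
    pad_left = period // 2
    pad_right = period - 1 - pad_left
    padded = np.pad(x, (pad_left, pad_right), mode="edge")
    trend = np.convolve(padded, np.ones(period) / period, mode="valid")

    # Seasonal: average detrended value at each phase of the cycle,
    # centered so that one full cycle sums to zero.
    detrended = x - trend
    phase_means = np.array([detrended[p::period].mean() for p in range(period)])
    phase_means -= phase_means.mean()
    seasonal = np.resize(phase_means, x.shape[0])  # repeat the cycle to full length

    residual = x - trend - seasonal
    return trend, seasonal, residual


# Usage: a drifting signal with a 50-step cycle.
t = np.arange(600)
x = 0.01 * t + np.sin(2 * np.pi * t / 50)
trend, seasonal, residual = classical_decompose(x, period=50)
```

By construction the three parts sum back to the input exactly, and the seasonal part repeats with the chosen period.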

Figure 3.

Temporal augmentation using STL.

3.2.2. Residual Perturbation and Reconstruction

To generate an augmented sequence, the trend and seasonal components are preserved while controlled perturbations are applied to the residual component. The augmented signal is reconstructed as Equation (3):

$$\tilde{x}_t = T_t + S_t + \left(R_t + \epsilon_t\right), \tag{3}$$

where $\epsilon_t \sim \mathcal{N}(0, \sigma_r^2)$. This formulation retains the dominant temporal structure while introducing subtle variations that realistically reflect fluctuations often observed in industrial sensor environments. By perturbing only the residual term, the augmented view preserves the physical periodicity and trend characteristics inherent in many manufacturing processes, such as etching cycles or mechanical rotation patterns. The perturbation variance $\sigma_r^2$ is selected from a small range to avoid excessive distortion; values between 0.01 and 0.10 were found to maintain stable performance across datasets.
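A minimal sketch of the residual-only perturbation in Equation (3), assuming additive zero-mean Gaussian noise and precomputed components. The function name `perturb_residual` and the default variance of 0.05 (inside the 0.01 to 0.10 range above) are illustrative choices, not the authors' implementation.

```python
import numpy as np


def perturb_residual(trend, seasonal, residual, noise_var=0.05, rng=None):
    """Rebuild an augmented view T + S + (R + eps), with eps ~ N(0, noise_var).

    Trend and seasonal components pass through unchanged; only the
    residual receives Gaussian noise.
    """
    rng = np.random.default_rng() if rng is None else rng
    residual = np.asarray(residual, dtype=float)
    # Generator.normal takes a standard deviation, so pass sqrt(variance).
    eps = rng.normal(0.0, np.sqrt(noise_var), size=residual.shape)
    trend = np.asarray(trend, dtype=float)
    seasonal = np.asarray(seasonal, dtype=float)
    return trend + seasonal + residual + eps


# Usage: two stochastic views of the same decomposed signal form a positive pair.
rng = np.random.default_rng(0)
t = np.arange(2400)
trend, seasonal = 0.001 * t, np.sin(2 * np.pi * t / 60)
residual = 0.1 * rng.standard_normal(t.size)
view_a = perturb_residual(trend, seasonal, residual, noise_var=0.05, rng=rng)
view_b = perturb_residual(trend, seasonal, residual, noise_var=0.05, rng=rng)
```

Because the noise is confined to the residual, the two views share the same trend and cycle and differ only in small fluctuations.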

Similarly, the seasonal window size used in STL decomposition is chosen between 30 and 120 steps, corresponding to typical cycle lengths observed in semiconductor, bearing, and electromechanical systems. This selection is based on unsupervised inspection of dominant periodicities in the raw signals and requires neither labeled information nor domain-specific expertise. This design keeps the augmented sequences consistent with the temporal properties of real industrial processes while providing sufficient variability to support contrastive representation learning.
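One way to carry out an unsupervised inspection of dominant periodicities is to pick the lag with the highest sample autocorrelation inside the 30 to 120 step search range. The sketch below assumes this autocorrelation criterion; the source does not specify the exact procedure, and `estimate_period` is a hypothetical helper.

```python
import numpy as np


def estimate_period(x, min_period=30, max_period=120):
    """Return the lag in [min_period, max_period] with the largest autocorrelation.

    Uses the biased sample autocorrelation (normalized by the lag-0 energy),
    which slightly favors shorter lags and so prefers the fundamental period
    over its integer multiples.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    energy = np.dot(x, x)
    lags = np.arange(min_period, max_period + 1)
    acf = np.array([np.dot(x[:-lag], x[lag:]) / energy for lag in lags])
    return int(lags[np.argmax(acf)])


# Usage: a 2400-step window with a 50-step cycle plus mild noise.
rng = np.random.default_rng(1)
t = np.arange(2400)
signal = np.sin(2 * np.pi * t / 50) + 0.1 * rng.standard_normal(t.size)
period = estimate_period(signal)
```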

3.3. Pretraining Stage

To obtain stable initial representations before contrastive one-class learning, the encoder is pretrained within a lightweight convolutional-recurrent autoencoder. Unsupervised pretraining has been widely used in industrial time-series modeling to improve training stability and capture local temporal dynamics [,]. This stage encourages the encoder to learn the fundamental structures of normal operation, reducing the likelihood of collapse during contrastive optimization.

The encoder of the autoencoder consists of three one-dimensional (1D) convolutional neural network (CNN) layers that extract short-term temporal patterns, followed by a bidirectional gated recurrent unit (GRU) layer that efficiently models long-range dependencies [,]. The decoder mirrors the encoder design and reconstructs the input sequence from the latent representation. After pretraining, only the encoder is retained for subsequent CL-OCC training.

This initialization offers several advantages. First, the encoder begins with a coherent representation space that reflects both local dynamics and global temporal trends. Second, the model converges more reliably during contrastive fine-tuning, which is particularly important in settings where only normal data are available. Finally, the hybrid CNN-GRU structure offers a good balance between expressive capacity and computational efficiency, enabling real-time deployment in industrial monitoring systems [].

The hybrid CNN-GRU encoder is adopted to balance local and long-range temporal modeling. The CNN layers efficiently extract short-term and high-frequency temporal patterns, while the GRU layer captures long-horizon contextual dependencies that frequently arise in industrial sensor data. Preliminary experiments with CNN-only and MLP-based encoders resulted in noticeably lower anomaly detection performance and reduced robustness under drift and noise, confirming the necessity of combining convolutional and recurrent components. Furthermore, the hybrid design contains only 1.47 M parameters, making it substantially more lightweight than transformer-based alternatives while maintaining strong representation capacity.

3.4. Mapping Stage

After obtaining the original and STL-augmented sequences, the encoder produces the latent representations

$$\mathbf{z} = f_\theta(\mathbf{X}), \qquad \tilde{\mathbf{z}} = f_\theta(\tilde{\mathbf{X}}), \tag{4}$$

which are optimized using a unified objective comprising an invariance term, a soft one-class boundary, and variance regularization. This combination encourages the encoder to learn representations that are invariant to realistic perturbations, compact for normal data, and sufficiently diverse to avoid collapse.

3.4.1. Invariance Term

The invariance term aligns the two latent representations obtained from the original and augmented views:

$$\mathcal{L}_{\mathrm{inv}} = \frac{1}{B}\sum_{i=1}^{B}\left(1 - \frac{\mathbf{z}_i^{\top}\tilde{\mathbf{z}}_i}{\lVert\mathbf{z}_i\rVert\,\lVert\tilde{\mathbf{z}}_i\rVert}\right), \tag{5}$$

where $B$ is the batch size.

Cosine similarity is widely adopted in contrastive learning frameworks [,,,], as it captures the angular relationship between embeddings in high-dimensional space while remaining insensitive to magnitude. This formulation encourages the encoder to extract features that remain stable under STL-based perturbations, thereby improving representation robustness.
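The invariance term can be written in a few lines of NumPy. This sketch assumes a batch of paired embeddings of shape (B, m) and averages one minus the cosine similarity, so identical directions give zero loss. In training, the same expression would be written in an autodiff framework such as PyTorch; `invariance_loss` is a hypothetical name.

```python
import numpy as np


def invariance_loss(z, z_aug, eps=1e-8):
    """Mean of 1 - cos(z_i, z_aug_i) over a batch of shape (B, m)."""
    z = np.asarray(z, dtype=float)
    z_aug = np.asarray(z_aug, dtype=float)
    dot = np.sum(z * z_aug, axis=1)
    norms = np.linalg.norm(z, axis=1) * np.linalg.norm(z_aug, axis=1)
    cos = dot / (norms + eps)  # eps guards against zero-norm embeddings
    return float(np.mean(1.0 - cos))


# Usage: rescaling an embedding does not change the loss (angle only).
rng = np.random.default_rng(0)
z = rng.standard_normal((8, 16))
loss_scaled = invariance_loss(z, 3.0 * z)   # close to 0
loss_flipped = invariance_loss(z, -z)       # close to 2
```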

3.4.2. Soft Boundary Constraint

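To model the distribution of normal data, CL-OCC forms a soft hypersphere in latent space centered at

$$\mathbf{c} = \frac{1}{N}\sum_{i=1}^{N}\mathbf{z}_i. \tag{6}$$

The deviation of each representation from this center is measured using a cosine-based dissimilarity function:

$$d(\mathbf{z}_i, \mathbf{c}) = 1 - \frac{\mathbf{z}_i^{\top}\mathbf{c}}{\lVert\mathbf{z}_i\rVert\,\lVert\mathbf{c}\rVert}. \tag{7}$$

The soft boundary loss is defined as

$$\mathcal{L}_{\mathrm{sb}} = \frac{1}{B}\sum_{i=1}^{B}\max\left(0,\; d(\mathbf{z}_i, \mathbf{c}) - R\right), \tag{8}$$

where $R$ denotes the hypersphere radius. This formulation draws on the one-class neural classification principles established in Deep SVDD and its extensions [,], but replaces Euclidean distance with cosine dissimilarity to maintain consistency with the contrastive alignment in Equation (5).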
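A NumPy sketch of the cosine deviation and a hinge-style soft boundary, assuming the loss averages $\max(0, d(\mathbf{z}_i, \mathbf{c}) - R)$ over the batch with a fixed radius $R$. The source does not state whether $R$ is learned or fixed, so the fixed radius here is an assumption, as are the function names.

```python
import numpy as np


def cosine_deviation(z, c, eps=1e-8):
    """1 - cos(z_i, c) for each row of z; values lie in [0, 2]."""
    z = np.atleast_2d(np.asarray(z, dtype=float))
    c = np.asarray(c, dtype=float)
    cos = (z @ c) / (np.linalg.norm(z, axis=1) * np.linalg.norm(c) + eps)
    return 1.0 - cos


def soft_boundary_loss(z, c, radius):
    """Penalize only embeddings whose deviation exceeds the radius (hinge)."""
    return float(np.mean(np.maximum(0.0, cosine_deviation(z, c) - radius)))


# Usage: three embeddings at 0, 90, and 180 degrees from the center.
c = np.array([1.0, 0.0])
z = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]])
dev = cosine_deviation(z, c)                 # approx. [0, 1, 2]
loss = soft_boundary_loss(z, c, radius=0.5)  # mean of [0, 0.5, 1.5]
```

Embeddings already inside the radius contribute nothing, so the loss only pulls outlying normal embeddings toward the center.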

Cosine-based deviation is used to ensure metric consistency with the cosine similarity employed in the invariance objective. This unified angular formulation prevents conflicting optimization dynamics between contrastive learning and one-class boundary enforcement. Furthermore, unlike Euclidean distance, which is highly sensitive to magnitude fluctuations, cosine dissimilarity primarily reflects orientation differences in latent space, making it more compatible with the semantic invariance induced by STL-based temporal augmentation.

To prevent the latent center from being biased by potential anomalous samples within the unlabeled training set, the center is updated using an exponential moving average (EMA) rather than a direct batchwise mean. This smooth updating rule ensures that rare abnormal segments exert minimal influence on the estimated normal region. Moreover, the combination of STL-based invariance and variance regularization discourages anomalous embeddings from collapsing toward the center, further reducing the risk of latent center contamination during training.
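The EMA update can be sketched as $\mathbf{c} \leftarrow \alpha\,\mathbf{c} + (1 - \alpha)\,\bar{\mathbf{z}}_{\text{batch}}$. The momentum $\alpha$ is not reported in the source, so the value 0.99 below is a hypothetical default, as is the function name.

```python
import numpy as np


def ema_center_update(center, batch_z, momentum=0.99):
    """Move the center a small step toward the current batch mean."""
    batch_mean = np.asarray(batch_z, dtype=float).mean(axis=0)
    return momentum * np.asarray(center, dtype=float) + (1.0 - momentum) * batch_mean


# Usage: one batch containing a large outlier shifts the center only slightly.
center = np.zeros(2)
batch = np.array([[0.1, 0.0], [0.0, 0.1], [10.0, 10.0]])  # last row: outlier
new_center = ema_center_update(center, batch, momentum=0.99)
```

With momentum 0.99, any single batch moves the center by only 1% of the gap to its batch mean, which is what limits the influence of rare anomalous windows.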

3.4.3. Variance Regularization

To prevent the latent representations from collapsing into a single point, a variance-preserving constraint is introduced for each latent dimension. Let $\mathrm{Var}(\mathbf{z}^{(j)})$ denote the batch variance of the $j$-th latent component, where $\mathbf{z}^{(j)}$ represents the values of dimension $j$ across the batch. Following variance-regularized self-supervised learning frameworks such as VICReg [], the standard deviation of each latent dimension is computed as Equation (9):

$$\sigma_j = \sqrt{\mathrm{Var}\left(\mathbf{z}^{(j)}\right) + \eta}, \tag{9}$$

where $\eta$ is a small constant ensuring numerical stability. Dimensions whose standard deviation falls below a target level $\gamma$ are penalized through the variance regularization term

$$\mathcal{L}_{\mathrm{var}} = \frac{1}{m}\sum_{j=1}^{m}\max\left(0,\; \gamma - \sigma_j\right), \tag{10}$$

where $m$ is the dimensionality of the latent space.

To maintain consistent behavior across embedding spaces of different dimensionalities, the target variance level is scaled according to the latent dimensionality. This scaling prevents over-regularization in low-dimensional spaces and under-regularization in higher-dimensional spaces, so that the constraint imposes comparable dispersion pressure regardless of the chosen latent dimensionality. By enforcing a minimum spread in each latent dimension, variance regularization preserves informative variability in the learned representations and complements both the invariance objective and the soft one-class boundary, ultimately stabilizing the latent geometry and mitigating representation collapse.
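A NumPy sketch of the variance term, following the VICReg-style hinge on per-dimension standard deviation. The target `gamma=1.0` and `eta=1e-4` are assumptions (the text scales the target with latent dimensionality but does not give the rule), and `np.var` here uses the population estimator (`ddof=0`).

```python
import numpy as np


def variance_loss(z, gamma=1.0, eta=1e-4):
    """Mean over dimensions of max(0, gamma - std_j) for a batch z of shape (B, m)."""
    z = np.asarray(z, dtype=float)
    std = np.sqrt(z.var(axis=0) + eta)  # per-dimension batch std, stabilized by eta
    return float(np.mean(np.maximum(0.0, gamma - std)))


# Usage: a collapsed batch is penalized, a well-spread batch is not.
collapsed = np.ones((16, 8))                           # every embedding identical
spread = np.tile(np.array([[-2.0], [2.0]]), (8, 8))    # std 2 in every dimension
```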

3.4.4. Total Objective and Anomaly Scoring

The complete CL-OCC objective is given by

$$\mathcal{L} = \mathcal{L}_{\mathrm{inv}} + \lambda_1\,\mathcal{L}_{\mathrm{sb}} + \lambda_2\,\mathcal{L}_{\mathrm{var}}, \tag{11}$$

where $\lambda_1$ and $\lambda_2$ control the contribution of the one-class boundary and variance regularization, respectively. The hyperparameters $\lambda_1$ and $\lambda_2$ were selected through a grid search performed using only normal validation sequences, following standard practice in one-class optimization. The model performed consistently across a wide range of values, with $\lambda_1$ between 0.1 and 0.5 and $\lambda_2$ between 0.01 and 0.1. The chosen configuration ($\lambda_1 = 0.3$, $\lambda_2 = 0.05$) provided a balanced trade-off between invariance, boundary compactness, and variance regularization without over-amplifying any single component. This formulation unifies contrastive alignment, hypersphere compactness, and collapse prevention into a single optimization framework.
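Putting the three terms together, the sketch below evaluates the weighted objective for one batch with the reported weights $\lambda_1 = 0.3$ and $\lambda_2 = 0.05$. The hinge form of the soft-boundary term, the radius of 0.1, and the variance target of 1.0 are assumptions for illustration; gradients would come from an autodiff framework rather than NumPy.

```python
import numpy as np


def _cos_dist(a, b, eps=1e-8):
    """Row-wise 1 - cosine similarity; b may broadcast against a."""
    num = np.sum(a * b, axis=-1)
    den = np.linalg.norm(a, axis=-1) * np.linalg.norm(b, axis=-1) + eps
    return 1.0 - num / den


def cl_occ_objective(z, z_aug, center, lam1=0.3, lam2=0.05,
                     radius=0.1, gamma=1.0, eta=1e-4):
    """Return total loss and its (invariance, soft-boundary, variance) parts."""
    z = np.asarray(z, dtype=float)
    z_aug = np.asarray(z_aug, dtype=float)
    center = np.asarray(center, dtype=float)[None, :]  # shape (1, m)
    l_inv = float(np.mean(_cos_dist(z, z_aug)))
    l_sb = float(np.mean(np.maximum(0.0, _cos_dist(z, center) - radius)))
    std = np.sqrt(z.var(axis=0) + eta)
    l_var = float(np.mean(np.maximum(0.0, gamma - std)))
    total = l_inv + lam1 * l_sb + lam2 * l_var
    return total, (l_inv, l_sb, l_var)


# Usage on a random batch, with the augmented view as a slightly noisy copy.
rng = np.random.default_rng(0)
z = rng.standard_normal((32, 16))
z_aug = z + 0.05 * rng.standard_normal(z.shape)
total, (l_inv, l_sb, l_var) = cl_occ_objective(z, z_aug, center=z.mean(axis=0))
```

Returning the three parts separately makes it easy to log each term and check that none dominates, which is the balance the chosen weights aim for.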

During inference, the anomaly score is quantified through the deviation from the latent center:

$$s(\mathbf{X}) = d\left(f_\theta(\mathbf{X}), \mathbf{c}\right), \tag{12}$$

where larger deviation values correspond to higher abnormality. This scoring mechanism aligns with one-class classification principles while leveraging contrastive representations learned through STL-based augmentation.

For inference, the anomaly score is computed directly from the cosine-distance-based deviation $d(\mathbf{z}, \mathbf{c})$, as defined in Equation (12). Since cosine distance is bounded within $[0, 2]$, the resulting score is naturally normalized and comparable across batches. During evaluation, a threshold $\tau$ is selected using only normal validation sequences by choosing the smallest value that yields a target false-positive rate. This procedure ensures consistent thresholding across datasets and prevents leakage of abnormal information. Stating the score and its normalization explicitly improves reproducibility and removes ambiguity in how latent deviations are converted into continuous anomaly scores.
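A sketch of the scoring and thresholding steps, assuming scores are the cosine deviation to the center and the threshold is the smallest normal-validation score whose exceedance rate does not exceed the target false-positive rate. Restricting candidate thresholds to observed validation scores, and the names `anomaly_scores` and `select_threshold`, are illustrative assumptions.

```python
import numpy as np


def anomaly_scores(z, center, eps=1e-8):
    """Cosine deviation of each embedding (rows of z) from the center."""
    z = np.atleast_2d(np.asarray(z, dtype=float))
    c = np.asarray(center, dtype=float)
    return 1.0 - (z @ c) / (np.linalg.norm(z, axis=1) * np.linalg.norm(c) + eps)


def select_threshold(normal_scores, target_fpr=0.01):
    """Smallest observed normal score tau with mean(normal_scores > tau) <= target_fpr."""
    s = np.sort(np.asarray(normal_scores, dtype=float))
    n = s.size
    # At most k normal windows may lie strictly above the threshold.
    k = min(int(np.floor(target_fpr * n + 1e-9)), n - 1)
    return float(s[n - 1 - k])


# Usage: 100 validation scores 0.00, 0.01, ..., 0.99 and a 5% target FPR.
val_scores = np.linspace(0.0, 0.99, 100)
tau = select_threshold(val_scores, target_fpr=0.05)  # approx. 0.94
flags = anomaly_scores([[1.0, 0.0], [-1.0, 0.0]], [1.0, 0.0]) > tau
```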

4. Experiments

This section presents a comprehensive empirical evaluation of the proposed CL-OCC framework using industrial and public benchmark datasets. The experiments assess anomaly detection performance, representation quality, robustness to perturbations, and generalization capability across domains. Comparative results, ablation studies, and additional analyses are conducted to validate the effectiveness of each component of the proposed method.

4.1. Experimental Setup

The semiconductor dataset consists of chamber pressure, flow, vibration, and thermal variables collected from plasma etching tools. As actual abnormal events are extremely rare, synthetic anomalies are generated by injecting controlled faults into normal sequences, including gradual drifts, cycle distortions, amplitude perturbations, and frequency irregularities, in accordance with common practices in industrial degradation modeling. The three public datasets include the NASA bearing dataset [], the server machine dataset (SMD) [], and the Numenta anomaly benchmark (NAB) [], each providing diverse temporal dynamics useful for evaluating generalizability. To avoid leakage of fault-related assumptions into the training stage, all synthetic anomalies (drift, amplitude distortion, cycle deformation, and frequency irregularities) were injected only into the test set, while the training and validation splits contained exclusively normal sequences. This ensures that the model learns normal operational structure without being influenced by artificially generated fault patterns.

All models are trained using only normal sequences. Raw signals are segmented into fixed windows of 2400 time steps, which establishes a consistent representation space across datasets with different sampling frequencies, maintains a unified latent dimensionality, and enables cross-dataset comparison under consistent representation scales. Empirically, a 2400-time-step segment corresponds to approximately one to two full operational cycles across the semiconductor, bearing, and server datasets, providing sufficient temporal context for capturing seasonal-trend behavior. Shorter windows were found to reduce sensitivity to gradual drift patterns, as shown in the robustness analysis, whereas significantly longer windows yielded no additional benefit while increasing computational overhead.
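Segmentation into 2400-step windows can be sketched as below. The source does not state whether windows overlap, so the `stride` argument (defaulting to non-overlapping windows) is an assumption, and trailing samples that do not fill a whole window are dropped.

```python
import numpy as np


def segment_windows(x, window=2400, stride=None):
    """Cut a (time, channels) array into windows of shape (n, window, channels)."""
    x = np.asarray(x)
    stride = window if stride is None else stride
    if x.shape[0] < window:
        return np.empty((0, window) + x.shape[1:], dtype=x.dtype)
    n = (x.shape[0] - window) // stride + 1
    return np.stack([x[i * stride:i * stride + window] for i in range(n)])


# Usage: 10,000 samples of a 3-channel signal.
signal = np.random.default_rng(0).standard_normal((10_000, 3))
windows = segment_windows(signal)                   # non-overlapping
half_overlap = segment_windows(signal, stride=1200)
```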

The validation split contains only normal samples to prevent information leakage during threshold selection and to remain consistent with the one-class learning assumption. STL augmentation parameters are fixed across all experiments: seasonal periods between 30 and 120 steps, a trend smoothing level of 0.15, and Gaussian residual perturbations with variance between 0.01 and 0.10 (Section 3.2.2). These settings ensure consistent decomposition and augmentation behavior across datasets. Early stopping is determined by the stabilization of the reconstruction and contrastive losses and does not rely on AUC, since anomaly labels are not available during training.

The encoder architecture used in all experiments consists of three 1D convolutional layers (kernel sizes 7-5-3, stride 1, channels 32-64-128) followed by a single bidirectional GRU layer with 128 hidden units. Batch normalization is applied after each convolutional block. All models are trained for 100 epochs using the Adam optimizer with a learning rate of 1 × 10⁻³, a batch size of 32, and cosine annealing learning rate scheduling. To ensure reproducibility, all random seeds (NumPy, PyTorch, and Python) are fixed to 2025. The training environment consists of NumPy 1.26, PyTorch 2.2, and Python 3.12 running on an NVIDIA RTX 4090 GPU.
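The seed-fixing step might look like the helper below. It seeds Python's `random` module and NumPy's global generator, and seeds PyTorch only if it is installed. The cuDNN flags are standard PyTorch switches for more deterministic GPU kernels, but the source does not say whether they were set, so treat them as an assumption.

```python
import random

import numpy as np


def fix_seeds(seed=2025):
    """Seed Python, NumPy, and (if available) PyTorch for repeatable runs."""
    random.seed(seed)
    np.random.seed(seed)
    try:
        import torch
    except ImportError:  # PyTorch not installed: nothing more to seed
        return
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)  # safe to call even without a GPU
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False


# Usage: the same seed reproduces the same draws.
fix_seeds(2025)
first = (random.random(), np.random.rand(3))
fix_seeds(2025)
second = (random.random(), np.random.rand(3))
```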

Evaluation metrics include the area under the receiver operating characteristic curve (AUC) and the F1-score. To assess responsiveness, an early detection index quantifies the time lag between anomaly onset and detection. Because of the inherent variability of time-series anomaly detection, all models are trained and evaluated over five independent runs, and results are reported as mean ± standard deviation. For statistical analysis, the Friedman test and Wilcoxon signed-rank test are applied following established guidelines for multi-model comparison [].
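For reference, AUC equals the probability that a randomly chosen anomalous window scores higher than a randomly chosen normal one (ties counted as one half), which the first function computes directly. The source does not define the early detection index precisely; the second function is one plausible, hypothetical reading: the number of steps from the labeled onset to the first score above the threshold.

```python
import numpy as np


def roc_auc(scores, labels):
    """Rank-based AUC: P(score_anomaly > score_normal) + 0.5 * P(tie)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    greater = np.sum(pos[:, None] > neg[None, :])
    ties = np.sum(pos[:, None] == neg[None, :])
    return float((greater + 0.5 * ties) / (pos.size * neg.size))


def detection_lag(scores, threshold, onset):
    """Steps from `onset` to the first score above `threshold`, or None if missed."""
    hits = np.flatnonzero(np.asarray(scores, dtype=float)[onset:] > threshold)
    return int(hits[0]) if hits.size else None


# Usage on toy values.
auc = roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])  # 0.75
lag = detection_lag([0.0, 0.0, 0.0, 0.2, 0.9, 0.95], threshold=0.5, onset=2)  # 2
```

The pairwise comparison uses memory proportional to the product of the two class sizes; for large evaluation sets, `sklearn.metrics.roc_auc_score` computes the same quantity more efficiently.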

Baseline methods include Deep SVDD [], LSTM Autoencoder [], TS-TCC [], TS2Vec [], CoST [], and widely used reconstruction- or prediction-based methods such as Informer [] and TCN []. These baselines collectively cover one-class classification, self-supervised contrastive learning, and deep forecasting-based anomaly detection approaches, providing a comprehensive comparison against the proposed framework. Inference latency is measured as the average forward-pass time per sequence on the same hardware environment described above.
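Average per-sequence latency can be measured with a simple wall-clock loop like the one below, where `forward` is any callable that processes a batch. This is a generic sketch: for CUDA models, `torch.cuda.synchronize()` would need to be called before each clock reading because GPU kernels run asynchronously, and the warm-up and run counts are arbitrary choices.

```python
import time


def mean_latency_ms(forward, batch, n_warmup=10, n_runs=100):
    """Average wall-clock time per sequence, in milliseconds."""
    for _ in range(n_warmup):  # warm-up calls are excluded from timing
        forward(batch)
    start = time.perf_counter()
    for _ in range(n_runs):
        forward(batch)
    elapsed = time.perf_counter() - start
    return 1000.0 * elapsed / (n_runs * len(batch))


# Usage with a stand-in "model" that sleeps 2 ms per call on a single sequence.
latency = mean_latency_ms(lambda b: time.sleep(0.002), batch=[0], n_warmup=1, n_runs=5)
```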

The proposed CL-OCC model contains approximately 1.47 M parameters, which is substantially fewer than larger baselines such as the transformer-based Informer or TS2Vec (typically 8–20 M parameters). The inference latency is approximately 1.6 ms per 2400-step sequence on an RTX 4090 GPU, indicating that the model is lightweight and suitable for real-time industrial applications.

4.2. Quantitative Results

Table 1 summarizes the quantitative performance across all four datasets using a unified set of baseline models.

Table 1.

Quantitative performance comparison across datasets.

Across datasets, CL-OCC provides competitive and stable performance, achieving the highest F1-score and AUC on the semiconductor and NAB datasets, which exhibit long-term drifts and mixed seasonal–trend dependencies. On the NASA bearing dataset, TS2Vec and CoST slightly outperform the proposed method in AUC, likely reflecting their advantage in modeling high-frequency vibration periodicity. On SMD, Informer and TS2Vec achieve performance comparable to CL-OCC, which is plausible given that server telemetry consists primarily of non-periodic, event-driven fluctuations. These results indicate that CL-OCC offers strong general-purpose detection capability without relying on dataset-specific inductive biases.

To further evaluate generalization, a cross-domain transfer experiment was conducted from the semiconductor data to the NASA bearing dataset. Although the two domains differ substantially in physical meaning and operating conditions, CL-OCC demonstrates promising transferability. We attribute this to the invariance induced by STL-based augmentation. By decomposing signals into trend, seasonal, and residual components, the augmentation emphasizes temporal properties such as periodicity, seasonal–trend morphology, and long-horizon dynamic stability. These invariants are common across heterogeneous industrial systems, from plasma chamber pressure cycles to bearing rotational vibration patterns, despite the semantic gap between them. Because the learned representations are anchored to these structural invariants rather than to domain-specific semantics, CL-OCC can generalize across datasets with distinct physical characteristics.

This cross-domain evaluation is intended as a representative example rather than an exhaustive exploration of transfer configurations. The semiconductor-to-NASA-bearing setting involves a substantial shift in periodicity and drift behavior, which is sufficient to illustrate the invariance mechanism underlying CL-OCC. Evaluating additional domain pairs is beyond the scope of this study and is left for future work.
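The following sketch makes the augmentation idea concrete. It builds a positive view that keeps the trend and seasonal components of a window and perturbs only the residual. To run without dependencies, it deliberately swaps STL for a simpler classical moving-average decomposition. The seasonal period, residual-scaling range, and noise level are illustrative assumptions, not the settings used in this study. In practice, an STL implementation such as `statsmodels.tsa.seasonal.STL` would take the place of the `decompose` helper.

```python
import random
from typing import List, Sequence, Tuple


def decompose(x: Sequence[float], period: int) -> Tuple[List[float], List[float], List[float]]:
    """Classical additive decomposition: x = trend + seasonal + residual.

    Simplified stand-in for STL (which uses iterated loess smoothing): the trend is a
    centered moving average over about one period, truncated at the series edges, and the
    seasonal part is the mean detrended value at each phase, shifted to sum to zero.
    """
    n = len(x)
    if not 2 <= period <= n:
        raise ValueError("period must be between 2 and len(x)")
    half = period // 2
    trend = []
    for t in range(n):
        lo, hi = max(0, t - half), min(n, t + half + 1)
        trend.append(sum(x[lo:hi]) / (hi - lo))
    detrended = [xi - ti for xi, ti in zip(x, trend)]
    phase_means = [
        sum(detrended[p::period]) / len(detrended[p::period]) for p in range(period)
    ]
    offset = sum(phase_means) / period
    seasonal = [phase_means[t % period] - offset for t in range(n)]
    residual = [xi - ti - si for xi, ti, si in zip(x, trend, seasonal)]
    return trend, seasonal, residual


def structure_preserving_view(
    x: Sequence[float],
    period: int,
    rng: random.Random,
    residual_scale: Tuple[float, float] = (0.5, 1.5),
    noise_std: float = 0.01,
) -> List[float]:
    """Positive view: keep trend and seasonal parts, rescale the residual, add small noise."""
    trend, seasonal, residual = decompose(x, period)
    a = rng.uniform(*residual_scale)
    return [t + s + a * r + rng.gauss(0.0, noise_std) for t, s, r in zip(trend, seasonal, residual)]
```

Two such views of the same window would form a positive pair for the contrastive term. Because trend and seasonal parts are left untouched, the two views differ only in residual content.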

To determine whether these performance differences are statistically meaningful, a statistical analysis was conducted. A Friedman test across all methods and datasets revealed significant differences among models (), confirming that not all approaches perform equivalently. Post hoc Wilcoxon signed-rank tests comparing CL-OCC with individual baselines show that CL-OCC significantly outperforms classical one-class and reconstruction- or prediction-based methods such as Deep SVDD (), COCA (), and TCN (). In contrast, comparisons against modern contrastive and forecasting models such as TS2Vec (), CoST (), and Informer () do not exhibit significant differences. These findings align with prior observations that self-supervised temporal models often provide strong baselines on vibration- or event-oriented datasets. Effect sizes based on Cliff's δ further support these trends: CL-OCC shows a large effect size over Deep SVDD () and a small effect size relative to TS2Vec (). This indicates a decisive advantage over classical methods and performance comparable to state-of-the-art contrastive learners.
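For readers who want to reproduce this kind of analysis, the sketch below computes two test statistics. The first is the Friedman statistic, derived from average per-row ranks following Demšar's formulation. The second is Cliff's δ, computed from two samples of scores. The sketch is dependency-free and returns only the statistics. P-values would normally come from a library routine such as `scipy.stats.friedmanchisquare` or `scipy.stats.wilcoxon`. The input layout is an assumption: one row per dataset or run, one column per method, and higher scores are better.

```python
from typing import List, Sequence, Tuple


def rank_descending(values: Sequence[float]) -> List[float]:
    """1-based ranks with rank 1 for the largest value; ties share their average rank."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def friedman_statistic(table: Sequence[Sequence[float]]) -> Tuple[float, List[float]]:
    """Friedman chi-square statistic and average ranks; rows are datasets/runs, columns methods."""
    n, k = len(table), len(table[0])
    rank_sums = [0.0] * k
    for row in table:
        for j, r in enumerate(rank_descending(row)):
            rank_sums[j] += r
    avg = [s / n for s in rank_sums]
    chi2 = 12 * n / (k * (k + 1)) * (sum(r * r for r in avg) - k * (k + 1) ** 2 / 4)
    return chi2, avg


def cliffs_delta(x: Sequence[float], y: Sequence[float]) -> float:
    """Cliff's delta: P(X > Y) - P(X < Y) over all pairs, in [-1, 1]."""
    gt = sum(1 for a in x for b in y if a > b)
    lt = sum(1 for a in x for b in y if a < b)
    return (gt - lt) / (len(x) * len(y))
```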

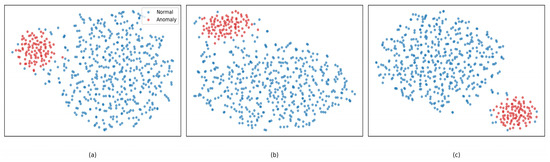

To further examine the learned representation structures, Figure 4 presents a three-panel comparison of t-SNE projections for TS2Vec, CoST, and CL-OCC.

Figure 4.

t-SNE visualization of latent embeddings: (a) TS2Vec, (b) CoST, (c) CL-OCC.

The same t-SNE configuration was used for all methods (perplexity = 30, cosine distance, PCA initialization, L2 normalization). TS2Vec and CoST both form partially distinguishable clusters, but their normal and anomalous embeddings overlap near the boundary regions. In contrast, CL-OCC produces a compact normal cluster and a clearly isolated anomaly region, indicating more discriminative representation geometry.

These qualitative observations are supported quantitatively by the silhouette score and the Davies–Bouldin index (DBI), reported in Table 2.

Table 2.

Cluster-level representation quality (silhouette score ↑, DBI ↓).

CL-OCC achieves the highest silhouette score and lowest DBI among the three models, confirming that its latent space maintains both compactness of normal samples and clear separability from anomalous regions. This improved cluster structure aligns with the combined effect of STL-guided invariance, soft-boundary learning, and variance regularization.
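The two cluster-quality scores in Table 2 follow standard definitions, and a minimal Euclidean-distance sketch of both is given below for reference. This section does not state whether the scores were computed on raw or L2-normalized embeddings, or with which distance, so those choices are assumptions here. Library routines such as `sklearn.metrics.silhouette_score` and `sklearn.metrics.davies_bouldin_score` compute the same quantities.

```python
import math
from typing import Dict, Hashable, List, Sequence


def _group(points, labels) -> Dict[Hashable, List[Sequence[float]]]:
    clusters: Dict[Hashable, List[Sequence[float]]] = {}
    for p, c in zip(points, labels):
        clusters.setdefault(c, []).append(p)
    if len(clusters) < 2:
        raise ValueError("need at least two clusters")
    return clusters


def silhouette(points, labels) -> float:
    """Mean silhouette coefficient with Euclidean distance; singleton clusters score 0."""
    clusters = _group(points, labels)
    scores = []
    for p, c in zip(points, labels):
        own = clusters[c]
        if len(own) == 1:
            scores.append(0.0)
            continue
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)  # distance to itself is 0
        b = min(
            sum(math.dist(p, q) for q in other) / len(other)
            for k, other in clusters.items()
            if k != c
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


def davies_bouldin(points, labels) -> float:
    """Average over clusters of the worst (spread_i + spread_j) / centroid-distance ratio."""
    clusters = _group(points, labels)
    centroids = {c: [sum(col) / len(pts) for col in zip(*pts)] for c, pts in clusters.items()}
    spread = {c: sum(math.dist(p, centroids[c]) for p in pts) / len(pts) for c, pts in clusters.items()}
    worst = [
        max((spread[i] + spread[j]) / math.dist(centroids[i], centroids[j]) for j in clusters if j != i)
        for i in clusters
    ]
    return sum(worst) / len(worst)
```

Higher silhouette values mean points sit closer to their own cluster than to the nearest other cluster. Lower DBI values mean clusters are tight relative to the distance between their centroids.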

To further quantify the contribution of STL-based augmentation, this study compares it with two commonly used time-series augmentations, jittering and scaling, using both detection metrics and latent-structure scores, as reported in Table 3.

Table 3.

Comparison of STL augmentation with jittering and scaling on the semiconductor dataset (↑: higher is better; ↓: lower is better).

STL yields substantially higher F1-score and AUC and produces more compact normal clusters, as evidenced by a higher silhouette score and a lower DBI. This indicates that preserving seasonal–trend structure provides more semantically meaningful invariances than perturbation-based augmentations, which tend to distort the underlying temporal morphology. Decomposition-based alternatives such as wavelet smoothing and VMD reconstruction can also preserve certain time–frequency components. However, they require predefined basis functions or mode-selection heuristics that are difficult to tune in a fully unsupervised setting. By contrast, STL builds on nonparametric loess smoothing and needs only a seasonal period and smoothing spans. It therefore adapts to non-stationary seasonal–trend patterns, resulting in more stable invariances and more discriminative latent-space separation.
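For comparison with the STL-based views, the two perturbation baselines can be written in a few lines. The default `sigma` values below are illustrative choices made for this sketch, not necessarily those used for Table 3.

```python
import random
from typing import List, Optional, Sequence


def jitter(x: Sequence[float], sigma: float = 0.03, rng: Optional[random.Random] = None) -> List[float]:
    """Jittering: add independent Gaussian noise to every time step."""
    rng = rng if rng is not None else random.Random()
    return [v + rng.gauss(0.0, sigma) for v in x]


def scale(x: Sequence[float], sigma: float = 0.1, rng: Optional[random.Random] = None) -> List[float]:
    """Scaling: multiply the whole window by a single random factor drawn from N(1, sigma^2)."""
    rng = rng if rng is not None else random.Random()
    factor = rng.gauss(1.0, sigma)
    return [factor * v for v in x]
```

Neither operation separates trend, seasonal, and residual content. Jittering perturbs every step independently of the signal's structure, while scaling changes the trend and seasonal amplitudes together with the noise. This is the kind of morphological distortion the paragraph above attributes to perturbation-based augmentations.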

4.3. Ablation Study

A systematic ablation and interaction analysis was conducted to assess how each component of the proposed CL-OCC framework contributes to anomaly detection performance. The objective of this analysis is twofold: (i) to quantify the individual effect of each module (STL augmentation, the soft-boundary one-class objective, and variance regularization) and (ii) to examine whether these components operate independently or interact synergistically when combined. This is particularly important because CL-OCC is designed around the joint principles of invariance, compactness, and dispersion. It is therefore necessary to verify that these objectives reinforce each other rather than act as redundant mechanisms.

Table 4 summarizes the results of removing one module at a time, and Table 5 reports the outcomes when two modules are removed simultaneously. Removing a single component already leads to clear degradation, and removing two components generally results in substantially larger performance drops. This suggests that the modules are interdependent rather than independent contributors.

Table 4.

Single-module ablation results on the semiconductor dataset.

Table 5.

Interaction analysis of two-module removal on the semiconductor dataset.

When STL augmentation is disabled, performance declines the most among the single-removal settings. This indicates that STL is the primary driver of invariance to trend–seasonal fluctuations, which are particularly dominant in industrial time-series data. Without STL, the model loses its structure-preserving positive pairs, and the latent space becomes more sensitive to local variations, leading to fragmented clusters.
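The positive pairs mentioned above feed a contrastive objective. This section does not spell out the exact form of the contrastive term, so the sketch below assumes the widely used NT-Xent loss from SimCLR, with cosine similarity and a temperature of 0.1 (both assumptions). Each window's augmented view serves as its positive, and every other view in the batch serves as a negative.

```python
import math
from typing import Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(view_a: Sequence[Sequence[float]], view_b: Sequence[Sequence[float]],
            temperature: float = 0.1) -> float:
    """NT-Xent loss; view_a[i] and view_b[i] are the two augmented views of sample i."""
    z = list(view_a) + list(view_b)
    n = len(view_a)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of the same sample
        logits = [cosine(z[i], z[k]) / temperature for k in range(2 * n) if k != i]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - cosine(z[i], z[pos]) / temperature
    return total / (2 * n)
```

When each sample's two views align and differ from other samples, the loss approaches zero. Mismatched pairs are penalized heavily.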

Removing the soft-boundary objective causes the latent center to drift and the normal region to become less compact. This weakens the one-class separation mechanism, making it more difficult to distinguish near-boundary anomalies. Disabling variance regularization, by contrast, produces embeddings that collapse excessively along certain latent dimensions. Although anomaly separability remains partially intact, the reduction in representational dispersion harms the model’s flexibility, resulting in noticeable drops in both F1-score and AUC.
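The two terms discussed above can be sketched as follows. The exact CL-OCC formulation is not reproduced in this section, so this illustration rests on several assumptions. The soft-boundary term follows the Deep SVDD soft-boundary objective, with the squared Euclidean distance replaced by cosine distance to a center `c`. The variance term follows the VICReg-style hinge on per-dimension standard deviation. The weights `lam_sb` and `lam_var`, the parameter `nu`, and the target standard deviation `gamma` are hypothetical.

```python
import math
from typing import Sequence


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """1 - cosine similarity."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def soft_boundary_loss(
    z: Sequence[Sequence[float]], center: Sequence[float], radius: float, nu: float = 0.1
) -> float:
    """Soft-boundary one-class loss: R + 1/(nu*n) * sum(max(0, d_cos(z_i, c) - R))."""
    hinge = sum(max(0.0, cosine_distance(zi, center) - radius) for zi in z)
    return radius + hinge / (nu * len(z))


def variance_loss(z: Sequence[Sequence[float]], gamma: float = 1.0, eps: float = 1e-4) -> float:
    """VICReg-style hinge: penalize latent dimensions whose std falls below gamma."""
    n, d = len(z), len(z[0])
    total = 0.0
    for j in range(d):
        col = [row[j] for row in z]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / (n - 1)
        total += max(0.0, gamma - math.sqrt(var + eps))
    return total / d


def total_loss(l_con: float, l_sb: float, l_var: float, lam_sb: float = 1.0, lam_var: float = 1.0) -> float:
    """Hypothetical weighted sum of the contrastive, soft-boundary, and variance terms."""
    return l_con + lam_sb * l_sb + lam_var * l_var
```

In Deep SVDD, the radius is either optimized jointly or periodically reset to a quantile of the distances. This section does not specify which option CL-OCC uses. The variance hinge returns to zero once every latent dimension keeps a standard deviation of at least `gamma`, which is how it counteracts the collapse described above.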

The interaction analysis reinforces these observations. When STL and the soft-boundary term are removed together, the model enforces neither invariance nor compactness, resulting in significant overlap between normal and anomalous samples. Removing STL and variance regularization yields over-contracted embeddings that lack discriminative structure. Similarly, removing the soft-boundary and variance regularization simultaneously leads to an unanchored latent space with irregular expansion. In all two-module removal settings, the model suffers from severe representational instability. This indicates that the three components do not merely offer additive gains but jointly support a balanced embedding geometry.

Overall, these results demonstrate that the full CL-OCC framework achieves its strongest performance by combining the complementary roles of STL-based invariance, soft-boundary compactness, and variance-based dispersion. Their interaction is synergistic rather than additive, which supports the design rationale that anomaly detection in industrial settings requires the simultaneous coordination of invariance, boundary shaping, and representation diversity.
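One way to make the "synergistic rather than additive" claim checkable is an interaction term. For each pair of modules, take the drop caused by removing both and subtract the sum of the drops caused by removing each alone. The sketch below enumerates the ablation settings covered by Tables 4 and 5 (full model, single removals, pairwise removals) and computes this term from a dictionary of scores. The module identifiers and dictionary layout are hypothetical, and no values from the tables are reproduced.

```python
from itertools import combinations
from typing import Dict, FrozenSet, List

MODULES = ("stl", "soft_boundary", "variance")  # hypothetical module identifiers


def ablation_settings() -> List[FrozenSet[str]]:
    """Removed-module sets: full model, each single removal, and each pairwise removal."""
    settings: List[FrozenSet[str]] = [frozenset()]
    for size in (1, 2):
        settings += [frozenset(c) for c in combinations(MODULES, size)]
    return settings


def interaction(scores: Dict[FrozenSet[str], float], a: str, b: str) -> float:
    """Joint-removal drop minus the sum of single-removal drops (> 0 means super-additive)."""
    full = scores[frozenset()]
    drop_a = full - scores[frozenset({a})]
    drop_b = full - scores[frozenset({b})]
    drop_ab = full - scores[frozenset({a, b})]
    return drop_ab - (drop_a + drop_b)
```

A positive interaction means removing both modules costs more than the two single removals combined, which is the pattern a synergistic design would predict.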

4.4. Robustness Evaluation

To assess the reliability of CL-OCC under realistic operational variation, its robustness to noise, varying input lengths, and severe class imbalance was further analyzed. These aspects are particularly critical in industrial monitoring environments, where sensor degradation, fluctuating sampling conditions, and the extreme rarity of anomalies often lead to unstable detection performance. This robustness analysis therefore complements the ablation and interaction study. It examines whether the geometric properties induced by the framework (invariance, compactness, and dispersion) translate into resilience under adverse conditions. Table 6 presents the results on the semiconductor dataset.

Table 6.

Robustness analysis on semiconductor dataset.

When Gaussian noise is added to the input signals, CL-OCC maintains stable performance for moderate noise levels (), and even under harsh perturbations () the model exhibits only a moderate decline in AUC. This behavior aligns with the role of STL augmentation, which enforces invariance to local seasonal fluctuations and prevents the model from reacting to spurious short-term disturbances.
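The noise-robustness protocol can be sketched as below. This section does not state whether the noise levels in Table 6 are absolute or relative to each signal's standard deviation. The sketch supports both and treats the scaling convention as an assumption.

```python
import random
import statistics
from typing import List, Optional, Sequence


def add_gaussian_noise(
    x: Sequence[float], sigma: float, relative: bool = True, seed: Optional[int] = None
) -> List[float]:
    """Add i.i.d. N(0, s^2) noise, where s = sigma * std(x) if relative, else s = sigma."""
    rng = random.Random(seed)
    s = sigma * statistics.pstdev(x) if relative else sigma
    return [v + rng.gauss(0.0, s) for v in x]
```

Fixing the seed per run keeps each perturbed test set reproducible. This matters when the same noise level is evaluated across repeated runs.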

The model also remains robust when input window lengths vary from 600 to 2400 steps. Shorter windows reduce the available contextual information, resulting in slightly lower F1-scores; however, the degradation remains within acceptable limits. The best performance is obtained at 2400 steps, consistent with the need for sufficiently long temporal context to detect gradual process drifts. This outcome is consistent with the interaction analysis in Section 4.3, where STL and variance regularization were shown to jointly stabilize the representation geometry.
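The window-length sweep (600 to 2400 steps) amounts to re-segmenting each run before encoding. A minimal segmentation helper is shown below. This section does not specify the stride, so any particular stride value is an assumption.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def sliding_windows(x: Sequence[T], length: int, stride: int) -> List[List[T]]:
    """Windows of `length` steps starting every `stride` steps; an incomplete tail is dropped."""
    if length <= 0 or stride <= 0:
        raise ValueError("length and stride must be positive")
    return [list(x[s:s + length]) for s in range(0, len(x) - length + 1, stride)]
```

For example, `sliding_windows(signal, length=2400, stride=600)` yields overlapping 2400-step windows, and rerunning it with `length=600` produces the shortest setting in the sweep.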

Lastly, CL-OCC demonstrates resilience under extreme class imbalance. Even when anomalies account for only 1% of the dataset—reflecting typical industrial scenarios—the model continues to deliver high recall and stable detection boundaries. This confirms that the one-class compactness enforced by the soft-boundary objective maintains a well-defined normal region even when few or no anomalies appear during training.
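A test split with a prescribed anomaly ratio, such as the 1% setting above, can be built by subsampling labeled anomaly windows. The sketch assumes anomalies are used only at evaluation time, consistent with one-class training on normal data. The function name and seeding are illustrative.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def subsample_to_ratio(
    normal: Sequence[T], anomalous: Sequence[T], ratio: float, seed: int = 0
) -> Tuple[List[T], List[int]]:
    """Keep every normal window and just enough randomly chosen anomalies to reach `ratio`."""
    if not 0.0 < ratio < 1.0:
        raise ValueError("ratio must be in (0, 1)")
    if not anomalous:
        raise ValueError("need at least one anomalous window")
    k = round(ratio * len(normal) / (1.0 - ratio))
    k = max(1, min(k, len(anomalous)))  # at least one anomaly, never more than available
    kept = random.Random(seed).sample(list(anomalous), k)
    return list(normal) + kept, [0] * len(normal) + [1] * k
```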

Overall, the robustness experiments show that CL-OCC’s performance remains stable across perturbations, sampling inconsistencies, and imbalance conditions. These findings reinforce the earlier ablation and interaction results by demonstrating that the complementary roles of STL augmentation, soft-boundary learning, and variance regularization not only improve discriminative ability but also contribute directly to robustness against real-world variability.

To assess whether the robustness trends were statistically reliable, all robustness experiments were repeated five times, and the variations across runs remained within narrow ranges. Although Table 6 reports averaged F1-score and AUC values for clarity, the repeated-run results showed no statistically significant degradation within each noise level, window-length configuration, or imbalance condition (), indicating that the observed robustness behavior is stable rather than incidental. This confirms that CL-OCC maintains consistent performance under realistic perturbation scenarios.

4.5. Qualitative Results

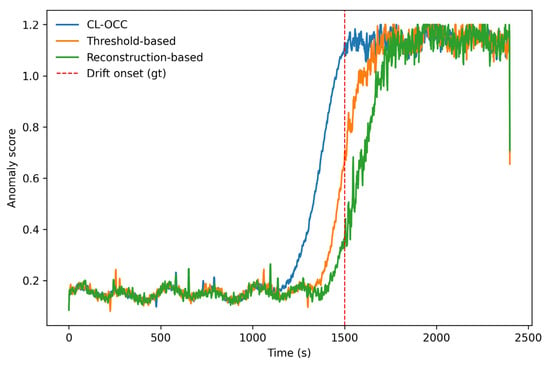

To complement the quantitative and robustness results, a qualitative analysis of anomaly score trajectories examines the behavior of CL-OCC on real industrial signals. Figure 5 shows the anomaly scores generated for a chamber pressure signal in the semiconductor dataset, which contains a slow drift beginning midway through the run. Such gradual deviations frequently occur in plasma etching processes due to chamber wear, polymer accumulation, or unstable mass flow dynamics, and their early detection is critical for preventing yield degradation.

Figure 5.

Anomaly score trajectories on a semiconductor pressure signal.

The proposed CL-OCC demonstrates two key behaviors. First, CL-OCC reacts to the onset of the drift considerably earlier than reconstruction- and prediction-driven methods such as the LSTM Autoencoder or Informer. In this example, CL-OCC produces a consistent rise in anomaly score approximately 120 s before the fault becomes visually apparent or is detected by the baseline models. We attribute this early increase to the model's sensitivity to long-horizon trend deformations. STL-based augmentation encourages this sensitivity by making the representation depend on the underlying seasonal–trend structure of the signal.

Second, CL-OCC exhibits markedly smoother and more stable anomaly trajectories near cycle boundaries, where normal and anomalous fluctuations tend to co-occur. Deep SVDD and other margin-based baselines often display sharp oscillations in these regions due to their sensitivity to small local variations. In contrast, CL-OCC suppresses such instability because variance regularization prevents latent collapse while maintaining a balanced representation spread. The resulting anomaly scores produce cleaner transitions between states, reducing false positives and supporting more robust decision-making in practice.

Although CL-OCC responds effectively to drift events, its response is less pronounced in some regions. In particular, short impulsive disturbances (e.g., brief sensor glitches or momentary pressure bursts) do not substantially alter the long-horizon trend–seasonal structure emphasized by STL-based augmentation. Consequently, CL-OCC raises its anomaly score only marginally during these brief intervals, whereas threshold-based and reconstruction-based baselines produce more pronounced score spikes.

This behavior is not a global limitation but an edge case. CL-OCC is particularly effective at detecting gradual or structurally coherent deviations. It may, however, under-react to anomalies that occur at very small temporal scales and do not affect the underlying periodic or trending components. In practice, such impulse-type events may require a separate fine-scale detector or a complementary residual-based module. Incorporating such multi-resolution mechanisms is a promising direction for future research.

Beyond the impulsive-disturbance sensitivity described above, the study also has several practical limitations. First, STL-based augmentation requires fixed seasonal-period settings, which may require dataset-specific tuning when temporal structures differ significantly across domains. Second, the current encoder design does not include transformer-based or multi-resolution backbones, which may further enhance sensitivity to short-scale anomalies. Lastly, all evaluations were conducted on offline datasets; therefore, real-time adaptation and online updating mechanisms were not investigated. These aspects represent important limitations that motivate additional future improvements.
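One way to relax the fixed seasonal-period requirement is a simple autocorrelation-based estimate. The sketch below returns the lag of the strongest local maximum of the sample autocorrelation function, which could then be passed to STL as its period. It is a hypothetical helper, not a procedure used in this study, and it assumes a single dominant period.

```python
import math
from typing import Optional, Sequence


def autocorrelation(x: Sequence[float], lag: int) -> float:
    """Biased sample autocorrelation at the given lag (0.0 for a constant series)."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x)
    if var == 0:
        return 0.0
    cov = sum((x[t] - mean) * (x[t + lag] - mean) for t in range(n - lag))
    return cov / var


def estimate_period(x: Sequence[float], max_lag: Optional[int] = None) -> Optional[int]:
    """Lag of the highest local maximum of the autocorrelation function, or None if there is none."""
    max_lag = max_lag if max_lag is not None else len(x) // 2
    acf = [autocorrelation(x, lag) for lag in range(max_lag + 2)]
    peaks = [
        lag for lag in range(2, max_lag + 1)
        if acf[lag] > acf[lag - 1] and acf[lag] >= acf[lag + 1]
    ]
    return max(peaks, key=lambda lag: acf[lag]) if peaks else None


if __name__ == "__main__":
    cyclic = [math.sin(2 * math.pi * t / 24) for t in range(240)]
    print(estimate_period(cyclic))
```

Restricting the search to local maxima avoids trivially picking lag 1 or 2, where the autocorrelation of any smooth signal is high.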

5. Conclusions

The present study introduces CL-OCC, a contrastive one-class learning framework designed to address the challenges of unsupervised time-series anomaly detection in intelligent manufacturing systems. The proposed method combines STL-based temporal augmentation, a cosine-regularized soft boundary, and variance-preserving latent regularization to construct a stable and discriminative embedding space. This space captures the long-horizon temporal structures characteristic of industrial processes. Paired with an autoencoder-pretrained convolutional recurrent encoder, CL-OCC attains competitive or superior anomaly detection performance across semiconductor, bearing, server, and real-world anomaly benchmarks. The method offers two notable advantages. First, it generates smoother anomaly trajectories, facilitating earlier detection of gradual drifts. Second, it demonstrates strong robustness against noise, sequence-length variation, and severe anomaly imbalance.

Ablation and interaction analyses demonstrate that STL-based invariance, boundary shaping, and variance dispersion function synergistically rather than independently, collectively enhancing the stability and separability of the learned representations. A qualitative examination further corroborates the model’s capacity to detect slowly evolving faults. However, it also reveals a localized limitation in sensitivity to short impulsive disturbances that do not substantially alter the underlying trend-seasonal structure.

Although CL-OCC offers a pragmatic and broadly applicable solution for unsupervised industrial monitoring, several aspects remain open for enhancement. Future work will explore mechanisms to improve sensitivity to fine-scale and impulsive anomalies, including spike-level detection, multi-resolution feature extraction, and transformer-based encoders. In addition, adaptive STL parameterization and data-driven seasonal-period estimation may strengthen cross-domain generalization. Extending the framework toward multimodal sensing and online prognostics and health management (PHM) deployment will also enable more comprehensive, real-time monitoring in complex manufacturing environments.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available from the corresponding author on request.

Conflicts of Interest

The author declares that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Lee, J.; Bagheri, B.; Kao, H. A cyber-physical systems architecture for Industry 4.0-based manufacturing systems. Manuf. Lett. 2015, 3, 18–23.

- Wang, L.; Törngren, M.; Onori, M. Current status and advancement of cyber-physical systems in manufacturing. J. Manuf. Syst. 2015, 37, 517–527.

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly detection: A survey. ACM Comput. Surv. 2009, 41, 1–58.

- Pimentel, M.A.F.; Clifton, D.A.; Clifton, L.; Tarassenko, L. A review of novelty detection. Signal Process. 2014, 99, 215–249.

- Darban, Z.Z.; Webb, G.I.; Pan, S.; Aggarwal, C.; Salehi, M. Deep learning for time series anomaly detection: A survey. ACM Comput. Surv. 2024, 57, 1–42.

- Montgomery, D.C. Introduction to Statistical Quality Control, 8th ed.; Wiley: Hoboken, NJ, USA, 2019.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the 37th International Conference on Machine Learning (ICML), Virtual Event (originally Vienna, Austria), 13–18 July 2020; pp. 1597–1607.

- Chen, X.; He, K. Exploring simple Siamese representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 19–25 June 2021; pp. 15750–15758.

- Grill, J.-B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.H.; Buchatskaya, E.; Doersch, C.; Avila Pires, B.; Guo, Z.D.; Gheshlaghi Azar, M.; et al. Bootstrap your own latent: A new approach to self-supervised learning. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–12 December 2020.

- Bardes, A.; Ponce, J.; LeCun, Y. VICReg: Variance-invariance-covariance regularization for self-supervised learning. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, 25–29 April 2022.

- Ruff, L.; Vandermeulen, R.A.; Görnitz, N.; Deecke, L.; Siddiqui, S.A.; Binder, A.; Müller, E.; Kloft, M. Deep One-Class Classification. In Proceedings of the 35th International Conference on Machine Learning (ICML), Stockholm, Sweden, 10–15 July 2018; Volume 80 of Proceedings of Machine Learning Research (PMLR), pp. 4393–4402.

- Perera, P.; Patel, V.M. Learning Deep Features for One-Class Classification. IEEE Trans. Image Process. 2019, 28, 5359–5372.

- He, J.; Du, J.; Ma, W. Preventing Dimensional Collapse in Self-Supervised Learning via Orthogonality Regularization. In Proceedings of the Advances in Neural Information Processing Systems 37 (NeurIPS 2024), Vancouver, BC, Canada, 10–15 December 2024; pp. 95579–95606.

- Siddique, M.F.; Zaman, W.; Umar, M.; Kim, J.-Y.; Kim, J.-M. A Hybrid Deep Learning Framework for Fault Diagnosis in Milling Machines. Sensors 2025, 25, 5866.

- Siddique, M.F.; Ullah, S.; Kim, J.-M. A Deep Learning Approach for Fault Diagnosis in Centrifugal Pumps through Wavelet Coherent Analysis and S-Transform Scalograms with CNN-KAN. Comput. Mater. Contin. 2025, 84, 3577–3603.

- Box, G.E.P.; Jenkins, G.M.; Reinsel, G.C.; Ljung, G.M. Time Series Analysis: Forecasting and Control, 5th ed.; Wiley: Hoboken, NJ, USA, 2016.

- Hyndman, R.J.; Athanasopoulos, G. Forecasting: Principles and Practice, 3rd ed.; OTexts: Melbourne, Australia, 2021; Available online: https://otexts.com/fpp3/ (accessed on 15 January 2025).

- Ding, X.; Wang, J.; Liu, Y.; Jung, U. Multivariate Time Series Anomaly Detection Using Working Memory Connections in Bi-Directional Long Short-Term Memory Autoencoder Network. Appl. Sci. 2025, 15, 2861.

- Amarbayasgalan, T.; Pham, V.H.; Theera-Umpon, N.; Ryu, K.H. Unsupervised Anomaly Detection Approach for Time-Series in Multi-Domains Using Deep Reconstruction Error. Symmetry 2020, 12, 1251.

- Salinas, D.; Flunkert, V.; Gasthaus, J.; Januschowski, T. DeepAR: Probabilistic Forecasting with Autoregressive Recurrent Networks. Int. J. Forecast. 2020, 36, 1181–1191.

- Lea, C.; Flynn, M.D.; Vidal, R.; Reiter, A.; Hager, G.D. Temporal Convolutional Networks for Action Segmentation and Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1003–1012.

- Umar, M.; Ahmad, Z.; Ullah, S.; Saleem, F.; Siddique, M.F.; Kim, J.-M. Advanced Fault Diagnosis in Milling Machines Using Acoustic Emission and Transfer Learning. IEEE Access 2025, 13, 100776–100790.

- Eldele, E.; Ragab, M.; Chen, Z.; Wu, M.; Kwoh, C.K.; Li, X.; Guan, C. Time-Series Representation Learning via Temporal and Contextual Contrasting. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence (IJCAI-21), Montreal, QC, Canada, 21–27 August 2021; pp. 2352–2359.

- Yue, Z.; Wang, Y.; Duan, J.; Yang, T.; Huang, C.; Tong, Y.; Xu, B. TS2Vec: Towards Universal Representation of Time Series. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI-22), Virtual Event, 22 February–1 March 2022; Volume 36, pp. 8980–8987.

- Woo, G.; Liu, C.; Sahoo, D.; Kumar, A.; Hoi, S. CoST: Contrastive Learning of Disentangled Seasonal-Trend Representations for Time-Series Forecasting. arXiv 2022, arXiv:2202.01575.

- Chalapathy, R.; Menon, A.K.; Chawla, S. Anomaly Detection Using One-Class Neural Networks. arXiv 2018, arXiv:1802.06360.

- Chong, P.; Ruff, L.; Kloft, M.; Binder, A. Simple and Effective Prevention of Mode Collapse in Deep One-Class Classification. In Proceedings of the International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Schölkopf, B.; Platt, J.C.; Shawe-Taylor, J.; Smola, A.J.; Williamson, R.C. Estimating the Support of a High-Dimensional Distribution. Neural Comput. 2001, 13, 1443–1471.

- Cleveland, R.B.; Cleveland, W.S.; McRae, J.E.; Terpenning, I. STL: A Seasonal-Trend Decomposition Procedure Based on Loess. J. Off. Stat. 1990, 6, 3–73.

- Mallat, S. A Wavelet Tour of Signal Processing, 3rd ed.; Academic Press: Cambridge, MA, USA, 2008.

- Dragomiretskiy, K.; Zosso, D. Variational Mode Decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-Based Learning Applied to Document Recognition. Proc. IEEE 1998, 86, 2278–2324.

- Cho, K.; van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning Phrase Representations Using RNN Encoder-Decoder for Statistical Machine Translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1724–1734.

- Eldele, E.; Ragab, M.; Chen, Z.; Wu, M.; Kwoh, C.-K.; Li, X.; Guan, C. Self-Supervised Contrastive Representation Learning for Semi-Supervised Time-Series Classification. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 15604–15618.

- Lee, J.; Qiu, H.; Yu, G.; Lin, J. Bearing Data Set. NASA Ames Prognostics Data Repository, Moffett Field, CA, USA; 2007. Available online: https://ti.arc.nasa.gov/tech/dash/pcoe/prognostic-data-repository (accessed on 15 January 2025).

- Su, Y.; Zhao, Y.; Niu, C.; Pei, D.; Li, W.; Gao, K.; Xiao, X. Robust Anomaly Detection for Multivariate Time Series via Stochastic Recurrent Neural Networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (KDD 2019), Anchorage, AK, USA, 4–8 August 2019; pp. 2828–2837.

- Lavin, A.; Ahmad, S. Evaluating Real-Time Anomaly Detection Algorithms: The Numenta Anomaly Benchmark. In Proceedings of the 14th IEEE International Conference on Machine Learning and Applications (ICMLA 2015), Miami, FL, USA, 9–11 December 2015; pp. 38–44.

- Demšar, J. Statistical Comparisons of Classifiers over Multiple Data Sets. J. Mach. Learn. Res. 2006, 7, 1–30.

- Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond Efficient Transformer for Long Sequence Time-Series Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI 2021), Virtual Event, 2–9 February 2021; Volume 35, pp. 11106–11115.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).