Abstract

This research presents a new iterative technique for Garcia-Falset mappings that outperforms previous iterative methods for contraction mappings. We illustrate this through a numerical comparison and present the findings graphically. We also investigate the convergence of the new iteration in uniformly convex Banach spaces and establish its stability. To further support our findings, we apply it to a boundary value (BV) problem and to a delay differential equation (DE). Finally, we propose a design for an implicit neural network that can be considered an extension of a traditional feed-forward network.

Keywords:

Garcia-Falset; stability; iterative methods; implicit neural network; delay differential equations; boundary value problems

MSC:

47H0; 47H09; 47H10; 68T07

1. Introduction

The theory of fixed points (FP) studies criteria for the existence and uniqueness of FPs of specific mappings in abstract spaces. It is built on the Banach contraction principle (BCP), which states that a contraction mapping on a complete metric space has a unique FP. In the early 1900s, scholars extended the BCP to encompass multiple abstract spaces and developed additional types of contraction mappings. Initially, the theory focused solely on locating fixed points. In many cases, however, fixed points cannot be determined by direct approaches, particularly for nonlinear mappings. As a result, iterative approximations are offered as an alternative for estimating the fixed point.

Many problems in applied physics and engineering are too complex to solve with traditional analytical approaches [,,,,]. In such instances, FP theory offers alternative strategies for obtaining the desired results. We first convert the problem to an FP problem, ensuring that the FP set of one problem coincides with the solution set of the other. The existence of an FP then implies the existence of a solution of the original equation.

Suppose and represent the standard notations for the set. Let $C$ be a closed convex subset of a uniformly convex Banach space (UCBS) $S$. A mapping $T : C \to C$ is a contraction (resp., nonexpansive) mapping if there is a constant $k \in [0, 1)$ (resp., $k = 1$) that satisfies:

$$\|Tx - Ty\| \le k\,\|x - y\|$$

for all $x, y \in C$. The set of FPs of T is denoted by $F(T)$.

In 1965, Kirk, Browder and Göhde [,,] established FP theorems for nonexpansive mappings in a UCBS. A Banach space S is uniformly convex if for any $\varepsilon \in (0, 2]$, some $\delta > 0$ exists such that $\left\|\tfrac{x + y}{2}\right\| \le 1 - \delta$ holds whenever $\|x\| \le 1$, $\|y\| \le 1$ and $\|x - y\| \ge \varepsilon$. Soon after, Goebel [] gave an elementary proof of the Kirk–Browder–Göhde theorem. Several authors have observed that nonexpansive mappings arise naturally in the study of nonlinear problems in various metric space settings. Thus, it is entirely reasonable to investigate extensions of these mappings.

The BCP ensures the existence and uniqueness of an FP for every contraction T on a Banach space. The analogous claim fails for nonexpansive mappings.

Additionally, even if a nonexpansive mapping T has a unique FP, the Picard iteration may fail to converge to it.
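The failure of the Picard iteration for a nonexpansive map can be seen on a small example. The sketch below is an illustration only: the map $T(x) = 1 - x$ on $[0, 1]$, the starting point 0.2 and the averaging parameter 0.5 are assumptions chosen for this purpose and are not taken from the cited works. The map is nonexpansive with unique fixed point 0.5; the Picard orbit oscillates between two values, while a Mann-type averaged iteration reaches the fixed point.

```python
def picard(T, x0, n_steps):
    """Picard orbit x_{n+1} = T(x_n)."""
    orbit = [x0]
    for _ in range(n_steps):
        orbit.append(T(orbit[-1]))
    return orbit


def mann(T, x0, n_steps, alpha=0.5):
    """Mann-type orbit x_{n+1} = (1 - alpha) x_n + alpha T(x_n), constant alpha (assumed)."""
    orbit = [x0]
    for _ in range(n_steps):
        x = orbit[-1]
        orbit.append((1 - alpha) * x + alpha * T(x))
    return orbit


def T(x):
    # Hypothetical example map: nonexpansive on [0, 1], unique fixed point 0.5.
    return 1.0 - x


picard_orbit = picard(T, 0.2, 6)  # alternates between (about) 0.2 and 0.8
mann_orbit = mann(T, 0.2, 6)      # lands on 0.5 and stays there
```

With `alpha = 0.5` the averaged map is constant for this particular `T`, which is why one step suffices here; for other nonexpansive maps the averaged iteration generally needs more steps.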

For these reasons, nonexpansive mappings have been a significant research topic in nonlinear analysis. In 2008, Suzuki [] generalized nonexpansive mappings by requiring the nonexpansiveness inequality only under a weaker hypothesis. A self-map $T : C \to C$ is said to satisfy condition (C) if $\|Tx - Ty\| \le \|x - y\|$ holds for each $x, y \in C$ whenever:

$$\tfrac{1}{2}\,\|x - Tx\| \le \|x - y\|.$$

Every nonexpansive mapping clearly satisfies condition (C), but an example in [] shows that the converse does not hold in general. Thus, Suzuki mappings form a broader class than nonexpansive mappings. Along similar lines, Garcia-Falset et al. [] introduced a class that is more general than the class of mappings with condition (C), defined as follows:

$T : C \to C$ is a Garcia-Falset map (or a map with condition (E)) if there is $\mu \ge 1$ such that, for all $x, y \in C$:

$$\|x - Ty\| \le \mu\,\|x - Tx\| + \|x - y\|.$$

It can be verified that every Suzuki mapping T satisfies condition (E) with $\mu = 3$. We now present an example of a map $T$ with condition (E) and show that it is not nonexpansive.

Example 1.

Consider a self-map is defined by:

To verify that T satisfies condition (E), we need to check

for some and .

Fixing the value of , we discuss the following cases. : Select , then . We have:

: Choose any , then and . We have:

: Choose any and , then and . We have:

: Choose any and , then and . We have:

The cases above show that T satisfies condition (E). Choosing and , where the fixed point of T is , it can easily be seen that but . Hence, is a Garcia-Falset mapping that is not nonexpansive.

Remark 1.

Not every Garcia-Falset mapping is a nonexpansive mapping.
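Condition (E) can also be probed numerically. The following sketch is a separate illustration, not the map of Example 1: it assumes a hypothetical discontinuous map on $[0, 3]$ that sends 3 to 1 and every other point to 0, takes $\mu = 3$, and tests the inequality $|x - Ty| \le \mu |x - Tx| + |x - y|$ on a uniform grid. The function names and the grid are assumptions made for this example.

```python
from itertools import product


def T(x):
    """Hypothetical test map on [0, 3]: sends 3 to 1 and every other point to 0."""
    return 1.0 if x == 3.0 else 0.0


def satisfies_condition_E(T, points, mu, slack=1e-12):
    """Check |x - T(y)| <= mu * |x - T(x)| + |x - y| for all sampled pairs."""
    return all(
        abs(x - T(y)) <= mu * abs(x - T(x)) + abs(x - y) + slack
        for x, y in product(points, repeat=2)
    )


def is_nonexpansive(T, points, slack=1e-12):
    """Check |T(x) - T(y)| <= |x - y| for all sampled pairs."""
    return all(
        abs(T(x) - T(y)) <= abs(x - y) + slack
        for x, y in product(points, repeat=2)
    )


points = [3.0 * i / 300 for i in range(301)]  # uniform grid on [0, 3]
holds_E = satisfies_condition_E(T, points, mu=3.0)
nonexpansive = is_nonexpansive(T, points)
```

On this grid the condition (E) check passes for every sampled pair, while the nonexpansiveness check fails, for instance at $x = 3$ and $y = 2.99$. A grid check is numerical evidence only and does not replace the case analysis of a proof.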

Soon after these mappings were introduced, Bagherboum [] first studied the approximation of their FPs under the Ishikawa iteration in Busemann spaces.

Iterative Techniques

Iterative techniques can solve a variety of problems, including minimization, equilibrium and viscosity approximation problems in various domains [,,,]. In 1890, Picard [] proposed the following iterative scheme for approximating an FP:

$$x_{n+1} = T x_n. \tag{4}$$

Some well-known iteration processes that are often used to approximate FPs of nonexpansive mappings include Picard [], Mann [], Ishikawa [], Noor [], Abbas and Nazir [] and Thakur et al. [] iterations. A Thakur iteration [] is as follows:

where , and are in (0, 1). They established that, for contractive mappings, it converges faster than iterations of [,,,,].
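To make the classical schemes concrete, the sketch below runs the Picard, Mann and Ishikawa processes named above in their standard textbook forms. The test map $T = \cos$ (a contraction near its fixed point), the starting point 1.0, the constant parameters $\alpha = \beta = 0.5$ and the stopping rule are all assumptions made for illustration; the Thakur and later schemes are not reproduced here.

```python
import math


def iterate(step, x0, tol=1e-10, max_iter=10_000):
    """Apply `step` until two successive iterates differ by less than `tol`."""
    x = x0
    for n in range(1, max_iter + 1):
        x_next = step(x)
        if abs(x_next - x) < tol:
            return x_next, n
        x = x_next
    return x, max_iter


T = math.cos              # hypothetical test map
alpha, beta = 0.5, 0.5    # constant parameters in (0, 1), assumed


def picard_step(x):
    return T(x)


def mann_step(x):
    return (1 - alpha) * x + alpha * T(x)


def ishikawa_step(x):
    y = (1 - beta) * x + beta * T(x)
    return (1 - alpha) * x + alpha * T(y)


results = {
    "Picard": iterate(picard_step, 1.0),
    "Mann": iterate(mann_step, 1.0),
    "Ishikawa": iterate(ishikawa_step, 1.0),
}
for name, (x_star, n_iter) in results.items():
    print(f"{name:9s} x* = {x_star:.10f} after {n_iter} iterations")
```

Iteration counts depend strongly on the map and on the parameter choices, so this comparison says nothing general about which scheme is faster.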

The Asghar Rahimi iteration [] is as follows:

where , and are in (0, 1). This process was also proven by the authors to be faster than those of [,,,,,].

A Picard–Thakur hybrid iteration [] is as follows:

where , and are in (0, 1). They proved that this process converges faster than iteration processes (4)–(6) for contraction mappings.

The Akanimo iteration [] is as follows:

where , are in (0, 1). They proved that it converges faster than iterations (4)–(7) for contractive mappings.

Iterative methods based on fixed-point theory are useful for solving the heat equation.

Time delays add complexity to delay differential equations, which is why fixed-point iterative techniques (4)–(7) are employed. By approximating the system’s state at each time step, these methods iteratively approach a solution, aiding in the stabilization and precise solution of equations when delays impact future behavior.

Implicit neural networks can be regarded as extensions of feedforward neural networks that share training parameters across layers through input-injected weight tying. In implicit networks, function evaluation is carried out by solving an FP equation, while backpropagation computes gradients by implicit differentiation. Compared to typical neural networks, implicit models have better memory efficiency and greater flexibility because of these characteristics. However, because their FP equations are nonlinear, implicit networks may experience instability during training.

Numerous publications in learning theory have demonstrated that deep learning models are very effective when equipped with implicit layers. These models replace the concept of layers with a composition rule, which may be a fixed-point scheme or the solution of a differential equation. Well-known deep learning frameworks that use implicit infinite-depth layers include neural ODEs [], implicit deep learning [] and deep equilibrium networks []. In [], the convergence of specific classes of implicit networks to global minima is examined.

The well-posedness and numerical stability of implicit-depth learning models have attracted attention lately. A sufficient spectral condition for the convergence and well-posedness of the Picard scheme associated with the implicit network fixed-point equation is presented in [].

Robust and well-posed implicit neural networks for non-Euclidean norms are built on the basis of contraction theory in []. To obtain robust models, the authors formulate a training problem constrained by the average iteration and the well-posedness condition, using the input–output Lipschitz constant as a regularizer. A new kind of deep learning model based on implicit prediction rules is presented in []. These rules differ from those of conventional neural networks in that they are not obtained through a recursive procedure across multiple layers. Instead, they rely on solving a fixed-point problem in a “state” vector .

The structure of the paper is as follows. First, we present a novel iterative technique for Garcia-Falset mappings and evaluate its convergence and stability in a UCBS. Second, we compare it with several well-known iterative schemes. Finally, we illustrate its applications to various problems, namely a BV problem, a delay DE and a training problem for an implicit neural network.

2. Rate of Convergence

In this section, we prove that our novel iterative scheme converges faster than iteration processes (4)–(6). The proposed iteration is given by:

where , and are in (0,1).

Theorem 1.

Proof.

As proven in Theorem 2 of [], we have:

Let

Thus,

Let

Then,

From Definition 3.4 of [], converges faster than . □
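The comparison criterion used in the proof can be illustrated numerically. The sketch below assumes the cited definition is the usual ratio test: a sequence $a_n \to p$ converges faster than $b_n \to p$ when $|a_n - p| / |b_n - p| \to 0$. The sequences are hypothetical stand-ins, not the schemes of Theorem 1: $b_n$ is the Picard orbit of $T = \cos$ and $a_n$ applies $T$ twice per step.

```python
import math


def picard_orbit(T, x0, n_steps):
    """Return [x0, T(x0), T(T(x0)), ...] with n_steps applications of T."""
    xs = [x0]
    for _ in range(n_steps):
        xs.append(T(xs[-1]))
    return xs


T = math.cos                                  # hypothetical test map
N = 25
p = picard_orbit(T, 1.0, 500)[-1]             # reference fixed point
b_seq = picard_orbit(T, 1.0, N)               # b_n = T^n(x0)
a_seq = picard_orbit(T, 1.0, 2 * N)[::2]      # a_n = T^(2n)(x0)

# Error ratios |a_n - p| / |b_n - p| for n = 1, ..., N.
ratios = [abs(a_seq[n] - p) / abs(b_seq[n] - p) for n in range(1, N + 1)]
print(f"first ratio: {ratios[0]:.3e}, last ratio: {ratios[-1]:.3e}")
```

The ratios shrink towards zero as $n$ grows, which is the numerical signature of the faster sequence in the sense of the ratio test.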

Remark 2.

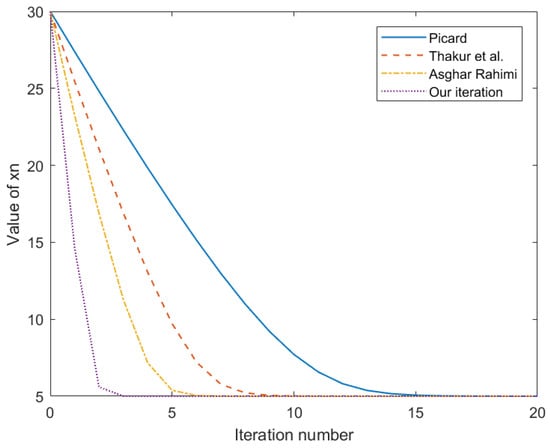

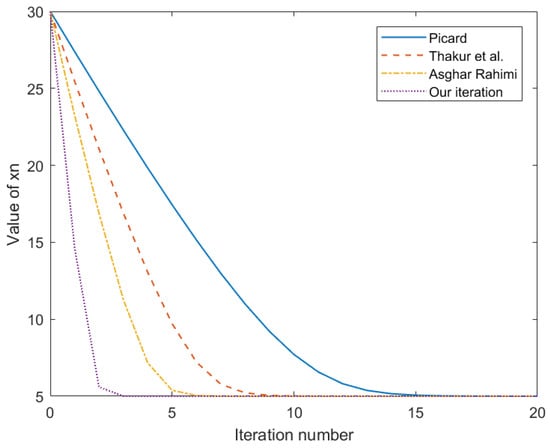

We now demonstrate with an example that our novel iteration (9) converges at a greater rate than Picard (4), Thakur (5) and Asghar Rahimi iteration processes (6).

Example 2.

Consider to be a mapping defined by for any . Choose , with an initial guess . It can be easily seen that is a fixed point of T. Table 1 and Figure 1 below show the comparison of all the iterative schemes converging to a fixed point of T in 21 iterations.

Table 1.

Comparing iteration convergence.

Figure 1.

Convergence rate of iterative schemes [].

3. Convergence Results for Garcia-Falset Mapping

Lemma 1.

Let be a convex and closed subset of a Banach space S and let be a mapping with condition (E). If is defined by (9), then exists for every fixed point p provided that .

Proof.

Recall that . By Lemma 6 of [], we have:

so that

and

Thus,

Hence, is bounded and nonincreasing for every . Thus, exists. □

Lemma 2.

Let be a convex and closed subset of a Banach space S and be a Garcia-Falset mapping. Suppose is defined by (9) and , then

.

Proof.

From Lemma 1, for each , exists. Suppose that there exists , such that:

From the proof of Lemma 1 we obtain that . Accordingly, one has

Now p is the fixed point, and by Lemma 6 of [], we obtain that

Again, by the proof of Lemma 1, we obtain that . So,

Hence,

So,

From (18), we have

Hence,

4. Stability

In this section, we establish the stability for Garcia-Falset mappings via our novel iteration process (9).

Theorem 2.

The iterative process defined by Equation (9) is T-stable in the sense of Harder and Hicks [].

Proof.

Suppose is an arbitrary sequence in C and is the sequence generated by (9) converging to a fixed point p (by Theorem 1) and for all . We have to prove that which implies that . Suppose ; then, by iteration process (9), we have

since

and

we obtain .

Define . Then, . Since , we have , i.e., . Conversely, suppose , we have:

This implies that . Hence, iteration process (9) is T-stable. □
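The notion of T-stability can be illustrated with a small numerical experiment. The sketch below does not use scheme (9); it assumes the plain Picard process $f(T, s) = T(s)$ with the hypothetical map $T = \cos$ and an assumed perturbation $\delta_n = 1/(n+1)^2$. It builds a perturbed sequence $s_{n+1} = T(s_n) + \delta_n$, records $\epsilon_n = |s_{n+1} - T(s_n)|$, and checks that $\epsilon_n \to 0$ goes together with $s_n \to p$.

```python
import math


def perturbed_picard(T, s0, n_steps):
    """Run s_{n+1} = T(s_n) + delta_n with delta_n = 1 / (n + 1)^2 (assumed)."""
    s = s0
    eps = []
    for n in range(n_steps):
        delta = 1.0 / (n + 1) ** 2
        s_next = T(s) + delta
        eps.append(abs(s_next - T(s)))  # epsilon_n in the Harder-Hicks sense
        s = s_next
    return s, eps


# Reference fixed point of cos, obtained by unperturbed Picard iteration.
p = 1.0
for _ in range(500):
    p = math.cos(p)

s_final, eps = perturbed_picard(math.cos, 1.0, 2000)
print(f"|s_n - p| = {abs(s_final - p):.2e}, last epsilon_n = {eps[-1]:.2e}")
```

Both printed quantities are small, consistent with stability of the Picard process for this contraction; the experiment is a sanity check under the stated assumptions, not a substitute for the proof above.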

5. Application

Thermal analysis and engineering both make extensive use of the heat equation, which describes the temperature distribution over time in a given region. Delay differential equations extend conventional differential equations by adding delays and can be used for systems in which past states affect future behavior, such as population dynamics. Implicit neural networks define their outputs through implicit functions, which can yield reliable and effective solutions to challenging problems.

5.1. Heat Equation

Consider the following one-dimensional heat equation:

The initial and homogeneous boundary conditions are given as follows:

and

is a continuous function. Utilizing iterative scheme (9), a solution of the problem (24) is approximated with the following assumptions:

Theorem 3.

Proof.

satisfies (24) only if it satisfies the following equation:

Let and using (27), we obtain

Such that

Example 3.

Consider the following problem:

with initial and boundary conditions given as follows:

and

The problem (33) has an exact solution given by:

is defined by:

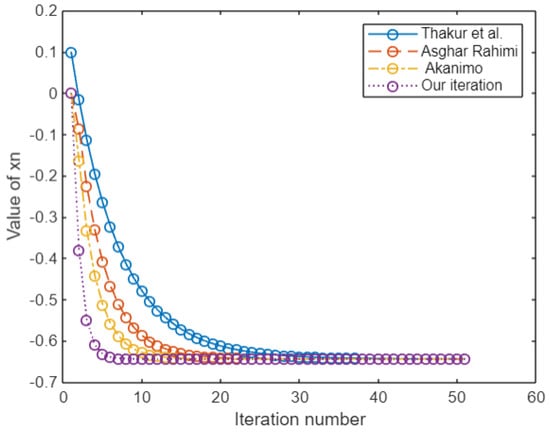

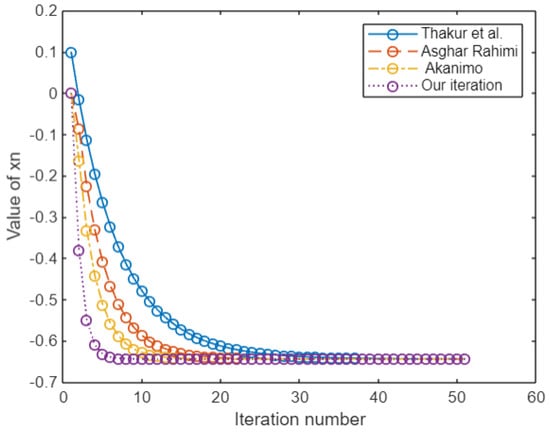

The iterative scheme converges to (34) for the operator defined in Equation (35), as shown in Table 2 and Figure 2.

Table 2.

Comparing iteration convergence.

Figure 2.

Comparison of iteration processes convergence [].

5.2. Delay Differential Equations

A delay differential equation (DDE) is a differential equation that includes terms depending on past values of the solution. It takes the following form:

$$y'(t) = f\big(t,\, y(t),\, y(t - \tau)\big),$$

where $y(t)$ is the unknown function of time t, $\tau > 0$ is the delay parameter and f is a function that describes how the rate of change of y depends on both the current state and the state at the earlier time $t - \tau$. This inclusion of past states allows DDEs to model systems where historical information impacts future dynamics, such as in biological, engineering or economic processes.
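A DDE of this form can be recast as a fixed-point problem for the integral operator $(Ty)(t) = \varphi(t_0) + \int_{t_0}^{t} f(s, y(s), y(s - \tau))\,ds$, with $y = \varphi$ on the history interval. The sketch below applies the plain Picard iteration to a discretized version of this operator; it is not scheme (9). The test equation $y'(t) = -y(t) - 0.5\,y(t - 1)$ with history $\varphi \equiv 1$ on $[0, 0.4]$, the grid size and the function name `picard_dde` are assumptions made for illustration.

```python
import numpy as np


def picard_dde(f, phi, t0, b, tau, n_grid=201, tol=1e-10, max_iter=100):
    """Picard iteration for y'(t) = f(t, y(t), y(t - tau)), y = phi for t <= t0."""
    t = np.linspace(t0, b, n_grid)
    y0 = float(phi(np.asarray(t0)))
    y = np.full_like(t, y0)

    def delayed(y_cur):
        s = t - tau
        # Use the history phi where s < t0, otherwise interpolate the iterate.
        return np.where(s < t0, phi(np.minimum(s, t0)), np.interp(s, t, y_cur))

    for k in range(1, max_iter + 1):
        g = f(t, y, delayed(y))
        # Cumulative trapezoidal rule for the integral from t0 to each grid point.
        integral = np.concatenate(([0.0], np.cumsum(0.5 * (g[1:] + g[:-1]) * np.diff(t))))
        y_new = y0 + integral
        if np.max(np.abs(y_new - y)) < tol:
            return t, y_new, k
        y = y_new
    return t, y, max_iter


def phi(s):
    # Hypothetical constant history function.
    return np.ones_like(s, dtype=float)


def f(t, y, y_delay):
    # Hypothetical right-hand side, Lipschitz in (y, y_delay).
    return -y - 0.5 * y_delay


t, y, n_iter = picard_dde(f, phi, t0=0.0, b=0.4, tau=1.0)
exact = -0.5 + 1.5 * np.exp(-t)  # closed form, valid while t - tau < 0
print(f"iterations: {n_iter}, max error: {np.max(np.abs(y - exact)):.2e}")
```

Because the delay exceeds the interval length, the delayed term only sees the history, which gives the closed-form solution used for the error check. For longer intervals the same operator applies, but contraction in the sup norm is no longer guaranteed by this simple argument.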

In this subsection, we use the novel iterative scheme (9) to approximate the solution of a DDE.

Consider , where the Banach space [] of all continuous functions on closed interval has the Chebyshev norm defined as follows:

We consider the following delay differential equation:

with initial condition

We further suppose that the following conditions are satisfied:

- ,

- There exists such that:

- .

We present the following result as a generalization of the result of Coman et al. [].

Theorem 4.

Proof.

and

Let . We show that as for each

Now, for each , we have

Moreover,

Finally,

□

Remark 4.

By condition 5 and as and , the conditions of Lemma 3 of [] are satisfied. Hence, .

5.3. Implicit Neural Network

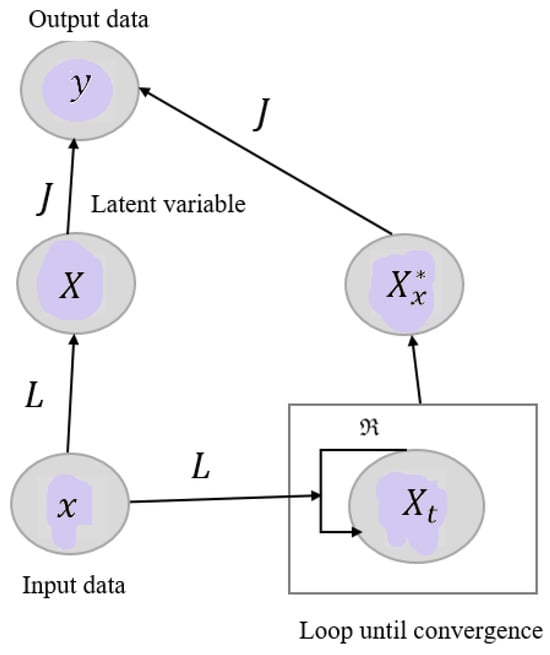

In this section, we present a modified implicit neural network which can be regarded as an extension of the traditional feed-forward neural network.

Deep equilibrium (DEQ) models, an emerging class of implicit models that map inputs to fixed points in neural networks, are gaining popularity in the deep learning community. A deep equilibrium (DEQ) model deviates from classical depth by solving for the fixed point of a single nonlinear layer R. This structure allows for the decoupling of the layer’s internal structure (which regulates representational capacity) from how the fixed point is determined (which affects inference-time efficiency), which is typically carried out using classic techniques.
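The forward pass of such a model can be sketched in a few lines. The code below is a minimal illustration under stated assumptions, not the network of this paper: the layer is taken to be $R(z; x) = \tanh(Wz + Ux + b)$, the matrix $W$ is rescaled to spectral norm 0.5 so that $R$ is a contraction in $z$, and the fixed point is found by plain Picard iteration. The names `implicit_layer`, `W`, `U` and `b` and all sizes are hypothetical.

```python
import numpy as np


def implicit_layer(x, W, U, b, tol=1e-10, max_iter=1000):
    """Solve z = tanh(W z + U x + b) by Picard iteration, starting from z = 0."""
    z = np.zeros(W.shape[0])
    for k in range(1, max_iter + 1):
        z_next = np.tanh(W @ z + U @ x + b)
        if np.linalg.norm(z_next - z) < tol:
            return z_next, k
        z = z_next
    return z, max_iter


rng = np.random.default_rng(0)
W = rng.standard_normal((4, 4))
W *= 0.5 / np.linalg.norm(W, 2)   # rescale to spectral norm 0.5 (contraction in z)
U = rng.standard_normal((4, 2))
b = rng.standard_normal(4)
x = np.array([1.0, -0.5])

z_star, n_iter = implicit_layer(x, W, U, b)
residual = np.linalg.norm(z_star - np.tanh(W @ z_star + U @ x + b))
print(f"iterations: {n_iter}, fixed-point residual: {residual:.2e}")
```

Since $\tanh$ is 1-Lipschitz, bounding the spectral norm of `W` below 1 is one simple sufficient condition for a unique fixed point; other solvers or iteration schemes could replace the Picard loop without changing the model.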

We aim to build the neural network shown in Figure 3, which maps a data space x to an inference space y. The implicit component of the network uses a latent space X, and data are mapped from x to X through L maps of this latent space. We define a network operator T that transforms by:

Figure 3.

Feed-forward networks act by computing . Implicit networks add a fixed point condition using R. When R is Garcia-Falset, repeatedly applying R to update a latent variable converges to a fixed point .

The objective is to find the unique fixed point of given input data x. We will then use a final mapping to transfer to the inference space y. Because of this, we can create an implicit network N by

Implicit models specify their outputs as solutions to nonlinear dynamical systems, as opposed to stacking a number of operators hierarchically. For instance, the outputs of DEQ models, which are the subject of this work, are defined as fixed points (i.e., equilibria) of a layer R and input x; that is, output

Theorem 5.

Let be a convex and closed subset of a UCBS and be a contraction mapping (activation function). Then, Equation (53) models a well-posed and robust neural network provided that the weights {}, {}, {}, {} and biases {} are in [δ, 1] for any and some .

Proof.

Under the given conditions, Equation (53) models a well-posed system, since the existence of a unique fixed point of the contraction mapping is guaranteed []. The robustness of the system follows from Theorem 2, where the iterative scheme is proved to be T-stable, which shows that small perturbations to the system do not significantly affect the output. □

Example 4.

Training an implicit neural network. Suppose we want to build a neural network that predicts an exam score from the number of hours studied, assuming that whoever studies for hours tends to achieve an exam score of , which can be defined by function . For this purpose, we train a network that takes an input of 3 (hours) and gives an output of 10 (score).

Key steps for solving iteration using implicit neural networks:

- Formulate the Problem: Represent the task as a fixed-point equation.

- Initialization: Define an initial guess for x and determine the conditions for convergence.

- Backpropagation through Iterations: Implement the backpropagation process, considering the differentiation of the fixed-point iteration with respect to the parameters using implicit differentiation.

- Convergence and Stability Analysis: Analyze the convergence behavior and stability of the iterative process, ensuring that the method reliably finds a solution within the desired tolerance.
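The steps above can be sketched for a scalar toy model. This is an illustration under assumptions and not the network (53): the layer is $z = \tanh(wz + ux + b)$, the output is $y = vz$, the loss is the squared error, and the fixed point is found by Picard iteration rather than by scheme (9). Gradients use implicit differentiation of the fixed-point equation, for example $\partial z / \partial w = \sigma' z / (1 - \sigma' w)$ with $\sigma' = 1 - z^2$. All parameter names, initial values and the learning rate are hypothetical.

```python
import math


def solve_fixed_point(w, u, b, x, tol=1e-12, max_iter=500):
    """Picard iteration for z = tanh(w z + u x + b); contraction when |w| < 1."""
    z = 0.0
    for _ in range(max_iter):
        z_new = math.tanh(w * z + u * x + b)
        if abs(z_new - z) < tol:
            return z_new
        z = z_new
    return z


def train(x, target, lr=0.1, steps=500):
    w, u, b, v = 0.5, 0.5, 0.0, 1.0   # hypothetical initial parameters
    losses = []
    for _ in range(steps):
        z = solve_fixed_point(w, u, b, x)
        y = v * z
        losses.append((y - target) ** 2)
        dL_dy = 2.0 * (y - target)
        s = 1.0 - z * z                # tanh'(a) at the fixed point, since z = tanh(a)
        denom = 1.0 - s * w            # from differentiating z = tanh(w z + u x + b)
        dz_dw, dz_du, dz_db = s * z / denom, s * x / denom, s / denom
        # Compute all gradients first, then update the parameters together.
        grad_w = dL_dy * v * dz_dw
        grad_u = dL_dy * v * dz_du
        grad_b = dL_dy * v * dz_db
        grad_v = dL_dy * z
        w, u, b, v = w - lr * grad_w, u - lr * grad_u, b - lr * grad_b, v - lr * grad_v
        w = max(-0.9, min(0.9, w))     # keep the layer a contraction in z
    return (w, u, b, v), losses


params, losses = train(x=1.0, target=1.0)   # normalized input and output
print(f"initial loss: {losses[0]:.4f}, final loss: {losses[-1]:.2e}")
```

Clipping `w` is one simple way to preserve well-posedness during training; it is a design choice of this sketch, not a requirement stated in the text.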

Given:

- Maximum input (max) = 3;

- Minimum input (min) = 0;

- Input value = 3.

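Normalizing the input and output by min–max scaling:

$$x_{\text{norm}} = \frac{x - \min}{\max - \min},$$

we get:

$$x_{\text{norm}} = \frac{3 - 0}{3 - 0} = 1.$$

By normalization, input 3 becomes 1, and similarly, output 10 also becomes 1. We start the example with the following: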

- Input ;

- Weights: ;

- Bias: ;

- ;

- Learning rate .

Novel Proposed Method:

We will compute using (53). For this, let us start by taking the activation function as , which is a contraction on .

At Time Step:

Now applying our proposed iterative method, we obtain:

This will be output at time step

Compute Loss:

The mean square error cost function is defined as follows:

where:

- x: input;

- : output at time step t;

- W: weights collected in the network;

- b: biases;

- : usual length of vector v.

Let us use the Mean Squared Error (MSE) . The true output is

Backpropagation:

Now, by using backpropagation, we will update weights and biases.

Gradient Learning Algorithm:

We will update weights and biases using the gradient learning algorithm. This technique can be written as follows:

Updating :

Updating :

In a similar way, we will calculate other weights and biases as well.

Updated Weights and Biases:

| Weights | Biases |

At Time Step:

We will calculate output by using updated weights and biases.

Using the same procedure, we calculated

Compute :

Let us use the Mean Squared Error (MSE) .

By repeating the same procedure:

At Time Step :

After 6 iterations, our model is trained and we obtain the following loss:

Now, as our model is trained, we will check at and see what the output for this will be. Output y at normalized input is

We obtained the estimated output (score) for an input of hours.

Section 2 shows that the iterative scheme (9) converges faster than several existing schemes. Since the implicit network (53) computes its output with this scheme, this suggests that the trained network can also converge faster than traditional networks such as FNNs and RNNs.

6. Conclusions

This research presents a novel iterative scheme that converges faster than the iterations of Picard [], Thakur et al. [], Asghar Rahimi [], Sintunavarat [], Jubair et al. [], Okeke and Abbas [], Agarwal et al. [] and Akanimo []. The comparison of (9) with other iterative schemes is also demonstrated through Example 2. Finally, applications arising from various fields of science are given to show the applicability of our scheme. As future work, it would be very interesting to study the applicability of this iterative scheme to nonlinear ODEs and PDEs after discretization. For similar work, see [].

Author Contributions

Conceptualization, Methodology, Supervision, Review: Q.K. Original draft preparation and editing: S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Alagoz, O.; Birol, G.; Sezgin, G. Numerical reckoning fixed points for Barinde mappings via a faster iteration process. Facta Univ. Ser. Math. Inform. 2008, 33, 295–305.

- Ullah, K.; Ahmad, J.; Arshad, M.; Ma, Z. Approximating fixed points using a faster iterative method and application to split feasibility problems. Computation 2021, 9, 90.

- Censor, Y.; Elfving, T. A multiprojection algorithm using Bregman projections in a product space. Numer. Algorithms 1994, 8, 221–239.

- Byrne, C. A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Prob. 2004, 20, 103–120.

- López, G.; Márquez, V.M.; Xu, H.K. Halpern iteration for Nonexpansive mappings. Contemp. Math. 2010, 513, 211–231.

- Kirk, W.A. A fixed point Theorem for mappings which do not increase distances. Amer. Math. Mon. 1965, 72, 1004–1006.

- Browder, F.E. Nonexpansive nonlinear operators in a Banach space. Proc. Natl. Acad. Sci. USA 1965, 54, 1041–1044.

- Göhde, D. Zum Prinzip der kontraktiven Abbildung. Math. Nachr. 1965, 30, 251–258.

- Goebel, K. An elementary proof of the fixed-point Theorem of Browder and Kirk. Michigan Math. J. 1969, 16, 381–383.

- Suzuki, T. Fixed point Theorems and convergence Theorems for some generalized nonexpansive mappings. J. Math. Anal. Appl. 2008, 340, 1088–1095.

- Garcia-Falset, J.; Llorens-Fuster, E.; Suzuki, T. Fixed point theory for a class of generalized nonexpansive mappings. J. Math. Anal. Appl. 2011, 375, 185–195.

- Bagherboum, M. Approximating fixed points of mappings satisfying condition (E) in Busemann space. Numer. Algorithms 2016, 71, 25–39.

- Kitkuan, D.; Muangchoo, K.; Padcharoen, A.; Pakkaranang, N.; Kumam, P. A viscosity forward-backward splitting approximation method in Banach spaces and its application to convex optimization and image restoration problems. Comput. Math. Methods 2020, 2, e1098.

- Kumam, W.; Pakkaranang, N.; Kumam, P.; Cholamjiak, P. Convergence analysis of modified Picard’s hybrid iterative algorithms for total asymptotically nonexpansive mappings in Hadamard spaces. Int. J. Comput. Math. 2020, 97, 157–188.

- Sunthrayuth, P.; Pakkaranang, N.; Kumam, P.; Thounthong, P.; Cholamjiak, P. Convergence Theorems for generalized viscosity explicit methods for nonexpansive mappings in Banach spaces and some applications. Mathematics 2019, 7, 161.

- Thounthong, P.; Pakkaranang, N.; Cho, Y.J.; Kumam, W.; Kumam, P. The numerical reckoning of modified proximal point methods for minimization problems in non-positive curvature metric spaces. J. Nonlinear Sci. Appl. 2020, 97, 245–262.

- Picard, E. Mémoire sur la théorie des équations aux dérivées partielles et la méthode des approximations successives. J. Math. Pures Appl. 1890, 6, 145–210.

- Mann, W.R. Mean value methods in iteration. Proc. Amer. Math. Soc. 1953, 4, 506–510.

- Ishikawa, S. Fixed points by a new iteration method. Proc. Amer. Math. Soc. 1974, 44, 147–150.

- Noor, M.A. New approximation schemes for general variational inequalities. J. Math. Anal. Appl. 2000, 251, 217–229.

- Abbas, M.; Nazir, T. A new faster iteration process applied to constrained minimization and feasibility problems. Mate. Vesnik 2014, 66, 223–234.

- Thakur, B.S.; Thakur, D.; Postolache, M. A new iteration scheme for approximating fixed points of nonexpansive mappings. Filomat 2015, 30, 2711–2720.

- Rahimi, A.; Rezaei, A.; Daraby, B.; Ghasemi, M. A new faster iteration process to fixed points of generalized alpha-nonexpansive mappings in Banach spaces. Int. J. Nonlinear Anal. Appl. 2024, 15, 1–10.

- Okeke, G.A.; Udo, A.V.; Alqahtani, R.T.; Kaplan, M.; Ahmed, W.E. A novel iterative scheme for solving delay differential equations and third order boundary value problems via Green’s functions. AIMS Math. 2024, 9, 6468–6498.

- Chen, T.Q.; Rubanova, Y.; Bettencourt, J.; Duvenaud, D. Neural ordinary differential equations. In Proceedings of the Advances in Neural Information Processing Systems 31 (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Volume 31.

- Ghaoui, E.; Gu, F.; Travacca, B.; Askari, A.; Tsai, A. Implicit deep learning. Siam J. Math. Data Sci. 2021, 3, 930–950.

- Bai, S.; Kolter, J.Z.; Koltun, V. Deep equilibrium models. arXiv 2019, arXiv:1909.01377.

- Kawaguchi, K. On the theory of implicit deep learning: Global convergence with implicit layers. arXiv 2021, arXiv:2102.07346.

- Jafarpour, S.; Davydov, A.; Proskurnikov, A.V.; Bullo, F. Robust implicit networks via non-Euclidean contractions. arXiv 2022, arXiv:2106.03194.

- Sahu, D.R. Applications of the S-iteration process to constrained minimization problems and split feasibility problems. Fixed Point Theory 2011, 12, 187–204.

- Gopi, R.; Pragadeeswarar, V.; De La Sen, M. Thakur’s Iterative Scheme for Approximating Common Fixed Points to a Pair of Relatively Nonexpansive Mappings. J. Math. 2022, 2022, 55377686.

- Harder, A.M.; Hicks, T.L. A Stable Iteration Procedure for Nonexpansive Mappings. Math. Japon. 1988, 33, 687–692.

- Heammerlin, G.; Hoffmann, K.H. Numerical Mathematics; Springer Science and Business Media: Berlin/Heidelberg, Germany, 1991.

- Coman, G.; Rus, I.; Pavel, G.; Rus, I. Introduction in the Operational Equations Theory; Dacia: Cluj-Napoca, Romania, 1976.

- Weng, X. Fixed point iteration for local strictly pseudo-contractive mapping. Proc. Am. Math. Soc. 1991, 113, 727–731.

- Sintunavarat, W.; Pitea, A. On a new iteration scheme for numerical reckoning fixed points of Berinde mappings with convergence analysis. J. Nonlinear Sci. Appl. 2016, 9, 2553–2562.

- Ali, F.; Ali, J. Convergence, stability and data dependence of a new iterative algorithm with an application. Comput. Appl. Math. 2020, 39, 267.

- Okeke, G.A.; Abbas, M. A solution of delay differential equations via Picard, Krasnoselskii hybrid iterative process. Arab. J. Math. 2017, 6, 21–29.

- Agarwal, R.P.; O’Regan, D.; Sahu, D. Fixed Point Theory for Lipschitzian-Type Mappings with Applications; Springer: Berlin/Heidelberg, Germany, 2009.

- Wang, X. Fixed-Point Iterative Method with Eighth-Order Constructed by Undetermined Parameter Technique for Solving Nonlinear Systems. Symmetry 2021, 13, 863.


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).