Abstract

We investigate the properties of matrices that emerge from the application of Fourier series to Jacobi matrices. Specifically, we focus on functions defined by the coefficients of a Fourier series expressed in orthogonal polynomials. In the operational formulation of integro-differential problems, these infinite matrices play a fundamental role. We have derived precise calculation formulas for their elements, enabling exact computation of these operational matrices. Numerical results illustrate the effectiveness of our approach.

Keywords:

orthogonal polynomials; Fourier series; Jacobi matrix; functions of matrices; spectral methods

MSC:

33C45; 42A16; 15A16; 65M70; 65N35

1. Introduction

This work presents a comprehensive investigation of matrices generated through the application of Fourier series to Jacobi matrices, with a particular focus on functions defined by Fourier series coefficients expressed in orthogonal polynomials. Precise formulas for computing the elements of these matrices are derived, which are crucial for the operational formulation of integro-differential problems. The fundamental properties of these matrices are highlighted, emphasizing their algebraic representation within functional operators. In numerical analysis, that representation gives rise to operational methods, among which spectral methods offer a wide range of numerical tools for approximating solutions to integro-differential problems.

Let be, at least approximately, a linear operator, with and being adequate function spaces, and let

be the problem to be solved, where is given and is the sought solution, at least approximately. Assuming that , the space of all real polynomials, is dense in , then y can be represented by a Fourier series:

where is an orthogonal polynomial sequence (OPS) and is the coefficients vector. The orthogonality of ensures the existence of an inner product such that , is the Kronecker delta, and are the Fourier coefficients of y [1]. In addition, if is also dense in , writing , then the problem of finding y in (1), given and f, can be reduced to building , the matrix operator such that

and solving the algebraic problem Here, f corresponds, naturally, to the vector of Fourier coefficients of function f.

Building is often a challenging task, involving numerically unstable and computationally heavy operations. Many mathematical problems can be modeled using derivatives and primitives, together with algebraic operations. For those cases, the matrix can be built from three building blocks: the matrix , representing multiplication in ; the matrix , representing differentiation; and the matrix , representing primitivation. Formally,

The linear integro-differential operator can be cast in the form

with , where the negative indices represent primitives and the coefficients. Then, in matrix form,

where corresponds to for negative powers. If are functions given by its Fourier series, then

The paper is structured as follows: In Section 2 we derive properties of matrix polynomials ; in Section 3 we explore functions of the multiplication matrix; in Section 4 we provide numerical results that demonstrate the effectiveness of the proposed approach and the practical applicability of the derived formulas; and we conclude in Section 5.

2. Polynomials of Jacobi Matrices

Using the well-known properties of orthogonal polynomials, we derive properties of matrix polynomials. In this context, we consider matrix polynomials as matrices transformed by polynomials with real scalar coefficients.

2.1. Orthogonal Polynomials

One characteristic property of orthogonal polynomials is the three-term recurrence relation. Let be an OPS satisfying

and let be a square real or complex matrix. We call , the set of matrices defined by (4):

with and the null and the identity matrices, respectively. Of course, since and are commutative matrices ( is a linear combination of powers of ), we can equivalently consider

For our purpose, we are interested in the case , defined by (2) and (3), that is, with , and so, from (4),

is the Jacobi matrix associated with .

When considering the powers of such matrices, it is important to remember that they are infinite matrices. However, since each row and column of contains only a finite number of non-zero elements, their products are well defined. Consequently, each power with is well defined and has only a finite number of non-zero elements in each row and column.

In computational applications, to deal with we have to truncate it to an square matrix for some . One consequence of such a truncation operation is that elements outside the main block of can differ from the corresponding ones in . In practice, if we need to evaluate the main block of we must evaluate and truncate the resulting square block.
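The truncation effect described above can be checked numerically. The following is a minimal sketch, not the authors' code: it assumes Chebyshev polynomials of the first kind as a test case, a convention in which row k of the matrix holds the coefficients of x·T_k (the paper's matrix may be the transpose), and a padding of p extra rows and columns before taking the p-th power. The helper name `chebyshev_t_jacobi` is hypothetical.

```python
import numpy as np

def chebyshev_t_jacobi(n):
    """n x n truncation; row k holds the T-coefficients of x*T_k (assumed convention)."""
    M = np.zeros((n, n))
    for k in range(n):
        if k + 1 < n:
            M[k, k + 1] = 1.0 if k == 0 else 0.5   # x*T_0 = T_1
        if k > 0:
            M[k, k - 1] = 0.5                      # x*T_k = (T_{k+1} + T_{k-1})/2
    return M

n, p = 6, 3

# A much larger truncation stands in for the infinite matrix.
reference = np.linalg.matrix_power(chebyshev_t_jacobi(40), p)[:n, :n]

# Power of the n x n truncation: wrong near the bottom-right corner.
naive = np.linalg.matrix_power(chebyshev_t_jacobi(n), p)

# Power of a padded truncation, then cut back to the main n x n block.
padded = np.linalg.matrix_power(chebyshev_t_jacobi(n + p), p)[:n, :n]

print("padded block matches:", np.allclose(padded, reference))
print("naive block matches: ", np.allclose(naive, reference))
```

Because the matrix is tridiagonal, an entry of the p-th power only involves indices at most p away from its row and column, which is why padding by p is sufficient in this sketch.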

2.2. Linearization Coefficients

From now on, are the set of matrices defined by (5), with defined by (7). In that case, are matrices of linearization coefficients, in the sense of:

Proposition 1.

If is an OPS, is its associated Jacobi matrix and , then

Proof.

Since, formally,

then , and by linearity

□

In the context of orthogonal polynomial theory, those values are called linearization coefficients [2,3,4] because they solve the linearization problem

It has been proven [2,3] that when is a classical OPS the coefficients satisfy a second-order recurrence relation in the indices k. Furthermore, conditions have been established to ensure that has only non-negative entries [4].
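As an illustration of Proposition 1, the sketch below builds a polynomial of the Jacobi matrix through the three-term recurrence and compares its entries with linearization coefficients computed independently by `numpy.polynomial.chebyshev.chebmul`. It assumes Chebyshev polynomials of the first kind and the row convention "row k holds the coefficients of x·T_k", so that row k of T_j(M) holds the coefficients of T_j·T_k; the paper's indexing may be transposed. The helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def chebyshev_t_jacobi(n):
    """n x n truncation; row k holds the T-coefficients of x*T_k (assumed convention)."""
    M = np.zeros((n, n))
    for k in range(n):
        if k + 1 < n:
            M[k, k + 1] = 1.0 if k == 0 else 0.5
        if k > 0:
            M[k, k - 1] = 0.5
    return M

def cheb_poly_of_matrix(j, M):
    """T_j(M) from T_0(M) = I, T_1(M) = M, T_{i+1}(M) = 2 M T_i(M) - T_{i-1}(M)."""
    prev, cur = np.eye(len(M)), M.copy()
    if j == 0:
        return prev
    for _ in range(j - 1):
        prev, cur = cur, 2 * M @ cur - prev
    return cur

def unit(k):
    """Coefficient vector of the single basis polynomial T_k."""
    e = np.zeros(k + 1)
    e[k] = 1.0
    return e

j, n = 3, 5
# Pad by j before evaluating, then keep the main n x n block.
Tj = cheb_poly_of_matrix(j, chebyshev_t_jacobi(n + j))[:n, :n]

expected = np.zeros((n, n))
for k in range(n):
    prod = C.chebmul(unit(j), unit(k))      # T-coefficients of T_j * T_k
    m = min(n, len(prod))
    expected[k, :m] = prod[:m]

print(np.allclose(Tj, expected))
```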

In search of specific cases, some authors have derived explicit formulas for these coefficients. Notable examples include the Legendre polynomials [5,6], the Hermite polynomials [7], and the Jacobi polynomials [8]. However, the formulas in some of these cases involve hypergeometric functions, which are not practical for numerical purposes. The linearization coefficients of the Chebyshev polynomials are particularly noteworthy due to their simplicity. Using the standard notation for the first, second, third, and fourth kinds of Chebyshev polynomials, we have the following results [9,10] for ,

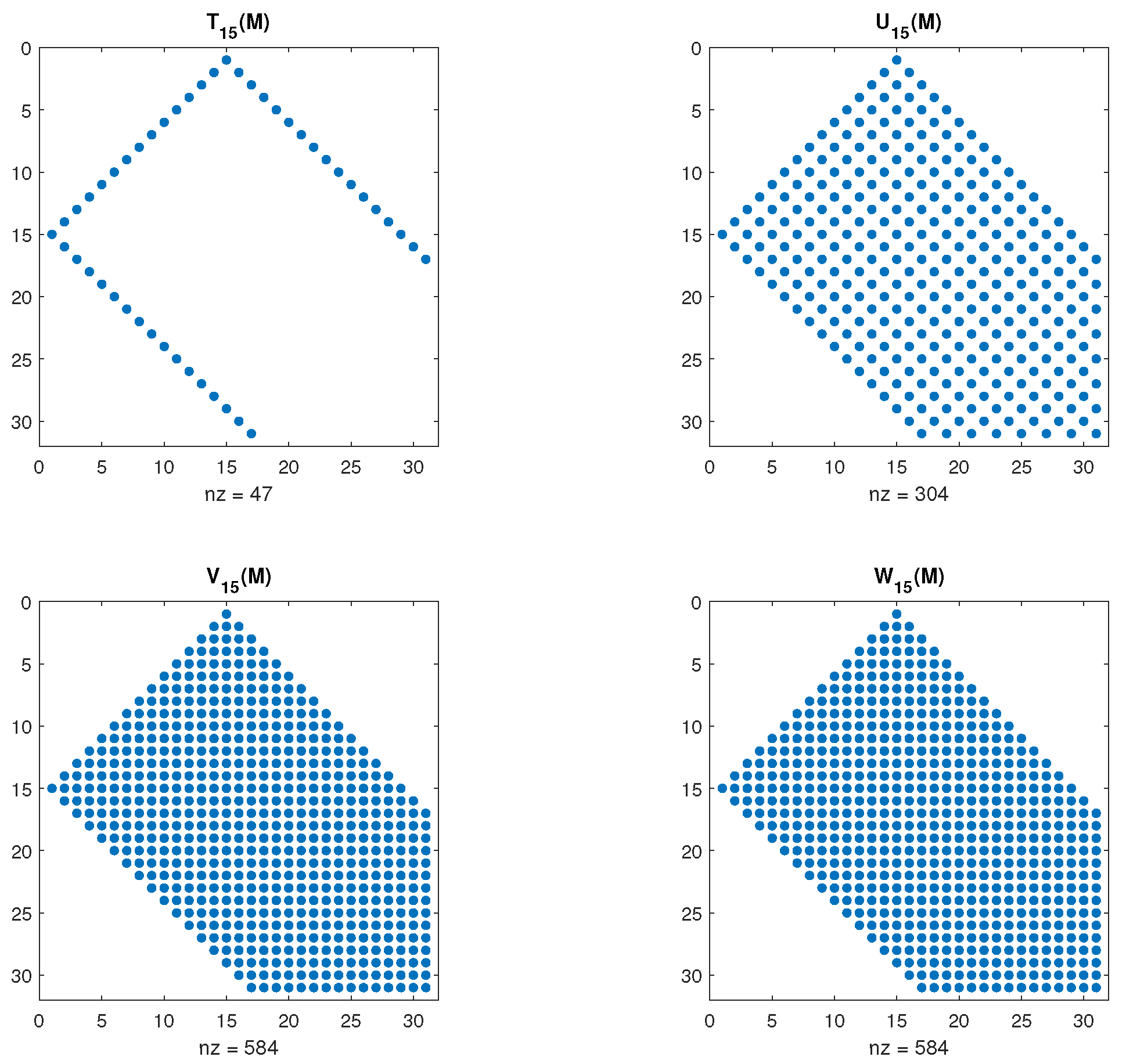

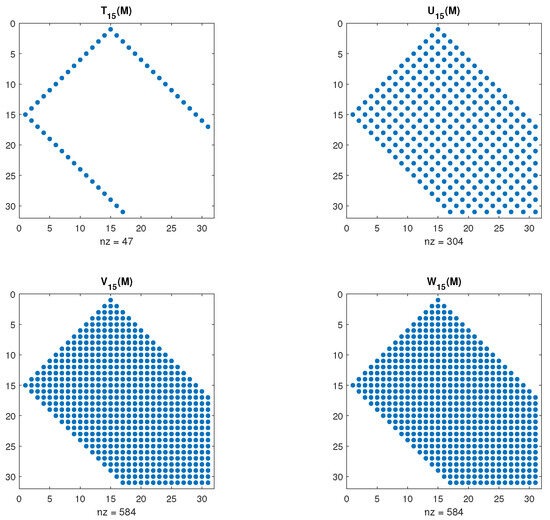

and, in the four cases, . In Figure 1, we can see the sparse structure of matrices for those cases.

Figure 1.

Sparse structure of , truncated to their main block, for T, U, V, and W, the first-, second-, third-, and fourth-kind Chebyshev polynomials, respectively.

This band structure, characterized by zeros outside the diagonals and within the triangular block, , of matrices , is not exclusive to Chebyshev polynomials. In fact, it is a consequence of the general Formula (8), inherited from the three-term recurrence relation (4).

Proposition 2.

If is an OPS satisfying (8), is its associated Jacobi matrix and , then

Proof.

One immediate consequence of those triangular null blocks is that they include square main blocks inside.

Corollary 1.

Let , defined as in Proposition 2, and let be a square main block. If , then is a null matrix.

Proof.

Proposition 2 implies that for any pair such that , and this is valid if and . □
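The null regions can be observed numerically. The sketch below is an illustration under stated assumptions: it uses Legendre polynomials, obtains the linearization coefficients from `numpy.polynomial.legendre.legmul` rather than from the paper's formulas, and tests the standard support condition |j − k| ≤ i ≤ j + k for the coefficient of P_i in P_j·P_k. The threshold j ≥ 2n − 1 used for the null main block follows from that condition and is an assumption about the exact statement of Corollary 1. The helper name is hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as Leg

def linearization_block(j, n):
    """Row k holds the first n Legendre coefficients of P_j * P_k."""
    B = np.zeros((n, n))
    ej = np.zeros(j + 1)
    ej[j] = 1.0
    for k in range(n):
        ek = np.zeros(k + 1)
        ek[k] = 1.0
        prod = Leg.legmul(ej, ek)
        m = min(n, len(prod))
        B[k, :m] = prod[:m]
    return B

n = 6
max_outside = 0.0
for j in range(2 * n + 1):
    B = linearization_block(j, n)
    k, i = np.indices(B.shape)                    # row index k, column index i
    outside = (np.abs(i - k) > j) | (i + k < j)   # off the band, or inside the triangle
    if outside.any():
        max_outside = max(max_outside, np.abs(B[outside]).max())

# For j >= 2n - 1 every entry of the main n x n block has i + k < j.
null_block = linearization_block(2 * n - 1, n)

print("largest entry in the null regions:", max_outside)
print("main block null for j = 2n - 1:", np.allclose(null_block, 0.0, atol=1e-12))
```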

Three additional consequences of Proposition 1 are as follows:

The first one, meaning that each column j of a matrix equals the column k of ,

is a general property. The other two, relating each row j of with row i of ,

and with its transpose,

are both verified if the associated inner product satisfies the wide general property .

Next, we study the more general properties of the matrices , associated with generic OPSs, not limited to the classical ones.

2.3. General Properties of Matrices

First of all, we can verify that any re-normalization in the polynomial sequence leads to similar matrices.

Proposition 3.

Let such that , with and ; and let and be the Jacobi matrices associated with and , respectively. Then, and are related by

with .

Proposition 3 can be utilized to establish a connection between matrices associated with any generic OPS and matrices linked to their respective monic orthogonal polynomial sequences, or to the corresponding orthonormal polynomial sequences.

Corollary 2.

Let be an OPS with leading coefficients , and let be the monic OPS , then

where is the diagonal matrix .

Corollary 3.

Let be an OPS with polynomial norms , and let be the orthonormal polynomial sequence , then

with .

As pointed out in [11], the Jacobi matrix associated with any OPS is similar to the symmetric matrix associated with the corresponding orthonormal sequence. Indeed, is the symmetric matrix

associated with the orthonormal polynomials

Proposition 4.

Let be an orthonormal OPS defined by (12) and let be its associated Jacobi matrix, then are symmetric matrices.

Proof.

Since and are both symmetric matrices, the proof results from

by the induction hypothesis in j and by the symmetry of . □
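The similarity to a symmetric matrix can be sketched for Legendre polynomials. This example assumes the row convention "row k holds the coefficients of x·P_k" and the scaling p_k = sqrt((2k+1)/2)·P_k for the orthonormal sequence on [-1, 1]; neither is taken from the paper's formulas. As a sanity check in the spirit of [11], the eigenvalues of the truncated symmetric matrix are compared with the Gauss–Legendre nodes returned by `numpy.polynomial.legendre.leggauss`.

```python
import numpy as np

def legendre_jacobi(n):
    """Row k: x*P_k = (k+1)/(2k+1) P_{k+1} + k/(2k+1) P_{k-1} (assumed convention)."""
    M = np.zeros((n, n))
    for k in range(n):
        if k + 1 < n:
            M[k, k + 1] = (k + 1) / (2 * k + 1)
        if k > 0:
            M[k, k - 1] = k / (2 * k + 1)
    return M

n = 8
M = legendre_jacobi(n)

# Diagonal scaling to the orthonormal sequence p_k = d_k * P_k.
d = np.sqrt((2 * np.arange(n) + 1) / 2)
J = (d[:, None] * M) / d[None, :]          # J = D M D^{-1}

nodes, _ = np.polynomial.legendre.leggauss(n)
eigs = np.linalg.eigvalsh((J + J.T) / 2)

print("J symmetric:", np.allclose(J, J.T))
print("eigenvalues equal Gauss-Legendre nodes:", np.allclose(eigs, nodes))
```

Since any polynomial of a symmetric matrix is symmetric, the symmetry of J carries over to the polynomials of J, which is the content of Proposition 4 in this finite setting.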

Another general property of matrices is invariance under the orthogonality support of , in the sense of the following proposition:

Proposition 5.

Let be the OPS defined by (4) and be the OPS defined by

with , and for some , . If and are the Jacobi matrices associated with and , respectively, then

Proof.

From , we have

and , since and . □

If is the domain of orthogonality of and is the domain of orthogonality of , then the conditions of Proposition 5 hold, with and , and we obtain for all j.

2.4. Recurrence Relations

Returning to recursive Formulas (5) and (6), taking , we obtain

recursive formulas relating the rows and columns of with those of and . From their element-wise versions, we obtain a recursivity in elements,

for , where whenever at least one index is negative.

From (13) we can take values for a matrix sequence . Recurrence relations (14) are useful in case only a single matrix is needed. For the latter case, we need the first two columns for the initial values.

Proposition 6.

Let be an OPS and its associated Jacobi matrix. If and , then for ,

- (a)

- (b)

Proof.

The proof of (a) is immediate from Proposition 2. (b) results from (a) and (14). □
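When only a single matrix is needed, a recurrence over one index can generate it from its initial row or column. The sketch below is one possible form of such a recurrence, not necessarily identical to (14): it assumes Laguerre polynomials with x·L_k = −(k+1) L_{k+1} + (2k+1) L_k − k L_{k−1}, stores the coefficients of L_j·L_k in row k (the paper works with columns), and starts from L_j·L_0 = L_j. Function names are hypothetical, and the result is checked against `numpy.polynomial.laguerre.lagmul`.

```python
import numpy as np
from numpy.polynomial import laguerre as Lag

def laguerre_jacobi(N):
    """Row k holds the Laguerre coefficients of x*L_k (assumed convention)."""
    M = np.zeros((N, N))
    for k in range(N):
        M[k, k] = 2 * k + 1
        if k > 0:
            M[k, k - 1] = -k
        if k + 1 < N:
            M[k, k + 1] = -(k + 1)
    return M

def linearization_rows(j, n):
    """Rows k = 0..n-1 with the coefficients of L_j * L_k, built row by row."""
    W = j + n                      # widest row has degree j + n - 1
    M = laguerre_jacobi(W)
    R = np.zeros((n, W))
    R[0, j] = 1.0                  # L_j * L_0 = L_j
    for k in range(n - 1):
        a, b = M[k, k + 1], M[k, k]
        prev = M[k, k - 1] * R[k - 1] if k > 0 else 0.0
        # L_j L_{k+1} = (x L_j L_k - b_k L_j L_k - c_k L_j L_{k-1}) / a_k
        R[k + 1] = (R[k] @ M - b * R[k] - prev) / a
    return R

j, n = 3, 5
R = linearization_rows(j, n)
print(R[1, :j + 2])                # coefficients of L_3 * L_1
```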

Keeping in mind that is a band matrix, from (14) we obtain explicit formulas for the sub-diagonals j and .

Proposition 7.

Let be an OPS, and its leading coefficients, and , then for ,

- (a)

- ;

- (b)

- .

Proof.

For the proof, we need the well-known relations [9]

and, since , we have

From (14) and Proposition 2,

This is a recurrence relation that, with the initial value given in Proposition 6, results in

So (a) is proved.

Similar results can be obtained for the first two non-null anti-diagonals.

Proposition 8.

In the conditions of Proposition 7, for ,

- (a)

- , for ;

- (b)

- , for .

Proof.

Since , and belong to the null triangular block of , from (14) we have, for ,

The proof of (a) results from [9]

from which we have

We arrive at (b) from

Using (a) and iterating in k, we obtain

and (b). □

Using property (b) of Proposition 7, we confirm that for any values of , j and k satisfy . The next proposition presents a sufficient condition for the matrix to have null intercalated diagonals.

Proposition 9.

Let be an OPS satisfying (4) with constant , then

Proof.

Using (14), with ,

The hypothesis implies that , and so, using Proposition 7, we obtain . Admitting by the induction hypothesis that, for some we have for , then

□

Proposition 9 includes symmetric OPSs, generalizing the one in [12], , for which .

3. Functions of the Matrix

So far, we have found recursive formulas to evaluate , from and , or from its first two columns or from its first two non-null diagonals. With already evaluated, we build matrices

In the case where is a Fourier series, is the operational matrix representing the multiplication by f in . Since each element of the matrices is known exactly, defined as a finite sum, the entries of matrix can also be evaluated explicitly as finite sums. This is explained next.

The particular sparse structure of matrices found in Section 2.2, with well-located non-null entries, is the core result needed to prove that each entry of is evaluated as a finite sum.

Proposition 10.

Let be defined by (15), , and , then for ,

Proof.

From Proposition 2 we obtain that if , or if , or if , and the result follows from

□
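The finite-sum evaluation can be sketched for Chebyshev polynomials of the first kind, where the linearization coefficients follow from T_j·T_k = (T_{j+k} + T_{|j−k|})/2. The summation range |i − k| ≤ j ≤ i + k used below is the standard support condition and is assumed to match (16); row k of the block holds the coefficients of f·T_k, so the operator acting on a column of coefficients is the transpose of this block. All names are hypothetical.

```python
import numpy as np
from numpy.polynomial import chebyshev as C

def cheb_lin_coeff(j, k, i):
    """Coefficient of T_i in T_j * T_k."""
    return 0.5 * (i == j + k) + 0.5 * (i == abs(j - k))

def f_of_M_block(f, n):
    """Main n x n block of sum_j f_j T_j(M), each entry as a finite sum over j."""
    F = np.zeros((n, n))
    for k in range(n):
        for i in range(n):
            lo, hi = abs(i - k), min(i + k, len(f) - 1)
            F[k, i] = sum(f[j] * cheb_lin_coeff(j, k, i) for j in range(lo, hi + 1))
    return F

n = 5
f = 1.0 / (1.0 + np.arange(12)) ** 2       # sample Chebyshev coefficients of f
F = f_of_M_block(f, n)

# Multiplying a polynomial y of degree < n by f: first n coefficients of f*y.
y = np.array([1.0, -2.0, 0.5, 0.0, 3.0])
product_coeffs = F.T @ y
print(product_coeffs)
```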

The values for the first two columns of , as particular cases of (16), arise from Propositions 6 and 10.

Corollary 4.

In the conditions of Proposition 10:

- (a)

- ;

- (b)

- .

Another corollary of Proposition 10 is that each block of depends only on a finite number of f coefficients.

Corollary 5.

Let be defined by (15), a truncated series, and a square main block, then

- (a)

- depends on ;

- (b)

- .

Two Fourier series sharing the first coefficients have identical blocks in their respective matrices, as stated in the next corollary.

Corollary 6.

Let and be two Fourier series, and let and be the respective matrix functions, then

These results are relevant because they allow working with approximate series when only the first coefficients are known.
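A small experiment illustrates this dependence on finitely many coefficients. The sketch assumes Legendre polynomials, builds the main block directly from `numpy.polynomial.legendre.legmul`, and takes 2n − 1 as the number of coefficients that determine an n × n block; that count follows from the support condition i + k ≤ 2n − 2 and is an assumption about the exact statements of Corollaries 5 and 6. The coefficient values are random placeholders, not data from the paper.

```python
import numpy as np
from numpy.polynomial import legendre as Leg

def multiplication_block(f, n):
    """Row k holds the first n Legendre coefficients of f * P_k."""
    F = np.zeros((n, n))
    for k in range(n):
        ek = np.zeros(k + 1)
        ek[k] = 1.0
        prod = Leg.legmul(f, ek)
        m = min(n, len(prod))
        F[k, :m] = prod[:m]
    return F

n = 5
rng = np.random.default_rng(0)
f = rng.standard_normal(30)

g = f.copy()
g[2 * n - 1:] = rng.standard_normal(30 - (2 * n - 1))   # change only f_j with j >= 2n - 1

h = f.copy()
h[2 * n - 2] += 1.0                                     # change f_{2n-2}

same_block = np.allclose(multiplication_block(f, n), multiplication_block(g, n))
changed_block = not np.allclose(multiplication_block(f, n), multiplication_block(h, n))
print(same_block, changed_block)
```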

Benzi and Golub [13] showed that the entries of a matrix function , when f is analytic and is any symmetric band matrix, are bounded in an exponentially decaying manner away from the main diagonal. This result also applies to our work since whenever is not symmetric it can be reduced by similarity to a symmetric matrix . As proved in Corollary 3, instead of , we can work with matrices , associated with orthonormal polynomials.

Proposition 11.

Let , . If

then

Proof.

The proof follows from and Corollary 3. □

And so, and, when f is analytic, is bounded in an exponentially decaying manner away from the main diagonal.
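The decay away from the diagonal can be observed on a small example. The sketch below is only an illustration: it assumes the symmetric Jacobi matrix of the orthonormal Legendre polynomials, with off-diagonal entries (k+1)/sqrt((2k+1)(2k+3)), takes f = exp as a sample analytic function, and evaluates the matrix function of the truncated matrix by eigendecomposition rather than by the series formulas of this section.

```python
import numpy as np

n = 30
k = np.arange(n - 1)
beta = (k + 1) / np.sqrt((2 * k + 1) * (2 * k + 3))   # off-diagonal entries
J = np.diag(beta, 1) + np.diag(beta, -1)              # symmetric tridiagonal matrix

lam, V = np.linalg.eigh(J)
F = (V * np.exp(lam)) @ V.T                           # exp(J) = V diag(exp(lam)) V^T

first_row = np.abs(F[0, :12])
for col, value in enumerate(first_row):
    print(col, f"{value:.3e}")
```

Printing the first row shows how fast the magnitudes fall as the column index moves away from the diagonal.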

In the next section, we illustrate the effectiveness of the previous formulas in the evaluation of the entries of matrix .

4. Applications

We conclude with applications to functions whose Fourier coefficients are given by closed-form expressions. We present two illustrative examples. The first uses Legendre polynomials, a particular case of Jacobi polynomials, for which the linearization coefficients are explicitly known. The second, based on Laguerre polynomials, for which the linearization coefficients are not available, makes use of the recurrence relations presented in Section 2.4. Valuable references on linearization coefficients for Jacobi and Laguerre polynomials can be found in [14,15], along with additional relevant sources cited within these works.

4.1. The Sign Function with Legendre Polynomials

Considering Legendre polynomials , for which the Legendre–Fourier coefficients of a function are

if f is the sign function

then

Using the parity property [9], and the primitives formula [16], then

and so, . Finally, since and , it is a straightforward exercise to prove that

With , we represent the rising factorial and , also known as the Pochhammer symbol.
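The parity and primitive arguments above can be cross-checked numerically. The sketch below does not use the closed form in terms of rising factorials; it assumes the equivalent standard identity f_j = P_{j−1}(0) − P_{j+1}(0) for odd j (and f_j = 0 for even j), which follows from (2j+1)·∫P_j = P_{j+1} − P_{j−1} and P_j(1) = 1, and compares it with a Gauss–Legendre quadrature of (2j+1)·∫_0^1 P_j(x) dx. Function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as Leg

def legendre_at_zero(j):
    """Value P_j(0)."""
    c = np.zeros(j + 1)
    c[j] = 1.0
    return Leg.legval(0.0, c)

def sign_coefficients(m):
    """Legendre-Fourier coefficients f_0..f_{m-1} of sign(x) from values at 0."""
    f = np.zeros(m)
    for j in range(1, m, 2):
        f[j] = legendre_at_zero(j - 1) - legendre_at_zero(j + 1)
    return f

def sign_coefficients_quadrature(m):
    """Same coefficients from f_j = (2j+1) * integral of P_j over [0, 1]."""
    t, w = Leg.leggauss(m)                 # exact for polynomials of degree < 2m
    x, w = (t + 1) / 2, w / 2              # map nodes and weights to [0, 1]
    f = np.zeros(m)
    for j in range(1, m, 2):
        c = np.zeros(j + 1)
        c[j] = 1.0
        f[j] = (2 * j + 1) * np.dot(w, Leg.legval(x, c))
    return f

coeffs = sign_coefficients(10)
print(coeffs[:6])
```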

We proceed to evaluate

where is the Jacobi matrix associated with Legendre polynomials. To the reader interested in matrix sign functions, we recommend [17,18]. Making use of Corollary 5, we consider the first Legendre–Fourier coefficients to evaluate the main block of .

The linearization coefficients of Legendre polynomials products are explicitly known [5]:

with

where .

Those coefficients and (16) result in

To compare these formulas with the ability of matrix to mimic the effect of , we use the function . Since , the Legendre–Fourier coefficients of g can be obtained from those of f,

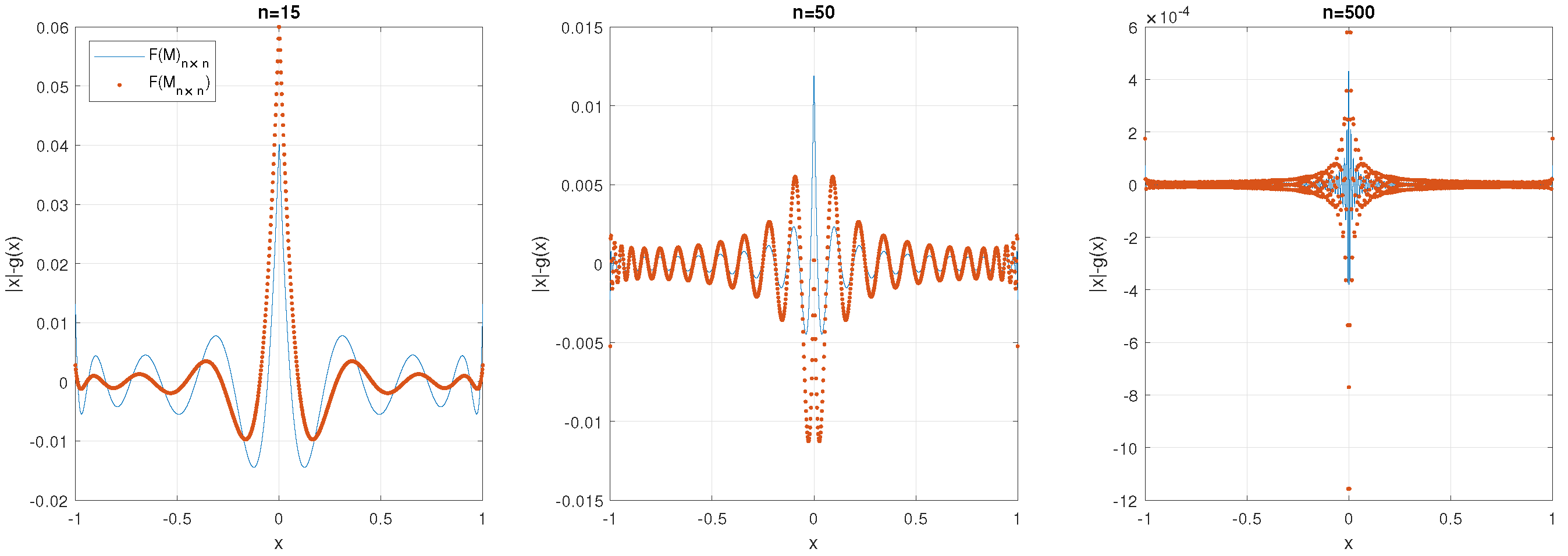

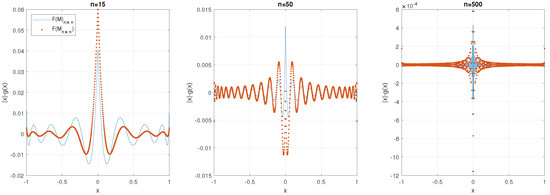

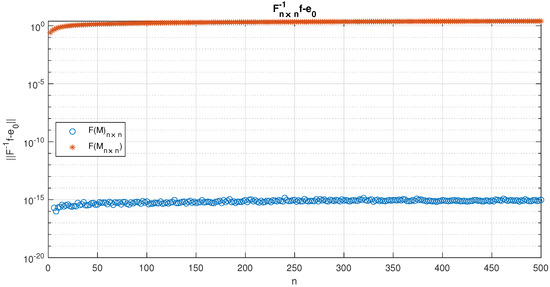

and, since , for Legendre polynomials, those g coefficients must lie in column . In Figure 2, we present the error with coefficients obtained in our matrix and in matrix , with selected values of matrix dimensions n. Matrix is obtained with Newton–Schulz iteration [18].
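The way the matrix reproduces multiplication by the sign function can be sketched as follows. This is an illustration under assumptions, not the computation behind the figures: the sign coefficients come from the identity f_j = P_{j−1}(0) − P_{j+1}(0) for odd j, the block is assembled with `numpy.polynomial.legendre.legmul` instead of the explicit linearization coefficients of [5], and row k holds the coefficients of sign(x)·P_k (the paper places them in a column). The test function here is x·sign(x) = |x|, whose first Legendre coefficients are 1/2, 0, 5/8, 0, −3/16.

```python
import numpy as np
from numpy.polynomial import legendre as Leg

def sign_coefficients(m):
    """Legendre-Fourier coefficients f_0..f_{m-1} of sign(x)."""
    f = np.zeros(m)
    for j in range(1, m, 2):
        lo = np.zeros(j)
        lo[j - 1] = 1.0
        hi = np.zeros(j + 2)
        hi[j + 1] = 1.0
        f[j] = Leg.legval(0.0, lo) - Leg.legval(0.0, hi)
    return f

def multiplication_block(f, n):
    """Row k holds the first n Legendre coefficients of f * P_k."""
    F = np.zeros((n, n))
    for k in range(n):
        ek = np.zeros(k + 1)
        ek[k] = 1.0
        prod = Leg.legmul(f, ek)
        m = min(n, len(prod))
        F[k, :m] = prod[:m]
    return F

n = 8
# The first 2n - 1 coefficients are enough for the main n x n block.
F = multiplication_block(sign_coefficients(2 * n - 1), n)

abs_coeffs = F[1]            # sign(x) * P_1(x) = |x|
print(abs_coeffs[:5])
```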

Figure 2.

Error with coefficients obtained in and in , with , and .

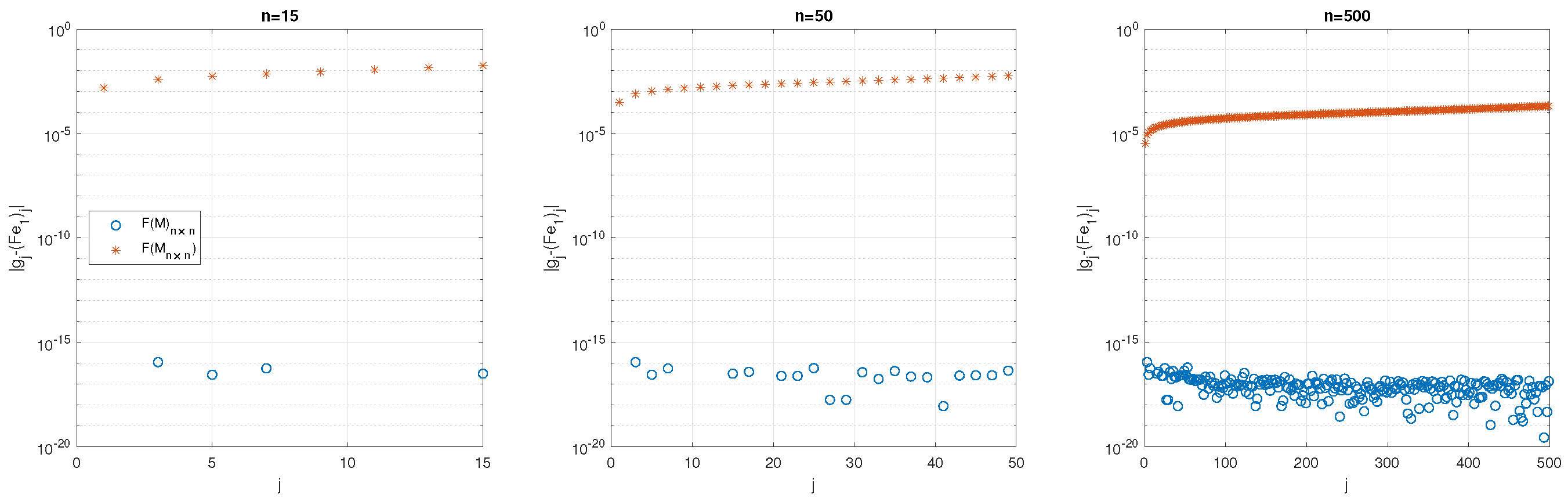

In Figure 3, we present in log scale the error , with coefficients obtained with (17) and , the values , and in matrix , with selected values of matrix dimensions n.

Figure 3.

Error with coefficients obtained in and in , with , and .

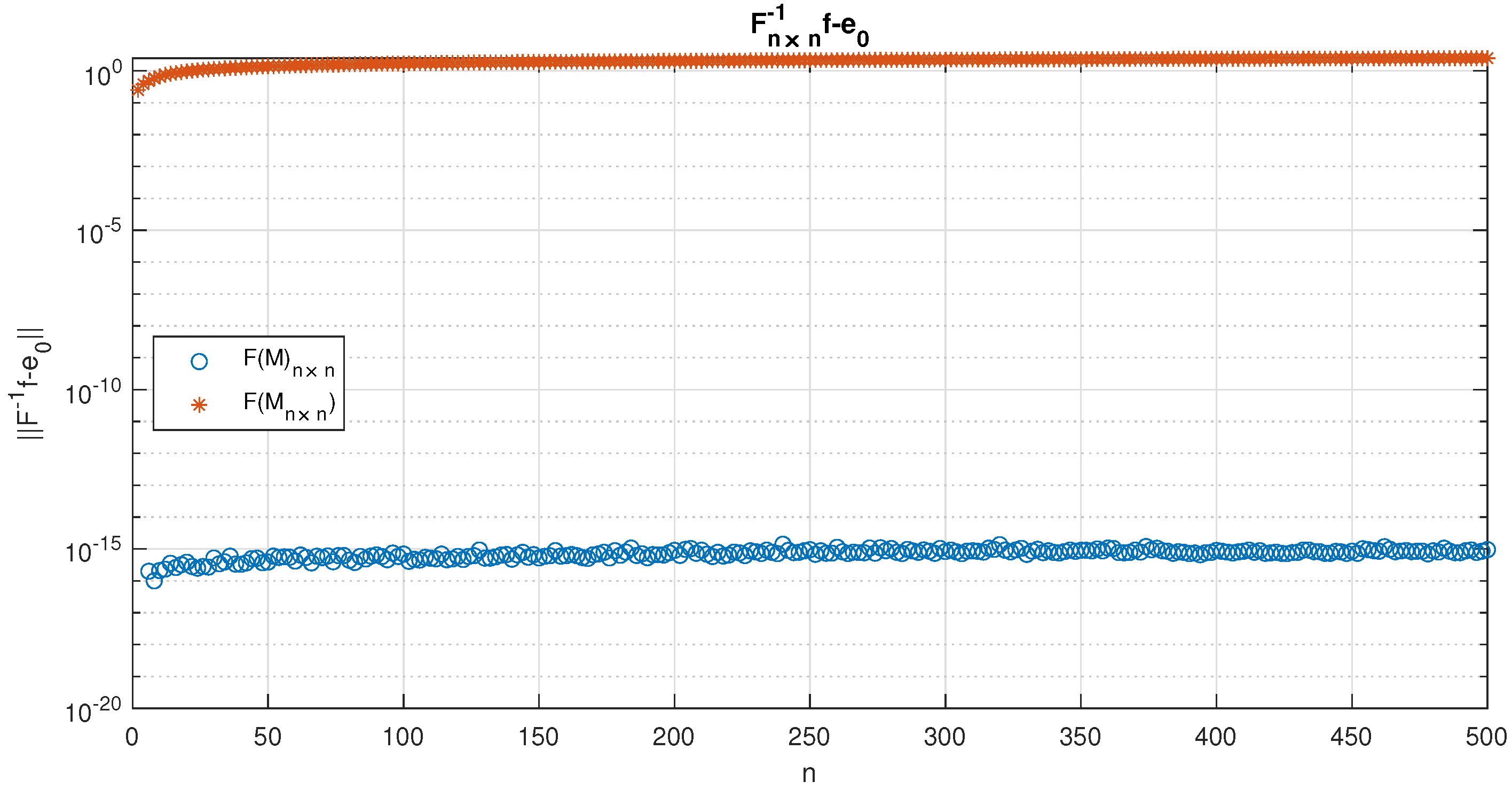

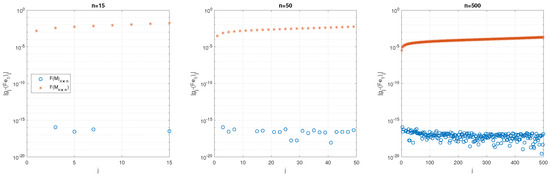

A similar test can be made using the fact that , and so, ; we can verify if . In Figure 4, we plot the norms , illustrating the fact that while behaves like , does not. We remark that is regular for even n only.

Figure 4.

Norm for even .

4.2. Trigonometric Functions with Laguerre Polynomials

From the generating function of Laguerre polynomials [9],

we obtain

The recurrence relation of Laguerre polynomials,

results in the particular case of recurrence relation (14):

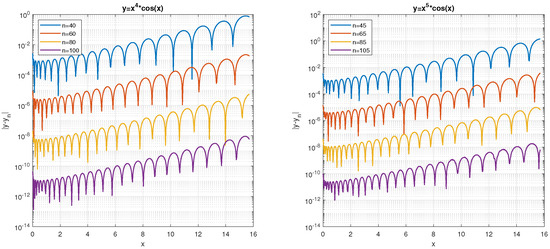
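To illustrate how a generating function yields Fourier–Laguerre coefficients in closed form, the sketch below uses the standard identity Σ L_k(x) t^k = exp(−x t/(1−t))/(1−t), valid for |t| < 1. Setting t = a/(1+a) gives exp(−a x) = Σ a^k/(1+a)^{k+1} L_k(x), and a = −iω gives an expansion of exp(iωx) whose real part is cos(ωx). This choice of function and parametrization is an assumption made for illustration and may differ from the expressions used in the paper.

```python
import numpy as np
from numpy.polynomial import laguerre as Lag

def cos_laguerre_coefficients(omega, m):
    """First m Laguerre coefficients of cos(omega * x), as real parts of a^k / (1 + a)^(k+1)."""
    k = np.arange(m)
    a = -1j * omega
    return np.real(a ** k / (1 + a) ** (k + 1))

omega, m = 1.0, 80
coeffs = cos_laguerre_coefficients(omega, m)

x = np.linspace(0.0, 3.0, 7)
approx = Lag.lagval(x, coeffs)             # truncated Laguerre series at the sample points
max_error = np.max(np.abs(approx - np.cos(omega * x)))
print(max_error)
```

The coefficients decay like (ω/sqrt(1+ω²))^k, so larger values of ω need more terms for the same accuracy.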

Illustrating an application of these formulas, we build square matrices,

truncated to the respective main blocks, where is the Jacobi matrix of Laguerre polynomials for several values of n. Given , the coefficients vector of a Fourier series in Laguerre polynomials, then is the coefficients vector of in the same polynomial basis. In order to illustrate the effectiveness of this procedure, we choose , for several given m, whose Fourier–Laguerre coefficients are given by

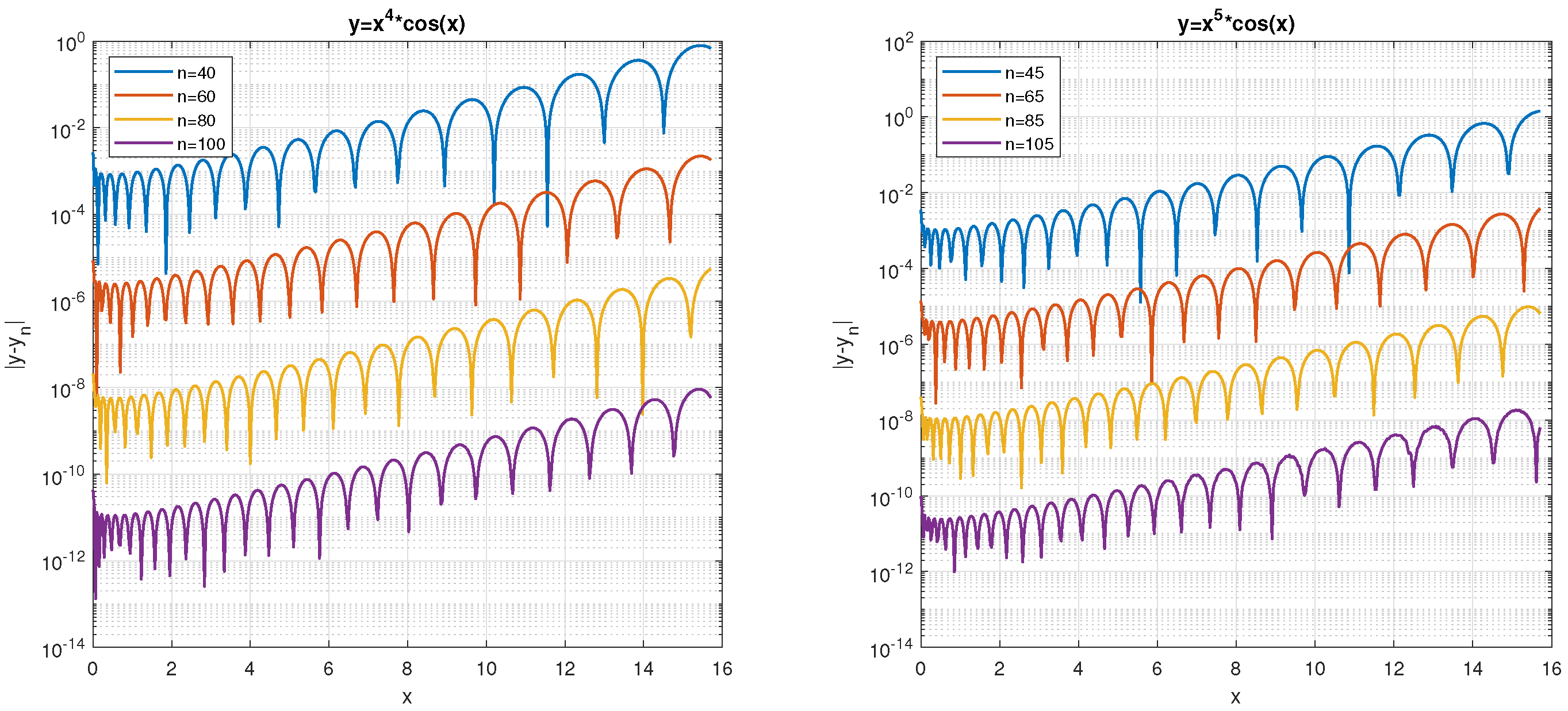

In Figure 5, we present in log scale the error , for and , where results from truncated to dimension n.

Figure 5.

Absolute error , for , with for ; and is the truncated Laguerre series evaluated by truncated matrices .

5. Conclusions

In this work, we reveal fundamental properties of matrices resulting from the image of Jacobi matrices transformed by generalized Fourier series. These are useful matrices in the context of algebraic representation of functional operators. Among the properties associated with matrix polynomials, we highlight the relationship between linearization coefficients and operational matrices. Additionally, we explore symmetry properties, invariance over domain linear displacements, and certain recurrence relations. We conclude that the properties exhibited by matrices transformed through polynomials are inherited by matrices transformed using Fourier series with orthogonal polynomials.

In addition to efficiently addressing the challenge of operating with infinite matrices, this work offers effective calculation formulas applicable to any finite block of these matrices. The examples illustrate the successful and efficient performance of the presented calculation formulas.

Author Contributions

Conceptualization, J.M.A.M.; software, J.M.A.M. and J.A.O.M.; investigation, J.M.A.M.; writing—original draft preparation, J.M.A.M., P.B.V. and J.A.O.M.; writing—review and editing, J.M.A.M. and P.B.V. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by CMUP, member of LASI, which is financed by national funds through FCT - Fundação para a Ciência e a Tecnologia, I.P., under the projects with reference UIDB/00144/2020 and UIDP/00144/2020.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author (MATLAB codes can be provided upon request).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Gautschi, W. Orthogonal polynomials: Applications and computation. Acta Numer. 1996, 5, 45–119.

- Lewanowicz, S. Second-order recurrence relation for the linearization coefficients of the classical orthogonal polynomials. J. Comput. Appl. Math. 1996, 69, 159–170.

- Ronveaux, A.; Hounkonnou, M.N.; Belmehdi, S. Generalized linearization problems. J. Phys. A Math. Gen. 1995, 28, 4423.

- Szwarc, R. Linearization and connection coefficients of orthogonal polynomials. Monatshefte Für Math. 1992, 113, 319.

- Adams, J.C., III. On the expression of the product of any two Legendre’s coefficients by means of a series of Legendre’s coefficients. Proc. R. Soc. Lond. 1878, 27, 63–71.

- Park, S.B.; Kim, J.H. Integral evaluation of the linearization coefficients of the product of two Legendre polynomials. J. Phys. A Math. Gen. 2006, 20, 623–635.

- Chaggara, H.; Koepf, W. On linearization and connection coefficients for generalized Hermite polynomials. J. Comput. Appl. Math. 2011, 236, 65–73.

- Chaggara, H.; Koepf, W. On linearization coefficients of Jacobi polynomials. Appl. Math. Lett. 2010, 23, 609–614.

- Olver, F.W.J.; Olde Daalhuis, A.B.; Lozier, D.W.; Schneider, B.I.; Boisvert, R.F.; Clark, C.W.; Miller, B.R.; Saunders, B.V.; Cohl, H.S.; McClain, M.A. (Eds.) NIST Digital Library of Mathematical Functions. Release 1.1.10 of 2023-06-15. Available online: https://dlmf.nist.gov/ (accessed on 30 July 2024).

- Doha, E.; Abd-Elhameed, W. New linearization formulae for the products of Chebyshev polynomials of third and fourth kinds. Rocky Mt. J. Math. 2016, 46, 443–460.

- Golub, G.H.; Welsch, J.H. Calculation of Gauss quadrature rules. Math. Comput. 1969, 23, 221–230.

- Chihara, T.S. An Introduction to Orthogonal Polynomials; Dover Publications, Inc.: Mineola, NY, USA, 2011.

- Benzi, M.; Golub, G. Bounds for the Entries of Matrix Functions with Applications to Preconditioning. BIT Numer. Math. 1999, 39, 417–438.

- Abd-Elhameed, W. New product and linearization formulae of Jacobi polynomials of certain parameters. Integral Transform. Spec. Funct. 2015, 26, 586–599.

- Ahmed, H.M. Computing expansions coefficients for Laguerre polynomials. Integral Transform. Spec. Funct. 2021, 32, 271–289.

- Matos, J.M.A.; Rodrigues, M.J.; de Matos, J.C. Explicit formulae for derivatives and primitives of orthogonal polynomials. arXiv 2017, arXiv:1703.00743.

- Roberts, J.D. Linear model reduction and solution of the algebraic Riccati equation by use of the sign function. Int. J. Control 1980, 32, 677–687.

- Wang, X.; Cao, Y. A numerically stable high-order Chebyshev-Halley type multipoint iterative method for calculating matrix sign function. AIMS Math. 2023, 8, 12456–12471.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).