Abstract

Outliers often occur during data collection; they can seriously distort the results and lead to large inference errors, so it is important to detect them before data analysis. The gamma distribution is widely used in statistics, and this paper proposes a method for detecting multiple upper outliers in samples from gamma (m, θ). To compute the critical value of the test statistic in our method, we derive the density function for the case of a single outlier and design two algorithms, based on Monte Carlo simulation and on kernel density estimation, for the case of multiple upper outliers. A simulation study shows that the proposed test statistic outperforms several commonly used test statistics. Finally, we propose an improved testing method that reduces the impact of the swamping effect, and we demonstrate it with real data analyses.

MSC:

62F03

1. Introduction

The presence of outliers in the data may have an appreciable impact on data analysis; it often leads to erroneous conclusions and, in turn, to serious decision-making mistakes. Therefore, it is necessary to detect outliers before statistical analysis. Moreover, outlier detection has a wide range of applications, such as financial fraud prevention, disease diagnosis, and verifying the authenticity of military information.

Refs. [1,2] define outliers as observations that are surprisingly far away from the main group. In the one-dimensional case, if the observations are arranged in ascending order of magnitude, there are only three types of outlier detection problems: (i) only upper outliers; (ii) only lower outliers; and (iii) both upper and lower outliers.

The commonly used ways of dealing with outliers are outlier detection and robust statistical methods. Robust methods analyze the data while retaining the outliers and aim to minimize the deviation of the analytical results from the theoretical results. Outlier detection identifies the outliers in the sample with an appropriate statistical procedure and then analyzes the remaining observations. This paper focuses on the latter approach.

There is an extensive statistical literature on outlier detection, and many effective methods have been proposed. They fall broadly into three categories: descriptive statistics, machine learning, and hypothesis testing.

Descriptive statistics are intuitive and carry no computational burden. Commonly used methods include the box-plot and the Hampel rule. The box-plot requires the 25% and 75% sample quantiles, $Q_1$ and $Q_3$. Denote $\mathrm{IQR} = Q_3 - Q_1$ as the interquartile range; observations located in the interval $[Q_1 - 1.5\,\mathrm{IQR},\ Q_3 + 1.5\,\mathrm{IQR}]$ are regarded as clean, and the other observations are flagged as outliers. According to [3], a data point is identified as an outlier if its distance from the sample median exceeds 4.5 times the MAD, where $\mathrm{MAD} = \operatorname{median}_i \lvert x_i - \operatorname{median}_j x_j \rvert$ is the median absolute deviation.
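The two descriptive rules above can be sketched with Python's standard library. This is a minimal illustration rather than a reference implementation: it assumes Tukey's conventional 1.5 × IQR fences, quartiles from `statistics.quantiles` with `method="inclusive"`, and the unscaled MAD with the 4.5 cutoff of [3]; the function names are hypothetical.

```python
import statistics

def boxplot_outliers(data, whisker=1.5):
    """Return the points outside [Q1 - whisker*IQR, Q3 + whisker*IQR]."""
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - whisker * iqr, q3 + whisker * iqr
    return [x for x in data if x < lower or x > upper]

def hampel_outliers(data, cutoff=4.5):
    """Return the points whose distance from the median exceeds cutoff * MAD."""
    med = statistics.median(data)
    mad = statistics.median([abs(x - med) for x in data])
    return [x for x in data if abs(x - med) > cutoff * mad]

sample = [1.0, 1.2, 0.9, 1.1, 1.0, 0.95, 1.05, 10.0]
print(boxplot_outliers(sample))  # [10.0]
print(hampel_outliers(sample))   # [10.0]
```

Both rules use only order statistics of the sample, which is why they are cheap, but neither uses the assumed gamma shape of the data.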

Machine-learning methods learn the characteristics of the data, combining mathematical models with statistical principles, to detect outliers. Common methods include the one-class support vector machine (one-class SVM) and the minimum spanning tree (MST). A one-class SVM usually fits a minimal ellipsoid that contains all normal observations from historical or other clean data; observations that fall outside the ellipsoid are then treated as outliers; see [4]. The MST algorithm uses the Euclidean distance between points, treats the points as nodes, and finds the tree that connects all nodes with the smallest total distance. The sample is then divided into different classes according to a given criterion; the largest class is treated as inlying data, and the rest as outliers; see [5].
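The MST procedure can be illustrated with a short sketch. Because the splitting criterion is left unspecified above, we assume, purely for illustration, that tree edges longer than c times the mean edge length are cut (c = 2 here); Prim's algorithm builds the tree on the complete Euclidean graph, and every point outside the largest remaining component is reported as an outlier. The function names and the constant c are hypothetical.

```python
import math

def mst_edges(points):
    """Prim's algorithm on the complete Euclidean graph; returns (i, j, length) edges."""
    n = len(points)
    in_tree = [False] * n
    best = [math.inf] * n  # cheapest known link from each node to the tree
    parent = [-1] * n
    best[0] = 0.0
    edges = []
    for _ in range(n):
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        if parent[u] >= 0:
            edges.append((parent[u], u, best[u]))
        for v in range(n):
            if not in_tree[v]:
                d = math.dist(points[u], points[v])
                if d < best[v]:
                    best[v], parent[v] = d, u
    return edges

def mst_outliers(points, c=2.0):
    """Cut edges longer than c * mean edge length; points off the largest component are outliers."""
    if len(points) < 2:
        return []
    edges = mst_edges(points)
    cut = c * sum(w for _, _, w in edges) / len(edges)
    adj = {i: [] for i in range(len(points))}
    for i, j, w in edges:
        if w <= cut:
            adj[i].append(j)
            adj[j].append(i)
    # connected components of the pruned tree by depth-first search
    seen, components = set(), []
    for start in adj:
        if start in seen:
            continue
        seen.add(start)
        stack, comp = [start], []
        while stack:
            u = stack.pop()
            comp.append(u)
            for v in adj[u]:
                if v not in seen:
                    seen.add(v)
                    stack.append(v)
        components.append(comp)
    inliers = set(max(components, key=len))
    return [p for i, p in enumerate(points) if i not in inliers]

pts = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10)]
print(mst_outliers(pts))  # [(10, 10)]
```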

Hypothesis testing is a basic approach to outlier detection. By setting appropriate null and alternative hypotheses and constructing a test statistic with suitable properties, the hypothesis testing method can determine whether there are outliers in the sample at a given significance level.

For univariate samples, the gamma distribution is less restrictive than the exponential distribution, and gamma-distributed observations arise in a wider range of settings and are easier to collect. This paper studies multiple outlier detection in the gamma distribution under a parameter slippage model. Since the 1950s, there have been many results on outlier detection based on hypothesis testing, but most of them aim to detect a single outlier or outliers in a normal distribution. In the 1970s, outlier detection under more general distributions, such as the exponential, Pareto, and uniform distributions, received much attention. Multiple outlier detection has recently drawn considerable attention in practice owing to the development of science and technology and the diversification of data collection methods. We briefly introduce five commonly used statistics that are suitable for detecting multiple upper outliers in the gamma distribution.

Dixon’s statistic, proposed in [6], is based on the idea that the dispersion of the suspect observations accounts for a large proportion of the sample dispersion. This method is further extended in [7,8,9], where [8] proposes the following statistic:

For a given significance level , , ⋯, are identified as outliers if , where is the critical value of . Later, another Dixon-type statistic for detecting outliers in a gamma distribution is proposed in [10,11]:

Ref. [10] gives the critical value for a given significance level , and , ⋯, are regarded as outliers if . The third test statistic is proposed in [10,11]:

Ref. [10] also obtains the corresponding critical value for a given significance level , and , ⋯, are regarded as outliers if . The fourth test statistic is a “gap test” ([12]), given by

Ref. [12] provides the critical value for the significance level , and , ⋯, are identified as outliers if . The fifth test statistic is proposed in [13]:

Ref. [13] derives the distribution of , from which the critical value can be obtained for a given significance level . Thus, , ⋯, are regarded as outliers if .

The remainder of this article is organized as follows. In Section 2, we propose a test statistic for detecting outliers in a gamma sample and derive its density function; to obtain the critical values, we propose a Monte Carlo procedure and a kernel density estimation procedure. In Section 3, simulation results show that the proposed test statistic outperforms the competing statistics. In Section 4, we suggest an improved method that reduces the swamping effect in multiple outlier detection. Real data analyses are performed in Section 5, and Section 6 concludes. All proofs of the theoretical results are presented in Appendix A, and the data used in the empirical applications are listed in Appendix B.

2. Model Framework and Methodology for Detecting Outliers

In this section, we propose a testing method to detect upper outliers from a gamma distribution, considering both single and multiple outliers. We derive the distribution of the test statistic for single upper outlier detection and design two methods, a Monte Carlo method and a kernel density estimation method, to calculate the critical value of for multiple outliers.

2.1. Model Framework

Assume that the null distribution is the gamma distribution gamma (m, θ), with density function

where m and θ are unknown, . The null hypothesis is

The density function under the alternative hypothesis is

where denotes the contamination factor. The slippage alternative hypothesis is

Sorting ,⋯, in ascending order, we obtain the sample , where corresponds to the th observation in S. When , is the suspicious point, and we propose the following test statistic to detect an outlier in S:

For a given significance level , let be the critical value; then is detected as an outlier if . When , we propose the following test statistic to detect multiple outliers:

For a given significance level , let be the critical value; then ,⋯, are detected as outliers if .
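Because the displayed formulas were not preserved here, the following sketch rests on an assumption: that the multiple-outlier statistic is the ratio of the sum of the k largest observations to the sample total. This is consistent with the swamping example in Section 4, where the sum of the suspect observations is compared with a bound, and it reduces to the largest observation over the total when k = 1. The name `t_stat` is hypothetical. Because the ratio is unchanged when every observation is multiplied by the same constant, its null distribution does not depend on the scale parameter.

```python
def t_stat(sample, k):
    """Assumed form: (sum of the k largest observations) / (sum of all observations)."""
    if not 1 <= k < len(sample):
        raise ValueError("k must satisfy 1 <= k < n")
    ordered = sorted(sample)
    return sum(ordered[-k:]) / sum(ordered)

# Artificial scout position data (Table A2), listed by soldier ID 1-20
scout = [0.88, 2.90, 0.21, 0.47, 3.44, 0.48, 0.83, 3.32, 0.58, 0.35,
         0.31, 0.53, 0.91, 0.65, 0.70, 0.80, 0.52, 0.13, 0.55, 0.85]
print(round(t_stat(scout, 4), 4))  # 0.5446, i.e., 10.57 / 19.41
```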

Theorem 1.

is a test statistic that is derived from the likelihood ratio principle.

Proof of Theorem 1.

See Appendix A.2. □

2.2. Detecting Single Outlier

can be used to test for a single upper outlier in a gamma sample. To obtain the critical value of the test, we derive the null distribution of as follows.

Denote and . Since , , ⋯, are independent, follows beta () under the null model. Let and ; then, for any j, the density function of is

As , the density function of is given by

It can be shown that

Lemma 1.

Assume that ,⋯, are independent and identically distributed from gamma (m, θ); then and are independent.

Proof of Lemma 1.

See Appendix A.1. □

Theorem 2.

If , , ⋯, are independent and identically distributed from gamma (m, θ), then the density function of is

where is the cumulative distribution function (CDF) of .

Proof of Theorem 2.

See Appendix A.2. □

The null density function of is defined recursively, and the critical value of can be obtained from Equation (12).

2.3. Detecting Multiple Outliers

with can be used to detect outliers when a gamma sample contains multiple outliers. However, deriving the distribution of is difficult. In this case, to obtain the critical value of the test, we propose two methods: a Monte Carlo method and a kernel density estimation method.

2.3.1. Monte Carlo Method

First, note that under the null model the distribution of does not depend on θ. Based on this property, the Monte Carlo method for computing the critical value of the test is given below.

The parameter m can be estimated from the sample by the Newton-Raphson algorithm, or obtained from other samples, empirical knowledge, and so on. We consider outliers from a slippage model in which the parameter has been shifted to , with m fixed, where is the contamination factor.
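For positive i.i.d. data under the scale parameterization, the maximum likelihood estimate of m solves log m − ψ(m) = log x̄ − (1/n) Σ log x_i, where ψ is the digamma function, and then θ̂ = x̄ / m̂. The sketch below applies Newton-Raphson to this equation. Since the Python standard library has no digamma function, ψ and ψ′ are approximated by their recurrences plus truncated asymptotic series; the function names are hypothetical, and this is one standard way to carry out the step rather than the authors' code.

```python
import math
import random

def digamma(x):
    """psi(x) for x > 0: shift with psi(x) = psi(x + 1) - 1/x, then use the asymptotic series."""
    result = 0.0
    while x < 6.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))

def trigamma(x):
    """psi'(x) for x > 0: shift with psi'(x) = psi'(x + 1) + 1/x^2, then a truncated series."""
    result = 0.0
    while x < 6.0:
        result += 1.0 / (x * x)
        x += 1.0
    inv = 1.0 / x
    inv2 = inv * inv
    return result + inv + 0.5 * inv2 + inv * inv2 * (1 / 6 - inv2 * (1 / 30 - inv2 / 42))

def gamma_mle(sample, tol=1e-10, max_iter=100):
    """Newton-Raphson for log(m) - psi(m) = log(mean) - mean(log x); returns (m_hat, theta_hat)."""
    n = len(sample)
    mean = sum(sample) / n
    s = math.log(mean) - sum(math.log(x) for x in sample) / n  # > 0 unless all values are equal
    m = (3 - s + math.sqrt((s - 3) ** 2 + 24 * s)) / (12 * s)  # common closed-form starting value
    for _ in range(max_iter):
        f = math.log(m) - digamma(m) - s
        m_new = m - f / (1.0 / m - trigamma(m))
        if m_new <= 0:  # guard: keep the iterate in the parameter space
            m_new = m / 2
        converged = abs(m_new - m) < tol
        m = m_new
        if converged:
            break
    return m, mean / m  # theta_hat = mean / m_hat under the scale parameterization

rng = random.Random(1)
data = [rng.gammavariate(3.0, 5.0) for _ in range(5000)]  # shape 3, scale 5
m_hat, theta_hat = gamma_mle(data)
```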

The idea of the Monte Carlo method is to generate u samples of size n and compute from each sample. Denote as the set of all these values. Then, by the law of large numbers, we use the (1 − α) quantile of as the estimate of . The pseudocode of the Monte Carlo method is given in Algorithm 1.

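| Algorithm 1 Monte Carlo method |
|---|
| Input: parameters |
| n: sample size; |
| k: number of suspicious observations; |
| α: the significance level, say, α = 0.05; |
| u: number of simulated samples, say, u = 5000. |
| Output: the critical value. |
| for j in 1, …, u do |
| generate n observations from gamma (m, θ); |
| compute the test statistic for the j-th sample; |
| end for; |
| collect the u simulated values of the statistic into a set; |
| return the (1 − α) quantile of this set. |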

The critical values of the test statistic computed by the above Monte Carlo method for different n, k, and are summarized in Table 1.

Table 1.

The critical values of in the case of and significance level .
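Algorithm 1 can be sketched in Python as follows, again assuming the ratio form of the statistic (sum of the k largest observations over the sample total). Because that ratio is scale invariant, θ is fixed at 1 in the simulation, and the (1 − α) quantile is estimated by a simple order statistic of the sorted simulated values. The function names are hypothetical; the default u = 5000 follows the value suggested in Algorithm 1.

```python
import random

def t_stat(sample, k):
    """Assumed form: (sum of the k largest observations) / (sum of all observations)."""
    ordered = sorted(sample)
    return sum(ordered[-k:]) / sum(ordered)

def mc_critical_value(n, k, m, alpha=0.05, u=5000, seed=None):
    """Algorithm 1 sketch: empirical (1 - alpha) quantile of u simulated null statistics."""
    rng = random.Random(seed)
    # theta is fixed at 1: the ratio is scale invariant, so its null law does not involve theta
    values = sorted(
        t_stat([rng.gammavariate(m, 1.0) for _ in range(n)], k) for _ in range(u)
    )
    # a simple order-statistic estimate of the upper quantile
    return values[min(u - 1, int((1 - alpha) * u))]

scout = [0.88, 2.90, 0.21, 0.47, 3.44, 0.48, 0.83, 3.32, 0.58, 0.35,
         0.31, 0.53, 0.91, 0.65, 0.70, 0.80, 0.52, 0.13, 0.55, 0.85]
crit = mc_critical_value(n=20, k=4, m=3.0, alpha=0.05, seed=1)
print(crit, t_stat(scout, 4) > crit)
```

Fixing the seed makes the critical value reproducible; increasing u reduces its Monte Carlo error.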

2.3.2. Kernel Density Estimation Method

This method uses a large simulated sample of to approximate its density function; the estimated function is denoted by . Then, for the significance level , we compute from

Using a Gaussian kernel function, we have

where and h is the bandwidth. Therefore, the estimated density function of is

The pseudocode of the kernel density estimation method is given by Algorithm 2.

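| Algorithm 2 Kernel density estimation method |
|---|
| Input: parameters |
| n: sample size; |
| k: number of suspicious observations; |
| α: the significance level, say, α = 0.05; |
| u: number of simulated samples. |
| Output: the critical value. |
| for j in 1, …, u do |
| generate n observations from gamma (m, θ); |
| compute the test statistic for the j-th sample; |
| end for; |
| collect the u simulated values of the statistic into a set; |
| compute the bandwidth h of this set; |
| choose the Gaussian kernel function and form the estimated density function of the statistic; |
| return the critical value as the root of the equation in which the estimated upper-tail probability equals α. |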

Table 2 lists the critical values of the test statistic for different n and k with and , calculated by the kernel density algorithm.

Table 2.

The critical values of in the case of and significance level .
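A minimal sketch of Algorithm 2 follows, assuming the simulated values of the statistic are already available (for example, from the loop in Algorithm 1). The bandwidth rule is not stated above, so Silverman's rule of thumb is used as a stand-in. Integrating the Gaussian-kernel estimate over (λ, ∞) gives a closed form in terms of the complementary error function, and the equation "upper-tail probability = α" is solved by bisection. All names are hypothetical; the usage lines apply the sketch to standard normal draws only as a sanity check.

```python
import math
import random
import statistics

def silverman_bandwidth(values):
    """Rule-of-thumb bandwidth h = 0.9 * min(sd, IQR / 1.34) * u**(-1/5) (an assumed choice)."""
    sd = statistics.stdev(values)
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = min(sd, (q3 - q1) / 1.34) or sd  # fall back to sd if the IQR is zero
    return 0.9 * spread * len(values) ** (-0.2)

def kde_upper_tail(x, values, h):
    """P(T > x) under the Gaussian-kernel density estimate built from `values`."""
    # each kernel N(v, h^2) contributes P(v + h Z > x) = erfc((x - v) / (h sqrt 2)) / 2
    return sum(0.5 * math.erfc((x - v) / (h * math.sqrt(2.0))) for v in values) / len(values)

def kde_critical_value(values, alpha=0.05, tol=1e-10):
    """Algorithm 2 sketch: solve estimated upper-tail probability = alpha by bisection."""
    h = silverman_bandwidth(values)
    lo, hi = min(values) - 10 * h, max(values) + 10 * h
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if kde_upper_tail(mid, values, h) > alpha:
            lo = mid  # too much mass above mid: the root lies to the right
        else:
            hi = mid
    return 0.5 * (lo + hi)

rng = random.Random(2)
draws = [rng.gauss(0.0, 1.0) for _ in range(5000)]
print(kde_critical_value(draws))  # close to the N(0, 1) upper 5% point, about 1.645
```

Kernel smoothing slightly inflates the spread of the estimate, so for large u its quantile sits marginally above the empirical quantile used in Algorithm 1, which is consistent with the small differences between the two methods.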

After comparing a large number of simulation results from the Monte Carlo method and the kernel density estimation method, we find that the two methods give very similar results, so either can be used in practice.

More generally, Algorithms 1 and 2 provide two feasible ways to compute the critical value of any test statistic whose null distribution can be simulated, for a given significance level, sample size n, and presupposed k.

3. Simulation Study

In this section, we use a simulation study to evaluate the performance of the proposed test statistic and compare it with the commonly used statistics , , , , and introduced in Section 1.

3.1. Simulation Setting

To evaluate the performance of a test statistic in outlier detection, we consider two cases: with and without outliers. In the former case, a test statistic is evaluated by its power when there are k outliers in the gamma (m, θ) sample, where the power is estimated by the relative frequency of correctly identifying the outliers. In the latter case, a test statistic is evaluated by the number of times inlying observations are misjudged as outliers, which is called the “false alarm”. A test statistic is better if it has higher power and a lower “false alarm”.

To use a simulation setting similar to those in [10,12,13], we transform the and in Equations (6) and (7) into and , respectively.

To compute the power, we generate n observations from Equation (6) and sort them in ascending order. Then, , ⋯, are replaced by , ⋯, , which has the same effect as producing k upper outliers from Equation (7). Here, , in [1:2] (0.055), and . To measure the “false alarm”, denote by the number of outliers among the k largest observations. When , we have . We generate observations from Equation (6) and from Equation (7), and then test the k largest observations with each test statistic. Both cases are examined at the significance levels and 0.05, and each simulation uses 2000 replications.
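The power computation can be sketched as below, again assuming the ratio statistic. The replacement values are not reproduced above, so the sketch assumes that each of the k largest null observations is multiplied by a contamination factor b; since b times a gamma (m, θ) variable is gamma (m, bθ), this mimics upper outliers from a scale-slipped alternative. This is a hypothetical reading rather than the authors' exact design, and the names are illustrative. With b = 1 there is no contamination, so the estimated "power" should be close to α.

```python
import random

def t_stat(sample, k):
    """Assumed form: (sum of the k largest observations) / (sum of all observations)."""
    ordered = sorted(sample)
    return sum(ordered[-k:]) / sum(ordered)

def null_critical_value(n, k, m, alpha, u, rng):
    """Monte Carlo (1 - alpha) quantile of the null statistic, as in Algorithm 1."""
    values = sorted(t_stat([rng.gammavariate(m, 1.0) for _ in range(n)], k) for _ in range(u))
    return values[min(u - 1, int((1 - alpha) * u))]

def estimated_power(n, k, m, b, alpha=0.05, reps=2000, u=5000, seed=0):
    """Share of replications in which the k contaminated points are flagged."""
    rng = random.Random(seed)
    crit = null_critical_value(n, k, m, alpha, u, rng)
    hits = 0
    for _ in range(reps):
        x = sorted(rng.gammavariate(m, 1.0) for _ in range(n))
        # hypothetical contamination: scale the k largest points by b (gamma(m, b*theta) slippage)
        x[-k:] = [b * v for v in x[-k:]]
        hits += t_stat(x, k) > crit
    return hits / reps

for b in (1.0, 1.5, 2.0):
    print(b, estimated_power(n=20, k=2, m=3.0, b=b, reps=500, u=2000))
```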

3.2. Results

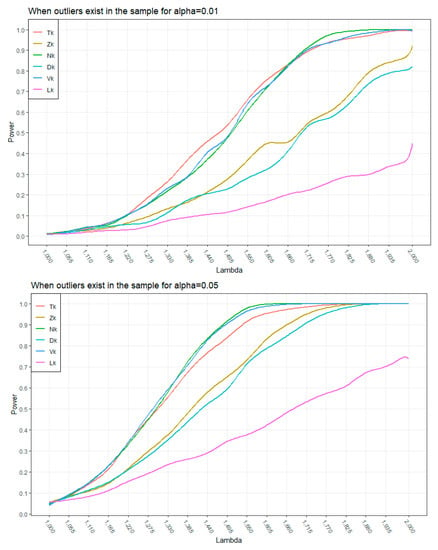

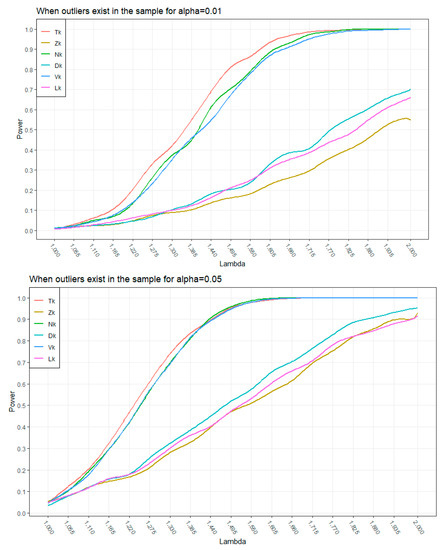

For the case in which outliers exist, the power of the six test statistics is shown in Figure 1 and Figure 2. Figure 1 shows that when , and , our test statistic has higher power than the other five test statistics for values of smaller than 1.650, while for larger , is worse than and but better than , , and . For , is worse than and but better than , , and . Figure 2 shows that when and , has the highest power for ; and if , has the highest power for almost all values.

Figure 1.

Power of test statistics for , , and .

Figure 2.

Power of test statistics for , , and .

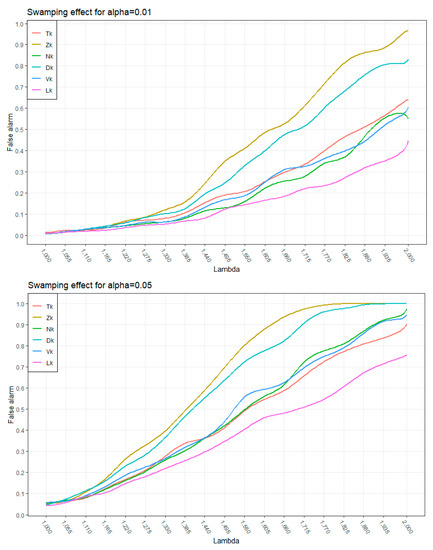

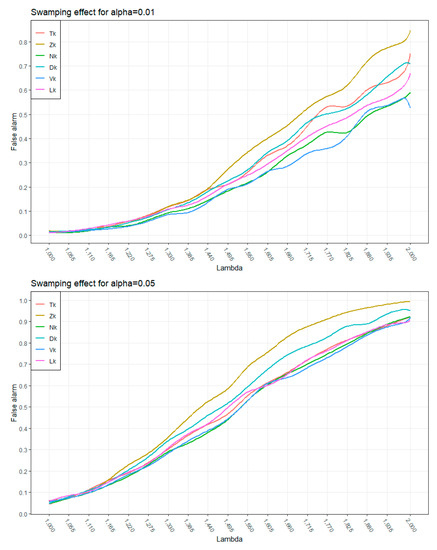

For the case in which the k largest observations consist of contaminants and some good observations, the swamping effect of the six test statistics is shown in Figure 3 and Figure 4. Figure 3 shows that for , at the significance level 0.01, is better than and but worse than , , and . For , the “false alarm” of is worse than that of but better than those of , , , and . The results in Figure 3 with and Figure 4 with are similar when . For , Figure 4 shows that is worse than and but better than , , and .

Figure 3.

False alarm of statistics for , , , and .

Figure 4.

False alarm of statistics for , , , and .

In summary, the simulation results show that has the highest power and a relatively lower “false alarm” than , and for and . With and , has higher power than the other test statistics, but its “false alarm” is worse than those of , and . Therefore, for large m and k, is generally better than , , , , and for multiple outlier detection.

4. Modified Test-ITK

In practice, almost all test statistics used to detect multiple outliers suffer from the swamping effect. It occurs because large outliers can make the sum of the suspect observations in a block test too large. To reduce or eliminate the swamping effect, we suggest a modified test, ITK, which retains a high probability of detecting outliers and a low error probability when there is no outlier in the gamma sample.

Note that in multiple outlier detection, some inlying observations may be falsely judged as outliers because of an improper choice of k. For example, consider a sample consisting of , and , and use to test , , . Clearly, , and the critical value of , computed by Algorithm 1 in Section 2.3.1, is . Therefore, , and , , are declared outliers. In fact, however, is a genuine observation from the inlying cluster. is detected as an outlier because and are much larger than the inlying observations, which pushes the sum of , , beyond the critical bound; this is the swamping effect. This negative impact is eliminated if we take .

To deal with the swamping effect, a method for choosing a reasonable k is needed. Our modified test therefore has two stages: (1) pick a reasonable k and use the test to examine the k largest observations; (2) apply stepwise forward testing to the remaining observations, or stepwise backward testing to the “outliers” identified in the first stage.

4.1. Estimation of k

According to [14], the number of outliers should be less than . Later, [1] pointed out that the number of outliers is usually less than if the sample is collected properly.

Here, we take

where is the greatest integer less than or equal to .

4.2. The Improvement of the Test-ITK

Based on Section 4.1, we propose an improved test procedure, as follows:

Step 1. For the significance level , if , then , ⋯, are preliminarily judged as outliers and form the preliminary outlier sample; otherwise, go to Step 5. The remaining observations constitute the preliminary inlying group .

Step 2 (step forward test). Use the step forward test to check whether contains any outliers. For , , and is an outlier if ; otherwise, go to Step 4.

Step 3. Repeat the test in Step 2 until no outlier can be detected in . If is the smallest outlier in , then , ⋯, are outliers in the data, and the procedure stops.

Step 4 (step backward test). After the step forward test has stopped, use the step backward test to check the preliminary outlier sample from Step 1. For the significance level , if , then the step backward test ends; otherwise, use the step backward test to examine . Repeat this step until an outlier is detected. If is not judged as an outlier, then there is no outlier, and the whole sample is regarded as inlying data.

Step 5. Let , and substitute it into Step 1. If , there is no outlier in the sample, and the test procedure ends.
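The five steps can be sketched as follows. Several details of the steps are not reproduced above, so this is one possible reading rather than the authors' implementation: it assumes the ratio statistic (sum of the k largest observations over the sample total), estimates every critical value by Monte Carlo as in Algorithm 1, lowers k by one in Step 5, and, in the stepwise tests, applies the single-outlier (k = 1) statistic to the largest point of the reduced sample. The preliminary k is passed in rather than computed, and all names are hypothetical.

```python
import random

def ratio(sample, k):
    """Assumed statistic: (sum of the k largest observations) / (sum of all observations)."""
    ordered = sorted(sample)
    return sum(ordered[-k:]) / sum(ordered)

def critical_value(n, k, m, alpha, u, rng):
    """Monte Carlo (1 - alpha) quantile of the null ratio (Algorithm 1), theta fixed at 1."""
    vals = sorted(ratio([rng.gammavariate(m, 1.0) for _ in range(n)], k) for _ in range(u))
    return vals[min(u - 1, int((1 - alpha) * u))]

def largest_is_outlier(sample, m, alpha, u, rng):
    """Single-outlier (k = 1) test applied to the largest point of `sample`."""
    return ratio(sample, 1) > critical_value(len(sample), 1, m, alpha, u, rng)

def itk_sketch(data, m, k, alpha=0.05, u=2000, seed=0):
    """Return the upper outliers found by one possible reading of Steps 1-5."""
    rng = random.Random(seed)
    x = sorted(data)
    n = len(x)
    # Steps 1 and 5: block test of the k largest points, lowering k until it rejects
    while k > 0 and ratio(x, k) <= critical_value(n, k, m, alpha, u, rng):
        k -= 1
    if k == 0:
        return []
    # Steps 2-3 (step forward): test the largest point of the preliminary inlying group
    j = n - k  # x[j:] is the current outlier set
    while j > 1 and largest_is_outlier(x[:j], m, alpha, u, rng):
        j -= 1
    if j < n - k:
        return x[j:]
    # Step 4 (step backward): move up through the preliminary outliers, smallest first
    for i in range(n - k, n):
        if largest_is_outlier(x[: i + 1], m, alpha, u, rng):
            return x[i:]
    return []

scout = [0.88, 2.90, 0.21, 0.47, 3.44, 0.48, 0.83, 3.32, 0.58, 0.35,
         0.31, 0.53, 0.91, 0.65, 0.70, 0.80, 0.52, 0.13, 0.55, 0.85]
print(itk_sketch(scout, m=3.0, k=4, seed=1))  # [2.9, 3.32, 3.44]
```

On the scout data of Table A2 with m = 3, a preliminary k = 4, and α = 0.05, this sketch drops the fourth-largest position in the backward step and flags the three largest positions, in line with the conclusion reported in Section 5.2.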

5. Empirical Applications

In this section, we apply the ITK test to two data sets, alcohol-related mortality rates and artificial scout position data, and compare it with six other test statistics: , , , , , and .

5.1. Alcohol-Related Mortality Rates in Selected Countries in 2000

The dataset (see Appendix B) is taken from the Office for National Statistics (ONS). The Kolmogorov-Smirnov test does not reject the hypothesis that these data follow a gamma distribution.

Here, and so . We obtain by the Newton-Raphson algorithm. From Appendix B, we observe that . Further, computing the critical value of by Algorithm 1 in Section 2.3.1, we obtain . Clearly, , and hence are preliminarily detected as outliers.

Then, we apply the step forward test to the remaining sample. It is clear that . Thus, is an inlying observation.

We now apply the step backward test to . It is readily observed that , , and, at the 5% significance level, . As , are detected as upper outliers.

For comparison, we also use the , , , , , and test statistics to detect outliers; the results are shown in Table 3.

Table 3.

The outlier detection results of alcohol-related mortality rates by using various tests.

As Table 3 shows, ITK, , , and identify the outliers correctly without misjudgment, because k is chosen reasonably. We also observe that , , and perform poorly in multiple upper outlier detection.

Furthermore, Table 3 shows that Ireland, France, Austria, Slovenia, Portugal, Denmark, the United Kingdom of Great Britain and Northern Ireland, the Republic of Korea, the Russian Federation, and Australia have markedly higher alcohol-related mortality rates, suggesting that these countries should pay more attention to alcohol-related mortality.

5.2. Artificial Scout Position Data

In military intelligence applications, the gamma model is often used to describe the positions of objects. Consider a military scenario in which 20 scouts reconnoiter an area during a mission. Their location components are denoted by , , ⋯, 20, and the larger , the farther the scout is from the landing site. If deviates from the main group, the th soldier has become separated from the troops and may not receive support in time in an emergency. Therefore, it is necessary to monitor such movements.

In our setting, the basic model is gamma (3,5) and the alternative model is gamma (3,10). The initial data are outlined in Appendix B.

Here, is known. The sample size is 20, thus . From Appendix B, we observe that . At the significance level 0.05, we use the Monte Carlo method to calculate the critical value for and obtain . As , , , and are placed into the initial outlier group.

Next, we continue with the remaining sample and carry out the step forward test for . Since > , is not an outlier.

We then apply the step backward test to , , , . It is clear that and, at the significance level 0.05, . Since , is not an outlier. Moreover, since , the test procedure ends. Therefore, , and are outliers in the sample.

For comparison, we also use the , , , , , and test statistics to detect outliers; the results are shown in Table 4.

Table 4.

The outlier detection results of artificial scout position data.

Table 4 shows that the ITK method performs better than the other six methods (the , , , , , and test statistics), because it detects all outliers in the sample and has the lowest misjudgment probability.

Further, the ITK result shows that the scouts corresponding to the 18th, 19th, and 20th ordered observations, that is, the soldiers with IDs 2, 8, and 5 in Table A2 (positions 2.90, 3.32, and 3.44), are far away from the landing site. This means that they could be endangered in an emergency.

6. Concluding Remarks

The simulation shows that as k and n increase, our test statistic has higher power and a relatively lower “false alarm” than the other test statistics, especially at lower significance levels. However, the swamping effect still exists for , which may cause a loss of information. To reduce the impact of the swamping effect, we therefore design the ITK test. In the two real data analyses, the ITK test has the same high power as the test statistic and lower error probabilities than the other six test statistics (, , , , , and ). Overall, ITK shows the highest detection capability and the lowest “false alarm” among the tests considered, and we therefore recommend it for identifying multiple upper outliers in gamma samples.

In this paper, we design two algorithms, based on Monte Carlo simulation and kernel density estimation, to obtain the critical values of . How to derive the exact critical value of is an interesting open problem. Further, when k is unknown, we use a conservative estimate of . It is therefore worth studying how to choose a more appropriate value of k in the ITK method. This article discusses only the case of multiple upper outliers in a gamma sample. Since lower outliers, or both upper and lower outliers, may exist in practice, our outlier detection methods should be extended to these situations. In addition, the masking effect of our methods is not discussed in this paper and is left for future research. Extending our approaches to other distributions is also an important topic.

Author Contributions

Conceptualization, X.L., T.W. and G.Z.; methodology, X.L.; software, X.L.; validation, X.L., T.W. and G.Z.; formal analysis, X.L. and T.W.; writing—original draft preparation, X.L.; writing—review and editing, X.L., T.W. and G.Z.; visualization, X.L. and T.W.; supervision, G.Z.; project administration, G.Z.; funding acquisition, G.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Beijing Natural Science Foundation (Grant No. Z210003).

Data Availability Statement

Open source. Data presented in the article can be obtained by visiting https://www.ons.gov.uk/ (accessed on 29 November 2022).

Acknowledgments

The authors are grateful to the anonymous referees for helpful comments and suggestions that greatly improved this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Appendix A.1. Lemma 1 and Its Proof

Proof of Lemma 1.

For any n > k, we have . Thus, and are independent if and are independent. Note that , so and are independent if is independent of . Observe that are independent and identically distributed from gamma , so the joint density of () is

Following [15], let ; then the joint density of is

It can be observed that the marginal densities of and are given by

and

respectively. Clearly, , so is independent of . Therefore, Lemma 1 is proved. □

Appendix A.2. Proofs of Theorems

Proof of Theorem 1.

Consider the null distribution defined by Equation (6) and the alternative distribution defined by Equation (7). The proof of Theorem 1 extends that in [1], which discusses single outlier detection in the exponential distribution. Suppose there are n observations, denoted by , , ⋯, ; in particular, (, ⋯, n) is an observation from the sample that consists of the k largest points. Therefore, the alternative hypothesis is

Denoting and , we first prove that the test statistic T is an MLR test statistic. Since {} is a random sample from (6) under H, the likelihood function is

Denote the associated log likelihood function as , and let , , then we obtain the maximum likelihood estimates of m and , denoted by and , i.e.,

and satisfies ; there is no closed-form solution for . The numerical value of can be obtained by the Newton-Raphson algorithm or from extra-sample information. Therefore, if m is known and we substitute into , then

Similarly, under the alternative hypothesis , we have

Therefore, reject H if , i.e., , ⋯, are outliers if . Thus, we consider

where . Obviously, the derivative of Equation (A10) with respect to T is

It is clear that for , and is monotonically increasing in T. Thus, is an MLR test statistic; see [1,16,17].

In practice, it is difficult to ensure that is not only the th observation but also one of the k largest observations. Therefore, to extend the statistic T to ordered samples, we use multiple decision procedures. The null hypothesis remains unchanged, and the th alternative hypothesis is

The number of such alternative hypotheses is , and

where with . Subject to a given probability of correctly adopting the null hypothesis H, the decision criterion maximizes the probability of adopting the correct . For the gamma model, the multiple decision procedure adopts if is maximized and is sufficiently large. Because the observations are one-dimensional and only upper outliers are considered, the appropriate test statistic is

Theorem 1 is proved. □

Proof of Theorem 2.

Following [18], denote by and the density function and the cumulative distribution function (CDF) of , respectively; then

Denote , so

Note that and are mutually exclusive for any ; thus, by the additivity of probability measures,

where . Note that

Since ,⋯, are independent and identically distributed from gamma (m, θ), follows beta (). Therefore,

Because and are independent, we obtain

Theorem 2 is proved. □

Appendix B

The appendix lists the alcohol-related mortality rates in selected countries in 2000 and artificial scout position data.

Table A1.

Alcohol-related mortality rates in selected countries in 2000.


| Country | Mortality |
|---|---|
| Afghanistan | 0.01 |
| Algeria | 0.25 |
| Angola | 1.85 |
| Armenia | 2.90 |
| Australia | 10.17 |
| Austria | 13.2 |
| Azerbaijan | 0.65 |
| Bahrain | 2.15 |
| Bangladesh | 0.01 |
| Benin | 1.34 |
| Bhutan | 0.17 |
| Bolivia (Plurinational State of) | 2.32 |
| Brunei Darussalam | 0.37 |
| Cambodia | 1.51 |
| Central African Republic | 1.51 |
| Chad | 0.25 |
| Colombia | 4.66 |
| Comoros | 0.09 |
| Congo | 2.26 |
| Democratic Republic of the Congo | 1.98 |
| Denmark | 11.69 |
| Djibouti | 1.34 |
| Egypt | 0.14 |
| El Salvador | 2.79 |
| Eritrea | 0.83 |
| Estonia | 0.01 |
| Ethiopia | 0.88 |
| Fiji | 2.05 |
| France | 13.63 |
| Gambia | 2.18 |
| Ghana | 1.60 |
| Guatemala | 2.63 |
| Guinea | 0.17 |
| Guinea-Bissau | 2.84 |
| Honduras | 2.61 |
| Iceland | 6.17 |
| India | 0.93 |
| Indonesia | 0.06 |
| Iran | 0.01 |
| Iraq | 0.20 |
| Ireland | 14.07 |
| Israel | 2.53 |
| Jordan | 0.49 |
| Kenya | 1.51 |
| Kiribati | 0.46 |
| Kuwait | 0.01 |
| Kyrgyzstan | 2.13 |
| Lebanon | 2.26 |
| Libya | 0.01 |
| Madagascar | 1.16 |
| Malawi | 1.18 |
| Malaysia | 0.54 |
| Maldives | 1.83 |
| Mali | 0.47 |
| Mauritania | 0.03 |
| Mexico | 4.99 |
| Micronesia (Federated States of) | 2.23 |
| Mongolia | 2.79 |
| Montenegro | 0.01 |
| Morocco | 0.45 |
| Mozambique | 1.14 |
| Myanmar | 0.35 |
| Nepal | 0.08 |
| Niger | 0.1 |
| Oman | 0.38 |
| Pakistan | 0.02 |
| Papua New Guinea | 0.73 |
| Portugal | 11.89 |
| Qatar | 0.5 |
| Republic of Korea | 10.33 |
| Russian Federation | 10.18 |
| Samoa | 3 |
| Saudi Arabia | 0.05 |
| Senegal | 0.29 |
| Singapore | 2.03 |
| Slovenia | 11.9 |
| Solomon Islands | 0.71 |
| Somalia | 0.01 |
| Sri Lanka | 1.45 |
| Sudan | 1.76 |
| Syrian Arab Republic | 1.41 |
| Tajikistan | 0.37 |
| The former Yugoslav republic of Macedonia | 2.86 |
| Timor-Leste | 0.5 |
| Togo | 1.1 |
| Tonga | 1.24 |
| Tunisia | 1.21 |
| Turkey | 1.54 |
| Turkmenistan | 2.9 |
| United Arab Emirates | 1.64 |
| United Kingdom of Great Britain and Northern Ireland | 10.59 |
| Uzbekistan | 1.6 |
| Vanuatu | 1.21 |
| Viet Nam | 1.6 |
| Yemen | 0.07 |
| Zambia | 2.62 |
| Zimbabwe | 1.68 |

Table A2.

Artificial scout position data.


| Soldier’s ID | Position |
|---|---|
| 1 | 0.88 |
| 2 | 2.90 |
| 3 | 0.21 |
| 4 | 0.47 |
| 5 | 3.44 |
| 6 | 0.48 |
| 7 | 0.83 |
| 8 | 3.32 |
| 9 | 0.58 |
| 10 | 0.35 |
| 11 | 0.31 |
| 12 | 0.53 |
| 13 | 0.91 |
| 14 | 0.65 |
| 15 | 0.70 |
| 16 | 0.80 |
| 17 | 0.52 |
| 18 | 0.13 |
| 19 | 0.55 |
| 20 | 0.85 |

References

- Barnett, V.; Lewis, T. Outliers in Statistical Data, 3rd ed.; John Wiley & Sons: Chichester, UK, 1994; pp. 1–76.

- Hawkins, D.M. Identification of Outliers; Springer: Dordrecht, The Netherlands, 1980; pp. 1–67.

- Hampel, F.R.; Ronchetti, E.M.; Rousseeuw, P.; Stahel, W.A. Robust Statistics: The Approach Based on Influence Functions; Wiley-Interscience: New York, NY, USA, 1986; pp. 1–67.

- Smola, A.J.; Schölkopf, B. Learning with Kernels; GMD-Forschungszentrum Informationstechnik: Berlin, Germany, 1998; pp. 27–42.

- Sebert, D.M.; Montgomery, D.C.; Rollier, D.A. A clustering algorithm for identifying multiple outliers in linear regression. Comput. Stat. Data Anal. 1998, 27, 461–484.

- Dixon, W.J. Ratios involving extreme values. Ann. Math. Stat. 1951, 22, 68–78.

- Likeš, J. Distribution of Dixon’s statistics in the case of an exponential population. Metrika 1967, 11, 46–54.

- Singh, A.K.; Lalitha, S. Detection of upper outliers in gamma sample. J. Stat. Appl. Probab. Lett. 2018, 5, 53–62.

- Singh, A.K.; Singh, A.; Patawa, R. Multiple upper outlier detection procedure in generalized exponential sample. Eur. J. Stat. 2021, 1, 58–73.

- Nooghabi, M.J.; Nooghabi, H.J.; Nasiri, P. Detecting outliers in gamma distribution. Commun. Stat. Theory Methods 2010, 39, 698–706.

- Zerbet, A.; Nikulin, M. A new statistic for detecting outliers in exponential case. Commun. Stat. Theory Methods 2003, 32, 573–583.

- Lalitha, S.; Kumar, N. Multiple outlier test for upper outliers in an exponential sample. J. Appl. Stat. 2012, 39, 1323–1330.

- Kumar, N.; Lalitha, S. Testing for upper outliers in gamma sample. Commun. Stat. Theory Methods 2012, 41, 820–828.

- Tietjen, G.L.; Moore, R.H. Some Grubbs-type statistics for the detection of several outliers. Technometrics 1972, 14, 583–597.

- Mathai, A.M.; Moschopoulos, P.G. A form of multivariate gamma distribution. Ann. Inst. Stat. Math. 1992, 44, 97–106.

- Neyman, J.; Pearson, E.S. On the use and interpretation of certain test criteria for purposes of statistical inference: Part II. Biometrika 1928, 20A, 263–294.

- Domański, P.D. Study on statistical outlier detection and labelling. Int. J. Autom. Comput. 2020, 17, 788–811.

- Lewis, T.; Fieller, N.R.J. A recursive algorithm for null distributions for outliers: I. gamma samples. Technometrics 1979, 21, 371–376.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).