Abstract

The filled function method is an effective way to solve global optimization problems. However, its effectiveness is greatly affected by the selection of parameters, and the non-continuous or non-differentiable properties of the constructed filled function. To overcome the above-mentioned drawbacks, in this paper, a new parameterless filled function is proposed that is continuous and differentiable. Theoretical proofs have been made to show the properties of the proposed filled function. Based on the new filled function, a filled function algorithm is proposed to solve unconstrained global optimization problems. Experiments are carried out on widely used test problems and an application of supply chain problems with equality and inequality constraints. The numerical results show that the proposed filled function is effective.

Keywords:

filled function method; global optimization; parameterless filled function; mathematical programming

MSC:

65K10

1. Introduction

Global optimization is a rich and widely used subject in mathematics. With the development of science and information technology, global optimization has been widely applied to economic models, finance, image processing, machine design and so on. Therefore, the theories and methods of global optimization deserve in-depth study. With the efforts of scholars in this field, various methods have been developed for global optimization. However, finding the global optimal solution is usually not easy due to two properties of global optimization problems: (1) there usually exist many local optimal solutions, and (2) optimization algorithms are easily trapped in a local optimal solution and unable to escape. Therefore, one key problem is how to help the optimization method escape from local optimal solutions. The filled function method is specifically designed to solve this problem. We now introduce some basic information about the filled function method.

The filled function method was first proposed by Ge [1] in which he constructed an auxiliary function named filled function to help the optimization algorithm escape from local optimal solutions. In the following, we will introduce the original definition of the filled function proposed by Ge [1] and its related concepts. In this paper, we consider the following optimization problem:

where n is the dimension of the objective function , which is continuous and differentiable. has a finite number of local optimal solutions . Suppose is the local optimal solution found by the optimization algorithm in the iteration; the definition of basin is as follows.

Definition 1.

The basin of the objective function at an isolated minimum (local optimal solution) refers to the connected domain that contains , and in this domain, the steepest descent trajectory of will converge to starting from any initial point, but outside the basin, the steepest descent trajectory of does not converge to .

A basin at is lower (or higher) than basin at iff

A basin is actually an area that contains one local optimal solution. Within this area, the gradient descent optimization algorithm will converge to the corresponding local optimal solution no matter what the initial point is. One basin is lower than the other basin if its corresponding local optimal solution is smaller (better for minimization problems).
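The notion of a basin can be illustrated with a small numerical sketch. The one-dimensional objective below is a hypothetical example (it is not one of the test problems of this paper), and fixed-step gradient descent is used as a discrete stand-in for the steepest descent trajectory of Definition 1. Two starting points on either side of the local maximum converge to two different local minima, and comparing the function values shows which basin is the lower one.

```python
def f(x):
    # Hypothetical 1-D objective with two local minima (assumption, not from the paper).
    return x**4 - 2.0 * x**2 + 0.5 * x


def grad_f(x):
    # Analytic derivative of f.
    return 4.0 * x**3 - 4.0 * x + 0.5


def descend(x, step=0.01, iters=5000):
    # Fixed-step gradient descent: follows the descent direction until it
    # settles at the local minimum of the basin that contains the start.
    for _ in range(iters):
        x -= step * grad_f(x)
    return x


left = descend(-0.3)   # start in the basin of the negative minimum
right = descend(0.6)   # start in the basin of the positive minimum

# The basin at `left` is lower than the basin at `right` iff f(left) < f(right).
left_basin_is_lower = f(left) < f(right)
```

Any starting point between the local maximum (near x = 0.127) and the right end of the region leads to `right`, which is exactly what makes that interval one basin.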

Definition 2.

A function is called a filled function of at a local minimum if it satisfies the following properties:

- 1.

- is a strictly local maximum of , and the whole basin of becomes a part of a hill of .

- 2.

- has no minima or stable points in any basin of higher than .

- 3.

- If has a lower basin than , then there is a point in such a basin that minimizes on the line through x and .

From the definition of the filled function, we can see that the three properties together ensure that the optimization algorithm escapes from one local optimal solution to a better one. For example, suppose the optimization algorithm is trapped in a local optimal solution and cannot escape. We then construct a filled function to help escape . The first property of the filled function makes the local optimal solution become a local worst solution of the filled function. In this case, when the filled function is optimized, the search will surely leave the local worst solution; that is, it escapes from the local optimal solution . The second property of the filled function ensures that when optimizing , the search will not end up at a worse solution than because there are no minima or stable points in any basin higher than . Instead, the optimization procedure of will enter a basin that is better than , if such a basin exists. The overall optimization procedure is as follows. First, it starts from an initial point to optimize the objective function and find a local optimal solution (e.g., ). Secondly, a filled function at this point is constructed (e.g., ) and optimized starting from . After the optimization of , the algorithm will enter a better region (basin), as ensured by the properties of the filled function. Thirdly, starting from the new better basin, the algorithm continues to optimize to find a better local optimal solution than . By repeating the above steps, the algorithm moves from one local optimal solution to better ones until the global optimal solution is found.

2. Related Work

With optimization algorithms extensively used in various fields, more and more efforts are devoted to optimization theory. As a deterministic algorithm for optimization, the filled function method has drawn a lot of attention. The main idea of the filled function method is to locate a current local optimal solution by any local search algorithm and then construct an auxiliary function called the filled function at that local optimal solution. The filled function should have three good properties in order to help it escape from the current local optimal solutions and enter regions that contain better solutions.

The first definition of a filled function was proposed by Ge in ref. [1], in which he constructed a filled function with two parameters:

Experiments show this filled function is effective. However, it has two disadvantages. First, this filled function has two adjustable parameters r and , whose values must be tuned to ensure that the global optimal solution is not missed in the optimization procedure. Secondly, since there is an exponent term in the denominator, when it gets larger the function value becomes smaller, and thus the filled function may locate a fake stable point.

In order to improve the efficiency of the filled function method, a lot of effort has been made, and new contributions have been achieved. In ref. [2], a filled function with only one parameter is proposed that also has no exponent term:

However, this filled function is undefined at . To overcome the discontinuity and non-differentiability of such filled functions, Liu proposed a class of filled functions that is continuous and differentiable [3]:

where u and w are two real functions that are twice continuously differentiable in their domains, satisfying the following conditions:

However, this class of filled functions is not easy to construct and still contains two parameters to adjust. Afterward, new continuously differentiable filled functions were proposed [4,5,6], yet these filled functions all have one or two parameters. To ease the parameter-adjusting problem of the filled function, the authors of [7] proposed a filled function with two parameters and gave a reasonable and effective way to choose the parameters. In ref. [8], the authors proposed a filled function without any parameter. This filled function contains no exponent term and is simple in form; however, it is not continuously differentiable, which may produce extra local optimal solutions. To overcome this problem, the authors of [9] proposed a continuously differentiable filled function without any adjusting parameter:

Afterward, researchers have proposed more continuous differentiable filled functions without parameters, such as in ref. [10,11,12,13,14]. The authors of [12] proposed a new continuous differentiable filled function without any parameter or exponent term:

These parameterless and continuously differentiable filled functions have several advantages. First, more efficient local search algorithms can be applied. Secondly, they are less likely to produce extra fake local optimal solutions. Thirdly, no parameter adjusting is needed. Thus, this kind of filled function can improve the efficiency of filled function methods.

To further enhance the efficiency of filled function methods, a two-stage method with a stretch function was proposed [15]. After a current local minimum is located in the stage of optimizing the objective function, a stretch function is used to make this local minimum higher. Then a filled function is constructed and optimized in the second stage. However, this filled function is not continuous, which means classical efficient local search methods cannot be applied to this method.

The authors of [16] proposed a new algorithm based on the filled function. First, a multi-dimensional objective function is transformed into one-dimensional functions, and then, for each direction, a filled function is constructed to optimize the one-dimensional function. To overcome the potential failure in which only a local minimum is found, the authors of [17] proposed a new filled function method. By combining an adaptive strategy of determining the initial points and a narrow valley widening strategy, the ability to escape the local minimum and locate the global minimum is further enhanced. In ref. [18], the authors proposed a new filled function using a smoothing method to eliminate local optimal solutions. Further, an adaptive method is used to determine the step length and shallow valleys.

The filled function method is now used not only in unconstrained optimization problems but has also been extended to constrained optimization problems with inequalities, bi-level programming, nonlinear integer programming and non-smooth constrained problems. The authors of [19] proposed a continuously differentiable filled function with one parameter to solve constrained optimization problems. The authors of [20] proposed a single-parameter filled function and applied it to a supply chain problem, which is a nonlinear programming problem with equality and inequality constraints. For bi-level programming with inequality and equality constraints, the authors of [21] first transformed the bi-level programming problem into a single-layer constrained optimization problem and then constructed the filled function by combining it with penalty functions.

The authors of [22] first transformed the original problem into an equivalent constrained optimization problem and then constructed a filled function to solve it.

For the following type of constrained global optimization P:

where is an integer set of and is bounded. The authors of [23] proposed a method to transform this constrained problem into a box-constrained integer programming problem and then constructed a filled function to solve it. In ref. [24], the authors proposed a parameterless filled function to solve nonlinear equations with box constraints.

The filled function method has also been extended to non-smooth optimization problems. The authors of [25,26] proposed a one-parameter filled function based on a new definition of the filled function for a non-smooth constrained programming problem.

Based on the idea of the filled function, in this paper, a new parameterless filled function is proposed that is continuous and differentiable. The properties of the new filled function are proven in Section 3. Based on it, a filled function algorithm is also proposed to handle unconstrained optimization problems. Numerical experiments are carried out, and comparisons are made in Section 4.

3. A New Parameterless Filled Function and a Filled Function Algorithm

In this section, a new filled function is proposed with the advantages of being parameterless, continuous and differentiable. The three properties of the proposed filled function are described and proven. Based on it, a new filled function method is designed to solve unconstrained optimization problems.

3.1. A New Parameterless Filled Function and Its Properties

The first definition of the filled function was given in [1]. However, the third property of that definition is not entirely clear, e.g., it is not clear where the point is and where the line is through x and . To make the definition clearer and stricter, some scholars gave several revised definitions of the filled function [27,28]. In this paper, we use the revised definition from ref. [9], since it is clearer and stricter by using the gradient. The revised definition is as follows.

Definition 3.

A function is called a filled function of at a local minimum if it satisfies the following properties:

- 1.

- is a strictly local maximum of , and the whole basin of becomes a part of a hill of .

- 2.

- For any , we have , where .

- 3.

- If is not empty, then there exists , such that is a local minimum of .

Now we give a brief explanation of the revised definition of the filled function. Property 1 is the same as the original definition, which turns the local minimum of the objective function into a local maximum of the filled function. In this case, when optimizing the filled function, it is easy to escape since it is a local maximum (worst solution for the minimization problem). Property 2 makes sure the optimization procedure will not end up with solutions worse than the current local minimum because there are no stationary points there. Property 3 means that it is easy for the optimization procedure to end in a region that contains a better solution than the current local minimum because in that region, there exists a local minimum. Therefore, the three properties together will drive the optimization procedure to escape from the current local minimum and enter a better region that contains a better solution.
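To make the three properties concrete, the sketch below checks them numerically on a one-dimensional toy problem. Both the objective `f` and the filled function `F` are illustrative assumptions: `F(x) = g(f(x) - f(x*)) / (1 + (x - x*)^2)` with `g(t) = 1` for `t >= 0` and `g(t) = 1 - t^2` for `t < 0` is a simple parameterless, continuously differentiable stand-in chosen for this example, not the filled function proposed in this paper. The checks are grid-based and only say something about this particular example.

```python
def f(x):
    # Hypothetical 1-D objective with two basins (assumption, not from the paper).
    return x**4 - 2.0 * x**2 + 0.5 * x


def df(x):
    return 4.0 * x**3 - 4.0 * x + 0.5


def local_min_near(x, iters=50):
    # Newton iterations on f'(x) = 0; adequate for this smooth toy problem.
    for _ in range(iters):
        x -= df(x) / (12.0 * x**2 - 4.0)
    return x


x_star = local_min_near(0.9)   # local minimum of the higher basin
f_star = f(x_star)


def F(x):
    # Assumed stand-in filled function at x_star (NOT the paper's function).
    t = f(x) - f_star
    g = 1.0 if t >= 0.0 else 1.0 - t * t
    return g / (1.0 + (x - x_star) ** 2)


def dF(x, h=1e-6):
    # Central-difference derivative of F.
    return (F(x + h) - F(x - h)) / (2.0 * h)


grid = [-2.0 + 0.001 * i for i in range(4001)]
higher = [x for x in grid if f(x) >= f_star and abs(x - x_star) > 1e-3]
lower = [x for x in grid if f(x) < f_star]

# Property 1: x_star is a strict local maximum of F.
prop1 = all(F(x) < F(x_star) for x in grid if 1e-3 < abs(x - x_star) < 0.2)
# Property 2: F has no stationary point where f(x) >= f(x_star), x != x_star.
prop2 = min(abs(dF(x)) for x in higher) > 1e-4
# Property 3: F attains a local minimum at some point with f(x) < f(x_star).
x_bar = min(lower, key=F)
```

On this example `x_bar` lies well inside the lower region, so a descent on `F` that starts next to `x_star` ends in the lower basin, which is the behaviour that properties 1 to 3 are designed to guarantee.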

Based on Definition 3, we design a new parameterless filled function that is also continuous and differentiable:

The new filled function mainly has two advantages. First, it has no parameter to adjust, which makes it easier to apply to different optimization problems. Secondly, the new filled function is continuous and differentiable. Note that continuity and differentiability are two excellent properties of filled function. Compared to filled functions that are not continuous or differentiable, it is easier to optimize since more choices of algorithms, especially more efficient algorithms designed for continuous differentiable functions can be used, and it is also not easy to generate extra local optimal solutions during the optimization. Now, we will first prove that the new filled function is continuously differentiable and then prove that it fulfills the three properties of the definition of the filled function.

The only point at which the filled function may fail to be continuously differentiable is in ; hence, if is continuously differentiable at , the filled function is continuously differentiable.

Since , the new filled function is continuous.

Since

and

Thus, , so the new filled function is differentiable. Now we will prove that satisfies the three properties of filled function.

Theorem 1.

Suppose is a local minimum of the objective function and is the filled function constructed at , then is a strictly local maximum of .

Proof.

Suppose is the basin containing (please refer to Definition 1 about basin), since is a local minimum of , so , we have . Thus, , in this case . According to the construction of the filled function , we get

Thus, , which means that is the strict local maximum of . □

Theorem 2.

For any , we have , where , .

Proof.

Since , for any , we have , thus

and

This proves Theorem 2. □

Theorem 3.

If is not empty, then there exists , such that is a local minimum of .

Proof.

Since is not empty, then must have a minimum in .

Since is continuous and differentiable on , it must have a minimum, say at . Because is differentiable at , then this minimum must be a stationary point, that is, .

Since is not empty, then there exists a point such that . Thus and . Therefore, we know that (according to the definition of from Theorem 2); therefore, . □

3.2. A Filled Function Algorithm to Solve Unconstrained Optimization Problems

Based on the proposed filled function, we design a filled function algorithm to solve unconstrained optimization problems. The steps of the algorithm are as follows.

- Initialization. Randomly generate 10 points in the feasible region and choose the point with the best function value as the initial point . Then set , to record the best solution and its corresponding function value, and set as the stopping criterion and as the iteration counter.

- Optimize the objective function . Starting from the initial point , we use the BFGS Quasi-Newton Method as the local search method to optimize the objective function to locate a local optimal point . The main steps of the BFGS method are shown in Algorithm 1.

- Construct the filled function at :

- Optimize the filled function . Set + as the initial point, and use the BFGS Quasi-Newton Method as the local search method to optimize the filled function and obtain a local minimum point of . It is known from property 3 of the filled function that point must lie in a lower basin than .

- Set the point as the initial point, and continue to optimize the objective function to obtain a new local minimum point of .

- Determine whether is less than . If so, update by and by , let , and go to step 2; otherwise, is taken as the global optimum and the algorithm terminates.
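The steps above can be sketched in a few lines of Python. This is a minimal one-dimensional illustration under several assumptions that are not part of the paper: the objective `f` is a hypothetical toy function, the filled function is a simple parameterless stand-in (not the one proposed here), the local search is a backtracking gradient descent used in place of BFGS, the perturbation `delta` is tried in both directions, and a filled-function search that leaves the region `[lo, hi]` is treated as a failure.

```python
import random


def f(x):
    # Hypothetical 1-D objective with two basins (not one of the paper's test cases).
    return x**4 - 2.0 * x**2 + 0.5 * x


def num_grad(fun, x, h=1e-6):
    # Central-difference derivative, so the same search works for f and F.
    return (fun(x + h) - fun(x - h)) / (2.0 * h)


def local_search(fun, x, lo, hi, max_move=0.05, tol=1e-6, iters=5000):
    # Backtracking gradient descent: a simple stand-in for BFGS.
    # Returns None if the iterate leaves the search region [lo, hi].
    for _ in range(iters):
        g = num_grad(fun, x)
        if abs(g) < tol:
            break
        step = min(1.0, max_move / abs(g))  # cap the move to avoid jumping basins
        fx = fun(x)
        while step > 1e-12 and fun(x - step * g) > fx - 1e-4 * step * g * g:
            step *= 0.5
        x -= step * g
        if not lo <= x <= hi:
            return None
    return x


def make_filled(fun, x_star):
    # Assumed stand-in filled function, NOT the one proposed in the paper.
    f_star = fun(x_star)

    def filled(x):
        t = fun(x) - f_star
        g = 1.0 if t >= 0.0 else 1.0 - t * t
        return g / (1.0 + (x - x_star) ** 2)

    return filled


def filled_function_search(fun, lo, hi, x0=None, delta=0.1, seed=0):
    if x0 is None:
        # Initialization: best of 10 random points in the region.
        rng = random.Random(seed)
        x0 = min((rng.uniform(lo, hi) for _ in range(10)), key=fun)
    x_star = local_search(fun, x0, lo, hi)       # optimize the objective
    while True:
        filled = make_filled(fun, x_star)        # construct F at x_star
        better = None
        for direction in (1.0, -1.0):
            # Optimize F starting from a perturbed copy of x_star.
            x_bar = local_search(filled, x_star + direction * delta, lo, hi)
            if x_bar is None:
                continue
            # Optimize the objective again, starting from the minimum of F.
            cand = local_search(fun, x_bar, lo, hi)
            if cand is not None and fun(cand) < fun(x_star) - 1e-8:
                better = cand
                break
        if better is None:
            return x_star                        # no lower basin was found
        x_star = better


x_best = filled_function_search(f, -2.0, 2.0, x0=0.6)
```

Starting from `x0=0.6`, the first local search stops at the higher local minimum; the search on the filled function then carries the iterate into the lower basin, and the second local search reaches the lower minimum, after which no further improvement is found.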

Algorithm 1. Main steps of the BFGS Quasi-Newton Method.

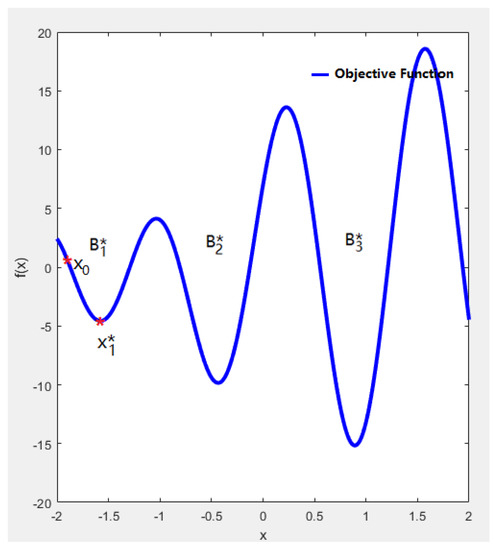
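For readers who prefer code, a textbook form of BFGS can be sketched as follows. This is an illustrative version only: it maintains an approximation `H` of the inverse Hessian, uses a backtracking (Armijo) line search, and skips the update when the curvature condition fails. These choices, the tolerances and the quadratic test objective are assumptions and may differ in detail from Algorithm 1.

```python
def bfgs(f, grad, x0, tol=1e-8, max_iter=200):
    n = len(x0)
    x = list(x0)
    # H approximates the inverse Hessian; start from the identity matrix.
    H = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    g = grad(x)
    for _ in range(max_iter):
        if max(abs(gi) for gi in g) < tol:
            break
        # Quasi-Newton search direction d = -H g.
        d = [-sum(H[i][j] * g[j] for j in range(n)) for i in range(n)]
        slope = sum(gi * di for gi, di in zip(g, d))
        # Backtracking line search with the Armijo sufficient-decrease test.
        t, fx = 1.0, f(x)
        while t > 1e-12 and f([xi + t * di for xi, di in zip(x, d)]) > fx + 1e-4 * t * slope:
            t *= 0.5
        x_new = [xi + t * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]   # step taken
        y = [a - b for a, b in zip(g_new, g)]   # change in gradient
        sy = sum(si * yi for si, yi in zip(s, y))
        if sy > 1e-12:  # update only when the curvature condition holds
            rho = 1.0 / sy
            Hy = [sum(H[i][j] * y[j] for j in range(n)) for i in range(n)]
            yHy = sum(yi * hyi for yi, hyi in zip(y, Hy))
            # Expanded form of (I - rho s y^T) H (I - rho y s^T) + rho s s^T.
            for i in range(n):
                for j in range(n):
                    H[i][j] += ((1.0 + rho * yHy) * rho * s[i] * s[j]
                                - rho * (Hy[i] * s[j] + s[i] * Hy[j]))
        x, g = x_new, g_new
    return x


def quad(x):
    # Hypothetical convex test objective with minimum at (1, -2).
    return (x[0] - 1.0) ** 2 + 10.0 * (x[1] + 2.0) ** 2


def quad_grad(x):
    return [2.0 * (x[0] - 1.0), 20.0 * (x[1] + 2.0)]


x_min = bfgs(quad, quad_grad, [0.0, 0.0])
```

Because only gradients are needed, the same routine can be applied to a filled function as long as that function is continuously differentiable, which is the practical reason this property matters for the method.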

In the following, we use an example to demonstrate the optimization procedure of the filled function algorithm. Figure 1 shows the objective function with the search region [−2, 2]. From Figure 1, we can see that has three basins , and in the search region, where is the lowest basin that contains the global optimal solution. Suppose the optimization procedure starts from ; using the BFGS local search method we can obtain a local minimal solution of the objective function .

Figure 1.

Illustration of steps 1 and 2 of the filled function algorithm.

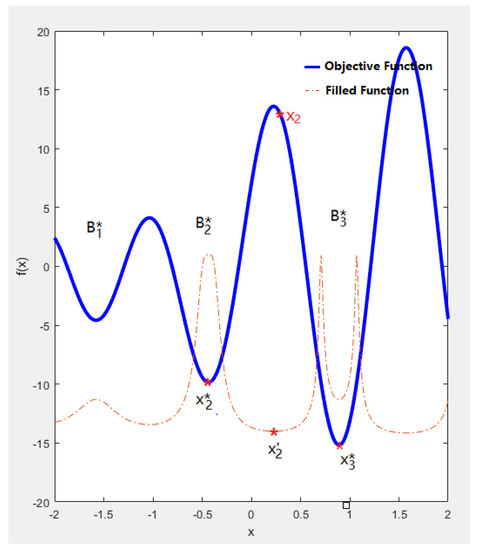

To escape from this local minimum , we construct the filled function at , as shown in Figure 2.

Figure 2.

Illustration of steps 3 to step 5 of the filled function algorithm.

From Figure 2, we can see that is a strictly local maximum (maximal point) of , which is guaranteed by the definition of the filled function. Therefore, a local search of , starting from point , can easily escape from this point and yield a local minimum of . Next, using as the initial point to optimize the objective function , we can obtain another local minimal solution that is better than . At this time, the first iteration is completed.

To escape from the local minimum , we repeat the above steps to construct the filled function at , as shown in Figure 3.

Figure 3.

Illustration of the filled function algorithm in the second iteration.

Similarly, peaks at point , which makes it easy to escape from this point. We continue to optimize to obtain a local minimal point . Then, using as the initial point to optimize the objective function , a new better local optimal solution is obtained. Now, the second iteration is completed. We continue the above procedure to optimize the objective function and filled function alternately to escape from the current local optimal solution to a better one till the global optimal solution is located.

From the above optimization procedure, we can clearly see that the proposed method can easily and continuously escape from a current local optimal solution to obtain a better one until the global optimal solution is located. This is a good way to overcome the disadvantage of premature convergence of optimization algorithms. Moreover, the proposed method also has three other advantages. First, since the proposed filled function is parameterless, the algorithm has no adjustable parameters to tune for different problems. Secondly, since the new filled function is continuous and differentiable, the proposed algorithm is less apt to produce an extra local minimum, while more choices of local search methods, especially the efficient gradient-based ones, can be applied to make the optimization more efficient and effective. Thirdly, once the filled function is designed and constructed, it is easy to implement and apply to different optimization problems.

There are mainly two disadvantages of the filled function method. First, it is not easy to design a good filled function and each time when a local optimal solution is found, the filled function has to be constructed. Secondly, the filled function method becomes less effective when the dimensionality of the problem is large. More research is needed to extend the scope of the filled function method.

4. Numerical Experiments

The proposed filled function algorithm is implemented in MATLAB 2021 and tested on widely used test problems. Comparisons are made with a state-of-the-art filled function algorithm [18], another continuously differentiable filled function algorithm [5] and Ge’s filled function algorithm [1]. The test problems used in this paper are listed as follows.

Test case 1. (The Rastrigin function)

The global minimum solution is , and the corresponding function value is .

Test case 2. (Two-dimensional function)

where . The global minimum solution is , and the corresponding function value is for all values of c.

Test case 3. (Three-hump back camel function)

The global minimum solution is , and the corresponding function value is .

Test case 4. (Six-hump back camel function)

The global minimum solution is , and the corresponding function value is .
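For reference, the commonly cited forms of the two camel-back benchmarks can be written as below. This is a sketch of the standard definitions found in benchmark collections; the variants used in the experiments here may differ, for example in the sign of the cross term.

```python
def three_hump_camel(x1, x2):
    # Standard three-hump camel function; global minimum 0 at (0, 0).
    return 2.0 * x1**2 - 1.05 * x1**4 + x1**6 / 6.0 + x1 * x2 + x2**2


def six_hump_camel(x1, x2):
    # Standard six-hump camel function; two global minima of about -1.0316
    # near (0.0898, -0.7126) and (-0.0898, 0.7126).
    return ((4.0 - 2.1 * x1**2 + x1**4 / 3.0) * x1**2
            + x1 * x2
            + (-4.0 + 4.0 * x2**2) * x2**2)


value_at_origin = three_hump_camel(0.0, 0.0)
value_at_known_min = six_hump_camel(0.0898, -0.7126)
```

Both functions are smooth polynomials with several local minima in a small box, which is why they are popular for checking whether a method can leave a non-global basin.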

Test case 5. (Treccani function)

The global minimum solution is and , and the corresponding function value is .

Test case 6. (Two-dimensional Shubert function)

There are multiple local minimum solutions in the feasible region, and the global minimum function value is .
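A commonly used form of the two-dimensional Shubert function is sketched below; it is the product of two identical one-dimensional cosine sums, and its global minimum value is about -186.73, attained at many points in the usual box. This is the standard benchmark definition and is given as an assumption; the exact variant and search region used in the experiments may differ.

```python
import math


def shubert_2d(x1, x2):
    # Standard 2-D Shubert function: product of two identical cosine sums.
    def inner(x):
        return sum(i * math.cos((i + 1) * x + i) for i in range(1, 6))

    return inner(x1) * inner(x2)


# Coarse grid scan over a small box that contains one global minimizer
# (near (-1.4251, -0.8003)); the box and grid step are illustrative choices.
best_on_grid = min(
    shubert_2d(-2.0 + 0.01 * i, -2.0 + 0.01 * j)
    for i in range(201)
    for j in range(201)
)
```

The scan only illustrates how rugged the landscape is; a grid of this kind does not scale with the dimension, which is the motivation for local searches combined with an escape mechanism.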

Test case 7. (n-dimensional function)

where

The global minimum solution is , and the corresponding function value is for all values of n.

First, all results obtained by the new filled function algorithm are listed in Tables 1–14. Further, comparisons are made with another continuously differentiable filled function algorithm, CDFA in [5]. In these tables, we use the following notations:

the local minimum of the objective function in the iteration.

the function value of the objective function at .

the iteration counter.

the total function evaluations of the objective function and the filled function.

CDFA: the filled function algorithm proposed in [5].

FFFA: the filled function algorithm proposed in [18].

Table 1.

Results of Problem 1.

Table 2.

Results of Problem 2 (c = 0.2).

Table 3.

Results of Problem 2 (c = 0.5).

Table 4.

Results of Problem 2 (c = 0.05).

Table 5.

Results of Problem 3.

Table 6.

Results of Problem 4.

Table 7.

Additional results of Problem 4.

Table 8.

Results of Problem 5.

Table 9.

Results of Problem 6.

Table 10.

Results of Problem 7 (n = 2).

Table 11.

Results of Problem 7 (n = 3).

Table 12.

Results of Problem 7 (n = 5).

Table 13.

Results of Problem 7 (n = 7).

Table 14.

Results of Problem 7 (n = 10).

Tables 1–14 show the numerical results of the test problems under different test settings (different parameters and different dimensions) obtained by the proposed filled function algorithm. In these tables, k is the number of iterations needed by the filled function algorithm to locate the global minimum solution , is the corresponding function value and n is the dimension of the test problem.

From the numerical results, we can see that the proposed filled function algorithm locates all the global minimum solutions successfully (some with a precision error of less than ) and within a small number of iterations.

For these test problems, we also list the results of another continuously differentiable filled function algorithm, CDFA [5]. Since CDFA was reported on only some of the test problems, we use slashes (/) to indicate the missing values. From the comparison, we can see that for problem 2 (c = 0.2), we use one less iteration to locate a minimum solution with six orders of magnitude higher accuracy than CDFA. For problem 2 (c = 0.5), although we use one more iteration than CDFA, we successfully located the global minimum 0. For problem 2 (c = 0.05), we use one less iteration and obtain a better result than CDFA. For problem 3, we locate a better result (five orders of magnitude higher accuracy) than CDFA with the same number of iterations. For problems 5 and 7 (n = 7), our algorithm uses one more iteration than CDFA. For problem 6, we use one less iteration than CDFA to locate the global minimum. For problem 7 (n = 10), although we use one less iteration, CDFA located the global minimum 0 while we get . From the above analysis, our algorithm has four wins (problem 2 with c = 0.2 and c = 0.05, and problems 3 and 6), two losses (problems 5 and 7 with n = 7), and three ties (problem 2 with c = 0.5, and problems 4 and 7 with n = 10) out of the overall ten test problems. Therefore, we conclude that the proposed filled function algorithm is more effective than CDFA.

Comparisons are also made with a state-of-the-art filled function algorithm, FFFA [18] and Ge’s filled function [1]. The comparison results are listed in Table 15, where is the number of test problems and n is the dimension, refers to the total number of function evaluations consumed to obtain the optimal solutions (minimum solutions for minimization problems).

Table 15.

The overall comparisons.

Since all three filled function algorithms can find the global minimum solutions, we compare their efficiency by the total number of iterations and function evaluations consumed by each algorithm. From Table 15, we can see that for all test problems, our algorithm is much better than Ge’s algorithm. As for the comparison with FFFA, we can see that for test problems 1, 3 and 4, although our algorithm takes one more iteration to get the optimal solution, it uses far fewer function evaluations. For problems 2, 5 and 6, our algorithm uses fewer iterations and fewer function evaluations than FFFA. For problem 7, we can see that for dimension , our algorithm uses fewer iterations but more function evaluations than FFFA; for and , our algorithm uses more function evaluations than FFFA; but for and , our algorithm performs much better than FFFA. For , our algorithm uses three fewer iterations and only 2287 function evaluations, while FFFA uses 12,681 function evaluations; for , our algorithm uses only 12,795 function evaluations (about 64% of FFFA’s 20,044) to get the global optimal solution. Overall, we conclude that the filled function algorithm proposed in this paper is more efficient than FFFA. From the numerical results and the comparison with the other three filled function algorithms, we conclude that the new filled function algorithm is effective and efficient for solving unconstrained global optimization problems.

5. An Application of the Filled Function Algorithm

In this section, the proposed filled function algorithm is applied to the supply chain problem. Supply chain problems can be divided into three types, namely manufacturer’s core supply chain, supplier core supply chain and seller core supply chain. In this paper, we mainly consider the manufacturer’s core supply chain. For the manufacturer’s core supply chain, there are multiple suppliers, multiple shippers, multiple generalized transportation methods, multiple sellers and one manufacturer. In this supply chain, the manufacturer uses different raw materials to produce various products that are sold by multiple sellers. The optimization objective of the supply chain is to minimize the total transportation cost.

We suppose there is a supply chain with a manufacturer as the core, one supplier and one kind of raw material required for production. The unit raw material cost of this kind of raw material supplied by the supplier is 2000 USD/t, the maximum supply is 5000 t, and all shippers can deliver it. The manufacturer produces only one product and requires 1.2 t of raw material per ton of product. There are two shippers, both of which can provide services of two generalized modes of transport. There are three sellers, and the order quantity of each seller must be strictly satisfied. The manufacturer initially has no inventory products, the production cost per unit product is 1000 USD, and the maximum production capacity is 4500 t. The relevant unit costs are shown in Table 16, Table 17 and Table 18.
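The figures in this paragraph already imply a simple bound that is worth making explicit. The sketch below is only arithmetic on the stated data, under the assumptions that the single supplier is the only source of raw material and that the production cost of 1000 USD applies per ton of product; the variable and function names are hypothetical.

```python
RAW_PER_TON = 1.2        # t of raw material needed per t of product
MAX_SUPPLY = 5000.0      # t of raw material available from the supplier
MAX_CAPACITY = 4500.0    # t of product the manufacturer can produce
RAW_COST = 2000.0        # USD per t of raw material
PROD_COST = 1000.0       # USD per t of product (assumed unit)

# Raw material needed to run at full production capacity.
raw_at_capacity = RAW_PER_TON * MAX_CAPACITY

# Output is limited by whichever is tighter: capacity or raw material supply.
max_output = min(MAX_CAPACITY, MAX_SUPPLY / RAW_PER_TON)


def material_and_production_cost(tons_of_product):
    # Cost of raw material plus production, excluding transport and sales costs.
    return tons_of_product * (RAW_PER_TON * RAW_COST + PROD_COST)
```

Running at full capacity would need 5400 t of raw material, more than the 5000 t that can be supplied, so under these assumptions the raw material limit, not the production capacity, bounds the output at roughly 4167 t, and each ton of product carries 3400 USD of material and production cost before any transportation cost is added.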

Table 16.

Unit transportation cost and maximum transportation capacity of shippers.

Table 17.

Sales capacity and unit sales cost.

Table 18.

Unit cost and maximum transportation capacity of shippers.

The optimization model used here is:

The constraints are:

where:

where are non-negative integers, are non-negative numbers. The symbols used in the model are explained as follows:

: Total supply chain cost;

: The number of -th product delivered to the -th seller using the -th generalized transportation method by the -th transporter;

: The ratio of -th transporter using the -th generalized transportation method from the -th supplier to the -th raw material to the manufacturer’s demand for the kind of raw material.

It can be seen from the model that the objective function is nonlinear, and the constraints of suppliers and the transportation of raw materials are also nonlinear, so the model is a nonlinear mixed integer programming model.

We applied the proposed filled function algorithm to this supply chain model to optimize the total transportation cost. The computation was carried out in MATLAB 2021b on a personal computer running 64-bit Windows 10 with an Intel(R) Core(TM) i5-9400F CPU @ 2.90 GHz. We executed 20 independent runs, and the results are listed in Table 19.

Table 19.

The optimization results.

Table 19 shows the optimization results of nonzero variables (the values of other variables are all 0). We can see that this supply chain has multiple optimal solutions. By careful observation, we can see that when , , , and are fixed to the values shown in Table 19, and and satisfy the following conditions:

Therefore, the minimum transportation cost of this example is USD 1171.8 million. In this case, means that the manufacturer in this supply chain should arrange shipper 1 to deliver 200 t of the product to the second seller using the second generalized mode of transportation. means that the manufacturer should let shipper 2 complete 44.4 percent of the transportation task using generalized transportation method 2.

We compare our results with those from ref. [20]: the filled function algorithm proposed in this paper finds multiple optimal solutions and takes less computational time. From Table 19, we can see that our algorithm successfully finds five optimal solutions, while the algorithm in [20] only finds a single optimal solution (0, 0, 800, 0, 1000, 200, 0, 0, 0, 0, 1000, 0), . Moreover, the average running time of our algorithm is 1106 s, while the running time of [20] is 5128 s. Therefore, we conclude that the filled function algorithm in this paper is more effective and efficient.

6. Conclusions

In this paper, we design a new filled function method to solve unconstrained global optimization problems. The new filled function has two advantages. First, it has no adjustable parameters to tune for different optimization problems. Second, it is continuous and differentiable. These properties mean that the filled function is less apt to produce spurious local minima during the optimization; furthermore, a wider choice of local search methods, especially efficient gradient-based ones, can be applied to make the optimization more efficient and effective. The proposed filled function algorithm is tested on widely used benchmark problems and is also applied to a supply chain problem. Numerical experiments show that the algorithm is effective and efficient. However, we also notice that the filled function algorithm becomes less effective on high-dimensional optimization problems. The reason may lie in two aspects: first, the search space grows exponentially with the dimension, and second, the local search method is not efficient enough for high-dimensional problems. The time complexity of the proposed filled function algorithm depends on the local search method adopted. Since the filled function designed in this paper is continuous and differentiable, the efficient gradient-based BFGS quasi-Newton method can be used in the proposed algorithm. BFGS is well known for its fast superlinear convergence, although its time complexity is $O(n^2)$ per iteration, where $n$ is the problem dimension. This may be one of the reasons that its performance degrades as the dimension of the optimization problem grows. The proposed algorithm mainly helps the optimization process to repeatedly escape from local optimal solutions to better ones in order to locate the global optimal solution. In this process, a different local search method can be used.
In our future work, we will continue to work on this issue and design new and better local search methods to make the filled function algorithm more efficient and perform better on higher dimensional problems.
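The two scaling effects named in the conclusions can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the search space is discretized with a hypothetical 10 grid points per axis, and the BFGS cost is represented by the number of entries in the dense n-by-n inverse-Hessian approximation that a standard BFGS update maintains. Neither function is part of the authors' implementation.

```python
def grid_points(n_dims: int, points_per_axis: int = 10) -> int:
    """Number of points in a uniform grid over an n-dimensional box.

    The default of 10 points per axis is an arbitrary assumption chosen
    only to show the exponential growth with the dimension.
    """
    return points_per_axis ** n_dims


def bfgs_matrix_entries(n_dims: int) -> int:
    """Entries of the dense n x n matrix that standard BFGS updates each iteration."""
    return n_dims * n_dims


if __name__ == "__main__":
    for n in (2, 5, 10, 20):
        print(f"n={n:2d}  grid points={grid_points(n):.3e}  "
              f"BFGS matrix entries={bfgs_matrix_entries(n)}")
```

The grid count grows exponentially with the dimension while the BFGS matrix grows only quadratically, which is consistent with the search space being the dominant difficulty as the dimension increases.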

Author Contributions

Conceptualization, H.L. and S.X.; methodology, H.L. and S.X.; software, S.X.; validation, Y.C.; writing—original draft, H.L. and S.X.; writing—review and editing, Y.C. and S.T.; supervision, H.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (No.62002289).

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ge, R. A filled function method for finding a global minimizer of a function of several variables. Math. Program. 1990, 46, 191–204.

2. Liu, X. Finding Global Minima with a Computable Filled Function. J. Glob. Optim. 2001, 19, 151–161.

3. Liu, X. A Class of Continuously Differentiable Filled Functions for Global Optimization. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2008, 38, 38–47.

4. Lin, H.; Wang, Y.; Fan, L.; Gao, Y. A new discrete filled function method for finding global minimizer of the integer programming. Appl. Math. Comput. 2013, 219, 4371–4378.

5. Lin, H.; Gao, Y.; Wang, Y. A continuously differentiable filled function method for global optimization. Numer. Algorithms 2014, 66, 511–523.

6. Gao, Y.; Yang, Y.; You, M. A new filled function method for global optimization. Appl. Math. Comput. 2015, 268, 685–695.

7. El-Gindy, T.; Salim, M.; Ahmed, A. A new filled function method applied to unconstrained global optimization. Appl. Math. Comput. 2016, 273, 1246–1256.

8. Ma, S.; Yang, Y.; Liu, H. A parameter free filled function for unconstrained global optimization. Appl. Math. Comput. 2010, 215, 3610–3619.

9. Liu, H.; Wang, Y.; Guan, S.; Liu, X. A new filled function method for unconstrained global optimization. Int. J. Comput. Math. 2017, 94, 2283–2296.

10. Pandiya, R.; Widodo, W.; Endrayanto, I. Non parameter-filled function for global optimization. Appl. Math. Comput. 2021, 391, 125642.

11. Ahmed, A. A new parameter free filled function for solving unconstrained global optimization problems. Int. J. Comput. Math. 2021, 98, 106–119.

12. Ahmed, A. A new filled function for global minimization and system of nonlinear equations. Optimization 2021, 71, 1–24.

13. Pandiya, R.; Salmah; Widodo; Endrayanto, I. A Class of Parameter-Free Filled Functions for Unconstrained Global Optimization. Int. J. Comput. Methods 2022, 19, 2250003.

14. Qu, D.; Shang, Y.; Zhan, Y.; Wu, D. A new parameter-free filled function for the global optimization problem. Oper. Res. Trans. 2021, 25, 89–95.

15. Wang, Y.J.; Zhang, J.S. A new constructing auxiliary function method for global optimization. Math. Comput. Model. 2008, 47, 1396–1410.

16. Sahiner, A.; Abdulhamid, İ.A.M.; Ibrahem, S.A. A new filled function method with two parameters in a directional search. J. Multidiscip. Model. Optim. 2019, 2, 34–42.

17. Wu, X.; Wang, Y.; Fan, N. A new filled function method based on adaptive search direction and valley widening for global optimization. Appl. Intell. 2021, 51, 6234–6254.

18. Liu, J.; Wang, Y.; Wei, S.; Sui, X.; Tong, W. A Filled Flatten Function Method Based on Basin Deepening and Adaptive Initial Point for Global Optimization. Int. J. Pattern Recognit. Artif. Intell. 2020, 34, 2059011.

19. Lin, H.; Wang, Y.; Gao, Y.; Wang, X. A filled function method for global optimization with inequality constraints. Comput. Appl. Math. 2018, 37, 1524–1536.

20. Qu, D.; Shang, Y.; Wu, D.; Sun, G. Filled function method to optimize supply chain transportation costs. J. Ind. Manag. Optim. 2022, 18, 3339.

21. Yuan, L.; Li, Q. Filling function method for solving two-level programming problems. J. Math. 2022, 42, 153–161.

22. Wan, Z.; Yuan, L.; Chen, J. A filled function method for nonlinear systems of equalities and inequalities. Comput. Appl. Math. 2012, 31, 391–405.

23. Ma, S.; Gao, Y.; Zhang, B.; Zuo, W. A New Nonparametric Filled Function Method for Integer Programming Problems with Constraints. Mathematics 2022, 10, 734.

24. Yuan, L.Y.; Wan, Z.P.; Tang, Q.H.; Zheng, Y. A class of parameter-free filled functions for box-constrained system of nonlinear equations. Acta Math. Appl. Sin. Engl. Ser. 2016, 32, 355–364.

25. Zhang, Y.; Xu, Y.T. A one-parameter filled function method applied to nonsmooth constrained global optimization. Comput. Math. Appl. 2009, 58, 1230–1238.

26. Zhang, Y.; Xu, Y.; Zhang, L. A filled function method applied to nonsmooth constrained global optimization. J. Comput. Appl. Math. 2009, 232, 415–426.

27. Zhang, L.S.; Ng, C.K.; Li, D.; Tian, W.W. A New Filled Function Method for Global Optimization. J. Glob. Optim. 2004, 28, 17–43.

28. Yang, Y.; Shang, Y. A new filled function method for unconstrained global optimization. Appl. Math. Comput. 2006, 173, 501–512.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).