Abstract

In this paper, we develop a new linguistic aggregation operator based on the cloud model for solving linguistic group decision-making problems. First, an improved generating cloud method is proposed to transform linguistic variables into clouds, which overcomes the limitations of the classical generating cloud method. We then propose some new cloud algorithms, namely the cloud possibility degree and the cloud support degree, which are used to compare clouds and to determine weights, respectively. Combining the cloud support degree with the power aggregation operator, we develop a new cloud aggregation operator called the cloud generalized power ordered weighted average (CGPOWA) operator. We study the properties of the CGPOWA operator and investigate its family, which includes a wide range of aggregation operators such as the CGPA, CPOWA, CPOWGA and CPWQA operators and the CWAA and CWGA operators. Furthermore, a new approach for linguistic group decision-making is presented on the basis of the improved generating cloud method and the CGPOWA operator. Finally, an illustrative example is provided to examine the effectiveness and validity of the proposed approach.

1. Introduction

As an important part of modern decision science, multiple criteria decision-making (MCDM) is the process of finding the best option from all of the feasible alternatives. It involves a single decision maker (DM), multiple decision criteria and multiple decision alternatives []. However, the increasing complexity of the socioeconomic environment makes it less feasible for a single DM to consider all relevant aspects of a problem, and many decision-making processes therefore take place in group settings. This has made multiple criteria group decision-making (MCGDM) increasingly attractive in management [,,,,]. Owing to the complexity of objects and the vagueness of human thinking, it is often more appropriate for DMs to express their assessments with linguistic descriptors than with other descriptors in practical MCGDM [,,]. For example, when evaluating the “comfort” or “design” of a car, terms such as “good”, “medium” and “bad” are frequently used, and when evaluating a car’s speed, terms such as “very fast”, “fast” and “slow” can be used instead of numerical values. In such situations, a linguistic approach is necessary. The objective of linguistic multiple criteria group decision-making (LMCGDM) is to find the optimal solution(s) from a set of feasible alternatives by means of linguistic information provided by the DMs. To this end, aggregating linguistic information is the key step, and linguistic aggregation operators are commonly used.

Until now, many linguistic aggregation operators have been proposed, and they can be classified into six types: (1) operators based on linear ordering, such as the linguistic max and min operators [,,], the linguistic max-min weighted averaging operator [], the linguistic median operator [], the ordinal ordered weighted averaging operator [] and the linguistic weighted conjunction operator []; (2) operators built on the extension principle [,], which make computations on fuzzy numbers that support the semantics of the linguistic labels, such as the linguistic OWA operator [], the linguistic weighted OWA operator [], the inverse linguistic OWA operator degree [], the distance measure operator with linguistic information [], the induced linguistic continuous ordered weighted geometric operator [], linguistic distances with continuous aggregation operators [] and the linguistic probabilistic weighted average operator []; (3) operators based on the 2-tuple representation, including the 2-tuple arithmetic mean operator [], the 2-tuple OWA operator [], the dependent 2-tuple ordered weighted geometric operator [] and the 2-tuple linguistic hybrid arithmetic aggregation operator []; (4) operators that compute directly with words, such as the linguistic weighted averaging operator [], the extended ordered weighted geometric operator [], the linguistic weighted arithmetic averaging operator [], the linguistic ordered weighted geometric averaging operator [], the uncertain linguistic weighted averaging operator [], the induced uncertain linguistic OWA operator [] and the uncertain linguistic geometric mean operator []; (5) operators based on the power ordered weighted average operator [], including the linguistic power ordered weighted average (LPOWA) operator [] and the linguistic generalized power average (LGPA) operator []; and (6) cloud aggregation operators, which introduce the cloud model [] into LMCGDM, such as the cloud weighted arithmetic averaging (CWAA) operator and the cloud weighted geometric averaging (CWGA) operator [] and the trapezium cloud ordered weighted arithmetic averaging (TCOWA) operator []. A detailed description of the LPOWA, CWAA and CWGA operators is given in Section 2 of the paper.

The above-mentioned operators of types (1)–(2) use approximation processes to express the results in the initial expression domain, but they incur a loss of information and hence a lack of precision []. This shortcoming is overcome by operators of types (3)–(4), which allow a continuous representation of the linguistic information on their domains and can therefore represent any amount of information obtained in an aggregation process without loss of information [,,,]. However, operators of types (3)–(4) do not consider the information about the relationship between the values being combined []. This weakness is corrected by operators of type (5): they allow exact arguments to support each other in the aggregation process, so that the weighting vectors depend on the input arguments and the values being aggregated can support and reinforce each other [,,]. Operators of type (5) thus consider the relationship between the values being fused, but they cannot describe the randomness of languages [].

The limitation of operators of type (5) can be explained as follows. Natural languages generally involve uncertainty, of which randomness and fuzziness are the two most important aspects: fuzziness mainly refers to uncertainty regarding the range of extension of a concept, while randomness implies that any concept is related to the external world in various ways []. For instance, in a linguistic decision-making problem, DM A may consider 75% fulfillment of a task to be “good”, whereas DM B may hold that anything below 80% fulfillment of the same task cannot be considered “good”, even on the same linguistic term scale. Hence, when considering the degree of certainty with which an element belongs to a qualitative concept in a specific universe, it is more appropriate to allow a stochastic disturbance of the membership degree around a determined central value than to fix it as a single number [,]. The cloud model, based on fuzzy set theory and probability statistics [,,], describes fuzziness with a normal membership function and randomness by means of three numerical characteristics (expectation, entropy and hyper entropy). Hence, the cloud aggregation operators of type (6) overcome the limitation of operators of type (5). Nevertheless, they do not take into account the information about the relationship between the values being fused.

Based on the above analyses, we find that linguistic power aggregation operators of type (5) and cloud aggregation operators of type (6) have complementary limitations. The linguistic power aggregation operators take into account the relationship between the values being fused but ignore the randomness of qualitative concepts, whereas the cloud aggregation operators capture the fuzziness and randomness of linguistic information but neglect the relationship between the values being fused.

Therefore, this paper proposes a new cloud generalized power ordered weighted average (CGPOWA) operator to overcome the limitations of the existing linguistic power aggregation operators of type (5) and cloud aggregation operators of type (6). The contributions of this paper are as follows.

- (i)

- We present an improved generating cloud method to transform linguistic variables into clouds. The key to linguistic decision-making based on cloud models is the transformation between linguistic variables and clouds. Wang and Feng [] proposed a method of generating five clouds on the basis of the golden ratio, but this method has three weaknesses: (a) it is limited to a linguistic term set of five labels; (b) the expectations of the clouds sometimes exceed the range of the universe; and (c) it cannot effectively distinguish the linguistic evaluation scale over a symmetrical interval. To address these limitations, we present an improved method based on the cloud construction principle. This method can transform a linguistic term set with any odd number of labels, rather than only five, and guarantees that all the expectations of the clouds fall within the range of the universe. Meanwhile, it can effectively distinguish the linguistic evaluation scale over a symmetrical interval. In this way, the method remedies the weaknesses of the classical generating cloud method.

- (ii)

- We propose some new cloud algorithms, such as the cloud possibility degree and the cloud support degree. Based on the “3En rule” of the cloud model, a cloud distance is defined. From this distance we derive a cloud possibility degree, which can be used to compare clouds, and a cloud support degree, which is a similarity index: the greater the similarity, the closer the two clouds are, and consequently the more they support each other. The support degree is used to determine the weights of the aggregation operator.

- (iii)

- We develop a new CGPOWA operator. Using the cloud support degree defined here and the power aggregation operator [], we develop the CGPOWA operator, which overcomes the limitations of existing linguistic power aggregation operators and cloud aggregation operators while maintaining the advantages of both types of operators. We show that the CGPOWA operator is idempotent, commutative and bounded. In addition, we investigate the family of the CGPOWA operator, which contains a wide range of aggregation operators such as the CGPA, CPOWA, CPOWGA and CPWQA operators, the CWAA and CWGA operators, and the maximum and minimum operators.

- (iv)

- A new approach for LMCGDM is developed by applying the improved generating cloud method and the CGPOWA operator. The main advantage of this approach is that it gives a completely objective view of the decision problem, because the CGPOWA operator and the weighting method depend entirely on the arguments. Comparing our method with three traditional LMCGDM approaches (the linguistic symbolic model, the linguistic membership function model and the 2-tuple linguistic model) and the cloud aggregation method [,], we find that:

- (a)

- Compared with the three traditional LMCGDM approaches, our method adopts a multi-granular linguistic assessment scale with clear psychological meaning, whereas the three traditional approaches use only a uniform granular linguistic assessment scale. In other words, when the alternatives are assessed, the three traditional approaches regard the average level as the sole criterion, which makes the evaluations rough and one-sided. Our method, by contrast, considers not only the average level but also the fluctuation and stability of qualitative concepts via the cloud model;

- (b)

- Compared with the cloud aggregation method [,], our method provides a completely objective weighting model based on the cloud support degree, whereas the weights in Wang et al. [,] are given subjectively by the DMs, which may lead to different rankings if the DMs provide different weight vectors. In addition, the CGPOWA operator considers the relationships between the arguments provided by the DMs, whereas the cloud aggregation operators in Wang et al. [,] do not;

- (c)

- Our method provides a simple way to compare different clouds through the cloud possibility degree (Equation (11)) and the ranking vector (Equation (13)), which requires no knowledge of the distribution of cloud drops. This differs from the score function [], which needs the distribution of cloud drops. This is an attractive feature, because in most cases the distribution of cloud drops is unknown and cloud drops are difficult to acquire.

This approach is also applicable to different linguistic decision-making problems such as strategic decision-making, human resource management, product management and financial management.

The rest of the paper is organized as follows. Section 2 reviews the LPOWA, CWAA and CWGA operators and the cloud model. Section 3 presents an improved method of transforming linguistic variables into clouds, and provides some new cloud algorithms. Section 4 develops the CGPOWA operator and studies its properties. Section 5 develops an approach for LMCGDM. Section 6 presents an illustrative example and the conclusions are drawn in Section 7.

2. Preliminaries

In this section, we briefly review the LPOWA operator, the definitions and operational rules of clouds, and the CWAA and CWGA operators.

2.1. The LPOWA Operator

The linguistic approach is an approximate technique that represents qualitative aspects as linguistic values using linguistic variables. Let be a finite and completely ordered discrete term set, which stands for a possible value for a linguistic variable. For instance, a set of nine terms could be [,]:

In many real problems, the input linguistic arguments may not match any of the original linguistic labels, or may be located between two of them. For such cases, Xu [] presents some operational laws. Let and , the operational rules are as follows:

- (i)

- ;

- (ii)

- , where ;

- (iii)

- ;

- (iv)

- , where .

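Yager [] introduced a nonlinear ordered weighted-average aggregation tool, called the power ordered weighted average (POWA) operator, which can be defined as follows:

$$\mathrm{POWA}(a_1, a_2, \ldots, a_n) = \sum_{j=1}^{n} u_j b_j,$$

where

$$u_j = g\left(\frac{R_j}{TV}\right) - g\left(\frac{R_{j-1}}{TV}\right), \quad R_j = \sum_{i=1}^{j} V_{\mathrm{index}(i)}, \quad R_0 = 0, \quad TV = \sum_{i=1}^{n} V_{\mathrm{index}(i)},$$

$$V_{\mathrm{index}(j)} = 1 + T(b_j), \qquad T(b_j) = \sum_{k \neq j} \mathrm{Sup}(b_j, b_k).$$

Here $\mathrm{Sup}(a_i, a_j)$ is the support for $a_i$ from $a_j$, such that $\mathrm{Sup}(a_i, a_j) \in [0, 1]$, $\mathrm{Sup}(a_i, a_j) = \mathrm{Sup}(a_j, a_i)$, and $\mathrm{Sup}(a_i, a_j) \geq \mathrm{Sup}(a_s, a_t)$ for $|a_i - a_j| < |a_s - a_t|$; $b_j$ is the $j$th largest of the arguments; and the basic unit-interval monotonic (BUM) function $g: [0, 1] \to [0, 1]$ satisfies $g(0) = 0$, $g(1) = 1$, and $g(x) \geq g(y)$ if $x > y$.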
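To make the mechanics of Yager's POWA operator concrete, the following Python sketch computes the power weights from the supports and applies them to the ordered arguments. The support function `exp_support`, i.e. Sup(a, b) = exp(-|a - b|), and the default BUM function g(x) = x are illustrative assumptions chosen for this sketch, not choices made in the paper.

```python
import math
from typing import Callable, Sequence


def powa(values: Sequence[float],
         sup: Callable[[float, float], float],
         g: Callable[[float], float] = lambda x: x) -> float:
    """Yager's power ordered weighted average of real-valued arguments."""
    n = len(values)
    # T(a_i): total support a_i receives from all the other arguments
    t = [sum(sup(values[i], values[k]) for k in range(n) if k != i)
         for i in range(n)]
    # index(j): position of the j-th largest argument
    order = sorted(range(n), key=lambda i: values[i], reverse=True)
    v = [1.0 + t[i] for i in order]     # V_index(j) = 1 + T(b_j)
    tv = sum(v)                         # TV
    result, r_prev = 0.0, 0.0
    for vj, i in zip(v, order):
        r = r_prev + vj                 # R_j = R_{j-1} + V_index(j)
        u = g(r / tv) - g(r_prev / tv)  # u_j = g(R_j/TV) - g(R_{j-1}/TV)
        result += u * values[i]
        r_prev = r
    return result


def exp_support(a: float, b: float) -> float:
    """Hypothetical support: 1 for equal arguments, decaying with |a - b|."""
    return math.exp(-abs(a - b))
```

With a support of zero everywhere and g(x) = x, every weight equals 1/n and POWA reduces to the arithmetic mean. With `exp_support`, an outlying argument such as 0.9 in `powa([0.3, 0.35, 0.9], exp_support)` receives less support from the others, hence a smaller weight, so the result falls below the plain mean.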

Based on the POWA operator, Xu, Merigó and Wang [] provided a linguistic power ordered weighted average (LPOWA) operator, which is defined as follows.

Definition 1 (Xu, Merigó and Wang, []).

Let be a collection of linguistic variables, a linguistic power ordered weighted averaging (LPOWA) operator is a mapping LPOWA: , if

where is a permutation of such that for all j, and

where is a basic unit-interval monotonic (BUM) function satisfying , , if . denotes the support of the jth largest argument by all the other arguments.

Remark 1.

The LPOWA operator considers the relationship between the linguistic values being combined, since it allows exact arguments to support each other in the aggregation process and its weighting vectors depend on the input arguments. However, this type of operator cannot characterize the randomness of languages. Here, randomness implies that any language is related to the external world in various ways []. In fact, natural languages usually involve both randomness and fuzziness (the latter refers to uncertainty regarding the range of extension of languages). For example, DM A may think that 75% fulfillment of a task is “good”, whereas DM B may think that anything below 80% fulfillment of the same task cannot be considered “good”, even on the same linguistic term scale. When considering the degree of certainty with which an element belongs to a qualitative concept in a specific universe, it is more appropriate to allow a stochastic disturbance of the membership degree around a determined central value than to fix it as a single number.

2.2. Cloud Model

The cloud model, based on fuzzy set theory and probability statistics [], describes fuzziness with a membership function and randomness via a probability distribution.

Definition 2 (Li, Meng and Shi, []).

Let $U$ be a quantitative domain expressed by precise values, and $C$ a qualitative concept on the domain. If the quantitative value $x \in U$ is a random instantiation of $C$, whose membership $\mu(x) \in [0, 1]$ for $C$ is a random number with a stable tendency:

$$\mu: U \to [0, 1], \quad \forall x \in U, \quad x \to \mu(x),$$

then the distribution of $x$ on the domain is called a cloud, and each $x$ is called a cloud drop.

The normal cloud model is widely applicable and universal because it is based on the normal distribution and the Gaussian membership function [].

Definition 3 (Li and Du, []).

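Suppose that $U$ is the universe of discourse and $T$ is a qualitative concept in $U$. If $x \in U$ is a random instantiation of concept $T$ satisfying $x \sim N(Ex, En'^2)$ and $En' \sim N(En, He^2)$, and the certainty degree of $x$ belonging to $T$ satisfies

$$\mu = \exp\left(-\frac{(x - Ex)^2}{2(En')^2}\right),$$

then the distribution of $x$ in the universe $U$ is called a normal cloud.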
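A normal cloud is usually simulated with the forward normal cloud generator: draw En' from N(En, He²), then x from N(Ex, En'²), and set the certainty degree to exp(-(x - Ex)²/(2En'²)). The Python sketch below follows these steps; the function name, the fixed seed and the assumption that En' never comes out exactly zero (He small relative to En) are our own choices for illustration.

```python
import math
import random
from typing import List, Tuple


def normal_cloud_drops(ex: float, en: float, he: float, n: int,
                       seed: int = 0) -> List[Tuple[float, float]]:
    """Forward normal cloud generator: returns n drops (x, certainty degree)."""
    rng = random.Random(seed)
    drops = []
    for _ in range(n):
        en_prime = rng.gauss(en, he)        # En' ~ N(En, He^2)
        x = rng.gauss(ex, abs(en_prime))    # x ~ N(Ex, En'^2)
        mu = math.exp(-(x - ex) ** 2 / (2 * en_prime ** 2))
        drops.append((x, mu))
    return drops


# Drops of the cloud (50, 3.93, 0.1) shown in Figure 1
drops = normal_cloud_drops(50, 3.93, 0.1, n=2000)
# Fraction of drops inside [Ex - 3En, Ex + 3En]
inside = sum(1 for x, _ in drops if abs(x - 50) <= 3 * 3.93) / len(drops)
```

Almost all drops fall within Ex ± 3En, which is the “3En rule” invoked in Section 3.2.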

The cloud model effectively integrates the randomness and fuzziness of concepts and describes the overall quantitative property of a concept via the expectation Ex, entropy En and hyper entropy He. A cloud A with these three numerical characteristics is written as $A = (Ex, En, He)$. Li, Liu and Gan [] provided the following operation rules for clouds. Given two clouds $A = (Ex_1, En_1, He_1)$ and $B = (Ex_2, En_2, He_2)$, the operations between cloud A and cloud B are:

- (i)

- ;

- (ii)

- ;

- (iii)

- ;

- (iv)

- ;

- (v)

- .

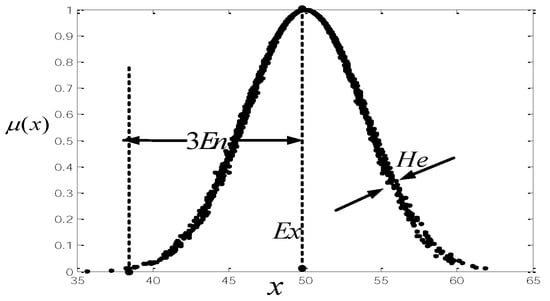

Figure 1 shows that fuzziness concerns the extension range of x, such as [37, 62], while randomness concerns the differing cognitions of the DMs. In linguistic decision-making, different individuals sometimes attribute different meanings to the same linguistic expression, and the same individual may even interpret it differently in different situations. For instance, DM A may believe that the membership degree of 45 in “number near 40” is 0.8, whereas DM B may regard it as 0.85. Such non-uniform cognition among the DMs can distort the aggregation of linguistic information. Because the cloud model lets the certainty degree of x follow a probability distribution, the distortions introduced by the DMs can be neutralized to a great extent in the aggregation process [].

Figure 1.

Cloud (50, 3.93, 0.1).

2.3. The CWAA Operator and CWGA Operator

Wang, Peng and Zhang [] introduced the cloud model into LMCGDM and presented the cloud weighted arithmetic averaging (CWAA) operator and the cloud weighted geometric averaging (CWGA) operator.

Definition 4 (Wang, Peng and Zhang, []).

Let be the set of all clouds and be a subset of . A mapping CWAA: is defined as the cloud-weighted arithmetic averaging (CWAA) operator so that the following is true:

Here is the associated weight vector of , and .
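The following Python sketch illustrates the CWAA operator as the weighted sum w₁Y₁ ⊕ … ⊕ wₙYₙ. Because the operation rules are not reproduced in this text, the sketch assumes the addition and scalar-multiplication rules commonly used for normal clouds, A + B = (Ex₁ + Ex₂, √(En₁² + En₂²), √(He₁² + He₂²)) and λA = (λEx, √λ En, √λ He); readers should check these against rules (i)–(v) above. Under these assumptions, CWAA reduces to (Σ wⱼExⱼ, √(Σ wⱼEnⱼ²), √(Σ wⱼHeⱼ²)).

```python
import math
from typing import NamedTuple, Sequence


class Cloud(NamedTuple):
    ex: float  # expectation Ex
    en: float  # entropy En
    he: float  # hyper entropy He


def add(a: Cloud, b: Cloud) -> Cloud:
    # Assumed rule: A + B = (Ex1 + Ex2, sqrt(En1^2 + En2^2), sqrt(He1^2 + He2^2))
    return Cloud(a.ex + b.ex, math.hypot(a.en, b.en), math.hypot(a.he, b.he))


def scale(lam: float, a: Cloud) -> Cloud:
    # Assumed rule: lam * A = (lam * Ex, sqrt(lam) * En, sqrt(lam) * He), lam >= 0
    r = math.sqrt(lam)
    return Cloud(lam * a.ex, r * a.en, r * a.he)


def cwaa(clouds: Sequence[Cloud], weights: Sequence[float]) -> Cloud:
    """Cloud weighted arithmetic average w_1 Y_1 + ... + w_n Y_n."""
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must sum to 1")
    total = scale(weights[0], clouds[0])
    for w, c in zip(weights[1:], clouds[1:]):
        total = add(total, scale(w, c))
    return total
```

Note that the weights here are supplied externally, which is exactly the subjectivity that the support-based weighting of the CGPOWA operator is meant to remove.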

Definition 5 (Wang, Peng and Zhang, []).

Let be the set of all clouds and be a subset of . A mapping CWGA: is defined as the CWGA operator, and the following is true:

Remark 2.

The CWAA and CWGA operators characterize the fuzziness and randomness of languages with cloud model, while they do not take into account the information about the relationship between the values being fused.

3. An Improved Generating Cloud Method and Cloud Algorithms

This section provides an improved method to transform linguistic values into clouds and defines some new cloud algorithms, such as the cloud possibility degree and the cloud support degree.

3.1. An Improved Generating Cloud Method

In an LMCGDM problem, natural languages generally include vague and imprecise information that is too complex and ill-defined to be described by conventional quantitative expressions, which makes it difficult to transform linguistic information into quantitative values. The cloud model describes linguistic concepts via three numerical characteristics, which realize an objective and interchangeable transformation between qualitative concepts and quantitative values. Hence, it is necessary to transform linguistic variables into clouds, and the key to this transformation is the choice of transformation method. Wang and Feng [] proposed a classical method for generating five clouds on the basis of the golden ratio, $(\sqrt{5} - 1)/2 \approx 0.618$.

Let n be the linguistic evaluation scale and be an effective universe given by the DMs. Assume that the intermediate cloud is expressed by . The adjacent clouds around are respectively expressed by:

The numerical characteristics of five clouds are shown as follows (Wang and Feng, []):

Here is given beforehand.

However, we find that there are three weaknesses in the method of Wang and Feng [].

- First, the expectation of clouds may exceed the range of the universe . For example, if , then , and .

- Second, the method of Wang and Feng [] cannot be widely used, because it is limited to a linguistic evaluation scale of five labels.

- Third, the method cannot effectively distinguish the linguistic evaluation scale over a symmetrical interval. For instance, if , then the expectation values , so the labels of the linguistic evaluation scale cannot be distinguished.

To overcome the above weaknesses, we present an improved method to transform linguistic variables into clouds by means of the cloud construction principle, which is shown as follows.

Procedure for transforming linguistic variables into clouds:

Step 1. Calculate

Step 2. Compute

Step 3. Calculate

, here is given beforehand.

The following theorem shows that our method overcomes the weaknesses of the method given by Wang and Feng [].

Theorem 1.

Let n be the linguistic evaluation scale and be a valid universe given by the DMs. If are the clouds in , then , , and .

Proof.

- (1)

- First, we prove that the expectations of clouds are different from each other.

Let , according to Step 1 of the procedure for transforming linguistic variables into clouds, we get:

Therefore,

It follows from expressions (6) and (7) that expectations of clouds are different from each other.

- (2)

- Second, we prove that all the expectations of clouds fall within the range of the universe.

From Step 1 of the procedure for transforming linguistic variables into clouds, we see that:

Since it can be concluded that:

Similarly, note that , we then have:

Therefore, the expectations of clouds fall into the range of the universe.

By the same token, it is easy to verify that the expectations of clouds fall into the range of the universe. Based on the above analysis, we can conclude that all the expectations of clouds fall into the range of the universe.☐

Remark 3.

Theorem 1 shows that the improved generating cloud method guarantees that all the expectations fall within the range of the universe. Meanwhile, the method can effectively distinguish the linguistic evaluation scale over a symmetrical interval and can transform a linguistic term set with any odd number of labels, rather than only five, into clouds.

Example 1.

Let and the linguistic assessment set . Then the five clouds can be obtained by using the classical method and the improved generating cloud method, respectively.

- The classical method given by Wang and Feng []:

- The improved generating cloud method:

From Example 1, we find that some expectations of clouds obtained by the classical method exceed the range of the universe, e.g., . In particular, we see that , . That is, cloud is absolutely better than cloud , which is obviously inconsistent with the fact that linguistic variable is absolutely better than . These weaknesses are corrected by the improved generating cloud method.

3.2. New Algorithms of the Cloud Model

This subsection defines the cloud distance, the cloud possibility degree and the cloud support degree; the latter two are used for cloud comparison and weight determination, respectively.

Based on the “3En rule” of the cloud model, the distance between clouds is defined as follows.

Definition 6.

Let and be two clouds in the universe . Then, the distance of these clouds and is given by:

where , and .

Proposition 1.

The cloud distance has the following properties:

- (i)

- ;

- (ii)

- ;

- (iii)

- For

where stands for the collection of all clouds in .

Proof.

See Appendix A.☐

Remark 4.

If , then the cloud will degenerate into a real number, in this case,

Based on the cloud distance, a cloud possibility degree can be defined as follows.

Definition 7.

Let and be two clouds in universe , and be the positive ideal cloud, then the cloud possibility degree is defined as:

where and are the distances between and , , respectively.

Definition 7 shows that the cloud possibility degree is described by the distances and . The larger the distance between and is, the larger the cloud possibility degree is. The cloud possibility degree can be used to compare clouds.

From Definition 7, we can easily obtain the following properties of cloud possibility degree.

Proposition 2.

Let , and be three cloud variables. Then, the cloud possibility degree has the following properties:

- (i)

- ;

- (ii)

- ;

- (iii)

- ;

- (iv)

- particularly, ;

- (v)

- if and , then ;

- (vi)

- if , then .

To rank clouds , following Wan and Dong [] who ranked interval-valued intuitionistic fuzzy numbers via possibility degree, we can construct a fuzzy complementary matrix of cloud possibility degree as follows:

where , and . Then, the ranking vector is determined by:

and consequently, the clouds can be ranked in descending order via values of . That is, the smaller the value of is, the larger the corresponding order of is.
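The ranking procedure can be sketched in Python as follows. Since Equations (10), (11) and (13) are not reproduced in this text, every formula below is an assumption made for illustration: `interval_distance` is a hypothetical stand-in for the cloud distance that compares the 3En intervals [Ex - 3En, Ex + 3En]; the positive ideal cloud is taken as (max Ex, min En, min He); the possibility degree p(Aᵢ ≥ Aⱼ) = d(Aⱼ, A⁺)/(d(Aᵢ, A⁺) + d(Aⱼ, A⁺)) is one form consistent with the description after Definition 7 and with Proposition 2 (i), (ii) and (iv); and the ranking vector uses Xu's widely used formula for fuzzy complementary matrices, vᵢ = (Σⱼ pᵢⱼ + n/2 - 1)/(n(n - 1)).

```python
from typing import List, NamedTuple, Sequence


class Cloud(NamedTuple):
    ex: float
    en: float
    he: float


def interval_distance(a: Cloud, b: Cloud) -> float:
    # HYPOTHETICAL stand-in for Equation (10): compare the 3En intervals
    lo = abs((a.ex - 3 * a.en) - (b.ex - 3 * b.en))
    hi = abs((a.ex + 3 * a.en) - (b.ex + 3 * b.en))
    return 0.5 * (lo + hi)


def possibility_matrix(clouds: Sequence[Cloud]) -> List[List[float]]:
    # Hypothetical positive ideal cloud: best expectation, least uncertainty
    ideal = Cloud(max(c.ex for c in clouds), min(c.en for c in clouds),
                  min(c.he for c in clouds))
    d = [interval_distance(c, ideal) for c in clouds]
    n = len(clouds)
    p = [[0.5] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and d[i] + d[j] > 0:
                # Closer to the ideal cloud => more likely to be the larger one
                p[i][j] = d[j] / (d[i] + d[j])
    return p


def ranking_vector(p: Sequence[Sequence[float]]) -> List[float]:
    # Xu's ranking formula for a fuzzy complementary matrix (assumed form)
    n = len(p)
    return [(sum(row) + n / 2 - 1) / (n * (n - 1)) for row in p]
```

The resulting matrix is complementary (pᵢⱼ + pⱼᵢ = 1) and the ranking vector sums to 1, so clouds can be ordered simply by sorting their entries of the ranking vector in descending order.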

The advantage of using the vector for ranking clouds is that it makes full use of the decision-making information and keeps the calculation simple.

Proposition 3.

Suppose that and are two clouds in the universe , if , , , then .

Proof.

See Appendix A.☐

Example 2.

Let , , , be four normal clouds, and these clouds can be ranked by the values of .

Note that the positive ideal cloud and according to Equation (10), we have that , , and .

Consequently, based on Equation (11), the possibility degree matrix can be derived as follows:

According to Equation (13), we further derive the ranking vector . So the ranking of the normal clouds is:

Following Yager [], we can define the cloud support degree.

Definition 8.

Let be the set of all clouds and support (hereafter, Sup) a mapping from to R. For any and , if the term Sup satisfies:

- (i)

- ;

- (ii)

- ;

- (iii)

- if , where is a distance measure for clouds.

Then is called the support degree for from .

Note that the Sup measure is essentially a similarity index: the greater the similarity, the closer the two clouds are, and consequently the more they support each other. The support degree will be used to determine the weights of the aggregation operator.

4. Cloud Generalized Power Ordered Weighted Average Operator

For an LMCGDM problem, when the linguistic information is converted to clouds, an aggregation step must be performed for a collective evaluation. In this section, we provide a cloud generalized power ordered weighted average (CGPOWA) operator and study its family which includes many different operators.

Following LPOWA operator of Xu, Merigó and Wang [] and using the cloud support degree, we can define a cloud generalized power ordered weighted average (CGPOWA) operator as follows.

Definition 9.

Let be the set of all clouds and be a subset of . A mapping is defined as a cloud generalized power ordered weighted average (CGPOWA) operator,

where is a parameter satisfying ,

and is the jth largest cloud of for all , the function is a BUM function which satisfies , and if .
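As an illustration, the Python sketch below computes a CGPOWA value, assuming that Definition 9 takes the generalized power-mean form (Σⱼ uⱼ Y_σ(j)^λ)^(1/λ) with λ > 0 and power weights uⱼ = g(Rⱼ/TV) - g(Rⱼ₋₁/TV). It also assumes the cloud operation rules A + B = (Ex₁ + Ex₂, √(En₁² + En₂²), √(He₁² + He₂²)), kA = (kEx, √k En, √k He) and A^λ = (Ex^λ, √λ Ex^(λ-1) En, √λ Ex^(λ-1) He) with Ex > 0, which should be checked against rules (i)–(v) in Section 2.2. The functions `distance` and `support` are hypothetical stand-ins for Equation (10) and Definition 8, and ordering the clouds by Ex is a simplification of the possibility-degree ranking.

```python
import math
from typing import Callable, NamedTuple, Sequence


class Cloud(NamedTuple):
    ex: float  # expectation Ex (assumed > 0 so that powers are defined)
    en: float  # entropy En
    he: float  # hyper entropy He


# Assumed operation rules; check them against rules (i)-(v) of Section 2.2.
def add(a: Cloud, b: Cloud) -> Cloud:
    return Cloud(a.ex + b.ex, math.hypot(a.en, b.en), math.hypot(a.he, b.he))


def scale(k: float, a: Cloud) -> Cloud:
    r = math.sqrt(k)
    return Cloud(k * a.ex, r * a.en, r * a.he)


def power(a: Cloud, lam: float) -> Cloud:
    f = math.sqrt(lam) * a.ex ** (lam - 1)
    return Cloud(a.ex ** lam, f * a.en, f * a.he)


def distance(a: Cloud, b: Cloud) -> float:
    # HYPOTHETICAL stand-in for Equation (10): compare the 3En intervals
    return 0.5 * (abs((a.ex - 3 * a.en) - (b.ex - 3 * b.en))
                  + abs((a.ex + 3 * a.en) - (b.ex + 3 * b.en)))


def support(a: Cloud, b: Cloud) -> float:
    # Hypothetical Sup: in [0, 1], symmetric, decreasing in the distance
    return 1.0 / (1.0 + distance(a, b))


def cgpowa(clouds: Sequence[Cloud], lam: float = 1.0,
           g: Callable[[float], float] = lambda x: x) -> Cloud:
    """Sketch of (sum_j u_j * Y_sigma(j)^lam)^(1/lam) with power weights u_j."""
    if lam <= 0:
        raise ValueError("this sketch only covers lam > 0")
    # Largest-to-smallest order; sorting by Ex simplifies the paper's ranking
    ordered = sorted(clouds, key=lambda c: c.ex, reverse=True)
    n = len(ordered)
    v = [1.0 + sum(support(ordered[i], ordered[k]) for k in range(n) if k != i)
         for i in range(n)]
    tv = sum(v)
    total, r_prev = None, 0.0
    for c, vj in zip(ordered, v):
        r = r_prev + vj
        u = g(r / tv) - g(r_prev / tv)      # power weight of position j
        term = scale(u, power(c, lam))      # u_j * Y_sigma(j)^lam
        total = term if total is None else add(total, term)
        r_prev = r
    return power(total, 1.0 / lam)
```

Under these assumed rules, identical input clouds are returned unchanged (idempotency), the input order does not matter (commutativity), the aggregated expectation stays between the smallest and largest input expectations (boundedness), and λ = 1 and λ = 2 correspond to the CPOWA and CPOWQA cases.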

The following theorem can be deduced from the definition given above.

Theorem 2.

The aggregated value of the CGPOWA operator is still a cloud, and:

Proof.

From operational rules of the cloud given by Li, Liu and Gan [], we have

and

Therefore, from Definition 9, we derive that

☐

The CGPOWA operator given in Definition 9 has the following properties.

Proposition 4.

- (i)

- (Idempotency). If for , then .

- (ii)

- (Commutativity). If is any permutation of , then .

- (iii)

- (Boundedness). If and , we then have , and .

Proof.

See Appendix A. ☐

Remark 5.

The CGPOWA operator possesses the following attractive features: (a) it considers the importance of the ordered position of each input argument, where each input argument is a cloud; (b) it retains the basic features of the LPOWA operator, for instance, it considers the relationships between the arguments and gauges their similarity degrees; (c) the weighting vector associated with the CGPOWA operator can be determined by Equation (15), which provides an objective weighting model based on the data rather than on the preferences and knowledge of the DMs; moreover, by using the support measure to assign lower weights to unduly high (or low) arguments, it reduces their influence on the decision result; (d) the CGPOWA operator considers both the decision arguments and their relationships, which existing cloud aggregation operators neglect, and it can describe the randomness of linguistic terms, which linguistic power aggregation operators cannot; (e) if the linguistic information is converted to a sequence of random variables with certain distribution and moment properties, the CGPOWA operator can be formulated as an abstract stochastic model.

Table 1 shows that the CGPOWA operator can degenerate into many aggregation operators (here ), such as the cloud power ordered weighted quadratic average (CPOWQA) operator, the cloud power ordered weighted average (CPOWA) operator, the cloud power ordered weighted geometric average (CPOWGA) operator, the CGPA operator, the CGM operator, the cloud power weighted quadratic average (CPWQA) operator, and the CWAA and CWGA operators (see Appendix B for a proof).

Table 1.

Family of CGPOWA operator.

By choosing different weighting vectors in the CGPOWA operator, we can obtain other aggregation operators, such as the maximum operator, the minimum operator, the cloud generalized mean operator and the Window-CGPOWA operator (see Table 2).

Table 2.

Particular cases of CGPOWA operator.

5. An Approach for LMCGDM Based on the CGPOWA Operator

An LMCGDM problem is the process of finding the best alternative from all of the feasible alternatives, which are evaluated against a number of criteria using linguistic information. In general, an LMCGDM problem involves multiple experts (DMs), multiple decision criteria and multiple alternatives.

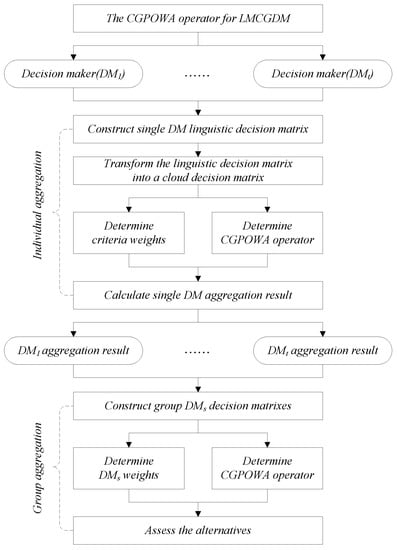

To clarify the procedure for solving an LMCGDM problem on the basis of the cloud model, we develop a general framework for the LMCGDM aggregation procedure (see Figure 2), which contains two stages: (i) individual aggregation, an MCDM process for each DM; and (ii) group aggregation, a multi-expert decision-making process involving multiple experts and multiple alternatives. In individual aggregation, we determine the weights of the criteria and then aggregate the criteria values of each alternative into one collective value by means of the CGPOWA operator, which yields a collective decision matrix over the DMs and alternatives. In group aggregation, we determine the weights of the DMs based on the collective decision matrix and then aggregate the collective values of each alternative into one overall result using the CGPOWA operator. Finally, we assess the alternatives.

Figure 2.

General Framework of the CGPOWA Operator for an LMCGDM Procedure.

We now develop a new algorithm for LMCGDM, based on the improved generating cloud method and the CGPOWA operator, for the case in which the weight information is completely unknown. The algorithm consists of six steps. We first describe its inputs.

Input data of our new LMCGDM algorithm. Let be the set of m alternatives, be the set of n criteria, and be the set of t DMs. Assume that the DM provides his/her preference value for the alternative with respect to the criterion , where takes the form of a linguistic variable; consequently, we can construct a decision matrix for . We summarize all input data below:

Given the input data in (17), our objective is to determine the optimal alternative . We give a new LMCGDM approach below.

An LMCGDM algorithm.

Step 1. Transform the linguistic information into clouds.

Transform the linguistic decision matrix into a cloud decision matrix by applying the improved generating cloud method developed in Section 3.

Step 2. Determine the weights of criteria.

Calculate the cloud support degrees:

which satisfy the support conditions (i)–(iii) in Definition 8. Here, the cloud distance measure is given by Equation (10), and denotes the similarity between the sth largest cloud preference value and the qth largest cloud preference value . We then calculate the weights of the criteria by means of Equation (15).
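Step 2 can be sketched as follows, representing a cloud by its three numerical characteristics (Ex, En, He). Because Equation (10) is not reproduced here, the distance below is a hypothetical stand-in (a normalized mean absolute difference of the characteristics); with it, Sup = 1 − d lies in [0, 1] whenever the characteristics lie in the universe, is symmetric, and grows as the distance shrinks, which is the kind of support condition referred to in Definition 8. The weight rule (proportional to 1 plus total support) is Yager's power-average form and is assumed, not confirmed, to agree with Equation (15).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cloud:
    ex: float  # expectation Ex
    en: float  # entropy En
    he: float  # hyper-entropy He


def cloud_distance(a: Cloud, b: Cloud, width: float) -> float:
    """HYPOTHETICAL stand-in for Equation (10): mean absolute difference of
    (Ex, En, He), divided by the width of the universe of discourse."""
    return (abs(a.ex - b.ex) + abs(a.en - b.en) + abs(a.he - b.he)) / (3.0 * width)


def support_weights(clouds: list[Cloud], width: float) -> list[float]:
    """Weights proportional to 1 + total support, with Sup = 1 - distance."""
    n = len(clouds)
    sup = [[1.0 - cloud_distance(clouds[i], clouds[j], width) for j in range(n)]
           for i in range(n)]
    totals = [sum(sup[i][j] for j in range(n) if j != i) for i in range(n)]
    raw = [1.0 + t for t in totals]
    s = sum(raw)
    return [r / s for r in raw]


# Three hypothetical criterion values on the universe [0, 10]; the third is an outlier.
values = [Cloud(7.0, 1.0, 0.1), Cloud(6.5, 1.1, 0.1), Cloud(2.0, 1.5, 0.3)]
weights = support_weights(values, width=10.0)
print([round(w, 4) for w in weights])
```

The outlying third cloud receives less support from the others and hence a smaller weight; the same routine applied to the collective values of the DMs would give DM weights in the spirit of Step 4.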

Step 3. Aggregate the criteria values of each alternative into a collective value.

Utilize Equation (14) to aggregate all cloud decision matrices into a collective cloud decision matrix .
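Step 3 aggregates clouds with Equation (14). The sketch below evaluates a generalized ordered weighted mean of clouds, (Σ w_k b_k^λ)^(1/λ) with b_k the k-th largest cloud, under the following assumed operational laws, which to our understanding are those commonly used in the cloud aggregation literature: C1 + C2 = (Ex1 + Ex2, √(En1² + En2²), √(He1² + He2²)); wC = (wEx, √w En, √w He); C^λ = (Ex^λ, √λ Ex^(λ−1) En, √λ Ex^(λ−1) He). These laws, the ordering of clouds by Ex alone, and the name `cgpowa_sketch` are assumptions; the weights are passed in directly, whereas in the algorithm they come from Step 2.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Cloud:
    ex: float
    en: float
    he: float


# ASSUMED cloud operational laws (see the text above); Ex must be positive for powers.
def c_add(a: Cloud, b: Cloud) -> Cloud:
    return Cloud(a.ex + b.ex, math.hypot(a.en, b.en), math.hypot(a.he, b.he))


def c_scale(w: float, a: Cloud) -> Cloud:
    return Cloud(w * a.ex, math.sqrt(w) * a.en, math.sqrt(w) * a.he)


def c_pow(a: Cloud, lam: float) -> Cloud:
    factor = math.sqrt(lam) * a.ex ** (lam - 1)
    return Cloud(a.ex ** lam, factor * a.en, factor * a.he)


def cgpowa_sketch(clouds: list[Cloud], weights: list[float], lam: float) -> Cloud:
    """(sum_k w_k * b_k**lam) ** (1/lam), where b_k is the k-th largest cloud.
    Clouds are ordered by Ex only (a simplification); lam must be > 0."""
    if lam <= 0:
        raise ValueError("this sketch only covers lam > 0")
    ordered = sorted(clouds, key=lambda c: c.ex, reverse=True)
    total = Cloud(0.0, 0.0, 0.0)
    for w, b in zip(weights, ordered):
        total = c_add(total, c_scale(w, c_pow(b, lam)))
    return c_pow(total, 1.0 / lam)


result = cgpowa_sketch([Cloud(6.0, 1.0, 0.1), Cloud(8.0, 0.5, 0.2)], [0.6, 0.4], lam=1.0)
print(result)
```

With these laws the sketch is idempotent (aggregating identical clouds with weights summing to 1 returns the same cloud), consistent with the idempotency property stated for the CGPOWA operator.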

Step 4. Calculate the weights of the DMs.

Calculate the cloud support degrees:

which satisfy the support conditions (i)–(iii) in Definition 8. Here, the cloud distance measure is calculated by Equation (10). According to Equation (15), we can calculate the weights of the DMs.

Step 5. Aggregate the collective values of each alternative into one result.

Utilize Equation (14) to compute the collective overall preference value of the alternative .

Step 6. Rank the alternatives and choose the best one(s).

Using the cloud possibility degree (Equation (11)) and the ranking vector (Equation (13)), rank the collective overall preference values in descending order, and select the best alternative(s) according to the collective overall preference values .
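Step 6 can be sketched with a possibility degree matrix and a ranking vector. Equation (11) is not reproduced here, so the possibility degree below is a hypothetical stand-in that treats each cloud as the interval [Ex − 3En, Ex + 3En] and applies the standard interval possibility degree; it satisfies p(a ≥ b) + p(b ≥ a) = 1 and p(a ≥ a) = 0.5. The ranking vector v_i = (Σ_j p_ij + n/2 − 1)/(n(n − 1)) is a widely used formula for complementary possibility matrices; we assume, without confirmation, that it matches Equation (13). The cloud values are hypothetical.

```python
from typing import NamedTuple


class Cloud(NamedTuple):
    ex: float
    en: float
    he: float


def possibility(a: Cloud, b: Cloud) -> float:
    """HYPOTHETICAL stand-in for Equation (11): possibility that a >= b, treating each
    cloud as the interval [Ex - 3En, Ex + 3En] (He is ignored in this sketch)."""
    a_lo, a_hi = a.ex - 3 * a.en, a.ex + 3 * a.en
    b_lo, b_hi = b.ex - 3 * b.en, b.ex + 3 * b.en
    width = (a_hi - a_lo) + (b_hi - b_lo)
    if width == 0:  # both clouds degenerate to crisp numbers
        return 1.0 if a.ex > b.ex else 0.5 if a.ex == b.ex else 0.0
    return min(max((a_hi - b_lo) / width, 0.0), 1.0)


def ranking_vector(clouds: list[Cloud]) -> list[float]:
    """v_i = (sum_j p_ij + n/2 - 1) / (n(n - 1)) for the possibility matrix p."""
    n = len(clouds)
    p = [[possibility(a, b) for b in clouds] for a in clouds]
    return [(sum(row) + n / 2 - 1) / (n * (n - 1)) for row in p]


alternatives = [Cloud(7.0, 0.5, 0.05), Cloud(6.8, 0.2, 0.02), Cloud(5.0, 0.6, 0.05)]
v = ranking_vector(alternatives)
order = sorted(range(len(v)), key=lambda i: v[i], reverse=True)
print(v, order)
```

Because the components of the ranking vector sum to 1, the alternatives can be ordered directly by v_i.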

Remark 6.

Compared with the traditional linguistic approaches (e.g., the linguistic symbolic model, the linguistic membership function model and the 2-tuple linguistic model) and the existing cloud aggregating method (e.g., []), our approach has the following attractive features.

- (a) The three traditional LMCGDM approaches use only a uniform granular linguistic assessment scale, whereas ours uses a psychologically meaningful multi-granular linguistic assessment scale. In other words, when assessing the alternatives, the three traditional approaches regard the average level as the sole criterion, which makes the evaluations rough and one-sided. Our method, however, considers not only the average level but also the fluctuation and stability of qualitative concepts by using and , respectively. This claim is also examined in the numerical analysis of Section 6.2.

- (b) In addition, the aggregation operators corresponding to the three traditional LMCGDM methods are the linguistic power average (LPA) operator for the linguistic symbolic model, the triangular fuzzy weighted averaging (TFWA) operator for the linguistic membership function model and the 2-tuple weighted averaging (TWA) operator for the 2-tuple linguistic model. These operators have weaknesses in describing randomness, whereas our aggregation operator can effectively reduce the loss and distortion of information during aggregation and thereby improve the precision of the results.

For instance, the LPA and TWA operators cannot precisely depict randomness: when converting linguistic variables into real numbers, they map the random decision-making information directly into a crisp domain, so part of the linguistic information is lost. The TFWA operator can describe fuzziness but not randomness.

- (c) Compared with the cloud aggregating method (cf. []), our method provides a completely objective weighting model based on the cloud support degree, whereas the weights in Wang et al. [] are given subjectively by the DMs, which may yield different rankings if the DMs provide different weight vectors. In addition, the CGPOWA operator takes into account the relationships between the arguments provided by the DMs, whereas the cloud aggregating operators of Wang et al. [] do not.

- (d) Our method provides a simple way to compare clouds through the cloud possibility degree (Equation (11)) and the ranking vector (Equation (13)), which requires no knowledge of the distribution of cloud drops. By contrast, the score function [] requires this distribution. This is an attractive feature because, in most cases, the distribution of cloud drops is unknown and cloud drops are difficult to acquire.
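For reference, cloud drops are normally produced by the forward normal cloud generator of the cloud model: for each drop, draw En' from N(En, He²), then x from N(Ex, En'²), and assign the certainty degree exp(−(x − Ex)²/(2En'²)). The sketch below implements that generator (the name `forward_cloud` and the sample parameters are ours) only to make clear what "the distribution of cloud drops" refers to in (d); the possibility-degree comparison does not need to generate any drops.

```python
import math
import random


def forward_cloud(ex: float, en: float, he: float, n: int, seed: int = 0):
    """Forward normal cloud generator: returns n cloud drops (x, certainty degree)."""
    rng = random.Random(seed)
    drops = []
    for _ in range(n):
        en_i = rng.gauss(en, he)          # En' ~ N(En, He^2)
        x = rng.gauss(ex, abs(en_i))      # x ~ N(Ex, En'^2)
        if en_i == 0:
            mu = 1.0 if x == ex else 0.0  # degenerate case: no spread
        else:
            mu = math.exp(-((x - ex) ** 2) / (2 * en_i ** 2))
        drops.append((x, mu))
    return drops


drops = forward_cloud(ex=5.0, en=1.0, he=0.1, n=5000)
print(drops[:3])
```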

6. Illustrative Example

This section provides a numerical example to illustrate the application of the approach proposed in Section 5 and presents a comparative study to examine its validity.

6.1. An Investment Selection Problem

Following [], we assume that an investment company wants to invest a sum of money in another company. There are five possible alternatives for investing the money: a car company , a food company , a computer company , an arms company , and a TV company . The investment company will make its decision according to the following six criteria: financial risk ; technical risk ; production risk ; market risk ; management risk ; and environmental risk . Three DMs evaluate the five alternatives on these six criteria using the linguistic term set. The linguistic decision matrix is shown in Table 3.

Table 3.

Linguistic decision matrix .

To simplify the calculation, we assume throughout the numerical analysis that , in the CGPOWA operator, and that the universe and in the improved generating cloud method. Based on the approach developed in Section 5 and these parameters, the enterprises can be ranked using the MATLAB or Lingo software package.

Procedure of LMCGDM based on the cloud model.

Step 1. Transform the linguistic decision matrix into the corresponding cloud decision matrix by using the improved generating cloud method (see Table 4).

Table 4.

Cloud decision matrix .

Step 2. Calculate the weights of criteria by means of Equation (15). (See Table 5)

Table 5.

The weights of criteria.

Step 3. Aggregate the criteria values of each alternative into a collective value by using Equation (14). (See Table 6)

Table 6.

Collective cloud decision matrix .

Step 4. Calculate the weights of the DMs by means of Equation (15). (See Table 7)

Table 7.

The weights of the DMs.

Step 5. Utilize Equation (14) to compute the collective overall preference value of the alternative .

Step 6. Rank the alternatives and choose the best one(s).

From Step 5, we obtain the positive ideal cloud . The ranking vector is then derived by Equations (11) and (13): Consequently, the clouds are ranked as follows: The ranking order according to the overall collective preference values is:

Thus, the best investment alternative is the computer company , which agrees with the result of Wang et al. []. Compared with the cloud aggregating method, however, our approach has the following features:

- (1) As for the weighting method, we provide an objective weighting model based on the cloud support degree, whereas the weights in Wang et al. [] are given subjectively by the DMs.

For example, Wang et al. [] assumed that the weights of the DMs and of the criteria are given by and , respectively; these weights rely entirely on the subjective preferences and knowledge of the DMs. The ranking results obtained by Wang et al. [] are therefore not stable, since different weight vectors provided by the DMs may lead to different rankings (see Table 8). Our method, by contrast, does not require the DMs to provide any weighting information: the weights are derived from the objective data via the cloud support degree (Equation (15)), and the ranking results generally remain unchanged (see Table 9).

Table 8.

Ranking results for different weights of the DMs and weights of criteria.

Table 9.

Comparison with different values of parameter .

- (2) Our method considers the relationships between the arguments given by the DMs, whereas Wang et al. [] neglect them.

For instance, the CWAA and CWGA operators of Wang et al. [] do not consider the relationships between the arguments, whereas the CGPOWA operator does, by allowing the values being aggregated to support and reinforce each other via cloud support degrees. The cloud support degrees of the arguments can be calculated by Equations (18) and (19).

6.2. Comparative Analysis

To validate the feasibility of our method, we conduct a comparative study using three traditional LMCGDM methods, i.e., the linguistic symbolic model, the linguistic membership function model and the 2-tuple linguistic model, whose aggregation operators are the LPA, TFWA and TWA operators, respectively. The comparison is based on the same illustrative example as in Section 6.1. To make the results directly comparable with those of our method, the weights of the DMs and of the criteria are taken from Table 7 and Table 5, respectively.

• Linguistic symbolic model

First, aggregate all linguistic decision matrices into a collective linguistic decision matrix by applying the LPA operator (see Table 10).

Table 10.

Collective linguistic decision matrix

Second, use the LPA operator to derive the overall collective preference values.

Finally, rank the five alternatives:
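The linguistic symbolic model works directly on label indices. A minimal, unweighted sketch of a linguistic power average, assuming a term set s_0, ..., s_g and a support of 1 − |a_i − a_j|/g between two labels (this normalization is our assumption), shows how an outlying rating receives less weight. The function name `lpa` and the ratings are hypothetical.

```python
def lpa(indices: list[float], g: int) -> float:
    """Linguistic power average of labels s_{a_1}, ..., s_{a_n} drawn from s_0, ..., s_g.

    Support between two labels is taken as 1 - |a_i - a_j| / g (an assumed
    normalization); the result is the (possibly non-integer) index of a virtual label.
    """
    n = len(indices)
    totals = [sum(1.0 - abs(indices[i] - indices[j]) / g for j in range(n) if j != i)
              for i in range(n)]
    raw = [1.0 + t for t in totals]
    return sum(r * a for r, a in zip(raw, indices)) / sum(raw)


# Three DMs rate one alternative as s_5, s_5 and s_0 on the term set s_0, ..., s_6.
print(round(lpa([5, 5, 0], g=6), 4))
```

Here the result (about s_3.82) lies above the plain mean index 10/3, because the outlying rating s_0 receives less support.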

• Linguistic membership function model

First, transform linguistic variables into triangular fuzzy numbers by the method of Iraj et al. []:

Second, use the TFWA operator to derive the individual overall evaluation value :

Third, use the TFWA operator to determine the overall collective evaluation value :

Finally, rank the five alternatives using the method of Chang and Wang [] for comparing triangular fuzzy numbers:
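The TFWA operator averages triangular fuzzy numbers component by component. The sketch below assumes the ratings have already been converted to triangular fuzzy numbers (the conversion of Iraj et al. [] is not reproduced) and applies TFWA twice, first over criteria and then over DMs; the ratings, weights and function names are hypothetical. The centroid is included only as a simple stand-in ranking key and is not the comparison method of Chang and Wang [].

```python
Tfn = tuple[float, float, float]  # (lower, modal, upper)


def tfwa(tfns: list[Tfn], weights: list[float]) -> Tfn:
    """Triangular fuzzy weighted average: component-wise weighted sum."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    lower = sum(w * t[0] for w, t in zip(weights, tfns))
    modal = sum(w * t[1] for w, t in zip(weights, tfns))
    upper = sum(w * t[2] for w, t in zip(weights, tfns))
    return (lower, modal, upper)


def centroid(t: Tfn) -> float:
    # Stand-in ranking key only; NOT the Chang and Wang comparison method.
    return sum(t) / 3.0


# Hypothetical ratings of one alternative: two DMs x two criteria.
ratings = [
    [(0.5, 0.7, 0.9), (0.3, 0.5, 0.7)],
    [(0.7, 0.9, 1.0), (0.5, 0.7, 0.9)],
]
criteria_weights = [0.6, 0.4]
dm_weights = [0.5, 0.5]
individual = [tfwa(r, criteria_weights) for r in ratings]  # one TFN per DM
overall = tfwa(individual, dm_weights)                      # collective TFN
print(overall, centroid(overall))
```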

• 2-tuple linguistic model

First, use the TWA operator to derive the individual overall evaluation values:

Second, apply the TWA operator to obtain the overall collective evaluation values :

Third, rank the five alternatives:
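The 2-tuple linguistic model represents a value β in [0, g] as a pair (s_i, α) with i = round(β) and α = β − i in [−0.5, 0.5), via the translation functions Δ and Δ⁻¹ of Herrera and Martínez. The TWA operator takes the weighted mean of the Δ⁻¹ values and maps it back with Δ. In the sketch below a 2-tuple is stored as (index, alpha); the names and the sample values are hypothetical.

```python
import math


def delta(beta: float) -> tuple[int, float]:
    """Delta: beta -> (i, alpha) with i the closest label index, alpha in [-0.5, 0.5)."""
    i = math.floor(beta + 0.5)  # round half up, so alpha never equals +0.5
    return i, beta - i


def delta_inv(two_tuple: tuple[int, float]) -> float:
    """Inverse translation: (i, alpha) -> i + alpha."""
    i, alpha = two_tuple
    return i + alpha


def twa(two_tuples: list[tuple[int, float]], weights: list[float]) -> tuple[int, float]:
    """2-tuple weighted average: weighted mean of Delta^-1 values, mapped back by Delta."""
    beta = sum(w * delta_inv(t) for w, t in zip(weights, two_tuples))
    return delta(beta)


label, alpha = twa([(3, 0.0), (4, 0.0), (6, 0.0)], [0.5, 0.3, 0.2])
print(label, round(alpha, 4))
```

For example, (s_3, 0), (s_4, 0) and (s_6, 0) with weights 0.5, 0.3 and 0.2 give β = 3.9, i.e. approximately (s_4, −0.1).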

Table 11 shows the ranking results obtained with the three aggregation operators (LPA, TFWA and TWA). Comparing Table 11 with Table 9, we find that the three operators yield the same ranking, which differs from the ranking obtained with the CGPOWA operator. The difference lies in the relative order of and .

Table 11.

Comparison with different models.

This difference can be explained as follows. When assessing the alternatives, the three traditional methods regard the average level as the sole criterion, which makes the evaluations rough and one-sided. Since the average level of is higher than that of , these three methods yield the ranking order . Our method, however, considers not only the average level but also the fluctuation and stability of qualitative concepts by using and , respectively. In other words, the three traditional methods use only a uniform granular linguistic assessment scale, whereas our method uses a psychologically meaningful multi-granular linguistic assessment scale. As a result, the average level of is lower than that of , namely, . In addition, we obtain and in this example. Therefore, according to Equation (13), our method yields the ranking .

7. Conclusions

LMCDM problems are widespread in fields such as economics, management, medical care, the social sciences, engineering and military applications. However, traditional aggregation methods are not robust enough when converting qualitative concepts into quantitative information in LMCDM problems. Among the existing aggregation operators, linguistic power aggregation operators and cloud aggregation operators have notable merits, but each also has its own weaknesses. Because their characteristics are mutually complementary, combining the two types of operators can overcome these weaknesses. This paper developed a new class of aggregation operator that unifies the advantages of the existing linguistic power aggregation operators and cloud aggregation operators while overcoming their limitations. First, we presented an improved method to transform linguistic variables into clouds, which corrects the weaknesses of the classical generating cloud method. Based on this method, we developed new cloud algorithms, such as the cloud possibility degree and the cloud support degree, which can be used for comparing clouds and determining weights, respectively. Furthermore, we developed the CGPOWA operator, which considers both the decision arguments and their relationships and characterizes the fuzziness and randomness of linguistic terms. By studying its properties, we showed that the CGPOWA operator is commutative, idempotent and bounded. Moreover, it can degenerate into many different operators, including the CGPA, CPOWA, CPOWGA, CPWQA, CWAA and CWGA operators, as well as the maximum and minimum operators. Based on the new generating cloud method and the CGPOWA operator, we then developed a new approach for LGDM. Finally, to show the effectiveness and practical performance of our approach, we provided an investment selection example and a comparative analysis.

In future research, it would be interesting to extend our analysis to more sophisticated situations, for example by introducing the behavioral theory of the DMs into the CGPOWA operator. We leave this extension to future work, because our methodology cannot be applied directly to that framework, which would require considerably more sophisticated calculations than we can tackle here.

Acknowledgments

This research is supported by the Natural Science Foundation of China under Grant No. 71671064 and the Humanity and Social Science Fund Major Project of Beijing under Grant No. 15ZDA19.

Author Contributions

Jianwei Gao and Ru Yi contributed equally to this work. Both authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Proposition 1.

From Definition 6, it is easy to verify that conclusions (i) and (ii) hold. (iii) From Definition 6, we have:

Similarly, we can obtain that:

Therefore,

Proof of Proposition 3.

Notice that if , and , then the positive ideal cloud will become . According to Definition 6, we derive . In addition, then, based on Equation (11), the possibility degree matrix can be obtained as follows:

According to Equation (13), we can get the ranking vector Thus, we have:

Proof of Proposition 4.

- (i)

- (Idempotency). If for , then according to Equation (11), we have: Hence,

- (ii)

- (Commutativity). Assume that is any permutation of , then for each , there exists one and only one such that , and vice versa. Therefore, from Equation (11), we have:.

- (iii)

- (Boundedness). Note that if , according to Proposition 2, we have: So, Then, By the same token, if , we can obtain:

Appendix B

Proof of the Family of CGPOWA Operator (See Table 1)

Proof of No.6 in Table 1.

If , then the CGPOWA operator degenerates into the CGPA operator. According to Theorem 2, we derive that:

Thus, as in the above equation, we have:

References

- Wiecek, M.M.; Ehrgott, M.; Fadel, G.; Figueira, J.R. Multiple criteria decision-making for engineering. Omega 2008, 36, 337–339.

- Wu, J.; Li, J.C.; Li, H.; Duan, W.Q. The induced continuous ordered weighted geometric operators and their application in group decision-making. Comput. Ind. Eng. 2009, 58, 1545–1552.

- Xia, M.M.; Xu, Z.S. Methods for fuzzy complementary preference relations based on multiplicative consistency. Comput. Ind. Eng. 2011, 61, 930–935.

- Merigó, J.M.; Casanovas, M. Induced and uncertain heavy OWA operators. Comput. Ind. Eng. 2011, 60, 106–116.

- Gong, Y.B.; Hu, N.; Zhang, J.G.; Liu, G.F.; Deng, J.G. Multi-attribute group decision-making method based on geometric Bonferroni mean operator of trapezoidal interval type-2 fuzzy numbers. Comput. Ind. Eng. 2015, 81, 167–176.

- Wan, S.P.; Wang, F.; Lin, L.L.; Dong, J.Y. Some new generalized aggregation operators for triangular intuitionistic fuzzy numbers and application to multi-attribute group decision-making. Comput. Ind. Eng. 2016, 93, 286–301.

- Ngan, S.C. A type-2 linguistic set theory and its application to multi-criteria decision-making. Comput. Ind. Eng. 2013, 64, 721–730.

- Wei, G.W.; Zhao, X.F.; Lin, R. Some hybrid aggregating operators in linguistic decision-making with Dempster-Shafer belief structure. Comput. Ind. Eng. 2013, 65, 646–651.

- Wang, Z.Q.; Fung, R.Y.K.; Li, Y.L.; Pu, Y. A group multi-granularity linguistic-based methodology for prioritizing engineering characteristics under uncertainties. Comput. Ind. Eng. 2016, 91, 178–187.

- Yager, R.R. Fusion of ordinal information using weighted median aggregation. Int. J. Approx. Reason. 1998, 18, 35–52.

- Xu, Z.S. An overview of operators for aggregating information. Int. J. Intell. Syst. 2003, 18, 953–969.

- Xu, Z.S. Deviation measures of linguistic preference relations in group decision-making. Omega 2005, 33, 249–254.

- Yager, R.R. An approach to ordinal decision-making. Int. J. Approx. Reason. 1995, 12, 237–261.

- Yager, R.R.; Rybalov, A. Understanding the median as a fusion operator. Int. J. Gen. Syst. 1997, 26, 239–263.

- Yager, R.R. Applications and extensions of OWA aggregations. Int. J. Man-Mach. Stud. 1992, 37, 103–132.

- Herrera, F.; Herrera-Viedma, E. Aggregation operators for linguistic weighted information. IEEE Trans. Syst. Man Cybern. Part A 1997, 27, 646–656.

- Lee, H.M. Applying fuzzy set theory to evaluate the rate of aggregative risk in software development. Fuzzy Sets Syst. 1996, 79, 323–336.

- Lee, H.M.; Lee, S.Y.; Lee, T.Y.; Chen, J.J. A new algorithm for applying fuzzy set theory to evaluate the rate of aggregative risk in software development. Inf. Sci. 2003, 153, 177–197.

- Herrera, F.; Herrera-Viedma, E.; Verdegay, J.L. A sequential selection process in group decision-making with a linguistic assessment approach. Inf. Sci. 1995, 85, 223–239.

- Torra, V. The weighted OWA operator. Int. J. Intell. Syst. 1997, 12, 153–166.

- Herrera, F.; Herrera-Viedma, E. Linguistic decision analysis: Steps for solving decision problems under linguistic information. Fuzzy Sets Syst. 2000, 115, 67–82.

- Merigó, J.M.; Casanovas, M. Decision-making with distance measures and linguistic aggregation operators. Int. J. Fuzzy Syst. 2010, 12, 190–198.

- Zhou, L.G.; Chen, H.Y. The induced linguistic continuous ordered weighted geometric operator and its application to group decision-making. Comput. Ind. Eng. 2013, 66, 222–232.

- Zhou, L.; Wu, J.; Chen, H.Y. Linguistic continuous ordered weighted distance measure and its application to multiple attributes group decision-making. Appl. Soft Comput. 2014, 25, 266–276.

- Merigó, J.M.; Palacios-Marqués, D.; Zeng, S.Z. Subjective and objective information in linguistic multi-criteria group decision-making. Eur. J. Oper. Res. 2016, 248, 522–531.

- Herrera, F.; Martínez, L. A model based on linguistic 2-tuples for dealing with multi granular hierarchical linguistic contexts in multi-expert decision-making. IEEE Trans. Syst. Man Cybern. Part B 2001, 31, 227–234.

- Herrera, F.; Martínez, L. A 2-tuple fuzzy linguistic representation model for computing with words. IEEE Trans. Fuzzy Syst. 2000, 8, 746–752.

- Wei, G.; Zhao, X. Some dependent aggregation operators with 2-tuple linguistic information and their application to multiple attribute group decision-making. Expert Syst. Appl. 2012, 39, 5881–5886.

- Wan, S.P. 2-Tuple linguistic hybrid arithmetic aggregation operators and application to multi-attribute group decision-making. Knowl.-Based Syst. 2013, 45, 31–40.

- Xu, Z.S. On generalized induced linguistic aggregation operators. Int. J. Gen. Syst. 2006, 35, 17–28.

- Xu, Z.S. EOWA and EOWG operators for aggregating linguistic labels based on linguistic preference relations. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2004, 12, 791–810.

- Xu, Y.J.; Da, Q.L. Standard and mean deviation methods for linguistic group decision-making and their applications. Expert Syst. Appl. 2010, 37, 5905–5912.

- Xu, Z.S. A method based on linguistic aggregation operators for group decision-making with linguistic preference relations. Inf. Sci. 2004, 166, 19–30.

- Xu, Y.J.; Da, Q.L.; Zhao, C.X. Interactive approach for multiple attribute decision-making with incomplete weight information under uncertain linguistic environment. Syst. Eng. Electron. 2009, 31, 597–601.

- Xu, Z.S. Induced uncertain linguistic OWA operators applied to group decision-making. Inf. Fusion 2006, 7, 231–238.

- Xu, Z.S. An approach based on the uncertain LOWG and induced uncertain LOWG operators to group decision-making with uncertain multiplicative linguistic preference relations. Decis. Support Syst. 2006, 41, 488–499.

- Yager, R.R. The power average operator. IEEE Trans. Syst. Man Cybern. Part A 2001, 31, 724–731.

- Xu, Y.; Merigó, J.M.; Wang, H. Linguistic power aggregation operators and their application to multiple attribute group decision-making. Appl. Math. Model. 2012, 36, 5427–5444.

- Zhou, L.; Chen, H.Y. A generalization of the power aggregation operators for linguistic environment and its application in group decision-making. Knowl.-Based Syst. 2012, 26, 216–224.

- Li, D.; Meng, H.; Shi, X. Membership clouds and membership cloud generators. J. Comput. Res. Dev. 1995, 32, 16–21.

- Wang, J.Q.; Peng, L.; Zhang, H.Y.; Chen, X.H. Method of multi-criteria group decision-making based on cloud aggregation operators with linguistic information. Inf. Sci. 2014, 274, 177–191.

- Wang, J.Q.; Wang, P.; Wang, J.; Zhang, H.Y.; Chen, X.H. Atanassov’s interval-valued intuitionistic linguistic multi-criteria group decision-making method based on trapezium cloud model. IEEE Trans. Fuzzy Syst. 2015, 23, 542–554.

- Li, D.Y.; Du, Y. Artificial Intelligence with Uncertainty; Chapman & Hall/CRC Press: Boca Raton, FL, USA, 2007.

- Li, D.Y.; Liu, C.Y.; Gan, W.Y. A new cognitive model: Cloud model. Int. J. Intell. Syst. 2009, 24, 357–375.

- Wang, H.L.; Feng, Y.Q. On multiple attribute group decision-making with linguistic assessment information based on cloud model. Control Decis. 2005, 20, 679–685.

- Wan, S.P.; Dong, J.Y. A possibility degree method for interval-valued intuitionistic fuzzy multi-attribute group decision-making. J. Comput. Syst. Sci. 2014, 80, 237–256.

- Iraj, M.; Nezam, M.A.; Armaghan, H.; Rahele, N. Designing a model of fuzzy TOPSIS in multiple criteria decision-making. Appl. Math. Comput. 2008, 206, 607–617.

- Chang, T.H.; Wang, T.C. Using the fuzzy multi-criteria decision-making approach for measuring the possibility of successful knowledge management. Inf. Sci. 2009, 179, 355–370.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).