Abstract

Few-Shot classification aims to generalize from very limited samples, providing an effective solution for data-scarce scenarios. From a symmetry viewpoint, an ideal Few-Shot classifier should be invariant to class permutations and treat support and query features in a balanced manner, preserving intra-class cohesion while enlarging inter-class separation in the embedding space. However, existing methods often violate this symmetry because prototypes are estimated from a few noisy samples, which induces asymmetric representations and task-dependent biases under complex inter-class relations. To address this, we propose FC-SBAAT (Feature Collaboration and Sparse Bias-Aware Attention Transformer), a framework that explicitly leverages symmetry in feature collaboration and prototype construction. First, we enhance symmetric interactions between support and query samples in both attention and contrastive subspaces and adaptively fuse these complementary representations via learned weights. Second, we refine prototypes by symmetrically aggregating intra-class features with learned importance weights, improving prototype quality while maintaining intra-class symmetry and increasing inter-class discrepancy. For matching, we introduce a Sparse Bias-Aware Attention Transformer that corrects asymmetric task bias through bias-aware attention with low computational overhead. Extensive experiments show that FC-SBAAT achieves 55.71% and 73.87% accuracy for 1-shot and 5-shot tasks on MiniImageNet and 70.37% and 83.86% on CUB, outperforming prior methods.

1. Introduction

In supervised learning models, classification performance typically depends on large amounts of accurately labeled data. However, obtaining large-scale annotated datasets is both time-intensive and costly, especially in domains like medical imaging and remote sensing, where the annotation process is not only complex but also requires the involvement of domain experts. As a result, in scenarios where labeled data is scarce, the model’s robustness might be impacted, potentially leading to issues such as overfitting or bias. To tackle the challenges posed by data scarcity, Few-Shot Learning (FSL) is recognized as a critical focus in current research efforts. This learning paradigm enables models to be trained with only a few labeled samples, simulating the human ability to learn from limited data. It thus reduces reliance on large-scale annotations and enhances model performance in data-constrained environments. From a broader pattern-recognition perspective, symmetry is a fundamental concept for designing robust classifiers. In Few-Shot Learning, an ideal model should preserve permutation symmetry with respect to class labels and treat support and query samples in a symmetric way so that similar objects are mapped to compact, approximately symmetric clusters in the embedding space. Such geometric and relational symmetries are crucial for constructing stable prototypes from only a few labeled samples.

A variety of effective strategies have been proposed in the area of Few-Shot classification. Metric-based methods have become a central focus in research because of their broad applicability and robustness in real-world applications. These methods are primarily characterized by making classification decisions through the construction of similarity metric functions in the feature space. Prototypical Networks [1] and Relation Networks [2] establish the foundational paradigm of metric learning through generative class prototype construction and discriminative relational modeling, respectively. The former pioneered the use of class-wise mean feature representations as prototypes, defining geometric similarity metrics in the feature space. The latter broke away from traditional fixed metric patterns and introduced an end-to-end learnable relational function model for the first time. Together, these two innovations provide a methodological cornerstone for subsequent research in Few-Shot classification. Matching Networks [3] proposed a prototype matching method based on the attention mechanism, while Neural-Network-based Metric Learning [4] explores optimization strategies for deep feature transformations.

However, prototype-based methods still face certain limitations in Few-Shot classification tasks. These include the difficulty of adapting fixed metric functions to intra-class distribution shifts, the accumulation of metric errors among heterogeneous samples, sensitivity to outliers, and the inability to model complex inter-class relationships—all of which may result in degraded classification performance. To mitigate the constraints of Few-Shot classification tasks, researchers have adopted Graph Neural Networks (GNNs) to enhance learning by modeling relationships between samples, as in Few-Shot Learning with Graph Neural Networks [5]. Building on this, Prototype Rectification for Few-Shot Learning [6] improves classification accuracy by correcting prototype vectors using information from neighboring classes. Another class of methods focuses on incorporating external information to improve model learning. For example, Learning with Side Information for Few-Shot Learning [7] introduces contextual data such as labels or class attributes to help the model better capture inter-class similarities and differences. AMRN [8] improves classification performance by learning multiple prototypes per class to handle intra-class diversity. In terms of task optimization, Task-Aware Prototype Refinement (TAPR) [9] dynamically adjusts prototype vectors during training based on relationships between task-relevant samples. Meanwhile, distributed Few-Shot Learning methods, such as Distributed Few-Shot Learning with Prototype Distribution Correction, further optimize intra-class relationships by introducing distribution correction techniques.

For relation modeling, SaberNet [10] introduces a self-attention mechanism to better model relationships between samples. PSANet [11] applies a salient attention mechanism to focus on key image regions and improve segmentation performance. SCL [12] leverages base class information to train multiple self-supervised objectives and enhance classification. Although significant advances have been made in Few-Shot classification, most approaches handle features within each class separately and do not adequately capture relationships between classes, feature interactions, and multi-level semantic correlations. Moreover, task adaptability remains limited. These constraints reduce the expressive capacity of models when dealing with complex Few-Shot classification tasks, hindering further performance improvements. From the symmetry perspective, the above limitations can be summarized as symmetry breaking under low-data regimes. Noisy supports distort class centers and break intra-class exchangeability, which yields unstable prototypes. Meanwhile, insufficient modeling of support–query interactions may introduce asymmetric feature representations and task-dependent biases. Therefore, restoring symmetry in prototype construction and matching becomes a direct path to improving robustness and generalization in Few-Shot tasks.

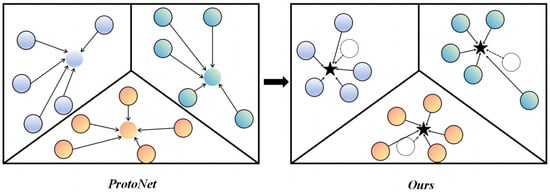

To address prototype instability and inefficient matching in Few-Shot classification, we propose FC-SBAAT, a unified framework that jointly designs feature collaboration, prototype rectification, and attention-based matching. The method first improves the reliability of class representations by rectifying prototypes under scarce and noisy support sets and then leverages the rectified prototypes to guide the subsequent matching process. Specifically, FC-SBAAT performs fine-grained reinforcement in two complementary subspaces and builds intra-class relations to estimate the reliability of support instances. An MLP is then employed to adaptively fuse the enhanced representations, followed by an intra-class-relation-guided refinement that conducts instance-level symmetric weighted aggregation to obtain rectified prototypes, as illustrated in Figure 1. Notably, this rectification differs from common prototype rectification strategies that rely on cross-class neighborhoods or query-dependent heuristics; instead, it exploits intra-class consistency to suppress outliers and mitigate prototype shift. In novel-class Few-Shot scenarios, cross-class or query-driven rectification may introduce unreliable external cues and amplify the shift; hence, we adopt intra-class-consistency-driven symmetric aggregation to improve prototype stability.

Figure 1. Prototype generation process. ProtoNet uses the class mean as the prototype, whereas our method refines the prototype through prototype correction. Each colored circle represents a sample of a class, the dashed circle represents an uncorrected prototype, and the star represents a corrected prototype.

Furthermore, we introduce a Sparse Bias-Aware Attention mechanism in the Transformer decoder to address two key challenges in Few-Shot matching: the high time complexity of dense cross-attention and the susceptibility to task-irrelevant keys in low-data regimes. Concretely, a relevance-driven bias term is injected into the attention logits, and sparse selection is performed on the biased logits so that normalization and value aggregation are computed only over the most task-relevant connections. Regarding information-flow coupling, the class representations produced by prototype rectification are directly used to construct the relevance bias for matching, which in turn determines sparse selection and aggregation, enabling coupled optimization between prototype construction and relation matching. This design improves semantic focus while reducing the time complexity of attention computation, leading to better accuracy, robustness, and generalization.

The principal contributions presented in this study include the following:

- We propose FC-SBAAT, a unified framework that jointly designs feature collaboration, prototype rectification, and attention-based matching and establishes an information-flow coupling from prototype rectification to relevance-bias construction and sparse selection. The rectified prototypes directly shape the matching bias and determine the sparse aggregation scope, enabling coupled optimization between prototype construction and relation matching and improving accuracy, robustness, and generalization under noisy Few-Shot prototypes and complex inter-class relations.

- We introduce a two-subspace feature enhancement mechanism that strengthens fine-grained representations in two complementary subspaces and builds intra-class relations to characterize the reliability of support samples. An MLP is then employed to adaptively fuse the enhanced features, producing more informative task representations for prototype generation.

- We develop an intra-class-consistency-based prototype rectification strategy that suppresses the influence of outliers and noisy samples on mean prototypes through intra-class-relation-guided symmetric weighted aggregation, thereby mitigating prototype shift. This strategy avoids cross-class neighborhood or query-driven heuristics and is particularly suitable for novel-class Few-Shot scenarios.

- We introduce a Sparse Bias-Aware Attention mechanism in the Transformer decoder for matching. A relevance-driven bias term is injected into attention logits, and sparse selection is performed on the biased logits so that normalization and value aggregation are computed only over the most task-relevant subset, reducing the time complexity of attention computation while improving semantic focus.

2. Related Work

2.1. Few-Shot Learning

Few-Shot Learning (FSL) tackles the difficulty of training models with limited labeled data. Meta-Learning (ML) [13], a significant area of research, is generally classified into three primary methodologies: metric-based, optimization-based, and model-based approaches.

Metric-based methods concentrate on establishing an optimal embedding space by reducing distances between same-category instances and enlarging the gap between different-category instances. The Siamese Network [14], an early approach, utilized a twin network structure to tackle Few-Shot classification by learning pairwise sample similarity. ProtoNet introduced a simpler approach in which the mean of the support set samples of a class acts as the class prototype, and classification is conducted by measuring the distance between query samples and the class prototypes. Relation Network further improved metric learning by using a learnable relation module to measure the similarity between query samples and support set samples.

Matching Networks employed an attention mechanism with soft attention weights to model the relationship between support samples and query samples. Optimization-based approaches focus on learning an effective optimization strategy that allows the model to rapidly adjust its parameters for new tasks. MAML [15] is a classic representative of such methods: it trains model parameters across multiple tasks so that a limited number of gradient updates suffices to adapt to novel tasks. Subsequent studies such as Meta-SGD [16] further improved MAML by learning the learning rate jointly with the model’s parameters to improve adaptability. The Reptile method [17] incorporated inter-task variation information into the model parameters through repeated iterations over multiple tasks. In addition, MetaOptNet [18] enhanced the model’s adaptability and generalization by solving a quadratic program on the support set to obtain a linear classifier for the query set.

Model-based approaches achieved Few-Shot Learning by constructing models with fast learning capabilities [19]. The LSTM Meta-Learner proposed by Ravi and Larochelle was one of the early representatives of this approach, which simulated the updating process of an optimization algorithm by training an LSTM, facilitating rapid adaptation of the model to previously unencountered tasks. MetaNet [20] introduced an external memory module, which stored task-related information in external memory and allowed for fast retrieval and updating of new tasks. The recent SNAIL [21] model enabled the model to efficiently acquire historical information at each step by introducing temporal convolution and attentional mechanisms to better adapt to new tasks.

Hybrid methods integrated the strengths of metric, optimization, and model learning to enhance Few-Shot Learning performance through multi-strategy fusion. Meta-Critic Networks [22] proposed a hybrid meta-learning approach based on reinforcement learning, which dynamically selected the optimal policy to update the model parameters. pMAML [23] improved the traditional MAML method by combining probabilistic modeling with MAML.

Although the aforementioned methods have made certain progress in Few-Shot classification, there are still several unresolved challenges. Metric-based approaches exhibit limitations and instability when dealing with complex class distributions and diverse sample features. For example, the attention mechanism in Matching Networks mainly focuses on local similarities, i.e., local relationships between support and query samples. However, it lacks the ability to process global contextual information across classes or tasks, making it ineffective in leveraging task-relevant global features. Optimization-driven approaches seek to acquire a generic optimization strategy or parameter initialization via multi-task learning, empowering the model to rapidly learn new tasks using only a few gradient iterations. However, they often overlook the stability of the gradient update process. When there is a large distributional gap between tasks, the model may fail to find a stable optimization direction within a limited number of updates. Model-based approaches attempt to construct models with rapid learning and adaptation capabilities so that they are able to acquire new tasks from a limited number of samples or training iterations. However, these methods typically rely on fixed feature extraction and representation mechanisms, making it difficult to adapt flexibly across different tasks.

To tackle the issues mentioned above, we present a unified framework that jointly designs feature collaboration, prototype rectification, and Transformer-based matching. We enhance support and query representations through feature collaboration in two complementary subspaces, where global contextual cues and inter-class contrast are jointly exploited to improve discriminability. Based on intra-class relations, we estimate the reliability of support samples and employ an MLP [24] to adaptively fuse the enhanced features, producing task-aware representations for prototype construction. The fused representations are further used to rectify class prototypes via intra-class consistency driven symmetric weighted aggregation, which suppresses noisy or atypical support samples and mitigates prototype shift. To improve task adaptability and matching efficiency, we design a Top-t Sparse Bias-Aware Attention module in the Transformer decoder. A similarity-derived bias is injected into the attention logits, and sparse selection is performed on the biased logits so that normalization and value aggregation are computed only over the selected subset. This information-flow coupling allows the rectified prototypes to shape the relevance bias and sparsification during matching, improving semantic focus while reducing the time complexity of attention computation.

2.2. Contrastive Learning in Few-Shot Learning

The Contrastive Learning framework improves feature robustness by aligning positive sample pairs more closely and separating negative pairs. This method not only strengthens feature representation but also alleviates overfitting issues in data-scarce scenarios.

Self-Supervised Learning for Few-Shot Image Classification [25] introduced the integration of self-supervised Learning with Few-Shot Learning by designing auxiliary tasks, such as rotation prediction and color recovery. Global Class Representations [26] addressed inter-class separation challenges by constructing global representation vectors for each class and incorporating contrastive loss at the global level. Building on these approaches, we integrate Contrastive Learning into the feature co-reinforcement process, enhancing intra-class feature similarity by expanding the feature subspace, thereby improving the effectiveness and stability of feature representations.

2.3. Multi-Head Attention

Attention mechanisms [27] were designed to mimic the ability of humans to pay selective attention when processing information, giving models the flexibility to automatically and selectively focus on important information while ignoring irrelevant or minor parts, thereby improving the models’ ability to understand and process complex data. Multi-Head Attention extends this idea to address the information-redundancy problem that a single Self-Attention module may overlook when processing sequences. By using multiple attentional “heads” in parallel, Multi-Head Attention enables the model to learn varied data representations within different subspaces, thus capturing the fused global information. For a given sample matrix $X \in \mathbb{R}^{n \times d}$, where n represents the number of features and d represents the feature dimensionality, the Multi-Head Attention mechanism aims to acquire diverse feature representations and capture global contextual information through different subspaces, each of which represents a Self-Attention module. The Self-Attention is computed by acquiring the Query, Key, and Value for the attention computation, which requires three trainable parameter matrices. For the three trainable parameter matrices $W^Q$, $W^K$, and $W^V$, their dimensions are $W^Q \in \mathbb{R}^{d \times d_q}$, $W^K \in \mathbb{R}^{d \times d_k}$, $W^V \in \mathbb{R}^{d \times d_v}$. Usually, $d_q = d_k = d_v$. The matrices are obtained by the following formulas:

$$Q = XW^Q, \quad K = XW^K, \quad V = XW^V$$

The output of the Self-Attention mechanism is calculated as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

The final output of Multi-Head Attention can be obtained by concatenating the outputs of the $c$ heads and mapping the result by a linear transformation $W^O$:

$$\mathrm{MultiHead}(X) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_c)\,W^O$$

where the output dimension of each head is $d_v$, and, with $c$ heads, the total dimension after concatenation is $c\,d_v$. In this way, the Multi-Head Attention mechanism is able to capture more diverse and detailed feature representations in different subspaces. As proposed in [28], MTAN introduced the Multi-Head Attention mechanism in the feature extraction and classification stages to enhance feature representation and generalization performance of Few-Shot Learning models. SPAENet [29] enhances feature representation by attentively weighting the input image. Drawing inspiration from MTAN, we integrate the Multi-Head Attention mechanism into feature co-enhancement, where the heads focus on global information and intra-class relationships. By feeding all support features into the Multi-Head Attention module, the feature representation is strengthened during context processing. Multi-Head Attention improves feature representation while simultaneously modeling relationships between classes, thus generating an inter-class relationship matrix, which provides more accurate relationship weights for subsequent prototype generation. Compared with traditional methods, our approach is more flexible in modeling inter-class relationships: by explicitly constructing inter-class relationship matrices, it strengthens both feature enhancement and the representational ability of prototypes, further improving the model’s generalization capability and robustness.
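The computation described in this subsection can be sketched in a few lines of plain Python. This is a minimal illustration of standard multi-head self-attention, not the authors' implementation: the helper names (`matmul`, `self_attention`, `multi_head_attention`, `rand_matrix`), the random initialization, and the sizes `n = 5`, `d = 8`, `c = 2` are all assumptions chosen for readability.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one list of logits."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, wq, wk, wv):
    """Single head: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]
    logits = matmul(q, k_t)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in logits]
    return matmul(weights, v)

def multi_head_attention(x, heads, wo):
    """Concatenate the per-head outputs and apply the output projection W^O."""
    outs = [self_attention(x, wq, wk, wv) for wq, wk, wv in heads]
    concat = [sum((o[i] for o in outs), []) for i in range(len(x))]
    return matmul(concat, wo)

def rand_matrix(rows, cols, rng):
    """Hypothetical initializer: small Gaussian entries."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n, d, c = 5, 8, 2            # illustrative sizes: n features, dimension d, c heads
d_k = d // c                 # per-head dimension (here d_q = d_k = d_v)
x = rand_matrix(n, d, rng)
heads = [tuple(rand_matrix(d, d_k, rng) for _ in range(3)) for _ in range(c)]
wo = rand_matrix(c * d_k, d, rng)
out = multi_head_attention(x, heads, wo)   # n rows, each of dimension d
```

Because no positional information is used, re-ordering the rows of `x` only re-orders the rows of `out` in the same way.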

2.4. Transformer-Based Methods

Since its inception, the Transformer architecture has found extensive applications in diverse sequence modeling tasks, spanning natural language processing, image recognition, and multimodal fusion. In particular, the cross-attention mechanism within Transformer has been extensively used for modeling information interactions across different modalities [30,31]. These methods utilize fully connected attention to capture global relationships between modalities. Although effective, many improved Transformer variants have been proposed to better suit Few-Shot Learning scenarios. For instance, Swin Transformer [32] introduces local window attention to mitigate overfitting risks. AdapterFusion [33] incorporates lightweight adaptation layers into pre-trained Transformers, enabling state-of-the-art performance in Few-Shot tasks with minimal fine-tuning. SLTRN [34] enhances Few-Shot image classification by introducing Transformer-based context modeling both within the support set and between support–query pairs. Inspired by these Transformer-based Few-Shot efforts, we propose a Sparse Bias-Aware Attention module that revisits the support–query interaction beyond conventional cross-attention. In contrast to SLTRN, which improves performance mainly through Transformer context modeling over support samples and support–query pairs, our design introduces task-conditioned bias derived from rectified prototypes and applies Top-t sparsification on the biased attention logits. This prototype-guided bias and sparsification are coupled in the matching stage so that attention is encouraged to concentrate on semantically relevant connections while suppressing task-irrelevant ones that may cause overfitting under scarce supervision. Meanwhile, the sparse computation reduces the time complexity of normalization and value aggregation, improving efficiency without sacrificing discriminative focus. As a result, the proposed module improves robustness and generalization in Few-Shot regimes.
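To make the idea of Top-t sparsification on biased logits concrete, the following sketch adds a bias to scaled dot-product logits, keeps only the t largest biased logits per query, and restricts normalization and value aggregation to that subset. It is an illustration under stated assumptions rather than the proposed module: the function name `sparse_bias_attention`, the hand-written `bias` matrix (standing in for a prototype-derived relevance bias), and the toy inputs are hypothetical.

```python
import math

def sparse_bias_attention(queries, keys, values, bias, t):
    """For each query: add bias[q][k] to the scaled dot-product logits, keep the
    t largest biased logits, and run softmax and value aggregation over those only."""
    d = len(keys[0])
    outputs = []
    for qi, q in enumerate(queries):
        logits = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) + bias[qi][ki]
                  for ki, k in enumerate(keys)]
        # Indices of the t most relevant keys after the bias has been applied.
        keep = sorted(range(len(keys)), key=lambda j: logits[j], reverse=True)[:t]
        m = max(logits[j] for j in keep)
        exps = {j: math.exp(logits[j] - m) for j in keep}
        z = sum(exps.values())
        outputs.append([sum(exps[j] / z * values[j][c] for j in keep)
                        for c in range(len(values[0]))])
    return outputs

queries = [[1.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
no_bias = [[0.0, 0.0, 0.0]]
bias = [[0.0, 2.0, 0.0]]     # hypothetical relevance bias favouring key 1

top1_plain = sparse_bias_attention(queries, keys, values, no_bias, t=1)
top1_biased = sparse_bias_attention(queries, keys, values, bias, t=1)
dense = sparse_bias_attention(queries, keys, values, bias, t=3)
```

With `t=1` the unbiased call attends only to key 0, while the biased call attends only to key 1, which shows how the bias steers the sparse selection. The sketch still computes every logit before selecting, so only the normalization and aggregation steps are sparse here.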

3. Methodology

3.1. Notations and Problem Definition

To formalize the subsequent formulation, we consider a standard Few-Shot classification setting with a base-class dataset $\mathcal{D}_{base}$ and a novel-class dataset $\mathcal{D}_{novel}$, where the two class sets are disjoint, $\mathcal{C}_{base} \cap \mathcal{C}_{novel} = \emptyset$. Following the episodic meta-learning protocol, the overall procedure is divided into a meta-training phase and a meta-testing phase, and the model is optimized/evaluated over a sequence of Few-Shot tasks (episodes).

In the meta-training phase, each training episode is constructed by first sampling K classes from $\mathcal{D}_{base}$ to form a K-way N-shot task $\mathcal{T} = (\mathcal{S}, \mathcal{Q})$. The support set is defined as

$$\mathcal{S} = \{(x^{s}_{k,i},\, y_k) \mid k = 1, \dots, K;\ i = 1, \dots, N\}$$

where $x^{s}_{k,i}$ denotes the i-th labeled support sample from the k-th sampled class, and $y_k$ denotes the class label for the k-th class in the episode. In addition, we sample $M$ query instances from the remaining examples of the same K classes to form the query set

$$\mathcal{Q} = \{(x^{q}_{m},\, y^{q}_{m})\}_{m=1}^{M}$$

N is typically very small (e.g., 1 or 5). Importantly, in our method, the query labels in $\mathcal{Q}$ are available during meta-training and are used to compute the training objectives (e.g., relation-based supervision and Contrastive Learning terms) together with the support set, while the model is still required to predict query classes conditioned on the support set.

In the meta-testing phase, we evaluate the trained model on test episodes sampled from $\mathcal{D}_{novel}$ under the same K-way N-shot protocol.
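The episodic protocol above can be sketched as a small sampling routine. This is a minimal sketch, assuming the dataset is available as a dictionary from class label to a list of samples; the function name `sample_episode`, the `n_query` queries per class, and the toy string samples are illustrative assumptions, not details taken from the method.

```python
import random

def sample_episode(data_by_class, k_way, n_shot, n_query, rng):
    """Sample one K-way N-shot episode.

    data_by_class maps a class label to its list of samples. Classes are
    relabeled 0..K-1 inside the episode; queries are drawn from the samples
    of the same classes that were not used as support."""
    classes = rng.sample(sorted(data_by_class), k_way)
    support, query = [], []
    for episode_label, cls in enumerate(classes):
        picked = rng.sample(data_by_class[cls], n_shot + n_query)
        support += [(x, episode_label) for x in picked[:n_shot]]
        query += [(x, episode_label) for x in picked[n_shot:]]
    return support, query

# Toy dataset: 10 classes with 20 placeholder samples each.
data = {c: [f"c{c}_{i}" for i in range(20)] for c in range(10)}
support, query = sample_episode(data, k_way=5, n_shot=1, n_query=3,
                                rng=random.Random(0))
```

The same routine serves meta-training and meta-testing; only the source of `data_by_class` (base classes or novel classes) changes.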

3.2. Framework

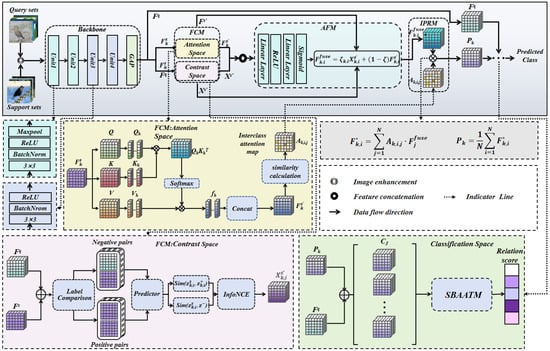

Our framework is composed of four core modules and one data augmentation preprocessing step, as shown in Figure 2. Specifically, the data augmentation step implements task-adaptive sample enhancement to mitigate the problem of data scarcity; the Feature Co-enhancement Module (FCM) conducts feature collaboration through two spaces (Attention Space and Contrast Space) to strengthen intra-class correlations and improve inter-class discriminability; the Adaptive Fusion Module (AFM) performs dynamic weighted fusion on dual-subspace features to avoid information redundancy and enhance model stability; the IPRM refines prototypes under the guidance of intra-class relations to boost the representativeness of prototypes; and the SBAATM adopts a Sparse Bias-Aware Attention mechanism to reduce time complexity and achieve an accurate classification matching process.

Figure 2. System architecture diagram. Note: The symbol ⨂ denotes element-wise multiplication, and the symbol ⨁ denotes concatenation.

3.3. FCM

We treat support and query samples as unordered sets and enhance them through relation-based operators. Multi-Head Attention computes interactions based on pairwise similarities, so re-ordering inputs only permutes the attention matrix accordingly and preserves permutation equivariance. The contrastive subspace further enforces intra-class cohesion and inter-class separation, which can be viewed as a symmetry-preserving regularization in the embedding space. By fusing two complementary subspaces with learned weights, FCM produces symmetry-consistent representations for subsequent prototype construction. The specific procedure is described as follows:

In the feature reinforcement stage, we perform feature collaborative enhancement through the parallel Multi-Head Attention and Contrastive Learning methods within FCM, thereby addressing the intra-class heterogeneity issue. Specifically, during the data augmentation process, we adopt the mixing enhancement scheme corresponding to the ImageNet Enhancement data augmentation strategy, as shown in Figure 2. If the number of support samples per class is at most 1 (the 1-shot scenario), we apply traditional data enhancement methods, denoted $\mathcal{A}(\cdot)$, which include color perturbation, Gaussian noise, blurring, and other operations. These enhancements are applied multiple times in sequence, with intensities set to 10, 20, and 30, respectively. When the number of support samples is greater than 1, we adopt a sample mixing strategy, randomly selecting two samples from the same class and mixing them according to a certain ratio to enhance sample diversity. This strategy helps improve the model’s ability to learn from different samples, thereby enhancing classification performance. The specific formula is as follows:

$$\tilde{x} = \lambda\, x_{k,i} + (1 - \lambda)\, x_{k,j}$$

where $x_{k,i}$ and $x_{k,j}$ are different support samples of the same category, and $\lambda$ is a random mixing proportion (a random value between 0 and 1). The full formula for the augmentation process is shown as follows, which defines the enhancement strategy based on the number of support samples $N$.

$$\mathrm{Aug}(x_{k,i}) = \begin{cases} \mathcal{A}(x_{k,i}), & N \le 1, \\ \lambda\, x_{k,i} + (1 - \lambda)\, x_{k,j}, & N > 1. \end{cases}$$

Subsequently, the augmented support samples are passed through the backbone network, and the feature extractor yields the feature representations of the support samples and query samples, denoted $F^{s}$ and $F^{q}$.
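The shot-dependent augmentation rule can be sketched as follows. This is a simplified illustration in which an image is a flat list of floats and Gaussian noise stands in for the whole family of traditional augmentations; the function names and the `sigma` value are assumptions made for the example.

```python
import random

def mix_samples(x_i, x_j, lam):
    """Convex combination lam * x_i + (1 - lam) * x_j of two same-class samples."""
    return [lam * a + (1.0 - lam) * b for a, b in zip(x_i, x_j)]

def add_gaussian_noise(x, sigma, rng):
    """Stand-in for the traditional augmentations used in the 1-shot case."""
    return [a + rng.gauss(0.0, sigma) for a in x]

def augment_class(samples, rng, sigma=0.1):
    """Pick the augmentation by the number of support samples of one class."""
    if len(samples) <= 1:
        # 1-shot: no second sample to mix with, so perturb the single sample.
        return add_gaussian_noise(samples[0], sigma, rng)
    # More than one shot: mix two distinct samples with a random ratio in [0, 1).
    x_i, x_j = rng.sample(samples, 2)
    return mix_samples(x_i, x_j, rng.random())

rng = random.Random(0)
one_shot = augment_class([[0.2, 0.4, 0.6]], rng)
five_shot = augment_class([[0.0, 0.0], [2.0, 4.0], [1.0, 1.0]], rng)
```

Because mixing is a convex combination, every entry of a mixed sample lies between the corresponding entries of the two originals.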

For the Multi-Head Attention enhancement component of the Feature Co-enhancement Module (FCM), we first extract features of all support and query samples via the backbone network in the FCM: Attention Space, as illustrated in Figure 2, yielding $F^{s}$ and $F^{q}$. Linear transformations are then applied to generate the query, key, and value matrices Q, K, and V, respectively.

Here, $F^{s} \in \mathbb{R}^{S \times D}$ denotes the support feature matrix (with S support samples and feature dimension D). Multi-Head Attention uses c heads indexed by $h \in \{1, \dots, c\}$. For the h-th head, the query, key, and value are obtained by head-specific linear projections:

$$Q_h = F^{s} W_h^{Q}, \quad K_h = F^{s} W_h^{K}, \quad V_h = F^{s} W_h^{V}$$

where $W_h^{Q}, W_h^{K}, W_h^{V} \in \mathbb{R}^{D \times d_h}$ are trainable parameter matrices, and $d_h = D / c$. Equivalently, one may write $Q = [Q_1, \dots, Q_c]$, $K = [K_1, \dots, K_c]$, and $V = [V_1, \dots, V_c]$.

For each head h, the dot-product similarity between $Q_h$ and $K_h$ is computed and normalized by $\sqrt{d_h}$ to produce attention weights, which are then used to aggregate $V_h$:

$$\mathrm{head}_h = \mathrm{softmax}\!\left(\frac{Q_h K_h^\top}{\sqrt{d_h}}\right) V_h$$

Finally, the outputs of all heads are concatenated and projected back to the input feature space by a learnable output matrix $W^{O}$:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_c)\, W^{O}$$

where $\mathrm{head}_h$ denotes the attentional output of the h-th head, and $W^{O} \in \mathbb{R}^{D \times D}$ is the learnable output mapping matrix. The support feature, enhanced by the Multi-Head Attention mechanism, is represented as $F^{att}$, as described by the following equation:

$$F^{att} = \mathrm{MultiHead}(Q, K, V) \in \mathbb{R}^{S \times D}$$

This Multi-Head Attention module enables the model to capture fine-grained intra-support feature dependencies more accurately in different subspaces by computing multiple attention heads in parallel. To describe the remaining steps precisely, we adopt a finer index notation that maps each feature to its class and sample. For each class $k \in \{1, \dots, K\}$, we construct an intra-class similarity matrix $R^{(k)} \in \mathbb{R}^{N \times N}$ based on the N attention-enhanced support features of that class, where $R^{(k)}_{ij}$ denotes the $(i, j)$-th entry of $R^{(k)}$.

$$R^{(k)}_{ij} = s\!\left(f^{att}_{k,i},\, f^{att}_{k,j}\right), \quad k = 1, \dots, K;\ i, j = 1, \dots, N$$

where $K$ denotes the number of classes in an episode, N is the number of support samples per class, $f^{att}_{k,i}$ is the enhanced feature of the i-th support sample in the k-th class, and $s(\cdot, \cdot)$ is the pairwise similarity between two enhanced features.
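The intra-class similarity matrix can be illustrated with a short sketch. The text does not fix the exact similarity measure at this point, so cosine similarity is used here purely as an assumption; the function names and the three toy features are likewise hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two feature vectors (assumed similarity measure)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def intra_class_similarity(class_features):
    """Return the N x N matrix of pairwise similarities for one class."""
    return [[cosine(f_i, f_j) for f_j in class_features] for f_i in class_features]

# Three hypothetical enhanced support features of one class; the third is an outlier.
features_k = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
R_k = intra_class_similarity(features_k)
```

In this toy case the first two samples are highly similar to each other and only weakly similar to the third, which is the kind of signal a reliability estimate for support instances can build on. With a symmetric measure such as cosine similarity, the matrix is symmetric as well.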

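For the contrastive enhancement part of FCM, which is illustrated in the Contrast Space of FCM (denoted as FCM: Contrast Space in Figure 2), positive and negative pairs are constructed from samples of the same and different classes, respectively. Given query features $f^{q}_{m}$ and support features $f^{s}_{k,i}$, we map each feature to a contrastive embedding via a shared projection head followed by normalization:

$$z^{s}_{k,i} = \mathrm{Norm}\!\left(g(f^{s}_{k,i})\right), \quad z^{q}_{m} = \mathrm{Norm}\!\left(g(f^{q}_{m})\right)$$

where $g(\cdot)$ denotes a shared projection head (e.g., a lightweight MLP) applied to both support and query features, and $\mathrm{Norm}(\cdot)$ performs $\ell_2$ normalization, i.e., $\mathrm{Norm}(u) = u / \lVert u \rVert_2$. This operation projects heterogeneous features into a unified embedding space and makes the embeddings $z$ comparable across samples.

For an anchor embedding $z^{s}_{k,i}$, we construct the positive sample from another support feature of the same class:

$$z^{+}_{k,i} = z^{s}_{k,j}, \quad j \neq i$$

where $k \in \{1, \dots, K\}$ indexes the class in an episode, and $i, j \in \{1, \dots, N\}$ index the N support samples of class k. When $N = 1$, $z^{+}_{k,i}$ is obtained by using another augmented view of the same support sample to ensure a valid positive pair.

We construct negative samples from query features belonging to different classes:

$$\mathcal{N}_{k,i} = \{\, z^{q}_{m} \mid y^{q}_{m} \neq y_k \,\}$$

where m indexes a query sample, and $y^{q}_{m}$ denotes its class label. The similarity function is defined as $\mathrm{sim}(u, v) = u^\top v$, which is equivalent to cosine similarity after normalization. Let $\mathcal{N}_{k,i}$ denote the set of negatives for anchor $z^{s}_{k,i}$.

The InfoNCE loss is formulated as

$$\mathcal{L}_{NCE} = -\frac{1}{KN} \sum_{k=1}^{K} \sum_{i=1}^{N} \log \frac{\exp\!\left(\mathrm{sim}(z^{s}_{k,i}, z^{+}_{k,i}) / \tau\right)}{\exp\!\left(\mathrm{sim}(z^{s}_{k,i}, z^{+}_{k,i}) / \tau\right) + \sum_{z^{-} \in \mathcal{N}_{k,i}} \exp\!\left(\mathrm{sim}(z^{s}_{k,i}, z^{-}) / \tau\right)}$$

where $\tau$ is a temperature parameter controlling the sharpness of the softmax distribution. The numerator encourages the anchor to be close to its positive sample, while the denominator contrasts the anchor against all negatives in $\mathcal{N}_{k,i}$. The factor $\frac{1}{KN}$ averages the loss over all anchors in the support set within each episode.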
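A compact sketch of an InfoNCE-style loss over normalized embeddings is given below. It uses the common form in which the positive term also appears in the denominator, which is an assumption about the exact formulation; the function names, the temperature `tau = 0.1`, and the two-dimensional toy embeddings are illustrative.

```python
import math

def l2_normalize(u):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(a * a for a in u))
    return [a / norm for a in u]

def dot(u, v):
    """Dot product; equals cosine similarity for unit-norm inputs."""
    return sum(a * b for a, b in zip(u, v))

def info_nce(anchors, positives, negatives, tau=0.1):
    """Average InfoNCE loss.

    anchors[i] and positives[i] are unit-norm embeddings; negatives[i] is the
    list of unit-norm negative embeddings for anchor i."""
    total = 0.0
    for z, z_pos, negs in zip(anchors, positives, negatives):
        pos = math.exp(dot(z, z_pos) / tau)
        neg = sum(math.exp(dot(z, z_neg) / tau) for z_neg in negs)
        total += -math.log(pos / (pos + neg))
    return total / len(anchors)

anchor = l2_normalize([2.0, 0.0])
aligned = l2_normalize([3.0, 0.0])      # same direction as the anchor
orthogonal = l2_normalize([0.0, 5.0])   # unrelated direction

good = info_nce([anchor], [aligned], [[orthogonal]])
bad = info_nce([anchor], [orthogonal], [[aligned]])
```

The loss is close to zero when the positive is aligned with the anchor and the negative is not (`good`), and large when the roles are swapped (`bad`). A smaller `tau` sharpens this contrast.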

3.4. AFM

To produce more discriminative features, the MLP-driven Adaptive Fusion Module (AFM) performs a weighted fusion of the contrastive-learning-reinforced features and the Multi-Head-Attention-enhanced features. Specifically, for each support sample indexed by the class label $k$ and the intra-class index $i$, we concatenate the attention-enhanced feature $a_{k,i}$ and the contrastive-reinforced feature $c_{k,i}$ to form a fused representation:

$$u_{k,i} = [\,a_{k,i};\, c_{k,i}\,].$$

We use an MLP, as shown in the AFM component of Figure 2, to generate dynamic weights. For each support sample indexed by $(k, i)$, the MLP takes the concatenated feature $u_{k,i}$ as input and outputs a scalar weight $w_{k,i}$ through a linear transformation followed by a nonlinear activation function, so as to dynamically control the fusion ratio of the features. The weight generator is formulated as

$$w_{k,i} = \sigma\big(W_{2}\, \phi(W_{1} u_{k,i} + b_{1}) + b_{2}\big),$$

where $W_{1}$ and $W_{2}$ are the learnable weight matrices, and $b_{1}$ and $b_{2}$ are the corresponding bias terms. $\phi(\cdot)$ is a nonlinear activation function used to enhance the expressive capacity of the model. $\sigma(\cdot)$ denotes the sigmoid function, which constrains the generated weight to the range $(0, 1)$.

After obtaining the dynamic weights $w_{k,i}$, we perform a weighted fusion between the Contrastive-Learning-reinforced feature $c_{k,i}$ and the Multi-Head-Attention-enhanced feature $a_{k,i}$. The fusion is formulated as

$$\tilde{f}_{k,i} = w_{k,i}\, c_{k,i} + (1 - w_{k,i})\, a_{k,i}.$$

The weight $w_{k,i}$ controls the relative contribution of the two features in the final fused representation. As $w_{k,i}$ varies, the model shifts between the Contrastive-Learning-reinforced features $c_{k,i}$ and the Multi-Head-Attention-enhanced features $a_{k,i}$, thereby improving the model’s adaptability.
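The gating idea behind AFM can be sketched in a few lines of pure Python. This is only an illustration under stated assumptions: a two-layer MLP with ReLU as the hidden activation, the sigmoid weight applied to the contrastive feature and its complement applied to the attention feature, and hand-picked toy weights. The names `fusion_weight` and `fuse` are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def linear(W, b, x):
    """Affine map W x + b with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def fusion_weight(attn_feat, con_feat, W1, b1, W2, b2):
    """Scalar gate in (0, 1) computed from the concatenated features."""
    u = attn_feat + con_feat                      # list concatenation
    hidden = [max(0.0, h) for h in linear(W1, b1, u)]  # ReLU (assumed)
    return sigmoid(linear(W2, b2, hidden)[0])

def fuse(attn_feat, con_feat, w):
    """Convex combination: w on the contrastive feature, 1 - w on attention."""
    return [w * c + (1.0 - w) * a for a, c in zip(attn_feat, con_feat)]

# Toy example with hand-picked (not learned) parameters.
attn_feat = [1.0, 0.0]
con_feat = [0.0, 1.0]
W1 = [[0.5, -0.5, 0.5, -0.5], [0.1, 0.2, 0.3, 0.4]]
b1 = [0.0, 0.0]
W2 = [[1.0, -1.0]]
b2 = [0.0]
w = fusion_weight(attn_feat, con_feat, W1, b1, W2, b2)
fused = fuse(attn_feat, con_feat, w)
```

Because the gate is a sigmoid output, the fused vector always lies on the segment between the two input features, so neither branch can be amplified beyond its original scale.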

3.5. IPRM

To address uneven intra-class distributions and to obtain more accurate feature aggregation, we use intra-class weighting for prototype generation, as shown in the IPRM component of Figure 2. Unlike directly averaging the features within a class, this approach aggregates the features of each class using the intra-class similarity matrix generated by the Multi-Head Attention mechanism. Specifically, for the fused support features $\tilde{F}_{k}$ of class $k$ (with rows $\tilde{f}_{k,j}$) and the intra-class similarity matrix $A_{k}$ generated by Multi-Head Attention, $\tilde{F}_{k}$ is weighted using the similarity matrix:

$$\hat{f}_{k,i} = \sum_{j=1}^{N} A_{k}(i, j)\, \tilde{f}_{k,j},$$

where $\hat{f}_{k,i}$ denotes the weighted feature of the i-th fused support feature within the k-th class, and $A_{k}(i, j)$ denotes the intra-class similarity between the i-th and j-th support features within the k-th class. The final weighted feature matrix $\hat{F}_{k}$ represents the features after adding the similarity weights within the class. The weighted features are averaged to obtain the prototype features of the class:

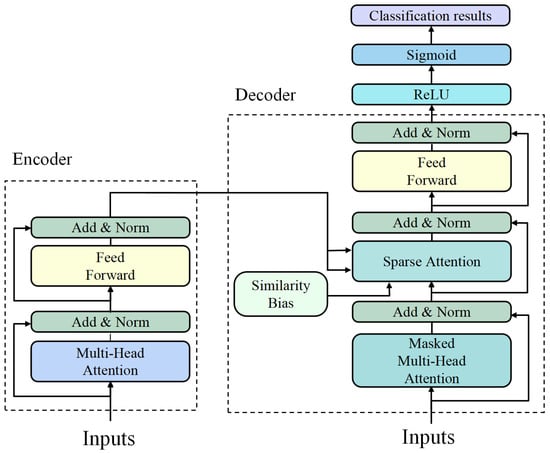
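As a rough illustration of the similarity-weighted prototype described above, the following pure-Python sketch first mixes the support features of one class with an intra-class similarity matrix and then averages the result. The similarity matrix here is hand-written rather than produced by attention, and the names `weighted_prototype` and `plain_mean` are hypothetical.

```python
def plain_mean(features):
    """Ordinary prototype: element-wise mean of the support features."""
    n, d = len(features), len(features[0])
    return [sum(f[c] for f in features) / n for c in range(d)]

def weighted_prototype(features, sim):
    """Prototype from similarity-weighted features.

    features: N vectors of length d (fused support features of one class).
    sim:      N x N intra-class similarity matrix (rows act as mixing weights).
    """
    n, d = len(features), len(features[0])
    weighted = [
        [sum(sim[i][j] * features[j][c] for j in range(n)) for c in range(d)]
        for i in range(n)
    ]
    return plain_mean(weighted)

# Toy class with two consistent samples and one outlier along the first axis.
features = [[1.0, 0.0], [1.0, 0.0], [5.0, 0.0]]
# Hand-written similarities: the two consistent samples ignore the outlier.
sim = [[0.5, 0.5, 0.0],
       [0.5, 0.5, 0.0],
       [0.25, 0.25, 0.5]]
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

proto_weighted = weighted_prototype(features, sim)
proto_plain = plain_mean(features)
```

With an identity similarity matrix the weighted prototype reduces to the plain class mean, while similarities that down-weight the atypical sample pull the prototype back toward the consistent samples.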

3.6. SBAATM

In the classification stage, we adopt a novel classification architecture based on the Sparse Bias-Aware Attention Transformer, which reconstructs the conventional classification paradigm by incorporating similarity as a bias mechanism. The overall framework is depicted in Figure 2, while the detailed structure is presented in Figure 3. Unlike traditional CNN-based Relation Networks that rely on local feature interactions, we design a Transformer with the capability of global relational modeling. This module replaces the cross-attention mechanism in the Transformer decoder with a Top-t sparse attention strategy and integrates inter-class similarity bias. By constructing an implicit relational graph along the feature channel dimension, the classifier adaptively captures semantic associations across regions. Specifically, during the encoding phase, we concatenate the query features $Q$ with the class prototypes $P$ to form a sequence $X = [Q; P]$. A Multi-Head Attention mechanism applied to this sequence captures the inter-class relations.

Figure 3.

SBAATM architecture.

The encoder processes the input sequence and outputs a feature sequence that contains the inter-class relationships.

During the decoding phase, as shown in Figure 3, the decoder first applies masked multi-head self-attention to the query feature sequence to form an intermediate decoder representation, where the mask adopts a diagonal masking scheme. The resulting output is then passed through an Add & Norm layer to obtain $Q'$, which is fed into the proposed Sparse Bias-Aware Attention module, in which the sparse attention mechanism replaces the conventional cross-attention. A bias term B is introduced during attention computation, which is calculated based on the cosine similarity between the query features and the prototypes:

$$B_{m,k} = \frac{q_{m}^{\top} p_{k}}{\lVert q_{m} \rVert_{2}\, \lVert p_{k} \rVert_{2}},$$

where $q_{m}$ represents the m-th query feature, and $p_{k}$ denotes the k-th prototype feature. The cosine similarity is used as the bias term B, which is added to the sparse attention logits to adjust the affinity between each query and each prototype. This enhances the model’s ability to focus on important features. The sparse attention mechanism selects the most relevant prototypes, thereby reducing computational cost and improving classification performance. The final sparse attention computation is defined as follows:

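We employ a Top-t sparsification strategy that retains only the t highest-value prototype connections for each query feature, where $t \le K$ and $K$ denotes the number of classes (K-way) in an episode. Let $H$ denote the number of attention heads and $d$ the model dimension. For the h-th head, the per-head dimension is defined as

$$d_{h} = d / H.$$

The attention logits are computed by

$$S^{(h)} = \frac{\mathbf{Q}^{(h)} \big(\mathbf{K}^{(h)}\big)^{\top}}{\sqrt{d_{h}}} + B,$$

where $\mathbf{Q}^{(h)}$ and $\mathbf{K}^{(h)}$ are the query and key projections of the h-th head, and B is a cosine-similarity-based bias term.

For each query index $m$, we select the Top-t indices from the m-th row of $S^{(h)}$:

$$\mathcal{I}^{(h)}_{m} = \mathrm{TopT}\big(S^{(h)}_{m,:},\, t\big),$$

where $\lvert \mathcal{I}^{(h)}_{m} \rvert = t$. The attention weights are then computed by normalizing the selected logits:

$$\alpha^{(h)}_{m,j} = \frac{\exp\big(S^{(h)}_{m,j}\big)}{\sum_{j' \in \mathcal{I}^{(h)}_{m}} \exp\big(S^{(h)}_{m,j'}\big)}, \quad j \in \mathcal{I}^{(h)}_{m},$$

and $\alpha^{(h)}_{m,j} = 0$ for $j \notin \mathcal{I}^{(h)}_{m}$. The head output for the m-th query is obtained by aggregating the corresponding values over the selected indices:

$$o^{(h)}_{m} = \sum_{j \in \mathcal{I}^{(h)}_{m}} \alpha^{(h)}_{m,j}\, \mathbf{V}^{(h)}_{j}.$$

Finally, the multi-head output is produced by concatenation and linear projection:

$$\mathrm{MultiHead}(m) = \mathrm{Concat}\big(o^{(1)}_{m}, \dots, o^{(H)}_{m}\big)\, W_{O}.$$

We realize the Top-t selection and subsequent computations with index-based selection, so the normalization and value aggregation are carried out only on the selected entries, avoiding explicit materialization of a dense attention matrix.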

By applying the aforementioned Top-t sparsification strategy to the attention logits integrated with this similarity bias, this module retains only the t most relevant prototype connections for each query. It then aggregates the values and outputs the Multi-Head Attention result.
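The Top-t bias-aware attention described above can be sketched for a single head in pure Python. The sketch makes several simplifying assumptions that are not from the source: prototypes are used directly as keys (no learned projections), the bias is the cosine similarity between each raw query and prototype, and the function name `sparse_bias_attention` is hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))

def sparse_bias_attention(queries, keys, values, bias, t):
    """Single-head Top-t attention with an additive bias on the logits.

    Returns, per query, the aggregated output vector and the sorted indices
    of the t keys that were kept.
    """
    d = len(keys[0])
    outputs, kept = [], []
    for m, q in enumerate(queries):
        # Dense scoring: every key is scored so that Top-t can be selected.
        logits = [dot(q, k) / math.sqrt(d) + bias[m][j]
                  for j, k in enumerate(keys)]
        top = sorted(range(len(keys)), key=lambda j: logits[j], reverse=True)[:t]
        # Softmax restricted to the selected entries (max-shifted for stability).
        peak = max(logits[j] for j in top)
        exps = {j: math.exp(logits[j] - peak) for j in top}
        total = sum(exps.values())
        out = [sum(exps[j] / total * values[j][c] for j in top)
               for c in range(len(values[0]))]
        outputs.append(out)
        kept.append(sorted(top))
    return outputs, kept

# Toy episode: one query, three prototypes, scalar values per prototype.
queries = [[1.0, 0.0]]
prototypes = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0]]
bias = [[cosine(q, p) for p in prototypes] for q in queries]

out_top1, kept_top1 = sparse_bias_attention(queries, prototypes, values, bias, t=1)
out_top2, kept_top2 = sparse_bias_attention(queries, prototypes, values, bias, t=2)
```

Note that the scoring loop still touches every prototype; only the softmax and the value aggregation are restricted to the selected indices, which mirrors the point made in the text about where the savings come from.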

The output of the sparse attention module then passes sequentially through the subsequent Add & Norm layer, feed-forward network, and final Add & Norm layer of the decoder. These steps further refine the relational features between queries and prototypes, which are then processed through ReLU and Sigmoid activation functions to generate the class-level matching score matrix R. The computation can be expressed as

Here, k denotes the k-th class, and $R_{m,k}$ indicates the matching score between the m-th query sample and the prototype of the k-th class. The complete network is ultimately trained end to end, employing MSE as the loss function. This procedure is mathematically expressed as

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{MK} \sum_{m=1}^{M} \sum_{k=1}^{K} \Big(R_{m,k} - \mathbb{1}(y_{m} = c_{k})\Big)^{2},$$

where $\mathbb{1}(\cdot)$ is an indicator function and $M$ is the number of query samples in an episode. Here, $y_{m}$ denotes the ground-truth label of the m-th query sample $q_{m}$, and $c_{k}$ denotes the class label associated with the k-th prototype $p_{k}$. The indicator returns 1 if $y_{m} = c_{k}$ and 0 otherwise. Therefore, a matched (query and prototype class) pair has a target value of 1, while an unmatched pair has a target value of 0.

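The overall training objective combines the MSE loss with the InfoNCE loss:

$$\mathcal{L} = \lambda_{1}\, \mathcal{L}_{\mathrm{MSE}} + \lambda_{2}\, \mathcal{L}_{\mathrm{InfoNCE}},$$

where $\lambda_{1}$ and $\lambda_{2}$ are hyperparameters. Finally, the model’s accuracy on the test tasks is calculated as

$$\mathrm{Acc} = \frac{1}{M} \sum_{m=1}^{M} \mathbb{1}(\hat{y}_{m} = y_{m}),$$

where $y_{m}$ is the ground-truth label and $\hat{y}_{m}$ denotes the predicted class label of the m-th query sample, which is determined by the decoder-produced matching score matrix R as

$$\hat{y}_{m} = c_{k^{*}}, \quad k^{*} = \arg\max_{k} R_{m,k},$$

with $c_{k}$ the class label associated with the k-th prototype.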

Finally, we summarize the overall proposed method in Algorithm 1.

Algorithm 1.

The training procedure of FC-SBAAT.
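The matching-score objective and the prediction rule from Section 3.6 can be made concrete with a small pure-Python sketch. It assumes the MSE is averaged over all query and prototype pairs and that the prediction is the class of the highest-scoring prototype; the helper names and the toy score matrix are hypothetical.

```python
def one_hot_targets(query_labels, proto_labels):
    """Target 1.0 for a matched (query, prototype) pair, 0.0 otherwise."""
    return [[1.0 if y == c else 0.0 for c in proto_labels] for y in query_labels]

def mse_loss(scores, targets):
    """Mean squared error over all query/prototype pairs."""
    m, k = len(scores), len(scores[0])
    total = sum((scores[i][j] - targets[i][j]) ** 2
                for i in range(m) for j in range(k))
    return total / (m * k)

def predict(scores, proto_labels):
    """Assign each query the label of its highest-scoring prototype."""
    return [proto_labels[max(range(len(row)), key=row.__getitem__)]
            for row in scores]

def accuracy(predictions, labels):
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Toy 2-way episode with three queries; scores lie in [0, 1] as after a sigmoid.
proto_labels = ["a", "b"]
query_labels = ["a", "b", "b"]
scores = [[0.9, 0.1],
          [0.2, 0.7],
          [0.6, 0.4]]

loss = mse_loss(scores, one_hot_targets(query_labels, proto_labels))
preds = predict(scores, proto_labels)
acc = accuracy(preds, query_labels)
```

In this toy episode the third query is scored higher for the wrong prototype, so it contributes most of the loss and is the only misclassified sample.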

4. Experimental Results and Discussion

This section begins by detailing the experimental setup, including the datasets and implementation specifics (Sections 4.1 and 4.2). Subsequently, we evaluate the proposed method through comparisons with contemporary state-of-the-art approaches to demonstrate its performance merits (Section 4.3). Ablation analysis is carried out to examine the influence of individual modules on the model’s overall results (Section 4.4). Finally, t-SNE visualization is employed to illustrate the model’s feature space distribution and classification behavior across tasks (Section 4.5).

4.1. Datasets and Experimental Environment

The MiniImageNet and CUB datasets were chosen for this study to systematically assess the performance of the proposed FC-SBAAT method in Few-Shot Learning tasks. A brief overview of the two datasets is provided below:

MiniImageNet: The MiniImageNet dataset [3] is a widely used benchmark for Few-Shot Learning. It is derived from ImageNet and consists of 100 randomly selected categories, each with 600 images resized to 84 × 84 pixels. The dataset is split into three parts: 64 categories for training, 16 for validation, and 20 for testing.

CUB (Caltech-UCSD Birds 200): The CUB-200-2011 dataset [35] is a standard benchmark for fine-grained image categorization and contains 11,788 images of 200 bird categories, with approximately 30 to 60 images per category. This dataset is particularly challenging for Few-Shot Learning due to its high inter-category similarity and intra-category variation. In this study, the 200 categories are randomly divided into 100 for training, 50 for validation, and the remaining 50 for testing. The standard training-validation-testing split and the corresponding evaluation scheme specified for CUB-200-2011 are adopted throughout all experiments.

Experimental environment: Intel Core i9-13900F CPU (Intel Corporation, Santa Clara, CA, USA), 32 GB RAM, NVIDIA RTX 4060 GPU (NVIDIA, Santa Clara, CA, USA), Windows 11 (64-bit, Microsoft, Redmond, WA, USA), Python 3.11.8 (https://www.python.org, accessed on 4 January 2026).

4.2. Experimental Details

This study adopts the episode training paradigm for Few-Shot Learning. All key training parameters are explicitly defined through configuration files and code to ensure full reproducibility of the experiments. The training phase includes 700,000 training episodes, providing sufficient training volume for the model to fully learn stable feature representations and robust relation matching capabilities.

The Adam optimizer is selected as it effectively balances convergence speed and gradient stability in Few-Shot Learning tasks. The initial learning rate is set to , with an L2 weight decay coefficient of introduced to alleviate overfitting during model training. For the loss function, the hyperparameters and are set to 0.9 and 0.1, respectively, to optimize the trade-off between intra-class cohesion and inter-class separation in feature learning [36,37].

The sample composition of each training episode follows the K-way N-shot setting for Few-Shot tasks: a single task contains five classes, each with one or five support samples corresponding to the two core task scenarios of 5-way 1-shot and 5-way 5-shot. Each class is paired with 16 query samples for model optimization. In the Contrastive Learning module, the temperature coefficient is set to 0.1. This parameter adjusts the discrimination scale of feature similarity, enhancing the model’s ability to distinguish fine-grained similar features and improving the discriminability of feature representations.
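The episode composition described above (5-way, 1-shot or 5-shot, 16 query samples per class) can be sketched as follows. This is a generic episodic sampler written for illustration, not the authors' data pipeline; the function name `sample_episode`, the dictionary layout, and the toy dataset are assumptions.

```python
import random

def sample_episode(images_by_class, k_way=5, n_shot=1, n_query=16, rng=None):
    """Draw one K-way N-shot episode with disjoint support and query sets.

    images_by_class maps a class name to a list of image identifiers.
    Returns two lists of (image, episode_label) pairs.
    """
    rng = rng or random.Random()
    classes = rng.sample(sorted(images_by_class), k_way)
    support, query = [], []
    for label, cls in enumerate(classes):
        # Sample without replacement so support and query never overlap.
        picked = rng.sample(images_by_class[cls], n_shot + n_query)
        support += [(img, label) for img in picked[:n_shot]]
        query += [(img, label) for img in picked[n_shot:]]
    return support, query

# Toy dataset: 8 classes with 30 image identifiers each.
toy_data = {f"class_{c}": [f"img_{c}_{i}" for i in range(30)] for c in range(8)}
support_1shot, query_1shot = sample_episode(toy_data, n_shot=1, rng=random.Random(0))
support_5shot, query_5shot = sample_episode(toy_data, n_shot=5, rng=random.Random(0))
```

Episode labels are re-indexed from 0 to K-1 in every episode, so the model cannot rely on a fixed global class identity across episodes.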

The validation phase includes 500 validation episodes, with model performance evaluated every 5000 training episodes. The final experimental results are calculated as the average of the evaluation data from these 500 validation episodes, and the 95% confidence interval is used to measure the statistical reliability of the results.
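A mean accuracy with a 95% confidence interval over evaluation episodes is commonly computed with a normal approximation, as in the sketch below. The source does not state which interval formula was used, so the 1.96 standard-error rule and the function name `mean_with_ci95` are assumptions.

```python
import math
import statistics

def mean_with_ci95(accuracies):
    """Return (mean, half_width) of a normal-approximation 95% interval."""
    n = len(accuracies)
    mean = statistics.fmean(accuracies)
    # Standard error of the mean from the sample standard deviation.
    half_width = 1.96 * statistics.stdev(accuracies) / math.sqrt(n)
    return mean, half_width

# Toy per-episode accuracies for 500 episodes (illustrative values only).
episode_acc = [0.5, 0.6] * 250
mean_acc, ci_half = mean_with_ci95(episode_acc)
```

The interval narrows with the square root of the number of episodes, which is why a few hundred evaluation episodes are typically used for reporting.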

4.3. Comparison with State-of-the-Art Methods

In this section, we evaluate the proposed FC-SBAAT method on the MiniImageNet and CUB datasets and compare it with existing state-of-the-art techniques. As indicated in Table 1, FC-SBAAT improves on the compared methods in both 1-shot and 5-shot tasks on MiniImageNet and CUB. These results highlight the accuracy gains of the proposed approach.

Table 1.

Mean classification accuracy (%) on MiniImageNet and CUB (5-way 1-shot/5-shot).

MAML and MetaOptNet are optimization-based meta-learning methods that achieve fast adaptation mainly through within-task parameter updates or explicit optimization. Under Few-Shot conditions, these methods can be sensitive to the optimization trajectory and initialization, and they often fail to fully exploit fine-grained relationships among samples, which may lead to performance bottlenecks when the class structure is complex. As shown in Table 1, under the Conv4 setting, FC-SBAAT achieves 55.71% and 73.87% accuracy on MiniImageNet for 1-shot and 5-shot, respectively, which is clearly higher than MAML with 48.70% and 63.11% and MetaOptNet with 51.28% and 69.71%. This indicates that relying solely on within-task optimization is insufficient to stably capture fine-grained discriminative cues in Few-Shot regimes, whereas explicit relation modeling and more robust class-level representations are more critical [44]. On CUB, FC-SBAAT further reaches 70.37% and 83.86% accuracy for 1-shot and 5-shot, respectively, again outperforming the aforementioned optimization-based baselines. This result further suggests that, in fine-grained scenarios with larger intra-class variations, optimization-driven adaptation alone is more vulnerable to noisy support samples and prototype shift and thus requires a more robust class representation construction strategy. When adopting ResNet-12 as the backbone, FC-SBAAT still maintains a consistent advantage over optimization-based baselines. Table 1 shows that, on MiniImageNet, FC-SBAAT achieves 67.42% and 85.71% accuracy for 1-shot and 5-shot, respectively, which is higher than MetaOptNet with 62.64% and 78.63% and also exceeds the cosine classifier baseline with 55.43% and 77.18%. 
This implies that even with a stronger feature extractor, optimization-based methods may not sufficiently convert the representational gains of the backbone into stable task-level discrimination, while relation enhancement and prototype refinement are more effective for task generalization [45]. On CUB, FC-SBAAT attains 77.13% and 91.48% accuracy for 1-shot and 5-shot, respectively, surpassing MetaOptNet with 72.00% and 84.20% and the cosine classifier with 67.30% and 84.75%. This demonstrates that, for fine-grained datasets, relying solely on decision-level optimization or fast adaptation is still inadequate to suppress representation shift caused by intra-class variations and noise-driven prototype bias, whereas FC-SBAAT can produce more robust class representations and more reliable matching decisions through effective relation modeling and prototype generation refinement, thereby achieving consistent performance gains across different backbones [46,47].

Compared with metric-learning methods, the advantages of FC-SBAAT are mainly reflected in two aspects: feature representation quality and the stability of prototypes and matching. ProtoNet forms class prototypes by aggregating support samples within each class. RelationNet computes similarities between the support set and the query set via a relation module. DeepEMD [41] measures distances using local matching based on Earth Mover’s Distance (EMD). SLTRN enhances inter-sample relation modeling by combining the Transformer self-attention mechanism with relational reasoning. TADAM [39] improves cross-class generalization by strengthening the task adaptivity of the metric space. However, when only a handful of support samples are available, these methods remain susceptible to noisy samples, intra-class variations, and prototype shift. In particular, although SLTRN improves the expressiveness of inter-sample relations, its class representation still relies on holistic aggregation of support features, and it lacks an explicit mechanism to suppress prototype bias caused by uneven support quality or noisy samples. As a result, when the support set contains abnormal samples or exhibits quality fluctuations, class-level representations are more likely to drift, thereby undermining the stability and discriminability of the matching process.

As reported in Table 1, under the Conv4 backbone, FC-SBAAT improves upon DeepEMD on MiniImageNet by increasing the 1-shot accuracy from 53.81% to 55.71% and the 5-shot accuracy from 70.56% to 73.87%, and it raises the 5-shot accuracy on CUB from 83.58% to 83.86%. FC-SBAAT also achieves consistent gains over SLTRN: on MiniImageNet, the 1-shot accuracy increases from 52.11% to 55.71% and the 5-shot accuracy increases from 66.54% to 73.87%; on CUB, the 1-shot accuracy increases from 67.55% to 70.37%, and the 5-shot accuracy increases from 80.07% to 83.86%. Under the ResNet-12 backbone, FC-SBAAT further surpasses the strong baseline BML, improving the 1-shot accuracy on MiniImageNet from 67.04% to 67.42% and the 5-shot accuracy from 83.63% to 85.71%, and improving the 1-shot accuracy on CUB from 76.21% to 77.13% and the 5-shot accuracy from 90.45% to 91.48%. These results indicate that FC-SBAAT can still deliver stable improvements under a stronger backbone.

For FC-SBAAT, we adopt a feature co-enhancement strategy to optimize the prototype generation process. By modeling inter-sample relations in a more fine-grained manner, FC-SBAAT produces more representative class prototypes and better captures subtle inter-class differences. This design enables FC-SBAAT to consistently outperform ProtoNet, which mainly relies on simple prototype estimation, under different backbones. Under Conv4, ProtoNet achieves 49.42% and 68.20% accuracy on MiniImageNet for 1-shot and 5-shot and 54.52% and 73.30% accuracy on CUB, whereas FC-SBAAT achieves 55.71% and 73.87% on MiniImageNet and 70.37% and 83.86% on CUB, corresponding to relative improvements of 12.73% and 8.31% on MiniImageNet and 29.07% and 14.41% on CUB. Under ResNet-12, ProtoNet achieves 62.39% and 80.53% on MiniImageNet and 71.88% and 87.42% on CUB, while FC-SBAAT achieves 67.42% and 85.71% on MiniImageNet and 77.13% and 91.48% on CUB, yielding relative improvements of about 8.06% and 6.43% on MiniImageNet and 7.30% and 4.64% on CUB. Overall, the results in Table 1 demonstrate that FC-SBAAT achieves superior Few-Shot recognition performance under both Conv4 and ResNet-12. Under Conv4, the improvement is most evident on the fine-grained CUB dataset, which aligns with the design goal of relation enhancement and more robust prototypes; under ResNet-12, the relative gains on the two datasets are of similar size.
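The relative improvements over ProtoNet quoted above follow from the simple ratio (ours − baseline) / baseline. The short sketch below recomputes them from the accuracies stated in the text; the function name `relative_gain` and the dictionary layout are illustrative choices.

```python
def relative_gain(baseline, ours):
    """Relative improvement of `ours` over `baseline`, in percent."""
    return (ours - baseline) / baseline * 100.0

# (ProtoNet accuracy, FC-SBAAT accuracy) pairs as reported in the text.
RESULTS = {
    ("Conv4", "MiniImageNet", "1-shot"): (49.42, 55.71),
    ("Conv4", "MiniImageNet", "5-shot"): (68.20, 73.87),
    ("Conv4", "CUB", "1-shot"): (54.52, 70.37),
    ("Conv4", "CUB", "5-shot"): (73.30, 83.86),
    ("ResNet-12", "MiniImageNet", "1-shot"): (62.39, 67.42),
    ("ResNet-12", "MiniImageNet", "5-shot"): (80.53, 85.71),
    ("ResNet-12", "CUB", "1-shot"): (71.88, 77.13),
    ("ResNet-12", "CUB", "5-shot"): (87.42, 91.48),
}

GAINS = {key: relative_gain(base, ours) for key, (base, ours) in RESULTS.items()}

for (backbone, dataset, shot), gain in GAINS.items():
    print(f"{backbone:9s} {dataset:12s} {shot}: +{gain:.2f}% relative")
```

Relative gains shrink as the baseline accuracy rises, so comparing them across backbones says more about the headroom left by the baseline than about the absolute size of the improvement.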

4.4. Ablation Study

4.4.1. The Impact of Different Structures

To evaluate the impact of individual sub-modules in the prototype rectification process of FC-SBAAT, we conduct ablation studies (see Table 2) to systematically quantify the contribution of each component and its role in both feature enhancement and prototype generation. Specifically, we decompose the Feature Cooperative Enhancement Module (FCM) into two components: the Multi-Head Attention enhancement module (MHA) and the Contrastive Learning enhancement module (CL). The former strengthens feature interactions via a Multi-Head Attention mechanism, while the latter improves discriminability by pulling samples from the same class closer and pushing samples from different classes apart through Contrastive Learning. In addition, we adopt a Relation Network paradigm as the baseline, where a Sparse Bias-Aware Transformer is used as the relation function. For fair comparison, all methods are evaluated on MiniImageNet and CUB under both 1-shot and 5-shot settings. The baseline model employs Conv-4 as the backbone, and all variants use the same feature enhancement strategy described above, forming the variants Base-MHA, Base-FCM, Base-FCM-AFM, and Base-FCM-AFM+IRPM to progressively verify the effectiveness and synergy of the proposed modules.

Table 2.

Ablation study on FC-SBAAT: classification accuracy (%) on MiniImageNet and CUB.

Table 2 reports the mean accuracy with 95% confidence intervals on MiniImageNet and CUB under different sub-module combinations, aiming to quantify how the key components in FC-SBAAT contribute to feature enhancement and prototype refinement. Overall, the results exhibit a clear monotonic and steady improvement as MHA, FCM, AFM, and IRPM are progressively incorporated. This consistent trend across both datasets and both shot settings indicates that the proposed components provide complementary benefits along two core paths, namely, representation reinforcement and prototype rectification, with their cumulative effects driving gradual performance optimization.

Base serves as the prototype-based reference. After introducing the Multi-Head Attention module, Base-MHA yields steady incremental gains on both datasets, improving MiniImageNet from 52.42% to 53.11% in 1-shot and from 67.10% to 71.37% in 5-shot while improving CUB from 67.86% to 68.92% in 1-shot and from 80.36% to 81.64% in 5-shot. The more noticeable incremental improvement on MiniImageNet 5-shot suggests that Multi-Head Attention effectively captures fine-grained cross-sample dependencies and stabilizes feature interactions when more support evidence is available, which translates to more tangible gains in scenarios with relatively sufficient samples.

Replacing MHA with the full feature co-enhancement strategy further achieves steady performance gains. Base-FCM consistently outperforms both Base and Base-MHA, reaching 53.88% and 71.81% on MiniImageNet and 69.17% and 82.38% on CUB under 1-shot and 5-shot, respectively. These results confirm that co-enhancement provides richer and more discriminative representations than attention-only reinforcement, thereby yielding more consistent incremental gains for metric-based classification. The steady performance lift demonstrates that the synergistic effect of MHA and CL is conducive to refining feature quality without introducing redundant information.

When AFM is incorporated on top of Base-FCM, performance continues to show steady incremental improvements in all settings, with Base-FCM-AFM achieving 54.95% and 72.62% on MiniImageNet and 69.81% and 83.03% on CUB. This observation highlights that adaptive fusion is critical for converting multi-source enhanced features into a unified and stable representation—even with modest accuracy gains, the functional value of AFM is evident. By dynamically reweighting reinforced cues, AFM reduces information cancellation and suppresses noise accumulation, which directly improves the reliability of support features and thus the quality of prototype estimation.

Finally, adding IRPM leads to further steady incremental improvements, and the complete model attains the best results in every setting, reaching 55.71% and 73.87% on MiniImageNet and 70.37% and 83.86% on CUB. The additional modest yet meaningful gains over Base-FCM-AFM indicate that the remaining bottleneck lies not only in representation quality but also in the sensitivity of prototype construction under limited support. IRPM explicitly exploits intra-class structural cues to reweight and constrain prototype formation, mitigating prototype shift induced by atypical samples and improving decision stability—an important merit beyond mere incremental performance gains, especially in small-sample scenarios where result reliability is crucial.
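The stage-by-stage gains discussed in this ablation can be tabulated directly from the accuracies quoted in the text. The sketch below uses only those reported numbers; the list layout and the helper name `stage_gains` are illustrative.

```python
# Accuracies (%) quoted in the text, ordered as:
# (MiniImageNet 1-shot, MiniImageNet 5-shot, CUB 1-shot, CUB 5-shot)
ABLATION = [
    ("Base", (52.42, 67.10, 67.86, 80.36)),
    ("Base-MHA", (53.11, 71.37, 68.92, 81.64)),
    ("Base-FCM", (53.88, 71.81, 69.17, 82.38)),
    ("Base-FCM-AFM", (54.95, 72.62, 69.81, 83.03)),
    ("Base-FCM-AFM+IRPM", (55.71, 73.87, 70.37, 83.86)),
]

def stage_gains(rows):
    """Accuracy difference (percentage points) between consecutive variants."""
    gains = []
    for (_, prev), (name, cur) in zip(rows, rows[1:]):
        gains.append((name, tuple(round(c - p, 2) for p, c in zip(prev, cur))))
    return gains

GAINS = stage_gains(ABLATION)
for name, deltas in GAINS:
    print(name, deltas)

# Total gain of the full model over Base in each setting.
TOTAL = tuple(round(full - base, 2)
              for base, full in zip(ABLATION[0][1], ABLATION[-1][1]))
```

Every step is positive in all four settings, and the largest single step is the addition of MHA in the MiniImageNet 5-shot setting, consistent with the discussion above.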

In the ablation analysis of the classification module (see Table 3), we adopt the vanilla Transformer architecture as the baseline and construct an improved classification module by introducing a bias-aware mechanism, thereby systematically validating its effectiveness on representative Few-Shot classification benchmarks. To ensure a fair comparison, all methods consistently employ Conv-4 as the feature extraction backbone and share the same prototype construction pipeline, i.e., the framework composed of FCM, AFM, and IRPM, and we vary only the classification module to eliminate confounding factors. The experimental results show that the improved classification module delivers stable performance gains under all task configurations, with more pronounced improvements when labeled samples are extremely limited. This advantage primarily stems from the bias-aware mechanism, which directionally strengthens semantic correlations. Compared with the global unbiased similarity computation in conventional attention, the bias-guided strategy better focuses on task-relevant discriminative features.

Table 3.

Ablation study on classification module: classification accuracy (%) comparison on MiniImageNet and CUB datasets.

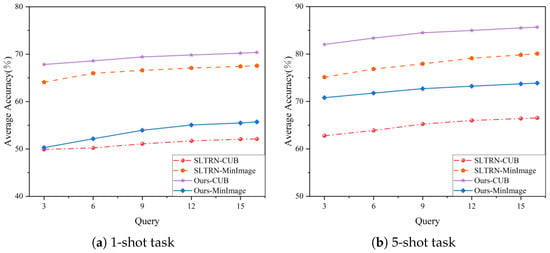

4.4.2. The Impact of Different Hyper-Parameters

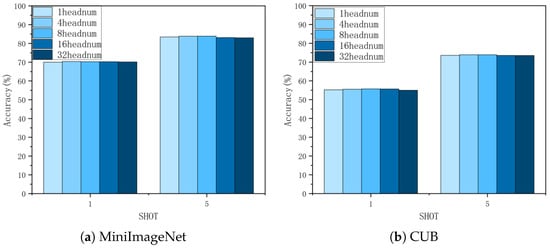

This experiment involved a comprehensive evaluation of the FC-SBAAT model on the MiniImageNet and CUB datasets for 1-shot and 5-shot tasks, systematically varying the number of query images and recording the classification accuracies for each configuration. The results are presented in Figure 4, where Figure 4a shows the 1-shot setting, and Figure 4b shows the 5-shot setting.

Figure 4.

Impact of query sample size ((a): impact on 1-shot task; (b): impact on 5-shot task).

The classification accuracy of FC-SBAAT improves as the number of query images increases in both the 1-shot and 5-shot tasks. The model’s performance is positively correlated with the number of query images: with more query images per episode, each episode supplies more labeled comparisons against the class prototypes, which improves the model’s ability to discriminate subtle category differences and ultimately enhances classification accuracy.

However, as the number of query images increases, the model’s performance improvement starts to plateau and becomes less noticeable after a certain point, suggesting a saturation effect in the impact of query image quantity on performance. Further increasing the number of query images may lead to a waste of computational resources, while the performance improvement effect tends to be limited. Therefore, in practical applications, a reasonable selection of the number of query images is crucial for improving the efficiency of the model.

In this experiment, once the number of query images reaches 15, the model’s performance has clearly leveled off, and further increases no longer significantly enhance classification accuracy. Therefore, to avoid the computational overhead caused by too many query images while fully utilizing the model’s potential, we chose 16 query images as the parameter setting. This choice balances computational cost against classification accuracy.

Figure 5a,b demonstrate the performance of the FC-SBAAT model in 1-shot and 5-shot tasks on the MiniImageNet and CUB datasets. The experimental results indicate that with variations in the number of attention heads within the Multi-Head Attention mechanism, the model’s classification accuracy remains stable and does not exhibit notable fluctuations. This phenomenon indicates that FC-SBAAT possesses excellent robustness and stability in hyperparameter selection, especially in adjusting the number of Multi-Head Attention heads.

Figure 5.

Impact of the number of attention heads ((a): on MiniImageNet dataset; (b): on CUB dataset).

Specifically, although accuracy varies slightly across configurations, it does not degrade significantly for any tested number of attention heads. On both the MiniImageNet and CUB datasets, the performance of FC-SBAAT improves gradually as the number of attention heads increases and eventually reaches a plateau.

This suggests that FC-SBAAT maintains its performance under different settings of this hyperparameter and is not sensitive to the number of attention heads, which simplifies tuning of the Multi-Head Attention mechanism.

4.4.3. The Impact of Different Feature Enhancement Strategies

To assess the effectiveness of the proposed feature enhancement approach, we performed experiments on the MiniImageNet and CUB datasets in both 1-shot and 5-shot task scenarios. The experimental results are shown in Table 4, where our feature enhancement scheme achieves consistent improvement under multiple task settings.

Table 4.

Comparison of feature enhancement strategies: classification accuracy (%) on MiniImageNet and CUB datasets.

Our feature enhancement scheme employs a careful strategy in data preprocessing and enhancement by gradually adjusting the enhancement magnitude of each sample so that each sample undergoes multiple changes at different intensities, thus generating more diverse data. This stepwise enhancement strategy enhances the diversity of data and facilitates the model’s capture of fine-grained differences and variations among samples. In this way, the model is able to extract more accurate features from diverse training data, which significantly improves its adaptability and learning ability for the learning task with fewer samples.

The experimental findings support the efficacy of our proposed method. On the MiniImageNet dataset, the feature enhancement scheme improves accuracy by 0.22% and 0.53% in 1-shot and 5-shot tasks, respectively, compared to the other strategies. On the CUB dataset, the corresponding improvements are 0.45% and 0.11%. These results suggest that progressively adjusting enhancement strength and including subtle sample variations yields small but consistent gains in Few-Shot Learning tasks.

4.4.4. The Computational Impact of Time Complexity

To quantitatively evaluate the impact of the Top-t sparsification mechanism on the computational complexity and inference efficiency of the Transformer-based relation network, we conduct an ablation study by comparing the Vanilla Transformer relation network with its Top-t-equipped variant and report FLOPs, parameter count, and processing time, as summarized in Table 5.

Table 5.

Model complexity and processing time comparison of the Transformer relation network.

The results show that the number of parameters remains unchanged for both variants, since Top-t sparsification only alters the retention pattern of attention connections and does not change the dimensions or the number of weights in the projection matrices $W_{Q}$, $W_{K}$, $W_{V}$, and $W_{O}$. Meanwhile, the FLOPs decrease only slightly (Table 5), indicating a limited overall change. This is mainly because the scoring stage still computes attention logits against all prototypes/keys to enable Top-t selection, and thus the dominant computation is preserved, which bounds the potential FLOP reduction. The observed FLOPs decrease primarily comes from the post-selection stages, where the softmax normalization and the value-weighted aggregation are carried out on the selected Top-t subset rather than the full dimension, reducing the non-dominant exponential and multiply–accumulate operations.

In contrast, the processing time decreases more noticeably (Table 5), corresponding to about a 1.39× speedup. This gain mainly arises after Top-t selection: the attention weights are normalized only over the selected t entries, and the output is aggregated using only the corresponding values, which reduces practical memory traffic and cache pressure. Overall, the ablation study indicates that Top-t sparsification improves inference efficiency while maintaining global scoring over all prototypes/keys in the Transformer relation network.
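Speedup and latency reduction are two views of the same measurement, related by reduction = 1 − 1/speedup. The sketch below only illustrates this identity for the 1.39× speedup mentioned in the text; the measured processing times themselves are not reproduced here, and the function names and the example times passed to `speedup` are hypothetical.

```python
def speedup(time_before, time_after):
    """How many times faster the new variant is."""
    return time_before / time_after

def latency_reduction(speedup_factor):
    """Fraction of the original latency that is saved."""
    return 1.0 - 1.0 / speedup_factor

# For the reported 1.39x speedup, the implied fractional latency saving:
implied_reduction = latency_reduction(1.39)

# Hypothetical times in arbitrary units, chosen only to show the round trip.
example_speedup = speedup(1.39, 1.00)
```

Under this identity, a 1.39× speedup corresponds to saving a little over a quarter of the original latency.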

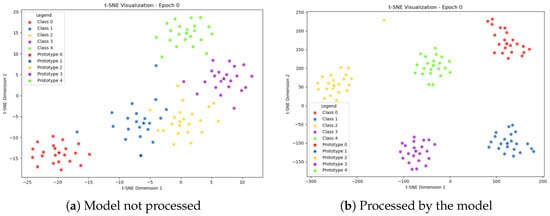

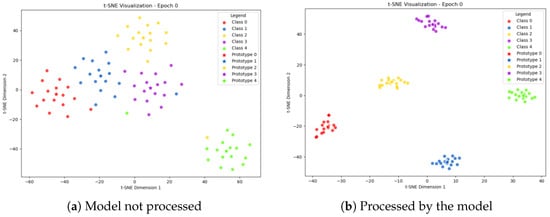

4.5. Visualization

Beyond the quantitative analysis, we additionally conducted low-dimensional visualization of the extracted features using t-SNE to further validate the internal states of the FC-SBAAT model, as shown in Figure 6 and Figure 7. In these visualization experiments, we randomly selected five new classes, each containing 21 embedded features. We present the t-SNE visualization results before and after feature enhancement and prototype optimization for the 1-shot and 5-shot tasks on the MiniImageNet and CUB datasets. On the left side of each subplot, the original feature distribution without model processing is shown, while the right side shows the optimized feature distribution after feature enhancement and prototype calibration.

Figure 6. MiniImageNet tasks.

Figure 7. CUB tasks.

In Figures 6 and 7, the left subfigures show the results without task-related feature enhancement and prototype optimization. The prototypes of each class are scattered and fail to capture class centers or distinguish inter-class differences, indicating that the raw features lack sufficient discriminative power for class separation. In contrast, the right subfigures show the outcomes after applying the proposed feature enhancement and prototype optimization modules: the prototypes become more compact and representative, and the separability between classes improves markedly. This visual evidence supports the claim that FC-SBAAT enhances inter-class separability and prototype representativeness and strengthens the model’s adaptability to task-specific structures, which in turn helps the model generalize more accurately and robustly when classifying novel categories.

5. Conclusions

We propose a novel Few-Shot classification method, FC-SBAAT, which is based on a Feature-Cooperative Prototype Optimization Strategy and a Task-Adaptive Sparse Bias-Aware Transformer Strategy. By integrating contextual information into intra-class relationship modeling, maximizing intra-class similarity, and maximizing inter-class differences, this method optimizes class prototypes and yields more representative and discriminative prototype representations. Additionally, it uses Bias-Aware Attention to guide the model in learning task-relevant features, thereby enhancing classification performance.

We performed extensive experiments on the widely adopted MiniImageNet and CUB datasets. FC-SBAAT achieves 55.71% and 73.87% accuracy on the 1-shot and 5-shot tasks on MiniImageNet and 70.37% and 83.86% on CUB, outperforming the compared methods in both settings. These findings highlight FC-SBAAT’s advances in prototype quality, model performance, and robustness. Notably, FC-SBAAT demonstrates strong inter-class separability even with extremely limited labeled data.

Furthermore, we conducted detailed ablation studies to evaluate the contribution of each module. The results indicate that both the Multi-Head Attention mechanism and contrastive learning play key roles in feature enhancement and prototype refinement. The adaptive feature fusion mechanism dynamically adjusts feature weights, further improving model flexibility and robustness, which leads to significantly enhanced task performance.

Author Contributions

M.W.: conceptualization, methodology, and writing—original draft; C.Y.: conceptualization, methodology, supervision, writing—review and editing, literature review, resources, formal analysis, data analysis, and interpretation; L.S.: conceptualization and research framework design; J.L.: data collection, data curation (image preparation), and methodology (optimization); S.T.: formal analysis, investigation, and validation. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was funded by the authors.

Data Availability Statement

This study uses publicly available datasets. The MiniImageNet dataset is available at https://image-net.org/download.php (accessed on 1 November 2025). The CUB (CUB-200-2011) dataset is available at https://www.vision.caltech.edu/datasets/cub_200_2011/ (accessed on 1 November 2025).

Conflicts of Interest

The authors declare no conflicts of interest.
