Abstract

In off-road scenes, segmentation targets exhibit significant scale progression due to perspective depth effects from oblique viewing angles, meaning that the size of the same target undergoes continuous, boundary-less progressive changes along a specific direction. This asymmetric variation disrupts the geometric symmetry of targets, causing traditional segmentation networks to face three key challenges: (1) inefficient capture of continuous-scale features, where pyramid structures and multi-scale kernels struggle to balance computational efficiency with sufficient coverage of progressive scales; (2) degraded intra-class feature consistency, where local scale differences within targets induce semantic ambiguity; and (3) loss of high-frequency boundary information, where feature sampling operations exacerbate the blurring of progressive boundaries. To address these issues, this paper proposes the ProCo-NET framework for systematic optimization. Firstly, a Progressive Strip Convolution Group (PSCG) is designed to construct multi-level receptive field expansion through orthogonally oriented strip convolution cascading (employing symmetric processing in horizontal/vertical directions) integrated with self-attention mechanisms, enhancing perception capability for asymmetric continuous-scale variations. Secondly, an Offset-Frequency Cooperative Module (OFCM) is developed wherein a learnable offset generator dynamically adjusts sampling point distributions to enhance intra-class consistency, while a dual-channel frequency domain filter performs adaptive high-pass filtering to sharpen target boundaries. These components synergistically solve feature consistency degradation and boundary ambiguity under asymmetric changes. 
Experiments show that this framework significantly improves the segmentation accuracy and boundary clarity of multi-scale targets in off-road scene segmentation tasks: it achieves 71.22% MIoU on the standard RUGD dataset (0.84% higher than the existing optimal method) and 83.05% MIoU on the Freiburg_Forest dataset. Among them, the segmentation accuracy of key obstacle categories is significantly improved to 52.04% (2.7% higher than the sub-optimal model). This framework effectively compensates for the impact of asymmetric deformation through a symmetric computing mechanism.

1. Introduction

Image segmentation in off-road environments is a core task of complex scene visual perception, aiming to accurately separate drivable areas, obstacles, and dynamic targets (such as pedestrians and vehicles) from off-road images of unstructured terrains (such as mud, rocks, and vegetation), providing key environmental understanding capabilities for autonomous driving [] and field robot navigation [,,]. In autonomous off-road vehicles, this technology constructs real-time semantic maps of passable areas, serving as direct evidence for path planning and obstacle avoidance decisions. It ensures that vehicles can safely traverse complex terrains with variable conditions and no clear road signs, such as jungle trails, riverbanks, and steep slopes. For field exploration or rescue robots, refined segmentation enables them to accurately identify and avoid static hazards like rocks and gullies while promptly detecting dynamic threats (e.g., wild animals). Moreover, it allows for reliable autonomous positioning and navigation in extreme environments where GPS signals are unavailable. This technology can enhance the decision-making robustness and safety of systems on rough roads, extreme climates, and occluded conditions through real-time terrain analysis, thus generating important values for scenarios such as field rescue, military reconnaissance, and extreme environment surveys. In recent years, with the breakthrough of efficient semantic segmentation architectures based on large-kernel convolutions and the innovation of off-road segmentation networks integrating attention mechanisms, existing technologies have significantly improved the real-time performance of segmentation in unstructured environments and the accuracy of path planning, promoting the application of related intelligent systems for off-road environments from laboratories to real complex scenes.

In the field of image segmentation in off-road environments, existing methods mainly focus on overcoming the core challenges of complex terrain features [,,] and solving the lack of large-scale pixel-level annotations [,]. In terms of overcoming complex terrain features, Rahi et al. [] proposed a hybrid architecture based on the ResNet34 encoder and U-Net decoder, using a feature extraction mechanism combining residual learning and multi-level convolutions, as well as a feature fusion mechanism based on skip connections, to achieve the fine-grained capture of multi-scale features and robustness to dynamic environments in complex terrains; the FGSN network [] realizes fine-grained segmentation of blurred terrain boundaries through self-supervised learning and a dual-branch architecture based on multi-scale attention, using k-means clustering and a feature capture mechanism based on a hierarchical adaptive module. Meanwhile, to address the problem of insufficient pixel-level annotations due to relying only on image-level labels in weakly supervised semantic segmentation, Ahn et al. [] designed the AffinityNet network to predict the semantic similarity of adjacent pixels and used a random walk algorithm to achieve correct pixel classification based on the similarity of adjacent pixels, significantly reducing the dependence on large-scale pixel-level annotations while ensuring segmentation accuracy. Further, Gao et al. [] proposed a framework based on contrastive learning and active learning, using weakly annotated image patches to construct contrastive pairs and screening high-risk frames for supplementary annotation through risk assessment, achieving fine-grained segmentation of images with a small number of weakly annotated frames.

Currently, in semantic segmentation tasks for off-road scenes with real-time constraints, achieving a balanced optimization between segmentation accuracy and inference speed [,] has become a core research challenge. The OFFSEG segmentation network [] addresses this by employing category pooling to merge the 20/24 classes of the RELLIS-3D and RUGD datasets into four major categories (sky, navigable terrain, non-navigable terrain, and obstacle) while introducing color segmentation (K-means clustering) within navigable regions, thereby reducing model complexity and mitigating class imbalance while preserving fine-grained segmentation requirements in off-road environments. Sharma et al. [] proposed a lightweight network architecture that halves the number of convolutional and deconvolutional layers in DeconvNet (reduced from 13 to 7 layers), combined with fine-tuning using synthetic data as an intermediate domain, significantly reducing computational complexity while enhancing generalization to real off-road scenes. GA-Nav [] addresses computational demands during feature fusion by unifying multi-scale features to lower resolutions while introducing component attention loss to compensate for accuracy degradation, achieving a substantial computational reduction without compromising segmentation precision. To systematically clarify the technical evolution and characteristics of existing semantic segmentation methods in off-road scenarios, seven key studies are summarized and compared in Table 1. This table constructs a comprehensive academic context from five dimensions, aiming to provide a clear reference for understanding the advantages and limitations of different approaches in addressing off-road segmentation challenges. The specific content of the table is as follows:

Table 1.

Comparative analysis of semantic segmentation methods.

Although off-road segmentation methods [,,] based on multi-scale attention mechanisms, self-supervised learning [,], and lightweight design [,] have significantly improved scene parsing capabilities in complex terrains, existing networks still struggle to effectively address the fundamental challenge of target scale progression. Traditional multi-scale methods (such as feature pyramids, multi-scale convolution kernels, or dilated convolutions) have fundamental limitations in terms of handling the continuous and boundary-blurred scale gradients unique to off-road scenes: their discretized hierarchical design (such as fixed-resolution pyramid levels or preset dilation rates) leads to a step-like break in scale coverage, making it impossible to smoothly capture the stepless continuous changes in target size along specific directions. At the same time, there is an irreconcilable contradiction between expanding the scale coverage (such as increasing pyramid levels or multiple branches) and computational efficiency; excessively stacking levels significantly increases the computational burden, while simplifying the structure will inevitably lead to the insufficient capture of continuous gradient-scale features. Ultimately, this makes it difficult for the model to effectively adapt to asymmetric progressive deformation caused by the perspective depth effect. In off-road segmentation task scenes, the scale progression inherent in the targets constitutes the core characteristic that triggers a series of detection and segmentation difficulties. This property manifests through pronounced depth-of-field effects under oblique viewing angles, causing various targets—including traversable areas of varying widths—to exhibit asymmetric scale gradation, wherein their dimensions undergo continuous, boundary-less progressive changes along specific directions. 
Such directional asymmetric transitions pose multiple severe challenges to target segmentation, which are specifically outlined below.

(1) Low efficiency in capturing continuous-scale features: Traditional segmentation networks are limited by fixed-scale designs and adapt poorly to such gradual changes in scale. Because these networks are usually optimized for specific scale ranges, their receptive fields and feature extraction mechanisms are inherently ill-suited to continuously varying scales. To address this, existing methods commonly use multi-level structures such as feature pyramids [] or multi-scale kernels, attempting to cover a wider scale range by combining features from different levels; dilated convolutions with different dilation rates [] are also adopted. However, the number of stacked pyramid levels trades segmentation accuracy against computational efficiency. Adding levels covers a wider scale range but significantly increases computational complexity, making real-time requirements difficult to meet; removing levels leaves some scales uncovered, so feature fusion is insufficient and segmentation accuracy drops. Similarly, dilated convolutions discretize scale coverage: their fixed dilation rates cannot capture features smoothly across a continuous range of scales, which limits segmentation performance.

(2) Degradation of intra-class feature consistency: The scale gradient of targets also causes rapid changes in the features of different regions within the same target, reducing the similarity of different regions within the class. During the continuous-scale change in the target, different positions within it may correspond to different local scales, and such differences in local scales would cause features to show rapid changes within the target. For example, a target gradually transitioning from a smaller scale to a larger scale may have significantly different feature representations in its edge and central regions due to different local scales. This phenomenon makes traditional segmentation methods based on holistic category features ineffective at capturing the overall consistency of targets, leading to the misclassification of regions within the same target—where significant feature differences arise due to scale gradation—as distinct categories, thereby severely degrading the segmentation performance of objects.

(3) Loss of high-frequency boundary information: The scale gradient also easily causes high-frequency information loss and boundary blurring during feature fusion. When processing targets with continuous-scale changes, simple upsampling strategies (such as bilinear interpolation) commonly used in feature pyramids struggle to accurately handle the gradient differences between features of different scales. This upsampling operation amplifies the inconsistency of features at different levels during the scale gradient and has an excessive smoothing effect on the high-frequency detail information of boundary regions. Specifically, as the target scale gradually changes, the features at its boundaries also show a gradient transition characteristic, while simple upsampling cannot accurately retain the high-frequency details of this gradient change, making the representation ability of the fused features in the target boundary region decline, thereby affecting the accuracy of the segmentation.

Aiming to solve the above problems caused by the target scale gradient, this study proposes the ProCo-NET framework. First, to address the problem of low efficiency in capturing continuous-scale features [], we designed a Progressive Strip Convolution Group (PSCG), which decomposes large-kernel convolutions into multiple groups of orthogonal strip convolutions (vertical and horizontal directions) to construct a cascaded multi-level receptive field expansion mechanism. The symmetrical design in orthogonal directions enhances the model’s continuous perception capability across scales, effectively compensating for feature fragmentation caused by asymmetric scale variations, thereby enabling the capture of continuously scaled targets in off-road environments. Second, to address the problems of intra-class feature dispersion and boundary blurring, we proposed an Offset-Frequency Cooperative Module (OFCM). (1) We introduced a learnable offset generator, which predicts the spatial offset and direction between the convolution sampling points of the feature map and the target through coordinate attention, driving the convolution kernel to dynamically adjust the distribution of sampling points in the key regions within the target, reducing ambiguity arising from asymmetric boundaries or intra-class inconsistent regions, and establishing a receptive field covering the global morphology and local details of the target. (2) We constructed a dual-channel frequency domain filter, which uses discrete Fourier transform to separate high-level features into low-frequency overall structure and high-frequency detail components, and in the feature fusion stage, it selectively enhances boundary high-frequency signals through an adaptive high-pass filter, thereby enhancing the high-frequency part above the Nyquist frequency and sharpening the boundary, obtaining clearer boundary details. 
The OFCM module significantly enhances feature fusion quality in asymmetric scenarios through a symmetrical collaborative mechanism between spatial and frequency domains. Our contributions are as follows:

(1) Aiming to solve the inefficient capture of continuous-scale features caused by the scale gradient in off-road environments, a Progressive Strip Convolution Group (PSCG) is proposed, which decomposes large-kernel convolutions into multiple groups of orthogonal strip convolutions and constructs a cascaded multi-level receptive field expansion mechanism to achieve the efficient capture of multi-scale features. Compared with traditional pyramid or multi-scale kernel structures, this method improves the model’s fine perception ability for continuous-scale changes in off-road environments while reducing computational complexity.

(2) We also designed an Offset-Frequency Cooperative Module (OFCM), including a learnable offset generator and a dual-channel frequency domain filter. The former dynamically adjusts the sampling point distribution of the convolution kernel through the coordinate attention mechanism, enhancing the model’s adaptability to the internal structure changes in targets caused by the scale gradient in off-road environments; the latter uses an adaptive high-pass filter to achieve the adaptive enhancement of high-frequency details, effectively improving the feature expression of boundary regions.

(3) The ProCo-NET network achieves 71.22% MIoU on the RUGD dataset, an increase of 2–10% compared with other networks, and reaches 83.05% MIoU on the Freiburg_Forest dataset, with an obstacle accuracy of 52.04%, an increase of 2.7%, significantly optimizing the problems of intra-class consistency and boundary blurring.

2. Literature Review

2.1. Multi-Scale Semantic Segmentation Networks

The core challenge in semantic segmentation models lies in balancing the extraction efficiency of spatial details and global context. Early work such as FCN [] addressed the issue of reduced image dimensions caused by convolution and pooling during feature extraction by employing upsampling to restore image size. It utilized skip connections to fuse semantic information from deep coarse layers with appearance details from shallow fine layers, achieving pixel-level classification. However, its single-encoder architecture struggled to resolve detail loss in complex scenes. To overcome this, the BiSeNet series [] proposed a bilateral segmentation network. Through the parallel design of a spatial path (using small-stride convolutions to preserve details) and a context path (employing rapid downsampling to capture global information), it separately captured spatial details and global context while reducing convolution complexity and achieving higher inference speeds. DDRNet [] consists of two parallel branches: a depth branch and a semantic branch. The depth branch performs downsampling using three stride-2 convolution kernels without pooling operations to obtain high-resolution feature representations, while the semantic branch extracts richer global information through continuous downsampling based on the depth branch. Additionally, DDRNet designed a bilateral feature fusion module that combines features under multi-scale operations using bilinear interpolation and average pooling. HRNet [] tackled severe detail loss when recovering low-resolution representations to high-resolution feature maps by designing a framework with parallel connections from high-to-low resolutions. It continuously exchanges information between high- and low-resolution feature maps by fusing multi-resolution features at different network stages, ensuring high-resolution representations throughout the entire process. 
These approaches demonstrate that multi-branch collaboration and multi-scale feature fusion significantly enhance model adaptability to complex scenarios, such as multi-scale objects in off-road terrains.

In summary, existing networks based on multi-branch collaboration and multi-scale feature fusion have significantly improved adaptability to multi-scale targets in complex scenarios. However, they have inherent limitations in handling continuous-scale gradients in off-road scenes: pyramid or multi-scale convolution kernels discretely cover limited scale levels—increasing levels leads to high computational overhead, while reducing levels fails to smoothly capture continuous changes. Although DDRNet and HRNet maintain high resolution, their preset scale designs struggle to efficiently model gradient receptive fields. The core module of ProCo-NET, PSCG (Progressive Strip Convolution Group), overcomes this limitation: it decomposes large-kernel convolutions into orthogonal (horizontal/vertical) strip convolution cascades, constructing a continuously expanding multi-level receptive field mechanism. This symmetric design, combined with self-attention gating, enables the efficient and continuous perception of asymmetric scale changes, avoids feature discontinuities caused by discretization, and maintains low computational complexity.
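The complexity argument for strip decomposition can be made concrete with a small count of weights. The sketch below is illustrative only: it assumes depthwise kernels, ignores biases, and uses a channel width of 64 that is not taken from the paper. The kernel sizes 7, 11, and 21 are the strip lengths mentioned in Section 3.1.

```python
def dense_kernel_params(k: int, channels: int) -> int:
    """Weights in a depthwise k x k convolution (one k x k filter per channel)."""
    return channels * k * k


def strip_pair_params(k: int, channels: int) -> int:
    """Weights in a depthwise k x 1 convolution plus a depthwise 1 x k convolution."""
    return channels * 2 * k


if __name__ == "__main__":
    channels = 64  # illustrative channel width, an assumption
    for k in (7, 11, 21):
        dense = dense_kernel_params(k, channels)
        strips = strip_pair_params(k, channels)
        print(f"k={k:2d}: dense={dense:6d}  strips={strips:5d}  ratio={dense / strips:.1f}x")
```

Under these assumptions the saving grows linearly with the kernel size (a factor of k/2), which is why long strips remain affordable where a dense kernel of the same extent would not.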

2.2. Attention Mechanisms for Segmentation Tasks

The attention mechanism is an adaptive feature selection process that makes the network focus on important parts. When PSPNet [] and ASPP [] aggregate context information, the pixel information of different targets is mixed, so correct labels cannot be accurately assigned to each pixel, which limits segmentation accuracy. To address this, OCNet [] proposed an object semantic pooling mechanism: it first calculates the similarity between each pixel and all other pixels to generate an object semantic map for each pixel and then characterizes the pixel by similarity-weighted aggregation, enhancing the feature extraction and understanding of pixels of the same class. HyperSeg [] introduces dynamic parameter adjustment (Patch-wise Weight Shuffle) operations and channel attention enhancement modules so that convolution kernel parameters are adjusted dynamically according to the spatial position of the input data, yielding spatially varying decoder weights for adaptive, flexible convolution over local areas of the input. Attaching a single attention block at the end of the network cannot capture global features, while introducing Transformers as the encoder significantly increases network complexity and loses spatial details. To address both problems, UnetFormer [] proposed a UNet-like Transformer structure, which uses a four-level Resblock as the encoder and three global–local Transformer blocks with a feature refinement head as the decoder to keep the model lightweight. At the same time, by constructing a dual-branch structure of global–local attention in the decoder, it extracts sufficient global context information while retaining fine-grained local information. Peng et al. [] proposed a lightweight network structure, CF-Net, introducing two key modules: a channel attention optimization block and a cross-fusion block. The channel attention optimization block assigns global weights to each channel of the feature map through the channel attention mechanism so as to selectively amplify the features of certain channels; the cross-fusion block expands the context information of the low-level feature map by fusing the high-level feature map, improving the ability of the low-level feature map to capture small targets.

Existing attention mechanisms enhance the model’s focusing ability through adaptive feature selection. However, they remain insufficient in addressing intra-class inconsistency and boundary blurriness caused by scale gradients: similarity-based attention has limited ability to distinguish intra-class semantic ambiguity and incurs high computational overhead, and dynamic convolution or complex Transformers may introduce heavy burdens or smooth boundary details. The OFCM module in our ProCo-NET effectively addresses these challenges: its learnable offset generator dynamically adjusts the distribution of sampling points using coordinate attention, aggregates intra-class consistent regions, and significantly alleviates feature dispersion; its dual-channel frequency domain filter selectively enhances high-frequency components of boundaries through Fourier transform and adaptive high-pass filtering, sharpens blurry boundaries, and avoids the high cost of global attention.

3. Methodology

In this section, we describe the proposed ProCo-NET architecture in detail. Overall, we adopt an encoder–decoder architecture that follows most previous works. The encoder comprises four stages with progressively reduced spatial resolutions of H/4 × W/4, H/8 × W/8, H/16 × W/16, and H/32 × W/32, and a series of PSCG modules is stacked after each downsampling step. Here, H and W are the height and width of the input image, respectively. The decoding stage employs a bidirectional feature fusion path in which the low-level high-resolution features undergo dynamic sampling and frequency domain filtering through the Offset-Frequency Cooperative Module (OFCM). The two modules collaborate through a cascaded optimization link: the continuous-scale features extracted by PSCG are input into the OFCM for spatial alignment and high-frequency enhancement, and segmentation results with clear boundaries are finally output.

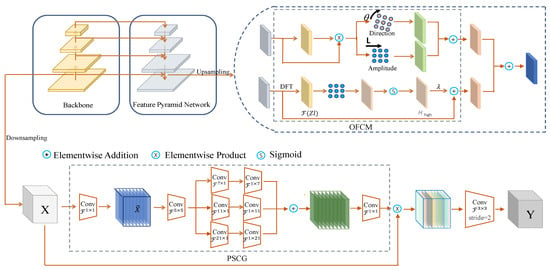

Aiming to solve the three core problems of low feature capture efficiency, intra-class semantic consistency degradation, and high-frequency boundary information loss caused by the target scale gradient in off-road image segmentation, we propose the ProCo-NET dual-module collaborative optimization framework. Specifically, as shown in Figure 1, in the encoder stage, the PSCG module is used to decompose large-kernel convolutions into multi-scale strip convolution kernels through orthogonal decomposition, constructing a cascaded mechanism to achieve cross-direction continuous-scale perception; then, in the decoder stage, a bottom-up feature fusion path is adopted: deep low-resolution features are gradually fused with shallow high-resolution features through the cascaded OFCM module. The OFCM module adopts a dual-path parallel strategy: the feature resampling mechanism realizes spatial adaptive alignment, eliminating semantic ambiguity between multi-scale features, and the adaptive high-pass filter captures boundary high-frequency components. The two modules form a cascaded optimization link; the multi-scale continuous features extracted by PSCG are input into OFCM, the semantic ambiguity is eliminated through spatial domain resampling, then the boundary high-frequency signals above the Nyquist frequency are enhanced through frequency domain filtering, and finally the segmentation results with strong class consistency and clear boundaries are output. All modules are detailed below.

Figure 1.

Overall structure of ProCo-NET. This end-to-end framework integrates the Progressive Strip Convolution Group (PSCG), which decomposes large kernels into multi-scale orthogonal strips for continuous-scale perception, and the Offset-Frequency Cooperative Module (OFCM), which combines dynamic offset sampling with adaptive frequency domain filtering to sharpen boundaries and enhance intra-class consistency.

3.1. Encoder Module

Traditional CNN models (such as the DeepLab series [,,]) perform well in local feature extraction, but due to the locality of convolution kernels and the downsampling of pooling layers, which lead to the limitation of receptive fields and the loss of information, it is difficult to effectively capture global context information, thus limiting their performance in scenarios with a scale gradient. While Transformer models [,,,,,] have made breakthroughs in modeling global dependencies through self-attention mechanisms and have a certain ability to adapt to scale gradients, their computational complexity increases quadratically with image size, resulting in low efficiency when processing high-resolution images.

In order to adapt to the gradual change in image scale in off-road scenes and meet their timeliness requirements, as shown in Figure 1, in the encoder stage, we proposed a Progressive Strip Convolution Group (PSCG) that integrates a self-attention mechanism. The specific construction of this module is as follows: First, after using depth convolution to gather local information, we further extract features in two dimensions. In the spatial dimension, we employed multi-branch depth strip convolution to capture the context of different scales. In the channel dimension, we compress the information of the feature map into attention weights through 1 × 1 convolution to obtain an integrated feature representation of global and local information. Then, we use the obtained feature information as attention weights to weight the input of PSCG so that the model can dynamically adjust the degree of attention to different regions and enhance the feature expression ability. Specifically, the mathematical expression form of PSCG is as follows:

$$\mathrm{Att} = \mathrm{Conv}_{1\times 1}\left(\sum_{i} \mathrm{Scale}_i\big(\text{DW-Conv}(F)\big)\right), \qquad \mathrm{Out} = \mathrm{Att} \otimes F$$

where F represents the input feature. Att and Out are the attention map and output, respectively. ⊗ is the element-wise matrix multiplication operation, DW-Conv represents depth convolution, and $\mathrm{Scale}_i$ represents the i-th branch of the PSCG module. The input feature F is first passed through a depth convolution (DW-Conv) to extract local features. This operation performs convolution on each input channel separately, reducing computational complexity while retaining detail information. Then, the resulting feature is sent to multiple branches, with each branch using depth strip convolution kernels of different scales (such as 7 × 1 and 1 × 7, 11 × 1 and 1 × 11, 21 × 1 and 1 × 21) to capture context information of different scales and achieve the extraction of gradient-scale features. Next, the outputs of the branches are fused through a 1 × 1 convolution layer to generate an attention weight map, highlighting important features and suppressing secondary information. Finally, the generated attention weight map is combined with the input feature F through element-wise matrix multiplication (⊗) to obtain the final output feature Out. This design enables the PSCG module to capture multi-scale spatial information while maintaining efficiency and realizes the enhancement of gradient features through cross-scale attention interactions, significantly improving the robustness of the feature extraction for continuously changing scale targets.
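The steps described above (a depthwise convolution, parallel strip-convolution branches, a 1 × 1 fusion, and element-wise reweighting of F) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the 5 × 5 local kernel, summation as the way branch outputs are combined, the random weights, and all function names are hypothetical.

```python
import numpy as np


def depthwise_conv(x, kernel):
    """Depthwise 'same' convolution (cross-correlation, as in deep learning).

    x: (C, H, W) float feature map; kernel: (C, kh, kw) with odd kh and kw.
    """
    _, h, w = x.shape
    _, kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    out = np.zeros(x.shape, dtype=float)
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[:, dy, dx][:, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def pointwise_conv(x, weight):
    """1 x 1 convolution: mixes channels at every pixel. weight: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def init_params(channels, strip_sizes=(7, 11, 21), seed=0):
    """Random weights standing in for learned parameters."""
    rng = np.random.default_rng(seed)
    params = {
        "local": rng.normal(0, 0.1, (channels, 5, 5)),  # 5 x 5 size is an assumption
        "mix": rng.normal(0, 0.1, (channels, channels)),
    }
    for k in strip_sizes:
        params[f"h{k}"] = rng.normal(0, 0.1, (channels, 1, k))  # 1 x k strip
        params[f"v{k}"] = rng.normal(0, 0.1, (channels, k, 1))  # k x 1 strip
    return params


def pscg_attention(f, params, strip_sizes=(7, 11, 21)):
    """Reweight the input f with an attention map built from strip branches."""
    base = depthwise_conv(f, params["local"])              # local aggregation
    fused = np.zeros(f.shape, dtype=float)
    for k in strip_sizes:
        branch = depthwise_conv(base, params[f"h{k}"])     # horizontal strip
        branch = depthwise_conv(branch, params[f"v{k}"])   # vertical strip
        fused += branch                                    # summation is an assumption
    att = pointwise_conv(fused, params["mix"])             # 1 x 1 fusion
    return att * f                                         # element-wise reweighting
```

Each branch covers a k × k neighborhood with only 2k weights per channel, and the output keeps the shape of the input, so the block can be stacked within an encoder stage.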

3.2. Decoder Module

In multi-scale image prediction tasks, traditional feature fusion methods, although widely used, have two significant problems: intra-class inconsistency and boundary displacement. Intra-class inconsistency originates from the rapid change in feature values within the same object, leading to a decrease in intra-class similarity; boundary displacement is due to the loss of high-frequency information during feature fusion, making the boundary blurry. In response, we proposed a new feature fusion module, Offset-Frequency Cooperative Module (OFCM), which is mainly composed of an offset generator and a dual-channel frequency domain filter. The offset generator solves large-scale inconsistent regions by resampling target features, and the dual-channel filter mainly overcomes the problem of boundary blurring through a high-frequency filter. The two key components are described in detail below.

3.2.1. Learnable Offset Generator

First, based on the observation that features with low intra-class similarity often have neighboring features with high intra-class similarity, the first step of the offset generator is to calculate the cosine similarity between adjacent pixels:

$$S_{i,j,p,q} = \frac{\sum_{a} Z^{l}_{a,i,j}\, Z^{l}_{a,i+p,j+q}}{\sqrt{\sum_{a} \big(Z^{l}_{a,i,j}\big)^{2}}\;\sqrt{\sum_{a} \big(Z^{l}_{a,i+p,j+q}\big)^{2}}}$$

where $S$ contains the cosine similarity between each pixel and its 8 adjacent pixels, which guides the offset generator to sample towards features with high intra-class similarity, thereby reducing the blurriness of the class-inconsistent regions. $Z^{l}_{a,i,j}$ represents the feature value of channel a at position (i, j) in the l-th layer feature map; $Z^{l}_{a,i+p,j+q}$ represents the feature value of the adjacent position after offset (p, q); and the output $S_{i,j}$ represents the cosine similarity matrix of the 8-neighborhood direction at position (i, j).
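The 8-neighborhood cosine similarity described above can be sketched in NumPy as follows. The function name, the small `eps` stabilizer, and the zero-padding at the image border are assumptions made for illustration.

```python
import numpy as np

# Offsets (p, q) of the 8 neighbours of a pixel, excluding (0, 0).
NEIGHBOUR_OFFSETS = [(p, q) for p in (-1, 0, 1) for q in (-1, 0, 1) if (p, q) != (0, 0)]


def neighbour_cosine_similarity(z, eps=1e-8):
    """Cosine similarity between each pixel and its 8 neighbours.

    z: (C, H, W) feature map. Returns an array of shape (8, H, W) whose n-th
    slice compares z[:, i, j] with z[:, i + p, j + q] for the n-th offset
    (p, q). Neighbours outside the map are zero vectors, so their similarity
    is 0 (a border-handling assumption).
    """
    _, h, w = z.shape
    # Normalise every pixel's channel vector to unit length.
    unit = z / (np.linalg.norm(z, axis=0, keepdims=True) + eps)
    padded = np.pad(unit, ((0, 0), (1, 1), (1, 1)))
    sims = []
    for p, q in NEIGHBOUR_OFFSETS:
        shifted = padded[:, 1 + p:1 + p + h, 1 + q:1 + q + w]
        sims.append((unit * shifted).sum(axis=0))  # dot product of unit vectors
    return np.stack(sims)
```

Values near 1 mark neighbours whose features point in the same direction as the centre pixel; these are the directions in which resampling is expected to improve intra-class consistency.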

After the cosine similarity between adjacent pixels has been obtained, the offset generator takes the fused feature $Z^{l}$ produced by feature pyramid fusion and the similarity $S^{l}$ as inputs to predict the offset. It consists of two 3 × 3 convolution layers that predict the offset direction and the offset scale, expressed as follows:

$$D^{l} = \mathrm{Conv}_{3\times3}\left(\mathrm{Concat}\left(Z^{l}, S^{l}\right)\right),\qquad A^{l} = \mathrm{Sigmoid}\left(\mathrm{Conv}_{3\times3}\left(\mathrm{Concat}\left(Z^{l}, S^{l}\right)\right)\right),\qquad O^{l} = D^{l} \cdot A^{l}$$

where $D^{l} \in \mathbb{R}^{H \times W \times 2G}$ represents the offset direction, $A^{l} \in \mathbb{R}^{H \times W \times 2G}$ controls the size of the offset, and $O^{l} \in \mathbb{R}^{H \times W \times 2G}$ represents the final predicted offset of each pixel of the high-level feature. $G$ is the number of offset groups; we divide the features into groups and allocate a separate spatial offset to each group for more fine-grained resampling. The first convolution layer predicts the offset direction: it learns the distribution of features in the training data, identifies which directions are more likely to contain features with high intra-class similarity, and outputs the direction prediction feature map. The second convolution layer predicts the offset scale through the Sigmoid activation function, which limits the output to between 0 and 1 and thereby controls the size of the offset. In this way, by independently predicting the offset direction and offset size for each group of features, features with low intra-class similarity are shifted towards, and replaced by, features with high intra-class similarity, which makes the target consistent as a whole.
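To make the direction-times-scale idea concrete, the following sketch gates a raw direction with a sigmoid and resamples a single-channel map by bilinear interpolation. It is a simplified illustration under stated assumptions: a single offset group, hand-written directions in place of convolution outputs, border clamping, and hypothetical function names.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def combine_offset(direction, scale_logit):
    """Final offset = direction * sigmoid(scale logit), applied to (dy, dx)."""
    gate = sigmoid(scale_logit)  # in (0, 1); shrinks the raw direction
    return (direction[0] * gate, direction[1] * gate)


def bilinear_sample(feat, y, x):
    """Sample a single-channel map at a fractional position, clamped to the border."""
    height, width = len(feat), len(feat[0])
    y = min(max(y, 0.0), height - 1.0)
    x = min(max(x, 0.0), width - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, height - 1), min(x0 + 1, width - 1)
    wy, wx = y - y0, x - x0
    top = feat[y0][x0] * (1 - wx) + feat[y0][x1] * wx
    bottom = feat[y1][x0] * (1 - wx) + feat[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


def resample(feat, directions, scale_logits):
    """Resample every pixel at its own predicted offset."""
    out = []
    for i, row in enumerate(feat):
        out_row = []
        for j, _ in enumerate(row):
            dy, dx = combine_offset(directions[i][j], scale_logits[i][j])
            out_row.append(bilinear_sample(feat, i + dy, j + dx))
        out.append(out_row)
    return out


feat = [[0.0, 0.0, 10.0],
        [0.0, 0.0, 10.0]]
# Every pixel is told to look one pixel to the right ...
directions = [[(0.0, 1.0)] * 3 for _ in range(2)]
# ... with a scale logit of 0, so the gate is sigmoid(0) = 0.5.
scale_logits = [[0.0] * 3 for _ in range(2)]
resampled = resample(feat, directions, scale_logits)
print(resampled[0])
```

With a gate of 0.5, each pixel samples half a pixel to its right, so the middle column takes a value halfway between its own feature and that of its right neighbor. A learned scale would let each pixel decide how far to move along its predicted direction.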

3.2.2. Dual-Channel Frequency Domain Filter

In traditional feature fusion, the linear upsampling strategy of the feature pyramid tends to lose high-frequency detail and blur boundary information, especially in the boundary transition regions of targets with gradual scale changes. We therefore propose a dual-channel frequency domain filter, which operates as follows. First, we use the discrete Fourier transform (DFT) to transform the feature map $X$ into its frequency domain representation $X_F$, which is expressed as follows:

$$X_F(p, q) = \frac{1}{HW}\sum_{h=0}^{H-1}\sum_{w=0}^{W-1} X(h, w)\, e^{-2\pi \mathrm{j}\,(ph + qw)}$$

where $X_F$ represents the complex array output by the DFT, $H$ and $W$ represent the height and width of the feature map, and $h$ and $w$ represent the coordinates of the feature map $X$. The normalized frequencies in the height and width dimensions are given by $|p|$ and $|q|$.
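As a rough illustration of what the frequency domain representation captures, the sketch below evaluates a 2D DFT by direct summation on a tiny map containing a vertical edge. The $1/(HW)$ normalization, the integer frequency indices `u` and `v` (normalized frequencies are `u / H` and `v / W`), and the function name are assumptions; a real implementation would use an FFT library instead of this quadratic-time loop.

```python
import cmath


def dft2(x):
    """Normalized 2D DFT of a real H x W map, by direct summation."""
    height, width = len(x), len(x[0])
    out = [[0j] * width for _ in range(height)]
    for u in range(height):
        for v in range(width):
            total = 0j
            for h in range(height):
                for w in range(width):
                    phase = -2j * cmath.pi * (u * h / height + v * w / width)
                    total += x[h][w] * cmath.exp(phase)
            out[u][v] = total / (height * width)
    return out


# A 4 x 4 map with a vertical edge between columns 1 and 2.
edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
spectrum = dft2(edge)

dc = spectrum[0][0]               # zero-frequency term: the mean of the map
horizontal = abs(spectrum[0][1])  # lowest non-zero frequency along the width
vertical = abs(spectrum[1][0])    # lowest non-zero frequency along the height
print(round(dc.real, 3), round(horizontal, 3), round(vertical, 3))
```

The edge varies only along the width, so its energy appears in the horizontal frequencies while the vertical ones stay at zero. This is the kind of directional boundary information that a high-pass filter is meant to keep.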

Here, we choose the discrete Fourier transform (DFT) for its combined advantages over the DCT and the wavelet transform. The complex-valued representation of the DFT provides excellent rotation/translation invariance and can effectively retain the directional features of boundaries with arbitrary orientations in off-road scenes (such as inclined paths), whereas the real-valued basis functions of the DCT are more sensitive to orientation. Meanwhile, its computational efficiency is significantly higher than that of the wavelet transform, which helps meet real-time requirements. In addition, the global frequency view of the DFT combined with an adaptive high-pass filter enhances the high-frequency components of boundaries more directly and uniformly, avoiding the complexity of selecting wavelet bases and decomposition levels. We therefore regard the DFT as the best choice in terms of directional robustness, efficiency, and the directness of boundary enhancement.

Next, downsampling permanently discards frequency content above the Nyquist frequency. To compensate for this loss, we take the $Z^{l}$ obtained after feature pyramid fusion as the input and learn a spatially varying high-pass filter, which is composed of a 3 × 3 convolution layer, a softmax layer, and a filter inversion operation, expressed as follows:

$$\bar{V}^{l} = \mathrm{Conv}_{3\times3}\left(Z^{l}\right),\qquad \bar{W}^{l}_{i,j} = E - \mathrm{Softmax}\left(\bar{V}^{l}_{i,j}\right)$$

where $\bar{V}^{l} \in \mathbb{R}^{H \times W \times K^{2}}$ contains the initial kernel at each position $(i, j)$, and $K$ represents the kernel size of the high-pass filter. To ensure that the generated kernel $\bar{W}^{l}_{i,j}$ is a high-pass filter, we first use kernel-wise softmax to obtain a low-pass kernel and then subtract this kernel from the unit kernel $E$. When $K = 3$, the weights of the unit kernel $E$ are [[0,0,0], [0,1,0], [0,0,0]]. Finally, after applying the high-pass filter and adding the residual, we obtain the enhanced result, expressed as follows:

$$\hat{X}^{l}_{i,j} = X^{l}_{i,j} + \sum_{p,q} \bar{W}^{l,p,q}_{i,j}\, X^{l}_{i+p,j+q}$$

where $X^{l}$ is the feature map being enhanced and $(p, q)$ ranges over the $K \times K$ window centered at $(i, j)$.

In this way, through high-pass filtering and the residual connection, the dual-channel filter retains the high-frequency details of gradual changes, provides clearer boundary details, and improves the overall quality of the feature fusion.
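The construction of a high-pass kernel as the unit kernel minus a softmax-normalized low-pass kernel can be checked with a small sketch. The logits here are hand-written stand-ins for the convolution output, and the function names are hypothetical. Because the softmax weights sum to 1 and the unit kernel also sums to 1, the resulting kernel sums to 0 and therefore gives no response on constant regions.

```python
import math


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def high_pass_kernel(logits):
    """Turn 9 raw logits into a 3 x 3 high-pass kernel: E - softmax(logits)."""
    low_pass = softmax(logits)   # non-negative weights that sum to 1
    unit = [0.0] * 9
    unit[4] = 1.0                # unit kernel E: 1 at the center, 0 elsewhere
    return [e - lp for e, lp in zip(unit, low_pass)]


def enhance_pixel(patch, logits):
    """patch: the 9 values of a 3 x 3 window (row-major), center at index 4."""
    kernel = high_pass_kernel(logits)
    high_freq = sum(k * x for k, x in zip(kernel, patch))
    return patch[4] + high_freq  # residual connection


logits = [0.0] * 9               # uniform logits: the low-pass kernel is a box blur
flat_patch = [5.0] * 9           # constant region, no high-frequency content
edge_patch = [0.0, 0.0, 1.0,
              0.0, 1.0, 1.0,
              0.0, 1.0, 1.0]     # the center pixel sits on a boundary
flat_out = enhance_pixel(flat_patch, logits)
edge_out = enhance_pixel(edge_patch, logits)
print(flat_out, edge_out)
```

The flat patch passes through unchanged, while the pixel on the boundary is amplified, which is the sharpening behavior the residual high-pass branch is intended to provide.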

4. Experiments

4.1. Dataset and Technical Indicators

The RUGD dataset provides fine-grained semantic annotations for 24 categories and includes 5948 training images and 1488 validation images with an original resolution of 550 × 688. During training, the dataset is repeated 10 times through RepeatDataset, and pixel-level data augmentation is performed using random scaling (ratio 0.5 to 2.0), 512 × 512 random cropping, horizontal flipping (probability 0.5), and photometric distortion (brightness/contrast ± 30%). In the validation phase, the basic resolution of 1024 × 576 is maintained, and ResizeToMultiple is used to ensure that the output size is an integer multiple of 32. The dataset's rich category coverage (such as various terrains, vegetation, and man-made objects) and complex scenes reflect the challenges of target diversity and scale gradient in real off-road environments, making it a suitable benchmark for evaluating the fine-grained segmentation ability of the model.
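The size alignment can be sketched as simple arithmetic. The helpers below are hypothetical and only illustrate rounding each side up to the next multiple of 32; they are not the library's ResizeToMultiple code, and rounding up rather than down is an assumption.

```python
import math


def round_up_to_multiple(size, divisor=32):
    """Smallest multiple of `divisor` that is >= size."""
    return int(math.ceil(size / divisor)) * divisor


def aligned_shape(height, width, divisor=32):
    return round_up_to_multiple(height, divisor), round_up_to_multiple(width, divisor)


print(aligned_shape(576, 1024))  # validation size used in the paper
print(aligned_shape(550, 688))   # original RUGD resolution
```

The 1024 × 576 validation size is already a multiple of 32 on both sides, so alignment leaves it unchanged, whereas the original 550 × 688 images would need to be enlarged to 576 × 704.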

The Freiburg_Forest dataset focuses on five core off-road elements. In the training phase, 10 repeated samplings are also used, and the same augmentation process as RUGD is adopted; that is, random scaling to the basic size of 1024 × 576 followed by cropping, flipping, and photometric distortion. In the validation phase, a multi-scale strategy is used to maintain the aspect ratio of the image. While maintaining the resolution of 1024 × 576, the size of the feature map is strictly aligned to avoid spatial misalignment during decoding. This dataset pays special attention to the core elements of off-road navigation (especially obstacles), and its images contain significant perspective depth effects and continuous-scale changes, providing a targeted test platform for verifying the segmentation robustness of the model in safety-critical areas, such as drivable paths and obstacle boundaries.

4.2. Experimental Evaluation Metrics

In the image segmentation task, the model needs to accurately classify each pixel of the input image. However, traditional classification indicators (such as accuracy) would produce misleading high scores in the case of severe class imbalance and completely ignore the recognition quality of key boundaries and small targets, so it is difficult to directly reflect the effectiveness of pixel-level prediction. For this reason, the mean intersection over union (MIoU) is widely used as the core evaluation standard for segmentation performance. This indicator comprehensively measures the segmentation accuracy of the model by quantifying the overlap degree between the predicted region and the real annotation region.

For each target category, the intersection over union (IoU) is defined as the ratio of the intersection area to the union area between the predicted region and the ground-truth region, as follows:

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN}$$

where $TP$ represents the number of pixels correctly predicted as the category, $FP$ is the number of pixels incorrectly predicted as the category, and $FN$ is the number of missed pixels. MIoU evaluates the segmentation ability of the model across categories by averaging the IoU of all categories:

$$\mathrm{MIoU} = \frac{1}{N}\sum_{i=1}^{N} \mathrm{IoU}_{i}$$

where $N$ is the total number of categories. Compared with evaluation that relies only on pixel accuracy, MIoU pays more attention to the matching accuracy of target boundaries and is more robust to class-imbalanced data (such as small targets or an excessive background proportion).
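The contrast between pixel accuracy and MIoU under class imbalance can be seen in a small worked example. The confusion matrix below is invented purely for illustration, and the function names are hypothetical.

```python
def per_class_iou(confusion):
    """confusion[t][p] = number of pixels with true class t predicted as class p."""
    num_classes = len(confusion)
    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp                                 # missed pixels of class c
        fp = sum(confusion[t][c] for t in range(num_classes)) - tp  # wrongly predicted as c
        union = tp + fp + fn
        ious.append(tp / union if union else float("nan"))
    return ious


def mean_iou(confusion):
    ious = [v for v in per_class_iou(confusion) if v == v]  # drop NaN (absent classes)
    return sum(ious) / len(ious)


def pixel_accuracy(confusion):
    correct = sum(confusion[c][c] for c in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Imbalanced toy case: 990 background pixels and 10 obstacle pixels.
# The model recovers only 2 of the 10 obstacle pixels.
confusion = [[985, 5],
             [8, 2]]
ious = per_class_iou(confusion)
print(ious, mean_iou(confusion), pixel_accuracy(confusion))
```

Pixel accuracy stays near 99% because the background dominates, while the obstacle IoU of 2/15 pulls MIoU down to roughly 56%, exposing the failure on the small class.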

At the same time, we introduce other indicators to evaluate the model comprehensively. We use the inference time per image and the number of frames processed per second (FPS) to evaluate inference speed. In addition, we use the storage space occupied by the model’s parameters (Param) to evaluate model size. FLOPs (floating point operations) quantify the computational cost of the network and are used to measure model complexity.

4.3. Parameter Settings

We use the pre-trained MSCAN-T as the encoder backbone network. It comprises four feature extraction stages with 32, 64, 160, and 256 output channels and depths of 3, 3, 5, and 2, respectively. The backbone uses synchronized batch normalization for feature standardization and a drop-path rate of 0.1 for regularization. The decoder adopts a lightweight frequency-aware attention head, receives the features of the second to fourth backbone stages as inputs, and fuses multi-scale information through a 256-channel attention mechanism. High-pass filtering is enabled in the decoder with a filter size of 3. The feature resampling module configures the upsampling operation with four groups and a compression ratio of 4 to optimize detail recovery.

The training process uses the AdamW optimizer with a base learning rate of $1.0 \times 10^{-4}$ and a weight decay coefficient of 0.01. The learning rate schedule consists of a linear warm-up over 1000 iterations followed by polynomial decay with a power of 1.0. Specific network layers receive different settings: the learning rate of the decoding head, upsampling layer, and compression convolution layer is multiplied by 10, while the position encoding block and normalization layers are exempt from weight decay. Data augmentation includes random scaling, random cropping, horizontal flipping, and photometric distortion. The input image is first scaled to the basic size of 1024 × 576, randomly rescaled by a ratio between 0.5 and 2.0, and then randomly cropped to 512 × 512. The probability of horizontal flipping is 0.5, and the input image is standardized per channel with means of 123.675, 116.28, and 103.53 and standard deviations of 58.395, 57.12, and 57.375.
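The warm-up and polynomial decay schedule can be sketched as a plain function of the iteration index. The base learning rate, warm-up length, power, and 10× multiplier come from the text; the total number of iterations, the warm-up start factor, and the minimum learning rate are placeholder assumptions, and the exact formula used by the training framework may differ in detail.

```python
def learning_rate(step, base_lr=1.0e-4, warmup_iters=1000, max_iters=160_000,
                  power=1.0, warmup_start_factor=1.0e-6, min_lr=0.0):
    """Linear warm-up followed by polynomial decay.

    base_lr, warmup_iters and power follow the text; max_iters,
    warmup_start_factor and min_lr are assumed placeholder values.
    """
    if step < warmup_iters:
        # Ramp the multiplier linearly from warmup_start_factor up to 1.
        factor = warmup_start_factor + (1.0 - warmup_start_factor) * step / warmup_iters
        return base_lr * factor
    # Polynomial decay over the remaining iterations.
    progress = (step - warmup_iters) / (max_iters - warmup_iters)
    return (base_lr - min_lr) * (1.0 - progress) ** power + min_lr


def head_learning_rate(step, multiplier=10.0, **kwargs):
    """Decoder head, upsampling and compression layers use a 10x learning rate."""
    return multiplier * learning_rate(step, **kwargs)


for step in (0, 500, 1000, 80_500, 160_000):
    print(step, learning_rate(step), head_learning_rate(step))
```

With a power of 1.0 the decay is a straight line from the base learning rate at the end of warm-up down to the minimum at the final iteration.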

The experiment uses the Freiburg_Forest dataset, which contains five semantic categories, and the training data is repeated 10 times. In the validation and testing stages, the multi-scale strategy is adopted: the aspect ratio of the image is maintained, the image is resized to 1024 × 576, and the output size is kept an integer multiple of 32. The loss function is the cross-entropy loss, and the mean intersection over union serves as the core evaluation index. The model is saved automatically whenever the validation score reaches a new best. The hardware platform is a single NVIDIA GeForce RTX 3070 Ti graphics processor, and the software is implemented with PyTorch 2.6.0+cu126.

4.4. Ablation Experiments

4.4.1. Evaluation of Different Components

We carried out ablation experiments on the Freiburg_Forest and RUGD datasets to verify the performance of the proposed PSCG and OFCM and to explore the parameter settings that make them perform best. Table 2 shows the results of the baseline model with and without the proposed PSCG and OFCM on the Freiburg_Forest dataset. The baseline model achieved only 76.34% MIoU: the traditional multi-scale feature fusion architecture is limited by its fixed receptive field design and the discrete skip connections between pyramid levels, so the model cannot effectively capture continuous gradient-scale features. At the same time, the high-frequency details of the shallow features gradually decay during cross-layer transmission, further exacerbating boundary blurring and intra-class feature inconsistency. When the baseline model is combined with the proposed PSCG, MIoU improves by 4.69 percentage points. This indicates that PSCG alleviates the insufficient capture of gradient-scale features caused by the limited receptive field of fixed convolution kernels in existing methods. It does so through a cascaded structure of multi-branch orthogonal strip convolutions (horizontal and vertical directions): strip convolution kernels of different scales decompose the traditional large-kernel convolution, the depthwise strip convolutions expand the axial receptive field, and the cross-scale attention mechanism fuses the resulting features, which significantly improves the adaptability of the network to gradient-scale features. When the OFCM module is introduced alone, the model’s performance improves to 78.26%.
This gain comes from the frequency domain compensation mechanism of OFCM: the low-frequency contour and high-frequency detail components are separated through the discrete Fourier transform, and the attenuated high-frequency signals are enhanced with adaptive weights, which suppresses feature blurring in cross-layer transmission. When PSCG and OFCM are used together, the model achieves the best performance of 83.05%, verifying that the two modules complement each other. The PSCG module captures the gradient-scale information of the target from local to global through multi-scale orthogonal strip convolution, and the OFCM module uses these scale-continuous feature maps to locate the high-frequency regions (such as obstacle edges) that need to be enhanced through the discrete Fourier transform, achieving targeted detail compensation. With these two modules, the ProCo-NET network significantly improves the segmentation robustness for multi-scale targets.

Table 2.

Ablation experiment results (%) of different modules of ProCo-NET network on Freiburg_Forest. Here, ✓ indicates support for the feature, and ✗ indicates no support for the feature. The bold value in the table is the best-performing value in each category.

Similar experimental results are obtained on the RUGD dataset. As shown in Table 3, the ProCo-NET network performs better when the two modules are combined than when only one module is used. PSCG obtains features of different directions and scales through multi-scale strip convolution, establishing a cross-layer continuous gradient feature representation while maintaining the integrity of high-frequency details; OFCM resamples towards regions with high intra-class consistency through the learnable offset generator and enhances boundary high-frequency signals with the dual-channel frequency domain filter, alleviating the decrease in segmentation accuracy caused by feature aliasing and boundary blurring. The ProCo-NET network achieved 71.22% MIoU on the RUGD dataset.

Table 3.

Ablation experiment results (%) of different modules of ProCo-NET network on RUGD. Here, ✓ indicates support for the feature, and ✗ indicates no support for the feature. The bold value in the table is the best-performing value in each category.

4.4.2. Evaluation of PSCG Components

On the Freiburg_Forest dataset, we conducted ablation experiments to validate the multi-scale receptive field expansion mechanism and dynamic feature fusion strategy of the Progressive Strip Convolution Group (PSCG). The results in Table 4 show the following. When only a single 21 × 21 convolution branch is used to capture large-scale spatial dependencies, the segmentation MIoU reaches 79.35%, a 0.66 percentage point improvement over the 7 × 7 small-scale branch. This confirms that expanding the receptive field plays a critical role in modeling semantic coherence for distant targets in off-road scenes, although a single scale struggles to balance near-field details and far-field semantics. When the 1 × 1 convolutional fusion layer is removed, MIoU drops by 1.70 percentage points to 81.35%, because the orthogonal strip features from the multi-scale branches are no longer aligned across channels, which exposes the limitations of static feature aggregation. When only the triple-branch structure is deployed without the attention mechanism, MIoU is 82.21%; fixed weights cannot adaptively adjust the contribution ratio between near-field textures and far-field contours, resulting in significant mis-segmentation in vegetation-occluded regions. Finally, with the triple-branch structure, the 1 × 1 convolutional channel coordinator, and the attention mechanism all enabled, the peak MIoU of 83.05% is achieved.
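The efficiency argument behind strip convolution can be illustrated with a short sketch: a 1 × k strip followed by a k × 1 strip covers the same k × k window as a square kernel while using 2k instead of k² weights per channel. The code below is a toy illustration with all-ones kernels and hypothetical function names; it uses only the 7 and 21 kernel sizes named in the text and does not reproduce the PSCG module.

```python
def square_kernel_weights(k):
    """Weights per channel of a depthwise k x k convolution."""
    return k * k


def strip_pair_weights(k):
    """Weights per channel of a depthwise 1 x k conv followed by a k x 1 conv."""
    return 2 * k


def conv1d_same(signal, kernel):
    """1D 'same' correlation with zero padding (odd kernel length)."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for t, weight in enumerate(kernel):
            idx = i + t - half
            if 0 <= idx < len(signal):
                acc += weight * signal[idx]
        out.append(acc)
    return out


def strip_conv(feat, kernel):
    """Apply a 1 x k strip along rows, then a k x 1 strip along columns."""
    rows = [conv1d_same(row, kernel) for row in feat]
    cols = [conv1d_same(list(col), kernel) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]  # transpose back to row-major


# A single impulse in the middle of a 9 x 9 map reveals the receptive field.
impulse = [[0.0] * 9 for _ in range(9)]
impulse[4][4] = 1.0
response = strip_conv(impulse, [1.0] * 7)
covered = sum(1 for row in response for value in row if value != 0.0)

print(covered)                                           # pixels reached by the 7-tap strip pair
print(strip_pair_weights(7), square_kernel_weights(7))
print(strip_pair_weights(21), square_kernel_weights(21))
```

The impulse spreads over a full 7 × 7 window, and for the 21-tap branch the strip pair needs 42 weights per channel compared with 441 for a square kernel of the same extent.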

Table 4.

Ablation experiments of different submodules of PSCG. Here, ✓ indicates support for the feature, and ✗ indicates no support for the feature. The bold value in the table is the best-performing value in each category.

4.4.3. Evaluation of OFCM Components

We experimentally verified the two core components of the OFCM module on the Freiburg_Forest dataset, and the results are shown in Table 5. Using only the learnable offset generator (OFG) to resample features towards regions with high intra-class consistency improves the segmentation MIoU by 1.99% over the baseline model. Although the offset generator effectively alleviates intra-class feature dispersion by dynamically adjusting the sampling positions, its effect on boundary blurring is limited. When the dual-channel frequency domain filter (DFF) is deployed alone, extracting the high-frequency components of the feature map improves the MIoU of the boundary region by 1.43%, but local mis-segmentation remains in complex terrain regions. When the two submodules are used together, a significant improvement of 2.65% is achieved, which benefits from the complementarity between the feature consistency optimization driven by the offset generator and the boundary detail enhancement provided by the frequency domain filter. These results indicate that the offset generator establishes a strong correlation within the internal structure of the target through dynamic resampling in the spatial domain, while the dual-channel filter reconstructs fine boundary details through high-frequency enhancement in the frequency domain and residual compensation. Together, the two address the core challenges in segmenting gradient-scale targets.

Table 5.

Ablation experiment results (%) of different submodules of OFCM. Here, ✓ indicates support for the feature, and ✗ indicates no support for the feature. The bold value in the table is the best-performing value in each category.

4.5. Comparison Experiments

4.5.1. Experimental Results and Analysis of RUGD Dataset

Table 6 shows the results of the proposed ProCo-NET and other off-road environment segmentation methods on the RUGD dataset. For sand, grass, tree, sky, building, and asphalt, ProCo-NET obtained the highest segmentation accuracy, of 62.86%, 87.53%, 92.08%, 80.68%, 84.21%, and 95.38%, respectively. In addition, ProCo-NET obtained the best average result over all categories, with 71.22% MIoU. As can be seen from Table 6, apart from ProCo-NET, DANet [] performs best in off-road image segmentation because it uses dual attention mechanisms (spatial attention and channel attention) to model global context dependencies and enhances the feature response of important regions through an adaptive feature weighting strategy, which significantly improves the semantic consistency of target segmentation in complex scenes. However, the self-attention mechanism of DANet suffers from high computational complexity, poor adaptation to dynamic scales, and insufficient retention of high-frequency details. ProCo-NET compensates for the loss of high-frequency detail, increasing the MIoU by 0.84%.

Table 6.

Performance comparison of each algorithm on the RUGD dataset. Sand, grass, tree, sky, building, and asphalt are six key semantic labels of the RUGD dataset, covering the core navigation elements of autonomous driving scenarios. ProCo-NET is the abbreviation of the framework proposed in this study (Progressive Strip Convolution and Frequency-Optimized Framework). The bold values in the table are the best-performing values in each category.

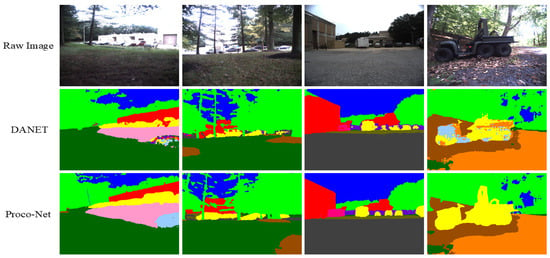

As shown in Figure 2, the segmentation results of ProCo-NET effectively address the inconsistent internal features within targets caused by variations in object distance and local disparities in off-road environments. Specifically, in the vehicle segmentation results in the fourth column, the vehicle in the original image exhibits significant internal feature inconsistency because it comprises multiple materials (metal bodywork, glass windscreen, and rubber tires) under complex illumination. The segmentation result from DANet exacerbates this problem: it fragments the vehicle body into multiple color segments. For instance, the windscreen is incorrectly labeled blue, sections of the body are mislabeled red or yellow, and the tires are even merged with ground vegetation into a single colored region, which compromises the vehicle’s semantic integrity. In contrast, ProCo-NET maintains the integrity of the vehicle’s main body: the primary vehicle body is consistently labeled orange, and a basic separation between tires and vegetation is achieved. Similarly, the results in the first column show that even when multiple targets overlap and intertwine, our network delineates distinct targets while preserving their individual integrity. Collectively, these results demonstrate our network’s capability to handle representational variations within targets caused by positional differences, illumination changes, and material diversity, enhancing the robustness of target segmentation in challenging off-road scenarios.

Figure 2.

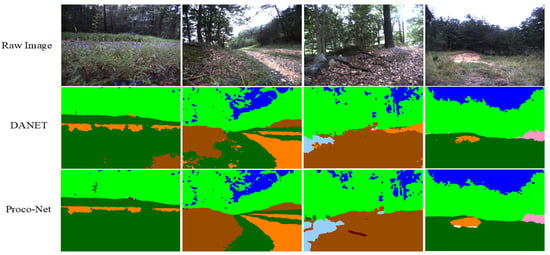

Performance of DANet and ProCo-NET in handling intra-class feature diversity within the RUGD test set.

As evident in Figure 3, our network demonstrates remarkable superiority in preserving high-frequency details along target boundaries. This advantage is clearly exemplified in the segmentation results of the first column. In our network’s output, the transition region between the gravel road and the adjacent vegetation exhibits a sharp, well-defined semantic boundary. The contour details of the road gravel and the intricate structural features at the edges of vegetation leaves are both precisely captured. In contrast, the boundary transition region in DANet’s result displays blocky artifacts and jagged discontinuities, lacking continuity. Particularly noteworthy are the segmentation results in the third column. This scene presents highly intricate and blurred boundaries where the ground leaf litter layer intermingles with vegetation roots, exposed soil, and low-lying shrubs. Nevertheless, our segmentation results clearly delineate and label intricate details such as the serrated edges of leaf piles, the specific junctions between tree roots and soil, and the feathery margins of low-lying foliage. This convincingly indicates that when segmenting terrains in off-road environments, our network adeptly preserves high-frequency detail information in boundary regions. Crucially, it avoids the excessive smoothing of gradual transition features, thereby fundamentally enhancing segmentation accuracy.

Figure 3.

Performance of DANet and ProCo-NET in preserving high-frequency boundary details within the RUGD test set.

4.5.2. Experimental Results and Analysis of Freiburg_Forest Dataset

The experimental results in Table 7 show that ProCo-NET has excellent semantic segmentation performance on the Freiburg_Forest dataset, exceeding all comparative models with an MIoU of 83.05%. Specifically, ProCo-NET achieved the best results in the four categories of grass, tree, sky, and obstacle, especially achieving an accuracy of 52.04% in the challenging obstacle category, which is 2.7 percentage points higher than the sub-optimal model DeepLabv3+. From the above segmentation results, it can be seen that our network can maintain high accuracy for off-road paths with continuous-scale changes, demonstrating the adaptability of the network to various environmental elements in off-road environments.

Table 7.

Performance comparison of each algorithm on Freiburg_Forest Dataset. The bold values in the table are the best-performing values in each category.

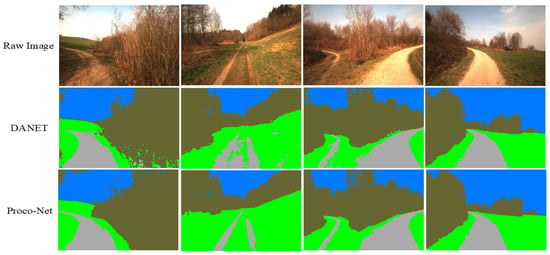

The semantic segmentation results in the right panel of Figure 4 validate the effectiveness of our method in multi-scale feature fusion. In the first column, the scene contains both large-scale trees with distinct structures and smaller, partially occluded tree crowns in the mid-to-far distance. Nevertheless, our network accurately classifies these trees despite their significant scale variations. Similarly, in the third column, the meandering dirt path, which is the key object of observation, exhibits substantial scale gradation within the scene: the proximal segment appears broad and distinct and progressively narrows into a thin, elongated path towards the distal end. Our network identifies this entire continuous path as a single “road” category, and the color labeling remains consistent and uninterrupted across distant, intermediate, and proximal road regions. In contrast, DANet exhibits a fragmented segmentation pattern within the proximal section of the path. Collectively, these results demonstrate that for targets with continuous-scale variations (such as the road and trees), our network adapts local feature extraction to the varying scale demands, thereby maintaining segmentation accuracy for multi-scale objects.

Figure 4.

Performance of DANet and ProCo-NET in handling targets with continuous-scale variations on the Freiburg_Forest dataset.

4.6. Performance Discussion and Limitations

In terms of technical performance, ProCo-NET efficiently and continuously models the progressive scale changes unique to off-road scenes through its core innovation, the Progressive Strip Convolution Group (PSCG). This significantly enhances the model’s ability to perceive multi-scale targets, achieving an MIoU of 71.22% on the RUGD dataset and a 4.69 percentage point improvement over the baseline on Freiburg_Forest. The Offset-Frequency Cooperative Module (OFCM) addresses the intra-class feature fragmentation caused by scale gradients by combining dynamic offset sampling in the spatial domain (aggregating intra-class consistent features) with adaptive high-pass filtering in the frequency domain (sharpening high-frequency boundary components). This is evident in the improved vehicle integrity and the sharper boundaries in the qualitative results. Specifically, the full model raises the MIoU for the obstacle category on Freiburg_Forest to 52.04%, with particularly notable improvements in the accuracy of the boundary regions.

Existing limitations mainly include false detections in backgrounds with highly similar textures and missed detections due to motion blur caused by high-speed movement, such as drone overhead shooting scenarios. Future work could enhance the contextual consistency by introducing video temporal modeling and optimizing deployment efficiency through FPGA hardware acceleration.

5. Conclusions

This paper aims to solve the problems of intra-class feature consistency degradation and boundary displacement caused by scale gradient in off-road image segmentation and designs a dual-module solution through multi-scale feature response analysis and feature similarity quantitative analysis guidance. The Progressive Strip Convolution Group (PSCG) realizes the efficient capture of continuous-scale features through orthogonal strip convolution decomposition and dynamic gating cascading; the Offset-Frequency Cooperative Module (OFCM) combines a learnable offset generator and a dual-channel frequency domain filter, which enhance semantic consistency and boundary sharpening through dynamic resampling and adaptive high-pass filtering, respectively, and the two collaboratively optimize feature expression. Systematic experimental evaluations have verified the effectiveness of the framework on two challenging off-road datasets: on the RUGD dataset containing 24 categories of complex scenes, ProCo-NET achieved leading segmentation accuracy (MIoU 71.22%); on the Freiburg_Forest dataset focusing on safety-critical elements, the overall MIoU reached 83.05%, among which the segmentation accuracy of the most challenging obstacle category achieved a significant breakthrough, increasing by 2.7 percentage points compared with the sub-optimal method. Visualization results further confirm its advantages: PSCG effectively maintains the internal semantic consistency of targets under progressive scale changes, while OFCM significantly sharpens high-frequency boundary details in complex terrains. These results fully confirm the core value of the proposed symmetric computing mechanisms (orthogonal strip convolution and spatial–frequency domain collaboration) in compensating for the impact of asymmetric deformation.

Future work could focus on studying the mobile implementation and deployment of ProCo-NET in actual off-road scenes to meet the timeliness requirements of off-road navigation. In view of the current limitations revealed by the experimental results, there is still confusion in high-similarity texture regions (such as dense fallen leaves and soil), and boundary sharpness may decrease under motion blur caused by high-speed movement (such as drone overhead shooting). To alleviate these issues, we will expand the OFCM module to incorporate video temporal dimension information and enhance robustness to dynamic blur and occlusion by modeling inter-frame contextual consistency. In addition, based on the significant improvement in obstacle segmentation accuracy on the Freiburg_Forest dataset, exploring the integration of multi-modal perception (such as lidar point clouds) to further improve the recognition ability of safety-critical regions in complex off-road environments is also an important future research direction.

Author Contributions

Conceptualization, Z.L. and C.J.; methodology, Z.L.; software, Z.L.; validation, Z.L., C.J., and D.J.; formal analysis, Z.L.; investigation, Z.L.; resources, Z.L.; data curation, Z.L.; writing—original draft preparation, Z.L.; writing—review and editing, C.J.; visualization, C.J.; supervision, D.J.; project administration, D.J.; funding acquisition, D.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are openly available in Dataset-Ninja at https://datasetninja.com/rugd (accessed on 23 November 2019).

Acknowledgments

The authors would like to acknowledge that no additional individuals or organizations beyond those listed in the contributor and funding sections provided support for this work.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).