Benders Decomposition Approach for Generalized Maximal Covering and Partial Set Covering Location Problems

Abstract

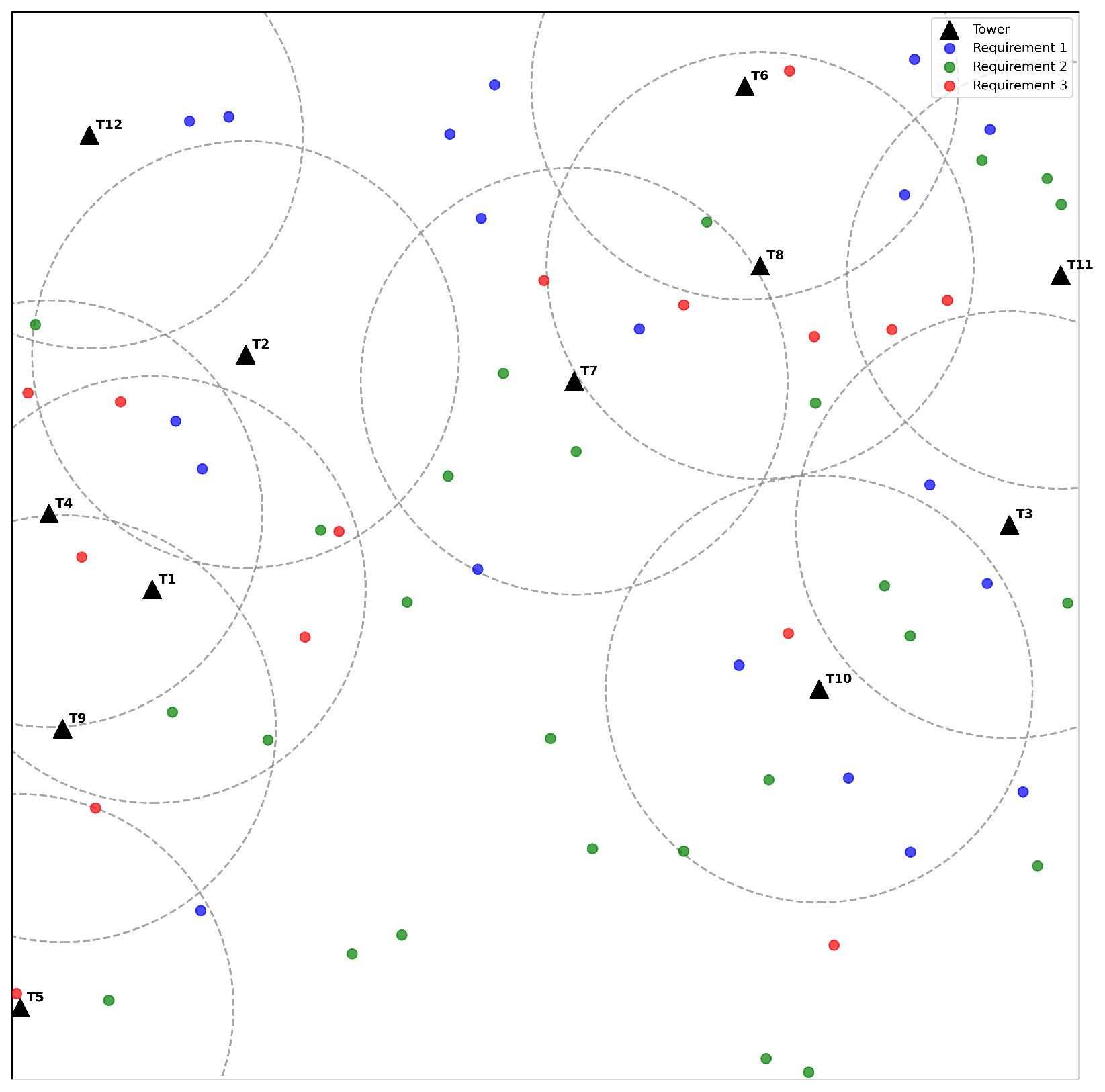

1. Introduction

- The Maximal Covering Location Problem (MCLP) [13], which seeks to select facility locations such that the total value of covered demand points is maximized, subject to a facility budget constraint;

- The Partial Set Covering Location Problem (PSCLP) [14], which minimizes the required facility construction budget while ensuring that the total value of covered demand points meets or exceeds a specified covering demand.

- G-MCLP: Maximize the total value of demand points that are each served by at least the number of facilities given by their multi-coverage requirement, subject to a facility budget constraint.

- G-PSCLP: Minimize the total facility budget while ensuring that the total value of demand points served in accordance with their multi-coverage requirement meets or exceeds a specified covering demand.

- We generalize the classical MCLP and PSCLP and formulate IP models that incorporate a multiple-coverage requirement, whereby each demand point must be served by at least a specified number of facilities to count as covered. This extension broadens the applicability of these models to real-world scenarios with stringent reliability requirements;

- We reformulate the generalized models to enhance their structural properties. In particular, the reformulation allows variables associated with demand point coverage to be relaxed as continuous without altering the optimal solution. This property facilitates the use of decomposition techniques and helps reduce the overall computational burden;

- Building on the reformulated models, we develop exact Benders decomposition algorithms that dynamically introduce violated constraints as needed for both problems. Moreover, we design efficient separation algorithms and establish their time and space complexity bounds. The algorithms effectively exploit problem structures and yield substantial improvements in scalability and computational efficiency;

- Computational experiments show that the proposed method is more efficient than a state-of-the-art general-purpose solver (CPLEX) on random instances with up to 200,000 demand points as well as on real-world cases. Furthermore, it obtains higher-quality feasible solutions than a tabu search heuristic.
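To make the G-MCLP and G-PSCLP statements above concrete, the following Python sketch solves both on a toy instance by exhaustive enumeration. It only illustrates the problem definitions and is not the Benders approach developed in this paper. The function names, the data layout (`cover[j]` as the set of facilities able to serve demand point `j`, `need[j]` as its multi-coverage requirement), and the instance itself are assumptions introduced here for illustration.

```python
from itertools import combinations


def covered_value(open_sites, cover, need, value):
    """Total value of demand points served by at least need[j] open facilities."""
    open_set = set(open_sites)
    return sum(
        value[j]
        for j in range(len(value))
        if len(open_set & cover[j]) >= need[j]
    )


def solve_gmclp(cost, cover, need, value, budget):
    """G-MCLP by enumeration: maximize covered value within the budget."""
    n = len(cost)
    best = (0, ())
    for r in range(n + 1):
        for sites in combinations(range(n), r):
            if sum(cost[i] for i in sites) <= budget:
                val = covered_value(sites, cover, need, value)
                if val > best[0]:
                    best = (val, sites)
    return best


def solve_gpsclp(cost, cover, need, value, demand):
    """G-PSCLP by enumeration: minimize cost with covered value >= demand."""
    n = len(cost)
    best = None
    for r in range(n + 1):
        for sites in combinations(range(n), r):
            if covered_value(sites, cover, need, value) >= demand:
                total = sum(cost[i] for i in sites)
                if best is None or total < best[0]:
                    best = (total, sites)
    return best


# Hypothetical toy instance: 4 candidate facilities, 4 demand points.
cost = [1, 1, 1, 1]
cover = [{0, 1}, {1, 2}, {2, 3}, {0, 3}]  # facilities that can serve each point
need = [2, 1, 2, 1]                       # assumed multi-coverage requirements
value = [5, 3, 4, 2]                      # value of each demand point

gmclp_solution = solve_gmclp(cost, cover, need, value, budget=2)
gpsclp_solution = solve_gpsclp(cost, cover, need, value, demand=9)
```

Enumeration is exponential in the number of candidate facilities, so the sketch is useful only for checking small cases by hand.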

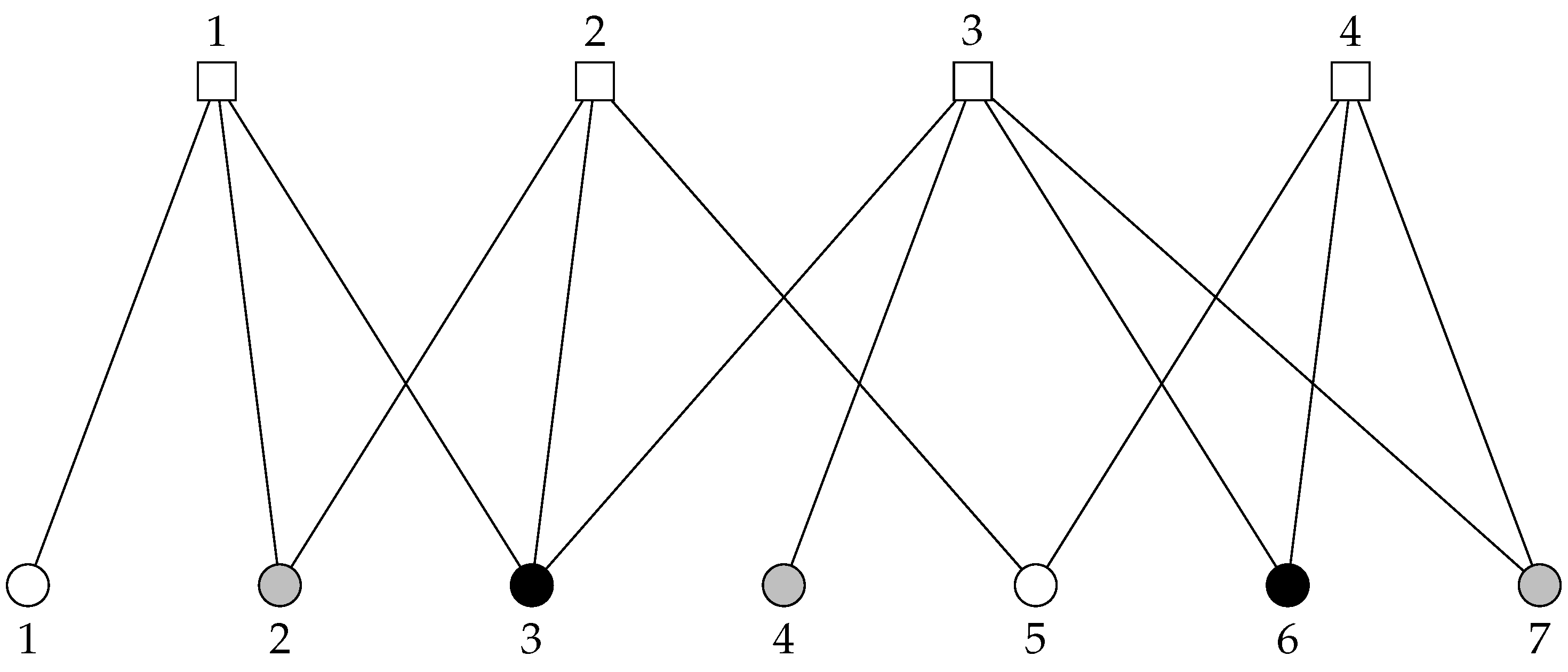

2. Problem Formulation

3. Exact Solution Methods for G-MCLP and G-PSCLP

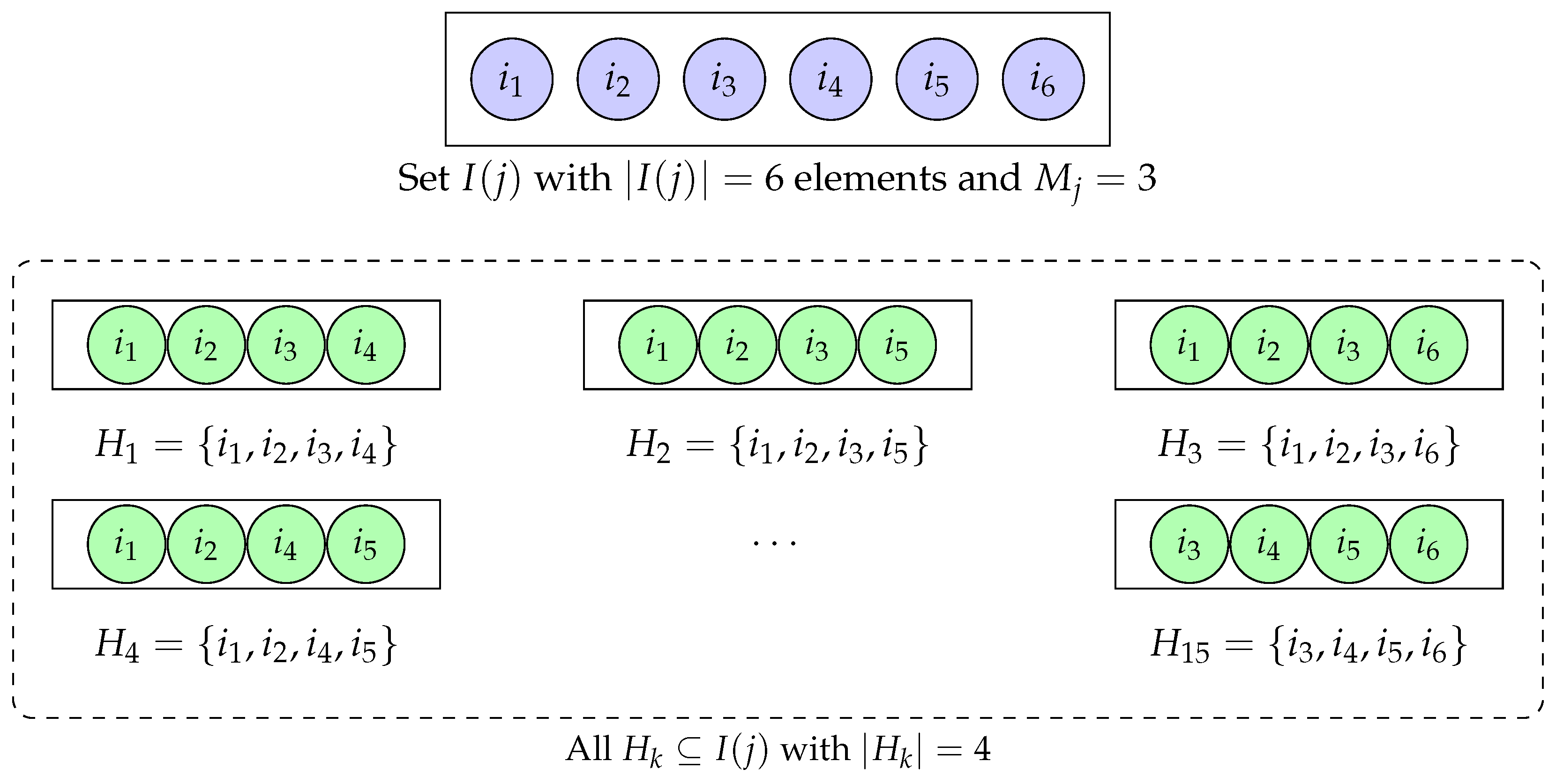

3.1. Problem Reformulation

- We first show that for any , if satisfies , then it also satisfies When , the inequality trivially holds for all . Now consider the case where . Then, we haveSince , this implies that no more than components of can be equal to 0. Therefore, any subset of containing at least elements must include at least one i with . That is

- Conversely, for any , assume that satisfies for all If , the inequality trivially holds. Now suppose . Take any . Since , there exists at least one index such that . Next, consider another subset such that . Similarly, we can find such that . Repeat this process over subsets , , and so on. After steps, it leads to set , which is non-empty as . As a result, we obtain distinct indices for which for all . This implies that , which completes the proof. □
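The equivalence argued in the two steps above can be checked numerically on small cases. The sketch below assumes a particular form for the two constraint families, since the symbols are not reproduced in this text: for one demand point with `n` covering facilities and requirement `b`, the aggregated form is taken to be `b * z <= sum(y)` and the subset form is taken to be `z <= sum(y[i] for i in S)` for every subset `S` of size `n - b + 1`. Both forms, and all names in the code, are assumptions made for illustration.

```python
from itertools import combinations, product


def aggregated_ok(y, z, b):
    """Assumed aggregated form: b * z <= sum of y over the covering facilities."""
    return b * z <= sum(y)


def subset_form_ok(y, z, b):
    """Assumed subset form: z <= sum of y over every subset of size n - b + 1."""
    n = len(y)
    size = n - b + 1
    return all(z <= sum(y[i] for i in S) for S in combinations(range(n), size))


def equivalent_for_all_binary(n_max=5):
    """Compare both forms on every binary (y, z) with 1 <= b <= n <= n_max."""
    for n in range(1, n_max + 1):
        for b in range(1, n + 1):
            for y in product((0, 1), repeat=n):
                for z in (0, 1):
                    if aggregated_ok(y, z, b) != subset_form_ok(y, z, b):
                        return False
    return True


def z_upper_bounds(y, b):
    """Largest continuous z in [0, 1] allowed by each assumed form, for binary y."""
    n = len(y)
    aggregated = min(1.0, sum(y) / b)
    subsets = combinations(range(n), n - b + 1)
    subset = min(1, min(sum(y[i] for i in S) for S in subsets))
    return aggregated, subset


all_equivalent = equivalent_for_all_binary()
bounds = z_upper_bounds((1, 0, 0), 2)
```

Under these assumptions, `z_upper_bounds((1, 0, 0), 2)` gives `(0.5, 0)`: the aggregated form admits a fractional coverage value once the coverage variable is relaxed, whereas the subset form forces it to 0. This is consistent with the statement that the coverage variables can be relaxed to continuous after the reformulation.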

3.2. Benders Decomposition for G-MCLP

3.2.1. Benders Optimality Inequalities

3.2.2. Separation of Benders Optimality Inequalities

3.3. Benders Decomposition for G-PSCLP

3.3.1. Benders Feasibility Inequalities

3.3.2. Separation of Benders Feasibility Inequalities

4. Branch-and-Benders-Cut Strategy

| Algorithm 1 Branch-and-Benders-cut strategy |
|---|
| Require: G-MCLP: the IP model (14) and the current LP relaxation solution. G-PSCLP: the IP model (23) and the current LP relaxation solution. |
| Ensure: G-MCLP: the Benders inequalities violated by the current LP relaxation solution. G-PSCLP: the Benders inequalities violated by the current LP relaxation solution. |
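The input/output contract of Algorithm 1 (a current LP relaxation solution in, violated inequalities out) can be illustrated with a small separation routine. The sketch below is not the paper's Benders separation. It separates a simpler, assumed family of inequalities, `z[j] <= sum(y[i] for i in S)` for every subset `S` of the facilities covering `j` with `len(S) = len(cover[j]) - need[j] + 1`, by picking the facilities with the smallest LP values. All names, the tolerance, and the data are hypothetical.

```python
def separate_subset_inequalities(y_star, z_star, cover, need, tol=1e-6):
    """Return (j, S) pairs whose assumed inequality z_j <= sum_{i in S} y_i is violated.

    For each demand point j, the subset of the required size with the smallest
    right-hand side consists of the facilities with the smallest y values, so a
    single sort per demand point finds the most violated inequality.
    """
    cuts = []
    for j, facilities in enumerate(cover):
        size = max(len(facilities) - need[j] + 1, 0)
        # Break ties by facility index so the result is deterministic.
        ordered = sorted(facilities, key=lambda i: (y_star[i], i))
        subset = ordered[:size]
        rhs = sum(y_star[i] for i in subset)
        if z_star[j] > rhs + tol:
            cuts.append((j, tuple(sorted(subset))))
    return cuts


# Hypothetical fractional LP point for 4 facilities and 2 demand points.
y_star = [0.5, 0.5, 0.0, 1.0]
z_star = [0.8, 1.0]
cover = [{0, 1, 2}, {2, 3}]
need = [2, 1]

violated = separate_subset_inequalities(y_star, z_star, cover, need)
```

In a branch-and-cut framework, a routine of this shape would typically be called from the solver's cut callback at each node, and the returned inequalities added to the current relaxation.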

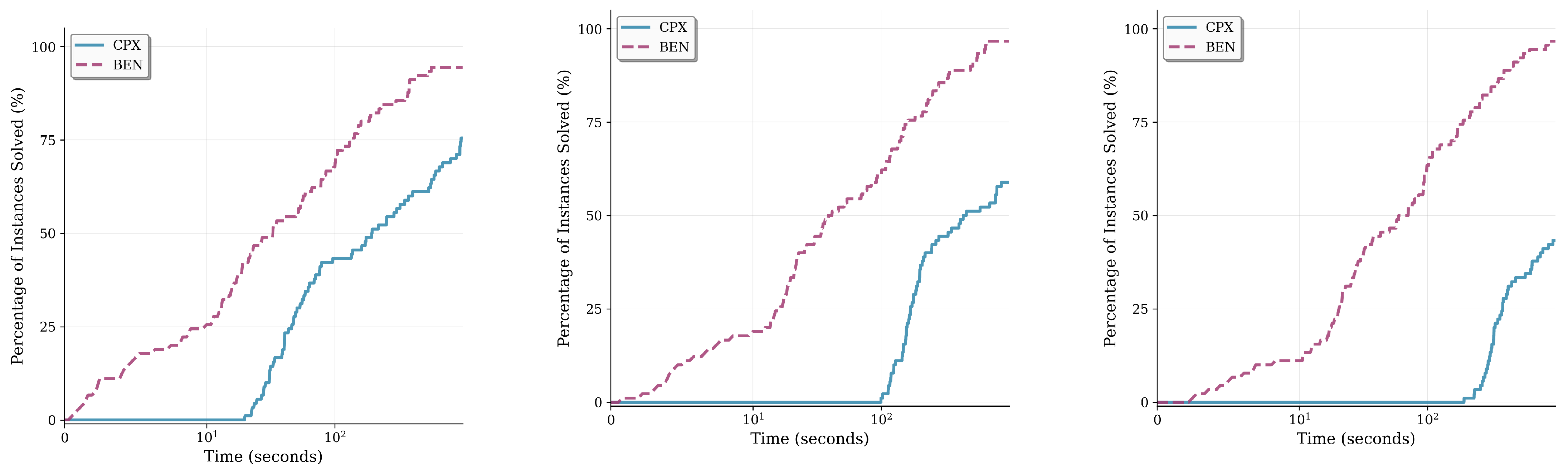

5. Computational Study

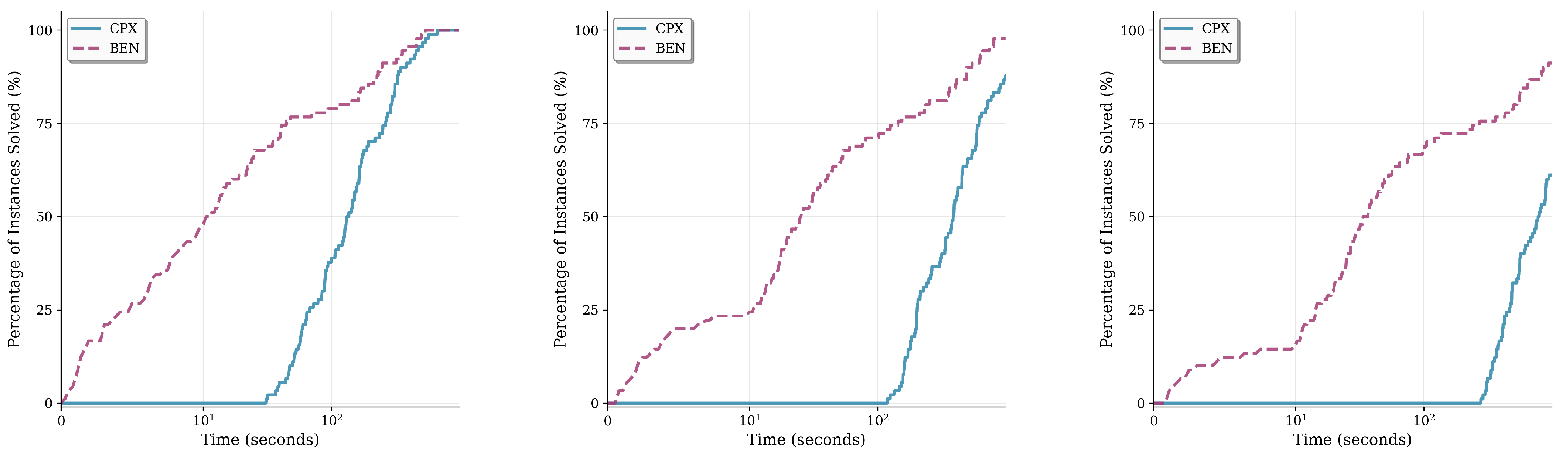

5.1. Results and Analysis of Random Instances

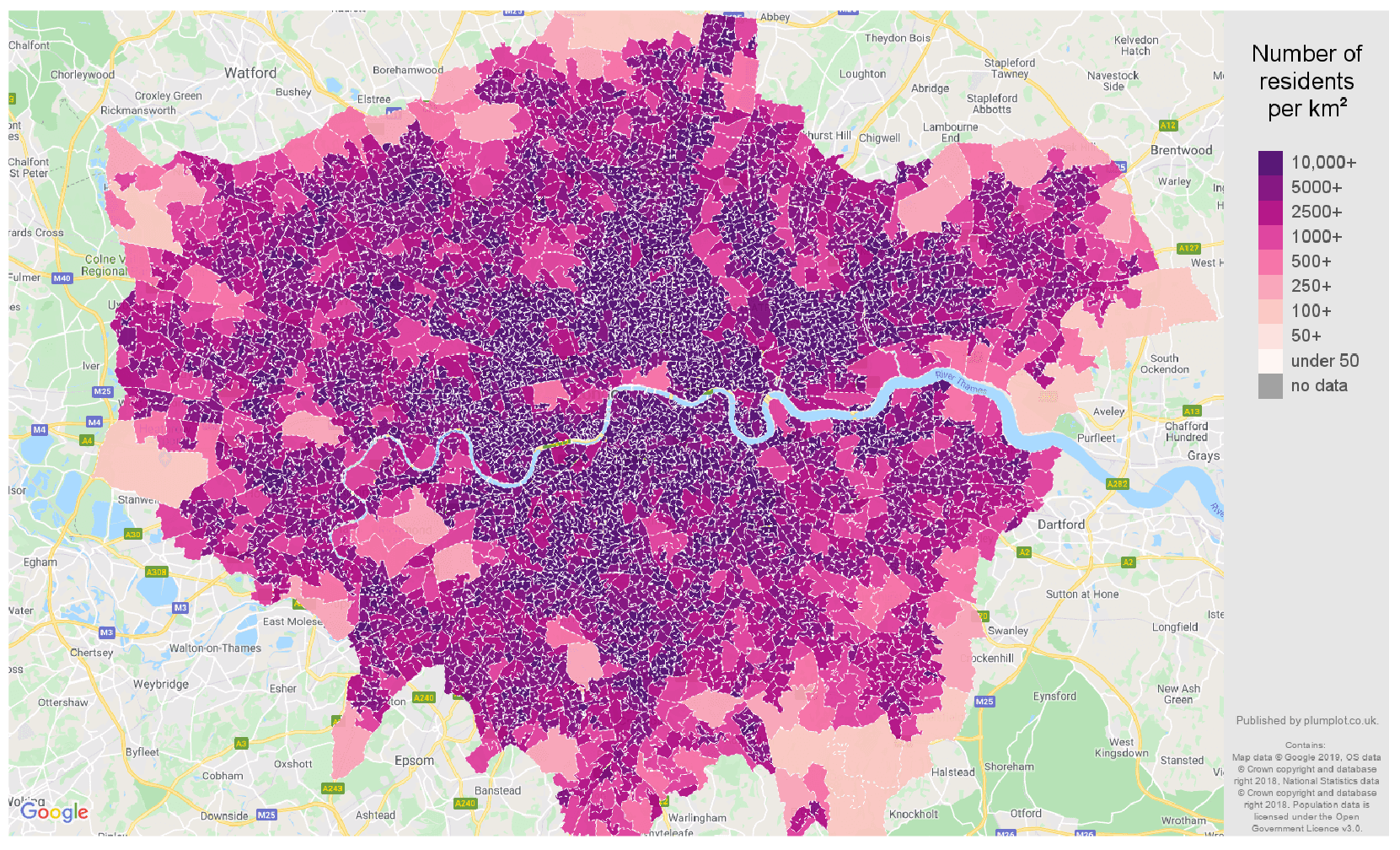

5.2. Results and Analysis on Real-World Data

5.3. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IP | Integer Programming |
| MALP | Maximal Availability Location Problem |
| MCLP | Maximal Covering Location Problem |
| PSCLP | Partial Set Covering Location Problem |
| G-MCLP | Generalized Maximal Covering Location Problem |
| G-PSCLP | Generalized Partial Set Covering Location Problem |
| BEN | Branch-and-Benders-Cut Algorithm |
| TABU | Tabu Search Heuristic |
| CPX | CPLEX Solver |

References

1. Shariff, S.R.; Moin, N.H.; Omar, M. Location allocation modeling for healthcare facility planning in Malaysia. Comput. Ind. Eng. 2012, 62, 1000–1010.

2. Alizadeh, R.; Nishi, T.; Bagherinejad, J.; Bashiri, M. Multi-period maximal covering location problem with capacitated facilities and modules for natural disaster relief services. Appl. Sci. 2021, 11, 397.

3. Dimopoulou, M.; Giannikos, I. Spatial optimization of resources deployment for forest-fire management. Int. Trans. Oper. Res. 2001, 8, 523–534.

4. Adenso-Diaz, B.; Rodriguez, F. A simple search heuristic for the MCLP: Application to the location of ambulance bases in a rural region. Omega 1997, 25, 181–187.

5. Alexandris, G.; Dimopoulou, M.; Giannikos, I. A three-phase methodology for developing or evaluating bank networks. Int. Trans. Oper. Res. 2008, 15, 215–237.

6. Li, H.; Mukhopadhyay, S.K.; Wu, J.j.; Zhou, L.; Du, Z. Balanced maximal covering location problem and its application in bike-sharing. Int. J. Prod. Econ. 2020, 223, 107513.

7. Kahr, M. Determining locations and layouts for parcel lockers to support supply chain viability at the last mile. Omega 2022, 113, 102721.

8. Berman, O.; Krass, D.; Drezner, Z. The gradual covering decay location problem on a network. Eur. J. Oper. Res. 2003, 151, 474–480.

9. Bansal, M.; Kianfar, K. Planar maximum coverage location problem with partial coverage and rectangular demand and service zones. INFORMS J. Comput. 2017, 29, 152–169.

10. Mahapatra, P.R.S. Variations of Enclosing Problem Using Axis Parallel Square(s): A General Approach. Am. J. Comput. Math. 2014, 2014, 45998.

11. Canbolat, M.S.; von Massow, M. Planar maximal covering with ellipses. Comput. Ind. Eng. 2009, 57, 201–208.

12. Toregas, C.; Swain, R.; ReVelle, C.; Bergman, L. The location of emergency service facilities. Oper. Res. 1971, 19, 1363–1373.

13. Church, R.; ReVelle, C. The maximal covering location problem. Pap. Reg. Sci. 1974, 32, 101–118.

14. Daskin, M.S.; Owen, S.H. Two new location covering problems: The partial p-center problem and the partial set covering problem. Geogr. Anal. 1999, 31, 217–235.

15. Ahmadi-Javid, A.; Seyedi, P.; Syam, S.S. A survey of healthcare facility location. Comput. Oper. Res. 2017, 79, 223–263.

16. ReVelle, C.; Hogan, K. A reliability-constrained siting model with local estimates of busy fractions. Environ. Plan. B Plan. Des. 1988, 15, 143–152.

17. Hogan, K.; ReVelle, C. Concepts and applications of backup coverage. Manag. Sci. 1986, 32, 1434–1444.

18. Bababeik, M.; Khademi, N.; Chen, A. Increasing the resilience level of a vulnerable rail network: The strategy of location and allocation of emergency relief trains. Transp. Res. Part E Logist. Transp. Rev. 2018, 119, 110–128.

19. Curtin, K.M.; Hayslett-McCall, K.; Qiu, F. Determining optimal police patrol areas with maximal covering and backup covering location models. Netw. Spat. Econ. 2010, 10, 125–145.

20. Aghajani, M.; Torabi, S.A.; Heydari, J. A novel option contract integrated with supplier selection and inventory prepositioning for humanitarian relief supply chains. Socio-Econ. Plan. Sci. 2020, 71, 100780.

21. Li, X.; Ramshani, M.; Huang, Y. Cooperative maximal covering models for humanitarian relief chain management. Comput. Ind. Eng. 2018, 119, 301–308.

22. Lusiantoro, L.; Mara, S.; Rifai, A. A locational analysis model of the COVID-19 vaccine distribution. Oper. Supply Chain. Manag. Int. J. 2022, 15, 240–250.

23. Garey, M.R.; Johnson, D.S. Computers and Intractability: A Guide to the Theory of NP-Completeness; W.H. Freeman: San Francisco, CA, USA, 1979.

24. Megiddo, N.; Zemel, E.; Hakimi, S.L. The maximum coverage location problem. SIAM J. Algebr. Discret. Methods 1983, 4, 253–261.

25. Daskin, M. Network and discrete location: Models, algorithms and applications. J. Oper. Res. Soc. 1997, 48, 763–764.

26. Senne, E.L.F.; Pereira, M.A.; Lorena, L.A.N. A decomposition heuristic for the maximal covering location problem. Adv. Oper. Res. 2010, 2010, 120756.

27. ReVelle, C.; Scholssberg, M.; Williams, J. Solving the maximal covering location problem with heuristic concentration. Comput. Oper. Res. 2008, 35, 427–435.

28. Zarandi, M.F.; Davari, S.; Sisakht, S.H. The large scale maximal covering location problem. Sci. Iran. 2011, 18, 1564–1570.

29. Máximo, V.R.; Nascimento, M.C.; Carvalho, A.C. Intelligent-guided adaptive search for the maximum covering location problem. Comput. Oper. Res. 2017, 78, 129–137.

30. Bilal, N.; Galinier, P.; Guibault, F. An iterated-tabu-search heuristic for a variant of the partial set covering problem. J. Heuristics 2014, 20, 143–164.

31. Lin, P.T.; Tseng, K.S. Maximal coverage problems with routing constraints using cross-entropy Monte Carlo tree search. Auton. Robot. 2024, 48, 3.

32. Alosta, A.; Elmansuri, O.; Badi, I. Resolving a location selection problem by means of an integrated AHP-RAFSI approach. Rep. Mech. Eng. 2021, 2, 135–142.

33. Li, G.Z.; Nguyen, D.; Vullikanti, A. Differentially private partial set cover with applications to facility location. arXiv 2022, arXiv:2207.10240.

34. Daskin, M.S.; Haghani, A.E.; Khanal, M.; Malandraki, C. Aggregation effects in maximum covering models. Ann. Oper. Res. 1989, 18, 113–139.

35. Chen, L.; Chen, S.J.; Chen, W.K.; Dai, Y.H.; Quan, T.; Chen, J. Efficient presolving methods for solving maximal covering and partial set covering location problems. Eur. J. Oper. Res. 2023, 311, 73–87.

36. Cordeau, J.F.; Furini, F.; Ljubić, I. Benders decomposition for very large scale partial set covering and maximal covering location problems. Eur. J. Oper. Res. 2019, 275, 882–896.

37. Farahani, R.Z.; Asgari, N.; Heidari, N.; Hosseininia, M.; Goh, M. Covering problems in facility location: A review. Comput. Ind. Eng. 2012, 62, 368–407.

38. Marianov, V.; Eiselt, H. Fifty years of location theory—A selective review. Eur. J. Oper. Res. 2024, 318, 701–718.

39. ReVelle, C.; Hogan, K. The maximum availability location problem. Transp. Sci. 1989, 23, 192–200.

40. ReVelle, C.; Hogan, K. The maximum reliability location problem and α-reliable p-center problem: Derivatives of the probabilistic location set covering problem. Ann. Oper. Res. 1989, 18, 155–173.

41. Marianov, V.; ReVelle, C. The queueing maximal availability location problem: A model for the siting of emergency vehicles. Eur. J. Oper. Res. 1996, 93, 110–120.

42. Wang, W.; Wu, S.; Wang, S.; Zhen, L.; Qu, X. Emergency facility location problems in logistics: Status and perspectives. Transp. Res. Part E Logist. Transp. Rev. 2021, 154, 102465.

43. Berman, O.; Drezner, Z.; Krass, D. Discrete cooperative covering problems. J. Oper. Res. Soc. 2011, 62, 2002–2012.

44. Fischetti, M.; Ljubić, I.; Sinnl, M. Benders decomposition without separability: A computational study for capacitated facility location problems. Eur. J. Oper. Res. 2016, 253, 557–569.

45. Güney, E.; Leitner, M.; Ruthmair, M.; Sinnl, M. Large-scale influence maximization via maximal covering location. Eur. J. Oper. Res. 2021, 289, 144–164.

46. Di Summa, M.; Grosso, A.; Locatelli, M. Branch and cut algorithms for detecting critical nodes in undirected graphs. Comput. Optim. Appl. 2012, 53, 649–680.

47. Pavlikov, K. Improved formulations for minimum connectivity network interdiction problems. Comput. Oper. Res. 2018, 97, 48–57.

48. CPLEX. User’s Manual for CPLEX. IBM, 2022. Available online: https://www.ibm.com/docs/en/icos/20.1.0?topic=cplex-users-manual (accessed on 23 August 2025).

49. Glover, F. Tabu search—Part I. ORSA J. Comput. 1989, 1, 190–206.

| Parameter | Symbol | Values |
|---|---|---|
| Number of demand points | | {30,000, 50,000, 70,000} |
| Coverage radius | R | {3.5, 4, 4.5} |
| G-MCLP: | | |
| Budget | B | {10, 15, 20} |
| G-PSCLP: | | |
| Covering demand | D | {50%, 60%, 70%} |

| Demand points | B | # | BEN # opt | BEN t (s) | CPX # opt | CPX t (s) |
|---|---|---|---|---|---|---|
| 30,000 | 10 | 30 | 30 | 10.79 | 27 | 172.04 |
| | 15 | 30 | 30 | 99.61 | 20 | 474.39 |
| | 20 | 30 | 25 | 302.54 | 21 | 602.77 |
| 50,000 | 10 | 30 | 30 | 17.91 | 25 | 321.88 |
| | 15 | 30 | 30 | 99.65 | 17 | 588.93 |
| | 20 | 30 | 27 | 327.15 | 11 | 808.23 |
| 70,000 | 10 | 30 | 30 | 22.91 | 23 | 534.98 |
| | 15 | 30 | 30 | 126.15 | 11 | 797.32 |
| | 20 | 30 | 27 | 335.67 | 5 | 923.67 |

| Demand points | D | # | BEN # opt | BEN t (s) | CPX # opt | CPX t (s) |
|---|---|---|---|---|---|---|
| 30,000 | 50% | 30 | 30 | 12.21 | 30 | 94.88 |
| | 60% | 30 | 30 | 52.60 | 30 | 168.03 |
| | 70% | 30 | 30 | 151.17 | 30 | 274.32 |
| 50,000 | 50% | 30 | 30 | 24.82 | 30 | 248.57 |
| | 60% | 30 | 29 | 93.50 | 27 | 484.62 |
| | 70% | 30 | 29 | 318.46 | 22 | 655.07 |
| 70,000 | 50% | 30 | 30 | 25.14 | 29 | 478.35 |
| | 60% | 30 | 28 | 136.00 | 18 | 785.11 |
| | 70% | 30 | 24 | 490.63 | 8 | 914.20 |

| Demand points | B | # | BEN # opt | BEN t (s) | CPX # opt | CPX t (s) |
|---|---|---|---|---|---|---|
| 100,000 | 2 | 30 | 30 | 16.00 | 23 | 1573.58 |
| | 4 | 30 | 30 | 202.96 | 11 | 2894.60 |
| | 6 | 30 | 28 | 1256.35 | 8 | 3109.51 |
| 150,000 | 2 | 30 | 30 | 35.20 | 12 | 2937.76 |
| | 4 | 30 | 30 | 417.05 | 6 | 3456.12 |
| | 6 | 30 | 22 | 1840.50 | 1 | 3569.20 |
| 200,000 | 2 | 30 | 30 | 49.12 | 9 | 3280.43 |
| | 4 | 30 | 30 | 624.40 | 0 | 3600.00 |
| | 6 | 30 | 20 | 2359.01 | 0 | 3600.00 |

| Demand points | D | BEN # opt | BEN t (s) | CPX # opt | CPX t (s) |
|---|---|---|---|---|---|
| 100,000 | 10% | 30 | 9.05 | 30 | 206.62 |
| | 30% | 22 | 977.03 | 22 | 1280.16 |
| | 50% | 16 | 1930.91 | 16 | 2713.50 |
| 150,000 | 10% | 30 | 19.51 | 29 | 433.24 |
| | 30% | 21 | 1146.02 | 21 | 1904.84 |
| | 50% | 10 | 2745.02 | 9 | 3260.81 |
| 200,000 | 10% | 30 | 27.47 | 28 | 725.26 |
| | 30% | 20 | 1224.67 | 18 | 2564.32 |
| | 50% | 7 | 2927.88 | 0 | 3600.00 |

| | B | # | BEN | TABU |
|---|---|---|---|---|
| 10,000 | 10 | 30 | 278,624.63 | 282,947.70 |
| | 15 | 30 | 376,182.40 | 328,145.03 |
| | 20 | 30 | 436,640.17 | 340,717.60 |
| 15,000 | 10 | 30 | 416,225.57 | 400,157.03 |
| | 15 | 30 | 560,630.20 | 455,275.20 |
| | 20 | 30 | 654,453.27 | 474,509.17 |
| 20,000 | 10 | 30 | 552,271.83 | 504,916.73 |
| | 15 | 30 | 747,655.07 | 561,071.20 |
| | 20 | 30 | 871,002.17 | 578,468.43 |

| | D | # | BEN | TABU |
|---|---|---|---|---|
| 10,000 | 50% | 30 | 29.57 | 32.80 |
| | 60% | 30 | 36.97 | 41.00 |
| | 70% | 30 | 45.03 | 49.93 |
| 15,000 | 50% | 30 | 29.33 | 32.87 |
| | 60% | 30 | 36.43 | 40.77 |
| | 70% | 30 | 44.90 | 50.10 |
| 20,000 | 50% | 30 | 29.73 | 32.80 |
| | 60% | 30 | 36.97 | 40.67 |
| | 70% | 30 | 45.67 | 50.20 |

| | B | # | BEN # opt | BEN t (s) | CPX # opt | CPX t (s) |
|---|---|---|---|---|---|---|
| 80 | 2 | 30 | 30 | 3.19 | 24 | 1658.09 |
| | 4 | 30 | 30 | 18.92 | 4 | 3380.11 |
| | 6 | 30 | 30 | 159.59 | 3 | 3388.45 |
| 100 | 2 | 30 | 30 | 6.52 | 15 | 2574.98 |
| | 4 | 30 | 30 | 64.43 | 2 | 3461.90 |
| | 6 | 30 | 26 | 968.06 | 0 | 3600.00 |
| 120 | 2 | 30 | 30 | 14.53 | 14 | 2591.83 |
| | 4 | 30 | 30 | 377.51 | 0 | 3600.00 |
| | 6 | 30 | 14 | 2444.75 | 0 | 3600.00 |

| | D | BEN # opt | BEN t (s) | CPX # opt | CPX t (s) |
|---|---|---|---|---|---|
| 80 | 10% | 30 | 9.41 | 30 | 163.33 |
| | 30% | 29 | 456.13 | 28 | 1257.67 |
| | 50% | 2 | 3426.31 | 2 | 3391.14 |
| 100 | 10% | 30 | 16.58 | 30 | 311.83 |
| | 30% | 26 | 959.03 | 22 | 1922.39 |
| | 50% | 0 | 3600.00 | 0 | 3600.00 |
| 120 | 10% | 30 | 26.11 | 30 | 146.35 |
| | 30% | 24 | 1153.82 | 21 | 1929.45 |
| | 50% | 0 | 3600.00 | 0 | 3600.00 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, G.; Li, Y.; Zhang, W.; Chen, S. Benders Decomposition Approach for Generalized Maximal Covering and Partial Set Covering Location Problems. Symmetry 2025, 17, 1417. https://doi.org/10.3390/sym17091417