Abstract

To improve visual quality in high dynamic range (HDR) scenes while avoiding the motion ghosting artifacts caused by exposure time differences, innovative image sensors capture a registered pair of extreme-exposure-ratio (EER) images with complementary and symmetric exposure configurations for high dynamic range imaging (HDRI). However, existing multi-exposure fusion (MEF) algorithms suffer from luminance inversion artifacts in overexposed and underexposed regions when such EER image pairs are combined directly. This paper proposes a neural network-based HDRI framework that uses attention mechanisms and edge assistance to recover the missing luminance information. The framework derives local luminance representations from a convolution kernel perspective and then refines the global luminance order in the fused image using a Transformer-based residual group. To support this two-stage process, multi-scale channel features are extracted with a double-attention mechanism, while edge cues are incorporated to enhance detail preservation in both highlight and shadow regions. Experimental results show that the proposed framework alleviates luminance inversion in HDRI when the inputs are two EER images and maintains fine structural details in complex HDR scenes.

1. Introduction

In natural conditions, the dynamic range of a scene can reach up to ten orders of magnitude. However, mainstream image capture and display devices can only acquire and present low dynamic range (LDR) content. HDRI technology addresses this limitation, benefiting applications in medical imaging, security, remote sensing, and industrial inspection. Conventional HDR techniques expand the luminance, contrast, and color gamut of the synthesized image by merging images with diverse exposure settings, significantly enhancing the realism and immersion of visual experiences. To generate HDR images, various capture methods have emerged, such as staggered HDR [], temporal exposure control [], and global shutter with regional exposure []. These state-of-the-art techniques can obtain information at different exposure times in a single shot, effectively preventing the motion artifacts that often arise from multi-exposure image synthesis in HDRI [,,,].

However, capturing images with widely different exposures often comes at the cost of spatial resolution. Thus, it is typical to obtain a pair of EER images consisting of one long-exposure image and one short-exposure image with symmetric and complementary exposure settings, as shown in Figure 1a. A larger exposure interval is deliberately applied to the EER images to capture a wider dynamic range, leaving hardly any overlap between the radiance ranges of the two images. Consequently, the pixel values in the highlight areas of the short-exposure image can be lower than those in the dark regions of the long-exposure image, and MEF algorithms based on pyramid weight maps (e.g., [,,]) may produce luminance inversion artifacts that degrade visual realism. As illustrated in Figure 1b, the outdoor areas appear brighter than the indoor areas at night.

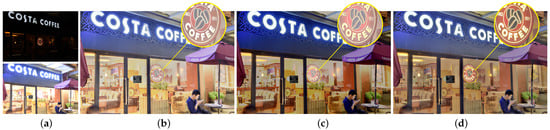

Figure 1.

Methods for reducing luminance inversion artifacts, applied to two EER images that we captured. (a) The two input EER images. (b) The fused image obtained with Kou []. (c) The fused image obtained with Deepfuse []. (d) The fused image obtained with our framework.

Recent research has focused on designing end-to-end deep approaches for MEF that leverage data captured at varying exposure times to address luminance inversion artifacts [,,,,]. These methods have proven effective in reducing such luminance reversal issues. However, as shown in Figure 1c, these convolution-based methods cannot preserve the sharp contrast between high- and low-brightness areas. By focusing on local regions, CNNs are able to detect and combine fine-grained details from different exposures effectively. When fusing two EER images, however, it is critical to consider not only localized features but also the overall contextual cues contained in the image. In contrast to CNNs, Transformer models have demonstrated effectiveness in a wide range of vision tasks, owing to the self-attention mechanism that captures long-range spatial dependencies between features [,,]. Intuitively, this attention structure can model the global brightness representation, making it advantageous for fusing two EER images. Nevertheless, self-attention is generally applied to small independent patches, which can produce artifacts in image-related tasks. To address this issue, SwinIR [] proposed a Swin Transformer model for image restoration [], which adopts a shifted window scheme to capture long-range dependencies. Despite the use of window-based Transformers for image tasks, blocking artifacts persist in the smooth areas of the images. This occurs because the shifted window strategy fails to establish adequate connections between adjacent local windows.

This paper proposes an HDRI framework based on an attention-edge-assisted neural network (AEAN) to fuse two registered EER images. The network combines convolutional kernels with a multi-head attention mechanism to enhance both localized and holistic feature representation. We first use a residual network to learn coarse fusion features. Because the representation ability of this preliminary fusion is limited, the coarse fusion result can be regarded as a noisy version of the real image. Therefore, this paper embeds the Swin Transformer into a residual CNN group (STRCG) with short skip connections, which retain shallow information and pass it to the deep layers so that the network learns the luminance differences between images with different exposures. Simultaneously, residual CNNs are injected into the Swin Transformer block (CSTB) so that the fused image represents both global brightness smoothness and local detail features. Specifically, a multi-scale spatial-channel attention (MSCA) module and an edge-assisted enhancement (EH) module are designed in the CSTB structure to extract features with stronger representation ability. Notably, the entire framework is cascaded through residual structures, so that the fused features have sufficient multipath transmission ability. As a result, the algorithm can suppress both halo artifacts and luminance inversion artifacts, as demonstrated in Figure 1d, outperforming methods that rely solely on CNNs. In addition to the network structure, we design loss functions that maintain color realism and the spatial consistency of brightness. We use a color cosine loss to reduce the risk of color inconsistency in the fused output, and SSIM and spatial loss functions to enhance spatial coherence. Experimental results indicate that AEAN effectively suppresses luminance inversion artifacts while maintaining details in shadow and highlight areas. Our main contributions are as follows:

- An attention-edge-assisted neural framework is proposed to fuse two EER images, which preserves the information of the highlight and shadow areas and avoids halo-like artifacts present in the fusion output.

- To retain the overall contrast structure while enhancing fine local details of the fused image, we embed CNNs into the Transformer module as the core component. We combine the dual attention mechanism and residual gradient information in parallel with the window-based multi-head attention, taking into account both local and long-range information of the input images.

2. Related Works

Under the assumption that the scenes in all images to be fused are aligned, many MEF algorithms for HDR imaging have been proposed. The core objective of these algorithms is to integrate information from all input exposure images as much as possible to generate a high-definition image. Existing MEF methods include traditional algorithms, CNN-based frameworks, and Transformer-based architectures.

The classic traditional MEF method in [] decomposed the image into a pyramid and fused the features at each scale level to maintain the multi-scale expression of the image. Then, at each scale level, an appropriate fusion strategy was adopted and weights were carefully designed to fuse pixels in different exposure images at multiple scales. Finally, the fused image was reconstructed to produce the final high-quality output. This approach captures a wider range of brightness and provides richer colors in the fused image, allowing details in both dark and bright areas to be visible. However, the algorithm in [] faces a fundamental difficulty in preserving information from both the highlights and shadows of an HDR scene []. As shown in [], such pyramid-based methods [,,,] can solve this problem, but they also suffer from brightness reversal in overexposed areas. Adding a suitable virtual exposure image into the input sequence has proven to be one of the effective methods to solve the luminance reversal problem [,,].

CNN-based methods automatically extract features and learn patterns from images, significantly improving the fusion result. DeepFuse [], the first CNN for MEF, obtained a richly textured image by decomposing the images into YUV space and then fusing luminance cues from two LDR images using an end-to-end framework. Since then, a large number of MEF algorithms that combine traditional models with CNNs have been proposed. Similar to [], Wu et al. proposed a DMEF network [], which takes the initial illumination map obtained from the Retinex model [] as the input of a CNN in order to learn the fused illumination map. Ma et al. [] introduced MEF-Net, where the weight map is learned from downsampled images via unsupervised learning on top of MEF-SSIM [] and then upsampled to full size via []. Moreover, Jiang et al. [] proposed a fast MEF method, which uses a one-dimensional lookup table that takes pixel values as input to produce fusion weights. The lookup table is learned for each exposure using unsupervised learning and GGIF. Zheng [] proposed a filter prediction-driven fusion paradigm that generates spatially flexible filters from level-by-level feature representations to carry out pixel-level dynamic fusion. The algorithms in [,,] calculate the filter coefficients only once, which means that they may not transfer the structure of the brightness component to the weight map well. There are also methods that obtain information-rich fused images by improving the network structure, such as MEF-GAN [] and MIN-MEF []. These interactive methods learn brightness information and may not preserve scene depth and local contrast well. They perform well if enough images are captured and if the captured images cover as much of the radiance in the HDR scene as possible. Unfortunately, this is not always the case. For example, the brightness in overexposed and underexposed areas of the fused image might be inverted if only two exposure images of an HDR scene are fused.

The self-attention mechanism in the Transformer captures long-range spatial correlations across multi-exposure images. Therefore, compared with CNNs, which focus on processing local information, Transformers are able to model the global features of images, avoiding possible missed details or artifacts. Vs et al. [] proposed an MEF framework named IFT by training an auto-encoder at multiple scales and using a spatial Transformer fusion strategy. Qu et al. [] proposed a framework entitled TransMEF by introducing self-supervised learning and multi-task learning. Liu et al. [] designed an encoder that integrates CNNs and a Transformer to capture multi-level abstractions while incorporating holistic context. These Transformer-based methods use the self-attention mechanism to extract as many features as possible. IFT [] and TransMEF [] focus on the applicability of the Transformer module to MEF, while Liu et al. [] focus on using the CNN and the Transformer to extract short- and long-distance features, respectively. In short, the above methods aim to compensate for the weakness of CNNs in long-range feature extraction, but directly using a Transformer produces block artifacts in the fused image. To address this issue, the proposed AEAN algorithm first applies a CNN to perform coarse fusion of the two EER images, followed by a coarse-to-fine fusion strategy that integrates Transformer and CNN frameworks. The approach leverages multi-scale spatial attention and edge-assisted features, enabling the model to produce a single, information-rich fused image that faithfully replicates the radiometric characteristics of the HDR scene.

3. The Proposed AEAN Method

In this part, Section 3.1 first outlines the fusion process of two EER images. Section 3.2 introduces the proposed attention-edge-assisted neural (AEAN) HDRI method, which learns both global and detailed fusion representations from low-exposure and high-exposure images. Finally, Section 3.3 describes the training strategy and objectives.

3.1. Motivation and Overview

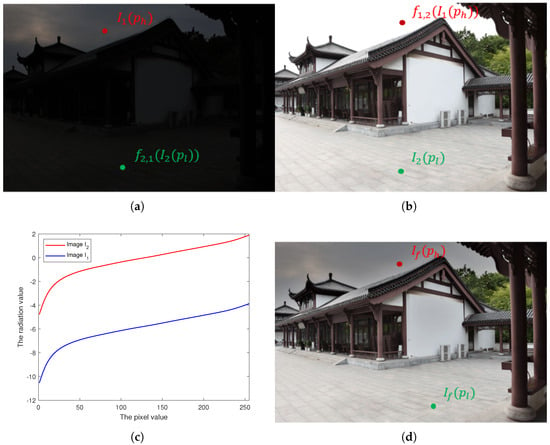

Consider a pair of EER images $I_1$ and $I_2$ with exposure times $\Delta t_1$ and $\Delta t_2$, respectively, where $\Delta t_1 < \Delta t_2$. Let $\Lambda_{1,2}$ be the pixel mapping function from image $I_1$ to image $I_2$. This function is the CRF inverse mapping and can be easily calculated by the algorithms in [,]. When the radiance ranges of the two images hardly overlap, as shown in Figure 2c, the relationship between pixels in the two images may be

$$I_1(p) < I_2(q),$$

where $p$ and $q$ denote a highlight pixel in image $I_1$ and a low-light pixel in image $I_2$, respectively (see Figure 2a,b), and $I_1(p)$ and $I_2(q)$ are their corresponding intensity values. The exposure time does not change the holistic luminance distribution across the image: the high-brightness area is still the brightest in the image when the exposure time is very short, and the same holds for the low-brightness area. However, if we directly fuse these two images, the pixel brightness of the fused image $I_f$, as demonstrated in [], will be reversed as

$$I_f(p) < I_f(q),$$

as in the example shown in Figure 2d. Overall, accounting for both global luminance and local detail preservation when fusing two EER images is challenging. When there is hardly any overlap in the radiance ranges of the two images, directly fusing them introduces luminance order inversion (see Figure 2d), and using only convolution-based modules for fusion limits global brightness smoothing (see Figure 1c). Although convolution maintains good gradient details, the overall contrast between the bright and shadow parts of the fused result is lost. To accurately extract complementary information from both EER images, the proposed exposure fusion method aims to fuse the pair into a single information-rich image while preserving both global and local brightness. Therefore, we first propose STRCG, which incorporates the Swin Transformer into the residual network structure to capture the global contrast between bright and dark areas. In each STRCG, we then design several CSTB modules, which embed CNNs within the Swin Transformer to reduce the blocking artifacts associated with the window-based Transformer and to learn multi-scale gradient detail features.
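The inversion described above can be illustrated with a small numerical sketch. Everything in it is an assumption made for illustration and is not taken from the paper: the gamma-style toy response `toy_crf`, the radiance values, the exposure times, and the single-scale Gaussian weighting `naive_fuse`, which stands in for a weight-map-based fusion without the pyramid.

```python
import numpy as np

def toy_crf(radiance, exposure_time, gamma=2.2):
    """Toy camera response: scale by exposure time, clip to [0, 1], gamma-encode."""
    exposure = np.clip(radiance * exposure_time, 0.0, 1.0)
    return exposure ** (1.0 / gamma)

def well_exposedness(z, sigma=0.2):
    """Gaussian weight that favors mid-range pixel values."""
    return np.exp(-((z - 0.5) ** 2) / (2.0 * sigma ** 2))

def naive_fuse(z_short, z_long):
    """Per-pixel weighted average of the two exposures (single scale, no pyramid)."""
    w_short = well_exposedness(z_short)
    w_long = well_exposedness(z_long)
    return (w_short * z_short + w_long * z_long) / (w_short + w_long)

# Hypothetical scene: index 0 is a highlight pixel, index 1 is a shadow pixel.
radiance = np.array([200.0, 1.0])
t_short, t_long = 1.0 / 4000.0, 1.0 / 4.0  # exposure ratio of 1000

z_short = toy_crf(radiance, t_short)
z_long = toy_crf(radiance, t_long)
fused = naive_fuse(z_short, z_long)

# The highlight in the short exposure is darker than the shadow in the long
# exposure, and the weighted average inherits that reversed order.
print("short exposure:", z_short)
print("long exposure: ", z_long)
print("fused:         ", fused)
print("order reversed:", bool(fused[0] < fused[1]))
```

In this toy setting the highlight pixel has the larger radiance, yet it ends up darker than the shadow pixel after fusion, which is the luminance order inversion that the framework is designed to avoid.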

Figure 2.

An example of two EER images and the outcome of their direct fusion. (a) The low-exposure image. (b) The high-exposure image. (c) The mapping between scene radiance and pixel intensity values at the exposure times in (a,b), calculated from the CRF. (d) The image obtained by directly fusing (a,b).

3.2. Structure of the Proposed AEAN Method

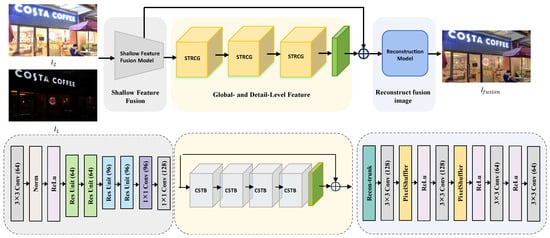

This subsection presents the entire architecture of the proposed AEAN method. As shown in Figure 3, the proposed method comprises a three-stage framework, including shallow feature fusion, global and detail level feature enhancement, and fused image reconstruction.

Figure 3.

Overview of the proposed network structure for EER image fusion.

Taking two EER images as an example, AEAN takes inputs of size $H \times W \times 3$, where H and W are the height and the width of the input, and 3 corresponds to the color channels. As shown in Figure 3, a shallow feature fusion is first performed, consisting of a convolution followed by a normalization layer and an activation function, four residual blocks (two at each of two resolutions), and one convolution. An additional convolution is then applied for preliminary fusion, producing the rough fusion feature. Next, the global and detail level feature enhancement strategies are applied through the Swin Transformer combined residual CNN group (STRCG) to further learn the global brightness and detail fusion features of the fused image. The core part of STRCG is the residual CNN-embedded Swin Transformer block (CSTB). In the CSTB, we use CNN self-supervised learning to preserve the local texture features. Meanwhile, we use the STRCG to supplement the missing global brightness information of the two EER images. Details of CSTB can be found in Section 3.2.2. The features are then integrated through a global residual connection that combines the shallow features with the global and detail fusion outputs. Finally, the image reconstruction module generates the final fused image. It consists of a reconstruction trunk (Recon-trunk) composed of 10 residual blocks, followed by two upsampling layers. Each upsampling layer includes a convolution, a PixelShuffle operation with an upscale factor of 2 for spatial resolution enhancement, and a ReLU activation function. The reconstruction process concludes with a final convolution layer that produces the fused image. Next, we explain the structures of STRCG and CSTB from the perspectives of global-level design and detail-level design, respectively.
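The PixelShuffle step in each upsampling layer can be sketched in NumPy as a pure rearrangement of channels into space. This is a minimal illustration of the standard operation with an upscale factor of 2; the function name `pixel_shuffle`, the channel-first layout, and the toy tensor are assumptions, and the convolution and ReLU that surround it in the paper are omitted.

```python
import numpy as np

def pixel_shuffle(x, upscale=2):
    """Rearrange (C * r^2, H, W) features into (C, H * r, W * r)."""
    c_in, h, w = x.shape
    r = upscale
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by upscale**2")
    c_out = c_in // (r * r)
    x = x.reshape(c_out, r, r, h, w)   # axes: (c, i, j, h, w)
    x = x.transpose(0, 3, 1, 4, 2)     # axes: (c, h, i, w, j)
    return x.reshape(c_out, h * r, w * r)

# Toy feature map with 4 channels of size 2 x 3 (hypothetical values).
features = np.arange(4 * 2 * 3, dtype=np.float32).reshape(4, 2, 3)
upsampled = pixel_shuffle(features, upscale=2)
print(features.shape, "->", upsampled.shape)
```

Applying two such layers in sequence, each preceded by a convolution that supplies the extra channels, enlarges the spatial resolution by a factor of 4 in each dimension.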

3.2.1. Global-Level Design

At the global level, we incorporate the Transformer into ResNet to learn the global brightness characteristics. The global level consists of three STRCGs and one convolutional layer, as shown in Figure 3. Given an input feature $F_0$, the output of each STRCG is computed as

$$F_i = H_{\mathrm{STRCG}_i}(F_{i-1}), \quad i = 1, 2, 3,$$

where $H_{\mathrm{STRCG}_i}(\cdot)$ refers to the $i$-th STRCG. The output then passes through the convolution layer and a residual addition to obtain the output of the global level:

$$F_G = H_{\mathrm{conv}}(F_3) + F_0.$$

In particular, each STRCG has four CSTBs; that is, for a given input feature $F_{i,j-1}$ of the $j$-th CSTB in the $i$-th STRCG, the intermediate output feature is

$$F_{i,j} = H_{\mathrm{CSTB}_{i,j}}(F_{i,j-1}), \quad j = 1, 2, 3, 4,$$

where $H_{\mathrm{CSTB}_{i,j}}(\cdot)$ is the $j$-th CSTB in the $i$-th STRCG and $F_{i,0} = F_{i-1}$. After the CSTBs, a convolution layer is applied before the residual connection of the STRCG, as formulated below:

$$F_i = H_{\mathrm{conv}_i}(F_{i,4}) + F_{i,0},$$

where $F_{i,4}$ is the output of the last CSTB in the $i$-th STRCG. The whole process is similar to the CNN-injected Transformer in []; thus, the global contrast of the fused image is well preserved.
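The nesting of residual connections at the global level (three STRCGs, each chaining four CSTBs followed by a convolution and a skip connection, then a final convolution with a global skip connection) can be sketched as plain function composition. This is only a structural sketch: the names `strcg` and `global_level` are hypothetical, and the blocks and convolutions are passed in as arbitrary callables rather than implemented.

```python
import numpy as np

def strcg(x, cstb_blocks, conv):
    """One group: chain the CSTBs, apply a convolution, add the group input back."""
    y = x
    for block in cstb_blocks:
        y = block(y)
    return conv(y) + x

def global_level(x, groups, final_conv):
    """Chain the groups, apply a final convolution, add the global input back."""
    y = x
    for cstb_blocks, conv in groups:
        y = strcg(y, cstb_blocks, conv)
    return final_conv(y) + x

def identity(t):
    """Placeholder standing in for a CSTB or a convolution layer."""
    return t

# Three groups of four placeholder blocks, mirroring the counts in the text.
groups = [([identity] * 4, identity) for _ in range(3)]
x = np.ones((8, 8, 16))
out = global_level(x, groups, identity)
print(out[0, 0, 0])
```

With identity placeholders every group doubles its input through the skip connection, which makes the two levels of residual paths easy to trace before real layers are substituted.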

3.2.2. Detail-Level Design

At the detail level, we embed the CNNs into Swin Transformer block (CSTB) to efficiently leverage multi-scale texture and global contrast from different images, and the structure of CSTB is shown in Figure 4. We propose multi-scale spatial-channel attention and edge-assisted enhancement (MSCA-EH) to preserve edge details while effectively utilizing spatial and exposure time information from inputs. As part of MSCA-EH, a window-based Transformer architecture is selected as the core module component to acquire holistic context. As presented in Figure 4, our MSCA mainly consists of multi-scale spatial attention (MSA), channel attention (CA), and window-based multi-head self-attention (W-MSA). In addition, different from the commonly used spatial-channel attention structure, the edge-assisted enhancement (EH) is multiplied after the attention to effectively improve the details of the fused result.
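Window-based attention operates on tokens grouped into non-overlapping windows, and shifted windows are commonly obtained with a cyclic shift of the feature map before partitioning. The sketch below shows only this bookkeeping in NumPy, following the usual Swin Transformer convention; the function names, the channel-last layout, and the window size of 4 are assumptions, and the attention computation itself is not included.

```python
import numpy as np

def window_partition(x, window_size):
    """Split an (H, W, C) feature map into non-overlapping windows of tokens."""
    h, w, c = x.shape
    if h % window_size or w % window_size:
        raise ValueError("H and W must be multiples of window_size")
    x = x.reshape(h // window_size, window_size, w // window_size, window_size, c)
    x = x.transpose(0, 2, 1, 3, 4)  # group the two window-index axes together
    return x.reshape(-1, window_size * window_size, c)

def cyclic_shift(x, window_size):
    """Roll the map by half a window so that new windows straddle old borders."""
    shift = window_size // 2
    return np.roll(x, shift=(-shift, -shift), axis=(0, 1))

feat = np.arange(8 * 8 * 2).reshape(8, 8, 2)
windows = window_partition(feat, 4)
shifted_windows = window_partition(cyclic_shift(feat, 4), 4)
print(windows.shape, shifted_windows.shape)
```

Each window is processed independently by self-attention, which is why connections across window borders depend on the shifted partition and on the convolutional branches embedded in the block.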

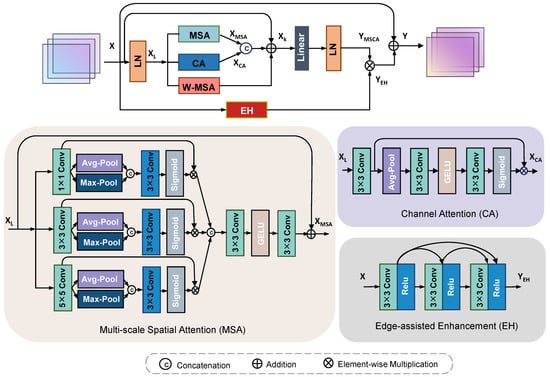

Figure 4.

The structure of the residual CNN-embedded Swin Transformer block (CSTB).

This embedding process can be formulated as

where , denote the output of MSCA, EH, and X is the initialization feature of the input in CSTB. Figure 4 displays the detailed configuration of the CSTB.

- Multi-scale Spatial Attention. Given the shallow fusion feature of two EER images, multi-scale spatial attention (MSA), followed by layer normalization (LN), is designed to further fuse them in a coarse-to-fine manner. As presented in Figure 4, the input is first split into three branches, each undergoing initial feature extraction with a convolution of a different kernel size. After each convolution, average pooling and max pooling are applied to capture global spatial information at different receptive fields. The pooled features from each branch are concatenated along the channel dimension and then passed through a convolution followed by a Sigmoid activation to generate the spatial attention map corresponding to each scale. The multi-branch attention maps are further concatenated and processed by a convolution, a GELU activation, and another convolution for feature calibration. Finally, a residual connection adds the calibrated features to the input, producing the enhanced feature. Similar to the algorithms in [,], the proposed algorithm is not based on weight maps like the algorithms in [,]. Therefore, the proposed method and the algorithms in [,] can effectively avoid brightness reversal artifacts in the fused images.

- Channel Attention. The channel attention (CA) is inserted in parallel with W-MSA and MSA. As shown in Figure 4, the input first passes through a convolution. Global context is captured via average pooling and is then processed by another convolution with GELU activation to model non-linear channel dependencies. A third convolution followed by a Sigmoid generates channel-wise attention weights, which are multiplied with the features to produce the refined output. The whole process is similar to the hierarchical Transformer in [,]: the flattened token sequence is reshaped back to the spatial grid to refine the local information, which reduces the blocking effect caused by the window partition mechanism. At the same time, because CA contains global information, introducing CA dynamically adjusts the weight of each channel so that more pixels are activated. Similar to [], we alternate regular and shifted W-MSA in consecutive CSTBs in a periodic manner.
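The channel attention branch described above can be sketched in NumPy as follows. This is a hedged illustration rather than the authors' layer: the convolutions are assumed to be 1x1 (written as matrix products over channels), the weights are random, the channel reduction ratio of 4 is hypothetical, and multiplying the attention weights with the output of the first convolution is one reading of the description.

```python
import numpy as np

def gelu(x):
    """Tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w_in, w_squeeze, w_excite):
    """x: (C, H, W). Each weight matrix acts as a 1x1 convolution (assumed)."""
    feat = np.einsum("oc,chw->ohw", w_in, x)   # first convolution
    pooled = feat.mean(axis=(1, 2))            # global average pooling, shape (C,)
    hidden = gelu(w_squeeze @ pooled)          # second convolution with GELU
    attention = sigmoid(w_excite @ hidden)     # third convolution with Sigmoid
    refined = feat * attention[:, None, None]  # channel-wise reweighting
    return refined, attention

rng = np.random.default_rng(0)
channels, reduced = 8, 2                        # hypothetical reduction ratio of 4
x = rng.normal(size=(channels, 16, 16))
w_in = rng.normal(size=(channels, channels)) * 0.1
w_squeeze = rng.normal(size=(reduced, channels)) * 0.1
w_excite = rng.normal(size=(channels, reduced)) * 0.1

refined, attention = channel_attention(x, w_in, w_squeeze, w_excite)
print(refined.shape, attention.shape)
```

Because the attention weights come from globally pooled statistics, every spatial position in a channel is scaled by the same factor, which is how this branch injects global information alongside the window-based attention.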

Then, the dual attention block MSCA can be described as

where , , and denote the output of W-MSA, MSA, and CA.

- Edge-assisted Enhancement. To refine local image details and facilitate global exposure fusion, edge-assisted enhancement (EH) is incorporated in parallel with the Transformer module. As shown in Figure 4, the input X goes through three convolutions with ReLU activations, with skip connections from the first two layers to the third. Similar to reference [], adding detail features after fusion can sharpen the image and improve the rendering of gradient textures. We apply the instance normalization (IN) in [] to half of the channels, while the other half preserve their original context through identity (ID) mapping.
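The half instance normalization used in the EH branch can be sketched as below. The sketch assumes a channel-first layout, normalizes the first half of the channels (which half is normalized is an assumption), and omits the learnable affine parameters that an instance normalization layer may carry.

```python
import numpy as np

def half_instance_norm(x, eps=1e-5):
    """x: (C, H, W). Normalize the first C // 2 channels; pass the rest through."""
    half = x.shape[0] // 2
    normed, kept = x[:half], x[half:]
    mean = normed.mean(axis=(1, 2), keepdims=True)  # per-channel spatial mean
    var = normed.var(axis=(1, 2), keepdims=True)    # per-channel spatial variance
    normed = (normed - mean) / np.sqrt(var + eps)
    return np.concatenate([normed, kept], axis=0)

rng = np.random.default_rng(0)
feature_map = rng.normal(loc=3.0, scale=2.0, size=(8, 16, 16))
mixed = half_instance_norm(feature_map)
print(mixed[:4].mean(axis=(1, 2)).round(6), mixed[:4].std(axis=(1, 2)).round(3))
```

Keeping half of the channels on an identity path preserves the original intensity statistics, while the normalized half provides features that are less sensitive to the exposure level of the input.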

The proposed AEAN is built upon the CSTB, which has two appealing advantages. First, the CSTB adopts a residual network architecture, which reuses features to mitigate vanishing gradients. Second, the MSCA-EH structure within the CSTB suppresses non-essential features and selectively propagates only high-information features, which improves the quality of the fused image. As illustrated in Figure 4, the CSTB mainly includes W-MSA, MSA, CA, and EH. It combines a CA block and an MSA block, which operate on channel-wise and pixel-wise features, respectively, so that the CSTB processes distinct features and pixels differently and adapts better to heterogeneous types of information. Moreover, the proposed multi-scale structure is more conducive to preserving fine details and scene depth in the fused image.

3.3. Training Procedure and Objectives

The proposed AEAN is trained end to end. When there is hardly any radiance range overlap between the two input images, directly fusing them results in imbalanced brightness across the image. Therefore, the essence of the proposed AEAN is to recover the lost brightness details of the two images. Similar to [,], which simulate the lost brightness information by interpolating virtual exposure images, the proposed framework uses a set of real exposure images as ground truth so that AEAN can learn the illumination cues missing from the inputs. Inspired by the algorithms in [], the loss functions are defined on the fused image, ensuring that the result closely resembles the set of input images. Our reference fused images are created from multiple real exposure images, whereas the network takes only two EER images as input. The overall training objective is thus defined as

where is the rebuilding loss, is the consistency constraint, and is the color loss. Color loss is inherited from [], since the EER image fusion problem is similar to a dark image brightened in terms of color goals. Additionally, the similarity constraint , taken from [], effectively measures the quality of MEF results and is also employed as an objective function. We empirically set the weights and . The description is given as follows.

- Rebuilding loss. The loss function is defined between the reconstructed pixels in the fused images and the ground truth images as follows:

- Similarity constraint. We use SSIM between the fused images and the reference images to constrain the coherence of the intensity, contrast, and structure. The SSIM loss is defined as

where N denotes the total pixel count of the input. The loss uses the mean intensities of the fused image and the reference image, their variances (a higher contrast indicates a pixel of higher quality), and the covariance between the two images. Two small positive constants are included to prevent numerical instability.

- Color loss. In the RGB color space, the cosine of the angle between the three-channel color vectors can be used to describe the consistency of color. To avoid potential color distortion and to maintain the inter-channel relationships, we utilize the color loss as

where the two terms indicate the pixel values of the h-th color channel in the fused output and the target image, respectively. The color channel h ranges over the three color channels (R, G, B).
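The similarity and color terms can be sketched in NumPy as follows. These are illustrative forms only, since the exact definitions are not recoverable from the text above: the SSIM here uses whole-image statistics rather than a sliding window, the constants `c1` and `c2` are the commonly used defaults for images in [0, 1], and the color loss is taken as one minus the cosine similarity of per-pixel RGB vectors averaged over all pixels.

```python
import numpy as np

def color_cosine_loss(fused, target, eps=1e-8):
    """Mean of (1 - cosine similarity) between per-pixel RGB vectors, (H, W, 3) inputs."""
    dot = np.sum(fused * target, axis=-1)
    norms = np.linalg.norm(fused, axis=-1) * np.linalg.norm(target, axis=-1)
    return float(np.mean(1.0 - dot / (norms + eps)))

def global_ssim(x, y, c1=0.01 ** 2, c2=0.03 ** 2):
    """SSIM from whole-image means, variances, and covariance (no sliding window)."""
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))
    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)

def ssim_loss(fused, target):
    """Loss that decreases as structural similarity increases."""
    return 1.0 - global_ssim(fused, target)

rng = np.random.default_rng(0)
target = rng.uniform(0.1, 1.0, size=(16, 16, 3))
darker = 0.5 * target  # same color direction, lower brightness

print(color_cosine_loss(darker, target))  # close to zero: hue is unchanged
print(ssim_loss(darker, target))          # positive: intensity and contrast differ
```

The example shows why the two terms complement each other: a uniformly darker image is not penalized by the cosine color term, which only measures the angle between color vectors, but it is penalized by the SSIM term.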

4. Experiments and Results

The proposed AEAN is trained on the dataset collected by [,]. The dataset provides 800 8-bit sRGB images under diverse exposure configurations. To ensure that the images depict static scenes, camera shake and object movement were strictly controlled during acquisition. A random split of the dataset assigns 700 sequences to the training set and the remaining 100 to the test set. Without loss of generality, for each scene, we select the images with the shortest and longest exposure times as inputs, and the fused image generated from all exposure images is used as the ground truth. To validate the generalization capability of the ten MEF methods, qualitative and quantitative experiments are also conducted on two datasets (i.e., [] and MEFB []). The dataset in [] is a classic dataset for traditional MEF algorithms. The MEFB database [] encompasses a diverse set of image types, including numerous images commonly used in recent fusion algorithms. During the training phase, the EER inputs are carefully aligned across exposures and then randomly cropped or resized to fixed-size blocks to ensure consistency. The batch size is set to 1. The learning rate is decayed from its initial value following a cosine annealing schedule over 1000 epochs of training with the Adam optimizer. We train our model on two NVIDIA 2080 Ti GPUs.
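A cosine annealing schedule over 1000 epochs can be sketched as below. The initial learning rate of `1e-4` and the minimum of zero are placeholder assumptions chosen for illustration; they are not values taken from the paper.

```python
import math

def cosine_annealing_lr(epoch, total_epochs, lr_init, lr_min=0.0):
    """Learning rate at a given epoch under a single cosine annealing cycle."""
    cos_term = 0.5 * (1.0 + math.cos(math.pi * epoch / total_epochs))
    return lr_min + (lr_init - lr_min) * cos_term

# Hypothetical initial rate; the schedule length matches the 1000 training epochs.
schedule = [cosine_annealing_lr(e, 1000, lr_init=1e-4) for e in range(1001)]
print(schedule[0], schedule[500], schedule[1000])
```

The rate falls slowly at first, fastest around the midpoint, and slowly again near the end, which gives the optimizer small steps during the final epochs.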

4.1. Evaluation of Different MEF Algorithms

Our AEAN algorithm is first evaluated in comparison with four conventional MEF algorithms in [,,,] and five data-driven exposure fusion algorithms in [,,,,].

- Quantitative Results. To quantify the luminance reversal in the fused result, two indices defined in [] are calculated for the ten MEF methods. One is the luminance reversal ratio, which is based on the number of pixel pairs whose brightness order changes. With N denoting the total number of pixels in the fused result, the luminance reversal ratio is given by

The other describes the average difference between the pixel values of these pixel pairs after reversal. The mean value of the difference is given by

The more serious the brightness inversion in the fused result, the larger the values of the two indices. The luminance reversal levels of the ten fusion algorithms are summarized in Table 1. The results demonstrate that the proposed algorithm maintains the true brightness order in the fused image with respect to both indices. Moreover, the methods reported in [,,,] show promising results as well.
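One way to compute indices of this kind is sketched below. It is a hypothetical formulation, not the definition from the cited work: pixel pairs are sampled at random, a pair counts as reversed when its brightness order in the fused image is opposite to its order in a reference image, and the second index is the mean absolute fused-value difference over the reversed pairs. The function name `reversal_metrics` and its parameters are assumptions.

```python
import numpy as np

def reversal_metrics(fused, reference, num_pairs=10000, seed=0):
    """Return (ratio of reversed pairs, mean fused-value gap of reversed pairs)."""
    rng = np.random.default_rng(seed)
    f, r = fused.ravel(), reference.ravel()
    p = rng.integers(0, f.size, size=num_pairs)
    q = rng.integers(0, f.size, size=num_pairs)
    # A pair is reversed when the brightness order in the fused image is
    # strictly opposite to the order in the reference image.
    reversed_mask = (r[p] - r[q]) * (f[p] - f[q]) < 0
    ratio = float(reversed_mask.mean())
    if not reversed_mask.any():
        return ratio, 0.0
    mean_gap = float(np.abs(f[p] - f[q])[reversed_mask].mean())
    return ratio, mean_gap

reference = np.linspace(0.0, 1.0, 64 * 64).reshape(64, 64)
print(reversal_metrics(reference, reference))        # order fully preserved
print(reversal_metrics(1.0 - reference, reference))  # order fully inverted
```

Both indices are zero when the fused image keeps the reference brightness order and grow as more pairs flip or flip by a larger margin.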

Table 1.

The two luminance reversal indices of the ten algorithms.

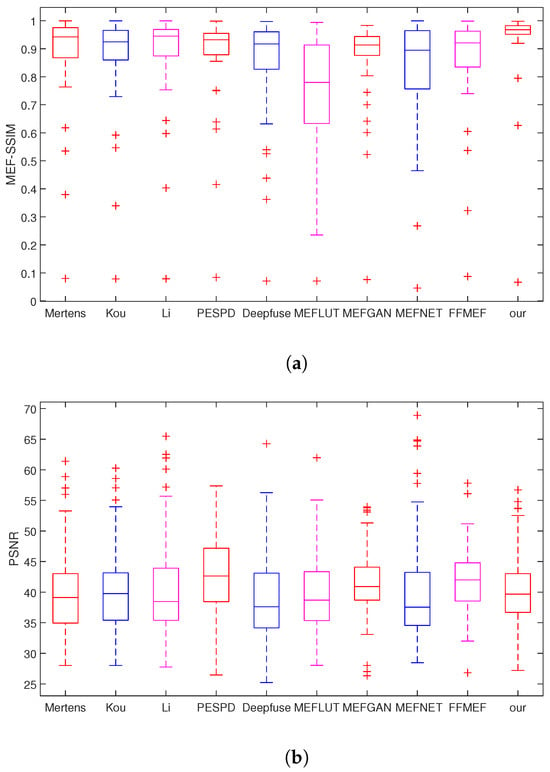

Furthermore, all ten methods are also compared in terms of MEF-SSIM and PSNR. MEF-SSIM measures structural similarity, and PSNR is computed from the mean squared error (MSE), between the fused result and multiple exposure-bracketed reference images. According to Table 2, the proposed AEAN attains the highest MEF-SSIM score for fusing two EER images. Its PSNR performance is also good, ranking fourth among the ten algorithms, just two points lower than that of []. Figure 5 shows box plots of the overall quality assessment results of the ten algorithms evaluated on the three test datasets. As shown in Figure 5a, the proposed algorithm achieves the highest median score under the MEF-SSIM metric, which indicates that it provides better overall visual quality than the other algorithms. According to the PSNR metric in Figure 5b, the performance of all ten algorithms is relatively consistent, with small differences. Among them, PESPD [] has the highest median PSNR, while the proposed method ranks fourth. These results demonstrate that the fused images obtained by the proposed algorithm effectively preserve structural details while maintaining global luminance consistency.
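For reference, PSNR follows directly from the MSE. The sketch below uses the standard definition against a single reference image with values in [0, 1]; how the paper aggregates over multiple exposure-bracketed references is not specified here, so that part is left out, and the function name `psnr` is an assumption.

```python
import numpy as np

def psnr(fused, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two images of the same shape."""
    diff = np.asarray(fused, dtype=np.float64) - np.asarray(reference, dtype=np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_value ** 2 / mse))

reference_patch = np.full((4, 4), 0.5)
offset_patch = reference_patch + 0.1  # constant error of 0.1 gives an MSE of 0.01
print(psnr(offset_patch, reference_patch))
```

Because PSNR depends only on the pixel-wise error, a method can rank lower on it while still scoring well on structural measures such as MEF-SSIM.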

Table 2.

The MEF-SSIM and PSNR of ten different algorithms.

Figure 5.

Box plots comparing the quality assessment results of the ten algorithms across the three test datasets. (a) MEF-SSIM box plots of the ten algorithms. (b) PSNR box plots of the ten algorithms.

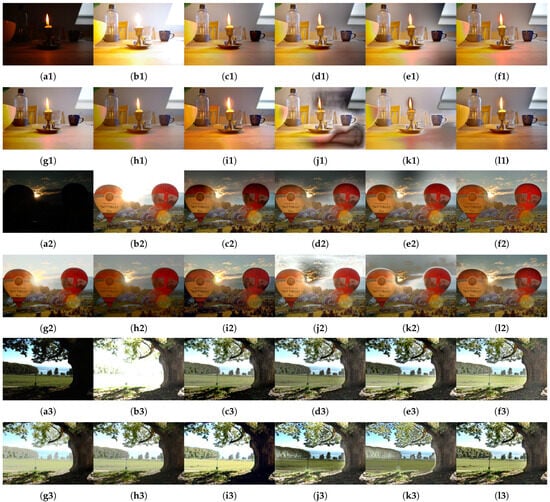

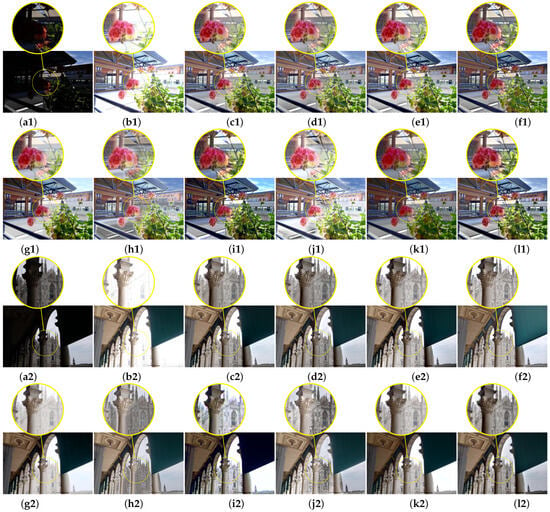

- Qualitative Results. Here, we compare the ten algorithms from a subjective perspective. Specifically, the comparison is made in terms of brightness retention, information in the brightest and darkest regions, and scene depth. Figures 6 and 7 show the visual results of EER image fusion on the datasets [] and MEFB [], respectively.

As shown in Figures 6 and 7, all ten methods can generate a fused image from two EER images. However, as shown in Figure 6, the algorithms based on traditional weight maps in [,,,,,] exhibit brightness reversal artifacts, while the algorithms in [,,] exhibit halo artifacts, although the algorithms in [,] are much simpler than those in [,]. As shown in Figure 6, the improved inverse tangent function in [,] preserves the relative brightness order better than the Gaussian curve in [,]. Therefore, the brightness reversal artifacts in the fused images of algorithms such as [,] are more serious, but the enlarged portion of Figure 7 shows that the Gaussian curve better preserves the highlight and shadow details. The single-resolution MEF methods in [,,] fail to preserve scene depth and local contrast as well as the MEF algorithms in [,,,,]. Figure 7 shows that these single-scale fusion algorithms express details well, but all elements from foreground to background are equally clear and sharp. Such fused images feel sterile and struggle to guide attention or evoke mood. The learning-based algorithm in [] has a hierarchical structure but cannot preserve local contrast well, as seen in the grass and flower in Figure 7. The GAN-based algorithm in [] is based on supervised learning and produces severe color distortion. The algorithms in [,,] are not based on weight maps, so they have advantages in avoiding halo artifacts in the fused result, but they cannot preserve the information in the highlight and shadow areas well. By comparison, the proposed fusion method preserves the authentic brightness distribution and depth of the scene, thereby effectively avoiding unnatural brightness reversal and halo artifacts. As a result, the fused image exhibits strong visual realism, with both local and global details preserved.

Figure 6.

The EER images and the fused results using the dataset []. (a1,a2,a3) are the input low-exposure images, (b1,b2,b3) are the input high-exposure images, (c1,c2,c3) are fused images by Mertens [], (d1,d2,d3) are by Kou [], (e1,e2,e3) are by Li [], (f1,f2,f3) are by PESPD [], (g1,g2,g3) are by Deepfuse [], (h1,h2,h3) are by FFMEF [], (i1,i2,i3) are by MEFGAN [], (j1,j2,j3) are by MEFNET [], (k1,k2,k3) are by MEFLUT [], and (l1,l2,l3) are by our algorithm.

Figure 7.

The EER images and the fused results using the dataset []. (a1,a2) are the low-exposure images and (b1,b2) are the high-exposure images. (c1,c2) are the fused results by Mertens [], (d1,d2) are by Kou [], (e1,e2) are by Li [], (f1,f2) are by PESPD [], (g1,g2) are by Deepfuse [], (h1,h2) are by MEFLUT [], (i1,i2) are by MEFGAN [], (j1,j2) are by MEFNET [], (k1,k2) are by FFMEF [], and (l1,l2) are by our algorithm.

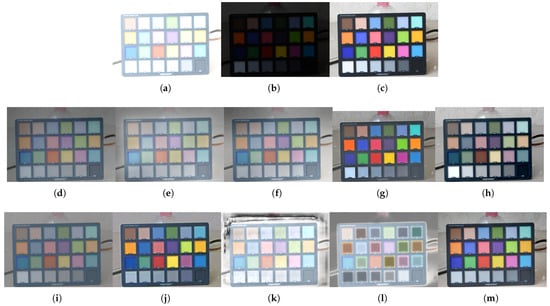

To facilitate a more effective comparison of the color fidelity among the ten algorithms, we captured a pair of color chart EER images and generated fused images using each algorithm. The fused images are shown in Figure 8. It can be seen that although the weight-map-based methods in [,,] exhibit brightness inversion between light and dark regions, they generally preserve the true color of the original images, as shown in Figure 8d–f. This is because the fused images produced by these methods derive their color information entirely from the input EER images, thereby avoiding the introduction of new color artifacts. In contrast, all the data-driven methods [,,,] introduce noticeable color distortions in the fused results. In comparison to other algorithms, our AEAN algorithm effectively maintains the natural color fidelity in the fused images.

Figure 8.

EER color chart images that we captured and the corresponding fusion results from the ten algorithms. (a,b) are the two input EER images, (c) is the ground-truth color image, (d) is the fused image by Mertens [], (e) is by Kou [], (f) is by Li [], (g) is by PESPD [], (h) is by Deepfuse [], (i) is by FFMEF [], (j) is by MEFGAN [], (k) is by MEFNET [], (l) is by MEFLUT [], and (m) is by our algorithm.

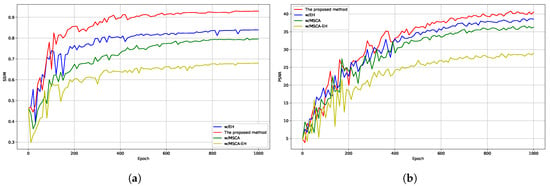

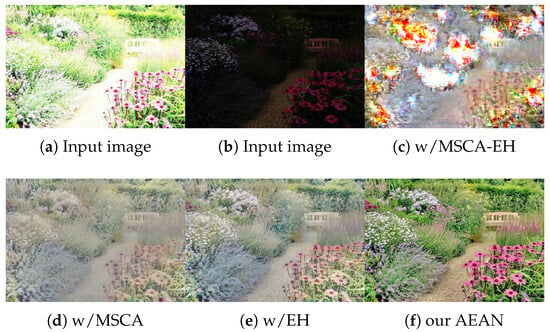

4.2. Ablation Study

To analyze the contribution of each part of the AEAN structure and the effectiveness of the loss function, we designed the following ablation studies. The quantitative results are shown in Table 3. As shown in the first row, directly applying the Transformer to exposure fusion fails to achieve satisfactory performance. The next two rows examine the effectiveness of the MSCA and EH modules. Compared with the fused image generated by the window-based Transformer alone, embedding MSCA or EH into the Transformer module improves the MEF-SSIM. This illustrates the efficacy of these modules for fusing two EER images, because they preserve local details of the fused image while reducing blocking artifacts. Adding both CNN modules at the same time improves the performance further, as presented in the last row of Table 3. To comprehensively validate the effectiveness of each component, we carried out experiments with the model trained from scratch. The SSIM and PSNR values at different training epochs are presented in Figure 9. When the Swin Transformer is directly employed for fusing EER images, the quality of the fused images shows only limited improvement, primarily due to the persistence of block-like artifacts. Incorporating the MSCA or EH module individually consistently improves both SSIM and PSNR, indicating their positive contribution. Notably, when MSCA and EH are both integrated into the W-WSA in a parallel and cascaded manner, the fusion performance is significantly improved. Furthermore, we trained the model from scratch to evaluate the contribution of each loss term. It is evident from Table 3 that the color and SSIM losses are effective in improving the performance of the AEAN network.
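For readers less familiar with the PSNR values tracked in the ablation, the following minimal sketch computes PSNR from the mean squared error between a reference image and a fused image. The evaluation protocol is not detailed in this section, so the peak value of 1.0, the nested-list image representation, and the sample pixel values are assumptions made for illustration. SSIM is omitted because it requires local window statistics.

```python
import math

def psnr(reference, test, peak=1.0):
    """PSNR in dB between two equally sized images given as nested lists."""
    ref_flat = [v for row in reference for v in row]
    test_flat = [v for row in test for v in row]
    if len(ref_flat) != len(test_flat):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((r - t) ** 2 for r, t in zip(ref_flat, test_flat)) / len(ref_flat)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)

# Hypothetical 2 x 2 grayscale images with intensities in [0, 1].
reference = [[0.0, 0.5], [1.0, 0.25]]
fused = [[0.1, 0.6], [0.9, 0.35]]
print(f"PSNR = {psnr(reference, fused):.2f} dB")
```

Every pixel in this toy example differs from the reference by 0.1, which gives a mean squared error of 0.01 and therefore a PSNR of 20 dB for a peak value of 1.0.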

Table 3.

Ablation study for investigating components of our algorithm.

Figure 9.

Comparison of SSIM and PSNR between different structures of the proposed AEAN neural network. (a) shows the SSIM values across training epochs, while (b) shows the PSNR values over the same training period.

Apart from the objective comparison, Figure 10 provides an intuitive visualization of the contribution of each AEAN module to exposure fusion. Blocking artifacts are noticeable in the fused result when only the window-based Transformer model is used, as shown in Figure 10c. As shown in Figure 10d,e, adding only MSCA or only EH to the Transformer reduces these blocking artifacts. When all three components are combined, the model performs better in local hue and depth of field, as shown in Figure 10f.

Figure 10.

Ablation study on individual components of the AEAN framework.

5. Conclusions

This paper proposes an attention-edge-assisted neural network (AEAN) for generating HDR images from a pair of registered EER images with symmetric and complementary exposure settings. Quantitative evaluations demonstrate that AEAN provides effective performance, with an average MEF-SSIM gain of 0.0384 across three benchmark datasets and a PSNR of 40.62 dB. Furthermore, AEAN substantially reduces luminance inversion artifacts, as measured by the brightness reversal ratio and mean value, thereby ensuring improved structural fidelity and visual realism compared with existing methods. These results underscore the effectiveness of AEAN in preserving structural consistency and enhancing visual realism in HDRI. Despite these advantages, AEAN presents several practical limitations. The framework requires accurate spatial alignment between input images, as even small misalignments can lead to artifacts such as ghosting and detail loss. Moreover, the model focuses on real-world scenarios, while its robustness on synthetic images requires further validation.

Building on these findings and limitations, our future work will focus on developing alignment-robust architectures capable of handling multi-frame inputs despite spatial misregistration. At the same time, the AEAN framework will be extended to support exposure interpolation and extrapolation, allowing more flexible HDR synthesis under diverse capture conditions. Together, these advancements aim not only to enhance the reliability of HDR generation but also to broaden the applicability of AEAN to unconstrained imaging pipelines.

In summary, AEAN demonstrates potential as a practical framework for HDRI, offering tangible improvements over existing methods. Its impact is particularly relevant for computational photography, augmented and virtual reality, medical imaging, and intelligent surveillance, where faithful luminance recovery and artifact suppression are critical for downstream analysis and human perception.

Author Contributions

Methodology, Y.Y.; Software, Y.Y.; Validation, Y.Y.; Formal analysis, Y.Y. and S.G.; Investigation, Y.Y.; Data curation, L.K.; Writing—original draft, Y.Y.; Writing—review & editing, S.G., L.K. and X.L.; Funding acquisition, Y.Y. and S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Hubei Natural Science Foundation of China (Grant No. 2025AFB006), and in part by the Hubei Key Research and Development Program of China (Grant No. 2024BAB110).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yeh, S.F.; Hsieh, C.C.; Cheng, C.J.; Liu, C.K. A dual-exposure single-capture wide dynamic range CMOS image sensor with columnwise highly/lowly illuminated pixel detection. IEEE Trans. Electron Devices 2012, 59, 1948–1955.

- Luo, Y.; Mirabbasi, S. A 30-fps 192 × 192 CMOS image sensor with per-frame spatial-temporal coded exposure for compressive focal-stack depth sensing. IEEE J. Solid-State Circuits 2022, 57, 1661–1672.

- Brandli, C.; Berner, R.; Yang, M.; Liu, S.C.; Delbruck, T. A 240 × 180 130 db 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

- Somanath, G.; Kurz, D. HDR environment map estimation for real-time augmented reality. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 11293–11301.

- Tan, X.; Chen, H.; Xu, K.; Xu, C.; Jin, Y.; Zhu, C.; Zheng, J. High dynamic range imaging for dynamic scenes with large-scale motions and severe saturation. IEEE Trans. Instrum. Meas. 2022, 71, 5003415.

- Chen, Y.; Jiang, G.; Yu, M.; Yang, Y.; Ho, Y.S. Learning stereo high dynamic range imaging from a pair of cameras with different exposure parameters. IEEE Trans. Comput. Imaging 2020, 6, 1044–1058.

- Catley-Chandar, S.; Tanay, T.; Vandroux, L.; Leonardis, A.; Slabaugh, G.; Pérez-Pellitero, E. FlexHDR: Modeling alignment and exposure uncertainties for flexible HDR imaging. IEEE Trans. Comput. Imaging 2025, 31, 5923–5935.

- Mertens, T.; Kautz, J.; Van Reeth, F. Exposure fusion. In Proceedings of the Conference on Computer Graphics and Applications, Maui, HI, USA, 29 October–2 November 2007.

- Kou, F.; Li, Z.; Wen, C.; Chen, W. Multi-scale exposure fusion via gradient domain guided image filtering. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017.

- Li, Z.; Wei, Z.; Wen, C.; Zheng, J. Detail-enhanced multi-scale exposure fusion. IEEE Trans. Image Process. 2017, 26, 1243–1252.

- Ram Prabhakar, K.; Sai Srikar, V.; Venkatesh Babu, R. Deepfuse: A deep unsupervised approach for exposure fusion with extreme exposure image pairs. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; Volume 10, pp. 4714–4722.

- Ma, K.; Duanmu, Z.; Zhu, H.; Fang, Y.; Wang, Z. Deep guided learning for fast multi-exposure image fusion. IEEE Trans. Image Process. 2019, 29, 2808–2819.

- Jiang, T.; Wang, C.; Li, X.; Li, R.; Fan, H.; Liu, S. Meflut: Unsupervised 1d lookup tables for multi-exposure image fusion. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 10542–10551.

- Zheng, K.; Huang, J.; Yu, H.; Zhao, F. Efficient Multi-exposure Image Fusion via Filter-dominated Fusion and Gradient-driven Unsupervised Learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2804–2813.

- Yang, Y.; Wu, S. Multi-scale extreme exposure images fusion based on deep learning. In Proceedings of the IEEE 16th Conference on Industrial Electronics and Applications, Chengdu, China, 1–4 August 2021; pp. 1781–1785.

- Xu, S.; Chen, X.; Song, B.; Huang, C.; Zhou, J. CNN Injected transformer for image exposure correction. Neurocomputing 2024, 587, 127688.

- Zhang, X.; Zhang, Y.; Yu, F. HiT-SR: Hierarchical transformer for efficient image super-resolution. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 483–500.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Gool, L.V.; Timofte, R. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1833–1844.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10012–10022.

- Hessel, C.; Morel, J. An extended exposure fusion and its application to single image contrast enhancement. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 137–146.

- Cai, J.R.; Gu, S.H.; Zhang, L. Learning a deep single image contrast enhancer from multi-exposure image. IEEE Trans. Image Process. 2018, 27, 2026–2049.

- Zhang, J.; Luo, Y.; Huang, J.; Liu, Y.; Ma, J. Multi-exposure image fusion via perception enhanced structural patch decomposition. Inf. Fusion 2023, 99, 101895.

- Li, H.; Ma, K.; Yong, H.; Zhang, L. Fast multi-scale structural patch decomposition for multi-exposure image fusion. IEEE Trans. Image Process. 2020, 35, 5805–5816.

- Jia, W.; Song, Z.; Li, Z. Multi-scale exposure fusion via content adaptive edge-preserving smoothing pyramids. IEEE Trans. Consum. Electron. 2022, 68, 317–326.

- Yang, Y.; Cao, W.; Wu, S.; Li, Z. Multi-scale fusion of two large-exposure-ratio images. IEEE Signal Process. Lett. 2018, 25, 1885–1889.

- Yang, Y.; Wu, S.; Wang, X.F.; Li, Z. Exposure Interpolation for Two Large-Exposure-Ratio Images. IEEE Access 2020, 8, 227141–227151.

- Li, Z.G.; Zheng, C.B.; Chen, B.; Wu, S. Neural-Augmented HDR Imaging via Two Aligned Large-Exposure-Ratio Images. IEEE Trans. Instrum. Meas. 2025, 72, 4508011.

- Wu, K.; Chen, J.; Ma, J. DMEF: Multi-exposure image fusion based on a novel deep decomposition method. IEEE Trans. Multimed. 2022, 25, 5690–5703.

- Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. Proc. Brit. Mach. Vis. Conf. 2018, 1–12.

- Ma, K.; Zeng, K.; Wang, Z. Perceptual quality assessment for multi-exposure image fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 1397–1409.

- Xu, H.; Ma, J.; Jiang, J.; Guo, X.; Ling, H. U2Fusion: A unified unsupervised image fusion network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 502–518.

- Yang, Y.; Wang, M.; Huang, S.; Wan, W. MIN-MEF: Multi-scale interaction network for multi-exposure image fusion. IEEE Trans. Instrum. Meas. 2024, 44, 502–518.

- Vs, V.; Valanarasu, J.M.; Oza, P.; Patel, V.M. Image fusion transformer. In Proceedings of the 2022 IEEE International Conference on Image Processing, Bordeaux, France, 16–19 October 2022; pp. 3566–3570.

- Qu, L.; Liu, S.; Wang, M.; Song, Z. TransMEF: A transformer-based multi-exposure image fusion framework using self-supervised multi-task learning. Proc. AAAI Conf. Artif. Intell. 2022, 36, 2126–2134.

- Liu, Y.; Yang, Z.; Cheng, J.; Chen, X. Multi-exposure image fusion via multi-scale and context-aware feature learning. IEEE Signal Process. Lett. 2023, 30, 100–104.

- Yang, Y.; Li, Z.; Wu, S. Low-Light Image Brightening via Fusing Additional Virtual Images. Sensors 2020, 20, 4614.

- Debevec, P.E.; Malik, J. Recovering high dynamic range radiance maps from photographs. In Proceedings of the ACM SIGGRAPH, Los Angeles, CA, USA, 3–8 August 1997; pp. 369–378.

- Chen, L.; Lu, X.; Zhang, J.; Chu, X.; Chen, C. Hinet: Half instance normalization network for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 182–192.

- Karaimer, H.C.; Brown, M.S. Improving color reproduction accuracy on cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6440–6449.

- Zhang, X. Benchmarking and comparing multi-exposure image fusion algorithms. Inf. Fusion 2021, 74, 111–131.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).