Abstract

Given the significant potential of large language models (LLMs) in sequence modeling, emerging studies have begun applying them to time-series forecasting. Despite notable progress, existing methods still face two critical challenges: (1) their reliance on large amounts of paired text data, which limits model applicability, and (2) a substantial modality gap between text and time series, which leads to insufficient alignment and suboptimal performance. This paper introduces Hierarchical Text-Free Alignment (TS-HTFA), a novel method that leverages hierarchical alignment to fully exploit the representation capacity of LLMs for time-series analysis while eliminating the dependence on text data. Specifically, paired text data are replaced with adaptive virtual text based on QR-decomposed word embeddings and learnable prompts. Furthermore, comprehensive cross-modal alignment is established at three levels: input, feature, and output, contributing to enhanced semantic symmetry between modalities. Extensive experiments on multiple time-series benchmarks demonstrate that TS-HTFA achieves state-of-the-art performance, significantly improving prediction accuracy and generalization.

1. Introduction

Time-series forecasting, as a fundamental task in artificial intelligence research, plays a pivotal role across various domains such as finance [1], healthcare [2], environmental monitoring [3], and industrial processes [4]. It evaluates a model’s ability to forecast future trends from historical data, requiring the model to effectively capture intricate temporal dependencies and evolving patterns. With the advancement of deep learning, models like Convolutional Neural Networks (CNNs) [5,6], Transformers [7], and Multilayer Perceptrons (MLPs) [8,9] have been increasingly applied to time-series forecasting, propelling sustained progress in this field.

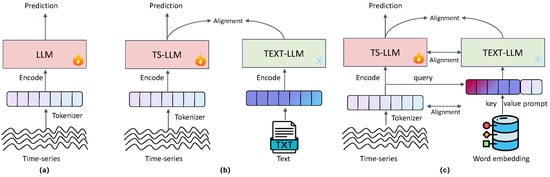

Large language models (LLMs) have recently demonstrated exceptional sequence modeling capabilities in language and vision tasks. Leveraging attention mechanisms and large-scale pretraining, LLMs possess powerful semantic representation and can effectively perform reasoning over extended periods. Consequently, they are considered to have substantial potential for addressing time-series forecasting. Several pioneering studies have explored the integration of LLMs, as shown in Figure 1a,b, which can be divided into two categories: single-stream models [10,11,12,13,14] and two-stream models [15,16,17,18,19,20]. In single-stream models, time-series signals are initially tokenized and subsequently mapped into the space of large language models through a simple adapter. Then, the model is fully fine-tuned using the time-series data. However, this straightforward approach may cause catastrophic forgetting, leading to degraded model performance. To fully exploit the representational capabilities of LLMs, two-stream models are designed with a time-series branch and an LLM branch with frozen parameters that takes paired language descriptions as input, with feature alignment during training to achieve cross-modal symmetry.

Figure 1. Comparison of LLMs for time-series frameworks: (a) single-stream models; (b) two-stream models; (c) TS-HTFA (ours).

Despite notable progress, existing methods still face two critical challenges: (1) Dependence on paired textual annotations. Although incorporating language data can enhance model performance by introducing a symmetric alignment between modalities, time-series data, typically collected directly from sensors or smart devices, often lacks corresponding language descriptions to support such symmetry. Some methods [19,20] attempt to alleviate this limitation by generating synthetic text or relying on small-scale manually labeled data. However, they often fall short regarding sample diversity and temporal consistency with the time-series data. Consequently, the reliance on large-scale high-quality textual annotations restricts the model’s scalability in real-world scenarios. (2) Insufficient cross-modal alignment. Time-series data are represented as continuous signals, while natural language comprises discrete words, reflecting fundamental differences in their semantic characteristics. Existing methods [17,18] commonly project time-series signals and language data into a shared representation space through a single-layer feature mapping and coarse-grained feature alignment. However, they struggle to effectively capture the deep semantic relationships and structural characteristics between the two modalities, leading to suboptimal results and limited semantic symmetry.

To address the above-mentioned challenges, a novel method termed Hierarchical Text-Free Alignment (TS-HTFA) is proposed, featuring two primary innovations. First, to overcome the reliance on textual annotations, it is hypothesized that the vocabulary of LLMs naturally contains some tokens implicitly related to time-series data. Based on this insight, a time-series-guided dynamic adaptive gating mechanism is designed to map time-series data into the feature space of the language model’s word embeddings, generating corresponding virtual text tokens. Additionally, to handle specialized signals that lack corresponding word embeddings in the existing vocabulary, prompt tuning is introduced, enabling the model to learn new virtual word embeddings. Second, to tackle insufficient cross-modal alignment between time series and language, the theoretical analysis in Section 3.1 shows that comprehensive alignment between the two modalities requires alignment at multiple levels: input distribution, intermediate feature distribution, and output distribution. Based on this analysis, a hierarchical alignment strategy is proposed. Specifically, at the input level, the dynamic adaptive gating mechanism maps time-series data to virtual text tokens, ensuring consistency in input encoding. For intermediate features, contrastive learning is employed to optimize representations between time-series and language data by minimizing the semantic distance between positive samples. At the output level, optimal transport is introduced to further align the two modalities in the prediction space.

The main contributions of this paper are as follows:

- A hierarchical text-free alignment framework is proposed, supported by novel probabilistic analysis, enabling comprehensive alignment across input, intermediate, and output spaces.

- Dynamic adaptive gating is integrated into the input embedding layer to align time-series data with textual representations and generate virtual text embeddings. Additionally, a novel combination of layerwise contrastive learning and optimal transport loss is introduced to align intermediate representations and output distributions.

- The proposed framework is validated through extensive experiments, achieving competitive or state-of-the-art performance on both long-term and short-term forecasting tasks across multiple benchmark datasets.

The remainder of this paper is structured as follows: Section 2 reviews related work on time-series forecasting and relevant large language models (LLMs). Section 3 introduces the theoretical foundations. Section 4 details the proposed TS-HTFA. Section 5 presents the experimental results. Section 6 analyzes the advantages and limitations of our method, as well as future research directions. Section 7 concludes with a summary.

2. Related Work

2.1. Time-Series Forecasting

In recent years, deep learning has revolutionized time-series forecasting, with numerous methods emerging to enhance predictive accuracy. Models such as recurrent neural networks (RNNs), Convolutional Neural Networks (CNNs), Multilayer Perceptrons (MLPs), and Transformers have been widely applied to time-series analysis. TimesNet [7], a CNN-based model, leverages Fourier Transform to identify multiple periods in time series, converting 1D data into 2D tensors to capture both intra- and inter-period variations using convolutional layers. DLinear [8], an MLP-based model, decomposes raw time series into trend and seasonal components via moving averages, extracts features through linear layers, and combines them for predictions. Transformer-based models have gained significant traction, leveraging their success in NLP. FEDformer [21] transforms time series into the frequency domain to compute attention scores and capture detailed structures. PatchTST [22] segments multivariate time-series channels into patches and processes them with a shared Transformer encoder, preserving local semantics while reducing computational costs and handling longer sequences. Crossformer [23] extracts channelwise segmented representations and employs a two-stage attention mechanism to capture cross-time and cross-dimension dependencies. iTransformer [24] transposes embeddings from the variable to the temporal dimension, treating each channel as a token and using attention mechanisms to capture inter-variable relationships, achieving excellent performance in most cases. Despite these advancements, challenges remain, such as limited training data, overfitting in specific domains, and the complexity of architectural designs.

2.2. LLMs for Time-Series Forecasting

The realm of large language models has witnessed significant advancements, demonstrating remarkable capabilities in natural language processing and finding applications across a wide range of domains. Recently, efforts have been made to adapt LLMs for time-series analysis to improve predictive performance. For instance, PromptCast [10] introduces a “codeless” approach to time-series forecasting, moving away from complex model architectures. Similarly, GPT4TS [25] proposes a unified framework for various time-series tasks by partially freezing the LLM while fine-tuning specific layers. TEMPO [11] focuses exclusively on time-series forecasting, incorporating elements such as time-series decomposition and soft prompts. Additionally, LLM4TS [12] presents a two-stage fine-tuning framework to address challenges in integrating LLMs with time-series data. Time-LLM [13] reprograms time series using natural language-based prompting and source data modality, effectively leveraging LLMs as efficient time-series predictors. S2IP-LLM [26] designs a tokenization module for cross-modality alignment and utilizes semantic anchors from pretrained word embeddings. Furthermore, UniTime [27] employs natural language as domain instructions to encode domain-specific information, which is then integrated into a Language-TS Transformer for effective cross-modality alignment. While these methods generally focus on adapting and fine-tuning LLMs in a single-tower structure for time-series tasks, they often overlook the distributional differences between textual and temporal input tokens, leading to suboptimal performance. To address this issue, some approaches have adopted a dual-tower structure to enhance the alignment and transfer of information from LLMs to time-series data. For example, TimeCMA [28] employs a dual-tower architecture and fuses the two modalities at the final stage using cross-modality alignment. DECA [29] integrates time-series data with familiar linguistic components in the LLMs’ language environment through context alignment, enabling the models to better contextualize and understand time-series data. CALF [30] develops a cross-modal match module to reduce modality gaps, leveraging the resemblance between forecasting future time points and the generative, autoregressive nature of LLMs. Despite these efforts, the alignment strategies for integrating LLMs with time-series data remain insufficiently effective, and theoretical analyses in this area are still limited.

2.3. Cross-Modal Learning

Cross-modal fine-tuning enables the transfer of knowledge from models pretrained on data-rich modalities to data-scarce ones, addressing challenges such as data insufficiency and poor generalization [31]. Model reprogramming offers a resource-efficient alternative by repurposing pretrained models from a source domain to solve tasks in a target domain without fine-tuning, even when the domains differ significantly [32]. Recent studies have explored transferring the capabilities of large language models (LLMs) to other domains, including vision [33,34], audio [35], biology [36], and recommender systems [37], providing evidence of the cross-modal transfer capacity of pretrained models. In the context of time series, most research leverages the contextual modeling power of LLMs through fine-tuning to enhance forecasting performance. However, these approaches often overlook the inherent distributional differences between language and time-series modalities. To bridge this gap, the proposed method employs a three-stage alignment process to effectively transfer pretrained language model knowledge to the time-series domain.

3. Preliminaries

3.1. Theoretical Analysis

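The proposed framework, TS-HTFA, is built upon the theoretical foundation of cross-modal knowledge transfer between textual and time-series domains. Specifically, a dual-tower architecture and triplet-level cross-modal distillation are employed to facilitate the transfer of knowledge from the textual domain ($\mathcal{D}_{\text{text}}$) to the time-series domain ($\mathcal{D}_{\text{time}}$) via a transformation function T:

$$T: \mathcal{D}_{\text{text}} \rightarrow \mathcal{D}_{\text{time}}.$$

From a probabilistic perspective, the two domains are defined as

$$\mathcal{D}_{\text{text}} = \{X_{\text{text}}, Y_{\text{text}}, P(X_{\text{text}}, Y_{\text{text}})\}, \qquad \mathcal{D}_{\text{time}} = \{X_{\text{time}}, Y_{\text{time}}, P(X_{\text{time}}, Y_{\text{time}})\}.$$

Thus, aligning these distributions across domains is critical to ensure effective knowledge transfer. Specifically, the goal is to align the input distributions $P(X_{\text{text}})$ and $P(X_{\text{time}})$, the joint distributions $P(X_{\text{text}}, Y_{\text{text}})$ and $P(X_{\text{time}}, Y_{\text{time}})$, as well as the marginal distributions $P(Y_{\text{text}})$ and $P(Y_{\text{time}})$.

To formally establish this, an intermediate layer representation h is introduced, and the joint and marginal distributions are decomposed as follows:

$$P(X, Y) = P(X) \int P(h \mid X)\, P(Y \mid h)\, dh, \qquad P(Y) = \iint P(X)\, P(h \mid X)\, P(Y \mid h)\, dh\, dX.$$

This integral decomposition assumes that the intermediate representation $h$ provides a sufficient encoding of $X$, such that $P(Y \mid h, X) = P(Y \mid h)$. It also relies on standard measurability and integrability conditions to ensure proper probability normalization.

According to these decompositions, the objective is to achieve domain alignment by reconciling the input distributions $P(X)$, the intermediate representations $P(h \mid X)$, and the output distributions $P(Y \mid h)$. This theoretical foundation serves as the motivation for the proposed three-stage alignment strategy. As a result, this alignment ensures that

$$P(X_{\text{time}}, Y_{\text{time}}) \approx P(X_{\text{text}}, Y_{\text{text}}), \qquad P(Y_{\text{time}}) \approx P(Y_{\text{text}}).$$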

3.2. Task Definition

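Let $\mathbf{X} = \{\mathbf{x}_1, \ldots, \mathbf{x}_T\} \in \mathbb{R}^{N \times T}$ represent a regularly sampled multivariate time-series dataset with N series and T timestamps, where $\mathbf{x}_t \in \mathbb{R}^{N}$ denotes the values of N distinct series at timestamp t. The model input is defined as a look-back window of length L at timestamp t:

$$\mathbf{X}_t = \{\mathbf{x}_{t-L+1}, \ldots, \mathbf{x}_t\} \in \mathbb{R}^{N \times L}.$$

The prediction target is a horizon window of length $H$ at timestamp t, denoted as

$$\mathbf{Y}_t = \{\mathbf{x}_{t+1}, \ldots, \mathbf{x}_{t+H}\} \in \mathbb{R}^{N \times H}.$$

The objective of time-series forecasting is to utilize historical observations $\mathbf{X}_t$ to predict future values $\mathbf{Y}_t$. A typical forecasting model $f_\theta$, parameterized by $\theta$, generates predictions as follows:

$$\hat{\mathbf{Y}}_t = f_\theta(\mathbf{X}_t).$$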
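The windowing in this task definition can be illustrated with a small sketch in plain Python. The function name `make_windows`, its argument names, and the toy data are assumptions introduced only for this example; it simply pairs each look-back window of length L with the horizon window that follows it.

```python
def make_windows(series, lookback, horizon):
    """Pair each look-back window with the horizon window that follows it.

    `series` is a list of T timestamps; each timestamp is a list of N channel
    values. Names and layout are illustrative assumptions.
    """
    pairs = []
    # t is the index of the last observed timestamp in the look-back window.
    for t in range(lookback - 1, len(series) - horizon):
        x = series[t - lookback + 1 : t + 1]   # the L most recent observations
        y = series[t + 1 : t + 1 + horizon]    # the next H values to predict
        pairs.append((x, y))
    return pairs


# Toy data: T = 6 timestamps, N = 2 channels.
toy = [[float(t), float(10 * t)] for t in range(6)]
windows = make_windows(toy, lookback=3, horizon=2)
```

With these toy settings, only two (input, target) pairs fit inside the series, which is why long horizons such as 720 steps require long recordings.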

3.3. List of Abbreviations

To enhance clarity and readability, Table 1 provides a list of abbreviations used throughout the paper. Each abbreviation corresponds to a key concept or component relevant to the proposed framework.

Table 1. List of abbreviations used in this paper.

4. Method Overview

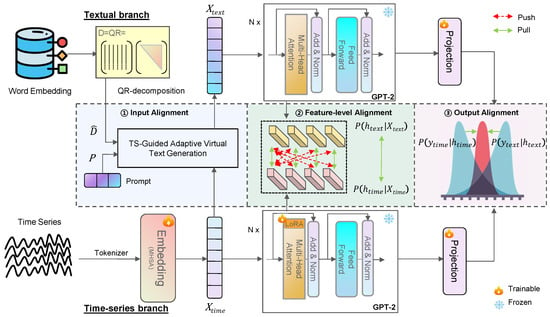

As shown in Figure 2, the proposed framework adopts a dual-branch structure comprising a time-series branch and a fixed language branch. During the training stage, the time-series branch is trained online using LoRA [38] with hierarchical text-free alignment to the language branch across multiple levels: input distribution (Section 4.1), intermediate feature distribution (Section 4.2), and output distribution (Section 4.3). At the input stage, TS-Guided Adaptive Virtual Text Generation (TS-GAVTG) generates a paired virtual text dataset to activate the language branch’s capabilities. Contrastive learning is employed for intermediate feature alignment, while optimal transport (OT) is applied at the output layer to ensure distributional consistency. During inference, the language branch is excluded, and predictions are generated solely by the time-series branch.

Figure 2. Overview of the proposed TS-HTFA. The framework adopts a dual-branch structure, where the time-series branch is hierarchically aligned with the fixed language branch across input, intermediate, and output distributions. During inference, the language branch is excluded, and predictions are generated solely from the time-series branch.

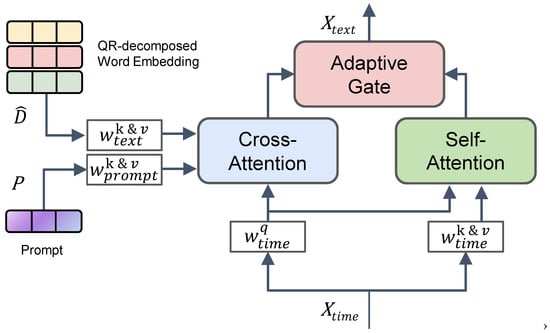

4.1. TS-Guided Adaptive Virtual Text Generation in Input Alignment

In the input phase, the TS-GAVTG module enhances time-series representation by integrating language priors through a dual-attention mechanism. Time-series data are first encoded into tokens, which serve as queries in a cross-attention mechanism. The keys and values for cross-attention are derived from QR-decomposed word embeddings and learnable prompts. Simultaneously, a self-attention mechanism processes the time-series tokens independently. To effectively merge these two sources of information, a dynamic adaptive gating mechanism fuses the outputs from cross-attention and self-attention, generating virtual paired text tokens. This fusion enriches the model’s understanding of temporal features by incorporating linguistic knowledge, as illustrated in Figure 3.

Figure 3. Overview of the proposed TS-GAVTG module for generating virtual paired text data. Time-series data are encoded into tokens, which serve as the queries in the cross-attention mechanism, with keys and values derived from QR-decomposed word embeddings and learnable prompts. Simultaneously, the self-attention mechanism processes the time-series tokens independently. The gating mechanism fuses the outputs from both cross-attention and self-attention to generate virtual paired text tokens.

4.1.1. Time-Series Encoding

Given a multivariate time series with sequence length L and P variables, an embedding layer maps each variable across timestamps into a shared latent space [24]. Multihead self-attention (MHSA) is then applied to obtain the time-series tokens, whose feature dimension M matches that of the pretrained large language model (LLM). The resulting tokens form an ordered set, with i denoting the position of each token in the sequence.

4.1.2. QR-Decomposed Word Embedding

In pretrained LLMs, word embeddings serve as pivotal anchors, structuring and expanding the input distribution within the feature space. To leverage this structured distribution, a compact vocabulary is derived by performing QR decomposition on the word embedding dictionary D to reduce its dimensionality. Specifically, the QR decomposition factorizes the matrix D into an orthogonal matrix Q and an upper triangular matrix R. By selecting the first d vectors from Q (corresponding to the rank of R), a reduced principal embedding matrix is constructed, where d is much smaller than the vocabulary size. The orthogonality of Q ensures that the selected vectors are linearly independent, unlike those obtained through PCA [30] or representative selection [14], allowing them to effectively span a subspace of the language model’s feature space.
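To make the idea of keeping the first d orthonormal directions concrete, the following plain-Python sketch runs modified Gram-Schmidt over the rows of a toy embedding table. This is an illustrative stand-in, not the authors' implementation: applying the procedure to rows is equivalent to taking the Q factor of a QR decomposition of the transposed table, and that orientation, the function name `orthonormal_basis`, and the toy values are all assumptions.

```python
import math


def orthonormal_basis(embeddings, d, tol=1e-10):
    """Return at most d orthonormal vectors spanning the leading embedding rows.

    Modified Gram-Schmidt over rows; equivalent to the Q factor of a QR
    decomposition of the transposed embedding matrix (assumed orientation).
    """
    basis = []
    for vec in embeddings:
        residual = list(vec)
        # Remove the components already explained by the current basis.
        for q in basis:
            proj = sum(r * qi for r, qi in zip(residual, q))
            residual = [r - proj * qi for r, qi in zip(residual, q)]
        norm = math.sqrt(sum(r * r for r in residual))
        if norm > tol:  # skip rows that are linearly dependent on earlier ones
            basis.append([r / norm for r in residual])
        if len(basis) == d:
            break
    return basis


toy_embeddings = [
    [1.0, 0.0, 0.0],
    [2.0, 0.0, 0.0],  # linearly dependent on the first row
    [1.0, 1.0, 0.0],
    [0.0, 1.0, 1.0],
]
Q_d = orthonormal_basis(toy_embeddings, d=2)
```

In practice a library routine for QR decomposition would be used on the full embedding table; the sketch only shows why the retained vectors are linearly independent by construction.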

4.1.3. Virtual Text Generation via Dynamic Adaptive Gating

The virtual text representation $X_{\text{text}}$ is generated from the time-series tokens $X_{\text{time}}$ by applying self-attention within the time-series tokens and prompt-enhanced cross-attention with the reduced word embeddings $\hat{D}$. The attention mechanism is described by:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V,$$

where the queries $Q$ are projected from $X_{\text{time}}$ in both cases. The primary difference between self-attention and cross-attention lies in the source of the keys ($K$) and values ($V$): they are projected from $X_{\text{time}}$ in self-attention and from $\hat{D}$, concatenated with learnable prompts, in cross-attention, resulting in the obtained $X_{\text{self}}$ and $X_{\text{cross}}$. $P_k \in \mathbb{R}^{L_p \times d_k}$ and $P_v \in \mathbb{R}^{L_p \times d_k}$ are the learnable prompt matrices, with $L_p$ being the prompt length and $d_k$ being the dimensionality of each attention head.

The prompts guide the cross-attention process by focusing on task-relevant features [39], aligning time-series data with text representations, and enhancing the language model’s ability to handle unseen time-series tokens. Unlike previous methods [13,30] that assume relevant keys and values can always be retrieved from the language model’s vocabulary, our prompt design addresses cases where such key-value pairs are unavailable.

The final text representation is obtained by adaptively combining the outputs from self-attention and cross-attention:

$$X_{\text{text}} = g \odot X_{\text{cross}} + (1 - g) \odot X_{\text{self}}.$$

Here, $g$ is a learned gate with entries in $[0, 1]$, and $\odot$ denotes element-wise multiplication. This dual attention effectively integrates the time series and language information, enabling the generation of virtual text data based on time-series features, thereby facilitating cross-modal learning and promoting fine-grained symmetry at the semantic level. The design satisfies the condition that the two spaces of the text and time-series branches are not entirely disjoint, as GPT-2 can directly handle initial values or treat them as strings [10]. Our gating mechanism is specifically designed to incorporate semantics from compressed word embeddings while retaining numerical values from the time-series data, thereby maximizing the potential of the text branch.
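The gated fusion of the two attention outputs can be sketched for a single token as follows. The exact form of the gate is not recoverable from the text above, so this example assumes a common choice: a sigmoid of a linear map over the concatenated self-attention and cross-attention outputs. The names `gated_fusion`, `weights`, and `bias` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gated_fusion(self_out, cross_out, weights, bias):
    """Fuse one token's self-attention and cross-attention outputs.

    Assumed gate: g = sigmoid(W [self; cross] + b), applied per feature.
    This form is an illustration, not the paper's exact equation.
    """
    concat = self_out + cross_out  # list concatenation, length 2M
    fused = []
    for m in range(len(self_out)):
        g = sigmoid(sum(w * c for w, c in zip(weights[m], concat)) + bias[m])
        # g close to 1 favours the language prior, g close to 0 the raw signal.
        fused.append(g * cross_out[m] + (1.0 - g) * self_out[m])
    return fused


M = 2
self_out = [1.0, -1.0]
cross_out = [3.0, 5.0]
zero_w = [[0.0] * (2 * M) for _ in range(M)]
fused = gated_fusion(self_out, cross_out, zero_w, [0.0, 0.0])
mostly_cross = gated_fusion(self_out, cross_out, zero_w, [20.0, 20.0])
```

With zero weights and bias the gate is 0.5 and the two sources are averaged; a large positive bias pushes the output toward the cross-attention branch.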

4.2. Layerwise Contrastive Learning for Intermediate Network Alignment

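To align the outputs of each intermediate layer in the temporal student branch with those in the textual teacher branch, a layerwise contrastive learning method is applied. This approach enhances the alignment of semantically congruent information between modalities by optimizing relative similarity in the feature space, rather than minimizing absolute differences [30]. Specifically, the InfoNCE loss is applied at each layer:

$$\mathcal{L}^{i}_{\text{InfoNCE}} = -\frac{1}{B} \sum_{b=1}^{B} \log \frac{\exp\left(\mathrm{sim}\left(F^{i,b}_{\text{time}}, F^{i,b}_{\text{text}}\right)/\tau\right)}{\sum_{k=1}^{B} \exp\left(\mathrm{sim}\left(F^{i,b}_{\text{time}}, F^{i,k}_{\text{text}}\right)/\tau\right)},$$

where $\mathrm{sim}(\cdot,\cdot)$ denotes the similarity function, $\tau$ is the temperature parameter, and $B$ is the number of samples in a batch. The terms $F^{i}_{\text{time}}$ and $F^{i}_{\text{text}}$ represent the aggregated intermediate features of layer $i$ from the temporal and textual modalities, respectively, where average pooling has been applied to combine all tokens within each layer. The overall feature loss integrates these losses across the $N_{\text{layer}}$ layers:

$$\mathcal{L}_{\text{feature}} = \sum_{i=1}^{N_{\text{layer}}} \gamma^{\,i-1}\, \mathcal{L}^{i}_{\text{InfoNCE}},$$

where the decay factor $\gamma \in (0, 1)$ gives more weight to losses from layers closer to the input, reflecting their importance in the alignment process.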
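As a rough illustration of the layerwise objective described above, the following plain-Python sketch computes an InfoNCE loss per layer and combines the layers with a geometric decay. The cosine similarity, the temperature of 0.1, the decay of 0.8, and the function names `info_nce` and `layerwise_loss` are assumptions made for this example; they are not taken from the authors' implementation.

```python
import math


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(time_feats, text_feats, temperature=0.1):
    """Batch InfoNCE: the b-th time-series feature should match the b-th text
    feature and be dissimilar to the other text features in the batch."""
    losses = []
    for b, anchor in enumerate(time_feats):
        logits = [cosine(anchor, t) / temperature for t in text_feats]
        peak = max(logits)  # subtract the maximum for numerical stability
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        losses.append(log_denominator - logits[b])
    return sum(losses) / len(losses)


def layerwise_loss(time_layers, text_layers, decay=0.8, temperature=0.1):
    """Decay-weighted sum of per-layer InfoNCE losses. Layer 0 is closest to
    the input and receives the largest weight (decay ** 0 == 1)."""
    return sum(
        (decay ** i) * info_nce(t, s, temperature)
        for i, (t, s) in enumerate(zip(time_layers, text_layers))
    )


# Toy pooled features: a batch of 2 samples with 2 dimensions each.
aligned = [[1.0, 0.0], [0.0, 1.0]]
swapped = [[0.0, 1.0], [1.0, 0.0]]
loss_aligned = info_nce(aligned, aligned)
loss_swapped = info_nce(aligned, swapped)
loss_two_layers = layerwise_loss([aligned, aligned], [aligned, aligned])
```

The aligned batch yields a loss near zero while the swapped batch is heavily penalised, which is the relative-similarity behaviour the contrastive objective relies on.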

4.3. Optimal Transport-Driven Output Layer Alignment

To achieve coherent and unified predictions from both time-series and language modalities, optimal transport (OT) [40] is utilized for distributional alignment in the output layer. Unlike traditional pointwise losses, OT aligns the distributions holistically, effectively preserving semantic relationships across modalities.

The OT loss function is defined as

$$\mathcal{L}_{\text{ot}} = \min_{P} \sum_{i,j} P_{ij}\, C_{ij} - \epsilon\, H(P),$$

where $P$ is the transport plan, $C$ is the cost matrix derived from pairwise distances between the outputs $Y_{\text{time}}$ and $Y_{\text{text}}$ of the two branches, and $H(P) = -\sum_{i,j} P_{ij} \log P_{ij}$ is the entropy regularization term controlled by $\epsilon$.

This optimization is solved using the Sinkhorn algorithm [41], which efficiently computes the transport plan by iteratively normalizing P under marginal constraints. This approach balances transport cost and regularization, ensuring smooth and stable alignment. By leveraging OT, the proposed method captures global semantic relationships between modalities, ensuring coherent alignment beyond pointwise matching. Additionally, entropy regularization in the optimization process enhances stability and efficiency, making the alignment both robust and computationally efficient.
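The Sinkhorn iteration mentioned above can be sketched in a few lines of plain Python. This is a minimal illustration under assumptions: the regularization strength of 0.1, the uniform marginals, and the toy cost matrix are chosen for the example, while the 100 iterations follow the setting reported in the implementation details. The function name `sinkhorn` is hypothetical.

```python
import math


def sinkhorn(cost, mu, nu, epsilon=0.1, n_iters=100):
    """Entropy-regularised optimal transport between histograms mu and nu.

    Returns the transport plan P and the transport cost sum_ij P_ij * C_ij.
    """
    n, m = len(mu), len(nu)
    # Gibbs kernel derived from the cost matrix.
    K = [[math.exp(-cost[i][j] / epsilon) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternately rescale rows and columns to match the marginals.
        u = [mu[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [nu[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    plan = [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
    total = sum(plan[i][j] * cost[i][j] for i in range(n) for j in range(m))
    return plan, total


# Toy cost: matching outputs are free, mismatched outputs cost 1.
cost = [[0.0, 1.0], [1.0, 0.0]]
plan, ot_cost = sinkhorn(cost, [0.5, 0.5], [0.5, 0.5])
```

Almost all mass stays on the diagonal of the plan, so the transport cost is close to zero when the two output sets already agree.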

4.4. Total Loss Function for Cross-Modal Distillation

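To ensure effective training and alignment across modalities, a combined loss function is employed to integrate multiple components. Specifically, the total loss for the temporal student branch, following [30,42], is a weighted sum of the supervised task loss $\mathcal{L}_{\text{task}}$, the feature alignment loss $\mathcal{L}_{\text{feature}}$, and the output consistency loss $\mathcal{L}_{\text{ot}}$. The total loss is given by:

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{task}} + \alpha\, \mathcal{L}_{\text{feature}} + \beta\, \mathcal{L}_{\text{ot}},$$

where $\alpha$ and $\beta$ are hyperparameters that balance the contributions.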

5. Experiments

The proposed method was evaluated on standard time-series forecasting benchmarks, with comparisons against several state-of-the-art methods for both long-term forecasting (Table 4) and short-term forecasting (Table 5). These comparisons followed competitive research practices [7,13,30]. Additionally, ablation studies (Table 6) were conducted to assess the impact of each component. All experiments were performed under uniform settings across methods, adhering to established protocols [7], with evaluation pipelines available online at https://github.com/thuml/Time-Series-Library (accessed on 27 March 2024).

5.1. Experimental Setups

5.1.1. Implementation Details

Following [25], GPT-2 [43] was used with its first six Transformer layers as the backbone. The model was fine-tuned using the LoRA method [38], with a rank of 8, an alpha value of 32, and a dropout rate of 0.1. These values align with commonly adopted settings shown to provide a good balance between performance and parameter efficiency in prior works [30]. Similarly, the prompt length was set to 8 based on established configurations [39] to maintain model flexibility without increasing computational overhead. The Adam optimizer [44] was applied with a learning rate of . The loss hyperparameters were configured as and . For the task loss, the smooth L1 loss was adopted for long-term forecasting, while SMAPE was used for short-term prediction. The optimal transport parameter was set to , and the number of iterations was fixed at 100. InfoNCE was implemented using the Lightly toolbox, https://github.com/lightly-ai/lightly (accessed on 6 May 2024). To balance the contributions from lower and higher layers, the decay factor was set to .

5.1.2. Baselines

Competitive baselines from the current time-series forecasting domain are selected and categorized as follows: (1) LLM-based models: CALF [30], TimeLLM [13], GPT4TS [25], TimeCMA [28], UniTime [27], S2IP-LLM [26], and DECA [29]; (2) Transformer-based models: PatchTST [22], iTransformer [24], Crossformer [23], FEDformer [21], Autoformer [45], ETSformer [46], PAttn [47], and LTrsf [47]; (3) CNN-based models: MICN [6] and TimesNet [7]; (4) MLP-based models: DLinear [8] and TiDE [9]. N-HiTS [48] and N-BEATS [49] are included for short-term forecasting. To provide further insights, the key characteristics of each baseline model are summarized in Table 2.

Table 2. Baseline models for time-series forecasting. The table categorizes models by architecture type and highlights their key mechanisms and applications.

5.1.3. Datasets

Experiments are conducted on two types of real-world datasets: long-term forecasting and short-term forecasting. For long-term forecasting, seven widely used datasets are utilized, including the Electricity Transformer Temperature (ETT) dataset with its four subsets (ETTh1, ETTh2, ETTm1, ETTm2), along with Weather, Electricity, and Traffic [45]. For short-term forecasting, the M4 dataset [51] is adopted, consisting of univariate marketing data collected at yearly, quarterly, and monthly intervals. Dataset statistics are summarized in Table 3.

Table 3. Statistics of all public datasets used in this study. The dimension indicates the number of time series (i.e., channels), and the dataset size is organized in training, validation, and testing.

5.1.4. Evaluation Metrics

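For both long-term and short-term forecasting, dataset details are provided in the corresponding sections. Evaluation is conducted using standard metrics for each forecasting type. For long-term forecasting, two commonly used evaluation metrics are employed: Mean Square Error (MSE) and Mean Absolute Error (MAE). For short-term forecasting, three evaluation metrics are applied: Symmetric Mean Absolute Percentage Error (SMAPE), Mean Absolute Scaled Error (MASE), and Overall Weighted Average (OWA), where OWA is a specific metric used in the M4 competition. The calculations for these metrics are defined as follows:

$$\mathrm{MSE} = \frac{1}{H} \sum_{h=1}^{H} \left(Y_h - \hat{Y}_h\right)^2, \qquad \mathrm{MAE} = \frac{1}{H} \sum_{h=1}^{H} \left|Y_h - \hat{Y}_h\right|,$$

$$\mathrm{SMAPE} = \frac{200}{H} \sum_{h=1}^{H} \frac{\left|Y_h - \hat{Y}_h\right|}{\left|Y_h\right| + \left|\hat{Y}_h\right|}, \qquad \mathrm{MASE} = \frac{1}{H} \sum_{h=1}^{H} \frac{\left|Y_h - \hat{Y}_h\right|}{\frac{1}{H-s} \sum_{j=s+1}^{H} \left|Y_j - Y_{j-s}\right|},$$

$$\mathrm{OWA} = \frac{1}{2} \left[ \frac{\mathrm{SMAPE}}{\mathrm{SMAPE}_{\text{Naïve2}}} + \frac{\mathrm{MASE}}{\mathrm{MASE}_{\text{Naïve2}}} \right].$$

Here, s represents the periodicity of the time-series data, and H denotes the number of data points (i.e., the prediction horizon in our case). $Y_h$ and $\hat{Y}_h$ correspond to the ground truth and predicted values at the h-th step, where $h \in \{1, \ldots, H\}$. The subscript Naïve2 denotes the reference baseline of the M4 competition.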
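For concreteness, the metrics can be written as short plain-Python functions. This sketch follows the common M4-style definitions used in recent forecasting benchmarks; the choice to compute the MASE scaling term from seasonal differences within the evaluated horizon, the function names, and the toy values are assumptions of the example. The Naïve2 reference scores passed to `owa` must come from the benchmark and are not computed here.

```python
def mse(y_true, y_pred):
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    return sum(abs(y - p) for y, p in zip(y_true, y_pred)) / len(y_true)


def smape(y_true, y_pred):
    # Undefined when a ground-truth value and its prediction are both zero.
    return 200.0 / len(y_true) * sum(
        abs(y - p) / (abs(y) + abs(p)) for y, p in zip(y_true, y_pred)
    )


def mase(y_true, y_pred, s):
    h = len(y_true)
    # Mean absolute seasonal difference with periodicity s (assumed scaling).
    scale = sum(abs(y_true[j] - y_true[j - s]) for j in range(s, h)) / (h - s)
    return mae(y_true, y_pred) / scale


def owa(smape_model, mase_model, smape_naive2, mase_naive2):
    # Average of the two scores relative to the Naive2 reference scores.
    return 0.5 * (smape_model / smape_naive2 + mase_model / mase_naive2)


y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.0, 2.0, 3.0, 5.0]
scores = {
    "mse": mse(y_true, y_pred),
    "mae": mae(y_true, y_pred),
    "smape": smape(y_true, y_pred),
    "mase": mase(y_true, y_pred, s=1),
}
```

An OWA below 1 indicates that a model beats the Naïve2 reference on the combined SMAPE and MASE criteria.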

Input Lengths and Prediction Horizons. (1) Long-term Forecasting: The input time-series length T is fixed at 96 to ensure a fair comparison across datasets. Four distinct prediction horizons are considered: $H \in \{96, 192, 336, 720\}$. (2) Short-term Forecasting: The prediction horizons are relatively short, ranging from 6 to 48. Correspondingly, the input lengths are set to twice the respective prediction horizons.

5.2. Main Results

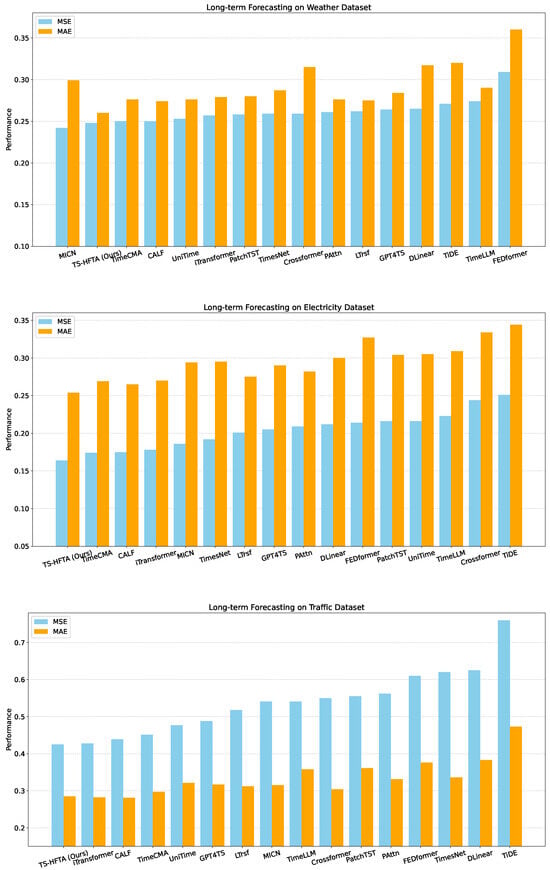

5.2.1. Long-Term Forecasting

Table 4 presents the quantitative results of our method and other state-of-the-art approaches on long-term forecasting benchmarks. To provide a more intuitive understanding, Figure 4 visualizes the forecasting results on representative datasets, including Weather, Electricity, and Traffic. These visualizations clearly demonstrate the effectiveness of our proposed TS-HTFA framework compared with other baselines, highlighting its superior capability in capturing long-term dependencies and reducing prediction errors.

Table 4. Multivariate long-term forecasting results with input length $T = 96$. Results are averaged over 4 prediction lengths $H \in \{96, 192, 336, 720\}$. A lower value indicates better performance, where bold red indicates the best result, and bold blue indicates the second-best result.

Figure 4. Forecasting results on three real-world datasets from top to bottom: Weather, Electricity, and Traffic. These results highlight the performance of different models on long-term multivariate forecasting tasks, showcasing key trends and model comparisons.

Specifically, on the ETTm2 dataset, TS-HTFA achieves an MAE of 0.301, a 6.2% relative improvement over CALF’s MAE of 0.321. This improvement indicates that TS-HTFA is better equipped to minimize errors in long-horizon predictions, even for challenging datasets with complex temporal patterns. On the Electricity dataset, TS-HTFA records an MSE of 0.164, which is 6.3% lower than CALF’s 0.175, further highlighting the model’s capacity to provide more accurate predictions.
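The quoted percentages are relative error reductions with respect to the baseline. The short check below reproduces them from the reported scores; the helper name `relative_improvement` is introduced only for this example.

```python
def relative_improvement(baseline, ours):
    """Relative error reduction in percent (lower error is better)."""
    return 100.0 * (baseline - ours) / baseline


# Scores as reported in the text: CALF versus TS-HTFA.
ettm2_mae_gain = relative_improvement(0.321, 0.301)
electricity_mse_gain = relative_improvement(0.175, 0.164)
```

Rounded to one decimal place, these evaluate to 6.2 and 6.3, matching the figures in the text.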

Beyond these datasets, TS-HTFA also achieves competitive performance on the Weather and Traffic datasets. For example, in the Weather dataset, which contains highly seasonal and dynamic patterns, TS-HTFA’s ability to model long-term dependencies results in lower prediction errors compared with both TimeLLM [13] and CALF [30]. Similarly, in the Electricity dataset, where the relationships among time-series variables are intricate and interdependent, TS-HTFA outperforms competitors by effectively leveraging its architecture to capture these interactions over extended periods.

It is noted that PAttn [47] and LTrsf [47] do not exhibit high performance when evaluated under a uniform and comparable setting. Notably, the sequence modeling capabilities of LLMs are significantly weakened when the input sequence length becomes excessively large. This observation aligns with insights from the recent NeurIPS 2024 workshop by Christoph Bergmeir, who emphasized that input window length is a critical hyperparameter. He further noted that restricting input lengths to smaller values often favors more complex models over simpler ones (https://cbergmeir.com/talks/neurips2024/ (accessed on 15 December 2024)).

The superior performance of TS-HTFA can be attributed to its design, which emphasizes hierarchical alignment strategies. Unlike TimeLLM [13], which uses a sequential structure without an explicit alignment strategy, and CALF [30], which employs naive cross-attention mechanisms, TS-HTFA adopts a prompt-enhanced cross-attention mechanism and a dynamic adaptive gating approach to activate the sequence modeling capabilities of large language models. This virtual text generation allows TS-HTFA to integrate semantics derived from compressed word embeddings while preserving the numerical characteristics of the time-series data. Consistent with recent studies [52,53], our findings support the conclusion that LLMs hold significant potential for advancing time-series analysis.

5.2.2. Short-Term Forecasting

Table 5 presents the quantitative results of our method and other state-of-the-art methods on short-term forecasting benchmarks. TS-HTFA achieves the best performance, with the lowest SMAPE (11.651), MASE (1.563), and OWA (0.837) averaged across sampling intervals, marking a 3.5% improvement over CALF. These results demonstrate TS-HTFA’s ability to deliver accurate short-term predictions, further highlighting its robustness in handling diverse temporal patterns.

Table 5.

Short-term forecasting results on the M4 dataset. Input lengths are and prediction are . The reported rows are weighted averages over all datasets under different sampling intervals. A lower value indicates better performance, where bold red indicates the best result, and bold blue indicates the second-best result.

Overall, LLM-based methods [13,25,30] consistently outperform traditional architectures such as MLP-based models [8,9], CNNs [5,6,7], and Transformer-based models [21,22,23,24,45,46]. The superiority of LLM-based approaches lies in their enhanced ability to capture rich contextual information and adapt to a wide range of tasks, enabling them to generalize across different datasets and temporal granularities. The M4 [51] dataset, which encompasses multiple frequencies (yearly, quarterly, monthly, etc.), presents significant challenges for traditional architectures because they are limited in capturing patterns specific to varying periodicities. Traditional models often struggle to adapt their feature extraction mechanisms across diverse temporal granularities, leading to suboptimal performance on datasets containing mixed-frequency components. TS-HTFA’s strong performance on this dataset demonstrates its effectiveness in modeling diverse seasonal and trend components, which are prevalent in marketing and economic data. The ability to generalize across various frequencies underscores TS-HTFA’s flexibility and adaptability in real-world applications.
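For reference, the three metrics reported in Table 5 follow the standard M4 competition definitions. The sketch below is a minimal NumPy illustration rather than the authors' evaluation code; the function names, the toy series, and the Naïve2 baseline scores passed to `owa` are hypothetical values chosen for the example.

```python
import numpy as np

def smape(y_true, y_pred):
    """Symmetric mean absolute percentage error, in percent."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    denom = np.abs(y_true) + np.abs(y_pred)
    # Treat 0/0 terms as zero error instead of dividing by zero.
    ratio = np.divide(np.abs(y_true - y_pred), denom,
                      out=np.zeros_like(denom), where=denom > 0)
    return 200.0 * ratio.mean()

def mase(history, y_true, y_pred, season):
    """Mean absolute scaled error; scale = in-sample seasonal-naive MAE."""
    history = np.asarray(history, float)
    scale = np.abs(history[season:] - history[:-season]).mean()
    error = np.abs(np.asarray(y_true, float) - np.asarray(y_pred, float)).mean()
    return error / scale

def owa(smape_model, mase_model, smape_naive2, mase_naive2):
    """Overall weighted average relative to the Naive2 baseline."""
    return 0.5 * (smape_model / smape_naive2 + mase_model / mase_naive2)

# Toy usage with made-up numbers.
toy_mase = mase(history=[1, 2, 3, 4, 5], y_true=[6, 7], y_pred=[5, 9], season=1)
toy_owa = owa(10.0, 1.5, 20.0, 3.0)
print(toy_mase, toy_owa)  # 1.5 0.5
```

OWA is relative by construction: a value below 1 means the model beats the Naïve2 baseline on the average of the two normalized metrics.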

5.2.3. Visualization

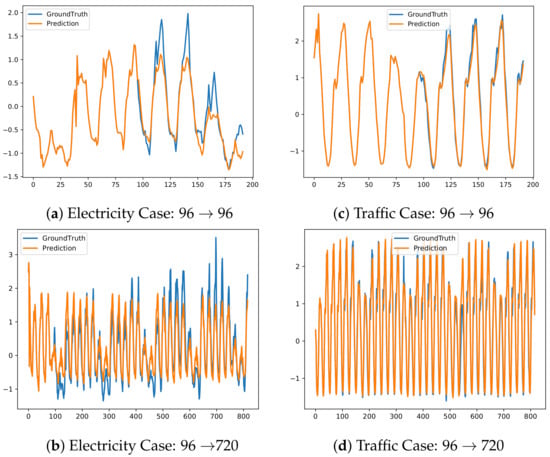

Figure 5 presents the long-term forecasting results for the Electricity and Traffic datasets under the input-96-predict-96 and input-96-predict-720 settings. Our TS-HTFA demonstrates a strong alignment between the predictions and the ground truth, especially on the Traffic dataset, where the predictions closely match the actual values. This superior performance can be attributed to the strong periodicity and inherent temporal symmetry of traffic patterns, such as daily and weekly cycles, which the model effectively captures. Conversely, the Electricity dataset is characterized by higher variability and complexity. It is affected by various factors including weather, industrial activities, and unexpected events. As a result, there are occasional deviations between the predictions and the ground truth. These differences emphasize the challenges presented by noisier and less predictable data.

Figure 5.

Long-term forecasting visualization cases for Electricity and Traffic. The blue lines represent the actual values, while the orange lines indicate the model’s predictions.

5.3. Ablation Studies

5.3.1. Ablation on Different Stage Alignment

To better understand the contribution of each component in TS-HTFA, ablation studies were conducted on the M4 dataset. These studies isolate the effect of individual modules, offering insights into their specific roles and their synergy in achieving state-of-the-art performance. Table 6 summarizes the results on the M4 dataset, with SMAPE, MASE, and OWA as key metrics. Lower values indicate better performance.

Table 6.

Ablation study on the M4 dataset. A lower value indicates better performance, where bold red indicates the best result, and bold blue indicates the second-best result.

Impact of dynamic adaptive gating: The dynamic adaptive gating module reduces SMAPE from 11.956 to 11.821, demonstrating its pivotal role in adaptively generating the paired virtual text tokens. By generating modality-specific virtual tokens while preserving numerical properties, the module effectively enhances the sequence modeling capabilities, especially for large input spaces.

Impact of the feature loss: Adding the Intermediate Layer Alignment Feature Loss leads to reductions in MASE and OWA, highlighting its effectiveness in stabilizing intermediate representations and refining temporal coherence. However, it results in a slight increase in SMAPE, likely due to localized trade-offs in optimizing specific temporal or feature patterns. Despite this, the feature loss ensures robust multivariate correlation handling and contributes significantly to overall model performance.

Impact of the optimal transport loss: Incorporating the Output Layer Alignment Optimal Transport Loss further reduces SMAPE to 11.651. This module ensures precise alignment of output distributions, refining predictions during the final stage and contributing to overall numerical stability.

Overall Performance: The complete model, integrating all three alignments, achieves state-of-the-art performance across metrics, with SMAPE (11.651), MASE (1.563), and OWA (0.837). This confirms the necessity and complementarity of dynamic adaptive gating, the feature loss, and the optimal transport loss in advancing time-series forecasting. Each alignment contributes differently across stages. Dynamic adaptive gating has the greatest influence at the input stage, driving the adaptive virtual text tokens. The feature loss stabilizes intermediate layers by maintaining feature integrity, while the optimal transport loss refines final predictions, ensuring output precision.
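To make the output-stage alignment term more concrete, the following is a minimal sketch of an entropy-regularized optimal transport cost computed with Sinkhorn iterations [41] between two batches of output vectors. It assumes uniform weights, a squared Euclidean cost, and illustrative values for `eps` and `n_iter`; the exact cost and regularization used by TS-HTFA are not restated in this section, so none of these choices should be read as the authors' implementation.

```python
import numpy as np

def sinkhorn_cost(x, y, eps=0.5, n_iter=200):
    """Entropy-regularized OT cost between point sets x (n, d) and y (m, d)."""
    x, y = np.asarray(x, float), np.asarray(y, float)
    cost = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)  # pairwise squared distances
    top = cost.max()
    scaled = cost / top if top > 0 else cost   # rescale to [0, 1] so exp() stays well-behaved
    a = np.full(len(x), 1.0 / len(x))          # uniform source weights (assumption)
    b = np.full(len(y), 1.0 / len(y))          # uniform target weights (assumption)
    kernel = np.exp(-scaled / eps)
    u = np.ones_like(a)
    for _ in range(n_iter):                    # alternate scalings to match both marginals
        v = b / (kernel.T @ u)
        u = a / (kernel @ v)
    plan = u[:, None] * kernel * v[None, :]    # transport plan
    return float((plan * cost).sum()), plan

# Toy usage: outputs that nearly agree versus outputs that are far apart.
rng = np.random.default_rng(0)
outputs = rng.normal(size=(6, 3))
near_cost, near_plan = sinkhorn_cost(outputs, outputs + 0.01)
far_cost, far_plan = sinkhorn_cost(outputs, outputs + 10.0)
print(near_cost < far_cost)
```

Because of the entropic term, the cost between nearly identical batches is small but not exactly zero; lowering `eps` sharpens the plan at the price of slower convergence.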

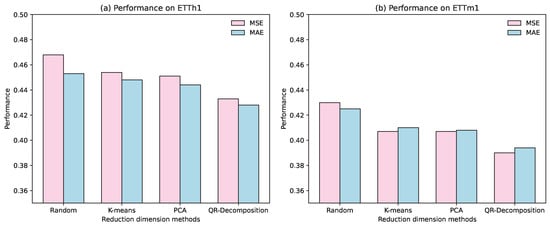

5.3.2. Ablation on Different Reduction Methods

To evaluate different approaches for vocabulary compression, a comparison was conducted among QR decomposition (https://numpy.org/doc/stable/reference/generated/numpy.linalg.qr.html (accessed on 20 November 2022)), PCA (https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.PCA.html (accessed on 9 May 2023)), K-means clustering (https://scikit-learn.org/stable/modules/clustering.html#k-means (accessed on 9 May 2023)), and random selection, using the same model accuracy metric. For a fair comparison, the vocabulary size in each method is reduced to match the rank of the matrix obtained from QR decomposition. Figure 6 shows that QR decomposition achieved the highest performance. Its key advantage lies in maintaining orthogonality, ensuring that the prototype vectors can span the semantic space of large language models. This preservation of semantic structure gives it an edge over PCA and K-means, which, while effective, lose critical semantic relationships or are sensitive to initialization. Random selection performed the worst, highlighting the risks of unstructured compression. A likely reason is that, because of semantic relationships between words, embedding vectors in a high-dimensional space are not pairwise orthogonal, so a randomly chosen subset may cover the semantic space poorly.

Figure 6.

Ablation on different reduction methods on (a) ETTh1 and (b) ETTm1 datasets.
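As a rough illustration of QR-based vocabulary compression, the sketch below runs a greedy column-pivoted QR (modified Gram-Schmidt) over a toy embedding matrix and keeps one orthonormal prototype per linearly independent direction, so the number of prototypes equals the matrix rank. Column pivoting, the function name `pivoted_qr_prototypes`, the tolerance, and the random toy vocabulary are all assumptions made for this example; this section only cites `numpy.linalg.qr` as the referenced routine and does not specify how the prototypes are read off the factorization.

```python
import numpy as np

def pivoted_qr_prototypes(embeddings, tol=1e-8):
    """Greedy column-pivoted QR (modified Gram-Schmidt) over word vectors.

    embeddings: array of shape (vocab_size, dim).
    Returns (basis, picked): `basis` has shape (rank, dim) with orthonormal
    rows; `picked` lists the vocabulary indices chosen as pivots.
    """
    residual = np.array(embeddings, dtype=float)   # copy; rows are word vectors
    scale = np.linalg.norm(residual, axis=1).max()
    basis, picked = [], []
    for _ in range(min(residual.shape)):
        norms = np.linalg.norm(residual, axis=1)
        j = int(np.argmax(norms))                  # pivot: largest remaining residual
        if norms[j] <= tol * scale:                # everything left is already spanned
            break
        q = residual[j] / norms[j]
        basis.append(q)
        picked.append(j)
        residual -= np.outer(residual @ q, q)      # remove the new direction from all rows
    return np.array(basis), picked

# Toy vocabulary: 50 "words" in 8 dimensions that only span a 5-dimensional subspace.
rng = np.random.default_rng(0)
toy_vocab = rng.normal(size=(50, 5)) @ rng.normal(size=(5, 8))
basis, picked = pivoted_qr_prototypes(toy_vocab)
print(basis.shape, len(picked))
```

In this sketch, `picked` records which vocabulary entries served as pivots, so the same routine could return the original embeddings at those indices instead of the orthonormalized rows if unmodified word vectors are preferred.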

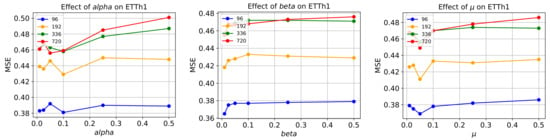

5.3.3. Hyperparameter Study

To analyze the impact of hyperparameters on model performance, a sensitivity analysis was conducted by varying , , and on the ETTh1 dataset. As shown in Figure 7, MSE remains relatively stable within these ranges. The best performance (MSE: 0.423) is achieved when , , and . Although further fine-tuning may yield minor improvements, the primary objective is to validate the robustness and effectiveness of the proposed method rather than optimizing hyperparameters for each dataset. Therefore, a uniform set of hyperparameters is applied across all datasets instead of re-tuning for each one. The results demonstrate that the proposed approach performs reliably across various settings, highlighting its practicality for diverse time-series forecasting tasks.

Figure 7.

Hyperparameter sensitivity analysis for the ETTh1 dataset. Each subfigure presents the effect of varying , , and values on the model’s performance.

5.4. Theoretical Complexity Analysis and Practical Model Efficiency

This section provides a comprehensive evaluation of the computational complexity and efficiency of the proposed TS-HTFA. The analysis includes comparisons with various baselines, highlighting both theoretical complexity and practical efficiency in terms of floating-point operations (FLOPs), parameter count, training speed, and prediction performance.

Theoretical Complexity: Table 7 shows the theoretical computational complexity per layer for various Transformer-based models. Unlike conventional Transformer-based approaches, whose computational complexity grows quadratically with the input sequence length T, our TS-HTFA model, inspired by [24], primarily associates its complexity with the number of channels C. Since the temporal dimension in time-series data is typically much larger than the number of channels, the inverted Transformer architecture effectively captures inter-channel dependencies. This design choice significantly reduces the overall computational complexity compared with other models.

Table 7.

Per-layer theoretical computational complexity of Transformer-based methods. T and H denote the input and prediction lengths, C the number of channels, and p the patch length.
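A quick back-of-the-envelope comparison illustrates the scaling argument. The snippet counts pairwise attention scores per head and layer when tokens correspond to time steps versus channels, using the 7 channels of ETTh1 and three illustrative input lengths; constant factors and hidden dimensions are ignored, so the numbers indicate scaling only and are not taken from Table 7.

```python
def attention_scores(n_tokens: int) -> int:
    """Number of pairwise attention scores for one head in one layer."""
    return n_tokens * n_tokens

C = 7  # number of channels in ETTh1

for T in (96, 336, 720):  # illustrative input lengths
    per_timestep = attention_scores(T)  # one token per time step: grows as T^2
    per_channel = attention_scores(C)   # one token per channel (inverted): fixed at C^2
    print(T, per_timestep, per_channel)
# 96 9216 49
# 336 112896 49
# 720 518400 49
```

The channel-wise count stays constant as the input window grows, which is the property the inverted design relies on; the advantage narrows for datasets with many channels.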

Model Efficiency: We compare the baseline models and the proposed TS-HTFA model in terms of floating-point operations (FLOPs), parameter count, training speed, and prediction performance. Table 8 reports the results on the ETTh1 dataset, where all models use an input and prediction length of 96 with the same batch size. The proposed model consistently outperforms other Transformer-based and CNN-based models, while its computational complexity is comparable to or lower than that of other LLM-based models and its training time remains competitive. Although our model has relatively higher FLOPs compared with traditional architectures, the overall number of trainable parameters remains low. Additionally, LoRA [38] can further reduce the parameter count while maintaining performance.

Table 8.

Efficiency comparison on the ETTh1 dataset (96→96). A lower value indicates better performance, where bold red indicates the best result, and bold blue indicates the second-best result.

Notably, the computational cost during training corresponds to the dual-tower structure; however, only a single-tower structure is needed during inference for time-series data, reducing the computational overhead by nearly half. Additionally, various inference optimization strategies for large language models, such as quantization, can further improve efficiency, representing promising directions for future exploration and optimization.
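To give a sense of how much LoRA [38] can shrink the trainable parameter count, the arithmetic below compares full fine-tuning of a single projection with a rank-r adapter. The 768 to 2304 shape corresponds to the fused query/key/value projection of GPT-2 small, and the rank of 8 is a hypothetical choice; which layers and rank TS-HTFA actually adapts is not stated in this section.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole projection matrix is fine-tuned."""
    return d_in * d_out

def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights of a LoRA adapter: A is (rank, d_in), B is (d_out, rank)."""
    return rank * (d_in + d_out)

d_in, d_out, rank = 768, 2304, 8   # assumed shape and hypothetical rank
full = full_params(d_in, d_out)
lora = lora_params(d_in, d_out, rank)
print(full, lora, full // lora)    # 1769472 24576 72
```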

6. Discussion

Differences from Other Work. Our proposed method introduces several novel advancements compared with existing approaches. Unlike methods such as TimeLLM [13] and S2IP-LLM [26], which focus solely on input alignment, or DECA [29], which emphasizes intermediate alignment, or TimeCMA [28], which concentrates on output layer alignment, our three-stage alignment framework (input, intermediate, and output layers) provides a more comprehensive solution for leveraging LLMs in time-series forecasting. This is not merely a superficial combination of existing techniques but is supported by theoretical analysis (see Section 3.1). Specifically, our use of QR decomposition for LLM word embeddings ensures orthogonality and better spans the language model’s embedding space, leading to more efficient and effective alignment. This approach differs significantly from TimeLLM [13], which is based on resource-intensive online learning due to the large vocabulary size, and CALF [30], which may suffer performance degradation due to its use of PCA. Additionally, our TS-GAVTG module addresses the critical challenge of text-free scenarios by generating pseudo-text paired with time-series data. Unlike previous methods [13,26,30], which assume that all time-series tokens can retrieve related word embeddings, our approach employs an adaptive gating mechanism using prompt-based cross-attention to dynamically align time-series tokens with word embeddings. This innovation allows our model to handle text-free scenarios more effectively, making it more versatile and robust compared with existing approaches.

Challenges and Limitations. Despite its strengths, our method faces several challenges. First, our performance improvements are primarily driven by experimental results, relying on implicit alignment mechanisms to incorporate knowledge from LLMs. This approach, while effective, lacks fine-grained interpretability, making it difficult to fully understand how the model achieves its predictions. Second, while LLMs excel at sequence modeling, recent advances in reasoning, such as DeepSeek-R1 [54], suggest promising directions for further exploration. Specifically, applying reasoning capabilities to multimodal time-series data could unlock new insights and improve model performance.

Future Work. Future work should focus on two directions: (1) interpretability, that is, developing techniques to explain how the implicit alignment mechanisms contribute to performance improvements; and (2) reasoning over multimodal time-series data, that is, exploring the integration of multimodal LLMs to empower time-series reasoning [55], thereby enhancing decision-making and real-world applications.

7. Conclusions

In this paper, we propose TS-HTFA, a novel framework designed to enhance time-series forecasting by integrating LLMs through hierarchical text-free alignment. Unlike existing methods that focus on individual alignment components, TS-HTFA provides a holistic and theoretically grounded approach by aligning the input, feature, and output layers across modalities. TS-HTFA has several key advantages:

- Independence from paired textual data: TS-HTFA effectively leverages the representational power of LLMs without requiring annotated textual descriptions, reducing data preparation effort and enhancing adaptability.

- Enhanced alignment between numerical and linguistic models: By generating virtual text tokens, TS-HTFA allows LLMs to fully utilize their pretrained capabilities while maintaining the structural integrity of the time-series data.

- Lower computational cost: During inference, the linguistic branch is discarded, significantly reducing computational overhead compared with traditional LLM-based methods.

Additionally, TS-HTFA incorporates a dynamic adaptive gating mechanism to address input modality mismatches, layerwise contrastive learning to ensure intermediate feature consistency, and an optimal transport-driven strategy to reduce task-level output discrepancies. Experimental results highlight the framework’s superior performance across multiple benchmarks, achieving an approximately 5% improvement in long-term forecasting and a 1% overall improvement in short-term forecasting compared with the second-best method. These results confirm the value of integrating these mechanisms into a unified framework.

Author Contributions

Conceptualization, P.W. and H.Z.; methodology, P.W. and S.D.; software, P.W. and Y.W.; validation, Y.W. and S.D.; formal analysis, Q.X. and W.Z.; data curation, P.W. and W.Y.; writing—original draft preparation, P.W., W.Z. and L.Z.; writing—review and editing, H.Z., T.Q. and Q.X.; visualization, P.W. and W.Y.; supervision, T.Q.; resources, L.Z.; funding acquisition, L.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by Inspur Cloud Information Technology Co., Ltd. through the Intellectual Property Affairs Fund (2025, Independent Research Project: “Hairuo Large Model V5.0”) and by the Central Guidance for Local Science and Technology Development Fund of Shandong Province (2024, Project: “Research and Industrialization of AI Industry-Specific Large Models”).

Data Availability Statement

All the datasets are contained within this article and can be downloaded from https://github.com/thuml/Time-Series-Library (accessed on 3 May 2024).

Conflicts of Interest

Author Liang Zhao is employed by Inspur Cloud Information Technology Co., Ltd. and acted as the company representative for the funding of this study. The funder provided computer resources, facilitated funding arrangements, and contributed to the manuscript through discussions.

References

- Zhang, C.; Sjarif, N.N.A.; Ibrahim, R. Deep Learning Models for Price Forecasting of Financial Time Series: A Review of Recent Advancements: 2020–2022. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2024, 14, e1519.

- Gao, P.; Liu, T.; Liu, J.W.; Lu, B.L.; Zheng, W.L. Multimodal Multi-View Spectral-Spatial-Temporal Masked Autoencoder for Self-Supervised Emotion Recognition. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1926–1930.

- Liu, P.; Wu, B.; Li, N.; Dai, T.; Lei, F.; Bao, J.; Jiang, Y.; Xia, S.T. Wftnet: Exploiting global and local periodicity in long-term time series forecasting. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 5960–5964.

- Pan, J.; Ji, W.; Zhong, B.; Wang, P.; Wang, X.; Chen, J. DUMA: Dual mask for multivariate time series anomaly detection. IEEE Sensors J. 2022, 23, 2433–2442.

- Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.

- Wang, H.; Peng, J.; Huang, F.; Wang, J.; Chen, J.; Xiao, Y. MICN: Multi-scale local and global context modeling for long-term series forecasting. In Proceedings of the International Conference on Learning Representations, Virtual Event, 25–29 April 2022.

- Wu, H.; Hu, T.; Liu, Y.; Zhou, H.; Wang, J.; Long, M. TimesNet: Temporal 2D-Variation Modeling for General Time Series Analysis. In Proceedings of the Eleventh International Conference on Learning Representations, Virtual Event, 25–29 April 2022.

- Zeng, A.; Chen, M.; Zhang, L.; Xu, Q. Are transformers effective for time series forecasting? In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 11121–11128.

- Das, A.; Kong, W.; Leach, A.; Sen, R.; Yu, R. Long-term Forecasting with TiDE: Time-series Dense Encoder. arXiv 2023, arXiv:2304.08424.

- Xue, H.; Salim, F.D. Promptcast: A new prompt-based learning paradigm for time series forecasting. IEEE Trans. Knowl. Data Eng. 2023, 36, 6851–6864.

- Cao, D.; Jia, F.; Arik, S.O.; Pfister, T.; Zheng, Y.; Ye, W.; Liu, Y. Tempo: Prompt-based generative pre-trained transformer for time series forecasting. arXiv 2023, arXiv:2310.04948.

- Chang, C.; Peng, W.C.; Chen, T.F. Llm4ts: Two-stage fine-tuning for time-series forecasting with pre-trained llms. arXiv 2023, arXiv:2308.08469.

- Jin, M.; Wang, S.; Ma, L.; Chu, Z.; Zhang, J.Y.; Shi, X.; Chen, P.Y.; Liang, Y.; Li, Y.F.; Pan, S.; et al. Time-llm: Time series forecasting by reprogramming large language models. arXiv 2023, arXiv:2310.01728.

- Sun, C.; Li, Y.; Li, H.; Hong, S. TEST: Text prototype aligned embedding to activate LLM’s ability for time series. arXiv 2023, arXiv:2308.08241.

- Wang, Z.; Ji, H. Open vocabulary electroencephalography-to-text decoding and zero-shot sentiment classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 22 February–1 March 2022; Volume 36, pp. 5350–5358.

- Qiu, J.; Han, W.; Zhu, J.; Xu, M.; Weber, D.; Li, B.; Zhao, D. Can brain signals reveal inner alignment with human languages? In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; pp. 1789–1804.

- Li, J.; Liu, C.; Cheng, S.; Arcucci, R.; Hong, S. Frozen language model helps ECG zero-shot learning. In Proceedings of the Medical Imaging with Deep Learning, PMLR, Paris, France, 3–5 July 2024; pp. 402–415.

- Jia, F.; Wang, K.; Zheng, Y.; Cao, D.; Liu, Y. GPT4MTS: Prompt-based Large Language Model for Multimodal Time-series Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 23343–23351.

- Yu, H.; Guo, P.; Sano, A. ECG Semantic Integrator (ESI): A Foundation ECG Model Pretrained with LLM-Enhanced Cardiological Text. arXiv 2024, arXiv:2405.19366.

- Kim, J.W.; Alaa, A.; Bernardo, D. EEG-GPT: Exploring Capabilities of Large Language Models for EEG Classification and Interpretation. arXiv 2024, arXiv:2401.18006.

- Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 27268–27286.

- Nie, Y.; Nguyen, N.H.; Sinthong, P.; Kalagnanam, J. A Time Series is Worth 64 Words: Long-term Forecasting with Transformers. In Proceedings of the International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

- Zhang, Y.; Yan, J. Crossformer: Transformer utilizing cross-dimension dependency for multivariate time series forecasting. In Proceedings of the International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

- Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. itransformer: Inverted transformers are effective for time series forecasting. arXiv 2023, arXiv:2310.06625.

- Zhou, T.; Niu, P.; Wang, X.; Sun, L.; Jin, R. One Fits All: Power General Time Series Analysis by Pretrained LM. arXiv 2023, arXiv:2302.11939.

- Pan, Z.; Jiang, Y.; Garg, S.; Schneider, A.; Nevmyvaka, Y.; Song, D. S2 IP-LLM: Semantic Space Informed Prompt Learning with LLM for Time Series Forecasting. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Liu, X.; Hu, J.; Li, Y.; Diao, S.; Liang, Y.; Hooi, B.; Zimmermann, R. Unitime: A language-empowered unified model for cross-domain time series forecasting. In Proceedings of the ACM on Web Conference 2024, Singapore, 13–17 May 2024; pp. 4095–4106.

- Liu, C.; Xu, Q.; Miao, H.; Yang, S.; Zhang, L.; Long, C.; Li, Z.; Zhao, R. TimeCMA: Towards LLM-Empowered Time Series Forecasting via Cross-Modality Alignment. arXiv 2025, arXiv:2406.01638.

- Hu, Y.; Li, Q.; Zhang, D.; Yan, J.; Chen, Y. Context-Alignment: Activating and Enhancing LLM Capabilities in Time Series. In Proceedings of the International Conference on Learning Representations, Singapore, 24–28 April 2025.

- Liu, P.; Guo, H.; Dai, T.; Li, N.; Bao, J.; Ren, X.; Jiang, Y.; Xia, S.T. CALF: Aligning LLMs for Time Series Forecasting via Cross-modal Fine-Tuning. arXiv 2025, arXiv:2403.07300.

- Shen, J.; Li, L.; Dery, L.M.; Staten, C.; Khodak, M.; Neubig, G.; Talwalkar, A. Cross-Modal Fine-Tuning: Align then Refine. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023.

- Chen, P.Y. Model Reprogramming: Resource-Efficient Cross-Domain Machine Learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 22584–22591.

- Pang, Z.; Xie, Z.; Man, Y.; Wang, Y.X. Frozen Transformers in Language Models Are Effective Visual Encoder Layers. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Lai, Z.; Wu, J.; Chen, S.; Zhou, Y.; Hovakimyan, N. Residual-based Language Models are Free Boosters for Biomedical Imaging Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5086–5096.

- Jin, Y.; Hu, G.; Chen, H.; Miao, D.; Hu, L.; Zhao, C. Cross-Modal Distillation for Speaker Recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 7–14 February 2023.

- Vinod, R.; Chen, P.Y.; Das, P. Reprogramming Pretrained Language Models for Protein Sequence Representation Learning. arXiv 2023, arXiv:2301.02120.

- Zhao, Z.; Fan, W.; Li, J.; Liu, Y.; Mei, X.; Wang, Y.; Wen, Z.; Wang, F.; Zhao, X.; Tang, J.; et al. Recommender systems in the era of large language models (llms). IEEE Trans. Knowl. Data Eng. 2024.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. Lora: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

- Lee, Y.L.; Tsai, Y.H.; Chiu, W.C.; Lee, C.Y. Multimodal prompting with missing modalities for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14943–14952.

- Villani, C. Topics in Optimal Transportation; American Mathematical Society: Providence, RI, USA, 2021; Volume 58.

- Cuturi, M. Sinkhorn Distances: Lightspeed Computation of Optimal Transport. Adv. Neural Inf. Process. Syst. 2013, 26.

- Liu, Y.; Qin, G.; Huang, X.; Wang, J.; Long, M. Autotimes: Autoregressive time series forecasters via large language models. arXiv 2024, arXiv:2402.02370.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition Transformers with Auto-Correlation for Long-Term Series Forecasting. In Proceedings of the Advances in Neural Information Processing Systems, Red Hook, NY, USA, 6–14 December 2021.

- Woo, G.; Liu, C.; Sahoo, D.; Kumar, A.; Hoi, S.C.H. ETSformer: Exponential Smoothing Transformers for Time-series Forecasting. arXiv 2022, arXiv:2202.01381.

- Tan, M.; Merrill, M.; Gupta, V.; Althoff, T.; Hartvigsen, T. Are language models actually useful for time series forecasting? In Proceedings of the NeurIPS, Vancouver, BC, Canada, 9–15 December 2024.

- Challu, C.; Olivares, K.G.; Oreshkin, B.N.; Garza, F.; Mergenthaler, M.; Dubrawski, A. N-HiTS: Neural Hierarchical Interpolation for Time Series Forecasting. arXiv 2022, arXiv:2201.12886.

- Oreshkin, B.N.; Carpov, D.; Chapados, N.; Bengio, Y. N-BEATS: Neural basis expansion analysis for interpretable time series forecasting. arXiv 2019, arXiv:1905.10437.

- Qiu, X.; Hu, J.; Zhou, L.; Wu, X.; Du, J.; Zhang, B.; Guo, C.; Zhou, A.; Jensen, C.S.; Sheng, Z.; et al. TFB: Towards Comprehensive and Fair Benchmarking of Time Series Forecasting Methods. arXiv 2024, arXiv:2403.20150.

- Makridakis, S.; Spiliotis, E.; Assimakopoulos, V. The M4 Competition: Results, Findings, Conclusion and Way Forward. Int. J. Forecast. 2018, 34, 802–808.

- Jiang, Y.; Pan, Z.; Zhang, X.; Garg, S.; Schneider, A.; Nevmyvaka, Y.; Song, D. Empowering Time Series Analysis with Large Language Models: A Survey. arXiv 2024, arXiv:2402.03182.

- Jin, M.; Zhang, Y.; Chen, W.; Zhang, K.; Liang, Y.; Yang, B.; Wang, J.; Pan, S.; Wen, Q. Position: What Can Large Language Models Tell Us about Time Series Analysis. In Proceedings of the Forty-First International Conference on Machine Learning, Vienna, Austria, 21–27 July 2024.

- Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; Bi, X.; et al. Deepseek-r1: Incentivizing reasoning capability in llms via reinforcement learning. arXiv 2025, arXiv:2501.12948.

- Kong, Y.; Yang, Y.; Wang, S.; Liu, C.; Liang, Y.; Jin, M.; Zohren, S.; Pei, D.; Liu, Y.; Wen, Q. Position: Empowering Time Series Reasoning with Multimodal LLMs. arXiv 2025, arXiv:2502.01477.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).