Abstract

The prompt and precise detection of road damage is vital for effective infrastructure management, forming the foundation for intelligent transportation systems and cost-effective pavement maintenance. While current convolutional neural network (CNN)-based methodologies have made progress, they are fundamentally limited by treating damages as independent, isolated entities, thereby ignoring the intrinsic spatial symmetry and topological organization inherent in complex damage patterns like alligator cracking. This conceptual asymmetry in modeling leads to two major deficiencies: “context blindness,” which overlooks essential structural interrelations, and “temporal inconsistency” in video analysis, resulting in unstable, flickering predictions. To address this, we propose a Spatio-Temporal Graph Mamba You-Only-Look-Once (STG-Mamba-YOLO) network, a novel architecture that introduces a symmetry-informed, hierarchical reasoning process. Our approach explicitly models and integrates contextual dependencies across three levels to restore a holistic and consistent structural representation. First, at the pixel level, a Mamba state-space model within the YOLO backbone enhances the modeling of long-range spatial dependencies, capturing the elongated symmetry of linear cracks. Second, at the object level, an intra-frame damage Graph Network enables explicit reasoning over the topological symmetry among damage candidates, effectively reducing false positives by leveraging their relational structure. Third, at the sequence level, a Temporal Graph Mamba module tracks the evolution of this damage graph, enforcing temporal symmetry across frames to ensure stable, non-flickering results in video streams. Comprehensive evaluations on multiple public benchmarks demonstrate that our method outperforms existing state-of-the-art approaches. 
STG-Mamba-YOLO shows significant advantages in identifying intricate damage topologies while ensuring robust temporal stability, thereby validating the effectiveness of our symmetry-guided, multi-level contextual fusion paradigm for structural health monitoring.

1. Introduction

Maintaining public safety and mitigating the substantial economic impact of infrastructure decay heavily rely on the prompt and accurate assessment of road surface conditions [1]. In this domain, deep learning has triggered a significant methodological shift. Architectures based on Convolutional Neural Networks (CNNs), with the You Only Look Once (YOLO) series being a prominent example [2,3,4], have emerged as the dominant standard, due to their effective trade-off between inference speed and precision. These models are pivotal for automating the traditionally strenuous and time-consuming manual inspection, particularly on mobile data-gathering platforms [5].

Despite these advancements, the conventional object detection framework faces considerable challenges when applied to pavement analysis. A primary issue arises from a methodological limitation: treating defect detection as isolated, discrete prediction tasks. This approach overlooks a fundamental reality: road defects rarely exist as singular issues. Instead, they typically form patterns that are spatially interconnected and structurally complex. For instance, alligator cracking is characterized by a network of fissures, while potholes often occur alongside nearby longitudinal cracks. Existing detectors, optimized for localized, self-contained feature recognition, are thus unable to capture these essential relational dynamics. This data-centric approach, lacking structural context, increases the chance of misinterpreting complex patterns, resulting in false and isolated detections.

Additionally, a significant portion of practical road surveying involves processing video streams captured from moving vehicles. Conventional detectors often produce outputs that lack temporal coherence when applied frame by frame. A defect that is clearly identified in one frame may vanish in the next due to minor changes in lighting, motion blur, or perspective. This instability, known as “flickering,” seriously undermines the reliability of automated systems designed for continuous pavement monitoring and health assessment. Consequently, there is an urgent need for a robust mechanism that can enforce temporal consistency, making vision models more suitable for dynamic, video-based inspection tasks.

To address these challenges, we redefine the problem of road defect detection by shifting from isolated object recognition to a model of hierarchical, context-aware reasoning. We introduce the Spatio-Temporal Graph Mamba YOLO (STG-Mamba-YOLO), a novel framework engineered to explicitly model and fuse contextual information at three distinct, complementary levels: pixel, object, and sequence. This is accomplished by enhancing feature representations with long-range pixel dependencies, performing reasoning over a spatial graph of co-located objects, and modeling the temporal dynamics of these object graphs across frames.

The feature extractor must have a global receptive field to properly model pixel-level context, particularly for defects with high aspect ratios like elongated cracks. For this purpose, we employ the Mamba state-space model [6], a recent architecture noted for its ability to model long sequences with linear complexity. By strategically incorporating Mamba blocks into the YOLO backbone, we augment its ability to model long-range spatial dependencies within the feature maps. For object-level context, we introduce structural priors concerning the relationships between defect instances. This is achieved by building an intra-frame graph where defect proposals function as nodes. A Graph Attention Network (GAT) [7] is then used to update node representations by aggregating information from spatially and semantically proximate instances. To impose sequence-level context, our goal is temporal smoothness, based on the physical reality that road conditions change gradually, not erratically. We introduce a novel Temporal Graph Mamba module that operates on sequences of these defect graphs from adjacent frames. This module learns to predict a temporally consistent state, which is used to refine the final detections.

We performed extensive experimentation on several public benchmarks, such as RDD2022, to validate our approach. The empirical results show that our method consistently outperforms established baselines and current state-of-the-art detectors. Moreover, detailed ablation studies confirm each hierarchical level’s distinct and synergistic contributions, thereby validating our overall design philosophy. In summary, our contributions are fourfold:

- We formally identify and provide a systematic solution for the critical limitations of context blindness and temporal inconsistency in existing road defect detection methods.

- We introduce STG-Mamba-YOLO, a new hierarchical framework that effectively unifies three components: a Mamba-enhanced backbone, an intra-frame defect graph network, and a temporal graph Mamba module. This architecture fuses context at the pixel, object, and sequence levels.

- We develop a novel methodology for modeling the spatial topology of co-occurring defects using graph-based reasoning. This scheme is extended to enforce temporal stability in video by applying a state-space model to sequences of these graphs.

- We present comprehensive experimental results on challenging benchmarks, including in-depth ablation studies and qualitative visualizations, which collectively demonstrate that the proposed framework outperforms state-of-the-art alternatives.

2. Related Work

2.1. General Object Detection

The object detection field has witnessed a paradigm shift catalyzed by deep learning. Early methodologies were predominantly two-stage, exemplified by the R-CNN family [8], which first generate candidate region proposals and then classify them. While renowned for their accuracy, these detectors carry a computational overhead that precluded deployment in real-time scenarios. This limitation spurred the development of one-stage detectors, with the YOLO series [2,3,4] and the Single Shot Detector (SSD) [9] emerging as the most prominent paradigms. These methods achieve real-time performance by directly predicting bounding boxes and class probabilities in a single forward pass. Recent work continues to push the state of the art; for instance, YOLOv9 introduced programmable gradient information to optimize training [10], while YOLO-World has enabled open-vocabulary detection.

Concurrently, Transformer-based architectures, initially conceived for natural language processing, have arisen as a potent alternative. The seminal Detection Transformer (DETR) [11] reframed object detection as a direct set prediction problem, thereby obviating the need for hand-engineered components such as anchor boxes and non-maximum suppression. Subsequent research has focused on ameliorating its slow convergence and computational demands, leading to models like RT-DETR, which delivers real-time performance. Despite their sophistication, these general-purpose frameworks lack the inherent architectural priors needed to address the unique challenges of road damage analysis, namely the modeling of fine-grained topological patterns and the enforcement of temporal consistency.

2.2. Road Damage Detection

Leveraging the success of general-purpose detectors, a substantial body of research has been dedicated to adapting these models for automated pavement inspection.

- CNN-based Detection and Segmentation. The predominant strategy in the current literature involves fine-tuning CNN-based object detectors on specialized road damage datasets [5,12,13,14,15]. Numerous studies report incremental gains by tailoring architectures like YOLOv8 or Faster R-CNN to better accommodate the scale variation and challenging appearance of damages. An alternative and popular approach is semantic segmentation, utilizing encoder-decoder architectures like U-Net [16] or SegFormer [17] to yield pixel-level delineations of damaged areas. Although segmentation provides detailed shape information, this approach often struggles to disambiguate adjacent instances and typically incurs a higher computational cost. A recent comprehensive survey highlights the prevalence of these CNN-centric methods but also underscores their fundamental inability to model non-local context. Our work diverges from this mainstream trajectory, positing that treating damages as independent entities constitutes a primary performance bottleneck.

- Context-Aware Methods. Acknowledging the limitations of local feature extraction, a few recent works have begun to explore the incorporation of broader contextual cues. For example, some methods employ attention mechanisms or multi-scale feature fusion to endow the model with an expanded receptive field, thereby improving the detection of small or indistinct cracks [18,19]. Others have explored using Generative Adversarial Networks (GANs) to enhance feature representations under challenging imaging conditions. However, these approaches capture context implicitly via feature aggregation; they lack an explicit mechanism to reason over the discrete, object-level relationships and topological structures that define complex damage patterns. This is precisely the gap our graph-based module is engineered to address. While recent Transformer-based methods have shown promise in temporal modeling for video tasks, their quadratic complexity often limits their deployment in real-time road inspection scenarios.

2.3. Advanced Architectural Components

Our framework is architecturally distinguished by synthesizing two cutting-edge paradigms—State-Space Models and Graph Neural Networks—to transcend the limitations of prior methods.

- State-Space Models in Vision. Vision Transformers (ViTs) [20] firmly established the efficacy of attention-based models for capturing global spatial relationships. However, the cost of self-attention grows quadratically with the number of input tokens, and therefore with image resolution, which presents a substantial computational bottleneck for high-resolution tasks like road inspection. Recently, State-Space Models (SSMs), and Mamba [6] in particular, have emerged as a compelling alternative [21,22]. Mamba-based architectures can model long-range dependencies with linear complexity in sequence length, making them well suited to high-resolution vision applications. This potential has been realized in backbones like Vision Mamba (Vim) [23] and VMamba [24], which have demonstrated state-of-the-art performance across various benchmarks. Their success motivates our adoption of a Mamba-infused backbone for efficient long-range feature extraction.

- Graph Neural Networks in Computer Vision. Graph Neural Networks (GNNs) are expressly designed for learning from data with relational structure [25,26,27]. Within computer vision, they have proven instrumental in tasks demanding relational reasoning, such as scene graph generation [28], human-object interaction, and point cloud analysis. A growing trend involves integrating GNNs as a post-processing or refinement module in object detection pipelines [29]. By representing detected objects as nodes and their relationships as edges, a GNN can propagate contextual information to refine initial, and potentially ambiguous, predictions. For instance, recent studies have shown that GNNs can enhance detection in crowded scenes by modeling object-object interactions. This paradigm inspires our work. To our knowledge, however, ours is the first approach to construct and reason over a dedicated “damage graph” that explicitly models pavement distress topology, and to extend this reasoning into the temporal domain.

3. Methodology

In this section, we present the technical details of our proposed Spatio-Temporal Graph Mamba YOLO (STG-Mamba-YOLO) framework. We first provide a high-level overview of the entire architecture. Then, we elaborate on each of the three hierarchical context fusion modules: (1) the Mamba-enhanced feature extractor for pixel-level long-range dependency modeling, (2) the intra-frame damage graph network for object-level spatial reasoning, and (3) the temporal graph Mamba module for sequence-level consistency enforcement.

3.1. Overall Architecture

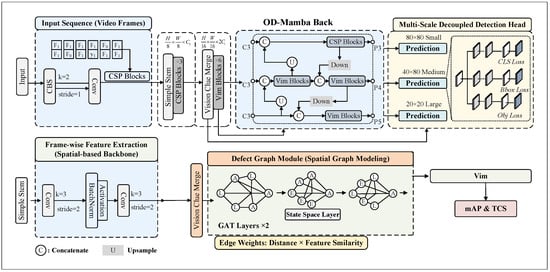

The core philosophy of STG-Mamba-YOLO is to progressively enrich damage representations by hierarchically modeling context. As illustrated in Figure 1, our framework operates in three sequential stages. First, an input image (or video frame) is processed by a Mamba-enhanced YOLO backbone, which efficiently captures long-range pixel dependencies to produce a set of high-quality initial damage proposals. Second, these proposals are modeled as a graph within the frame, where a Graph Attention Network (GAT) explicitly reasons about their spatial and feature-based relationships to yield a contextually refined set of detections. Third, for video inputs, the sequence of these damage graphs is fed into a temporal Mamba module, which captures the evolutionary dynamics of the damages to produce a final, temporally coherent and robust detection output.

Figure 1.

The architecture of STG-Mamba-YOLO, illustrating the mechanism and its advantage at each of the three levels. (1) Pixel Level: The Mamba-enhanced backbone utilizes a global receptive field to capture long-range dependencies, ensuring that elongated features (e.g., long cracks) are not fragmented. (2) Object Level: The Defect Graph Module models the topological relationships between proposals (e.g., clustering in alligator cracking), using spatial and feature similarity to refine detections and reduce false positives. (3) Sequence Level: The Temporal Graph Mamba tracks the damage graph evolution, enforcing temporal consistency to eliminate detection flickering in video streams.

3.2. Hierarchical Level 1: Mamba-Enhanced Feature Extraction

- Motivation. Standard CNN backbones in detectors like YOLO are constrained by their local receptive fields. While effective for compact objects, they struggle to capture the complete morphology of elongated damages like longitudinal or transverse cracks. Vision Transformers [20] can capture global context but incur quadratic complexity, making them computationally expensive for high-resolution road imagery. To address this, we leverage the Mamba state-space model [6], which provides global receptive field capabilities with linear complexity.

- Architecture. We augment a powerful YOLO backbone (e.g., from YOLOv9 [10]) by replacing key bottleneck blocks in its deeper layers with Vision Mamba (Vim) blocks, inspired by [23]. A Vim block operates as follows: an input feature map is first partitioned into patches, which are flattened into a 1D sequence of tokens. The core of the block is a selective state-space model (SSM) that relates the input sequence $x$ to an output $y$ through a latent state $h$:

  $$h_k = \bar{A}\,h_{k-1} + \bar{B}\,x_k, \qquad y_k = C\,h_k \tag{1}$$

  The key innovation in Mamba is that the matrices $\bar{A}$, $\bar{B}$, and $C$ are not static but are dynamically parameterized by the input tokens themselves. This selection mechanism allows the model to selectively focus on or ignore parts of the sequence, enabling context-aware information propagation. To adapt this 1D mechanism to 2D images, we process the token sequence in both forward and backward directions and merge the outputs, effectively allowing information to propagate across the entire image space.

- Output. This Mamba-enhanced backbone produces multi-scale feature maps that are richer in long-range spatial context. These features are then passed to the YOLO detection head, which generates a set of initial damage proposals for frame $t$, denoted as $P_t = \{p_i\}_{i=1}^{N_t}$. Each proposal $p_i$ consists of a bounding box $b_i$, an initial class confidence score $s_i$, and a corresponding feature vector $f_i$ extracted via RoIAlign.
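The recurrence and the forward/backward merge described above can be sketched in a few lines of plain Python. This is a minimal scalar toy rather than the Vim block itself: the function names `selective_scan` and `bidirectional_scan`, the scalar weights `w_delta`, `w_b`, and `w_c`, and the choice to merge the two directions by summation are all illustrative assumptions.

```python
import math


def softplus(z):
    """Numerically stable softplus; keeps the step size positive."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(x, a=-1.0, w_delta=1.0, w_b=1.0, w_c=1.0):
    """Run h_k = A_bar*h_{k-1} + B_bar*x_k, y_k = C*h_k over a list of scalar tokens.

    The step size, B, and C are functions of the current token, which is the
    "selection" idea. All weights are hypothetical placeholders, not trained values.
    """
    h, ys = 0.0, []
    for xk in x:
        delta = softplus(w_delta * xk)   # input-dependent step size
        a_bar = math.exp(delta * a)      # discretised A, in (0, 1) when a < 0
        b_bar = delta * (w_b * xk)       # discretised, input-dependent B
        c = w_c * xk                     # input-dependent C
        h = a_bar * h + b_bar * xk       # state update
        ys.append(c * h)                 # readout
    return ys


def bidirectional_scan(x, **kwargs):
    """Scan the tokens forwards and backwards, then sum the aligned outputs."""
    fwd = selective_scan(x, **kwargs)
    bwd = selective_scan(x[::-1], **kwargs)[::-1]
    return [f + b for f, b in zip(fwd, bwd)]


if __name__ == "__main__":
    print(bidirectional_scan([0.5, 1.0, 0.5]))
```

For a palindromic token sequence the merged output is symmetric, which illustrates how the backward pass lets early tokens receive information from later ones.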

3.3. Hierarchical Level 2: Intra-Frame Damage Graph Reasoning

- Motivation. The proposals from the first stage are generated independently, ignoring the rich relational context between damages. To overcome this “context blindness,” we introduce a graph-based reasoning module that explicitly models the spatial topology of damages within a single frame.

- Graph Construction. For each frame $t$, we construct a directed graph $G_t = (V_t, E_t)$, where each damage proposal $p_i$ corresponds to a node $v_i \in V_t$. The initial hidden state of the node is set to its feature vector, $h_i^{(0)} = f_i$. The edges $E_t$ represent the relationships between damages. We define the connectivity and weight of an edge from node $v_i$ to $v_j$ as a product of their spatial proximity and feature similarity:

  $$w_{ij} = \exp\!\left(-\frac{d_{ij}^{2}}{\sigma_d^{2}}\right)\cdot \cos\!\left(f_i, f_j\right) \tag{2}$$

  where $d_{ij}$ is the normalized Euclidean distance between the centers of the bounding boxes, and $\sigma_d$ is a scaling hyperparameter. An edge is instantiated if $w_{ij}$ exceeds a predefined threshold $\tau$, ensuring graph sparsity.

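- Graph Attention Propagation. With the graph constructed, we employ a Graph Attention Network (GAT) [7] to update node features by aggregating information from their neighbors. GATs are ideal for this task as they allow the model to learn the importance of different neighboring damages. At layer $l$, the attention coefficient with which node $v_i$ attends to its neighbor $v_j$ is computed as:

  $$\alpha_{ij}^{(l)} = \frac{\exp\!\left(\mathrm{LeakyReLU}\!\left(\mathbf{a}^{\top}\left[W^{(l)}h_i^{(l)} \,\Vert\, W^{(l)}h_j^{(l)}\right]\right)\right)}{\sum_{k\in\mathcal{N}_i}\exp\!\left(\mathrm{LeakyReLU}\!\left(\mathbf{a}^{\top}\left[W^{(l)}h_i^{(l)} \,\Vert\, W^{(l)}h_k^{(l)}\right]\right)\right)}$$

  where $W^{(l)}$ is a learnable linear transformation, $\mathbf{a}$ is the attention mechanism’s weight vector, $\mathcal{N}_i$ is the neighborhood of node $v_i$, and $\Vert$ denotes concatenation. The updated node representation is then a weighted sum of its neighbors’ transformed features:

  $$h_i^{(l+1)} = \sigma\!\left(\sum_{j\in\mathcal{N}_i}\alpha_{ij}^{(l)}\,W^{(l)}h_j^{(l)}\right)$$

  where $\sigma(\cdot)$ is a non-linear activation function (e.g., ELU). We stack $L$ such layers to allow for higher-order relational reasoning.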

- Detection Refinement. After the final GAT layer, the updated node features $h_i^{(L)}$ encode rich contextual information. We feed each $h_i^{(L)}$ into two separate fully connected layers (FCs) to predict a refined class confidence $s'_i$ and a bounding box refinement offset $\Delta b_i$. This yields a contextually aware set of detections $D'_t = \{(b'_i, s'_i)\}$, where $b'_i = b_i + \Delta b_i$.
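The graph construction and one round of attention-based aggregation described in this subsection can be sketched as follows. This is a simplified, single-head illustration in plain Python under stated assumptions: the Gaussian distance kernel and cosine similarity are assumed forms of "spatial proximity" and "feature similarity", the linear transformation is taken to be the identity, a self-loop is added to every node, and the names `build_edges`, `gat_layer`, `sigma`, and `tau` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def build_edges(centers, feats, sigma=0.5, tau=0.7):
    """Return {i: [j, ...]} for ordered pairs whose edge weight exceeds tau.

    Weight = exp(-d^2 / sigma^2) * cosine(f_i, f_j), with box centers given
    in normalised image coordinates. Both factors are assumed forms.
    """
    n = len(centers)
    nbrs = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            d = math.dist(centers[i], centers[j])
            w = math.exp(-(d * d) / (sigma * sigma)) * cosine(feats[i], feats[j])
            if w > tau:
                nbrs[i].append(j)
    return nbrs


def gat_layer(feats, nbrs, a, slope=0.2):
    """One single-head attention layer with W = identity (a simplification)."""
    out = []
    for i, hi in enumerate(feats):
        js = nbrs[i] + [i]  # self-loop so isolated nodes keep their own feature
        scores = []
        for j in js:
            z = sum(ak * xk for ak, xk in zip(a, hi + feats[j]))  # a^T [h_i || h_j]
            scores.append(z if z > 0 else slope * z)              # LeakyReLU
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]                  # stable softmax
        total = sum(exps)
        agg = [sum(e / total * feats[j][k] for e, j in zip(exps, js))
               for k in range(len(hi))]
        out.append([v if v > 0 else math.exp(v) - 1.0 for v in agg])  # ELU
    return out


if __name__ == "__main__":
    centers = [(0.50, 0.50), (0.52, 0.50), (0.95, 0.10)]
    feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
    nbrs = build_edges(centers, feats)
    print(nbrs, gat_layer(feats, nbrs, a=[0.1] * 4))
```

In the toy example, the two nearby, similar proposals become mutual neighbors and exchange information, while the distant, dissimilar proposal stays isolated and keeps its original feature.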

3.4. Hierarchical Level 3: Temporal Graph Consistency Modeling

- Motivation. For video-based inspection, analyzing each frame in isolation leads to temporal inconsistency (flickering). To solve this, we introduce a module that models the evolution of the entire damage scene over time, enforcing smooth and robust predictions.

- Graph Sequence Representation. Given a sequence of $T$ consecutive frames, we obtain a sequence of refined damage graphs $\{G'_{t-T+1}, \dots, G'_t\}$. To represent the overall state of the road in each frame, we compute a graph-level feature vector $g_t$ using a readout function that aggregates all node features in the graph. We use mean pooling for its simplicity and effectiveness:

  $$g_t = \frac{1}{|V_t|}\sum_{v_i \in V_t} h_i^{(L)}$$

  This results in a sequence of graph embeddings $\{g_{t-T+1}, \dots, g_t\}$, which captures the temporal dynamics of the road’s condition.

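- Temporal Mamba Module. We model this sequence using another Mamba SSM, which is highly effective for long-term sequential data. The temporal Mamba processes the sequence of graph embeddings:

  $$z_k = \bar{A}_{\text{temp}}\,z_{k-1} + \bar{B}_{\text{temp}}\,g_k, \qquad c_k = C_{\text{temp}}\,z_k$$

  where $\bar{A}_{\text{temp}}$, $\bar{B}_{\text{temp}}$, and $C_{\text{temp}}$ are the parameters of the temporal SSM and $z_k$ is its latent state. The output at the current timestep, $c_t$, is a temporally aware context vector that summarizes the historical evolution of the damage graph.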

- Temporal Fusion and Final Prediction. The temporal context vector $c_t$ is fused back into the node-level representations of the current frame to produce the final, temporally consistent predictions. We use a simple yet effective gating mechanism. For each node $v_i$ in graph $G'_t$, its feature vector $h_i^{(L)}$ is modulated as:

  $$\tilde{h}_i = h_i^{(L)} \odot \mathrm{sigmoid}\!\left(W_g\,c_t\right)$$

  where ⊙ denotes element-wise multiplication and $W_g$ is a learnable projection. This allows the temporal context to dynamically re-weight the features of each individual damage detection. Finally, $\tilde{h}_i$ is passed through the same FC layers as in the previous stage to produce the final class confidence $s''_i$ and box refinement $\Delta b''_i$.
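The three steps of this level (mean-pooling readout, a recurrence over graph embeddings, and gated fusion back into the nodes) can be illustrated with a small plain-Python sketch. It is not the temporal Mamba module: a fixed exponential-decay recurrence stands in for the learned selective SSM, the gate is a plain sigmoid of the context vector with no learned projection, each graph is assumed to contain at least one node, and the names `mean_readout`, `temporal_context`, `gate_nodes`, and `decay` are hypothetical.

```python
import math


def mean_readout(node_feats):
    """Mean-pool node features into one graph-level embedding (needs >= 1 node)."""
    n = len(node_feats)
    dim = len(node_feats[0])
    return [sum(f[k] for f in node_feats) / n for k in range(dim)]


def temporal_context(graph_embs, decay=0.8):
    """Fixed linear recurrence standing in for the learned temporal SSM.

    state_k = decay * state_{k-1} + (1 - decay) * g_k; returns the last state.
    """
    state = [0.0] * len(graph_embs[0])
    for g in graph_embs:
        state = [decay * s + (1.0 - decay) * x for s, x in zip(state, g)]
    return state


def gate_nodes(node_feats, context):
    """Re-weight every node feature element-wise by sigmoid(context)."""
    gate = [1.0 / (1.0 + math.exp(-c)) for c in context]
    return [[h * g for h, g in zip(f, gate)] for f in node_feats]


if __name__ == "__main__":
    # Three frames, each with a small set of 2-D node features.
    frames = [
        [[1.0, 3.0], [3.0, 5.0]],
        [[1.2, 2.8], [2.8, 5.2]],
        [[0.9, 3.1]],
    ]
    embs = [mean_readout(f) for f in frames]
    ctx = temporal_context(embs)
    print(gate_nodes(frames[-1], ctx))
```

Because the context vector depends on the whole window of graph embeddings, a detection that appears in only one frame is modulated by the history of the surrounding frames rather than by that frame alone.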

3.5. Training Objective

Our model is trained end-to-end with a composite loss function that provides supervision at each stage of the hierarchy. The total loss is a weighted sum of three components:

$$\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{det}} + \lambda_1\,\mathcal{L}_{\text{graph}} + \lambda_2\,\mathcal{L}_{\text{temp}}$$

where $\lambda_1$ and $\lambda_2$ are balancing hyperparameters.

- $\mathcal{L}_{\text{det}}$ is the standard detection loss (e.g., a combination of Focal Loss for classification and CIoU loss for regression) applied to the initial proposals from the Mamba-enhanced backbone.

- $\mathcal{L}_{\text{graph}}$ is the same detection loss applied to the contextually refined outputs from the intra-frame GAT module.

- $\mathcal{L}_{\text{temp}}$ is the detection loss applied to the final, temporally fused predictions.

This multi-stage supervision ensures that each module learns its intended task effectively, contributing to the overall performance of the framework.
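As a rough illustration of the multi-stage supervision, the sketch below combines three per-stage loss values into one total and includes a binary focal loss as an example of a classification term. The function names, the default weights `lam1` and `lam2`, and the focal-loss settings `alpha=0.25` and `gamma=2.0` are placeholder assumptions, not the values used in our experiments; the CIoU regression term is omitted for brevity.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one prediction p in (0, 1) with label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def total_loss(l_det, l_graph, l_temp, lam1=1.0, lam2=1.0):
    """Weighted sum of the backbone, graph, and temporal stage losses."""
    return l_det + lam1 * l_graph + lam2 * l_temp


if __name__ == "__main__":
    # Confident correct predictions are down-weighted relative to uncertain ones.
    stage_losses = [focal_loss(p, 1) for p in (0.6, 0.8, 0.9)]
    print(total_loss(*stage_losses, lam1=0.5, lam2=0.5))
```

The first stage carries an implicit weight of one, so the two hyperparameters only control how strongly the refined and temporally fused predictions are supervised relative to the initial proposals.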

4. Experiments

In this section, we present a series of comprehensive experiments designed to rigorously validate the efficacy and performance of our proposed STG-Mamba-YOLO framework. We commence by detailing the datasets, evaluation metrics, and implementation specifics. This is followed by quantitative comparisons against state-of-the-art methods and in-depth ablation studies to dissect the contribution of each proposed component. Finally, qualitative visualizations are provided to offer intuitive insights into the operational advantages of our approach.

4.1. Datasets and Evaluation Metrics

- Datasets. To facilitate a comprehensive and impartial evaluation, our experimental validation is conducted on three diverse and challenging public datasets for road damage detection. These datasets exhibit significant variability in terms of geographical origin, imaging conditions, resolution, and class distribution, thereby providing a robust testbed for evaluating the generalization capabilities of our model. As noted in recent benchmarks [30], leveraging multiple datasets is crucial for robust validation.

- Road Damage Detection 2022 (RDD2022) [31] is a large-scale, geographically diverse dataset containing over 47,000 images collected from six countries: Japan, India, the Czech Republic, Norway, the United States, and China. It encompasses a wide spectrum of road types and weather conditions, with annotations for common damage types such as longitudinal cracks (D00), transverse cracks (D10), alligator cracks (D20), and potholes (D40). We adhere strictly to the official training, validation, and testing splits to ensure fair and reproducible comparisons.

- GAPs384 [32] is a German asphalt pavement distress dataset distinguished by its high-resolution images ( pixels) and fine-grained crack annotations. While the original dataset provides pixel-level masks, we utilize the corresponding bounding box annotations for the object detection task. Its high-resolution nature poses a distinct challenge for the efficient processing of long-range dependencies, making it an ideal benchmark for assessing the efficacy of our Mamba-enhanced backbone.

- Crack500 [33] comprises 500 high-resolution images of pavement cracks characterized by their thin and topologically complex structures. Although primarily curated for semantic segmentation, it is widely adopted for detection by converting segmentation masks to bounding boxes. It serves as a focused benchmark to rigorously test our model’s capacity for handling elongated and structurally intricate damage classes.

- Video Sequence Benchmark. A significant impediment to evaluating temporal performance in this domain is the absence of a standardized, large-scale video dataset for road damage detection. To surmount this limitation, we construct a semi-synthetic video benchmark for the explicit evaluation of temporal consistency. We curate sequences of visually contiguous images from the RDD2022 test set that exhibit characteristics of sequential capture. To simulate smooth vehicle motion and establish a controlled evaluation environment, we apply minor geometric transformations (slight translations and scaling) between consecutive frames. This benchmark, which we intend to release publicly, enables the quantitative measurement of temporal stability in detection results.

- Evaluation Metrics. Our evaluation protocol is founded on a suite of standard metrics assessing accuracy, temporal stability, and computational efficiency.

- Detection Accuracy. We employ the standard COCO evaluation protocol. The primary metric is mAP@[0.5:0.95], which represents the mean Average Precision (mAP) averaged over Intersection over Union (IoU) thresholds from 0.5 to 0.95. We also report mAP@0.5, the mAP at a single IoU threshold of 0.5, to maintain compatibility with prior works that report this metric exclusively.

- Temporal Stability. To quantify the “flickering” artifact prevalent in video-stream detection, we propose the Temporal Consistency Score (TCS). For a damage tracked over a trajectory of $N$ frames with a detection confidence sequence $\{c_1, \dots, c_N\}$, the TCS is defined as the inverse of the coefficient of variation:

  $$\mathrm{TCS} = \frac{\mu_c}{\sigma_c}$$

  where $\mu_c$ and $\sigma_c$ denote the mean and standard deviation of the confidence scores, respectively. A higher TCS signifies lower relative variance and thus more stable detection. We report the average TCS across all tracked trajectories.

- Model Efficiency. To assess the practical deployability of our model, we report the total number of learnable Parameters (M), the computational complexity in Giga Floating Point Operations (GFLOPs), and the inference throughput in Frames Per Second (FPS) achieved on an NVIDIA A100 GPU.
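The Temporal Consistency Score defined above is simple to compute from a per-trajectory confidence sequence. The sketch below is one possible implementation under two assumptions that the definition leaves open: it uses the population standard deviation, and it adds a small constant `eps` to the denominator so that a perfectly constant trajectory does not divide by zero. The function names are hypothetical.

```python
import statistics


def temporal_consistency_score(confidences, eps=1e-8):
    """Inverse coefficient of variation: mean / (population std + eps)."""
    mu = statistics.fmean(confidences)
    sigma = statistics.pstdev(confidences)
    return mu / (sigma + eps)


def average_tcs(trajectories, eps=1e-8):
    """Average the score over all tracked trajectories."""
    return statistics.fmean(temporal_consistency_score(t, eps) for t in trajectories)


if __name__ == "__main__":
    stable = [0.80, 0.82, 0.79, 0.81]
    flickering = [0.90, 0.10, 0.85, 0.20]
    print(temporal_consistency_score(stable), temporal_consistency_score(flickering))
```

A trajectory whose confidence hovers around 0.8 scores far higher than one that alternates between high and low confidence, which matches the intended reading of the metric.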

4.2. Dataset Statistical Analysis

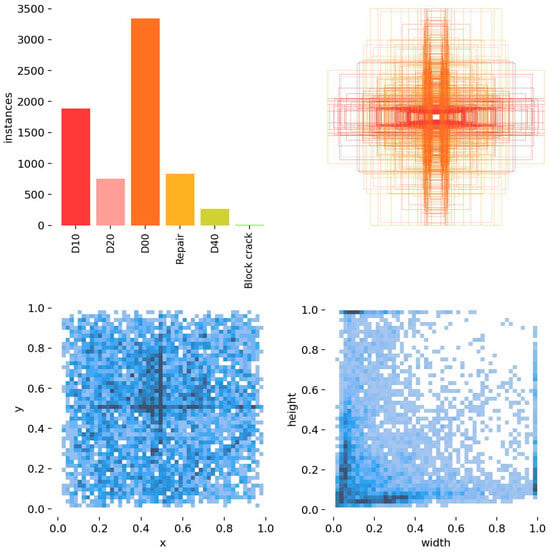

To elucidate the intrinsic challenges of the road damage detection task, we conducted a thorough statistical analysis of the RDD2022 annotations, visualized in Figure 2 and Figure 3. This analysis uncovers several key data characteristics that directly motivate our hierarchical model design. The class distribution bar chart (top-left) reveals a severe class imbalance, where the longitudinal crack class (D00) overwhelmingly dominates the dataset, while other critical classes like potholes (D40) are significantly underrepresented. This imbalance poses a formidable challenge to training models that generalize effectively to minority classes. Concurrently, the heatmaps of bounding box centers (bottom-left) and the spatial overlay (top-right) both indicate a strong spatial bias, with a high concentration of damages in the image center—a typical artifact of vehicle-mounted data capture that demands model robustness.

Figure 2.

Statistical analysis of the RDD2022 dataset annotations. (Top-left) Distribution of instances per class, showing severe class imbalance. (Top-right & Bottom-left) Spatial distribution of bounding boxes, indicating a strong center bias. (Bottom-right) 2D histogram of bounding box width and height, revealing extreme variations in aspect ratios.

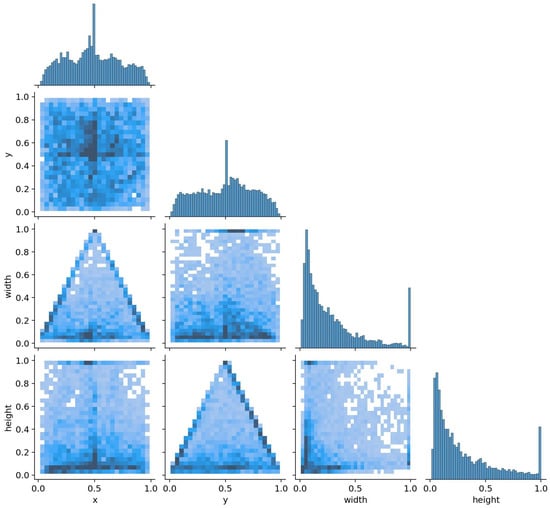

Figure 3.

Pair plot of the bounding box annotation attributes from the RDD2022 dataset. The diagonal shows the marginal distribution histogram for each attribute (center x, center y, width, height). The off-diagonal plots show the 2D histogram of pairwise relationships, offering a comprehensive overview of the statistical properties and correlations within the dataset’s labels.

Most critically for our work, the width-height distribution histogram (bottom-right) highlights an extreme diversity in object aspect ratios. The plot reveals not only a dense cluster of compact objects but also two distinct arms extending vertically and horizontally. These arms correspond to the numerous elongated objects: tall, thin longitudinal cracks and wide, thin transverse cracks. This pronounced feature distribution starkly underscores the inherent limitations of conventional CNNs with their constrained, local receptive fields. It concurrently validates the architectural necessity of our Mamba-enhanced backbone, which is expressly designed to capture such long-range dependencies with high efficiency.
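The three groups visible in the width-height histogram can be recovered from raw annotations with a simple aspect-ratio rule. The sketch below is illustrative only: the cut-off `ratio=3.0` and the names `shape_category` and `shape_histogram` are hypothetical choices, not part of our analysis pipeline.

```python
from collections import Counter


def shape_category(width, height, ratio=3.0):
    """Label a box by aspect ratio; `ratio` is a hypothetical cut-off."""
    if height >= ratio * width:
        return "tall-thin"   # e.g., longitudinal cracks
    if width >= ratio * height:
        return "wide-thin"   # e.g., transverse cracks
    return "compact"


def shape_histogram(boxes, ratio=3.0):
    """Count shape categories for boxes given as (x1, y1, x2, y2)."""
    return Counter(
        shape_category(x2 - x1, y2 - y1, ratio) for x1, y1, x2, y2 in boxes
    )


if __name__ == "__main__":
    boxes = [(0, 0, 10, 100), (0, 0, 120, 12), (0, 0, 40, 50)]
    print(shape_histogram(boxes))
```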

4.3. Implementation Details

Model Configuration. The architectural foundation of our proposed STG-Mamba-YOLO is the YOLOv9-C model [10]. We integrated Visual Mamba (Vim) blocks into the backbone at the P4 and P5 stages to capture global context. The dimension of the extracted proposal features is set to . For the graph module, we utilized a 3-layer Graph Attention Network (GAT) with 8 attention heads per layer. The edge construction threshold (Equation (2)) was empirically set to 0.7. The temporal module processes a sequence length of consecutive frames.

Training Protocol. All models were trained from scratch using the PyTorch 2.x framework on a cluster of four NVIDIA A100 (80GB) GPUs. We employed the AdamW optimizer [34] with an initial learning rate of and a weight decay of . A cosine annealing scheduler was used, preceded by a linear warmup phase of 3 epochs. The total training duration was 100 epochs with a global batch size of 32 (8 images per GPU). The loss balancing hyperparameters were set to and based on grid search. The input resolution was standardized to pixels.
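The learning-rate schedule described above (a 3-epoch linear warmup followed by cosine annealing over the remaining epochs of a 100-epoch run) can be written as a closed-form function of the epoch index. This is a framework-independent sketch: `base_lr=1e-3` and `min_lr=0.0` are placeholder values chosen for illustration only, and the function name `lr_at_epoch` is hypothetical.

```python
import math


def lr_at_epoch(epoch, base_lr=1e-3, warmup_epochs=3, total_epochs=100, min_lr=0.0):
    """Learning rate for a 0-indexed epoch: linear warmup, then cosine decay."""
    if epoch < warmup_epochs:
        # Ramp linearly so the last warmup epoch reaches base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


if __name__ == "__main__":
    for e in (0, 2, 3, 50, 99):
        print(e, lr_at_epoch(e))
```

With four GPUs and 8 images per GPU, each optimizer step sees the global batch of 32 images, so the schedule is applied once per epoch over that global batch.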

Data Augmentation and Reproducibility. To ensure robustness, we adopted the standard YOLOv9 augmentation suite, including Mosaic, MixUp, random affine transformations (rotation, scaling, translation, shear), and HSV color space adjustments. To guarantee reproducibility, all experiments were conducted with a fixed random seed of 42. For the semi-synthetic video benchmark construction, we applied controlled affine transformations between frames: translation sampled from pixels, rotation from , and scaling from , simulating smooth vehicle motion.
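The inter-frame transformations used to build the semi-synthetic sequences can be sketched as a small affine map applied to each annotated box. The code below is an illustration under assumptions: the sampling ranges `max_shift`, `max_angle`, and `max_scale_delta` are hypothetical placeholders rather than the ranges used for the benchmark, the rotation and scaling are taken about the image center, and the function names are invented for this example.

```python
import math
import random


def transform_box(box, img_w, img_h, tx, ty, angle_deg, scale):
    """Map an (x1, y1, x2, y2) box through scale and rotation about the image
    center, then translation, and return the enclosing axis-aligned box."""
    cx, cy = img_w / 2.0, img_h / 2.0
    cos_a = math.cos(math.radians(angle_deg)) * scale
    sin_a = math.sin(math.radians(angle_deg)) * scale
    x1, y1, x2, y2 = box
    pts = []
    for x, y in ((x1, y1), (x2, y1), (x2, y2), (x1, y2)):
        dx, dy = x - cx, y - cy
        pts.append((cx + cos_a * dx - sin_a * dy + tx,
                    cy + sin_a * dx + cos_a * dy + ty))
    xs, ys = zip(*pts)
    return (min(xs), min(ys), max(xs), max(ys))


def sample_motion(rng, max_shift=5.0, max_angle=1.0, max_scale_delta=0.02):
    """Draw one small inter-frame motion; all ranges are hypothetical placeholders."""
    return {
        "tx": rng.uniform(-max_shift, max_shift),
        "ty": rng.uniform(-max_shift, max_shift),
        "angle_deg": rng.uniform(-max_angle, max_angle),
        "scale": 1.0 + rng.uniform(-max_scale_delta, max_scale_delta),
    }


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed for reproducibility
    box = (10, 20, 30, 40)
    for _ in range(3):
        box = transform_box(box, 100, 100, **sample_motion(rng))
        print(box)
```

Applying the same sampled motion to the image and to its boxes keeps annotations aligned across the synthetic sequence, so confidence trajectories can be tracked frame to frame.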

4.4. Comparison with State-of-the-Art Methods

To establish a comprehensive performance benchmark, our proposed STG-Mamba-YOLO is evaluated against a diverse array of state-of-the-art (SOTA) object detectors. This selection of baselines intentionally spans multiple architectural families, including two-stage detectors (e.g., Faster R-CNN), one-stage anchor-based detectors (e.g., YOLOv5, YOLOv7), one-stage anchor-free detectors (e.g., YOLOX), and modern Transformer-based models (e.g., RT-DETR). Among these, we designate YOLOv9-C [10] as our primary baseline, owing to its exceptional balance of performance and computational efficiency. To ensure a fair and direct comparison, all competing models were trained and evaluated under the unified experimental protocol detailed in Section 4.3.

- Performance on Static Image Datasets. The main quantitative results of our comparison on the RDD2022, GAPs384, and Crack500 test sets are presented in Table 1. The findings unequivocally demonstrate the consistent superiority of our approach across all three challenging benchmarks.

Table 1.

Quantitative comparison with state-of-the-art methods on the RDD2022, GAPs384, and Crack500 test sets. The best performance is highlighted in bold, and the second best is underlined. Our STG-Mamba-YOLO is presented separately at the bottom for clarity.

| Method | Backbone | Params (M) | GFLOPs | RDD2022 mAP@0.5:0.95 | RDD2022 mAP@0.5 | GAPs384 mAP@0.5:0.95 | GAPs384 mAP@0.5 | Crack500 mAP@0.5:0.95 | Crack500 mAP@0.5 |
|---|---|---|---|---|---|---|---|---|---|
| Two-Stage Detectors | | | | | | | | | |
| Faster R-CNN [8] | ResNet-50 | 41.3 | 246 | 55.4 | 76.1 | 51.2 | 71.8 | 45.3 | 65.1 |
| Faster R-CNN [8] | ResNet-101 | 60.2 | 336 | 56.9 | 77.3 | 52.8 | 72.9 | 46.8 | 66.2 |
| One-Stage Detectors | | | | | | | | | |
| SSD300 [9] | VGG-16 | 35.3 | 78 | 51.5 | 72.8 | 48.9 | 69.1 | 42.1 | 61.3 |
| YOLOv3 [35] | DarkNet-53 | 61.5 | 155 | 59.8 | 80.2 | 54.1 | 74.3 | 48.5 | 68.2 |
| YOLOv4 [3] | CSPDarkNet-53 | 64.1 | 129 | 62.5 | 82.9 | 56.8 | 76.6 | 51.2 | 71.0 |
| YOLOv5-L [36] | CSP-Backbone | 46.1 | 108 | 63.8 | 83.5 | 58.1 | 77.9 | 52.9 | 72.5 |
| YOLOv7 [37] | ELAN-Net | 36.9 | 104 | 64.9 | 84.1 | 59.5 | 78.8 | 53.8 | 73.1 |
| YOLOX-L [4] | CSP-Backbone | 54.2 | 156 | 64.2 | 83.8 | 58.7 | 78.1 | 53.1 | 72.8 |
| YOLOv8-L [34] | CSP-Backbone | 43.6 | 165 | 65.2 | 84.4 | 59.8 | 79.2 | 54.3 | 73.6 |
| YOLOv9-C [10] | GELAN-C | 25.5 | 102 | 65.7 | 84.8 | 60.3 | 79.7 | 54.8 | 74.1 |
| Transformer-based Detectors | | | | | | | | | |
| DETR [11] | ResNet-50 | 41.5 | 86 | 60.3 | 81.1 | 55.2 | 75.0 | 49.6 | 69.3 |
| RT-DETR-L [38] | HGNetV2-L | 33.1 | 110 | 65.4 | 84.5 | 60.1 | 79.5 | 54.5 | 73.8 |
| Domain-Specific SOTA | | | | | | | | | |
| CD-YOLO [39] | Custom-CSP | 48.2 | 121 | 64.5 | 83.9 | 59.1 | 78.4 | 53.6 | 73.0 |
| Improved-YOLOv8 [34] | YOLOv8-CSP | 44.1 | 168 | 65.3 | 84.6 | 59.9 | 79.3 | 54.4 | 73.7 |
| Our Proposed Method | | | | | | | | | |
| STG-Mamba-YOLO | Mamba-GELAN | 28.9 | 115 | 68.2 | 86.1 | 63.2 | 81.5 | 57.9 | 76.2 |

YOLOv9-C is the strongest baseline in our comparison, ahead of every other competing method on all three datasets. STG-Mamba-YOLO nevertheless outperforms it and all other models, with the margin most pronounced on the stricter mAP@[0.5:0.95] metric. On RDD2022, our model achieves an mAP@[0.5:0.95] of 68.2%, an improvement of 2.5 percentage points over YOLOv9-C. The gains are larger on benchmarks characterized by fine-grained and topologically complex cracks: on GAPs384 and Crack500, our model surpasses the second-best method (YOLOv9-C in both cases) by 2.9 and 3.1 percentage points in mAP@[0.5:0.95], respectively.
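
The margins quoted above follow directly from Table 1. The short script below recomputes them for both metrics, using only values copied from the table; the variable names are chosen for this sketch.

```python
# (mAP@0.5:0.95, mAP@0.5) in percent, copied from Table 1.
ours = {"RDD2022": (68.2, 86.1), "GAPs384": (63.2, 81.5), "Crack500": (57.9, 76.2)}
yolov9c = {"RDD2022": (65.7, 84.8), "GAPs384": (60.3, 79.7), "Crack500": (54.8, 74.1)}

# Gain in percentage points on the strict metric and on the lenient metric.
strict_gain = {k: round(ours[k][0] - yolov9c[k][0], 1) for k in ours}
lenient_gain = {k: round(ours[k][1] - yolov9c[k][1], 1) for k in ours}

for name in ours:
    print(f"{name}: +{strict_gain[name]} pp (mAP@0.5:0.95), "
          f"+{lenient_gain[name]} pp (mAP@0.5)")
```

On every dataset the gain on mAP@0.5:0.95 exceeds the gain on mAP@0.5, which is consistent with the improvement coming largely from tighter localization at high IoU thresholds.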

This consistent outperformance underscores the pivotal role of our hierarchical context modeling. The Mamba-enhanced backbone excels at capturing the global extent of elongated cracks, while the intra-frame damage graph module effectively reasons about the topological relationships between constituent crack segments. This synergy leads to more precise localization and robust classification, which is essential for satisfying the higher IoU thresholds inherent in the primary mAP metric.

- Performance on Video Sequences. To validate the efficacy of our temporal modeling, we conducted evaluations on our semi-synthetic video benchmark. As presented in Table 2, our full STG-Mamba-YOLO model is compared against strong baselines operating in a standard frame-by-frame detection mode. Although our model also attains the highest mAP (62.8%), the most compelling result lies in the Temporal Consistency Score (TCS): our method achieves a TCS of 9.71, nearly triple the 3.25 of the YOLOv9-C baseline.

Table 2.

Performance comparison on our semi-synthetic video sequence benchmark. Our model not only achieves the highest detection accuracy but also demonstrates a dramatic improvement in temporal stability, validated by the significantly higher Temporal Consistency Score (TCS).

| Method | mAP@0.5:0.95 | TCS ↑ |
|---|---|---|
| YOLOv8-L | 59.3 | 2.87 |
| RT-DETR-L | 60.1 | 3.12 |
| YOLOv9-C | 60.5 | 3.25 |
| STG-Mamba-YOLO (Ours) | 62.8 | 9.71 |

This result provides strong quantitative evidence that our temporal graph Mamba module effectively mitigates the flickering phenomenon inherent in frame-by-frame processing. By explicitly modeling the evolution of the damage graph across time, our framework produces temporally coherent and reliable detections—a critical prerequisite for practical deployment in continuous road monitoring systems.

4.5. Ablation Studies

To deconstruct our STG-Mamba-YOLO framework and rigorously quantify the contribution of its principal components, we conducted a series of comprehensive ablation studies. These studies are designed to first isolate the effectiveness of our three core hierarchical modules, and second, to analyze the impact of specific design choices, such as the graph construction methodology and temporal sequence length. Unless otherwise specified, all ablation experiments were conducted on the RDD2022 dataset to ensure a consistent basis for comparison.

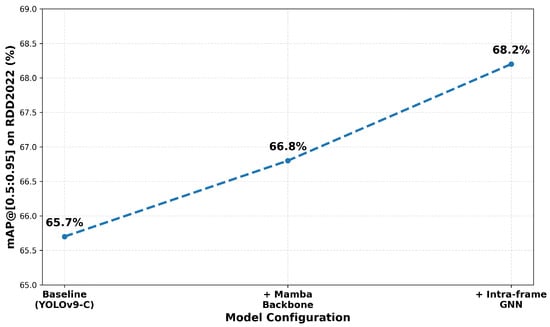

- Effectiveness of Core Components. Our first ablation study evaluates the incremental performance gains obtained by progressively integrating each of our proposed modules onto the YOLOv9-C baseline. The results, summarized in Table 3, show a consistent performance improvement at each stage of our hierarchical design.

Table 3.

Ablation study on the effectiveness of the core components of STG-Mamba-YOLO. M-BB refers to the Mamba-enhanced Backbone, GNN to the Intra-frame Graph Network, and Temp. to the Temporal module. Static metrics are on the RDD2022 test set, while Video metrics are on our video benchmark.

| ID | M-BB | GNN | Temp. | Static mAP@0.5:0.95 | Static mAP@0.5 | Video mAP@0.5:0.95 | Video TCS |
|---|---|---|---|---|---|---|---|
| A | | | | 65.7 | 84.8 | 60.5 | 3.25 |
| B | ✓ | | | 66.8 | 85.4 | 61.5 | 3.30 |
| C | ✓ | ✓ | - | 68.2 | - | - | |
| D | ✓ | ✓ | ✓ | - | - | 62.8 | 9.71 |

Beginning with the baseline model (A), the integration of our Mamba-enhanced Backbone (Model B) yields an improvement of 1.1 percentage points in mAP@[0.5:0.95]. This result supports our hypothesis that the capacity of Mamba blocks to model long-range dependencies is effective for capturing the global structure of elongated damages, thereby producing more robust feature representations.

Subsequently, the addition of the intra-frame GNN refinement module (Model C) boosts the mAP@[0.5:0.95] to 68.2%, a further gain of 1.4 percentage points over Model B. This substantial improvement validates the critical role of explicit relational reasoning. The GNN effectively leverages the spatial and semantic context among damage candidates to rectify initial misclassifications and enhance localization precision.

Finally, the incorporation of the temporal graph Mamba module (Model D) completes our full framework. On our video benchmark, frame-level accuracy (mAP@[0.5:0.95]) rises to 62.8%, but the most pronounced effect is on the Temporal Consistency Score (TCS), which increases from 3.32 without the temporal module (sequence length 1 in Table 5) to 9.71. This nearly threefold increase indicates that our temporal module effectively suppresses detection flickering and produces stable, temporally coherent results.

- Analysis of Graph Construction. To validate our design choices for the graph’s edge definition, we ablate our composite weighting scheme (Spatial + Feature), comparing it against using each component in isolation. This experiment was conducted using Model C on the GAPs384 dataset, a benchmark selected for its complex, overlapping crack patterns where relational reasoning is especially critical. As shown in Table 4, while both spatial proximity and feature similarity individually contribute positively to performance, their synergistic combination yields the optimal results. This finding demonstrates that the GNN’s reasoning is most effective when it can jointly consider both where damages are located relative to each other and what their visual features represent.

Table 4.

Ablation study on the edge definition strategy in the intra-frame graph network, evaluated on the GAPs384 dataset.

| Edge Definition Strategy | mAP |
|---|---|
| No GNN (Model B baseline) | 61.5 |
| Spatial Proximity Only | 62.5 |
| Feature Similarity Only | 62.7 |
| Spatial + Feature (Ours) | 63.2 |
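
To make the idea of a composite edge weight concrete, the sketch below combines a spatial term and a feature term for a pair of damage candidates. It is an illustration only and not the weighting scheme evaluated in Table 4: the Gaussian kernel on centre distance, the clipped cosine similarity, the convex mixing coefficient `alpha`, the bandwidth `sigma`, and all function names are assumptions made for this example.

```python
import math

def box_centre(box):
    """Centre of an axis-aligned box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)

def spatial_affinity(box_a, box_b, sigma):
    """Gaussian kernel on the distance between box centres (assumed form)."""
    (ax, ay), (bx, by) = box_centre(box_a), box_centre(box_b)
    dist_sq = (ax - bx) ** 2 + (ay - by) ** 2
    return math.exp(-dist_sq / (2.0 * sigma ** 2))

def feature_similarity(feat_a, feat_b):
    """Cosine similarity between two feature vectors; 0.0 if either is all-zero."""
    dot = sum(a * b for a, b in zip(feat_a, feat_b))
    norm = math.sqrt(sum(a * a for a in feat_a)) * math.sqrt(sum(b * b for b in feat_b))
    return dot / norm if norm > 0 else 0.0

def edge_weight(box_a, feat_a, box_b, feat_b, sigma=100.0, alpha=0.5):
    """Convex combination of spatial and feature terms (hypothetical mixing rule)."""
    spatial = spatial_affinity(box_a, box_b, sigma)
    feature = max(0.0, feature_similarity(feat_a, feat_b))  # clip negatives to zero
    return alpha * spatial + (1.0 - alpha) * feature

# Two nearby candidates with similar features vs. two distant, dissimilar ones.
near = edge_weight((0, 0, 10, 10), [1.0, 0.2], (20, 0, 30, 10), [0.9, 0.3])
far = edge_weight((0, 0, 10, 10), [1.0, 0.0], (1000, 1000, 1010, 1010), [0.0, 1.0])
```

Setting `alpha=1.0` or `alpha=0.0` recovers the two single-cue variants, which mirrors the structure of the ablation: each cue alone versus their combination.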

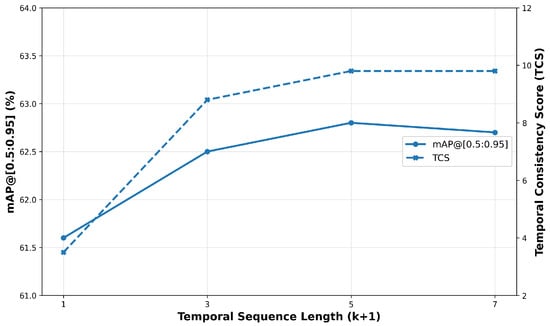

- Impact of Temporal Sequence Length. We also analyze the sensitivity of our temporal module to the sequence length used for context aggregation. We evaluate our full model (D) on the video benchmark with varying sequence lengths. Table 5 shows that performance, in terms of both mAP and TCS, improves as more frames are considered up to a length of 5. The largest gains occur when moving from a single frame (no temporal context) to a sequence of 3. Performance saturates at a length of 5 and degrades slightly at a length of 7, while computational cost continues to increase. Therefore, we select a sequence length of 5, as it provides the best trade-off between performance and efficiency.

Table 5.

Ablation study on the temporal sequence length on our video benchmark. A length of 1 is equivalent to the model without the temporal module.

| Sequence Length | mAP | TCS |
|---|---|---|
| 1 (No Temporal Module) | 61.6 | 3.32 |
| 3 | 62.5 | 8.49 |
| 5 (Our Choice) | 62.8 | 9.71 |
| 7 | 62.7 | 9.65 |
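
The saturation pattern can be read off Table 5 by computing the change between consecutive sequence lengths. The snippet below does this with values copied from the table; the variable names are chosen for this sketch.

```python
# sequence length -> (mAP in percent, TCS), copied from Table 5.
table5 = {1: (61.6, 3.32), 3: (62.5, 8.49), 5: (62.8, 9.71), 7: (62.7, 9.65)}

lengths = sorted(table5)
steps = []
for prev, cur in zip(lengths, lengths[1:]):
    d_map = round(table5[cur][0] - table5[prev][0], 2)
    d_tcs = round(table5[cur][1] - table5[prev][1], 2)
    steps.append((prev, cur, d_map, d_tcs))

# Length with the highest TCS, breaking ties by mAP.
best_length = max(lengths, key=lambda t: (table5[t][1], table5[t][0]))

for prev, cur, d_map, d_tcs in steps:
    print(f"{prev} -> {cur}: mAP {d_map:+.2f} pp, TCS {d_tcs:+.2f}")
```

Most of the TCS gain arrives in the first step (1 to 3 frames), a smaller gain follows from 3 to 5, and both metrics dip slightly from 5 to 7, which is the basis for choosing five frames.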

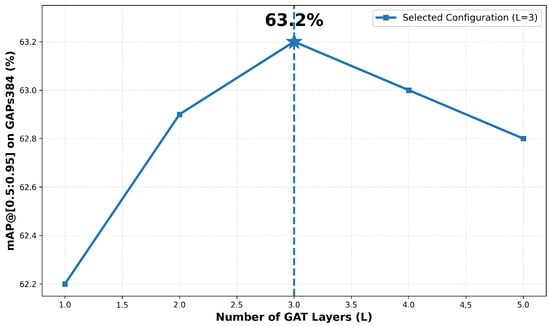

- Analysis of Ablation Studies. Figure 4 illustrates the stepwise performance contribution of our core components. The performance curve rises monotonically as the Mamba backbone and the GNN module are sequentially integrated, corroborating the quantitative findings in Table 3. The impact of the GAT depth on model performance is analyzed in Figure 5. The performance curve peaks at a depth of three layers. This suggests that shallower configurations are insufficient for effective information propagation across the damage graph, while deeper architectures begin to suffer from performance degradation, likely attributable to the well-documented issue of over-smoothing in deep GNNs. This empirical result supports our selection of L = 3 as the depth of our GNN module.

- Analysis of Temporal and Efficiency Trade-offs. Figure 6 visualizes the relationship between detection accuracy and temporal stability as a function of sequence length. The dual-axis plot shows that mAP (solid blue line) gains little beyond three frames, whereas the Temporal Consistency Score (TCS, dashed orange line) continues to rise markedly up to five frames. This supports our selection of a five-frame sequence, which attains the highest TCS and mAP among the tested lengths without incurring the additional latency of longer sequences.
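
The over-smoothing effect mentioned in the GAT depth analysis can be illustrated with a toy example. The sketch below is not a GAT: it uses uniform mean aggregation with self-loops on a hypothetical five-node path graph, which is enough to show how stacking more propagation layers makes node features increasingly indistinguishable.

```python
def mean_aggregate(features, neighbours):
    """One propagation step: each node averages itself and its neighbours."""
    return [
        sum(features[j] for j in [i] + neighbours[i]) / (len(neighbours[i]) + 1)
        for i in range(len(features))
    ]

def spread_after(features, neighbours, layers):
    """Max-min spread of node features after a given number of steps."""
    h = list(features)
    for _ in range(layers):
        h = mean_aggregate(h, neighbours)
    return max(h) - min(h)

# Hypothetical chain of five crack segments; only the first carries a signal.
neighbours = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
features = [1.0, 0.0, 0.0, 0.0, 0.0]

spreads = {k: spread_after(features, neighbours, k) for k in (1, 3, 8)}
for depth, spread in spreads.items():
    print(f"{depth} layer(s): feature spread = {spread:.3f}")
```

The spread shrinks as depth grows, so very deep stacks leave little per-node information to discriminate on. Learned attention weights and nonlinearities change the rate, but the same tendency is the usual explanation for a performance peak at moderate depth.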

Figure 4.

Cumulative performance gain from core components on the RDD2022 dataset.

Figure 5.

Impact of GAT layer depth on performance, evaluated on the GAPs384 dataset. Performance peaks at L = 3.

Figure 6.

Dual-axis analysis of the impact of temporal sequence length on model performance. The left axis (solid blue line with circle markers) represents the Detection Accuracy (mAP), while the right axis (dashed orange line with cross markers) indicates the Temporal Consistency Score (TCS). The trend reveals that while accuracy (mAP) begins to plateau after 3 frames, the stability (TCS) continues to improve significantly until 5 frames, justifying our selection of a five-frame sequence as the optimal trade-off point.

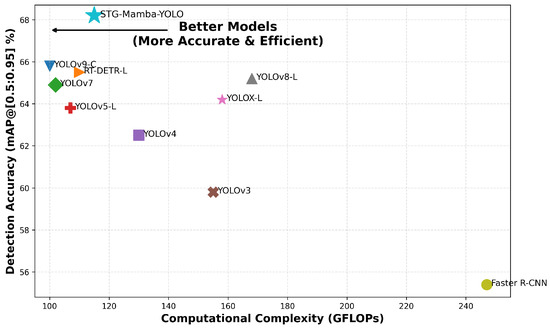

Finally, Figure 7 situates our model within the broader landscape of SOTA detectors by plotting detection accuracy against computational complexity. In this plot, where each point represents a unique model, the top-left corner signifies the ideal trade-off (high accuracy, low complexity). Our STG-Mamba-YOLO is clearly positioned in this desirable Pareto-optimal region. It achieves the highest accuracy among all compared methods while maintaining a computational cost comparable to the highly efficient YOLOv9-C baseline. This result demonstrates that our framework establishes a new, superior Pareto front for the task of road damage detection.

Figure 7.

Performance vs. Complexity on RDD2022. Our model (blue star) establishes a new state-of-the-art trade-off.
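
The notion of a Pareto front used above can be checked numerically from Table 1. The sketch below treats each detector as a point (GFLOPs, RDD2022 mAP@0.5:0.95) and keeps those that no other detector beats on both axes; the function name and the use of GFLOPs as the sole cost measure are choices made for this example.

```python
# (name, GFLOPs, RDD2022 mAP@0.5:0.95), copied from Table 1.
models = [
    ("Faster R-CNN (ResNet-50)", 246, 55.4), ("Faster R-CNN (ResNet-101)", 336, 56.9),
    ("SSD300", 78, 51.5), ("YOLOv3", 155, 59.8), ("YOLOv4", 129, 62.5),
    ("YOLOv5-L", 108, 63.8), ("YOLOv7", 104, 64.9), ("YOLOX-L", 156, 64.2),
    ("YOLOv8-L", 165, 65.2), ("YOLOv9-C", 102, 65.7), ("DETR", 86, 60.3),
    ("RT-DETR-L", 110, 65.4), ("CD-YOLO", 121, 64.5),
    ("Improved-YOLOv8", 168, 65.3), ("STG-Mamba-YOLO", 115, 68.2),
]

def pareto_front(points):
    """Names of points not dominated by any other (lower cost, higher accuracy)."""
    front = []
    for name, cost, acc in points:
        dominated = any(
            c <= cost and a >= acc and (c < cost or a > acc)
            for _, c, a in points
        )
        if not dominated:
            front.append(name)
    return front

front = pareto_front(models)
print(front)
```

Under this two-axis view, STG-Mamba-YOLO lies on the front as its highest-accuracy point, alongside cheaper but less accurate detectors; every model costing more than 115 GFLOPs is dominated.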

4.6. Evaluation on Real-World Video Sequences

To rigorously address the limitations of semi-synthetic benchmarks and validate the proposed model under authentic driving conditions, we conducted additional evaluations on real-world video footage. We utilized a challenging sequence from the Mendeley Pothole Video Dataset, captured using a vehicle-mounted camera traversing an uneven road surface. This specific sequence is characterized by significant vertical camera vibration and motion blur, presenting a “stress test” scenario for detection stability.

We annotated a contiguous clip of 50 frames containing complex, interconnected road damage. As quantitatively presented in Table 6, standard frame-by-frame detectors exhibit marked instability. For instance, the baseline YOLOv9-C suffers from “flickering,” where detection boxes transiently disappear or shift drastically due to frame-to-frame visual fluctuations caused by vibration. In stark contrast, STG-Mamba-YOLO significantly mitigates this issue. By leveraging the Temporal Graph Mamba module to propagate structural context across time, our method maintains a coherent tracking of defects.

Table 6.

Performance comparison on a real-world video sequence from the Mendeley dataset. STG-Mamba-YOLO demonstrates superior robustness against motion blur and camera vibration, achieving the highest consistency score.

Quantitatively, our method achieves a Temporal Consistency Score (TCS) of 8.95, roughly 2.8 times the baseline’s 3.15. Furthermore, the detection accuracy (mAP) is boosted by 4.2% compared to the baseline, suggesting that temporal stability also contributes to more robust recognition of blurred damage features. This evidence, although drawn from a single 50-frame clip, indicates that our hierarchical design generalizes to the dynamic conditions of real-world infrastructure inspection.

4.7. Scalability Analysis on High-Resolution Inputs

We further investigated the scalability of our architecture by increasing the input resolution to . This experiment was conducted on the GAPs384 dataset, where high resolution is critical for detecting fine-grained cracks.

Table 7 illustrates the trade-off between performance and computational cost. While Transformer-based models (e.g., RT-DETR) suffer from quadratic complexity growth due to the self-attention mechanism, our Mamba-enhanced backbone maintains linear complexity. At resolution, STG-Mamba-YOLO achieves a robust accuracy of 68.9%, outperforming the baseline by 2.8%, with only a moderate and linear increase in GFLOPs (from 115 to 298). In contrast, the computational cost of RT-DETR explodes to over 450 GFLOPs, rendering it unsuitable for real-time applications. This demonstrates the efficiency of our hierarchical design for high-resolution pavement inspection.
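
The difference between quadratic and linear growth can be made concrete by counting operations as a function of the number of feature-map tokens. The sketch below is a back-of-the-envelope illustration only: the two input sizes (640 and 1280 pixels) and the stride of 32 are hypothetical, since the resolutions used in this experiment are not recoverable from the text, and the counts ignore constants and all non-sequence layers.

```python
def token_count(side_px, stride_px):
    """Number of feature-map positions for a square input at a given stride."""
    return (side_px // stride_px) ** 2

def attention_pair_count(n_tokens):
    """Self-attention compares every token with every token: O(N^2)."""
    return n_tokens * n_tokens

def scan_step_count(n_tokens):
    """A state-space scan visits each token once: O(N)."""
    return n_tokens

# Hypothetical resolutions; doubling the side quadruples the token count.
low = token_count(640, 32)
high = token_count(1280, 32)

attention_growth = attention_pair_count(high) / attention_pair_count(low)
scan_growth = scan_step_count(high) / scan_step_count(low)
print(f"tokens: {low} -> {high}")
print(f"attention cost grows x{attention_growth:.0f}, scan cost grows x{scan_growth:.0f}")
```

End-to-end GFLOPs grow more slowly than these isolated counts suggest for either family, because convolutional stages and detection heads also contribute, but the gap between the two growth rates widens as resolution increases.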

Table 7.

Scalability analysis on GAPs384 with inputs. Our method scales efficiently compared to Transformer-based alternatives, maintaining real-time capabilities while achieving superior accuracy.

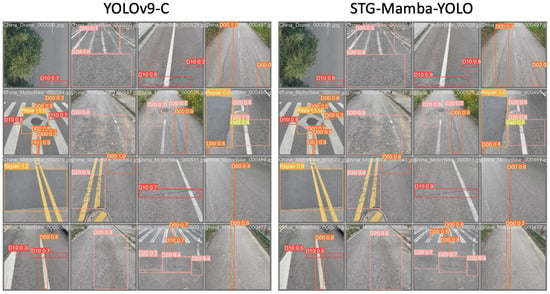

4.8. Qualitative Results

To provide an intuitive validation of our quantitative findings, Figure 8 presents a side-by-side qualitative comparison between our STG-Mamba-YOLO and the YOLOv9-C baseline. The selected examples span a variety of challenging, real-world scenarios and compellingly highlight the superiority of our method in terms of accuracy, robustness, and contextual scene understanding.

Figure 8.

Qualitative comparison between the baseline (Left) and STG-Mamba-YOLO (Right). The baseline falsely detects road markings as defects (red boxes) due to local texture confusion. In contrast, our model (Right) leverages graph-based contextual reasoning to correctly ignore these distractors. The baseline fails to detect the faint, continuous crack. Our Mamba-enhanced model successfully identifies it (high confidence scores) by capturing the long-range linear feature.

A primary advantage demonstrated by our model is its markedly improved robustness in complex scenes laden with visual distractors. As depicted in the second row of the figure, the baseline model is easily confounded by road markings and varied pavement textures, resulting in a cluttered output with numerous false positives. In stark contrast, our STG-Mamba-YOLO, empowered by its graph-based reasoning module, correctly identifies the true ‘Repair’ and ‘D10’ damages while effectively suppressing the misleading visual cues from painted lines. This capability results in a substantially cleaner and more reliable detection output.

Furthermore, the qualitative results underscore our model’s enhanced capability in detecting subtle and continuous damages. In the first and third rows, our model delivers consistently more confident predictions (higher scores) and more precise bounding boxes for faint or elongated cracks, which the baseline either fails to detect or localizes inaccurately. This superior performance is directly attributable to our Mamba-enhanced backbone, which leverages its global receptive field to more effectively capture the long-range signatures of such objects.

Overall, these qualitative examples provide compelling corroboration for our quantitative findings. They demonstrate that by hierarchically modeling context from the pixel to the object level, our STG-Mamba-YOLO not only elevates detection accuracy but also fundamentally deepens the model’s contextual understanding of the scene. This makes it a significantly more robust and reliable solution for real-world road inspection.

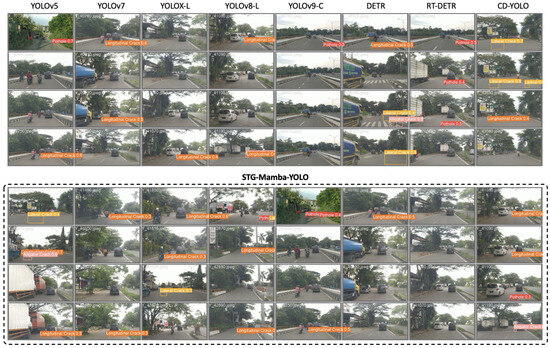

- Robustness in Adverse Conditions. Beyond controlled benchmarks, the true litmus test for a detection model is its robustness in unconstrained, real-world environments. Figure 9 presents the results of a rigorous stress test, where we evaluate our model alongside multiple SOTA detectors under adverse conditions characterized by heavy foliage, dappled lighting, strong shadows, and complex backgrounds.

Figure 9. Qualitative comparison demonstrating model robustness in challenging “in-the-wild” scenarios with heavy shadows, foliage occlusion, and complex backgrounds. (Top) A wide range of SOTA baselines exhibit brittle performance, yielding very few or no detections. (Bottom) Our STG-Mamba-YOLO demonstrates superior robustness and generalization, consistently and confidently identifying various damages under the same adverse conditions.

The results depicted in the top panel are revealing: the vast majority of state-of-the-art detectors, spanning both the YOLO series and Transformer-based architectures, fail catastrophically under these challenging conditions. These models yield either extremely sparse, low-confidence detections or, in many cases, fail to detect any damages whatsoever. This outcome suggests that while these models excel on curated benchmarks, their performance is brittle, and they fail to generalize when confronted with the significant visual clutter and occlusions typical of real-world scenarios.

In stark contrast, the bottom panel demonstrates that our STG-Mamba-YOLO maintains a remarkably high level of performance. It successfully and confidently identifies a diverse array of damages—including longitudinal, lateral, and alligator cracks, as well as potholes—even when partially obscured by deep shadows or embedded within complex scenery. This remarkable robustness is a direct consequence of our model’s hierarchical architecture. The Mamba backbone’s global receptive field enables it to perceive the underlying continuity of a crack despite local interruptions from shadows. Concurrently, the GNN module reinforces these perceptions by leveraging the broader context of the surrounding road area, which aids in distinguishing true damages from visual noise.

This stark comparison provides compelling evidence that our method is not merely more accurate, but is fundamentally more generalizable and thus better suited for deployment in the unpredictable, dynamic environments of real-world inspection.

4.9. Efficiency Analysis

While detection accuracy is a paramount objective, the practical deployability of a road inspection model is equally contingent upon its computational efficiency and inference speed. This section provides a detailed analysis of our model’s efficiency, carefully contextualizing its computational overhead against the substantial performance gains it delivers.

Table 8 presents a focused comparison of key efficiency metrics—namely model parameters, GFLOPs, and inference speed (FPS)—between our model and representative SOTA detectors. Inference speed was benchmarked on an NVIDIA A100 GPU using a batch size of one to accurately measure latency-oriented throughput. As STG-Mamba-YOLO is architecturally based on the lightweight YOLOv9-C, the addition of our hierarchical context modules introduces a modest and anticipated computational overhead. Specifically, the model size increases by approximately 3.4M parameters, and the GFLOPs rise by 13 (from 102 to 115). This corresponds to a decrease in inference speed from 95 to 81 FPS.

Table 8.

Efficiency and performance trade-off analysis. FPS is measured on an NVIDIA A100 (batch size = 1). Our model achieves a substantial accuracy gain for a modest increase in computational cost, maintaining real-time performance.

However, we argue that this moderate increase in computational cost is justified by the performance improvements. The ≈12.7% increase in GFLOPs delivers a 2.5 percentage point gain in mAP@[0.5:0.95] on RDD2022 and, more critically, a nearly 200% improvement in the Temporal Consistency Score (TCS) for video analysis (Table 2). This trade-off is favorable for real-world deployment scenarios where reliability and accuracy in complex scenes are prioritized over maximal throughput. Although the additional GFLOPs are negligible on server-grade GPUs, they may increase latency on resource-constrained embedded devices (e.g., NVIDIA Jetson); we consider this acceptable given the substantial gains in detection stability.
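
The percentages quoted above can be reproduced from the reported figures, and the FPS values can be converted to per-frame latency for comparison with a 30 FPS budget. The snippet uses only numbers stated in this section and in Table 2; the variable names are chosen for this sketch.

```python
# Reported values for YOLOv9-C (baseline) and STG-Mamba-YOLO (ours).
gflops_base, gflops_ours = 102, 115
fps_base, fps_ours = 95, 81
tcs_base, tcs_ours = 3.25, 9.71

gflops_increase_pct = 100.0 * (gflops_ours - gflops_base) / gflops_base
tcs_improvement_pct = 100.0 * (tcs_ours - tcs_base) / tcs_base

# Per-frame latency at batch size 1, in milliseconds.
latency_base_ms = 1000.0 / fps_base
latency_ours_ms = 1000.0 / fps_ours
budget_30fps_ms = 1000.0 / 30.0

print(f"GFLOPs increase: {gflops_increase_pct:.1f}%")
print(f"TCS improvement: {tcs_improvement_pct:.1f}%")
print(f"Latency: {latency_base_ms:.2f} ms -> {latency_ours_ms:.2f} ms "
      f"(30 FPS budget: {budget_30fps_ms:.2f} ms)")
```

The added latency is under 2 ms per frame on the A100, leaving a wide margin below the 30 FPS budget; that margin would need to be re-measured on embedded hardware.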

Furthermore, when situated within the broader performance–complexity landscape (visualized in Figure 7), our model’s efficiency profile is excellent. It remains substantially faster than two-stage methods like Faster R-CNN while being more accurate than other real-time models such as RT-DETR. With an inference speed of 81 FPS, STG-Mamba-YOLO comfortably exceeds the typical threshold for real-time video processing (>30 FPS). This positions our framework as a highly practical and powerful solution, well-suited for deployment in vehicle-mounted road inspection systems.

5. Discussion

The empirical results presented in this study demonstrate that STG-Mamba-YOLO achieves a superior trade-off between accuracy and stability. The model’s strong performance directly results from its innovative hierarchical architecture, which integrates context at three complementary levels. Our ablation studies (Section 4.5) provided conclusive evidence for the individual contribution of each stage. Specifically, the Mamba blocks were shown to be critical for modeling elongated defects, a persistent weakness of standard CNNs. Furthermore, the intra-frame GNN module marks a conceptual shift from simple feature detection to active relational reasoning. With this, the model interprets complex defect formations (like alligator cracking) as a single, interconnected structure, not as a set of discrete fissures. Lastly, the significant jump in the Temporal Consistency Score (TCS) highlights the fundamental importance of sequence-level modeling. This component effectively transforms the detector from a static image processor into a reliable tool for dynamic assessment, a crucial requirement for real-world applications.

The paradigm of hierarchical context fusion presented here is not restricted to the road inspection domain. This architectural template—which synergistically combines long-range spatial extractors (Mamba) and high-level relational engines (GNNs)—is highly promising for numerous other computer vision tasks where context is decisive. Examples include agricultural disease identification, industrial defect analysis, or medical lesion segmentation. A robust and temporally stable detector like STG-Mamba-YOLO can improve automated pavement management systems. Reliable defect tracking facilitates more accurate Pavement Condition Index (PCI) evaluations and provides the foundation for creating longitudinal records of damage development. Such data is invaluable for optimizing maintenance schedules and resource allocation.

While the performance is compelling, our study is subject to several limitations. First, the addition of the GNN and temporal modules, though effective, increases the model’s computational footprint. Future research could explore model compression techniques like knowledge distillation or graph pruning to create lightweight versions for on-device deployment. Second, the GNNs’ learned relational patterns are data-dependent and might not generalize perfectly to novel defect topologies absent from the training set. Investigating few-shot or zero-shot learning for this component could be a way to improve its adaptability. Third, the lack of a large-scale, public video dataset for road damage necessitated using a semi-synthetic benchmark for temporal evaluation. Assembling and releasing such a benchmark would be a valuable contribution to the research community.

6. Conclusions

This paper presented STG-Mamba-YOLO, a new framework that re-envisions road defect detection. It shifts the task from isolated object identification to a hierarchical, context-based reasoning process. As demonstrated by our comprehensive quantitative and qualitative experiments, this new paradigm offers substantial gains in accuracy, robustness, and temporal stability compared to a wide array of state-of-the-art methods.

Looking ahead, we envision two particularly promising research paths. The first is a multi-modal framework, which would integrate GPS data for defect geolocation and fuse inputs from other sensors (e.g., 3D lidar or thermal cameras) to evaluate damage severity (like pothole depth), not just its presence. The second is to expand the temporal modeling capabilities beyond ensuring consistency to achieve true predictive maintenance. Analyzing the long-term evolution of defect graphs, future models could be trained to forecast crack propagation paths or the expansion rate of potholes, enabling a shift from reactive to genuinely predictive infrastructure management.

Author Contributions

Conceptualization, Q.Z. and Z.T.; methodology, X.S. and Z.W.; software, Y.B.; validation, Z.T., X.S., Y.B. and Y.J.; formal analysis, Q.Z. and Z.T.; investigation, Z.T. and Z.W.; resources, Q.Z.; data curation, Y.B. and Y.J.; writing—original draft preparation, Z.T.; writing—review and editing, Q.Z. and X.S.; visualization, Z.W. and Y.J.; supervision, Q.Z.; project administration, Q.Z.; funding acquisition, Q.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are publicly available at https://datasetninja.com/road-damage-detector, accessed on 1 December 2025.

Acknowledgments

We thank the anonymous reviewers for their constructive comments that significantly improved the quality of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| FPS | Frames Per Second |
| GAT | Graph Attention Network |
| GFLOPs | Giga Floating Point Operations |
| GNN | Graph Neural Network |
| mAP | mean Average Precision |
| RDD | Road Damage Detection |
| SSM | State Space Model |
| STG | Spatio-Temporal Graph |
| TCS | Temporal Consistency Score |
| YOLO | You Only Look Once |

References

- Li, L.; Liu, D.; Teng, L.; Zhu, J. Development of a Relationship between Pavement Condition Index and Riding Quality Index on Rural Roads: A Case Study in China. Mathematics 2024, 12, 410. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021; Volume 34, pp. 26024–26037. [Google Scholar]

- Maeda, H.; Sekimoto, Y.; Seto, T.; Kashiyama, T.; Omata, H. Road damage detection and classification using deep neural networks with smartphone images. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 1127–1141. [Google Scholar] [CrossRef]

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752. [Google Scholar] [CrossRef]

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph attention networks. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 7–12 December 2015; Volume 28. [Google Scholar]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Wang, C.Y.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

- Li, Z.; Xiong, F.; Huang, B.; Li, M.; Xiao, X.; Ji, Y.; Xie, J.; Liang, A.; Xu, H. MGD-YOLO: An Enhanced Road Defect Detection Algorithm Based on Multi-Scale Attention Feature Fusion. Comput. Mater. Contin. 2025, 84, 5613–5635.

- Yoon, J.H.; Jung, J.W.; Yoo, S.B. Auxcoformer: Auxiliary and Contrastive Transformer for Robust Crack Detection in Adverse Weather Conditions. Mathematics 2024, 12, 690.

- Fantuzzi, N.; Fabbrocino, F.; Montemurro, M.; Nanni, F.; Huang, Q.; Correia, J.A.; Dassatti, L.; Bacciocchi, M. Mathematical and Computational Modelling in Mechanics of Materials and Structures. Math. Comput. Appl. 2024, 29, 109.

- Xiao, X.; Wang, Z.; Mo, M.; Liu, C.; Ma, C.; Li, Y.; Krishnaswamy, S.; Wang, X.; Wang, T. Self-Supervised Visual Prompting for Cross-Domain Road Damage Detection. arXiv 2025, arXiv:2511.12410.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Online, 6–14 December 2021; Volume 34, pp. 12077–12090.

- Xiao, X.; Zhang, Y.; Wang, J.; Zhao, L.; Wei, Y.; Li, H.; Li, Y.; Wang, X.; Roy, S.K.; Xu, H.; et al. RoadBench: A Vision-Language Foundation Model and Benchmark for Road Damage Understanding. arXiv 2025, arXiv:2507.17353.

- Khan, M.W.; Obaidat, M.S.; Mahmood, K.; Sadoun, B.; Badar, H.M.S.; Gao, W. Real-Time Road Damage Detection Using an Optimized YOLOv9s-Fusion in IoT Infrastructure. IEEE Internet Things J. 2025, 12, 17649–17660.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 3–7 May 2021.

- Xu, W.; Zheng, S.; Wang, C.; Zhang, Z.; Ren, C.; Xu, R.; Xu, S. SAMamba: Adaptive state space modeling with hierarchical vision for infrared small target detection. Inf. Fusion 2025, 124, 103338.

- Zhang, Q.; Shao, M.; Chen, X. Pool-Mamba: Pooling state space model for low-light image enhancement. Neurocomputing 2025, 635, 130005.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Jiao, J.; Liu, Y. VMamba: Visual state space model. Adv. Neural Inf. Process. Syst. 2024, 37, 103031–103063.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A comprehensive survey on graph neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

- Jia, Z.; Wang, C.; Wang, Y.; Gao, X.; Li, B.; Yin, L.; Chen, H. Recent Research Progress of Graph Neural Networks in Computer Vision. Electronics 2025, 14, 1742.

- Zheng, Y.; Yi, L.; Wei, Z. A survey of dynamic graph neural networks. Front. Comput. Sci. 2025, 19, 196323.

- Dang, Q.; Liu, Q.; Yang, S.; He, X. Data-driven evolutionary algorithm based on inductive graph neural networks for multimodal multi-objective optimization. IEEE Trans. Evol. Comput. 2025.

- Zhang, J.; Feng, K. TSAD: Transformer-Based Semi-Supervised Anomaly Detection for Dynamic Graphs. Mathematics 2025, 13, 3123.

- Xiao, X.; Li, Z.; Wang, W.; Xie, J.; Lin, H.; Roy, S.K.; Wang, T.; Xu, M. TD-RD: A Top-Down Benchmark with Real-Time Framework for Road Damage Detection. In Proceedings of the 2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

- Arya, D.; Maeda, H.; Ghosh, S.K.; Toshniwal, D.; Sekimoto, Y. RDD2022: A multi-national image dataset for automatic Road Damage Detection. arXiv 2022, arXiv:2209.08538.

- Eisenbach, M.; Stricker, R.; Seichter, D.; Amende, K.; Debes, K.; Sesselmann, M.; Ebersbach, D.; Stoeckert, U.; Gross, H.M. How to get pavement distress detection ready for deep learning? A systematic approach. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2039–2047.

- Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature pyramid and hierarchical boosting network for pavement crack detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 571–579.

- Yaseen, M. What is YOLOv8: An In-Depth Exploration of the Internal Features of the Next-Generation Object Detector. arXiv 2024, arXiv:2408.15857.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Jocher, G.; Chaurasia, A.; Stoken, A.; Borovec, J.; NanoCode012; Kwon, Y.; Michael, K.; Liu, C.; Xie, T. YOLOv5. 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 1 December 2025).

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Zong, Z.; Song, G.; Liu, Y. DETRs with collaborative hybrid assignments training. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 2–3 October 2023; pp. 6748–6758.

- Yi, Y.; Song, Y.; Qin, J.; Gao, F.; Han, H. CD-YOLO: An Enhanced YOLOv8n-Based Algorithm for Accurate Crop Disease Detection. In Proceedings of the 2025 IEEE 8th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 14–16 March 2025; Volume 8, pp. 285–290.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).