Bias-Alleviated Zero-Shot Sports Action Recognition Enabled by Multi-Scale Semantic Alignment

Abstract

1. Introduction

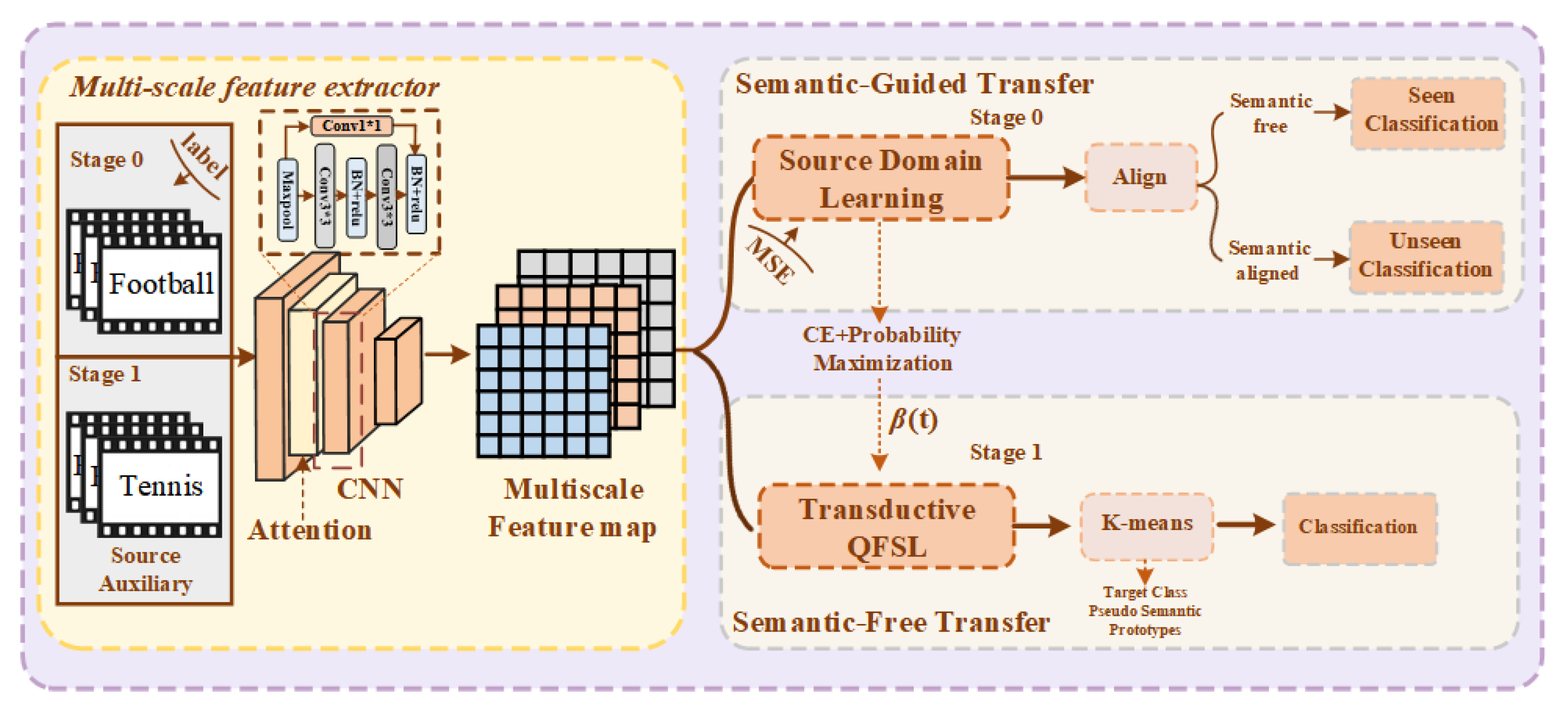

- This paper proposes MSA-ZSAR, a progressive multi-scale semantic alignment framework that decomposes training into source-domain learning and transfer learning. The framework first establishes a stable visual–semantic mapping through regression-based alignment and then extends it with an improved quasi-fully supervised learning (QFSL) loss equipped with dynamic loss weighting. This design mitigates the bias toward seen classes while improving generalization across seen and unseen categories.

- To address varying levels of semantic availability, two complementary transfer strategies are developed. Semantic-guided transfer (SGT) leverages pre-defined kinematic embeddings and joint optimization to align the visual and semantic spaces, whereas semantic-free transfer (SFT) constructs pseudo-semantic prototypes via unsupervised clustering and employs a progressive classify-then-recognize scheme. Together, the two strategies support transfer in both semantics-rich and semantics-limited scenarios.

- Beyond these modules, the clustering-based prototype generation (CPG) mechanism dynamically refines the semantic prototypes, expanding the semantic space and capturing intra-class diversity, particularly for unlabeled categories. In addition, a multi-model ensemble (MME) evaluation strategy aggregates the predictions of multiple trained models or checkpoints, reducing variance and improving robustness. Together, these mechanisms improve both accuracy and stability in generalized zero-shot sports action recognition.
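The regression-based alignment and semantic-prototype matching described in the first contribution can be illustrated with a minimal pure-Python sketch. This is not the authors' implementation: the linear map `W`, the toy 3-D "visual" features, the 2-D class embeddings in `SEMANTICS`, and the cosine nearest-prototype rule are all hypothetical stand-ins for the paper's video features and kinematic embeddings.

```python
import math

# Hypothetical toy data: 2-D semantic embeddings per class; "swim" is never seen in training.
SEMANTICS = {"run": [1.0, 0.0], "jump": [0.0, 1.0], "swim": [0.7, 0.7]}
SEEN = ["run", "jump"]

def matvec(W, x):
    """Multiply a matrix stored as nested lists (rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def mse(W, feats, targets):
    """Mean over samples of the squared error ||W x - s||^2."""
    total = 0.0
    for x, s in zip(feats, targets):
        total += sum((p - t) ** 2 for p, t in zip(matvec(W, x), s))
    return total / len(feats)

def fit_linear_map(feats, targets, lr=0.1, epochs=200):
    """Regress visual features onto semantic embeddings with batch gradient descent on the MSE."""
    d_out, d_in, n = len(targets[0]), len(feats[0]), len(feats)
    W = [[0.0] * d_in for _ in range(d_out)]
    for _ in range(epochs):
        grad = [[0.0] * d_in for _ in range(d_out)]
        for x, s in zip(feats, targets):
            err = [p - t for p, t in zip(matvec(W, x), s)]
            for i in range(d_out):
                for j in range(d_in):
                    grad[i][j] += 2.0 * err[i] * x[j] / n
        W = [[W[i][j] - lr * grad[i][j] for j in range(d_in)] for i in range(d_out)]
    return W

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-12)

def predict(W, x, prototypes):
    """Project a visual feature into semantic space and return the most similar class prototype."""
    z = matvec(W, x)
    return max(prototypes, key=lambda c: cosine(z, prototypes[c]))

# Train only on seen classes (3-D features built as [s0, s1, s0 + s1]).
feats = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
targets = [SEMANTICS[c] for c in SEEN]
W = fit_linear_map(feats, targets)
print(predict(W, [0.7, 0.7, 1.4], SEMANTICS))  # a sample of the unseen class
```

The unseen class is recognized only through its semantic embedding, which is the zero-shot mechanism an alignment stage of this kind provides; the paper's bias-reduction components (the QFSL-based loss, SGT and SFT) would operate on top of such a mapping.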

2. Related Work

2.1. Sports Action Recognition Based on Deep Learning

2.2. Zero-Shot Learning and Generalized Zero-Shot Learning

2.3. Transductive Zero-Shot Learning

3. Proposed Method

3.1. Preparation Work

3.2. The Design of the MSA-ZSAR Framework

3.3. Design of Loss Function

4. Experiments

4.1. Experimental Setup

4.2. The Effectiveness of Dynamic Loss Weight

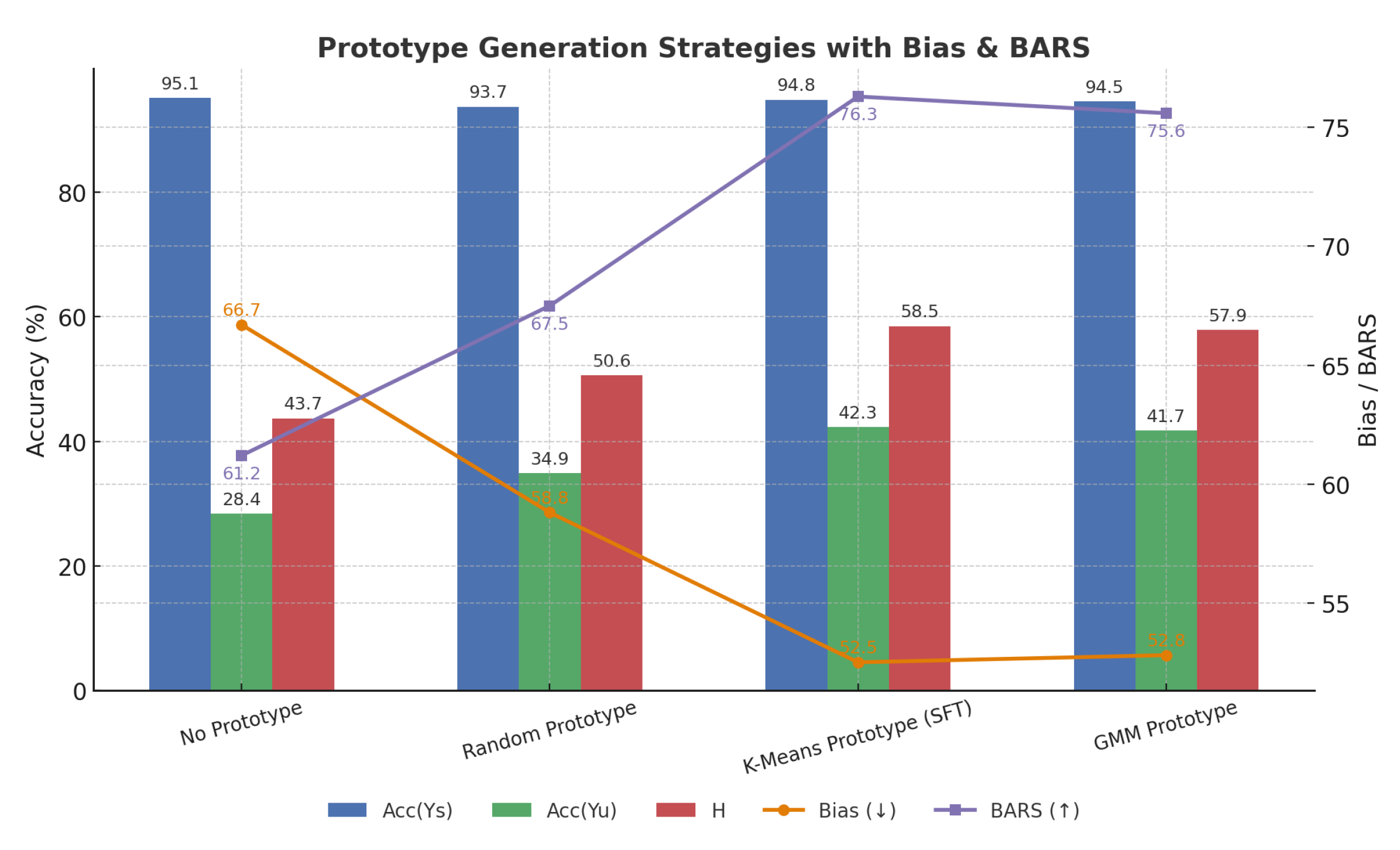

4.3. Quality of SFT Pseudo-Semantic Prototypes

4.4. Multi-Scale Feature Extraction Module (MSFE) and Robustness

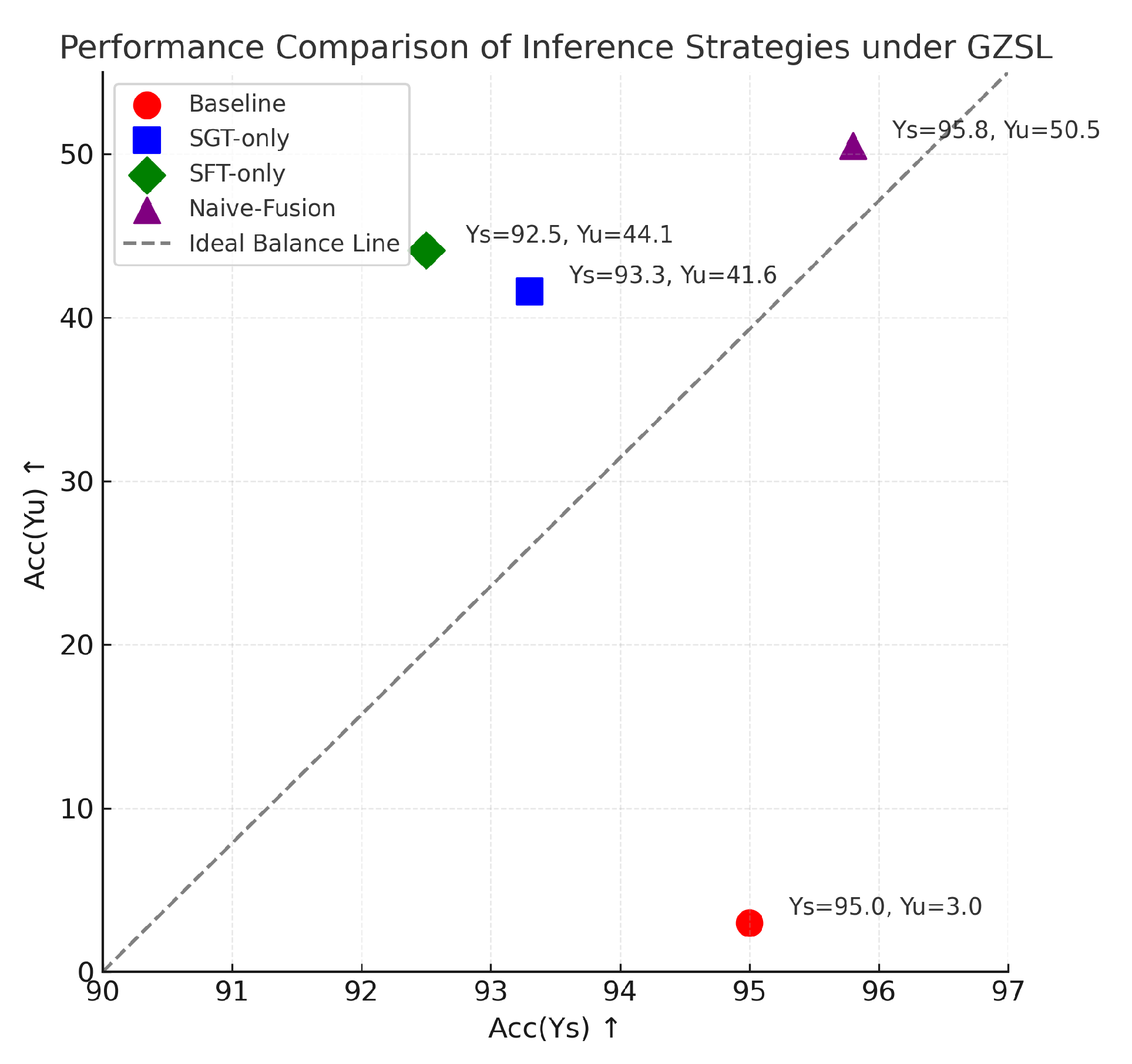

4.5. Bias Suppression Effects of SGT and SFT Branches and Joint Inference

4.6. Ablation Study

4.7. Comparative Experiments with Existing Methods

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Mitsuzumi, Y.; Kimura, A.; Irie, G.; Nakazawa, A. Cross-Action Cross-Subject Skeleton Action Recognition via Simultaneous Action-Subject Learning with Two-Step Feature Removal. In Proceedings of the 2024 IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, 27–30 October 2024; pp. 2182–2186.

2. Wang, G.; Guo, J.; Zhang, J.; Qi, X.; Song, H. Design of Human Action Recognition Method Based on Cross Attention and 2s-AGCN Model. In Proceedings of the 2024 IEEE 6th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Hangzhou, China, 23–25 October 2024; pp. 1341–1345.

3. Chen, Y.; Zhang, J.; Wang, Y. Human action recognition and analysis methods based on OpenPose and deep learning. In Proceedings of the 2024 International Conference on Integrated Circuits and Communication Systems (ICICACS), Raichur, India, 23–24 February 2024; pp. 1–5.

4. Huang, S. Sports Action Recognition and Standardized Training using Puma Optimization Based Residual Network. In Proceedings of the 2024 International Conference on Intelligent Algorithms for Computational Intelligence Systems (IACIS), Hassan, India, 23–24 August 2024; pp. 1–4.

5. Kim, J.S. Efficient human action recognition with dual-action neural networks for virtual sports training. In Proceedings of the 2022 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Yeosu, Republic of Korea, 26–28 October 2022; pp. 1–3.

6. Kim, J.S. Action Recognition System Using Full-body XR Devices for Sports Metaverse Games. In Proceedings of the 2024 15th International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2024; pp. 1962–1965.

7. Zhou, S. A survey of pet action recognition with action recommendation based on HAR. In Proceedings of the 2022 IEEE/WIC/ACM International Joint Conference on Web Intelligence and Intelligent Agent Technology (WI-IAT), Niagara Falls, ON, Canada, 17–20 November 2022; pp. 765–770.

8. Sheikh, A.; Kuhite, C.; Chamate, S.; Raut, V.; Huddar, R.; Bhoyar, K.K. Sports Recognition in Videos Using Deep Learning. In Proceedings of the 2024 2nd International Conference on Emerging Trends in Engineering and Medical Sciences (ICETEMS), Nagpur, India, 22–23 November 2024; pp. 375–379.

9. Mandal, D.; Narayan, S.; Dwivedi, S.K.; Gupta, V.; Ahmed, S.; Khan, F.S.; Shao, L. Out-of-distribution detection for generalized zero-shot action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9985–9993.

10. Qiao, W.; Yang, G.; Gao, M.; Liu, Y.; Zhang, X. Research on Sports Action Analysis and Diagnosis System Based on Grey Clustering Algorithm. In Proceedings of the 2023 Asia-Pacific Conference on Image Processing, Electronics and Computers (IPEC), Dalian, China, 14–16 April 2023; pp. 472–475.

11. Liu, Y.; Yuan, J.; Tu, Z. Motion-driven visual tempo learning for video-based action recognition. IEEE Trans. Image Process. 2022, 31, 4104–4116.

12. Tu, Z.; Xie, W.; Dauwels, J.; Li, B.; Yuan, J. Semantic cues enhanced multimodality multistream CNN for action recognition. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 1423–1437.

13. Kong, L.; Huang, D.; Qin, J.; Wang, Y. A joint framework for athlete tracking and action recognition in sports videos. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 532–548.

14. Tejero-de Pablos, A.; Nakashima, Y.; Sato, T.; Yokoya, N. Human action recognition-based video summarization for RGB-D personal sports video. In Proceedings of the 2016 IEEE International Conference on Multimedia and Expo (ICME), Seattle, WA, USA, 11–15 July 2016; pp. 1–6.

15. Pratik, V.; Palani, S. Super Long Range CNN for Video Enhancement in Handball Action Recognition. In Proceedings of the 2024 3rd International Conference on Artificial Intelligence For Internet of Things (AIIoT), Vellore, India, 3–4 May 2024; pp. 1–6.

16. Wang, K. Research on Application of Computer Vision in Movement Recognition System of Sports Athletes. In Proceedings of the 2024 International Conference on Electronics and Devices, Computational Science (ICEDCS), Marseille, France, 23–25 September 2024; pp. 999–1004.

17. Guo, H.; Yu, W.; Yan, Y.; Wang, H. Bi-directional motion attention with contrastive learning for few-shot action recognition. In Proceedings of the ICASSP 2024 - 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 5490–5494.

18. Li, Y.; Liu, Z.; Yao, L.; Wang, X.; McAuley, J.; Chang, X. An entropy-guided reinforced partial convolutional network for zero-shot learning. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5175–5186.

19. Zhang, L.; Chang, X.; Liu, J.; Luo, M.; Li, Z.; Yao, L.; Hauptmann, A. TN-ZSTAD: Transferable network for zero-shot temporal activity detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3848–3861.

20. Keshari, R.; Singh, R.; Vatsa, M. Generalized zero-shot learning via over-complete distribution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 13300–13308.

21. Min, S.; Yao, H.; Xie, H.; Wang, C.; Zha, Z.J.; Zhang, Y. Domain-aware visual bias eliminating for generalized zero-shot learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 12664–12673.

22. Zhang, L.; Wang, P.; Liu, L.; Shen, C.; Wei, W.; Zhang, Y.; Van Den Hengel, A. Towards effective deep embedding for zero-shot learning. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 2843–2852.

23. Fu, Y.; Hospedales, T.M.; Xiang, T.; Gong, S. Transductive multi-view zero-shot learning. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2332–2345.

24. Guo, Y.; Ding, G.; Jin, X.; Wang, J. Transductive zero-shot recognition via shared model space learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

25. Xu, Y.; Han, C.; Qin, J.; Xu, X.; Han, G.; He, S. Transductive zero-shot action recognition via visually connected graph convolutional networks. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 3761–3769.

26. Rahman, S.; Khan, S.; Barnes, N. Transductive learning for zero-shot object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019; pp. 6082–6091.

27. Tian, Y.; Huang, Y.; Xu, W.; Kong, Y. Coupling Adversarial Graph Embedding for transductive zero-shot action recognition. Neurocomputing 2021, 452, 239–252.

28. Zhang, L.; Zhang, G. Semantic feedback for generalized zero-shot learning. In Proceedings of the 2024 4th International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 19–21 January 2024; pp. 298–302.

29. Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2556–2563.

30. Soomro, K.; Zamir, A.R.; Shah, M. UCF101: A dataset of 101 human actions classes from videos in the wild. arXiv 2012, arXiv:1212.0402.

31. Sun, B.; Kong, D.; Wang, S.; Li, J.; Yin, B.; Luo, X. GAN for vision, KG for relation: A two-stage network for zero-shot action recognition. Pattern Recognit. 2022, 126, 108563.

32. Kim, T.S.; Jones, J.; Peven, M.; Xiao, Z.; Bai, J.; Zhang, Y.; Qiu, W.; Yuille, A.; Hager, G.D. DASZL: Dynamic action signatures for zero-shot learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 1817–1826.

33. Su, T.; Wang, H.; Qi, Q.; Wang, L.; He, B. Transductive learning with prior knowledge for generalized zero-shot action recognition. IEEE Trans. Circuits Syst. Video Technol. 2023, 34, 260–273.

34. Gowda, S.N.; Sevilla-Lara, L. Telling stories for common sense zero-shot action recognition. In Proceedings of the Asian Conference on Computer Vision, Hanoi, Vietnam, 8–12 December 2024; pp. 4577–4594.

35. Shang, J.; Niu, C.; Tao, X.; Zhou, Z.; Yang, J. Generalized zero-shot action recognition through reservation-based gate and semantic-enhanced contrastive learning. Knowl.-Based Syst. 2024, 301, 112283.

| Abbreviation | Full Term |
|---|---|
| ZSL | Zero-Shot Learning |
| GZSL | Generalized Zero-Shot Learning |
| MSA-ZSAR | Multi-Scale Semantic Alignment framework for Zero-Shot Sports Action Recognition |
| SGT | Semantic-Guided Transfer |
| SFT | Semantic-Free Transfer |
| QFSL Loss | Quasi-Fully Supervised Learning Loss |
| CPG | Clustering-based Prototype Generation |
| MME | Multi-model Ensemble Evaluation |
| CE | Cross-Entropy |
| PM | Probability Maximization |
| MSE | Mean Squared Error |
| BARS | Bias-Alleviated Recognition Score |
| Acc(Ys) | Accuracy on Seen Classes |
| Acc(Yu) | Accuracy on Unseen Classes |
| H | Harmonic Mean |
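Acc(Ys), Acc(Yu) and H follow the usual GZSL evaluation protocol. The sketch below shows how these quantities are commonly computed; it assumes mean per-class top-1 accuracy with predictions made over the union of seen and unseen labels, and the function names and toy labels are hypothetical. BARS is not reproduced because its definition is not given here.

```python
from collections import defaultdict

def per_class_accuracy(y_true, y_pred, classes):
    """Mean per-class top-1 accuracy (in %) over the given class set."""
    hits, counts = defaultdict(int), defaultdict(int)
    for t, p in zip(y_true, y_pred):
        if t in classes:
            counts[t] += 1
            hits[t] += int(t == p)
    accs = [hits[c] / counts[c] for c in classes if counts[c] > 0]
    return 100.0 * sum(accs) / len(accs) if accs else 0.0

def harmonic_mean(acc_s, acc_u):
    """H = 2 * Acc(Ys) * Acc(Yu) / (Acc(Ys) + Acc(Yu))."""
    if acc_s + acc_u == 0:
        return 0.0
    return 2.0 * acc_s * acc_u / (acc_s + acc_u)

# Toy GZSL evaluation: every prediction is chosen from seen + unseen labels.
seen, unseen = {"run", "jump"}, {"swim"}
y_true = ["run", "run", "jump", "swim", "swim"]
y_pred = ["run", "jump", "jump", "swim", "run"]
acc_s = per_class_accuracy(y_true, y_pred, seen)    # (1/2 + 1/1) / 2 -> 75.0
acc_u = per_class_accuracy(y_true, y_pred, unseen)  # 1/2 -> 50.0
h = harmonic_mean(acc_s, acc_u)                     # 2*75*50/125 -> 60.0
```

Because H is nonlinear, the mean of per-run H values generally differs from H computed on mean accuracies, so the two should not be compared directly.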

| Loss-weight strategy | Acc(Ys) ↑ | Acc(Yu) ↑ | H ↑ | P(Yu) ↑ | R(Yu) ↑ | F1(Yu) ↑ | BARS ↑ |
|---|---|---|---|---|---|---|---|
| 1.0 | 90.5 ± 1.6 | 42.2 ± 2.0 | 55.7 ± 4.5 | 95.4 ± 0.7 | 82.2 ± 2.5 | 90.8 ± 0.8 | 73.2 ± 2.3 |
| min(2.0, 1.0 + t/5) | 95.9 ± 1.2 | 50.5 ± 1.9 | 65.0 ± 1.5 | 98.9 ± 0.5 | 91.3 ± 1.7 | 95.0 ± 1.1 | 80.0 ± 1.9 |
| 2.0 | 92.1 ± 0.9 | 44.1 ± 0.8 | 57.5 ± 2.4 | 96.7 ± 0.5 | 83.7 ± 3.7 | 89.6 ± 2.0 | 73.5 ± 3.5 |
| Target term removed | 85.2 ± 1.2 | 39.1 ± 2.1 | 53.2 ± 4.2 | 94.4 ± 1.3 | 80.8 ± 1.6 | 88.8 ± 2.9 | 71 ± 2.4 |
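The dynamic weighting schedule min(2.0, 1.0 + t/5) can be paired with a QFSL-style objective as sketched below. The bias term follows the original QFSL formulation (negative log of the total probability mass assigned to unseen classes on unlabeled samples); the exact improved variant used in MSA-ZSAR is not specified here, so treating `t` as the epoch index, computing cross-entropy over the full label space, and all helper names are assumptions.

```python
import math

def logsumexp(values):
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def log_softmax(logits):
    lse = logsumexp(logits)
    return [z - lse for z in logits]

def dynamic_weight(t, start=1.0, cap=2.0, rate=5.0):
    """lambda(t) = min(cap, start + t / rate); the defaults give min(2.0, 1.0 + t/5)."""
    return min(cap, start + t / rate)

def qfsl_style_loss(labeled, unlabeled, unseen_idx, lam):
    """Cross-entropy on labeled seen-class samples plus lam times a bias term.

    labeled:    list of (logits over all classes, true class index)
    unlabeled:  list of logits over all classes for samples without labels
    unseen_idx: indices of the unseen classes in each logit vector
    """
    ce = 0.0
    for logits, y in labeled:
        ce -= log_softmax(logits)[y]
    ce /= max(len(labeled), 1)

    bias = 0.0
    for logits in unlabeled:
        lp = log_softmax(logits)
        # -log sum_{i in unseen} p_i, computed in log space for stability
        bias -= logsumexp([lp[i] for i in unseen_idx])
    bias /= max(len(unlabeled), 1)
    return ce + lam * bias

# Hypothetical usage at epoch 3: the bias-term weight ramps from 1.0 toward 2.0.
loss = qfsl_style_loss(
    labeled=[([2.0, 0.5, 0.1, 0.3], 0)],
    unlabeled=[[0.2, 0.1, 1.5, 0.9]],
    unseen_idx=[2, 3],
    lam=dynamic_weight(3),
)
```

A warm-up that starts at 1.0 and saturates at 2.0 lets the seen-class mapping stabilize before the unseen-class term gains more weight, which is consistent with the constant-weight rows (1.0 and 2.0) both trailing the scheduled row in the table above.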

| Strategy | Acc(Ys) ↑ | Acc(Yu) ↑ | H ↑ | P(Yu) ↑ | R(Yu) ↑ | F1(Yu) ↑ |
|---|---|---|---|---|---|---|
| No prototype | 95.1 ± 1.8 | 28.4 ± 3.1 | 43.7 ± 2.4 | 92.0 ± 1.7 | 70.2 ± 5.1 | 79.7 ± 4.2 |
| Random prototype | 93.7 ± 2.4 | 34.9 ± 2.7 | 50.6 ± 2.5 | 95.5 ± 2.0 | 75.1 ± 1.5 | 84.2 ± 2.5 |
| SFT | 94.8 ± 2.1 | 42.3 ± 1.7 | 58.5 ± 1.8 | 98.9 ± 1.9 | 90.4 ± 1.9 | 94.5 ± 1.4 |
| GMM prototype | 94.5 ± 2.3 | 41.7 ± 2.3 | 57.9 ± 3.4 | 97.5 ± 0.7 | 89.1 ± 3.1 | 93.1 ± 0.8 |
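Pseudo-semantic prototypes of the kind compared in this table can be obtained by clustering unlabeled visual features. The sketch below uses plain k-means with deterministic farthest-point initialization as an assumed stand-in for the unsupervised clustering in SFT and CPG (the GMM row corresponds to Gaussian-mixture clustering instead); the toy 2-D points and all function names are hypothetical.

```python
def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def farthest_point_init(points, k):
    """Start from the first point, then repeatedly add the point farthest from the chosen centers."""
    centers = [points[0]]
    while len(centers) < k:
        nxt = max(points, key=lambda p: min(sq_dist(p, c) for c in centers))
        centers.append(nxt)
    return [list(c) for c in centers]

def kmeans(points, k, iters=20):
    """Lloyd's algorithm; returns (cluster centers used as pseudo-prototypes, assignments)."""
    centers = farthest_point_init(points, k)
    assign = [0] * len(points)
    for _ in range(iters):
        assign = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the previous center if a cluster becomes empty
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return centers, assign

def nearest_prototype(x, centers):
    """Index of the pseudo-prototype closest to feature x."""
    return min(range(len(centers)), key=lambda j: sq_dist(x, centers[j]))

# Hypothetical unlabeled features forming two well-separated groups.
points = [[0.0, 0.0], [0.2, 0.0], [0.0, 0.2], [5.0, 5.0], [5.2, 5.0], [5.0, 5.2]]
centers, assign = kmeans(points, k=2)
```

Cluster indices are arbitrary, so a separate step (for SFT, the classify-then-recognize scheme) is still needed to relate each pseudo-prototype to an unseen category.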

| Model | Acc(Yu)-Clean ↑ | Acc(Yu)-Perturbed ↑ | ΔAcc(Yu) ↓ | H-Clean ↑ | H-Perturbed ↑ | ΔH ↓ | P ↑ | R ↑ | F1 ↑ |
|---|---|---|---|---|---|---|---|---|---|
| Baseline | 50.5 ± 2.2 | 43.2 ± 1.9 | 7.3 | 41.3 ± 3.8 | 37.5 ± 5.6 | 2.8 | 90.5 ± 1.1 | 86.4 ± 2.9 | 87.5 ± 2.0 |
| MSFE | 50.8 ± 1.5 | 48.9 ± 1.7 | 2.1 | 65.2 ± 2.1 | 62.4 ± 1.8 | 0.8 | 93.7 ± 2.1 | 90.1 ± 3.2 | 92.2 ± 1.8 |

| Strategy | Acc(Ys) ↑ | Acc(Yu) ↑ | H ↑ |
|---|---|---|---|
| SGT-only | 93.3 ± 1.8 | 41.6 ± 2.3 | 57.5 ± 1.8 |
| SFT-only | 92.5 ± 1.0 | 44.1 ± 2.1 | 59.7 ± 2.4 |
| Naive-fusion | 95.8 ± 1.2 | 50.5 ± 1.5 | 65.0 ± 1.9 |

| Model Variant | Acc(Ys) | Acc(Yu) | H |
|---|---|---|---|
| Baseline | 90.1 ± 2.5 | 42.7 ± 2.0 | 47.7 ± 3.1 |
| + SGT | 92.3 ± 2.3 | 45.4 ± 2.4 | 53.4 ± 2.2 |
| + SFT | 91.6 ± 1.8 | 46.1 ± 2.7 | 55.8 ± 2.4 |
| + CPG | 92.0 ± 0.7 | 48.7 ± 3.0 | 58.9 ± 2.1 |
| + MME (Full Model) | 95.9 ± 1.2 | 50.8 ± 1.5 | 65.0 ± 1.5 |
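The MME step, which aggregates several trained models or checkpoints, can be sketched as probability averaging. Averaging softmax outputs with optional normalized weights is an assumption (majority voting or logit averaging are common alternatives), and the function names and toy logits are hypothetical.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def ensemble_predict(per_model_logits, weights=None):
    """Average the softmax outputs of several models/checkpoints.

    Returns (index of the highest averaged probability, averaged probabilities).
    """
    k = len(per_model_logits)
    if weights is None:
        weights = [1.0] * k
    total_w = sum(weights)
    weights = [w / total_w for w in weights]  # normalize so the average stays a distribution
    n_classes = len(per_model_logits[0])
    avg = [0.0] * n_classes
    for w, logits in zip(weights, per_model_logits):
        for c, p in enumerate(softmax(logits)):
            avg[c] += w * p
    best = max(range(n_classes), key=lambda c: avg[c])
    return best, avg

# Three hypothetical checkpoints; one disagrees with the other two.
checkpoints = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [2.0, 0.0, 0.0]]
best, probs = ensemble_predict(checkpoints)
```

Averaging probabilities rather than taking a hard vote keeps each member's confidence, so a single outlier checkpoint shifts the result less, which is the variance-reduction effect the MME strategy targets.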

| Model Variant | Params (M) | GFLOPs | Latency (ms) |
|---|---|---|---|
| Baseline | 33.1 | 28.5 | 6.8 |
| Baseline + MSFE | 36.4 | 32.7 | 7.5 |
| Full Model | 41.2 | 36.1 | 8.9 |

| Method | Model | Word Embeddings | HMDB51 Acc(Yu) ↑ | UCF101 Acc(Yu) ↑ | HMDB51 H ↑ | UCF101 H ↑ |
|---|---|---|---|---|---|---|
| FGGA [31] | I3D | W | 33.9 ± 2.8 | 30.1 ± 3.2 | N/A | N/A |
| DASZL [32] | TSM | A | N/A | 48.9 ± 2.9 | N/A | 45.3 ± 2.8 |
| GAGCN [25] | Ts+Pose | W | 31.1 ± 3.4 | 31.8 ± 3.7 | N/A | N/A |
| TLPK [33] | R3D | W | 45.6 ± 2.5 | 49.9 ± 2.3 | 37.1 ± 10.8 | 51.8 ± 4.4 |
| SDR-CLIP [34] | I3D | W | 38.9 ± 3.5 | 44.8 ± 3.2 | 43.1 ± 5.3 | 41.8 ± 2.2 |
| RGSCL [35] | STC | W | 32.8 ± 2.5 | 28.8 ± 3.4 | 40.1 ± 7.2 | 39.5 ± 5.8 |
| Proposed | R(2+1)D-18 | W | 49.2 ± 3.4 | 50.5 ± 1.5 | 64.2 ± 0.7 | 65.0 ± 1.5 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zheng, Q.; Qin, W.; Meng, F.; Liu, H. Bias-Alleviated Zero-Shot Sports Action Recognition Enabled by Multi-Scale Semantic Alignment. Symmetry 2025, 17, 1959. https://doi.org/10.3390/sym17111959

Zheng Q, Qin W, Meng F, Liu H. Bias-Alleviated Zero-Shot Sports Action Recognition Enabled by Multi-Scale Semantic Alignment. Symmetry. 2025; 17(11):1959. https://doi.org/10.3390/sym17111959

Chicago/Turabian Style: Zheng, Qiang, Wen Qin, Fanyi Meng, and Hongyang Liu. 2025. "Bias-Alleviated Zero-Shot Sports Action Recognition Enabled by Multi-Scale Semantic Alignment" Symmetry 17, no. 11: 1959. https://doi.org/10.3390/sym17111959

APA Style: Zheng, Q., Qin, W., Meng, F., & Liu, H. (2025). Bias-Alleviated Zero-Shot Sports Action Recognition Enabled by Multi-Scale Semantic Alignment. Symmetry, 17(11), 1959. https://doi.org/10.3390/sym17111959