2. Related Work

In recent years, with the rapid advancement of edge computing and mimic computing technologies, resource scheduling and system defense have become focal points of academic research. As a key approach to improving computational efficiency and system stability, resource scheduling has been extensively studied in various domains such as cloud computing and edge computing. However, mimic servers, as a novel computing architecture that integrates diversity, dynamism, and redundancy, present significantly different challenges in scheduling and defense compared to traditional systems.

In particular, mimic systems emphasize the “equivalent polymorphism” of execution entities and their dynamic switching at runtime. Consequently, scheduling strategies must not only ensure efficient resource utilization but also address system unpredictability, security, and dynamic defense capabilities, requirements that rarely arise in conventional cloud-edge environments. Thus, despite substantial progress in traditional resource scheduling, there remains a clear gap in optimizing scheduling for mimic servers.

The scheduling algorithm proposed in this paper is designed specifically around the characteristics of mimic architectures, aiming to balance performance optimization with attack resistance and thereby address a critical gap in existing research [12].

In the fields of cloud and edge computing, related studies have primarily focused on efficient resource allocation, task optimization, and reduction of latency and energy consumption [13]. For example, Masadeh et al. [14] proposed a cloud-computing task-scheduling approach based on the Sea Lion Optimization (SLnO) algorithm; by emulating the hunting behavior of sea lions and integrating a multi-objective optimization model, they reduced overall completion time, cost, and energy consumption while improving resource utilization, thereby achieving superior scheduling performance. Wang et al. [12] proposed a distributed remote-calibration prototype system based on a cloud-edge-end architecture. By integrating a high-precision frequency-to-voltage conversion module, satellite timing signals, environmental monitoring, video surveillance, and OCR technology, they solved the problems of traceability transmission and on-site intelligent data recording and extraction in remote calibration, enabling high-precision, intelligent remote calibration of power equipment. Chen Y. et al. [15] proposed a flexible resource scheduling model combining Software-Defined Networking (SDN) with edge computing to address resource scheduling challenges in software-defined cloud manufacturing. Junchao Y. et al. [16] developed a parallel intelligence-driven resource scheduling scheme for Intelligent Vehicle Systems (IVS) to tackle task delays and network load imbalance caused by dual dependencies in time and data. However, these works did not adequately consider integration with mimic defense mechanisms.

Cleverson V.N. et al. [17] introduced an intent-aware reinforcement learning approach for radio resource scheduling in wireless access network slicing, aimed at fulfilling the service quality intents of different slices, but the method lacks sufficient adaptability under attack scenarios. Dong Y. et al. [18] proposed a deep reinforcement learning-based dynamic resource scheduling algorithm to adapt to device heterogeneity and network dynamics, but their model failed to fully leverage system diversity to enhance robustness.

Most of these efforts overlook the unique scheduling demands of mimic systems, including runtime dynamic switching, execution path uncertainty, and heterogeneity-driven redundancy defense strategies, which makes their approaches difficult to apply directly to mimic server environments.

In the field of task scheduling algorithms, Particle Swarm Optimization (PSO) has been widely applied to optimization problems such as resource scheduling and path planning. For example, one study proposed a global path planning method for Autonomous Underwater Vehicles (AUVs) based on an improved T-distribution Fireworks–PSO algorithm, in which a T-distribution perturbation mechanism was introduced to enhance the algorithm’s global search capability and its ability to escape local optima [19]. In addition, some works have combined PSO with reinforcement learning for UAV path planning, using reinforcement learning for real-time decision-making and PSO to optimize the search process behind those decisions [20].

From a security perspective, mimic computing emphasizes enhancing system unpredictability through diversity, dynamism, and randomness [21], thus offering a theoretical foundation for defending against unknown attacks. Prior works such as Shao et al. [22] introduced a heterogeneous executor selection mechanism, and Wang et al. [23] designed a load balancing strategy, but most of these approaches failed to deeply explore how scheduling mechanisms themselves affect the effectiveness of mimic defense. In particular, they lack modeling of the dynamic and stochastic nature of scheduling behavior, making it difficult to maintain robustness under complex attack scenarios.

Currently, most mimic scheduling research still suffers from several notable limitations. First, existing methods often rely on fixed scheduling strategies or simplistic optimization goals, with limited adaptability to dynamic changes in system state [24]. This makes system behavior predictable and exploitable by attackers, leading to poor defensive performance [2]. Second, although some studies attempt to introduce randomness into task scheduling, they generally lack adaptive optimization strategies such as deep reinforcement learning, resulting in low scheduling flexibility in dynamic environments [25]. Third, current research does not sufficiently improve randomization and unpredictability in resource scheduling, which limits its effectiveness in resisting intelligent attacks [26].

To summarize, current mimic server scheduling approaches face the following architecture-specific challenges:

Weak dynamic defense capability: Most existing methods rely on static scheduling strategies and lack environment-aware adaptation mechanisms, making them ineffective in responding to changing attacker tactics in real time [18,19].

Lack of unpredictability in scheduling outcomes: Task assignments often lack sufficient randomness and diversity, allowing attackers to infer patterns and launch targeted attacks [27,28].

Separation of defense mechanisms and scheduling logic: Many studies treat scheduling and defense as independent modules, rather than integrating them under the unified concept of “scheduling as defense” [29].

Although existing studies have made progress in optimizing resource allocation, most have failed to fully integrate mimic defense principles, resulting in insufficient robustness against malicious attacks. Traditional methods typically rely on fixed scheduling strategies or simplified optimization objectives, lacking the necessary adaptability and diversity, which makes them susceptible to being predicted and exploited by attackers. Moreover, current approaches still exhibit significant deficiencies in the randomization and unpredictability of resource scheduling, limiting their effectiveness in resisting sophisticated and adaptive attacks.

To address these limitations, this paper proposes an efficient and secure scheduling solution for mimic servers by integrating deep reinforcement learning (DRL) with the core philosophy of mimic defense. The proposed design centers around the three foundational characteristics of mimic computing—dynamism, diversity, and redundancy—to systematically enhance the scheduling mechanism. We introduce a Deep Reinforcement Learning-based Mimic Defense Server Scheduling algorithm (DRLMDS) that bridges the gap in existing research by improving resilience under complex attack scenarios. This method breaks through the limitations of traditional scheduling systems that struggle to balance performance and security, and it offers a feasible path for the design of scheduling mechanisms in future high-security computing architectures.

3. DRLMDS Design

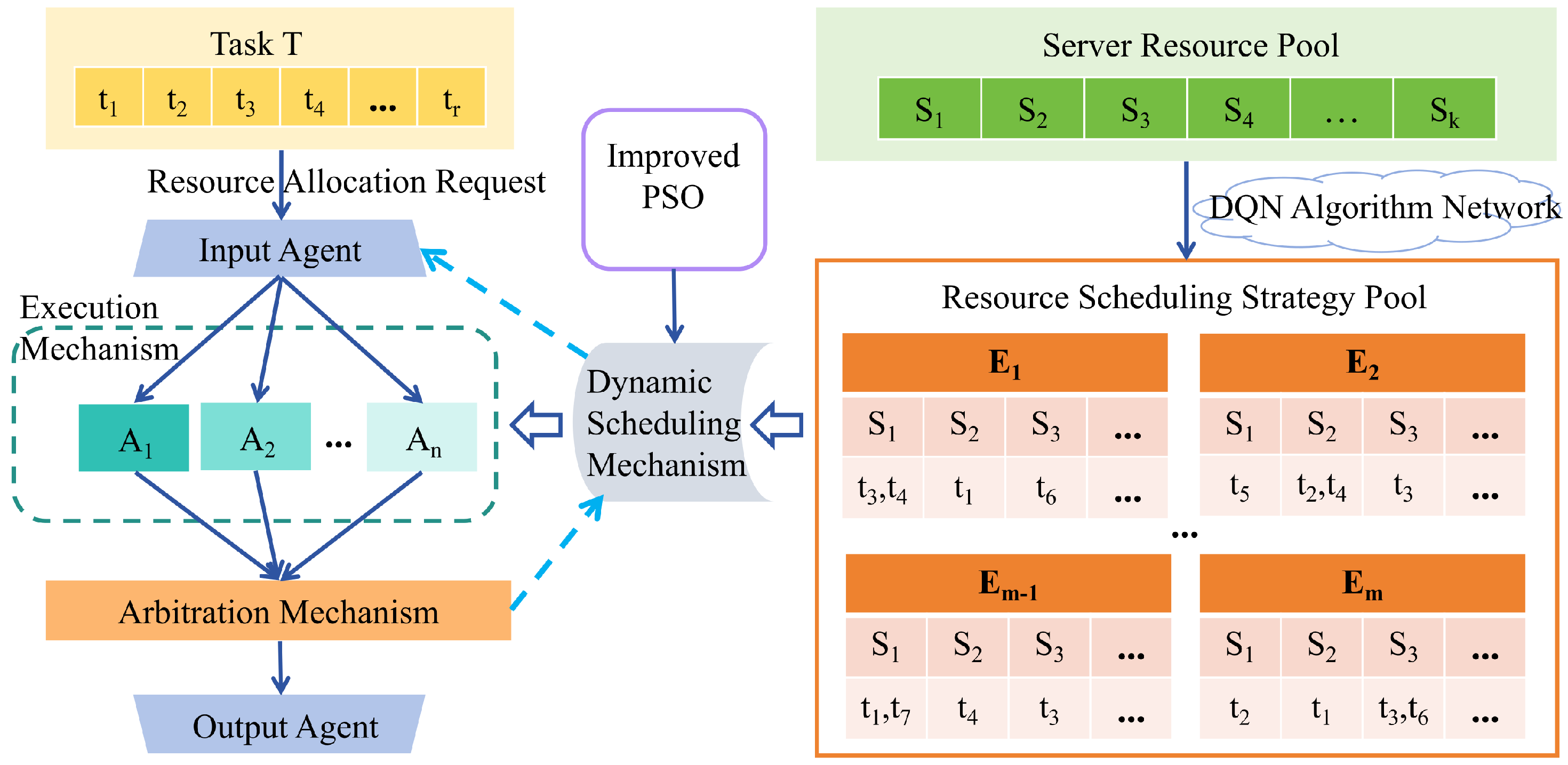

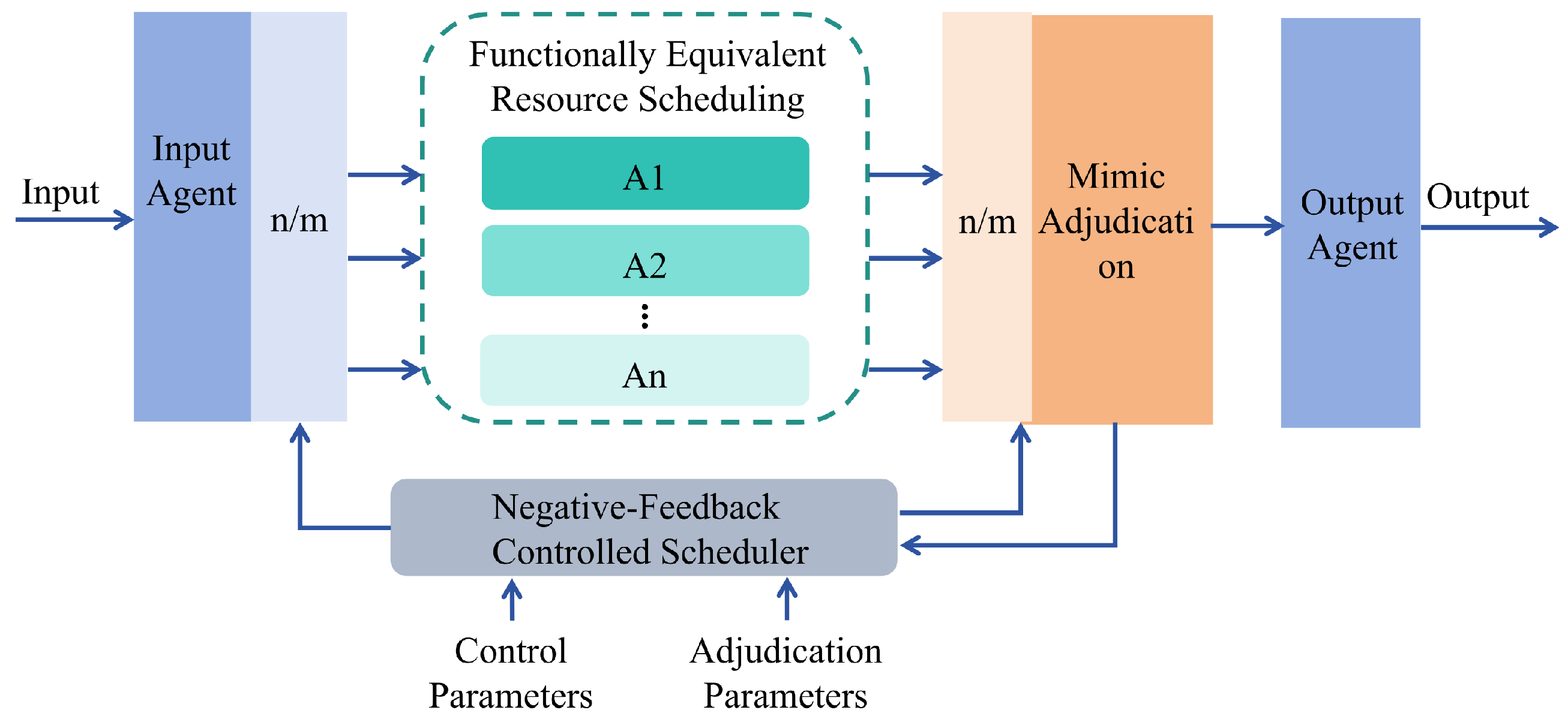

The proposed DRLMDS aims to enhance the robustness and resource utilization efficiency of mimic defense servers when facing malicious attacks by integrating deep reinforcement learning (DRL) with mimic defense principles. The mimic defense server adopts a symmetric architecture, in which computing resources, tasks, and decision-making mechanisms are evenly distributed across multiple nodes. DRLMDS reduces bias and enhances generality by designing a scheduling strategy that treats similar tasks and nodes symmetrically. By incorporating a symmetric decision-making mechanism, it further improves the fairness and robustness of scheduling decisions. Compared with traditional methods, this symmetry-aware design contributes to more efficient resource utilization and better load balancing. The overall framework of the algorithm, as illustrated in Figure 2, consists of a deep reinforcement learning module, a mimic defense module, and a mechanism that integrates the two. By leveraging dynamic optimization and randomized strategies, DRLMDS significantly improves the system’s resistance to attacks and its efficiency in resource utilization.

3.1. Deep Reinforcement Learning Module

The deep reinforcement learning (DRL) module serves as the core component of the algorithm, responsible for dynamically adjusting resource allocation strategies based on the current system state [30]. This paper employs Deep Q-Network (DQN) as the implementation framework for DRL (as shown in Figure 3), where the agent interacts with the environment to learn the optimal scheduling policy [13]. The DRL module achieves dynamic behavior through the following mechanisms [5]:

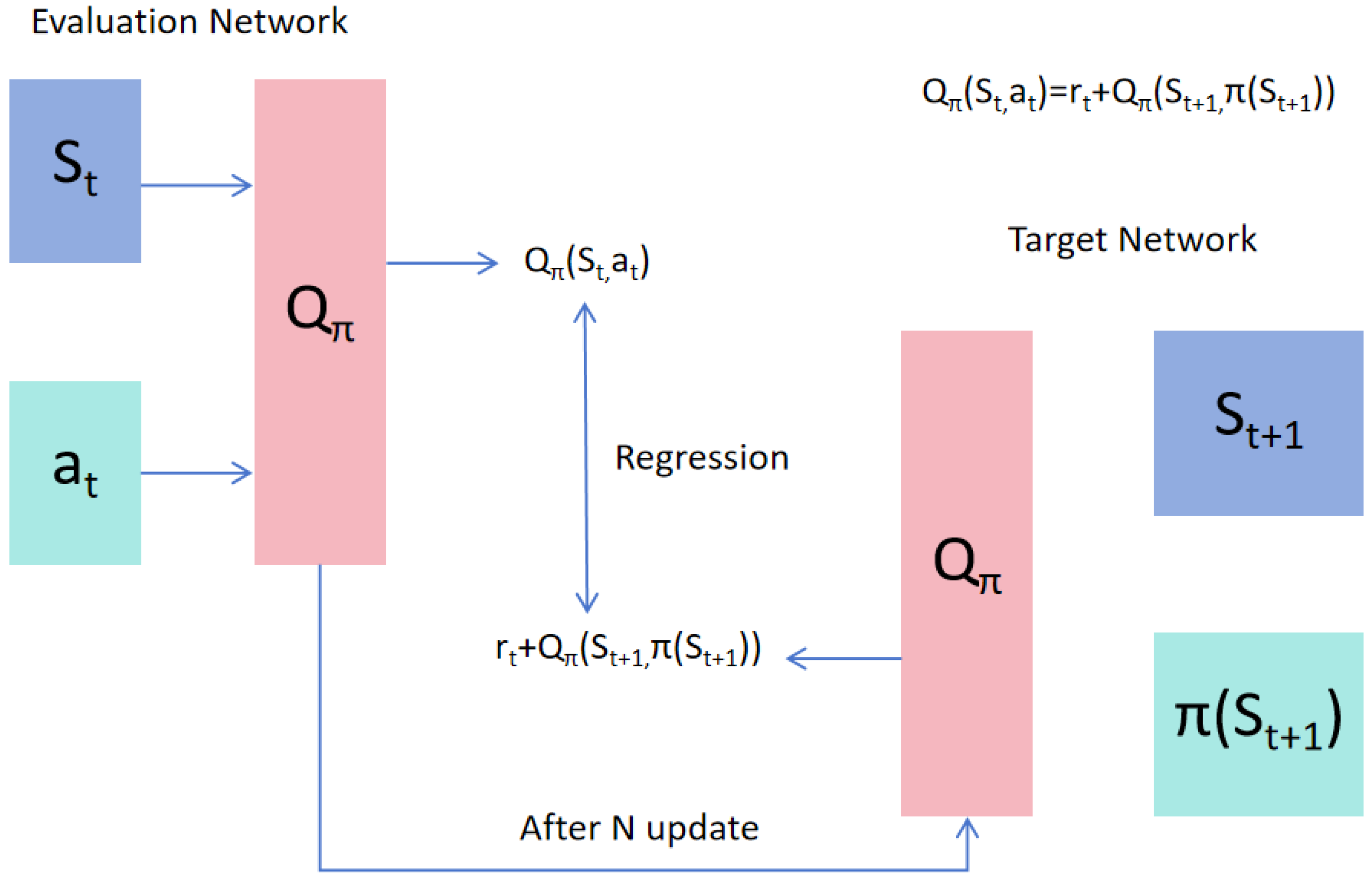

The Deep Q-Network (DQN) algorithm serves as a foundational implementation framework for deep reinforcement learning (DRL), utilizing two neural networks: the evaluation network and the target network. The evaluation network, also called the action network, is the main network in the DQN algorithm. It is responsible for selecting actions by taking the current state as input and outputting the Q-value estimates for each action through forward propagation. The target network is an auxiliary network used to calculate the target Q-values. Its parameters remain unchanged for a fixed period (either a certain number of training steps or a time interval), as shown in Figure 4. This provides relatively stable target Q-values, reducing fluctuations during training and improving the stability and convergence of the algorithm.
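The interplay between the evaluation and target networks described above can be sketched as a periodic hard copy. This is a minimal illustration, not the paper's implementation: parameters are plain Python containers standing in for framework tensors, and `DQNNetworkPair`, `update_fn`, and the period `sync_every` are hypothetical names.

```python
import copy


class DQNNetworkPair:
    """Evaluation network plus a periodically synchronized target network.

    Parameters are plain Python containers standing in for framework tensors;
    sync_every (the fixed period) is a hypothetical hyperparameter.
    """

    def __init__(self, params, sync_every=100):
        self.eval_params = params
        self.target_params = copy.deepcopy(params)  # frozen copy used for target Q-values
        self.sync_every = sync_every
        self.steps = 0

    def train_step(self, update_fn):
        # update_fn stands in for one gradient step on the evaluation network only
        self.eval_params = update_fn(self.eval_params)
        self.steps += 1
        # hard update: the target network changes only once every sync_every steps
        if self.steps % self.sync_every == 0:
            self.target_params = copy.deepcopy(self.eval_params)
```

For example, with `sync_every=3` and an update that adds 1 to a single weight, after four steps the evaluation weight is 4.0 while the target weight is still 3.0 (the value copied at step 3), which is exactly the lag that keeps target Q-values stable.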

The proposed DRLMDS leverages the structural symmetry of mimic defense servers to enhance scheduling fairness and load balancing. Specifically, symmetry is realized through:

Symmetric Resource Distribution: Computing resources, tasks, and arbitration mechanisms are evenly distributed across multiple heterogeneous nodes.

Symmetric Task-Node Mapping: Similar tasks are assigned to nodes with similar capabilities, ensuring that no single node is overloaded or underutilized.

Symmetric Decision-Making: The arbitration mechanism applies uniform criteria to evaluate scheduling strategies, avoiding bias toward any particular node or task type.

This symmetric design reduces scheduling bias, improves resource utilization, and enhances system robustness against targeted attacks.
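The symmetric task-node mapping above can be illustrated with a small sketch. It assumes, purely for illustration, that tasks and nodes are labeled with a discrete capability tier and that "similar capability" means "same tier"; within a tier, each task goes to the currently least-loaded node, so no node in the tier is overloaded while another sits idle. The function `symmetric_assign` and the tier labels are hypothetical.

```python
from collections import defaultdict


def symmetric_assign(tasks, nodes):
    """Map tasks to same-tier nodes, always choosing the least-loaded node.

    tasks: list of (task_id, tier); nodes: list of (node_id, tier).
    Tiers are a hypothetical stand-in for "similar capability".
    Returns a dict task_id -> node_id.
    """
    by_tier = defaultdict(list)
    for node_id, tier in nodes:
        by_tier[tier].append(node_id)
    load = {node_id: 0 for node_id, _ in nodes}
    assignment = {}
    for task_id, tier in tasks:
        candidates = by_tier.get(tier)
        if not candidates:
            raise ValueError(f"no node with capability tier {tier!r}")
        # min() returns the first least-loaded node, so ties break by node order
        target = min(candidates, key=lambda n: load[n])
        assignment[task_id] = target
        load[target] += 1
    return assignment
```

Treating every node in a tier identically is what makes the mapping symmetric: swapping two same-tier nodes would only relabel the result, never change the load distribution.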

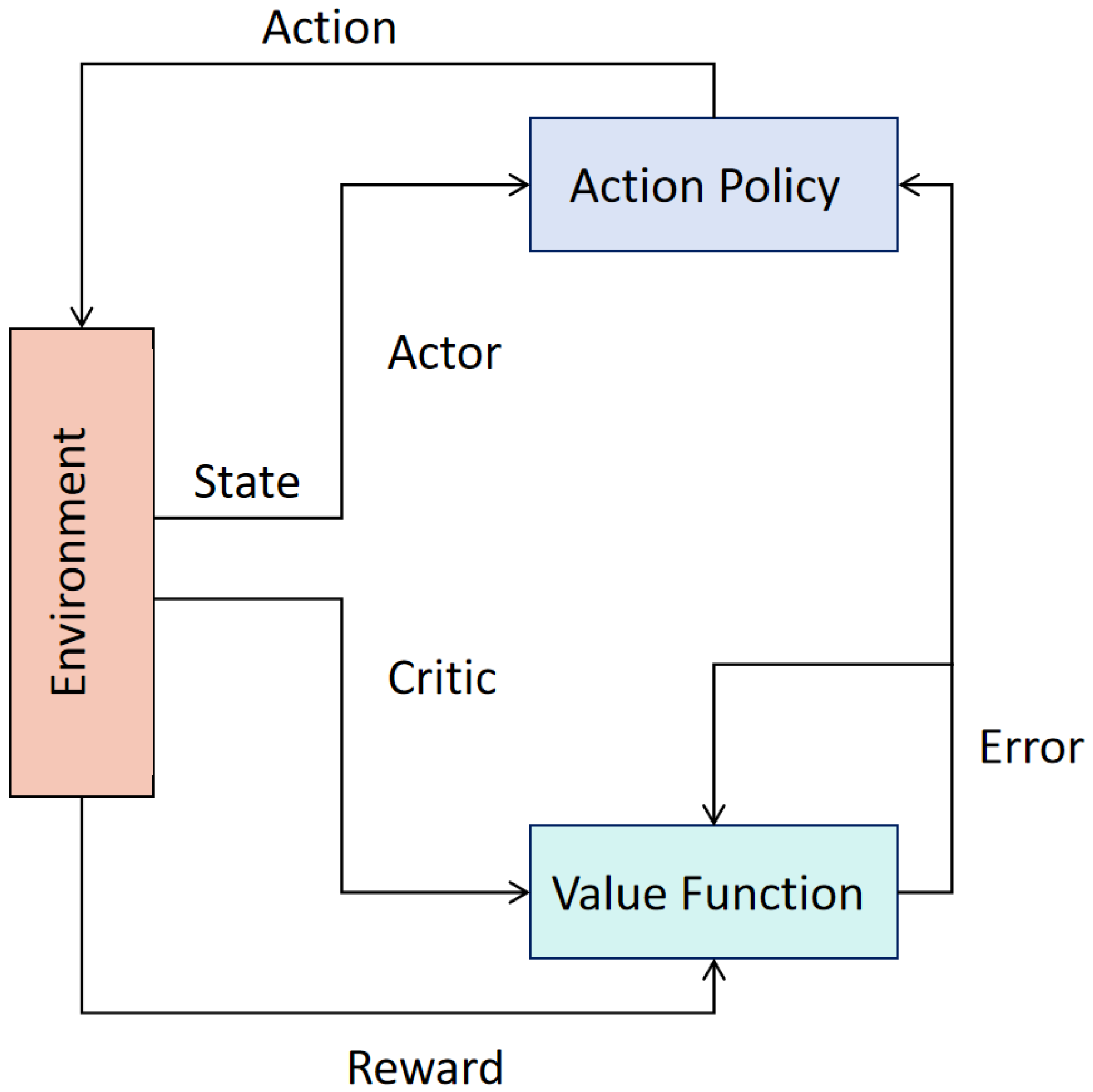

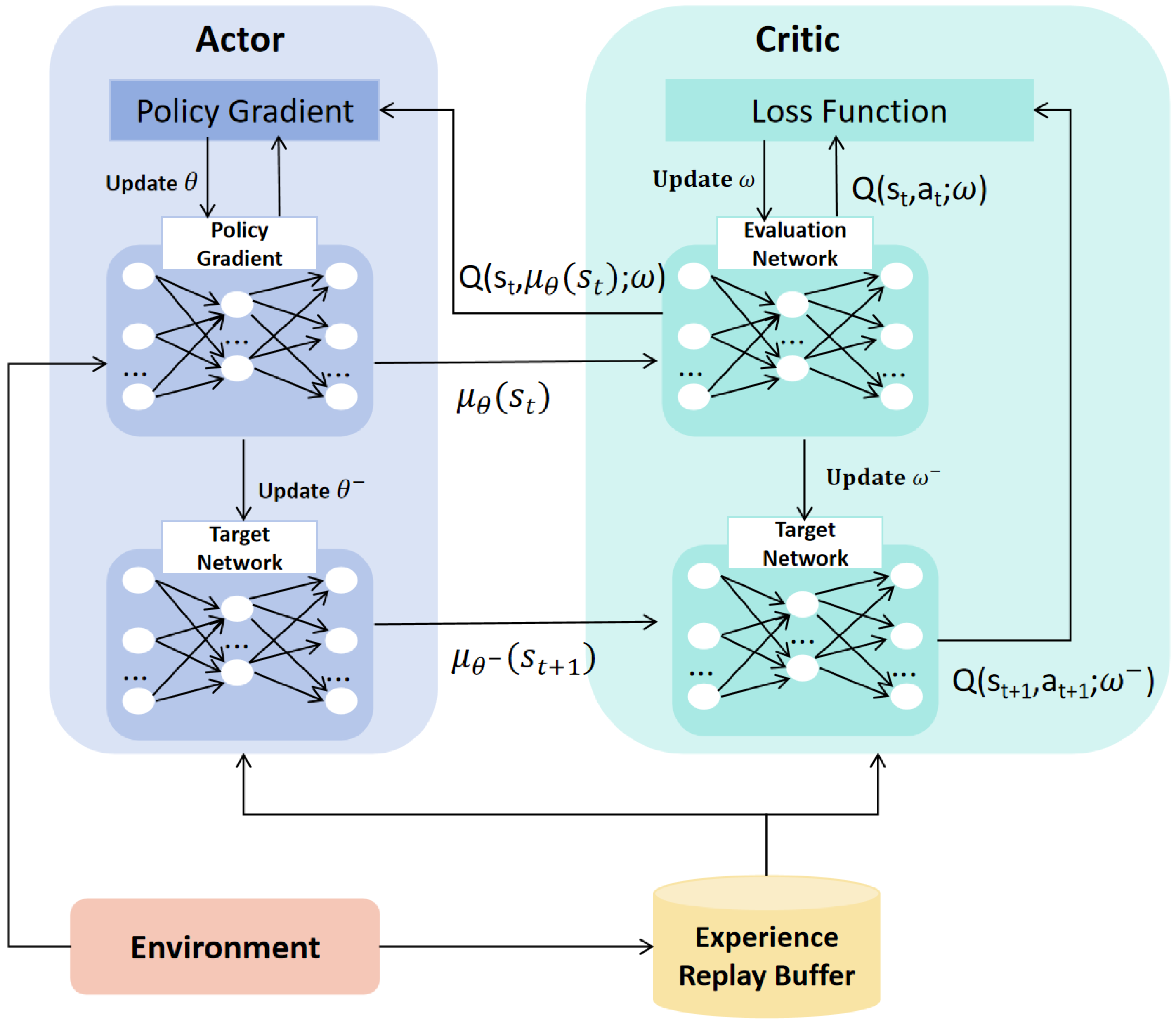

Building on the DRL framework established by DQN, the Deep Deterministic Policy Gradient (DDPG) algorithm introduces an actor-critic architecture, as shown in Figure 5, to address limitations in handling continuous action spaces and to enhance performance and efficiency. It combines the policy network (Actor) and the value function network (Critic): the Actor improves the policy by maximizing the Critic’s evaluation of the policy, while the Critic updates its parameters by comparing the actual rewards with the value function estimates. The Critic provides feedback on the policy to guide the Actor’s updates, and the Actor generates action strategies (especially in continuous spaces) to produce new experiences for the Critic to learn from.

In addition, DDPG retains key components from the DQN framework, including experience replay and target networks, as shown in the overall algorithm framework in Figure 6. Similar to DQN, DDPG’s target networks keep their parameters fixed for a certain period, which slows down changes in the target values and improves training stability. Experience replay, the other inherited component, breaks the correlation between consecutive samples, reducing the impact of correlated data on training. By randomly sampling data from the experience buffer, the algorithm better exploits data diversity and coverage, avoiding overfitting to specific patterns and further enhancing stability and convergence.
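The experience replay mechanism shared by DQN and DDPG can be sketched as a bounded buffer with uniform random sampling. This is a generic illustration; `ReplayBuffer`, `Transition`, and the capacity value are hypothetical and not taken from the paper.

```python
import random
from collections import deque, namedtuple

# One (s, a, r, s') transition; the field names are illustrative
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class ReplayBuffer:
    def __init__(self, capacity):
        # deque with maxlen evicts the oldest transition once full
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self.buffer.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size, rng=random):
        # uniform sampling without replacement breaks temporal correlation
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)
```

Because each mini-batch mixes transitions collected at different times, consecutive updates are no longer driven by highly correlated samples.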

3.1.1. State Space

The state space describes the state of the environment. In this paper, task delay and system energy consumption after the execution of an action are used as the state representation. The state consists of four components: the total transmission delay of the current scheduling scheme; the computation delay of the edge servers processing the tasks; the total transmission energy consumption of data transferred from edge devices to servers in the edge system; and the total computation energy consumption generated by all edge servers during task processing.
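The four-component state above can be represented as a small record that is flattened into the network's input vector. This is a sketch under assumptions: the field names are hypothetical, and aggregating per-task values by summation (in `from_tasks`) is an illustrative choice, since the original state equation is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class SchedulingState:
    trans_delay: float   # total transmission delay of the current scheme
    comp_delay: float    # computation delay of the edge servers
    trans_energy: float  # transmission energy, edge devices -> servers
    comp_energy: float   # computation energy of all edge servers

    @classmethod
    def from_tasks(cls, per_task):
        """Sum non-empty per-task tuples (t_trans, t_comp, e_trans, e_comp).

        Summation is an assumed aggregation rule, used here for illustration.
        """
        totals = [sum(column) for column in zip(*per_task)]
        return cls(*totals)

    def as_vector(self):
        # Order must match the input layer of the Q-network
        return [self.trans_delay, self.comp_delay, self.trans_energy, self.comp_energy]
```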

3.1.2. Action Space

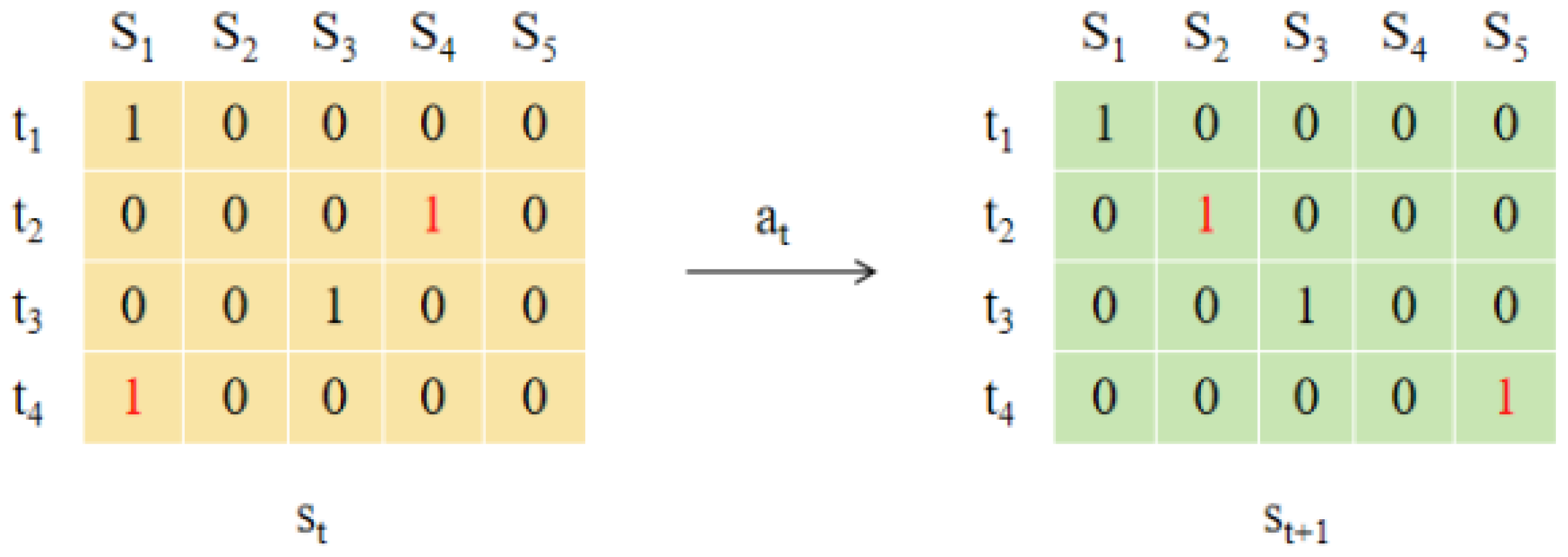

Each action in the action space represents a specific resource allocation scheme: selecting an action corresponds to choosing the edge server(s) to which tasks are transmitted for processing. For example, given a task set and a set of server resources at time t, the resource allocation matrix is shown in Figure 7, where an element with value 1 indicates that the corresponding task is assigned to the corresponding server resource.

In the example of Figure 7, at time t each of four tasks is allocated to a server resource, and this allocation defines the current system state. The selected action, which determines the new allocation scheme, keeps the allocation of two of the tasks unchanged and reallocates the other two tasks to different server resources, leading to the next state.
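The allocation matrix and the state transition in the example above can be sketched with two helpers. The encoding is an assumption for illustration: `assignment[i]` holds the server index of task i, an action is a hypothetical `moves` dict of reassignments, and the server indices in the usage below are made up rather than read from Figure 7.

```python
def to_matrix(assignment, n_servers):
    """One-hot allocation matrix (tasks x servers): entry 1 marks task -> server."""
    return [[1 if j == server else 0 for j in range(n_servers)] for server in assignment]


def apply_action(assignment, moves):
    """Return the next allocation; moves maps task index -> new server index.

    Tasks not mentioned in moves keep their current server.
    """
    new_assignment = list(assignment)  # leave the current state untouched
    for task, server in moves.items():
        new_assignment[task] = server
    return new_assignment
```

For instance, `apply_action([0, 1, 2, 3], {2: 3, 3: 0})` keeps tasks 0 and 1 in place and yields `[0, 1, 3, 0]`, mirroring the "two tasks kept, two reallocated" transition; every row of the resulting matrix contains exactly one 1.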

For action selection, the algorithm uses the ε-greedy policy together with the learned Q-values. Most of the time, the action with the highest estimated value (Q-value) is selected so that the algorithm approaches the optimal solution; with a small probability, a random action is chosen instead to broaden exploration of the state and action spaces and avoid falling into local optima. Meanwhile, each state transition tuple is stored in the experience replay buffer, and randomly sampled mini-batches from this buffer are used to train the DQN network, which reduces the correlation between samples.
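A minimal ε-greedy selector is shown below. It follows the common convention, assumed here, that ε is the exploration probability; `epsilon_greedy` is a hypothetical helper operating on a list of Q-values such as the DQN's output for the current state.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Explore with probability epsilon; otherwise exploit the argmax of Q."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))  # explore: uniformly random action
    # exploit: index of the largest Q-value (first one on ties)
    return max(range(len(q_values)), key=q_values.__getitem__)
```

With `epsilon=0.0` this always returns the greedy action (index 1 for `[0.1, 0.9, 0.3]`); in practice ε is often decayed over training so that exploration dominates early and exploitation later.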

3.1.3. Reward Function

The objective of this study is to minimize task delay and system energy consumption, so the reward function is designed around these two quantities. Because the reward should increase as the objective decreases, the reward r is negatively correlated with both, as shown in Equation (2), which is expressed in terms of the total delay of all tasks in the edge system and the total energy consumption in the edge environment.
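Because Equation (2) is not reproduced in this text, the following is only one plausible form consistent with the description: a negative weighted sum of total delay and total energy. The weights `w_delay` and `w_energy` are hypothetical; in practice the two terms may also need normalization, since delay and energy have different units.

```python
def reward(total_delay, total_energy, w_delay=0.5, w_energy=0.5):
    """Hypothetical reward: larger when delay and energy are smaller."""
    return -(w_delay * total_delay + w_energy * total_energy)
```

For example, `reward(2.0, 4.0)` evaluates to -3.0, and lowering either the delay or the energy raises the reward, which is the negative correlation the text requires.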

During training, the DQN network parameters are optimized by minimizing the difference between the target Q-value, which is computed with the target network, and the Q-value estimated by the evaluation network. Mean Squared Error (MSE) is adopted as the loss function for the DQN neural network, as shown in Equation (4).
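The target-and-loss computation can be sketched using the standard DQN formulation, which we assume the paper's target equation and Equation (4) follow: the target is y = r + γ·max over a′ of Q_target(s′, a′), with no bootstrapping on terminal transitions, and the loss is the mean squared difference between y and the evaluation network's Q-value for the action taken. The discount `gamma=0.9` and the `dones` flag are assumptions.

```python
def dqn_targets(rewards, next_q_target, dones, gamma=0.9):
    """y_i = r_i + gamma * max_a' Q_target(s'_i, a'); terminal steps use r_i only."""
    return [r + (0.0 if done else gamma * max(q_next))
            for r, q_next, done in zip(rewards, next_q_target, dones)]


def mse_loss(q_eval_taken, targets):
    """Mean squared error between evaluation-network Q(s, a) and the targets."""
    return sum((y - q) ** 2 for q, y in zip(q_eval_taken, targets)) / len(targets)
```

With rewards `[1.0, -1.0]`, next-state target Q-values `[[0.0, 2.0], [5.0, 1.0]]`, `dones=[False, True]`, and `gamma=0.5`, the targets are `[2.0, -1.0]`; against evaluation estimates `[1.0, -1.0]` the loss is 0.5.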

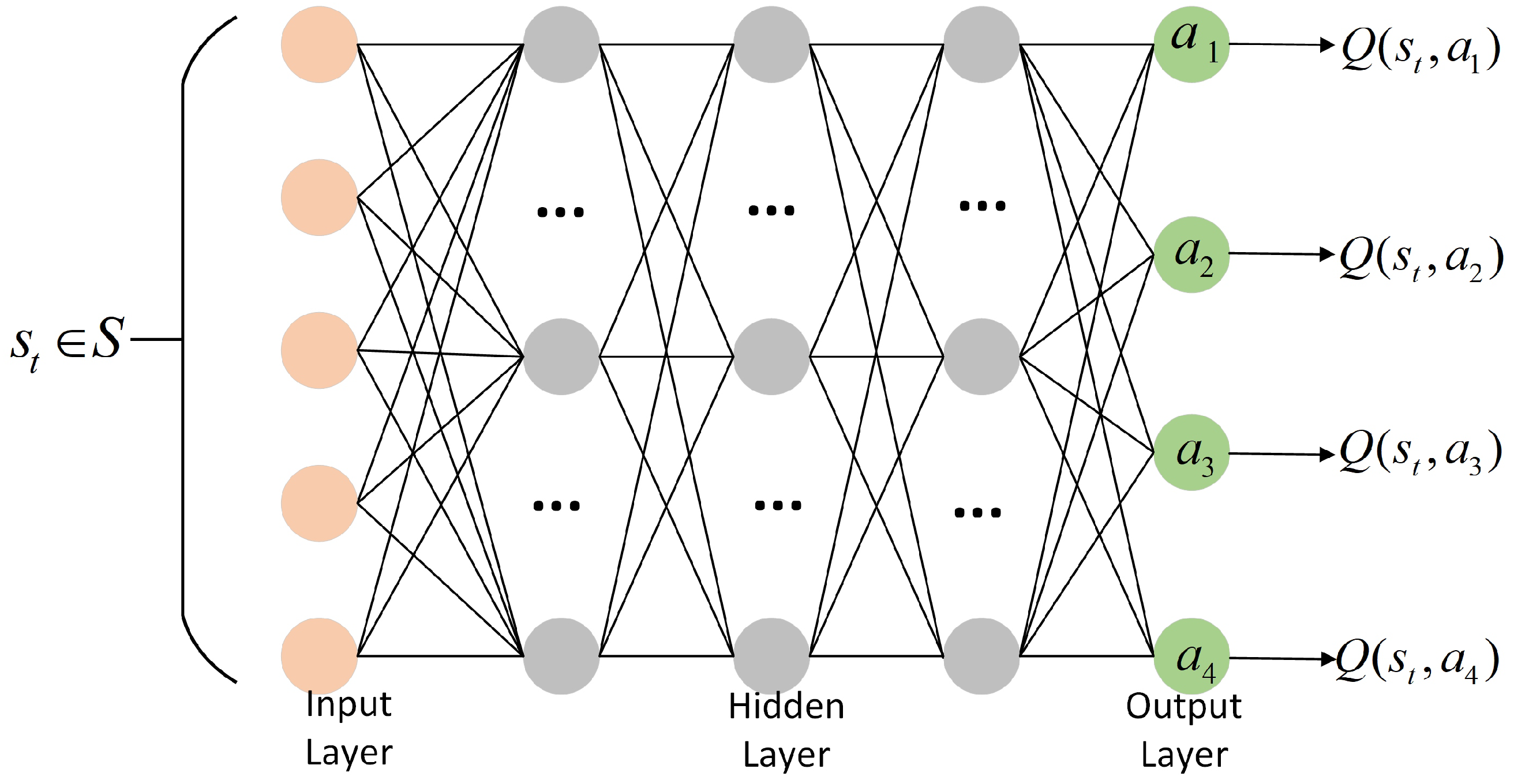

3.1.4. Network Architecture

The network component of the DQN algorithm consists of an evaluation network and a target network. Both networks share the same architecture, a Deep Neural Network (DNN), whose structure is shown in Figure 8. It contains five layers: one input layer, three hidden layers, and one output layer. The input layer receives the state features, each hidden layer contains 32 nodes to learn features from the data, and the output layer produces the Q-values of the different actions. ReLU is used as the activation function.

Figure 9 illustrates the architecture of the DQN module, where the agent continuously optimizes the scheduling policy through interactions with the environment.
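The five-layer Q-network can be sketched as a forward pass in plain Python, so the layer sizes are explicit. Assumptions: the input dimension is 4 (the four state components of Section 3.1.1), the number of actions defaults to 20 (a hypothetical value, chosen because the experiments use 20 edge servers), weights are small random values, and the output layer is linear, as is usual for Q-values. A framework such as TensorFlow would be used in practice.

```python
import random


def relu(x):
    return [max(0.0, v) for v in x]


def init_layer(n_in, n_out, rng):
    # n_out rows of n_in small random weights; biases start at zero
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer):
    weights, biases = layer
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def build_qnet(n_state=4, n_actions=20, hidden=32, n_hidden=3, seed=0):
    """Input -> 3 hidden layers of 32 units -> one Q-value per action."""
    rng = random.Random(seed)
    sizes = [n_state] + [hidden] * n_hidden + [n_actions]
    return [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]


def q_values(net, state):
    x = state
    for layer in net[:-1]:
        x = relu(dense(x, layer))  # hidden layers use ReLU
    return dense(x, net[-1])       # output layer kept linear (assumption)
```

Five layers of units correspond to four weight matrices, which is why `build_qnet` returns four `(weights, biases)` pairs.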

3.2. Scheduling Optimization Based on Improved Particle Swarm Algorithm

Resource scheduling problems typically require consideration of multiple constraints and objective functions. The global search capability of the particle swarm algorithm allows it to find optimal or near-optimal solutions across the entire solution space [11]. This section improves the particle swarm algorithm by introducing hierarchical learning [19] and t-distribution mutation [20]. Particles are classified into layers according to their fitness values. During the particle update process, lower-layer particles learn from higher-layer particles, thereby enhancing the overall quality of the swarm. To maintain particle diversity, mutations based on the t-distribution are applied to particles to expand the search space. Specifically, the improved particle swarm algorithm achieves heterogeneity and redundancy through the following methods:

Heterogeneity: The initial particle swarm is constructed based on initial scheduling schemes generated by the DQN algorithm, with each particle representing a resource scheduling scheme. These particles embody different scheduling strategies, reflecting the system’s heterogeneity.

Redundancy: By generating multiple particles, the system contains several redundant scheduling schemes that can perform the same or similar functions. When one scheduling scheme is attacked or fails, other schemes can take over the tasks, ensuring system stability and continuity.

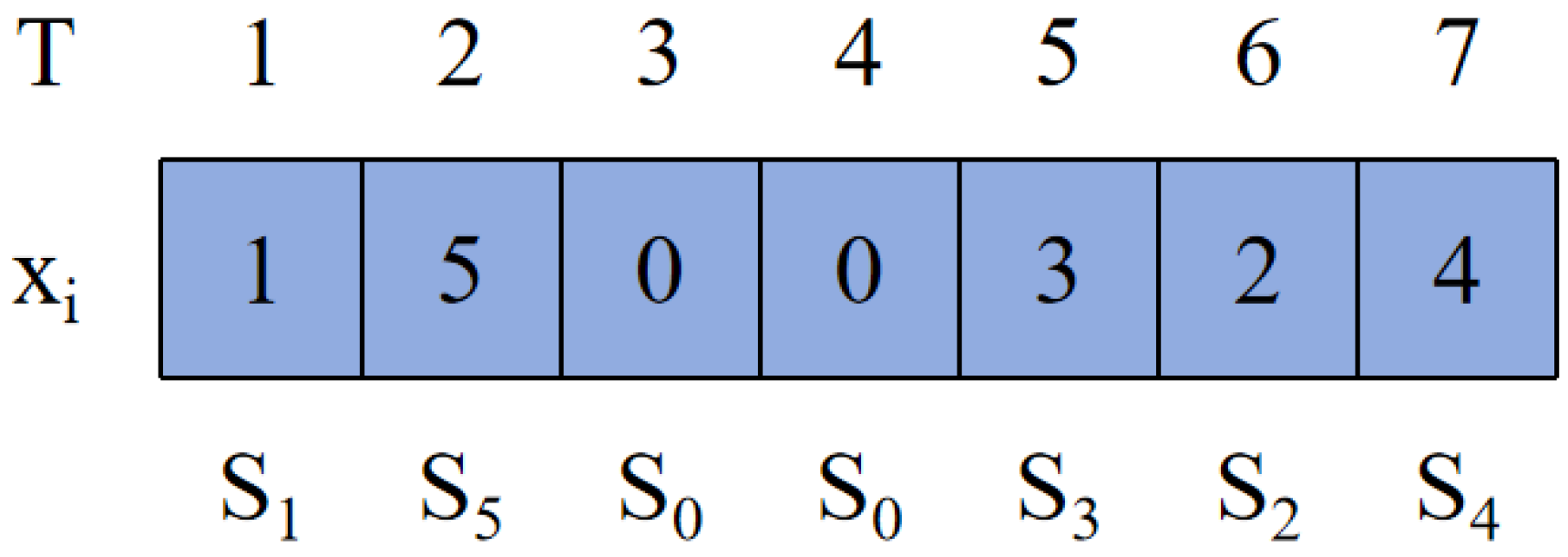

In this section, the DQN algorithm is used to generate the initial solutions for the particle swarm, improving the quality of the initial values. The top K scheduling schemes are selected from the experience replay pool of the DQN algorithm as the particles of the particle swarm algorithm, with each particle representing a resource scheduling scheme.

The dimension of each particle equals the number of tasks in the edge environment, with each dimension corresponding to one task; the value in each dimension is the ID of the server resource allocated to that task. Figure 10 gives an example in which one resource is assigned to several tasks and each of three other resources is assigned to a single task.
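Seeding the swarm from the DQN replay pool, with the particle encoding described above, might look like the sketch below. Two points are assumptions: the pool is taken to hold `(assignment, reward)` pairs, where `assignment[i]` is the server ID of task i, and "top K" is interpreted as the K distinct schemes with the highest observed reward. `init_swarm_from_pool` is a hypothetical name.

```python
import heapq


def init_swarm_from_pool(pool, k):
    """Return the K best distinct schemes as particles (one dimension per task).

    pool: iterable of (assignment, reward); each assignment maps task index -> server ID.
    Ranking by reward is an assumed reading of "top K".
    """
    best_reward = {}
    for assignment, r in pool:
        key = tuple(assignment)  # identical schemes are kept only once
        if key not in best_reward or r > best_reward[key]:
            best_reward[key] = r
    top = heapq.nlargest(k, best_reward.items(), key=lambda kv: kv[1])
    return [list(key) for key, _ in top]
```

Deduplicating before ranking keeps the initial particles genuinely different, which matters for the heterogeneity argument made above.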

In the particle swarm optimization algorithm, the fitness value of each particle reflects its quality in the solution space. The fitness value is usually designed based on the objective function, which in this section is to minimize system energy consumption and task latency; therefore, a smaller fitness value indicates that the particle is closer to the optimal solution. The fitness calculation also accounts for constraints: scheduling schemes whose latency exceeds the maximum allowed latency or whose energy consumption exceeds the preset maximum energy are assigned penalty values. The fitness value thus consists of three parts, namely task latency, system energy consumption, and the penalty value, as shown in Equations (5)–(7).
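A three-part fitness of the kind described above is sketched here. Equations (5)–(7) are not reproduced in this text, so the weights `w_lat` and `w_en` and the fixed penalty constant are hypothetical; a proportional penalty (growing with the size of the violation) would be an equally valid design.

```python
def fitness(latency, energy, max_latency, max_energy,
            w_lat=0.5, w_en=0.5, penalty=1e3):
    """Smaller is better: weighted latency + energy, plus penalties for violations.

    Weights and the penalty constant are illustrative assumptions.
    """
    value = w_lat * latency + w_en * energy
    if latency > max_latency:   # latency constraint violated
        value += penalty
    if energy > max_energy:     # energy budget violated
        value += penalty
    return value
```

A feasible scheme with latency 2.0 and energy 4.0 scores 3.0, while the same energy with latency 12.0 (above a limit of 10) scores 1008.0, so infeasible schemes sink to the bottom of the ranking.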

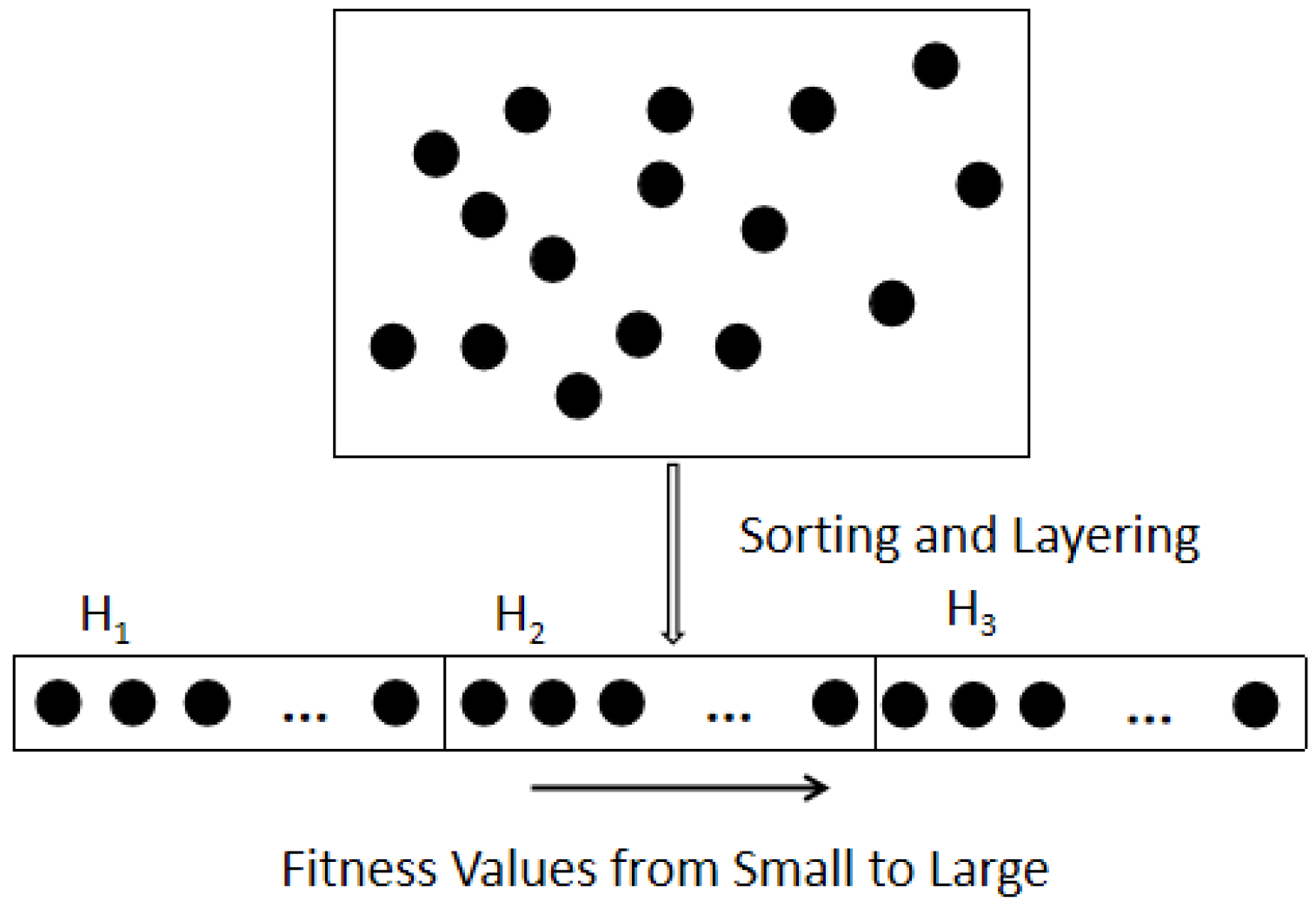

Each particle determines its personal best solution based on its own fitness value, which in turn guides the velocity and direction of its position update. At the same time, particles with better fitness values attract other particles toward them, so that information exchange among particles moves the entire swarm toward the optimal solution. To help particles move toward the optimal solution, this section sorts and layers the particles according to their fitness values, as shown in Figure 11.

The particles in the swarm are sorted in ascending order of fitness value, renumbered after sorting, and divided into three layers. The first layer contains the top-ranked particles of the sorted swarm, the third layer contains the bottom-ranked particles, and the remaining particles form the second layer.

After each iteration of the algorithm, the particles are re-sorted and re-layered according to their fitness values, with the layer sizes given by Equations (8) and (9), where N is the total number of particles in the swarm, t is the current iteration number, and T is the maximum number of iterations set by the algorithm.
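The sort-and-layer step can be sketched as follows. Because Equations (8) and (9) are not reproduced here, the sketch takes the first- and third-layer sizes `n_first` and `n_third` as inputs instead of computing them from N, t, and T; `layer_swarm` is a hypothetical helper.

```python
def layer_swarm(particles, fitnesses, n_first, n_third):
    """Sort ascending by fitness (smaller is better) and split into three layers.

    n_first and n_third would come from Equations (8) and (9); here they are inputs.
    """
    if n_first + n_third > len(particles):
        raise ValueError("layer sizes exceed swarm size")
    order = sorted(range(len(particles)), key=lambda i: fitnesses[i])
    ranked = [particles[i] for i in order]
    first = ranked[:n_first]                              # best particles
    second = ranked[n_first:len(ranked) - n_third]        # middle of the ranking
    third = ranked[len(ranked) - n_third:]                # worst particles
    return first, second, third
```

Calling it once per iteration with freshly computed fitness values reproduces the re-layering described above.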

The particles in the first layer are closer to the global optimal solution. These particles are updated using the traditional method, in which the velocity and position are updated based on the particle’s personal best and the global best solutions, as given by Equation (10).
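Assuming Equation (10) takes the standard inertia-weight PSO form, v ← w·v + c1·r1·(pbest − x) + c2·r2·(gbest − x) followed by x ← x + v, a first-layer update might look like this. Rounding and clamping the new position to a valid server ID is an assumption needed for the discrete task-to-server encoding, and the values of `w`, `c1`, and `c2` are placeholders rather than the settings in Table 1.

```python
import random


def pso_update(x, v, pbest, gbest, n_servers, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Standard inertia-weight PSO step on a discrete server-ID vector."""
    new_x, new_v = [], []
    for xi, vi, pi, gi in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        vi = w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (gi - xi)
        xi = int(round(xi + vi))
        xi = min(max(xi, 0), n_servers - 1)  # clamp to a valid server ID
        new_x.append(xi)
        new_v.append(vi)
    return new_x, new_v
```

A particle that already sits at both its personal best and the global best with zero velocity stays where it is, which is a quick sanity check of the update.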

The particles in the second layer update their positions and velocities by learning from the particles in the first layer. Two particles are randomly selected from the first layer as references for the update, and the update rules are given by Equation (11).
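Equation (11) is not reproduced in this text, so the following is only a hypothetical second-layer rule in the spirit of level-based learning swarm optimizers: the particle is pulled mainly toward the better of the two randomly chosen first-layer exemplars and, with weight `phi`, toward the other one. The rule, the name `learn_from_upper`, and `phi=0.4` are all assumptions.

```python
import random


def learn_from_upper(x, v, e1, e2, fit_e1, fit_e2, n_servers, phi=0.4, rng=random):
    """Hypothetical layer-2 update using two first-layer exemplars e1 and e2."""
    # Order the exemplars so that e_better has the smaller (better) fitness
    e_better, e_other = (e1, e2) if fit_e1 <= fit_e2 else (e2, e1)
    new_x, new_v = [], []
    for xi, vi, bi, oi in zip(x, v, e_better, e_other):
        r1, r2, r3 = rng.random(), rng.random(), rng.random()
        vi = r1 * vi + r2 * (bi - xi) + phi * r3 * (oi - xi)
        xi = min(max(int(round(xi + vi)), 0), n_servers - 1)  # valid server ID
        new_x.append(xi)
        new_v.append(vi)
    return new_x, new_v
```

In use, the two exemplars would be drawn with something like `rng.sample(first_layer, 2)`, so that different second-layer particles learn from different leaders and swarm diversity is preserved.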

For the particles in the third layer, which have relatively high (that is, poor) fitness values, a t-distribution mutation is applied to increase the randomness and diversity of the search. The t-distribution, also known as Student’s t-distribution and denoted by t(n), has the probability density function given by Equation (12), where n is the degrees of freedom:

$$f(x)=\frac{\Gamma\left(\frac{n+1}{2}\right)}{\sqrt{n\pi}\,\Gamma\left(\frac{n}{2}\right)}\left(1+\frac{x^{2}}{n}\right)^{-\frac{n+1}{2}},\qquad -\infty<x<\infty \tag{12}$$

When n = 1, the t-distribution reduces to the Cauchy distribution, and as n → ∞, it approaches the Gaussian distribution. Therefore, the t-distribution has characteristics of both the Cauchy and Gaussian distributions.
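A third-layer mutation can be sketched by sampling t(n) as Z / √(V/n), where Z is standard normal and V is chi-square with n degrees of freedom. Python's `random` module has no t sampler, so V is drawn as `gammavariate(n/2, 2)`. The additive, scaled form x′ = x + scale·t(n) with rounding and clamping is an assumption for the discrete server-ID encoding, and `scale` is a hypothetical parameter. Only positions change; velocities are left as they are, as described in the text.

```python
import math
import random


def student_t(n, rng=random):
    """Sample t(n) as Z / sqrt(V / n), Z ~ N(0, 1), V ~ chi-square(n)."""
    z = rng.gauss(0.0, 1.0)
    v = rng.gammavariate(n / 2.0, 2.0)  # chi-square(n) == Gamma(shape=n/2, scale=2)
    v = max(v, 1e-12)                   # guard against a vanishingly rare zero draw
    return z / math.sqrt(v / n)


def t_mutate(x, n, n_servers, scale=2.0, rng=random):
    """Hypothetical discrete t-mutation: x' = x + scale * t(n), rounded and clamped."""
    mutated = []
    for xi in x:
        step = scale * student_t(n, rng)
        mutated.append(min(max(int(round(xi + step)), 0), n_servers - 1))
    return mutated
```

If n is tied to the iteration count (an assumption), early iterations use heavy-tailed, Cauchy-like jumps for exploration and later iterations use Gaussian-like steps for local refinement.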

The overall algorithm flow is shown in Figure 12. The initial particle swarm is constructed based on the initial scheduling schemes generated by the DQN algorithm. Particles are sorted and layered according to their fitness values. For particles in the first layer, their velocity and position are updated based on their individual best solutions and the global best solution of the current layer’s swarm. For particles in the second layer, two particles are randomly selected from the first layer, and their velocity and position are updated according to specific formulas. For particles in the third layer, mutations are applied using the t-distribution to change their positions without altering their velocities. Before the iteration count reaches the maximum number set by the algorithm, after each iteration and update of the particle swarm, the fitness of each particle is recalculated, and particles are re-layered accordingly, while the global best solution is recorded.
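The overall flow can be assembled into one compact loop. This is a self-contained sketch, not the paper's implementation: fixed layer fractions stand in for Equations (8) and (9), the layer-2 rule is a hypothetical stand-in for Equation (11), the t-distribution degrees of freedom are set to the iteration count, and all hyperparameter values are placeholders.

```python
import math
import random


def improved_pso(init_particles, fitness, n_servers, iters=50, first_frac=0.2,
                 third_frac=0.2, w=0.7, c1=1.5, c2=1.5, phi=0.4, scale=2.0, seed=0):
    """Layered PSO sketch: sort, layer, update each layer, re-evaluate, repeat."""
    rng = random.Random(seed)

    def clamp(value):
        return min(max(int(round(value)), 0), n_servers - 1)

    X = [list(p) for p in init_particles]
    V = [[0.0] * len(p) for p in X]
    pbest, pfit = [list(p) for p in X], [fitness(p) for p in X]
    g = min(range(len(X)), key=pfit.__getitem__)
    gbest, gfit = list(pbest[g]), pfit[g]
    size = len(X)
    for it in range(1, iters + 1):
        fit = [fitness(p) for p in X]
        order = sorted(range(size), key=fit.__getitem__)  # ascending: best first
        n1, n3 = max(2, int(first_frac * size)), int(third_frac * size)
        first, second, third = order[:n1], order[n1:size - n3], order[size - n3:]
        snapshot = [list(p) for p in X]  # exemplars are read from pre-update positions
        for i in first:  # layer 1: standard inertia-weight PSO step
            for d in range(len(X[i])):
                V[i][d] = (w * V[i][d] + c1 * rng.random() * (pbest[i][d] - X[i][d])
                           + c2 * rng.random() * (gbest[d] - X[i][d]))
                X[i][d] = clamp(X[i][d] + V[i][d])
        for i in second:  # layer 2: learn from two random layer-1 exemplars
            a, b = sorted(rng.sample(first, 2), key=fit.__getitem__)  # a is better
            for d in range(len(X[i])):
                V[i][d] = (rng.random() * V[i][d] + rng.random() * (snapshot[a][d] - X[i][d])
                           + phi * rng.random() * (snapshot[b][d] - X[i][d]))
                X[i][d] = clamp(X[i][d] + V[i][d])
        for i in third:  # layer 3: t-distribution mutation, velocity left unchanged
            for d in range(len(X[i])):
                chi2 = max(rng.gammavariate(it / 2.0, 2.0), 1e-12)
                X[i][d] = clamp(X[i][d] + scale * rng.gauss(0.0, 1.0) / math.sqrt(chi2 / it))
        for i in range(size):  # re-evaluate and record personal and global bests
            f = fitness(X[i])
            if f < pfit[i]:
                pbest[i], pfit[i] = list(X[i]), f
                if f < gfit:
                    gbest, gfit = list(X[i]), f
    return gbest, gfit
```

The returned fitness can never be worse than the best initial particle, because the global best is only replaced by strictly better solutions; this is what makes DQN-seeded initialization useful as a quality floor.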

3.3. Mimetic Defense Module

DRLMDS realizes mimetic defense through the following four characteristics:

Dynamicity: The module implements a dynamic resource scheduling mechanism via the Deep Reinforcement Learning (DRL) module. This module adjusts resource allocation strategies in real-time based on the current system state, including task queue length, task latency, and server energy consumption. The DRL module employs adaptive learning rate adjustment and exploration strategies to cope with different types of attack scenarios. It continuously learns and updates strategies during runtime to dynamically resist external attacks. This dynamic adaptability ensures efficient system operation under complex and changing attack environments.

Heterogeneity: The system deploys heterogeneous servers running different OS versions or possessing diverse hardware architectures to build the mimetic environment. At the start of scheduling, the system generates initial scheduling strategies using a Deep Q-Network (DQN) and maps them to multiple particles in the particle swarm, with each particle representing a resource scheduling scheme. Differences in the structural design of these particles naturally reflect system heterogeneity. This heterogeneity not only improves resource utilization efficiency but also significantly increases the uncertainty of attack paths for adversaries, thereby enhancing system security.

Redundancy: The system ensures redundancy by deploying multiple servers with similar functions but different configurations. During scheduling, the system maintains multiple candidate scheduling strategies as backup paths. When a server is attacked or a task fails, the system can promptly switch to other redundant servers to execute the corresponding tasks. Through the pool of multiple scheduling schemes maintained by particle swarm optimization (PSO), the system can quickly call alternative solutions when the original plan fails, guaranteeing service continuity and fault tolerance.

Decision-making Process: The mimetic defense module introduces a decision-making mechanism to select the optimal strategy among multiple scheduling schemes. This decision process can evaluate candidate schedules based on predefined rules or trained machine learning models (e.g., neural network evaluators), considering factors such as task completion rate, security, energy consumption, and system load comprehensively. The decision-making mechanism ensures that the final selected scheduling strategy effectively enhances resource utilization while possessing strong anti-attack capabilities.
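The rule-based variant of the decision (arbitration) mechanism can be sketched as a weighted score over the factors listed above. The metric names, the assumption that metrics are normalized to comparable scales, and the signed weights (positive for completion rate and security, negative for energy and load, which should be low) are all hypothetical.

```python
def arbitrate(candidates, weights):
    """Pick the candidate scheme with the highest weighted score.

    candidates: dict name -> dict of normalized metrics.
    weights: dict metric -> signed weight (negative for lower-is-better metrics).
    """
    def score(metrics):
        return sum(w * metrics[k] for k, w in weights.items())

    return max(candidates, key=lambda name: score(candidates[name]))
```

For example, with weights `{"completion": 1.0, "security": 1.0, "energy": -0.5, "load": -0.5}`, a scheme with slightly higher energy use can still win if its completion rate, security score, and load balance are better. A learned evaluator (for example, a neural network) could replace `score` without changing the selection step.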

The mimetic defense module significantly enhances system unpredictability by introducing diversity and randomness into scheduling strategies. Specifically, on top of the actions output by the DRL module, random perturbations are added, causing variations in task allocation paths and schemes, which increase the difficulty for attackers to predict system behavior. For example, each task can generate multiple possible execution paths during allocation. By introducing perturbation functions or random selection strategies, the diversity of these paths is increased, forming diversified scheduling behaviors.
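The random perturbation of DRL actions described above might be implemented as follows. Assumptions: each task has a hypothetical list of eligible servers (for example, heterogeneous nodes able to run it), and with probability `p` per task the DRL-chosen server is replaced by a random eligible alternative. Tasks with no alternative are never moved.

```python
import random


def perturb(assignment, eligible, p=0.1, rng=random):
    """Randomly re-route each task with probability p to another eligible server.

    assignment[i]: server chosen by the DRL module for task i.
    eligible[i]: hypothetical list of servers able to run task i.
    """
    out = list(assignment)
    for task, server in enumerate(assignment):
        alternatives = [s for s in eligible[task] if s != server]
        if alternatives and rng.random() < p:
            out[task] = rng.choice(alternatives)  # diversify the execution path
    return out
```

Because the perturbation draws only from eligible servers, it trades a small, bounded loss in optimality for behavior that an observer cannot reproduce from the DRL policy alone.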

Moreover, the system architecture of the mimetic environment is illustrated in Figure 13. This architecture deeply integrates the deep reinforcement learning module with the mimetic scheduling module. During the training phase, it learns resource scheduling strategies through extensive interactive data. In the operational phase, it dynamically collects state data for real-time scheduling and mimetic decision-making, thereby achieving a collaborative optimization of system security and scheduling performance.

Unlike prior approaches that treat scheduling and security as separate concerns, our method provides a holistic integration of mimic defense principles—dynamicity, heterogeneity, and redundancy—directly into the scheduling logic. This resolves the fundamental limitation of existing algorithms, which lack the inherent unpredictability, dynamic adaptation, and structural diversity required to defend against intelligent attacks in edge computing environments, thereby enabling a simultaneous optimization of both performance and security.

The mimetic defense module significantly enhances the system’s resilience against attacks by employing randomization and diversification strategies, making it difficult for attackers to predict the system’s scheduling patterns.

3.4. Deep Reinforcement Learning-Driven Mimetic Defense Mechanism

The core innovation of this work lies in combining deep reinforcement learning (DRL) with mimetic defense to achieve efficient and secure resource scheduling. The DRL module generates initial scheduling strategies, which are further optimized by the mimetic defense module to enhance system robustness. The specific integration mechanism is as follows:

The scheduling strategies produced by the DRL module serve as inputs, which the mimetic defense module optimizes through randomization and diversification. This dynamic optimization mechanism enables the system to adapt in real time to different attack scenarios.

The mimetic defense module increases system unpredictability by introducing diversity and randomness. Servers running different versions of operating systems introduce heterogeneity, further improving system security.

Additionally, the mimetic defense module generates multiple redundant scheduling strategies, ensuring system continuity even if some strategies are attacked or fail.

A decision-making (arbitration) mechanism within the mimetic defense module selects the optimal scheduling strategy. This mechanism evaluates and filters different strategies based on predefined rules or machine learning models, ensuring that the chosen strategy effectively resists attacks while improving resource utilization.

By integrating deep reinforcement learning with mimetic defense, the system not only dynamically optimizes resource allocation but also effectively counters malicious attacks, significantly improving overall system performance and security.

4. Experimental Design and Analysis

To verify the effectiveness of DRLMDS, this paper designs a resource scheduling experiment incorporating a mimic defense mechanism. The mimic defense environment is designed with the core concept of “diversified runtime environments and dynamic scheduling strategies,” aiming to enhance the system’s robustness and resource adaptability when facing various attacks. The experiment design covers the following four aspects:

Dynamic Validation: By simulating typical attack scenarios (DoS attacks and resource inference attacks), the system’s responsiveness and performance in dynamically adjusting resource scheduling strategies are verified. The DoS attack simulation logic involves introducing a continuous high-frequency stream of pseudo-tasks at the logical level, simulating an attacker causing the server resources to be busy for a prolonged period through network requests. The resource inference attack simulation logic assumes that attackers infer backend scheduling rules by analyzing task execution delays and resource allocation trajectories, thereby influencing subsequent task scheduling strategies. Under these attack scenarios, the system can identify abnormal task behaviors in real-time and dynamically adjust scheduling strategies (e.g., switching execution paths), effectively reducing task latency and energy consumption fluctuations.

Heterogeneity Validation: The experimental environment simulates multiple servers running different types of operating systems (such as Windows Server, Ubuntu, etc.) and various hardware specifications to construct a heterogeneous edge environment. The system can flexibly schedule tasks based on different server performances and load conditions, improving resource utilization. Specifically, under the heterogeneous server deployment environment, the system’s resource utilization improved by approximately 25% compared to a homogeneous environment, demonstrating strong heterogeneity adaptability.

Redundancy Validation: By simulating scenarios where some servers become unavailable due to attacks or failures, the stability of the system’s redundancy scheduling strategy is evaluated. Experimental results show that when the primary scheduling scheme fails, backup strategies can quickly take over tasks to ensure uninterrupted system services, improving system stability by about 40%.

Decision Process Validation: By comparing indicators such as energy consumption and task response time across different scheduling schemes, the effectiveness of the system’s decision module in selecting the optimal strategy is verified. The decision mechanism evaluates and filters candidate strategies using predefined rules or machine learning models built from empirical learning outcomes, and ultimately selects the scheduling scheme with the highest task completion rate and lowest energy consumption, so that the chosen strategy both resists attacks and improves resource utilization efficiency. In the experiments, the selected strategies outperform the baseline algorithms across multiple metrics and perform well in task response time, resource utilization, and system robustness.

4.1. Experimental Environment

The experimental environment simulates a typical edge computing scenario consisting of multiple distributed edge nodes and terminal devices. To verify the effectiveness and robustness of DRLMDS, two types of attack scenarios are designed, DoS attacks and resource inference attacks, to evaluate the system's performance under different attack types. The DoS attack issues high-frequency requests to edge servers to tie up their resources; the resource inference attack periodically samples task processing times, builds time-series prediction models, and attempts to identify patterns in scheduling behavior. By introducing dynamic changes to node attributes and simulated attack logic, a typical mimic defense environment is reconstructed at the simulation level, allowing the scheduling performance of DRLMDS to be validated in a "mimic environment."
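To make the resource inference attack concrete, the sketch below shows one simple time-series model an attacker could fit to periodically sampled task processing times: a first-order autoregressive model, AR(1), estimated by least squares and used to predict the next processing time. The choice of AR(1) and the function names are assumptions for illustration; the paper does not specify which prediction model its simulated attacker uses.

```python
from typing import List, Tuple


def fit_ar1(samples: List[float]) -> Tuple[float, float]:
    """Least-squares fit of x[t] = a + b * x[t-1] to sampled processing times."""
    if len(samples) < 3:
        raise ValueError("need at least three samples")
    xs, ys = samples[:-1], samples[1:]     # (previous, next) pairs
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx > 0 else 0.0      # constant series: no autoregressive signal
    a = mean_y - b * mean_x
    return a, b


def predict_next(samples: List[float], a: float, b: float) -> float:
    """One-step-ahead prediction of the next processing time."""
    return a + b * samples[-1]


# Hypothetical processing times (ms) observed by the attacker at fixed intervals.
observed = [10.0, 7.0, 5.5, 4.75, 4.375]
a, b = fit_ar1(observed)
print(a, b, predict_next(observed, a, b))  # 2.0 0.5 4.1875
```

An attacker whose predictions track the observed times closely has, in effect, learned something about the scheduler; this is the leakage that dynamic path switching is meant to disrupt.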

4.1.1. Experimental Setup

The experiments were conducted on Windows 10. The software stack comprised Python 3.8 and TensorFlow 2.7, running on hardware equipped with 16 GB RAM and an AMD Ryzen 7 5800H CPU.

4.1.2. Parameter Settings

To validate the effectiveness of the proposed resource scheduling algorithm in reducing system energy consumption and latency, experiments are conducted as follows. The algorithm parameters are set as shown in Table 1. The number of edge servers is set to 20, and the number of tasks to be processed is set to 100, 200, 300, 400, and 500 to evaluate DRLMDS under different workloads.

There is currently no standardized training and testing dataset for resource scheduling in edge computing. The parameter values used in the experiments are therefore based on the experimental settings of similar studies on edge computing resource scheduling models [1]; the detailed values for the proposed model are shown in Table 2.

4.1.3. Experimental Metrics

To evaluate the algorithms' performance, the experiments compare four aspects: system energy consumption, average task delay, task response time, and resource utilization. In addition, the Inverted Generational Distance (IGD) is used to evaluate performance on the multi-objective problem, serving as an indicator of the quality of the generated solution sets.

4.2. Experimental Results and Discussion

The first set of experiments assesses system performance in non-attack scenarios. The proposed DRLMDS (Deep Reinforcement Learning-based Multi-Dimensional Scheduling) algorithm is compared against two benchmark approaches: the Particle Swarm Optimization (PSO) algorithm and a hybrid method combining a Deep Q-Network (DQN) with a Genetic Algorithm (hereafter DQN-GA).

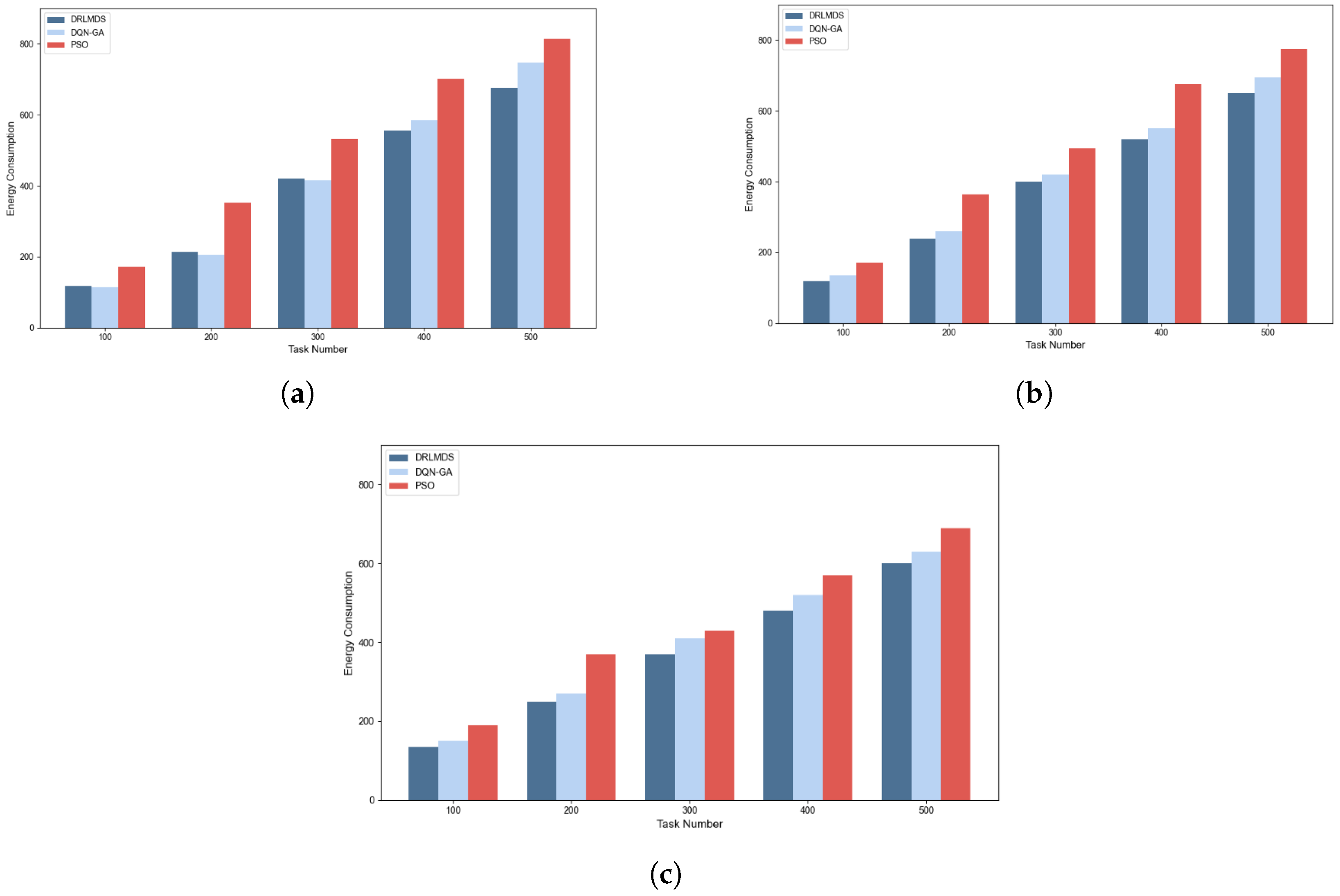

4.2.1. Evaluation of Energy-Saving Performance Under Variable Task and Server Quantities

To verify the energy-saving performance of DRLMDS, this section compares the system energy consumption of DRLMDS, PSO, and DQN-GA under different combinations of task and server counts. The results are presented in Figure 14, and the key observations are summarized as follows:

Overall Trend: The system energy consumption of all three algorithms increases as the number of tasks rises. PSO exhibits the highest energy consumption, growing almost linearly with the number of tasks.

Performance Gap Under Low Task Loads: When the number of tasks is small, the energy consumption difference between DRLMDS and DQN-GA is relatively minor. For example, with 10 servers and 100 tasks, DRLMDS reduces energy consumption by 6.69% compared to DQN-GA.

Performance Advantage Under High Task Loads: Once the number of tasks reaches 400 or more, the energy consumption gap between DRLMDS and DQN-GA widens. At 500 tasks, DRLMDS reduces energy consumption by 7.82% relative to DQN-GA, indicating that its advantage grows with the size of the task load.

Additionally, the impact of server quantity on energy consumption varies with the task load:

When there are 200 tasks or fewer, adding servers does not reduce total energy consumption for any of the three algorithms, because surplus idle servers still consume energy and waste resources.

When the number of tasks reaches 300, the system energy consumption of all three algorithms decreases as the number of servers increases.

To further verify the energy optimization effectiveness of DRLMDS, this paper analyzes the energy consumption of each algorithm with the number of tasks fixed at 500 under three server configurations: 10, 20, and 30 servers. For each configuration, each algorithm is run 10 times independently, and the Kruskal-Wallis H test (a non-parametric test) is applied to determine whether the differences among the algorithms are statistically significant.
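For readers reproducing this analysis, the Kruskal-Wallis statistic is computed from pooled ranks as H = 12 / (N(N + 1)) · Σ R_i² / n_i − 3(N + 1), where N is the total number of observations and n_i and R_i are the size and rank sum of group i. The sketch below implements this formula with average ranks for ties but without the tie correction; the group values are made up and are not the paper's measurements. H is compared against a chi-square distribution with k − 1 degrees of freedom for k groups (2 for three algorithms); `scipy.stats.kruskal` is a tested implementation that also applies the tie correction.

```python
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups: Dict[str, Sequence[float]]) -> float:
    """H = 12 / (N (N + 1)) * sum(R_i^2 / n_i) - 3 (N + 1), no tie correction."""
    pooled = [v for g in groups.values() for v in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    weighted = 0.0
    start = 0
    for g in groups.values():
        rank_sum = sum(ranks[start:start + len(g)])  # ranks stay in pooled order
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    return 12.0 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)


# Made-up energy values for three algorithms A, B, C (three runs each).
groups = {"A": [1.0, 2.0, 3.0], "B": [4.0, 5.0, 6.0], "C": [7.0, 8.0, 9.0]}
h = kruskal_wallis_h(groups)
print(round(h, 3))  # 7.2
```

With 2 degrees of freedom, H = 7.2 exceeds the 5% critical value of about 5.99, so these illustrative groups would be judged significantly different.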

4.2.2. Results of Statistical Analysis

The statistical results indicate that under all three server configurations, the differences in energy consumption among the three algorithms are statistically significant (all p-values ). A representative example (with 10 servers) is detailed below:

The average energy consumption values of DRLMDS, DQN-GA, and PSO are 680, 740, and 810 (units consistent with experimental metrics) respectively.

Their 95% confidence intervals are , , and , respectively, with a significance p-value of approximately .

This result indicates a significant difference in energy consumption among the three algorithms, with DRLMDS achieving the lowest mean. Under the 20- and 30-server configurations, DRLMDS likewise retains a stable energy advantage.

Taken together, the confidence intervals and significance tests support the conclusion that DRLMDS consistently reduces overall energy consumption across server configurations and outperforms DQN-GA and PSO by a statistically significant margin.
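As a worked check on the 10-server example, the reported mean energy values of 680 (DRLMDS), 740 (DQN-GA), and 810 (PSO) imply the following relative reductions for DRLMDS. These percentages are derived here from the reported means and are not separately reported in the experiments:

```latex
\begin{aligned}
\frac{740 - 680}{740} &= \frac{60}{740} \approx 8.11\% && \text{(DRLMDS vs. DQN-GA)} \\
\frac{810 - 680}{810} &= \frac{130}{810} \approx 16.05\% && \text{(DRLMDS vs. PSO)}
\end{aligned}
```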

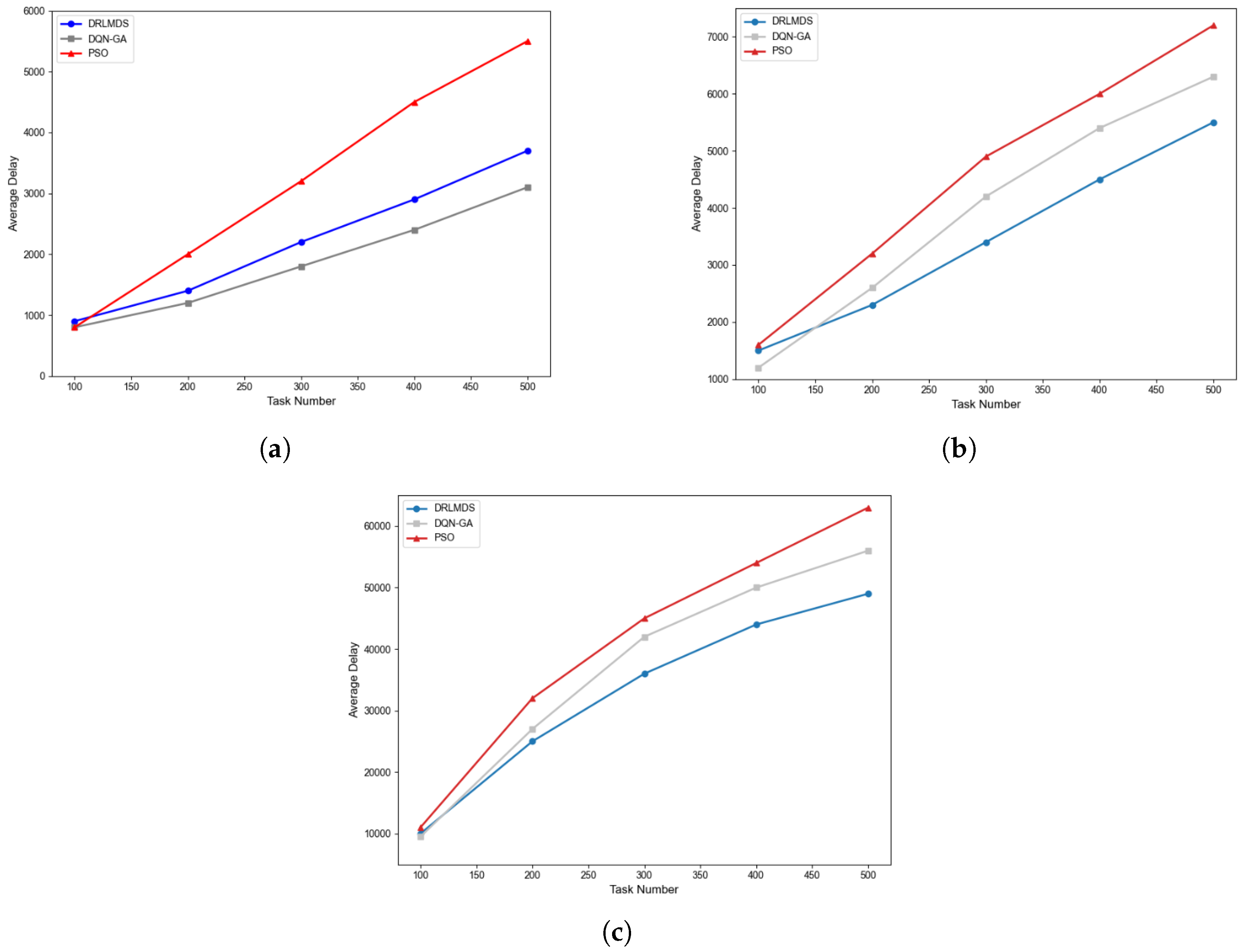

4.2.3. Evaluation of Average Task Delay Performance

Following the energy consumption analysis, this section assesses the three algorithms in terms of average task delay, a critical metric for practical resource scheduling systems. Because task sizes in practice are not fixed, the experiment compares the average task delay of the algorithms under three task size ranges: 50–100 KB, 100 KB–1 MB, and 1–2 MB. The results are presented in Figure 15.

Key Observations on Task Delay Trends

Across all task size categories, the average delay of all three algorithms increases as the number of tasks grows. However, two critical performance differences emerge:

Superiority over PSO: Both DRLMDS and DQN-GA achieve significantly lower average task delays than PSO. This advantage is attributed to their DQN-based framework: DQN-based algorithms learn adaptive scheduling policies through continuous interaction with the system environment, and their neural networks can process the high-dimensional features of resource scheduling tasks and capture key operational patterns in real time. Consequently, in these experiments, hybrid approaches that combine swarm intelligence or evolutionary search with deep reinforcement learning (DQN-GA and DRLMDS) outperform the purely swarm intelligence-based PSO in allocating resources in edge computing environments.

Advantage of DRLMDS over DQN-GA: When the number of tasks reaches 500, DRLMDS further outperforms DQN-GA across all three task size ranges, achieving delay reductions of 10.08%, 11.11%, and 12.99% respectively. Moreover, as task size increases, DRLMDS maintains the lowest average task delay among the three algorithms and exhibits the slowest rate of delay growth with increasing task quantity.

Implications of Task Delay Results

Together with the energy results, these findings indicate that DRLMDS is both energy-efficient and well suited to large numbers of large tasks, a key requirement for real-world edge computing systems in which both task size and task count can fluctuate significantly.

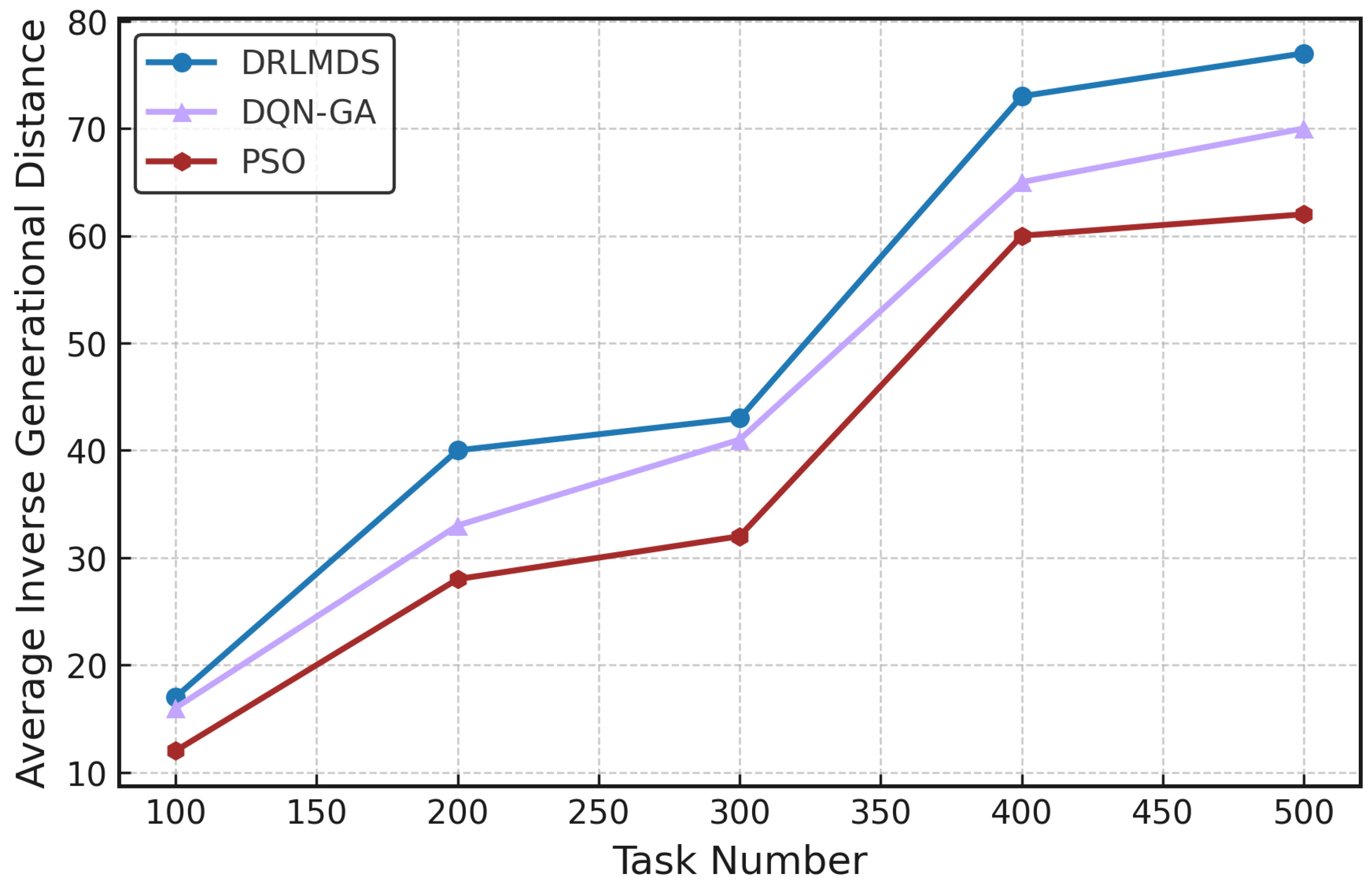

4.2.4. Evaluation of Algorithm Solution Set Quality

Beyond the preceding performance metrics, this section assesses the quality of the solution sets generated by the algorithms, which is essential for validating their effectiveness in multi-objective optimization. The Inverted Generational Distance (IGD) metric is adopted for this purpose. IGD is the average distance from each point on the true Pareto front to its nearest point in the front approximated by the algorithm; a smaller IGD value indicates higher solution quality and better optimization performance.
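The definition above can be written as IGD(P*, A) = (1/|P*|) · Σ_{v ∈ P*} min_{a ∈ A} ‖v − a‖, where P* is the reference (true) Pareto front and A is the approximated front. A minimal sketch, assuming both fronts are lists of objective vectors and that Euclidean distance is used (the usual choice, although the paper does not state its distance measure); the fronts in the example are hypothetical:

```python
import math
from typing import List, Sequence

Point = Sequence[float]


def igd(reference_front: List[Point], approx_front: List[Point]) -> float:
    """Mean Euclidean distance from each reference point to its nearest approximate point."""
    if not reference_front or not approx_front:
        raise ValueError("both fronts must be non-empty")
    total = 0.0
    for ref in reference_front:
        total += min(math.dist(ref, a) for a in approx_front)
    return total / len(reference_front)


true_front = [(0.0, 1.0), (0.5, 0.5), (1.0, 0.0)]  # hypothetical reference front
approx = [(0.0, 1.2), (1.2, 0.0)]                  # hypothetical algorithm output
print(igd(true_front, approx))
```

Because every reference point contributes, IGD penalizes both poor convergence (approximate points far from the front) and poor coverage (regions of the front with no nearby approximate point), as the middle reference point illustrates here.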

To compare solution quality, the proposed DRLMDS is evaluated against PSO and DQN-GA using IGD values. Each algorithm was executed in 10 independent runs, and the average results are reported in Figure 16. Key observations include:

As the number of tasks increases, problem complexity rises, leading to a general decline in solution quality across all algorithms.

DRLMDS consistently generates higher-quality solution sets than PSO and DQN-GA. This advantage is attributed to two core design features of DRLMDS: (1) DQN-based initialization of the particle swarm, which provides a better starting point for the search; and (2) a layered learning strategy for particle updates, which enhances the algorithm's ability to explore and exploit the solution space.
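For context, the sketch below shows the standard global-best PSO update (velocity = inertia + pull toward the personal best + pull toward the global best) that underlies the PSO baseline and the particle swarm in DRLMDS. It is not the DRLMDS implementation: the initial positions are simply passed in, standing in for the DQN-based initialization, and the layered learning strategy is not reproduced. The parameter values (w = 0.7, c1 = c2 = 1.5), the test function, and the name `pso_minimize` are illustrative assumptions.

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso_minimize(
    f: Callable[[Sequence[float]], float],
    init_positions: List[List[float]],
    iters: int = 100,
    w: float = 0.7,    # inertia weight (illustrative value)
    c1: float = 1.5,   # cognitive coefficient: pull toward personal best
    c2: float = 1.5,   # social coefficient: pull toward global best
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Global-best PSO minimizing f, starting from externally supplied positions."""
    rng = random.Random(seed)
    pos = [list(p) for p in init_positions]  # e.g. seeds proposed by a learned policy
    dim = len(pos[0])
    vel = [[0.0] * dim for _ in pos]
    pbest = [list(p) for p in pos]
    pbest_val = [f(p) for p in pos]
    g = min(range(len(pos)), key=lambda i: pbest_val[i])
    gbest, gbest_val = list(pbest[g]), pbest_val[g]

    for _ in range(iters):
        for i, p in enumerate(pos):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - p[d])
                             + c2 * r2 * (gbest[d] - p[d]))
                p[d] += vel[i][d]
            val = f(p)
            if val < pbest_val[i]:          # update personal best
                pbest[i], pbest_val[i] = list(p), val
                if val < gbest_val:         # global best can only improve here
                    gbest, gbest_val = list(p), val
    return gbest, gbest_val


def sphere(x: Sequence[float]) -> float:
    return sum(v * v for v in x)


seeds = [[3.0, -2.0], [1.0, 1.0], [-4.0, 0.5]]  # hypothetical initial positions
best, best_val = pso_minimize(sphere, seeds)
print(best, best_val)
```

Because the search starts from the supplied positions, a better-informed initialization (the role the paper assigns to DQN) directly lowers the starting value of the global best.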

To statistically validate the performance differences, a paired sample t-test was conducted on the IGD values from the 10 independent runs. The results confirm:

Compared to DQN-GA: DRLMDS achieves an average IGD improvement of 4.8, with a 95% confidence interval of and a p-value of 0.0143 (), indicating a statistically significant advantage.

Compared to PSO: DRLMDS delivers an average IGD improvement of 10.6, with a 95% confidence interval of and a p-value of 0.0035 (), demonstrating a highly significant performance edge.

These findings collectively confirm that DRLMDS offers superior solution quality and stability in resource scheduling optimization tasks.
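The paired-sample t-test used above works on per-run differences. A minimal sketch, assuming IGD values are paired by run index and that "improvement" means baseline IGD minus DRLMDS IGD (lower IGD is better): the statistic is t = mean(d) / (s_d / √n) with n − 1 degrees of freedom, and a 95% confidence interval for the mean improvement is mean(d) ± t_{0.975, n−1} · s_d / √n (for 10 runs, n − 1 = 9 and t_{0.975, 9} ≈ 2.262). The sample values are hypothetical; `scipy.stats.ttest_rel` is a tested alternative that also returns the p-value.

```python
import math
from typing import Optional, Sequence, Tuple


def paired_t(
    baseline: Sequence[float],
    proposed: Sequence[float],
    t_crit: Optional[float] = None,
) -> Tuple[float, float, int, Optional[Tuple[float, float]]]:
    """Paired t-test on per-run improvements d = baseline - proposed."""
    if len(baseline) != len(proposed) or len(baseline) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [b - p for b, p in zip(baseline, proposed)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)  # sample variance
    if var_d == 0:
        raise ValueError("differences have zero variance")
    se = math.sqrt(var_d / n)                                  # standard error
    t_stat = mean_d / se
    ci = None
    if t_crit is not None:                                     # two-sided CI
        ci = (mean_d - t_crit * se, mean_d + t_crit * se)
    return mean_d, t_stat, n - 1, ci


# Hypothetical IGD values from four paired runs (lower is better).
baseline_igd = [5.0, 6.0, 7.0, 8.0]
drlmds_igd = [3.0, 5.0, 4.0, 6.0]
mean_d, t_stat, df, ci = paired_t(baseline_igd, drlmds_igd, t_crit=3.182)  # t_{0.975, 3}
print(mean_d, round(t_stat, 3), df)  # 2.0 4.899 3
```

Pairing by run removes run-to-run variation shared by both algorithms, which is why the paired test is appropriate when both algorithms are evaluated on the same runs.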

4.2.5. System Performance Under Simulated Malicious Attack Scenarios

The second part of this section enables the mimic defense mechanism and simulates malicious attack scenarios to evaluate three key system attributes: accuracy in detecting malicious behavior, latency, and the capability to suppress malicious behavior. System performance is further analyzed in terms of latency, energy consumption, and cost using simulation statistics.

Latency and Accuracy Under Attack Scenarios

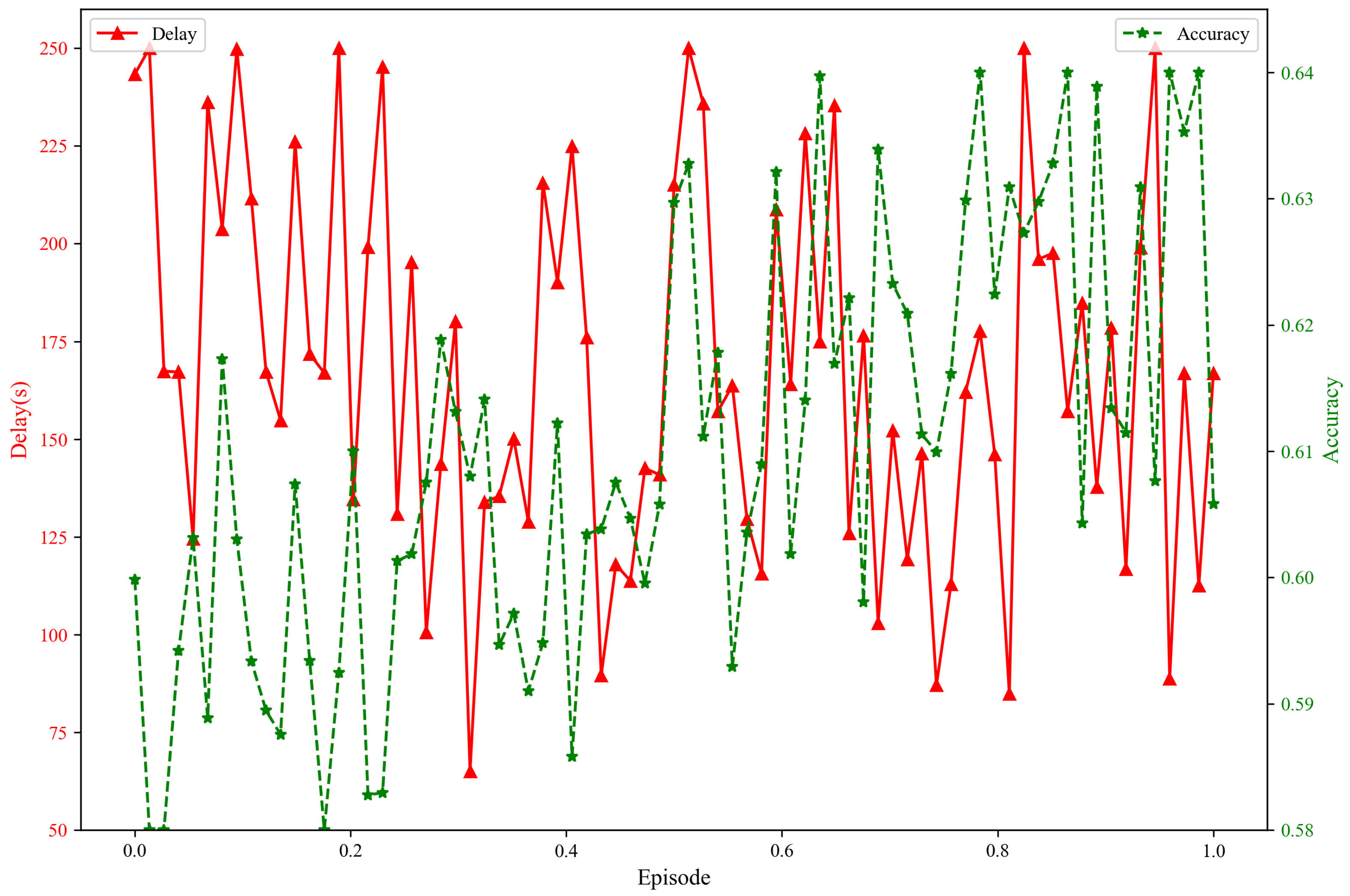

Figure 17 presents the experimental results of system latency and detection accuracy under simulated attacks with the mimic defense mechanism enabled. Key insights are as follows:

Latency Fluctuations: System latency exhibits significant fluctuations during training, particularly in the middle phases (e.g., around iteration steps –). This suggests potential performance bottlenecks or resource constraints at these stages, which may stem from factors such as increased data-processing overhead, sub-optimal computation-resource allocation, network delays, or inherent algorithmic limitations. For example, certain iterations may involve more complex decision-making (e.g., handling multiple concurrent attacks) or intensive computation (e.g., model-parameter updates), leading to prolonged processing times.

Stable Detection Accuracy: In contrast to latency, the system’s accuracy in detecting malicious behaviors remains relatively stable, ranging between and . As training progresses and more attack-related data are accumulated, accuracy tends to stabilize around .

Latency–Accuracy Relationship: Latency fluctuates over a relatively wide range compared with accuracy and does not converge to a clearly stable value, which may reduce system efficiency in real-time applications that require consistent responsiveness. No direct correlation between latency and accuracy is observed: latency does not determine accuracy, nor vice versa. The two metrics appear to be driven by different factors, such as algorithmic optimizations, model-training complexity, or dataset characteristics.

To validate latency variations, the Kruskal–Wallis H test (non-parametric) was applied to latency data under different attack intensities. Results confirm significant differences in latency distribution across attack strengths (). For accuracy stability, the 95% confidence interval was calculated as , indicating high consistency within this range.

Trade-Offs Between Energy Consumption, Latency, and Cost

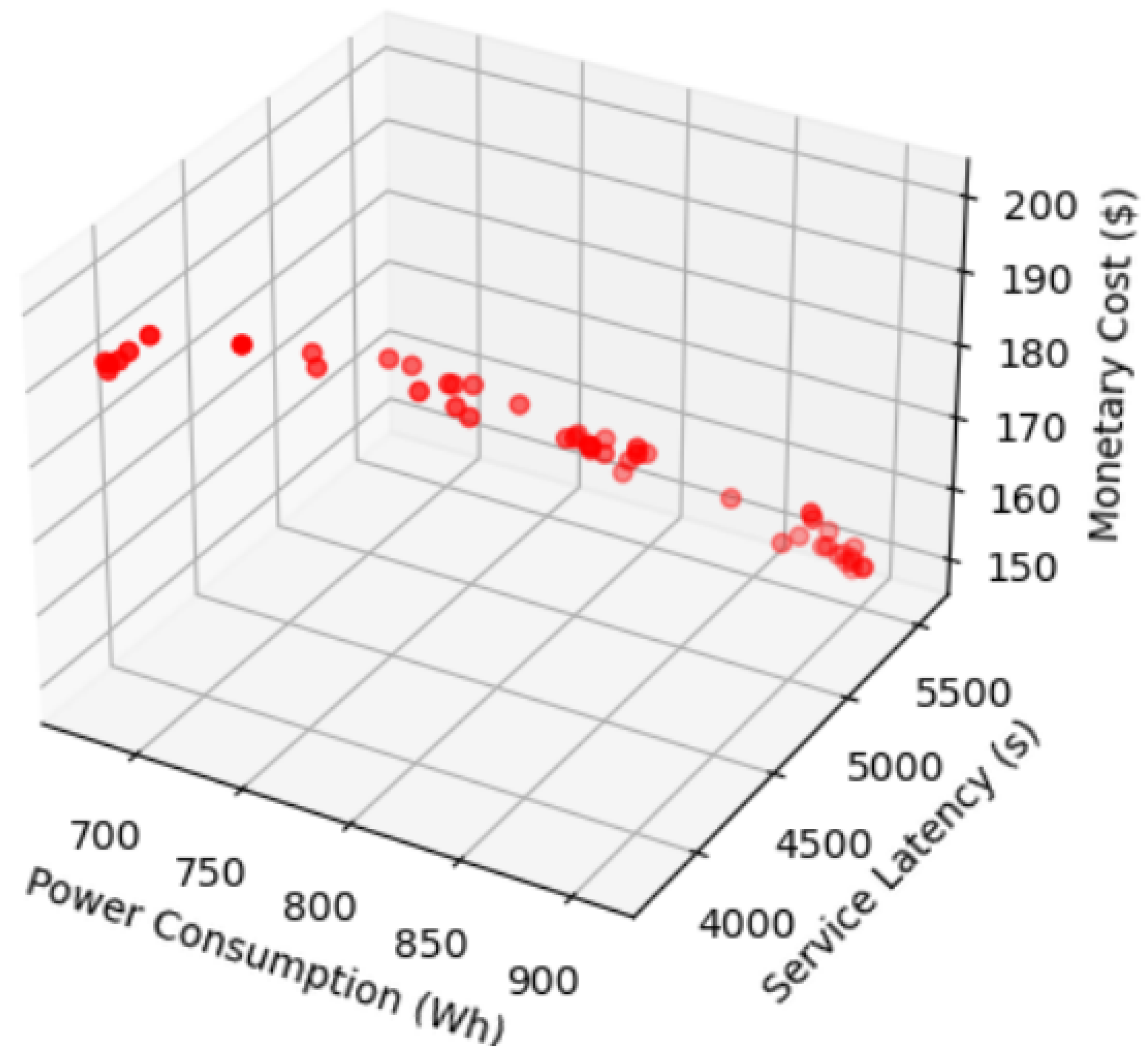

This section presents simulation results for system energy consumption, latency, and monetary cost; the key relationships are visualized in Figure 18 (data points highlighted with red circles emphasize the trade-offs). The latency fluctuations are attributed to policy exploration, execution-path switching, and model updates, and they stabilize as training proceeds.

Power Consumption vs. Service Latency: Figure 18 reveals a negative correlation; as power consumption increases, service latency decreases. This indicates that the system can dynamically improve performance (reduce latency) by increasing energy input, a capability that is important when responding to dynamic threats such as sudden attack surges.

Power Consumption vs. Monetary Cost: A positive correlation is observed: higher power consumption leads to increased monetary cost. This is an expected outcome, as operational expenses (e.g., electricity bills) directly scale with energy usage in most systems.

Service Latency vs. Monetary Cost: Reduced service latency (i.e., improved performance) tends to coincide with lower monetary cost. This suggests that efficient, low-latency configurations may also be more cost-effective, possibly because of better resource utilization (e.g., less server idle time).

The data indicate a three-way trade-off among power consumption, service latency, and monetary cost: systems with higher power consumption typically offer lower latency but incur higher costs. From an optimization perspective, system design must balance these three parameters to meet target performance requirements while controlling operational expenses. To quantify these relationships, a multiple regression of monetary cost on power consumption and service latency was performed. It yields R² = 0.78, meaning that the two predictors together explain 78% of the variance in monetary cost, and the relationship is statistically significant (p < 0.05).
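The regression step can be reproduced with ordinary least squares. A minimal sketch, assuming a linear model cost = b0 + b1 · power + b2 · latency with no interaction terms (the paper does not state its model specification): the function solves the centered normal equations for the two slopes and reports R² = 1 − SSE/SST, the share of variance in cost explained by the predictors. The p-value would additionally require an F-test, which is not shown, and the data and names below are hypothetical.

```python
from typing import Sequence, Tuple


def fit_two_predictors(
    x1: Sequence[float], x2: Sequence[float], y: Sequence[float]
) -> Tuple[float, float, float, float]:
    """OLS fit of y = b0 + b1*x1 + b2*x2; returns (b0, b1, b2, R^2)."""
    n = len(y)
    if not (len(x1) == len(x2) == n) or n < 3:
        raise ValueError("need equal-length inputs with at least 3 observations")
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    # Centered sums of squares and cross-products (normal equations).
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    if det == 0:
        raise ValueError("predictors are perfectly collinear")
    b1 = (s1y * s22 - s2y * s12) / det    # Cramer's rule for the 2x2 system
    b2 = (s2y * s11 - s1y * s12) / det
    b0 = my - b1 * m1 - b2 * m2
    sse = sum((c - (b0 + b1 * a + b2 * b)) ** 2 for a, b, c in zip(x1, x2, y))
    sst = sum((c - my) ** 2 for c in y)
    if sst == 0:
        raise ValueError("response has zero variance")
    return b0, b1, b2, 1.0 - sse / sst


# Hypothetical observations: power (W), latency (ms), and cost (currency units).
power = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
latency = [9.0, 7.5, 7.0, 5.0, 4.5, 2.0]
cost = [3.1, 4.9, 7.2, 8.8, 11.1, 12.9]
b0, b1, b2, r2 = fit_two_predictors(power, latency, cost)
print(f"R^2 = {r2:.3f}")
```

R² alone measures explained variance; it does not by itself establish significance, which is why the reported p-value is a separate piece of evidence.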

4.2.6. DRLMDS Performance Advantages Under Malicious Attacks

Building on the preceding analysis of simulated-attack scenarios, experimental results confirm that DRLMDS exhibits significant performance improvements when confronting malicious attacks. Key advantages are demonstrated across three critical system metrics: task-response time, resource utilization, and system robustness.

Statistical Validation of Improvements

To rigorously verify the significance of the observed performance gains, a comparative experiment was conducted against a baseline system without the mimic-defense mechanism. A paired-sample t-test was applied to evaluate the statistical significance of improvements in task-response time, resource utilization, and system robustness. The results confirm:

Task-response time: Average reduction of 30%, with a p-value —indicating a highly statistically significant improvement.

Resource utilization: Average increase of 25%, with the t-test confirming high statistical significance ().

System robustness: Approximately 40% improvement, also supported by a statistically significant result ().

4.2.7. Concluding Remarks

Rationale for Adopting the DQN Framework

This study selected the Deep Q-Network (DQN) framework as the core of its algorithm design for three key reasons:

Its inherent capability to handle high-dimensional feature spaces, which is essential for capturing complex resource-scheduling dynamics in edge computing;

Its ability to learn adaptive scheduling policies through continuous interaction with the system environment, enabling real-time adjustment to changing task loads and attack scenarios;

Its strong compatibility with swarm-intelligence and evolutionary algorithms (e.g., PSO and GA), facilitating the development of hybrid approaches that combine the strengths of both paradigms.
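The second point, learning through interaction, rests on the standard DQN temporal-difference target y = r + γ · max_a' Q_target(s', a'), with the bootstrap term dropped at terminal transitions. The sketch below computes these targets for a mini-batch. It assumes the next-state Q-values come from a separate target network and uses hypothetical rewards, since the state, action, and reward design of DRLMDS is not reproduced here. The Q-network is then trained to move its predictions toward these targets.

```python
from typing import List, Sequence


def dqn_targets(
    rewards: Sequence[float],
    next_q_values: Sequence[Sequence[float]],
    dones: Sequence[bool],
    gamma: float = 0.99,
) -> List[float]:
    """TD targets y = r + gamma * max_a' Q_target(s', a'); no bootstrap at episode end."""
    targets = []
    for r, q_next, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(r + bootstrap)
    return targets


# Hypothetical mini-batch: two transitions, two candidate actions (e.g. two servers).
targets = dqn_targets(
    rewards=[1.0, -0.5],
    next_q_values=[[0.2, 0.8], [1.0, 3.0]],
    dones=[False, True],
    gamma=0.9,
)
print(targets)
```

Using a separate, periodically synchronized target network for the max term is what stabilizes DQN training relative to plain Q-learning with function approximation.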

Significance of DRLMDS

The adoption of the DQN framework provides a solid technical foundation for the proposed DRLMDS algorithm. By integrating DQN-based initialization, layered learning for particle updates, and a mimic defense mechanism, DRLMDS achieves efficient and secure resource scheduling in complex edge computing environments, balancing performance, resource efficiency, and resilience against malicious attacks. This makes DRLMDS a promising solution for real-world edge computing systems in which dynamic task loads and security threats are prevalent.

5. Conclusions

To address the efficiency-security dilemma in edge computing resource scheduling, where traditional PSO algorithms fall short, this study identified three shortcomings of existing methods: poor adaptability, weak attack defense, and unbalanced objectives. We introduced the DRLMDS algorithm, which combines deep reinforcement learning (DRL) for adaptive optimization with mimic defense for attack resistance. Experiments comparing DRLMDS with PSO and DQN-GA evaluated energy consumption, latency, solution quality, and robustness, and the results were validated by statistical tests. They show that integrating DRL and mimic defense resolves the efficiency-security conflict: DRLMDS reduces task response time by 30%, increases resource utilization by 25%, and improves system stability under attack by 40%.

The results also show that DQN-based frameworks outperform purely swarm intelligence-based methods in complex edge scenarios. Although the study offers theoretical contributions, a replicable methodology, and practical value for industrial and IoT edge systems, it has limitations, including its reliance on simulated homogeneous environments and unmeasured overheads. Future research will focus on developing a lightweight DRLMDS, testing it in real-world APT scenarios, improving scalability and fault tolerance, and integrating it with federated learning or digital twins.