1. Introduction

With the development of modern agriculture and animal husbandry, livestock breeding has become increasingly important for improving production efficiency and ensuring food safety [1]. Advances in breeding technology directly affect livestock growth rate, health status, disease resistance, and the quality of meat and milk, all of which collectively determine the economic efficiency and market competitiveness of the livestock industry [2]. Cattle breeding is vital to the development of the cattle industry [3]. Body measurement, an essential step in assessing livestock growth and development and in selecting breeding stock, is crucial for precision breeding and improving livestock productivity [4]. Cattle body measurement data not only reflect the animal’s growth status and health condition but can also be used to assess genetic potential, providing important scientific evidence for breeding selection [5]. However, traditional cattle body measurements rely primarily on manual tools such as measuring sticks, tape measures, and calipers, which often induce varying degrees of stress in animals, compromise animal welfare, and increase both operator injury risk and measurement errors [6]. Consequently, conventional measurement methods are inadequate for large-scale, intensive farming and fail to meet the modern livestock industry’s demand for efficient and accurate data collection [7]. Therefore, developing rapid, accurate, and non-contact methods for cattle body measurement is a pressing need [8].

The development of computer vision and artificial intelligence has created new opportunities for livestock body measurement [9]. Currently, livestock body measurement methods, particularly for cattle, fall mainly into four groups: clustering-based methods using traditional machine learning, three-dimensional (3D) point cloud-based methods, object detection and instance segmentation-based methods, and keypoint detection-based methods. Clustering-based livestock body measurement methods using traditional machine learning can achieve non-contact measurement, thus improving animal welfare while saving labor and time. Zhang et al. [10] employed an automatic foreground extraction algorithm based on Simple Linear Iterative Clustering (SLIC) and Fuzzy C-Means (FCM), combined with a midline extraction algorithm for symmetric bodies and a measurement point extraction algorithm, to derive segmented images and measurement points for sheep body measurement. Zheng et al. [11] extracted cattle contour images using a fuzzy clustering algorithm, then used interval segmentation, curvature calculation, skeleton extraction, and pruning methods to derive keypoint information for cattle body measurement. Although these body measurement methods have made progress in automation, they still suffer from poor algorithmic robustness and limited detection accuracy.

Livestock body measurement methods based on deep learning and instance segmentation have achieved notable research progress. Qin et al. [12] employed a Mask R-CNN network to identify sheep positions, used image binarization to extract sheep contours, and then used OpenCV’s ConvexHull function along with the U-chord length curvature method to derive body measurement points, thus calculating sheep body dimensions. Ai et al. [13] used SOLOv2 instance segmentation to detect cattle and extract their body contours, used OpenCV to identify characteristic body parts, extracted keypoints via a discrete curvature calculation method, and computed cattle body measurements using the Euclidean distance method. Wang et al. [14] employed the YOLOv5_Mobilenet_SE network to detect key anatomical regions of newborn piglets, from which they extracted keypoints and calculated body measurements. Peng et al. [15] used a YOLOv8-based network to detect the posture and identity of yaks, then used the Canny and Sobel edge detection algorithms to extract yak contours for subsequent body measurement. Qin et al. [16] proposed a method for measuring sheep body dimensions from multiple postures. They used Mask R-CNN to identify the contours and postures of sheep in back view and side view images, located points via the ConvexHull function, and calculated body measurements through real-distance conversion. Although these methods have made certain breakthroughs in measurement stability, they still involve complex procedures and require large amounts of data.
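Several of the methods above end with the same step: a Euclidean distance between two measurement points, converted from pixels to real units. The following is a minimal sketch of that step, assuming a single planar scale factor obtained from a reference object of known length. The function names and all coordinates are hypothetical and are not taken from any of the cited works.

```python
import math


def pixel_distance(p, q):
    """Euclidean distance between two image points given as (x, y) in pixels."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def calibrate_scale(ref_p, ref_q, ref_length_cm):
    """Centimetres per pixel, from a reference object of known length.

    Assumption: the reference lies in the same plane as the animal,
    so one scale factor is valid for the whole image.
    """
    d = pixel_distance(ref_p, ref_q)
    if d == 0:
        raise ValueError("reference points coincide")
    return ref_length_cm / d


def body_dimension_cm(p, q, cm_per_pixel):
    """Real-world length of the segment between two measurement points."""
    return pixel_distance(p, q) * cm_per_pixel


# Hypothetical values: a 100 cm reference bar that spans 200 px in the image.
scale = calibrate_scale((100, 50), (100, 250), 100.0)
# Hypothetical withers and ground-contact points, 260 px apart.
height_cm = body_dimension_cm((320, 120), (320, 380), scale)
```

A single scale factor only holds when the animal stands roughly parallel to the image plane, which is one reason later work adds depth information.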

Livestock body measurement methods based on 3D point clouds support a wide range of measurement categories and offer high accuracy in 3D body measurements. Yang et al. [17] used the Structure-from-Motion (SfM) photogrammetry method combined with Random Sample Consensus (RANSAC) to extract point clouds of dairy cattle, completed missing data using a spline curve smoothing-based completion method, and then employed morphological techniques to measure cattle body dimensions. Li et al. [5] extracted body measurements of beef cattle from point cloud data by obtaining 12 micro-posture feature parameters from the head, back, torso, and legs, and refined posture errors using parameter adjustments to achieve more accurate body measurements. Jin et al. [18] developed a non-contact automatic measurement system for goat body dimensions. Using an improved PointStack model, they segmented goat point clouds into distinct parts, used a novel keypoint localization method to identify measurement points, and subsequently obtained goat body measurements. Weng et al. [19] proposed a cattle body measurement system that integrates the PointNet++-based Dynamic Unbalanced Octree Grouping (DUOS) algorithm with an efficient body measurement method derived from segmentation results, enabling automatic cattle measurement through the identification of key body regions and contour extraction. Lu et al. [20] developed a two-stage coarse-to-fine method for cattle measurement by incorporating shape priors, posture priors, mesh refinement, and mesh reconstruction into the processing of cattle point clouds. Hou et al. [21] used the CattlePartNet network to precisely segment cattle point clouds into key anatomical regions, identified and extracted measurement points using Alpha curvature, and measured body dimensions through slicing and cubic B-spline curve fitting. Xu et al. [22] proposed a method for pig body measurement based on geodesic distance regression for direct detection of point cloud keypoints. They transformed the semantic keypoint detection task into a regression problem of geodesic distances from the point cloud to keypoints using heatmaps and used an improved PointNet++ encoder–decoder architecture to learn distances on the manifold, thus obtaining pig body measurements. Although these methods have further improved the range and accuracy of body measurements, they are limited by the high cost of measurement equipment, the large size of point cloud data, and the complexity of point cloud data processing.
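RANSAC appears in several point cloud pipelines as a way to separate a dominant plane, such as the ground, from the animal. The sketch below shows the generic idea in plain Python on synthetic data. It is not the procedure of any cited paper, and the threshold, iteration count, and point coordinates are illustrative assumptions.

```python
import random


def plane_from_points(a, b, c):
    """Plane through three points as (unit normal n, offset d) with n.x + d = 0.

    Returns None when the points are (nearly) collinear.
    """
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = sum(x * x for x in n) ** 0.5
    if norm < 1e-12:
        return None
    n = [x / norm for x in n]
    d = -sum(n[i] * a[i] for i in range(3))
    return n, d


def point_plane_distance(p, plane):
    """Unsigned distance from point p to the plane."""
    n, d = plane
    return abs(sum(n[i] * p[i] for i in range(3)) + d)


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Return the plane with the most inliers among randomly sampled candidates."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        inliers = [p for p in points if point_plane_distance(p, plane) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


# Synthetic cloud (metres): a flat 10 x 10 ground grid plus a vertical column of points.
ground = [(x * 0.1, y * 0.1, 0.0) for x in range(10) for y in range(10)]
column = [(0.5, 0.5, 0.4 + 0.1 * k) for k in range(10)]
plane, inliers = ransac_plane(ground + column)

# Illustrative use only: height of the tallest non-ground point above the fitted plane.
top_height = max(point_plane_distance(p, plane) for p in column)
```

Once the ground plane is known, heights such as body height or hip height can be expressed as point-to-plane distances instead of image-space lengths.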

In recent years, advances in human pose estimation and keypoint detection models have demonstrated significant advantages for livestock body measurement. Especially for keypoints with symmetry, as they exhibit bilateral symmetry in planar graphics, they can be better utilized for network model training, improvement, and error correction [

23,

24,

25]. Keypoints with relatively fixed positions, as they maintain a relatively fixed position across different image objects, assist the network model in performing efficient detection and cost-saving. Li et al. [

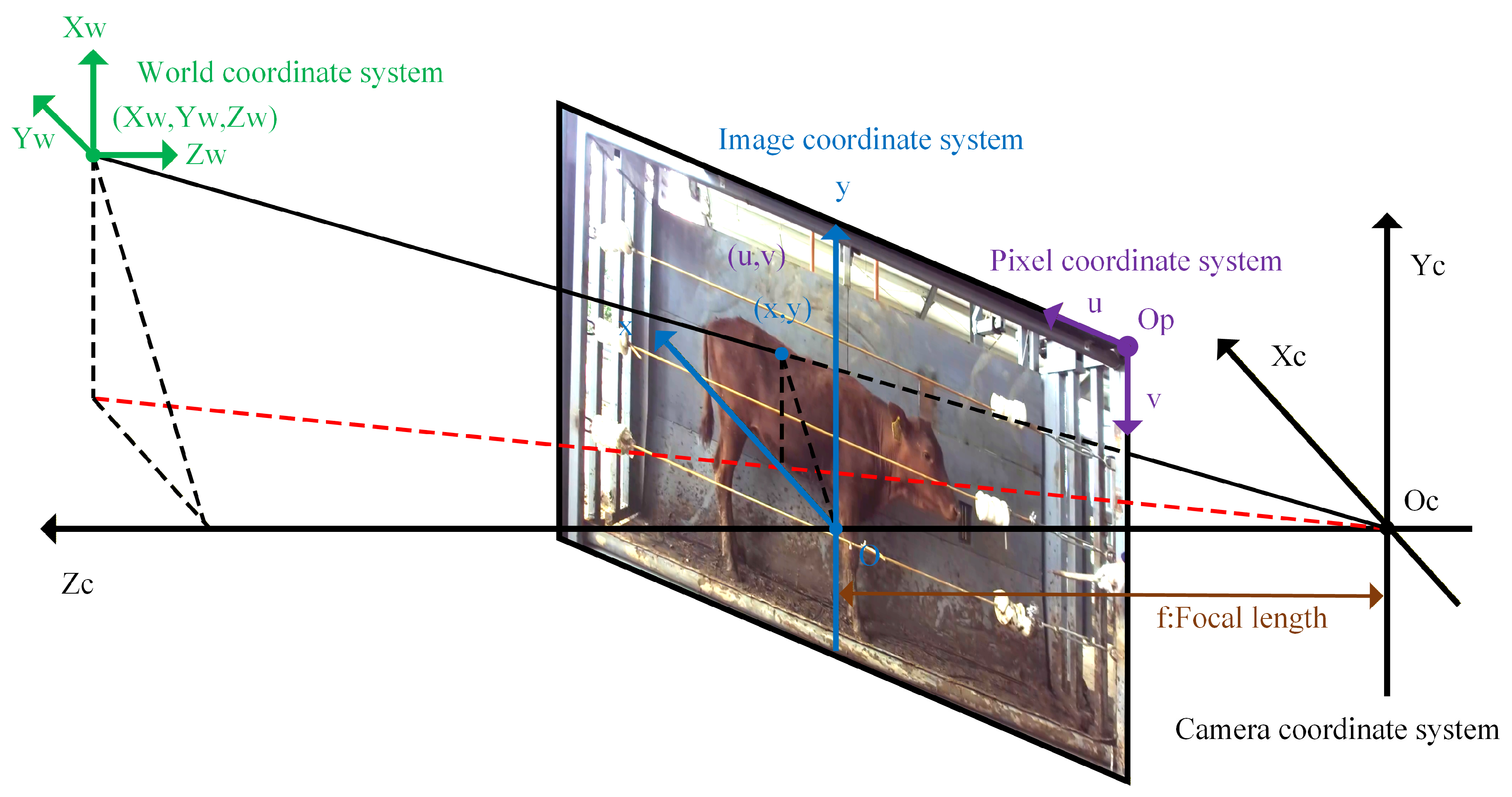

26] employed the Lite-HRNet network to detect keypoints for cattle body measurements and used Global–Local Path Networks to derive relative depth values. These were then converted into actual measurements of body height, body diagonal length, chest depth, and hoof diameter through real-distance calibration and the RGB camera imaging principle. Peng et al. [

27] extracted cattle body keypoints using YOLOv8-pose, mapped them to depth images, and used filtering. They then calculated body height, hip height, body length, and chest girth of beef cattle using Euclidean distance, Moving Least Squares (MLS), Radial Basis Functions (RBFs), and Cubic B-Spline Interpolation (CB-SI). Bai et al. [

28] used a Gaussian pyramid algorithm to convert cattle images into multi-scale representations and used the MobilePoseNet algorithm with scale alignment and fusion to derive high-precision keypoints. Diagonal body length, body height, chest girth, and rump length were then computed using Ramanujan’s equations and related formulas. Yang et al. [

29] used the CowK-Net network to extract two-dimensional (2D) cattle body keypoints, converted them to 3D keypoints using camera parameters, and used the RANSAC algorithm to detect the ground plane in the point cloud. The distances between keypoints were then calculated to derive diagonal body length, body height, hip height, and chest depth. Deng et al. [

30] captured side view images of cattle using a stereo camera, obtained depth information from the images using the CREStereo algorithm, and detected cattle body keypoints with the MobileViT-Pose algorithm. By combining depth data with keypoint coordinates, they calculated body height, body length, hip height, and rump length.
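Two computations recur in the keypoint-based methods above: lifting a 2D keypoint to 3D with depth and camera parameters, and estimating a girth from linear dimensions. The sketch below illustrates both under stated assumptions: an ideal pinhole camera with hypothetical intrinsics, and a chest cross-section modelled as an ellipse whose perimeter is approximated with Ramanujan's first formula. The cited papers may use different models and formulas.

```python
import math


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth Z to camera coordinates."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def distance_3d(p, q):
    """Euclidean distance between two 3D points."""
    return math.dist(p, q)


def ramanujan_girth(width, depth):
    """Ramanujan's first approximation to the perimeter of an ellipse.

    width and depth are the full axis lengths; a and b are the semi-axes.
    Assumption: the body cross-section is close to an ellipse.
    """
    a, b = width / 2.0, depth / 2.0
    return math.pi * (3 * (a + b) - math.sqrt((3 * a + b) * (a + 3 * b)))


# Hypothetical intrinsics for a 1280 x 720 camera.
FX, FY, CX, CY = 800.0, 800.0, 640.0, 360.0

# Hypothetical withers and hoof keypoints, both measured at 3.0 m depth.
withers = back_project(640, 200, 3.0, FX, FY, CX, CY)
hoof = back_project(640, 600, 3.0, FX, FY, CX, CY)
body_height_m = distance_3d(withers, hoof)

# Hypothetical chest width and chest depth in metres.
chest_girth_m = ramanujan_girth(0.55, 0.75)
```

For equal width and depth the ellipse formula reduces to the circumference of a circle, which gives a quick sanity check on any implementation.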

Keypoint detection-based approaches have simplified the process of cattle body measurement; however, because these models must perform both object detection and precise keypoint localization, they often suffer from high model complexity, a large number of parameters, substantial computational demands, and a limited range of measurable traits. YOLOv11 [

31], developed by Ultralytics, is a deep learning network composed of a backbone, neck, and head, offering high accuracy and fast inference speed. The YOLOv11 family includes multiple task-specific models—pose estimation (YOLOv11-pose), object detection (YOLOv11-detect), instance segmentation (YOLOv11-segment), and image classification (YOLOv11-classify)—as well as models of various scales (YOLOv11n, YOLOv11s, YOLOv11m, YOLOv11l, and YOLOv11x) [

32]. YOLOv11-pose unifies keypoint detection and object detection within a single network, significantly improving both the speed and accuracy of keypoint estimation. Its multi-scale feature fusion enables high-precision localization of keypoints across varying object sizes and complex backgrounds, while maintaining strong real-time performance and computational efficiency, thus reducing computational overhead without compromising accuracy [

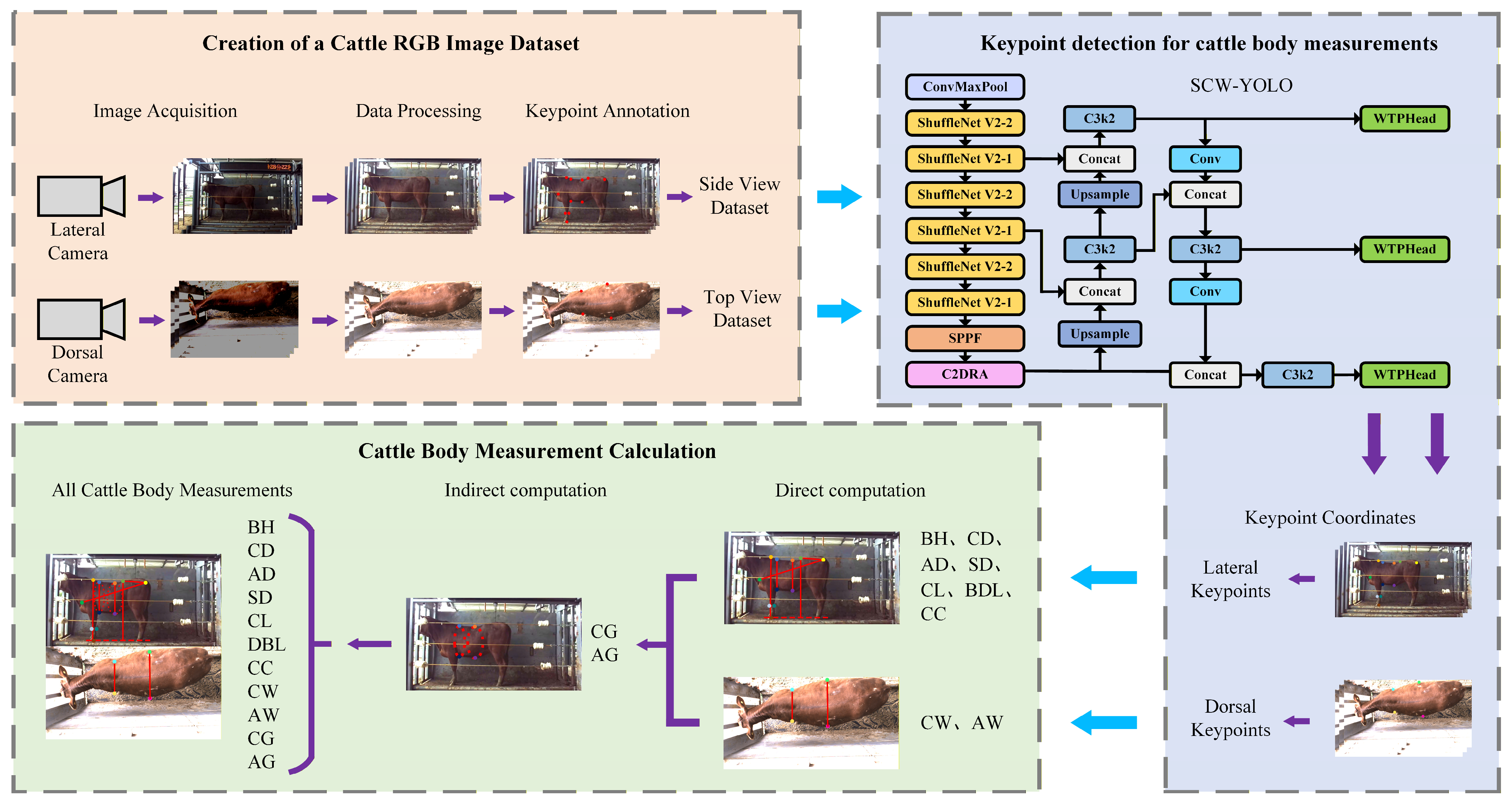

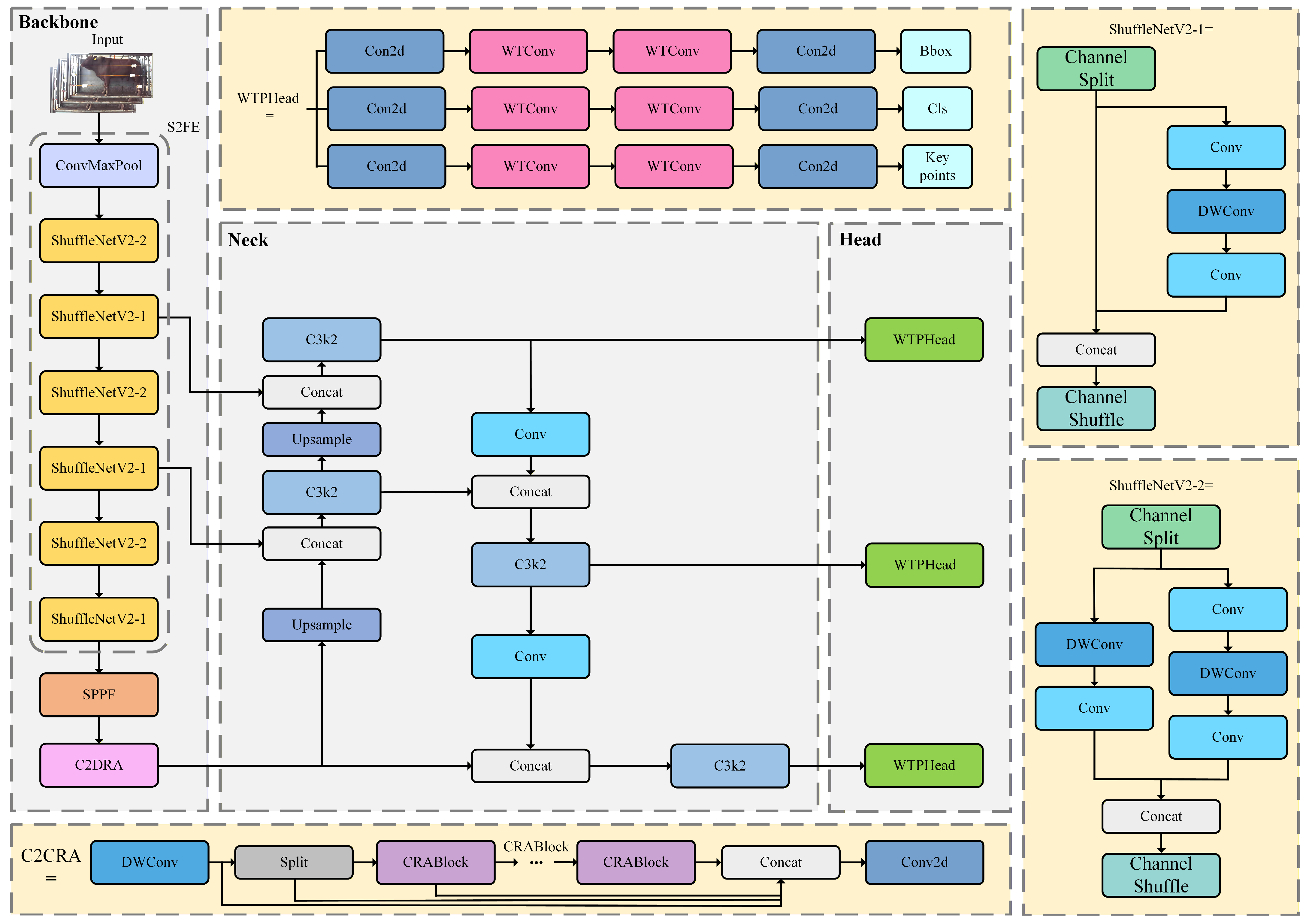

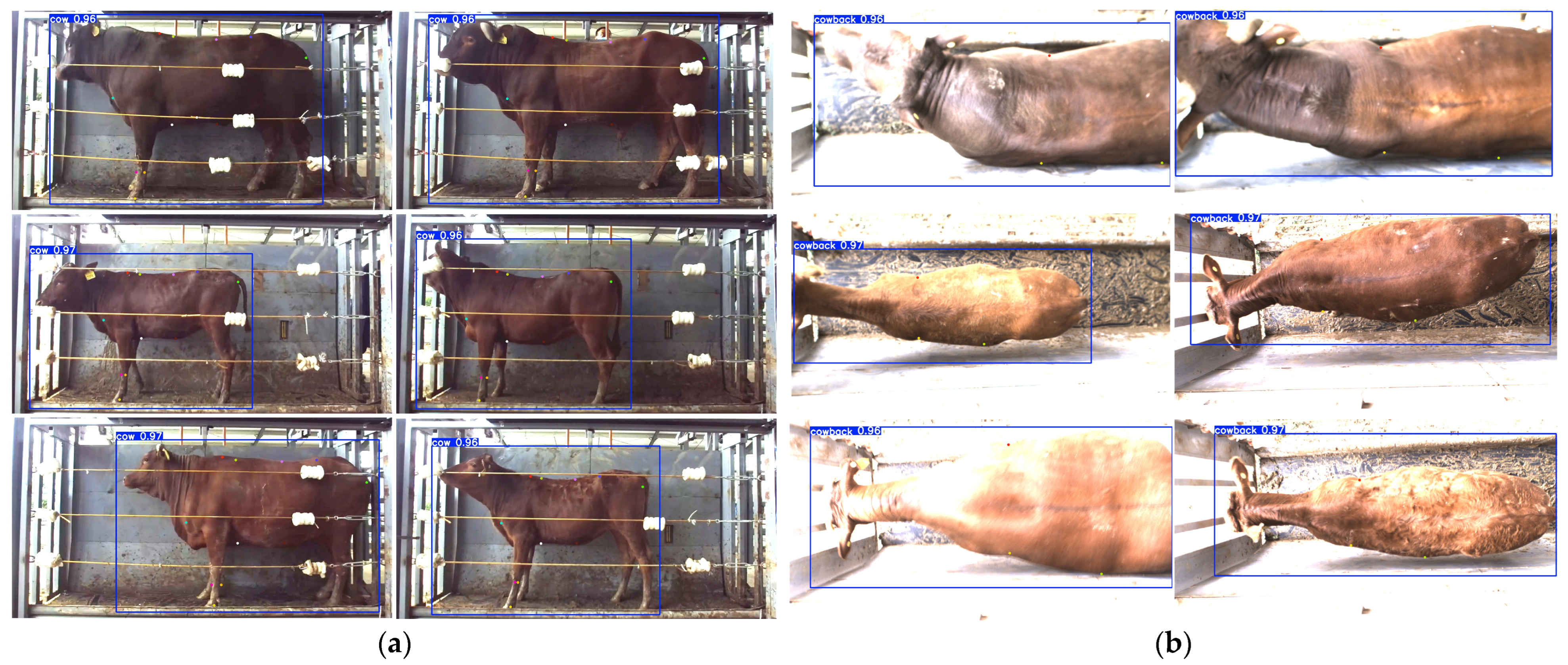

33]. Therefore, for efficient cattle body keypoint detection, this study adopts YOLOv11n-pose [

34], the most lightweight pose estimation model in the YOLOv11 series. Nevertheless, due to its multi-task nature (object and keypoint detection) and multi-layer convolutional operations, YOLOv11n-pose still faces challenges such as a large number of parameters, high computational complexity, and considerable model size. Building on YOLOv11n-pose, this study proposes S2FE C2DRA WTPHead-YOLO (SCW-YOLO), a novel lightweight cattle body keypoint detection model with the following enhancements:

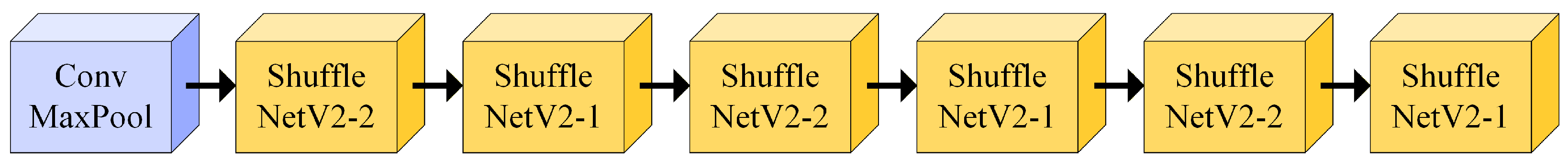

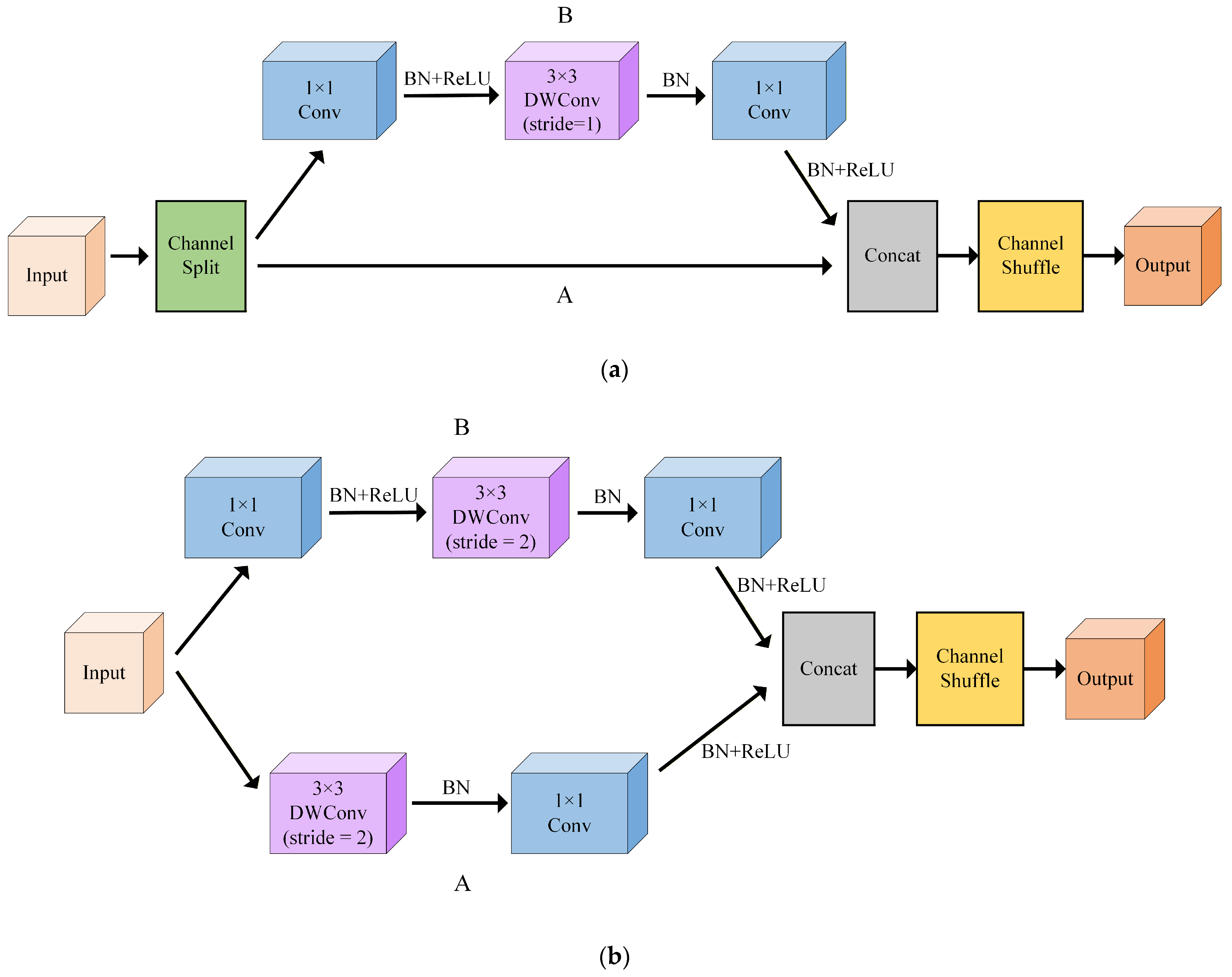

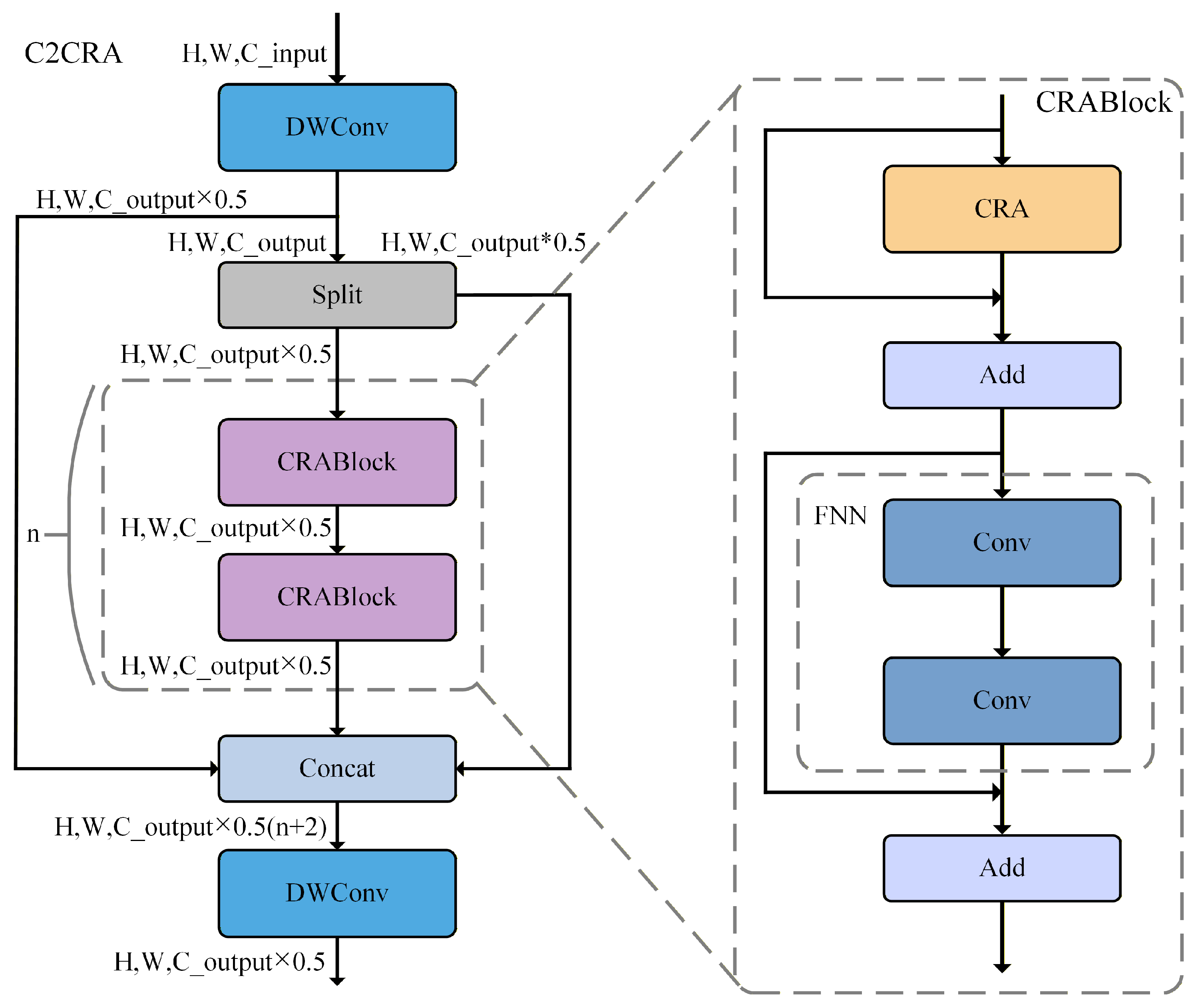

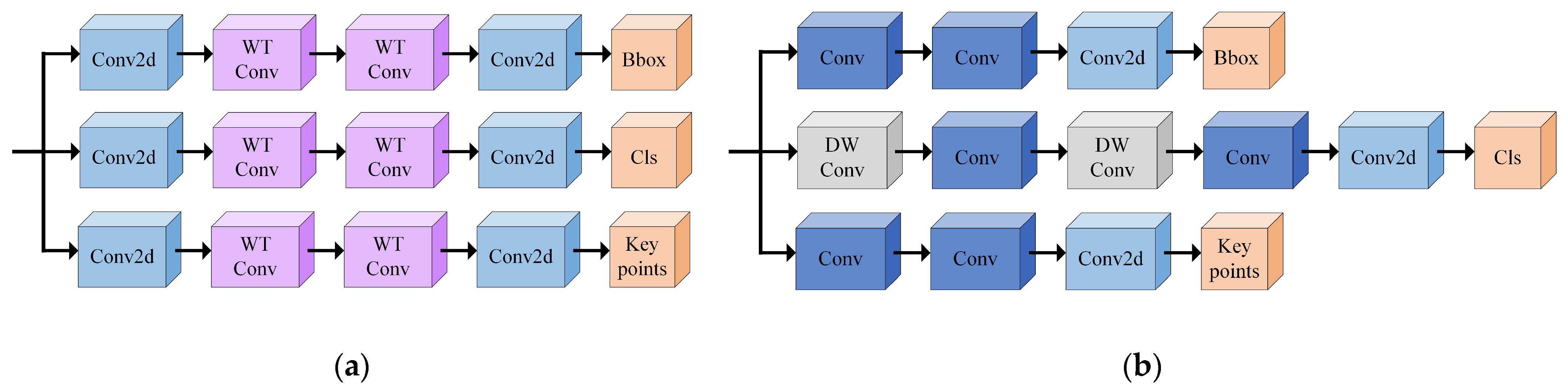

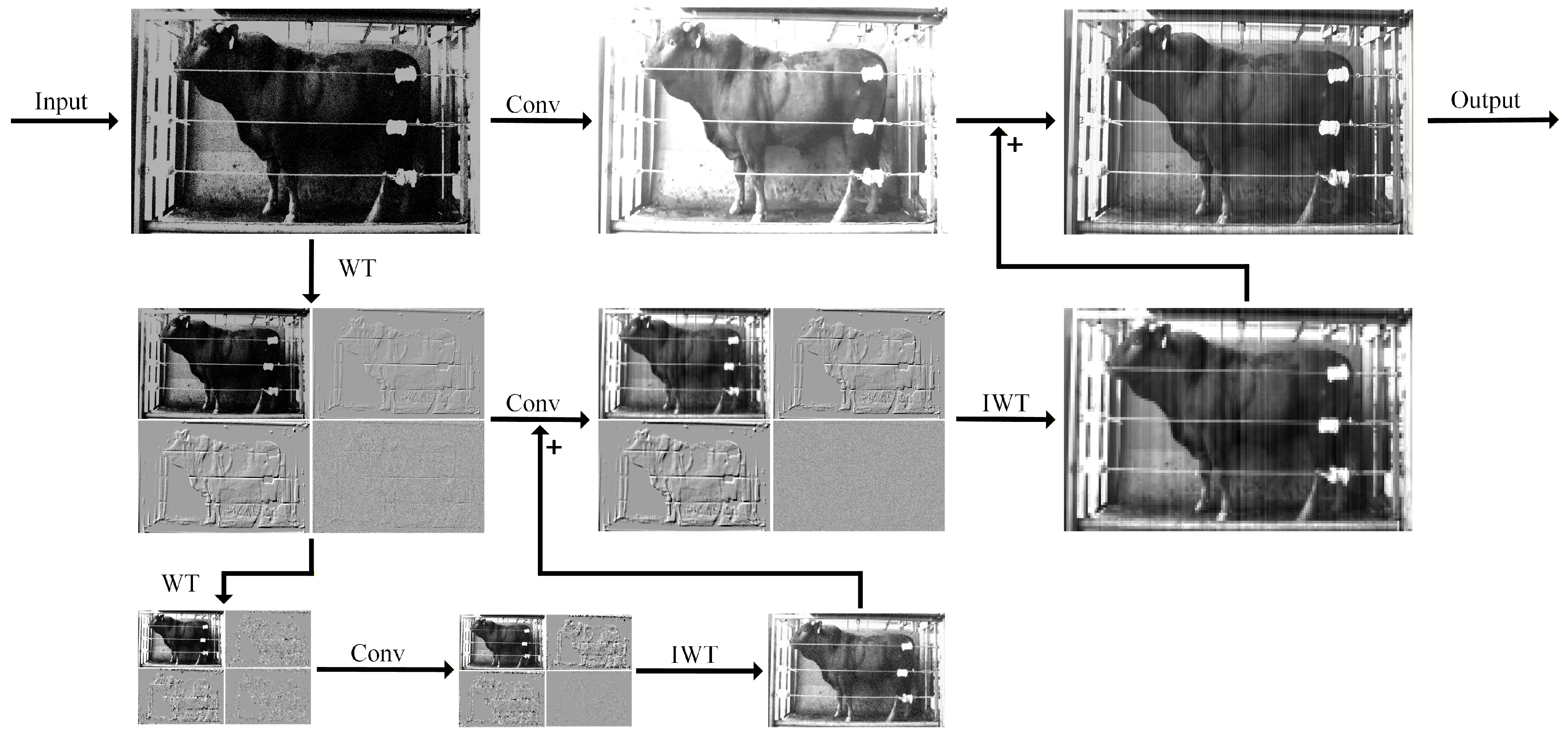

(1) A novel feature extraction module, ShuffleNet V2 Feature Extraction (S2FE), was designed to efficiently extract cattle keypoint features while reducing the number of model parameters and compute cost, thus decreasing the overall model size.

(2) A new attention mechanism, Cross-Stage Partial with Depthwise Convolution and Channel Reduction Attention (C2DRA), was introduced to enhance global information extraction capability for cattle while simultaneously reducing computational overhead.

(3) A novel keypoint detection head, Wavelet Convolution Pose Head (WTPHead), was designed to effectively expand the receptive field and further improve the lightweight nature of the model.

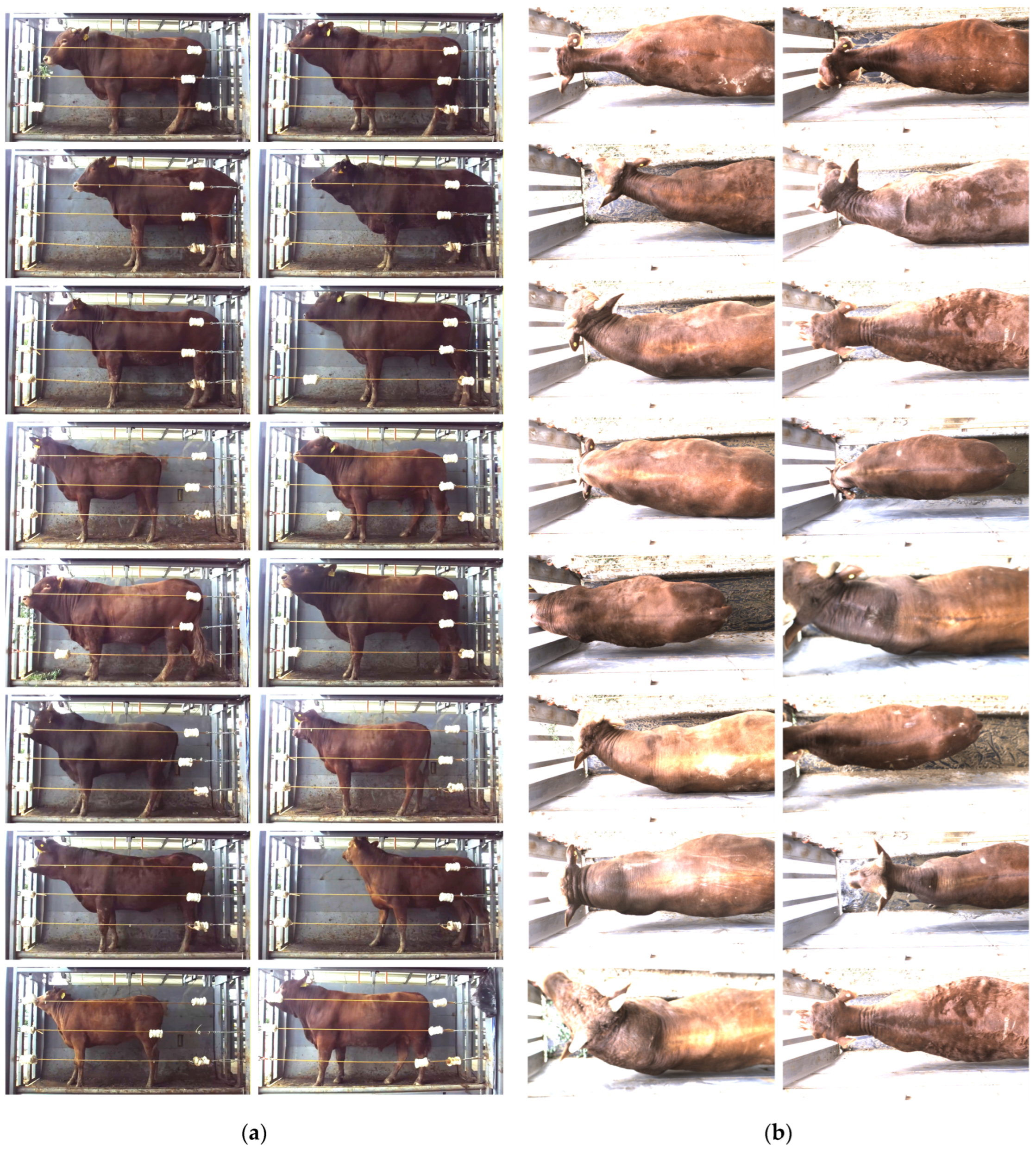

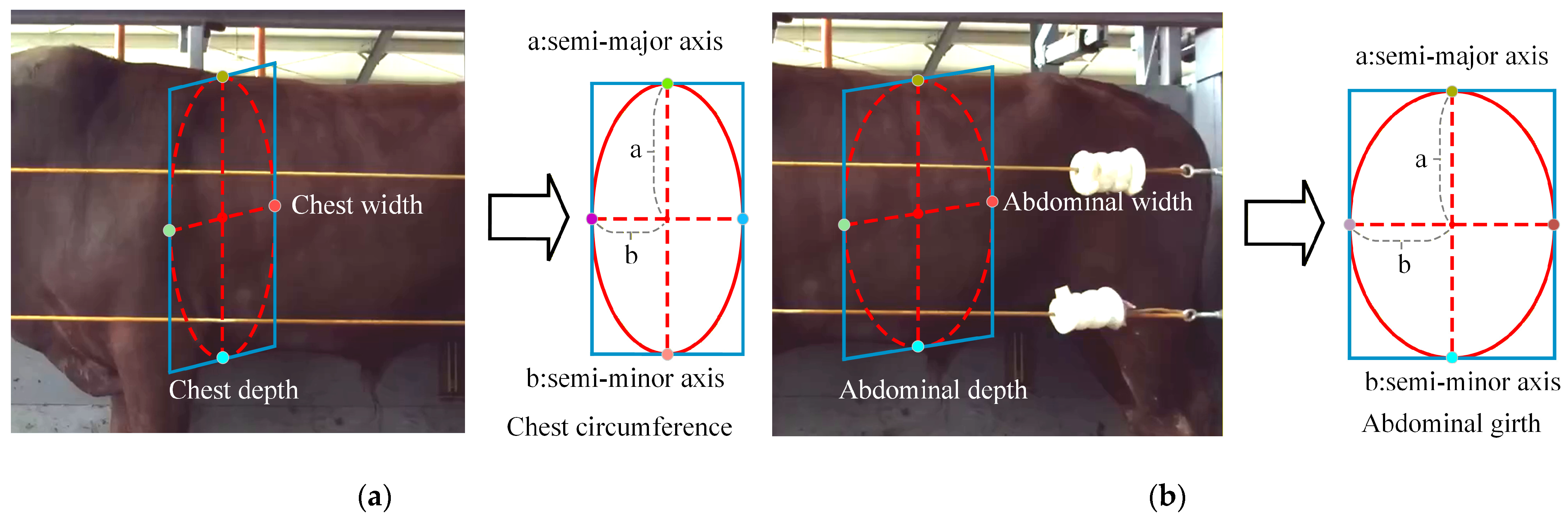

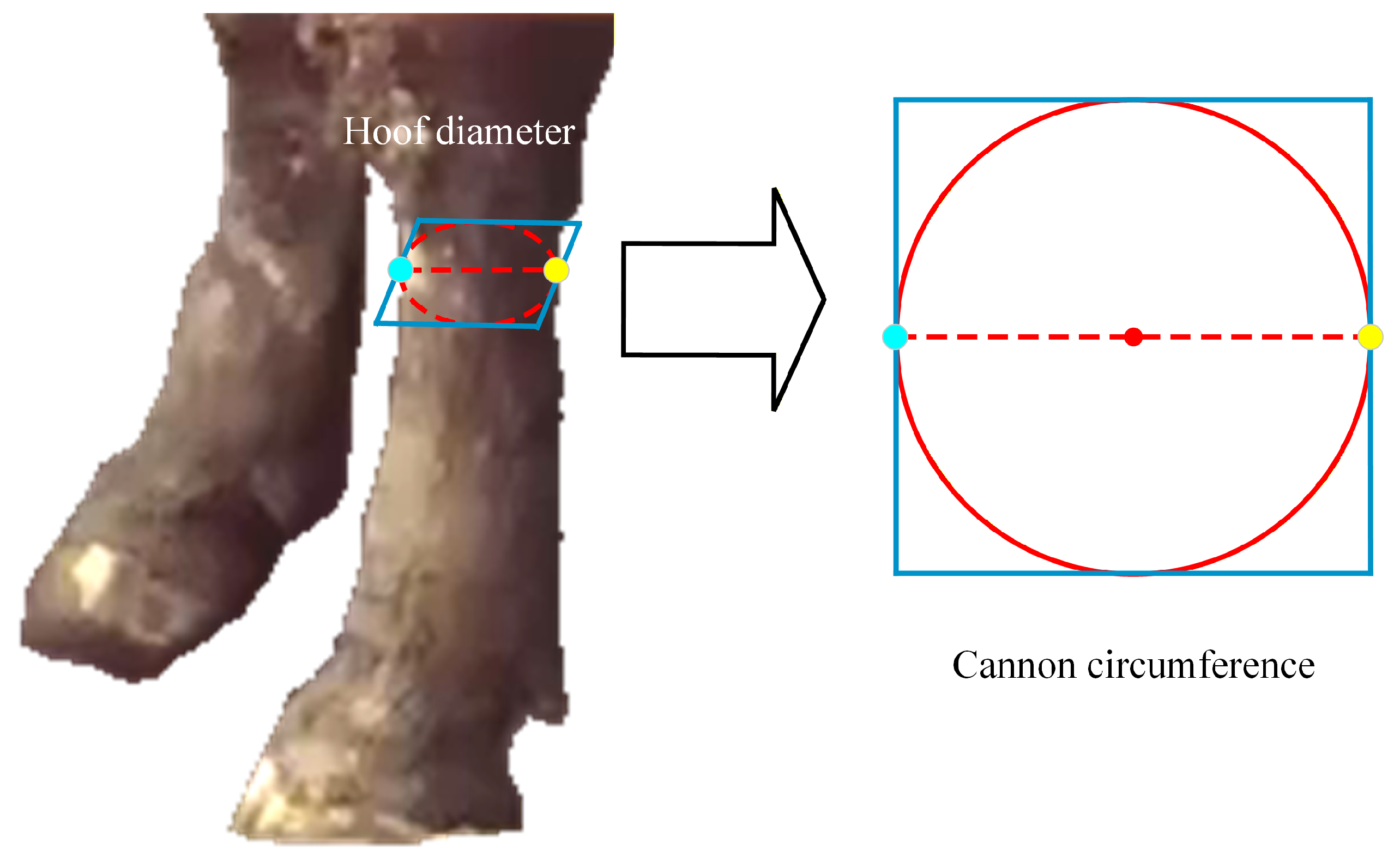

Using the constructed lateral and dorsal image datasets of cattle, the SCW-YOLO model designed in this study was used to extract 15 keypoints with symmetry and relatively fixed positions from cattle images. From these keypoints, the 11 body measurements required for breeding (body height, chest depth, abdominal depth, chest width, abdominal width, rump height, rump length, diagonal body length, cannon circumference, chest girth, and abdominal girth) were calculated using established formulas. Experiments conducted on the lateral and dorsal datasets showed that the proposed method achieved an average relative error of 4.7% in body measurement. Compared with the original YOLOv11n-pose model, the parameter count, compute cost, and model size were reduced by 58.2%, 68.8%, and 57%, respectively. These results demonstrate that the proposed approach provides an efficient and accurate solution for automated cattle body measurement.
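The two kinds of figures reported above, an average relative error and percentage reductions against a baseline, can be computed as in the following sketch. It assumes the average is an unweighted mean of per-measurement relative errors, which the text does not specify, and every number in the example is made up for illustration rather than taken from the experiments.

```python
def relative_error(predicted, reference):
    """Absolute error as a fraction of the manually measured reference value."""
    return abs(predicted - reference) / reference


def mean_relative_error(pairs):
    """Unweighted mean of relative errors over (predicted, reference) pairs."""
    return sum(relative_error(p, r) for p, r in pairs) / len(pairs)


def percent_reduction(baseline, improved):
    """Reduction of a cost metric (parameters, compute, size) relative to a baseline."""
    return (baseline - improved) / baseline * 100.0


# Illustrative (predicted, manual) measurements in centimetres; not real data.
samples = [(131.0, 125.0), (72.0, 75.0)]
mre = mean_relative_error(samples)

# Illustrative model sizes in MB; not the values behind the reported reductions.
size_cut = percent_reduction(10.0, 4.0)
```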