Abstract

The rapid growth of digital communication has intensified spam-related threats, including phishing and malware, which employ advanced evasion tactics. Traditional filtering methods struggle to keep pace, driving the need for sophisticated machine learning (ML) solutions. The effectiveness of ML models hinges on selecting high-quality input features, especially in high-dimensional datasets where irrelevant or redundant attributes impair performance and computational efficiency. Guided by principles of symmetry to achieve an optimal balance between model accuracy, complexity, and interpretability, this study proposes an Enhanced Hybrid Quantum-Inspired Firefly and Artificial Bee Colony (EHQ-FABC) algorithm for feature selection in spam detection. EHQ-FABC leverages the Firefly Algorithm’s local exploitation and the Artificial Bee Colony’s global exploration, augmented with quantum-inspired principles to maintain search space diversity and a symmetrical balance between exploration and exploitation. It eliminates redundant attributes while preserving predictive power. For interpretability, SHapley Additive exPlanations (SHAP) are employed to ensure symmetry in explanation, meaning features with equal contributions are assigned equal importance, providing a fair and consistent interpretation of the model’s decisions. Evaluated on the ISCX-URL2016 dataset, EHQ-FABC reduces features by over 76%, retaining only 17 of 72 features, while matching or outperforming filter, wrapper, embedded, and metaheuristic methods. Tested across ML classifiers such as CatBoost, XGBoost, Random Forest, Extra Trees, Decision Tree, K-Nearest Neighbors, Logistic Regression, and Multi-Layer Perceptron, EHQ-FABC achieves a peak accuracy of 99.97% with CatBoost and robust results across tree ensembles, neural, and linear models. SHAP analysis highlights features such as domain_token_count and NumberOfDotsinURL as key for spam detection, offering actionable insights for practitioners. EHQ-FABC provides a reliable, transparent, and efficient symmetry-aware solution, advancing both accuracy and explainability in spam detection.

1. Introduction

The exponential expansion of digital communication (through email, web services, and online platforms) has transformed modern life, driving both personal and professional productivity to new heights. Yet this convenience comes at a cost: the ever-increasing volume of digital correspondence has fueled a dramatic rise in spam, now recognized as a leading cybersecurity threat worldwide [1,2]. Rather than merely being a nuisance that inundates inboxes, spam has become a gateway to more malicious attacks, including phishing, malware delivery, and financial scams. Modern spam campaigns also rely on evasive techniques such as dynamic content generation, lexical obfuscation, and social engineering, which render basic static filtering and defense mechanisms ineffective [3,4].

Spam detection has historically centered on static rule-based filters and blacklisting methods. These approaches rely on pre-defined patterns or continuously updated lists of known bad actors. However, spam campaigns have evolved significantly over time: they incorporate tactics such as URL obfuscation and exploit malicious or compromised trusted infrastructure. For these reasons, among others, static rule-based and blacklisting approaches are easily bypassed. Such static mechanisms often exhibit high false negative rates and are slow to respond to new, multifaceted threats, and therefore fall short of providing the timely and robust protection users need [3].

Artificial intelligence (AI), particularly ML, has thus gained prominence as a foundation for next-generation spam detection [1,2,5,6,7]. Modern ML models, ranging from tree-based ensembles like Random Forest and XGBoost to neural networks, are adept at recognizing complex patterns in high-dimensional datasets and can continuously adapt as new data becomes available. However, the performance of these models depends critically on the relevance and quality of the input features. Large-scale URL datasets, such as the ISCX-URL2016 dataset, typically contain irrelevant or redundant attributes, which add noise to the input, increase the computational burden, and diminish performance. Thus, feature selection, the process of identifying the most useful indicators while discarding the remainder, is a crucial step for developing spam detection systems that are robust, interpretable, and efficient [8,9].

Conventional deterministic and greedy feature selection algorithms are typically computationally faster than other options; however, they often stall at local optima or scale poorly to higher dimensions. Consequently, metaheuristic approaches, inspired by natural behaviors, have become more common as feature selection methods in cybersecurity and spam detection research [5,8,10,11,12]. Among the most prominent are the Firefly Algorithm (FA), renowned for its strong exploitation ability, and the Artificial Bee Colony (ABC) algorithm, valued for its exploratory power and ease of implementation. Each method has shown considerable advantages for traversing difficult search domains, but each is limited in the performance it can reach when used on its own.

Recognizing that each metaheuristic brings distinct advantages, recent studies have explored hybrid algorithms that combine complementary mechanisms. For example, while FA performs strong local searches, adding the global exploratory search characteristic of the ABC method can lead to more effective optimization, which can rapidly identify a smaller set of informative, non-redundant attributes for spam detection [5,10]. In addition, quantum-inspired evolutionary algorithms (QIEAs) have emerged among metaheuristic methods. They borrow ideas from quantum computing and quantum mechanics, such as (i) superposition; (ii) probabilistic representations; and (iii) quantum rotation operators, to produce better search diversity and more efficient convergence [13]. Because candidate solutions are encoded using quantum bits, QIEAs maintain greater population diversity, avoid early stagnation, and ultimately improve the quality of the selected feature subsets compared with conventional methods.

While these innovations have improved optimization and classification performance, one limitation persists: modern ML-based spam detection systems lack transparency and interpretability. Many effective models function as ‘black boxes’, which means that users and analysts are unable to understand or trust the rationale behind predictions [14,15]. This lack of understanding can hinder validation of the system, may erode user trust, and could potentially violate regulations requiring algorithmic explainability. In sectors with significant ethical and legal implications, explaining which factors drive a prediction is as important as achieving high accuracy.

To address these intersecting challenges—namely, the need for optimized feature selection, computational efficiency, and transparent decision-making—this study introduces an Enhanced Hybrid Quantum-Inspired Firefly and Artificial Bee Colony (EHQ-FABC) algorithm for feature selection in spam detection. The proposed EHQ-FABC algorithm uniquely integrates quantum-inspired solution representations and adaptive quantum operators within a hybrid Firefly–Bee Colony metaheuristic. This approach exploits the Firefly algorithm’s fine-level exploitation of solutions, ABC’s global search capabilities, and quantum-inspired computation’s ability to preserve diversity [10,13]. In addition, the proposed EHQ-FABC is tightly coupled with explainable artificial intelligence (XAI) methodologies, specifically SHAP, to provide insight into individual feature contributions to its model predictions. With the addition of SHAP-based explainability, the proposed framework provides not only a highly optimized and compact feature subset but also allows for the better interpretability and transparency of spam detection decisions [14,15]. This is especially valuable for understanding variables that contribute to the discrimination of spam URLs versus legitimate URLs, such as domain token count (domain_token_count) and number of dots in URL (NumberOfDotsinURL) features.

Empirical evaluation of the proposed EHQ-FABC algorithm on the ISCX-URL2016 dataset demonstrates its ability to reduce the feature set by more than 76% while consistently maintaining or improving classification performance across several different ML models. In comparative analyses, EHQ-FABC outperforms or matches traditional filter, wrapper, embedded, and metaheuristic methods in both accuracy and parsimony. The integration of SHAP-based explainability further reveals that EHQ-FABC reliably identifies the features most relevant for discriminating between spam and non-spam URLs. Taken together, these findings offer a favorable balance of performance, efficiency, and interpretability for a modern spam detection system.

A key contribution of this study lies in the integration of a hybrid metaheuristic for feature selection with post hoc explainability. At the algorithmic level, its design establishes a balanced interplay between exploration and exploitation, ensuring diversity in the search process while maintaining convergence toward optimal feature subsets. From an interpretability perspective, SHAP is applied post hoc on held-out test data to attribute feature contributions in a fair and transparent way, helping practitioners understand which URL characteristics drive spam vs. benign predictions. Together, these contributions advance both performance and explainability in spam detection—key requirements in security-sensitive applications. The main contributions of this research are as follows:

- A Robust Hybrid Metaheuristic Algorithm: EHQ-FABC extends the current literature on feature selection in spam detection with a novel hybrid Firefly–Bee Colony optimization approach based on quantum-inspired techniques.

- Transparent and Trustworthy Detection: The integration of SHAP-based explainability within the detection framework ensures that both feature selection and classification are interpretable, fostering regulatory compliance and operational trust.

- Empirical Validation: The rigorous evaluation of EHQ-FABC on the ISCX-URL2016 benchmark data demonstrated improved feature reduction and competitive accuracy.

- Addressing Practical and Theoretical Gaps: The proposed algorithm directly confronts longstanding challenges related to feature redundancy, irrelevance, computational overhead, and interpretability, bridging critical gaps in current spam detection literature.

In summary, this study contributes to the state of the art by integrating metaheuristic optimization with explainable AI, offering a competitive and transparent framework for effective spam detection in today’s evolving digital threat landscape. The remainder of this paper is organized as follows. Section 2 reviews relevant literature, including recent ML-based spam detection techniques. Section 3 details the methodology of the proposed EHQ-FABC algorithm. Section 4 reports the experimental setup and results obtained using the ISCX-URL2016 dataset, comparing the EHQ-FABC algorithm’s feature selection performance with baseline methods. It also provides an in-depth discussion of the findings, including feature importance analysis, SHAP-based model explanations, and practical implications for spam detection. Finally, Section 5 concludes the paper, highlighting the contributions and future research directions in developing optimized and explainable spam detection systems.

2. Related Work

Spam detection research has moved from simple filters to complex, optimized, and explainable systems. Among the early works, Mamun et al. [3] investigated lexical analysis of URLs to identify malicious URLs and highlighted how attackers purposely obfuscate text patterns in URLs to bypass simple filters. This research shows why static rule-based filters become obsolete as soon as new lexical tricks appear, and hence why a more adaptive spam and phishing detection approach is needed.

The transition to ML marked a critical leap in this field. Patil and Singh [2] reviewed and implemented several supervised ML methods, including Naive Bayes, SVM, Random Forests, and neural networks, for spam detection. Tusher et al. [1] highlighted how ML models improved statistical spam detection by extracting additional text and metadata features to identify spam patterns. Importantly, Patil and Singh [2] also noted that, while ML models outperform static filters, their performance is contingent on feature extraction and on the degree of data imbalance.

As the pursuit of high detection rates came to require both accuracy and computational efficiency, metaheuristic feature selection methods were widely adopted. For example, Abualhaj et al. [8] used the Firefly Algorithm (FA) on the ISCX-URL2016 dataset to reduce its dimensionality from 72 to only 31 features. Combining the FA with a Decision Tree classifier yielded an accuracy of 99.81%. Their work stands out for not only improving performance metrics but also providing an empirical assessment of FA’s ability to balance exploration and exploitation, which is crucial in avoiding both underfitting and overfitting.

Building on this, Abualhaj et al. [5] introduced hybrid approaches that combined the FA with Harris Hawks Optimization (HHO). Their research showed that such combinations outperform single-algorithm approaches, with the Extra Trees classifier achieving 99.83% accuracy. This hybridization utilized the FA’s strong local search properties and HHO’s efficient global search, allowing for a finer balance than either could achieve independently. The hybrid algorithm also addressed one of the main criticisms of traditional feature selection, namely the problem of local minima in high-dimensional spaces. The stand-alone efficacy of HHO was further validated by Abualhaj et al. [11], who demonstrated that HHO could reduce the ISCX-URL2016 feature set to just 10 features while still enabling classifiers such as Extra Trees and XGBoost to exceed 98% accuracy. That study was also notable because it permitted a systematic comparison of classifier performance and demonstrated that a small selection of features can match or exceed the accuracy of models trained on the complete dataset, with a reduced risk of overfitting and greater practical feasibility.

The pursuit of more powerful optimization has naturally extended into the realm of quantum-inspired computing, a field that leverages principles from quantum mechanics, such as superposition, interference, and entanglement, to enhance classical algorithms [16]. These QIEAs are designed to maintain a richer diversity of potential solutions within the population, thereby mitigating premature convergence—a common pitfall in complex optimization landscapes like those found in high-dimensional feature selection. The foundational QIEA work by Han and Kim [17] demonstrated the potential of quantum-inspired algorithms to outperform their classical counterparts on combinatorial problems. More recently, Mandal and Chakraborty [11] provided a comprehensive review, highlighting how quantum-inspired methods offer significant benefits in diversity preservation during convergence through probabilistic representation and qubit rotation gates. This theoretical foundation is critical for understanding their application in spam detection. Panliang et al. [10] further demonstrated that QIEAs can deliver faster and more robust optimization when hybridized with classical metaheuristics like the FA and ABC, a finding which directly spurs our work to hybridize our feature selection approach.

Concurrently, the field of artificial intelligence has undergone a paradigm shift toward transparency and trust, catalyzed by the rise of XAI. The “black-box” nature of many high-performing models has created a significant barrier to their adoption in sensitive, real-world domains like cybersecurity [14]. The core mandate of XAI is to make the decision-making processes of AI models understandable to human users. Early surveys, such as the seminal work by Adadi and Berrada [18], systematically categorized XAI techniques into model-specific, model-agnostic, ante-hoc, and post hoc methods, framing the discourse for subsequent research. This is highly relevant to spam detection, where understanding why an email was flagged is as crucial as the flag itself. Among post hoc attribution methods, SHAP has become a de facto standard due to its solid game-theoretic axioms (local accuracy, missingness, symmetry), which ensure consistent attributions across features with equal contribution [19]. Zhang et al. [14] specifically reviewed XAI in cybersecurity and concluded that the lack of interpretability leads to lower trust and acceptance in regulatory settings. They explored various XAI methods (e.g., SHAP, LIME, rule extraction), which could be employed in conjunction with ML models to provide interpretability of individual predictions.
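The symmetry property mentioned above can be illustrated with a minimal sketch that computes exact Shapley values for a toy three-feature value function. This is not how the SHAP library computes attributions in practice (it relies on model-specific or sampling-based estimators); the feature names `a`, `b`, `c` and the value function `toy_value` are hypothetical and chosen only so that `a` and `b` contribute identically while `c` contributes nothing.

```python
from itertools import permutations


def shapley_values(players, value):
    """Exact Shapley values: average marginal contribution over all orderings."""
    totals = {p: 0.0 for p in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            totals[p] += value(with_p) - value(coalition)
            coalition = with_p
    return {p: totals[p] / len(orderings) for p in players}


def toy_value(coalition):
    """Hypothetical value function: a and b are interchangeable, c is irrelevant."""
    score = 0.0
    if "a" in coalition:
        score += 1.0
    if "b" in coalition:
        score += 1.0
    if "a" in coalition and "b" in coalition:
        score += 0.5  # interaction shared equally between a and b
    return score


phi = shapley_values(["a", "b", "c"], toy_value)
print(phi)
```

Because `a` and `b` make the same marginal contribution to every coalition, they receive the same attribution, and the attributions sum to the value of the full feature set.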

Expanding on this, Mohale and Obagbuwa [15] conducted a systematic review of XAI integration in the intrusion detection field. Their findings corroborated those of Zhang et al. [14], showing that tree-based and rule-based XAI models were favored for their clear interpretability; however, a gap remained in achieving high detection accuracy while providing explainability in real time. They emphasized the necessity of standard XAI metrics and frameworks that scale in dynamic, large-scale cyber environments, which is also a critical challenge in modern spam detection. The practical integration of XAI with high-performing models has been demonstrated by Haripriya et al. [20]. Their work stands out for implementing deep learning models for intrusion detection and systematically integrating LIME and SHAP for post hoc explanation. They aimed to show not just that XAI tools explained the feature contributions of individual predictions, but also that model transparency improved analyst trust, establishing a template for more explainable spam detection systems.

Sarker et al. [21] documented a case study showing the applicability of explainable AI to spam email detection with a comparatively simple model (logistic regression). The study paired the interpretability of linear models with SHAP to classify spam and explain the resulting predictions, providing the end user with meaningful guidance regarding model output. Its findings suggest that even simple classifiers combined with XAI can deliver explainable and reliable spam filtering outputs that are usable in operational environments or regulatory contexts. Moreover, Adnan et al. [22] applied a stacking ensemble framework over several base models to improve spam detection performance. They combined logistic regression, decision trees, naïve Bayes, and AdaBoost, and the combination achieved accuracy and F1-scores above 98%. This demonstrates the value of model diversity even for simple spam patterns. Broader validation of feature selection and explainability approaches comes from related fields. Harahsheh et al. [9] used enhanced feature selection for attack detection in IoT environments, demonstrating that optimized feature subsets reduce computational costs while maintaining or improving accuracy. Ghalechyan et al. [4] explored neural network-based detection of phishing URLs, emphasizing the importance of relevant feature engineering and continual adaptation to new threat vectors, insights that are directly applicable to email spam detection.

Despite these advancements, several key limitations and open questions persist in the literature. While metaheuristic and quantum-inspired optimization algorithms have shown clear benefits for dimensionality reduction and performance, relatively few studies have seamlessly combined these approaches with robust XAI in large-scale spam detection settings. Additionally, much of the existing work focuses on either optimization or interpretability in isolation, with only a handful of studies exploring the synergistic integration of both for practical, real-time systems [14,15].

In summary, the literature highlights several critical themes: the inadequacy of traditional spam filters, the power of metaheuristic and hybrid optimization for feature selection, the imperative for explainable and trustworthy AI, and the gap in unified frameworks that combine these strengths in real-world, high-dimensional spam detection. The present study addresses this gap by proposing a novel EHQ-FABC feature selection algorithm, tightly coupled with SHAP-based explainability. This integrated approach aims to advance the state-of-the-art in both performance and transparency, supporting more robust, efficient, and trustworthy spam detection for real-world digital communication systems.

3. The Proposed EHQ-FABC Algorithm

This section introduces the proposed EHQ-FABC algorithm, a hybrid approach that integrates quantum-inspired encoding, Firefly-inspired exploration, Artificial Bee Colony refinement, and annealed stochastic perturbations within a multi-objective fitness framework. The goal is to identify compact, non-redundant subsets of URL features while maintaining strong predictive performance. Sections 3.2–3.6 describe each component and explain how they interact to form the full hybrid.

3.1. Methodological Rationale and Overview

The EHQ-FABC algorithm is a hybrid metaheuristic for feature selection in URL-based spam detection. It integrates quantum-inspired encoding (QIEAs) [17] with Firefly-inspired global exploration [23], ABC local refinement [24], and annealed stochastic perturbations (mild simulated-annealing acceptance) to search high-dimensional subset spaces while discouraging redundancy and premature convergence. The design goals are: (i) parsimony (compact subsets), (ii) low redundancy (reduced inter-feature correlation), and (iii) stable optimization without heavy meta-tuning.

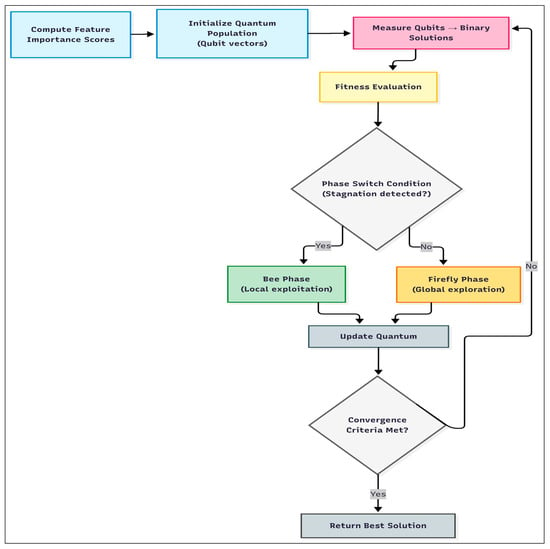

In contrast to fixed-phase schedules used in prior QIFABC variants [10], EHQ-FABC employs stagnation-aware phase switching: when the best fitness shows no improvement over a sliding window of W = 10 iterations and the improvement magnitude remains below ε = 0.001·|Fbest|, the algorithm switches between a Firefly-inspired exploration step and a Bee-inspired exploitation step. This data-driven logic ties phase transitions to the observed optimization state, maintaining a balanced exploration–exploitation interplay and reducing time spent in unproductive regions. In addition, Lévy/Cauchy-style perturbations are applied with a decay schedule (simulated-annealing acceptance early, tapering later) to encourage escape from local minima while converging steadily. A multi-objective fitness couples MCC (primary discriminator) with penalties on subset size and inter-feature correlation, steering the search toward compact, decorrelated masks that are easier to interpret downstream with SHAP. An overview of the workflow is shown in Figure 1, and Algorithm 1 provides pseudocode.

Figure 1. Workflow of the EHQ-FABC algorithm.

| Algorithm 1: The EHQ-FABC Algorithm |
| --- |
| Input: X, y, params |
| Output: z_best (set of selected features) |
| 1: Initialize quantum-inspired population Θ(0) using Equation (3) |
| 2: Evaluate initial fitness using Equation (8) |
| 3: For t = 1 to Tmax do |
| 4: Generate binary solutions Zt via quantum measurement (Equation (4)) |
| 5: Evaluate fitness F(Zt) (Equation (8)) |
| 6: Update the global best solution and stagnation counter |
| 7: For each candidate θi in Θt |
| 8: If random number < adaptive chaos factor pc(t): |
| 9: Perform Firefly-inspired update (Equation (5)) |
| 10: else: |
| 11: Perform Bee-inspired update (Equation (6)) |
| 12: Apply the Metropolis criterion based on simulated annealing schedule |
| 13: End for |
| 14: If stagnation is detected (Equation (7)): |
| 15: Trigger phase transition and introduce diversity enhancement |
| 16: Update adaptive parameters (thresholds, chaos factor, Lévy flight scale) |
| 17: If termination conditions met: Break |
| 18: End for |
| 19: Return best-selected feature subset z_best |

3.2. Quantum-Inspired Representation

Before introducing the mathematical formalism, we clarify that the representation is quantum-inspired but fully simulated on classical hardware. Each feature is encoded as a qubit parameterized by an angle, providing a compact probabilistic representation of inclusion or exclusion. This encoding enables efficient exploration of the combinatorial subset space by maintaining diversity across candidate solutions. Simulated measurement then samples binary feature masks from these probabilities using an adaptive threshold and annealed noise schedule, ensuring broad exploration early and more deterministic evaluations later. In this way, the hybrid exploits both probabilistic search and deterministic evaluation without requiring any physical quantum device. This subsection details the representation and measurement process.

3.2.1. Quantum Bit (Qubit) Encoding

The foundation of EHQ-FABC is its quantum-inspired representation of the feature selection problem. Unlike traditional binary encoding, where a feature is either selected or not, our approach utilizes qubits to represent features. Each qubit exists in a probabilistic superposition of states, allowing for a more nuanced and exploratory search of the solution space. Specifically, candidate solutions are represented as quantum bit (qubit) vectors, defined mathematically as:

where θij ∈ [0, π/2], (j = 1, …, d) and d is the total number of features. This representation enables each feature to exist in a probabilistic superposition, supporting diverse exploration of the feature space [17].
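For orientation, one form consistent with this description is sketched below. It assumes the standard angle parameterization used in quantum-inspired evolutionary algorithms [17]; the convention that the sine amplitude carries the "selected" state is an assumption made here for illustration.

```latex
\Theta_i = [\theta_{i1}, \theta_{i2}, \dots, \theta_{id}], \qquad
|\psi_{ij}\rangle = \cos\theta_{ij}\,|0\rangle + \sin\theta_{ij}\,|1\rangle, \qquad
\cos^2\theta_{ij} + \sin^2\theta_{ij} = 1 .
```

Under this convention, $\sin^2\theta_{ij}$ can be read as the probability that feature $j$ is selected in candidate $i$, and $\theta_{ij} = \pi/4$ corresponds to an inclusion probability of 0.5.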

3.2.2. Importance-Guided Initialization

To incorporate domain knowledge without hard-coding any inclusion, we initialize each feature’s qubit from a maximum-entropy prior and apply a bounded modulation by feature importance. Let fj denote the combined importance of feature j obtained by min–max normalizing a convex combination of Mutual Information and Random-Forest importance (Equation (2)).

We set each feature’s initial inclusion probability by offsetting the maximum-entropy value of 0.5 by a small, bounded amount that depends on fj, and map it to an angle by Equation (3). This keeps the probability close to 0.5 for average-importance features while softly nudging highly informative features toward inclusion and weak features toward exclusion. Crucially, no early top-k feasibility rule is enforced; initialization preserves exploration diversity and prevents leakage of prior importance into the objective. Formally, initialization incorporates domain knowledge by calculating feature importance scores fj, defined as:

where MI(xj, y) is mutual information, and RF is the Random Forest feature importance score [25,26]. Quantum angles are initially biased toward important features as follows:

This strategy significantly enhances convergence speed while ensuring initial diversity.
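The initialization described in this subsection can be sketched as follows. This is a minimal illustration, not the authors' implementation: the convex weight `alpha`, the maximum offset `delta`, the clipping rule around the mean importance, and the mapping θ = arcsin(√p) are all assumptions introduced here, and the input scores are taken as precomputed lists.

```python
import math


def min_max(values):
    """Min-max normalize to [0, 1]; a constant vector maps to 0.5."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def init_angles(mi_scores, rf_scores, alpha=0.5, delta=0.1):
    """Importance-guided qubit angles (hypothetical parameterization).

    alpha: convex weight between MI and RF importance (assumed).
    delta: largest allowed offset from the 0.5 prior (assumed).
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    combined = [alpha * m + (1.0 - alpha) * r
                for m, r in zip(mi_scores, rf_scores)]
    f = min_max(combined)
    mean_f = sum(f) / len(f)
    angles = []
    for fj in f:
        # Bounded modulation: never move further than delta from 0.5.
        offset = max(-delta, min(delta, fj - mean_f))
        p = 0.5 + offset
        # Assumed convention: inclusion probability p = sin^2(theta).
        angles.append(math.asin(math.sqrt(p)))
    return angles


angles = init_angles([0.0, 0.2, 0.4], [0.0, 0.1, 0.2])
print([round(a, 3) for a in angles])
```

With these assumed settings, a feature of average importance starts at π/4 (inclusion probability 0.5), and no feature starts with a probability outside [0.4, 0.6], so no feature is forced in or out at initialization.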

3.3. Adaptive Quantum Measurement

Binary masks z ∈ {0, 1}^d are obtained by simulated measurement of the qubit angles using an adaptive threshold and a mild annealed noise:

Specifically, the decision threshold adapts over the iterations to mark the exploration-to-exploitation transition, and the annealed noise term encourages broader exploration early while vanishing later. No mandatory top-k inclusion is enforced at measurement time. Prior knowledge enters only through the bounded initialization above; the wrapper search remains unbiased and free to discover compact, high-utility subsets.
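A minimal sketch of such a measurement step is given below. The linear threshold schedule (`tau_start` to `tau_end`), the Gaussian form and linear decay of the noise (`noise0`), and the reading of sin²θ as the inclusion probability are assumptions for illustration only.

```python
import math
import random


def measure(angles, t, t_max, rng, tau_start=0.4, tau_end=0.5, noise0=0.1):
    """Sample a binary feature mask from qubit angles at iteration t."""
    progress = t / t_max
    tau = tau_start + (tau_end - tau_start) * progress  # adaptive threshold
    sigma = noise0 * (1.0 - progress)                   # annealed noise scale
    mask = []
    for theta in angles:
        p = math.sin(theta) ** 2                        # assumed inclusion probability
        noisy = p + rng.gauss(0.0, sigma) if sigma > 0 else p
        mask.append(1 if noisy > tau else 0)
    return mask


rng = random.Random(0)
early = measure([math.pi / 4] * 8, t=0, t_max=100, rng=rng)
late = measure([0.0, math.pi / 2], t=100, t_max=100, rng=rng)
print(early, late)
```

At the final iteration the noise vanishes, so the mask is a deterministic thresholding of the inclusion probabilities; earlier iterations can flip borderline features in either direction.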

3.4. Hybrid Optimization Cycle

3.4.1. Firefly Phase (Global Exploration)

This subsection introduces the Firefly-inspired component, which serves as the global search mechanism. The brightness-driven attractiveness dynamics guide the population toward promising regions of the solution space, ensuring broad exploration before local refinements are applied. Candidate updates follow brightness-driven attraction:

with a distance-dependent attractiveness governed by the absorption coefficient γ, a Lévy flight scale with initial value λ0, and Cauchy noise to enhance escape from local optima. Parameters (γ, λ0) are tuned via Optuna and lie in empirically robust ranges (γ ≈ 1.5–2.0; λ0 ≈ 0.4–0.7).
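A Firefly-style move of this kind can be sketched as below. The sketch assumes the textbook attractiveness β0·exp(−γ·r²), Mantegna's method for the Lévy step, a linearly decaying Lévy scale, and a small Cauchy term; `beta0`, `cauchy_scale`, and the decay rule are hypothetical choices, while the defaults for `gamma` and `lam0` are taken from the ranges quoted above.

```python
import math
import random


def levy_step(rng, beta=1.5):
    """Heavy-tailed step drawn with Mantegna's algorithm."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
               ) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    return u / max(abs(v), 1e-12) ** (1 / beta)


def firefly_move(theta_i, theta_k, t, t_max, rng,
                 beta0=1.0, gamma=1.5, lam0=0.5, cauchy_scale=0.01):
    """Move candidate i toward a brighter candidate k in angle space."""
    r2 = sum((a - b) ** 2 for a, b in zip(theta_i, theta_k))
    attract = beta0 * math.exp(-gamma * r2)      # fades with squared distance
    lam = lam0 * (1.0 - t / t_max)               # assumed linear decay
    moved = []
    for a, b in zip(theta_i, theta_k):
        cauchy = cauchy_scale * math.tan(math.pi * (rng.random() - 0.5))
        cand = a + attract * (b - a) + lam * levy_step(rng) + cauchy
        moved.append(min(math.pi / 2, max(0.0, cand)))  # keep angle in [0, pi/2]
    return moved


rng = random.Random(42)
print(firefly_move([0.2, 0.4, 0.9], [0.6, 0.8, 0.7], t=10, t_max=100, rng=rng))
```

Clipping to [0, π/2] keeps every updated angle a valid qubit parameter even when a heavy-tailed step is large.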

3.4.2. Bee Phase (Local Exploitation)

The ABC refinement intensifies the search around promising candidate subsets identified by the Firefly dynamics. This phase enhances exploitation by applying localized updates that complement the broader exploration of the Firefly component. Local refinements are performed using employed and onlooker bee updates, while scout diversification prevents stagnation. Local updates follow:

where ϕ ∼ U(−1, 1) is applied element-wise and the annealed noise decreases over time to balance refinement and diversity. In this way, the bee-inspired employed/onlooker updates strengthen local neighborhoods, while scout steps reintroduce diversity when progress stalls.
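The local update can be sketched along the lines of the classic ABC neighbor search, here applied to every dimension with an additive annealed Gaussian term. The noise form, its linear decay, and the scale `noise0` are assumptions; scout re-initialization is omitted for brevity.

```python
import math
import random


def bee_move(theta_i, theta_k, t, t_max, rng, noise0=0.05):
    """Refine candidate i relative to a randomly chosen neighbor k."""
    sigma = noise0 * (1.0 - t / t_max)          # annealed noise scale (assumed)
    refined = []
    for a, b in zip(theta_i, theta_k):
        phi = rng.uniform(-1.0, 1.0)            # element-wise phi ~ U(-1, 1)
        cand = a + phi * (a - b) + rng.gauss(0.0, sigma)
        refined.append(min(math.pi / 2, max(0.0, cand)))
    return refined


rng = random.Random(7)
print(bee_move([0.3, 0.7, 1.0], [0.5, 0.6, 1.2], t=50, t_max=100, rng=rng))
```

The step size is proportional to the distance between the two candidates, so moves shrink automatically as the population concentrates around a promising subset.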

3.4.3. Stagnation Detection and Dynamic Phase Switching

To balance exploration and exploitation based on observed progress, we switch phases when no fitness improvement is detected within a sliding window of W = 10 iterations and the improvement magnitude stays below ε = 0.001·|Fbest|, as follows:

This data-driven rule ties phase changes to observed improvement rather than to a fixed schedule. Nearby settings of W and ε showed similar behavior in ablations, suggesting robustness.
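The switching rule can be sketched as a check on the history of best fitness values. The function names and the representation of the history as a list (one entry per iteration, non-decreasing) are assumptions; W = 10 and the relative tolerance 0.001 follow the text.

```python
def is_stagnant(best_history, window=10, rel_eps=1e-3):
    """True if the best fitness gained less than rel_eps*|F_best| over `window` iterations."""
    if len(best_history) <= window:
        return False                      # not enough history yet
    gain = best_history[-1] - best_history[-1 - window]
    return gain < rel_eps * abs(best_history[-1])


def next_phase(phase, best_history, window=10, rel_eps=1e-3):
    """Toggle between 'firefly' and 'bee' when stagnation is detected."""
    if is_stagnant(best_history, window, rel_eps):
        return "bee" if phase == "firefly" else "firefly"
    return phase


flat = [0.90] * 11
rising = [0.50 + 0.01 * i for i in range(11)]
print(next_phase("firefly", flat), next_phase("firefly", rising))
```

In this example the flat history triggers a switch to the Bee phase, while the steadily improving history leaves the current phase unchanged.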

3.5. Multi-Objective Fitness Evaluation

The composite fitness integrates predictive quality with penalties for subset size and inter-feature correlation, ensuring that the selected subsets are compact, interpretable, and non-redundant. This subsection outlines the multi-objective formulation used to balance these criteria and prevent overfitting. The composite fitness trades off predictive performance, parsimony, and de-correlation on the training split as follows:

where MCC is the Matthews Correlation Coefficient on training folds, |z| is the selected subset size, d is the total number of features, and the correlation term is the mean absolute pairwise correlation among the selected features. The adaptive penalty weights on subset size and correlation are bounded so that the MCC term dominates; this prevents collapse to trivially small masks. Candidate updates are accepted only if the composite fitness improves, further guarding against underfitting. This formulation favors compact, non-redundant subsets that retain high discriminative power and support clear SHAP explanations downstream.
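A sketch of a composite fitness with this structure is shown below. The additive form MCC − w_size·|z|/d − w_corr·(mean |correlation|), the fixed weights `w_size` and `w_corr`, and the treatment of an empty mask are assumptions; in the described method the weights are adaptive and the MCC comes from a classifier evaluated on training folds.

```python
import math
from itertools import combinations


def mcc(y_true, y_pred):
    """Matthews Correlation Coefficient for binary labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either column is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy) if vx > 0 and vy > 0 else 0.0


def mean_abs_corr(columns):
    """Mean absolute pairwise correlation; 0.0 for fewer than two columns."""
    pairs = list(combinations(columns, 2))
    if not pairs:
        return 0.0
    return sum(abs(pearson(a, b)) for a, b in pairs) / len(pairs)


def composite_fitness(mcc_value, mask, columns, w_size=0.05, w_corr=0.05):
    """MCC minus bounded penalties on subset size and redundancy (assumed form)."""
    selected = [col for keep, col in zip(mask, columns) if keep]
    if not selected:
        return float("-inf")              # an empty mask is never acceptable
    size_penalty = len(selected) / len(mask)
    return mcc_value - w_size * size_penalty - w_corr * mean_abs_corr(selected)


cols = [[1, 2, 3, 4], [2, 4, 6, 8], [4, 1, 3, 2]]
score = mcc([1, 1, 0, 0], [1, 1, 0, 0])
print(composite_fitness(score, [1, 1, 0], cols), composite_fitness(score, [1, 0, 0], cols))
```

For equal MCC, the mask that keeps two perfectly correlated columns scores lower than the mask that keeps only one of them, which is the behavior the penalties are meant to produce.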

3.6. Termination Criteria

The algorithm terminates when any of the following hold:

- Maximum iterations: t ≥ Tmax = 100.

- Early-stop: No improvement in Fbest for 25 consecutive iterations.

- Subset stability: the average pairwise Jaccard overlap among the top-q elites exceeds 95% for five consecutive iterations (indicating reproducible convergence).
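The three stopping rules can be sketched as follows. The function names, the representation of elites as binary masks, and the streak counter are assumptions; the thresholds (Tmax = 100, 25 iterations without improvement, 95% overlap for five iterations) follow the list above.

```python
from itertools import combinations


def jaccard(mask_a, mask_b):
    """Jaccard overlap between the feature sets encoded by two binary masks."""
    set_a = {j for j, bit in enumerate(mask_a) if bit}
    set_b = {j for j, bit in enumerate(mask_b) if bit}
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 1.0


def mean_pairwise_jaccard(elite_masks):
    """Average Jaccard overlap over all pairs of elite masks."""
    pairs = list(combinations(elite_masks, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def update_stable_streak(streak, elite_masks, threshold=0.95):
    """Extend the streak if elites agree above the threshold, else reset it."""
    return streak + 1 if mean_pairwise_jaccard(elite_masks) > threshold else 0


def should_stop(t, iters_since_improvement, stable_streak,
                t_max=100, patience=25, stable_needed=5):
    """Stop on iteration budget, early-stop patience, or subset stability."""
    return (t >= t_max
            or iters_since_improvement >= patience
            or stable_streak >= stable_needed)


elites = [[1, 0, 1, 1], [1, 0, 1, 1], [1, 0, 1, 1]]
streak = update_stable_streak(4, elites)
print(streak, should_stop(t=40, iters_since_improvement=3, stable_streak=streak))
```

Here three identical elite masks extend a four-iteration streak to five, which satisfies the subset-stability criterion and stops the search at iteration 40.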

3.7. Computational Cost and Complexity

Let N denote population size, T the iteration budget, and d the number of features. Per iteration, measurement, and local updates are ; Firefly global moves are under full pairwise attractiveness; Bee refinements are . Fitness evaluation dominates: fitting/scoring a classifier on the training portion for each candidate yields , where n is the number of training samples and s the selected features. Overall, EHQ-FABC scales as:

Because the multi-objective fitness favors compact, decorrelated subsets, s shrinks quickly (≈17/72 in our best runs), which reduces the per-candidate fitness cost over time. With Tmax = 100, N = 50, and stagnation-aware early stopping, convergence is typically reached before the iteration budget is exhausted. Hyperparameter tuning via Optuna (30 trials, CPU-only) completed within minutes on our workstation. EHQ-FABC is run offline; at deployment, inference uses a trained classifier on a small feature set, yielding low-latency predictions.

The EHQ-FABC method integrates quantum-inspired strategies, swarm intelligence, adaptive phase transitions, and a multi-objective evaluation framework to identify parsimonious, high-performing feature subsets. This design targets strong performance in high-dimensional spam detection while keeping the search computationally practical.

4. Experiments and Results

4.1. Dataset Description and Selection

This study employs the ISCX-URL2016 Spam Dataset, a benchmark developed by the Canadian Institute for Cybersecurity [3], which comprises a comprehensive and diverse collection of URLs categorized into five classes: benign, spam, phishing, malware, and defacement. For our research, we focus exclusively on the spam detection subset, containing 14,479 samples with a nearly balanced distribution—6698 spam and 7781 benign URLs. This balance ensures that classification models are not biased toward either class, supporting robust and fair evaluation of spam detection performance. ISCX-URL2016 is particularly suitable for our study because it offers transparent, human-interpretable attributes that align naturally with wrapper-based feature selection (EHQ-FABC) and post hoc SHAPs. Its rich, well-documented feature set allows us to not only optimize predictive performance but also provide meaningful, instance-level interpretability. Furthermore, ISCX-URL2016 is a widely used benchmark in URL-based spam research, publicly available, and rigorously documented, which ensures both direct comparability with prior work and reproducibility of our experimental results.

Each URL instance, as listed in Table 1, is represented by 79 attributes spanning three major categories: (i) lexical features (e.g., URL length, number of dots, number of tokens, and character composition), (ii) host-based and structural features (e.g., domain length, path depth, subdirectory tokens, and file extensions), and (iii) query/content features (e.g., query length, number of arguments, and presence of sensitive keywords). Collectively, these attributes capture both structural and semantic patterns that distinguish legitimate URLs from spam. The ISCX-URL2016 dataset is widely recognized as a standard benchmark for empirical assessment of malicious and spam URL detection systems.

Table 1.

ISCX-URL2016 features format.

4.2. Dataset Preprocessing

During data-quality screening of ISCX-URL2016, we identified seven fields with substantial missingness (>40%), concentrated primarily in benign samples: avgpathtokenlen (#6), NumberRate_Extension (#66), NumberRate_AfterPath (#67), Entropy_DirectoryName (#76), Entropy_Filename (#77), Entropy_Extension (#78), and Entropy_Afterpath (#79). To avoid introducing bias into the wrapper evaluation and to keep the protocol reproducible, we excluded these seven fields before any training or tuning, reducing the dimensionality from 79 to 72 features.

To prepare the dataset for ML, all feature values were scaled using MaxAbs normalization, which divides each feature by its maximum absolute value and therefore maps non-negative features into the range [0, 1]. This method was selected because it preserves data sparsity (zeros stay zero) and mitigates the influence of features with widely disparate value ranges, so that no feature dominates training purely because of its scale. By normalizing the features and removing the attributes with substantial missingness, we reduced the risk of bias in the learning algorithms and improved the dataset’s suitability for high-dimensional classification. These preprocessing steps follow common practice in cybersecurity research and support robust spam detection performance.
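A minimal sketch of MaxAbs scaling is shown below. Learning the scale factors from the training rows only is an assumption made here to stay consistent with a leakage-safe protocol; the text does not state where the scaler was fitted, and the function names are illustrative.

```python
def fit_max_abs(rows):
    """Per-column maximum absolute value, learned from the given rows."""
    n_cols = len(rows[0])
    scales = [max(abs(row[j]) for row in rows) for j in range(n_cols)]
    # A constant-zero column would cause division by zero; leave it unscaled.
    return [s if s != 0 else 1.0 for s in scales]


def transform_max_abs(rows, scales):
    """Divide every value by its column's scale factor."""
    return [[value / s for value, s in zip(row, scales)] for row in rows]


train = [[10.0, 0.0, 2.0], [5.0, 0.0, 4.0]]
scales = fit_max_abs(train)
scaled_train = transform_max_abs(train, scales)
scaled_new = transform_max_abs([[20.0, 3.0, 1.0]], scales)
```

Zeros remain zeros after scaling, which is why sparsity is preserved. Under this train-only fitting assumption, unseen rows can fall outside [0, 1] when they exceed the training maximum, as `scaled_new` does in its first column.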

4.3. Experimental Setup

We adopted a stratified 80/20 train–test split to preserve class balance. The proposed EHQ-FABC algorithm and hyperparameter tuning were performed exclusively on the training split, while the held-out 20% portion was reserved for a single final evaluation. This leakage-safe protocol is crucial for ensuring a robust and unbiased assessment of performance, particularly for wrapper-based feature selection methods.
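The split described above can be sketched as follows, using the class counts reported in Section 4.1. The seed, the rounding rule, and the function name are assumptions for illustration; the exact split used in the experiments may differ by a sample or two.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Return (train_idx, test_idx) with each class split in the same proportion."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Class counts from the dataset description: 6698 spam and 7781 benign URLs.
labels = ["spam"] * 6698 + ["benign"] * 7781
train_idx, test_idx = stratified_split(labels)
n_test_spam = sum(1 for i in test_idx if labels[i] == "spam")
```

Feature selection and tuning would then see only `train_idx`, while `test_idx` is touched once for the final evaluation.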

To further ensure the robustness and stability of our approach—a key concern when reducing dimensionality—we implemented a multi-faceted validation strategy. Unlike deep learning models assessed via epoch-wise loss curves, our method’s stability was demonstrated through: (i) Cross-classifier consistency, where the same EHQ-FABC-selected feature subset was evaluated across eight diverse ML models (as detailed in Section 4.3.1); (ii) Complementary metrics, including MCC (robust to class imbalance) and ROC-AUC (threshold-independent), to ensure performance gains were consistent and not an artifact of a single metric; and (iii) Controlled optimization dynamics, where the EHQ-FABC’s stagnation-aware switching and annealed perturbations promoted stable convergence, typically observed within 60–70 iterations, without erratic oscillation.

The optimization process itself targeted the MCC and incorporated penalties for subset size and inter-feature correlation to encourage compact, decorrelated feature masks that enhance generalization. On the held-out test set, we report Accuracy, Precision, Recall, F1 (macro), MCC, ROC-AUC, and Log loss. For probabilistic classifiers, a 0.5 decision threshold was applied when computing point-based metrics. Finally, all SHAPs (global and local) were computed post hoc on the held-out test split only, ensuring leakage-aware interpretability.

4.3.1. Classification Algorithms

To rigorously evaluate the proposed EHQ-FABC algorithm, we employed a comprehensive suite of ML classifiers, each selected for its unique strengths and applicability to URL classification tasks:

- CatBoost (CB): We include CB, a gradient boosting method optimized for categorical features. CB introduces techniques like ordered boosting and target-based encoding that effectively combat overfitting due to target leakage. These innovations make CB highly robust on structured data and have been shown to outperform other boosting implementations on many datasets [27].

- XGBoost (XGB): We utilize XGB, a scalable tree-boosting system known for its speed and accuracy in classification tasks. XGB implements advanced techniques (e.g., second-order optimization, cache-aware block structures) that allow it to efficiently handle large-scale data. It is widely used in data science competitions and real-world applications for its ability to achieve state-of-the-art results while maintaining computational efficiency [28].

- Random Forest (RF): We include the RF ensemble classifier, which constructs an ensemble of decision trees using random feature subsets and bagging. This approach improves accuracy by averaging multiple deep trees, reducing variance, and mitigating individual tree overfitting. Random Forests also provide useful estimates of feature importance (e.g., via permutation importance) [25].

- Extra Trees (ET): Also known as Extremely Randomized Trees, this is a variant of the random forest that introduces additional randomness in tree construction. Unlike standard RF, Extra Trees chooses split thresholds randomly for each candidate feature, rather than optimizing splits. This extra randomness tends to further reduce overfitting by decorrelating the trees, often improving generalization performance marginally over RF [29].

- Decision Tree (DT): As a baseline interpretable model, we use a single DT classifier. Decision trees greedily partition the feature space into regions, forming a tree of if-then rules. They are easy to interpret but prone to overfitting in high-dimensional spaces without pruning. Despite their tendency to memorize training data, decision trees provide a useful point of reference for model complexity [30].

- K-Nearest Neighbors (KNN): We employ KNN as a lazy learning classifier that predicts labels based on the majority class of the k most similar instances in the feature space. Renowned for its simplicity and effectiveness in applications like recommender systems and pattern recognition [31,32,33], KNN is adept at capturing local data patterns and complex decision boundaries, as it makes no strong parametric assumptions. However, KNN can suffer in high-dimensional feature spaces (the curse of dimensionality), which dilutes the notion of “nearest” neighbors, potentially degrading its performance as the feature count grows [34].

- Logistic Regression (LR): We include LR, a linear model that estimates class probabilities using the logistic sigmoid function. LR is well-suited for binary classification and produces calibrated probability outputs. It is fast and interpretable, but as a linear model, it may struggle to capture complex nonlinear relationships in high dimensions unless feature engineering or kernelization is used. Regularization (e.g., L2) is often applied to mitigate overfitting when the feature space is large [35].

- Multi-Layer Perceptron (MLP): We evaluate an MLP, i.e., a feed-forward artificial neural network with one or more hidden layers, trained with backpropagation. MLPs can model complex nonlinear relationships given sufficient hidden units, making them very flexible classifiers. In our context, the MLP can learn intricate feature interactions that linear models might miss. However, neural networks require careful tuning (architecture, regularization) to generalize well, especially with limited data [36].

These classifiers were chosen to provide a comprehensive evaluation framework, ensuring that the proposed EHQ-FABC algorithm’s performance is assessed across different learning paradigms–from robust tree-based ensembles to simple linear models and nonlinear neural networks. This diversity helps in understanding whether the feature selection benefits are classifier-agnostic or more pronounced in certain model families.

4.3.2. Hyperparameter Optimization

The effectiveness and stability of metaheuristic algorithms depend heavily on the careful tuning of their control parameters. To ensure optimal effectiveness, we utilized Optuna—a contemporary Bayesian optimization framework—as the basis for systematic hyperparameter tuning [37]. Optuna’s Tree-structured Parzen Estimator (TPE) efficiently navigates complex hyperparameter spaces, treating the optimization process as a series of sequential decisions and thereby accelerating convergence toward optimal configurations.

EHQ-FABC’s control parameters were tuned with Optuna’s TPE sampler over 30 trials, minimizing the validation error with all tuning confined to the training portion of the 80/20 stratified train–test split. The resulting configuration achieved stable convergence with validation error = 0.0. Notably, the Firefly light-absorption coefficient γ converged to 1.7706, balancing broad exploration against premature clustering, while the initial Lévy step size λ0 settled at 0.6370, enabling effective early exploration without destabilizing the search. Both lie within empirically robust ranges (γ ≈ 1.5–2.0; λ0 ≈ 0.4–0.7), indicating that EHQ-FABC’s performance is not unduly sensitive to fine adjustments. The full set of values and justifications appears in Table 2.

Table 2.

Hyperparameter settings and justifications of the EHQ-FABC algorithm.

To further ensure the reliability and fairness of our experimental comparisons, we extended hyperparameter optimization to all ML classifiers deployed in this work. Each model’s primary control parameters were subjected to the same Optuna-based optimization, allowing each to operate under conditions that maximize its predictive capability. Table 3 provides a comprehensive summary of the optimized hyperparameters for each ML model, including tree-based ensembles, neural networks, and linear models.

Table 3.

Optimized hyperparameters for ML models.

This dual-stage optimization—targeting both the feature selection process and the classification models—ensures a rigorous and fair evaluation of EHQ-FABC, while also maximizing the generalizability and robustness of our experimental findings.

4.3.3. Baseline Comparisons

To thoroughly evaluate the effectiveness of our proposed EHQ-FABC feature selection approach, we compare it against multiple state-of-the-art feature selection methods spanning several common paradigms:

- Full Feature Set: As a primary baseline, we first consider using all 72 extracted features (after preprocessing) with no feature selection. This represents the upper bound in terms of information available to the classifiers. Comparing other methods to this full-set baseline allows us to assess whether feature selection sacrifices any predictive accuracy or, ideally, improves it by removing noise/redundancy.

- Filter Methods: We employ the chi-square filter, which scores each feature by testing its statistical independence from the class label (for classification, via a χ2 contingency test). Features with higher chi-square scores depart further from independence and are therefore more strongly associated with the class. This method is fast and simple, as it evaluates each feature individually. However, because it ignores feature inter-dependencies, the chi-square filter may select redundant features or miss complementary sets. We include it to represent univariate filter techniques that rank features by class relevance [38].

- Wrapper Methods: We use Recursive Feature Elimination (RFE) as a wrapper-based feature selector. RFE iteratively trains a classifier (in our case, a tree-based or linear estimator) and removes the least important feature at each step, based on the model’s importance weights, until a desired number of features remains. By evaluating feature subsets using an actual classifier, RFE can account for feature interactions and find a subset that optimizes predictive performance. The trade-off is higher computational cost, since multiple models must be trained. RFE is known to yield strong results, especially when using robust base learners [39].

- Embedded Methods: We include the LASSO (Least Absolute Shrinkage and Selection Operator) as an embedded feature selection approach. LASSO is a linear model with an L1 penalty on weights, which drives many feature coefficients to exactly zero during training. This performs variable selection as part of the model fitting process. We applied LASSO logistic regression to the full feature set; the features with non-zero coefficients after regularization are considered selected. LASSO tends to select a compact subset of the most predictive features, balancing accuracy with sparsity [40].

- Metaheuristic Methods: In addition to the above, we compare EHQ-FABC against a suite of population-based metaheuristic feature selection algorithms. These methods treat feature selection as a global optimization problem–typically maximizing an evaluation metric (e.g., classification accuracy or a fitness function)–and use bio-inspired heuristics to search the combinatorial space of feature subsets. We implemented the following well-known metaheuristics for comparison:

  - Firefly Algorithm (FA): A swarm optimization inspired by fireflies’ flashing behavior. Candidate solutions (feature subsets) are “fireflies” that move in the search space, attracted by brighter (better) fireflies. FA is effective in the global search for multimodal optimization problems [41].

  - Artificial Bee Colony (ABC): An algorithm mimicking the foraging behavior of honey bees in a colony. It maintains populations of employed bees, onlooker bees, and scout bees that explore and exploit candidate feature sets, sharing information akin to waggle dances. ABC excels at local exploitation and has shown strong performance on numeric optimization tasks [42].

  - Particle Swarm Optimization (PSO): A socio-inspired optimization where a swarm of particles (feature subsets) “flies” through the search space, updating positions based on personal best and global best found so far. PSO balances exploration and exploitation through its velocity and inertia parameters and has been widely applied to feature selection problems for its simplicity and speed [43].

4.4. Evaluation Metrics

To comprehensively assess the performance of each classifier and feature selection method, we employed a diverse set of evaluation metrics. These metrics capture various aspects of classification performance–from basic accuracy to nuanced measures of ranking quality and probabilistic calibration–as well as a metric for the efficiency of feature selection itself [44]. Below, we define each metric and explain its significance:

- Accuracy: This is the simplest metric, defined as the proportion of correctly classified instances among all instances. Accuracy gives an overall sense of how often the classifier is correct. While easy to interpret, accuracy can be misleading in imbalanced datasets (where one class dominates); a high accuracy might simply reflect predicting the majority class. In our balanced evaluation scenarios, however, accuracy provides a useful first indicator of performance.

- Precision: Precision is the ratio of true positives to the total predicted positives, i.e., the fraction of instances predicted as positive that are actually positive. High precision means that when the model predicts an instance as the positive class (e.g., “spam” URL), it is usually correct. This metric is crucial when the cost of false positives is high. In our context, for example, low precision would mean that many benign URLs are being misclassified as spam, which could burden a security system with false alarms. Precision helps evaluate how trustworthy positive predictions are.

- Recall: Recall is the ratio of true positives to all actual positives, i.e., the fraction of positive class instances that the model successfully identifies. High recall means the model catches most of the positive instances (e.g., it identifies most of the spam URLs). This metric is vital when missing a positive instance has a large cost; in security, failing to detect a spam URL (a false negative) could be dangerous. There is often a trade-off between precision and recall; we report both to give a balanced view of classifier performance.

- F1-macro: The F1-macro score is the unweighted average of F1-scores across all classes, where each class’s F1-score is the harmonic mean of its precision and recall. It treats all classes equally, regardless of their frequency, making it ideal for imbalanced datasets. A high F1-macro score indicates strong performance across both spam and benign classes, balancing false positives and false negatives. In our evaluation, the F1-macro score summarizes the classifier’s ability to handle both classes effectively, accounting for errors without bias toward the majority class.

- Matthews Correlation Coefficient (MCC): MCC is a single summary metric that takes into account all four entries of the confusion matrix (TP, TN, FP, FN). It is essentially the correlation coefficient between the predicted and true labels, yielding a value between −1 and +1. An MCC of 1 indicates perfect predictions, 0 indicates random performance, and −1 indicates total disagreement. MCC is particularly informative for imbalanced datasets because it remains sensitive to changes in minority class predictions, unlike accuracy. In our case, MCC provides a balanced assessment of performance, taking into account both positive and negative classifications. A higher MCC means the classifier is making accurate predictions on both classes (spam and benign) in a balanced way.

- ROC-AUC: The Receiver Operating Characteristic Area Under the Curve (ROC-AUC) measures the classifier’s ability to distinguish between classes by plotting the true positive rate against the false positive rate across various thresholds. Ranging from 0 to 1, an ROC-AUC of 1 indicates perfect separation, while 0.5 suggests random guessing. It is robust for imbalanced datasets, as it focuses on ranking predictions rather than absolute thresholds. In our evaluation, ROC-AUC assesses the classifier’s capability to differentiate spam from benign instances, with a higher score indicating better discriminative power.

- Log loss: Log loss evaluates the probabilistic confidence of the classifier’s predictions. It is defined as the negative log-likelihood of the true class given the predicted probability distribution. In practice, a classifier that assigns higher probability to the correct class will have a lower log loss. Log loss increases sharply for predictions that are both confident and wrong. This metric is important because it rewards classifiers that are not only correct but also honest about their uncertainty. In our evaluation, we use log loss to measure how well-calibrated the classifier’s probability outputs are–lower log loss indicates that the model’s confidence levels align well with reality, and it heavily penalizes any overly confident misclassifications.

- Feature Reduction Ratio (FRR): This metric quantifies the extent of dimensionality reduction achieved by a feature selection method. We define FRR as FRR = (1 − Nselected/Ntotal) × 100%, where Ntotal = 72 is the original number of features and Nselected is the number of selected features. FRR thus represents the percentage of features eliminated. For example, an FRR of 50% means the feature selection halved the dimensionality (selected 36 out of 72 features). A higher FRR is generally desirable as it means more feature reduction (simpler models), but it must be balanced against the impact on predictive performance. We report FRR to compare how aggressively each method trims features. Combined with the accuracy metrics, FRR helps illustrate the trade-off each feature selector makes between model simplicity and accuracy.
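The classification metrics defined above can be computed from confusion-matrix counts and predicted probabilities as in the following sketch. It is a plain-Python illustration with illustrative function names, not the evaluation code used in the experiments; spam is treated as the positive class (label 1).

```python
from math import log, sqrt


def confusion_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, macro-F1 and MCC from binary confusion counts."""
    def ratio(num, den):
        return 0.0 if den == 0 else num / den

    def f1(p, r):
        return 0.0 if p + r == 0 else 2 * p * r / (p + r)

    precision, recall = ratio(tp, tp + fp), ratio(tp, tp + fn)
    # Same quantities with the benign class treated as "positive".
    neg_precision, neg_recall = ratio(tn, tn + fn), ratio(tn, tn + fp)
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1_macro": (f1(precision, recall) + f1(neg_precision, neg_recall)) / 2,
        "mcc": ratio(tp * tn - fp * fn, denom),
    }


def log_loss(y_true, p_spam, eps=1e-15):
    """Mean negative log-likelihood of the true labels (1 = spam, 0 = benign)."""
    total = 0.0
    for y, p in zip(y_true, p_spam):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total -= log(p) if y == 1 else log(1 - p)
    return total / len(y_true)


def roc_auc(y_true, scores):
    """Probability that a random spam URL outscores a random benign one (ties count 0.5)."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

The pairwise form of `roc_auc` is quadratic in the number of samples and is meant only to make the ranking interpretation explicit. `log_loss` shows why a confident wrong prediction is penalized sharply: the penalty grows without bound as the probability assigned to the true class approaches zero.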

By examining all these metrics, we obtain a comprehensive picture of performance. Accuracy and F1-macro summarize overall effectiveness across both classes; Precision and Recall quantify the trade-off between false alarms and missed detections; MCC captures balanced correlation across all confusion-matrix terms; ROC-AUC assesses threshold-independent discrimination; log loss gauges prediction confidence; and FRR indicates the efficiency of feature selection. In the following sections, we will present these metrics for each classifier under each feature selection scenario, thereby highlighting how EHQ-FABC compares to other methods not just in accuracy, but in robustness and efficiency as well.

4.5. Experimental Results

4.5.1. Performance Comparison Analysis

This section examines the comparative classification performance of the ML models when combined with a range of feature selection methods, including the proposed EHQ-FABC algorithm, other metaheuristic approaches, and traditional filter, wrapper, and embedded methods. The evaluation uses the metrics defined in Section 4.4: Accuracy, Precision, Recall, F1 (macro), MCC, ROC-AUC, and Log loss. The objective is to determine which feature selection strategy yields consistently strong and robust performance across the classifiers, providing a quantitative foundation for our comparative assessment. The full results are presented in Table 4. As a threshold-independent indicator of ranking quality, ROC-AUC reaches ceiling performance (≈1.000) for most tree-ensemble configurations and improves notably for weaker baselines (e.g., DT, LR) under EHQ-FABC, indicating that the observed gains are not artifacts of a specific decision threshold.

Table 4.

Performance metrics for all classifiers across various feature selection methods.

Across the entire spectrum of classifiers investigated, the proposed EHQ-FABC feature selection method consistently demonstrates highly competitive performance. This is particularly salient when considering Accuracy and MCC, metrics of paramount importance in the evaluation of spam detection systems, where class imbalance can often skew traditional performance indicators. A discernible trend emerges: while the ‘Full Feature Set’ frequently establishes a strong baseline, judicious and effective feature selection not only preserves but often augments predictive accuracy, concurrently achieving substantial dimensionality reduction. Consistently high ROC-AUC values (often 1.000) further indicate excellent class separability after feature reduction, reinforcing robustness alongside compactness.

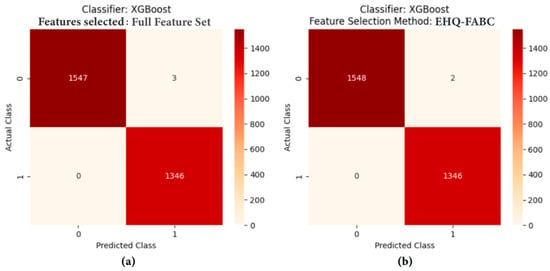

For the XGB classifier, the EHQ-FABC delivered an Accuracy, Precision, Recall, F1, and MCC of 0.9993. This performance is on par with the ‘Full Feature Set’ and PSO, signifying its robust and equivalent efficacy. The Log loss for EHQ-FABC with XGB was 0.0030, which, while slightly higher than RFE (0.0018) and LASSO (0.0021), still denotes excellent model calibration and predictive confidence. The confusion matrix for XGB with EHQ-FABC (Figure 2) shows 2 false positives and 0 false negatives, a slight improvement over the full feature set (Figure 2), which had 3 false positives and 0 false negatives, further validating the benefits of EHQ-FABC. Importantly, ROC-AUC remains at 1.000 across XGB configurations—including EHQ-FABC—demonstrating perfect ranking separability regardless of threshold selection.

Figure 2.

Confusion matrices for XGB: (a) Full feature set vs. (b) EHQ-FABC.

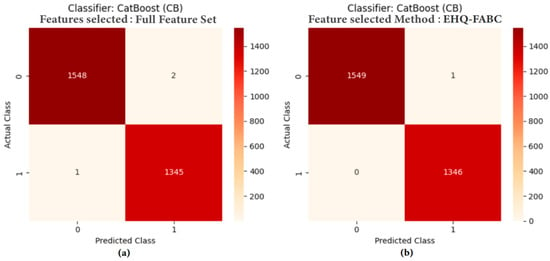

For the CB classifier, the EHQ-FABC method achieved the best overall performance, registering an Accuracy, Precision, Recall, F1, and MCC of 0.9997, coupled with the lowest Log loss of 0.0026. This marginally surpasses even the ‘Full Feature Set’ (0.9990 Accuracy), indicating that EHQ-FABC identifies a highly discriminative subset of features for the CB model. Examination of the confusion matrix for CB with EHQ-FABC (Figure 3) reveals an exceptionally low misclassification rate, with only 1 false positive and 0 false negatives, indicating near-perfect separation of spam and legitimate URLs. In contrast, the full feature set for CB (Figure 3) shows 2 false positives and 1 false negative, a small but consistent improvement in favor of EHQ-FABC. ROC-AUC is 1.000 for CB across all settings; paired with the best log loss under EHQ-FABC, this indicates both perfect discrimination and superior probability calibration.

Figure 3.

Confusion matrices for CB: (a) Full feature set vs. (b) EHQ-FABC.
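As a quick arithmetic check, the reported CB accuracies can be related to the error counts in the confusion matrices. The sketch below assumes a hold-out set of 2896 URLs (20% of 14,479, rounded); the exact test-set size is not stated in the text, and the helper name is illustrative.

```python
N_TEST = 2896  # assumed hold-out size: 0.2 * 14,479, rounded


def accuracy_from_errors(false_pos, false_neg, n=N_TEST):
    """Accuracy implied by a given number of misclassified URLs."""
    return (n - false_pos - false_neg) / n


cb_selected = accuracy_from_errors(1, 0)  # EHQ-FABC: 1 FP, 0 FN
cb_full = accuracy_from_errors(2, 1)      # full feature set: 2 FP, 1 FN
print(f"{cb_selected:.4f} {cb_full:.4f}")  # prints "0.9997 0.9990"
```

Under this assumption, the gap between 0.9997 and 0.9990 corresponds to two URLs, which is useful context when interpreting differences in the fourth decimal place.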

For RF, the EHQ-FABC method achieved an Accuracy of 0.9983, notably outperforming the ‘Full Feature Set’ (0.9976). This observation strongly suggests that for ensemble methods such as RF, the application of a well-designed feature selection algorithm can lead to the development of more robust and generalizable models. The Log loss for EHQ-FABC with RF stood at 0.0073, which is demonstrably favorable when compared to other feature selection methods. ROC-AUC values for RF remain at 1.000 across all configurations, indicating that dimensionality reduction via EHQ-FABC preserves maximal ranking performance.

In the context of ET, the EHQ-FABC once again exhibited formidable performance, achieving an Accuracy of 0.9990. This result not only matches that of RFE but also surpasses the ‘Full Feature Set’ (0.9976). Such an outcome unequivocally indicates that the features meticulously selected by EHQ-FABC are profoundly relevant and contribute significantly to the robust generalization capabilities inherent in tree-based ensembles. Consistent with RF and XGB, ET achieves ROC-AUC = 1.000 for all selectors, including EHQ-FABC, confirming that discrimination remains at ceiling after reduction.

For the DT classifier, which is inherently more susceptible to the influence of irrelevant features, the EHQ-FABC achieved the highest Accuracy of 0.9965. This performance exceeds both the ‘Full Feature Set’ (0.9941) and all other feature selection methods evaluated. This is a notable finding, as it underscores EHQ-FABC’s ability to refine the feature space even for simpler models, leading to a marked improvement in their predictive performance. Because single trees are more brittle, DT exhibits slightly lower ROC-AUC than ensembles; however, EHQ-FABC still improves DT’s ROC-AUC (0.9978 vs. 0.9969 with the full set), indicating better ranking despite the model’s simplicity.

With KNN, the EHQ-FABC achieved an Accuracy of 0.9990, representing a substantial improvement over the ‘Full Feature Set’ (0.9883) and proving highly competitive with Chi-Square (0.9986). The remarkably low Log loss of 0.0016 for EHQ-FABC when integrated with KNN is highly indicative of exceptionally confident and accurate predictions. KNN benefits markedly in ranking terms as well: ROC-AUC rises from 0.9973 (full set) to 1.000 with EHQ-FABC, mirroring the accuracy/MCC gains and the sharp drop in Log Loss. This suggests that the features meticulously selected by EHQ-FABC significantly enhance the separability of distinct classes within the feature space, leading to superior classification.

For LR, the EHQ-FABC achieved an Accuracy of 0.9900, marking a substantial improvement over the ‘Full Feature Set’ (0.8802) and demonstrating comparability with ABC (0.9900). While LASSO, a feature selection method renowned for its regularization properties, achieved a marginally higher accuracy of 0.9952, EHQ-FABC nonetheless exerts a profound and positive impact on the overall performance of LR. Crucially, ROC-AUC improves dramatically from 0.9516 (Full) to 0.9992 (EHQ-FABC), showing that the reduced subset substantially enhances LR’s discriminative capacity.

Finally, for MLP, the EHQ-FABC achieved an Accuracy of 0.9979, a performance that aligns with both LASSO and RFE, and significantly surpasses that of the ‘Full Feature Set’ (0.9931). This outcome strongly suggests that the features meticulously selected by EHQ-FABC are highly effective for neural network models, thereby contributing substantially to their robust and reliable performance. MLP’s ROC-AUC increases from 0.9997 (Full) to 1.000 (EHQ-FABC), indicating perfect ranking after feature reduction.

In summary, the EHQ-FABC feature selection method matches or surpasses the ‘Full Feature Set’ for every classifier and matches or exceeds the other feature selection techniques in most cases, the exception noted above being LASSO with LR. Its most pronounced improvements are observed with CB, DT, and KNN, where it achieves the highest accuracy. This analysis demonstrates the robustness and adaptability of EHQ-FABC in identifying compact feature subsets that enhance the predictive power of diverse classification algorithms for spam detection. At the same time, near-ceiling ROC-AUC under EHQ-FABC across ensembles (and clear AUC gains for DT and LR) indicates that improvements persist across thresholds and are accompanied by strong probabilistic calibration (low log loss), reinforcing the method’s reliability in threshold-agnostic settings.

4.5.2. Feature Reduction Analysis

Feature reduction is crucial for creating models that are both interpretable and computationally efficient, particularly in high-dimensional spam detection tasks. Our comparative analysis, as detailed in Table 3, shows that all evaluated feature selection methods achieved significant dimensionality reduction, but with varying impacts on accuracy.

Traditional approaches like Chi-Square, LASSO, and RFE reduced the feature set by 48–62%, typically retaining 27–37 features. While these methods preserved accuracy to a degree, they did not consistently outperform the full feature set or the most advanced metaheuristics. Swarm intelligence-based methods such as FA and ABC achieved even greater reduction, but sometimes at the expense of slight drops in accuracy for certain classifiers. Notably, PSO attained the most aggressive reduction (retaining only 14 features) while still delivering near-optimal accuracy, especially with CB and XGB.

The proposed EHQ-FABC method distinguished itself by achieving a high feature reduction rate (FRR) of 76.39% (retaining just 17 of the 72 features) while matching or surpassing the predictive performance of the other methods, including the full feature set, across the classifiers, the one exception noted earlier being LR, where LASSO is marginally more accurate. This demonstrates that EHQ-FABC is effective at identifying a compact, highly informative feature subset, leading to models that are both efficient and robust. The ability to deliver top-tier accuracy with a small feature set is particularly valuable for real-world spam detection, where speed and interpretability are as critical as accuracy.
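The reduction figures quoted in this subsection can be checked with a short calculation. The sketch below assumes that FRR is the percentage of the original features that are removed, which is consistent with the reported 76.39% for 17 of 72 features; the function name is illustrative.

```python
def feature_reduction_rate(n_total, n_selected):
    """Percentage of the original features removed by a selection method."""
    if n_total <= 0 or not 0 <= n_selected <= n_total:
        raise ValueError("expected 0 <= n_selected <= n_total and n_total > 0")
    return 100.0 * (n_total - n_selected) / n_total

N_TOTAL = 72  # size of the full feature set

ehq_fabc_frr = feature_reduction_rate(N_TOTAL, 17)
print(f"EHQ-FABC (17 features): {ehq_fabc_frr:.2f}%")  # 76.39%

# The 27 to 37 features retained by the traditional methods correspond to
# the 48 to 62% reduction range quoted above.
for kept in (27, 37):
    print(f"{kept} features kept: {feature_reduction_rate(N_TOTAL, kept):.2f}%")
```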

In summary, EHQ-FABC offers an outstanding balance, achieving substantial reduction in model complexity without sacrificing, and often improving, classification accuracy—a combination highly desirable for practical cybersecurity applications.

4.5.3. SHAP-Based Explainability Analysis

Understanding the multifaceted decision-making processes of complex ML models is more than an academic exercise; it is essential in sensitive domains and high-stakes applications such as spam detection. SHAP values provide a powerful and intuitive means of interpreting model predictions by quantifying the contribution of each feature to the final outcome. In this study, we analyze SHAP summary and bar plots for the XGB and CB classifiers trained on the feature subset selected by the proposed EHQ-FABC method. The objective is to highlight the most influential variables and thereby gain insight into the mechanisms that differentiate spam from benign URLs. All SHAP attributions (global and local) are computed post hoc on the held-out evaluation split to ensure leakage-aware interpretability.

It is important to note that SHAP serves solely as a post hoc explanatory tool. The EHQ-FABC framework optimizes feature subsets based on classification performance, subset size, and redundancy, while SHAP plays no role in the selection process. Instead, SHAP is applied after feature selection to explain the predictions of the trained models, attributing importance to the features retained by EHQ-FABC. This clear separation ensures that interpretability is preserved without influencing the optimization process.
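To make the attribution principle concrete, the following sketch computes exact Shapley values by enumerating all feature coalitions for a three-feature toy scoring function. It is a brute-force illustration under stated assumptions, not the tree-specific algorithm used by SHAP tooling: `toy_score` is a hypothetical stand-in for the expected model output given a subset of known features, and all numbers are invented.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values via enumeration of all coalitions.
    Cost grows exponentially, so this is only feasible for small n_features."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size in the Shapley average.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi

def toy_score(coalition):
    """Hypothetical value function: features 0 and 1 play identical roles
    (including a shared interaction); feature 2 has a small additive effect."""
    score = 0.0
    if 0 in coalition:
        score += 0.3
    if 1 in coalition:
        score += 0.3
    if 0 in coalition and 1 in coalition:
        score += 0.2
    if 2 in coalition:
        score += 0.1
    return score

phi = shapley_values(toy_score, 3)
print([round(v, 4) for v in phi])
```

Two properties are visible in the result: features 0 and 1, which contribute identically, receive identical attributions (symmetry), and the attributions sum to the difference between the score with all features and the score with none (additivity).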

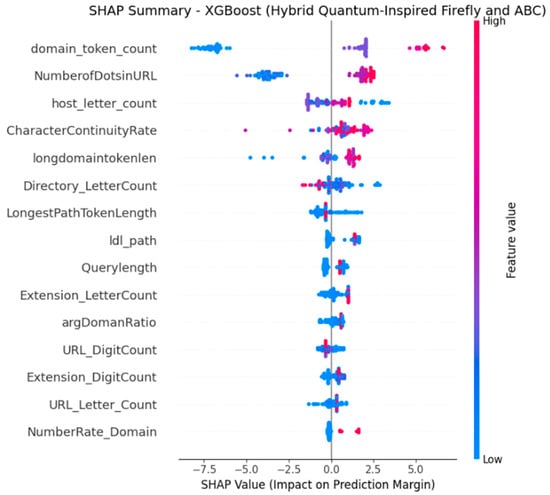

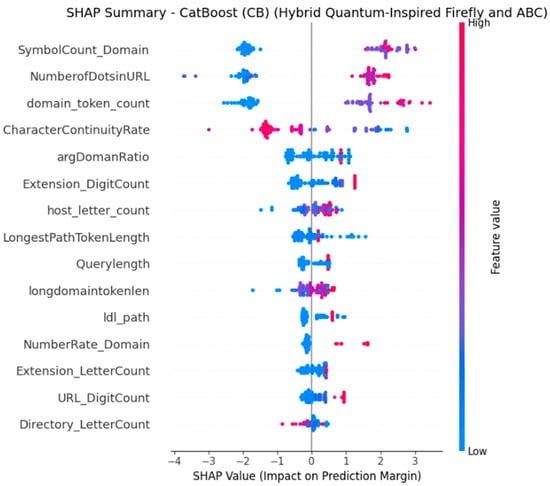

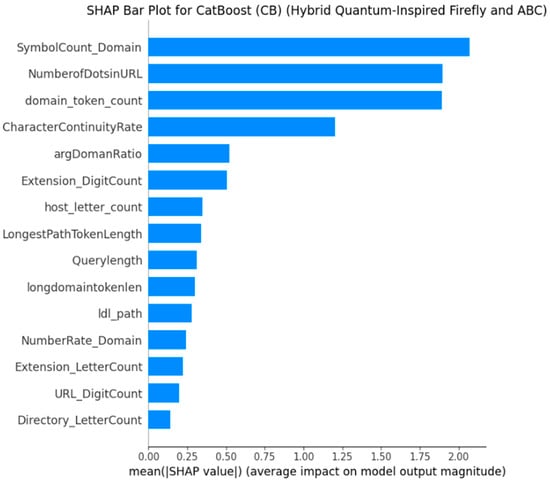

To aid interpretation, Figures 4–7 are discussed directly in the text. For example, in Figure 4 (XGB summary), red points with high domain_token_count consistently push predictions toward the spam class, while blue points with low counts push toward benign. Similarly, Figure 6 (CB summary) shows SymbolCount_Domain as dominant: a high count of special characters in the domain increases the predicted spam probability. These examples illustrate how the SHAP visualizations correspond to intuitive URL-level cues, strengthening trust in the model’s reasoning.

Figure 4.

SHAP Summary Plot for XGB (EHQ-FABC Features). Red = higher feature values; points to the right increase spam probability.

Figure 5.

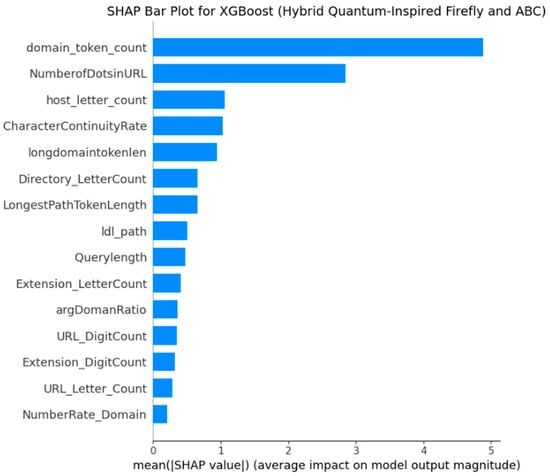

Mean |SHAP| values for XGB (EHQ-FABC features). The top features (domain_token_count, NumberOfDotsinURL) dominate the model’s decisions, aligning with known URL risk indicators.

Figure 6.

SHAP summary plot for CB (EHQ-FABC features). SymbolCount_Domain is the top contributor; high symbol counts (red) typically yield positive SHAP toward spam.

Figure 7.

Mean |SHAP| values for CB (EHQ-FABC features). SymbolCount_Domain leads, followed by NumberOfDotsinURL and domain_token_count. The ranking corroborates the XGB findings while reflecting CB’s emphasis on symbol density.

- SHAP Analysis for XGB with EHQ-FABC Features

Figure 4 (SHAP summary plot) and Figure 5 (SHAP bar plot) together show the importance of the EHQ-FABC features and the direction of their effect on the XGB model’s predictions. The summary plot displays the distribution of SHAP values for each feature. Each dot corresponds to a single instance from the dataset and shows that feature’s contribution to the prediction for that instance. The color of the dot encodes the feature value: red indicates a high value and blue a low value. The x-axis quantifies the effect on the model’s output: positive SHAP values push the prediction toward the positive class (i.e., spam), and negative values push it toward the negative class (i.e., legitimate). Across instances in Figure 4, domain_token_count dominates: high values (red points) typically yield positive SHAP values (toward spam), while low values (blue) lean benign. NumberOfDotsinURL shows a similar pattern. Secondary features, including host_letter_count, CharacterContinuityRate, and longdomaintokenlen, modulate predictions in edge cases (e.g., unusually short/long hosts or repeated character patterns).

Illustrative URL examples (local SHAP; Figure 4): (i) A URL with domain_token_count = 12 and NumberOfDotsinURL = 5 received strong positive SHAP contributions from both features, pushing the prediction toward spam; the long alphanumeric path further reinforced this outcome. (ii) A short human-readable domain (e.g., “university.edu”) with domain_token_count ≤ 3 and NumberOfDotsinURL = 1 accrued negative SHAP contributions on these features, favoring the benign class. These cases illustrate how heavy tokenization and dot segmentation, both common obfuscation markers, drive the XGB model’s decisions under the compact subset selected by EHQ-FABC.

The plots show that domain_token_count is the most impactful feature in the model. Instances with high domain_token_count (red dots) have strongly positive SHAP values, indicating that a larger number of tokens in the domain component of a URL raises the probability of a spam classification. Instances with low domain_token_count (blue dots) generally have negative SHAP values, which push the model toward the legitimate class. This suggests that overly complex or unusually long domain names, which are frequently associated with phishing and other malicious activity, are strong indicators of spam.

The second most impactful feature is NumberOfDotsinURL. As with domain_token_count, a higher number of dots in the URL (red dots) generally corresponds to a positive SHAP value and thus a higher likelihood of a spam prediction. This is intuitive: legitimate URLs tend to contain few dots, whereas malicious URLs often use an overabundance of dot-separated segments to deceive users.

Other notable features for the XGB model include host_letter_count, CharacterContinuityRate, and longdomaintokenlen. These features, which capture lexical and structural properties of URLs, also contribute to the model’s final decisions. For example, host_letter_count shows a more complex relationship in which both extremely high and extremely low counts can affect the prediction, suggesting that an anomalous host length acts as a suspicious signal. Finally, CharacterContinuityRate indicates that certain patterns of character repetition or continuity within the URL help the model discriminate spam from legitimate URLs.
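For readers unfamiliar with lexical URL features, the sketch below approximates the two leading XGB features from a raw URL string. The definitions are assumptions made for illustration: tokens are taken to be maximal alphanumeric runs in the host, and dots are counted over the whole URL. The dataset’s own feature extractor may tokenize differently, so the values need not match the dataset columns exactly. The helper name and the second example URL are hypothetical.

```python
import re
from urllib.parse import urlsplit

def lexical_features(url):
    """Approximate two lexical URL features (assumed definitions, see above)."""
    # urlsplit only recognises the host when a scheme is present.
    parsed = urlsplit(url if "://" in url else "http://" + url)
    host = parsed.hostname or ""
    # Assumption: a token is a maximal run of letters or digits in the host.
    tokens = [t for t in re.split(r"[^A-Za-z0-9]+", host) if t]
    return {
        "domain_token_count": len(tokens),
        "NumberOfDotsinURL": url.count("."),
    }

benign = lexical_features("university.edu")
suspect = lexical_features(
    "http://secure-login.account-update.example.com/index.php"
)
print(benign)
print(suspect)
```

Under these assumed definitions, the short readable domain yields low values on both features, while the hyphenated multi-level host yields higher ones, mirroring the contrast between the two illustrative cases discussed for Figure 4.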

- SHAP Analysis for CB with EHQ-FABC Features

Figure 6 (SHAP summary plot) and Figure 7 (SHAP bar plot) show feature importance and direction of influence for the CB model trained on the EHQ-FABC features. Several important features are shared with the XGB model, but CB ranks them somewhat differently. These differences show that two tree-based models trained on the same feature subset can distribute importance differently across features. In Figure 6, SymbolCount_Domain is the top contributor; high symbol density typically increases spam probability. NumberOfDotsinURL and domain_token_count remain influential, corroborating XGB’s ranking. CharacterContinuityRate also contributes positively when continuity/redundancy patterns are present. argDomainRatio and Extension_DigitCount provide additional, model-specific signals.

Illustrative URL examples (local SHAP; Figure 6): On the held-out split, a URL exhibiting SymbolCount_Domain ≥ 6 (e.g., “xn--ex@mpl3-—.info”) and a long alphanumeric path showed positive SHAP contributions on SymbolCount_Domain and CharacterContinuityRate, tipping the prediction toward spam. Conversely, a well-structured domain with few symbols and a short path accrued negative SHAP from these same features, favoring benign. These examples exemplify how CB leverages symbol density and continuity patterns—within the reduced feature set—to separate obfuscated from human-readable domains.

The CB model identifies SymbolCount_Domain as its most influential feature. The higher the count of symbols in the domain string (red dots), the more strongly the model leans toward spam. This indicates that the model treats unusual, hard-to-read, or excessive special characters in the domain name as a red flag. This is consistent with known spammer practices, such as the use of domain generation algorithms (DGAs) to create deceptive URLs.

NumberOfDotsinURL remains highly important in CB, which shows that this feature weighs heavily in both tree-based models. domain_token_count also remains influential in CB’s decisions, although less so than SymbolCount_Domain.

CharacterContinuityRate also has significant influence: high values (red dots) typically lead to positive SHAP values, indicating that certain character-continuity patterns are strong flags for spam. Finally, argDomainRatio and Extension_DigitCount show smaller but meaningful influence. Their contributions suggest that an argument portion that is long relative to the domain, and the presence of digits in the extension, can both act as spam signals for the CB classifier.

- Insights and Comparison with Traditional Importance Rankings

The SHAP analysis provides granular insight into the mechanisms underlying URL-based spam detection. Features describing the structural and lexical characteristics of the domain and URL, such as domain_token_count, NumberOfDotsinURL, SymbolCount_Domain, and CharacterContinuityRate, rank among the most important in both the XGB and CB models. This consistency supports the view that these features are central to classifying URLs as malicious or legitimate.
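The global rankings shown in the bar plots (Figures 5 and 7) are obtained by averaging the absolute SHAP value of each feature over instances. A minimal sketch of that aggregation follows; the small SHAP matrix is invented for illustration and does not reproduce values from this study, and the function name is an assumption.

```python
def mean_abs_shap(shap_rows, feature_names):
    """Rank features by mean |SHAP| over instances (rows = instances)."""
    n = len(shap_rows)
    totals = [0.0] * len(feature_names)
    for row in shap_rows:
        for j, value in enumerate(row):
            totals[j] += abs(value)
    ranking = [(name, total / n) for name, total in zip(feature_names, totals)]
    return sorted(ranking, key=lambda item: item[1], reverse=True)

# Hypothetical local SHAP values for four URLs and three features.
features = ["host_letter_count", "domain_token_count", "NumberOfDotsinURL"]
shap_rows = [
    [-0.2,  0.8,  0.3],
    [ 0.2, -0.6, -0.2],
    [ 0.1,  0.7,  0.4],
    [-0.1, -0.5, -0.3],
]

ranking = mean_abs_shap(shap_rows, features)
for name, score in ranking:
    print(f"{name:20s} {score:.3f}")
```

Taking absolute values before averaging matters: in this toy matrix the signed SHAP values of each feature nearly cancel across instances, so a signed mean would hide features that strongly influence individual predictions in both directions.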

Conventional importance measures, such as Gini importance for tree-based models or coefficients for linear models, give a global, aggregate view of each feature’s contribution to the model. SHAP values go beyond this aggregated view by providing instance-level explanations that show how individual feature values affect the prediction for a particular instance. For instance, while domain_token_count is globally important, the SHAP analysis shows specifically that it is high values of this feature that predominantly drive spam predictions. This level of detail, which traditional importance metrics usually do not provide, gives a deeper and more actionable understanding of feature impact.

In this analysis, the top features identified by SHAP generally align with those expected from traditional importance rankings in tree-based models. However, SHAP’s ability to reveal both the direction and the magnitude of influence for specific feature values permits a richer understanding. For example, host_letter_count in XGB displays a more complex relationship in which both high and low feature values can contribute to spam classification, a finding that would not be apparent from a single aggregate importance measure. The difference in the top-ranked feature between XGB (domain_token_count) and CB (SymbolCount_Domain) is also notable: both features are predictive, but the two models appear to rely on different characteristics of the data. This points to the multifaceted nature of spam URLs. This deeper explainability is more than an academic benefit: it is important for building trust and confidence in automated spam detection systems and, perhaps more importantly, for guiding the development of specific, robust, and more effective countermeasures against an ever-evolving landscape of spam tactics.
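The difference between a single importance score and a direction-aware reading can be illustrated by averaging SHAP values within bins of the feature value. The sketch below is a simplified numeric counterpart to reading the red and blue points in a summary plot; the binning scheme, function name, and both toy series are assumptions made for the example rather than results from this study.

```python
from statistics import mean

def mean_shap_by_bin(feature_values, shap_values, n_bins=3):
    """Sort instances by feature value, cut them into n_bins groups of
    nearly equal size, and return the mean SHAP value of each group
    (ordered from lowest to highest feature values)."""
    pairs = sorted(zip(feature_values, shap_values))
    n = len(pairs)
    if n < n_bins:
        raise ValueError("need at least one instance per bin")
    result = []
    for b in range(n_bins):
        chunk = pairs[b * n // n_bins:(b + 1) * n // n_bins]
        result.append(mean(s for _, s in chunk))
    return result

values = [1, 2, 3, 4, 5, 6, 7, 8, 9]

# Hypothetical monotone pattern: higher feature values push toward spam.
monotone = mean_shap_by_bin(values, [-0.4, -0.3, -0.3, -0.1, 0.0, 0.1, 0.3, 0.4, 0.5])

# Hypothetical U-shaped pattern: both extremes push toward spam.
u_shaped = mean_shap_by_bin(values, [0.3, 0.2, 0.1, -0.2, -0.3, -0.2, 0.1, 0.2, 0.3])

print("monotone:", [round(v, 3) for v in monotone])
print("U-shaped:", [round(v, 3) for v in u_shaped])
```

A monotone profile corresponds to the behavior described for domain_token_count, whereas a U-shaped profile corresponds to the kind of relationship described for host_letter_count, which a single aggregate importance value cannot distinguish from a monotone one.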

4.5.4. Comparison with Previous Studies

To position the proposed EHQ-FABC algorithm within the landscape of recent advancements in spam detection, Table 5 presents a comparative analysis of notable studies utilizing various feature selection and optimization strategies on the ISCX-URL2016 dataset. While previous works have reported significant improvements in classification accuracy through metaheuristic algorithms and advanced classifiers, they have primarily emphasized predictive performance, often overlooking the aspect of model transparency.

Table 5.

Comparison of the accuracy of our enhanced ML models with prior studies.