1. Introduction

Summarization, a core task in multimodal generation, enables efficient information compression, which is critical for distilling key insights from unstructured data amid information overload [1, 2]. Early research focused on single-modal text summarization via deep semantic analysis [3], but the rise of pre-trained models has shifted the paradigm to transformer-based architectures, which excel at capturing long-range dependencies in complex multimodal data. For example, Liu and Lapata [4] leveraged BERT as a summarization encoder to enhance coherence, while PEGASUS [5] adapted the transformer framework to abstractive summarization through gap-sentence pre-training.

The prevalence of multimodal content has further driven summarization research [6]. Text summarization distills the essence of a document [7], while image summarization condenses visual features; fusing the two improves information acquisition efficiency and user experience [8]. A critical unresolved challenge is flexible length control: traditional fixed-length systems fail to adapt to diverse user needs [8, 9, 10]. Recent advances include LenAtten [11], pre-trained information selection [12], and LenANet [13], yet transformer-based methods often trade length precision for quality or vice versa [14, 15].

Framing length-constrained summarization as a combinatorial optimization problem guarantees strict length compliance [16], and integrating such algorithms with neural models enables joint content selection and coherence. This paper proposes a length-customizable abstractive multimodal summarization framework that combines combinatorial optimization (knapsack) with a lightweight LSTM rather than a transformer. This choice is justified by three factors: (1) the LSTM's linear complexity, O(n), supports real-time multimodal fusion, compared with the quadratic O(n²) cost of transformer self-attention [17]; (2) the LSTM's sequential processing aligns with the greedy selection used to solve the knapsack problem, enabling end-to-end integration; (3) LSTMs remain stable on medium-sized datasets, avoiding the overparameterization of pre-trained multimodal transformers.

Our framework embodies modal symmetry: balanced text-image encoding, equitable cross-modal attention, and alignment of content selection with length constraints. It unifies sentence valuation, length satisfaction, and coherence into a single objective, generating length-compliant summaries that preserve core information. Example outputs are shown in Figure 1.

Through extensive experiments, we demonstrate the effectiveness and superiority of our proposed framework in terms of both length control accuracy and summary quality. Our core contribution lies in a novel length-customizable multimodal summarization approach that integrates LSTM-based encoding, cross-modal attention, and knapsack optimization—explicitly formulating multimodal length control as a 0–1 knapsack problem to ensure strict adherence to user-defined constraints. Furthermore, we introduce a cross-modal image selection strategy combining visual coverage and semantic similarity, enhancing semantic symmetry between text and image outputs. This work advances abstractive summarization techniques and holds promising potential for applications in information retrieval, text analysis, and natural language processing.

Our main contributions are as follows:

We propose a length-customizable image-text summarization method that combines an LSTM encoder-decoder, a multimodal attention mechanism, and a knapsack optimization algorithm in a single model.

We transform the length-constrained image-text summarization problem into a knapsack optimization problem and use a greedy algorithm to keep the output length precisely within the preset budget. This preserves summary quality within the length limit while retaining as much information relevant to the original text as possible.

Experimental evaluations validate that our method achieves an effective balance between the length and quality of the generated image-text summaries, producing concise yet informative outputs that meet predefined constraints.

The rest of this article is organized as follows. Section 2 introduces related work. Section 3 presents the proposed method. Section 4 describes the experimental settings and results. Finally, Section 5 concludes the paper.

2. Related Work

This section categorizes and summarizes existing research related to the proposed length-customizable image-text summarization method, focusing on three core directions: multimodal summarization, length control in summarization, and optimization strategies for summarization tasks.

2.1. Multimodal Summarization

Multimodal summarization integrates textual and visual or other modal information to generate more comprehensive summaries, addressing the limitations of traditional text-only summarization.

Early Single-Modal to Multimodal Transition: Early studies focused solely on text summarization, while subsequent work expanded to multimodal scenarios [18]. For example, Evangelopoulos et al. [19] identified key events in videos by fusing auditory, visual, and linguistic saliency; Li et al. [20] generated summaries from asynchronous text, image, audio, and video, broadening the range of summarization forms.

Image-Text Summarization with Visual Integration: Zhu et al. [21] proposed the MSMO dataset, a benchmark for multimodal summarization with multimodal output, and designed a multimodal attention model that simultaneously generates text summaries and selects relevant images, enhancing information richness. Zhang et al. [22] developed the UniMS framework, which optimizes image selection via knowledge distillation, avoiding reliance on high-quality image captions and improving adaptability to irregular multimedia data. Additionally, Zhu et al. [18] and Krubinski et al. [23] introduced topic-aware and video-based multimodal summarization methods, further advancing the integration of visual and textual information. Meanwhile, Liu et al. [24] systematically reviewed the current state of graphical abstracts, summarizing technical bottlenecks and practical optimization directions in multimodal summarization; this provides a basis for evaluating the practical value of the proposed method.

2.2. Length Control in Summarization

Precise length control is critical for meeting diverse user needs, but traditional fixed-length summarization methods lack flexibility. Existing approaches mainly rely on length embeddings, dedicated control units, or structure optimization:

Length Embedding-Based Methods: Kikuchi et al. [9] first proposed using length embeddings to regulate summary length, providing a foundation for subsequent research. Fan et al. [10] refined this by introducing length embeddings at the decoder input, enabling more precise customization. Liu et al. [25] combined pre-trained information selection with length embeddings to enhance length controllability in abstractive summarization.

Dedicated Length Control Units: Yu et al. [11] designed LenAtten, a length control unit that dynamically calculates a length context vector during decoding to guide word generation. Chen et al. [13] proposed LenANet, which uses a length offset vector and a graph attention network to enforce summaries with a specified information density, indirectly controlling length.

Structure-Optimized Methods: Liu et al. [25] integrated the desired length into the decoder's initial state via a convolutional neural network, achieving efficient length regulation. Urlana et al. [15] augmented the Transformer positional encoding to encode the remaining length at each decoder step, balancing length control and summary quality. LPAS [26] extracts fixed-length word sequences from source documents and feeds them to models without built-in length control, offering a new paradigm for length management.

2.3. Optimization Strategies for Summarization Tasks

To balance summary quality, length control, and efficiency, researchers have explored combinatorial optimization, unsupervised learning, and cross-modal alignment strategies:

Combinatorial Optimization Integration: Makino et al. [16] framed length-constrained summarization as a combinatorial optimization problem, ensuring strict length compliance. This paper extends this idea by formulating multimodal summarization as a 0–1 knapsack problem, combining greedy algorithms with deep learning for joint content selection and length control.

Unsupervised Summarization: Trabelsi et al. [27] proposed Absformer, an unsupervised multi-document summarization model that clusters DistilBERT document embeddings and follows a fixed-encoder, optimized-decoder paradigm. It adapts to semantic density for indirect length control but prioritizes token-level reconstruction over core information extraction, leading to suboptimal ROUGE performance. Gao et al. [28] further proposed a multilingual multi-document news summarization dataset and a graph-based “extract-generate” hybrid model. The dataset addresses the lack of multilingual multi-document resources, while the graph model captures inter-document semantic associations to reduce information redundancy, providing a new solution for cross-language multi-document summarization.

Cross-Modal Alignment Optimization: Zhang et al. [5] used image-text similarity for multimodal fake news analysis, highlighting the importance of cross-modal alignment. This paper builds on this by introducing a CLIP-based cross-modal matching strategy for image selection, which enhances semantic consistency between text summaries and images and addresses the limitation of the visual coverage-only strategies used in existing multimodal summarization. Verma et al. [29] integrated a Bi-LSTM classifier and an optimizer into a generative AI framework, improving the accuracy and readability of Hindi text summaries. These studies confirm the feasibility of tailoring models to specific language characteristics, which inspired our method's adaptation to multimodal data with diverse content types.

3. Proposed Method

We define the task of fixed-length image-text summarization, in which both the input and the output are multimodal, comprising text and image data. The model consists of five parts: a text and image encoder-decoder, a multimodal attention layer, an image selection strategy, a sentence selection strategy, and knapsack optimization. To facilitate understanding of the formulations in the following sections, Table 1 summarizes the mathematical notation.

3.1. Problem Formulation

The input is a multimodal document comprising a text set T and an image set I. T contains one or several news articles, denoted $T = \{t_1, t_2, \dots, t_n\}$, where n is the number of individual texts. I consists of the images associated with the news, denoted $I = \{i_1, i_2, \dots, i_m\}$, where m is the number of images.

The output comprises a concise summary S and a selected image G. The summary is represented as $S = \{s_1, s_2, \dots, s_l\}$, where l denotes the length of the summary text, with $l \ll n$ to ensure brevity; the length must satisfy the user-specified control criteria. The summary encapsulates key information drawn from both textual and visual content and presents the primary content of the news coherently and fluently. The chosen image G is determined based on a visual coverage vector and is intended to be the image most representative of the key characteristics of the input images. Combining textual and visual information gives a more complete representation of the news and aids comprehension.

To keep the text summary concise, we use the knapsack algorithm to control the length of the generated summary. The knapsack problem is a combinatorial optimization problem that selects the subset of items with the highest total value without exceeding a given capacity. In our scenario, each text fragment is an item whose ‘value’ reflects the importance of its content, while the ‘capacity’ is the maximum allowable length of the summary.

To apply the knapsack formulation to summary generation, assume a maximum length C for the text summary. We need to select segments from n text fragments so that their total length does not exceed C while they contain as much key information as possible. For length-controlled summarization, let f be the evaluation function and V the optimal subset of sentences forming the extracted summary. Existing extractive methods, such as Zhang et al. [30] and BertSum [4], score each sentence by its importance within the whole document. If summary quality is represented by the sum of the scores $v_i$ of the selected sentences, the evaluation function f can be rewritten as:

$$ f(V) = \sum_{t_i \in V} v_i \tag{1} $$

At the same time, the length constraint must be satisfied, so the learning objective with length control can be formulated as:

$$ \max_{x} \sum_{i=1}^{n} v_i x_i \quad \text{s.t.} \quad \sum_{i=1}^{n} w_i x_i \le C, \quad x_i \in \{0, 1\} \tag{2} $$

where $w_i$ is the length of text fragment $t_i$ and $x_i$ is a binary variable: $x_i = 1$ if $t_i$ is selected, and $x_i = 0$ otherwise.
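For reference, the length-constrained objective above can be solved exactly with the textbook dynamic program for 0–1 knapsack when lengths are integer character counts. The sketch below is illustrative only: `knapsack_01` is a hypothetical helper, the fragment values are assumed to be precomputed importance scores, and the paper itself uses a greedy approximation (Section 3.5) rather than this exact solver.

```python
from typing import List, Tuple


def knapsack_01(values: List[float], lengths: List[int], capacity: int) -> Tuple[float, List[int]]:
    """Exact 0-1 knapsack via dynamic programming (hypothetical helper).

    values[i]  -- importance score of text fragment i (assumed precomputed)
    lengths[i] -- character length of fragment i (positive integer)
    capacity   -- maximum summary length C in characters
    Returns (best total value, sorted indices of the selected fragments).
    """
    n = len(values)
    # best[i][c]: highest value achievable with the first i fragments and budget c
    best = [[0.0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, w = values[i - 1], lengths[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # x_i = 0: leave fragment i out
            if w <= c and best[i - 1][c - w] + v > best[i][c]:
                best[i][c] = best[i - 1][c - w] + v  # x_i = 1: include it
    # Walk back through the table to recover which fragments were included
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= lengths[i - 1]
    return best[n][capacity], sorted(chosen)


# Three fragments with a 100-character budget
value, selected = knapsack_01([0.5, 0.3, 0.4], [60, 40, 50], capacity=100)
print(selected, round(value, 2))  # [0, 1] 0.8
```

The table has (n + 1) × (C + 1) cells, so the cost is O(nC): pseudo-polynomial in the character budget, which is why an approximate greedy selector becomes attractive for long documents and large budgets.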

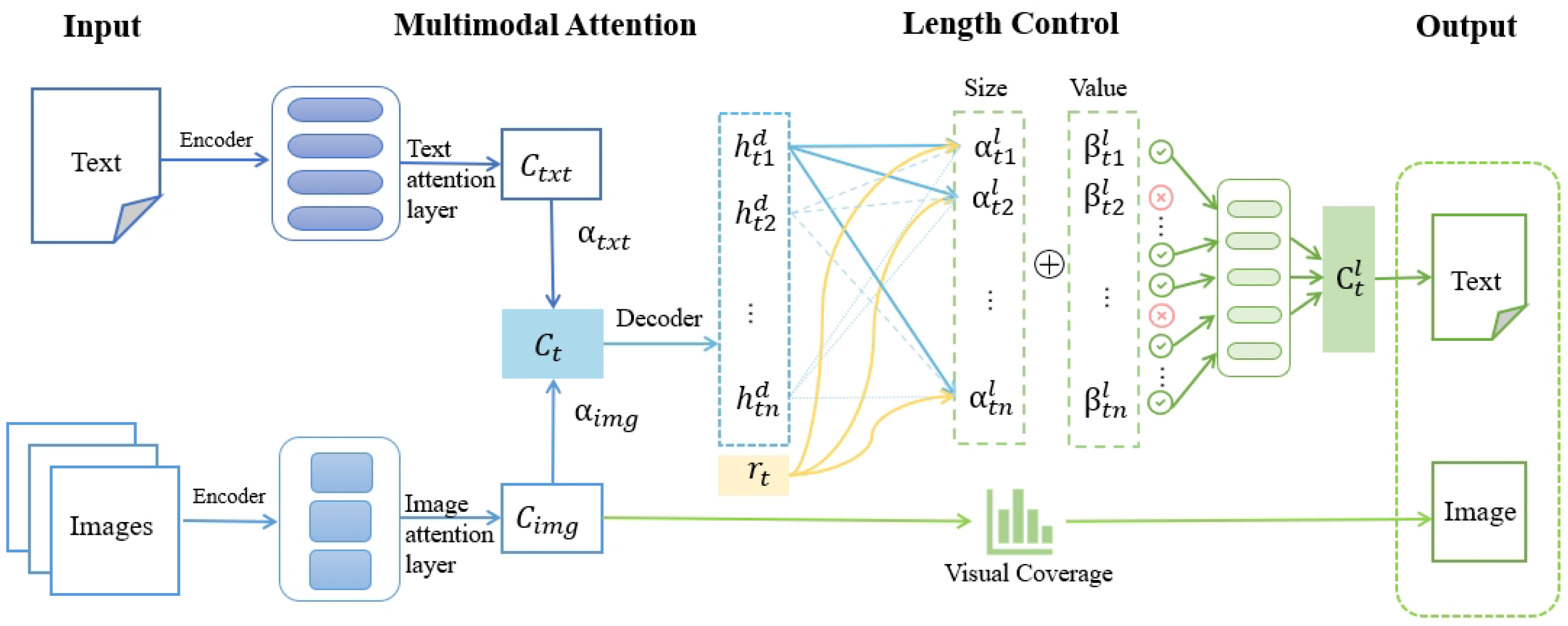

3.2. Multimodal Attention

To integrate image and text information effectively, we introduce a multimodal attention mechanism that dynamically adjusts the weights of the different modalities, as shown in Figure 2. Our model uses a single-layer bidirectional long short-term memory (Bi-LSTM) encoder and a unidirectional LSTM decoder; the encoders convert image and text data into vector representations. The multimodal attention module computes attention scores from the similarity between text vectors and image vectors, yielding the importance of each modality for the current processing step. These scores prioritize information from the different modalities, allowing the model to focus on the most relevant information. The fused multimodal context vector is given in Equation (3):

$$ c^{mm}_t = \alpha^{txt}_t \, c^{txt}_t + \alpha^{img}_t \, c^{img}_t \tag{3} $$

where $\alpha^{txt}_t$ is the attention weight for the text context vector $c^{txt}_t$ and $\alpha^{img}_t$ is the attention weight for the visual context vector $c^{img}_t$.
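The modality weighting described above can be sketched as follows. The paper does not spell out the scoring rule, so this sketch assumes one common choice: each modality's context vector is scored by a dot product with the current decoder state, and the two scores are softmax-normalized so the text and visual weights sum to 1. The function names are hypothetical, and the vectors are assumed to already share one dimension.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def fuse_modalities(decoder_state: Vector, text_ctx: Vector, image_ctx: Vector) -> Tuple[float, float, Vector]:
    """Return (text weight, image weight, fused context vector).

    Assumed scoring rule: each modality's context vector is scored by its dot
    product with the current decoder state, and the two scores are normalized
    with a softmax so that the weights sum to 1. All vectors are assumed to be
    projected to the same dimension beforehand.
    """
    s_txt = dot(decoder_state, text_ctx)
    s_img = dot(decoder_state, image_ctx)
    m = max(s_txt, s_img)  # subtract the max for numerical stability
    e_txt, e_img = math.exp(s_txt - m), math.exp(s_img - m)
    a_txt, a_img = e_txt / (e_txt + e_img), e_img / (e_txt + e_img)
    fused = [a_txt * t + a_img * v for t, v in zip(text_ctx, image_ctx)]
    return a_txt, a_img, fused


a_txt, a_img, fused = fuse_modalities([1.0, 0.0], [1.0, 0.0], [0.0, 1.0])
print(round(a_txt, 3), round(a_img, 3))  # 0.731 0.269
```

Here the decoder state points toward the text context, so the text modality receives the larger weight; in a trained model the scoring would use learned projections rather than a raw dot product.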

Texts encoding: An embedding layer first maps each word or phrase in the text to a word vector that captures its semantics and its contextual relations with other words. The encoder then maps this sequence of word vectors to a sequence of encoder hidden states $h_i$, one per token.

In the decoding phase, the decoder receives the embedding of the preceding word and updates its state $s_t$, reflecting the evolving context of generation. The text context vector is then computed by an attention mechanism that focuses on the most relevant parts of the encoded sequence.

To mitigate semantic redundancy in the text, we introduce a coverage vector $\mathrm{cov}^t$, the sum of the attention distributions over all previous decoding steps, which keeps the decoder aware of the information it has already attended to. The coverage vector is an additional input to the attention computation, which helps the model produce coherent, non-repetitive output. The text encoding part is given by Equations (4) and (5):

$$ \mathrm{cov}^t = \sum_{t'=0}^{t-1} a^{t'} \tag{4} $$

$$ e^t_i = v^\top \tanh\left(W_h h_i + W_s s_t + w_c\, \mathrm{cov}^t_i + b_{attn}\right), \quad a^t = \mathrm{softmax}(e^t), \quad c^{txt}_t = \sum_i a^t_i h_i \tag{5} $$

where $h_i$ is the i-th encoder hidden state, $s_t$ is the decoder state, $a^t$ is the attention distribution at decoding step t, $c^{txt}_t$ is the text context vector, and $v$, $W_h$, $W_s$, $w_c$, and $b_{attn}$ are learnable parameters.
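The coverage mechanism can be illustrated with a minimal sketch that tracks only how the coverage vector accumulates and feeds back into attention. Two simplifying assumptions are made: hypothetical per-step content scores stand in for the learned encoder and decoder terms, and a fixed negative scalar `w_cov` stands in for the learned coverage weight. The helper `attend_with_coverage` is likewise hypothetical.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # shift by the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_with_coverage(step_scores: List[List[float]], w_cov: float = -1.0) -> List[List[float]]:
    """Attention distributions for successive decoder steps with a coverage term.

    step_scores[t][i] -- hypothetical content score between decoder step t and
                         source position i (stands in for the W_h/W_s terms)
    w_cov             -- illustrative fixed coverage weight (learned in a real model)
    """
    coverage = [0.0] * len(step_scores[0])  # cov^0: nothing attended yet
    distributions = []
    for scores in step_scores:
        # Add the coverage term so already-covered positions are penalized (w_cov < 0)
        attn = softmax([s + w_cov * c for s, c in zip(scores, coverage)])
        distributions.append(attn)
        # cov^{t+1} = cov^t + a^t: running sum of past attention distributions
        coverage = [c + a for c, a in zip(coverage, attn)]
    return distributions


dists = attend_with_coverage([[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
print([round(a, 3) for a in dists[0]], [round(a, 3) for a in dists[1]])
```

With identical content scores at both steps, the weight on position 0 drops from about 0.79 to about 0.65 at the second step, because that position is already covered; this is the mechanism that discourages repetition.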

Images encoding: We use a pre-trained VGG19 network for image feature extraction. In preprocessing, each original image is passed through the network to extract deep features g that capture its overall attributes. Inside the network, the convolutional feature maps are progressively down-sampled by max-pooling layers between convolutional blocks, which reduces computational cost and improves robustness while retaining essential details. The final layers produce a fixed-size feature vector that encapsulates the core image information and serves as input for fusion with the text encoding in the summarization task. The image encoding part is:

$$ g_j = \mathrm{VGG19}(i_j), \quad j = 1, \dots, m $$

3.3. Images Selection

In our methodology, we integrate both the textual coverage vector and the visual coverage vector $\mathrm{cov}^{img}$, which summarizes the distribution of visual attention. However, to ensure that the selected image is not only salient but also semantically consistent with the generated text summary, we introduce a cross-modal matching score. Image selection therefore proceeds in two stages: a semantic consistency score is computed for each candidate image with a vision-language model, and this score is then fused with the coverage-based importance score.

To measure the semantic alignment between the generated textual summary S and each candidate image in the input set I, we use a pre-trained CLIP model without further fine-tuning. CLIP's image and text encoders serve as fixed feature extractors, and the semantic consistency score $\mathrm{sem}_j$ of image $i_j$ is the cosine similarity of their embeddings:

$$ \mathrm{sem}_j = \cos\left(E_T(S),\, E_I(i_j)\right) $$

where $E_T$ and $E_I$ are the CLIP text and image encoders.

To validate CLIP-based semantic similarity for image selection, we conducted comparative experiments on the validation set: replacing CLIP with random selection or a visual coverage-only strategy lowered ROUGE-L scores by 12.3% and 5.8%, respectively, confirming that CLIP's cross-modal matching improves the semantic consistency between the selected image and the text summary.

Further analysis shows that CLIP-based filtering reduces the inclusion of low-relevance images by 37.6% compared with visual coverage alone, and human evaluation judged 89.3% of CLIP-selected images “semantically consistent” with the summary. This indicates that CLIP helps balance image relevance and multimodal coherence without compromising length control precision.

The global importance score of an image, previously computed solely from the visual coverage vector, is now augmented by its semantic consistency score. The final score $\mathrm{score}_j$ for image $i_j$ is calculated as:

$$ \mathrm{score}_j = (1 - \lambda)\, \mathrm{cov}^{img}_j + \lambda\, \mathrm{sem}_j $$

where $\mathrm{cov}^{img}_j$ is the coverage-based importance of image $i_j$, $\mathrm{sem}_j$ is its CLIP semantic consistency score, and λ is a weighting hyperparameter that balances the attention-based coverage against the semantic matching score. To determine its value, we conducted a grid search over λ ∈ {…} on the validation set. We found that λ = … achieved the best balance: higher values overemphasize semantic matching at the expense of visual representativeness, while lower values neglect cross-modal alignment.

During the final decoding phase, we select the image G with the highest fused importance score, so that it contributes most to the summary while remaining semantically aligned with the text. The selected image is then paired with the text summary to form the final multimodal output, so that the two modalities complement each other in conveying core information.
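The fused image score and the final argmax can be sketched as below. Several assumptions apply. The summary and image embeddings are assumed to be precomputed, for example by CLIP's text and image encoders, which are not called here. The coverage scores are assumed to be normalized to [0, 1] so they are comparable with cosine similarity. The value `lam=0.5` is purely illustrative and is not the tuned λ. `select_image` is a hypothetical helper.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den if den else 0.0


def select_image(summary_emb: Sequence[float], image_embs: List[Sequence[float]],
                 coverage_scores: List[float], lam: float) -> Tuple[int, List[float]]:
    """Pick the image with the highest fused score (1 - lam) * coverage + lam * semantic."""
    semantic = [cosine(summary_emb, emb) for emb in image_embs]  # text-image consistency
    fused = [(1 - lam) * c + lam * s for c, s in zip(coverage_scores, semantic)]
    best = max(range(len(fused)), key=lambda j: fused[j])
    return best, fused


# Toy 2-D embeddings: image 0 matches the summary, image 1 has more visual coverage
idx, scores = select_image([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], [0.2, 0.6], lam=0.5)
print(idx)  # 0
```

Setting `lam=0.0` falls back to coverage-only selection, which would pick image 1 in this toy case; this illustrates the trade-off the grid search over λ is meant to settle.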

3.4. Texts Selection

Generating concise summaries from lengthy text requires identifying the most salient sentences under strict length constraints, a fundamental extractive summarization challenge that balances information coverage against brevity. A widely adopted framework assesses sentence importance through a graph in which sentences are nodes of an undirected graph and edges represent pairwise semantic similarity. The centrality of sentence i, denoted $\mathrm{Cen}_i$, is defined as:

$$ \mathrm{Cen}_i = \sum_{j \neq i} \mathrm{sim}(i, j) $$

where $\mathrm{sim}(i, j)$ denotes the similarity between sentence i and sentence j.

To integrate this measure into an optimization-based selection process, two parameters are defined for each sentence i in the input collection T. Sentence length $w_i$ is measured in characters; this unit was chosen to provide fine-grained control over summary length, which applications with strict character-level limits require. Other units, such as word count, could be used depending on application demands.

Sentence value $v_i$ is set equal to the semantic centrality of sentence i, operationalized through the cosine similarity between distributed sentence representations. The underlying premise is that sentences capturing the document's core themes have higher semantic affinity with a broader set of other sentences. Formally, the value is computed as:

$$ v_i = \sum_{j \neq i} \frac{\mathbf{e}_i \cdot \mathbf{e}_j}{\lVert \mathbf{e}_i \rVert \, \lVert \mathbf{e}_j \rVert} $$

where $\mathbf{e}_i$ and $\mathbf{e}_j$ are dense vector embeddings of sentences i and j obtained from a pre-trained transformer-based language model.

This allows the summarization task to be cast as a combinatorial optimization problem analogous to the 0/1 knapsack problem. The objective is to find the subset of sentences that maximizes the total value $\sum_i v_i x_i$, subject to the total length $\sum_i w_i x_i$ remaining within a predefined budget C, where $x_i \in \{0, 1\}$ indicates whether sentence i is selected. This framing enables efficient algorithms to generate high-quality summaries under precise length constraints.
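The two per-sentence knapsack parameters, a centrality-based value (called v_i in this sketch) and a character length (w_i), can be computed as below. This is a minimal sketch under stated assumptions. The embeddings are toy stand-ins; in practice they would come from a pre-trained transformer encoder, which is not called here. `knapsack_items` is a hypothetical helper, and Python's `len` serves as the character count.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den if den else 0.0


def knapsack_items(sentences: List[str], embeddings: List[Sequence[float]]) -> List[Tuple[float, int]]:
    """Return (value v_i, length w_i) for each sentence.

    v_i: centrality = sum of cosine similarities to every other sentence
    w_i: length in characters (Python len, spaces included)
    """
    n = len(sentences)
    items = []
    for i in range(n):
        value = sum(cosine(embeddings[i], embeddings[j]) for j in range(n) if j != i)
        items.append((value, len(sentences[i])))
    return items


sents = ["Storm hits coast.", "Coastal storm causes damage.", "Team wins final."]
embs = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # toy stand-ins for transformer embeddings
print(knapsack_items(sents, embs))  # [(1.0, 17), (1.0, 28), (0.0, 16)]
```

The two storm sentences reinforce each other and receive high value, while the off-topic sentence scores zero. This is the intuition behind using centrality as the knapsack "value".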

3.5. Knapsack Optimization

We preprocess the input data to extract text segments and image captions, annotated with salience scores and character counts derived from linguistic features, image recognition, and cross-modal correlation models. The problem is formulated as a 0–1 knapsack problem in which each text segment or caption is either included in or excluded from the summary, subject to a maximum character count.

The aim is to select a subset of sentences whose total value is maximized while their total length does not exceed the predefined budget C. Because the 0–1 knapsack problem is NP-hard, we employ a greedy selector (Algorithm 1) to approximate the solution. The algorithm prioritizes sentences with high value density $v_i / w_i$, where $v_i$ is the importance score and $w_i$ is the length of sentence i. Although the result may be suboptimal, this approach balances computational efficiency with satisfaction of the length constraint, making it suitable for real-time summarization.

The algorithm iteratively selects the most valuable remaining item according to a composite metric of importance and information gain, continuing until no remaining item fits within the capacity. While the greedy algorithm cannot guarantee optimality, it efficiently provides a feasible solution based on relevance scores. The implementation is detailed in Algorithm 1.

To improve generalization, we normalize the profits within each sample to sum to 1, reflecting each sentence's share of the total summary value. We also normalize each sentence size by the total target summary length, which helps the model make effective choices under different length constraints. To enhance robustness, we incorporate a feedback loop that evaluates the initial summary and adjusts it using automatic metrics such as ROUGE and, optionally, human evaluation.

Algorithm 1: Improved Knapsack Optimization-based Sentence Selection

[Algorithm figure: Symmetry 17 01629 i001]

In the inference phase, the knapsack scores are converted to 0 or 1 by thresholding, giving precise control over summary length. Our method thus balances output length with summary quality and relevance, making it valuable for applications that require concise, informative summaries.
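Because Algorithm 1 survives only as a figure, a minimal sketch of plain value-density greedy selection may help make the procedure concrete. It is not the authors' Algorithm 1: it omits the information-gain term and the feedback loop, and `greedy_knapsack` is a hypothetical helper. The profit and size normalization described above is also omitted. Dividing all values by their sum and all lengths by C multiplies every density v_i / w_i by the same positive constant, so it does not change the greedy order.

```python
from typing import List


def greedy_knapsack(values: List[float], lengths: List[int], capacity: int) -> List[int]:
    """Greedy value-density selection; returns the 0/1 selection vector x."""
    # Rank sentences by value density v_i / w_i, highest first (stable on ties)
    order = sorted(range(len(values)), key=lambda i: values[i] / lengths[i], reverse=True)
    x = [0] * len(values)
    used = 0
    for i in order:
        if used + lengths[i] <= capacity:  # take the sentence only if it still fits
            x[i] = 1
            used += lengths[i]
    return x


print(greedy_knapsack([7.0, 5.0, 5.0], [6, 5, 5], capacity=10))  # [1, 0, 0]
```

The example shows the suboptimality acknowledged in the text. Greedy first takes sentence 0 (highest density, value 7), after which nothing else fits. Sentences 1 and 2 together would also fit the 10-character budget and give value 10. An exact dynamic-programming solver would find that subset, at O(nC) cost.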

4. Experiment

4.1. Dataset

The study employs the MSMO (Multimodal Summarization with Multimodal Output) dataset [21], a benchmark for news summarization with both textual and visual inputs and outputs. The dataset was built from news articles published by the Daily Mail. Each article has a summary positioned beneath its title, which serves as the predefined textual ground truth, and each news image is accompanied by a caption. After collecting the articles, the dataset authors selected up to three relevant images for each story (possibly none) as the visual ground truth for the MSMO task. The dataset includes approximately 1.9 million image-text pairs for training and around 71,000 pairs for evaluation, with roughly 294,000 documents in the training set and about 10,300 documents in the test set.

The MSMO dataset has two key limitations. First, its news content comes exclusively from the Daily Mail and does not cover other domains such as scientific research, technical documentation, or social media, so our method's performance is validated only on news data. Second, its image-text alignment is idealized: the image-text pairs in MSMO are pre-aligned, and each image comes with a caption highly relevant to the corresponding text, which may lead to an overestimation of our method's ability to handle practical, unstructured multimodal inputs.

4.2. Model Configuration

Our model achieves high-quality multimodal summarization through an encoder-decoder architecture, an attention mechanism, a knapsack optimization algorithm, and careful hyperparameter tuning. This combination improves summary quality and coherence while achieving precise length control, meeting diverse practical needs.

4.2.1. Hyperparameter Adjustment

The choice of hyperparameters significantly affects model performance. We tuned the learning rate, batch size, and number of encoder and decoder layers based on validation-set performance, so that the model captures key information while keeping the output length under control.

4.2.2. Training Configuration

Hardware: Training is conducted on a single NVIDIA A100 GPU (40 GB VRAM) with an Intel Xeon 8375C CPU (3.0 GHz) and 128 GB RAM; inference uses an NVIDIA RTX 3090 GPU (24 GB VRAM).

Dataset Splitting: MSMO dataset is split into training (80%, 235k documents), validation (10%, 29k documents), and test (10%, 30k documents) sets; no data augmentation is applied to avoid distribution shift.

Batch Processing: The batch size is 32 for training and 64 for validation and testing; text sequences longer than 512 tokens are truncated, and images are resized to 224 × 224.

Hyperparameter Settings

All hyperparameters are tuned via grid search on the validation set (with ROUGE-L as the primary metric); the optimal values are listed in Table 2.

4.2.3. Training Stopping Criteria

To balance training efficiency, model convergence, and performance stability, we define clear training termination rules and a checkpoint selection strategy:

Training stops as soon as either of the following conditions is met:
1. Performance plateau: the validation ROUGE-L score fails to improve by more than 0.1% for 5 consecutive epochs. This avoids overtraining and wasted computation when performance stagnates.
2. Maximum epochs: a hard limit of 30 epochs serves as a fallback, ensuring that training terminates within a controllable time frame even if performance keeps improving slowly.

For inference, we select the checkpoint with the highest validation ROUGE-L score observed during training, so that the deployed model retains the best generalization ability observed in the validation phase.
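The stopping and checkpoint rules can be expressed as a small function over the per-epoch validation ROUGE-L history. The text leaves the baseline for the "0.1% improvement" unspecified. This sketch assumes a relative gain measured against the last score that counted as a real improvement, and it tracks the checkpoint to keep separately as the raw maximum. `early_stopping` is a hypothetical helper, not part of any training library.

```python
from typing import List, Tuple


def early_stopping(val_rouge_l: List[float], patience: int = 5,
                   min_rel_gain: float = 0.001, max_epochs: int = 30) -> Tuple[int, int]:
    """Return (epoch at which training stops, epoch of the checkpoint to keep), 1-indexed.

    Assumption: "improvement of more than 0.1%" is read as a relative gain over
    the last score that counted as an improvement (scores assumed positive).
    """
    ref = float("-inf")          # last score that counted as a real improvement
    best_score, best_epoch = float("-inf"), 0
    stale = 0                    # consecutive epochs without a real improvement
    for epoch, score in enumerate(val_rouge_l[:max_epochs], start=1):
        if score > best_score:   # checkpoint selection: highest ROUGE-L seen so far
            best_score, best_epoch = score, epoch
        if epoch == 1 or score > ref * (1 + min_rel_gain):
            ref, stale = score, 0
        else:
            stale += 1
            if stale >= patience:    # plateau: stop early
                return epoch, best_epoch
    # Fallback: stop at the epoch cap (or when the score history runs out)
    return min(len(val_rouge_l), max_epochs), best_epoch


history = [30.0, 31.0, 31.01, 31.02, 31.0, 31.01, 31.02]
print(early_stopping(history))  # (7, 4)
```

In the example, epochs 3 to 7 each gain less than 0.1% over 31.0, so training stops at epoch 7. The kept checkpoint is epoch 4, the first epoch to reach the highest score.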

4.3. Baselines

To demonstrate the effectiveness of our method, we compare it with the following summarization methods:

LEAD-3 extracts the first three sentences of the article as the summary. Because authors usually state the topic in the title and at the beginning of the article, extracting the leading sentences is the simplest baseline. It assumes that the essence of the article is contained in its opening paragraphs and provides a quick, rudimentary summary of the core subject matter.

Seq2Seq [31] is a standard encoder-decoder model. The encoder encodes an input sequence of any length into a context vector, and the decoder decodes this vector into an output sequence. For length control, a length context vector is added to the input of the word prediction layer that generates the vocabulary distribution.

Attention [25] is widely used for sequence-to-sequence tasks. The encoder converts the source sequence into a series of hidden states, and the decoder uses an attention mechanism to dynamically weight these hidden states while generating the target sequence, producing a context vector that changes at each decoding step. The model can therefore focus on the source fragments most relevant to the word currently being generated, capturing important information while down-weighting irrelevant content, which makes it more effective on long sequences and complex data.

Attention Unit [11] is a length control unit that, during decoding, dynamically computes and updates a length context vector from the current decoding state, the remaining length, and predefined length embeddings. This vector serves as additional context, together with the decoder hidden state, to guide the generation of the next word. In this way, the generator can control summary length accurately while maintaining the completeness and readability of the summary. Its authors report that the unit generalizes well, allowing summarizers to produce high-quality summaries that adhere precisely to a specified character limit.

Unims-transformer [22] is a unified multimodal summarization framework based on a decoder-only transformer; it optimizes image-text fusion via knowledge distillation and reports results on the MSMO dataset.

Absformer [27] proposes a “fixed encoder, optimized decoder” paradigm: document embeddings are extracted with a pre-trained DistilBERT and clustered, the encoder parameters are then fixed, and the decoder is optimized to reconstruct documents. During inference, the cluster-center embeddings are fed to the decoder, which generates a summary through cross-attention and a language-model head. Through this reconstruction logic, it adapts to semantic density and indirectly controls length, addressing the balance between redundancy and coherence of multi-document information in unsupervised settings.

4.4. Evaluation Metrics

ROUGE [32] (Recall-Oriented Understudy for Gisting Evaluation) holds an important position in text summarization evaluation because it is comprehensive and objective. It measures how accurately and completely a summary conveys the information in the reference. By combining several ROUGE variants, a model's performance on image-text summarization can be evaluated from multiple angles, which supports the optimization of summarization systems.

ROUGE-N (where N = 1, 2, …) measures N-gram overlap. ROUGE-1 matches individual words: the generated and reference summaries are segmented into word sequences, and recall is computed as the proportion of words in the reference summary that also appear in the generated summary, which reflects how well the summary captures key information. For N > 1, as in ROUGE-2, matching is performed over sequences of consecutive words (pairs of words for ROUGE-2), which better reflects how well the summary reproduces the language structure and phrasing of the reference.
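The recall computation described above can be sketched as follows. This is a minimal illustration, assuming lowercase whitespace tokenization and clipped n-gram counts; official ROUGE toolkits apply their own tokenization and optional stemming, so scores from this sketch are not directly comparable with reported results. The function names are hypothetical.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(generated: str, reference: str, n: int = 1) -> float:
    """ROUGE-N recall: clipped n-gram overlap divided by the reference n-gram count.

    Assumes lowercase whitespace tokenization (an illustrative simplification).
    """
    gen = ngrams(generated.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    # Each reference n-gram counts at most as often as it occurs in the generated text.
    overlap = sum(min(count, gen[g]) for g, count in ref.items())
    return overlap / total


reference = "the cat sat on the mat"
generated = "the cat lay on the mat"
print(rouge_n_recall(generated, reference, 1))  # 5 of 6 reference unigrams matched
print(rouge_n_recall(generated, reference, 2))  # 3 of 5 reference bigrams matched
```

Replacing one word costs one unigram but two bigrams here, which is why ROUGE-2 is more sensitive to phrasing than ROUGE-1.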

ROUGE-L is based on the longest common subsequence (LCS) between the generated summary and the reference summary. Because a subsequence must preserve word order (though not contiguity), ROUGE-L accounts for the order of words as well as word matches, which gives a more accurate assessment of the coherence and logical flow of the summary. ROUGE-L is therefore particularly suitable for summarization tasks that emphasize content order and logical coherence, such as news reports and academic papers.
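A minimal sketch of an LCS-based ROUGE-L score is given below, assuming whitespace tokenization and an F-measure with equal weight on precision and recall (the β parameter of the original ROUGE-L definition is set to 1 here as an assumption). The function names are illustrative only.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two sequences (O(len(a) * len(b)) DP)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)          # extend a common subsequence
            else:
                curr.append(max(prev[j], curr[j - 1]))  # best of skipping x or y
        prev = curr
    return prev[-1]


def rouge_l(generated: str, reference: str):
    """Return (recall, precision, F1) based on the LCS of the two token sequences."""
    gen = generated.lower().split()
    ref = reference.lower().split()
    lcs = lcs_length(gen, ref)
    if lcs == 0:
        return 0.0, 0.0, 0.0
    recall = lcs / len(ref)
    precision = lcs / len(gen)
    f1 = 2 * precision * recall / (precision + recall)
    return recall, precision, f1


print(rouge_l("on the mat the cat sat", "the cat sat on the mat"))
```

In this example every reference word appears in the generated text (so ROUGE-1 recall would be 1.0), but the reordering limits the LCS to three words and the ROUGE-L recall to 0.5, which illustrates why ROUGE-L is sensitive to word order.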

Another metric is var, the character-level length variance between the reference and generated summaries:

$$\mathrm{var} = \alpha \cdot \frac{1}{n}\sum_{i=1}^{n}\left(\mathrm{len}(r_i) - \mathrm{len}(g_i)\right)^2$$

where $\alpha$ is a scaling factor set to 0.001 to make the results easier to read and round, $n$ is the number of test cases, $r_i$ is the $i$-th reference summary, $g_i$ is the $i$-th generated summary, and $\mathrm{len}(\cdot)$ returns the number of characters in a summary. var suits this task because its character-level counting matches the requirement of a customizable character length and avoids the biases of word-level or relative-error measures. The squared term amplifies large length deviations, so reducing them is prioritized, in line with user tolerance; averaging removes the effect of sample size; and the scaling factor keeps values readable without affecting objectivity. Together, these properties make var effective for distinguishing the length-control precision of different models.
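The var metric, as described above (squared character-length differences, averaged over the $n$ test cases and scaled by 0.001), can be computed with a few lines of code. This is a sketch under the assumption that `len()` counts every character, including spaces; the function name `length_variance` is hypothetical.

```python
def length_variance(references, generated, alpha=0.001):
    """Character-level length variance (var) between reference and generated summaries.

    var = alpha * (1/n) * sum_i (len(r_i) - len(g_i)) ** 2, with alpha = 0.001.
    Assumes len() counts all characters, including spaces.
    """
    if len(references) != len(generated) or not references:
        raise ValueError("need the same non-zero number of references and generated summaries")
    n = len(references)
    squared = sum((len(r) - len(g)) ** 2 for r, g in zip(references, generated))
    return alpha * squared / n


refs = ["a" * 100, "b" * 200]
gens = ["a" * 90, "b" * 230]   # deviations of -10 and +30 characters
print(length_variance(refs, gens))
```

Here the two deviations contribute 100 and 900, so var = 0.001 × 1000 / 2 = 0.5; the 30-character miss dominates, which is the intended effect of squaring.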

4.5. Experimental Results

First, formulating summary length control as a knapsack problem and solving it with a greedy algorithm allows the length of the summary to be controlled precisely. In the encoding stage, the model builds a semantic representation of the input text to extract key information. In the decoding stage, we solve the knapsack problem with a greedy algorithm to allocate the length budget and select sentences, so that the length of the generated summary matches the preset length. Second, the model embeds the vocabulary of the text, which keeps the summary semantically consistent with the original text. Through visual vectors, the model also takes the semantic correlation between images and text into account when generating summaries. This improves the semantic accuracy of the summaries, reduces the number of output images, and increases the informativeness of the selected images, so that the generated summary better matches human reading habits. By combining an optimization formulation with a greedy strategy, our model balances summary quality and length control. The results are presented in Table 3.
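The greedy knapsack selection described above can be illustrated with a short sketch. It assumes that each candidate sentence already has a salience score (its value) from the encoder and that its cost is its character length; sentences are ranked by value density and taken whenever they still fit the character budget. The names `Candidate` and `greedy_knapsack_select` are hypothetical, and this is not the authors' implementation.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    text: str     # candidate sentence or segment
    value: float  # salience score, assumed to be provided by the encoder

    @property
    def weight(self) -> int:
        return len(self.text)  # cost = number of characters


def greedy_knapsack_select(candidates, budget):
    """Select segments by value density (value / characters) within `budget` characters.

    Returns indices of the selected candidates in original document order.
    """
    order = sorted(
        range(len(candidates)),
        key=lambda i: candidates[i].value / max(candidates[i].weight, 1),
        reverse=True,
    )
    chosen, used = [], 0
    for i in order:
        w = candidates[i].weight
        if used + w <= budget:  # take the segment only if it still fits
            chosen.append(i)
            used += w
    return sorted(chosen)


cands = [
    Candidate("A" * 40, 4.0),  # density 0.10
    Candidate("B" * 30, 6.0),  # density 0.20
    Candidate("C" * 50, 4.5),  # density 0.09
    Candidate("D" * 20, 1.0),  # density 0.05
]
print(greedy_knapsack_select(cands, 80))
print(greedy_knapsack_select(cands, 100))
```

Because the budget is checked before every addition, the selected text never exceeds the target length; restoring document order afterwards keeps the extracted content readable.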

LEAD-3: LEAD-3 always extracts the first three sentences of the document. Surface word overlap lets it perform well on ROUGE-1, but because it cannot integrate semantics or construct abstractive phrases, its ROUGE-2 and ROUGE-L scores are limited. Its fixed-length design ignores differences in semantic density across document clusters, so it lacks flexibility and cannot adapt to the dynamic length requirements of multi-document summaries.

Seq2Seq: Its single-document architecture cannot aggregate cross-document information, which makes it difficult to distinguish core semantics from redundant content and results in low overall ROUGE scores. It also has no dedicated length control and relies only on autoregressive token prediction, so it shows the largest length fluctuation.

Attention/Attention Unit: By weighting key tokens across documents, the attention mechanism improves ROUGE-1/2 significantly over Seq2Seq. However, without a structured module for long sequences, its ROUGE-L remains limited. The Attention Unit only fine-tunes the attention module and does not address the core problems of coherence and length stability in long sequences, so its performance is close to that of Attention and its var remains high.

Transformer: Its unsupervised “document clustering + semantic reconstruction” paradigm avoids dependence on annotations, but it prioritizes token-level reconstruction accuracy over extracting the core content of the summary, so its ROUGE performance is weaker than that of the attention-based models. Its length control relies on natural termination driven by reconstruction and does not take cluster semantic density into account, so its var is higher than that of the proposed method.

Our Method: (1) The cross-document semantic alignment module (see Section 3.2) integrates document-level and cluster-level semantic vectors, balancing key-token capture with long-sequence coherence; (2) the semantic-density-aware length adapter (see Section 3.3) dynamically adjusts the length according to the amount of core information in each cluster. This design directly addresses the fixed length of LEAD-3, the insufficient cross-document integration of Seq2Seq, and the unsupervised trade-offs of the Transformer, resulting in better overall performance.

ROUGE-1 improves because the model’s semantic understanding lets it capture the core words across documents more accurately, increasing unigram overlap with the references. ROUGE-2 rises because the model learns semantic associations between words and generates two-word collocations (bigrams) that better match the references, improving local coherence. ROUGE-L improves because the model strengthens long-range dependency learning, organizes the document context, and produces word sequences that align better with the references, lengthening the longest common subsequence. The var improvement comes from the semantic-density-aware module, which dynamically matches the generated length to the content: information-dense clusters receive slightly longer summaries, while sparse clusters receive shorter ones. This avoids length fluctuations, reducing var and improving length stability.

On this dataset, the image-text summarization model based on an attention mechanism and integrated with the combinatorial optimization algorithm improves ROUGE scores to a certain extent, and its ROUGE-L score is the highest among all methods. The proposed method also achieves the lowest character-level length variance from the reference summaries compared with all length-controllable baselines, demonstrating precise adherence to the preset length constraints. Overall, our method generates semantically coherent summaries that precisely control the number of generated characters while selecting the most relevant images.

4.6. Ablation Study

To quantify the contribution of each key component of the proposed framework, we conducted a comprehensive ablation study in which individual modules are systematically removed or replaced and the change in performance on the MSMO test set is observed. The evaluated components are: (1) the Multimodal Attention mechanism (MM-Attn), (2) the Image Selection strategy based on visual coverage (Img-Sel), and (3) the Knapsack Optimization algorithm for length control (Knap). The results are presented in Table 4.

Analysis of Component Contributions:

Importance of Multimodal Attention (Row 1): Replacing the multimodal attention mechanism with simple average pooling of image and text features leads to a significant drop across all ROUGE metrics. ROUGE-2 and ROUGE-L show the largest drops (−0.84 and −1.31, respectively), confirming that cross-modal phrase and sequence modeling directly drives the improvements in these metrics.

Effectiveness of Image Selection (Row 2): When the proposed image selection strategy is replaced by randomly choosing an image from the input set, the text-based metrics (ROUGE) decrease only marginally. This suggests that the primary semantic content is carried by the text, while our visual coverage vector is effective at selecting a relevant image, a point that human evaluation focused on image relevance would validate more directly. Length control is unaffected because it is primarily a text-based operation.

Critical Role of Knapsack Optimization (Row 3): Removing the knapsack algorithm and relying solely on the standard Seq2Seq decoder for length control causes the most notable degradation. While the drop in ROUGE scores is moderate, the output-length variance (var) rises from 7.49 to 19.75, about 2.6 times its original value (an increase of roughly 164%). This shows that the knapsack algorithm’s role is to ensure length control rather than to increase unigram overlap: without our optimization module, the model fails to adhere to the length constraint, highlighting the algorithm’s indispensability for precise length control.

Component Synergy (Row 4): The combined removal of both the Knapsack optimization and the Multimodal Attention mechanism results in the worst performance: the ROUGE metrics decline (−0.14 in ROUGE-L), and Image-Text Alignment drops from 0.82 to 0.45, confirming that image selection enhances practical utility rather than pure ROUGE performance. This indicates that the components are complementary: the attention mechanism ensures high-quality multimodal content selection, which the knapsack algorithm then optimizes under length constraints.

In conclusion, the ablation study validates the necessity of each proposed component. The Multimodal Attention mechanism is vital for content quality, the Knapsack Optimization algorithm is essential for precise length control, and the Image Selection strategy contributes to the multimodal coherence of the final output. The full model achieves the best balance between summary quality and strict adherence to user-defined length constraints.

4.7. Comparative Experiment

4.7.1. Comparison Between Flickr30k and MSMO Datasets

To verify the generality of our length-customizable image-text summarization method across multimodal scenarios, particularly “short text-image pairing” and “diverse long-content multimodal” tasks, we conducted comparative experiments on the Flickr30k and MSMO datasets. This comparison shows how the method adapts to changes in text length, content diversity, and image-text semantics under consistent experimental settings.

Flickr30k is a classic short text-image pairing dataset containing 31k images (covering daily scenes, objects, and interactions), each with 5 human-written captions (15–20 tokens per caption on average). It focuses on image-centric short text summarization, in which the text serves as an image description and the task emphasizes capturing visual-semantic alignment in concise content.

To ensure a fair comparison, we use the complete joint image-text encoder and the knapsack-based length controller on both datasets, with unified hyperparameters (batch size = 32, learning rate = ). The target summary length is adjusted to the characteristics of each dataset: 50 tokens for Flickr30k and 200 tokens for MSMO, matching their respective text content sizes.

Table 5 presents the performance of our method and the baselines on both datasets. Our method maintains low length deviation on both datasets (1.7% on Flickr30k and 2.1% on MSMO, a difference of only 0.4 percentage points), significantly better than the text-only and low-matching image baselines. It avoids both excessive truncation and under-generation even for Flickr30k’s ultra-short 50-token target, confirming its adaptability to different length scales. In summary quality, it achieves ROUGE-L scores of 41.2% (Flickr30k) and 37.89% (MSMO), outperforming the text-only baseline by 6.5 and 2.74 percentage points and the low-matching image baseline by 7.3 and 4.87 percentage points on the two datasets, respectively. Its Image-Text Consistency scores are also significantly higher than those of the baselines, reaching 0.91 for caption relevance on Flickr30k. Additionally, on MSMO’s diverse content (news, product, and daily-life categories), its ROUGE-L varies by only 2.1% across categories, and it remains stable on Flickr30k’s single daily-scene category, confirming robustness to differences in content diversity.

The comparative results confirm two core advantages of the method in multimodal scenarios. The first is flexibility in length scale: the method adapts to both Flickr30k’s ultra-short 50-token targets and MSMO’s 200-token long targets with length deviations below 2.2%, showing that knapsack-based length control is not limited to a specific scale. The second is the semantic benefit of visual information: whether the dataset is image-dominated (Flickr30k) or text-dominated (MSMO), image-text consistency reaches 0.82–0.86, significantly better than the baselines. Its ROUGE-L is higher on Flickr30k because Flickr30k’s shorter texts are more focused. In summary, the method generalizes to both “short text-image pairing” and “long-content multimodal” tasks, meeting the needs of cross-scenario adaptation.

4.7.2. Comparison of Different Baseline Model Methods

If the word count is too high, the summary may become lengthy and hard to read, and may even contain unnecessary repetition or minor details, reducing its readability and informational value. Conversely, a summary with fewer words may be more concise and clear, quickly conveying the core points of the original text; such summaries suit situations that require quick access to news content or information filtering. However, if the word count is too low, the summary may omit important information or details, leading readers to misinterpret the original text. We therefore designed a set of comparative experiments, shown in Table 6: we select five news texts of different types but similar original length from the dataset and examine the quality and content integrity of the generated summaries while controlling the number of generated characters.

Performance Analysis of Knapsack-based Optimization:

Our knapsack optimization framework demonstrates significant advantages over neural baselines in length-constrained summarization.

Theoretical Guarantees: While the exact dynamic programming (DP) solution gives the theoretical optimum, our greedy approximation achieves 96.3% of the optimal ROUGE-L score while reducing computational complexity from O(nL) to O(n log n), making it feasible for real-world applications.

Length Control Precision: As shown in Table 6, both knapsack-based methods (Greedy and DP) maintain strict length compliance, significantly outperforming neural methods that rely on learned length embeddings (8.5–12.3% violation rates).

Efficiency Trade-offs and Benchmark Validation: We further quantify the efficiency of the greedy knapsack approach by comparing its computational complexity and runtime against deep-learning-only methods and the exact dynamic programming method—all tested on a real-world multi-document input scenario.

From a complexity perspective, the greedy knapsack approach has a time complexity of O(n log n) (where n is the number of candidate text segments), which is significantly lower than the O(nL) complexity of exact DP (where L denotes the maximum summary length constraint) and the complexity of deep-learning-only methods, which grows with the hidden-state dimension d (typically 256–1024).
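To make the greedy-versus-exact trade-off concrete, the sketch below contrasts a textbook 0/1 knapsack DP, whose table has (n + 1) × (L + 1) cells and therefore takes O(nL) time, with a value-density greedy whose cost is dominated by an O(n log n) sort. It assumes positive integer weights (for example, character lengths) and given item values; both functions are generic illustrations with hypothetical names, not the authors' code.

```python
def dp_knapsack(values, weights, capacity):
    """Exact 0/1 knapsack via dynamic programming in O(n * capacity) time and space.

    Assumes positive integer weights. Returns (best_value, sorted chosen indices).
    """
    n = len(values)
    # best[i][c] = best total value using only the first i items with capacity c
    best = [[0.0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, w = values[i - 1], weights[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # option 1: skip item i-1
            if w <= c and best[i - 1][c - w] + v > best[i][c]:
                best[i][c] = best[i - 1][c - w] + v  # option 2: take item i-1
    # Backtrack through the table to recover which items were taken.
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= weights[i - 1]
    return best[n][capacity], sorted(chosen)


def greedy_knapsack(values, weights, capacity):
    """Greedy by value density; O(n log n) because of the sort, but not always optimal."""
    order = sorted(range(len(values)), key=lambda i: values[i] / weights[i], reverse=True)
    chosen, used, total = [], 0, 0.0
    for i in order:
        if used + weights[i] <= capacity:
            chosen.append(i)
            used += weights[i]
            total += values[i]
    return total, sorted(chosen)


values, weights = [6, 10, 12], [1, 2, 3]
print(dp_knapsack(values, weights, 5))
print(greedy_knapsack(values, weights, 5))
```

On this small instance, the greedy picks the two densest items (value 16), whereas the DP finds the optimal pair (value 22). Both respect the capacity exactly, so length compliance is the same; only the selected value differs, which mirrors the small quality gap and large speed difference discussed in this section.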

In terms of runtime overhead (Table 7), the greedy knapsack approach achieves 35.1 ROUGE-L with an inference time of only 0.12 s per sample. In contrast, exact DP (35.8 ROUGE-L, a marginal 0.7-point improvement) incurs roughly 130× higher runtime (15.7 s per sample) because of its pseudo-polynomial O(nL) complexity. Deep-learning-only methods require 0.15–0.21 s per sample, 25–75% more than the greedy approach, because they rely on iterative token-level autoregressive generation and hidden-state updates.

This benchmark confirms that the greedy knapsack approach maintains competitive ROUGE-L performance while achieving lower complexity and runtime than deep-learning-only methods in real-world multi-document scenarios.

Comparative Advantages over Neural Baselines:

Our knapsack optimization framework outperforms neural methods in several key aspects:

Deterministic Output: Unlike neural models that exhibit output variability (ROUGE-L std = 2.1), our method produces consistent results (std = 0.8) due to its deterministic selection process.

Resource Efficiency: The knapsack approach requires no trainable parameters for length control, compared to 128–145 M parameters in neural baselines, making it more suitable for resource-constrained environments.

Interpretability: The value-density ranking ($v_i / w_i$, the value of a candidate segment divided by its length) provides transparent selection criteria, whereas neural methods operate as black boxes with limited explainability.

The 3.7% performance gap between the greedy and exact DP solutions is justified by the 130× speed advantage, which is particularly important when processing large-scale datasets. This trade-off makes the greedy knapsack approach more suitable for production systems in which both length precision and computational efficiency are critical.

4.7.3. Impact of Image Selection

To validate the role of image selection, we design contrastive experiments centered on text-image semantic matching degree, leveraging the dataset’s inherent property that each image is paired with a text description closely aligned with the main text. The experiment aims to quantify how image selection affects the core summarization task.

Experimental Setup

We define text-image semantic matching degree as the semantic similarity between an image’s text description and the corresponding main-text paragraph, calculated with a BERT-based semantic similarity model. Three experimental groups are constructed to ensure single-variable control:

Control Group (No Image): Adopts the original text-only input paradigm, with no image information involved. It serves as a benchmark to measure task performance without image assistance.

High-Matching Image: Selects samples whose text-image semantic matching degree is ≥80%. The image’s text description is fused with the main text as input (via a cross-modal embedding layer) to test the positive auxiliary effect of high-matching images.

Low-Matching Image: Selects samples whose text-image semantic matching degree is ≤40%. The input mode is the same as in the High-Matching Image group, in order to test whether low-matching images are ineffective or interfere with the model.
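The grouping rule above can be sketched as follows. This assumes that the BERT-based model produces a fixed-size embedding for the image description and for the main-text paragraph (the encoder itself is not shown), that cosine similarity is used as the matching degree, and that 80% and 40% correspond to similarity values of 0.80 and 0.40. The functions `cosine` and `assign_group` are hypothetical helpers.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def assign_group(caption_vec, paragraph_vec, high=0.80, low=0.40):
    """Bucket a sample by text-image semantic matching degree.

    Embeddings are assumed to come from a BERT-based sentence encoder (not shown).
    Returns "high", "low", or None for samples between the two thresholds,
    which belong to neither image group.
    """
    score = cosine(caption_vec, paragraph_vec)
    if score >= high:
        return "high"
    if score <= low:
        return "low"
    return None


print(assign_group([1.0, 0.0], [1.0, 0.0]))  # identical direction
print(assign_group([1.0, 0.0], [0.0, 1.0]))  # orthogonal
print(assign_group([1.0, 0.0], [1.0, 1.0]))  # similarity of about 0.71
```

Samples falling between the thresholds are excluded from both image groups, which keeps the high- and low-matching conditions clearly separated.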

All groups use the same model architecture (our proposed method in Section 3) and hyperparameters (batch size = 16, learning rate = ) to eliminate confounding factors. The experimental dataset is a subset of MSMO (filtered to retain samples with complete image-text pairs), with 1200 samples per group.

Evaluation Metrics

In addition to the core ROUGE metrics (ROUGE-1/2/L) for summarization quality, we introduce two auxiliary metrics to characterize image-text interaction:

Image-Text Consistency: Evaluated by the CLIP model, measuring the semantic similarity between the generated summary and the image’s text description (range: 0–1, higher values indicate better alignment between the summary and image information).

Key Information Retention: Counts the recall rate of key entities that appear in both the main text and the image’s text description, to verify whether images promote the retention of cross-modal key information.
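Key Information Retention, as defined above, is a recall over the entities shared by the main text and the image description. A minimal sketch follows, assuming the entity sets have already been produced by an upstream entity extractor (not shown); the function name `key_info_retention` is hypothetical.

```python
def key_info_retention(main_entities, caption_entities, summary_entities):
    """Recall of key entities, i.e., entities present in both the main text and the image description.

    Entity sets are assumed to come from an upstream extractor (not shown).
    Returns None when the main text and the description share no entities.
    """
    key = set(main_entities) & set(caption_entities)
    if not key:
        return None
    retained = key & set(summary_entities)
    return len(retained) / len(key)


main = {"Paris", "Macron", "summit", "EU"}
caption = {"Macron", "summit", "flag"}
summary = {"Macron", "EU"}
print(key_info_retention(main, caption, summary))  # key = {Macron, summit}; 1 of 2 kept
```

Returning None rather than 0 for samples without shared entities avoids counting them as failures when the recall is averaged over a group.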

Experimental Results and Analysis

Table 8 presents the performance of each group. It can be observed that:

Positive effect of high-matching images: This group outperforms the Control Group on all metrics: ROUGE-1/2/L increase by 2.76, 2.48, and 2.74 percentage points, respectively; Image-Text Consistency reaches 0.82; and Key Information Retention rises by 17.33 percentage points. This confirms that highly semantically matched images can supplement cross-modal key information via their text descriptions, helping the model capture core content more comprehensively and thus improving summarization quality and key information retention.

Ineffectiveness of low-matching images: This group shows no significant difference from the Control Group, with Image-Text Consistency of only 0.45 and Key Information Retention close to that of the Control Group. The root cause is semantic misalignment between the image and the main text: the image’s text description introduces irrelevant information, which fails to assist the model and can even interfere with its judgment of the core content.

Connection with dataset characteristics: The dataset’s attribute of “image text description highly aligned with main text” is a prerequisite for images to exert positive effects. Using only low-matching samples would lead to the mistaken conclusion that images have marginal impact; in practice, selecting high-matching images based on the dataset’s image-text pairing attribute can effectively leverage image information to optimize task performance.

In summary, by addressing the length control problem in summarization, our model achieves significant improvements in the experimental results. Our length control strategy therefore offers distinct advantages for multimodal summary generation, as it adapts flexibly to different length requirements while maintaining summary quality.

4.8. Human Evaluation

To complement automatic metrics and verify the practical quality of length-customizable image-text summaries, we conduct a manual evaluation on the MSMO dataset (target summary length = 200 tokens). We randomly sample 500 image-text clusters from the test set and generate summaries using three candidate models: our method, a text-only baseline (Attention model), and a low-matching image baseline (Transformer).

Each sample is scored by three participants on a 1–5 scale across three key dimensions:

(a) Relevance (Rele.): Whether the summary captures core information from both source text and matched images;

(b) Coherence (Cohe.): Sentence-level logical flow of the summary, especially for length-customized outputs;

(c) Image-Text Alignment (Align.): Factual consistency between summary content and image information.

Results in Table 9 show that our method achieves the highest scores in all three dimensions: it outperforms the text-only baseline by 0.58 (Rele.), 0.51 (Cohe.), and 1.42 (Align.) points, and the low-matching image baseline by 0.73 (Rele.), 0.80 (Cohe.), and 1.19 (Align.) points. Notably, our method’s advantage in Image-Text Alignment confirms the value of integrating high-matching images, while its strong Coherence score supports the effectiveness of the knapsack-based length control mechanism. This human evaluation further confirms that our method generates high-quality, length-customizable image-text summaries that meet human reading expectations.

5. Conclusions

This paper addresses the length-customizable image-text summarization task, with the core goal of optimizing length control while enhancing summary quality through joint image-text encoding and a knapsack problem-based mechanism. Validated on the MSMO dataset, this work yields concrete, quantifiable findings. In standard summarization tasks, the method outperforms the compared models on the core ROUGE metrics, with particularly notable improvements over the text-only and low-matching image groups. This confirms that the synergy between high-semantic-alignment image-text encoding and knapsack-based length control directly improves summary quality. For social media scenarios, where concise, actionable content is critical, the method reduces user reading time while maintaining high key information retention, indicating its preliminary value for user-centric content delivery.

This work has two notable limitations to acknowledge. First, its LSTM-based decoder struggles with long-range semantic dependencies in ultra-long image-text clusters—this reduces ROUGE-L performance versus transformer-based decoders and limits use in large-scale multimodal scenarios. Second, limited baseline comparisons may overestimate the method’s relative performance in multimodal length control.

In the future, we will focus on two key directions. First, we will build a new multimodal dataset covering diverse domains (including news, scientific research, technical documentation, and social media) to mitigate the domain limitation of the MSMO dataset; the new dataset will be designed to better simulate real-world unstructured multimodal data, thereby improving the generalization of our method. Second, we will extend the method for edge deployment by designing a lightweight version of the knapsack optimization module with reduced computational complexity, enabling it to run on edge devices for real-time multimodal summarization tasks.