Abstract

The integration of Connected Cruise Control (CCC) and wireless Vehicle-to-Vehicle (V2V) communication technology aims to improve driving safety and stability. To enhance CCC’s adaptability in complex traffic conditions, in-depth research into intelligent asymmetrical control design is crucial. This paper investigates intelligent CCC controller design by jointly considering dynamic network-induced delays and target vehicle speeds. In particular, a deep reinforcement learning (DRL)-based controller design method is introduced that uses the Twin Delayed Deep Deterministic Policy Gradient (TD3) algorithm. To generate intelligent asymmetrical control strategies, the actor and critic networks are trained to maximize a quadratic reward function determined by the control inputs and the vehicle state errors acquired through interaction with the traffic environment. To counteract performance degradation due to dynamic platoon factors, the impact of dynamic target vehicle speeds and previous control strategies is incorporated into the Markov Decision Process (MDP) definition, the CCC problem formulation, and the vehicle dynamics analysis. Simulation results show that the proposed intelligent asymmetrical control algorithm is well-suited to dynamic traffic scenarios with network-induced delays and outperforms the DQN- and DDPG-based baselines.

1. Introduction

In recent years, the challenges of road safety and traffic congestion have grown increasingly urgent [1,2,3,4]. The Advanced Driver Assistance System (ADAS) has been widely studied as a solution to ease driving burdens and enhance road safety [5]. As an innovative ADAS technology, Connected Cruise Control (CCC) employs Vehicle-to-Vehicle (V2V) communication to develop perception ability and maintain stable traffic flow within a diverse vehicle platoon [6]. In particular, CCC has a flexible network topology and asymmetrical control ability, enabling platoon members to communicate with each other to share their motion information. Through the received information, the CCC controller empowers autonomous vehicles to achieve adaptive longitudinal control, thereby boosting safety, comfort, and fuel efficiency [7,8,9,10].

Safe, robust, and asymmetrical controller design is a crucial challenge in CCC research. Various factors induced by V2V communication, such as network-induced delays and connection topology, can significantly reduce system stability and security [11,12,13]. In [14], in order to reduce the system performance degradation introduced by network-induced delays, a predictive feedback control method is introduced based on the system state estimation. In [15], a semi-constant time gap policy is adopted to compensate for delays by utilizing historical information from the preceding vehicle rather than current states. However, works [14,15] assume a fixed constant delay, whereas network-induced delays should be considered stochastic and time-varying due to the complexity of vehicle communication networks [16,17,18,19]. In [20], the impact of stochastic time latency on string stability in connected vehicles is explored by evaluating the mean and covariance features of system dynamics. In [21], an optimal control strategy incorporating stochastic network-induced delays is derived as a two-step iteration. Although these studies address the influence of uncertain factors in wireless communication, they focus on static traffic situations or deterministic system models. In fact, the CCC system faces various disturbances, such as unpredictable acceleration or deceleration of the leading vehicle [22]. In other words, in order to adapt to dynamic traffic conditions, the expected state of the platoon must also exhibit time-varying features. Therefore, it is essential to develop a robust asymmetrical controller capable of compensating for these system disturbances and uncertainties.

Given the dynamic and unpredictable nature of traffic environments, reinforcement learning (RL) and deep reinforcement learning (DRL) have emerged as promising solutions [23,24,25]. Unlike most traditional model-based optimization techniques, RL agents learn control strategies directly from their interaction with the environment by training parameterized models. One of the earliest works to apply RL to the design of safe controllers for longitudinal tracking is [26]. In [27], an automated driving framework employing Deep Q Networks (DQN) is presented and tested in a simulation environment. However, vehicle acceleration regulation is a continuous-action problem [28]. The approaches above rely on a discretized action set, which cannot represent every acceleration value and unavoidably leads to oscillatory behavior and performance degradation. To enable learning in continuous action domains, the Deterministic Policy Gradient (DPG) [29] was proposed, followed by further algorithms such as Deep Deterministic Policy Gradient (DDPG), Soft Actor Critic (SAC), Advantage Actor Critic (A2C), and Twin Delayed DDPG (TD3) [30,31,32].

Unfortunately, intelligent approaches to CCC controller design in dynamic traffic situations have seldom been explored. In addition, existing DRL-based algorithms focus on cases without network-induced delays. Even when the delay is investigated, its impact on the Markov Decision Process (MDP) definition for CCC is overlooked. Because CCC is a real-time control application, the network-induced delay is one of the most crucial factors influencing control stability. Consequently, this article proposes an innovative DRL-based algorithm to address the asymmetrical cruise control optimization problem for dynamic vehicle scenarios in the presence of network-induced delays. The key contributions are outlined below.

- A novel DRL-based intelligent controller is designed for dynamic traffic conditions with network-induced delays. Through interaction with the traffic environment, the intelligent asymmetrical control strategy is updated via self-learning to meet the emerging requirements of cruise control systems.

- To account for the inevitable network-induced delays caused by wireless V2V communication, the effects of previous control strategies are analyzed in optimization problem formulation, dynamics analysis, and MDP definition for CCC. This approach effectively mitigates performance degradation due to network-induced delays and helps ensure the stability of the CCC system.

- A CCC algorithm employing the Twin Delayed Deep Deterministic Policy Gradient (TD3) is proposed to improve the asymmetrical adaptability of CCC systems in dynamic traffic conditions with varying desired velocities. By completing two stages of sampling and training, optimal control schemes can be generated through the maximization of a quadratic reward function, which is defined by state errors and control inputs.

2. System Modeling and Optimization Problem Formulation

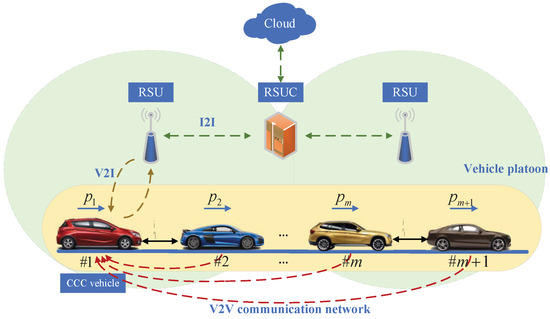

As depicted in Figure 1, the CCC system, based on the Internet of Vehicles, primarily comprises the vehicles, Roadside Units (RSUs), and the cloud. Specifically, this work focuses on a heterogeneous platoon of multiple vehicles traveling in a single lane. The CCC vehicle with the edge controller is at the tail, and the remaining vehicles are human-driven vehicles following the target vehicle m + 1 at the front of the platoon. Through V2V communication, the cruise control vehicle can obtain motion information from preceding vehicles, such as acceleration, velocity, and headway. Based on this information, the controller generates suitable real-time control strategies to ensure asymmetrical control stability.

Figure 1. The CCC system architecture based on the Internet of Vehicles.

For a human-driven vehicle $i$, the system dynamics can be described using the classic car-following model [6]

where $h_i$ denotes the headway, $v_i$ represents the velocity, the dot denotes the derivative with respect to time $t$, $\alpha_i$ and $\beta_i$ are system parameters determined by driver behavior, and $V(\cdot)$ is the velocity policy function, given by

where $v_{\max}$ is the maximum velocity, and $h_{\min}$ and $h_{\max}$ are the minimum and maximum headway, respectively.
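For reference, one form of the car-following model of [6] that matches the definitions above is sketched below. The symbols $h_i$, $v_i$, $\alpha_i$, $\beta_i$, $V(\cdot)$, $v_{\max}$, $h_{\min}$, and $h_{\max}$ are assumed notation, and the cosine-shaped middle branch of $V(h)$ is one smooth choice used in related CCC work, not necessarily the exact policy adopted here.

```latex
\begin{align}
\dot{h}_i(t) &= v_{i+1}(t) - v_i(t), \\
\dot{v}_i(t) &= \alpha_i \bigl( V(h_i(t)) - v_i(t) \bigr)
              + \beta_i \bigl( v_{i+1}(t) - v_i(t) \bigr),
\end{align}
\begin{equation}
V(h) =
\begin{cases}
0, & h \le h_{\min}, \\[4pt]
\dfrac{v_{\max}}{2} \left[ 1 - \cos\!\left( \pi \dfrac{h - h_{\min}}{h_{\max} - h_{\min}} \right) \right], & h_{\min} < h < h_{\max}, \\[4pt]
v_{\max}, & h \ge h_{\max}.
\end{cases}
\end{equation}
```

In this form, the first gain weights the gap between the headway-dependent desired velocity and the actual velocity, while the second weights the relative velocity to the preceding vehicle.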

For a CCC vehicle, the dynamics are given by [11]

where $\tau(t)$ represents the time-varying delay, and $u(t)$ refers to the control strategy, namely, the acceleration.
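The two vehicle types can be contrasted in a few lines of code. The following is a minimal sketch, not the authors' implementation: the function names are hypothetical, the cosine-shaped range policy is an assumed choice, the default values assume that the simulation settings correspond to the maximum velocity, headway bounds, and driver gains, and the delay is assumed to be expressed as a whole number of sampling steps.

```python
import math

def range_policy(h, v_max=30.0, h_min=5.0, h_max=35.0):
    """Desired velocity for headway h (assumed cosine-shaped range policy)."""
    if h <= h_min:
        return 0.0
    if h >= h_max:
        return v_max
    return 0.5 * v_max * (1.0 - math.cos(math.pi * (h - h_min) / (h_max - h_min)))

def human_acceleration(h, v, v_lead, alpha=0.6, beta=0.6):
    """Car-following acceleration of a human-driven vehicle."""
    return alpha * (range_policy(h) - v) + beta * (v_lead - v)

def ccc_acceleration(control_history, delay_steps):
    """Acceleration of the CCC vehicle: the command issued delay_steps samples ago.

    control_history[-1] is the most recent command. If the history is
    shorter than the delay, no command has arrived yet and 0.0 is returned.
    """
    if delay_steps >= len(control_history):
        return 0.0
    return control_history[-1 - delay_steps]
```

The key difference is that the human-driven vehicle reacts to its current headway and velocity, whereas the CCC vehicle executes a command that was computed earlier and arrives after the network-induced delay.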

In the vehicle platoon, each vehicle aims to achieve the leader velocity and the corresponding desired headway. The dynamics of the desired states are given by

where the acceleration of the leading vehicle is time-varying due to the dynamic target vehicle velocity.

As a result, the velocity and headway errors can be represented as the deviations of each vehicle's velocity and headway from the desired states. Based on (1) and (3), and the first-order approximation of the velocity policy, the error dynamics for the vehicles can be expressed as follows.

where .

Then, based on (7), the dynamics in the k-th sampling interval in the discrete-time domain can be deduced as

where

and is the time-varying delay in the k-th sampling interval, which is commonly taken to be stochastic, n is a non-negative integer, is the sampling interval, and is the relevant control signal.

Note that, due to the complexity of the traffic environment and the dynamics of driving vehicles, the network-induced delay makes two of the coefficient terms in (8) time-varying, and the variable desired state introduces an additional uncertain term.

The objective of the cruise control is to minimize the velocity and headway errors, namely, to reach the equilibrium at which both errors vanish. The optimal control problem of the CCC system can then be expressed as follows using the common quadratic cost function:

where N represents the time length of cruise control, the quadratic state term penalizes the errors, the quadratic input term represents the effect of control inputs, the expectation operator accounts for the stochastic nature of the desired state and time delays, and the two weighting terms are pre-determined system parameters.
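As a sketch of this objective, a finite-horizon quadratic cost of the kind described can be written as follows. Here $x_k$ is the error state, $u_k$ is the control input, and $W_x$ and $W_u$ stand in for the two pre-determined weights; all four symbols are assumed notation, and the exact indexing or any terminal term of the original formulation is not reproduced.

```latex
\begin{equation}
\min_{u_0, \dots, u_{N-1}} \; J
= \mathbb{E}\!\left[ \sum_{k=0}^{N-1}
\left( x_k^{\top} W_x \, x_k + u_k^{\top} W_u \, u_k \right) \right]
\end{equation}
```

A larger $W_x$ favors tight tracking of the desired headway and velocity, while a larger $W_u$ favors gentler acceleration commands.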

3. DRL-Based Controller Design

Considering the time-varying communication delay and desired states, an intelligent DRL-based asymmetrical controller is designed to address the cruise control problem. Since the CCC’s actions lie in a continuous range, discretizing the action space would considerably increase computational complexity and degrade system performance. TD3, a DRL algorithm, has demonstrated promising results in continuous control and has been validated in various applications. In what follows, the MDP for CCC is defined first, and TD3 is then applied to learn the CCC policy.

3.1. MDP Definition of CCC

An MDP, typically used to define the DRL problem, can be described as a tuple comprising the action space, state space, reward function, and transition dynamics. At each time slot, the agent, which is the decision-making core of DRL, observes the environment state in real time, executes an action based on its policy, and receives a reward. The aim of DRL is to derive the policy that maximizes the expected discounted cumulative reward, with $\gamma$ being the discount factor. In the following, the MDP for the intelligent cruise control is defined in conjunction with the analysis in Section 2.
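To make the objective concrete, the discounted cumulative reward of one trajectory can be computed as in the sketch below. The function name and the default discount factor of 0.99 are illustrative assumptions, not values taken from this work.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of gamma**k * r_k over a reward sequence, accumulated backwards."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total
```

For example, `discounted_return([1.0, 1.0, 1.0], gamma=0.5)` evaluates to 1.75, since the three rewards are weighted by 1, 0.5, and 0.25.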

(1) State: Similar to (6), the state of the CCC system can be expressed as

where the first entries of the state vector are the velocity and headway errors. In contrast to the typical state design, the state vector is augmented with the delayed control inputs in order to account for the influence of network-induced delays.

In accordance with (3), the state space can be further specified as follows:

where $a_{\max}$ and $a_{\min}$ are the acceleration and deceleration limits, respectively.
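The delay-aware state design can be illustrated with a short sketch. The class below is hypothetical: the name `DelayAwareState`, the ordering of the entries, the number of stored commands, and the default limits of ±5 are assumptions made for illustration only.

```python
from collections import deque

def clip(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))

class DelayAwareState:
    """Error state augmented with the last n_delay control inputs."""

    def __init__(self, n_delay, a_min=-5.0, a_max=5.0):
        self.a_min, self.a_max = a_min, a_max
        # Oldest command first; the history starts with zero commands.
        self.past_controls = deque([0.0] * n_delay, maxlen=n_delay)

    def push_control(self, u):
        """Record a new command after clipping it to the acceleration limits."""
        u = clip(u, self.a_min, self.a_max)
        self.past_controls.append(u)
        return u

    def vector(self, headway_errors, velocity_errors):
        """Flat state: errors followed by the stored controls (newest last)."""
        return list(headway_errors) + list(velocity_errors) + list(self.past_controls)
```

Keeping the not-yet-applied commands in the state restores the Markov property: the next error state depends on commands that are still in transit, so the agent needs to see them to predict the effect of a new action.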

(2) Action: The objective of the agent is to form the cruise control policy. Therefore, the action can be given by

(3) Transition dynamics: The transition dynamics take action and current state as inputs, yielding the subsequent state as output. Thus, based on (8), (10), and (12), the transition dynamics can be formulated as

where

(4) Reward function: Similar to (9), the headway error, velocity error, and control input serve as the principal components of the reward design. Unlike in optimization theory, where the objective is to minimize a cost function, DRL seeks to maximize the cumulative reward. Consequently, the reward is defined as the negative of the quadratic cost, as follows

where

For a CCC design with control length N, the return of a training episode can be determined by
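The reward in item (4) can be sketched as a negated quadratic cost. The version below assumes diagonal weights and a scalar control input; the function name and the weight arguments are hypothetical.

```python
def quadratic_reward(errors, control, error_weights, control_weight):
    """Negative quadratic cost with diagonal weights (assumed form)."""
    error_cost = sum(w * e * e for w, e in zip(error_weights, errors))
    control_cost = control_weight * control * control
    return -(error_cost + control_cost)
```

The reward is never positive and reaches zero only when all errors and the control input are zero, so maximizing it is equivalent to minimizing the corresponding quadratic cost.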

3.2. TD3-Based CCC Algorithm

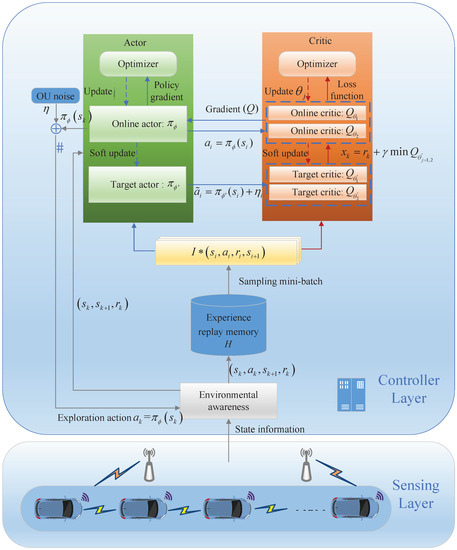

Figure 2 illustrates the structure of the TD3-based CCC algorithm, which primarily comprises a sensor layer and a controller layer. The sensor layer monitors surrounding traffic conditions and gathers vehicle state data via the wireless network. The controller layer stores the platoon data obtained from the sensor layer and is trained within the TD3 framework. After gaining sufficient experience, the controller generates near-optimal asymmetrical control strategies for the cruise control vehicle in real time.

Figure 2. The architecture of the TD3-based CCC algorithm.

TD3 belongs to the actor–critic family and consists of six deep neural networks: the online actor $\pi_{\phi}$, the target actor $\pi_{\phi'}$, two online critics $Q_{\theta_1}$ and $Q_{\theta_2}$, and two target critics $Q_{\theta'_1}$ and $Q_{\theta'_2}$. Here, $Q$ denotes the evaluation function, $\pi$ represents the actor policy, and $\phi$ and $\theta$ are network parameters. The proposed TD3-based cruise control method trains the actor network to learn a near-optimal control strategy, while training the critic networks to find appropriate Q values for evaluating the effectiveness of the control strategy.

The TD3-based algorithm consists of two phases: sampling and training.

(1) Sampling: Initially, the velocity and headway information is gathered through V2V communication to form the starting state. In the k-th time slot, the online actor generates the action from the current state. Considering the continuous action space, the exploration policy is designed as follows

where the added noise is a temporally correlated disturbance generated by an Ornstein–Uhlenbeck (OU) process.
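A minimal sketch of OU-based exploration is given below. It uses an Euler-Maruyama discretization; the parameter values (`theta`, `sigma`, `dt`) and the action limits of ±5 are illustrative assumptions rather than settings reported in this work.

```python
import random

class OUNoise:
    """Euler-Maruyama discretization of an Ornstein-Uhlenbeck process."""

    def __init__(self, theta=0.15, sigma=0.2, dt=0.1, mu=0.0, seed=None):
        self.theta, self.sigma, self.dt, self.mu = theta, sigma, dt, mu
        self.rng = random.Random(seed)
        self.value = mu

    def sample(self):
        """Advance the process one step and return the new noise value."""
        drift = self.theta * (self.mu - self.value) * self.dt
        diffusion = self.sigma * (self.dt ** 0.5) * self.rng.gauss(0.0, 1.0)
        self.value += drift + diffusion
        return self.value

def explore(actor_action, noise, a_min=-5.0, a_max=5.0):
    """Add OU noise to the actor output and clip to the acceleration limits."""
    return max(a_min, min(a_max, actor_action + noise.sample()))
```

Because each sample depends on the previous one, consecutive perturbations push the acceleration in a similar direction for several steps, which tends to explore a physical system with inertia more effectively than independent noise.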

Upon taking the action in (16), the subsequent state can be observed through the transition dynamics in (13), and the feedback reward can be obtained as in (14). The transition tuple, consisting of the current state, action, reward, and subsequent state, is stored in the experience replay buffer. Through interaction with the environment, a sufficient number of samples can be generated.
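The experience replay buffer used in the sampling phase can be sketched as a fixed-capacity queue with uniform random sampling. The class name, the capacity handling, and the sampling without replacement are assumptions for illustration.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity, seed=None):
        # Once the buffer is full, the oldest transitions are dropped first.
        self.storage = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state):
        """Store one transition."""
        self.storage.append((state, action, reward, next_state))

    def sample(self, batch_size):
        """Uniformly sample a mini-batch without replacement."""
        return self.rng.sample(list(self.storage), batch_size)

    def __len__(self):
        return len(self.storage)
```

Sampling uniformly from stored transitions breaks the temporal correlation between consecutive samples, which helps stabilize the critic updates.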

(2) Training: The training process optimizes both the critic and actor networks. In each episode, a mini-batch of I samples is randomly selected from the experience buffer H for training. Prior to updating the critics, the target action is smoothed and regularized to reduce the variance caused by function approximation errors, which is achieved by

where the added noise is clipped to keep the target action within a small range.
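Target policy smoothing can be sketched as follows. The noise standard deviation, the clip bound, and the action limits are assumed values, and `target_actor` is a hypothetical callable standing in for the target actor network.

```python
import random

def clip(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))

def smoothed_target_action(target_actor, next_state, rng,
                           noise_std=0.2, noise_clip=0.5,
                           a_min=-5.0, a_max=5.0):
    """Target action with clipped Gaussian noise, kept within the action limits."""
    noise = clip(rng.gauss(0.0, noise_std), -noise_clip, noise_clip)
    return clip(target_actor(next_state) + noise, a_min, a_max)
```

The inner clip bounds the perturbation itself, and the outer clip keeps the perturbed action physically admissible. Averaging the target over nearby actions in this way discourages the critic from developing sharp, exploitable peaks.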

The online critics are trained to update their parameters by minimizing a mean square error loss function given as

where the critic output is obtained by feeding the sampled state and action into the online critics, and the target Q value is defined as

In (19), the target combines the current reward, as determined by (14), with the subsequent Q value obtained through the target critics, evaluated at the next action given by the smoothed target policy in (17). To address the potential overestimation of the Q value, the minimum of the two target critics' estimates is chosen.
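The clipped double-Q target and the critic loss can be sketched with plain numbers in place of network outputs. The function names, the default discount factor, and the `done` flag for terminal transitions are assumptions added for illustration.

```python
def td3_target(reward, next_q1, next_q2, gamma=0.99, done=False):
    """Reward plus the discounted minimum of the two target-critic estimates."""
    if done:
        return reward
    return reward + gamma * min(next_q1, next_q2)

def critic_loss(q_values, targets):
    """Mean squared error between critic outputs and targets over a mini-batch."""
    return sum((q - y) ** 2 for q, y in zip(q_values, targets)) / len(q_values)
```

For instance, with a reward of -1.0, target-critic estimates of 4.0 and 2.0, and a discount factor of 0.5, the target is -1.0 + 0.5 × 2.0 = 0.0. Both online critics are regressed toward this same target.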

To limit the accumulation of errors during training, the actor and the target networks are updated only once every e critic updates. Specifically, the online actor updates its parameters using the policy gradient as follows

where ∇ is the gradient operator.

The policy gradient adjusts the parameters of the online actor network so that the actions it selects yield higher Q values.
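In standard TD3, this update takes the deterministic policy gradient form sketched below, with the gradient computed through the first online critic only. The symbols $\pi_{\phi}$, $Q_{\theta_1}$, and $s_i$ follow common TD3 notation and are assumptions about how the update is written here; $I$ is the mini-batch size.

```latex
\begin{equation}
\nabla_{\phi} J(\phi) \approx \frac{1}{I} \sum_{i=1}^{I}
\nabla_{a} Q_{\theta_1}(s_i, a) \Big|_{a = \pi_{\phi}(s_i)} \,
\nabla_{\phi} \pi_{\phi}(s_i)
\end{equation}
```

The first factor indicates how the critic's value changes with the action, and the second indicates how the action changes with the actor parameters, so their product gives the direction in parameter space that raises the estimated Q value.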

Next, for the target networks, their parameters are updated using a soft update method, which can be expressed as

where the soft-update rate is a constant.
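The soft update is a convex combination of the target and online parameters. The sketch below treats the parameters as flat lists of floats; the function name and the default rate of 0.005 are assumptions.

```python
def soft_update(target_params, online_params, rate=0.005):
    """Return target parameters moved a fraction `rate` toward the online ones."""
    return [(1.0 - rate) * t + rate * o
            for t, o in zip(target_params, online_params)]
```

With a small rate, the target networks change slowly, which keeps the regression target of the critics stable between updates.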

Finally, after sufficient training, the optimal CCC policy can be obtained as follows

where the parameters are those of the trained online actor.

The comprehensive TD3-based CCC algorithm is outlined in Algorithm 1.

Algorithm 1. TD3-based CCC algorithm
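The interplay of the two phases and the delayed actor update can be sketched as a scheduling loop. This is only a control-flow skeleton under stated assumptions, not the authors' algorithm: the function name, the warm-up period, and the three callbacks (`collect`, `update_critics`, `update_actor_and_targets`) are hypothetical placeholders for the sampling step, the critic update, and the actor and target-network update.

```python
def run_td3_schedule(total_steps, warmup_steps, policy_delay,
                     collect, update_critics, update_actor_and_targets):
    """Drive sampling and training; return the number of actor updates performed."""
    actor_updates = 0
    critic_updates = 0
    for step in range(total_steps):
        # Sampling: act in the environment and store the transition.
        collect(step)
        if step < warmup_steps:
            continue  # not enough experience collected yet
        # Training: the critics are updated at every step after warm-up.
        update_critics(step)
        critic_updates += 1
        # The actor and target networks are updated once per policy_delay critic updates.
        if critic_updates % policy_delay == 0:
            update_actor_and_targets(step)
            actor_updates += 1
    return actor_updates
```

With 10 steps, a warm-up of 4 steps, and a policy delay of 2, this schedule performs 6 critic updates and 3 actor updates.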

4. Simulations

In this section, simulations are presented to compare the performance of the proposed intelligent asymmetrical control algorithm with that of existing methods. A platoon consisting of 5 vehicles is analyzed, where the tail vehicle operates under CCC, while the remaining vehicles are human-driven. Similar to [11,20], the following constraint parameters are used: maximum velocity $v_{\max}$ = 30 m/s, maximum headway $h_{\max}$ = 35 m, minimum headway $h_{\min}$ = 5 m, acceleration limit $a_{\max}$ = 5 m/s², deceleration limit $a_{\min}$ = −5 m/s², and driver parameters $\alpha_i$ = $\beta_i$ = 0.6. The sampling interval is 0.1 s, and the time-varying communication delay is modeled as a bounded random variable. The simulation parameter settings are summarized in Table 1.

Table 1. Simulation parameter settings.

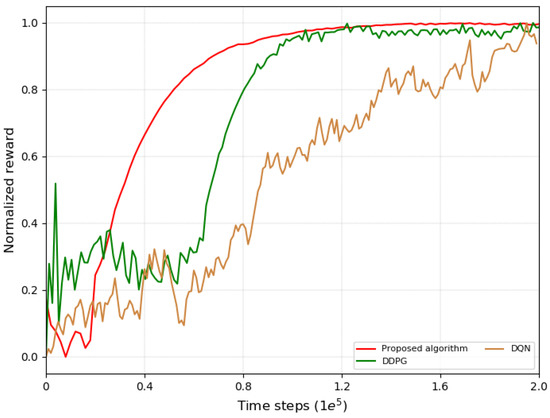

We evaluate the learning performance of the designed algorithm against that of other classical DRL algorithms (i.e., DQN in [27] and DDPG in [30]) that do not consider time-varying factors. The hyper-parameters comprise the total number of training steps, the mini-batch size (64), the learning rates, the discount factor, the noise clip, and the policy delay. Figure 3 shows the normalized reward against the training step. The results demonstrate that the proposed algorithm learns most efficiently and converges first. DDPG ranks second in learning efficiency, but its reward still fluctuates after convergence. DQN has the lowest learning efficiency and fails to converge within the limited training. This indicates that learning a CCC strategy in a complex dynamic environment is challenging, and that an MDP design that accounts for the time-varying communication delay and desired state may aid learning.

Figure 3. Normalized rewards.

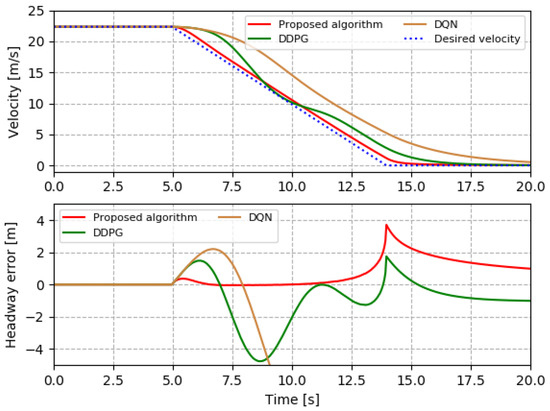

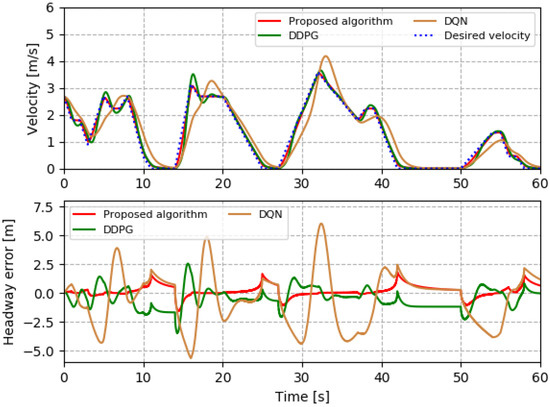

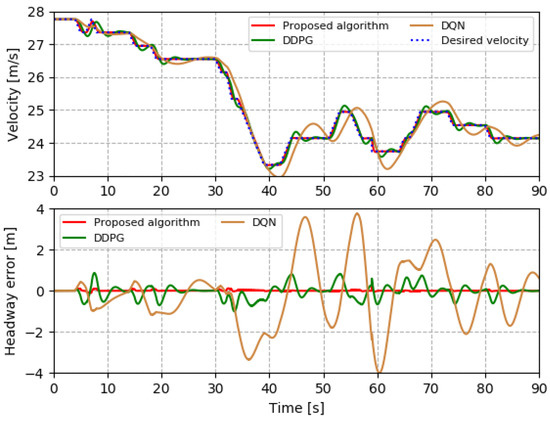

Next, a real-world dataset named DACT [33] is used to validate the effectiveness of the proposed DRL-based intelligent control algorithm. The DACT dataset is a collection of driving trajectories from Columbus, Ohio, and can be divided into multiple segments labeled according to operation modes, such as acceleration and deceleration. In particular, three traffic scenarios are selected from the DACT dataset: a significant speed drop, representing a harsh brake in an emergency situation; speed variations around zero, representing stop-and-go traffic in congestion; and a change in average speed, representing a change in the speed limit in a road work area. As shown in Figures 4–6, the proposed controller can accurately execute control decisions and track the desired states under all three traffic scenarios. In contrast, a controller trained by a DRL algorithm that does not consider time-varying factors may exhibit greater speed variations and headway errors. For example, as shown in Figure 4, to achieve a harsh brake, the proposed algorithm provides a fast control response and constant deceleration, while the other two algorithms respond slowly, which leads to an overly small headway and traffic safety problems. In the traffic congestion and road work scenarios of Figures 5 and 6, the other two algorithms have obvious tracking errors, and their speed adjustments are more abrupt, which not only reduces driving comfort but also endangers following and preceding vehicles. Therefore, the proposed controller has significant advantages in tracking accuracy, comfort, and safety. In addition, the results on the real-world DACT dataset indicate that the proposed intelligent delay-aware control method has potential practical applications, such as urban driving control.

Figure 4. Harsh brake in emergency situation.

Figure 5. Stop-and-go traffic in congestion.

Figure 6. Speed limit changes in work zone.

5. Conclusions

In this study, we propose a DRL-based asymmetrical control algorithm for longitudinal control in CCC vehicles. The strategy considers time-varying traffic conditions and dynamic network-induced delays to improve traffic flow stability. Real-time motion information of the vehicle platoon is gathered through V2V wireless communication. As the vehicle’s action space is continuous, a TD3-based method for intelligent cruise control is introduced. After training, the CCC strategy is obtained by maximizing a reward function defined by the headway and velocity errors as well as the control input. Simulation results indicate that the developed controller effectively mitigates the impact of communication delays and adapts to dynamic traffic conditions. Furthermore, the proposed asymmetrical control algorithm outperforms the DQN- and DDPG-based baselines in terms of control performance. This work considers the dynamic target vehicle velocity and network-induced delays. Other potential system constraints, such as bandwidth restrictions and unreliable transmission, and more complicated traffic scenarios with multiple CCC vehicles are left for future work.

Author Contributions

Conceptualization, L.L., Z.W. and C.F.; methodology, L.L., S.J., Y.X., Z.W., C.F. and M.L.; software, S.J., Y.X., Z.W. and Y.S.; validation, L.L., S.J., Y.X., Z.W., C.F., M.L. and Y.S.; formal analysis, L.L., S.J., Y.X. and C.F.; investigation, S.J., Y.X. and C.F.; data curation, S.J., Y.X. and Y.S.; writing-original draft preparation, L.L., S.J., Y.X., Z.W., C.F., M.L. and Y.S.; writing-review and editing, L.L., S.J., Y.X., Z.W., C.F., M.L. and Y.S.; project administration, L.L. and Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Beijing Natural Science Foundation 4222002 and L202016, the Foundation of Beijing Municipal Commission of Education KM201910005026, and the Beijing Nova Program of Science and Technology Z191100001119094.

Data Availability Statement

The data presented in this study are available on request from the corresponding authors.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zadobrischi, E.; Cosovanu, L.M.; Dimian, M. Traffic flow density model and dynamic traffic congestion model simulation based on practice case with vehicle network and system traffic intelligent communication. Symmetry 2020, 12, 1172.

2. Kaffash, S.; Nguyen, A.T.; Zhu, J. Big data algorithms and applications in intelligent transportation system: A review and bibliometric analysis. Int. J. Prod. Econ. 2021, 231, 107868.

3. Li, Z.Z.; Zhu, T.; Xiao, S.N.; Zhang, J.K.; Wang, X.R.; Ding, H.X. Simulation method for train curve derailment collision and the effect of curve radius on collision response. Proc. Inst. Mech. Eng. Part J. Rail Rapid Transit. 2023, 1, 1–20.

4. Kim, T.; Jerath, K. Congestion-aware cooperative adaptive cruise control for mitigation of self-organized traffic jams. IEEE Trans. Intell. Transp. Syst. 2021, 23, 6621–6632.

5. Wang, Z.; Jin, S.; Liu, L.; Fang, C.; Li, M.; Guo, S. Cognitive cars: A new frontier for ADAS research. IEEE Trans. Intell. Transp. Syst. 2011, 13, 170–195.

6. Orosz, G. Connected cruise control: Modelling, delay effects, and nonlinear behaviour. Veh. Syst. Dyn. 2016, 54, 1147–1176.

7. Wang, Z.; Jin, S.; Liu, L.; Fang, C.; Li, M.; Guo, S. Design of intelligent connected cruise control with vehicle-to-vehicle communication delays. IEEE Trans. Veh. Technol. 2022, 71, 9011–9025.

8. Wang, J.; Zheng, Y.; Li, K.; Xu, Q. DeeP-LCC: Data-enabled predictive leading cruise control in mixed traffic flow. arXiv 2022, arXiv:2203.10639.

9. Song, F.; Liu, Y.; Shen, D.; Li, L.; Tan, J. Learning control for motion coordination in wafer scanners: Toward gain adaptation. IEEE Trans. Ind. Electron. 2022, 69, 13428–13438.

10. Boddupalli, S.; Rao, A.S.; Ray, S. Resilient cooperative adaptive cruise control for autonomous vehicles using machine learning. IEEE Trans. Intell. Transp. Syst. 2022, 23, 15655–15672.

11. Jin, I.G.; Orosz, G. Optimal control of connected vehicle systems with communication delay and driver reaction time. IEEE Trans. Intell. Transp. Syst. 2016, 18, 2056–2070.

12. Li, Z.; Zhu, N.; Wu, D.; Wang, H.; Wang, R. Energy-efficient mobile edge computing under delay constraints. IEEE Trans. Green Commun. Netw. 2021, 6, 776–786.

13. Li, Z.; Zhou, Y.; Wu, D.; Tang, T.; Wang, R. Fairness-aware federated learning with unreliable links in resource-constrained Internet of things. IEEE Internet Things J. 2022, 9, 17359–17371.

14. Molnar, T.G.; Qin, W.B.; Insperger, T.; Orosz, G. Application of predictor feedback to compensate time delays in connected cruise control. IEEE Trans. Intell. Transp. Syst. 2017, 19, 545–559.

15. Zhang, Y.; Bai, Y.; Hu, J.; Wang, M. Control design, stability analysis, and traffic flow implications for cooperative adaptive cruise control systems with compensation of communication delay. Transp. Res. Rec. 2020, 2674, 638–652.

16. Liu, X.; Goldsmith, A.; Mahal, S.S.; Hedrick, J.K. Effects of communication delay on string stability in vehicle platoons. In Proceedings of the 2001 IEEE Intelligent Transportation Systems, Proceedings (Cat. No. 01TH8585), Oakland, CA, USA, 25–29 August 2001; pp. 625–630.

17. Peters, A.A.; Middleton, R.H.; Mason, O. Leader tracking in homogeneous vehicle platoons with broadcast delays. Automatica 2014, 50, 64–74.

18. Xing, H.; Ploeg, J.; Nijmeijer, H. Compensation of communication delays in a cooperative ACC system. IEEE Trans. Veh. Technol. 2019, 69, 1177–1189.

19. Zhang, S.; Zhou, Z.; Luo, R.; Zhao, R.; Xiao, Y.; Xu, Y. A low-carbon, fixed-tour scheduling problem with time windows in a time-dependent traffic environment. Int. J. Prod. Res. 2022, 1–20.

20. Qin, W.B.; Gomez, M.M.; Orosz, G. Stability and frequency response under stochastic communication delays with applications to connected cruise control design. IEEE Trans. Intell. Transp. Syst. 2016, 18, 388–403.

21. Wang, Z.; Gao, Y.; Fang, C.; Liu, L.; Zhou, H.; Zhang, H. Optimal control design for connected cruise control with stochastic communication delays. IEEE Trans. Veh. Technol. 2020, 69, 15357–15369.

22. Wang, Z.; Gao, Y.; Fang, C.; Liu, L.; Zeng, D.; Dong, M. State-estimation-based control strategy design for connected cruise control with delays. IEEE Syst. J. 2023, 17, 99–110.

23. Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469.

24. Fang, C.; Xu, H.; Yang, Y.; Hu, Z.; Tu, S.; Ota, K.; Yang, Z.; Dong, M.; Han, Z.; Yu, F.R.; et al. Deep-reinforcement-learning-based resource allocation for content distribution in fog radio access networks. IEEE Internet Things J. 2022, 9, 16874–16883.

25. Fang, C.; Guo, S.; Wang, Z.; Huang, H.; Yao, H.; Liu, Y. Data-driven intelligent future network: Architecture, use cases, and challenges. IEEE Commun. Mag. 2019, 57, 34–40.

26. Desjardins, C.; Chaib-Draa, B. Cooperative adaptive cruise control: A reinforcement learning approach. IEEE Trans. Intell. Transp. Syst. 2011, 12, 1248–1260.

27. Sallab, A.E.; Abdou, M.; Perot, E.; Yogamani, S. Deep reinforcement learning framework for autonomous driving. arXiv 2017, arXiv:1704.02532.

28. Chen, J.; Li, S.E.; Tomizuka, M. Interpretable end-to-end urban autonomous driving with latent deep reinforcement learning. IEEE Trans. Intell. Transp. Syst. 2021, 23, 5068–5078.

29. Silver, D.; Lever, G.; Heess, N.; Degris, T.; Wierstra, D.; Riedmiller, M. Deterministic policy gradient algorithms. In Proceedings of the 31st International Conference on Machine Learning, PMLR, Beijing, China, 21–26 June 2014; pp. 1–6.

30. Wang, S.; Jia, D.; Weng, X. Deep reinforcement learning for autonomous driving. arXiv 2018, arXiv:1811.11329, 1825–1829.

31. Chen, J.; Yuan, B.; Tomizuka, M. Model-free deep reinforcement learning for urban autonomous driving. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 2765–2771.

32. Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Al Sallab, A.A.; Yogamani, S.; Perez, P. Deep reinforcement learning for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4909–4926.

33. Moosavi, S.; Omidvar-Tehrani, B.; Ramnath, R. Trajectory annotation by discovering driving patterns. In Proceedings of the 3rd ACM SIGSPATIAL Workshop on Smart Cities and Urban Analytics, Redondo Beach, CA, USA, 7–10 November 2017; pp. 1–4.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).