Robust Image Hashing Using Histogram Reconstruction for Improving Content Preservation Resistance and Discrimination

Abstract

1. Introduction

2. Literature Review

2.1. Transform Domain Feature

2.2. Spatial Feature

2.3. Statistical Features

2.4. Matrix Decomposition

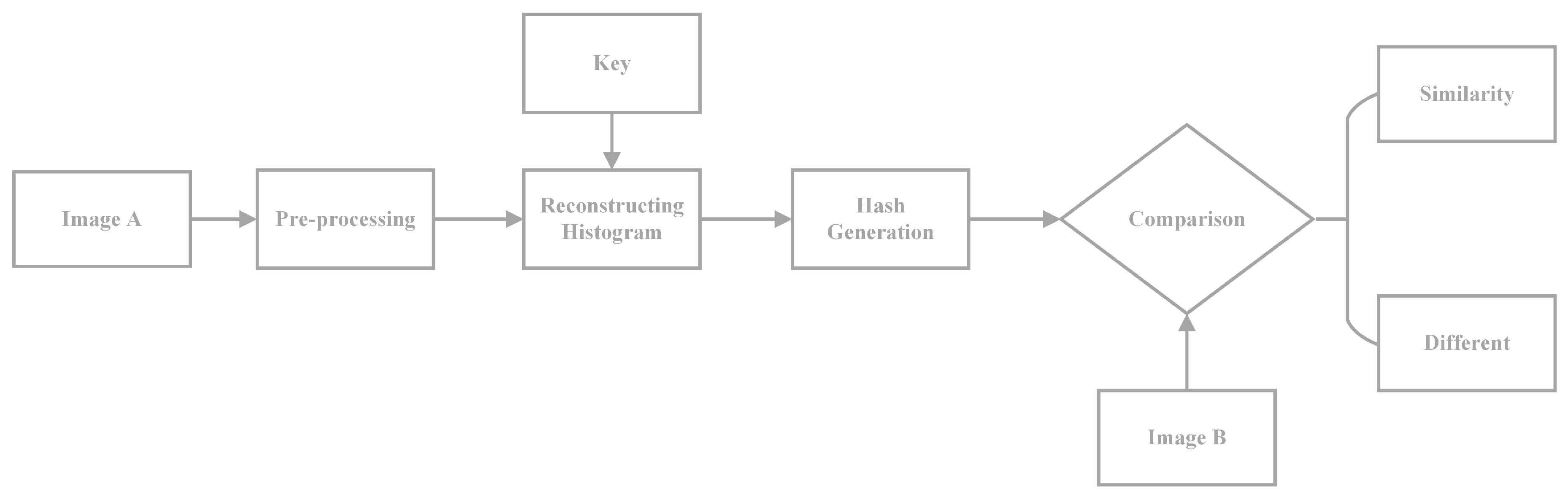

3. The Proposed Scheme

3.1. Pre-Processing

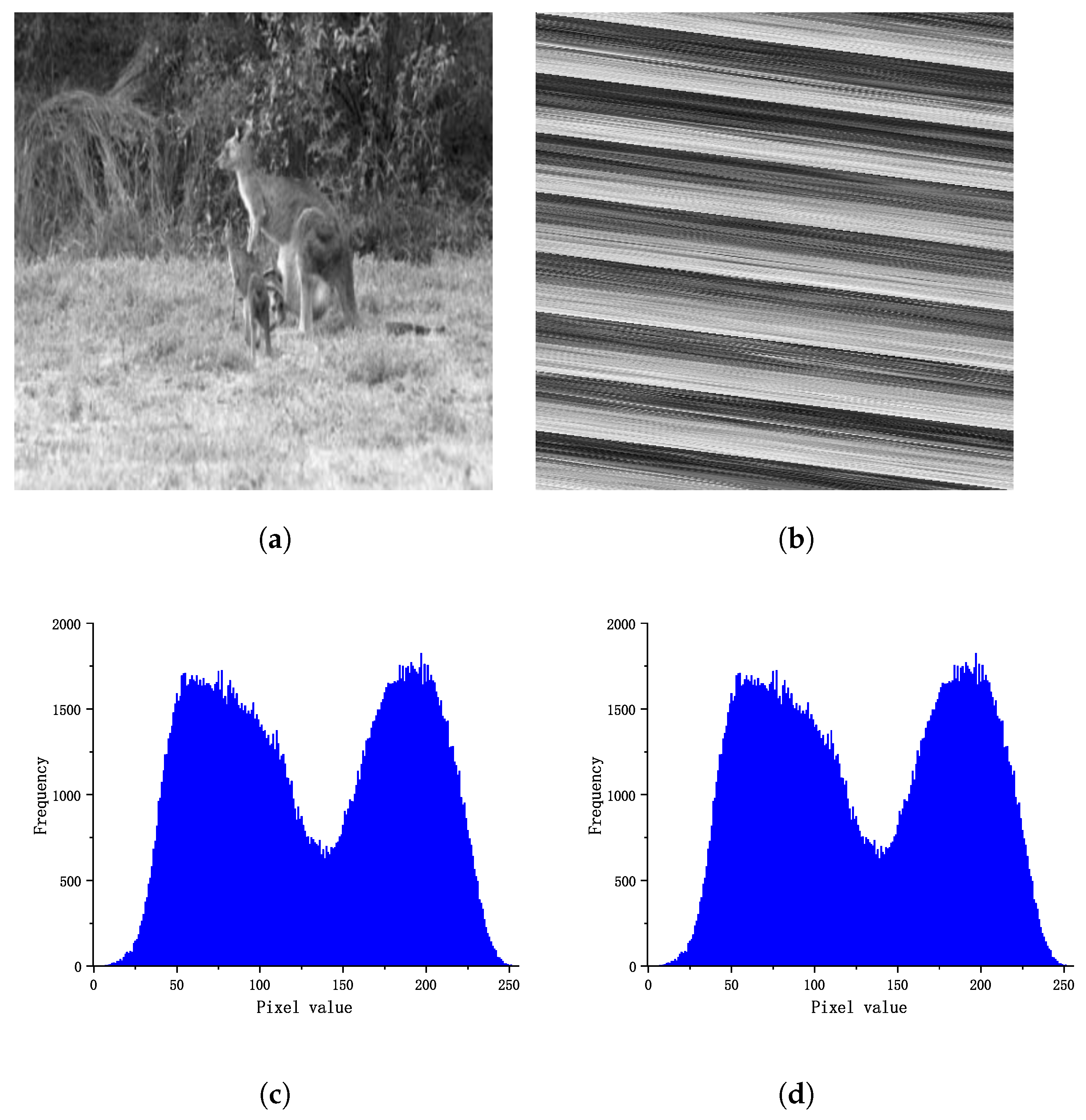

3.2. Reconstructing Histogram

3.3. Pixel Selecting

3.4. Hash Generation

3.5. Similarity Metric

4. Simulation Results

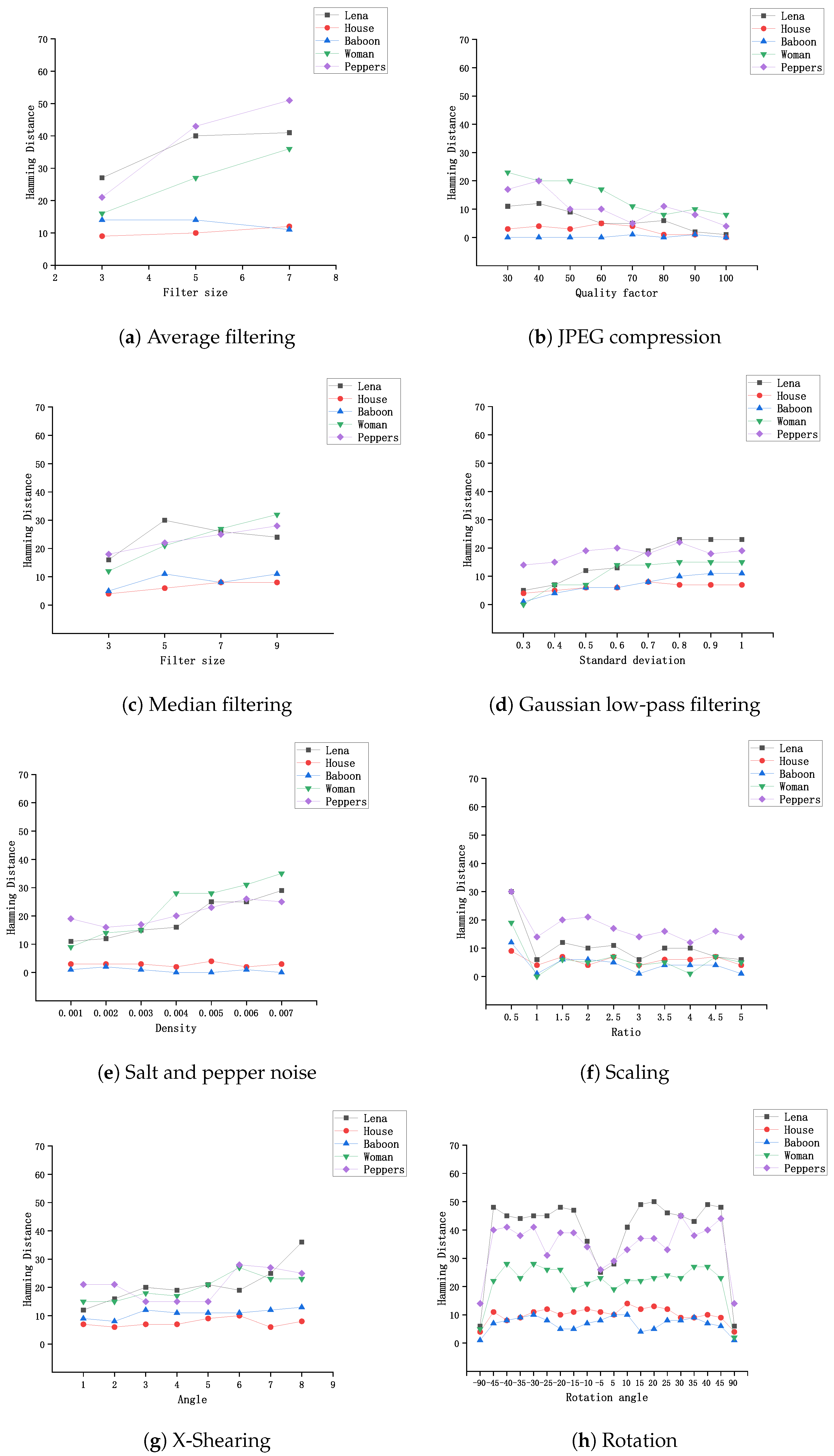

4.1. Perceptual Robustness

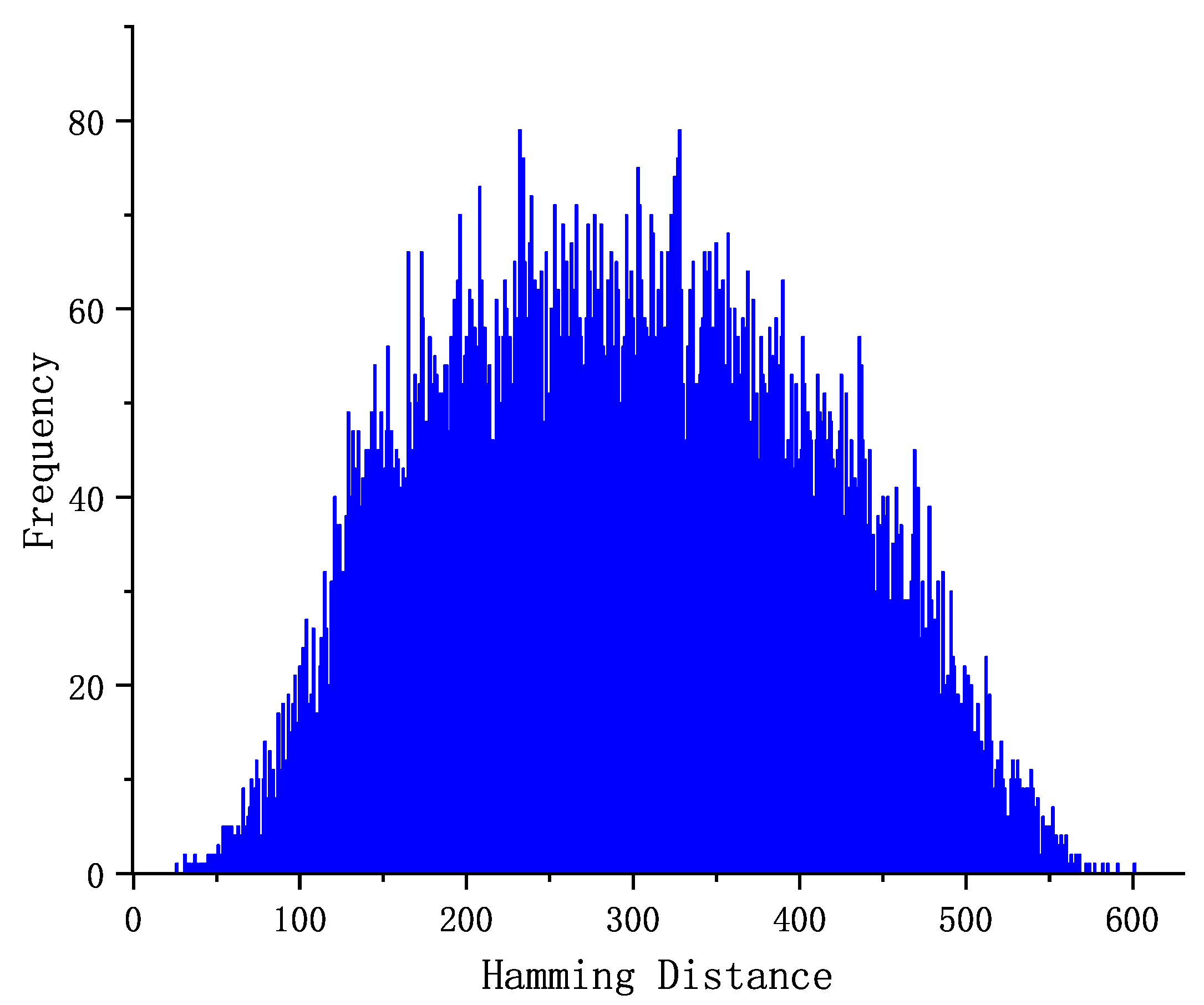

4.2. Discrimination

4.3. Tamper Detection Test

4.4. Influence of Neighborhood Size on Hash Performances

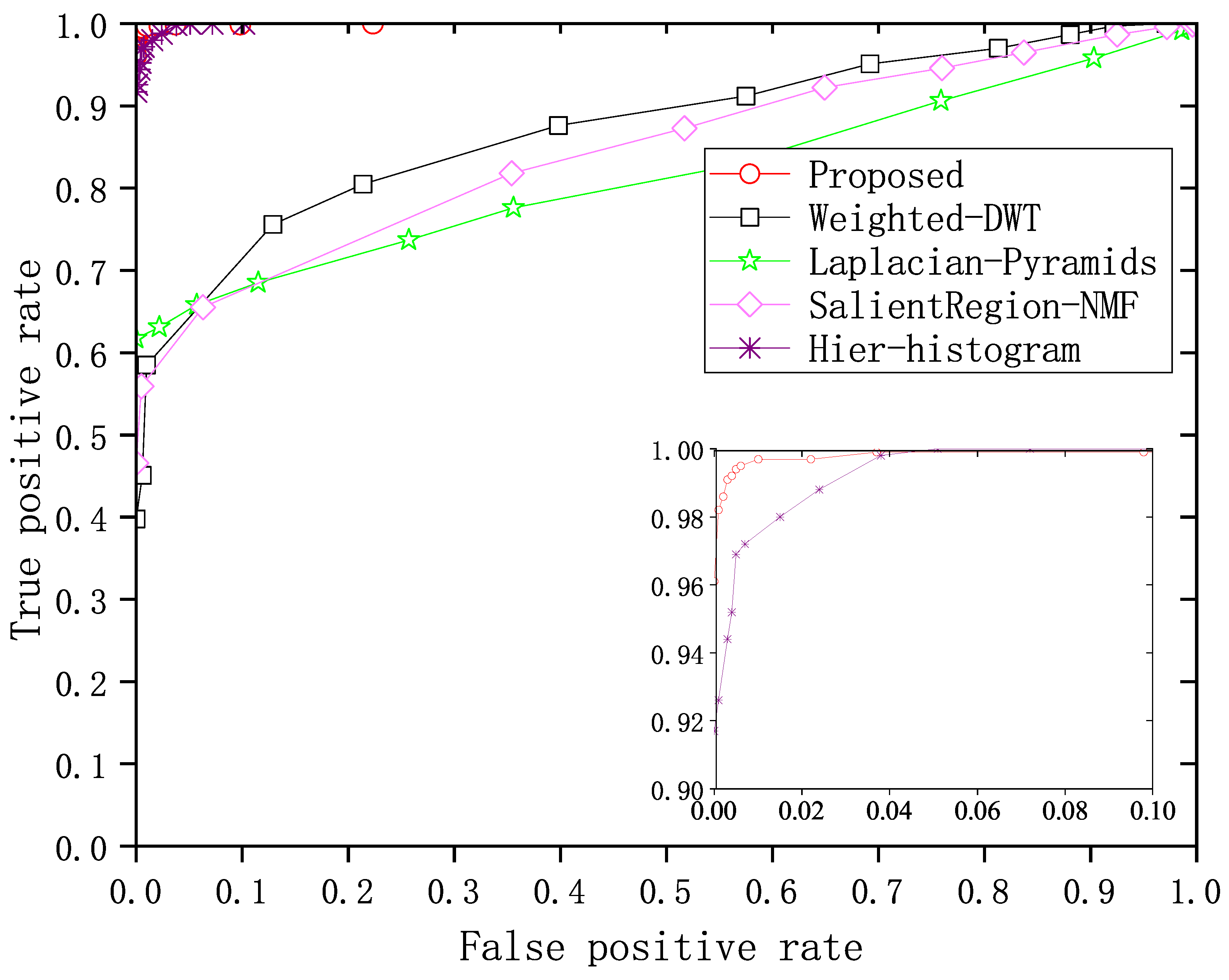

4.5. Performance Comparison

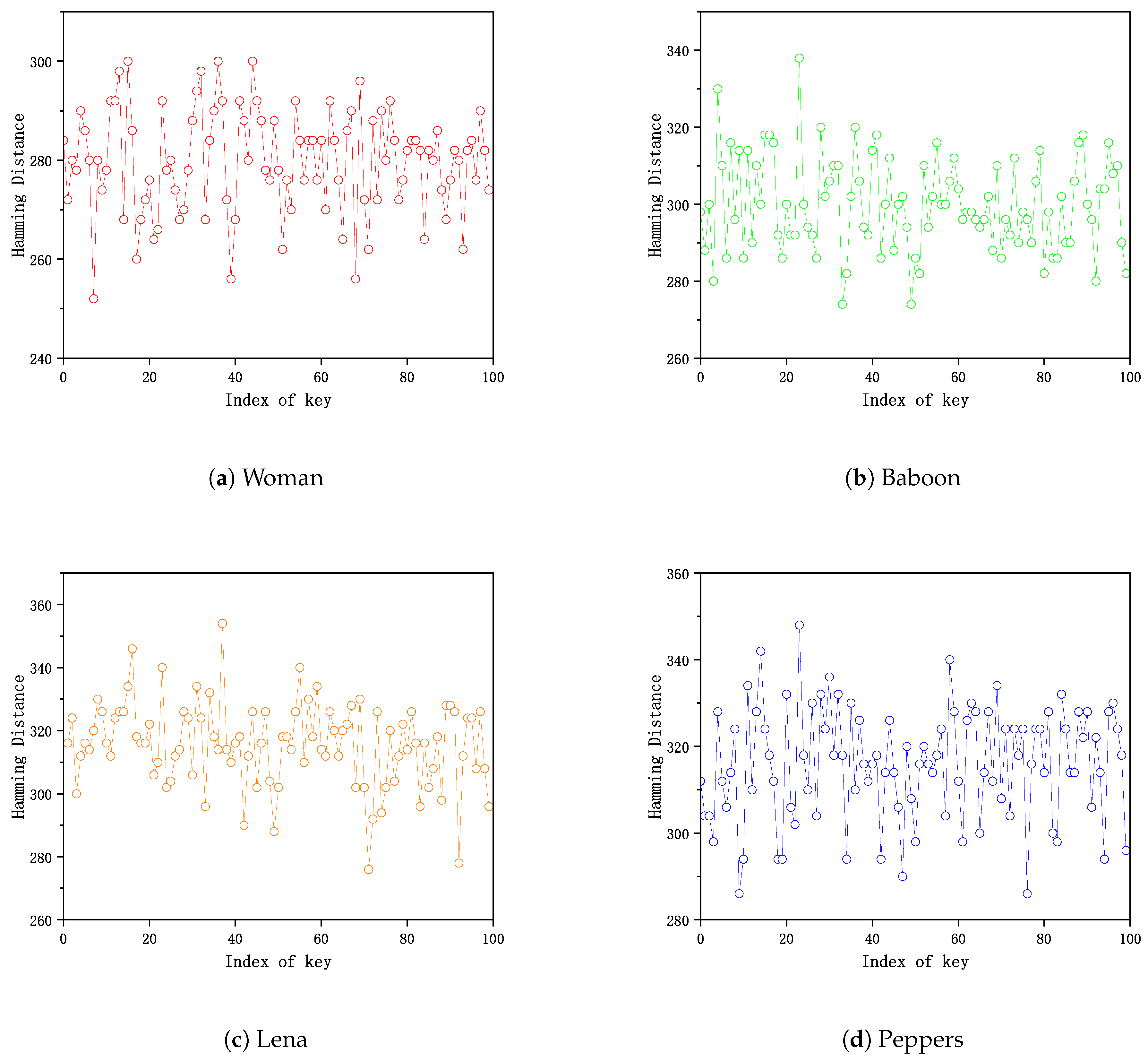

4.6. Key Sensitivity

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Non-Malicious Attacks | Description | Parameter Value | Number |
|---|---|---|---|
| Average filtering | Filter size | 3, 5, 7 | 3 |
| Median filtering | Filter size | 3, 5, 7, 9 | 4 |
| 3 × 3 Gaussian low-pass filtering | Standard deviation | 0.3, 0.4, …, 1.0 | 8 |
| JPEG compression | Quality factor | 30, 40, …, 100 | 8 |
| Salt and pepper noise | Density | 0.001, 0.002, …, 0.007 | 7 |
| Scaling | Ratio | 0.5, 1.5, 2.0, …, 5.0 | 9 |
| X-Shearing | Angle | 1, 2, …, 8 | 8 |
| Rotation | Rotation angle | ±5, ±10, ±15, …, ±45, ±90 | 20 |
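
The parameter lists in the table above are abbreviated with ellipses. As a quick consistency check, the following sketch expands each list and compares its length with the Number column. The step sizes (0.1, 10, 0.001, 0.5, 1, and 5) are assumptions inferred from the first listed values and the stated counts, and `arange_inclusive` is a hypothetical helper, not part of the paper.

```python
def arange_inclusive(start, stop, step, ndigits=3):
    """Return evenly spaced values from start to stop, both ends included."""
    n = round((stop - start) / step) + 1
    return [round(start + i * step, ndigits) for i in range(n)]

# Parameter values per attack; step sizes are assumed from the table's ellipses.
ATTACKS = {
    "Average filtering": [3, 5, 7],
    "Median filtering": [3, 5, 7, 9],
    "Gaussian low-pass filtering": arange_inclusive(0.3, 1.0, 0.1),
    "JPEG compression": arange_inclusive(30, 100, 10),
    "Salt and pepper noise": arange_inclusive(0.001, 0.007, 0.001),
    "Scaling": [0.5] + arange_inclusive(1.5, 5.0, 0.5),
    "X-Shearing": arange_inclusive(1, 8, 1),
    # Each rotation magnitude is applied in both directions (the ± in the table).
    "Rotation": [sign * angle
                 for angle in arange_inclusive(5, 45, 5) + [90]
                 for sign in (1, -1)],
}

# Counts copied from the "Number" column of the table.
EXPECTED = {
    "Average filtering": 3,
    "Median filtering": 4,
    "Gaussian low-pass filtering": 8,
    "JPEG compression": 8,
    "Salt and pepper noise": 7,
    "Scaling": 9,
    "X-Shearing": 8,
    "Rotation": 20,
}

counts = {name: len(values) for name, values in ATTACKS.items()}
total = sum(counts.values())

for name, n in counts.items():
    status = "ok" if n == EXPECTED[name] else "MISMATCH"
    print(f"{name:30s} {n:3d} {status}")
print("attacked versions per image:", total)
```

Under these assumed step sizes, every expanded list matches its stated count, and the counts sum to 67 attacked versions per test image.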

| Non-Malicious Attack | Minimum | Maximum | Mean | Standard Deviation |
|---|---|---|---|---|
| Average filtering | 0 | 63 | 16.71 | 13.14 |
| Median filtering | 0 | 62 | 18.12 | 12.82 |
| Gaussian filtering | 0 | 47 | 9.12 | 8.75 |
| JPEG compression | 0 | 33 | 6.66 | 5.76 |
| Salt and pepper noise | 0 | 62 | 10.35 | 10.16 |
| Scaling | 0 | 49 | 4.72 | 6.36 |
| X-Shearing | 1 | 40 | 11.5 | 8.12 |
| Rotation | 0 | 57 | 17.16 | 13.07 |

| Threshold | Collision Probability |
|---|---|
| 40 | |
| 42 | |
| 45 | |
| 48 | |
| 51 | |
| 54 | |
| 57 | |
| 60 | |
| 63 | |

| Images | Hamming Distance |
|---|---|
| (a)–(b) | 107 |
| (c)–(d) | 72 |
| (e)–(f) | 153 |
| (g)–(h) | 93 |
| (i)–(j) | 199 |
| (k)–(l) | 133 |
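
To make the numbers in the tables concrete, here is a minimal sketch of a threshold decision on Hamming distance. It assumes hashes are equal-length bit sequences and uses a threshold of 63, which is both the largest candidate in the threshold table and the largest distance reported for any non-malicious attack. The functions `hamming_distance` and `classify` are illustrative names, and the threshold actually adopted by the scheme may differ.

```python
def hamming_distance(h1, h2):
    """Count positions at which two equal-length bit sequences differ."""
    if len(h1) != len(h2):
        raise ValueError("hashes must have the same length")
    return sum(a != b for a, b in zip(h1, h2))

def classify(distance, threshold):
    """Label an image pair by comparing its hash distance with a threshold."""
    return "tampered" if distance > threshold else "similar"

# Distances between original and tampered images, copied from the table above.
TAMPERED_PAIRS = {
    "(a)-(b)": 107,
    "(c)-(d)": 72,
    "(e)-(f)": 153,
    "(g)-(h)": 93,
    "(i)-(j)": 199,
    "(k)-(l)": 133,
}

# Assumed threshold: the maximum distance reported under non-malicious attacks.
THRESHOLD = 63

labels = {pair: classify(d, THRESHOLD) for pair, d in TAMPERED_PAIRS.items()}
for pair, label in labels.items():
    print(pair, TAMPERED_PAIRS[pair], label)

# Toy demonstration of the distance itself on two 8-bit sequences.
print(hamming_distance([0, 1, 1, 0, 1, 0, 0, 1], [1, 1, 0, 0, 1, 0, 1, 1]))
```

With this assumed threshold, the smallest tampered distance (72) still lies above the largest non-malicious distance (63), so all six pairs are labeled as tampered.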

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jia, Y.; Cui, C.; El-Latif, A.A.A. Robust Image Hashing Using Histogram Reconstruction for Improving Content Preservation Resistance and Discrimination. Symmetry 2023, 15, 1088. https://doi.org/10.3390/sym15051088
