Abstract

In contemporary statistical methods, robust regression shrinkage and variable selection have gained paramount significance due to the prevalence of datasets characterized by contamination and an abundance of variables, often categorized as ‘high-dimensional data’. The Least Absolute Shrinkage and Selection Operator (Lasso) is frequently employed in this context for both fitting the model and selecting variables. However, no one has attempted to apply regression diagnostic measures to Lasso regression, despite its power and widespread practical use. This work introduces a combined Lasso and diagnostic technique to enhance Lasso regression modeling for high-dimensional datasets with multicollinearity and outliers. The resulting estimator is called the diagnostic Lasso estimator (D-Lasso). The breakdown point of the proposed method is also discussed. Finally, simulation examples and analyses of real data are provided to support the conclusions. The results of the numerical examples demonstrate that the D-Lasso approach performs as well as, if not better than, the robust Lasso method based on the MM-estimator.

1. Introduction

The usual estimators are inapplicable in cases where the matrix $X^{T}X$ is singular. In practice, when estimating the regression coefficients ($\beta$) or pursuing variable selection, high empirical correlations between two or more covariates (multicollinearity) lead to unstable outcomes. This study uses the least absolute shrinkage and selection operator (Lasso) estimation method to circumvent this issue.

Lasso estimation is a type of variable selection. It was first described by Tibshirani [1] and was further studied by Fan and Li [2]. They explored a class of penalized likelihood approaches to address these types of problems, including the Lasso problem.

In contrast, when outliers are present in the sample, classical least squares and maximum likelihood estimation methods often fail to produce reliable results. In such situations, there is a need for an estimation method that can effectively handle multicollinearity and is robust in the presence of outliers.

Zou [3] introduced the concept of assigning adaptive weights so that different coefficients are penalized to different degrees. Because this penalty is convex, it leads to convex optimization problems, ensuring that the estimators do not suffer from local minima issues. These adaptive weights in the penalty term allow the estimator to attain the oracle properties.

To create a robust Lasso estimator, the authors of [4] proposed combining the least absolute deviation (LAD) loss with an adaptive Lasso penalty (LAD-Lasso). This approach results in an estimator that is robust against outliers and proficient at variable selection. Nevertheless, it is important to note that the LAD loss is not designed for handling small errors; it penalizes small residuals severely. Consequently, this estimator may be less accurate than the classic Lasso when the error distribution lacks heavy tails or outliers.

In a different approach, Lambert-Lacroix and Zwald [5] introduced a novel estimator by combining Huber’s criterion with an adaptive Lasso penalty. This estimator demonstrates resilience to heavy-tailed errors and outliers in the response variable.

Additionally, ref. [6] proposed the Sparse-LTS estimator, which is a least-trimmed-squares estimator with an $\ell_1$ penalty. That study demonstrates that the Sparse-LTS estimator exhibits robustness to contamination in both response and predictor variables.

Furthermore, ref. [7] combined MM-estimators with an adaptive $\ell_1$ penalty, yielding lower bounds on the breakdown points of MM-Lasso and adaptive MM-Lasso estimators.

Recently, ref. [8] introduced c-lasso, a Python tool, while [9] proposed robust multivariate Lasso regression with covariance estimation.

While recent years have seen a significant focus on outlier detection using direct approaches, a substantial portion of this research has centered on the use of single-case diagnostics (as seen in [10,11,12,13]). Ref. [12] introduced a method for identifying multiple influential observations within linear regression models.

This article introduces a novel Lasso estimator named D-Lasso. D-Lasso is grounded in diagnostic techniques and involves the creation of a clean subset of data, free from outliers, before calculating Lasso estimates for the clean samples. We anticipate that these modified Lasso estimates will exhibit greater robustness against the presence of outliers. Moreover, they are expected to yield minimized sums of squares of residuals and possess a breakdown point of 50%. This is achieved through the elimination of outlier influence, as well as addressing multicollinearity and variable selection via Lasso regression.

The paper’s structure is as follows: Section 2 provides a review of both classical and robust Lasso-type techniques. Section 3 introduces the diagnostic-Lasso estimator. Regression diagnostic measures are presented and discussed in Section 4. Section 5 offers a comparison of the proposed method’s performance against existing approaches, while Section 6 presents an analysis of the Los Angeles ozone data as an illustrative example. Finally, a concluding remark is presented in Section 7.

2. The Lasso Technique

2.1. Classical Lasso Estimator

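Consider the situation in which the observed data are realizations of $\{(x_i, y_i)\}_{i=1}^{n}$, with a p-dimensional vector of covariates $x_i \in \mathbb{R}^{p}$ and a univariate continuous response variable $y_i \in \mathbb{R}$. A basic regression model has the following form: $y_i = x_i^{T}\beta + e_i$, where $\beta = (\beta_1, \ldots, \beta_p)^{T}$ is the vector of regression coefficients and $e_i$ is the ith error component. Tibshirani [1] assumed that $X$ was standardized such that the mean and variance of each covariate $x_j$, $j = 1, \ldots, p$, are equal to 0 and 1, respectively. Letting $\hat{\beta} = (\hat{\beta}_1, \ldots, \hat{\beta}_p)^{T}$, the classical-Lasso estimate is defined by

$$\hat{\beta}_{Lasso} = \arg\min_{\beta} \left\{ \sum_{i=1}^{n} \left(y_i - x_i^{T}\beta\right)^2 + \lambda \sum_{j=1}^{p} |\beta_j| \right\}, \tag{1}$$

where $\lambda \ge 0$ is a Lasso tuning parameter. The following adaptive Lasso (adl-Lasso) criterion, which is a modified Lasso criterion, was proposed by Zou [3]:

$$\hat{\beta}_{adl} = \arg\min_{\beta} \left\{ \sum_{i=1}^{n} \left(y_i - x_i^{T}\beta\right)^2 + \lambda \sum_{j=1}^{p} w_j |\beta_j| \right\},$$

where $w = (w_1, \ldots, w_p)^{T}$ is a known weights vector.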
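As an illustration of how a criterion of this form can be minimized, the following is a minimal coordinate-descent sketch in plain Python. It assumes the objective is the residual sum of squares plus $\lambda \sum_j w_j |\beta_j|$ (no factor of 1/2), so each coordinate update soft-thresholds at $\lambda w_j / 2$. The function names are illustrative and are not taken from the paper or from any R package.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold; values inside the band become 0."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def lasso_coordinate_descent(X, y, lam, weights=None, n_iter=200):
    """Minimise sum_i (y_i - x_i' beta)^2 + lam * sum_j w_j * |beta_j|.

    X is a list of rows (assumed already standardised), y a list of responses.
    weights=None gives the classical Lasso (all w_j = 1); passing weights
    gives an adaptive-Lasso-type penalty.
    """
    n, p = len(X), len(X[0])
    w = weights if weights is not None else [1.0] * p
    beta = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            rho = 0.0  # inner product of column j with the partial residual
            z = 0.0    # squared norm of column j
            for i in range(n):
                fit_without_j = sum(X[i][k] * beta[k] for k in range(p) if k != j)
                rho += X[i][j] * (y[i] - fit_without_j)
                z += X[i][j] ** 2
            beta[j] = soft_threshold(rho, lam * w[j] / 2.0) / z
    return beta


# Toy data with orthogonal columns: one strong and one weak covariate.
X = [[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]]
y = [2.0, -2.0, 0.1, -0.1]
print(lasso_coordinate_descent(X, y, lam=1.0))
```

In this toy example the weak covariate is set exactly to zero while the strong one is only shrunk, which is the selection behaviour the Lasso penalty is designed to produce.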

2.2. Robust Lasso Estimator

When there are outliers in the data, the standard least squares method fails to generate accurate estimates. The Lasso is likewise not robust against outliers, since it is a particular instance of penalized least squares with the $\ell_1$ penalty function [14].

In these situations, we need a Lasso estimation method that can be used under multicollinearity and still performs well when there are outliers. The following equation gives the LAD-Lasso regression method proposed by [4]; it combines the least absolute deviation (LAD) and the Lasso methods and, as shown by [14], is resistant only to outliers in the response variable:

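Combining Huber’s criterion with the adl-Lasso penalty, ref. [5] produced another estimator that is resilient to heavy-tailed errors or outliers in the response. The Huber-Lasso estimator is written as follows:

$$\hat{\beta}_{Huber\text{-}Lasso} = \arg\min_{\beta} \left\{ \sum_{i=1}^{n} H_M\!\left(\frac{y_i - x_i^{T}\beta}{s}\right) + \lambda \sum_{j=1}^{p} w_j |\beta_j| \right\},$$

where $s > 0$ is a distribution scaling parameter and $H_M$ is Huber’s criterion as loss function, as introduced in [15]. For each positive real M, the expression is as follows:

$$H_M(z) = \begin{cases} z^2, & |z| \le M, \\ 2M|z| - M^2, & |z| > M. \end{cases}$$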
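To make the behaviour of Huber’s criterion concrete, here is a small sketch assuming the usual piecewise form used in [5]: quadratic for $|z| \le M$ and linear with slope $2M$ beyond it. The function name `huber_criterion` is illustrative only.

```python
def huber_criterion(z, M):
    """Huber's criterion: z^2 for |z| <= M, and 2*M*|z| - M^2 otherwise."""
    if M <= 0:
        raise ValueError("M must be a positive real number")
    if abs(z) <= M:
        return z * z
    return 2.0 * M * abs(z) - M * M


# Small residuals are treated as in least squares; large ones grow only linearly.
for z in (0.5, 1.0, 3.0, 10.0):
    print(z, huber_criterion(z, M=1.0), z * z)
```

The two branches agree at $|z| = M$, so the loss is continuous, and a residual of 10 contributes 19 rather than 100 when $M = 1$, which is the source of the resistance to vertical outliers.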

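The Sparse-LTS estimator proposed by [6] combines a least-trimmed squares estimator with an $\ell_1$ penalty as follows:

$$\hat{\beta}_{Sparse\text{-}LTS} = \arg\min_{\beta} \left\{ \sum_{i=1}^{h} \left(r^2(\beta)\right)_{i:n} + h\lambda \sum_{j=1}^{p} |\beta_j| \right\},$$

where $(r^2(\beta))_{1:n} \le \cdots \le (r^2(\beta))_{n:n}$ are the order statistics of the squared residuals, $r^2(\beta) = (r_1^2, \ldots, r_n^2)^{T}$ denotes the vector of squared residuals with $r_i = y_i - x_i^{T}\beta$, and $h \le n$ is the size of the retained subset. Ref. [6] showed in a simulation study that the Sparse-LTS can be robust against contamination in both the response and predictor variables. Ref. [7] proposed the MM-Lasso estimator, which combines MM-estimators with an adaptive $\ell_1$ penalty, and obtained lower bounds on the breakdown points of the MM-Lasso and adaptive MM-Lasso estimators. The MM-Lasso estimator is given as follows: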

where , , is an initial consistent and high breakdown point estimate of , , and is the M-estimate of the residuals’ scale.
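The trimming idea behind Sparse-LTS can be sketched in a few lines. The code below only evaluates the objective for a given coefficient vector, assuming the form used in [6]: the sum of the $h$ smallest squared residuals plus $h\lambda$ times the $\ell_1$ norm of the coefficients. It is not the fitting algorithm of the robustHD package, and the function name is hypothetical.

```python
def sparse_lts_objective(X, y, beta, h, lam):
    """Sum of the h smallest squared residuals plus h * lam * ||beta||_1."""
    squared_residuals = sorted(
        (yi - sum(xij * bj for xij, bj in zip(xi, beta))) ** 2
        for xi, yi in zip(X, y)
    )
    penalty = h * lam * sum(abs(b) for b in beta)
    return sum(squared_residuals[:h]) + penalty


X = [[1.0], [1.0], [1.0], [1.0]]
y = [1.0, 1.0, 1.0, 100.0]          # the last response is a vertical outlier
print(sparse_lts_objective(X, y, [1.0], h=4, lam=0.0))  # outlier included
print(sparse_lts_objective(X, y, [1.0], h=3, lam=0.0))  # outlier trimmed away
```

With $h = n$ the outlier dominates the objective, whereas with $h = 3$ it is ignored entirely, which is why the choice of $h$ controls the trade-off between robustness and efficiency.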

3. Diagnostic-Lasso Estimator (D-Lasso)

This section proposes a new Lasso estimator based on the regression diagnostic method.

3.1. D-Lasso Estimator Formulation

The general idea behind D-Lasso is first to create a clean, outlier-free subset of the data. Let R represent the collection of observational indexes in the outlier-free subset, let $y_R$ and $X_R$ be the observation subsets indexed by R, and let $\hat{\beta}_R$ be the estimated regression coefficients obtained by fitting the model to the set R.

Let $RSS_R = \sum_{i \in R} (y_i - x_i^{T}\hat{\beta}_R)^2$ be the corresponding residual sum of squares, so that the estimates correspond to the clean samples having the smallest sum of squares of residuals. As such, the breakdown point is expected to be 50%. When the number of observational indexes in R is equal to n, $RSS_R$ coincides with the residual sum of squares of the full sample. This study suggests using $RSS_R$ in Lasso regression by replacing the residual sum of squares over all n observations in Equation (1) by $RSS_R$. Thus, the D-Lasso can be expressed as follows:

$$\hat{\beta}_{D\text{-}Lasso} = \arg\min_{\beta} \left\{ \sum_{i \in R} \left(y_i - x_i^{T}\beta\right)^2 + \lambda \sum_{j=1}^{p} |\beta_j| \right\}.$$
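The two-stage idea (first isolate a clean subset, then fit on it) can be sketched as follows. The code assumes the set of flagged indexes has already been produced by some diagnostic measure; the helper names `split_clean` and `rss` are hypothetical, and the Lasso fit itself is left to any Lasso solver applied to the clean rows.

```python
def split_clean(X, y, flagged):
    """Return the rows of (X, y) whose index is not in the flagged set D."""
    keep = [i for i in range(len(y)) if i not in flagged]
    return [X[i] for i in keep], [y[i] for i in keep]


def rss(X, y, beta):
    """Residual sum of squares of the linear fit x_i' beta."""
    return sum(
        (yi - sum(xij * bj for xij, bj in zip(xi, beta))) ** 2
        for xi, yi in zip(X, y)
    )


X = [[1.0], [2.0], [3.0], [4.0]]
y = [1.0, 2.0, 3.0, 40.0]            # observation 3 is a vertical outlier
beta = [1.0]

X_R, y_R = split_clean(X, y, flagged={3})
print(rss(X, y, beta))               # full-sample RSS, dominated by the outlier
print(rss(X_R, y_R, beta))           # RSS over the clean subset R only
```

When no observation is flagged, the clean subset is the whole sample and the two quantities coincide, which matches the remark above about the case where R contains all n indexes.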

3.2. Breakdown Point

The replacement finite-sample breakdown point is the most commonly used measure of an estimator’s robustness.

3.2.1. Definition

Rousseeuw and Yohai [16,17] introduced the breakdown point of an estimator, which is defined as the minimum fraction of outliers that may take the estimator beyond any bound. In other words, the breakdown point of an estimate shows the effect of replacing several data values by outliers. The breakdown point for the regression estimator $\hat{\beta}$ of the sample $Z = (X, y)$ is defined as

$$\varepsilon^{*}(\hat{\beta}; Z) = \min\left\{ \frac{m}{n} : \sup_{\tilde{Z}} \left\| \hat{\beta}(\tilde{Z}) \right\|_2 = \infty \right\},$$

where $\tilde{Z}$ are contaminated data obtained from $Z$ by replacing m of the original n data by outliers.

3.2.2. Breakdown Point of D-Lasso Estimator

The D-Lasso estimator’s breakdown point for subsets of size $h \le n$ is given by

$$\varepsilon^{*}(\hat{\beta}_{D\text{-}Lasso}; Z) = \frac{n - h + 1}{n}. \tag{8}$$

This study suggests taking a value of $h$ equal to a fraction $\alpha$ of the sample size, such that the final estimate is based on a sufficiently large number of observations. This ensures a high enough statistical efficiency. The breakdown point that results from this is around $1 - \alpha$ (see [18]). Notice that the breakdown point is independent of the dimension p; it is assured even if the number of predictor variables is greater than the sample size. Applying Equation (8) to the classical Lasso, which has $h = n$, yields a finite-sample breakdown point of $1/n$. Hence, the classical Lasso is very sensitive to the presence of even one outlier.
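A short numerical illustration may help here. The sketch below assumes the subset-size formula $(n - h + 1)/n$ that [6] gives for subset-based estimators; whether Equation (8) has exactly this form is an assumption, and the function name is illustrative.

```python
from fractions import Fraction


def breakdown_point(n, h):
    """Finite-sample breakdown point (n - h + 1) / n for subset size h (assumed form)."""
    if not 0 < h <= n:
        raise ValueError("h must satisfy 0 < h <= n")
    return Fraction(n - h + 1, n)


n = 100
for h in (100, 75, 50):
    bp = breakdown_point(n, h)
    print(f"h = {h:3d}: breakdown point = {bp} (about {float(bp):.2f})")
```

Under this formula, using all observations ($h = n$) gives $1/n$, so a single outlier can ruin the estimate, while smaller subsets trade efficiency for a higher breakdown point.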

4. Regression Diagnostic Measures

4.1. Influential Observations in Regression

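In this section, we introduce a different way of finding the clean subset R. A large body of literature is now available [10,11,12,13] for the identification of influential observations in linear regression. Cook’s distance [19] and the difference in fits (DFFITS) [20] are two of the many influence measures that are currently available. The ith Cook’s distance is defined by [13] as:

$$CD_i = \frac{\left(\hat{\beta}^{(-i)} - \hat{\beta}\right)^{T} X^{T}X \left(\hat{\beta}^{(-i)} - \hat{\beta}\right)}{p\,\hat{\sigma}^2}, \tag{9}$$

where $\hat{\beta}^{(-i)}$ is the estimated parameter of $\beta$ with the ith observation deleted. The suggested cutoff point is . Equation (9) can be expressed as:

$$CD_i = \frac{1}{p}\, r_i^2\, \frac{h_{ii}}{1 - h_{ii}},$$

where $r_i$ is the ith standardized Pearson residual defined as:

$$r_i = \frac{y_i - x_i^{T}\hat{\beta}}{\hat{\sigma}\sqrt{1 - h_{ii}}},$$

where $h_{ii}$ is the ith leverage value, which is in fact the ith diagonal element of the hat matrix $H = X(X^{T}X)^{-1}X^{T}$, and $\hat{\sigma}$ is an appropriate estimate of $\sigma$.

The DFFITS was introduced in [20] and is defined as:

$$DFFITS_i = \frac{\hat{y}_i - \hat{y}_i^{(-i)}}{\hat{\sigma}^{(-i)}\sqrt{h_{ii}}},$$

where $\hat{y}_i^{(-i)}$ and $\hat{\sigma}^{(-i)}$ are, respectively, the ith fitted response and the estimated standard error with the ith observation deleted. The relationship between $CD_i$ and DFFITS is given by

$$CD_i = \frac{\left(\hat{\sigma}^{(-i)}\right)^2}{p\,\hat{\sigma}^2}\, DFFITS_i^2.$$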

Many other influence measures are available in the literature [11,21].
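For readers who want to see these single-case diagnostics computed, the following sketch evaluates leverage, Cook’s distance, and DFFITS for a simple regression with an intercept and one covariate, so the number of fitted parameters is 2. It uses the standard closed-form leverage and the usual deletion identity for the error variance; the function name and toy data are illustrative and do not come from the paper.

```python
import math


def influence_measures(x, y):
    """Leverage, Cook's distance and DFFITS for the fit y = a + b * x."""
    n, p = len(x), 2  # p counts the intercept and the slope
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sigma2 = sum(e * e for e in residuals) / (n - p)

    rows = []
    for xi, e in zip(x, residuals):
        h = 1.0 / n + (xi - x_bar) ** 2 / sxx      # leverage h_ii
        r = e / math.sqrt(sigma2 * (1.0 - h))      # standardized residual
        cook = r * r * h / (p * (1.0 - h))
        # Error variance with observation i deleted (standard updating identity).
        sigma2_del = ((n - p) * sigma2 - e * e / (1.0 - h)) / (n - p - 1)
        dffits = e * math.sqrt(h) / (math.sqrt(sigma2_del) * (1.0 - h))
        rows.append({"leverage": h, "cook": cook,
                     "dffits": dffits, "sigma2_deleted": sigma2_del})
    return sigma2, rows


# The last point has an extreme x value and does not follow the trend.
sigma2, rows = influence_measures([1, 2, 3, 4, 5, 10],
                                  [1.1, 1.9, 3.2, 3.9, 5.1, 4.0])
for i, row in enumerate(rows):
    print(i, round(row["leverage"], 3), round(row["cook"], 3), round(row["dffits"], 3))
```

The last observation stands out on all three measures, and each row satisfies the identity linking Cook’s distance to the squared DFFITS through the ratio of the deleted and full error variances.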

4.2. Identification of Multiple Influential Observations

The diagnostic tools discussed so far are designed for the identification of a single influential observation and are ineffective when masking and/or swamping occur. Therefore, we need detection techniques that are free from these problems. Ref. [12] introduced a group-deleted version of the residuals and weights in regression. Assume that d observations among a set of n observations are deleted. Let us denote the set of cases ‘remaining’ in the analysis by R and the set of cases ‘deleted’ by D. Therefore, R contains $(n - d)$ cases after d cases are deleted. Without loss of generality, assume that these observations are the last d rows of $X$, y, and (variance–covariance matrix) so that

The generalized DFFITS (GDFFITS) is used [12] for the entire data set, defined as:

where is the fitted response when a set of data indexed by D is omitted and

where . The author considered observations as influential if .

4.3. Tuning D-LASSO Parameter Estimation

Robust 5-fold cross-validation was used to select the penalization parameter $\lambda$ from a collection of candidates, with a $\tau$-scale of the residuals serving as the objective function. The $\tau$-scale was introduced by [22] to estimate the size of the residuals in a regression model in a robust and efficient manner.

To find a set of candidate values for $\lambda$, we selected 30 equally spaced points between 0 and $\lambda_{max}$, where $\lambda_{max}$ is approximately the smallest $\lambda$ for which all the coefficients of $\hat{\beta}$ except the intercept are zero.

To estimate $\lambda_{max}$, we first used bivariate winsorization [23] to robustly estimate the maximal correlation between $y$ and the columns of $X$. This estimate was used as an initial guess for $\lambda_{max}$, and then a binary search was used to improve it. If $p > n$, then 0 is excluded from the candidate set.
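The construction of the candidate set can be sketched as below. The upper end of the grid is taken as given (its robust estimation via winsorization and binary search is not reproduced), and the condition for dropping 0 is assumed to be that the number of covariates exceeds the sample size. The function name is hypothetical.

```python
def lambda_candidates(lam_max, n, p, n_points=30):
    """Equally spaced penalty candidates on [0, lam_max].

    Zero is dropped when p > n (assumed condition), since the unpenalised
    fit is then not uniquely defined.
    """
    step = lam_max / (n_points - 1)
    grid = [i * step for i in range(n_points)]
    if p > n:
        grid = grid[1:]
    return grid


print(lambda_candidates(3.0, n=50, p=25)[:3])   # starts at 0.0
print(lambda_candidates(3.0, n=50, p=80)[:3])   # 0.0 excluded
```

Each candidate would then be scored by cross-validation with a robust scale of the held-out residuals, and the candidate with the smallest score retained.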

5. Simulation Study

This section describes a simulation study that compares the proposed D-Lasso estimator’s performance with that of other Lasso estimators. There are six estimators in the study: (i) D-Lasso, (ii) MM-Lasso, (iii) Sparse-LTS, (iv) Huber-Lasso, (v) LAD-Lasso, and (vi) classical Lasso. Consider three different simulation scenarios:

Simulation 1: In the first simulation, multiple linear regression is taken into account with a sample size of 50 (n = 50) and 25 variables (p = 25), where each variable is selected from a joint Gaussian marginal distribution with a correlation structure of = 0.5.

The true regression parameters are set to be . The distribution of random errors e is generated from the following contamination model: where is the contamination ratio, is the signal to noise, which is chosen to be 3, is the standard normal distribution, and H is the Cauchy distribution to create a heavy-tailed distribution. The response variables are then calculated as follows:

The percentage of zero coefficients (Z.coef) equals 80%, and the percentage of non-zero coefficients (N.Z coef.) equals 20%.
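A heavy-tailed error sample of the kind described for this scenario can be generated as in the sketch below. It assumes a two-component mixture in which each error is standard normal with probability one minus the contamination ratio and standard Cauchy otherwise; how the signal-to-noise value enters the model is not recoverable from the text, so no scaling is applied. The function name is illustrative.

```python
import math
import random


def contaminated_errors(n, eps, rng):
    """Draw n errors: N(0, 1) with probability 1 - eps, standard Cauchy with probability eps."""
    errors = []
    for _ in range(n):
        if rng.random() < eps:
            # Inverse-CDF draw from the standard Cauchy distribution.
            errors.append(math.tan(math.pi * (rng.random() - 0.5)))
        else:
            errors.append(rng.gauss(0.0, 1.0))
    return errors


rng = random.Random(2023)                 # fixed seed for reproducibility
sample = contaminated_errors(50, eps=0.10, rng=rng)
print(max(abs(e) for e in sample))
```

With a contamination ratio of zero the sample is purely Gaussian, which corresponds to the uncontaminated setting.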

Simulation 2: The second simulation process is similar to the first, except that the p and n values are different (p = 50, n = 150), and the response variables are calculated as follows:

The percentage of true zero coefficients (Z.coef) equals 90% and the percentage of true non-zero coefficients (N.Z.coef) equals 10%.

Simulation 3: The third simulation is similar to the second, but n is increased to 500 and = The response variables are then calculated as follows:

The percentage of true zero coefficients (Z.coef) equals 90% and the percentage of true non-zero coefficients (N.Z.coef) equals 10%.

The following types of data are examined to see how well the approaches stand up to outliers and leverage points: (a) uncontaminated data; (b) vertical contamination (outliers on the response variables); (c) bad leverage points (outliers on the covariates).

The response variables and covariates are contaminated by certain ratios ( = 0.05, 0.10, 0.15, and 0.20) of vertical and high leverage points; these are created by randomly replacing some original observations with large values equal to 15 [24].
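The replacement scheme can be sketched as follows, assuming that a fixed share of positions is chosen uniformly at random and overwritten with the value 15. Applied to the responses it produces vertical outliers; applied to covariate values it produces leverage points. The function name and the rounding-down of the number of replaced cases are assumptions.

```python
import random


def contaminate(values, ratio, rng, outlier_value=15.0):
    """Replace a random share `ratio` of the values with a fixed large value."""
    n_outliers = int(ratio * len(values))      # rounded down (assumption)
    positions = rng.sample(range(len(values)), n_outliers)
    contaminated = list(values)
    for i in positions:
        contaminated[i] = outlier_value
    return contaminated, sorted(positions)


rng = random.Random(7)
y = [0.1 * i for i in range(20)]
y_contaminated, replaced = contaminate(y, ratio=0.10, rng=rng)
print(replaced)
```

Returning the replaced positions makes it possible to check afterwards whether a diagnostic measure flags the planted outliers.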

The simulations were performed in the statistical software R for D-Lasso, MM-Lasso, Sparse-LTS, Huber-Lasso, LAD-Lasso, and classical Lasso. For D-Lasso, the measure suggested by [12] was used to determine which observations were influential, and $\lambda$ was chosen as described in Section 4.3. For MM-Lasso we used the functions available in the GitHub repository https://github.com/esmucler/mmlasso (accessed on 25 October 2017) [7].

The Sparse-LTS estimator was calculated using the sparseLTS() function from the robustHD package in R, and $\lambda$ was chosen using a criterion as advocated by [6]. Huber-Lasso used the package and the LAD-Lasso estimator was calculated using the package . The Lasso estimator was calculated using the lars() function from the lars package [25], where $\lambda$ was chosen based on 5-fold cross-validation. , and , were chosen by applying the classical .

In each simulation run, there were 1000 replications. Four criteria are considered to evaluate the performance of the six methods, namely: (1) the percentage of zero coefficients (Z.coef), (2) the percentage of non-zero coefficients (N.Z.coef), (3) the average of mean squares of errors (), and (4) the median of the mean squares of errors, Med(mse). A good method for Simulation 1 is one whose percentage of Z.coef is close to 80% and whose percentage of N.Z.coef is close to 20%. For Simulations 2 and 3, a good method is one whose percentages of Z.coef and N.Z.coef are reasonably close to 90% and 10%, respectively. In all cases, a good method has the smallest () and Med(mse) values.
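The four criteria can be computed from the replications as in the sketch below. It assumes that each replication yields an estimated coefficient vector and a mean squared error, and that a coefficient counts as zero when it is exactly zero; the function name and the toy inputs are illustrative.

```python
from statistics import mean, median


def summarize(replications):
    """Summarise a list of (beta_hat, mse) pairs, one pair per replication."""
    zero_pct = mean(
        100.0 * sum(1 for b in beta_hat if b == 0.0) / len(beta_hat)
        for beta_hat, _ in replications
    )
    mses = [mse for _, mse in replications]
    return {
        "Z.coef": zero_pct,
        "N.Z.coef": 100.0 - zero_pct,
        "mean_mse": mean(mses),
        "Med(mse)": median(mses),
    }


toy = [
    ([0.0, 0.0, 1.0, 0.0, 2.0], 1.0),
    ([0.0, 1.0, 1.0, 0.0, 2.0], 3.0),
    ([0.0, 0.0, 0.0, 0.0, 2.0], 8.0),
]
print(summarize(toy))
```

Reporting the median alongside the mean is useful because a few replications with very large errors can inflate the average without affecting the median.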

Table 1.

The results of three scenarios of simulation study for uncontaminated data.

Table 2.

The results of three scenarios of simulation study for data with of vertical contamination.

Table 3.

The results of three scenarios of simulation study for data with of leverage points contamination.

The results clearly show the merit of D-Lasso. It can be observed from Table 1, Table 2 and Table 3 that the D-Lasso has the smallest average mse and Med(mse) values compared to the other methods.

In the case of no contamination, Table 1 shows that both classical and D-Lasso methods perform well in model selection ability. For example, in the scenario of Simulation 1, the classical Lasso successfully selected 80% of Z.coef and 20% N.Z.coef, followed by D-Lasso, which selected 77.6% and 22.4% for Z.coef and N.Z.coef, respectively.

The other robust Lasso methods also perform well, but they have a larger average mse and Med(mse) than these two methods. Furthermore, none of the methods suffers from false selection of variables.

In the case of vertical outliers and leverage points, the classical Lasso is clearly influenced by the outliers, as reflected in the much higher average mse and Med(mse). Furthermore, it tended to select more variables in the final model (overfitting) when the percentage of contamination increased to 20%.

On the other hand, in the case of vertical outliers, the robust Lasso methods (MM-Lasso, Sparse-LTS, Huber-Lasso, and LAD-Lasso) clearly maintain their excellent behavior. Sparse-LTS has a considerable tendency toward false selection when the percentage of contamination increases to 20%. Table 3 shows that the robust Lasso methods (Sparse-LTS, Huber-Lasso, and LAD-Lasso) were affected by the presence of leverage points in the data. The effect was worse with a higher percentage of bad leverage points in the data.

The results of D-Lasso and MM-Lasso are consistent for all percentages of contamination, but MM-Lasso has a larger average mse than D-Lasso, which indicates that D-Lasso is more efficient than the other methods. For further illustrative purposes, the forthcoming section analyzes some real data sets.

6. Application to Real Data

6.1. Ozone Data

To assess the performance of D-Lasso in comparison with other Lasso methods, we analyzed the Los Angeles ozone pollution data, as originally studied by [26], which is available in the R package ‘cosso’. The Ozone dataset comprises 330 observations, each representing daily measurements of nine meteorological variables. The ozone reading serves as the predicted variable, while the remaining eight covariates are temperature (temp), inversion base height (invHt), pressure (press), visibility (vis), millibar pressure height (milPress), humidity (hum), inversion base temperature (invTemp), and wind speed (wind).

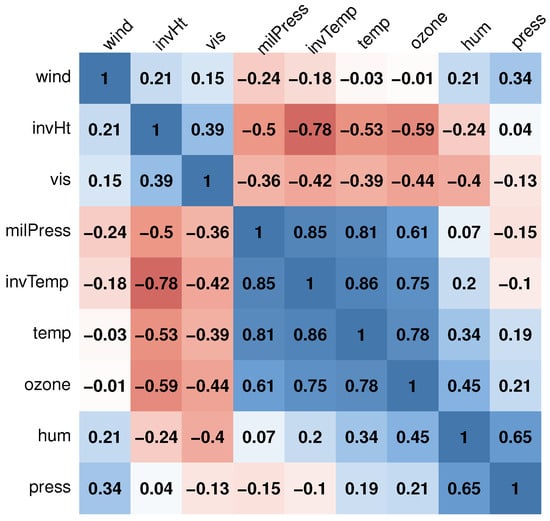

Figure 1 displays the correlation matrix of the meteorological variables, revealing significant correlations between the following pairs: (temp and invTemp), (invHt and invTemp), (milPress and invTemp), and (milPress and temp). Additionally, the Variance Inflation Factor (VIF), calculated as $VIF_j = 1/(1 - R_j^2)$, quantifies the increase in variance due to correlations among explanatory variables, where $R_j^2$ represents the unadjusted coefficient of determination for regressing the jth independent variable on the remaining ones [27]. A commonly used default cutoff value is 5; only variables with a VIF less than 5 are included in the model. If one or more variables in a regression exhibit high VIF values, it indicates collinearity.
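The link between the cutoff of 5 and the underlying coefficient of determination can be checked with a few lines of code. This sketch simply evaluates the VIF formula for given values of the coefficient of determination; the function name is illustrative.

```python
def vif_from_r2(r2):
    """Variance inflation factor 1 / (1 - R_j^2) for one covariate."""
    if not 0.0 <= r2 < 1.0:
        raise ValueError("R^2 must lie in [0, 1)")
    return 1.0 / (1.0 - r2)


for r2 in (0.0, 0.5, 0.8, 0.9, 0.95):
    print(f"R_j^2 = {r2:.2f} -> VIF = {vif_from_r2(r2):.1f}")
```

A VIF of 5 corresponds to a covariate for which 80% of the variance is explained by the other covariates, and the factor grows quickly as that share approaches 1.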

Figure 1.

Correlation matrix of variables in the Ozone data set. The colors reflect sign of correlation, where red reveals negative correlation and blue reveals a positive correlation.

The VIF values of the predictors for the Ozone data are provided in Table 4. It is evident that invTemp has the highest VIF value, followed by temp.

Table 4.

The VIF for the covariates of the multiple regression model for the Ozone data set.

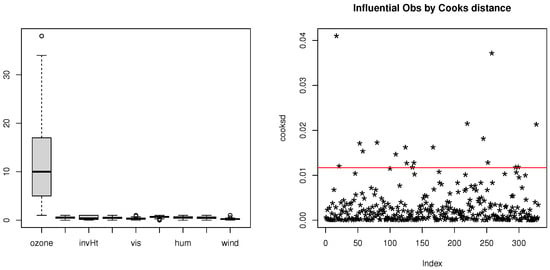

To identify potential outliers in the Ozone data, we used the function “outlier” in R, and we employed boxplots for the variables and evaluated Cook’s distance in a multiple regression model. Consequently, the Ozone dataset reveals the presence of one vertical outlier at row 38 and three leverage points, as illustrated in Figure 2.

Figure 2.

Boxplot of predictors (left) and Cook’s distance (right) for the Ozone data set; observations above the red line have too much influence.

We utilized the GDFFITS measure to detect outliers for the proposed D-Lasso estimator, while applying the other Lasso methods for comparative purposes. We also calculated the Root Mean Squared Error (RMSE) and R-squared values for these methods.
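For reference, the two summary measures can be computed as in this minimal sketch, which assumes the usual definitions: the square root of the mean squared residual, and one minus the ratio of the residual to the total sum of squares. The function names and the toy numbers are illustrative.

```python
import math


def rmse(y, y_hat):
    """Root mean squared error between observed and fitted values."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))


def r_squared(y, y_hat):
    """Coefficient of determination 1 - SSE / SST."""
    y_bar = sum(y) / len(y)
    sse = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    sst = sum((a - y_bar) ** 2 for a in y)
    return 1.0 - sse / sst


y = [1.0, 2.0, 3.0, 4.0]
y_hat = [1.0, 2.0, 3.0, 6.0]
print(rmse(y, y_hat), r_squared(y, y_hat))
```

Both measures are driven by squared residuals, so a single poorly fitted observation, as in this toy example, can change them substantially.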

Table 5 presents the results of the various Lasso methods. Notably, classical Lasso and LAD-Lasso selected six non-zero coefficients, albeit with higher RMSE values. In contrast, Huber-Lasso, Sparse-LTS, and MM-Lasso selected four non-zero coefficients. D-Lasso, on the other hand, selects three non-zero coefficients (intercept, temp, and hum). The RMSE values of D-Lasso are smaller than those of Huber-Lasso, Sparse-LTS, and MM-Lasso, signifying the greater reliability of D-Lasso for this dataset.

Table 5.

Results of the six Lasso estimation methods for the Ozone data set.

Furthermore, Table 5 demonstrates that across different Lasso criteria, the R-squared values of D-Lasso, Sparse-LTS, and MM-Lasso estimators are notably more acceptable than the R-squared values of other Lasso estimators.

6.2. Prostate Cancer Data

The Prostate cancer dataset encompasses 97 observations from male patients aged between 41 and 79 years. This dataset was originally sourced from a study conducted by [28] and is accessible through the R package ‘genridge’. The response variable is the log(prostate specific antigen), denoted as lpsa, while the explanatory variables include log(cancer volume) (lcavol), log(prostate weight) (lweight), age, log(benign prostatic hyperplasia amount) (lbph), seminal vesicle invasion (svi), log(capsular penetration) (lcp), Gleason score (gleason), and percentage of Gleason scores 4 or 5 (pgg45).

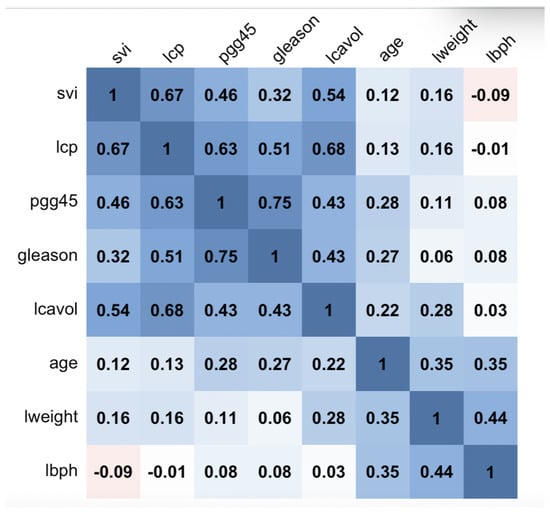

Figure 3 presents the correlation matrix of these variables, highlighting significant correlations between the following pairs: (pgg45, gleason) and (lcp, lcavol). Furthermore, the Variance Inflation Factor (VIF) values for the predictors in the Prostate cancer data are provided in Table 6, with pgg45 exhibiting the highest VIF value, followed by lcp.

Figure 3.

Correlation matrix of variables in the Prostate cancer data set. The colors reflect sign of correlation, where red reveals negative correlation and blue reveals a positive correlation.

Table 6.

The VIF for the covariates of the multiple regression model for the Prostate cancer data set.

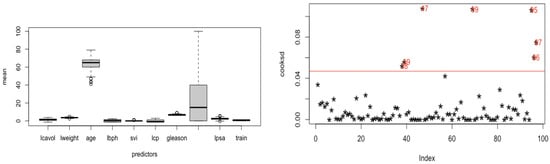

To identify potential outliers in the Prostate cancer data, we used the function “outlier” in R, and we employed boxplots for the variables and assessed Cook’s distance in a multiple regression model. As illustrated in Figure 4, the Prostate cancer dataset contains two vertical outliers and five leverage points.

Figure 4.

Boxplot of predictors (left) and Cook’s distance (right) for the Prostate cancer data set; observations above the red line have too much influence, and the red numbers show the row numbers of the influential observations in the data.

For the identification of outliers for the proposed D-Lasso estimator, we utilized the GDFFITS measure, while the other Lasso methods were applied for comparative analysis. We also calculated the Root Mean Squared Error (RMSE) and R-squared values for these methods.

Table 7 presents the results of the different Lasso methods. Classical Lasso, Huber-Lasso, and MM-Lasso each select six non-zero coefficients, albeit with higher RMSE values. In contrast, LAD-Lasso selects seven non-zero coefficients with an RMSE of 3.1248, while Sparse-LTS selects five non-zero coefficients with an RMSE of 2.4334.

Table 7.

Results of the six Lasso estimation methods for the Prostate cancer data set.

D-Lasso, on the other hand, sets two coefficients to zero (lcp and pgg45), and the RMSE value of D-Lasso is smaller than that of the other methods. Consequently, D-Lasso is considered more reliable for this dataset.

7. Conclusions

The classical Lasso technique is often utilized for creating regression models, but it can be influenced by the presence of vertical and high leverage points, leading to potentially misleading results. A robust version of the Lasso estimator is commonly derived by replacing the ordinary squared residuals () function with a robust alternative.

This article aims to introduce robust Lasso methods that utilize regression diagnostic tools to detect suspected outliers and high leverage points. Subsequently, the D-Lasso is computed following diagnostic checks.

To assess the effectiveness of our newly proposed approaches, we conducted comparisons with the classical Lasso and existing robust Lasso methods based on LAD, Huber, Sparse-LTS, and MM estimators using both simulations and real datasets.

In this article, D-Lasso regression serves as the primary variable selection technique. Future endeavors may delve into exploring the asymptotic theoretical aspects and establishing the oracle properties of D-Lasso.

Author Contributions

Conceptualization, S.S.A. and A.H.A.; methodology, S.S.A. and A.H.A.; software, S.S.A.; validation, S.S.A. and A.H.A.; formal analysis, S.S.A.; writing—review and editing, S.S.A. and A.H.A.; visualization, S.S.A. and A.H.A.; supervision, S.S.A.; project administration, S.S.A. and A.H.A.; funding acquisition, S.S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia project number ISP-2024.

Data Availability Statement

The two datasets used here are available in R packages.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288.

- Fan, J.; Li, R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Stat. Assoc. 2001, 96, 1348–1360.

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429.

- Wang, H.; Li, G.; Jiang, G. Robust regression shrinkage and consistent variable selection through the LAD-Lasso. J. Bus. Econ. Stat. 2007, 25, 347–355.

- Lambert-Lacroix, S.; Zwald, L. Robust regression through the Huber’s criterion and adaptive lasso penalty. Electron. J. Stat. 2011, 5, 1015–1053.

- Alfons, A.; Croux, C.; Gelper, S. Sparse least trimmed squares regression for analyzing high-dimensional large data sets. Ann. Appl. Stat. 2013, 7, 226–248.

- Smucler, E.; Yohai, V.J. Robust and sparse estimators for linear regression models. Comput. Stat. Data Anal. 2017, 111, 116–130.

- Simpson, L.; Combettes, P.L.; Müller, C.L. C-lasso - A Python package for constrained sparse and robust regression and classification. J. Open Source Softw. 2020, 6, 2844.

- Chang, L.; Welsh, A.H. Robust Multivariate Lasso Regression with Covariance Estimation. J. Comput. Graph. Stat. 2022, 32, 961–973.

- Atkinson, A.C.; Riani, M. Robust Diagnostic Regression Analysis, 2nd ed.; Springer: New York, NY, USA, 2000.

- Chatterjee, S.; Hadi, A.S. Regression Analysis by Example; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2006.

- Rahmatullah Imon, A. Identifying multiple influential observations in linear regression. J. Appl. Stat. 2005, 32, 929–946.

- Ryan, T.P. Modern Regression Methods; John Wiley & Sons: Hoboken, NJ, USA, 2008; p. 655.

- Alshqaq, S.S.A. Robust Variable Selection in Linear Regression Models. Doctoral Dissertation, Institut Sains Matematik, Fakulti Sains, Universiti Malaya, Kuala Lumpur, Malaysia, 2015.

- Huber, P.J. Robust Statistics; Wiley: New York, NY, USA, 1981.

- Maronna, R.A.; Martin, R.D.; Yohai, V.J. Robust Statistics: Theory and Methods; Wiley Series in Probability and Statistics; John Wiley & Sons: Hoboken, NJ, USA, 2006.

- Rousseeuw, P.; Yohai, V. Robust regression by means of S-estimators. In Robust and Nonlinear Time Series Analysis; Springer: Berlin/Heidelberg, Germany, 1984; pp. 256–272.

- Saleh, S.; Abuzaid, A.H. Alternative Robust Variable Selection Procedures in Multiple Regression. Stat. Inf. Comput. 2019, 7, 816–825.

- Cook, R.D. Detection of influential observation in linear regression. Technometrics 1977, 19, 15–18.

- Belsley, D.A.; Kuh, E.; Welsch, R.E. Regression Diagnostics: Identifying Influential Data and Sources of Collinearity; John Wiley & Sons: Hoboken, NJ, USA, 2005.

- Hadi, A.S. A new measure of overall potential influence in linear regression. Comput. Stat. Data Anal. 1992, 14, 1–27.

- Yohai, V.J.; Zamar, R.H. High breakdown-point estimates of regression by means of the minimization of an efficient scale. J. Am. Stat. Assoc. 1988, 83, 406–413.

- Khan, J.A.; Van Aelst, S.; Zamar, R.H. Robust linear model selection based on least angle regression. J. Am. Stat. Assoc. 2007, 102, 1289–1299.

- Uraibi, H.; Midi, H. Robust variable selection method based on huberized LARS-Lasso regression. Econ. Comput. Econ. Cybern. Stud. Res. 2020, 54, 145–160.

- Hastie, T.; Efron, B. Lars: Least Angle Regression, Lasso and Forward Stagewise; R Package; Version 1.2. Available online: https://CRAN.R-project.org/package=lars (accessed on 15 November 2018).

- Breiman, L.; Friedman, J. Estimating Optimal Transformations for Multiple Regression and Correlation. J. Am. Stat. Assoc. 1985, 80, 580–598.

- Fox, J. Regression Diagnostics: An Introduction; Sage Publications: New York, NY, USA, 2019.

- Stamey, T.A.; Kabalin, J.N.; McNeal, J.E.; Johnstone, I.M.; Freiha, F.; Redwine, E.A.; Yang, N. Prostate specific antigen in the diagnosis and treatment of adenocarcinoma of the prostate. II. Radical prostatectomy treated patients. J. Urol. 1989, 141, 1076–1083.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).