Abstract

This paper constructs the double penalized expectile regression for linear mixed effects model, which can estimate coefficient and choose variable for random and fixed effects simultaneously. The method based on the linear mixed effects model by cojoining double penalized expectile regression. For this model, this paper proposes the iterative Lasso expectile regression algorithm to solve the parameter for this mode, and the Schwarz Information Criterion (SIC) and Generalized Approximate Cross-Validation Criterion (GACV) are used to choose the penalty parameters. Additionally, it establishes the asymptotic normality of the expectile regression coefficient estimators that are suggested. Though simulation studies, we examine the effects of coefficient estimation and the variable selection at varying expectile levels under various conditions, including different signal-to-noise ratios, random effects, and the sparsity of the model. In this work, founding that the proposed method is robust to various error distributions at every expectile levels, and is superior to the double penalized quantile regression method in the robustness of excluding inactive variables. The suggested method may still accurately exclude inactive variables and select important variables with a high probability for high-dimensional data. The usefulness of doubly penalized expectile regression in applications is illustrated through a case study using CD4 cell real data.

1. Introduction

Linear mixed effect model (LME) is an important statistical model, which is widely used in various fields. The LME model includes the fixed effect and random effect. The fixed effect is used to represent the general characteristics of the sample population, and the random effect is used to depict the divergences between individuals and the correlation between multiple observations. The structure and property of this model reveal substantial differences compared to the general linear models and complete random coefficient linear models. Compared with other linear models, the inclusion of random effects in the mixed effects model captures the correlation between the observed variables.

Maximum likelihood and least square method are the classical methods of estimation used for the LME model. However, the least square method leads to biased estimates when the data with heavy-tailed distribution or significant heteroscedasticity. Koenker and Bassett (1978) [1] considered the quantile regression to solve such problems by regressing covariates according to the conditional quantiles of response, and capture the regression models under the all quantiles. However, the sparsity of sample variable is an issue which can not be ignored when it involves the correction analysis of variables, that is not all variables have predictive roles on regression analysis. In practical applications, massive candidate variables can be used for modeling analysis and prediction. Retaining the incorrelated variables in the model for prediction is undesirable, where the retention of irrelevant variables will produce the large deviation and non-interpretability of the model. How to select variables effectively is a challenging topic for the linear mixed effects models. Similar to the general linear regression, replacing the different types of penalty terms in the quantile regression can achieve synchrosqueezing for coefficients to reach target of variable selection. For example, Tibshirani (1996) [2] proposed a Lasso method with penalty terms, and selected variables by squeeze parameters sparsely in quantile regression. Quantile Lasso regression constraints some coefficients of irrelevant variables to 0 when the sum of absolute values of coefficients is less than a pre-specified constant, which not only can acquire a more simplified model and select variables simultaneously, also solve the problem of data with sparsity. Next, Zou (2006) [3] gave the proof of the oracle properties of adaptive Lasso in generalized linear models. Biswas and Das (2021) [4] proposed a Bayesian approach of estimating the quantiles of multivariate longitudinal data. Koenker (2004) [5] proposed the penalty quantile regression based on random effects, which can estimates parameter through weighting random effects information of multiple quantiles. Wang (2018) [6] proposed a new semiparametric approach that uses copula to account for intra-subject dependence and approximates the marginal distributions of longitudinal measurements, given covariates, through regression of quantiles. Wu and Liu (2009) [7] proposed the SCAD and adaptive Lasso penalized quantile regression method, and gave the oracle properties in the situation of variable number unchanged. For different types of data, Peng (2021) [8] illustrated the practical utility of quantile regression in actual survival data analysis through two cases. Li (2020) [9] constructed a double penalized quantile regression method, and used the Lasso algorithm to solve the parameters. It proved that the estimated accuracy of the double penalized quantile regression is better than the other quantile regression. And according to the article above, we find that double penalized quantile regression model lacks accuracy and stability in excluding inactive variables. And expectile regression can accurately reflect the tail characteristics of the distribution, the variables of the model can be accurately selected. Therefore, in order to excluding inactive variables more accurately, this paper cojoin the double penalty terms into the expectile regression to obtain the double penalized expectile regression model.

Newey and Powell (1987) [10] replaced the -norm loss function to -norm loss function for weighted least-squares, and proposed expectile regression. It regresses the covariates based on the conditional expectile of the response to obtain the regression model under all expectiles level. Almanjahie et al. (2022) [11] investigated the nonparametric estimation of the expectile regression model for strongly mixed function time series data. Gu (2016) [12] proposed the regularized expectile regression to analysis heteroscedastictiy of high-dimensional data. Farooq and Steinwart (2017) [13] analysed a support vector machine type approach for estimating conditional expectiles. Expectile and quantile are metric indicators that capture the tail behavior of data distribution. When covariates make different impacts on the distributions of different response, such as right or left skew, the two metrics can not only decrease the effects of outliers on statistical inference, but also provide a more comprehensive characteristics of entire distribution. Therefore, quantile and expectile regression provide a more comprehensive relation between the covariates and response.

Quantile regression is the generalization of median regression, and expectile regression is the generalization of mean regression, so expectile regression inherits the computational convenience and sensitivity to the observation values. Especially in the financial field, researchers need the sensity of expectile regression to data. For panel data, the model proposed by Schulze and Kauermann (2017) [14] allows multiple covariates, and a semi-parametric approach with penalized splines is pursued to fit smooth expectile curves. For cross-sectional data, expectile regression models and its applications have been studied. Sobotha et al. (2013) [15] discussed the expectile confidence interval based on large sample properties; Zhao and Zhao (2018) [16] proposed the penalized expectile regression model with SCAD penalty with the proof of asymptotic property; Liao et al. (2019) [17] proposed penalized expectile regression with adaptive Lasso and SCAD penalty for variable selection, and gave the proof of oracle properties under independent but different distributions of error terms. Waldmannetal et al. (2017) [18] proposed a combined Bayesian method with weighted least squares to estimate complex expectile regression. Next, the newly proposed iterative Lasso-expectile regression algorithm is used to solve the estimation of parameters and variables selections. Xu and Ding (2021) [19] combined the elastic network punishment with expectile regression and constructed the elastic network penalized expectile regression model. For the underlying optimization problem, Farooq and Steinwart (2017) [20] proposed an efficient sequential-minimal-optimization-based solver and derived its convergence. For the model selection problem, Spiegel et al. (2017) [21] introduced several approaches on selection criteria and shrinkage methods to perform model selection in semiparametric expectile regression. Expectile regression has also received attention in the economic and financial sector, particularly in actuarial and financial risk management. Daouia et al. (2020) [22] derive joint weighted Gaussian approximations of the tail empirical expectile and quantile processes under the challenging model of heavy-tailed distributions. Ziegel (2013) [23] applied expectile regression to the field of risk measurement.

Expectile regression is widely used in various fields. Including economic field [11,14,17,22,23], biomedical field [12,19] and health field [15,18,21] etc. Therefore, the study of expectile regression has many practical significances, and it is very necessary to study it.

To select the important variables into the model and the inactive variables excluded from the model more accurately, this paper combines the linear mixed effect model with the expectile regression model, and cojoin penalty terms into the estimation of random and fixed effects to construct the double penalized expectile regression for linear mixed effects model. And the iterative Lasso-Expectile regression algorithm is used to solve parameters. The asymptotic property of double penalized expectile regression estimation is proved. The simulation studies will analysis the results of coefficient estimation and variable selection of the method proposed in this paper under different conditions, and the robustness of this method in excluding inactive variables is mainly studied. Finally, based on the research on the real data of CD4 cells, the practical utility difference between the double penalized quantile regression and the double penalized expectile regression are compared.

The rest of this paper is organized as follows. We propose the double penalized expectile regression method and the iterative Lasso expectile regression algorithm in Section 2. The convergence of the algorithm and the asymptotic properties of the model are given in Section 3. In Section 4, we present the simulation studies. And a real data example is illustrated in Section 5. Moreover, this method is compared with the existing double penalized quantile regression method in parameter estimation and variable selection in simulation studies and real data analysis. In Section 6, we give the conclusions. In Appendices, we show the proofs of lemmas and asymptotic properties, and some graphs and tables obtained by simulation studies.

2. Methodologies

In order to get the specific formula of the linear mixed effect double penalized expectile regression model, we introduce the LME model and summarize the estimation methods. And give the specific steps of iterative lasso-expectile regression algorithm. Then we discuss the selection criteria of penalty parameters.

2.1. Model and Estimation

Firstly, we consider the LME model

where is the vector of fixed effects regression coefficients, is a vector of random effects. is the row vector of the known design matrix, is the covariate associated with random effects, and is the scalar of the ith subject’s continuous random variable. And we let , . The Equation (1) can be expressed as

where , , , and .

Next, we let , , , and , , , . So Considering the matrix form, the LME model (2) can be expressed as

Using the maximum likelihood and generalized least square method, the parameters and can be calculated. According to the known and , , consider following joint density function of and :

It is possible to determine the and by minimizing the twice negative logarithm of Equation (4). Equation (4) is not a typical log-likelihood function since are vectors of random effects parameters. To be more specific, the first part in Equation (4) is a weighted residual that accounts for within-subject variation, while is a representation of a penalty term resulting from random effects that accounts for between-subject variation.

Minimizing Equation (4) is identical to solving the following mixed model equation [24,25] for given the and P.

Expressed in matrix form as:

where , . Since this outcome had already been achieved, Robinson [26] credited Henderson [27] with the aforementioned normal equations. The “best linear unbiased predictor” (BLUP), which Goldberger [28] introduced, is used to refer to the random effect estimated value and associated estimated value . And Rao [24] put up a way to demonstrate the following Proposition 1:

Proposition 1.

solves, where.

Although the implicit estimation solution of random effects may be distinct from others, seeing the random effects estimation value as a penalized least squares estimator offers useful insights for the addition of punishment items. By shrinking the unrestricted reaches the expected value, and both accuracy of the estimator of and the individual fixed effect estimation will be improved. According to the classical statistical conclusion, in the case of non-informative priors, the posterior expectations of parameters of Bayesian estimation of LME are also solutions (5) and (6).

2.2. Double Penalized Expectile Regression

Li (2020) [9] proposed a double penalized quantile regression estimation for the LME model that finds and , i = 1,…,n that minimize

where the so-called check function of under level quantile regression is denoted by . Equation (7) can simultaneously perform a variable selection operation and estimate the mixed expectile functions of the response variable, as stated in Li (2020) [9].

Considering the lack of robustness of quantile regression for excluding inactive variables. Inspired by Newey and Powell (1987) [10], we can use the following method for the conditional expectile functions of the response of the observation on the individual ,

The same individual is connected to each component of . We suggest a double penalized expectile regression method for the LME model based on Equation (8), which find and , that minimize

where signifies the check function, and .

Compared with Equation (7), the expectile regression has the sensitivity to extreme values, and the square loss function used in the regression has the computational advantage. When discussing the asymptotic properties of the estimation, the covariance matrix of the asymptotic distribution does not need to calculate the density function of the residual. Therefore, compared with quantile regression, expectile regression depends more on the overall distribution, and similar to Equation (7), our method also considers selecting variables when estimating coefficients.

2.3. Iterative Lasso-Expectile Regression Algorithm

Obviously, it is very difficult to directly estimate parameters , , and of the iterative double penalized expectile regression model. We propose the Lasso-expectile regression algorithm to solve this optimization problem by selecting one variable and fixing another to solve and , it is equivalent to solving the general lasso expectile model. The iterative series can not guarantee the convergence to the global optimum, the objective function increases monotonically and achieves the maximum value. Therefore, the algorithm terminates after limited iterations and reaches a local optimum (the detailed proof in Section 3).

It is more efficient to combine the iterative approach with the adjustment of than to solve for a fixed since this algorithm can find the solution paths of with when provided . According to several selection criteria, we choose the best to fit and the best to fit . The SIC [29] (Schwarz Information Criterion) and GACV [30] (generalized approximate cross-validation criterion) are the two criteria that are most frequently employed for expectile regression, respectively.

where , , and the dimension of the process is , which is the same as one of the non-zero components of . Only when the result of SIC or GACV is at the inflection point is the ideal parameter obtained.

In fact, we can present a different set of tuning parameters firstly, such as dividing (0, a] evenly into parts, and then select the best tuning parameter based on these two criteria. In order to solve the parameter for the variables , , we compared these two criteria. The solution to might also be derived similarly provided .

We summarize the above iterative algorithm in the following Algorithm 1 steps. The specific steps of iterative Lasso-expectile regression algorithm are as follows.

| Algorithm 1. Iterative Lasso-Expectile Regression Algorithm. |

| Input: , , |

| Output: , , , . |

| Step 1: Give the initial value, , , and get the standard Lasso solution according to ; |

| Step 2: Iterate the following two Lasso optimization steps, , |

| ●, and the modified residual is ; |

| ●, and get a new response variable ; |

| Step 3: Terminate when for a pre-specified small value . |

Then we use an example to show the solution path in each iteration step for SIC and GACV. We consider the following the process of model data generating:

where , , , , are iid from standard normal distribution, and the random effects are iid from , . We use double penalized Lasso ecpectile regression (DLER) and double penalized Lasso quantile regression (DLQR) methods to study the two criteria, and get the following results.

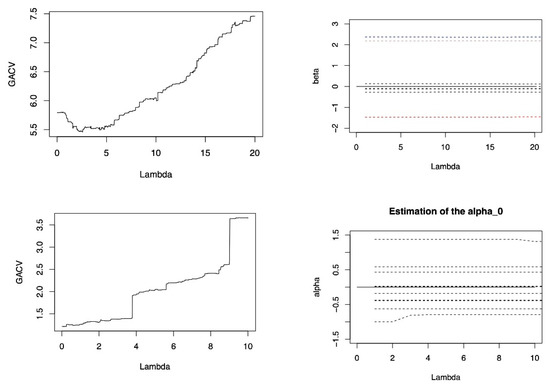

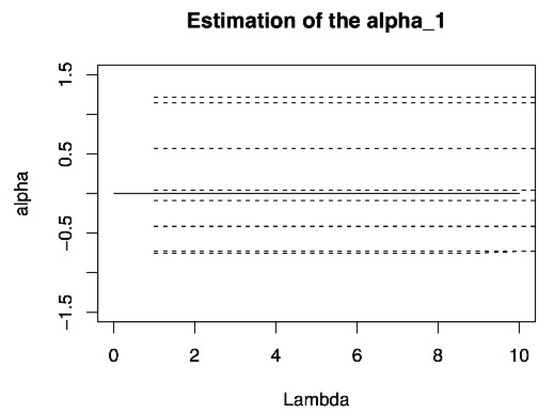

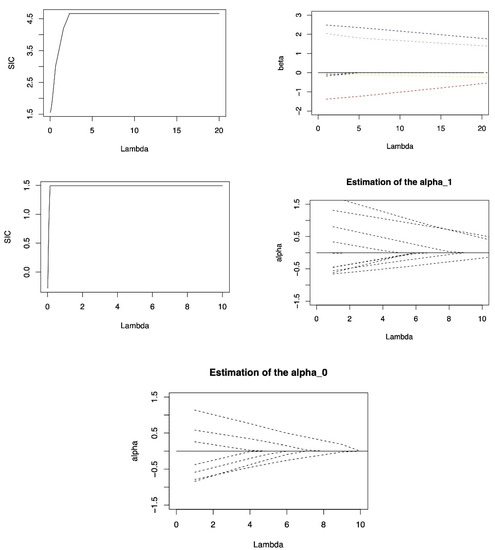

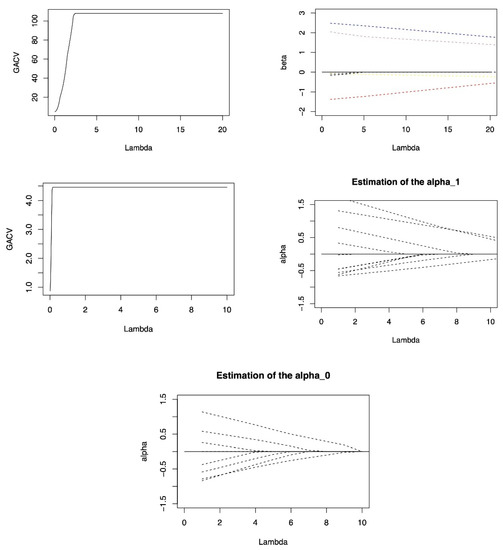

Figure A1 and Figure A2 (in the Appendix B) show the search paths of the DLER method for penalty parameters and under the SIC and GACV criteria in the first iteration respectively. It can be seen in the graph that the penalty parameter search paths of SIC and GACV are obviously different, but the corresponding optimal penalty parameter values are close.

Figure A3 and Figure A4 (in the Appendix B) show the results of DLQR method in the first iteration process respectively. The loss functions of DLER method and DLQR method are obviously different in nature. Under different penalty parameters, the criterion values of the two methods are not comparable, so they cannot be compared and analyzed horizontally from the numerical perspective of the two criteria. From the path of fixed effect coefficient, it can be seen that with the increase of penalty coefficient , DLER method will quickly compress the inactive covariate coefficients to 0, while the coefficients of important covariates change slowly around the true value. However, The DLQR method does not fluctuate much with changes in the penalty coefficient. It shows that the DLER method has an absolute advantage over DLQR in terms of variable selection.

3. Asymptotic Properties

In this part, we give the convergence of iterative Lasso expectile regression algorithm and the asymptotic property of double penalized expectile regression.

3.1. Convergence of Iterative Lasso-Expectile Algorithm

In order to prove the asymptotic properties of the double penalized expectile regression method. The following lemmas are provided:

Lemma 1.

There exists a single that minimizes the cost functionfor any given,,ifis a continuous and strictly convex function ofand. The same conclusion also applies to.

The Proof of Lemma 1 is given in Appendix A.

Lemma 2.

For a given ,

, definitionis the mapping of the above iterative algorithm for updating process ofin one step, thenis continuous.

The Proof of Lemma 2 is given in Appendix A.

Lemma 3.

The sequence solution provided in the iterative Lasso expectile algorithm reduces the objective functionandconverges tounder the assumption that there is a uniquefor the givenandto minimize.

The Proof of Lemma 3 is given in Appendix A.

3.2. Asymptotic Properties of DLER

In order to obtain the asymptotic properties of the double penalized expectile regression method, we first propose the following regularization assumptions:

(A1) has independent conditional distribution function and density function , given , , , and , , .

Where , , , , ;

(A2) Assuming that ,, are independent, and their distribution function and density function are and respectively, ;

(A3) Definition , , and there are the positive definite matrixes

where ;

(A4) , .

The above assumptions (A1) and (A3) are the standard conditions of the panel data models, where assumption (A1) is common in the literature of quantile regression, which not only ensures the independence between observation individuals, but also allows the heterogeneity within individuals; (A3) gives the full rank condition, according to Lindeberg-Feller central limit theorem, when , it will be simplified by , and then (A3) will be simplified.

We give the asymptotic properties of the double penalty expectile regression estimation of Equation (7), and consider the following objective function:

where is a convex function whose minimum value is

Theorem 1.

Under assumptions (A1)–(A4), when, , , if, , , and there is the, andis satisfied, so we have

where

According to the aforementioned theorem, for non-zero coefficients, the double penalized estimation resulted in bias, and the bias degree was regulated by the tuning parameter. The proof of Theorem 1 is given in the Appendix A.

4. Simulation Study

In this section, we illustrate the performance of the proposed double penalized expectile regression. In order to study the impact of the SIC criteria and the GACV criteria on double penalized Lasso expectile regression model and double penalized Lasso quantile regression model [9]. Simulation 1 is used to compare the two methods with different expectile levels and signal-to-noise ratio. And briefly denoted as DLER-SIC, DLER-GACV, DLQR-SIC, and DLQR-GACV; To illustrate the robustness of the DLER method in excluding inactive variables, simulation 2 and simulation 3 are given to study the impact of random effects on the coefficient estimation, comparing the performance of DLER and DLQR under different random effects. As well as comparing the obtained experimental values at different error distributions and different model sparsity to illustrate the advantage of DLER in variable selection; in order to illustrate the advantage of the DLER method in high-dimensional data, simulation 4 compares the two methods when dimensions of covariate is larger than the sample size.

In order to evaluate the accuracy of model coefficient estimation, we use mean square error (MSE) as the evaluation index. MSE is defined as:

where , and is the estimator of in the simulation. SD stands for the 100-repetition Bootstrap standard deviation. Corr expresses the average proportion of important variables that were entered into the model correctly, and Incorr expresses the average proportion of inactive variables that were entered into the model incorrectly. represent the total number of choosing each important variable into the model correctly and its bootstrap standard deviation respectively. represent the total number of correctly excluded each redundant variable out of the model and its bootstrap standard deviation respectively. Int () represent the total number of choosing intercept into the model and its bootstrap standard deviation.

Simulation 1.

The impact of “signal-to-noise ratio” on estimation.

We compared the effectiveness of the two tuning parameter criteria, SIC and GACV. The process for producing the model data listed below was rated as

We let , , ,

, and

are generated from with correlation between and being , . This paper sets the random effects are iid from , where . And , . The error terms are iid from , we set , and to compare the estimation methods. In every simulation, we consider the estimator of coefficients to be 0 if its absolute value is less than 0.1. We set in the iterative Lasso algorithm of DLER as the same settings in the iterative algorithm of DLQR of Li (2020) [8]. Table A1 and Table A2 (in the Appendix C) give the results of coefficient estimation and variable selection, respectively.

According to Table A1, the estimation accuracy of the model is first analyzed. When is fixed, the MSE of the two methods is almost reaches the minimum at , the estimation accuracy of the two methods is better, while the accuracy at the other fractile is slightly worse, but the difference is not obvious, for example when , MSE of DLER-SIC method is 0.292 at , while at the other expectile levels are 0.306 and 0.374, respectively. In this case, this shows that the DLER method has the same accuracy as the DLQR method in parameter estimation. In addition, according to the results of the two tunning parameter criterions, the estimation accuracy under the SIC is better than the GACV whether the DLER model or the DLQR model.

Next, analyze the accuracy of variable selection. According to the SD of each variable estimation in Table A1, when fixed , with the increase of , SD decreases gradually, and the accuracy of variable selection of the two models is gradually increased. For each important variable, according to Table A2, the DLER method can select 99% of the important variables into the model. In addition, for each inactive variable, comparing the results in the table, shows that when is fixed, with the increase of , the SD is also increasing, indicating that the accuracy of DLQR method is gradually weakened in excluding inactive variables out of the model. For DLER method, the SD is the largest at , DLER method has the low accuracy, it is still better than DLQR at this time, especially at , the SD is the smallest, indicating that with the increase of signal-to-noise ratio, DLER method is better than DLQR method in excluding the redundant variables. Combined with the results of Table A2, the same conclusion can be obtained.

Therefore, for the parameter estimation of the methods, when the signal-to-noise ratio with relatively large, the DLER method is better than the DLQR method. In terms of model variables selection, whether in SIC and GACV criteria, almost 99%of important variables can be selected in both methods. For the ability to exclude the redundant variables, the DLER method is more advantageous than the DLQR method. Therefore, in terms of the accuracy of parameter estimation, DLER method and DLQR method have the same effect. However, the DLER method is better than the DLQR method in terms of estimated stability and excluding inactive variables.

Simulation 2.

The influence of random effects.

We use the simulation to show the influence of random effects on DLER-SIC, DLER-GACV, DLQR-SIC and DLQR-GACV. The data generation model is Equation (15) with the fixed . And consider the covariance matrix of the random effect with three forms:

With the increasing of random effects, we get the results of 100 repetitions simulations with . Table A3 and Table A4 (in the Appendix C) give the results of estimation and variable selection of different methods under different random effects at .

According to Table A3, with the increasing interference of random effects, although the estimation accuracy of the two methods is decreasing, and the accuracy of the fixed effect coefficient is decreasing, especially the first two important covariates interfered by random effects. However, in term of variable selection, DLER method has little change in the accuracy of variable selection, and almost all the important variables can be choosing into the model, and the ability to exclude the inactive variables is still better than DLQR method. In particular, the DLER-SIC method, combined with Table A4, shows that the proportion of correctly choosing variables is 99%, and the accuracy of excluding the redundant variables is almost above 90%. In general, since the double penalized expectile regression takes into account the random effects while selecting fixed effects, it can be almost free from the interference of random effects in the accuracy of variables selection, but it will still be affected by random effects to a degree in the accuracy of fixed effect coefficient estimation. Therefore, in terms of the accuracy of parameter estimation, DLER method and DLQR method have the same effect. However, DLER is still more robust than DLQR in variable selection, even if the interference of random effects is added to the model.

Simulation 3.

The case of different model sparsity and error distribution.

We compare DLQR and DLER under different model sparsity and error distribution. The data generation model is Equation (15), considering the fixed effect as the following three cases

- (1)

- Dense

- (2)

- Sparse

- (3)

- High Sparse

We consider , , , the distribution of error term respectively comes form , and . Comparing the models DLER-SIC, DLER-GACV, DLQR-SIC and DLQR-GACV. Tables shows the results of the three models by 100 repetitions simulations. Table A5 and Table A6 show the results of coefficient estimation and variable selection under the dense model, Table A7 and Table A8 show the results under the sparse model, Table A9 and Table A10 show the results under the highly sparse model. (See Appendix C for tables).

According to Table A5. At this time, all variables are important variables. With the change of error distribution, MSE are increasing, and the estimation accuracy of the two methods are decreased. We find that DLER method and DLQR method have the same effect in term of the accuracy of parameter estimation. In addition, considering the accuracy of variable selection of the two methods. Although the two methods cannot completely choose all the important variables into the model, it can be known from Table A6 that the average of the correct variables retained by them is more than 7.6, which is very close to the true value 8. Moreover, when the error term is adjusted from the normal distribution to the heavy-tailed distribution, the change of DLER is the smallest in all methods, so it is weak on the influence of different error distributions. In summary, DLER is robust to the change of error distribution on variable selection.

Next, the results of sparse model and highly sparse model are analyzed. Firstly, the estimation accuracy of DLER method and DLQR method is analyzed. The results are similar to the dense model, with the error distribution becoming more complex, MSE are increasing, and the estimation accuracy of the two methods is decreased. Next, considering the accuracy of the variable selection in model, according to Table A7 and Table A8, when the error obeys the normal distribution, for the sparse model, the two methods have little difference in the accuracy of excluding the inactive variables. However, when the error obeys distribution or , the DLER method is significantly better than DLQR. Especially in the highly sparse model with error distribution , DLER excluding the inactive variables advantage is more obvious, especially DLER-SIC method. It shows that expectile regression is quite robust than quantile regression.

Simulation 4.

The case of high dimensional data.

High-dimensional data is widely available in the stock exchange market, biomedicine, aerospace and other fields, so the modeling and analysis of high-dimensional data has very important practical significance. Next, we investigate the performance of the proposed model in the high-dimensional scenarios of selecting variables. The data generation model is still the Equation (15), we reduce the sample size to , , that is, the total sample size is 100. In addition, 102 independent noise variables are added to the above sparse model, all variables are independent and identically distributed in , thus the total number of variables is 110, larger than the total sample size, and . There are three real important covariates and 107 redundant covariates. In addition, we set , . Table A11 and Table A12 (in the Appendix C) show 100 repeated results of the two methods at , where () denote the average and bootstrap standard deviation of all redundant variables being correctly excluded out of the model.

Firstly, analyze the estimation precision of the two methods. According to Table A11, in the situation of fixed quantile, when the dimension of covariates is larger than the sample size, the MSE is larger than the previous simulation, the change of the MSE value of the DLER method is less than DLQR, and the MSE of the DLQR method is significantly larger than the DLER, indicating that although the estimation accuracy of the two methods is decreasing, the DLER method is significantly better than the DLQR method, and the stability of the DLER method is better than the DLQR method. Next, analyze the accuracy of variable selection. According to Table A11 and Table A12, the DLER method can ensure that the proportion of excluding redundant variables is more than 95% under three expectile levels. When the expectile level is fixed, DLER method has absolute advantages over DLQR in excluding redundant variables, especially DLER-GACV method can exclude more than 97% of the inactive variables at . Therefore, when the dimension of covariates is larger than the sample size, DLER method is superior to DLQR in terms of estimation accuracy and excluding the inactive variables both at median and extreme expectile level .

5. Application

CD4 cells play an important role in determining the efficacy of AIDS treatment and the immune function of patients, so excluding inactive variables is important for analyzing CD4 cell data. We applied the model to the real data of CD4 cell count. For a complete description of the data set, please refer to Diggle P.J’ s homepage: https://www.lancaster.ac.uk/staff/diggle/, accessed on 30 June 2022, and we select a part of this data set. The response variable is the open-root conversion of CD4 cell count. The variables in the data set include the time of seroconversion (time), the age relative to a starting point (age), the smoking status depicted by the number of packets smoked (smoking), the number of sexual partners (sex partner), and the depression state and depression degree (depression). Choosing time, smoking, age and sex partner as important fixed effect to determine the CD4 cell numbers, where time and age are important random effects. On this basis, consider the following model:

where is the observation of the ith individual, is the observation of explanatory variables, and is a subset of . We set that the threshold of is 0.05, and the threshold of is 0.1. Table A13 (in the Appendix C) gives the results of the four methods at .

From the results of Table A13, the double penalized expectile regression method proposed can give the results of variable selection while estimating the coefficients. The variable with a value of 0 in the table indicates that it can be excluded from the model. It can be found that both estimation methods excluded variables and from the model. In the four variables, only time and smoking will affect CD4 cells. From the sign of coefficient estimation, the value of time is always less than 0, indicating that time has a negative influence on CD4 cells. The values of smoking are all larger than 0, indicating that smoking has a positive influence on CD4 cells.

Analysis of the numerical changes. For variable , under the SIC criterion, with the changes of expectile level, the DLER method fluctuates less, and the DLER is relatively stable. Indicating that the stability of the DLER-SIC method is slightly stronger. For variable , whether it is the SIC criteria or the GACV criteria, the numerical fluctuation of the DLER method is less than the DLQR method, indicating that the stability of DLER for estimation of variable is better than that of DLOR.

This shows that DLER method has strong practical utility for CD4 data in excluding inactive variables and analyzing its influencing factors.

6. Conclusions

In this paper, we propose the double penalized expectile regression method for linear mixed effects model, which imposes penalties on both fixed effects and random effects, fully accounting for the random effects in coefficient variable selection and estimation. The model proposed in this paper is found to be highly robust in excluding inactive variables after simulations and application studies. The conclusion is also supported by comparison with double penalized quantile regression method, through the comparison of the results of the two estimation methods, it can be found that the double penalized expectile regression has the same effect as the double penalized quantile regression in term of the accuracy of parameter estimation, but has absolute advantage in selecting variables, especially in excluding inactive variables.

When the signal-to-noise ratio is different, the precision of coefficient estimation and the accuracy of variable selection will be different. When the signal-to-noise ratio is larger, the proposed method outperforms the quantile model in terms of coefficient estimation ability and excluding inactive variables. In addition, because the double penalized expectile regression select variable for both fixed and mixed effects, it is almost undisturbed to random effects in terms of variable selection accuracy. When comparing the dense and sparse models, it is found that the sparser the model and the more complex the error term distribution, the advantage of the new method is more obvious, indicating that it has strong robustness. When the dimension of covariates is larger than the sample size, the method has obvious advantages in the estimation precision of coefficients and excluding inactive variables. Finally, when analyzing the real data, it is found that the new method has strong practical utility for the longitudinal data of CD4 cells, which can exclude its inactive variables and analyze the influence trend of various factors, therefore obtain accurate medical judgment.

Author Contributions

Conceptualization, J.C. and S.G.; methodology, S.G.; software, S.G.; validation, Z.Y., J.L. and Y.H.; formal analysis, S.G.; investigation, S.G.; resources, Z.Y.; data curation, J.L.; writing—original draft preparation, S.G.; writing—review and editing, S.G.; visualization, Z.Y.; supervision, J.C.; project administration, Y.H.; funding acquisition, J.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China under Grant 81671633 to J. Chen.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data in this paper have been presented in the manuscript.

Acknowledgments

Many thanks to reviewers for their positive feedback, valuable comments and constructive suggestions that helped improve the quality of this article. Many thanks to editors’ great help and coordination for the publish of this paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Proof of Lemma 1.

First, prove the existence of the conclusion.

We give and select randomly. Define

The continuity of shows that is a closed area. For a given , then

is contained in the spherical region of , so is bounded. Therefor there exists in such that reaches the minimum.

Then prove the uniqueness of the conclusion. It can be easily obtained from the strictly convex function of of . Similar to this, there is a specific such that reaches the minimum value for a given and . □

Proof of Lemma 2.

We can know that is a composite mapping that only depends on from the process of iterative algorithm, so all that must be demonstrated is that both and are continuous mappings. In addition, since given to solve , it is symmetrical to make reach the minimum with given to solve to make reach the minimum. Just has to be demonstrated that mapping continuous.

For simplicity, here we omit the superscript. Next define the function . is a convex continuous function because the function is convex.

Next, we prove that is true for every sequence . Since is a continuous function and for , when we have , that is, . And define

Since is a bounded closed set with sequence for a given ,and , there is a convergent subsequence whose limit value is . From the continuity of and , we can get

Lemma 1 demonstrates that the point at which reaches the minimal value for a given is unique, and from the equation we may get

Thus, we established that . The mapping is continuous for all sequences . □

Proof of Lemma 3.

Given and , from the definition in the iterative Lasso expectile algorithm, it is easy to obtain

Since is a strictly convex function, unless , at least one greater-than symbol must be included in the two formulas above. As a result, the sequence is strictly decreasing. And since , its limit is real, indicated by .

And consider

is a bounded closed region.

Clearly, we can have . Denote by randomly selecting a convergent subsequence .

Because the continuity of , we can obtain

Assuming that , that is, , so we can further obtain to produce

Define . It can be seen from Lemma 2 that is continuous, and because and are continuous, > 0 is present, resulting in

to make it true for every

It can be seen from that when is large enough, we have

Thus, to sum up .

This is obviously in contradiction with

So . We can obtain due to the limitation of any subsequent being the same.

The proof is ended. □

Proof of Theorem 1.

First, similarly [27], we decompose the objective function and equation to prove Theorem 1, we define the objective function as following

Our goal is to approximate by a quadratic function with a unique minimizing value, and use results to show that the asymptotic distribution of that minimizing quadratic function. This quadratic approximation is mainly composed by the Taylor expansion of the expected value and by a linear approximation function.

Let , , , The function is convex, continuously differentiable twice, reaches the minimum value when , and around it is represented as

where . According to

We give the first-order condition

Equation (13) can be simplified as follows

Taylor expansion of Equation (12) around can be regarded as a linear approximation function. Define

According to Equation (15), and . Give a definition

And

According to Koenker [5], the objective function can be reduced to

Let , , Then there is

Therefore, we decompose into four parts

where

For , conditions A2 and A3 mean Lindbergh condition, we have

where .

For , according to hypothesis A2, we have

For ,

For , it can be divided into two parts

by .

where and ;

In hypothesis A2, , is satisfied, we have

And .

According to the results of Equations (A1)–(A13). Although the function is convex and the point at which it reaches the minimum value is unique, we obtain

The proof is ended. □

Appendix B

The solution paths of and of DLQR and DLER under the two criteria.

Figure A1.

The solution paths of and of DLQR under criterion SIC.

Figure A2.

The solution paths of and of DLQR under criterion GACV.

Figure A3.

The solution paths of and of DLER under criterion SIC.

Figure A4.

The solution paths of and of DLER under criterion GACV.

Appendix C

Table A1.

The results 1 of simulation 1 under three signal-to-noise ratio.

Table A1.

The results 1 of simulation 1 under three signal-to-noise ratio.

| Parameters | Method | MSE | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| DLER-SIC | 0.306 | 0.300 | 0.313 | 0.288 | 0.029 | 0.033 | 0.054 | 0.022 | 0.027 | 0.012 | |

| DLER-GACV | 0.486 | 0.303 | 0.411 | 0.383 | 0.027 | 0.035 | 0.056 | 0.024 | 0.035 | 0.025 | |

| DLQR-SIC | 0.280 | 0.261 | 0.297 | 0.250 | 0.060 | 0.046 | 0.091 | 0.015 | 0.024 | 0.020 | |

| DLQR-GACV | 0.289 | 0.266 | 0.299 | 0.254 | 0.063 | 0.049 | 0.088 | 0.037 | 0.039 | 0.031 | |

| DLER-SIC | 0.388 | 0.261 | 0.307 | 0.292 | 0.063 | 0.070 | 0.113 | 0.074 | 0.079 | 0.080 | |

| DLER-GACV | 0.490 | 0.266 | 0.371 | 0.342 | 0.066 | 0.069 | 0.113 | 0.085 | 0.084 | 0.078 | |

| DLQR-SIC | 0.527 | 0.234 | 0.332 | 0.335 | 0.108 | 0.088 | 0.167 | 0.076 | 0.069 | 0.086 | |

| DLQR-GACV | 0.535 | 0.236 | 0.333 | 0.324 | 0.110 | 0.098 | 0.169 | 0.083 | 0.086 | 0.084 | |

| DLER-SIC | 0.585 | 0.337 | 0.324 | 0.321 | 0.117 | 0.129 | 0.178 | 0.138 | 0.107 | 0.113 | |

| DLER-GACV | 0.649 | 0.342 | 0.330 | 0.343 | 0.139 | 0.133 | 0.186 | 0.139 | 0.128 | 0.110 | |

| DLQR-SIC | 0.868 | 0.341 | 0.337 | 0.375 | 0.162 | 0.162 | 0.244 | 0.134 | 0.165 | 0.117 | |

| DLQR-GACV | 0.894 | 0.353 | 0.325 | 0.398 | 0.163 | 0.163 | 0.244 | 0.153 | 0.152 | 0.119 | |

| DLER-SIC | 0.292 | 0.283 | 0.305 | 0.265 | 0.016 | 0.015 | 0.048 | 0.013 | 0.013 | 0 | |

| DLER-GACV | 0.509 | 0.283 | 0.438 | 0.342 | 0.015 | 0.019 | 0.049 | 0.014 | 0 | 0 | |

| DLQR-SIC | 0.207 | 0.242 | 0.262 | 0.224 | 0.026 | 0.022 | 0.083 | 0.015 | 0.015 | 0.017 | |

| DLQR-GACV | 0.219 | 0.240 | 0.256 | 0.231 | 0.032 | 0.038 | 0.093 | 0.029 | 0.026 | 0.032 | |

| DLER-SIC | 0.403 | 0.278 | 0.284 | 0.335 | 0.072 | 0.075 | 0.128 | 0.067 | 0.081 | 0.071 | |

| DLER-GACV | 0.472 | 0.276 | 0.320 | 0.359 | 0.076 | 0.074 | 0.133 | 0.065 | 0.069 | 0.063 | |

| DLQR-SIC | 0.424 | 0.273 | 0.262 | 0.325 | 0.088 | 0.095 | 0.160 | 0.079 | 0.058 | 0.073 | |

| DLQR-GACV | 0.464 | 0.272 | 0.269 | 0.324 | 0.095 | 0.097 | 0.167 | 0.086 | 0.075 | 0.091 | |

| DLER-SIC | 0.558 | 0.333 | 0.313 | 0.326 | 0.109 | 0.095 | 0.171 | 0.106 | 0.121 | 0.111 | |

| DLER-GACV | 0.634 | 0.334 | 0.323 | 0.338 | 0.129 | 0.125 | 0.179 | 0.134 | 0.126 | 0.115 | |

| DLQR-SIC | 0.673 | 0.317 | 0.300 | 0.346 | 0.119 | 0.135 | 0.216 | 0.122 | 0.106 | 0.114 | |

| DLQR-GACV | 0.720 | 0.320 | 0.311 | 0.361 | 0.118 | 0.129 | 0.218 | 0.133 | 0.121 | 0.121 | |

| DLER-SIC | 0.374 | 0.272 | 0.322 | 0.316 | 0.021 | 0.027 | 0.056 | 0.012 | 0.017 | 0 | |

| DLER-GACV | 0.456 | 0.275 | 0.389 | 0.369 | 0.025 | 0.020 | 0.058 | 0 | 0.021 | 0.007 | |

| DLQR-SIC | 0.307 | 0.275 | 0.320 | 0.255 | 0.054 | 0.027 | 0.105 | 0.024 | 0.023 | 0.028 | |

| DLQR-GACV | 0.321 | 0.275 | 0.318 | 0.260 | 0.070 | 0.039 | 0.097 | 0.031 | 0.039 | 0.041 | |

| DLER-SIC | 0.454 | 0.263 | 0.321 | 0.302 | 0.071 | 0.087 | 0.129 | 0.098 | 0.083 | 0.056 | |

| DLER-GACV | 0.538 | 0.262 | 0.400 | 0.359 | 0.072 | 0.086 | 0.124 | 0.106 | 0.070 | 0.064 | |

| DLQR-SIC | 0.547 | 0.251 | 0.319 | 0.309 | 0.097 | 0.117 | 0.187 | 0.093 | 0.060 | 0.070 | |

| DLQR-GACV | 0.570 | 0.255 | 0.317 | 0.317 | 0.103 | 0.117 | 0.198 | 0.099 | 0.081 | 0.078 | |

| DLER-SIC | 0.577 | 0.312 | 0.330 | 0.318 | 0.116 | 0.145 | 0.183 | 0.111 | 0.119 | 0.125 | |

| DLER-GACV | 0.617 | 0.309 | 0.347 | 0.336 | 0.130 | 0.146 | 0.187 | 0.116 | 0.123 | 0.115 | |

| DLQR-SIC | 0.701 | 0.283 | 0.336 | 0.322 | 0.119 | 0.137 | 0.246 | 0.131 | 0.129 | 0.129 | |

| DLQR-GACV | 0.770 | 0.304 | 0.329 | 0.348 | 0.121 | 0.153 | 0.247 | 0.154 | 0.150 | 0.157 |

Table A2.

The results 2 of simulation 1 under three signal-to-noise ratio.

Table A2.

The results 2 of simulation 1 under three signal-to-noise ratio.

| Parameters | Method | Corr (SD) | Incorr (SD) | Int | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| DLER-SIC | 3 (0) | 1.04 (0.665) | 14 | 100 | 100 | 95 | 95 | 100 | 97 | 96 | 99 | |

| DLER-GACV | 2.99 (0.1) | 1.11 (0.751) | 14 | 100 | 99 | 96 | 94 | 100 | 95 | 94 | 96 | |

| DLQR-SIC | 3 (0) | 1.29 (0.518) | 0 | 100 | 100 | 87 | 91 | 100 | 99 | 96 | 98 | |

| DLQR-GACV | 3 (0) | 1.5 (0.628) | 0 | 100 | 100 | 84 | 91 | 100 | 92 | 90 | 93 | |

| DLER-SIC | 3 (0) | 1.6 (0.865) | 1 | 100 | 100 | 90 | 88 | 100 | 88 | 88 | 85 | |

| DLER-GACV | 2.99 (0.1) | 1.81 (0.982) | 1 | 100 | 99 | 87 | 84 | 100 | 82 | 82 | 83 | |

| DLQR-SIC | 3 (0) | 1.99 (0.959) | 0 | 100 | 100 | 73 | 79 | 100 | 82 | 87 | 80 | |

| DLQR-GACV | 3 (0) | 2.04 (1.063) | 0 | 100 | 100 | 75 | 76 | 100 | 83 | 81 | 81 | |

| DLER-SIC | 3 (0) | 1.87 (1.116) | 0 | 100 | 100 | 85 | 83 | 100 | 83 | 79 | 83 | |

| DLER-GACV | 3 (0) | 2.24 (1.311) | 0 | 100 | 100 | 73 | 76 | 100 | 78 | 72 | 77 | |

| DLQR-SIC | 3 (0) | 2.56 (1.351) | 0 | 100 | 100 | 67 | 70 | 100 | 69 | 65 | 73 | |

| DLQR-GACV | 3 (0) | 2.41 (1.280) | 0 | 100 | 100 | 64 | 70 | 100 | 72 | 74 | 79 | |

| DLER-SIC | 3 (0) | 0.75 (0.520) | 29 | 100 | 100 | 99 | 99 | 100 | 99 | 99 | 100 | |

| DLER-GACV | 2.98 (0.2) | 0.74 (0.525) | 30 | 99 | 99 | 99 | 98 | 100 | 99 | 100 | 100 | |

| DLQR-SIC | 3 (0) | 0.78 (0.645) | 35 | 100 | 100 | 96 | 96 | 100 | 98 | 99 | 98 | |

| DLQR-GACV | 3 (0) | 0.95 (0.770) | 36 | 100 | 100 | 95 | 91 | 100 | 94 | 96 | 93 | |

| DLER-SIC | 2.99 (0.1) | 1.37 (0.960) | 29 | 100 | 99 | 89 | 87 | 100 | 86 | 85 | 87 | |

| DLER-GACV | 2.99 (0.1) | 1.38 (1.080) | 27 | 100 | 99 | 85 | 86 | 100 | 89 | 86 | 89 | |

| DLQR-SIC | 2.99(0.1) | 1.66 (1.075) | 27 | 100 | 99 | 78 | 78 | 100 | 83 | 88 | 80 | |

| DLQR-GACV | 2.99 (0.1) | 1.76 (1.264) | 31 | 100 | 99 | 76 | 78 | 100 | 79 | 81 | 79 | |

| DLER-SIC | 3 (0) | 1.7 (1.124) | 25 | 100 | 100 | 82 | 79 | 100 | 80 | 81 | 83 | |

| DLER-GACV | 3 (0) | 1.98 (1.303) | 28 | 100 | 100 | 74 | 71 | 100 | 73 | 78 | 78 | |

| DLQR-SIC | 3 (0) | 2.38 (1.153) | 22 | 100 | 100 | 66 | 69 | 100 | 62 | 72 | 71 | |

| DLQR-GACV | 3 (0) | 2.22 (1.211) | 20 | 100 | 100 | 70 | 69 | 100 | 74 | 70 | 75 | |

| DLER-SIC | 2.99 (0.1) | 0.94 (0.528) | 15 | 100 | 99 | 98 | 96 | 100 | 99 | 98 | 100 | |

| DLER-GACV | 2.99 (0.1) | 0.96 (0.470) | 14 | 100 | 99 | 96 | 98 | 100 | 100 | 97 | 99 | |

| DLQR-SIC | 3 (0) | 1.29 (0.478) | 0 | 100 | 100 | 87 | 96 | 100 | 96 | 98 | 94 | |

| DLQR-GACV | 3 (0) | 1.47 (0.717) | 0 | 100 | 100 | 80 | 94 | 100 | 94 | 93 | 92 | |

| DLER-SIC | 3 (0) | 1.72 (0.877) | 0 | 100 | 100 | 88 | 84 | 100 | 82 | 86 | 88 | |

| DLER-GACV | 2.99 (0.1) | 1.84 (1.042) | 1 | 100 | 99 | 83 | 85 | 100 | 75 | 88 | 84 | |

| DLQR-SIC | 3 (0) | 2.04 (0.974) | 0 | 100 | 100 | 78 | 72 | 100 | 71 | 92 | 83 | |

| DLQR-GACV | 3 (0) | 2.12 (1.113) | 0 | 100 | 100 | 74 | 75 | 100 | 75 | 85 | 79 | |

| DLER-SIC | 3 (0) | 1.93 (1.066) | 0 | 100 | 100 | 87 | 80 | 100 | 83 | 82 | 75 | |

| DLER-GACV | 3 (0) | 2.16 (1.245) | 0 | 100 | 100 | 78 | 77 | 100 | 78 | 76 | 75 | |

| DLQR-SIC | 3 (0) | 2.44 (1.192) | 0 | 100 | 100 | 72 | 69 | 100 | 70 | 72 | 73 | |

| DLQR-GACV | 3 (0) | 2.62 (1.237) | 0 | 100 | 100 | 67 | 70 | 100 | 69 | 68 | 64 |

Table A3.

The results 1 of simulation 2 under different influence of random effects.

Table A3.

The results 1 of simulation 2 under different influence of random effects.

| Method | MSE | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| DLER-SIC | 0.336 | 0.268 | 0.314 | 0.281 | 0.016 | 0.012 | 0.054 | 0.022 | 0.010 | 0.026 | |

| DLER-GACV | 0.490 | 0.266 | 0.409 | 0.321 | 0.019 | 0.017 | 0.057 | 0.021 | 0.016 | 0.019 | |

| DLQR-SIC | 0.286 | 0.229 | 0.294 | 0.279 | 0.051 | 0.037 | 0.079 | 0.030 | 0.021 | 0.018 | |

| DLQR-GACV | 0.294 | 0.233 | 0.298 | 0.276 | 0.056 | 0.043 | 0.085 | 0.038 | 0.034 | 0.031 | |

| DLER-SIC | 1.318 | 0.539 | 0.559 | 0.568 | 0.013 | 0 | 0.049 | 0.021 | 0.014 | 0.012 | |

| DLER-GACV | 2.231 | 0.538 | 0.789 | 0.574 | 0.020 | 0 | 0.051 | 0.020 | 0.013 | 0.011 | |

| DLQR-SIC | 0.898 | 0.520 | 0.534 | 0.508 | 0.045 | 0.478 | 0.099 | 0.022 | 0.026 | 0 | |

| DLQR-GACV | 0.916 | 0.517 | 0.528 | 0.516 | 0.052 | 0.049 | 0.107 | 0.041 | 0.037 | 0.018 | |

| DLER-SIC | 2.577 | 0.876 | 0.906 | 0.726 | 0.022 | 0.024 | 0.049 | 0 | 0.017 | 0.022 | |

| DLER-GACV | 3.946 | 0.879 | 1.065 | 0.733 | 0.015 | 0.018 | 0.052 | 0.014 | 0.013 | 0.012 | |

| DLQR-SIC | 1.869 | 0.732 | 0.793 | 0.746 | 0.040 | 0.092 | 0.108 | 0.027 | 0.011 | 0.010 | |

| DLQR-GACV | 1.878 | 0.727 | 0.803 | 0.760 | 0.050 | 0.099 | 0.076 | 0.027 | 0.019 | 0.020 |

Table A4.

The results 2 of simulation 2 with different influence of random effects.

Table A4.

The results 2 of simulation 2 with different influence of random effects.

| Method | Corr (SD) | Incorr (SD) | Int | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| DLER-SIC | 3 (0) | 0.8 (0.651) | 29 | 100 | 100 | 98 | 99 | 100 | 98 | 99 | 97 | |

| DLER-GACV | 3 (0) | 0.81 (0.598) | 29 | 100 | 100 | 98 | 98 | 100 | 98 | 98 | 98 | |

| DLQR-SIC | 3 (0) | 1.03 (0.643) | 30 | 100 | 100 | 85 | 93 | 100 | 94 | 97 | 98 | |

| DLQR-GACV | 3 (0) | 1.17 (0.877) | 28 | 100 | 100 | 85 | 90 | 100 | 91 | 94 | 95 | |

| DLER-SIC | 2.91 (0.288) | 0.98 (0.376) | 9 | 100 | 91 | 99 | 100 | 100 | 97 | 98 | 99 | |

| DLER-GACV | 2.78 (0.462) | 0.99 (0.362) | 8 | 98 | 80 | 98 | 100 | 100 | 98 | 98 | 99 | |

| DLQR-SIC | 3 (0) | 1.12 (0.591) | 15 | 15 | 100 | 90 | 90 | 100 | 98 | 96 | 100 | |

| DLQR-GACV | 3 (0) | 1.24 (0.754) | 17 | 17 | 100 | 87 | 90 | 100 | 91 | 93 | 98 | |

| DLER-SIC | 2.75 (0.435) | 1.04 (0.470) | 6 | 99 | 76 | 98 | 98 | 100 | 100 | 98 | 96 | |

| DLER-GACV | 2.46 (0.673) | 1.01 (0.460) | 7 | 90 | 56 | 99 | 98 | 100 | 99 | 98 | 98 | |

| DLQR-SIC | 2.97 (0.171) | 1.11 (0.601) | 15 | 100 | 100 | 93 | 87 | 100 | 96 | 99 | 99 | |

| DLQR-GACV | 2.97 (0.171) | 1.24 (0.605) | 4 | 100 | 100 | 87 | 84 | 100 | 96 | 98 | 97 |

Table A5.

The results 1 of simulation 3 with dense model under different error distributions.

Table A5.

The results 1 of simulation 3 with dense model under different error distributions.

| Model | Method | MSE | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Dense | |||||||||||

| DLER-SIC | 0.908 | 0.522 | 0.544 | 0.527 | 0.058 | 0.061 | 0.066 | 0.068 | 0.058 | 0.053 | |

| DLER-GACV | 0.922 | 0.522 | 0.558 | 0.550 | 0.058 | 0.062 | 0.066 | 0.068 | 0.059 | 0.053 | |

| DLQR-SIC | 0.853 | 0.468 | 0.485 | 0.527 | 0.079 | 0.079 | 0.081 | 0.093 | 0.076 | 0.070 | |

| DLQR-GACV | 0.882 | 0.463 | 0.476 | 0.536 | 0.088 | 0.086 | 0.087 | 0.102 | 0.084 | 0.073 | |

| DLER-SIC | 0.965 | 0.556 | 0.570 | 0.534 | 0.140 | 0.097 | 0.104 | 0.113 | 0.108 | 0.113 | |

| DLER-GACV | 1.114 | 0.551 | 0.560 | 0.590 | 0.187 | 0.114 | 0.125 | 0.126 | 0.131 | 0.163 | |

| DLQR-SIC | 0.871 | 0.518 | 0.525 | 0.467 | 0.113 | 0.099 | 0.101 | 0.118 | 0.108 | 0.094 | |

| DLQR-GACV | 0.872 | 0.520 | 0.520 | 0.484 | 0.115 | 0.095 | 0.088 | 0.113 | 0.103 | 0.095 | |

| DLER-SIC | 4.397 | 0.391 | 0.404 | 0.678 | 0.105 | 0.272 | 0.391 | 0.370 | 0.753 | 0.377 | |

| DLER-GACV | 4.828 | 0.535 | 0.405 | 0.795 | 0.306 | 0.310 | 0.397 | 0.370 | 0.725 | 0.424 | |

| DLQR-SIC | 2.450 | 0.249 | 0.350 | 0.605 | 0.039 | 0.089 | 0.031 | 0.072 | 0.092 | 0.127 | |

| DLQR-GACV | 2.157 | 0.237 | 0.361 | 0.555 | 0.008 | 0.0536 | 0.041 | 0.020 | 0.094 | 0.129 |

Table A6.

The results 2 of simulation 3 with dense model under different error distributions.

Table A6.

The results 2 of simulation 3 with dense model under different error distributions.

| Model | Method | Corr (SD) | Incorr (SD) | Int | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dense | ||||||||||||

| DLER-SIC | 7.99 (0.33) | 0.74 (0.44) | 26 | 94 | 96 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLER-GACV | 7.7 (0.63) | 0.74 (0.44) | 26 | 84 | 86 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLQR-SIC | 7.9 (0.33) | 0.78 (0.42) | 22 | 94 | 96 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLQR-GACV | 7.91 (0.32) | 0.81 (0.39) | 19 | 96 | 95 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLER-SIC | 7.72 (0.62) | 0.84 (0.37) | 16 | 83 | 90 | 99 | 100 | 100 | 100 | 100 | 100 | |

| DLER-GACV | 7.37 (0.92) | 0.83 (0.38) | 17 | 66 | 73 | 99 | 100 | 100 | 100 | 100 | 99 | |

| DLQR-SIC | 7.88 (0.33) | 0.84 (0.37) | 16 | 93 | 95 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLQR-GACV | 7.91 (0.29) | 0.84 (0.37) | 16 | 95 | 96 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLER-SIC | 7.6 (0.80) | 0.8 (0.40) | 20 | 100 | 100 | 100 | 100 | 80 | 80 | 100 | 100 | |

| DLER-GACV | 7.68 (0.47) | 1 (0) | 0 | 100 | 100 | 100 | 100 | 88 | 80 | 100 | 100 | |

| DLQR-SIC | 8 (0) | 0.8 (0.40) | 20 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | |

| DLQR-GACV | 8 (0) | 0.8 (0.40) | 20 | 100 | 100 | 100 | 100 | 100 | 100 | 100 | 100 |

Table A7.

The results 1 of simulation 3 with the sparse model under different error distributions.

Table A7.

The results 1 of simulation 3 with the sparse model under different error distributions.

| Model | Method | MSE | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Sparse | |||||||||||

| DLER-SIC | 1.345 | 0.608 | 0.622 | 0.550 | 0.011 | 0 | 0.051 | 0.015 | 0.015 | 0.023 | |

| DLER-GACV | 2.084 | 0.608 | 0.849 | 0.606 | 0.011 | 0 | 0.052 | 0.019 | 0.014 | 0.018 | |

| DLQR-SIC | 0.978 | 0.508 | 0.527 | 0.544 | 0.030 | 0.033 | 0.123 | 0.030 | 0.020 | 0.013 | |

| DLQR-GACV | 0.947 | 0.516 | 0.527 | 0.529 | 0.036 | 0.037 | 0.106 | 0.037 | 0.028 | 0.027 | |

| DLER-SIC | 1.230 | 0.676 | 0.533 | 0.605 | 0.055 | 0.039 | 0.104 | 0.075 | 0.049 | 0.064 | |

| DLER-GACV | 2.737 | 0.670 | 1.083 | 0.742 | 0.070 | 0.053 | 0.204 | 0.082 | 0.057 | 0.075 | |

| DLQR-SIC | 1.008 | 0.522 | 0.498 | 0.530 | 0.065 | 0.049 | 0.102 | 0.041 | 0.047 | 0.048 | |

| DLQR-GACV | 1.000 | 0.529 | 0.492 | 0.531 | 0.066 | 0.055 | 0.106 | 0.046 | 0.047 | 0.049 | |

| DLER-SIC | 5.756 | 0.620 | 0.732 | 0.404 | 0.121 | 0 | 0.399 | 0.587 | 0.238 | 0.385 | |

| DLER-GACV | 6.791 | 0.708 | 0.753 | 0.307 | 0.384 | 0.073 | 0.542 | 0.682 | 0.521 | 0.460 | |

| DLQR-SIC | 2.099 | 0.593 | 0.411 | 0.310 | 0.105 | 0.193 | 0.152 | 0.119 | 0.046 | 0.062 | |

| DLQR-GACV | 1.758 | 0.638 | 0.385 | 0.342 | 0 | 0.173 | 0.113 | 0.095 | 0.049 | 0.034 |

Table A8.

The results 2 of simulation 3 with the sparse model under different error distributions.

Table A8.

The results 2 of simulation 3 with the sparse model under different error distributions.

| Model | Method | Corr (SD) | Incorr (SD) | Int | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Sparse | ||||||||||||

| DLER-SIC | 2.91 (0.288) | 0.98 (0.40) | 8 | 100 | 91 | 99 | 100 | 100 | 99 | 98 | 98 | |

| DLER-GACV | 2.79 (0.478) | 0.98 (0.40) | 8 | 97 | 82 | 99 | 100 | 100 | 97 | 99 | 99 | |

| DLQR-SIC | 3 (0) | 1.09 (0.62) | 14 | 100 | 100 | 94 | 92 | 100 | 95 | 97 | 99 | |

| DLQR-GACV | 3 (0) | 1.17 (0.63) | 14 | 100 | 100 | 94 | 92 | 100 | 92 | 95 | 96 | |

| DLER-SIC | 2.94 (0.24) | 1.38 (0.84) | 8 | 100 | 94 | 91 | 96 | 100 | 87 | 93 | 87 | |

| DLER-GACV | 2.59 (0.71) | 1.55 (1.06) | 12 | 87 | 72 | 84 | 91 | 100 | 83 | 90 | 85 | |

| DLQR-SIC | 2.97 (0.17) | 1.27 (0.79) | 15 | 100 | 97 | 88 | 91 | 100 | 92 | 94 | 93 | |

| DLQR-GACV | 2.97 (0.17) | 1.38 (0.85) | 16 | 100 | 97 | 86 | 86 | 100 | 90 | 93 | 91 | |

| DLER-SIC | 2.84 (0.37) | 2.02 (0.62) | 0 | 100 | 84 | 79 | 100 | 100 | 80 | 59 | 80 | |

| DLER-GACV | 3 (0) | 4.62 (0.83) | 7 | 100 | 100 | 2 | 78 | 100 | 18 | 15 | 18 | |

| DLQR-SIC | 3 (0) | 2.66 (1.11) | 0 | 100 | 100 | 79 | 36 | 100 | 71 | 71 | 77 | |

| DLQR-GACV | 3 (0) | 2.09 (0.93) | 0 | 100 | 100 | 100 | 43 | 100 | 71 | 84 | 93 |

Table A9.

The results 1 of simulation 3 with the high sparse model under different error distributions.

Table A9.

The results 1 of simulation 3 with the high sparse model under different error distributions.

| Model | Method | MSE | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| High Sparse | |||||||||||

| DLER-SIC | 1.017 | 0.611 | 0.593 | 0.267 | 0.020 | 0.021 | 0.025 | 0.039 | 0.035 | 0.017 | |

| DLER-GACV | 3.096 | 0.613 | 0.981 | 0.253 | 0.017 | 0.022 | 0.025 | 0.033 | 0.016 | 0 | |

| DLQR-SIC | 0.773 | 0.523 | 0.493 | 0.475 | 0.038 | 0.029 | 0.030 | 0.020 | 0.018 | 0.035 | |

| DLQR-GACV | 0.81 | 0.528 | 0.509 | 0.477 | 0.048 | 0.040 | 0.036 | 0.039 | 0.041 | 0.036 | |

| DLER-SIC | 1.264 | 0.528 | 0.73 | 0.282 | 0.055 | 0 | 0.024 | 0.042 | 0.083 | 0.091 | |

| DLER-GACV | 2.478 | 0.519 | 1.019 | 0.307 | 0.069 | 0.039 | 0.03 | 0.039 | 0.092 | 0.087 | |

| DLQR-SIC | 0.792 | 0.474 | 0.576 | 0.394 | 0.082 | 0.072 | 0.051 | 0.061 | 0.036 | 0.039 | |

| DLQR-GACV | 0.777 | 0.483 | 0.575 | 0.374 | 0.056 | 0.054 | 0.047 | 0.038 | 0.029 | 0.035 | |

| DLER-SIC | 1.925 | 1.147 | 0.162 | 0.741 | 0 | 0.513 | 0 | 0 | 0 | 0.371 | |

| DLER-GACV | 14.720 | 1.200 | 0.298 | 1.289 | 0.932 | 1.201 | 0.602 | 0.513 | 0.227 | 0.334 | |

| DLQR-SIC | 0.798 | 0.650 | 0.256 | 0.484 | 0.117 | 0.095 | 0.082 | 0.029 | 0.124 | 0.121 | |

| DLQR-GACV | 0.822 | 0.567 | 0.238 | 0.415 | 0.116 | 0.088 | 0.060 | 0.0315 | 0.109 | 0.109 |

Table A10.

The results 2 of simulation 3 with the high sparse model under different error distributions.

Table A10.

The results 2 of simulation 3 with the high sparse model under different error distributions.

| Model | Method | Corr (SD) | Incorr (SD) | Int | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| High Sparse | ||||||||||||

| DLER-SIC | 1 (0) | 1.43 (0.78) | 4 | 100 | 75 | 98 | 98 | 98 | 92 | 95 | 97 | |

| DLER-GACV | 1 (0) | 1.27 (0.72) | 4 | 100 | 83 | 98 | 98 | 98 | 94 | 98 | 100 | |

| DLQR-SIC | 1 (0) | 1.59 (0.78) | 29 | 100 | 35 | 93 | 97 | 95 | 99 | 98 | 95 | |

| DLQR-GACV | 1 (0) | 1.71 (0.97) | 31 | 100 | 39 | 92 | 92 | 94 | 95 | 92 | 94 | |

| DLER-SIC | 1 (0) | 1.52 (0.92) | 2 | 100 | 75 | 91 | 100 | 98 | 94 | 93 | 95 | |

| DLER-GACV | 0.99 (0.1) | 1.7 (1.11) | 2 | 99 | 70 | 87 | 94 | 96 | 96 | 94 | 91 | |

| DLQR-SIC | 1 (0) | 2.25 (1.22) | 14 | 100 | 34 | 78 | 84 | 89 | 90 | 94 | 92 | |

| DLQR-GACV | 1 (0) | 1.94 (1.08) | 11 | 100 | 45 | 85 | 90 | 90 | 95 | 96 | 94 | |

| DLER-SIC | 1 (0) | 1.71 (0.76) | 0 | 100 | 82 | 100 | 53 | 100 | 100 | 100 | 94 | |

| DLER-GACV | 1 (0) | 6.53 (2.35) | 0 | 100 | 11 | 12 | 11 | 29 | 30 | 30 | 24 | |

| DLQR-SIC | 1 (0) | 3.93 (1.28) | 6 | 100 | 6 | 40 | 68 | 54 | 99 | 67 | 67 | |

| DLQR-GACV | 1 (0) | 3.06 (1.54) | 6 | 100 | 48 | 39 | 68 | 96 | 99 | 67 | 71 |

Table A11.

The results 1 of simulation 4 with the high dimensional model.

Table A11.

The results 1 of simulation 4 with the high dimensional model.

| Model | Method | MSE | ||||

|---|---|---|---|---|---|---|

| DLER-SIC | 4.783 | 0.010 | 0.513 | 0.360 | 0.657 | |

| DLER-GACV | 5.414 | 0.007 | 0.914 | 0.482 | 0.706 | |

| DLQR-SIC | 39.235 | 0.406 | 0.585 | 0.606 | 0.443 | |

| DLQR-GACV | 6.902 | 0.003 | 0.876 | 0.463 | 0.789 | |

| DLER-SIC | 5.870 | 0.165 | 0.671 | 0.383 | 0.882 | |

| DLER-GACV | 4.483 | 0.010 | 0.759 | 0.467 | 0.719 | |

| DLQR-SIC | 40.62433 | 0.413 | 0.593 | 0.625 | 0.507 | |

| DLQR-GACV | 7.799 | 0.009 | 0.916 | 0.514 | 0.723 |

Table A12.

The results 2 of simulation 4 with the high dimensional model.

Table A12.

The results 2 of simulation 4 with the high dimensional model.

| Model | Method | Corr (SD) | Incorr (SD) | ||||

|---|---|---|---|---|---|---|---|

| DLER-SIC | 2.34 (0.590) | 5.31 (2.237) | 95.953 | 100 | 44 | 90 | |

| DLER-GACV | 2.28 (0.805) | 3.46 (1.904) | 97.682 | 88 | 50 | 90 | |

| DLQR-SIC | 3 (0) | 94.3 (3.033) | 12.794 | 100 | 100 | 100 | |

| DLQR-GACV | 2.8 (0.426) | 68.44 (33.202) | 36.972 | 98 | 91 | 91 | |

| DLER-SIC | 2.27 (0.737) | 5.54 (7.885) | 95.738 | 96 | 53 | 78 | |

| DLER-GACV | 2.58 (0.684) | 5.32 (3.490) | 95.963 | 96 | 72 | 90 | |

| DLQR-SIC | 2.99 (0.1) | 94.46 (3.963) | 12.654 | 100 | 100 | 99 | |

| DLQR-GACV | 2.68 (0.490) | 42.06 (41.702) | 61.617 | 98 | 82 | 88 |

Table A13.

CD4 cell data: the estimation of DLER-SIC, DLER-GACV, DLQR-SIC, DLQR-GACV.

Table A13.

CD4 cell data: the estimation of DLER-SIC, DLER-GACV, DLQR-SIC, DLQR-GACV.

| Int | Time | Smoking | Age | Sex Partner | |

|---|---|---|---|---|---|

| DLER-SIC | −0.255 | −0.271 | 0.080 | 0 | 0 |

| DLER-GACV | −0.190 | −0.266 | 0.058 | 0 | 0 |

| DLQR-SIC | −0.851 | −0.314 | 0.117 | 0 | 0 |

| DLQR-GACV | −0.852 | −0.313 | 0.119 | 0 | 0 |

| DLER-SIC | −0.047 | −0.243 | 0.080 | 0 | 0 |

| DLER-GACV | 0 | −0.234 | 0.051 | 0 | 0 |

| DLQR-SIC | −0.422 | −0.258 | 0.117 | 0 | 0 |

| DLQR-GACV | −0.420 | −0.259 | 0.123 | 0 | 0 |

| DLER-SIC | 0.129 | −0.233 | 0.090 | 0 | 0 |

| DLER-GACV | 0.112 | −0.241 | 0.101 | 0 | 0 |

| DLQR-SIC | 0.126 | −0.208 | 0.113 | 0 | 0 |

| DLQR-GACV | 0.126 | −0.207 | 0.105 | 0 | 0 |

| DLER-SIC | 0.345 | −0.220 | 0.074 | 0 | 0 |

| DLER-GACV | 0.329 | −0.230 | 0.098 | 0 | 0 |

| DLQR-SIC | 0.557 | −0.167 | 0.104 | 0 | 0 |

| DLQR-GACV | 0.559 | −0.166 | 0.129 | 0 | 0 |

| DLER-SIC | 0.492 | −0.217 | 0.078 | 0 | 0 |

| DLER-GACV | 0.492 | −0.228 | 0.093 | 0 | 0 |

| DLQR-SIC | 0.959 | −0.141 | 0.154 | 0 | 0 |

| DLQR-GACV | 0.949 | −0.14 | 0.153 | 0 | 0 |

References

- Koenker, R.; Bassett, G., Jr. Regression quantiles. Econom. J. Econom. Soc. 1978, 46, 33–50. [Google Scholar] [CrossRef]

- Tibshirani, R. Regression shrinkage and selection via the lasso. J. R. Stat. Soc. Ser. B (Methodol.) 1996, 58, 267–288. [Google Scholar] [CrossRef]

- Zou, H. The adaptive lasso and its oracle properties. J. Am. Stat. Assoc. 2006, 101, 1418–1429. [Google Scholar] [CrossRef]

- Biswas, J.; Das, K. A Bayesian quantile regression approach to multivariate semi-continuous longitudinal data. Comput. Stat. 2021, 36, 241–260. [Google Scholar] [CrossRef]

- Koenker, R. Quantile regression for longitudinal data. J. Multivar. Anal. 2004, 91, 74–89. [Google Scholar] [CrossRef]

- Wang, H.J.; Feng, X.; Dong, C. Copula-based quantile regression for longitudinal data. Stat. Sin. 2019, 29, 245–264. [Google Scholar] [CrossRef]

- Wu, Y.; Liu, Y. Variable selection in quantile regression. Stat. Sin. 2009, 19, 801–817. [Google Scholar]

- Peng, L. Quantile regression for survival data. Annu. Rev. Stat. Its Appl. 2021, 8, 413. [Google Scholar] [CrossRef]

- Li, H.; Liu, Y.; Luo, Y. Double Penalized Quantile Regression for the Linear Mixed Effects Model. J. Syst. Sci. Complex. 2020, 33, 2080–2102. [Google Scholar] [CrossRef]

- Newey, W.K.; Powell, J.L. Asymmetric least squares estimation and testing. Econom. J. Econom. Soc. 1987, 55, 819–847. [Google Scholar] [CrossRef]

- Almanjahie, I.M.; Bouzebda, S.; Kaid, Z.; Laksaci, A. Nonparametric estimation of expectile regression in functional dependent data. J. Nonparametr. Stat. 2022, 34, 250–281. [Google Scholar] [CrossRef]

- Gu, Y.; Zou, H. High-dimensional generalizations of asymmetric least squares regression and their applications. Ann. Stat. 2016, 44, 2661–2694. [Google Scholar] [CrossRef]

- Farooq, M.; Steinwart, I. Learning rates for kernel-based expectile regression. Mach. Learn. 2019, 108, 203–227. [Google Scholar] [CrossRef]

- Schulze Waltrup, L.; Kauermann, G. Smooth expectiles for panel data using penalized splines. Stat. Comput. 2017, 27, 271–282. [Google Scholar] [CrossRef]

- Sobotka, F.; Kauermann, G.; Schulze Waltrup, L.; Kneib, T. On confidence intervals for semiparametric expectile regression. Stat. Comput. 2013, 23, 135–148. [Google Scholar] [CrossRef]

- Zhao, J.; Zhang, Y. Variable selection in expectile regression. Commun. Stat.-Theory Methods 2018, 47, 1731–1746. [Google Scholar] [CrossRef]

- Liao, L.; Park, C.; Choi, H. Penalized expectile regression: An alternative to penalized quantile regression. Ann. Inst. Stat. Math. 2019, 71, 409–438. [Google Scholar] [CrossRef]

- Waldmann, E.; Sobotka, F.; Kneib, T. Bayesian regularisation in geoadditive expectile regression. Stat. Comput. 2017, 27, 1539–1553. [Google Scholar] [CrossRef]

- Xu, Q.F.; Ding, X.H.; Jiang, C.X.; Yu, K.M.; Shi, L. An elastic-net penalized expectile regression with applications. J. Appl. Stat. 2021, 48, 2205–2230. [Google Scholar] [CrossRef]

- Farooq, M.; Steinwart, I. An SVM-like approach for expectile regression. Comput. Stat. Data Anal. 2017, 109, 159–181. [Google Scholar] [CrossRef]

- Spiegel, E.; Sobotka, F.; Kneib, T. Model selection in semiparametric expectile regression. Electron. J. Stat. 2017, 11, 3008–3038. [Google Scholar] [CrossRef]

- Daouia, A.; Girard, S.; Stupfler, G. Tail expectile process and risk assessment. Bernoulli 2020, 26, 531–556. [Google Scholar] [CrossRef]

- Ziegel, J.F. Coherence and elicitability. Math. Financ. 2016, 26, 901–918. [Google Scholar] [CrossRef]

- Rao, C.R.; Statistiker, M. Linear Statistical Inference and Its Applications; Wiley: New York, NY, USA, 1973. [Google Scholar]

- Harville, D. Extension of the Gauss-Markov theorem to include the estimation of random effects. Ann. Stat. 1976, 4, 384–395. [Google Scholar] [CrossRef]

- Robinson, G.K. That BLUP is a good thing: The estimation of random effects. Stat. Sci. 1991, 6, 15–31. [Google Scholar]

- Van Vleck, L.D.; Henderson, C.R. Estimates of genetic parameters of some functions of part lactation milk records. J. Dairy Sci. 1961, 44, 1073–1084. [Google Scholar] [CrossRef]

- Goldberger, A.S. Best linear unbiased prediction in the generalized linear regression model. J. Am. Stat. Assoc. 1962, 57, 369–375. [Google Scholar] [CrossRef]

- Koenker, R.; Ng, P.; Portnoy, S. Quantile smoothing splines. Biometrika 1994, 81, 673–680. [Google Scholar] [CrossRef]

- Yuan, M. GACV for quantile smoothing splines. Comput. Stat. Data Anal. 2006, 50, 813–829. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).