Abstract

Generalized progressive hybrid censored mechanisms have been proposed to reduce the test duration and to save the cost spent on testing. This paper considers the problem of estimating the unknown model parameters and the reliability time functions of the new inverted Nadarajah–Haghighi (NH) distribution under generalized Type-II progressive hybrid censoring using the maximum likelihood and Bayesian estimation approaches. Utilizing the normal approximation of the frequentist estimators, the corresponding approximate confidence intervals of unknown quantities are also constructed. Using independent gamma conjugate priors under the symmetrical squared error loss, the Bayesian estimators are developed. Since the joint likelihood function is obtained in complex form, the Bayesian estimators and their associated highest posterior density intervals cannot be obtained analytically but can be evaluated via Markov chain Monte Carlo techniques. To select the optimum censoring scheme among different censoring plans, five optimality criteria are used. Finally, to explain how the proposed methodologies can be applied in real situations, two applications representing the failure times of electronic devices and deaths from the coronavirus disease 2019 epidemic in the United States of America are analyzed.

1. Introduction

Today, reliability technology plays a very important role because it measures the ability of a system to successfully perform its intended function under predetermined conditions for a specified period. In this framework, several studies on system reliability have been conducted (among others, see Chen et al. [1], Xu et al. [2], Hu and Chen [3], and Luo et al. [4]). Progressive Type-II censoring (PCS-T2) has been discussed quite extensively in the literature as a highly flexible censoring scheme (for details, see Balakrishnan and Cramer [5]). At time , n independent units are placed in a test in which the number of failures to be observed r and the progressive censoring , where , are determined. At the time of the first failure observed (say ), of the remaining surviving units are randomly selected and removed from the test. Similarly, at the time of the second failure (say ), of are randomly selected and removed from the test, and so on. At the time of the rth failure (say ), all remaining surviving units are withdrawn from the test. However, when the experimental units are highly reliable, PCS-T2 may take a long time to complete, and this is the main drawback of this censoring scheme. To overcome this drawback, Kundu and Joarder [6] proposed the progressive Type-I hybrid censoring scheme (PHCS-T1), which is a mixture of PCS-T2 and classical Type-I censoring.

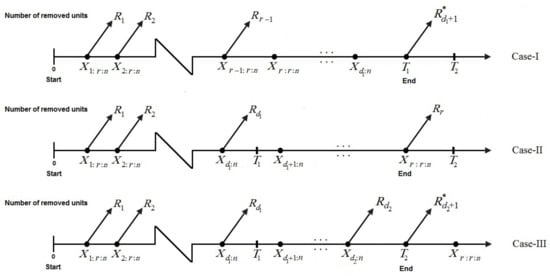

Under PHCS-T1, the experimental time cannot exceed T. However, a disadvantage of PHCS-T1 is that very few failures may occur before time T, and thus the maximum likelihood estimators (MLEs) may not always exist. Therefore, to handle this problem, Childs et al. [7] proposed the progressive Type-II hybrid censoring scheme (PHCS-T2). Under PHCS-T2, the experiment stops at . Although PHCS-T2 guarantees a specified number of failures, it might take a long time to observe r failures. Therefore, Lee et al. [8] introduced the generalized progressive Type-II hybrid censoring scheme (GPHCS-T2). Suppose the integer r and the two thresholds are pre-assigned such that and . Let and denote the number of failures up to times and , respectively. At , of are withdrawn from the test at random. Following , of are withdrawn, and so on. At the termination time , all remaining units are removed, and the experiment is stopped. It is useful to note that GPHCS-T2 modifies PHCS-T2 by guaranteeing that the test is completed at a predetermined time . Therefore, represents the absolute longest time that the researcher is willing to allow the experiment to continue. The schematic diagram in Figure 1 shows that if , then we continue to observe failures, but without any further withdrawals up to time (Case-I) (i.e., ); if , we terminate the test at (Case-II); otherwise, we terminate the test at time (Case-III). Thus, an experimenter will observe one of the following three data forms:

Figure 1.

Diagram of GPHCS-T2.
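The three termination cases can be summarized in a few lines. The sketch below assumes the usual notation for this scheme, which the extracted text no longer shows: `x_r` stands for the time of the rth failure and `t1 < t2` for the two thresholds (all three argument names are hypothetical). Under that reading, the stopping time is simply max(t1, min(x_r, t2)).

```python
def gphcs_t2_termination(x_r, t1, t2):
    """Classify a GPHCS-T2 experiment and return (case, stopping time).

    x_r : time of the r-th failure (hypothetical argument name)
    t1, t2 : pre-assigned thresholds with t1 < t2 (hypothetical names)
    """
    if not t1 < t2:
        raise ValueError("thresholds must satisfy t1 < t2")
    if x_r < t1:
        # Case-I: r failures arrive early, so the test runs on until t1
        return "Case-I", t1
    if x_r < t2:
        # Case-II: the r-th failure falls between the thresholds
        return "Case-II", x_r
    # Case-III: t2 is reached before r failures are observed
    return "Case-III", t2


for x_r in (0.3, 0.6, 1.2):
    print(x_r, gphcs_t2_termination(x_r, t1=0.4, t2=0.8))
```

The thresholds 0.4 and 0.8 are used here only because the simulation study later mentions that pair.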

Assume that denotes the corresponding lifetimes from a distribution with a cumulative distribution function (CDF) and probability density function (PDF) . Thus, the combined likelihood function of GPHCS-T2 can be expressed as

where , refer to Case-I, II, and III, respectively, and is a composite form of the reliability functions. The GPHCS-T2 notations from Equation (1) are listed in Table 1. Additionally, from Equation (1), different censoring plans can be obtained as special cases:

Table 1.

The GPHCS-T2 notations.

- PHCS-T1 by setting ;

- PHCS-T2 by setting ;

- Hybrid Type-I censoring by setting , ;

- Hybrid Type-II censoring by setting , ;

- Type-I censoring by setting , , ;

- Type-II censoring by setting , , .

Various studies based on GPHCS-T2 have also been conducted. For example, Ashour and Elshahhat [9] obtained the maximum likelihood and Bayes estimators of the Weibull parameters. Ateya and Mohammed [10] discussed the prediction problem of future failure times from the Burr-XII distribution. Seo [11] proposed an objective Bayesian analysis with partial information for the Weibull distribution. Cho and Lee [12] studied the competing risks from exponential data, and recently, Nagy et al. [13] investigated both the point and interval estimates of the Burr-XII parameters.

Nadarajah and Haghighi [14] introduced a generalization of the exponential distribution called the Nadarajah–Haghighi (NH) distribution. Its density allows decreasing and unimodal shapes, while its hazard rate can be increasing, decreasing, or constant. Moreover, its density, survival, and hazard rate functions have two parameters, as in the Weibull, gamma, and generalized exponential lifetime models. They also showed that the NH model can be interpreted as a truncated Weibull distribution. A new two-parameter inverse distribution, called the inverted Nadarajah–Haghighi (INH) distribution, was introduced by Tahir et al. [15] for modeling data with decreasing and upside-down bathtub-shaped hazard rates as well as a decreasing and unimodal (right-skewed) density. A lifetime random variable X is said to have INH distribution, where , and its PDF (), CDF (), reliability function (RF), , and hazard function (HF), at a mission time t are given, respectively, by

and

where and are the shape and scale parameters, respectively. By setting in Equation (2), the inverted exponential distribution is obtained as a special case. Tahir et al. [15] showed that the proposed distribution is highly flexible for modeling real data sets that exhibit decreasing and upside-down bathtub hazard shapes. Recently, using the times until breakdown of an insulating fluid between 19 electrodes recorded at 34 kV, Elshahhat and Rastogi [16] showed that the INH distribution performed best compared with 10 other inverted models in the literature.
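For readers who want to experiment, the four INH functions can be coded directly. This is a minimal sketch that assumes the usual form of the INH CDF, F(x) = exp{1 - (1 + λ/x)^α} for x > 0, with shape α and scale λ; the function and argument names (`alpha`, `lam`) are labels chosen here, since the extracted text does not show the symbols. Setting `alpha = 1` recovers the inverted exponential CDF exp(-λ/x), in line with the special case noted above.

```python
import math


def inh_cdf(x, alpha, lam):
    """Assumed INH CDF: exp{1 - (1 + lam/x)^alpha}, x > 0."""
    return math.exp(1.0 - (1.0 + lam / x) ** alpha)


def inh_pdf(x, alpha, lam):
    """Derivative of the assumed CDF with respect to x."""
    u = 1.0 + lam / x
    return alpha * lam * x ** -2 * u ** (alpha - 1.0) * math.exp(1.0 - u ** alpha)


def inh_reliability(t, alpha, lam):
    """Reliability (survival) function R(t) = 1 - F(t)."""
    return -math.expm1(1.0 - (1.0 + lam / t) ** alpha)


def inh_hazard(t, alpha, lam):
    """Hazard function h(t) = f(t) / R(t)."""
    return inh_pdf(t, alpha, lam) / inh_reliability(t, alpha, lam)


# Hypothetical parameter values, chosen only for illustration
print(inh_cdf(2.0, 0.5, 1.5), inh_reliability(2.0, 0.5, 1.5), inh_hazard(2.0, 0.5, 1.5))
```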

To the best of our knowledge, we have not come across any work related to estimation of the model parameters or survival characteristics of the new INH lifetime model in the presence of data obtained from the generalized Type-II progressive hybrid censoring plan. Therefore, to close this gap, our objectives in this study are the following. First, we derive the likelihood inference for the unknown INH parameters and or any function of them, such as or . Under the squared error (SE) loss, the second objective is to develop the Bayes estimates for the same unknown parameters by utilizing independent gamma priors. In addition, based on the proposed estimation methods, the approximate confidence intervals (ACIs) and highest posterior density (HPD) interval estimators for the unknown parameters of the INH distribution are found. Since the theoretical results of and obtained by the proposed estimation methods cannot be expressed in closed form, in the R programming language, the ‘maxLik’ (proposed by Henningsen and Toomet [17]) and ‘coda’ (proposed by Plummer et al. [18]) packages are used to calculate the acquired estimates. Using five optimality criteria, the third objective is to obtain the best progressive censoring plan. Using various combinations of the total sample size, effective sample size, threshold times, and progressive censoring, the efficiencies of the various estimators are compared via a Monte Carlo simulation. The estimators thus obtained are compared on the basis of their simulated root mean squared errors (RMSEs), mean relative absolute biases (MRABs), and average confidence lengths (ACLs). In addition, two different real data sets coming from the engineering and clinical fields are examined to see how the proposed methods can perform in practice and adopt the optimal censoring plan.

The rest of the paper is organized as follows. The maximum likelihood and Bayesian inferences of the unknown parameters and reliability characteristics are discussed in Section 2 and Section 3, respectively. The asymptotic and credible intervals are constructed in Section 4. The Monte Carlo simulation results are reported in Section 5. The optimal progressive censoring plans are discussed in Section 6. Two real-life data analyses are investigated in Section 7. Finally, we conclude the paper in Section 8.

2. Likelihood Estimators

Suppose is a GPHCS-T2 sample of a size from . By substituting Equations (2) and (3) into Equation (1), where is used instead of , the likelihood function of GPHCS-T2 (1) can be written as

where , and .

The corresponding log-likelihood function of Equation (6) becomes

where , and for Case-I, II, and III, respectively.

By differentiating Equation (7) partially with respect to and , the following two likelihood equations must be solved simultaneously after equating them to zero to obtain the MLEs and :

and

where , , such that

and

From Equations (8) and (9), it is clear that we have a system of two nonlinear equations which must be simultaneously satisfied to obtain the MLEs and of and in the INH model, respectively. A closed-form solution for and therefore does not exist. Hence, for any given GPHCS-T2 data set, a numerical method such as the Newton–Raphson iterative method can be used to calculate and . Once the estimates of and are obtained, by replacing and with and , the MLEs and of and , respectively, can be easily derived.
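A Newton–Raphson iteration of this kind can be sketched as follows. Several things here are assumptions rather than the authors' implementation (they report using the R package ‘maxLik’): the INH CDF is taken as F(x) = exp{1 - (1 + λ/x)^α}; the likelihood is written in a generic censored form, with observed failures plus groups of `(time, count)` units withdrawn while still working; derivatives are approximated by finite differences; and the iteration runs on the log-parameters with step halving. All function and argument names are hypothetical. The usage example fits the complete electronic-device sample listed in Section 7.1, so the output should land close to the estimates reported there.

```python
import math


def inh_loglik(alpha, lam, failures, censored=()):
    """Log-likelihood (constants dropped) under the assumed INH form."""
    try:
        ll = 0.0
        for x in failures:
            u = 1.0 + lam / x
            ll += (math.log(alpha * lam) - 2.0 * math.log(x)
                   + (alpha - 1.0) * math.log(u) + 1.0 - u ** alpha)
        for t, count in censored:
            # each withdrawn unit contributes log R(t)
            ll += count * math.log(-math.expm1(1.0 - (1.0 + lam / t) ** alpha))
        return ll
    except (OverflowError, ValueError, ZeroDivisionError):
        return -math.inf


def newton_raphson_mle(failures, censored=(), start=(1.0, 1.0),
                       tol=1e-8, max_iter=100, h=1e-4):
    """Newton-Raphson on (log alpha, log lam) with numerical derivatives."""
    def f(a, b):
        return inh_loglik(math.exp(a), math.exp(b), failures, censored)

    a, b = math.log(start[0]), math.log(start[1])
    for _ in range(max_iter):
        f0 = f(a, b)
        ga = (f(a + h, b) - f(a - h, b)) / (2 * h)
        gb = (f(a, b + h) - f(a, b - h)) / (2 * h)
        haa = (f(a + h, b) - 2 * f0 + f(a - h, b)) / h ** 2
        hbb = (f(a, b + h) - 2 * f0 + f(a, b - h)) / h ** 2
        hab = (f(a + h, b + h) - f(a + h, b - h)
               - f(a - h, b + h) + f(a - h, b - h)) / (4 * h ** 2)
        det = haa * hbb - hab ** 2
        if haa < 0 and det > 0:
            # Newton step: -H^{-1} g for a negative definite Hessian
            da = -(hbb * ga - hab * gb) / det
            db = -(haa * gb - hab * ga) / det
        else:
            da, db = ga, gb  # fall back to a gradient-ascent direction
        step = 1.0
        while step > 1e-10 and f(a + step * da, b + step * db) < f0:
            step /= 2.0  # halve the step until the log-likelihood does not drop
        a, b = a + step * da, b + step * db
        if abs(step * da) + abs(step * db) < tol:
            break
    return math.exp(a), math.exp(b)


# Electronic-device failure times from Section 7.1 (complete sample)
wang = [5, 11, 21, 31, 46, 75, 98, 122, 145, 165, 196, 224, 245, 293, 321, 330, 350, 420]
alpha_hat, lam_hat = newton_raphson_mle(wang, start=(0.5, 200.0))
print(round(alpha_hat, 4), round(lam_hat, 2))
```

Working on the log scale keeps both parameters positive without explicit constraints, which is a convenience of this sketch rather than a requirement of the method.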

3. Bayes Estimators

In this section, based on the SE loss function, the Bayes estimators and associated HPD intervals of , , , and are developed. To this end, both INH parameters, and , are assumed to be independently distributed as gamma priors such as and , respectively. Gamma priors are considered for several reasons: (1) they provide various shapes based on parameter values, (2) they are flexible in nature, and (3) they are fairly straightforward and concise and may not lead to a complex estimation issue. Then, the joint prior density of and is

where and for are assumed to be known. By combining Equations (6) and (10), the joint posterior PDF of and becomes

where C is the normalizing constant. Subsequently, the Bayes estimate of any function of and , such as , under SE loss is the posterior expectation of Equation (11), which is given by

It is clear from Equation (11) that the marginal PDFs of and cannot be obtained in explicit form. For this purpose, we propose using Markov chain Monte Carlo (MCMC) techniques to generate samples from Equation (11) in order to compute the acquired Bayes estimates and to construct their HPD intervals.

To run the MCMC sampler, from Equation (11), the full conditional PDFs of and are given, respectively, as

and

Since the posterior PDFs of and in Equations (12) and (13), respectively, cannot be reduced analytically to any familiar distribution, the Metropolis–Hastings (M-H) algorithm is considered to solve this problem (for details, see Gelman et al. [19] and Lynch [20]). The sampling process of the M-H algorithm is conducted as follows:

- Step 1: Set the initial values and .

- Step 2: Set .

- Step 3: Generate and from and , respectively.

- Step 4: Obtain and .

- Step 5: Generate samples and from the uniform distribution.

- Step 6: If and , then set and ; otherwise, set and , respectively.

- Step 7: Set .

- Step 8: Repeat steps 3–7 B times and obtain and for .

- Step 9: Compute the RF (Equation (4)) and HF (Equation (5)) using for a given mission time , respectively, as and

To guarantee the convergence of the MCMC sampler and remove the effect of the starting values and , the first simulated variates (say ) are discarded as burn-ins. Therefore, the remaining samples of , , or (say ) are utilized to compute the Bayesian estimates. Then, the Bayes MCMC estimates of under the SE loss function are given by
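The sampling steps and the burn-in rule can be illustrated with a small random-walk Metropolis sketch. It rests on several assumptions that should be read as such: the INH CDF is taken as F(x) = exp{1 - (1 + λ/x)^α}; the priors are Gamma(shape, rate); the proposals are normal distributions centred at the current value with hypothetical standard deviations `prop_sd`; and the two parameters are updated one at a time. The data are the complete electronic-device sample from Section 7.1, the hyperparameter value 0.0001 mirrors the choice mentioned there, and the mission time `t = 50` is purely illustrative.

```python
import math
import random


def inh_loglik(alpha, lam, failures, censored=()):
    """Log-likelihood under the assumed INH form (constants dropped)."""
    ll = 0.0
    for x in failures:
        u = 1.0 + lam / x
        ll += (math.log(alpha * lam) - 2.0 * math.log(x)
               + (alpha - 1.0) * math.log(u) + 1.0 - u ** alpha)
    for t, count in censored:
        ll += count * math.log(-math.expm1(1.0 - (1.0 + lam / t) ** alpha))
    return ll


def log_posterior(alpha, lam, failures, censored, hyper):
    """Log-likelihood plus independent Gamma(shape, rate) log-priors."""
    if alpha <= 0.0 or lam <= 0.0:
        return -math.inf
    a1, b1, a2, b2 = hyper
    log_prior = ((a1 - 1.0) * math.log(alpha) - b1 * alpha
                 + (a2 - 1.0) * math.log(lam) - b2 * lam)
    return inh_loglik(alpha, lam, failures, censored) + log_prior


def mh_sampler(failures, censored, hyper, start, prop_sd, n_iter, burn_in, seed=0):
    """Component-wise random-walk Metropolis; returns the draws after burn-in."""
    rng = random.Random(seed)
    alpha, lam = start
    cur = log_posterior(alpha, lam, failures, censored, hyper)
    draws = []
    for _ in range(n_iter):
        cand = rng.gauss(alpha, prop_sd[0])          # propose alpha
        lp = log_posterior(cand, lam, failures, censored, hyper)
        if rng.random() < math.exp(min(0.0, lp - cur)):
            alpha, cur = cand, lp
        cand = rng.gauss(lam, prop_sd[1])            # propose lam
        lp = log_posterior(alpha, cand, failures, censored, hyper)
        if rng.random() < math.exp(min(0.0, lp - cur)):
            lam, cur = cand, lp
        draws.append((alpha, lam))
    return draws[burn_in:]


wang = [5, 11, 21, 31, 46, 75, 98, 122, 145, 165, 196, 224, 245, 293, 321, 330, 350, 420]
draws = mh_sampler(wang, (), (1e-4, 1e-4, 1e-4, 1e-4), start=(0.4215, 258.03),
                   prop_sd=(0.1, 100.0), n_iter=3000, burn_in=500, seed=1)

# Under SE loss, the Bayes estimate is the mean of the retained draws
alpha_bayes = sum(a for a, _ in draws) / len(draws)
lam_bayes = sum(l for _, l in draws) / len(draws)
t = 50.0  # hypothetical mission time
rel_bayes = sum(-math.expm1(1.0 - (1.0 + l / t) ** a) for a, l in draws) / len(draws)
print(alpha_bayes, lam_bayes, rel_bayes)
```

Because the normal proposal is symmetric, the acceptance ratio reduces to the ratio of posterior densities; a candidate outside the positive range has zero posterior density and is always rejected.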

4. Interval Estimators

In this section, the approximate confidence (based on the observed Fisher information) and HPD interval (based on the MCMC-simulated variates) estimators of , , , or are obtained.

4.1. Asymptotic Intervals

To construct the ACIs for and , we first need to compute the asymptotic variance-covariance (AVC) matrix, which is obtained by inverting the Fisher information matrix. Under some regularity conditions, is approximately normal with a mean and variance . Following Lawless [21], by setting and in place of and , we estimate by as follows:

where and are obtained and reported in Appendix A. Thus, the two-sided ACIs for and are given, respectively, by

where denotes the upper percentage points of the standard normal distribution, where and are the main diagonal elements of Equation (14).
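The construction can be checked numerically on the complete electronic-device sample from Section 7.1. The sketch below assumes the INH CDF F(x) = exp{1 - (1 + λ/x)^α}, approximates the second derivatives by central differences instead of using the closed forms in Appendix A, and evaluates everything at the MLEs reported in Section 7.1 (0.4215 and 258.03). Function names are hypothetical. The resulting standard errors should be close to the reported 0.1073 and 164.65.

```python
import math
from statistics import NormalDist


def inh_loglik(alpha, lam, failures):
    """Complete-sample log-likelihood under the assumed INH form."""
    ll = 0.0
    for x in failures:
        u = 1.0 + lam / x
        ll += (math.log(alpha * lam) - 2.0 * math.log(x)
               + (alpha - 1.0) * math.log(u) + 1.0 - u ** alpha)
    return ll


def observed_information(loglik, alpha, lam, rel_step=1e-3):
    """Negative Hessian of the log-likelihood by central differences."""
    ha, hl = rel_step * alpha, rel_step * lam
    f0 = loglik(alpha, lam)
    laa = (loglik(alpha + ha, lam) - 2 * f0 + loglik(alpha - ha, lam)) / ha ** 2
    lll = (loglik(alpha, lam + hl) - 2 * f0 + loglik(alpha, lam - hl)) / hl ** 2
    lal = (loglik(alpha + ha, lam + hl) - loglik(alpha + ha, lam - hl)
           - loglik(alpha - ha, lam + hl) + loglik(alpha - ha, lam - hl)) / (4 * ha * hl)
    return [[-laa, -lal], [-lal, -lll]]


def invert_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]


def approximate_ci(estimate, variance, level=0.95):
    """Two-sided normal-approximation interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    half = z * math.sqrt(variance)
    return estimate - half, estimate + half


wang = [5, 11, 21, 31, 46, 75, 98, 122, 145, 165, 196, 224, 245, 293, 321, 330, 350, 420]
alpha_hat, lam_hat = 0.4215, 258.03  # MLEs reported in Section 7.1
info = observed_information(lambda a, l: inh_loglik(a, l, wang), alpha_hat, lam_hat)
avc = invert_2x2(info)  # estimated variance-covariance matrix
se_alpha, se_lam = math.sqrt(avc[0][0]), math.sqrt(avc[1][1])
print(se_alpha, se_lam)
print(approximate_ci(alpha_hat, avc[0][0]), approximate_ci(lam_hat, avc[1][1]))
```

Because the standard error of the scale parameter is large relative to its estimate, the lower bound of its normal-approximation interval can fall below zero for this sample.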

Moreover, to construct the ACIs of and , we first follow the delta method to find the estimated variance of and (see Greene [22]) as follows:

where and .

Then, the two-sided ACIs of and are given, respectively, by

Bootstrapping techniques which improve estimators or build confidence intervals of , , , or can be easily incorporated.
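The delta-method step can be sketched for any smooth function of the two parameters. The code assumes the INH reliability R(t) = 1 - exp{1 - (1 + λ/t)^α} and approximates the gradient numerically. The inputs in the usage example are illustrative: the point estimates and the diagonal of the covariance matrix follow the values reported in Section 7.1, whereas the off-diagonal covariance and the mission time `t = 50` are placeholders chosen here, not values from the paper.

```python
import math


def inh_reliability(t, alpha, lam):
    return -math.expm1(1.0 - (1.0 + lam / t) ** alpha)


def inh_hazard(t, alpha, lam):
    u = 1.0 + lam / t
    pdf = alpha * lam * t ** -2 * u ** (alpha - 1.0) * math.exp(1.0 - u ** alpha)
    return pdf / inh_reliability(t, alpha, lam)


def delta_variance(func, alpha, lam, cov, rel_step=1e-6):
    """Approximate Var[func(alpha_hat, lam_hat)] as g' * cov * g,
    where g is the numerical gradient of func at the estimates."""
    ha, hl = rel_step * alpha, rel_step * lam
    ga = (func(alpha + ha, lam) - func(alpha - ha, lam)) / (2 * ha)
    gl = (func(alpha, lam + hl) - func(alpha, lam - hl)) / (2 * hl)
    return ga * ga * cov[0][0] + 2 * ga * gl * cov[0][1] + gl * gl * cov[1][1]


alpha_hat, lam_hat = 0.4215, 258.03
cov = [[0.0115, -14.7], [-14.7, 27100.0]]  # off-diagonal entry is a placeholder
t = 50.0                                    # hypothetical mission time

var_r = delta_variance(lambda a, l: inh_reliability(t, a, l), alpha_hat, lam_hat, cov)
var_h = delta_variance(lambda a, l: inh_hazard(t, a, l), alpha_hat, lam_hat, cov)
r_hat = inh_reliability(t, alpha_hat, lam_hat)
z = 1.959964  # upper 2.5% point of the standard normal distribution
print((r_hat - z * math.sqrt(var_r), r_hat + z * math.sqrt(var_r)), var_h)
```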

4.2. HPD Intervals

To construct HPD interval estimates of , , , or , the method suggested by Chen and Shao [23] is used. First, we order the MCMC samples of for as . As a result, the two-sided HPD interval of is given by

where is chosen such that

Here, denotes the largest integer less than or equal to x.
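The search over ordered draws can be written compactly. The sketch below follows the description above: sort the draws, slide a window whose end points are a fixed number of order statistics apart, and keep the shortest one. The argument name `cred` (the credible level) is a label chosen here, and the small constant added before flooring only guards against floating-point round-off.

```python
import math


def hpd_interval(draws, cred=0.95):
    """Shortest interval (s[j], s[j + m]) over the ordered draws,
    with m = floor(cred * n)."""
    s = sorted(draws)
    n = len(s)
    m = int(math.floor(cred * n + 1e-9))
    if m < 1 or m >= n:
        raise ValueError("not enough draws for the requested level")
    best = min(range(n - m), key=lambda j: s[j + m] - s[j])
    return s[best], s[best + m]


# Toy right-skewed sample: the shortest window avoids the extreme value 9.0
sample = [5.0, 1.0, 1.2, 1.1, 1.3, 9.0, 1.4, 1.5, 1.6, 1.7]
print(hpd_interval(sample, cred=0.8))
```

In R, the `HPDinterval` function of the ‘coda’ package mentioned in the paper serves the same purpose.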

5. Monte Carlo Simulation

To examine the performance of the proposed point and interval estimators introduced in the previous sections, an extensive Monte Carlo simulation is conducted. A total of 2000 GPHCS-T2 samples are generated from based on different choices for n (total test units), r (observed failure data), (ideal times), and (censoring plan). At mission time , the actual values of and are 0.765 and 3.13, respectively. To run the experiment according to generalized progressive Type-II hybrid censored sampling from the proposed model, we propose the following algorithm:

- Step 1. Set the parameter values of and .

- Step 2. Set the specific values of n, r, , , and .

- Step 3. Simulate a PCS-T2 sample of size r as follows:

- Generate independent observations of a size r as from .

- Set .

- Set for .

- Carry out the PCS-T2 sample of a size r from by inverting Equation (3) (i.e., ).

- Step 4. Determine at and at from the PCS-T2 sample.

- Step 5. Carry out the GPHCS-T2 sample as follows:

- If , then set , terminate the experiment at , and remove the remaining units . This is Case-I, and in this case, replace with those items obtained from a truncated distribution with a size .

- If , then terminate the experiment at and remove the remaining units . This is Case-II.

- If , then terminate the experiment at and remove the remaining units . This is Case-III.
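The generation steps above can be sketched end to end. Assumptions are flagged here and in the comments: the INH CDF is taken as F(x) = exp{1 - (1 + λ/x)^α}, so that its inverse is x = λ / [(1 - log u)^{1/α} - 1]; the progressive sample is built from uniform variates in the usual way for progressive Type-II censoring; and in Case-I the extra failures are drawn from the distribution left-truncated at the rth failure time. The parameter values in the usage example are hypothetical, and all names are chosen for illustration.

```python
import math
import random


def inh_cdf(x, alpha, lam):
    return math.exp(1.0 - (1.0 + lam / x) ** alpha)


def inh_quantile(u, alpha, lam):
    """Inverse of the assumed INH CDF."""
    if u >= 1.0:
        return math.inf
    if u <= 0.0:
        return 0.0
    return lam / ((1.0 - math.log(u)) ** (1.0 / alpha) - 1.0)


def progressive_type2_sample(n, scheme, alpha, lam, rng):
    """PCS-T2 sample of size r = len(scheme) with removals scheme[i]."""
    r = len(scheme)
    if sum(scheme) != n - r:
        raise ValueError("removals must sum to n - r")
    w = [rng.random() for _ in range(r)]
    # v_i = w_i^(1 / (i + R_r + ... + R_{r-i+1})), i = 1..r
    v = [w[i - 1] ** (1.0 / (i + sum(scheme[r - i:]))) for i in range(1, r + 1)]
    sample, prod = [], 1.0
    for i in range(1, r + 1):
        prod *= v[r - i]  # U_i = 1 - v_r * v_{r-1} * ... * v_{r-i+1}
        sample.append(inh_quantile(1.0 - prod, alpha, lam))
    return sample


def simulate_gphcs_t2(n, scheme, t1, t2, alpha, lam, rng):
    scheme = list(scheme)
    x = progressive_type2_sample(n, scheme, alpha, lam, rng)
    if x[-1] < t1:  # Case-I: keep observing, without withdrawals, until t1
        left = scheme[-1]  # units still on test after the r-th failure
        lo = inh_cdf(x[-1], alpha, lam)
        extra = sorted(inh_quantile(lo + (1.0 - lo) * rng.random(), alpha, lam)
                       for _ in range(left))
        extra = [t for t in extra if t < t1]
        return {"case": "Case-I", "failures": x + extra,
                "removals": scheme[:-1] + [0] * (1 + len(extra)),
                "end": t1, "r_star": left - len(extra)}
    if x[-1] < t2:  # Case-II: stop at the r-th failure
        return {"case": "Case-II", "failures": x, "removals": scheme,
                "end": x[-1], "r_star": 0}
    d2 = sum(1 for t in x if t < t2)  # Case-III: stop at t2
    return {"case": "Case-III", "failures": x[:d2], "removals": scheme[:d2],
            "end": t2, "r_star": n - d2 - sum(scheme[:d2])}


out = simulate_gphcs_t2(30, [10] + [0] * 19, 0.4, 0.8, 0.5, 1.5, random.Random(1))
print(out["case"], len(out["failures"]), out["r_star"])
```

In every case the observed failures, the progressive removals, and the units removed at the stopping time (`r_star`) add up to n, which is a convenient sanity check on a generated sample.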

For the given times and (0.4, 0.8), various choices of n and r are also considered, such as , and r is taken as the failure percentage (FP) such that for each n. For each given set of , seven different PCSs , where , denoted by , are considered (see Table 2). Two different sets of the hyperparameters are used, namely (prior A) and (prior B). The specified values for priors A and B are determined in such a way that the prior average returns to the true value of the target parameter.

Table 2.

Various PCSs used in Monte Carlo simulation.

For each unknown parameter, via the M-H sampler, 12,000 MCMC variates are generated, and the first 2000 values are eliminated as burn-ins. Hence, using the last 10,000 MCMC samples, the average Bayes estimates and 95% two-sided HPD intervals are computed. To run the MCMC sampler, the initial values of and are taken to be and , respectively.

A comparison between different point estimates is made based on their RMSE and MRAB values. Additionally, the performances of the proposed interval estimates are compared by using their ACLs. For the unknown parameter , , , or (say ), the average estimates (Av.Es), RMSEs, MRABs and ACLs of are computed as follows:

and

where denotes the calculated estimate at the jth sample of , is the number of generated sequence data, and and denote the lower and upper bounds, respectively, of the asymptotic (or credible HPD) interval of such that , , and . All numerical computations were performed via the ‘maxLik’ and ‘coda’ packages in R 4.0.4 software.
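The four summary measures can be computed as below. This is a sketch under the usual definitions implied by their names, since the displayed formulas did not survive extraction: the average estimate is the mean of the replicated estimates, the RMSE is the square root of the mean squared deviation from the true value, the MRAB is the mean absolute deviation divided by the true value, and the ACL is the mean interval width. The function and key names are hypothetical.

```python
import math


def simulation_summary(estimates, true_value, intervals):
    """Av.E, RMSE, MRAB of replicated estimates and ACL of (lower, upper) intervals."""
    g = len(estimates)
    av_e = sum(estimates) / g
    rmse = math.sqrt(sum((e - true_value) ** 2 for e in estimates) / g)
    mrab = sum(abs(e - true_value) for e in estimates) / (g * true_value)
    acl = sum(upper - lower for lower, upper in intervals) / len(intervals)
    return {"av_e": av_e, "rmse": rmse, "mrab": mrab, "acl": acl}


# Two toy replications around a true value of 2.0
summary = simulation_summary([1.0, 3.0], 2.0, [(0.0, 2.0), (1.0, 4.0)])
print(summary)
```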

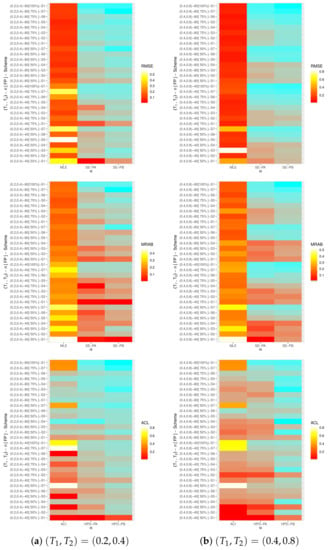

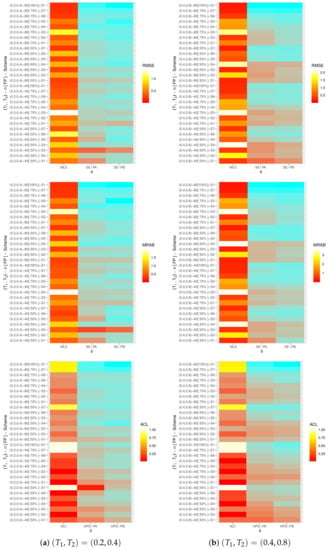

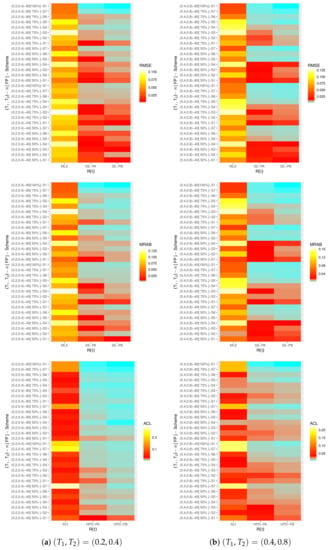

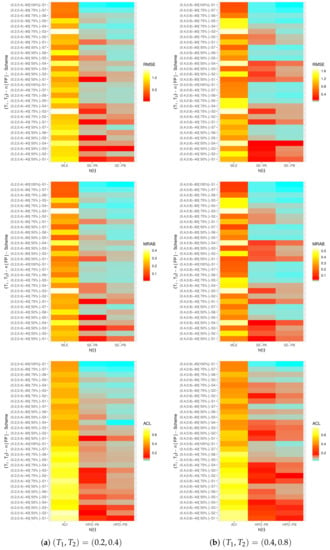

Graphically, utilizing heat-map plots, all simulated values for the RMSEs, MRABs, and ACLs of , , , and are shown in Figure 2, Figure 3, Figure 4 and Figure 5, respectively, while all simulation tables are provided as Supplementary Materials.

Figure 2.

Heat-maps for the estimation results for .

Figure 3.

Heat-maps for the estimation results for .

Figure 4.

Heat-maps for the estimation results for .

Figure 5.

Heat-maps for the estimation results for .

For clarity, some notations are used in each plot in Figure 2, Figure 3, Figure 4 and Figure 5. For example, based on prior A (PA), for all unknown parameters, the Bayes estimates based on the SE loss function are labeled ‘SE-PA’, and their HPD interval estimates are labeled ‘HPD-PA’. From Figure 2, Figure 3, Figure 4 and Figure 5, some general observations can be made:

- The Bayesian estimates of all unknown parameters performed better than the frequentist estimates, as expected, in terms of the minimum RMSE, MRAB, and ACL values. This result was due to the fact that the Bayes point (or interval) estimates incorporated prior information about the model parameters, whereas the frequentist estimates did not.

- As n (or FP) increased, all proposed estimates performed satisfactorily in terms of their RMSEs, MRABs, and ACLs. Similar performance was also observed when the sum of the removal patterns decreased.

- As increased, in most cases, the RMSEs and MRABs of all calculated estimates decreased significantly.

- As tended to increase, the ACLs for ACIs of and decreased while the HPD intervals increased. In addition, the ACLs of the ACI and HPD intervals for and narrowed.

- Since the variance of prior B was lower than the variance of prior A, the Bayesian results, including the point and interval estimators of , , , and , performed better under prior B than those obtained under prior A in terms of their RMSEs, MRABs, and ACLs.

- To sum up, the simulation results indicated that the Bayesian inferential approach via the M-H algorithm was better than the frequentist approach for estimating the unknown parameter(s) of life using generalized Type-II progressive hybrid censored data.

6. Optimal PCS-T2 Plans

In the context of reliability, the experimenter may wish to determine the ‘best’ censoring scheme from a collection of all available censoring schemes in order to obtain the most information about the unknown parameters under study. Balakrishnan and Aggarwala [24] first investigated the problem of choosing the optimal censoring strategy in various scenarios. Since then, many optimality criteria have been proposed, and numerous results on optimal censoring designs have been investigated. The specific values of n (total test units), r (effective sample), and (ideal test thresholds) are chosen in advance based on the availability of the units, experimental facilities, and cost considerations, after which the optimal censoring design , where , can be determined (see Ng et al. [25]). In the literature, several works have addressed the problem of comparing two (or more) different censoring plans (for example, see Pradhan and Kundu [26], Elshahhat and Rastogi [16], Elshahhat and Abu El Azm [27], and Ashour et al. [28]). To determine an optimum PCS-T2 plan, some commonly used criteria are considered (see Table 3).

Table 3.

Some optimality criteria of progressive censoring plan.

From Table 3, it should be noted that the criteria and are intended to minimize the determinant and trace of the AVC matrix in Equation (14), while the criterion is intended to maximize the main diagonal elements of the observed Fisher’s matrix at its MLEs and . Regarding criteria and , Gupta and Kundu [29] stated that the comparison of two (or more) AVC matrices based on these criteria is not a trivial task because they are not scale-invariant.

Thus, based on criteria and , which are scale-invariant, one can determine the optimum censoring scheme of multi-parameter distributions. It is clear that minimizing the associated variance of the logarithmic th quantile , where , depends on the choice of in . Additionally, for , the weight is a nonnegative function satisfying . Hence, the logarithmic for of the INH distribution is

Again, using Equation (15), the delta method is considered here to approximate the variance estimate of (say ), as follows:

where

is the gradient of with respect to and . Obviously, from Table 3, it can be seen that the optimized PCS-T2 plan that provides more information corresponded to the smallest value of optimality criteria and the highest value of optimality criteria.
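The matrix-based criteria and the quantile-based variance can be sketched together. The code assumes the INH CDF F(x) = exp{1 - (1 + λ/x)^α}, for which the log-quantile is log λ - log[(1 - log p)^{1/α} - 1], and it uses a hypothetical 2 x 2 information matrix in the usage example. The dictionary keys are descriptive labels chosen here; they are not the criterion names of Table 3. The integrated criterion mentioned above would average the last quantity over p with the weight function.

```python
import math


def invert_2x2(m):
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det], [-m[1][0] / det, m[0][0] / det]]


def log_quantile(p, alpha, lam):
    """Log of the p-th quantile under the assumed INH form, 0 < p < 1."""
    g = (1.0 - math.log(p)) ** (1.0 / alpha)
    return math.log(lam) - math.log(g - 1.0)


def log_quantile_gradient(p, alpha, lam):
    """Partial derivatives of log_quantile with respect to (alpha, lam)."""
    c = math.log(1.0 - math.log(p))
    g = math.exp(c / alpha)
    return c * g / (alpha ** 2 * (g - 1.0)), 1.0 / lam


def optimality_summary(info, alpha, lam, p=0.5):
    """Trace of the information matrix, trace and determinant of its inverse,
    and the delta-method variance of the log p-th quantile."""
    avc = invert_2x2(info)
    ga, gl = log_quantile_gradient(p, alpha, lam)
    var_logq = ga * ga * avc[0][0] + 2 * ga * gl * avc[0][1] + gl * gl * avc[1][1]
    return {"trace_info": info[0][0] + info[1][1],
            "trace_avc": avc[0][0] + avc[1][1],
            "det_avc": avc[0][0] * avc[1][1] - avc[0][1] * avc[1][0],
            "var_log_quantile": var_logq}


# Hypothetical information matrix and parameter values, for illustration only
crit = optimality_summary([[2.0, 0.0], [0.0, 4.0]], alpha=0.5, lam=1.5, p=0.5)
print(crit)
```

Comparing two censoring plans then amounts to computing this summary for each plan and preferring the larger `trace_info` or the smaller values of the other three entries.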

7. Real-Life Applications

To demonstrate the adaptability and flexibility of the proposed methodologies to a real phenomenon, in this section, we shall provide two numerical applications using electronic devices and COVID-19 data sets.

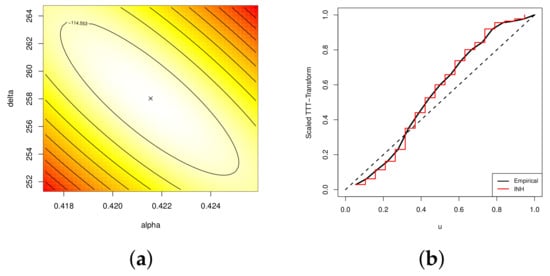

7.1. Electronic Device Data

In this application, we analyze real engineering data given by Wang [30]. This data set consists of 18 observations of failure times of electronic devices: 5, 11, 21, 31, 46, 75, 98, 122, 145, 165, 196, 224, 245, 293, 321, 330, 350, and 420. We first checked whether Wang’s data fit the INH distribution. For this purpose, the Kolmogorov–Smirnov (K-S) distance along with the associated p-value is provided. Using all of Wang’s data, the MLEs (with their standard errors (St.E)) of and were 0.4215 (0.1073) and 258.03 (164.65), respectively, and the K-S (with its p-value) was 0.199 (0.418). This result indicates that the INH lifetime model fits Wang’s data adequately. To show the existence and uniqueness of and , the contour plot of the log-likelihood function with respect to and using all of Wang’s data is plotted in Figure 6a. It shows that the MLEs and existed and were unique. It is also clear that Wang’s data set was overly and generally flat. We also suggest taking these estimates as initial values to run any numerical evaluation required. Graphically, to identify the HF shapes of Wang’s data, the scaled total time on testing (TTT) transform is also plotted in Figure 6b. It indicates that the bathtub-shaped (decreasing-increasing) hazard rate was suitable for fitting the INH model.
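The goodness-of-fit check can be reproduced approximately with a few lines. The sketch assumes the INH CDF F(x) = exp{1 - (1 + λ/x)^α} and plugs in the MLEs reported above; the K-S distance is the largest gap between the fitted CDF and the empirical CDF, checked on both sides of each jump. The result should be close to the reported distance of 0.199; the p-value is not computed here.

```python
import math


def inh_cdf(x, alpha, lam):
    return math.exp(1.0 - (1.0 + lam / x) ** alpha)


def ks_distance(data, cdf):
    """Kolmogorov-Smirnov distance between the empirical CDF and cdf."""
    xs = sorted(data)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


wang = [5, 11, 21, 31, 46, 75, 98, 122, 145, 165, 196, 224, 245, 293, 321, 330, 350, 420]
d = ks_distance(wang, lambda x: inh_cdf(x, 0.4215, 258.03))
print(round(d, 3))
```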

Figure 6.

(a) Contour plot of log-likelihood function and (b) empirical and fitted scaled TTT transform plot from Wang’s data.

From all of Wang’s data, based on various choices of and different PCSs , namely : , : , and : , different artificial GPHCS-T2 samples were generated when , which are presented in Table 4. Since prior information about the model parameters was not available, improper gamma priors (i.e., , for ) were used. In practice, to run the calculations, we used 0.0001 for all given hyperparameters. Using the MCMC algorithm described in Section 3, 30,000 MCMC samples were generated, and the first 5000 iterations were discarded as burn-in to develop the Bayes point estimates and associated HPD interval estimates. Using the samples in Table 4, the point estimates (with their St.Es) and 95% interval estimates (with their interval lengths (ILs)) of , , , and (at time ) were computed by the maximum likelihood and Bayesian approaches, and they are presented in Table 5. It is evident that the acquired inferences of , , , and derived using the Bayes approach performed better than those derived from the frequentist approach in terms of their minimum St.E and IL values.

Table 4.

Three artificial GPHCS-T2 samples from Wang’s data.

Table 5.

The point and interval estimates of , , , and under Wang’s data.

According to the proposed criteria given in Table 3, the problem of determining the optimum progressive censoring plan is addressed next. Using the generated samples in Table 4, the calculated values of the optimum criteria are presented in Table 6. To distinguish them, the suggested optimum censoring schemes are denoted with asterisks (*). Table 6 shows that for Sample 1, was the optimal censoring using , and was the optimal censoring using , while was the optimal censoring using . For Samples 2 and 3, was the optimal censoring using , while was the optimal censoring using .

Table 6.

Optimum progressive censoring plan under Wang’s data.

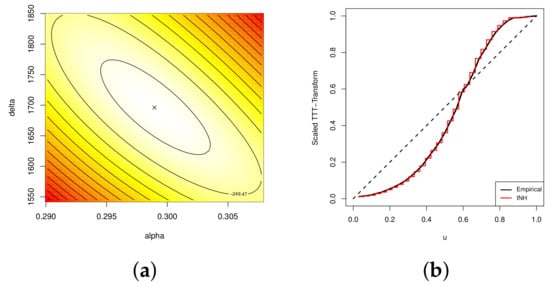

7.2. COVID-19 Data

This application provides an analysis of the confirmed deaths by the 7-day moving average for coronavirus disease (COVID-19) in the United States for 32 consecutive days from mid-March to mid-April 2020 (https://www.cdc.gov/ accessed on 3 March 2022); see Table 7. To check the goodness of fit, the K-S statistic along with the associated p-value was obtained. First, using the complete COVID-19 data, the MLEs (with their St.Es) of and were 0.2989 (0.0521) and 1696.1 (895.34), respectively. The K-S (with its p-value) was 0.1601 (0.348). This result demonstrates that the INH distribution is an adequate model to fit the COVID-19 data.

Table 7.

Deaths due to COVID-19 from mid-March to mid-April 2020 in USA.

The contour plot, using the complete COVID-19 data set, of the log-likelihood function with respect to and is displayed in Figure 7a. It indicates that the MLEs existed and were unique. Additionally, a plot of the scaled TTT transform for the COVID-19 data is displayed in Figure 7b. It shows that the bathtub-shaped (decreasing-increasing) hazard rate was adequate for fitting the INH model.

Figure 7.

(a) Contour plot of log-likelihood function. (b) Empirical and fitted scaled TTT transform plot derived from COVID-19 data.

In Table 8, different GPHCS-T2 samples generated from the complete COVID-19 data set when , based on various choices of , and , are presented, namely : , : , and : . Using the samples in Table 8, the maximum likelihood and Bayes estimates (with their St.Es) of , , , and for time were computed, and they are presented in Table 9. In addition, two-sided 95% ACI and HPD intervals with their ILs were calculated, and these are also listed in Table 9. From Table 9, it can be seen that the Bayesian MCMC estimates performed better than the frequentist estimates in terms of their St.Es, and the HPD interval estimates performed better than the ACIs with respect to their ILs.

Table 8.

Three GPHCS-T2 samples from COVID-19 data.

Table 9.

The point and interval estimates of , , , and under COVID-19 data.

To discuss how to select the optimum censoring plan from the COVID-19 data, based on the generated samples in Table 8, the calculated values of the given criteria are reported in Table 10. It can be observed that for Sample 1, was the optimal scheme under , and was the optimal scheme under , while was the optimal scheme under . For Samples 2 and 3, was the optimal scheme under , while was the optimal scheme under compared with the other competing schemes. As a result, substantial information about the unknown INH parameters could be easily obtained using two recommended censoring schemes, namely left withdrawn, where , and uniformly withdrawn, where . This finding was due to the fact that the remaining surviving units were removed at an early stage (i.e., (left withdrawn) or (uniformly withdrawn)), and they could be used for other purposes, especially if the items put in the test were very costly. All conclusions derived from the COVID-19 data support the same findings in the case of electronic device data analysis. Finally, the analysis results from both the electronic devices and COVID-19 data sets support the simulation results.

Table 10.

Optimum progressive censoring plan under COVID-19 data.

8. Concluding Remarks

This study takes into account the statistical inference of the unknown model parameters, reliability, and hazard rate functions of the inverted Nadarajah–Haghighi lifetime model based on generalized Type-II progressive hybrid censoring. The frequentist estimates with their asymptotic confidence intervals for the unknown parameters and any function of them were computed using the Newton–Raphson iterative procedure via the ‘maxLik’ package in R software. Since the likelihood function was obtained in complex form, the posterior density function was obtained in nonlinear form. Therefore, using the Metropolis–Hastings algorithm, the Bayesian estimates and the associated HPD intervals were developed under the assumption of independent gamma priors and by considering the squared error loss function. To compare the behavior of the acquired estimates, various simulation experiments based on different choices of total test units, observed failure data, threshold times and progressive censoring plans were conducted, and they showed that the Bayes MCMC approach performed quite satisfactorily compared with the frequentist approach. Electronic devices and COVID-19 data sets were analyzed to show the practical utility of the proposed methods in real-life phenomena and to suggest the optimum censoring plan. Finally, the Bayesian MCMC paradigm to estimate the parameters, reliability, and hazard functions of the INH distribution under generalized Type-II progressive hybrid censoring was recommended. We hope that the results and methodologies proposed here will be beneficial to reliability practitioners and extended to other censoring plans.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/sym14112379/s1, Table S1: The RMSEs (1st column) and MRABs (2nd column) of ; Table S2: The RMSEs (1st column) and MRABs (2nd column) of ; Table S3: The RMSEs (1st column) and MRABs (2nd column) of ; Table S4: The RMSEs (1st column) and MRABs (2nd column) of ; Table S5: The ACLs of 95% ACI/HPD intervals of ; Table S6: The ACLs of 95% ACI/HPD intervals of ; Table S7: The ACLs of 95% ACI/HPD intervals of ; Table S8: The ACLs of 95% ACI/HPD intervals of .

Author Contributions

Methodology, A.E. and O.E.A.-K.; funding acquisition, H.S.M.; software, A.E.; supervision H.S.M.; writing—original draft, H.S.M. and O.E.A.-K.; writing—review and editing, A.E. and O.E.A.-K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Princess Nourah bint Abdulrahman University Researchers Supporting Project number PNURSP2022R175 from Princess Nourah bint Abdulrahman University in Riyadh, Saudi Arabia.

Data Availability Statement

The authors confirm that the data supporting the findings of this study are available within the article.

Acknowledgments

The authors acknowledge the support of Princess Nourah bint Abdulrahman University Researchers Supporting Project number PNURSP2022R175 from Princess Nourah bint Abdulrahman University in Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this article:

| Abbreviation | Definition |
| --- | --- |
| ACI | Approximate confidence interval |
| ACL | Average confidence length |
| Av.E | Average estimate |
| AVC | Asymptotic variance–covariance |
| CDF | Cumulative distribution function |
| COVID-19 | Coronavirus disease 2019 |
| FP | Failure percentage |
| GPHCS-T2 | Generalized progressive Type-II hybrid censoring scheme |
| HF | Hazard function |
| HPD | Highest posterior density |
| IL | Interval length |
| INH | Inverted Nadarajah–Haghighi |
| K-S | Kolmogorov–Smirnov |
| MCMC | Monte Carlo Markov chain |
| M-H | Metropolis–Hastings |
| MLE | Maximum likelihood estimator |
| MRAB | Mean relative absolute bias |
| NH | Nadarajah–Haghighi |
| PCS-T2 | Progressive Type-II censoring |
| PDF | Probability density function |
| PHCS-T1 | Progressive Type-I hybrid censoring scheme |
| PHCS-T2 | Progressive Type-II hybrid censoring scheme |
| RF | Reliability function |
| RMSE | Root mean squared error |
| SE | Squared error |
| St.E | Standard error |

Appendix A

Differentiating Equation (7) with respect to the unknown model parameters, the elements of Equation (14) are as follows:

and

where

, , ,

and

References

1. Chen, P.; Xu, A.; Ye, Z.S. Generalized fiducial inference for accelerated life tests with Weibull distribution and progressively type-II censoring. IEEE Trans. Reliab. 2016, 65, 1737–1744.
2. Xu, A.; Zhou, S.; Tang, Y. A unified model for system reliability evaluation under dynamic operating conditions. IEEE Trans. Reliab. 2019, 70, 65–72.
3. Hu, J.; Chen, P. Predictive maintenance of systems subject to hard failure based on proportional hazards model. Reliab. Eng. Syst. Saf. 2020, 196, 106707.
4. Luo, C.; Shen, L.; Xu, A. Modelling and estimation of system reliability under dynamic operating environments and lifetime ordering constraints. Reliab. Eng. Syst. Saf. 2022, 218, 108136.
5. Balakrishnan, N.; Cramer, E. The Art of Progressive Censoring; Birkhäuser: New York, NY, USA, 2014.
6. Kundu, D.; Joarder, A. Analysis of Type-II progressively hybrid censored data. Comput. Stat. Data Anal. 2006, 50, 2509–2528.
7. Childs, A.; Chandrasekar, B.; Balakrishnan, N. Exact likelihood inference for an exponential parameter under progressive hybrid censoring schemes. In Statistical Models and Methods for Biomedical and Technical Systems; Vonta, F., Nikulin, M., Limnios, N., Huber-Carol, C., Eds.; Birkhäuser: Boston, MA, USA, 2008; pp. 319–330.
8. Lee, K.; Sun, H.; Cho, Y. Exact likelihood inference of the exponential parameter under generalized Type II progressive hybrid censoring. J. Korean Stat. Soc. 2016, 45, 123–136.
9. Ashour, S.K.; Elshahhat, A. Bayesian and non-Bayesian estimation for Weibull parameters based on generalized Type-II progressive hybrid censoring scheme. Pak. J. Stat. Oper. Res. 2016, 12, 213–226.
10. Ateya, S.; Mohammed, H. Prediction under Burr-XII distribution based on generalized Type-II progressive hybrid censoring scheme. J. Egypt. Math. Soc. 2018, 26, 491–508.
11. Seo, J.I. Objective Bayesian analysis for the Weibull distribution with partial information under the generalized Type-II progressive hybrid censoring scheme. Commun. Stat. Simul. Comput. 2020, 51, 5157–5173.
12. Cho, S.; Lee, K. Exact likelihood inference for a competing risks model with generalized Type-II progressive hybrid censored exponential data. Symmetry 2021, 13, 887.
13. Nagy, M.; Bakr, M.E.; Alrasheedi, A.F. Analysis with applications of the generalized Type-II progressive hybrid censoring sample from Burr Type-XII model. Math. Probl. Eng. 2022, 2022, 1241303.
14. Nadarajah, S.; Haghighi, F. An extension of the exponential distribution. Statistics 2011, 45, 543–558.
15. Tahir, M.H.; Cordeiro, G.M.; Ali, S.; Dey, S.; Manzoor, A. The inverted Nadarajah–Haghighi distribution: Estimation methods and applications. J. Stat. Comput. Simul. 2018, 88, 2775–2798.
16. Elshahhat, A.; Rastogi, M.K. Estimation of parameters of life for an inverted Nadarajah–Haghighi distribution from Type-II progressively censored samples. J. Indian Soc. Probab. Stat. 2021, 22, 113–154.
17. Henningsen, A.; Toomet, O. maxLik: A package for maximum likelihood estimation in R. Comput. Stat. 2011, 26, 443–458.
18. Plummer, M.; Best, N.; Cowles, K.; Vines, K. coda: Convergence diagnosis and output analysis for MCMC. R News 2006, 6, 7–11.
19. Gelman, A.; Carlin, J.B.; Stern, H.S.; Rubin, D.B. Bayesian Data Analysis, 2nd ed.; Chapman and Hall/CRC: Boca Raton, FL, USA, 2004.
20. Lynch, S.M. Introduction to Applied Bayesian Statistics and Estimation for Social Scientists; Springer: New York, NY, USA, 2007.
21. Lawless, J.F. Statistical Models and Methods for Lifetime Data, 2nd ed.; John Wiley and Sons: Hoboken, NJ, USA, 2003.
22. Greene, W.H. Econometric Analysis, 4th ed.; Prentice-Hall: New York, NY, USA, 2000.
23. Chen, M.H.; Shao, Q.M. Monte Carlo estimation of Bayesian credible and HPD intervals. J. Comput. Graph. Stat. 1999, 8, 69–92.
24. Balakrishnan, N.; Aggarwala, R. Progressive Censoring: Theory, Methods, and Applications; Birkhäuser: Boston, MA, USA, 2000.
25. Ng, H.K.T.; Chan, P.S.; Balakrishnan, N. Optimal progressive censoring plans for the Weibull distribution. Technometrics 2004, 46, 470–481.
26. Pradhan, B.; Kundu, D. Inference and optimal censoring schemes for progressively censored Birnbaum–Saunders distribution. J. Stat. Plan. Inference 2013, 143, 1098–1108.
27. Elshahhat, A.; Abu El Azm, W.S. Statistical reliability analysis of electronic devices using generalized progressively hybrid censoring plan. Qual. Reliab. Eng. Int. 2022, 38, 1112–1130.
28. Ashour, S.K.; El-Sheikh, A.A.; Elshahhat, A. Inferences and optimal censoring schemes for progressively first-failure censored Nadarajah-Haghighi distribution. Sankhya A 2022, 84, 885–923.
29. Gupta, R.D.; Kundu, D. On the comparison of Fisher information of the Weibull and GE distributions. J. Stat. Plan. Inference 2006, 136, 3130–3144.
30. Wang, F.K. A new model with bathtub-shaped failure rate using an additive Burr XII distribution. Reliab. Eng. Syst. Saf. 2000, 70, 305–312.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).