Abstract

Forests are a vital natural resource that directly influences the ecosystem. Recently, forest fire has been a serious issue due to natural and man-made climate effects. For early forest fire detection, an artificial intelligence-based forest fire detection method in smart city application is presented to avoid major disasters. This research presents a review of the vision-based forest fire localization and classification methods. Furthermore, this work makes use of the forest fire detection dataset, which solves the classification problem of discriminating fire and no-fire images. This work proposes a deep learning method named FFireNet, by leveraging the pre-trained convolutional base of the MobileNetV2 model and adding fully connected layers to solve the new task, that is, the forest fire recognition problem, which helps in classifying images as forest fires based on extracted features which are symmetrical. The performance of the proposed solution for classifying fire and no-fire was evaluated using different performance metrics and compared with other CNN models. The results show that the proposed approach achieves 98.42% accuracy, 1.58% error rate, 99.47% recall, and 97.42% precision in classifying the fire and no-fire images. The outcomes of the proposed approach are promising for the forest fire classification problem considering the unique forest fire detection dataset.

1. Introduction

Forests play a vital part in our lives by providing numerous resources such as minerals and materials needed for production. Forests purify the air by absorbing carbon dioxide and giving out oxygen [1], which is fundamental for human survival, and play an important role in providing habitats for several animal species. Forests act as a shield against sandstorms, thus protecting crops and maintaining balance in the ecological system. Forest fire cases have increased in recent years because of climate change [2,3]. High temperatures and dry weather result in fires, which destroy the environment, wildlife, and natural resources, thus directly affecting human lives. Forests with coniferous (needle or cone) trees are more likely to catch fire than those with deciduous (leaved) trees due to the large quantity of sap, a highly flammable material, in their branches [4]. Coniferous trees also grow very near to each other, resulting in a faster rate of fire spreading. Due to forest fires, millions of acres of forest land are destroyed every year, causing significant economic losses. Several countries, such as America, Australia, Canada, and Brazil, have suffered from forest fire destruction.

The Australian fire in 2020 was the most devastating fire, resulting in losses of forest resources, animals, and human lives. It is estimated that around 14 million acres of forest land were burnt, about half a million animals and 23 people died, and 1500 houses were burned [5]. In 2018 and 2019, California forests and the Amazon rainforest suffered from fires that burnt millions of acres of land, causing huge losses [6]. In America, 85% of forest fires occurring between 1992 and 2015 were man-made, while 15% occurred naturally, due to factors such as lightning and climate change. These man-made forest fires could have been avoided by restricting human activities. As per [7], there has been a reduction in forest fire cases since the emergence of the COVID-19 global pandemic, during which several countries imposed total lockdowns, reducing human activities [8]. Moreover, early forest fire detection could also be important in reducing the risk of large-scale forest fires by giving firefighters the opportunity and resources to extinguish the fire at an early stage.

With the aim of protecting forests from fire, governments around the world have stressed the importance of developing strategies for intelligent surveillance and detection of forest fires. Accurate detection and timely alerting of the respective authorities are significant factors in reducing forest fire risks, and traditional human monitoring is less reliable in both respects. The Internet of Things (IoT), where intelligent devices are connected to the Internet, makes up an integral part of smart cities by applying technologies such as sensors, cloud computing, and wireless networks [9]. These IoT devices [10] generate a large amount of data, which can be processed and analyzed by applying artificial intelligence (AI). Due to this huge amount of generated data, computer vision has emerged for intelligent surveillance.

Deep learning for detection and recognition is an integral part of computer vision technologies [11]. Deep neural networks (DNNs) that incorporate deep learning [12] have made it possible to solve real world problems more efficiently and have become important for intelligent surveillance [13,14]. Recently, researchers’ interest in DNNs, such as the convolutional neural network (CNN), which is symmetric, has intensified due to two main factors. Firstly, data storage technology has become cheaper, and secondly, high-performance graphic processing units (GPUs) have met the high computing power requirements. However, the requirement for large datasets is still the key problem for the development of better models in solving real-world tasks.

DNNs can be very useful for early forest fire detection and sending important information to the relevant authorities for necessary actions. Recently, several deep learning-based fire and smoke detection mechanisms have been proposed that achieved good results [15,16]. Continuous surveillance of the forest results in the detection of fire that is helpful for relevant departments to take action on time and prevent the fire from becoming a large-scale disaster. In this research, we aim to provide a mechanism for early detection of forest fires that can aid fire departments and disaster relief teams in responding promptly and reducing the fires’ impact on the environment, society, and the economy. The contributions of this research are:

- Presenting the literature of computer vision-based forest fire localization and classification methods in forest and wildland environments.

- Making use of the newly created dataset, this research further improves the detection accuracy in classifying fire and no-fire images of the forest fire detection dataset that focuses on forest settings unlike previous wildfire research that considers wildlands including bushes and farmlands etc.

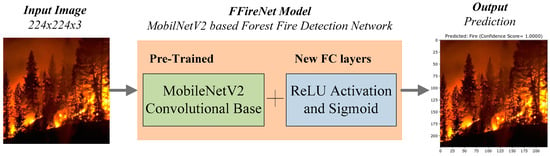

- Proposing a CNN-based transfer learning approach named FFireNet for forest fire classification on the local dataset. The solution explores the MobileNetV2 model by exploiting the trained weights of the convolutional base and adding fully connected layers for learning complex features and classification.

- Evaluating the proposed FFireNet method and comparing it with other CNN models on the forest fire dataset using various performance metrics to validate the performance of the proposed approach.

The remainder of this work is structured as follows. Section 2 details the review of the literature on vision-based forest fire localization and classification methods; Section 3 presents the forest fire detection system for the forest fire classification problem; Section 4 provides details about the newly created dataset and the data augmentation; detailed performance evaluation is presented in Section 5; and Section 6 concludes this work.

2. Literature Review

In recent years, several studies on forest fire detection systems have been conducted by considering classification and localization, of either only flame or smoke, or both. In [17], the authors proposed a smoke detection method based on synthetic smoke images using Faster R-CNN. In the proposed method, synthetic images were created by inserting smoke or simulative smoke images into the forest background image. Anim Hossain et al. [18] proposed a forest fire detection method using color and multi-color space local binary patterns for smoke and flame signatures and artificial neural networks. Their proposed approach detects flame and smoke using the aerial dataset. The authors in [19] proposed a hybrid solution using long short-term memory (LSTM) and You Only Look Once (YOLO) for the detection of smoke in a wildfire environment. In the proposed solution, the authors used a lightweight teacher–student LSTM that reduced the number of layers with better smoke detection results. Another method [20] explored the integration of fog computing and CNN to detect fire images. In the subsequent sections, we explore the forest fire localization and classification work previously proposed by the researchers in the literature.

2.1. Localization

Alexandrov et al. [21] analyzed different machine learning and CNN models for the localization of forest fires. In their research, the authors used their dataset to analyze the detection accuracy of the considered methods. In [22], the authors proposed a CNN-based fire detection mechanism. In their proposed method, the full image is first classified using the SVM and transfer learning on AlexNet. After the classification, a fine grain patch classifier is used to locate the fire patch using the pooling-5 layer. The authors found better accuracy of the patch localization than the whole image classification for fire detection.

The authors in [23] proposed a deep learning-based forest fire detection method for fire localization. In their research, the authors proposed a large-scale YOLOv3 network that locates the fire and smoke in the images. Another study by Li et al. [24] proposed CNN-based flame detection to monitor forest fire. Their proposed mechanism, named YOLO-Edge, is based on YOLOv4 and replaces CSPDarkNet53 with MobileNetv3 to detect flame. MobileNetv3 is used for feature extraction, while the YOLOv4 backbone network is used for flame localization. Table 1 presents the comparative analysis of the localization task for forest fire detection.

Table 1.

Comparative analysis of forest fire localization methods.

2.2. Classification

This subsection reviews the forest fire detection methods proposed in the literature for the classification task. In [25], the authors explored different CNN models on their local dataset for a forest fire detection system. Their proposed methodology explored AlexNet, VGG13, Modified VGG13, GoogleNet, and Modified GoogleNet to classify images of Fire and Non-Fire. Kaabi et al. [26] proposed smoke detection for a forest fire detection system using a Deep Belief Network (DBN). In their proposed methodology, the DBN is a stack of Restricted Boltzmann Machines used for dimensionality reduction, feature extraction, and classification. Zhao et al. [27] proposed a deep CNN-based forest fire classification method named Fire_Net. Their proposed Fire_Net model is inspired by the 8-layered AlexNet CNN model and uses a deeper 15-layered CNN for the classification task.

Chen et al. [28] proposed a CNN-based forest fire detection mechanism for classification of fire, smoke, and negative images. In their proposed method, CNN models consist of 9 and 17 layers, respectively, named CNN-9 and CNN-17 for classification. In [29], the authors proposed a novel approach, namely, attention-enhanced bidirectional long short-term memory (Abi-LSTM) to address the forest fire smoke classification problem. In their proposed scheme, Inceptionv3 is used for spatial feature extraction, Bi-LSTM for temporal feature extraction, and the attention network to optimize the classification process. Sousa et al. [30] proposed a wildfire detection method using transfer learning. In their proposed method, authors exploited the weights of the pre-trained Inceptionv3 model and used it for their new task of fire and not fire classification.

In [31], Govil et al. proposed terrestrial camera-based wildfire detection. Their proposed method used Inceptionv3 model-based classification of the smoke and non-smoke images for wildfire detection. In [32], the authors proposed deep learning-based forest fire classification. In their proposed approach, the fire and smoke are classified using the ForestResNet method based on the ResNet50 model. Another study [33] proposed a multilabel classification model for wildfire detection. Their proposed approach used transfer learning based on VGG16, ResNet50, and DenseNet121 for classifying flame, smoke, non-fire, and other objects in the images. Sun et al. [34] proposed a CNN model for smoke classification in forest fires. Their proposed CNN model applied batch normalization and multi-convolution kernels to optimize and improve classification accuracy. In [35], authors introduced the novel dataset for forest fire detection named DeepFire. In this research, authors explored the performance of the dataset on different machine learning algorithms and presented a VGG19-based transfer learning solution. Table 2 details the comparative analysis of classification methods for forest fire detection system.

Table 2.

Comparative analysis of forest fire classification methods.

The above-mentioned approaches for forest fire classification show good results in dealing with the classification problem. The forest fire dataset constraint remains the most significant for deep learning and a key obstacle in resolving the forest fire detection problem. In previous research on wildfire detection, the datasets included images of fires in urban areas, riots, indoor fires, fires in open fields, and industrial fires, among others. These datasets do not exclusively represent forest landscapes. As a result, models trained on these datasets may perform sub-optimally on the real-world challenge of forest fire detection. The main aim of a precise classification for the forest fire detection problem is to reduce the number of false alarms and, at the same time, yield higher accuracy. To solve the above-mentioned issues, we present a deep learning-based forest fire detection method for early warning to avoid major disasters. The proposed research would improve forest fire surveillance and detection to make it more efficient and reliable.

3. Forest Fire Detection System

Forest fire detection is intrinsically challenging due to the difficulty of accessing isolated locations such as woods in the mountains. Furthermore, the environment is volatile, and the atmospheric conditions in such regions are changeable. These aspects have a significant influence on the development of efficient algorithms for early detection of forest fires. Therefore, we propose deep learning-based forest fire classification in smart city applications where AI-capable IoT devices (such as UAVs equipped with cameras) can be used. The surveillance of the forest for any fire event ensures the safety of this natural resource. The captured forest images are fed as inputs to the DNN model, which predicts whether fire is present. In the case of fire, the information can be sent via ad hoc network and cloud to the remote forest fire response center for further action. This paper focuses on forest fire classification for accurate detection using the CNN. We consider the mechanism for communication and information transmission to the remote forest fire response center to be outside the scope of this work.

3.1. Proposed Approach

We propose the CNN-based FFireNet method for the classification of forest fires. CNN-based applications, however, require substantial computing power and data for optimal training and for achieving high detection accuracy. To overcome the dataset and computing power limitations, a deep learning technique known as transfer learning has shown promising results [36]. In transfer learning, the weights of a CNN model pre-trained on a different dataset for classifying numerous objects are frozen, and new fully connected layers are added to learn an entirely new task with a dataset associated with this new task. In this research, the task of classification of forest fires is performed using MobileNetV2 [37] as a backbone network, which was originally trained on the ImageNet dataset [38]. We froze the weights of the convolutional base and added fully connected layers with activation functions.

3.2. MobileNetV2 Model

In contrast to typical residual models, the MobileNetV2 model [37] (a DNN model) is based on an inverted residual architecture, where both the input and the output of the block are narrow bottleneck layers, as depicted in Figure 1. MobileNetV2 has 53 layers and extracts features using lightweight depth-wise convolutions. Furthermore, to retain representational power, non-linearities in the narrow layers are removed, thereby improving network performance.

Figure 1.

MobileNetV2 bottleneck residual blocks.
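To make the "lightweight" property of depth-wise convolutions concrete, the following sketch compares the number of weights in a standard convolution with that of a depth-wise separable convolution (a depth-wise convolution followed by a 1×1 point-wise convolution). The kernel size and channel counts are illustrative assumptions and are not taken from the actual MobileNetV2 configuration; biases are ignored.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard kernel x kernel convolution (no bias)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depth-wise conv plus a 1x1 point-wise conv (no bias)."""
    depthwise = kernel * kernel * c_in  # one spatial filter per input channel
    pointwise = c_in * c_out            # 1x1 conv that mixes the channels
    return depthwise + pointwise


# Hypothetical layer sizes chosen only for illustration.
k, c_in, c_out = 3, 64, 128
std = standard_conv_params(k, c_in, c_out)
sep = depthwise_separable_params(k, c_in, c_out)
ratio = std / sep
print(f"standard: {std}, separable: {sep}, reduction: {ratio:.1f}x")
```

For these assumed sizes, the separable variant needs roughly eight times fewer weights, which is the kind of saving that makes such models attractive for resource-limited devices.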

3.3. FFireNet

For FFireNet, this research used the MobileNetV2 model [37] by freezing its initial weights and adding fully connected layers on top of its convolutional base. As the task considered in this research is new for MobileNetV2, its existing fully connected layers are not suitable for the new task, and the model is not trained from scratch on the newly created local dataset. Instead, this paper reuses the feature extraction knowledge of the model's initial layers, which were trained on the ImageNet dataset. These initial layers learn generic features, whereas the last layers (also known as predictive layers) are task-specific. This work presents the binary classification of forest fire images, which uses fully connected layers for learning specific features and as the classifier, as depicted in Figure 2.

Figure 2.

Working mechanism for forest fire detection.
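The transfer learning setup described in this subsection can be sketched in Keras as follows. This is a minimal illustration under stated assumptions, not the exact FFireNet configuration: the input size (224×224), the global average pooling step, the single hidden layer with 256 units, and the learning rate of 0.01 are all assumed values, and the function name `build_ffirenet_sketch` is hypothetical. Input pixel scaling is omitted for brevity.

```python
import tensorflow as tf


def build_ffirenet_sketch(input_shape=(224, 224, 3), hidden_units=256, weights=None):
    """Frozen MobileNetV2 base with a small trainable classifier on top.

    Pass weights="imagenet" to load the pre-trained weights (requires a
    download); weights=None keeps this sketch runnable offline.
    All sizes here are assumptions, not values from the paper.
    """
    base = tf.keras.applications.MobileNetV2(
        input_shape=input_shape, include_top=False, weights=weights
    )
    base.trainable = False  # freeze the convolutional base

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    x = tf.keras.layers.Dense(hidden_units, activation="relu")(x)
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)  # P(Fire)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=0.01),  # assumed value
        loss="binary_crossentropy",
        metrics=["accuracy"],
    )
    return model


model = build_ffirenet_sketch()
print(model.output_shape)
```

Only the two dense layers are trainable in this sketch, so training updates a small fraction of the total parameters while the convolutional base acts as a fixed feature extractor.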

3.4. Activation Functions

We used the sigmoid and rectified linear unit (ReLU) as activation functions in this study, which optimize the process and add non-linearity to the model. The ReLU ($f(z)$) learns complex features and outputs the elementwise maximum of zero and the input, i.e., $\max(0, z)$. For forest fire classification, the sigmoid function ($\sigma(z)$), which is also called the logistic function, outputs the probability of the prediction as a single value in the range from 0 to 1. The sigmoid ($\sigma$) and ReLU ($f$) functions are given by the following equations:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

$$f(z) = \max(0, z)$$
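As a small illustration of the two activation functions, the following NumPy sketch implements them directly from their standard definitions. The function names are chosen here for illustration only.

```python
import numpy as np


def relu(z):
    """Elementwise maximum of zero and the input."""
    return np.maximum(0.0, z)


def sigmoid(z):
    """Logistic function; maps any real input into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


z = np.array([-2.0, 0.0, 3.0])
relu_out = relu(z)
sigmoid_out = sigmoid(z)
print(relu_out, sigmoid_out)
```

Negative inputs are zeroed by the ReLU, while the sigmoid maps an input of zero to exactly 0.5, which is why 0.5 is a natural decision threshold for a sigmoid output.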

3.5. Optimizer

We used Stochastic Gradient Descent (SGD) as the optimizer to train the proposed MobileNetV2-based transfer learning approach in this study. SGD updates the network parameters so that the training loss converges. In SGD, the gradient of the cost is calculated for only one sample per step, which makes each update fast and addresses the issue of high computation. The SGD update is given by the following equation:

$$\theta = \theta - \eta \cdot \nabla_{\theta} J\left(\theta; x^{(i)}, y^{(i)}\right)$$

where $\theta$ is a parameter, for example, a weight or a bias, $\eta$ denotes the learning rate, $\nabla_{\theta} J$ represents the gradient of the loss function $J$ with respect to $\theta$, and $(x^{(i)}, y^{(i)})$ is a training sample with its label.
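The update rule can be illustrated on a toy problem. The sketch below is not the training code of this work; it fits a single weight to hypothetical data generated from y = 3x using a squared-error loss (an assumption made only to keep the gradient easy to read), with one sample per update step.

```python
import numpy as np


def sgd_step(theta, grad, lr):
    """One SGD update: move the parameter against the gradient."""
    return theta - lr * grad


# Hypothetical toy data: targets follow y = 3 * x exactly.
rng = np.random.default_rng(0)
xs = rng.uniform(-1.0, 1.0, size=200)
ys = 3.0 * xs

w = 0.0  # parameter to learn
for x, y in zip(xs, ys):
    grad = 2.0 * (w * x - y) * x  # d/dw of the squared error (w*x - y)^2
    w = sgd_step(w, grad, lr=0.1)

print(f"learned weight: {w:.4f}")
```

Each step uses the gradient from a single sample, so the per-step cost is independent of the dataset size, at the price of a noisier path toward the minimum.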

3.6. Loss Function

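In the proposed approach, the binary cross entropy loss function is used for binary classification. It is applied to the output of the sigmoid function; hence, it is also known as sigmoid cross entropy. The following equation is the binary cross entropy loss function:

$$L = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log p(y_i) + (1 - y_i) \log\left(1 - p(y_i)\right) \right]$$

where $y_i$ represents the label of the $i$-th image, i.e., 1 denotes the Fire class and 0 denotes the No-Fire class, $p(y_i)$ is the predicted probability that the image belongs to the Fire class, and $N$ is the number of images.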
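A minimal NumPy sketch of the binary cross entropy is given below to show how the loss behaves. The clipping constant `eps` is an implementation detail assumed here to avoid taking the logarithm of zero, and the example predictions are hypothetical.

```python
import numpy as np


def binary_cross_entropy(y_true, p_pred, eps=1e-7):
    """Mean binary cross entropy; p_pred is the predicted P(Fire)."""
    y = np.asarray(y_true, dtype=float)
    p = np.clip(np.asarray(p_pred, dtype=float), eps, 1.0 - eps)
    losses = -(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    return float(np.mean(losses))


labels = [1, 0]  # one Fire image, one No-Fire image
confident = binary_cross_entropy(labels, [0.9, 0.1])
uncertain = binary_cross_entropy(labels, [0.6, 0.4])
chance = binary_cross_entropy(labels, [0.5, 0.5])
print(confident, uncertain, chance)
```

Confident correct predictions give a lower loss than hesitant ones, and predicting 0.5 for every image yields a loss of ln 2.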

4. Dataset

The datasets in the existing literature are related to wildfire and contain varied images, ranging from bush fires and wildfires to urban environments. As this research focuses on forest fire, we considered our own forest fire dataset, which also facilitates future work in proposing new methodologies. The dataset can be accessed at [39].

4.1. Pre-Processing on Dataset

The dataset consists of images with multiple angles of view. This allows the trained model to better discriminate between forest fire and no-fire. The dataset enables the model to detect the forest fire in the following different ways: (1) by spotting the fire flames or (2) fire flames with smoke. At this stage, we just considered these criteria for fire images in the dataset and equally divided the dataset with respect to the number of fire (1) and no-fire (0) images. The data classification is as follows:

Fire (1): Forest and mountain fire images with fire flames or/and smoke.

No-Fire (0): Forest and mountain images with different angles with no fire or smoke.

The aim of this criterion is to train the model with a variety of images and avoid confusion between interrelated scenes, for instance, the sunset in mountain and forest areas.

For optimal model training and improved outcomes, the dataset must be refined. As a result, we searched through the dataset and implemented the necessary pre-processing, such as cropping images so that only the content related to the targeted problem remained, for example, a picture depicting fire on a mountain or in a forest. We scaled all the images to a uniform resolution after cropping. These pre-processing steps allowed the model to easily and quickly learn information about forest fires. Figure 3 depicts representative images from both classes in the forest fire dataset.

Figure 3.

Images from (a,b) Fire class and (c,d) No-Fire class.

4.2. Dataset Distribution

The dataset has a symmetric distribution, where 950 images belong to the Fire class, and the remaining 950 images belong to the No-Fire class. We used 80% of the data for training and 20% for testing. Further, the training data were split 80:20, where 20% of the training data was used for validation. The data partitioning used for training and testing is shown in Table 3.

Table 3.

Dataset partitioning.

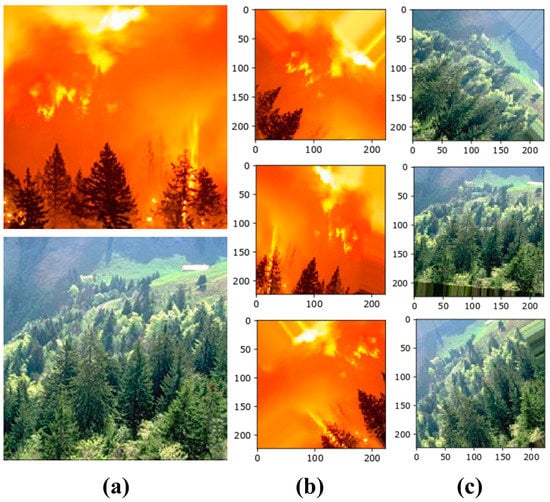
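The partition sizes implied by these percentages can be checked with a few lines of arithmetic. The sketch below only applies the stated 80:20 splits to the 1900 images; the helper name `split_counts` is hypothetical, and the exact per-class counts should be taken from Table 3.

```python
def split_counts(total, test_percent=20, val_percent=20):
    """Return (train, validation, test) sizes for nested percentage splits."""
    test = total * test_percent // 100
    train_val = total - test
    val = train_val * val_percent // 100
    train = train_val - val
    return train, val, test


per_class = 950
train, val, test = split_counts(2 * per_class)
print(f"train={train}, validation={val}, test={test}")
```

Under these assumptions the test set contains 380 images, which agrees with the number of test predictions reported in the results section.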

4.3. Augmentation of Data

The forest fire dataset has diverse images. However, the trained model may still fail to generalize to new and unseen data because each kind of scene is represented by a limited number of images. To obtain a better representation of diverse images, we performed augmentation on the training dataset to transform images by resizing, flipping, shifting, zooming, etc. First, the images of both classes were resized to an input image size accepted by the MobileNetV2 model. The augmentations performed on the dataset are given in Table 4. Some sample images of the augmentations are illustrated in Figure 4.

Table 4.

Data augmentation.

Figure 4.

(a) Resized original images of both classes. Data augmentations on (b) Fire images and (c) No-Fire images.
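Two of the listed transformations, flipping and shifting, can be sketched with plain NumPy as follows. This is an illustration of the idea only: the function names, the shift amount, and the zero-padding choice are assumptions, and the actual augmentation settings are those listed in Table 4.

```python
import numpy as np


def horizontal_flip(img):
    """Mirror an image of shape (height, width, channels) left to right."""
    return img[:, ::-1, :]


def shift_right(img, pixels):
    """Shift an image to the right, filling the exposed columns with zeros."""
    out = np.zeros_like(img)
    if pixels > 0:
        out[:, pixels:, :] = img[:, :-pixels, :]
    else:
        out[:] = img
    return out


# Tiny hypothetical "image": 2 rows, 3 columns, 1 channel.
img = np.arange(6).reshape(2, 3, 1)
flipped = horizontal_flip(img)
shifted = shift_right(img, 1)
print(flipped[..., 0])
print(shifted[..., 0])
```

Both operations keep the image size and the class label unchanged, so each augmented copy can be added to the training data as another example of the same class.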

5. Results and Discussion

In this section, we present the performance of the FFireNet method for the classification of the forest fire dataset. We evaluated the performance of the proposed approach on the forest fire dataset and compared it with NASNetMobile [40], Xception [41], InceptionV3 [42], and ResNet152V2 [43]. The environment for simulations was Anaconda Python 3.7 with Keras libraries. The simulation system configuration was a Dell i5-1135G7, 12GB DDR4, Intel Iris Xe 6GB. The following subsection details the performance analysis of this research.

5.1. Hyper-Parameter Selection

We used Stochastic Gradient Descent (SGD) for optimization and binary cross-entropy loss function for training the model. We used empirical testing to obtain the best values for each hyper-parameter. For the initial learning rate, we analyzed 20 different values between 0.00001 and 0.1. Similarly, we tested the model with different batch sizes of 16, 32, 64, and 100. Table 5 lists the hyper-parameters used for training purposes in the simulation after extensive testing.

Table 5.

Simulation parameters.
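The search space described above can be written down as follows. The text states only that 20 learning rates between 0.00001 and 0.1 were analyzed; the logarithmic spacing and the pairing of every learning rate with every batch size are assumptions made for this sketch.

```python
import numpy as np

# 20 candidate learning rates between 1e-5 and 1e-1 (log spacing is assumed).
learning_rates = np.logspace(-5, -1, num=20)

# Batch sizes tested in this study.
batch_sizes = [16, 32, 64, 100]

# Hypothetical full grid of (learning rate, batch size) candidates.
grid = [(float(lr), bs) for lr in learning_rates for bs in batch_sizes]
print(len(grid), grid[0], grid[-1])
```

Logarithmic spacing is a common choice for learning rates because useful values typically span several orders of magnitude.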

5.2. Performance Analysis of the Proposed Approach

The performance of the proposed solution in terms of correct identification of forest fires was evaluated using various metrics such as prediction accuracy, error rate, false negative rate, false positive rate, true negative rate, recall, precision, false discovery rate, and F1 score.

5.2.1. Training Performance Analysis of Proposed Approach

The training phase of the FFireNet model was carried out through 50 epochs with a total of 5000 iterations. It can be noted from the loss curve in Figure 5 that the training loss started with 28.73% at the first epoch but the loss continued to decrease, achieving the minimum loss of 1.02% in the final epoch. Similarly, the validation loss started with 8.83% at the first epoch and achieved the minimum loss of 0.21% in the final epoch.

Figure 5.

Training performance of the proposed approach on the forest fire dataset.

5.2.2. Prediction Accuracy and Error Rate

The prediction accuracy and error rate measure the model's performance, that is, how well the method classified the considered problem. These two metrics are complementary: they sum to 1, so a higher accuracy implies a lower error rate. The goal of every DNN-based method is to have higher prediction accuracy and minimum error rate. The following equations give the prediction accuracy and error rate:

$$ACC = \frac{TP + TN}{TP + TN + FP + FN}$$

$$ER = \frac{FP + FN}{TP + TN + FP + FN}$$

$TN$ is the true negative (accurately classified No-Fire images), and $TP$ is the true positive (accurately classified Fire images by the method). False positive ($FP$) is an image of the No-Fire class classified as Fire, and false negative ($FN$) is the contrary of $FP$, i.e., a Fire image classified as No-Fire. $ER$ represents the error rate, which is the proportion of images that the model classified incorrectly.

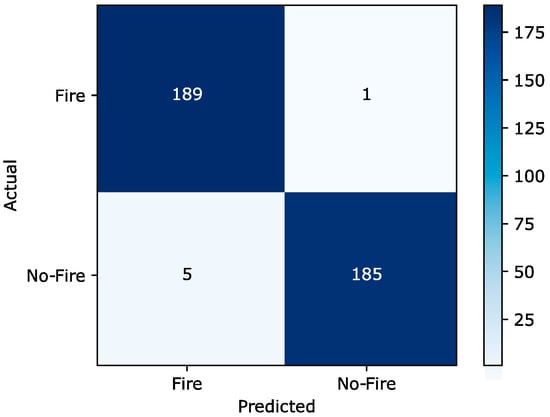

The confusion matrix is used to give predictive analysis of the forest fire classification, which provides a clear understanding of the proposed method in situations when accuracy alone can be vague. The confusion matrix of the proposed FFireNet approach is illustrated in Figure 6. The large diagonal values and small off-diagonal values of the confusion matrix indicate that the proposed method shows good results for the problem of forest fire classification. The proposed method has an accuracy of 98.42% and an error rate of 1.58%, with 185 true negatives and 189 true positives, whereas the numbers of false negatives and false positives were 1 and 5, respectively, as depicted in Table 6.

Figure 6.

Confusion matrix of the proposed approach on the test forest fire dataset.

Table 6.

Accuracy and error rate of the proposed approach on the forest fire dataset.
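The reported accuracy and error rate follow directly from the confusion matrix counts given above. The short sketch below recomputes them; the variable names are chosen here for illustration.

```python
# Confusion matrix counts reported for the test set.
TP, TN, FP, FN = 189, 185, 5, 1

total = TP + TN + FP + FN
accuracy = (TP + TN) / total
error_rate = (FP + FN) / total

print(f"total={total}, accuracy={accuracy:.2%}, error rate={error_rate:.2%}")
```

The two values sum to 1, since every test image is either classified correctly or incorrectly.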

5.2.3. True and False Rates

Other metrics used to evaluate how well the method classified the images are the False Positive Rate ($FPR$), False Negative Rate ($FNR$), True Positive Rate ($TPR$), and True Negative Rate ($TNR$). The following equations give these metrics:

$$FPR = \frac{FP}{FP + TN}, \quad FNR = \frac{FN}{FN + TP}$$

$$TPR = \frac{TP}{TP + FN}, \quad TNR = \frac{TN}{TN + FP}$$

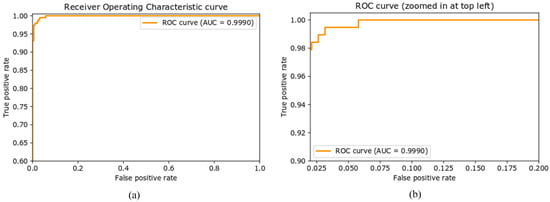

The proposed method has $TNR$ and $TPR$ of 97.37% and 99.47%, respectively, and 0.53% $FNR$ and 2.63% $FPR$, as shown in Table 7. The Receiver Operating Characteristic (ROC) curve is a graphical representation that shows the prediction ability of a binary classifier as the prediction threshold is varied. The ROC curve is obtained by plotting $TPR$ (also known as sensitivity or recall) against $FPR$. The Area Under the Curve (AUC) is a metric for class separability that indicates how effectively a model can discriminate between the classes. The higher the AUC score, the better the model's performance at properly predicting classes. Figure 7 illustrates the ROC curve of the proposed method. The AUC value of 0.9990 indicates that the model has a 99.90% chance of assigning a higher fire probability to a randomly chosen Fire image than to a randomly chosen No-Fire image.

Table 7.

True and false rates of the proposed approach on the forest fire dataset.
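The four rates can be recomputed from the confusion matrix counts reported for the test set (189 true positives, 185 true negatives, 5 false positives, and 1 false negative). The helper name `rates` is hypothetical.

```python
def rates(tp, tn, fp, fn):
    """True and false rates computed from confusion matrix counts."""
    return {
        "TPR": tp / (tp + fn),  # share of Fire images detected
        "TNR": tn / (tn + fp),  # share of No-Fire images recognized
        "FPR": fp / (fp + tn),  # share of No-Fire images raised as Fire
        "FNR": fn / (fn + tp),  # share of Fire images missed
    }


result = rates(tp=189, tn=185, fp=5, fn=1)
for name, value in result.items():
    print(f"{name}: {value:.2%}")
```

Note that the rates pair up: TPR and FNR sum to 1 over the Fire images, and TNR and FPR sum to 1 over the No-Fire images.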

Figure 7.

(a) ROC curve and (b) scaled representation.
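The AUC can also be read as a ranking probability: the chance that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes the AUC in exactly this pairwise way on a handful of hypothetical scores; it is meant to illustrate the interpretation, not to reproduce the reported value.

```python
import numpy as np


def auc_by_pairs(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a pair.
    """
    y = np.asarray(y_true)
    s = np.asarray(scores, dtype=float)
    pos, neg = s[y == 1], s[y == 0]
    diff = pos[:, None] - neg[None, :]  # every positive against every negative
    correct = np.sum(diff > 0) + 0.5 * np.sum(diff == 0)
    return float(correct / (pos.size * neg.size))


# Hypothetical labels (1 = Fire) and predicted fire probabilities.
y_demo = [1, 1, 1, 0, 0, 0]
s_demo = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
auc_demo = auc_by_pairs(y_demo, s_demo)
print(f"AUC = {auc_demo:.3f}")
```

In this toy example, eight of the nine positive and negative pairs are ordered correctly; the only mistake is the Fire image scored 0.4 falling below the No-Fire image scored 0.7.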

5.2.4. Precision and Recall

Precision ($P$) refers to the ratio of correct positive predictions to all positive predictions made by the model, whereas recall ($R$) refers to the ratio of correct positive predictions to all relevant samples that should have been classified as positive. Other metrics related to $P$ and $R$ include $FDR$ (false discovery rate) and $F1$ (harmonic mean of recall and precision), which demonstrate how well the classifier predicts correctly. The equations of these metrics are:

$$P = \frac{TP}{TP + FP}, \quad R = \frac{TP}{TP + FN}$$

$$FDR = \frac{FP}{FP + TP}, \quad F1 = \frac{2 \cdot P \cdot R}{P + R}$$

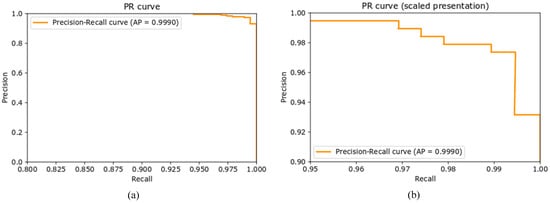

The proposed method achieved 97.42% precision ($P$) and 99.47% recall ($R$), with 2.58% $FDR$ and 98.43% $F1$ score, as shown in Table 8. Another means of graphical representation for evaluating the performance of the classification method is the precision–recall curve (PR curve), where recall is on the x-axis and precision on the y-axis. Figure 8 depicts the PR curve for the proposed method on the forest fire dataset. The curve can be observed to be close to the top right corner, indicating that the model performs well in predicting the classes. The proposed approach has 99.90% average precision (AP) for the classification of the forest fire dataset.

Table 8.

Precision and recall of the proposed approach for the forest fire dataset.
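These metrics can likewise be recomputed from the reported test counts (189 true positives, 5 false positives, and 1 false negative). The helper name below is hypothetical.

```python
def precision_recall_metrics(tp, fp, fn):
    """Precision, recall, false discovery rate, and F1 from counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    fdr = fp / (fp + tp)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, fdr, f1


precision, recall, fdr, f1 = precision_recall_metrics(tp=189, fp=5, fn=1)
print(f"P={precision:.4f}, R={recall:.4f}, FDR={fdr:.4f}, F1={f1:.4f}")
```

Precision and the false discovery rate sum to 1, so the five false positives account for both the 2.58% FDR and the gap between precision and recall.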

Figure 8.

(a) PR Curve and (b) scaled representation.

5.2.5. True Classifications

This subsection presents accurate predictions of both negative and positive classes. Figure 9 illustrates some images of the true negative and positive classifications. The proposed FFireNet approach showed good performance as compared to previously proposed work in [35] for classifying the forest fire dataset, with 374 out of 380 true predictions.

Figure 9.

True classifications: (a) positives and (b) negatives.

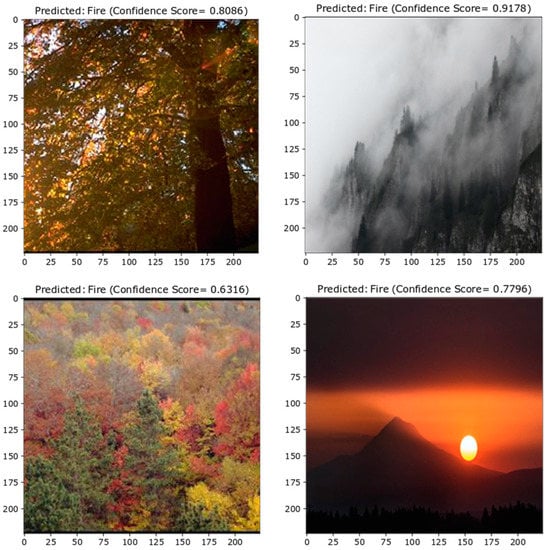

5.2.6. False Classifications

It is essential to minimize the false classifications in the forest fire detection problem. The false alarm percentage is crucial for forest fire detection as it weighs on the method's applicability and reliability in the real world. The classification threshold of the proposed approach is 0.5, which means that an image is assigned the label 'Fire' if its predicted probability is greater than 0.5, and is otherwise assigned 'No-Fire'. Figure 10 shows randomly selected cases of false positives ($FP$). The reason for the misclassification of No-Fire images ($FP$) may be that the color of sunlight is similar to that of fire and the proposed approach mistakenly interpreted it as a Fire image. In addition, the similar manifestations of sunset on the mountain top and mountain fire also confused the proposed approach. Moreover, the autumn leaves of the trees also looked similar to fire. Dense fog around the mountains was interpreted as fire smoke, resulting in false positives. It is pertinent to note that we observed only five false positive cases. These are perhaps due to the lack or insufficient representation of such regions from various angles in the training data, resulting in images without fire being incorrectly labeled as fire images. However, it is interesting to note that the confidence score of the model for the false classifications is less than 90% in most of the cases. In other words, these can be considered near misses where the model was not fully sure of its predictions.

Figure 10.

False positives (ground truth = No-Fire).

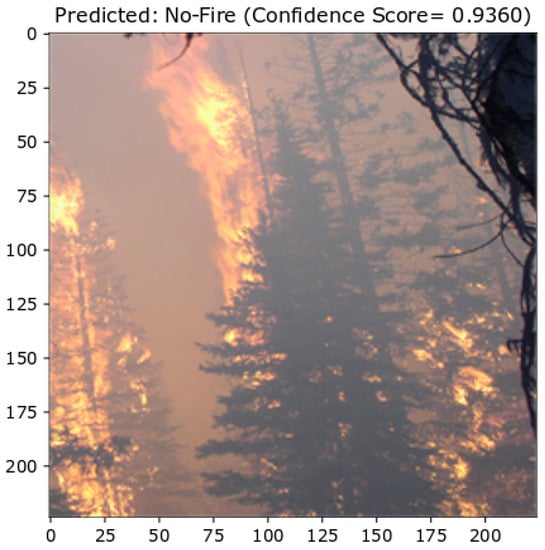
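The thresholding rule described above can be sketched as follows. The probabilities in the example are hypothetical, and the function name `classify` is chosen for illustration.

```python
import numpy as np


def classify(probabilities, threshold=0.5):
    """Label each predicted fire probability as 'Fire' or 'No-Fire'."""
    p = np.asarray(probabilities, dtype=float)
    # Strictly greater than the threshold means 'Fire', as stated in the text.
    return np.where(p > threshold, "Fire", "No-Fire").tolist()


# Hypothetical sigmoid outputs for three images.
labels = classify([0.20, 0.50, 0.87])
print(labels)
```

Raising the threshold would trade false positives for false negatives, which matters here because a missed fire is usually more costly than a false alarm.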

We observed similar issues for the falsely classified Fire image ($FN$), as depicted in Figure 11. It can be noted that the proposed approach has only one $FN$ image. The inability of the proposed approach to recognize a fire instance, in this case, can be inferred as the major reason for the false negative. There are several possible explanations for why the model missed the fire in this case. Firstly, the fire seems to be in the background of the image, a setting which might have either low or no representation in the training dataset. Secondly, the color of the fire in the image might have been interpreted as the background of the tree branches. According to our hypothesis, this false classification of the fire image might also be due to the image quality, which is important in computer vision. The local dataset was created by obtaining images from multiple search engines, some of which had extremely poor spatial resolution. Moreover, resizing such images to a standard size in order to match the input layer specification of the network further deteriorated the image quality. We also observed this during hyper-parameter selection, where a change in the size of the images significantly affected the training accuracy.

Figure 11.

False negative (ground truth = Fire).

This problem can be addressed in the future by improving the dataset in terms of spatial resolution and the number of images in each category. Moreover, the re-training approach itself may be a constraint, as training only the last layers was not sufficient to learn an accurate classification of this kind of instance. The solution to this problem can be further explored by fully training the model with a dataset having better spatial resolution and sizeable representation of similar image settings. Nonetheless, despite the dataset limitations, the proposed approach achieved good results with only six falsely classified images.

5.3. Performance Comparison with Other CNN Models

This subsection compares the performance of the proposed approach on our forest fire dataset with that of other CNN models: NASNetMobile [40], Xception [41], InceptionV3 [42], and ResNet152V2 [43]. We used the transfer learning approach to train these models on the forest fire dataset. This was achieved by freezing the pre-trained weights of the convolutional base in these models and adding a classifier on top. As we consider binary classification for forest fire recognition, we employed a sigmoid classifier, which yields a single output indicating Fire or No-Fire. The hyper-parameters used for training these models are listed in Table 5. Table 9 shows that the proposed approach performs better on the newly created forest fire dataset than the other considered CNN models. Among the compared models, NASNetMobile achieves the highest classification accuracy, followed by InceptionV3 and ResNet152V2, which share the same classification accuracy. Xception showed the lowest accuracy of all the models. The table also shows that ResNet152V2 has the same recall as the proposed method, perhaps because the MobileNetV2 backbone also contains residual blocks.

Table 9.

Comparative analysis of proposed approach with other CNN models.
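The transfer-learning setup described in this subsection (a frozen pre-trained convolutional base followed by a sigmoid classifier) could be sketched in Keras as follows. This is a minimal sketch, not the authors' code: the helper name `build_frozen_classifier`, the 224×224 input size, the global-average-pooling layer, and the Adam optimizer are assumptions, since the actual hyper-parameters are those listed in Table 5. Model-specific input preprocessing is omitted for brevity.

```python
import tensorflow as tf

# Backbones compared in this subsection, as exposed by tf.keras.applications.
BACKBONES = {
    "NASNetMobile": tf.keras.applications.NASNetMobile,
    "Xception": tf.keras.applications.Xception,
    "InceptionV3": tf.keras.applications.InceptionV3,
    "ResNet152V2": tf.keras.applications.ResNet152V2,
}


def build_frozen_classifier(name, weights="imagenet", input_shape=(224, 224, 3)):
    """Build a binary Fire/No-Fire classifier on a frozen convolutional base."""
    base = BACKBONES[name](include_top=False, weights=weights, input_shape=input_shape)
    base.trainable = False  # freeze the pre-trained convolutional weights

    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # keep batch-norm layers in inference mode
    x = tf.keras.layers.GlobalAveragePooling2D()(x)  # assumed pooling choice
    outputs = tf.keras.layers.Dense(1, activation="sigmoid")(x)  # single sigmoid output

    model = tf.keras.Model(inputs, outputs)
    # Assumed optimizer; binary cross-entropy matches the single sigmoid output.
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

Calling, for example, `build_frozen_classifier("NASNetMobile")` downloads the ImageNet weights on first use. Because the base is frozen, only the final dense layer is updated during training, and a sigmoid output above 0.5 can be read as one class and below 0.5 as the other.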

5.4. Performance Comparison with Other Research Works in the Literature

This subsection compares the proposed approach, evaluated on the forest fire dataset designed for the forest fire detection problem, with previous forest fire classification works in the literature, each evaluated on its own local dataset. For comparison, we considered classification works published in 2020 and 2021, as well as our previous work. Table 10 presents the comparative analysis of the methods. Our previous method achieved 95.00% accuracy on our forest fire detection dataset, whereas the method of Sun et al. achieved an accuracy of 94.1% on their own dataset. The proposed approach on the forest fire dataset outperforms the other methods and our previous method on the considered performance metrics. The method of Park et al., however, which uses DenseNet121, achieved the highest precision of 99.1% on their local dataset, slightly higher than that of the proposed approach. The reason for this edge is that the proposed approach produced a greater number of false positives, which lowers its precision.

Table 10.

Comparative analysis of proposed approach with other classification research works.

6. Conclusions

This research focused on a deep learning-based forest fire detection method for early warning. Forest fires have recently become a serious issue due to natural and man-made climate effects. We presented an artificial intelligence-based method for the early detection of forest fires to help avoid major disasters. This research reviewed vision-based forest fire localization and classification methods in detail. Furthermore, this work made use of the forest fire detection dataset, which poses the classification problem of discriminating Fire and No-Fire images. This research proposed a deep learning method named FFireNet, which leverages the pre-trained convolutional base of the MobileNetV2 model and adds fully connected layers to solve the new task of classifying forest fires. The performance of the proposed approach for classifying the Fire and No-Fire classes was evaluated using different performance metrics and compared with other CNN models. The proposed approach achieved 98.42% accuracy, 1.58% error rate, 99.47% recall, and 97.42% precision in classifying the Fire and No-Fire images. The proposed approach outperformed other CNN models such as InceptionV3, Xception, NASNetMobile, and ResNet152V2. The results of the proposed approach were also compared with previous works on the forest fire classification task and showed better performance in terms of the considered performance metrics on the newly curated forest fire dataset. The outcomes of the proposed approach are promising for the classification problem considering the new and diverse forest fire detection dataset. Future work will increase the spatial resolution of the images in the forest fire detection dataset. Moreover, a CNN-based image segmentation approach will be proposed to further reduce the rate of false alarms for the forest fire detection problem.

Author Contributions

Conceptualization, S.K. and A.K.; methodology, S.K.; software, S.K. and A.K.; validation, S.K. and A.K.; formal analysis, S.K. and A.K.; investigation, S.K. and A.K.; data curation, A.K.; writing—original draft preparation, S.K. and A.K.; writing—review and editing, S.K. and A.K.; funding acquisition, A.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Zhejiang Normal University Research Fund under Grant ZC304021938.

Data Availability

The dataset can be accessed at: https://www.kaggle.com/datasets/alik05/forest-fire-dataset (accessed on: 15 April 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zanchi, G.; Yu, L.; Akselsson, C.; Bishop, K.; Köhler, S.; Olofsson, J.; Belyazid, S. Simulation of water and chemical transport of chloride from the forest ecosystem to the stream. Environ. Model. Softw. 2021, 138, 104984.

- Bo, M.; Mercalli, L.; Pognant, F.; Cat Berro, D.; Clerico, M. Urban air pollution, climate change and wildfires: The case study of an extended forest fire episode in northern Italy favoured by drought and warm weather conditions. In Proceedings of the 7th International Conference on Energy and Environment Research, Jawa Tengah, Indonesia, 14–17 September 2020; de Sá Caetano, N., Salvini, C., Giovannelli, A., Felgueiras, C., Eds.; Energy Reports. 2020; pp. 781–786.

- Vardoulakis, S.; Marks, G.; Abramson, M.J. Lessons learned from the Australian bushfires: Climate change, air pollution, and public health. JAMA Intern. Med. 2020, 180, 635–636.

- How Different Tree Species Impact the Spread of Wildfire. Available online: https://open.alberta.ca/dataset/how-different-tree-species-impact-the-spread-of-wildfire (accessed on 20 August 2021).

- Maxouris, C. Here’s Just How Bad the Devastating Australian Fires Are–by the Numbers. Available online: https://edition.cnn.com/2020/01/06/us/australian-fires-by-the-numbers-trnd/index.html (accessed on 20 August 2021).

- State of California. Thomas Fire Incident Information. Available online: https://fire.ca.gov/incident/?incident=d28bc34e-73a8-454d-9e55-dea7bdd40bee (accessed on 20 August 2021).

- Rodrigues, M.; Gelabert, P.J.; Ameztegui, A.; Coll, L.; Vega-Garcia, C. Has COVID-19 halted winter-spring wildfires in the Mediterranean? Insights for wildfire science under a pandemic context. Sci. Total Environ. 2021, 765, 142793.

- Kountouris, Y. Human activity, daylight saving time and wildfire occurrence. Sci. Total Environ. 2020, 727, 138044.

- Kim, T.; Ramos, C.; Mohammed, S. Smart city and IoT. Future Gener. Comput. Syst. 2017, 76, 159–162.

- Hsiao, Y.C.; Wu, M.H.; Li, S.C. Elevated Performance of the Smart City—A Case Study of the IoT by Innovation Mode. IEEE Trans. Eng. Manag. 2021, 68, 1461–1475.

- Khan, A.I.; Al-Habsi, S. Machine Learning in Computer Vision. Procedia Comput. Sci. 2020, 167, 1444–1451.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Khan, A.; Khan, S.; Hassan, B.; Zheng, Z. CNN-Based Smoker Classification and Detection in Smart City Application. Sensors 2022, 22, 892.

- Khan, S.; Teng, Y.; Cui, J. Pedestrian Traffic Lights Classification Using Transfer Learning in Smart City Application. In Proceedings of the IEEE 13th International Conference on Communication Software and Networks, Chongqing, China, 4–7 June 2021; pp. 352–356.

- Bu, F.; Gharajeh, M.S. Intelligent and vision-based fire detection systems: A survey. Image Vis. Comput. 2019, 91, 103803.

- Bouguettaya, A.; Zarzour, H.; Taberkit, A.M.; Kechida, A. A review on early wildfire detection from unmanned aerial vehicles using deep learning-based computer vision algorithms. Sign. Proc. 2022, 190, 108309.

- Zhang, Q.X.; Lin, G.H.; Zhang, Y.M.; Xu, G.; Wang, J.J. Wildland Forest Fire Smoke Detection Based on Faster R-CNN using Synthetic Smoke Images. Process Eng. 2019, 211, 441–446.

- Hossain, F.M.A.; Zhang, Y.M.; Tonima, M.A. Forest fire flame and smoke detection from UAV-captured images using fire-specific color features and multi-color space local binary pattern. J. Unmanned Veh. Syst. 2020, 8, 285–309.

- Jeong, M.; Park, M.; Nam, J.; Ko, B.C. Light-Weight Student LSTM for Real-Time Wildfire Smoke Detection. Sensors 2020, 20, 5508.

- Srinivas, K.; Dua, M. Fog Computing and Deep CNN Based Efficient Approach to Early Forest Fire Detection with Unmanned Aerial Vehicles. In Proceedings of the International Conference on Inventive Computation Technologies (ICICIT), Tamil Nadu, India, 29–30 August 2019; Smys, S., Bestak, R., Rocha, A., Eds.; Springer Nature: Chennai, India, 2019; pp. 646–652.

- Alexandrov, D.; Pertseva, E.; Berman, I.; Pantiukhin, I.; Kapitonov, A. Analysis of Machine Learning Methods for Wildfire Security Monitoring with an Unmanned Aerial Vehicles. In Proceedings of the 24th Conference of Open Innovations Association FRUCT (Finnish-Russian University Cooperation in Telecommunications), Moscow, Russia, 8–12 April 2019; pp. 3–9.

- Zhang, Q.; Xu, J.; Xu, L.; Guo, H. Deep Convolutional Neural Networks for Forest Fire Detection. In Proceedings of the International Forum on Management, Education and Information Technology Application, Guangzhou, China, 30–31 January 2016; Kim, Y.H., Ed.; Atlantis Press: Amsterdam, The Netherlands, 2016; pp. 568–575.

- Jiao, Z.; Zhang, Y.; Mu, L.; Xin, J.; Jiao, S.; Liu, H.; Liu, D. A YOLOv3-based Learning Strategy for Real-time UAV-based Forest Fire Detection. In Proceedings of the Chinese Control and Decision Conference (CCDC), Hefei, China, 22–24 August 2020; IEEE: New York, NY, USA, 2020; pp. 4963–4967.

- Li, W.; Yu, Z. A Lightweight Convolutional Neural Network Flame Detection Algorithm. In Proceedings of the IEEE International Conference on Electronics Information and Emergency Communication (ICEIEC), Beijing, China, 18–20 June 2021; IEEE: New York, NY, USA, 2021; pp. 83–86.

- Lee, W.; Kim, S.; Lee, Y.T.; Lee, H.W.; Choi, M. Deep neural networks for wild fire detection with unmanned aerial vehicle. In Proceedings of the IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 5–10 January 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 252–253.

- Kaabi, R.; Sayadi, M.; Bouchouicha, M.; Fnaiech, F.; Moreau, E.; Ginoux, J.M. Early smoke detection of forest wildfire video using deep belief network. In Proceedings of the International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Sousse, Tunisia, 21–24 March 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–6.

- Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency Detection and Deep Learning-Based Wildfire Identification in UAV Imagery. Sensors 2018, 18, 712.

- Chen, Y.; Zhang, Y.; Xin, J.; Wang, G.; Mu, L.; Yi, Y.; Liu, H.; Liu, D. UAV Image-based Forest Fire Detection Approach Using Convolutional Neural Network. In Proceedings of the IEEE Conference on Industrial Electronics and Applications (ICIEA), Xi’an, China, 19–21 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 2118–2123.

- Cao, Y.; Yang, F.; Tang, Q.; Lu, X. An Attention Enhanced Bidirectional LSTM for Early Forest Fire Smoke Recognition. IEEE Access 2019, 7, 154732–154742.

- Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2019, 142, 112975.

- Govil, K.; Welch, M.L.; Ball, J.T.; Pennypacker, C.R. Preliminary Results from a Wildfire Detection System Using Deep Learning on Remote Camera Images. Remote Sens. 2020, 12, 166.

- Tang, Y.; Feng, H.; Chen, J.; Chen, Y. ForestResNet: A Deep Learning Algorithm for Forest Image Classification. J. Physics Conf. Ser. 2021, 2024, 012053.

- Park, M.; Tran, D.Q.; Lee, S.; Park, S. Multilabel Image Classification with Deep Transfer Learning for Decision Support on Wildfire Response. Remote Sens. 2021, 13, 3985.

- Sun, X.; Sun, L.; Huang, Y. Forest fire smoke recognition based on convolutional neural network. J. For. Res. 2020, 32, 1921–1927.

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A Novel Dataset and Deep Transfer Learning Benchmark for Forest Fire Detection. Mob. Inf. Syst. 2022, 2022, 1–14.

- Mihalkova, L.; Mooney, R. Transfer learning from minimal target data by mapping across relational domains. In Proceedings of the International Joint Conference on Artificial Intelligence (IJCAI), Pasadena, CA, USA, 11–17 July 2009; AAAI: Palo Alto, CA, USA, 2009; pp. 1163–1168.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE Computer Society, Conference Publishing Services: Los Alamitos, CA, USA, 2018; pp. 4510–4520.

- Deng, J.; Dong, W.; Socher, R.; Li, L.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 248–255.

- Forest Fire Dataset. Available online: https://www.kaggle.com/datasets/alik05/forest-fire-dataset (accessed on 15 April 2022).

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. arXiv 2017, arXiv:1707.07012.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA; pp. 1800–1807.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity Mappings in Deep Residual Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: New York, NY, USA; pp. 630–645.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).