Abstract

This article deals with asymmetric spatial data that can be modeled by a partially linear varying coefficient spatial autoregressive panel model (PLVCSARPM) with random effects. We construct its profile quasi-maximum likelihood estimators (PQMLE) and prove their consistency and asymptotic normality under some regularity conditions. Monte Carlo simulations indicate that the estimators have good finite sample performance. Finally, a set of asymmetric real data is analyzed to illustrate the performance of the proposed method.

1. Introduction

Spatial regression models are used to deal with spatially dependent data, which exist widely in many fields, such as economics, environmental science and geography. According to the type of spatial interaction effects, spatial regression models can be sorted into three basic categories (see [1]). The first category is spatial autoregressive (SAR) models, which include endogenous interaction effects among observations of response variables at different spatial scales (see [2]). The second category is spatial Durbin models (SDM), which include exogenous interaction effects among observations of covariates and endogenous interaction effects among observations of response variables at different spatial scales (see [3,4]). The third category is spatial error models (SEM), which include interaction effects among disturbance terms of different spatial scales (see [5]). Among them, the SAR models proposed by [6] may be the most popular. The developments in the testing and estimation of SAR models for cross-sectional data were summarized in the books by [7,8,9] and the surveys by [10,11,12,13,14,15,16], among others. Compared to cross-sectional data models, panel data models have an excellent capacity for capturing complex situations by using abundant datasets built up over time and adding individual-specific or time-specific fixed or random effects. Their theories, methods and applications can be found in the books by [17,18,19,20] and the surveys by [21,22,23,24,25,26], among others.

The above-mentioned literature mainly focuses on linear parametric models. Although the estimation and properties of these models are well established, they are often unrealistic in applications because they lack the flexibility to accommodate complex structures (e.g., nonlinearity). Moreover, mis-specification of the data generation mechanism by a linear parametric model could lead to excessive modeling biases or even erroneous conclusions.

Ref. [27] pointed out that the relationship between variables in space usually exhibits high complexity in reality. Therefore, researchers have proposed a number of solutions. In video coding systems, transmission problems are usually dealt with by wavelet-based methods. Ref. [28] proposed a low-band-shift method which avoids the shift-variant property of the wavelet transform and performs the motion compensation more precisely and efficiently. Ref. [29] obtained a wavelet-based lossless video coding scheme that switches between two operations based on the different amount of motion activity between two consecutive frames. More theories and applications can be found in [30,31,32]. In econometrics, some nonparametric and semiparametric spatial regression models have been developed to relax the linear parametric model settings. For cross-sectional spatial data, ref. [33] studied the GMM estimation of a nonparametric SAR model; ref. [34] investigated the PQMLE of a partially linear nonparametric SAR model. However, such nonparametric SAR models may suffer from the “curse of dimensionality” when the dimension of covariates is high. In order to overcome this drawback, ref. [35] studied two-step SGMM estimators and their asymptotic properties for spatial models with space-varying coefficients. Ref. [36] proposed a semiparametric series-based least squares estimating procedure for a semiparametric varying coefficient mixed regressive SAR model and derived the asymptotic normality of the estimators. Some other related research works can be found in [37,38,39,40,41,42]. For panel spatial data, ref. [43] obtained the PMLE of a varying coefficient SAR panel model with random effects and its asymptotic normality; ref. [44] applied instrumental variable estimation to a semiparametric varying coefficient spatial panel data model with random effects and investigated the asymptotic normality of the estimators.

In this paper, we extend the varying coefficient spatial panel model with random effects given in [43] to a PLVCSARPM with random effects. By adding a linear part to the model of [43], we can simultaneously capture the linear and non-linear effects of exogenous variables on a response variable, as well as the spatial correlation of the response. By using the profile quasi-maximum likelihood method to estimate the PLVCSARPM with random effects, we prove the consistency and asymptotic normality of the estimators under some regularity conditions. Monte Carlo simulations and real data analysis show that our estimators perform well.

This paper is organized as follows: Section 2 introduces the PLVCSARPM with random effects and constructs its PQMLE. Section 3 proves the asymptotic properties of the estimators. Section 4 examines the small sample behavior of the estimators using Monte Carlo simulations. Section 5 analyzes a set of asymmetric real data to illustrate the performance of the proposed method. A summary is given in Section 6. The proofs of some important theorems and lemmas are given in Appendix A.

2. The Model and Estimators

Consider the following partially linear varying coefficient spatial autoregressive panel model (PLVCSARPM) with random effects:

where i refers to a spatial unit; t refers to a given time period; are observations of a response variable; ; and are observations of p-dimensional and q-dimensional covariates, respectively; is an unknown univariate varying coefficient function vector; are unknown smooth functions of u; is an unknown spatial correlation coefficient; is a regression coefficient vector of ; is a predetermined spatial weight matrix; is the ith component of ; are i.i.d. error terms with zero means and variance ; are i.i.d. variables with zero means and variance , and are independent of . Let be the true parameter vector of and be the true varying coefficient function of .

The model (1) can be simplified as the following matrix form:

where ; ; ; ; ; ; ; is an identity matrix; is an identity matrix; ⊗ denotes the Kronecker product; is a vector of ones.
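The Kronecker structure of the stacked model can be illustrated numerically. The sketch below assumes that observations are stacked period by period (all N units for the first period, then the second, and so on), so that the NT × NT weight matrix is the Kronecker product of a T × T identity matrix with the N × N spatial weight matrix, and the random effects enter through the Kronecker product of a T-vector of ones with an N × N identity matrix. The function name `stack_panel_matrices` and its arguments are illustrative and not taken from the paper.

```python
import numpy as np

def stack_panel_matrices(W0, T, sigma_b2, sigma_e2):
    """Build stacked matrices for an N-unit, T-period random effects panel.

    Assumes period-major stacking: rows 0..N-1 are period 1, and so on.
    """
    N = W0.shape[0]
    iota_T = np.ones((T, 1))                 # T-vector of ones
    W = np.kron(np.eye(T), W0)               # same spatial weights each period
    B = np.kron(iota_T, np.eye(N))           # maps N random effects to NT rows
    # Covariance of the composite error B b + eps under i.i.d. b and eps
    Sigma = sigma_b2 * (B @ B.T) + sigma_e2 * np.eye(N * T)
    return W, B, Sigma
```

With this stacking, two observations of the same unit in different periods share the random effect variance as their covariance, while observations of different units are uncorrelated.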

Define ; then the model (2) can be rewritten as:

where I is an identity matrix. For the model (3), it is easy to get the following facts:

According to [12], the quasi-log-likelihood function of the model (3) can be written as follows:

where , and c is a constant.

By maximizing the above quasi-log-likelihood function with respect to , the quasi-maximum likelihood estimators of , and can be easily obtained as

By substituting (5)–(7) into (4), we have the concentrated quasi-log-likelihood function of as

It is obvious that we cannot directly obtain the quasi-maximum likelihood estimator of by maximizing the above formula because is an unknown function. In order to overcome this problem, we use the PQMLE method and working independence theory ([45,46]) to estimate the unknown parameters and varying coefficient functions of the model (1).
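For fixed parameter values, a Gaussian quasi-log-likelihood of this kind can be evaluated directly. The following sketch assumes the stacked model can be written as (I − ρW)Y = m + e, where m collects the regression part (varying coefficient and linear terms) and e is a composite error with covariance matrix Σ; it drops the additive constant c. The function `quasi_loglik` and its argument names are hypothetical and serve only as an illustration.

```python
import numpy as np

def quasi_loglik(rho, Y, mean_part, W, Sigma):
    """Gaussian quasi-log-likelihood, up to a constant, of (I - rho W) Y = m + e.

    Y, mean_part: 1-D arrays of length n; W, Sigma: n x n arrays.
    Returns -inf if I - rho W or Sigma has a non-positive determinant.
    """
    n = Y.shape[0]
    A = np.eye(n) - rho * W
    sign_A, logdet_A = np.linalg.slogdet(A)       # Jacobian term ln|I - rho W|
    sign_S, logdet_S = np.linalg.slogdet(Sigma)
    if sign_A <= 0 or sign_S <= 0:
        return -np.inf
    e = A @ Y - mean_part                         # residual of the filtered response
    quad = e @ np.linalg.solve(Sigma, e)          # e' Sigma^{-1} e without inverting
    return -0.5 * logdet_S + logdet_A - 0.5 * quad
```

Using `slogdet` and `solve` rather than explicit determinants and inverses keeps the evaluation numerically stable when NT is moderately large.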

The main steps are as follows:

Step 1 Suppose that is known. can be approximated by the first-order Taylor expansion

for in a neighborhood of u, where is the first order derivative of . Let and ; then estimators of and can be obtained by

where , , is a kernel function and h is the bandwidth. Therefore, the feasible initial estimator of can be obtained by .

Denote , , and then we have

Therefore, we obtain

Let , and . It is easy to know that

where . Consequently, the initial estimator of is given by

where

Step 2 Replacing of (4) with , the estimator of can be obtained by maximizing approximate quasi-log-likelihood function:

In practice, the estimation of is carried out in the following steps:

Firstly, assume is known. The initial estimators of , and are obtained by maximizing (9). Then, we find

Secondly, with the estimated , and , update by maximizing the concentrated quasi-log-likelihood function of :

Therefore, the estimator of is obtained by:

Step 3 By substituting into (10), (11) and (12), respectively, the final estimators of , and are computed as follows:

Step 4 By replacing with in (8), we get the ultimate estimator of :

where , .
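The kernel smoothing in Step 1 can be sketched as a weighted least squares problem. The code below is a minimal illustration, assuming the working response `y` has already been formed by treating the parametric part as known (for example, the spatially filtered response minus the linear part) and ignoring the within-unit correlation, in the spirit of working independence. The names `epanechnikov` and `local_linear_vc` are illustrative and not from the paper.

```python
import numpy as np

def epanechnikov(v):
    """Epanechnikov kernel, supported on [-1, 1]."""
    return 0.75 * np.maximum(1.0 - v ** 2, 0.0)

def local_linear_vc(u0, u, X, y, h):
    """Local linear estimate of alpha(u0) in y_i = x_i' alpha(u_i) + noise.

    u: (n,) index variable; X: (n, p) covariates; y: (n,) working response;
    h: bandwidth. Returns the p-vector of estimated coefficients at u0.
    """
    d = u - u0
    k = epanechnikov(d / h) / h                 # kernel weights K_h(u_i - u0)
    D = np.hstack([X, X * d[:, None]])          # regressors for alpha and alpha'
    DtK = D.T * k                               # D' K with K diagonal
    coef, *_ = np.linalg.lstsq(DtK @ D, DtK @ y, rcond=None)
    return coef[: X.shape[1]]                   # first p entries estimate alpha(u0)
```

Evaluating `local_linear_vc` over a grid of points `u0` gives the whole estimated coefficient curve; the remaining entries of `coef` estimate the first derivative and are discarded here.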

3. Asymptotic Properties for the Estimators

In this section, we focus on studying the consistency and asymptotic normality of the PQMLEs given in Section 2. To prove these asymptotic properties rigorously, we need the following assumptions to hold.

Assumption 1.

- (i) and are uncorrelated to and , and satisfy , , , , , , and , where represents the Euclidean norm. Moreover, and for some .

- (ii) , are random sequences. The density function of is non-zero, uniformly bounded and twice continuously differentiable on , where is the support of . exists, and it has a second-order continuous derivative; and are twice continuously differentiable on ; , and for ; , and .

- (iii) Real valued function is twice continuously differentiable and satisfies the first order Lipschitz condition at any u, , where and are positive constants.

- (iv) are i.i.d. random variables from the population. Moreover, and for .

Assumption 2.

- (i) As a normalization, the diagonal elements of are 0 for all i and are at most of order , denoted by .

- (ii) The ratio as N goes to infinity.

- (iii) is nonsingular for any .

- (iv) The matrices and are uniformly bounded in both row and column sums in absolute value.

Assumption 3.

is a nonnegative continuous even function. Let , ; then for any positive odd number. Meanwhile, , .

Assumption 4.

If , and , then .

Assumption 5.

There exists a unique for which the model (1) holds.

Assumption 6.

, where

Remark 1.

Assumption 1 provides the essential features of the regressors and disturbances for the model (see [47]). Assumption 2 concerns the basic features of the spatial weights matrix and parallels Assumptions 2–5 of [12]. Assumption 2(i) is always satisfied if is a bounded sequence. We allow to be divergent, but at a rate smaller than N, as specified in Assumption 2(ii). Assumption 2(iii) guarantees that model (2) has an equilibrium given by (3). Assumption 2(iv) is also assumed in [12]; it limits the spatial correlation to some degree but facilitates the study of the asymptotic properties of the spatial parameter estimators. Assumptions 3 and 4 concern the kernel function and bandwidth sequence. Assumption 5 offers a unique identification condition, and Assumption 6 is necessary for the proof of asymptotic normality.

In order to prove the large sample properties of the estimators, we need to introduce the following useful lemmas. Before that, we simplify the model (2) and obtain the reduced form equation of Y as follows:

where ; . The above equations are frequently used in a later derivation.

Lemma 1.

Let be i.i.d. random vectors, where are scalar random variables. Further, assume that , where f denotes the joint density of . Let K be a bounded positive function with a bounded support, satisfying a Lipschitz condition. Given that for some ,

The proof can be found in [48].

Lemma 2.

Under Assumptions 1–4, we have

where and , .

Proof. See Appendix A.

Lemma 3.

Under Assumptions 1–4, we have

Lemma 4.

Under Assumptions 1–4, we have

- (i) ;

- (ii) , ;

- (iii) , ;

- (iv) ,

The proof can be found in [37].

Lemma 5.

Under Assumptions 1–6, is a positive definite matrix and

Proof. See Appendix A.

With the above lemmas, we state the main results as follows. Their detailed proofs are given in Appendix A.

Theorem 1.

Under Assumptions 1–5, we have and .

Theorem 2.

Under Assumptions 1–5, we have .

Theorem 3.

Under Assumptions 1–5, we have and .

Theorem 4.

Under Assumptions 1–6, we have

where “” represents convergence in distribution, is the average Hessian matrix (information matrix when ε and b are normally distributed, respectively), and .

Theorem 5.

Under Assumptions 1–5, we have

where and satisfy and and is the second-order derivative of , . Furthermore, if , then we have

4. Monte Carlo Simulation

In this section, we present Monte Carlo simulations carried out to investigate the finite sample performance of the PQMLEs. The sample standard deviation (SD) and two root mean square errors ( and ) were used to measure the estimation performance. and are defined as:

where is the number of iterations; are estimates of for each iteration; is the true value of ; and , and are the upper quartile, median and lower quartile of the parametric estimates, respectively. For the nonparametric estimates, we took the mean absolute deviation error (MADE) as the evaluation criterion:

where are Q fixed grid points in the support set of u. In the simulations, we applied the rule of thumb method of [48] to choose the optimal bandwidth and used the Epanechnikov kernel as the kernel function (see [33]).
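The evaluation criteria can be computed in a few lines. Because the displayed definitions are not reproduced above, the sketch below makes explicit assumptions: the first root mean square error is the usual one around the true value, the second is a robust version that combines the median bias with the interquartile range divided by 1.35 (a common convention in this literature, not confirmed by the text), and the mean absolute deviation error averages absolute errors over the Q grid points. All function names are illustrative.

```python
import numpy as np

def sd(estimates):
    """Sample standard deviation of the estimates across replications."""
    return float(np.std(estimates, ddof=1))

def rmse1(estimates, true_value):
    """Root mean square error around the true parameter value."""
    return float(np.sqrt(np.mean((estimates - true_value) ** 2)))

def rmse2(estimates, true_value):
    """Robust RMSE (assumed form): median bias and IQR / 1.35 as spread."""
    q1, med, q3 = np.percentile(estimates, [25, 50, 75])
    return float(np.sqrt((med - true_value) ** 2 + ((q3 - q1) / 1.35) ** 2))

def made(alpha_hat, alpha_true):
    """Mean absolute deviation error over Q fixed grid points."""
    return float(np.mean(np.abs(alpha_hat - alpha_true)))
```

The divisor 1.35 is approximately the interquartile range of a standard normal distribution, so the robust version agrees with the usual one when the estimates are close to normally distributed.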

We ran a small simulation experiment with and generated the simulation data from the following model:

where we assume that , , , , , , , and and 0.75, respectively. Furthermore, we chose the Rook weight matrix (see [7]) to investigate the influence of the spatial weight matrix on the estimates. Our simulation results for both cases and are presented in Tables 1–6.
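A data-generating process of this kind can be sketched as follows. The code assumes the N spatial units lie on a regular rectangular grid (with at least two cells) where units sharing an edge are Rook neighbors, that the weight matrix is row-normalized, and that the model has the form y = ρWy + x'α(u) + z'β + b + ε within each period. The coefficient functions, parameter values and distributions passed to `simulate_panel` are placeholders chosen by the caller; they are not the settings used in the paper.

```python
import numpy as np

def rook_weights(n_rows, n_cols):
    """Row-normalized Rook contiguity matrix for an n_rows x n_cols grid."""
    n = n_rows * n_cols
    W = np.zeros((n, n))
    for r in range(n_rows):
        for c in range(n_cols):
            i = r * n_cols + c
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):  # shared edges only
                rr, cc = r + dr, c + dc
                if 0 <= rr < n_rows and 0 <= cc < n_cols:
                    W[i, rr * n_cols + cc] = 1.0
    return W / W.sum(axis=1, keepdims=True)

def simulate_panel(W0, T, rho, beta, alpha_fn, sigma_b, sigma_e, rng):
    """Simulate T periods from the reduced form Y_t = (I - rho W0)^{-1}(...).

    alpha_fn maps an (n,) array of index values to an (n, p) array of
    coefficient values. Returns Y (T, N), U (T, N), X (T, N, p), Z (T, N, q).
    """
    N, q = W0.shape[0], len(beta)
    p = alpha_fn(np.zeros(1)).shape[1]
    A_inv = np.linalg.inv(np.eye(N) - rho * W0)
    b = rng.normal(0.0, sigma_b, N)            # unit random effects, fixed over t
    U = rng.uniform(0.0, 1.0, (T, N))
    X = rng.normal(size=(T, N, p))
    Z = rng.normal(size=(T, N, q))
    Y = np.empty((T, N))
    for t in range(T):
        signal = (X[t] * alpha_fn(U[t])).sum(axis=1) + Z[t] @ beta
        Y[t] = A_inv @ (signal + b + rng.normal(0.0, sigma_e, N))
    return Y, U, X, Z
```

Because each row of the normalized matrix sums to one, the matrix I − ρW is invertible whenever |ρ| < 1, which is what the reduced form requires.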

Table 1.

The medians and SDs of MADE values for .

Table 2.

The medians and SDs of MADE values for .

Table 3.

The results of parametric estimates with T = 10(1).

Table 4.

The results of parametric estimates with T = 10(2).

Table 5.

The results of parametric estimates with T = 15(1).

Table 6.

The results of parametric estimates with T = 15(2).

By observing the simulation results in Tables 1–6, one can obtain the following findings: (1) The and for , , and were fairly small for almost all cases, and they decreased as N increased. The and for are not negligible for small sample sizes, but they decreased as N increased. (2) For fixed T, as N increased, the for , , , and decreased for all cases. and for and decreased rapidly, whereas the for estimates of the other parameters did not change much. For fixed N, as T increased, the behavior of the parameter estimates was similar to the case where N changed under fixed T. (3) The for of varying coefficient functions and decreased as T or N increased. Combining the above three findings, we conclude that the estimates of the parameters and unknown varying coefficient functions were convergent.

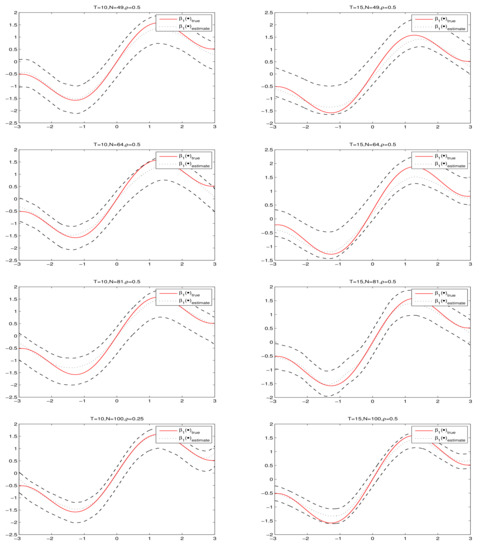

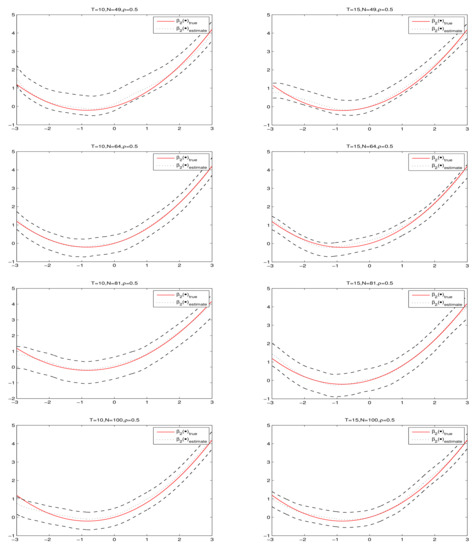

Figure 1 and Figure 2 present the fitting results and 95% confidence intervals of and under , respectively, where the short dashed curves are the average fits over 500 simulations by PQMLE, the solid curves are the true values of and the two long dashed curves are the corresponding 95% confidence bands. In every subgraph of Figure 1 and Figure 2, the short dashed curve is fairly close to the solid curve and the corresponding confidence band is narrow. This illustrates that the nonparametric estimation procedure works well for small samples. To save space, we do not present the cases and because they gave results similar to the case .

Figure 1.

The fitting results and 95% confidence intervals of under .

Figure 2.

The fitting results and 95% confidence intervals of under .

5. Real Data Analysis

We applied the proposed model to housing price data for Chinese cities. The data were obtained from the China Statistical Yearbook, the China City Statistical Yearbook and the China Statistical Yearbook for Regional Economies. Based on panel data on related variables for 287 cities at or above the prefecture level in China (excluding the cities in Taiwan, Hong Kong and Macau) from 2011 to 2018, we explored the factors influencing housing prices of Chinese cities using the PLVCSARPM with random effects.

Taking [49,50,51] as references, we collected nine variables related to housing prices of Chinese cities, including each city’s average selling price of residential houses (denoted by HP, yuan/sq.m), the expectation of housing price trends (EHP, %), population density (POD, person), annual per capita disposable income of urban households (ADI, yuan), loan-to-GDP ratio (MON, %), natural growth rate of population (NGR, %), sulphur dioxide emission (SDE, 10,000 tons), area of grassland (AOG, 10,000 hectares) and the value of buildings completed (VBC, yuan/sq.m). According to the results of a non-linear regression, the established model is given by

where represents the ith observation of ln(HP) at time t; represents the ith observation of EHP at time t; denote the ith observations of POD, ln(ADI), MON, NGR, SDE and AOG at time t, respectively; and represents the ith observation of ln(VBC) at time t.

In order to transform the asymmetric distribution of POD into a nearly uniform distribution on (0,1), we set

The spatial weight matrix we adopted is calculated as follows:

where

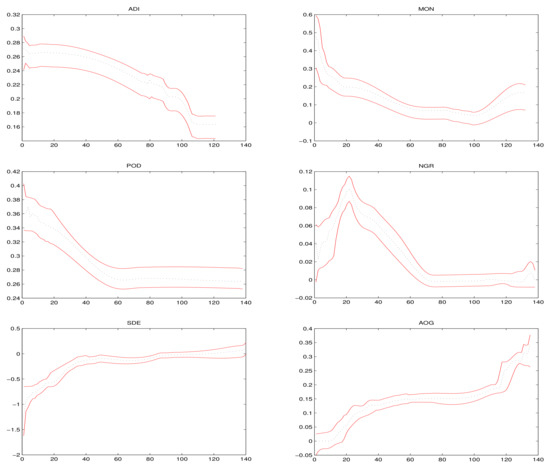

Figure 3 presents the estimation results and corresponding 95% confidence intervals of the varying coefficient functions , where the black dashed curves are the average fits over 500 simulations and the red solid lines are the corresponding 95% confidence bands. It can be seen from Figure 3 that all covariates have obvious non-linear effects on housing prices of Chinese cities.

Figure 3.

The estimated functions and corresponding 95% confidence intervals for the housing prices of Chinese cities.

The estimation results of the parameters in the model (14) are reported in Table 7. It can be seen from Table 7 that: (1) all parameter estimates are significant; (2) the spatial correlation coefficient , which means there exists a spatial spillover effect for housing prices of Chinese cities; (3) indicates that the expectation of housing price trends (EHP) has a promotional effect on housing prices of Chinese cities; (4) shows that the growth of housing prices in different regions is relatively stable and is less affected by external fluctuations.

Table 7.

The estimation results of unknown parameters in the model (14).

6. Conclusions

In this paper, we proposed the PQMLE of the PLVCSARPM with random effects. Our model has the following advantages: (1) It can effectively overcome the “curse of dimensionality” in the nonparametric spatial regression model. (2) It can simultaneously study the linear and non-linear effects of covariates. (3) It can investigate the spatial correlation effect of response variables. Under some regularity conditions, consistency and asymptotic normality of the estimators for parameters and varying coefficient functions were derived. Monte Carlo simulations showed that the proposed estimators are well behaved in finite samples. Furthermore, the performance of the proposed method was also assessed on a set of asymmetric real data.

This paper only focused on the PQMLE of the PLVCSARPM with random effects. In future research, we may try to extend our method to more general models, such as a partially linear varying coefficient spatial autoregressive model with autoregressive disturbances. In addition, we also need to study the issues of Bayesian analysis, variable selection and quantile regression in these models.

Author Contributions

Supervision, S.L. and J.C.; software, S.L.; methodology, S.L.; writing—original draft preparation, S.L.; writing—review and editing, S.L., J.C. and D.C. All authors have read and agreed to the published version of manuscript.

Funding

This work was supported by the Natural Science Foundation of Fujian Province (2020J01170, 2021J01662); the Natural Science Foundation of China (12001105); the Program for Innovative Research Team in Science and Technology in Fujian Province University; and the Fujian Normal University Innovation Team Foundation “Probability and Statistics: Theory and Application” (IRTL1704).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Reference [50].

Acknowledgments

The authors are deeply grateful to editors and anonymous referees for their careful reading and insightful comments. Their comments led us to significantly improve the paper.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

To proceed with the proofs of main lemmas and theorems, we first provide two frequently used evident facts (see [14]).

Fact 1. If the row and column sums of the matrices and are uniformly bounded in absolute value, then the row and column sums of are also uniformly bounded in absolute value.

Fact 2. If the row (resp. column) sums of are uniformly bounded in absolute value and is a conformable matrix whose elements are uniformly , then so are the elements of (resp. ).
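Both facts follow from elementary norm inequalities. As a short sketch, write $A_n$ and $B_n$ for two matrices whose absolute row and column sums are bounded by constants $c_A$ and $c_B$, and $C_n$ for a conformable matrix whose elements are bounded in absolute value by $c_C$, which may be a rate depending on n. These symbols are introduced here for illustration only; $\|\cdot\|_\infty$ and $\|\cdot\|_1$ denote the maximum absolute row sum and column sum, respectively.

```latex
\begin{align*}
\|A_n B_n\|_\infty &\le \|A_n\|_\infty \, \|B_n\|_\infty \le c_A c_B, \\
\|A_n B_n\|_1 &\le \|A_n\|_1 \, \|B_n\|_1 \le c_A c_B, \\
\bigl| (A_n C_n)_{ik} \bigr|
  &= \Bigl| \sum_{j} a_{ij} c_{jk} \Bigr|
  \le \max_{j,k} |c_{jk}| \sum_{j} |a_{ij}|
  \le c_C \, c_A .
\end{align*}
```

The first two lines give Fact 1, and the third line gives the row-sum case of Fact 2; the column-sum case follows in the same way with the order of the product reversed.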

Proof of Lemma 2.

Recall . By straightforward calculation, it is not difficult to get

where . Denote , , then we have

By Assumptions 1–3, we know

where and , are for any fixed t and l. According to Khinchine's law of large numbers, we have . Therefore, it is not hard to get that

Let , then . Hence,

□

Proof of Lemma 3.

Recall . By straightforward calculation, we obtain

where for . Then, it is not hard to obtain

For the first term of the above equality in (A1), by a procedure similar to the proof of Lemma 2, it is easy to get that

Proof of Lemma 5.

Its proof is analogous to those of Lemma 4.2 in [34] and Theorem 3.2 in [12]. The major difference is that our model is a panel model with random effects instead of a cross-sectional model.

In order to prove is positive definite, we need only prove that according to , where , are constants, and is a q dimension column vector.

It follows from that:

and

By straightforward calculations, it can be simplified to prove

where .

By Lemma 4 in [43], it is easy to obtain that Furthermore, according to Assumption 6, . Thus, we have and so . This completes the proof. □

Proof of Theorem 1.

Let and . We adopt the idea of Theorem 3.2 in [12] to prove the consistency of and . By the Consistency of Extrema Estimators in [52], it suffices to show that

where

and

By straightforward calculation, we have

Therefore, it only needs to prove that

and

We first prove (A2) holds.

it is obvious that

Thus, it is necessary to prove the last two terms of above equality converge to 0 in probability. By Lemma 3 and Assumption 1(iv), we have Moreover,

By the weak law of large numbers,

Thus

It follows from that

By (A4) and (A5), we have

Similar to the proof of (A2), it is not difficult to verify that . According to the continuity of , we have

Consequently, the consistency of and is proved. □

Proof of Theorem 2.

It follows by straightforward calculation that

where

Moreover, , where and By Theorem 1, it is easy to obtain that

Thus,

Therefore, . Furthermore, we have by using Lemma 3. □

Proof of Theorem 3.

By Theorem 1, we have

To prove our conclusions, we only need to verify that

and

Firstly, we prove (A6) holds. It is easy to know

According to Khinchine's law of large numbers, we have

and

Thus, . Therefore, . In a similar way, it is not hard to get .

Proof of Theorem 4.

By Taylor expansion of at , we have

where and lies between and . By Theorem 1 and Theorem 3, we easily know that converges to in probability.

Denote

Thus,

Next, we prove

and

To prove (A8), we need to show that each element of converges to 0 in probability. It is not difficult to get

By the mean value theorem, Assumption 2 and Lemma 5, we have

where lies between and . Similarly, we have

To prove (A9), we adopt the idea of [12]. It is easy to see that the components of are linear or quadratic functions of and their means are all . Under Assumption 1, is asymptotically normally distributed with zero mean by the CLT for linear-quadratic forms (Theorem 1 in [53]). In the following, we calculate its variance. According to the structure of the Fisher information matrix, we know that

where

Let and be the third moments of b and , respectively; and be the fourth moments of b and , respectively; and be the ii-th element of A. By using Lemma 5 and the facts that , , it follows by straightforward calculation that

Particularly, it is not difficult to know that when and are normally distributed, respectively. This completes the proof. □

Proof of Theorem 5.

According to Lemma 2, we know

where . By using the fact that , we have

Denote . By using Lemma 1 and Taylor expansion of at u, it is not difficult to get

According to the proof procedure of Lemma 2, we know

Thus

Define , then

and

According to the CLT, we have

and

where and . Furthermore, if , then

By Lemma 3 and Theorem 2, we obtain that

In particular, when , holds. □

References

- Elhorst, J.P. Spatial Econometrics: From Cross-sectional Data to Spatial Panels; Springer: Berlin, Germany, 2014.

- Brueckner, J.K. Strategic interaction among local governments: An overview of empirical studies. Int. Reg. Sci. Rev. 2003, 26, 175–188.

- LeSage, J.P.; Pace, R.K. Introduction to Spatial Econometrics; CRC Press, Taylor & Francis Group: Boca Raton, FL, USA, 2009.

- Elhorst, J.P. Applied spatial econometrics: Raising the bar. Spat. Econ. Anal. 2010, 5, 9–28.

- Allers, M.A.; Elhorst, J.P. Tax mimicking and yardstick competition among governments in the Netherlands. Int. Tax Public Financ. 2005, 12, 493–513.

- Cliff, A.D.; Ord, J.K. Spatial Autocorrelation; Pion Ltd.: London, UK, 1973.

- Anselin, L. Spatial Econometrics: Methods and Models; Kluwer Academic: Dordrecht, The Netherlands, 1988.

- Anselin, L.; Bera, A.K. Spatial dependence in linear regression models with an introduction to spatial econometrics. In Handbook of Applied Economic Statistics; Ullah, A., Giles, D.E.A., Eds.; Marcel Dekker: New York, NY, USA, 1998.

- Cressie, N. Statistics for Spatial Data; John Wiley & Sons: New York, NY, USA, 1993.

- Ord, J.K. Estimation methods for models of spatial interaction. J. Am. Stat. Assoc. 1975, 70, 120–126.

- Anselin, L. Spatial econometrics. In A Companion to Theoretical Econometrics; Baltagi, B., Ed.; Blackwell Publishers: Hoboken, NJ, USA, 2001.

- Lee, L.F. Asymptotic distributions of quasi-maximum likelihood estimators for spatial autoregressive models. Econometrica 2004, 72, 1899–1925.

- Kelejian, H.H.; Prucha, I.R. A generalized spatial two stage least squares procedure for estimating a spatial autoregressive model with autoregressive disturbance. J. Real Estate Financ. Econ. 1998, 17, 99–121.

- Kelejian, H.H.; Prucha, I.R. A generalized moments estimator for the autoregressive parameter in a spatial model. Int. Econ. Rev. 1999, 40, 509–533.

- Lee, L.F. Best spatial two-stage least squares estimators for a spatial autoregressive model with autoregressive disturbances. Econom. Rev. 2003, 22, 307–335.

- Lee, L.F. GMM and 2SLS estimation of mixed regressive, spatial autoregressive models. J. Econom. 2007, 137, 489–514.

- Baltagi, B. Econometric Analysis of Panel Data; John Wiley & Sons: New York, NY, USA, 2008.

- Arellano, M. Panel Data Econometrics; Oxford University Press: Oxford, UK, 2003.

- Baldev, R.; Baltagi, B. Panel Data Analysis; Springer Science and Business Media: Berlin, Germany, 2012.

- Hsiao, C. Analysis of Panel Data; Cambridge University Press: Cambridge, UK, 2014.

- Baltagi, B.; Song, S.H.; Koh, W. Testing panel data regression models with spatial error correlation. J. Econom. 2003, 117, 123–150.

- Baltagi, B.; Egger, P.; Pfaffermayr, M. A Generalized Spatial Panel Data Model with Random Effects; Working Paper; Syracuse University: New York, NY, USA, 2007.

- Elhorst, J.P. Specification and estimation of spatial panel data models. Int. Reg. Sci. Rev. 2003, 26, 244–268.

- Kapoor, M.; Kelejian, H.H.; Prucha, I.R. Panel data models with spatially correlated error components. J. Econom. 2007, 140, 97–130.

- Yu, J.; Jong, R.D.; Lee, L.F. Quasi-Maximum Likelihood Estimators for Spatial Dynamic Panel Data with Fixed Effects When Both n and T are Large: A Nonstationary Case; Working Paper; Ohio State University: Columbus, OH, USA, 2007.

- Yu, J.; Jong, R.D.; Lee, L.F. Quasi-maximum likelihood estimators for spatial dynamic panel data with fixed effects when both n and T are large. J. Econom. 2008, 146, 118–134.

- Paelinck, J.H.P.; Klaassen, L.H. Spatial Econometrics; Gower: Farnborough, UK, 1979.

- Park, H.W.; Kim, H.S. Motion estimation using low-band-shift method for wavelet-based moving-picture coding. IEEE Trans. Image Process. 2000, 9, 577–587.

- Gong, Y.; Pullalarevu, S.; Sheikh, S. A wavelet-based lossless video coding scheme. In Proceedings of the 7th International Conference on Signal Processing, ICSP’04, Beijing, China, 31 August–4 September 2004; Volume 2, pp. 1123–1126.

- Guariglia, E.; Silvestrov, S. Fractional-Wavelet Analysis of Positive definite Distributions and Wavelets on 𝒟′(ℂ). In Engineering Mathematics II; Springer: Cham, Switzerland, 2016; pp. 337–353.

- Guariglia, E. Primality, Fractality, and Image Analysis. Entropy 2019, 21, 304.

- Aoun, N.; El’Arbi, B.M.; Amar, C.B. Multiresolution motion estimation and compensation for video coding. In Proceedings of the IEEE International Conference on Signal Processing, Beijing, China, 3–7 December 2010.

- Su, L. Semiparametric GMM estimation of spatial autoregressive models. J. Econom. 2012, 167, 543–560.

- Su, L.; Jin, S. Profile quasi-maximum likelihood estimation of partially linear spatial autoregressive models. J. Econom. 2010, 157, 18–33.

- Wei, H.; Sun, Y. Heteroskedasticity-robust semi-parametric GMM estimation of a spatial model with space-varying coefficients. Spat. Econ. Anal. 2017, 12, 113–128.

- Sun, Y.; Zhang, Y.; Huang, J.Z. Estimation of a semiparametric varying-coefficient mixed regressive spatial autoregressive model. Econom. Stat. 2019, 9, 140–155.

- Chen, J.; Sun, L. Spatial lag single-index panel model with random effects. Stat. Res. 2015, 32, 95–101.

- Li, K.; Chen, J. Profile maximum likelihood estimation of semi-parametric varying coefficient spatial lag model. J. Quant. Tech. Econ. 2013, 4, 85–98.

- Du, J.; Sun, X.; Cao, R.; Zhang, Z. Statistical inference for partially linear additive spatial autoregressive models. Spat. Stat. 2018, 25, 52–67.

- Hu, X.; Liu, F.; Wang, Z. Testing serial correlation in semiparametric varying coefficient partially linear errors-in-variables model. J. Syst. Sci. Complex 2009, 3, 483–494.

- Hardle, W.; Hall, P.; Ichimura, H. Optimal smoothing in single-index models. Ann. Stat. 1993, 21, 157–178.

- Opsomer, J.D.; Ruppert, D. Fitting a bivariate additive model by local polynomial regression. Ann. Stat. 1997, 25, 186–211.

- Chen, J.; Sun, L. Estimation of varying coefficient spatial autoregression panel model with random effects. Stat. Res. 2017, 34, 118–128.

- Zhang, Y.Q.; Shen, D.M. Estimation of semi-parametric varying coefficient spatial panel data models with random-effects. J. Stat. Plan. Inference 2015, 159, 64–80.

- Cai, Z. Trending time-varying coefficient time series models with serially correlated errors. J. Econom. 2007, 136, 163–188.

- Lin, X.; Carroll, R. Nonparametric function estimation for clustered data when the predictor is measured without (with) error. J. Am. Stat. Assoc. 2000, 95, 520–534.

- Fan, J.; Huang, T. Profile likelihood inferences on semiparametric varying coefficient partially linear models. Bernoulli 2005, 11, 1031–1057.

- Mack, Y.P.; Silverman, B.W. Weak and strong uniform consistency of kernel regression estimates. Z. Wahrscheinlichkeitstheorie Verwandte Geb. 1982, 61, 405–415.

- Ding, R.; Ni, P. Regional spatial linkage and spillover effect of house prices of China's cities. Financ. Trade Econ. 2015, 6, 136–150.

- Sun, Y.; Wu, Y. Estimation and testing for a partially linear single-index spatial regression model. Spat. Econ. Anal. 2018, 13, 1–17.

- Liang, Y.; Gao, T. An empirical analysis on the causes of price fluctuation of commercial housing in China. Manag. World 2006, 8, 76–82.

- White, H. Estimation, Inference and Specification Analysis; Cambridge University Press: New York, NY, USA, 1994.

- Kelejian, H.H.; Prucha, I.R. On the asymptotic distribution of the Moran I test statistic with applications. J. Econom. 2001, 104, 219–257.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).