Abstract

The main objective of this article is to propose a new method that extends Popov’s extragradient method by replacing two natural projections with two convex optimization problems. We also prove the weak convergence of the proposed method under mild assumptions on the cost bifunction. The method evaluates only one value of the bifunction per iteration and uses an explicit formula to determine an appropriate stepsize at each iteration. The variable stepsize, which is updated at each iteration based on the previous iterations, is effective in enhancing the performance of the iterative algorithm. The numerical examples indicate that the inertial term and the variable stepsize significantly improve both the processing time and the number of iterations.

1. Introduction

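Let $C$ be a nonempty, closed and convex subset of a real Hilbert space $E$ and let $f: E \times E \to \mathbb{R}$ be a bifunction with $f(x, x) = 0$ for each $x \in C$. The equilibrium problem for $f$ on $C$ is defined as follows:

$$\text{Find } p^* \in C \text{ such that } f(p^*, y) \ge 0, \quad \forall y \in C. \tag{1}$$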
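To make the equilibrium condition $f(p^*, y) \ge 0$ for all $y \in C$ concrete, the minimal sketch below uses the variational-inequality bifunction $f(x, y) = \langle G(x), y - x \rangle$ with the hypothetical operator $G(x) = x - p$ on the box $C = [0, 1]^2$. For this choice the equilibrium point is $P_C(p)$. The helper names and the test data are assumptions made for illustration. Because $f(x, \cdot)$ is affine here, its minimum over the box is attained at a vertex, so checking the vertices is exact in this case. The random samples are only an extra sanity check.

```python
import itertools
import numpy as np

def vi_bifunction(G):
    """Hypothetical helper: the bifunction f(x, y) = <G(x), y - x>."""
    return lambda x, y: float(np.dot(G(x), y - x))

def box_vertices(lower, upper):
    """All 2^n vertices of the box [lower, upper]."""
    return [np.array(v) for v in itertools.product(*zip(lower, upper))]

def min_f_over_box(f, x, lower, upper, n_samples=1000, seed=0):
    """Smallest value of f(x, y) over random points y of the box plus its vertices."""
    rng = np.random.default_rng(seed)
    points = list(rng.uniform(lower, upper, size=(n_samples, len(lower))))
    points += box_vertices(lower, upper)
    return min(f(x, y) for y in points)

p = np.array([2.0, 0.5])
lower, upper = np.zeros(2), np.ones(2)
f = vi_bifunction(lambda x: x - p)

x_star = np.clip(p, lower, upper)  # candidate equilibrium point P_C(p) = (1, 0.5)
print(min_f_over_box(f, x_star, lower, upper) >= 0.0)                # True: x_star solves the EP
print(min_f_over_box(f, np.array([0.5, 0.5]), lower, upper) >= 0.0)  # False: (0.5, 0.5) does not
```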

The equilibrium problem (EP) includes many mathematical problems as particular cases, for example, fixed point problems, complementarity problems, variational inequality problems (VIP), minimization problems, Nash equilibria of noncooperative games, saddle point problems and vector minimization problems (see [1,2,3,4]). The particular formulation of an equilibrium problem was introduced in 1992 by Muu and Oettli [5] and further developed by Blum and Oettli [1]. An equilibrium problem is also known as the Ky Fan inequality problem. Fan [6] presented a review and gave specific conditions on a bifunction for the existence of an equilibrium point. Many researchers have provided and generalized results on the existence of solutions for the equilibrium problem (see [7,8,9,10]). Over the last few years, a considerable number of methods have been developed for different classes of equilibrium problems and other particular forms of the equilibrium problem in abstract spaces (see [11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29]).

The extragradient methods of Korpelevich and Antipin [30,31] are efficient two-step methods. The auxiliary problem principle was employed in [12,20] to set up the extragradient method for monotone equilibrium problems. A drawback of the extragradient method is that two projections onto C must be computed to obtain each new iterate. If the projection onto the feasible set C is hard to compute, solving two minimal distance problems per iteration can affect the performance and efficiency of the method. To overcome this, Censor et al. introduced the subgradient extragradient method [32], in which the second projection is replaced by a projection onto a half-space that can be computed explicitly. Iterative sequences generated by the above extragradient-like methods require a stepsize constant based on the Lipschitz-type constants of the cost bifunction. Requiring prior knowledge of these constants restricts the construction of the iterative sequence, because the Lipschitz-type constants are usually unknown or hard to compute.
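The subgradient extragradient idea can be illustrated in the variational-inequality setting. One projection onto $C$ gives $y_n = P_C(x_n - \lambda G(x_n))$. The second projection is taken onto the half-space $T_n = \{w : \langle x_n - \lambda G(x_n) - y_n, w - y_n \rangle \le 0\}$, which contains $C$ and has a closed-form projection. This is a minimal sketch with a fixed stepsize $\lambda \in (0, 1/L)$. The test operator $G(x) = x - p$, the box $C = [0, 1]^2$ and the function names are assumptions for illustration.

```python
import numpy as np

def project_halfspace(z, a, c):
    """Project z onto T = {w : <a, w - c> <= 0}; T is the whole space when a = 0."""
    norm_sq = float(np.dot(a, a))
    if norm_sq == 0.0:
        return z.copy()
    violation = max(float(np.dot(a, z - c)), 0.0)
    return z - (violation / norm_sq) * a

def subgradient_extragradient(G, project_C, x0, lam, n_iter=500):
    """Subgradient extragradient iteration for VI(G, C) with a fixed stepsize lam in (0, 1/L)."""
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        u = x - lam * G(x)
        y = project_C(u)              # the only projection onto C in each iteration
        a = u - y                     # normal vector of the half-space T_n, which contains C
        x = project_halfspace(x - lam * G(y), a, y)  # explicit formula replaces the 2nd P_C
    return x

p = np.array([2.0, 0.5])
G = lambda x: x - p                   # assumed test operator: 1-Lipschitz, strongly monotone
box = lambda u: np.clip(u, 0.0, 1.0)  # C = [0, 1]^2
x_sol = subgradient_extragradient(G, box, np.zeros(2), lam=0.5)
print(x_sol)  # close to P_C(p) = (1, 0.5), the solution of this VI
```

The half-space is built from the residual of the first projection. Any point of $C$ satisfies its defining inequality, so replacing $P_C$ by $P_{T_n}$ in the second step loses no feasible points while avoiding a second (possibly costly) projection onto $C$.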

In 2016, Lyashko et al. [33] developed an extragradient method for solving pseudomonotone equilibrium problems in a real Hilbert space. Their method requires solving two optimization problems on a closed convex set at each iteration, with a fixed stepsize that depends on the Lipschitz-type constants. The advantage of the method of Lyashko et al. [33] over the extragradient method of Tran et al. [20] is that the bifunction f needs to be evaluated only once per iteration. Inertial-type methods are based on a discrete version of a second-order dissipative dynamical system. To solve smooth convex minimization problems numerically, Polyak [34] proposed an iterative scheme that uses inertial extrapolation as a boosting ingredient to improve the convergence rate of the iterative sequence. An inertial method is commonly a two-step iterative scheme in which the next iterate is computed from the previous two iterates, and it can be regarded as a way of speeding up the iterative sequence (see [34,35]). For equilibrium problems, Moudafi established the second-order differential proximal method [36]. These inertial methods are employed to accelerate the iterative process towards the desired solution. Numerical studies indicate that, in this context, inertial effects generally improve the performance of a method in terms of the number of iterations and the execution time. Many methods have been established for different classes of variational inequality problems (for more details, see [37,38,39,40,41]).
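The inertial idea attributed to Polyak [34] is easiest to see in the heavy-ball method for a smooth strongly convex function:

$$x_{k+1} = x_k - s\,\nabla\varphi(x_k) + \beta (x_k - x_{k-1}),$$

where each new iterate uses the previous two. The sketch below applies it to an assumed quadratic $\varphi(x) = \tfrac12 x^\top A x - b^\top x$. It uses the classical parameter choice $s = 4/(\sqrt{L} + \sqrt{\mu})^2$ and $\beta = \big((\sqrt{L} - \sqrt{\mu})/(\sqrt{L} + \sqrt{\mu})\big)^2$ for quadratics with curvature between $\mu$ and $L$. The test data and parameter values are illustrative assumptions, not taken from this article.

```python
import numpy as np

def heavy_ball(grad, x0, step, momentum, n_iter=200):
    """Polyak's heavy-ball iteration x_{k+1} = x_k - step*grad(x_k) + momentum*(x_k - x_{k-1})."""
    x_prev = np.asarray(x0, dtype=float)
    x = x_prev.copy()
    for _ in range(n_iter):
        # The inertial term momentum*(x - x_prev) reuses the previous two iterates.
        x, x_prev = x - step * grad(x) + momentum * (x - x_prev), x
    return x

# Assumed test problem: minimize 0.5*x^T A x - b^T x with mu = 1 and L = 10.
A = np.diag([1.0, 10.0])
b = np.array([1.0, 1.0])
mu, L = 1.0, 10.0
step = 4.0 / (np.sqrt(L) + np.sqrt(mu)) ** 2
momentum = ((np.sqrt(L) - np.sqrt(mu)) / (np.sqrt(L) + np.sqrt(mu))) ** 2
x_hb = heavy_ball(lambda x: A @ x - b, np.zeros(2), step, momentum)
print(x_hb)  # close to the minimizer A^{-1} b = (1, 0.1)
```

With `momentum = 0` the loop reduces to plain gradient descent. For this step size (larger than $2/L$), plain gradient descent would actually diverge on the stiff coordinate, while the inertial term keeps the iteration stable and fast.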

In this study, we consider the extragradient methods of Lyashko et al. [33] and Liu et al. [42] and improve them by employing an inertial scheme. We also modify the stepsize used in the second step. The stepsize is not fixed in our proposed method; instead, it is determined by an explicit formula based on previous iterations. We establish a weak convergence theorem for the proposed method for equilibrium problems involving a pseudomonotone bifunction under certain conditions. We also examine how our results apply to variational inequality problems. In addition, we consider the well-known Nash–Cournot equilibrium model as a test problem to support the validity of our results. Some applications to variational inequality problems and other numerical examples are presented to demonstrate the suitability of the proposed methods.

The rest of the article is organized as follows. Section 2 gives some definitions and results used in this paper. Section 3 presents our first algorithm involving a pseudomonotone bifunction and gives the weak convergence result. Section 4 illustrates some applications of our results to variational inequality problems. Section 5 presents numerical experiments that describe the numerical performance.

2. Preliminaries

In this section, we recall some relevant lemmas, definitions and other notions that are used throughout the convergence analysis and the numerical part. The notations $\langle \cdot, \cdot \rangle$ and $\|\cdot\|$ denote the inner product and the norm on the Hilbert space $E$. Let $G: E \to E$ be a well-defined operator and let $VI(G, C)$ denote the solution set of the variational inequality problem corresponding to the operator $G$ over the set $C$. Moreover, $EP(f, C)$ stands for the solution set of the equilibrium problem over the set $C$, and $p^*$ is an arbitrary element of $EP(f, C)$ or $VI(G, C)$.

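Let $g: C \to \mathbb{R}$ be a convex function. The subdifferential of $g$ at $x \in C$ is defined as:

$$\partial g(x) = \{ z \in E : g(y) - g(x) \ge \langle z, y - x \rangle, \ \forall y \in C \}.$$

The normal cone of $C$ at $x \in C$ is given as:

$$N_C(x) = \{ z \in E : \langle z, y - x \rangle \le 0, \ \forall y \in C \}.$$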
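For a box $C = [\ell, u] \subset \mathbb{R}^n$, the normal cone $N_C(x) = \{v : \langle v, y - x \rangle \le 0 \ \forall y \in C\}$ has a simple coordinatewise description. The condition is $v_i \le 0$ where $x_i = \ell_i$, $v_i \ge 0$ where $x_i = u_i$, and $v_i = 0$ at interior coordinates. The sketch below, an illustration assuming $\ell < u$ componentwise, implements this rule. It compares the rule with the definition itself, which only needs the vertices of the box because $\langle v, y - x \rangle$ is linear in $y$. Both function names are hypothetical.

```python
import itertools
import numpy as np

def in_normal_cone_box(v, x, lower, upper, tol=1e-12):
    """Coordinatewise test of v in N_C(x) for the box C = [lower, upper] (lower < upper, x in C)."""
    v, x = np.asarray(v, dtype=float), np.asarray(x, dtype=float)
    at_lower = np.isclose(x, lower, rtol=0.0, atol=tol)
    at_upper = np.isclose(x, upper, rtol=0.0, atol=tol)
    interior = ~(at_lower | at_upper)
    ok = (~at_lower | (v <= tol)) & (~at_upper | (v >= -tol)) & (~interior | (np.abs(v) <= tol))
    return bool(np.all(ok))

def in_normal_cone_by_definition(v, x, lower, upper, tol=1e-12):
    """Direct check of <v, y - x> <= 0; a linear function of y peaks at a vertex of the box."""
    v, x = np.asarray(v, dtype=float), np.asarray(x, dtype=float)
    return all(np.dot(v, np.array(y) - x) <= tol for y in itertools.product(*zip(lower, upper)))

lower, upper = np.zeros(2), np.ones(2)
print(in_normal_cone_box((2.0, 0.0), (1.0, 0.5), lower, upper))  # True: x sits on the face x_1 = 1
print(in_normal_cone_box((1.0, 0.1), (1.0, 0.5), lower, upper))  # False: x_2 is interior, v_2 != 0
```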

We consider various notions of monotonicity for a bifunction (see [1,43] for details).

Definition 1.

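A bifunction $f: E \times E \to \mathbb{R}$ on $C$, for $\gamma > 0$, is

- (i) strongly monotone if $f(x, y) + f(y, x) \le -\gamma \|x - y\|^2$ for all $x, y \in C$;

- (ii) monotone if $f(x, y) + f(y, x) \le 0$ for all $x, y \in C$;

- (iii) strongly pseudomonotone if $f(x, y) \ge 0 \Longrightarrow f(y, x) \le -\gamma \|x - y\|^2$ for all $x, y \in C$;

- (iv) pseudomonotone if $f(x, y) \ge 0 \Longrightarrow f(y, x) \le 0$ for all $x, y \in C$;

- (v) satisfying the Lipschitz-type condition on $C$ if there are two real numbers $c_1, c_2 > 0$ such that
$$f(x, z) \le f(x, y) + f(y, z) + c_1 \|x - y\|^2 + c_2 \|y - z\|^2$$
holds for all $x, y, z \in C$.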
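For the variational-inequality bifunction $f(x, y) = \langle G(x), y - x \rangle$ with an $L$-Lipschitz operator $G$, a short computation gives

$$f(x, y) + f(y, z) - f(x, z) = \langle G(x) - G(y), y - z \rangle \ge -\tfrac{L}{2}\big(\|x - y\|^2 + \|y - z\|^2\big).$$

So condition (v) holds with $c_1 = c_2 = L/2$, and (ii) holds when $G$ is monotone. The sketch below checks both inequalities on random samples for an assumed affine operator $G(x) = Mx + q$. Sampling can expose a violation but does not prove the property.

```python
import numpy as np

M = np.array([[1.0, 2.0], [-2.0, 1.0]])  # assumed operator; symmetric part = I, so G is monotone
q = np.array([0.5, -1.0])
G = lambda x: M @ x + q
f = lambda x, y: float(G(x) @ (y - x))    # f(x, y) = <G(x), y - x>
L = np.linalg.norm(M, 2)                  # Lipschitz constant of G: spectral norm, sqrt(5) here
c1 = c2 = L / 2.0

rng = np.random.default_rng(1)
worst_monotone = -np.inf  # largest f(x, y) + f(y, x); monotonicity needs <= 0
worst_lipschitz = np.inf  # smallest f(x,y) + f(y,z) + c1|x-y|^2 + c2|y-z|^2 - f(x,z); needs >= 0
for _ in range(5000):
    x, y, z = rng.uniform(-5.0, 5.0, size=(3, 2))
    worst_monotone = max(worst_monotone, f(x, y) + f(y, x))
    gap = f(x, y) + f(y, z) + c1 * np.sum((x - y) ** 2) + c2 * np.sum((y - z) ** 2) - f(x, z)
    worst_lipschitz = min(worst_lipschitz, gap)
print(worst_monotone <= 1e-9, worst_lipschitz >= -1e-8)
```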

Definition 2.

[44] The metric projection of $u \in E$ onto a closed, convex subset $C$ of $E$ is defined as follows:

$$P_C(u) = \arg\min_{y \in C} \|y - u\|.$$

Lemma 1.

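[45] Let $P_C: E \to C$ be the metric projection from $E$ onto $C$. Then

- (i) For each $x \in C$ and $y \in E$, $\|x - P_C(y)\|^2 + \|P_C(y) - y\|^2 \le \|x - y\|^2$;

- (ii) $z = P_C(x)$ if and only if $\langle x - z, y - z \rangle \le 0$ for all $y \in C$.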
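Both properties of the metric projection can be checked numerically on a box, where $P_C$ is coordinatewise clipping. The first is $\|x - P_C(y)\|^2 + \|P_C(y) - y\|^2 \le \|x - y\|^2$ for $x \in C$. The second is the variational characterization $\langle y - P_C(y), x - P_C(y) \rangle \le 0$ for all $x \in C$. Expanding $\|x - y\|^2$ around $P_C(y)$ shows that the first follows from the second. The box, the sampling distribution and the helper name are assumptions for illustration.

```python
import numpy as np

def project_box(u, lower, upper):
    """Metric projection onto the box C = [lower, upper]: clip each coordinate."""
    return np.clip(u, lower, upper)

rng = np.random.default_rng(2)
lower, upper = -np.ones(3), np.ones(3)
worst_i, worst_ii = -np.inf, -np.inf
for _ in range(5000):
    y = rng.normal(scale=3.0, size=3)  # arbitrary point of E = R^3
    x = rng.uniform(lower, upper)      # arbitrary point of C
    z = project_box(y, lower, upper)   # z = P_C(y)
    # (i): ||x - P_C(y)||^2 + ||P_C(y) - y||^2 - ||x - y||^2 should be <= 0
    worst_i = max(worst_i, np.sum((x - z) ** 2) + np.sum((z - y) ** 2) - np.sum((x - y) ** 2))
    # (ii): <y - z, x - z> <= 0 characterizes z = P_C(y)
    worst_ii = max(worst_ii, float((y - z) @ (x - z)))
print(worst_i <= 1e-9, worst_ii <= 1e-9)
```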

This section concludes with a few crucial lemmas that are useful in investigating the convergence of the proposed methods.

Lemma 2.

[46] Let $C$ be a nonempty, closed and convex subset of a real Hilbert space $E$ and let $h: C \to \mathbb{R}$ be a convex, subdifferentiable and lower semicontinuous function on $C$. Then $x \in C$ is a minimizer of $h$ if and only if $0 \in \partial h(x) + N_C(x)$, where $\partial h(x)$ and $N_C(x)$ stand for the subdifferential of $h$ at $x$ and the normal cone of $C$ at $x$, respectively.

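Lemma 3.

([47], Page 31). For every $x, y \in E$ and $\ell \in \mathbb{R}$, the following relation holds:

$$\|\ell x + (1 - \ell) y\|^2 = \ell \|x\|^2 + (1 - \ell)\|y\|^2 - \ell(1 - \ell)\|x - y\|^2.$$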
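Expanding both sides of the identity in Lemma 3 gives the same expression $\ell^2\|x\|^2 + 2\ell(1 - \ell)\langle x, y \rangle + (1 - \ell)^2\|y\|^2$, so the identity holds for every real $\ell$, not only for $\ell \in [0, 1]$. The short check below evaluates the difference of the two sides for random vectors and several values of $\ell$, including values outside $[0, 1]$. The vectors and the function name are illustrative assumptions.

```python
import numpy as np

def lemma3_gap(x, y, ell):
    """||ell*x + (1-ell)*y||^2 minus the right-hand side of Lemma 3 (zero up to rounding)."""
    lhs = np.sum((ell * x + (1.0 - ell) * y) ** 2)
    rhs = ell * np.sum(x ** 2) + (1.0 - ell) * np.sum(y ** 2) - ell * (1.0 - ell) * np.sum((x - y) ** 2)
    return lhs - rhs

rng = np.random.default_rng(3)
gaps = [lemma3_gap(rng.normal(size=4), rng.normal(size=4), ell) for ell in (-2.0, 0.0, 0.3, 1.0, 1.7)]
print(max(abs(g) for g in gaps))  # of the order of machine precision
```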

Lemma 4.

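[48] If $\{\varphi_n\}$, $\{\alpha_n\}$ and $\{\beta_n\}$ are sequences in $[0, +\infty)$ such that

$$\varphi_{n+1} \le \varphi_n + \alpha_n(\varphi_n - \varphi_{n-1}) + \beta_n, \quad \forall n \ge 1,$$

holds with $\sum_{n=1}^{+\infty} \beta_n < +\infty$ and there exists $\alpha$ such that $0 \le \alpha_n \le \alpha < 1$ for all $n$, then the following items are true.

- $\sum_{n=1}^{+\infty} [\varphi_n - \varphi_{n-1}]_+ < +\infty$, with $[t]_+ := \max\{t, 0\}$;

- $\lim_{n \to +\infty} \varphi_n = \varphi^* \in [0, +\infty)$.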

Lemma 5.

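[49] Let $\{x_n\}$ be a sequence in $E$ and let $C \subset E$ be a nonempty set such that

- For each $x^* \in C$, $\lim_{n \to \infty} \|x_n - x^*\|$ exists;

- Every sequential weak cluster point of $\{x_n\}$ lies in $C$.

Then $\{x_n\}$ converges weakly to an element of $C$.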

Lemma 6.

[50] Assume that are real sequences such that Take and Then there exists a sequence such that and

Using the above lemma together with the Lipschitz-type condition on the bifunction f, we obtain the following inequality.

Corollary 1.

Assume that the bifunction f satisfies the Lipschitz-type condition on C with positive constants and Let where and Then there exists a positive real number λ such that

and where

Assumption 1.

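Let a bifunction $f: E \times E \to \mathbb{R}$ satisfy the following conditions:

- $f(y, y) = 0$ for all $y \in C$, and $f$ is pseudomonotone on the feasible set $C$;

- $f$ satisfies the Lipschitz-type condition on $E$ with constants $c_1 > 0$ and $c_2 > 0$;

- $\limsup_{n \to \infty} f(y_n, y) \le f(p^*, y)$ for all $y \in C$ and $\{y_n\} \subset C$ satisfying $y_n \rightharpoonup p^*$;

- $f(x, \cdot)$ is convex and subdifferentiable over $E$ for every fixed $x \in E$.

Since $f(x, \cdot)$ is convex and subdifferentiable on $E$ for each fixed $x \in E$, the subdifferential of $f(x, \cdot)$ at $y \in E$ is defined as:

$$\partial_2 f(x, y) = \{ z \in E : f(x, v) - f(x, y) \ge \langle z, v - y \rangle, \ \forall v \in E \}.$$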

3. An Algorithm and Its Convergence Analysis

We develop a method and establish a weak convergence result for it. We consider a bifunction f that satisfies the conditions of Assumption 1 and $EP(f, C) \neq \emptyset$. The detailed method is given below.

Lemma 7.

Let the sequence be generated by Algorithm 1. Then the following relationship holds.

Proof.

By the definition of we have

By using Lemma 2, we obtain

From the above expression, there exist and such that

Thus, we have

Since we have for all Thus, we have

Since we obtain

Lemma 8.

Let the sequence be generated by Algorithm 1. Then the following inequality holds.

Proof.

By the definition of we have

Thus, there exist and such that

The above expression implies that

Since we have for all This implies that

By we obtain

Algorithm 1 (The Modified Popov’s subgradient extragradient method for pseudomonotone equilibrium problems)

Lemma 9.

Let and be sequences generated by Algorithm 1. Then the following inequality holds.

Proof.

Since the definition of gives

The above implies that

By with we obtain the following

Lemma 10.

If and in Algorithm 1, then is a solution of Equation (1).

Proof.

Setting and in Lemma 9, we get

By means of in Lemma 7, we get

Since and it follows that for all □

Remark 1.

(i) If in Algorithm 1, then This follows directly from Lemma 7.

(ii) If in Algorithm 1, then This follows directly from Lemma 8.

Lemma 11.

Let the bifunction satisfy the assumptions (–). Then, for each we have

Proof.

By substituting into Lemma 7, we get

Since it follows that . By the pseudomonotonicity of the bifunction f, we get Therefore, from Equation (11), we obtain

Corollary 1 implies that in Equation (6) is well-defined and

Since and using Lemma 9, we have

By elementary vector algebra, we have the following facts:

From the last two inequalities and Equation (16), we obtain

The triangle inequality and elementary algebra give the following inequality

From the above two inequalities, we obtain the desired result

□

Theorem 1.

Suppose that the bifunction satisfies Assumption 1. Then, for all the sequences and generated by Algorithm 1 converge weakly to

Proof.

From Lemma 11, we have

By the definition of in Algorithm 1, we may write

By Algorithm 1, and the above inequality ensures

From the definition of in Algorithm 1, we obtain

By the definition of and the Cauchy inequality, we obtain

Next, we compute

The relationship in Equation (32) implies that the sequence is nonincreasing. Furthermore, by the definition of we have

Additionally, by the definition of we have

The expression in Equation (35) implies that

By Equation (21), we have

Fix and use the above equation for Summing up, we get

Letting in the above expression, we have

and

Using the triangle inequality, we can easily derive the following from the above expressions

Moreover, from the relationship in Equation (27), we have

The above expression, together with Equations (37) and (42) and Lemma 4, implies that the limits of and exist for each and that the sequences , and are bounded. We need to establish that every sequential weak limit point of the sequence lies in Let z be any sequential weak cluster point of the sequence i.e., there exists a subsequence of that converges weakly to z; it follows that also converges weakly to z. Our purpose is to prove Using Lemma 7 with Equations (13) and (15), we obtain

for any y in Equations (38), (43) and (44), together with the boundedness of the sequence imply that the right-hand side of the above inequality converges to zero. Using condition () of Assumption 1 and we obtain

Then implies that for all This means that By Lemma 5, the sequences and converge weakly to □

Setting in Algorithm 1 and following Theorem 1, we obtain a variant of the extragradient method of Liu et al. [42] that is improved in terms of the stepsize.

Corollary 2.

Let the bifunction satisfy Assumption 1. For every the sequences and are generated as follows:

- Initialization: Given and

- Iterative steps: For given and construct a half-space

- where

- Step 1: Compute

- Step 2: Update the stepsize as follows

- and compute

- Then and weakly converge to the solution

4. Solving Variational Inequality Problems with New Self-Adaptive Methods

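We now apply the above results to variational inequality problems involving a pseudomonotone and Lipschitz continuous operator. The variational inequality problem is written as follows:

$$\text{Find } p^* \in C \text{ such that } \langle G(p^*), y - p^* \rangle \ge 0, \quad \forall y \in C.$$

An operator $G: E \to E$ is

- monotone on $C$ if $\langle G(x) - G(y), x - y \rangle \ge 0$ for all $x, y \in C$;

- $L$-Lipschitz continuous on $C$ if $\|G(x) - G(y)\| \le L \|x - y\|$ for all $x, y \in C$;

- pseudomonotone on $C$ if $\langle G(x), y - x \rangle \ge 0 \Longrightarrow \langle G(y), x - y \rangle \le 0$ for all $x, y \in C$.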

Note: If we choose the bifunction $f(x, y) := \langle G(x), y - x \rangle$ for all $x, y \in C$, then the equilibrium problem transforms into the above variational inequality problem with $c_1 = c_2 = \frac{L}{2}$. This means that, from the definitions of in Algorithm 1 and the above definition of the bifunction f, we have

Similarly to the expression in Equation (48), the value from Algorithm 1 becomes

Since and by the definition of the subdifferential, we obtain

and consequently for all This implies that
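The reduction above can be checked in a small case. For $f(x, y) = \langle G(x), y - x \rangle$, the convex subproblem $\arg\min_{y \in C}\{\lambda f(x, y) + \tfrac12\|u - y\|^2\}$ equals $\tfrac12\|y - (u - \lambda G(x))\|^2$ plus a constant, so its minimizer is $P_C(u - \lambda G(x))$. Lemma 2 expresses the same fact as $u - \lambda G(x) - y^* \in N_C(y^*)$. The sketch below verifies both statements on a box. The random data, $\lambda = 0.7$ and the function names are assumptions for illustration.

```python
import itertools
import numpy as np

def prox_step_vi(g, u, lam, lower, upper):
    """argmin_{y in box} lam*<g, y - x> + 0.5*|u - y|^2 with g = G(x): equals P_C(u - lam*g)."""
    return np.clip(u - lam * g, lower, upper)

def objective(y, g, x, u, lam):
    return lam * float(g @ (y - x)) + 0.5 * float(np.sum((u - y) ** 2))

rng = np.random.default_rng(4)
lower, upper = np.zeros(3), np.ones(3)
x, u, g, lam = rng.uniform(lower, upper), rng.normal(size=3), rng.normal(size=3), 0.7
y_star = prox_step_vi(g, u, lam, lower, upper)

# Lemma 2: 0 lies in lam*g + (y_star - u) + N_C(y_star), i.e. v = u - lam*g - y_star is in N_C(y_star).
v = u - lam * g - y_star
worst_normal = max(float(v @ (np.array(c) - y_star)) for c in itertools.product(*zip(lower, upper)))
best_sampled = min(objective(rng.uniform(lower, upper), g, x, u, lam) for _ in range(20_000))
print(worst_normal <= 1e-12, objective(y_star, g, x, u, lam) <= best_sampled + 1e-12)
```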

Assumption 2.

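We assume that G satisfies the following conditions:

- G is monotone on $C$ and $VI(G, C)$ is nonempty;

- G is pseudomonotone on $C$ and $VI(G, C)$ is nonempty;

- G is $L$-Lipschitz continuous on $C$ with a positive constant $L$;

- $\limsup_{n \to \infty} \langle G(x_n), y - x_n \rangle \le \langle G(p^*), y - p^* \rangle$ for every $y \in C$ and $\{x_n\} \subset C$ satisfying $x_n \rightharpoonup p^*$.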

The following results for solving variational inequality problems are derived from our main results.

Corollary 3.

Assume that G satisfies () in Assumption 2. Let and be the sequences generated as follows:

- Initialization: Choose for a nondecreasing sequence such that and

- Iterative steps: For given and construct a half space

- where

- Step 1: Compute

- Step 2: The stepsize is updated as follows

- and compute

- Then the sequences and converge weakly to of

Corollary 4.

Assume that G satisfies () in Assumption 2. Let and be the sequences generated as follows:

- Initialization: Choose and

- Iterative steps: For given and construct a half space

- Step 1: Compute

- Step 2: The stepsize is updated as follows

- and compute

- Then and converge weakly to the solution of

We note that if G is monotone, then condition () can be removed. Assumption () is required to ensure that satisfies condition (). In addition, condition () is required to show after the inequality in Equation (47). This means that condition () is used to prove Now we prove that by using the monotonicity of the operator G. Since G is monotone, we have

By and Equation (46), we have

Since and for all Let for all By the convexity of C, the value for each We obtain

That is, for all By letting as and using the continuity of G, we obtain for each which implies that

Corollary 5.

Assume that G satisfies () in Assumption 2. Let and be the sequences generated as follows:

- Initialization: Choose for a nondecreasing sequence such that and

- Iterative steps: For given and construct a half space

- where

- Step 1:

- Step 2: The stepsize is updated as follows

- and compute

- Then the sequences and converge weakly to of

Corollary 6.

Assume that G satisfies () in Assumption 2. Let and be the sequences generated as follows:

- Initialization: Choose and

- Iterative steps: For given and construct a half space

- Step 1:

- Step 2: The stepsize is updated as follows

- and compute

- Then and converge weakly to of

5. Computational Experiment

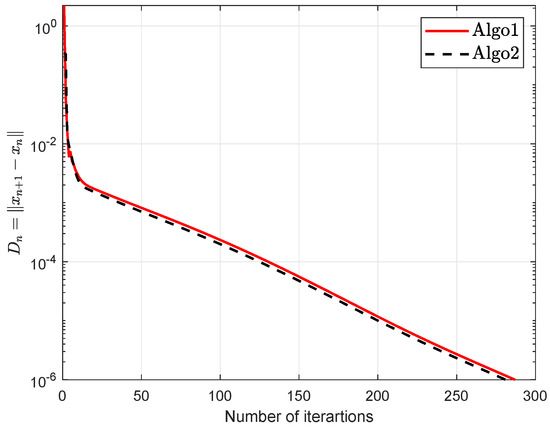

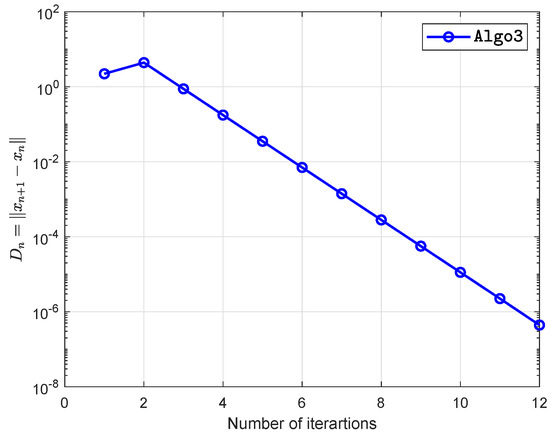

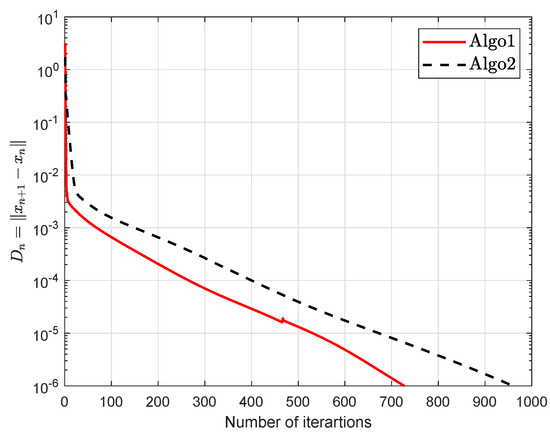

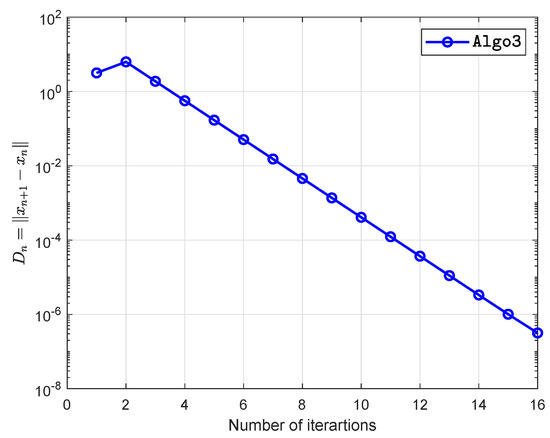

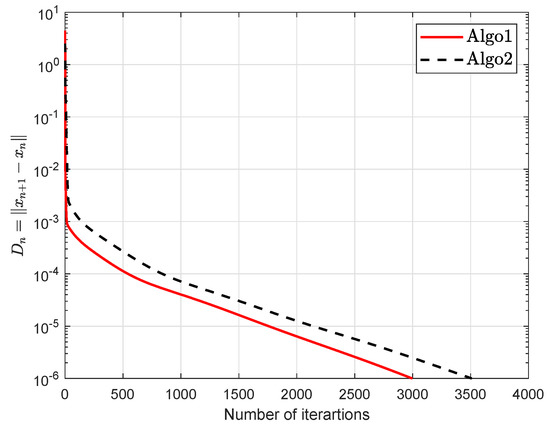

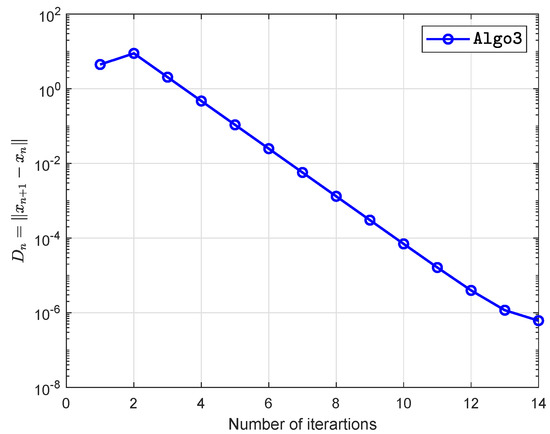

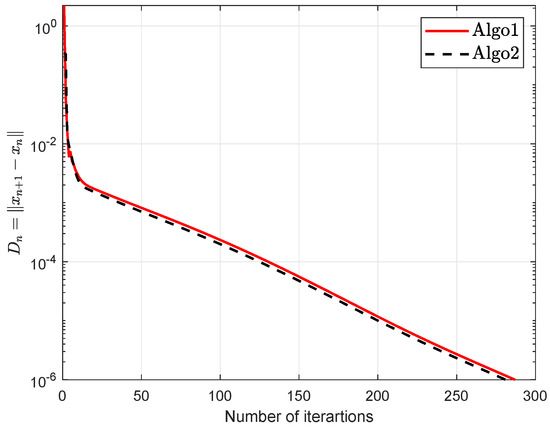

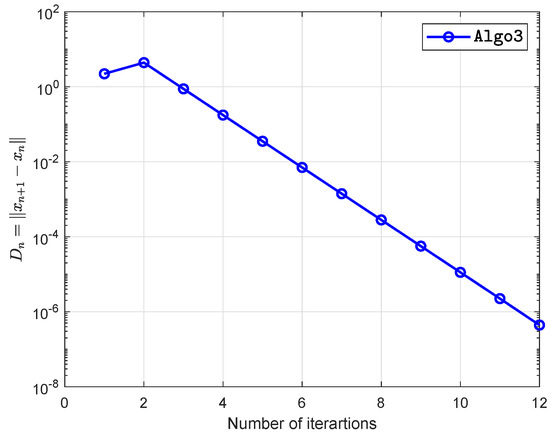

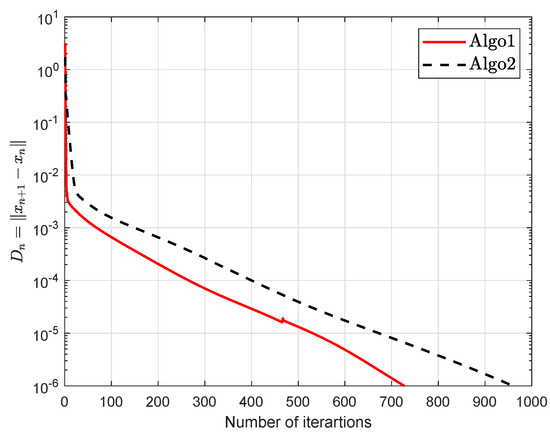

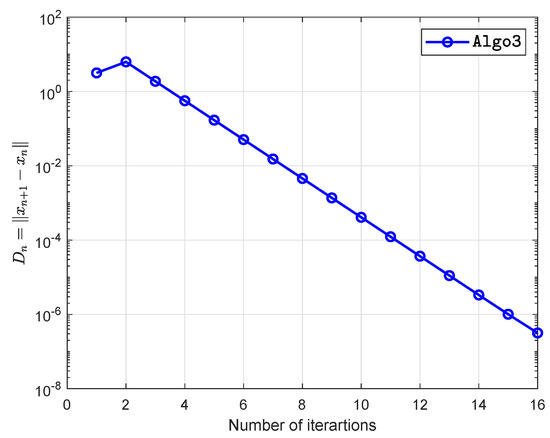

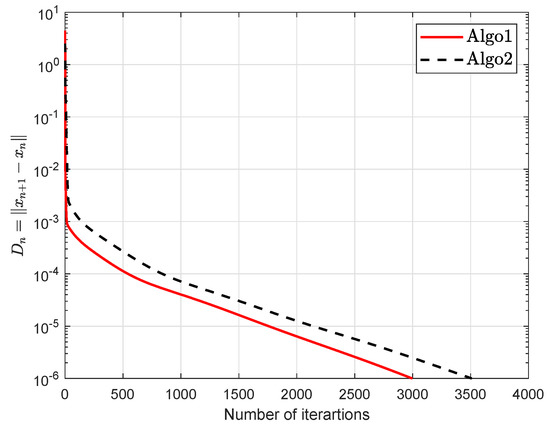

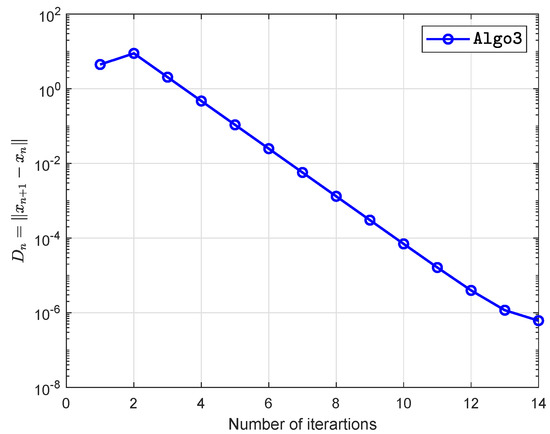

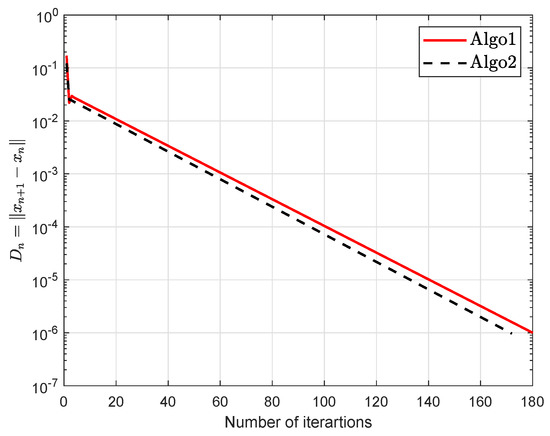

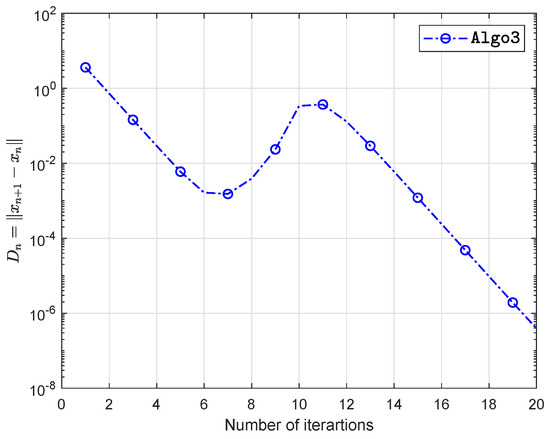

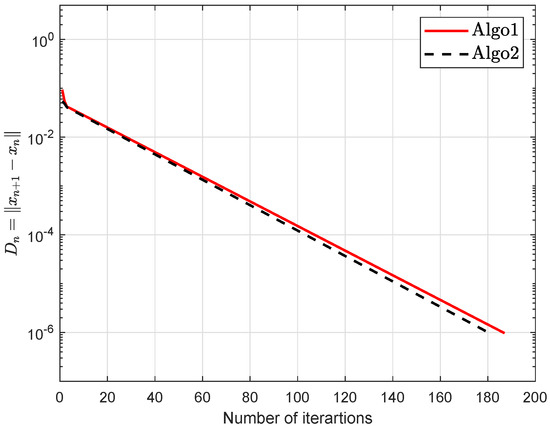

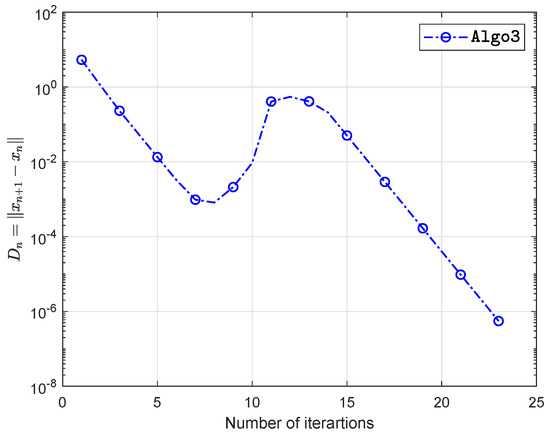

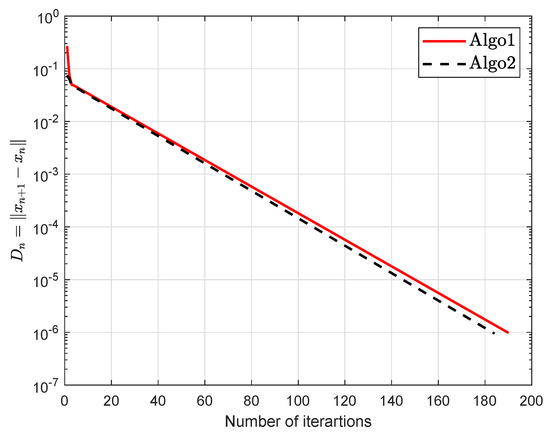

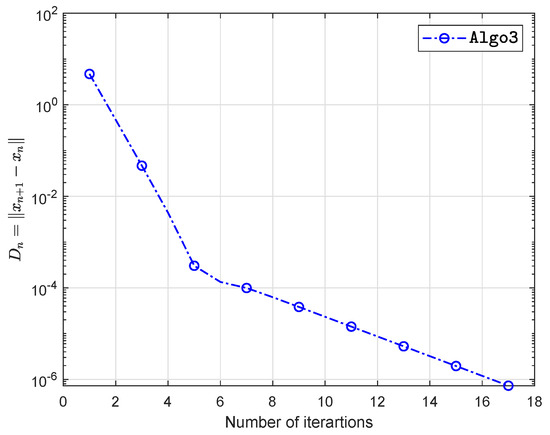

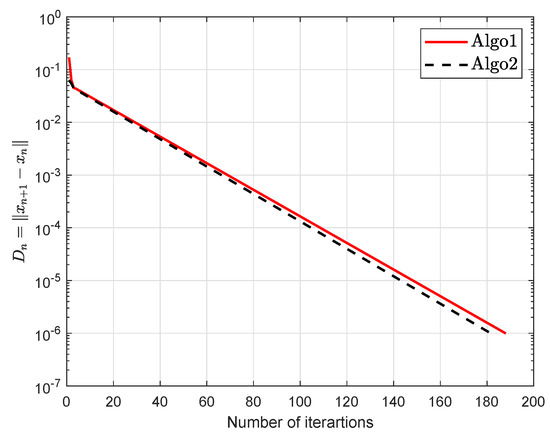

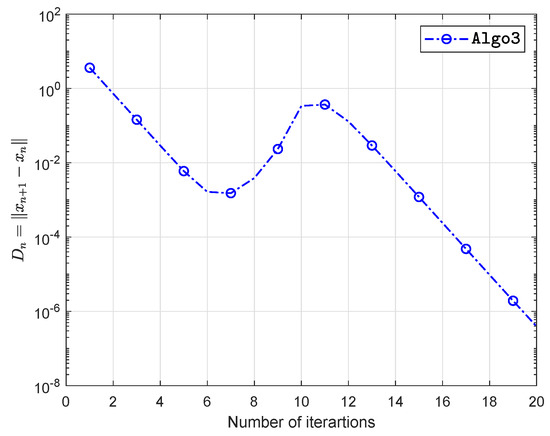

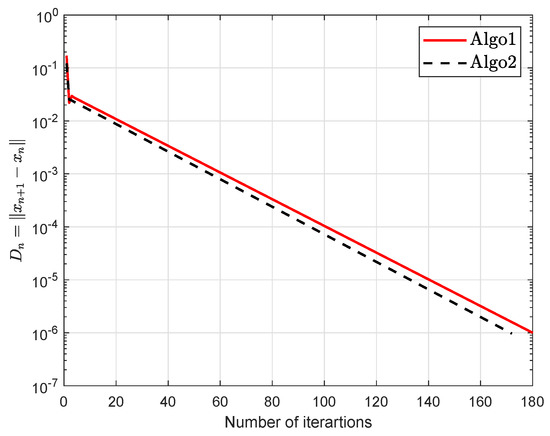

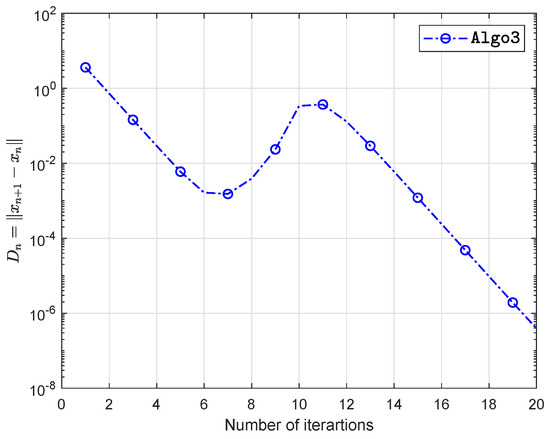

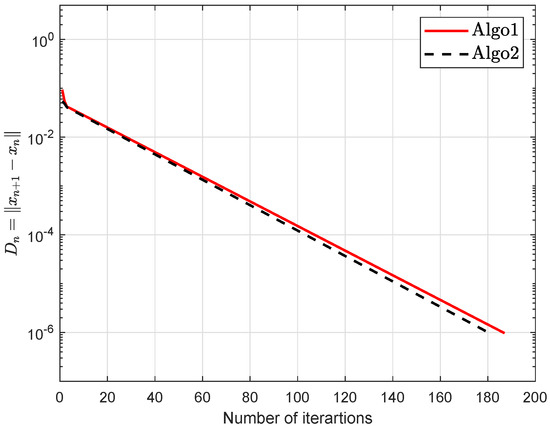

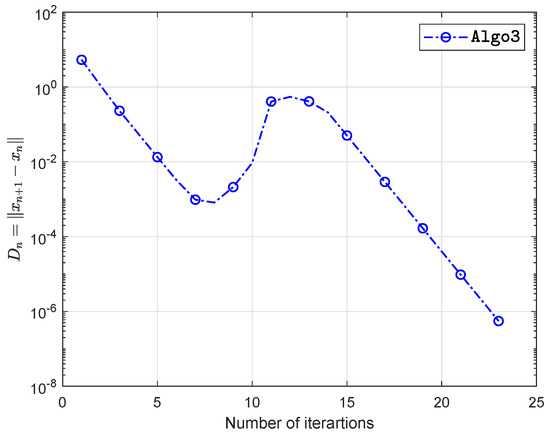

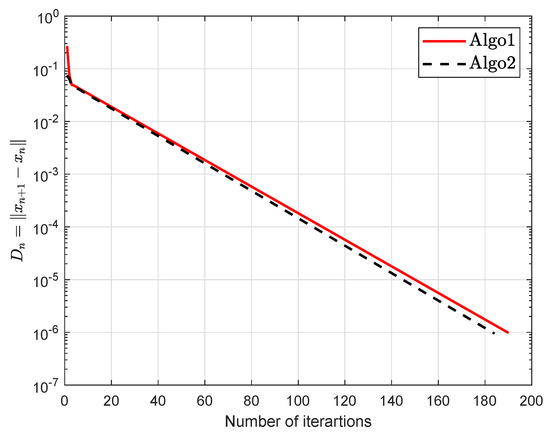

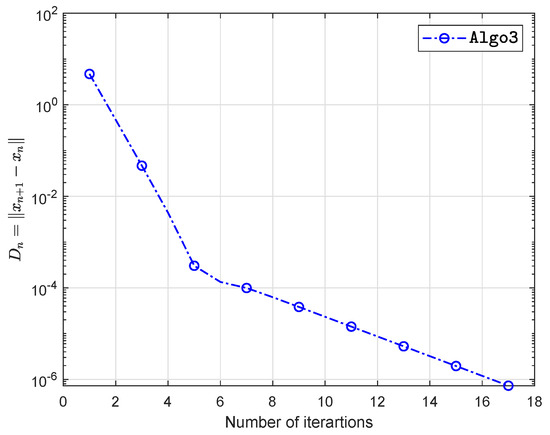

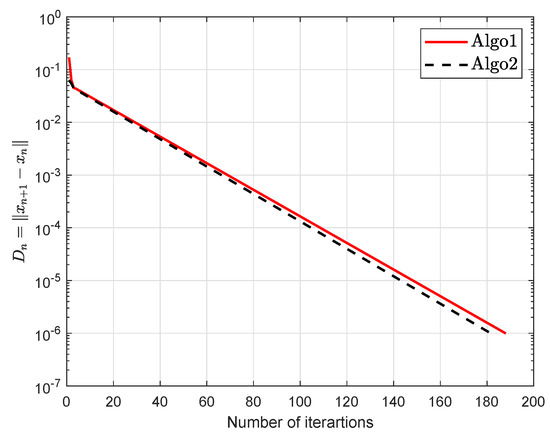

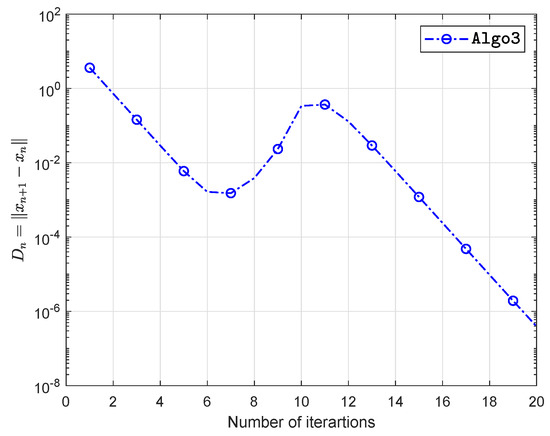

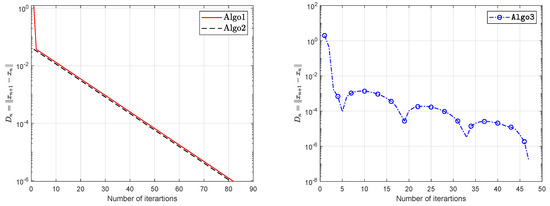

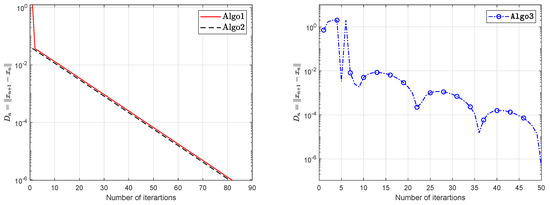

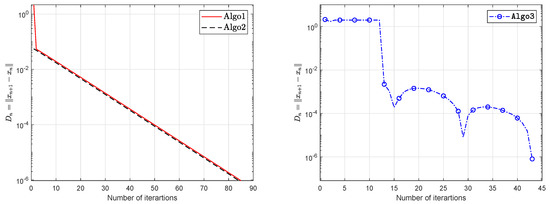

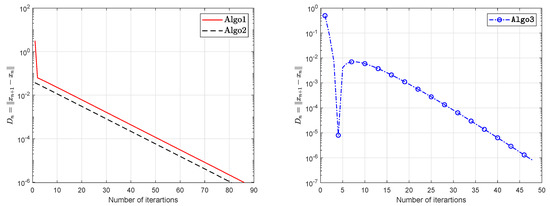

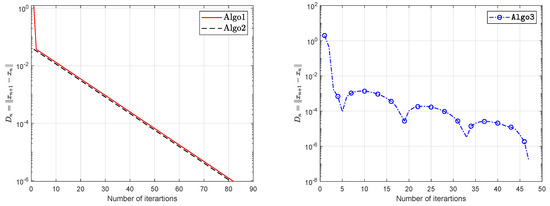

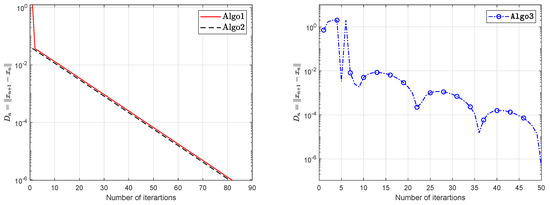

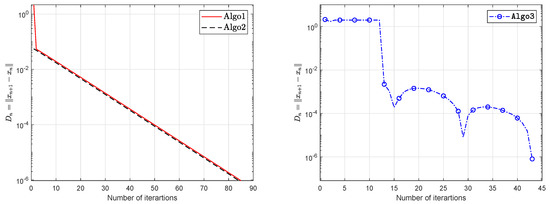

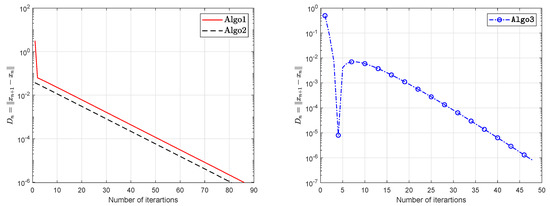

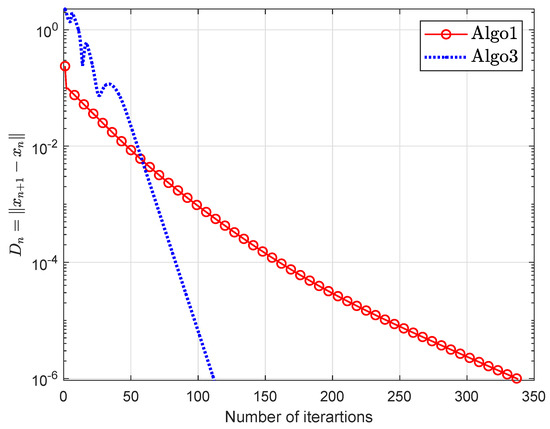

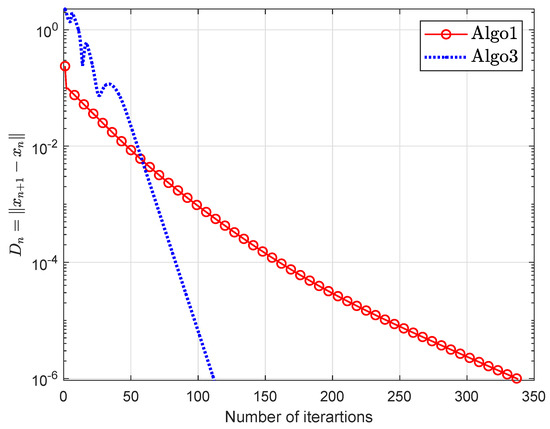

The numerical results presented in this section demonstrate the performance of our proposed methods. The MATLAB codes were run in MATLAB version 9.5 (R2018b) on a PC with an Intel(R) Core(TM) i5-6200 CPU @ 2.30 GHz 2.40 GHz and 8.00 GB of RAM. In these examples, the x-axis indicates the number of iterations or the execution time (in seconds) and the y-axis represents the values We compare Algorithm 1 (Algo3) with the methods of Lyashko et al. [33] (Algo1) and Liu et al. [42] (Algo2).

Example 1.

Suppose that $f: C \times C \to \mathbb{R}$ is defined by

$$f(x, y) = \langle Ax + By + d, y - x \rangle,$$

where $d \in \mathbb{R}^n$ and $A$, $B$ are matrices of order $n$ such that $B$ is symmetric positive semidefinite and $B - A$ is symmetric negative definite, with Lipschitz-type constants $c_1 = c_2 = \frac{1}{2}\|A - B\|$ (for more details, see [20]). In Example 1, the matrices are generated randomly (two matrices E and F are randomly generated with entries from The matrix and ), and the entries of d are randomly chosen from The constraint set is given as
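The structural assumptions of this Nash–Cournot bifunction can be checked directly. One has $f(x, y) + f(y, x) = -\langle (A - B)(x - y), x - y \rangle$, and, using the symmetry of $B$, $f(x, y) + f(y, z) - f(x, z) = \langle (A - B)(y - x), z - y \rangle$, which gives the constants $c_1 = c_2 = \tfrac12\|A - B\|$. The random generator below is a hypothetical construction, not the one used in the experiments: $B = E^\top E$, $A = B + F^\top F + I$, entries drawn from $[-1, 1]$. With it, $B - A = -(F^\top F + I)$ is symmetric negative definite and $f(x, y) + f(y, x) \le -\|x - y\|^2$.

```python
import numpy as np

def make_cournot_instance(n, seed=0):
    """Hypothetical random instance of f(x, y) = <A x + B y + d, y - x>.

    Assumed construction: B = E^T E (symmetric positive semidefinite) and A = B + F^T F + I,
    so that B - A = -(F^T F + I) is symmetric negative definite.
    """
    rng = np.random.default_rng(seed)
    E = rng.uniform(-1.0, 1.0, size=(n, n))
    F = rng.uniform(-1.0, 1.0, size=(n, n))
    B = E.T @ E
    A = B + F.T @ F + np.eye(n)
    d = rng.uniform(-1.0, 1.0, size=n)
    f = lambda x, y: float((A @ x + B @ y + d) @ (y - x))
    c = np.linalg.norm(A - B, 2) / 2.0  # c1 = c2 = ||A - B|| / 2 (spectral norm)
    return f, c

f, c = make_cournot_instance(5)
rng = np.random.default_rng(5)
worst_monotone, worst_lipschitz = -np.inf, np.inf
for _ in range(3000):
    x, y, z = rng.uniform(-5.0, 5.0, size=(3, 5))
    # f(x, y) + f(y, x) = -<(A - B)(x - y), x - y> <= -|x - y|^2 because A - B - I is PSD
    worst_monotone = max(worst_monotone, f(x, y) + f(y, x) + np.sum((x - y) ** 2))
    # Lipschitz-type residual with c1 = c2 = c: should stay >= 0
    gap = f(x, y) + f(y, z) + c * np.sum((x - y) ** 2) + c * np.sum((y - z) ** 2) - f(x, z)
    worst_lipschitz = min(worst_lipschitz, gap)
print(worst_monotone <= 1e-6, worst_lipschitz >= -1e-6)
```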

The numerical results are shown in Figure 1, Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6 and Table 1 with and

Figure 1.

Example 1 when

Figure 2.

Example 1 when

Figure 3.

Example 1 when

Figure 4.

Example 1 when

Figure 5.

Example 1 when

Figure 6.

Example 1 when

Example 2.

Let be defined as

where We see that

which shows that the bifunction f is monotone. The numerical results are shown in Figure 7, Figure 8, Figure 9, Figure 10, Figure 11, Figure 12, Figure 13 and Figure 14 and Table 2 with and

Figure 7.

Example 2 when

Figure 8.

Example 2 when

Figure 9.

Example 2 when

Figure 10.

Example 2 when

Figure 11.

Example 2 when

Figure 12.

Example 2 when

Figure 13.

Example 2 when

Figure 14.

Example 2 when

Example 3.

Let be defined by

and let The operator G is Lipschitz continuous with and pseudomonotone. In this experiment, we use with the stepsize chosen according to Lyashko et al. [33] and Liu et al. [42]. We take , and The experimental results are shown in Table 3 and Figure 15, Figure 16, Figure 17 and Figure 18.

Figure 15.

Example 3 when

Figure 16.

Example 3 when

Figure 17.

Example 3 when

Figure 18.

Example 3 when

Example 4.

Let be defined by

where A is a symmetric positive semidefinite matrix and is the proximal mapping associated with the function such that

The properties of A and of the proximal mapping B imply that G is monotone on C [45]. The feasible set is given as follows:

Table 4.

Example 4: The numerical results for Figure 19.

Figure 19.

Example 4 when

6. Conclusions

We have developed extragradient-like methods for solving pseudomonotone equilibrium problems and different classes of variational inequality problems in real Hilbert spaces. The main advantage of our methods is an explicit formula for evaluating the stepsize, which is updated at each iteration based on the previous iterations. Numerical results were reported to demonstrate the numerical effectiveness of our methods relative to other methods. These numerical studies suggest that, in this setting, inertial effects also generally improve the effectiveness of the iterative sequence.

Author Contributions

The authors contributed equally to writing this article. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was financially supported by King Mongkut’s University of Technology Thonburi through the ‘KMUTT 55th Anniversary Commemorative Fund’. Moreover, this project was supported by Theoretical and Computational Science (TaCS) Center under Computational and Applied Science for Smart research Innovation research Cluster (CLASSIC), Faculty of Science, KMUTT. In particular, Habib ur Rehman was financed by the Petchra Pra Jom Doctoral Scholarship Academic for Ph.D. Program at KMUTT [grant number 39/2560]. Furthermore, Wiyada Kumam was financially supported by the Rajamangala University of Technology Thanyaburi (RMUTTT) (Grant No. NSF62D0604).

Acknowledgments

The first author would like to thank the “Petchra Pra Jom Klao Ph.D. Research Scholarship from King Mongkut’s University of Technology Thonburi”. We are very grateful to the editor and the anonymous referees for their valuable and useful comments, which helped improve the quality of this work.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Blum, E.; Oettli, W. From optimization and variational inequalities to equilibrium problems. Math. Stud. 1994, 63, 123–145.

- Facchinei, F.; Pang, J.S. Finite-Dimensional Variational Inequalities and Complementarity Problems; Springer Science & Business Media: New York, NY, USA, 2007.

- Konnov, I. Equilibrium Models and Variational Inequalities; Elsevier: Amsterdam, The Netherlands, 2007; Volume 210.

- Yang, Q.; Bian, X.; Stark, R.; Fresemann, C.; Song, F. Configuration Equilibrium Model of Product Variant Design Driven by Customer Requirements. Symmetry 2019, 11, 508.

- Muu, L.D.; Oettli, W. Convergence of an adaptive penalty scheme for finding constrained equilibria. Nonlinear Anal. Theory Methods Appl. 1992, 18, 1159–1166.

- Fan, K. A Minimax Inequality and Applications, Inequalities III; Shisha, O., Ed.; Academic Press: New York, NY, USA, 1972.

- Yuan, G.X.Z. KKM Theory and Applications in Nonlinear Analysis; CRC Press: Boca Raton, FL, USA, 1999; Volume 218.

- Brézis, H.; Nirenberg, L.; Stampacchia, G. A remark on Ky Fan’s minimax principle. Boll. Dell Unione Mat. Ital. 2008, 1, 257–264.

- Rehman, H.U.; Kumam, P.; Sompong, D. Existence of tripled fixed points and solution of functional integral equations through a measure of noncompactness. Carpathian J. Math. 2019, 35, 193–208.

- Rehman, H.U.; Gopal, G.; Kumam, P. Generalizations of Darbo’s fixed point theorem for new condensing operators with application to a functional integral equation. Demonstr. Math. 2019, 52, 166–182.

- Combettes, P.L.; Hirstoaga, S.A. Equilibrium programming in Hilbert spaces. J. Nonlinear Convex Anal. 2005, 6, 117–136.

- Flåm, S.D.; Antipin, A.S. Equilibrium programming using proximal-like algorithms. Math. Program. 1996, 78, 29–41.

- Van Hieu, D.; Muu, L.D.; Anh, P.K. Parallel hybrid extragradient methods for pseudomonotone equilibrium problems and nonexpansive mappings. Numer. Algorithms 2016, 73, 197–217.

- Van Hieu, D.; Anh, P.K.; Muu, L.D. Modified hybrid projection methods for finding common solutions to variational inequality problems. Comput. Optim. Appl. 2017, 66, 75–96.

- Van Hieu, D. Halpern subgradient extragradient method extended to equilibrium problems. Rev. Real Acad. De Cienc. Exactas Fís. Nat. Ser. A Mat. 2017, 111, 823–840.

- Van Hieu, D. Parallel extragradient-proximal methods for split equilibrium problems. Math. Model. Anal. 2016, 21, 478–501.

- Konnov, I. Application of the proximal point method to nonmonotone equilibrium problems. J. Optim. Theory Appl. 2003, 119, 317–333.

- Duc, P.M.; Muu, L.D.; Quy, N.V. Solution-existence and algorithms with their convergence rate for strongly pseudomonotone equilibrium problems. Pac. J. Optim. 2016, 12, 833–845.

- Quoc, T.D.; Anh, P.N.; Muu, L.D. Dual extragradient algorithms extended to equilibrium problems. J. Glob. Optim. 2012, 52, 139–159.

- Quoc Tran, D.; Le Dung, M.; Nguyen, V.H. Extragradient algorithms extended to equilibrium problems. Optimization 2008, 57, 749–776.

- Santos, P.; Scheimberg, S. An inexact subgradient algorithm for equilibrium problems. Comput. Appl. Math. 2011, 30, 91–107.

- Tada, A.; Takahashi, W. Weak and strong convergence theorems for a nonexpansive mapping and an equilibrium problem. J. Optim. Theory Appl. 2007, 133, 359–370.

- Takahashi, S.; Takahashi, W. Viscosity approximation methods for equilibrium problems and fixed point problems in Hilbert spaces. J. Math. Anal. Appl. 2007, 331, 506–515.

- Ur Rehman, H.; Kumam, P.; Cho, Y.J.; Yordsorn, P. Weak convergence of explicit extragradient algorithms for solving equilibirum problems. J. Inequal. Appl. 2019, 2019, 1–25.

- Rehman, H.U.; Kumam, P.; Kumam, W.; Shutaywi, M.; Jirakitpuwapat, W. The Inertial Sub-Gradient Extra-Gradient Method for a Class of Pseudo-Monotone Equilibrium Problems. Symmetry 2020, 12, 463.

- Ur Rehman, H.; Kumam, P.; Abubakar, A.B.; Cho, Y.J. The extragradient algorithm with inertial effects extended to equilibrium problems. Comput. Appl. Math. 2020, 39.

- Argyros, I.K.; Hilout, S. Computational Methods in Nonlinear Analysis: Efficient Algorithms, Fixed Point Theory and Applications; World Scientific: Singapore, 2013.

- Ur Rehman, H.; Kumam, P.; Cho, Y.J.; Suleiman, Y.I.; Kumam, W. Modified Popov’s explicit iterative algorithms for solving pseudomonotone equilibrium problems. Optim. Methods Softw. 2020, 1–32.

- Argyros, I.K.; Cho, Y.J.; Hilout, S. Numerical Methods for Equations and Its Applications; CRC Press: Boca Raton, FL, USA, 2012.

- Korpelevich, G. The extragradient method for finding saddle points and other problems. Matecon 1976, 12, 747–756.

- Antipin, A. Convex programming method using a symmetric modification of the Lagrangian functional. Ekon. Mat. Metod. 1976, 12, 1164–1173.

- Censor, Y.; Gibali, A.; Reich, S. The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 2011, 148, 318–335.

- Lyashko, S.I.; Semenov, V.V. A new two-step proximal algorithm of solving the problem of equilibrium programming. In Optimization and Its Applications in Control and Data Sciences; Springer: Cham, Switzerland, 2016; pp. 315–325.

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Moudafi, A. Second-order differential proximal methods for equilibrium problems. J. Inequal. Pure Appl. Math. 2003, 4, 1–7.

- Dong, Q.L.; Lu, Y.Y.; Yang, J. The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 2016, 65, 2217–2226.

- Thong, D.V.; Van Hieu, D. Modified subgradient extragradient method for variational inequality problems. Numer. Algorithms 2018, 79, 597–610.

- Dong, Q.; Cho, Y.; Zhong, L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2018, 70, 687–704.

- Yang, J. Self-adaptive inertial subgradient extragradient algorithm for solving pseudomonotone variational inequalities. Appl. Anal. 2019.

- Thong, D.V.; Van Hieu, D.; Rassias, T.M. Self adaptive inertial subgradient extragradient algorithms for solving pseudomonotone variational inequality problems. Optim. Lett. 2020, 14, 115–144.

- Liu, Y.; Kong, H. The new extragradient method extended to equilibrium problems. Rev. Real Acad. De Cienc. Exactas Fís. Nat. Ser. A Mat. 2019, 113, 2113–2126.

- Bianchi, M.; Schaible, S. Generalized monotone bifunctions and equilibrium problems. J. Optim. Theory Appl. 1996, 90, 31–43.

- Goebel, K.; Reich, S. Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings; Marcel Dekker, Inc.: New York, NY, USA, 1984.

- Kreyszig, E. Introductory Functional Analysis with Applications, 1st ed.; Wiley: New York, NY, USA, 1978.

- Tiel, J.V. Convex Analysis; John Wiley: New York, NY, USA, 1984.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; Springer: New York, NY, USA, 2011; Volume 408.

- Alvarez, F.; Attouch, H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001, 9, 3–11.

- Opial, Z. Weak convergence of the sequence of successive approximations for nonexpansive mappings. Bull. Am. Math. Soc. 1967, 73, 591–597.

- Dadashi, V.; Iyiola, O.S.; Shehu, Y. The subgradient extragradient method for pseudomonotone equilibrium problems. Optimization 2019.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).