FCM-Based Approach for Locating Visible Video Watermarks

Abstract

1. Introduction

- Perceptibility: a visible watermark should be noticeable in gray and color host images/videos.

- Distinguishably: a visible watermark should be clear enough to be recognized or identified as different in any region of the hosted image if the region has a different texture, plain, and edge.

- Noticeably: a visible watermark should not be too noticeable, so the quality value of the hosted image/video remains acceptable.

- Transparency: a visible watermark should not conceal or brighten the host image by a notably large amount; the watermarked area should not have any artifacts or feature loss, and it should remain perceptible by the Human Visual System (HVS), with no impact on non-watermarked areas.

- Robustness: a visible watermark should be optimized to be able to survive against common kinds of attacks.

- The watermark embedding process should be automatic for all kinds of images/videos.

- 2D and 3D watermarks: based on dimensionality, the embedded watermark can be 2D or 3D.

- Fixed or moving watermarks: the watermark can change its location on the screen (i.e., from one corner to another), and other watermarks can be fixed.

- Single or multiple watermarks: in the same video, we can have single or multiple watermarks in different locations on the video frame/s.

2. Proposed FCM-Based Approach for Locating a Visible Video Watermark

- Shape-based category: opaque, transparent, and animated.

- Dimensionality-based category: 2D and 3D.

- Image-based category: text or image.

- Location-based category: fixed frame location (the watermark displayed on a fixed location on the frame) or different location on different frames.

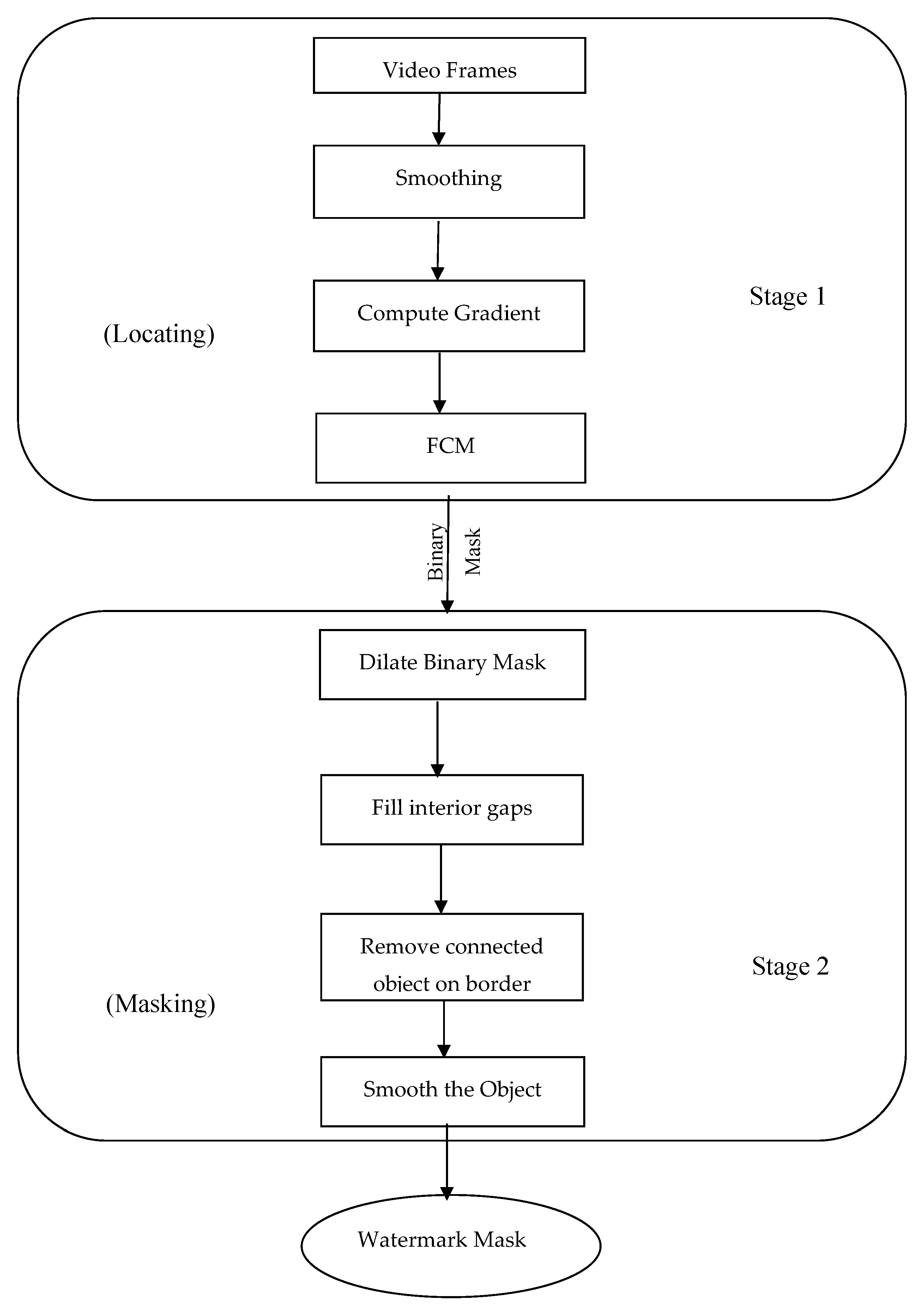

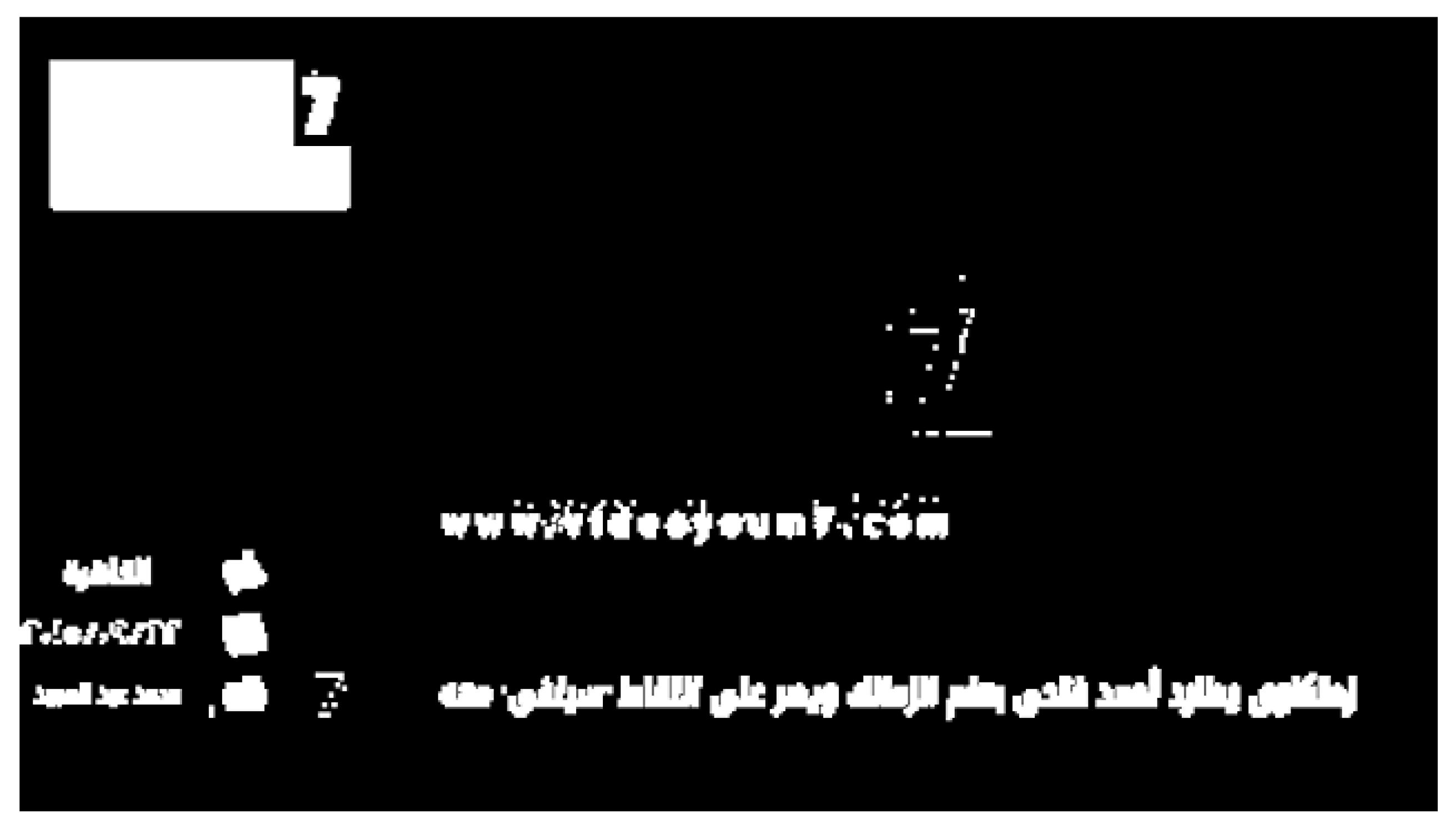

3. Two Stages of FCM-Based Technique for Locating Visible Watermark in Video

3.1. Edge Extraction Stage

3.1.1. Smoothing

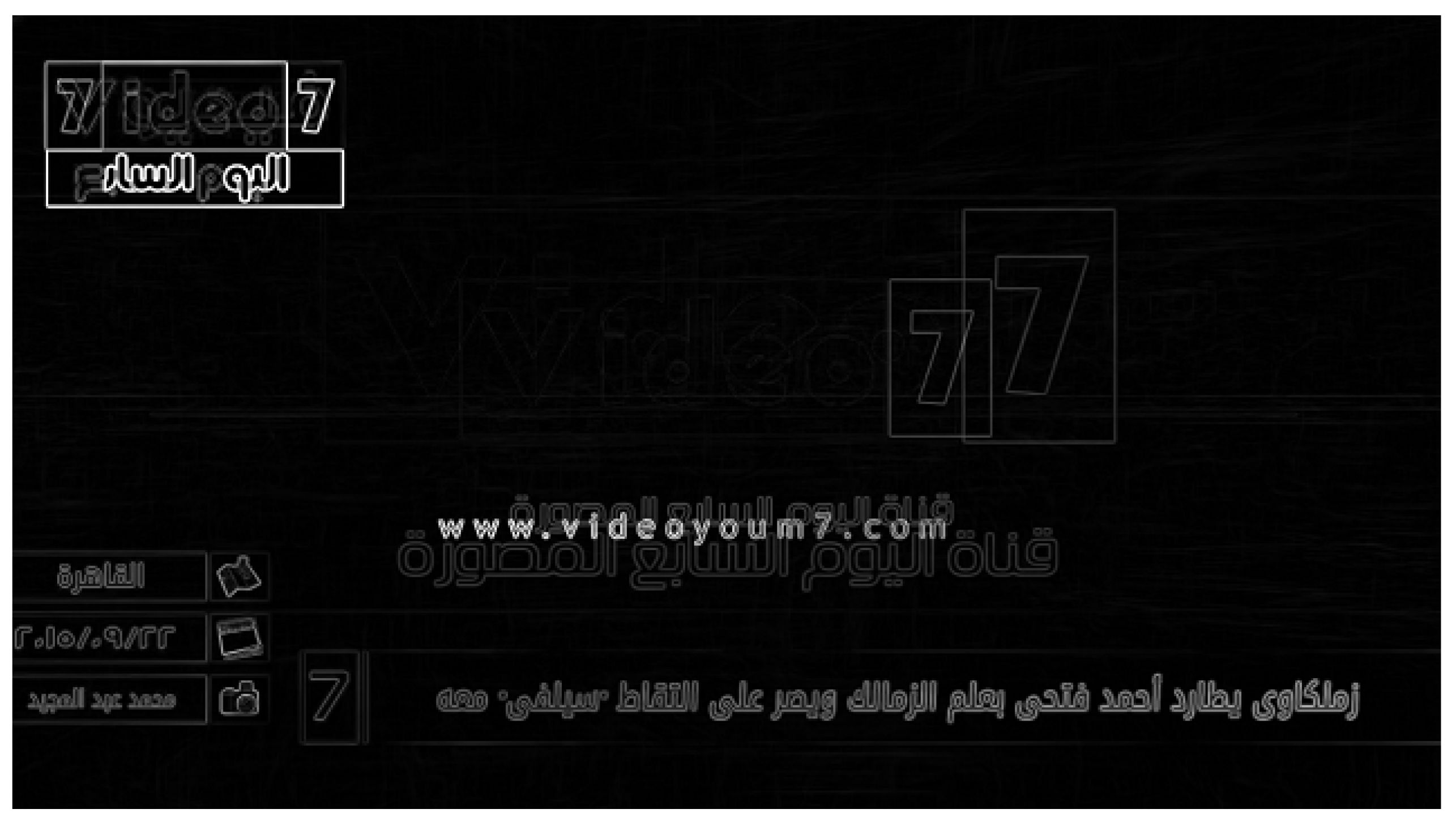

3.1.2. Gradient

3.1.3. Fuzzy C-Means Clustering (FCM)

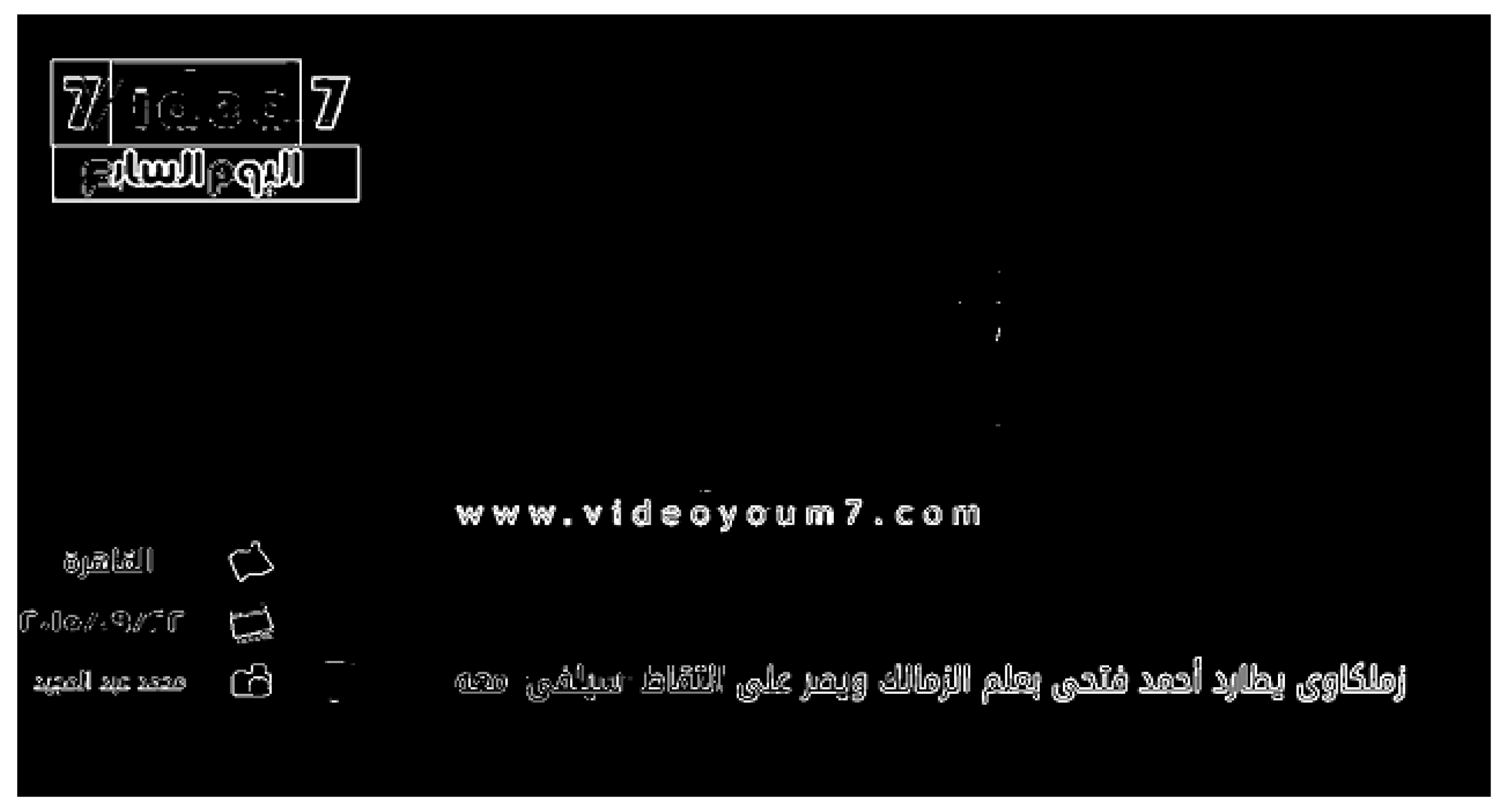

3.2. Masking Stage

3.2.1. Binary Dilation

3.2.2. Filling Interior Gaps

3.2.3. Smooth Watermark Objects Mask

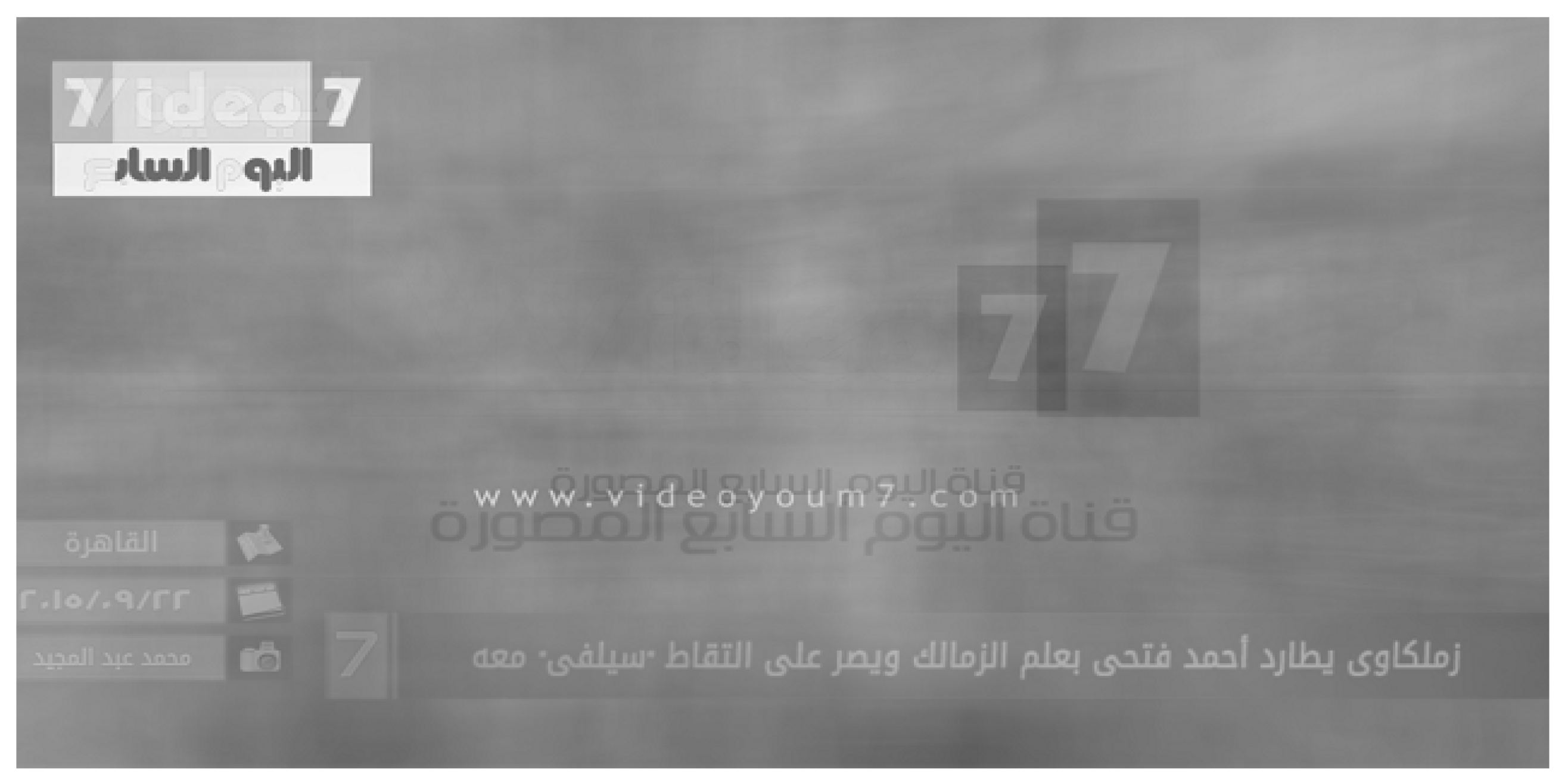

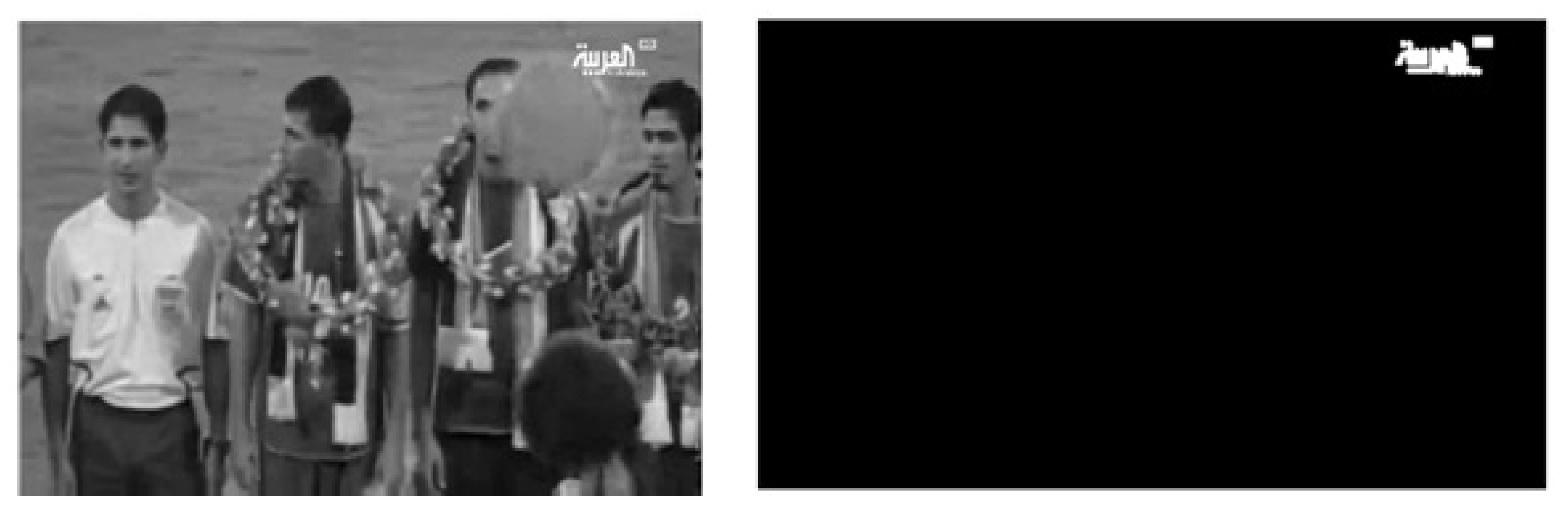

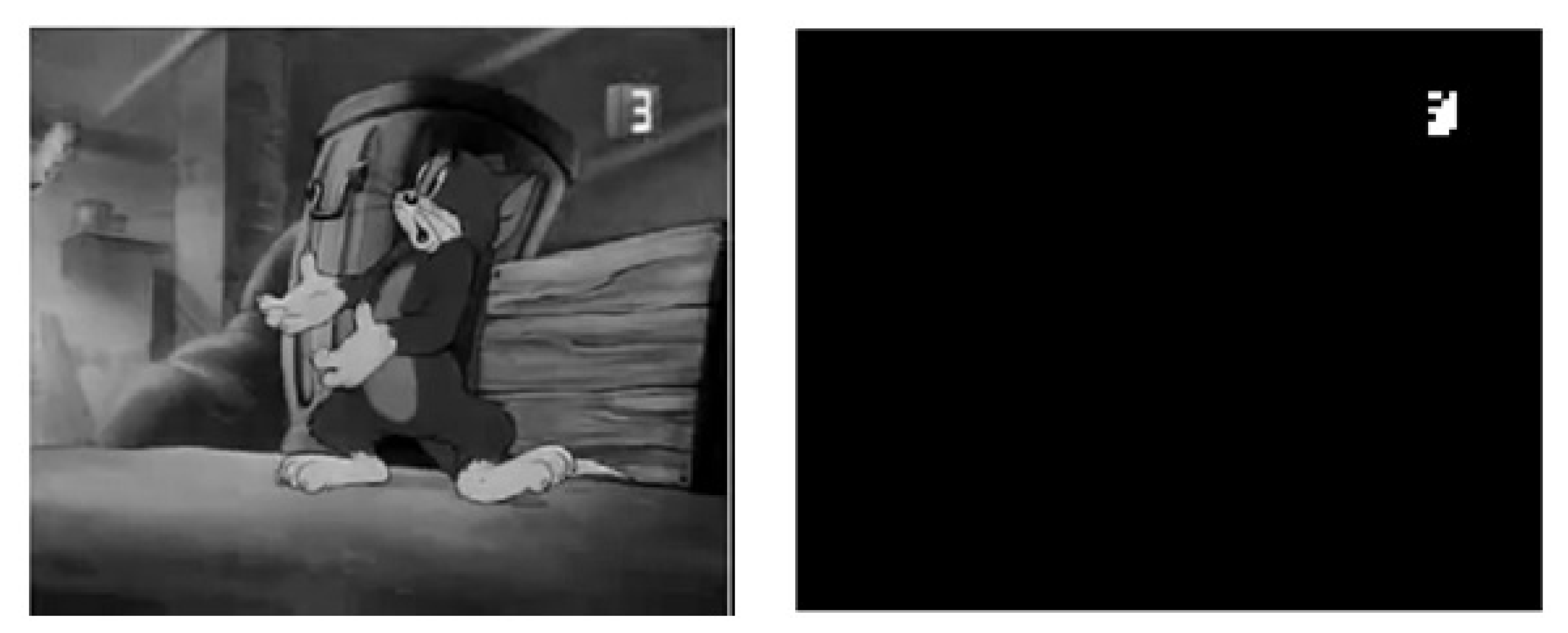

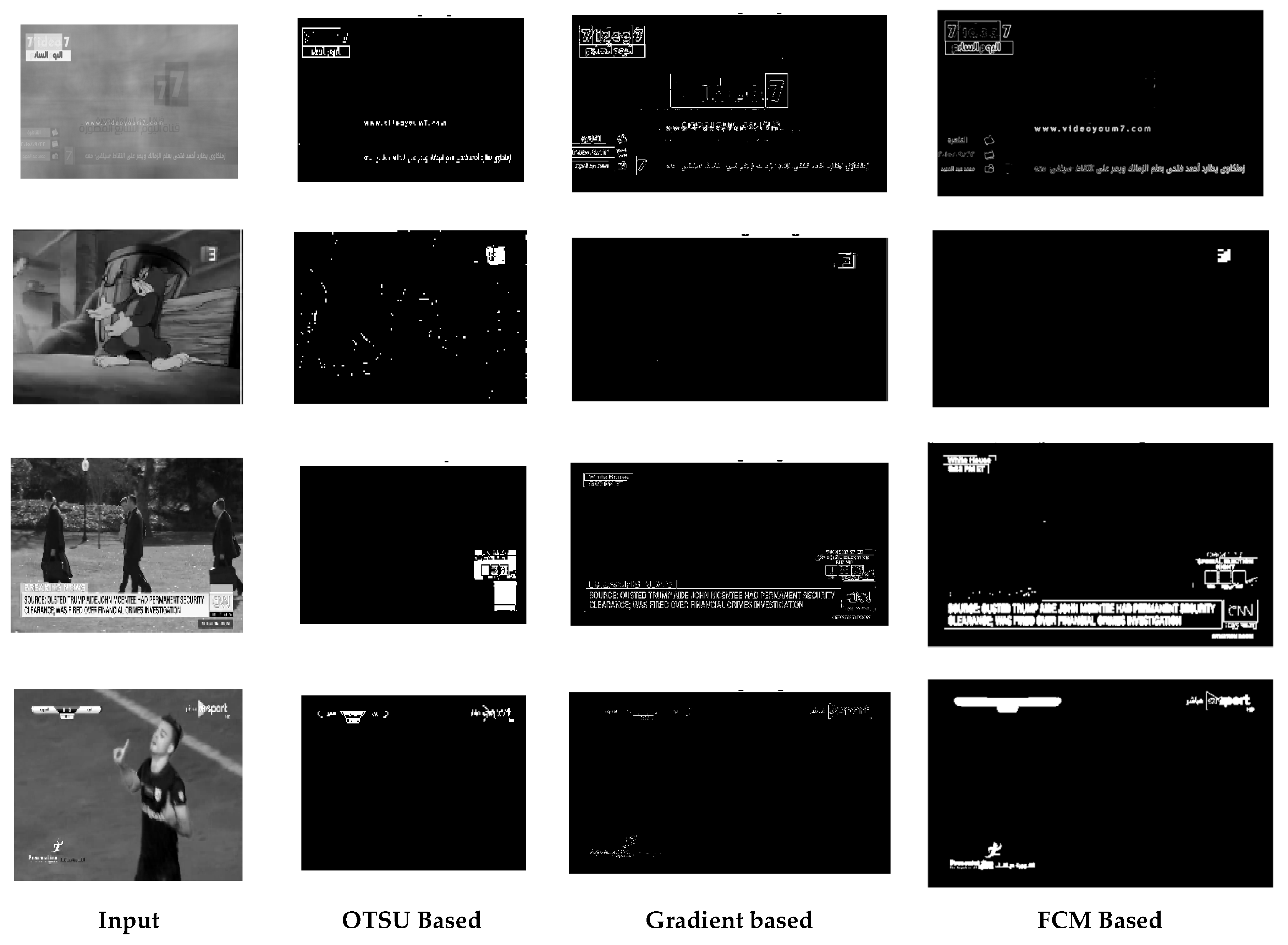

4. Experimental Results and Comparisons

- Fixed camera, fixed object.

- Fixed watermark location without animation.

- Multiple animated watermarks in the same video.

- 3D animated watermark.

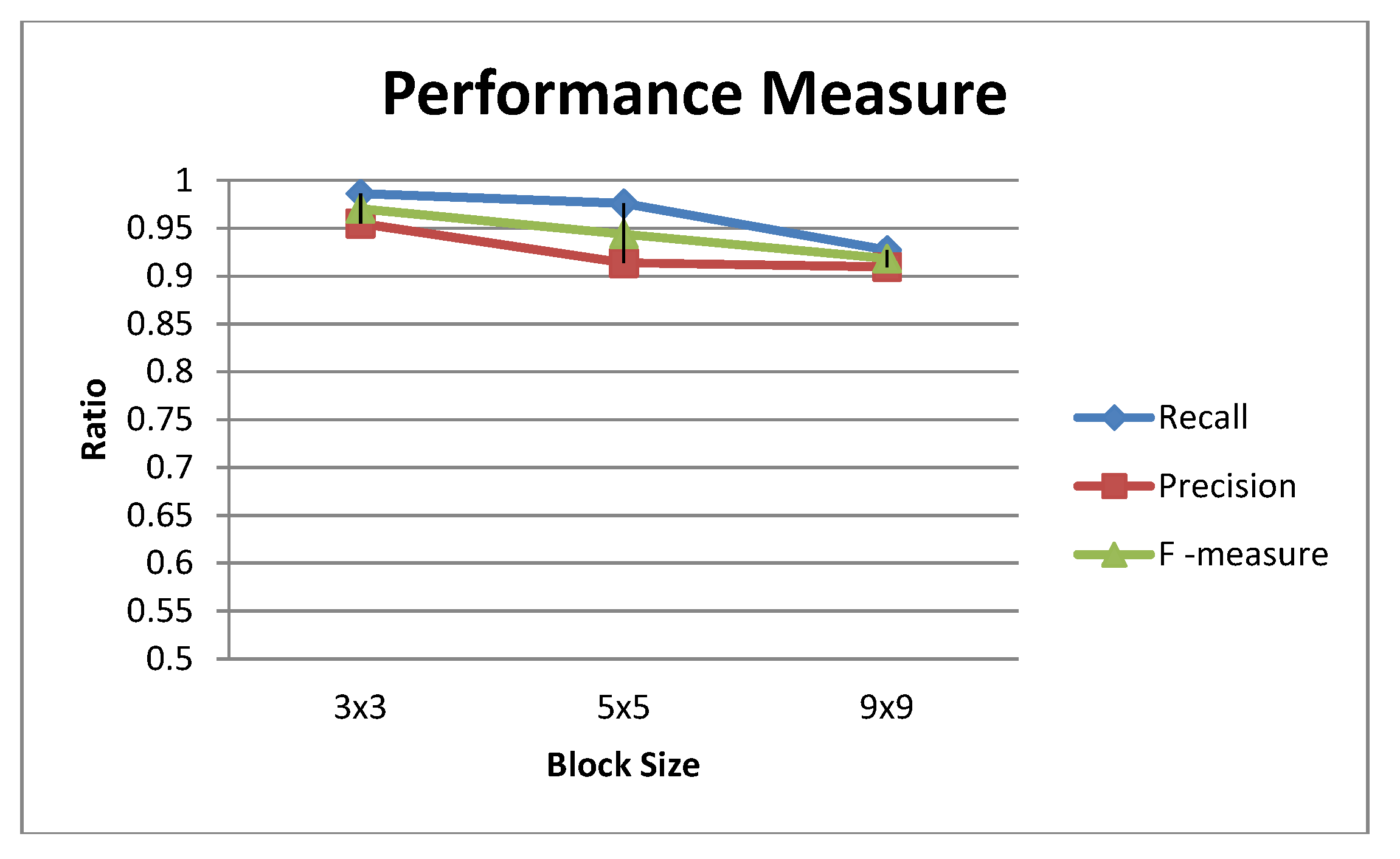

Performance Measure

5. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- Yan, W.; Wang, J.; Kankanhalli, M. Automatic video logo detection and removal. ACM Trans. Multimed. Syst. 2005, 10, 5. [Google Scholar] [CrossRef]

- Kuo, C.; Chao, C.; Chang, W.; Shen, J. Broadcast Video Logo Detection and Removing. In Proceedings of the IEEE International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Harbin, China, 15–17 August 2008. [Google Scholar]

- Hu, Y.; Kwong, S.; Huang, J. An algorithm for removable visible watermarking. IEEE Trans. Circuits Syst. Video Technol. 2006, 16, 129–133. [Google Scholar]

- Garcia, H.; Navarro, E.; Reyes, R.; Ramos, C.; Miyatake, M. Visible Watermarking Technique Based on Human Visual System for Single Sensor Digital Cameras. Secur. Commun. Netw. 2017, 2017, 7903198. [Google Scholar]

- Huang, C.; Wu, J. Attacking visible watermarking schemes. IEEE Trans. Multimed. 2004, 6, 16–30. [Google Scholar] [CrossRef]

- Kankanhalli, M.R.; Ramakrishnan, K. Adaptive visible watermarking of images. In Proceedings of the 6th IEEE International Conference on Multimedia Computing and Systems, Florence, Italy, 7–11 June 1999. [Google Scholar]

- Langelaar, G.; Setyawan, I.; Lagendijk, R. Watermarking digital image and video data. A state-of-the-art overview. IEEE Signal Process. Mag. 2000, 17, 5. [Google Scholar] [CrossRef]

- Voloshynovskiy, S.; Pereira, S.; Pun, T.; Eggers, J.; Su, J.K. Attacks on digital watermarks: Classification, estimation-based attacks, and benchmarks. IEEE Commun. Mag. 2001, 39, 118–125. [Google Scholar] [CrossRef]

- Darwish, A.M. A video coprocessor: Video processing in the DCT domain. Proc. SPIE Media Processors 1999, 3655. [Google Scholar] [CrossRef]

- Wahby, A.M.; Mostafa, K.; Darwish, A.M. DCT-based MPEG-2 programmable coprocessor. In Proceedings of the SPIE International Society for Optical Engineering, San Jose, CA, USA, 24–25 January 2002; Volume 4674, pp. 14–20. [Google Scholar] [CrossRef]

- Fallahpour, M.; Shirmohammadi, S.; Semsarzadeh, M. Tampering Detection in Compressed Digital Video Using Watermarking. IEEE Trans. Instrum. Meas. 2016, 5, 1057–1072. [Google Scholar] [CrossRef]

- Lee, M.; Im, D.; Lee, H.; Kim, K.; Lee, H. Real-time video watermarking system on the compressed domain for high-definition video contents: Practical issues. Digit. Signal Process. 2012, 22, 190–198. [Google Scholar] [CrossRef]

- Lin, D.; Liao, G. Embedding Watermarks in Compressed Video using Fuzzy C-Means Clustering. In Proceedings of the 2008 IEEE International Conference on Systems, Man and Cybernetics, Singapore, 12–15 October 2008. [Google Scholar]

- Li, Z. The study of security application of LOGO recognition technology in sports video. Eurasip J. Image Video Process. 2019, 1, 46. [Google Scholar] [CrossRef]

- Lowe, D.G. Distinctive image features from scale invariant key points. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Bay, L.V.; Tuytelaars, H.; Gool, T. Surf: Speeded up robust features. In ECCV; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Hinterstoisser, S.; Lepetit, S.; Ilic, S.; Fua, P.; Navab, N. Dominant orientation templates for real-time detection of texture-less objects. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010. [Google Scholar]

- Yan, W.Q.; Kankanhalli, M.S. Erasing video logos based on image inpainting. In Proceedings of the IEEE International Conference on Multimedia and Expo (ICME 2002), Lausanne, Switzerland, 26–29 August 2002. [Google Scholar]

- Kankanhalli, M.R. A DCT domain visible watermarking technique for images. In Proceedings of the IEEE International Conference on Multimedia and Expo, New York, NY, USA, 30 July–2 August 2000. [Google Scholar]

- Huang, B.; Tang, S. A contrast-sensitive visible watermarking scheme. IEEE Multimed. 2006, 13, 60–66. [Google Scholar] [CrossRef]

- Wang, J.; Wan, W.; Li, X.; Sun, J.; Zhang, H. Color image watermarking based on orientation diversity and color complexity. Expert Syst. Appl. 2020, 140, 112868. [Google Scholar] [CrossRef]

- Wang, X.; Li, D.; Li, S.; Lan, S. Video Corner-Logo Detection Algorithm based on Gradient Map of HSV. In Proceedings of the 2nd IEEE International Conference on Computer and Communications, Chengdu, China, 14–17 October 2016. [Google Scholar]

- Kim, H.; Kang, M.; Ko, S. An Improved Logo Detection Method with Learniing-based Verification for Video Classification. In Proceedings of the 2014 IEEE Fourth International Conference on Consumer Electronics Berlin (ICCE-Berlin), Berlin, Germany, 7–10 September 2014. [Google Scholar]

- Garcia, H.; Navarro, E.; Reyes, R.; Perez, G.; Miyatake, M.; Meana, H. An Automatic Visible Watermark Detection Method using Total Variation. In Proceedings of the 5th International Workshop on Biometrics and Forensics (IWBF), Coventry, UK, 4–5 April 2017. [Google Scholar]

- Shalaby, M.A.W.; Ortiz, N.R.; Ammar, H.H. A Neuro-Fuzzy Based Approach for Energy Consumption and Profit Operation Forecasting. In Advances in Intelligent Systems and Computing, Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 26–28 October 2019; Hassanien, A., Shaalan, K., Tolba, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; Volume 1058. [Google Scholar]

- Khaled, K.; Shalaby, M.A.W.; El Sayed, K.M. Automatic fuzzy-based hybrid approach for segmentation and centerline extraction of main coronary arteries. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 258–264. [Google Scholar] [CrossRef]

- Shalaby, M.A.W. Fingerprint Recognition: A Histogram Analysis based Fuzzy C-Means Multilevel Structural Approach. Ph.D. Thesis, Concordia University, Montreal, QC, Canada, 20 April 2012. [Google Scholar]

- Bezdek, J.C.; Keller, J.; Krisnapuram, R.; Pal, N.R. Fuzzy Models and Algorithms for Pattern Recognition and Image Processing; Springer: New York, NY, USA, 2005. [Google Scholar]

- Cozar, J.R.; Nieto, P.; Hern’andez-Heredia, Y. Detection of Logos in Low Quality Videos. In Proceedings of the 11th International Conference on Intelligent Systems Design and Applications, Córdoba, Spain, 22–24 November 2011. [Google Scholar]

- Holland, R.J.; Hanjalic, A. In Proceedings of the 2013 IEEE International Conference on Image Processing, Melbourne, Australia, 15–18 September 2003.

- Vincent, O.R.; Folorunso, O. A Descriptive Algorithm for Sobel Image Edge Detection. In Proceedings of the Informing Science & IT Education Conference (InSITE), Macon, France, 12–15 June 2009. [Google Scholar]

- Dunn, J.C. A Fuzzy Relative of the ISODATA Process and Its Use in Detecting Compact Well Separated Clusters. J. Cybern. 1973, 3, 32–57. [Google Scholar] [CrossRef]

- The MathWorks, Inc. Available online: https://www.mathworks.com/ (accessed on 1 February 2020).

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66. [Google Scholar] [CrossRef]

- Xia, D.; Xu, S.; Feng, Q. A proof of the arithmetic mean-geometric mean-harmonic mean inequalities. Res. Rep. Collect. 1999, 2, 85–87. [Google Scholar]

| Block Size | False Positive | False Negative | True Positive | Recall | Precision | F-Measure |

|---|---|---|---|---|---|---|

| 3 × 3 | 10 | 3 | 212 | 0.986047 | 0.954955 | 0.970252 |

| 5 × 5 | 19 | 5 | 201 | 0.975728 | 0.913636 | 0.943662 |

| 9 × 9 | 19 | 15 | 191 | 0.927184 | 0.909524 | 0.918269 |

| OTSU | Gradient Based | Proposed | |

|---|---|---|---|

| Video 30 s | 115.16 | 130.96 | 128.07 |

| Video 60 s | 180 | 240.66 | 190.9 |

| Video 120 s | 250 | 380.29 | 280.02 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Embaby, A.A.; A. Wahby Shalaby, M.; Elsayed, K.M. FCM-Based Approach for Locating Visible Video Watermarks. Symmetry 2020, 12, 339. https://doi.org/10.3390/sym12030339

Embaby AA, A. Wahby Shalaby M, Elsayed KM. FCM-Based Approach for Locating Visible Video Watermarks. Symmetry. 2020; 12(3):339. https://doi.org/10.3390/sym12030339

Chicago/Turabian StyleEmbaby, A. Al., Mohamed A. Wahby Shalaby, and Khaled Mostafa Elsayed. 2020. "FCM-Based Approach for Locating Visible Video Watermarks" Symmetry 12, no. 3: 339. https://doi.org/10.3390/sym12030339

APA StyleEmbaby, A. A., A. Wahby Shalaby, M., & Elsayed, K. M. (2020). FCM-Based Approach for Locating Visible Video Watermarks. Symmetry, 12(3), 339. https://doi.org/10.3390/sym12030339