Abstract

In recent years, frequent outbreaks of Enteromorpha disasters in the Yellow Sea have caused substantial economic losses to coastal cities. To address the low detection accuracy and high false negative rate of Enteromorpha detection in complex marine environments, this study proposes an object detection algorithm, CEE-YOLOv8, improved from YOLOv8n, and establishes an Enteromorpha dataset. Firstly, this study integrates a C2f-ConvNeXtv2 module into the YOLOv8n Backbone network to augment multi-scale feature extraction capabilities. Secondly, an ECA attention mechanism is incorporated into the Neck network to enhance the model’s perception of Enteromorpha of different sizes. Finally, the CIoU loss function is replaced with EIoU to optimize bounding box localization precision. Experimental results on the self-made Enteromorpha dataset show that the improved CEE-YOLOv8 model achieves a 3.2% increase in precision, a 3.3% improvement in recall, and a 4.1% gain in mAP50-95 compared to the benchmark model YOLOv8n. Consequently, the proposed model provides robust technical support for future Enteromorpha monitoring initiatives.

1. Introduction

Enteromorpha is a large floating green alga, and its strong reproductive capacity leads to an exponential increase in biomass after a single reproductive cycle. The excessive proliferation of Enteromorpha can result in the formation of green tides, which affect the growth of benthic algae. Additionally, the large-scale proliferation of Enteromorpha can cause water eutrophication, leading to oxygen depletion and the death of marine organisms due to hypoxia and deteriorating water quality. Severe green tide coverage can also have a profound impact on tourism, fisheries, and marine safety in coastal cities [1,2]. Therefore, rapidly and accurately monitoring the distribution of Enteromorpha and understanding its drift law is of significant importance for effectively implementing control measures [3].

Yu et al. [4] proposed a Fully Automated Green Tide Extraction Method (FAGTE), which utilizes multi-source satellite remote sensing data to achieve high-precision monitoring of green tides in the Yellow Sea. They also proposed a method for merging results at various resolutions and used the Gompertz and Logistic models to forecast the growth patterns of green tides, providing a foundation for implementing effective preventive and control strategies. Xu et al. [5] put forth a semi-automated approach for extracting green tides using NDVI. Remote sensing images from multiple satellites were used to extract green tide data from the Yellow Sea between 2008 and 2012, which confirmed the method’s universality. Dong et al. [6] proposed a boundary-assisted dual-path convolutional neural network (BADP-CNN) to address the issue of accurate boundary detection of Enteromorpha in high-spatial-resolution remote sensing images (HSRIs).

Currently, monitoring of Enteromorpha mainly relies on remote sensing data, which presents significant limitations in terms of temporal and spatial resolution, as well as data timeliness [7]. This makes it difficult to detect small-scale or dispersed Enteromorpha colonies and to capture the details of Enteromorpha drift and growth. As a result, research on the living environment and drift law of Enteromorpha still faces considerable challenges.

In contrast, vision-based object detection techniques, particularly YOLO, offer significant advantages in these scenarios. YOLO’s deep learning architecture excels in detecting small-scale objects by analyzing high-resolution imagery and leveraging real-time processing capabilities [8]. This allows YOLO to detect subtle or dispersed Enteromorpha colonies with higher accuracy, especially in areas where traditional methods fail to provide reliable results.

Meanwhile, with the development of deep learning technologies, significant advancements have been made in vision-based object detection methods. YOLO [9] series object detection models, known for their outstanding real-time processing capabilities and detection accuracy, have been successfully deployed in numerous application scenarios, particularly in the field of marine object detection [10].

Fu et al. [11] proposed an improved YOLOv4 model for marine vessel detection. They incorporated the CBAM attention mechanism into the original model to enhance its ability to detect small objects, resulting in a 2.02% improvement in mAP@50. Bi et al. [12] proposed an improved YOLOv7-based jellyfish detector, HD-YOLO. They established a jellyfish dataset and validated the effectiveness of HD-YOLO and related methods through comparative experiments. This approach provides a more accurate and faster detection method for jellyfish, along with a more versatile dataset. Wang et al. [13] proposed the YOLO11-YX algorithm for marine litter detection. Based on YOLO11s, they introduced the SDown downsampling module, the C3SE feature extraction module, and the FAN feature fusion module. Experimental results demonstrated a 2.44% improvement in detection accuracy for marine litter, providing a further solution for marine litter detection. Jia et al. [14] applied an improved YOLOv8 model to the field of marine organism detection. They incorporated the InceptionNeXt module into the backbone network of YOLOv8 to enhance feature extraction capabilities. The SEAM attention module was added to the Neck network to improve the detection of overlapping objects. Additionally, the NWD loss was integrated into the CIoU, improving the recognition of small objects. Compared to the original model, the mAP was increased by approximately 6.2%. Tian et al. [15] replaced the C2f module in the YOLOv8n network with deformable convolutions and integrated the SimAM attention mechanism before the detection head. They also replaced the traditional CIoU loss function with WIoU. The improved algorithm significantly enhanced the detection accuracy of marine flexible organisms. Jiang et al. [16] proposed a lightweight ship detection model, YOLOv7-Ship, which integrates the “Coordinate Attention Mechanism” (CAM) and omni-dimensional dynamic convolution (ODConv). This model addresses the trade-off between detection accuracy and real-time performance in complex marine environmental backgrounds. Wu et al. [17] proposed an improved YOLOv7 model that incorporates the ECA attention mechanism to reduce the model’s focus on redundant information, improving the accuracy of underwater object detection.

Therefore, we have developed an intelligent tracking unmanned vessel monitoring system for Enteromorpha, which integrates meteorological, biological, chemical and other environment elements. The object detection model is deployed to the unmanned vessel monitoring system, enabling real-time tracking of Enteromorpha drift path and the collection of water quality parameters within its growth range. This is of significant importance for improving water quality and controlling Enteromorpha disasters.

This study focuses on Enteromorpha detection algorithms. We selected YOLOv8n as the benchmark model and improved it to enhance detection accuracy while reducing the false negative rate for Enteromorpha. These improvements will provide technical support for the recognition system of the unmanned vessel monitoring system. The contributions of this study are as follows:

1. The introduction of the more advanced feature extraction module ConvNeXtv2 into the C2f module helps to improve the feature extraction ability of the model for the edge part of Enteromorpha.
2. To enhance the model’s focus on the Enteromorpha region and boost detection accuracy, the Neck network incorporates the ECA attention mechanism.
3. The utilization of the EIoU loss function further optimizes the model’s bounding box localization ability, enhancing the recognition performance of Enteromorpha.
4. An all-weather Enteromorpha image dataset was constructed, and the application value of the proposed method was verified through comparative experiments.

The remainder of this paper is structured in the following manner. Section 2 introduces the construction of the dataset and provides a detailed description of the framework for improving the CEE-YOLOv8 model. Section 3 describes the experimental setup, configuration of training parameters, and performance metrics used to evaluate the model. Section 4 compares the CEE-YOLOv8 model with other models using various performance metrics. Section 5 presents a visual comparison between CEE-YOLOv8 and the benchmark model YOLOv8n, obtaining the expected results and validating the practical utility of the proposed method. Section 6 concludes this paper.

2. Data Collection and Proposed Methods

2.1. Data Collection

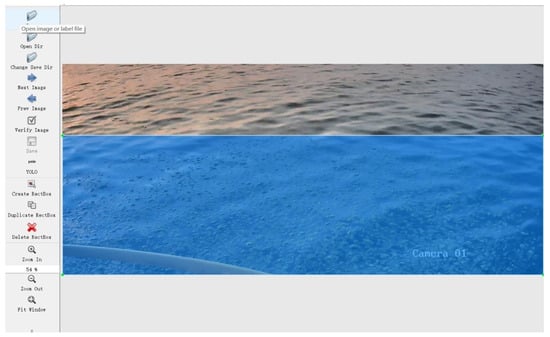

The dataset used in this study is an all-weather image dataset of Enteromorpha that was self-compiled. The images of Enteromorpha were collected through an independently developed online monitoring platform for Enteromorpha, with the collection site selected in Qingdao, as shown in Figure 1.

Figure 1.

Collecting Enteromorpha pictures.

To facilitate subsequent model training, the collected photographs were cropped to retain the Enteromorpha itself along with some background information. We utilized LabelImg 1.8.6 software to annotate the images, generating .txt files suitable for model training, as shown in Figure 2. Finally, these images were randomly divided into training, validation, and test sets in a ratio of 7:2:1.
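The random 7:2:1 division described above can be sketched as follows. This is a minimal illustration, not the authors' script: the function name `split_dataset`, the fixed seed, and the file names are all hypothetical, and sending the rounding remainder to the test set is an assumption.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=0):
    """Shuffle items and split them into train/val/test by integer ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # any rounding remainder lands here
    return train, val, test


# Hypothetical file names, for illustration only.
names = [f"img_{i:04d}.jpg" for i in range(100)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))
```

In practice each image's LabelImg `.txt` annotation file would be moved together with the image so that the pairs stay aligned.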

Figure 2.

Labeling the target object with LabelImg.

2.2. Benchmark: YOLOv8n

In this research, we selected YOLOv8n as the reference model for enhancement due to its compact network architecture and superior detection precision. It is suitable for deployment on the Enteromorpha monitoring platform.

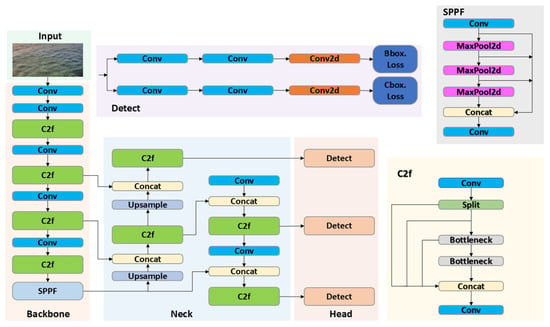

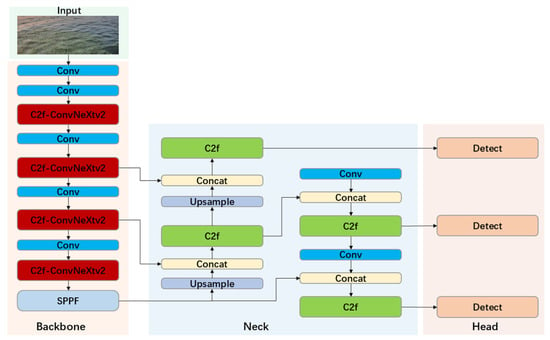

YOLOv8n contains four modules, Input, Backbone, Neck, and Head [18], as demonstrated in Figure 3.

Figure 3.

YOLOv8n modeling framework.

The input layer integrates methods like Mosaic data augmentation and Adaptive Anchor, which significantly increase the detection opportunities for small objects and improve the model’s robustness in recognizing occluded scenarios. The Backbone is composed of a concatenation of Conv, C2f, and SPPF modules. The newly designed C2f module, by incorporating residual connections and branching structures, establishes a richer gradient flow path, significantly enhancing the model’s feature extraction capability [19]. The SPPF module effectively merges local and global features at different scales by applying successive max-pooling operations and concatenating their outputs, enabling multi-scale capture of contextual information for the target [20]. The Neck utilizes an FPN (Feature Pyramid Network) + PAN (Path Aggregation Network) structure, which achieves efficient fusion of features at different levels through both top-down and bottom-up pyramid structures [21].

In the output stage, YOLOv8n completely abandons the conventional anchor-based mechanism and embraces a fully anchor-free detection method [22]. Additionally, it employs a decoupled design, separating the classification and regression tasks into independent parallel branches. In short, the overall architecture further improves detection accuracy and generalization capability based on the YOLOv7 framework.

2.3. C2f-ConvNeXtv2

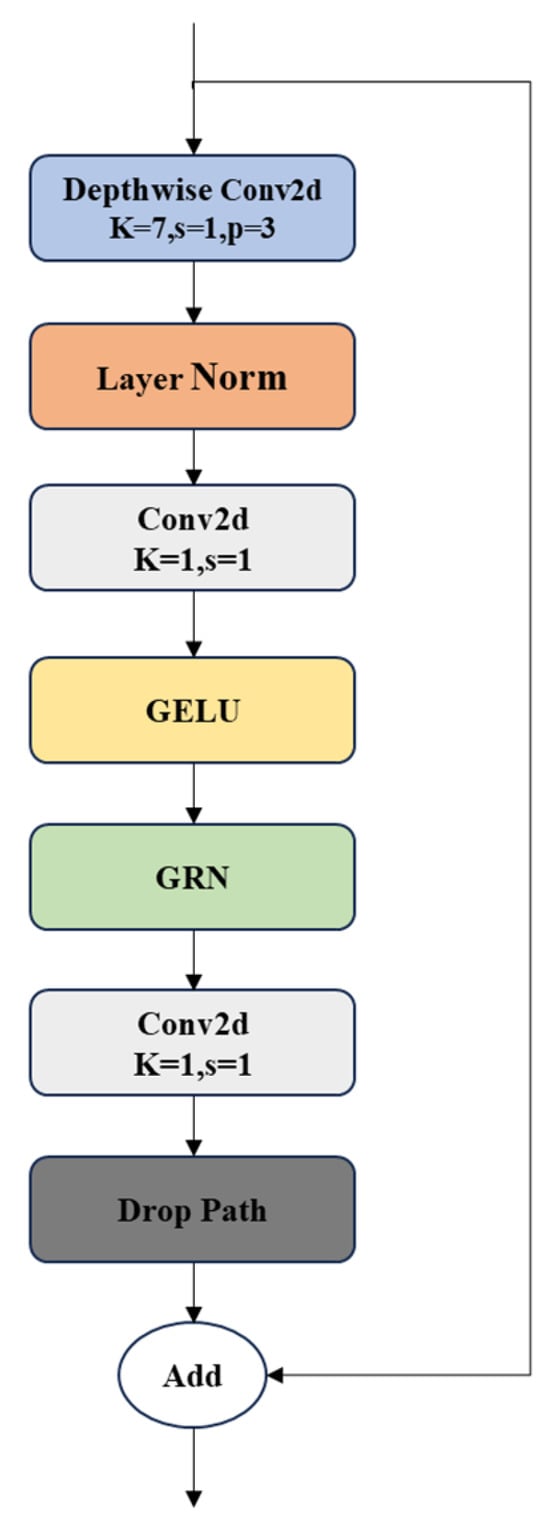

ConvNeXtv2 is a pure convolutional vision model that integrates the Transformer design concept and the advantages of convolutional networks. It significantly improves the feature extraction ability while maintaining efficient inference [23]. The network structure is shown in Figure 4.

Figure 4.

Diagram of the ConvNeXtv2 network structure.

ConvNeXtv2 first performs convolution operations on the feature map through depth-wise convolution. This network uses a large convolution kernel to expand the model’s receptive field, enabling it to extract the edge features of Enteromorpha more comprehensively [24].

Layer Normalization is employed to eliminate negative effects, such as gradient explosion, caused by the increased network depth. Furthermore, a 1 × 1 convolution kernel is used to enhance the features of Enteromorpha edges, which allows for better utilization of channel-wise information, thereby improving the model’s expressive capability.

In ConvNeXtv2, the GELU activation function is selected to replace the ReLU function, as shown in the following equations.

$$\mathrm{ReLU}(x) = \max(0, x)$$

$$\mathrm{GELU}(x) = x\,\Phi(x)$$

Here, $\Phi(x)$ denotes the cumulative distribution function of the standard normal distribution, and its calculation formula is given as follows.

$$\Phi(x) = \frac{1}{2}\left[1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right]$$

The ReLU function maps all negative input values to zero, which leads to the complete loss of information carried by those negative inputs. Additionally, ReLU is non-differentiable at $x = 0$, and its discontinuous derivative can cause gradient explosions during training, thereby increasing the risk of training instability.

Compared with the ReLU function, even if the input is negative, GELU still outputs a small nonzero (negative) value. Therefore, its negative input information is not completely discarded, but retained in a weaker form and participates in the subsequent calculation. Meanwhile, the GELU activation function is smooth and continuously differentiable, providing better non-linear characteristics, enhancing gradient flow, and improving the speed and accuracy of neural network learning [25].
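The difference between the two activations can be checked numerically. The sketch below uses only the Python standard library and the exact definition GELU(x) = x·Φ(x), with Φ the standard normal CDF written via the error function; the helper names are illustrative and not taken from any framework.

```python
import math


def relu(x):
    """ReLU: negative inputs are clamped to zero."""
    return max(0.0, x)


def std_normal_cdf(x):
    """CDF of the standard normal distribution, expressed through erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu(x):
    """Exact GELU: the input weighted by the probability mass below it."""
    return x * std_normal_cdf(x)


for x in (-3.0, -1.0, -0.1, 0.0, 1.0, 3.0):
    print(f"x={x:+.1f}  ReLU={relu(x):+.4f}  GELU={gelu(x):+.4f}")
```

For negative inputs ReLU returns exactly zero, whereas GELU returns a small nonzero response that shrinks toward zero as the input becomes more negative; for large positive inputs the two functions nearly coincide.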

The most important design in ConvNeXtv2 is the introduction of the GRN layer (Global Response Normalization), which achieves inter-channel competition and global perception through pure mathematical constraints at the cost of zero parameters, effectively addressing the issue of feature redundancy in traditional convolutions [26].

The GRN layer first performs global feature aggregation on the feature maps of each channel using the L2 norm to obtain a set of aggregated vectors. Specifically, given an input feature $X \in \mathbb{R}^{H \times W \times C}$, the spatial feature map $X_i$ of each channel is aggregated into a vector $g_x$ using a global function $\mathcal{G}(\cdot)$. Using the L2 norm for feature aggregation effectively suppresses noise and enhances the model’s generalization ability, resulting in a set of aggregated values, as shown in the following formula.

$$\mathcal{G}(X) = g_x = \{\lVert X_1 \rVert, \lVert X_2 \rVert, \ldots, \lVert X_C \rVert\} \in \mathbb{R}^{C}$$

Then, a standard divisive normalization function $\mathcal{N}(\cdot)$ is applied to the aggregated vectors to obtain a set of normalized values. The normalization operation is illustrated in the following formula.

$$\mathcal{N}(\lVert X_i \rVert) = \frac{\lVert X_i \rVert}{\sum_{j=1}^{C} \lVert X_j \rVert}$$

Finally, the original input responses are calibrated using the calculated normalized scores, as illustrated by the subsequent formula.

$$X_i = X_i \cdot \mathcal{N}\big(\mathcal{G}(X)_i\big)$$
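The three GRN steps (aggregation, normalization, calibration) can be traced on a toy tensor. The following is a plain-Python sketch rather than a framework implementation: the function name `grn`, the nested-list layout (channels first) and the `eps` stabilizer are assumptions made for illustration. The published ConvNeXt V2 layer additionally wraps the calibrated response in a learnable affine transform and a residual connection, which are omitted here.

```python
import math


def grn(x, eps=1e-6):
    """x: list of C channels, each an H x W list of lists.

    Returns the calibrated channels and the per-channel scores.
    """
    # 1) Global aggregation: L2 norm of every channel's spatial map.
    norms = [math.sqrt(sum(v * v for row in ch for v in row)) for ch in x]
    # 2) Divisive normalization: each norm relative to the sum over channels.
    total = sum(norms) + eps
    scores = [n / total for n in norms]
    # 3) Calibration: rescale each channel by its normalized score.
    out = [[[v * s for v in row] for row in ch] for ch, s in zip(x, scores)]
    return out, scores


# Toy input: one strong channel (all 3.0) and one weak channel (all 1.0).
x = [
    [[3.0, 3.0], [3.0, 3.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
out, scores = grn(x)
print(scores)
```

Because the scores are relative to the other channels, the strong channel is attenuated less than the weak one, which is the inter-channel competition the GRN layer is meant to introduce.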

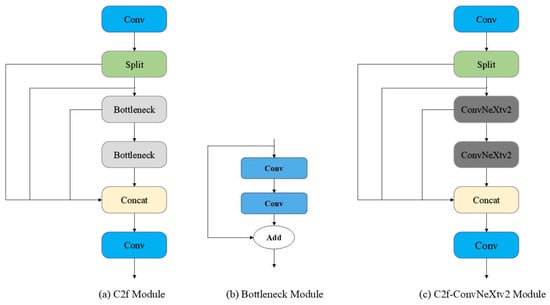

In this study, to enhance feature extraction capability, we propose a modified C2f module (named C2f-ConvNeXtv2) by replacing each Bottleneck unit within the original C2f structure with the ConvNeXtv2 module, while retaining the C2f’s core design of “branch splitting, parallel feature processing, and concatenation fusion”. The architecture of the C2f-ConvNeXtv2 module is illustrated in Figure 5, where the original Bottleneck is entirely substituted by the ConvNeXtv2 module.

Figure 5.

Diagram of the C2f-ConvNeXtv2 network structure.

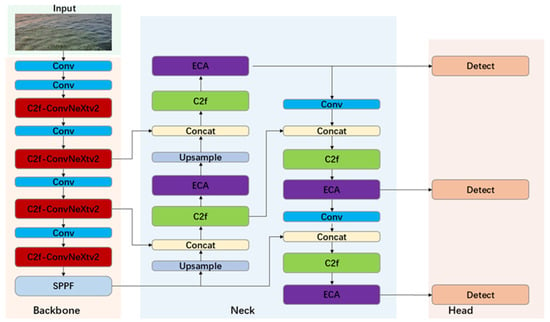

Subsequently, all C2f modules in the Backbone of YOLOv8n are completely replaced with the proposed C2f-ConvNeXtv2 modules. This full replacement ensures that the improved Backbone inherits the original C2f’s efficient gradient flow (via preserved branch splitting and residual mechanisms) while leveraging ConvNeXtv2’s advantages in capturing fine-grained textures [27]. This enables the model to accurately distinguish subtle features between macroalgal communities and complex marine backgrounds, such as wave reflections and foam. The improved YOLOv8n model is illustrated in Figure 6.

Figure 6.

Improved YOLOv8n modeling framework.

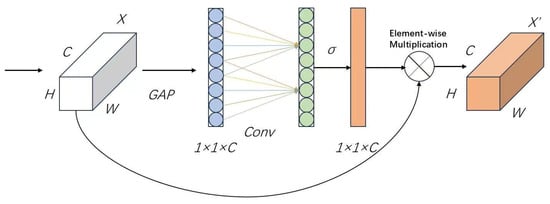

2.4. ECA Attention Mechanism in Neck

The ECA attention mechanism, proposed by Wang et al., is a lightweight channel attention module that captures inter-channel dependencies through local cross-channel interactions without the need for dimensionality reduction [28]. This approach significantly enhances computational efficiency while maintaining accuracy. The structure diagram of the ECA module is shown in Figure 7.

Figure 7.

ECA structure diagram.

First, in the ECA attention module, the input feature map for each channel undergoes GAP (global average pooling) to obtain a set of channel descriptors $z_c$, as shown in the following formula.

$$z_c = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} x_c(i, j), \quad c = 1, \ldots, C$$

Here, $H$ represents the height of the input feature map, $W$ represents the width of the input feature map, $C$ represents the number of channels of the input, and $x_c(i, j)$ represents the value of the element at the $i$-th row and $j$-th column of the $c$-th channel of the input feature map.

Meanwhile, the obtained channel descriptors form a $C$-dimensional column vector $z$. Subsequently, a one-dimensional convolution with kernel size $k$ is applied to the compressed channel column vector $z$, resulting in a processed column vector $y$; the formula is shown below.

$$y = \mathrm{Conv1D}_k(z)$$

The column vector $y$ is mapped through the Sigmoid function $\sigma$ to obtain a set of attention weights $\omega$, as shown in the formula below.

$$\omega = \sigma(y)$$

Finally, the attention weights $\omega$ are multiplied with the input feature map channel-wise to obtain the output feature map $\tilde{X}$, as shown in the formula below.

$$\tilde{x}_c = \omega_c \cdot x_c, \quad c = 1, \ldots, C$$
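The four ECA steps (global average pooling, one-dimensional convolution across channels, Sigmoid, channel-wise rescaling) can be followed on a small example. This is a plain-Python sketch under stated assumptions: the function name `eca`, the zero padding at the channel boundaries, and the fixed kernel weights are illustrative only. In the actual module the kernel weights are learned, and the kernel size is typically chosen from the channel count.

```python
import math


def eca(x, kernel):
    """x: list of C channels (H x W lists); kernel: odd-length 1D weights."""
    c = len(x)
    # Global average pooling: one descriptor per channel.
    z = [sum(v for row in ch for v in row) / (len(ch) * len(ch[0])) for ch in x]
    # 1D convolution across neighbouring channels, zero-padded at the ends.
    pad = len(kernel) // 2
    y = []
    for i in range(c):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - pad
            if 0 <= j < c:
                acc += w * z[j]
        y.append(acc)
    # Sigmoid maps each response to an attention weight in (0, 1).
    weights = [1.0 / (1.0 + math.exp(-v)) for v in y]
    # Channel-wise rescaling of the input feature map.
    out = [[[v * a for v in row] for row in ch] for ch, a in zip(x, weights)]
    return out, weights


# Toy input with three 1 x 2 channels and a hypothetical (not learned) kernel.
x = [[[1.0, 1.0]], [[2.0, 2.0]], [[0.0, 0.0]]]
kernel = [0.5, 1.0, 0.5]
out, weights = eca(x, kernel)
print([round(w, 4) for w in weights])
```

No dimensionality reduction is involved: each channel's weight depends only on its own descriptor and those of its immediate neighbours, which is why the module adds very few parameters.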

In this study, the ECA module is added after the C2f module in the Neck network of YOLOv8n, which effectively enhances the signal strength of important channels while suppressing secondary channels, allowing the model to focus more on regions relevant to the information of Enteromorpha [29].

Notably, ECA addresses the multi-scale challenge of Enteromorpha detection by adjusting channel attention weights to prioritize crucial spatial regions, especially small or scattered patches [30]. It also enhances attention to edge details, critical for detecting subtle or fragmented colonies that traditional models might miss due to limited receptive fields or inadequate feature extraction.

Compared to SE or CBAM, ECA is better suited here for its computational efficiency and effective channel-wise recalibration, key for multi-scale object detection in complex marine environments. The CEE-YOLOv8 network is illustrated in Figure 8.

Figure 8.

CEE-YOLOv8 modeling framework.

2.5. Loss Function

In this study, the original loss function CIoU [31] of YOLOv8n was replaced with EIoU [32], resulting in more accurate localization of the predicted bounding boxes. In object recognition tasks, the IoU (Intersection over Union) is employed to quantify the degree of overlap between the ground truth boxes and the predicted boxes, with the calculation formula shown below.

$$\mathrm{IoU} = \frac{\lvert B \cap B^{gt} \rvert}{\lvert B \cup B^{gt} \rvert}$$

$B$ represents the area of the predicted box, and $B^{gt}$ represents the area of the ground truth box. The formula for the IoU loss function is as follows.

$$L_{\mathrm{IoU}} = 1 - \mathrm{IoU}$$

CIoU, based on IoU, adds constraints on the center-point distance and the aspect ratio. The formula is as follows.

$$L_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v$$

$b$ represents the coordinates of the center point of the predicted box $B$, while $b^{gt}$ represents the coordinates of the center point of the ground truth box $B^{gt}$; $\rho(\cdot)$ denotes the Euclidean distance between them.

$c$ represents the length of the diagonal of the minimum enclosing rectangle that covers both the predicted and ground truth boxes; $\alpha$ denotes the weight coefficient; and $v$ signifies the parameter that measures the consistency of the aspect ratio. Their calculation formulas are as follows.

$$\alpha = \frac{v}{(1 - \mathrm{IoU}) + v}$$

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$$

Compared to CIoU, EIoU replaces the aspect-ratio term with separate penalties on the width and height differences, so it directly optimizes the overlap, the center-point distance, and the side lengths of the bounding boxes. This avoids the case in which the aspect-ratio penalty of CIoU vanishes for boxes that share an aspect ratio but differ in size, reduces the harmful gradient effects of low-quality anchor boxes during the training process, and improves the accuracy of bounding box matching. The formula for the EIoU loss function is as follows.

$$L_{\mathrm{EIoU}} = 1 - \mathrm{IoU} + \frac{\rho^2(b, b^{gt})}{(w^c)^2 + (h^c)^2} + \frac{\rho^2(w, w^{gt})}{(w^c)^2} + \frac{\rho^2(h, h^{gt})}{(h^c)^2}$$

Here, $w$ and $h$ represent the width and height of the predicted box, while $w^{gt}$ and $h^{gt}$ denote the width and height of the ground truth box. Additionally, $w^c$ and $h^c$ indicate the width and height of the minimum enclosing rectangle, so that $c^2 = (w^c)^2 + (h^c)^2$.
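To make the difference between the two losses concrete, the sketch below evaluates IoU, the CIoU loss and the EIoU loss for a single pair of axis-aligned boxes, using the standard definitions of these losses. It is a scalar, plain-Python illustration rather than the batched training code; the function name `box_losses`, the `(x1, y1, x2, y2)` box format, and the `eps` stabilizer are assumptions.

```python
import math


def box_losses(pred, gt, eps=1e-9):
    """Boxes are (x1, y1, x2, y2). Returns (iou, ciou_loss, eiou_loss)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    w, h = px2 - px1, py2 - py1
    wg, hg = gx2 - gx1, gy2 - gy1
    # Intersection over union.
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    union = w * h + wg * hg - inter
    iou = inter / (union + eps)
    # Minimum enclosing rectangle and its squared diagonal.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Squared distance between the two box centers.
    rho2 = ((px1 + px2) / 2 - (gx1 + gx2) / 2) ** 2 \
        + ((py1 + py2) / 2 - (gy1 + gy2) / 2) ** 2
    # CIoU: center distance plus a weighted aspect-ratio consistency term.
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v + eps)
    ciou = 1 - iou + rho2 / c2 + alpha * v
    # EIoU: center distance plus separate width and height penalties.
    eiou = (1 - iou + rho2 / c2
            + (w - wg) ** 2 / (cw ** 2 + eps)
            + (h - hg) ** 2 / (ch ** 2 + eps))
    return iou, ciou, eiou


# Two squares with the same aspect ratio but different sizes.
iou, ciou, eiou = box_losses((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 4.0, 4.0))
print(round(iou, 4), round(ciou, 4), round(eiou, 4))
```

In this example the aspect-ratio term of CIoU is zero because both boxes are square, so only the overlap and center-distance terms contribute, whereas EIoU still penalizes the mismatch in width and height.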

3. Training Methodology and Evaluation Metrics

3.1. Experimentation and Parameter Configuration

The model training process in this research was carried out on a computer system that operated on the Windows 10 platform. The hardware configuration includes an Intel® Core™ i7-8700 CPU (Intel Corporation, Santa Clara, CA, USA) operating at 3.2 GHz and an NVIDIA GeForce RTX 2080 Ti (NVIDIA Corporation, Santa Clara, CA, USA) graphics card. In terms of software environment, Python 3.8 was used as the programming language, and the model training process was implemented using the CUDA 11.3 acceleration library in conjunction with the PyTorch 1.12.1 deep learning framework.

In this study, the settings of the hyperparameters during the training process are presented in Table 1.

Table 1.

Training hyperparameter setting.

3.2. Evaluation Metrics

In order to assess the model’s detection capabilities, this research utilized various widely recognized evaluation measures in object detection tasks: Precision, Recall, mAP (mean Average Precision), F1 Score, GFLOPs (Giga Floating-Point Operations), Parameters (The Total Number of Parameters Required for Model Training) and FPS (Frames Per Second) [33].

Precision refers to the proportion of samples predicted as Enteromorpha that are actually Enteromorpha, as shown in Equation (19).

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{19}$$

Recall refers to the proportion of actual Enteromorpha samples that are successfully identified by the model, as shown in Equation (20).

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{20}$$

The F1 Score, which represents the harmonic mean of Precision and Recall, is shown in Equation (21).

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{21}$$

mAP is the mean of the Average Precision (AP) across all categories, as shown in Equation (22).

$$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i \tag{22}$$

In the formulas, $TP$ represents the number of positive samples correctly predicted as positive, $FP$ represents the number of negative samples incorrectly predicted as positive, and $FN$ represents the number of positive samples incorrectly predicted as negative [34]. $N$ denotes the total number of target categories; in this study, there is only one category, Enteromorpha; hence $N = 1$. $AP_i$ is the average precision for the $i$-th class.
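As a worked example of the Precision, Recall, F1 Score and mAP definitions above, the following sketch computes them from raw counts. The counts and the per-class AP value are hypothetical and are not results from this study; the function name is illustrative.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts, for illustration only.
p, r, f1 = detection_metrics(tp=90, fp=10, fn=30)
print(f"Precision={p:.3f}  Recall={r:.3f}  F1={f1:.3f}")

# mAP is the mean of the per-class AP values; with a single class it equals that AP.
aps = [0.85]  # hypothetical AP for the single Enteromorpha class
mean_ap = sum(aps) / len(aps)
print(f"mAP={mean_ap:.3f}")
```

With 90 true positives, 10 false positives and 30 false negatives, Precision is 0.9 and Recall is 0.75, and the F1 Score, being a harmonic mean, lies closer to the lower of the two.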

4. Experiments and Analyses

4.1. Contrast Experiments

To thoroughly assess the detection capabilities of the CEE-YOLOv8 model proposed in this research, we compared it with several popular object detection models: SSD, YOLOv5s, YOLOv7-tiny, and different variants of YOLOv8. The comparative results are presented in Table 2.

Table 2.

Comparison of recognition performance among different models.

Analysis of Table 2 reveals that YOLOv8n performs well across all metrics. While YOLOv5s and YOLOv8m achieve superior F1 Scores and mAP50-95 values compared to YOLOv8n, their GFLOPs and parameters are significantly larger. Therefore, considering future practical deployment, this study selects the lightweight YOLOv8n as the benchmark model for further improvements. Meanwhile, the proposed CEE-YOLOv8 demonstrates superior performance across key metrics compared to the baseline model, achieving an F1 Score of 95.5% and an mAP50-95 of 87.9%. Compared to SSD, YOLOv5s, YOLOv7-tiny, YOLOv8m, and YOLOv8n, the F1 Score improved by 15.4%, 2.4%, 6.2%, 2.9%, and 3.3%, respectively. The mAP50-95 values increased by 16.8%, 2.3%, 13.6%, 3.8%, and 4.1%, respectively. Additionally, the computational cost of this model is only 7.4 GFLOPs, and its parameters are 2.8 MB, both of which are lower than those of the baseline model YOLOv8n. Meanwhile, CEE-YOLOv8 achieved an FPS of 134, which outperforms the other models in detection speed. This suggests that the proposed method satisfies the requirements for real-time detection. In summary, CEE-YOLOv8 not only exhibits excellent detection performance but also provides favorable conditions for future practical deployments, demonstrating significant application value.

In this study, we conducted experiments to replace the C2f modules at different locations within the YOLOv8n network, explaining the rationale behind substituting the C2f module in the Backbone network with C2f-ConvNeXtv2. The experiments were divided into four groups: the baseline model YOLOv8n, improvements made solely to the C2f modules in the Backbone network, improvements made solely to the C2f modules in the Neck network, and improvements made to all C2f modules across the network. The experimental results are presented in Table 3.

Table 3.

Experimental Result Comparison of C2f Module Improvements at Different Positions in YOLOv8n Network.

Analysis of Table 3 indicates that the improvements made exclusively to the C2f modules in the Backbone network yielded the best performance, with a 0.9% increase in Precision, a 1.2% increase in Recall, and a 1.8% increase in mAP50-95.

In this study, to investigate the improvement in model performance by the ECA attention module, we introduced other widely used attention modules for a comparative experiment, such as CBAM, SE, and CA. Specifically, we replaced the ECA module in the Neck with different attention modules, positioning them at the same location after the C2f layer in the Neck section. The experimental results are presented in Table 4.

Table 4.

Comparison of Different Attention Modules.

4.2. Ablation Experiments

To further validate the effectiveness of the three proposed improvement strategies for the detection of Enteromorpha, ablation experiments were conducted in this study, and the results are presented in Table 5.

Table 5.

Comparison results of the ablation experiments.

According to Table 5, the three improvement techniques proposed in this study all yield gains over the benchmark model YOLOv8n, whether used individually or in pairs. This study improved the C2f in the Backbone network of YOLOv8n by employing the advanced feature extraction module ConvNeXtv2, which enhanced the model’s feature extraction capability. Compared to YOLOv8n, Precision improved by 0.9%, Recall improved by 1.2%, and mAP50-95 improved by 1.8%. Meanwhile, incorporating the ECA module into the Neck network improved the model’s ability to concentrate on crucial information within the feature map, resulting in a Precision increase of 1.9%, a Recall increase of 2.6%, and an mAP50-95 increase of 2.5%. Finally, the use of the EIoU loss function when computing the bounding box regression loss improved the localization capability of the detection boxes, bringing the gains over YOLOv8n to 3.2% in Precision, 3.3% in Recall, and 4.1% in mAP50-95. The ablation experiments thus validate the effectiveness of the algorithm improvement strategies.

4.3. Comparative Analysis of YOLOv8n and CEE-YOLOv8

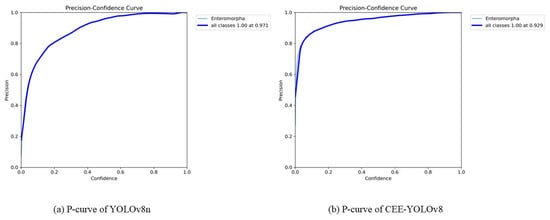

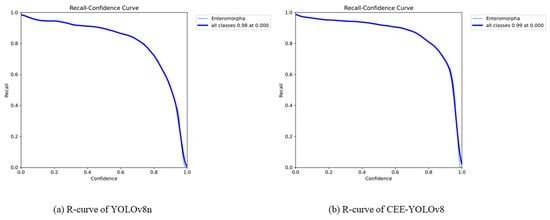

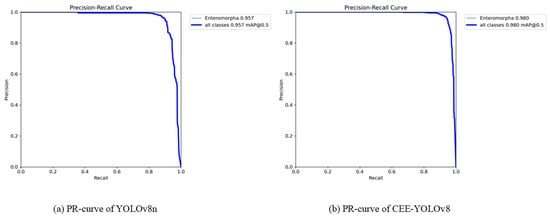

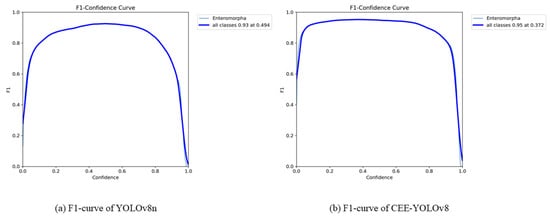

The previous data analysis has verified the advantages of the model CEE-YOLOv8 in core indicators like Precision, Recall, and mAP50-95. In order to further analyze the differences in detection characteristics between the two models, this study conducts an analysis of the performance curves of both models, which can further reveal the dynamic behavior of the models under different confidence threshold values, as shown in Figure 9, Figure 10, Figure 11 and Figure 12.

Figure 9.

Comparison of P curves before and after improvement.

Figure 10.

Comparison of R curves before and after improvement.

Figure 11.

Comparison of PR curves before and after improvement.

Figure 12.

Comparison of F1 curves before and after improvement.

The P curve reflects how the detection precision of the model varies under different confidence threshold values, as shown in Figure 9.

In the range of 0–0.7, the overall position of the CEE-YOLOv8 model’s curve is higher than that of the benchmark model YOLOv8n. Moreover, the curve of CEE-YOLOv8 changes more smoothly. This indicates that the model CEE-YOLOv8 makes fewer false judgments on Enteromorpha compared to YOLOv8n, and CEE-YOLOv8 has stronger adaptability in complex marine environmental backgrounds.

The R curve illustrates how the recall of the model varies under different confidence threshold values, as shown in Figure 10.

Similarly to Figure 9, at different confidence threshold values, the curve of the CEE-YOLOv8 model is overall higher than that of the benchmark model YOLOv8n. Moreover, the curve of CEE-YOLOv8 declines more gently, indicating that the model CEE-YOLOv8 has lower false negatives for Enteromorpha than YOLOv8n, and the recognition effect of CEE-YOLOv8 for Enteromorpha is better.

The PR curve is plotted with Recall on the x-axis and Precision on the y-axis, visually presenting the dynamic correlation of the two indicators of the model at different confidence threshold values, as shown in Figure 11.

The area under the PR curve (AP) of CEE-YOLOv8 is larger, and the curve is closer to the upper right corner, indicating that the model performs better in balancing Precision and Recall. This suggests that the model can effectively control false detections even at high recall rates.
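The area under the PR curve can be approximated numerically from a set of (Recall, Precision) points. The sketch below uses all-point interpolation with a monotonically decreasing precision envelope, which is one common convention and not necessarily the exact procedure used by the training framework in this study; the function name and the sample points are hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the PR curve; recalls must be sorted in ascending order."""
    # Add sentinel points at recall 0 and recall 1.
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum the rectangles between consecutive recall values.
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


# Hypothetical PR points, for illustration only.
ap = average_precision([0.5, 1.0], [1.0, 0.5])
print(ap)
```

A curve that stays close to the upper right corner keeps precision high as recall grows, so the summed rectangles, and therefore the AP, are larger.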

The F1 curve is a visualization tool used to evaluate the overall performance of a model in object detection tasks, reflecting the variation in the F1 score at different confidence thresholds, as shown in Figure 12.

The area under the F1 curve for the CEE-YOLOv8 model is larger than that of YOLOv8n. This indicates that CEE-YOLOv8 maintains a high F1 score across a wider range of confidence thresholds, demonstrating the model’s ability to keep Precision and Recall balanced in complex marine environments with varying densities and shapes of Enteromorpha, without the need to adjust threshold values. Additionally, within the 0.1 to 0.9 range, its F1 score consistently exceeds that of YOLOv8n, implying that the model effectively reduces false positives for Enteromorpha while also minimizing false negatives.

In short, CEE-YOLOv8 enhances the extraction of edge features of Enteromorpha by incorporating the ConvNeXtv2 module, introduces the ECA attention mechanism to optimize scale perception, and replaces the CIoU loss function with EIoU to improve localization accuracy. These improvements ultimately achieve a better balance between “completeness” and “accuracy” in Enteromorpha detection.

5. Discussion

5.1. Validation of Results

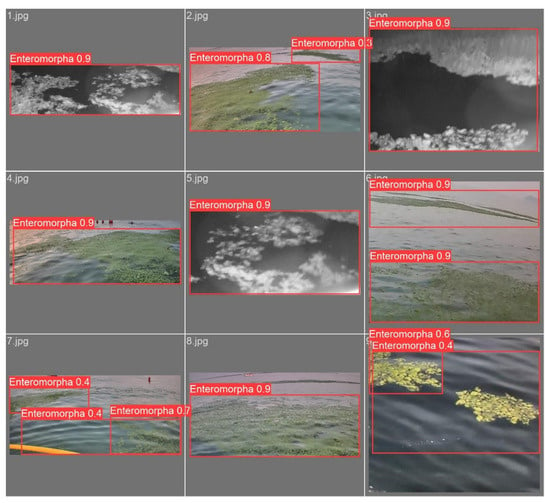

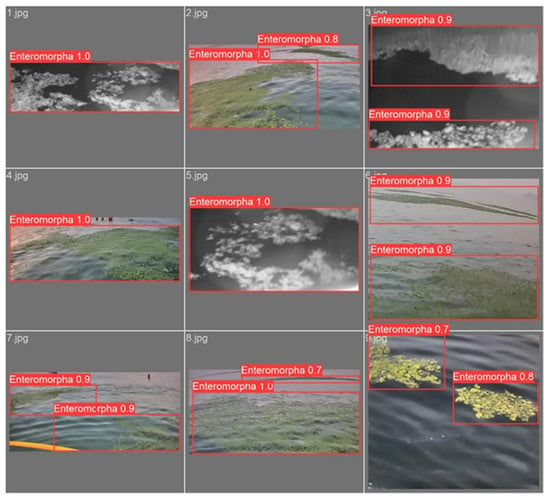

To better illustrate the performance differences between YOLOv8n and CEE-YOLOv8 visually, this study conducted inference on Enteromorpha detection using the trained models under the same experimental settings, with the outcomes depicted in Figure 13 and Figure 14. It can be seen that CEE-YOLOv8 performs noticeably better in balancing detection precision and recall.

Figure 13.

The recognition results of the model YOLOv8n.

Figure 14.

The recognition results of the model CEE-YOLOv8.

Comparing the two sets of detection results, it can be observed that at night (3.jpg), the benchmark model YOLOv8n tends to confuse the sea surface background with Enteromorpha communities, misclassifying the ocean background as Enteromorpha. In contrast, CEE-YOLOv8 maintains a good degree of target discrimination in the night environment. Furthermore, when comparing 2.jpg and 8.jpg, it is evident that CEE-YOLOv8 demonstrates good recognition performance for Enteromorpha communities at a greater distance. However, YOLOv8n has a problem with missed detections, as it fails to identify all instances of Enteromorpha in the images. Additionally, in scenes containing two non-adjacent Enteromorpha communities (7.jpg and 9.jpg), CEE-YOLOv8 effectively achieves separate detection of targets without encountering issues of duplicate detections. Lastly, other test results further indicate that while YOLOv8n can detect Enteromorpha communities, it still exhibits lower confidence scores and reduced precision compared to the improved model, CEE-YOLOv8.

These differences can be attributed to the targeted improvements to the model. The incorporation of the ConvNeXtv2 module strengthens the feature extraction capabilities of the C2f module, improving the model’s ability to capture subtle edge features of Enteromorpha and expanding its perception range of target edges. This is particularly important because the internal characteristics of Enteromorpha communities are often indistinct, and the edges of these communities can dissipate or settle over time. These enhancements improve the model’s feature extraction capacity for Enteromorpha of varying scales and forms and reduce the missed detections and false positives caused by feature confusion, thus enhancing the robustness of Enteromorpha recognition in complex marine environments.
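One component of ConvNeXtv2 that contributes to more distinctive channel features is Global Response Normalization (GRN). As a rough illustration, the sketch below applies GRN to a small feature map stored as nested lists, following the formulation published with ConvNeXt V2: a per-channel L2 norm over spatial positions, divisive normalization by the mean norm across channels, and a learnable scale and shift with a residual connection. The function name `grn`, the `[C][H][W]` layout, and the plain-Python implementation are assumptions made for readability; the actual module operates on tensors inside the network.

```python
import math


def grn(x, gamma, beta, eps=1e-6):
    """Global Response Normalization on x with layout [C][H][W].

    gamma and beta are per-channel lists (learnable in a real network).
    """
    # Global feature aggregation: L2 norm of each channel over all positions.
    gx = [math.sqrt(sum(v * v for row in ch for v in row)) for ch in x]

    # Feature normalization: each channel norm relative to the mean norm.
    mean_gx = sum(gx) / len(gx)
    nx = [g / (mean_gx + eps) for g in gx]

    # Feature calibration with residual: gamma * (x * nx) + beta + x.
    return [
        [[gamma[c] * (v * nx[c]) + beta[c] + v for v in row] for row in ch]
        for c, ch in enumerate(x)
    ]
```

Channels whose response is strong relative to the others are amplified, which encourages contrast between channels rather than redundant activations.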

The integration of the ECA module further optimizes the model’s feature weighting for Enteromorpha, improving target discrimination in nighttime conditions and effectively reducing the false positive rate. The application of the EIoU loss function effectively increases the accuracy of bounding box localization, minimizing issues related to duplicate detections and boundary offsets. The synergistic effect of these three enhancements allows CEE-YOLOv8 to maintain stable detection performance even in challenging scenarios such as nighttime conditions, thereby demonstrating the effectiveness of the improvement strategies.
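For readers unfamiliar with ECA, the following sketch shows the channel re-weighting it performs: global average pooling per channel, a 1D convolution across neighboring channels, a sigmoid, and a channel-wise rescaling of the input. The adaptive kernel-size rule follows the published ECA-Net formulation with its default γ = 2 and b = 1. The function names, the `[C][H][W]` nested-list layout, and the externally supplied `weights` (learned in a real network) are illustrative assumptions, not the code used in CEE-YOLOv8.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size: nearest odd value of (log2(C) + b) / gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(x, weights):
    """Apply ECA to x with layout [C][H][W].

    weights: odd-length 1D kernel shared by all channels.
    Returns (rescaled feature map, per-channel attention values).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    desc = [sum(v for row in ch for v in row) / (len(ch) * len(ch[0])) for ch in x]

    # Local cross-channel interaction: zero-padded 1D convolution.
    pad = len(weights) // 2
    padded = [0.0] * pad + desc + [0.0] * pad
    attn = []
    for i in range(len(x)):
        s = sum(w * padded[i + j] for j, w in enumerate(weights))
        attn.append(1.0 / (1.0 + math.exp(-s)))  # sigmoid gate

    # Rescale every channel by its attention value.
    scaled = [[[v * attn[i] for v in row] for row in ch] for i, ch in enumerate(x)]
    return scaled, attn
```

Because the 1D kernel is shared and no dimensionality reduction is involved, the module adds only a handful of parameters, which is one reason ECA is a common choice for lightweight detectors.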

5.2. Limitations and Challenges

Although CEE-YOLOv8 has achieved significant improvements in Enteromorpha detection performance, its enhancement strategies still have certain limitations.

First, there is room for optimizing the balance between computational efficiency and performance. While the introduction of ConvNeXtv2 and ECA has enhanced feature extraction capabilities, the added complexity of the convolution operations has increased the model’s inference time to some extent, which particularly affects real-time performance on low-computing-power devices (such as unmanned vessels).

Second, the ability to adapt to harsh weather conditions still falls short. Although progress has been made in nighttime conditions, the model’s capacity to discern edge characteristics may diminish in highly intricate scenarios. Conditions such as heavy fog, intense wave disruption, or the dense entanglement of Enteromorpha with other algal species can increase the probability of false positives.
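The efficiency trade-off can be quantified on a target device by timing repeated inference calls. The helper below is a minimal, framework-agnostic sketch: `infer` stands for any callable that runs one forward pass (a hypothetical placeholder, not an API from this study), and the warm-up and run counts are arbitrary illustrative defaults.

```python
import statistics
import time


def measure_latency(infer, sample, warmup=5, runs=30):
    """Return (median latency in ms, frames per second) for one callable."""
    # Warm-up calls let caches and lazy initialization settle before timing.
    for _ in range(warmup):
        infer(sample)

    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append(time.perf_counter() - start)

    # The median is less sensitive to occasional scheduling spikes than the mean.
    median_s = statistics.median(timings)
    fps = 1.0 / median_s if median_s > 0 else float("inf")
    return median_s * 1000.0, fps
```

Running the same helper with the baseline and the improved model on the same hardware and inputs gives a like-for-like view of the inference-time cost of the added modules.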

These limitations point to directions for future research, such as the integration of lightweight network designs, the introduction of multimodal feature fusion, or the construction of more diverse Enteromorpha datasets.

6. Conclusions

This study focuses on Enteromorpha recognition, an integral part of intelligent unmanned vessel tracking systems that plays a vital role in monitoring the drift law of Enteromorpha and acquiring the environmental parameters necessary for its survival. The work concentrates on methods to enhance the accuracy of Enteromorpha detection and improve the performance metrics of the detection algorithm.

This research improves the C2f component within the Backbone architecture by incorporating the ConvNeXtv2 module, which strengthens the model’s capacity to extract edge feature information related to Enteromorpha and thereby offers more detailed support for object detection. By integrating the ECA module into the Neck network, the model’s emphasis on Enteromorpha regions is heightened, which in turn boosts cross-scene adaptability. Furthermore, the EIoU loss function improves the precision of bounding box localization by mitigating problems associated with boundary offsets and duplicate detections. Collectively, these three strategies enable the model to capture subtle features stably in marine environments. Compared to the benchmark model YOLOv8n and other models (SSD, YOLOv5s, and YOLOv7-tiny), the proposed CEE-YOLOv8 demonstrates superior performance, achieving higher Precision, Recall, and mAP50-95. In addition, various quantitative metrics (P Curve, R Curve, PR Curve, and F1 Curve) and the final recognition results further validate the comprehensive performance advantages of the proposed model.

The performance advantages demonstrated by CEE-YOLOv8 support unmanned vessels in monitoring the drift law of Enteromorpha and provide reliable technical support for the precise acquisition of environmental parameters essential for Enteromorpha survival. Notably, its stability in complex scenarios, such as nighttime conditions, meets the demand for highly robust detection models in marine ecological monitoring. Future work will focus on further optimizing the model structure of CEE-YOLOv8 with techniques such as pruning and quantization to balance detection accuracy and real-time performance, thereby enhancing its adaptability in unmanned vessel monitoring. Additionally, the dataset will be extended to cover extreme lighting conditions, complex sea states, and mixed-species scenarios.

Author Contributions

Conceptualization, Y.L., H.L. and X.S.; methodology, X.S., R.M. and H.L.; software, X.S. and H.L.; validation, X.K., F.L. and Y.G.; investigation, X.S. and H.L.; data curation, R.M.; writing – original draft preparation, X.S., Y.G. and Q.S.; writing – review and editing, R.M., H.L. and X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (2024YFC2817000), Major Scientific Research Project for the Construction of State Key Laboratory at Qilu University of Technology (Shandong Academy of Sciences) (Grant No. 2025ZDGZ01), TaiShan Scholars (tstp20240830), Shandong Province Key Research and Development Plan Project (International Science and Technology Cooperation) (2024KJHZ020), and Open Research Fund of Key Laboratory of Marine Ecological Monitoring and Restoration Technology, Ministry of Natural Resources (MEMRT202301).

Data Availability Statement

The original contributions presented in this study are included in the article material. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| ECA | Efficient Channel Attention |
| CIoU | Complete Intersection over Union |
| EIoU | Efficient Intersection over Union |
| GRN | Global Response Normalization |
| SSD | Single-Shot MultiBox Detector |
| CBAM | Convolutional Block Attention Module |
| CA | Coordinate Attention |
| SE | Squeeze and Excitation |

References

- Xia, Z.; Liu, J.; Zhao, S.; Sun, Y.; Cui, Q.; Wu, L.; Gao, S.; Zhang, J.; He, P. Review of the development of the green tide and the process of control in the southern Yellow Sea in 2022. Estuar. Coast. Shelf Sci. 2024, 302, 108772.

- Li, L.; Liang, Z.; Liu, T.; Lu, C.; Yu, Q.; Qiao, Y. Transformer-Driven Algal Target Detection in Real Water Samples: From Dataset Construction and Augmentation to Model Optimization. Water 2025, 17, 430.

- Hu, L.; Zeng, K.; Hu, C.; He, M.-X. On the remote estimation of Ulva prolifera areal coverage and biomass. Remote Sens. Environ. 2019, 223, 194–207.

- Yu, T.; Peng, X.; Wang, Y.; Xu, S.; Liang, C.; Wang, Z. Green tide cover area monitoring and prediction based on multi-source remote sensing fusion. Mar. Pollut. Bull. 2025, 215, 117921.

- Xu, S.; Yu, T.; Xu, J.; Pan, X.; Shao, W.; Zuo, J.; Yu, Y. Monitoring and forecasting green tide in the Yellow Sea using satellite imagery. Remote Sens. 2023, 15, 2196.

- Dong, Z.; Liu, Y.; Wang, Y.; Feng, Y.; Chen, Y.; Wang, Y. Enteromorpha prolifera detection in high-resolution remote sensing imagery based on boundary-assisted dual-path convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4208715.

- Zhou, R.; Sha, J.; Wen, R.; Li, J.; Pan, Y.; Wei, M.; Wang, H.; Wang, T.; Zhang, J.; Zhao, S. Present situation and prospect of green tide monitoring technology. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Chongqing, China, 6–7 March 2021; IOP Publishing: Bristol, UK, 2021; Volume 769, p. 032043.

- Pawłowski, J.; Kołodziej, M.; Majkowski, A. Implementing YOLO convolutional neural network for seed size detection. Appl. Sci. 2024, 14, 6294.

- Murat, A.A.; Kiran, M.S. A comprehensive review on YOLO versions for object detection. Eng. Sci. Technol. Int. J. 2025, 70, 102161.

- Yang, D.; Solihin, M.I.; Ardiyanto, I.; Zhao, Y.; Li, W.; Cai, B.; Chen, C. A streamlined approach for intelligent ship object detection using EL-YOLO algorithm. Sci. Rep. 2024, 14, 15254.

- Fu, H.; Song, G.; Wang, Y. Improved YOLOv4 marine target detection combined with CBAM. Symmetry 2021, 13, 623.

- Bi, W.; Sun, X.; Li, J.; Jin, Y. HD-YOLO: The hydroid of aurelia detector. Mar. Pollut. Bull. 2024, 210, 117346.

- Wang, Y.; Liu, S.; He, Y.; Zhang, Y. YOLO11-YX: An efficient algorithm for marine debris target detection. Mar. Pollut. Bull. 2025, 221, 118511.

- Jia, R.; Lv, B.; Chen, J.; Liu, H.; Cao, L.; Liu, M. Underwater object detection in marine ranching based on improved YOLOv8. J. Mar. Sci. Eng. 2023, 12, 55.

- Tian, Y.; Liu, Y.; Lin, B.; Li, P. Research on marine flexible biological target detection based on improved YOLOv8 algorithm. PeerJ Comput. Sci. 2024, 10, e2271.

- Jiang, Z.; Su, L.; Sun, Y. YOLOv7-Ship: A lightweight algorithm for ship object detection in complex marine environments. J. Mar. Sci. Eng. 2024, 12, 190.

- Wu, Q.; Cen, L.; Kan, S.; Zhai, Y.; Chen, X.; Zhang, H. Real-time underwater target detection based on improved YOLOv7. J. Real-Time Image Process. 2025, 22, 43.

- Li, Q.; Zhang, G.; Yang, P. CL-YOLOv8: Crack detection algorithm for fair-faced walls based on deep learning. Appl. Sci. 2024, 14, 9421.

- Hang, X.; Zhu, X.; Gao, X.; Wang, Y.; Liu, L. Study on crack monitoring method of wind turbine blade based on AI model: Integration of classification, detection, segmentation and fault level evaluation. Renew. Energy 2024, 224, 120152.

- Xu, G.; Lin, Z.; Shi, Y.; Wu, J.; Xu, H.; Wang, G.; Zhang, T. Recognition of chlorophyll rings using YOLOv8. Sci. Rep. 2025, 15, 13934.

- Rasheed, A.F.; Zarkoosh, M. Optimized YOLOv8 for multi-scale object detection. J. Real-Time Image Process. 2024, 22, 6.

- Fan, Y.; Zhang, L.; Li, P. A lightweight model of underwater object detection based on YOLOv8n for an edge computing platform. J. Mar. Sci. Eng. 2024, 12, 697.

- Xu, Y.; Li, J.; Zhang, L.; Liu, H.; Zhang, F. CNTCB-YOLOv7: An effective forest fire detection model based on ConvNeXtV2 and CBAM. Fire 2024, 7, 54.

- Li, Y.; Wang, Y.; Shao, X.; Zheng, A. An efficient fire detection algorithm based on Mamba space state linear attention. Sci. Rep. 2025, 15, 11289.

- Shen, L.; Lang, B.; Song, Z. Object detection for remote sensing based on the enhanced YOLOv8 with WBiFPN. IEEE Access 2024, 12, 158239–158257.

- Gendy, G.; Sabor, N. Transformer-style convolution network for lightweight image super-resolution. Multimed. Tools Appl. 2025, 84, 33195–33218.

- Varol Arısoy, M.; Uysal, İ. BiFPN-enhanced SwinDAT-based cherry variety classification with YOLOv8. Sci. Rep. 2025, 15, 5427.

- Li, L.; Zhao, Y. Tea disease identification based on ECA attention mechanism ResNet50 network. Front. Plant Sci. 2025, 16, 1489655.

- Ni, H.; Shi, Z.; Karungaru, S.; Lv, S.; Li, X.; Wang, X.; Zhang, J. Classification of typical pests and diseases of rice based on the ECA attention mechanism. Agriculture 2023, 13, 1066.

- Sahragard, E.; Farsi, H.; Mohamadzadeh, S. Advancing semantic segmentation: Enhanced UNet algorithm with attention mechanism and deformable convolution. PLoS ONE 2025, 20, e0305561.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 3 April 2020; Volume 34, pp. 12993–13000.

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- Ye, J.; Zhang, Y.; Li, P.; Guo, Z.; Zeng, S.; Wei, T. Real-time dense small object detection model for floating litter detection and removal on water surfaces. Mar. Pollut. Bull. 2025, 218, 118189.

- Niu, C.; Song, Y.; Zhao, X. SE-Lightweight YOLO: Higher accuracy in YOLO detection for vehicle inspection. Appl. Sci. 2023, 13, 13052.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).