Snowmelt-Driven Streamflow Prediction Using Machine Learning Techniques (LSTM, NARX, GPR, and SVR)

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Hydrometeorological Data

2.3. Snow Cover

2.4. GT

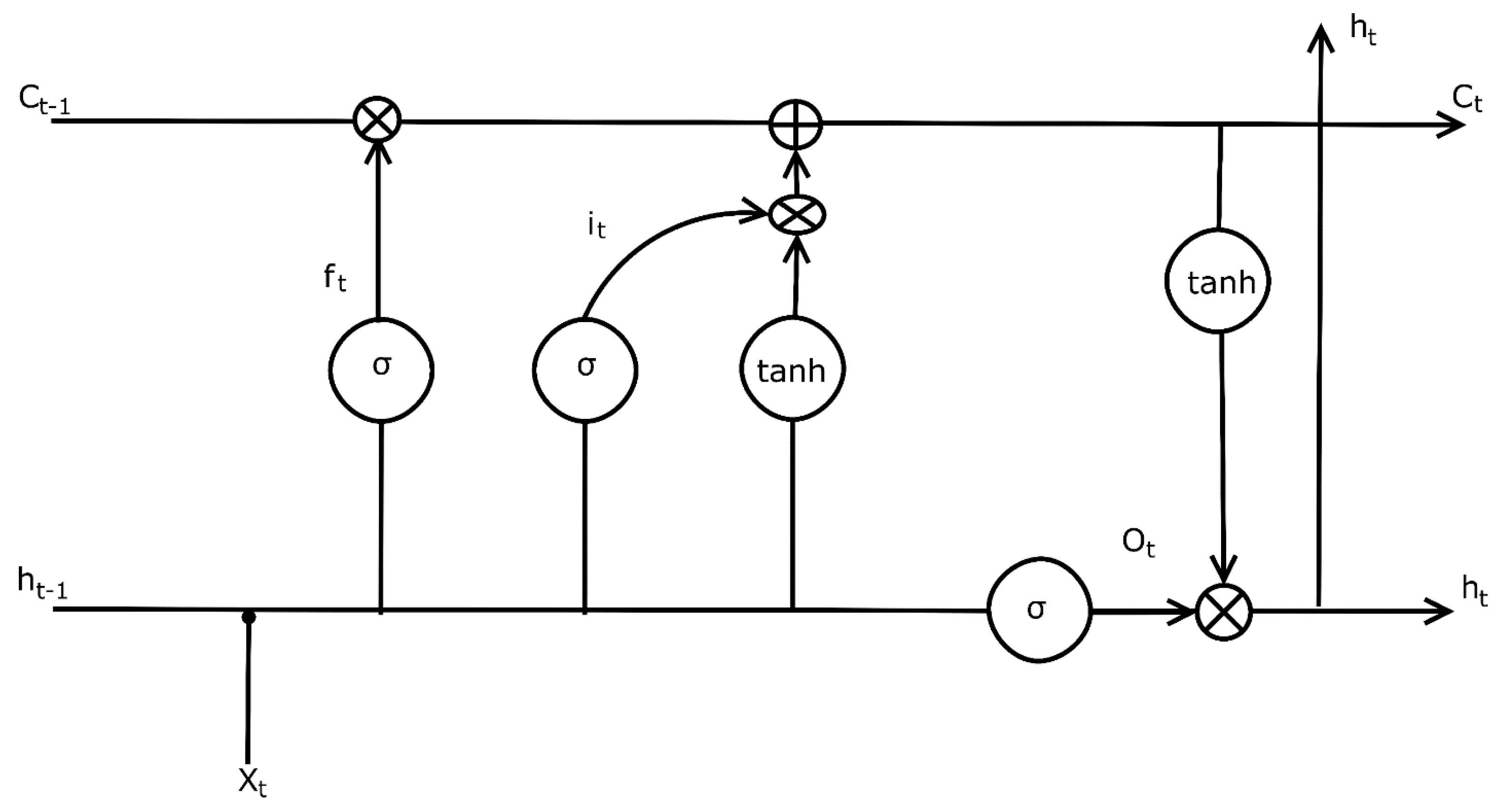

2.5. LSTM

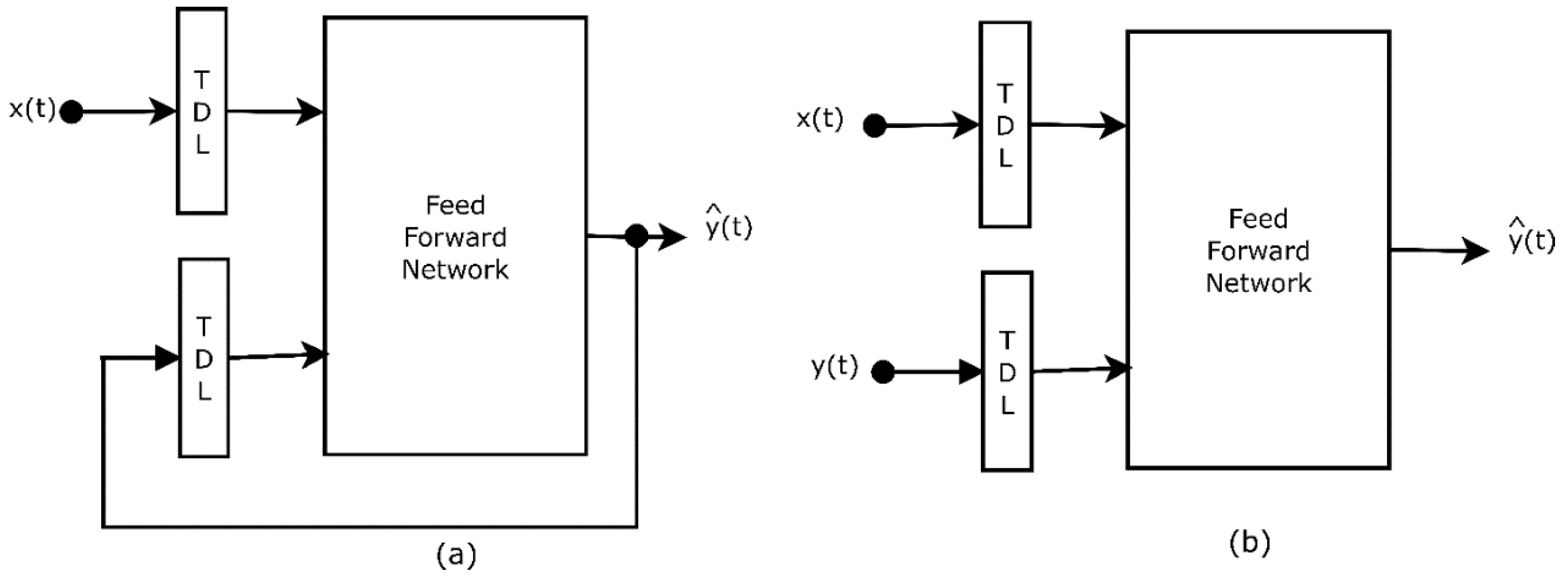

2.6. NARX

2.7. SVR

2.8. GPR

2.9. Performance Indices

3. Results

3.1. Selection of Input Combination

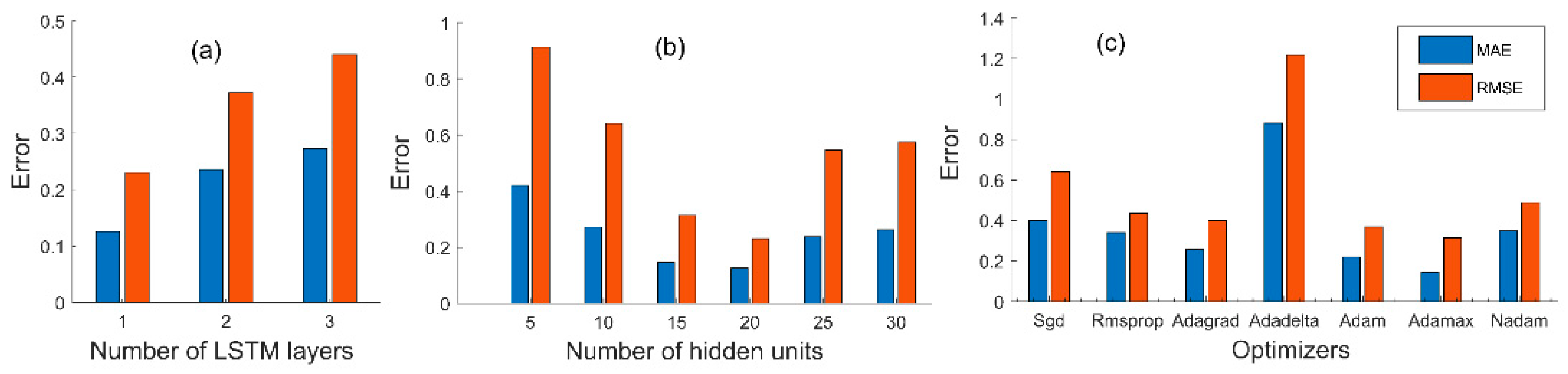

3.2. Effect of Hyperparameters on Model Performance

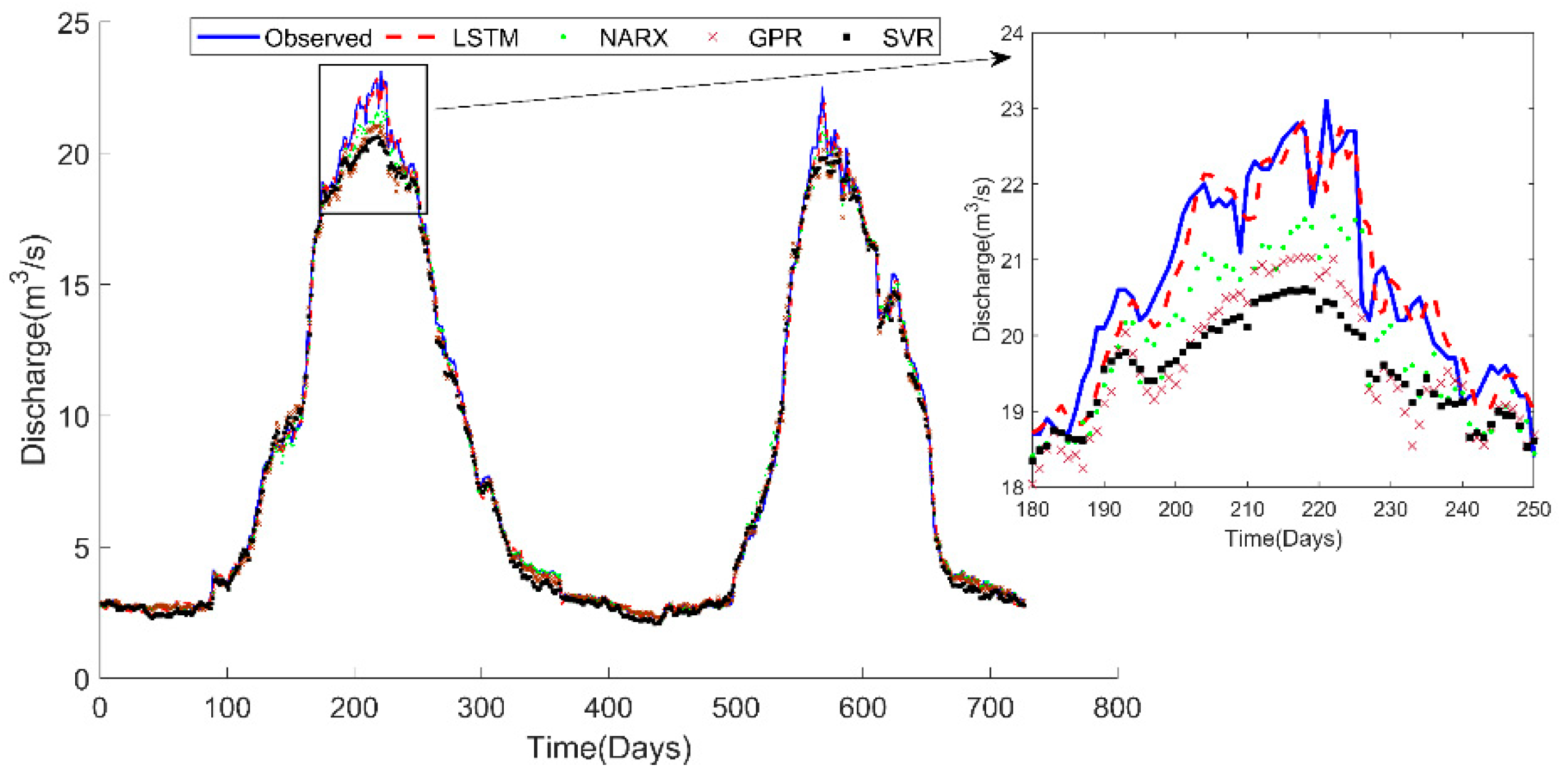

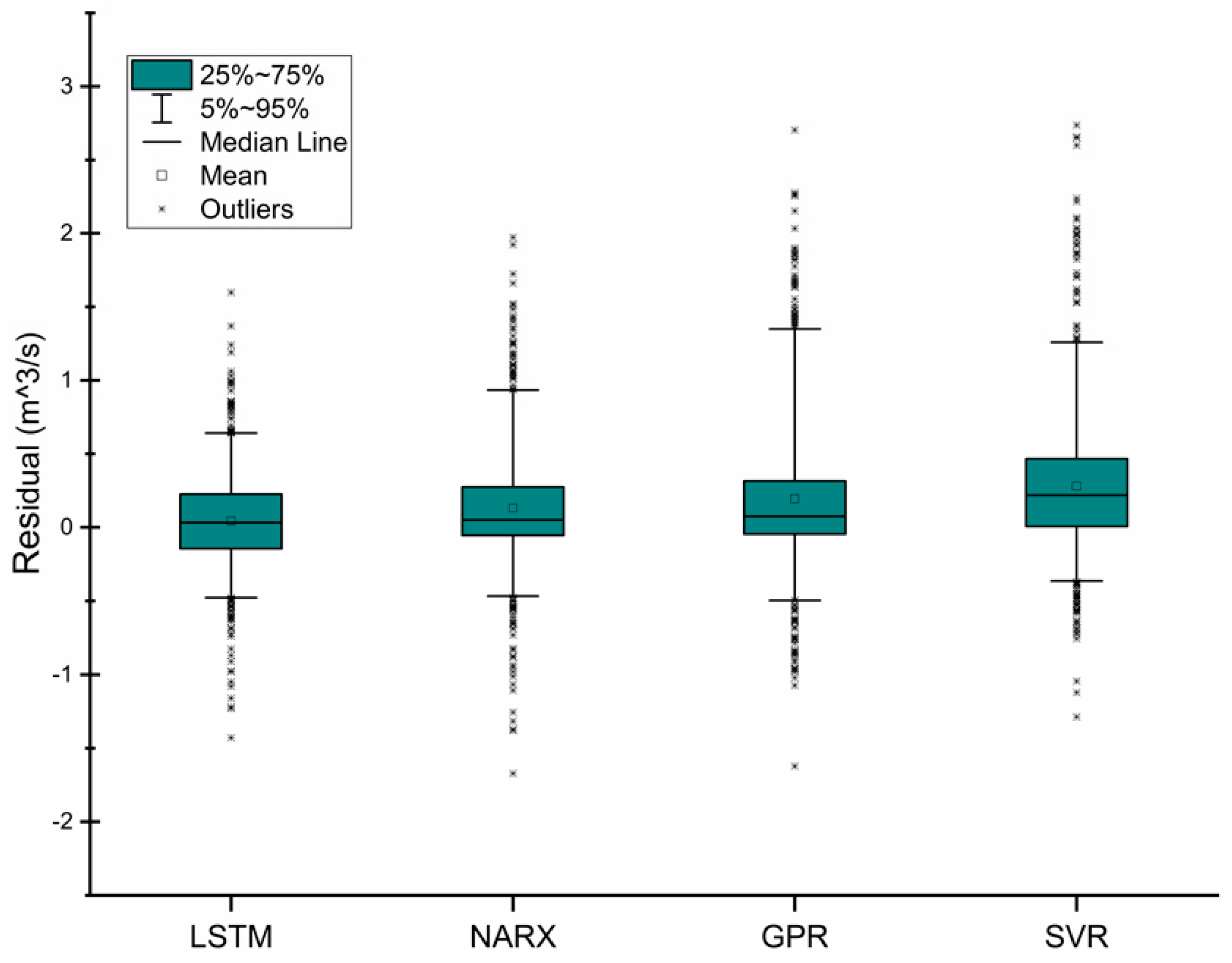

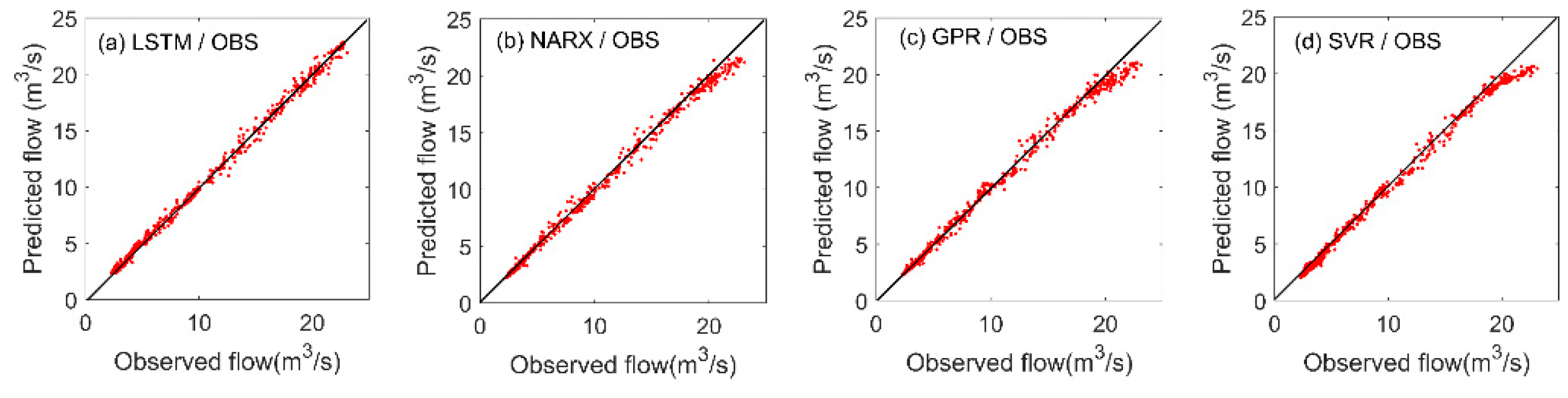

3.3. Comparison of ML Models

3.4. Sensitivity Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Wester, P.; Mishra, A.; Mukherji, A.; Shrestha, A.B. The Hindu Kush Himalaya Assessment; Wester, P., Mishra, A., Mukherji, A., Shrestha, A.B., Eds.; Springer International Publishing: Cham, Switzerland, 2019; ISBN 978-3-319-92287-4.

2. Kumar, M.; Marks, D.; Dozier, J.; Reba, M.; Winstral, A. Evaluation of distributed hydrologic impacts of temperature-index and energy-based snow models. Adv. Water Resour. 2013, 56, 77–89.

3. Griessinger, N.; Schirmer, M.; Helbig, N.; Winstral, A.; Michel, A.; Jonas, T. Implications of observation-enhanced energy-balance snowmelt simulations for runoff modeling of Alpine catchments. Adv. Water Resour. 2019, 133, 103410.

4. Ohmura, A. Physical Basis for the Temperature-Based Melt-Index Method. J. Appl. Meteorol. 2001, 40, 753–761.

5. Massmann, C. Modelling Snowmelt in Ungauged Catchments. Water 2019, 11, 301.

6. Martinec, J.; Rango, A. Parameter values for snowmelt runoff modelling. J. Hydrol. 1986, 84, 197–219.

7. ASCE Task Committee on Application of Artificial Neural Networks in Hydrology. Artificial Neural Networks in Hydrology. I: Preliminary Concepts. J. Hydrol. Eng. 2000, 5, 115–123.

8. ASCE Task Committee on Application of Artificial Neural Networks in Hydrology. Artificial Neural Networks in Hydrology. II: Hydrologic Applications. J. Hydrol. Eng. 2000, 5, 124–137.

9. Callegari, M.; Mazzoli, P.; de Gregorio, L.; Notarnicola, C.; Pasolli, L.; Petitta, M.; Pistocchi, A. Seasonal River Discharge Forecasting Using Support Vector Regression: A Case Study in the Italian Alps. Water 2015, 7, 2494–2515.

10. De Gregorio, L.; Callegari, M.; Mazzoli, P.; Bagli, S.; Broccoli, D.; Pistocchi, A.; Notarnicola, C. Operational River Discharge Forecasting with Support Vector Regression Technique Applied to Alpine Catchments: Results, Advantages, Limits and Lesson Learned. Water Resour. Manag. 2018, 32, 229–242.

11. Uysal, G.; Şensoy, A.; Şorman, A.A. Improving daily streamflow forecasts in mountainous Upper Euphrates basin by multi-layer perceptron model with satellite snow products. J. Hydrol. 2016, 543, 630–650.

12. Martinec, J. Snowmelt-Runoff model for stream flow forecasts. Hydrol. Res. 1975, 6, 145–154.

13. Nagesh Kumar, D.; Srinivasa Raju, K.; Sathish, T. River Flow Forecasting using Recurrent Neural Networks. Water Resour. Manag. 2004, 18, 143–161.

14. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

15. Kratzert, F.; Klotz, D.; Brenner, C.; Schulz, K.; Herrnegger, M. Rainfall–Runoff modelling using Long Short-Term Memory (LSTM) networks. Hydrol. Earth Syst. Sci. 2018, 22, 6005–6022.

16. Fan, H.; Jiang, M.; Xu, L.; Zhu, H.; Cheng, J.; Jiang, J. Comparison of Long Short Term Memory Networks and the Hydrological Model in Runoff Simulation. Water 2020, 12, 175.

17. Le, X.-H.; Ho, H.V.; Lee, G.; Jung, S. Application of Long Short-Term Memory (LSTM) Neural Network for Flood Forecasting. Water 2019, 11, 1387.

18. Kratzert, F.; Klotz, D.; Shalev, G.; Klambauer, G.; Hochreiter, S.; Nearing, G. Towards Learning Universal, Regional, and Local Hydrological Behaviors via Machine-Learning Applied to Large-Sample Datasets. Hydrol. Earth Syst. Sci. 2019, 23, 5089–5110; preprint: arXiv:1907.08456.

19. Kirkham, J.D.; Koch, I.; Saloranta, T.M.; Litt, M.; Stigter, E.E.; Møen, K.; Thapa, A.; Melvold, K.; Immerzeel, W.W. Near real-time measurement of snow water equivalent in the Nepal Himalayas. Front. Earth Sci. 2019, 7, 1–18.

20. Thapa, S.; Li, B.; Fu, D.; Shi, X.; Tang, B.; Qi, H.; Wang, K. Trend analysis of climatic variables and their relation to snow cover and water availability in the Central Himalayas: A case study of Langtang Basin, Nepal. Theor. Appl. Climatol. 2020.

21. RGI Consortium. Randolph Glacier Inventory: A Dataset of Global Glacier Outlines, Version 6.0; GLIMS Technical Report: Boulder, CO, USA, 2017.

22. Ragettli, S.; Pellicciotti, F.; Immerzeel, W.W.; Miles, E.S.; Petersen, L.; Heynen, M.; Shea, J.M.; Stumm, D.; Joshi, S.; Shrestha, A. Unraveling the hydrology of a Himalayan catchment through integration of high resolution in situ data and remote sensing with an advanced simulation model. Adv. Water Resour. 2015, 78, 94–111.

23. Yasutomi, N.; Hamada, A.; Yatagai, A. Development of a Long-term Daily Gridded Temperature Dataset and Its Application to Rain/Snow Discrimination of Daily Precipitation. Glob. Environ. Res. 2011, 15, 165–172.

24. Huffman, G.J.; Bolvin, D.T.; Nelkin, E.J.; Wolff, D.B.; Adler, R.F.; Gu, G.; Hong, Y.; Bowman, K.P.; Stocker, E.F. The TRMM Multisatellite Precipitation Analysis (TMPA): Quasi-Global, Multiyear, Combined-Sensor Precipitation Estimates at Fine Scales. J. Hydrometeorol. 2007, 8, 38–55.

25. Immerzeel, W.W.; Droogers, P.; de Jong, S.M.; Bierkens, M.F.P. Large-scale monitoring of snow cover and runoff simulation in Himalayan river basins using remote sensing. Remote Sens. Environ. 2009, 113, 40–49.

26. NASA (National Aeronautics and Space Administration). TRMM. Available online: https://pmm.nasa.gov/data-access/downloads/trmm (accessed on 9 September 2019).

27. Hall, D.K.; Riggs, G.A. MODIS/Terra Snow Cover 8-Day L3 Global 500 m SIN Grid, Version 6; NASA NSIDC DAAC: Boulder, CO, USA, 2016. Available online: https://nsidc.org/data/mod10a2 (accessed on 29 August 2018).

28. Stigter, E.E.M.; Wanders, N.; Saloranta, T.M.; Shea, J.M.; Bierkens, M.F.P.; Immerzeel, W.W. Assimilation of snow cover and snow depth into a snow model to estimate snow water equivalent and snowmelt runoff in a Himalayan catchment. Cryosphere 2017, 11, 1647–1664.

29. Hall, D.K.; Riggs, G.A.; Salomonson, V.V.; DiGirolamo, N.E.; Bayr, K.J. MODIS snow-cover products. Remote Sens. Environ. 2002, 83, 181–194.

30. NASA/METI/AIST/Japan Spacesystems and U.S./Japan ASTER Science Team. ASTER Global Digital Elevation Model; distributed by NASA EOSDIS Land Processes DAAC, 2009. Available online: https://lpdaac.usgs.gov (accessed on 4 April 2018).

31. Maier, H.R.; Jain, A.; Dandy, G.C.; Sudheer, K.P. Methods used for the development of neural networks for the prediction of water resource variables in river systems: Current status and future directions. Environ. Model. Softw. 2010, 25, 891–909.

32. Stefánsson, A.; Končar, N.; Jones, A.J. A note on the gamma test. Neural Comput. Appl. 1997, 5, 131–133.

33. Durrant, P.J. WinGamma: A Non-Linear Data Analysis and Modelling Tool with Applications to Flood Prediction; Cardiff University: Wales, UK, 2001.

34. Chollet, F. Keras. Available online: https://keras.io (accessed on 14 October 2019).

35. KC, K.; Yin, Z.; Wu, M.; Wu, Z. Depthwise separable convolution architectures for plant disease classification. Comput. Electron. Agric. 2019, 165, 104948.

36. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

37. Lin, T.; Horne, B.G.; Tino, P.; Giles, C.L. Learning long-term dependencies in NARX recurrent neural networks. IEEE Trans. Neural Netw. 1996, 7, 1329–1338.

38. Guzman, S.M.; Paz, J.O.; Tagert, M.L.M. The Use of NARX Neural Networks to Forecast Daily Groundwater Levels. Water Resour. Manag. 2017, 31, 1591–1603.

39. Alsumaiei, A.A. A Nonlinear Autoregressive Modeling Approach for Forecasting Groundwater Level Fluctuation in Urban Aquifers. Water 2020, 12, 820.

40. Banihabib, M.E.; Bandari, R.; Peralta, R.C. Auto-Regressive Neural-Network Models for Long Lead-Time Forecasting of Daily Flow. Water Resour. Manag. 2019, 33, 159–172.

41. Vapnik, V.N. The Nature of Statistical Learning Theory; Springer New York: New York, NY, USA, 1995; Volume 66, ISBN 978-1-4757-2442-4.

42. Dibike, Y.B.; Velickov, S.; Solomatine, D.; Abbott, M.B. Model Induction with Support Vector Machines: Introduction and Applications. J. Comput. Civ. Eng. 2001, 15, 208–216.

43. Rasmussen, C.E.; Williams, C.K.I. Gaussian Processes for Machine Learning; The MIT Press: Cambridge, MA, USA, 2006.

44. Wang, J.; Hu, J. A robust combination approach for short-term wind speed forecasting and analysis: Combination of the ARIMA (Autoregressive Integrated Moving Average), ELM (Extreme Learning Machine), SVM (Support Vector Machine) and LSSVM (Least Square SVM) forecasts using a GPR (Gaussian process regression) model. Energy 2015, 93, 41–56.

45. Kling, H.; Fuchs, M.; Paulin, M. Runoff conditions in the upper Danube basin under an ensemble of climate change scenarios. J. Hydrol. 2012, 424, 264–277.

46. Nash, J.E.; Sutcliffe, J.V. River flow forecasting through conceptual models part I: A discussion of principles. J. Hydrol. 1970, 10, 282–290.

47. Bengio, Y. Practical recommendations for gradient-based training of deep architectures. In Neural Networks: Tricks of the Trade; Springer: Cham, Switzerland, 2012; pp. 1–33.

48. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

49. Pradhananga, N.S.; Kayastha, R.B.; Bhattarai, B.C.; Adhikari, T.R.; Pradhan, S.C.; Devkota, L.P.; Shrestha, A.B.; Mool, P.K. Estimation of discharge from Langtang River basin, Rasuwa, Nepal, using a glacio-hydrological model. Ann. Glaciol. 2014, 55, 223–230.

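| Measure | Equation | Description |
|---|---|---|
| Modified Kling–Gupta efficiency (KGE’) | $KGE' = 1 - \sqrt{(r-1)^2 + (\beta-1)^2 + (\gamma-1)^2}$, with $\beta = \mu_s/\mu_o$ and $\gamma = \dfrac{\sigma_s/\mu_s}{\sigma_o/\mu_o}$ | KGE’ summarizes the bias ratio ($\beta$), the correlation ($r$), and the variability ratio ($\gamma$). The optimum value of KGE’, $r$, $\beta$, and $\gamma$ is 1. Computing $\gamma$ from coefficients of variation (CV) ensures that the bias ratio and the variability ratio are not cross-correlated [45]. |
| Nash–Sutcliffe efficiency (NSE) | $NSE = 1 - \dfrac{\sum_{i=1}^{n}(Q_{o,i} - Q_{s,i})^2}{\sum_{i=1}^{n}(Q_{o,i} - \bar{Q}_o)^2}$ | NSE gives the magnitude of the residual variance relative to the variance of the measured data [46]. |
| Coefficient of determination ($R^2$) | $R^2 = \dfrac{\left[\sum_{i=1}^{n}(Q_{o,i}-\bar{Q}_o)(Q_{s,i}-\bar{Q}_s)\right]^2}{\sum_{i=1}^{n}(Q_{o,i}-\bar{Q}_o)^2\,\sum_{i=1}^{n}(Q_{s,i}-\bar{Q}_s)^2}$ | $R^2$ measures the strength of the linear relationship between measured and simulated values. It ranges from 0 to 1; values near 0 indicate weak correlation and values near 1 indicate strong correlation. |
| Root-mean-square error (RMSE) | $RMSE = \sqrt{\dfrac{1}{n}\sum_{i=1}^{n}(Q_{o,i}-Q_{s,i})^2}$ | RMSE is the square root of the mean squared error. Lower RMSE values indicate a better fit. |
| Mean absolute error (MAE) | $MAE = \dfrac{1}{n}\sum_{i=1}^{n}\lvert Q_{o,i}-Q_{s,i}\rvert$ | MAE is the mean absolute difference between measured and simulated values. Lower MAE values indicate lower error. |

In these equations, $Q_{o,i}$ and $Q_{s,i}$ are the observed and simulated discharge at time step $i$, $n$ is the number of time steps, overbars denote means, and $\mu$ and $\sigma$ denote the mean and standard deviation of the observed (subscript $o$) or simulated (subscript $s$) series.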
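The five performance indices in the table above can be computed with a few lines of NumPy. The sketch below is not the authors' code; it assumes the standard definitions: KGE’ as in Kling et al. [45], with the bias ratio β taken as the ratio of simulated to observed means and the variability ratio γ as the ratio of their coefficients of variation, and R² taken as the squared Pearson correlation between observed and simulated flows. The function name `performance_indices` is hypothetical.

```python
import numpy as np


def performance_indices(obs, sim):
    """Return KGE', NSE, R2, RMSE and MAE for observed vs simulated flows."""
    obs = np.asarray(obs, dtype=float)
    sim = np.asarray(sim, dtype=float)
    err = obs - sim
    r = np.corrcoef(obs, sim)[0, 1]      # Pearson correlation
    beta = sim.mean() / obs.mean()        # bias ratio
    # Variability ratio from coefficients of variation
    # (population std is used; the ddof choice cancels in the ratio)
    gamma = (sim.std() / sim.mean()) / (obs.std() / obs.mean())
    kge_mod = 1.0 - np.sqrt((r - 1) ** 2 + (beta - 1) ** 2 + (gamma - 1) ** 2)
    nse = 1.0 - np.sum(err ** 2) / np.sum((obs - obs.mean()) ** 2)
    return {
        "KGE'": kge_mod,
        "NSE": nse,
        "R2": r ** 2,                     # assumed: squared Pearson correlation
        "RMSE": np.sqrt(np.mean(err ** 2)),
        "MAE": np.mean(np.abs(err)),
    }
```

For example, `performance_indices([1, 2, 3, 4, 5], [1.1, 1.9, 3.2, 3.8, 5.0])` gives an NSE of 0.99, an RMSE of about 0.141, and an MAE of 0.12, while identical series give KGE’ = NSE = 1 and zero error.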

| Model | Input | Gamma | V-Ratio |
|---|---|---|---|
| M1 | Qt−1, St−1, Pt−1, Tt−1, Qt−2, St−2, Pt−2, Tt−2 | 0.00175 | 0.00702 |
| M2 | Qt−1, St−1, Tt−1, Qt−2, St−2, Tt−2 | 0.00093 | 0.00374 |
| M3 | Qt−1, Pt−1, Tt−1, Qt−2, Pt−2, Tt−2 | 0.00278 | 0.01112 |
| M4 | Qt−1, St−1, Pt−1, Tt−1, St−2, Pt−2, Tt−2 | 0.00214 | 0.00858 |
| M5 | Qt−1, St−1, Pt−1, Tt−1, Pt−2, Tt−2 | 0.00366 | 0.01466 |
| M6 | Qt−1, St−1, Pt−1, Tt−1, Tt−2 | 0.00236 | 0.00944 |
| M7 | Qt−1, St−1, Pt−1, Tt−1, Qt−2 | 0.00222 | 0.00889 |
| M8 | Qt−1, Qt−2 | 0.00230 | 0.00922 |
| M9 | Qt−1, St−1, Pt−1, Tt−1 | 0.00245 | 0.00983 |
| M10 | Qt−1, St−1, Tt−1 | 0.00226 | 0.00904 |
| M11 | Qt−1, Pt−1, Tt−1 | 0.00259 | 0.01037 |
| M12 | Qt−1, St−1 | 0.00275 | 0.01100 |
| M13 | Qt−1, Pt−1 | 0.00282 | 0.01130 |
| M14 | Qt−1, Tt−1 | 0.00261 | 0.01044 |
| M15 | Qt−1 | 0.00321 | 0.01287 |
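The Gamma and V-Ratio columns come from the Gamma test [32,33], which estimates the variance of the part of the output that no smooth function of the chosen inputs can explain; a lower Gamma suggests a more informative input combination, and in the table M2 has the lowest value. The NumPy sketch below assumes the usual formulation: for k = 1…p nearest neighbours, δ(k) is the mean squared distance between each input vector and its k-th neighbour, γ(k) is half the mean squared difference of the corresponding outputs, Gamma is the intercept of a least-squares line through the (δ(k), γ(k)) points, and V-Ratio = Gamma / Var(y). The helper names `lagged_inputs` and `gamma_test`, the default p = 10, and the reading of Q, S, P, and T as discharge, snow cover, precipitation, and temperature are assumptions for illustration; this is not a reproduction of the WinGamma tool [33].

```python
import numpy as np


def lagged_inputs(series, lags=2):
    """Build lagged predictors ordered x1(t-1), x2(t-1), ..., x1(t-lags), ...

    `series` is a list of equal-length 1-D arrays, e.g. [Q, S, T] for M2.
    Returns (X, rows): X[j] holds the lagged values used to predict time rows[j].
    """
    series = [np.asarray(s, dtype=float) for s in series]
    n = len(series[0])
    cols = [s[lags - k:n - k] for k in range(1, lags + 1) for s in series]
    return np.column_stack(cols), np.arange(lags, n)


def gamma_test(X, y, p=10):
    """Return (Gamma, V-ratio) estimated from the p nearest neighbours of each point."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    sq = (X ** 2).sum(axis=1)
    # Pairwise squared Euclidean distances, clipped to remove round-off negatives
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    np.fill_diagonal(d2, np.inf)           # a point is not its own neighbour
    nn = np.argsort(d2, axis=1)[:, :p]     # k-th nearest neighbour indices, k = 1..p
    rows = np.arange(len(X))[:, None]
    delta = d2[rows, nn].mean(axis=0)                       # delta(k)
    gamma = 0.5 * ((y[nn] - y[:, None]) ** 2).mean(axis=0)  # gamma(k)
    slope, intercept = np.polyfit(delta, gamma, 1)          # gamma ~ slope*delta + Gamma
    return intercept, intercept / np.var(y)
```

For M2, one would call `lagged_inputs([Q, S, T], lags=2)`, which orders the columns as Qt−1, St−1, Tt−1, Qt−2, St−2, Tt−2, and pass the result with the aligned targets `Q[rows]` to `gamma_test`. The dense distance matrix needs O(M²) memory, which is acceptable for a few thousand daily records; a k-d tree would scale better for longer series.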

| Algorithm | Training RMSE | Training R | Testing RMSE | Testing R |
|---|---|---|---|---|
| LM (Levenberg–Marquardt) | 0.424264 | 0.996 | 0.734847 | 0.99 |
| BR (Bayesian regularization) | 0.387298 | 0.996 | 0.640312 | 0.991 |
| SCG (scaled conjugate gradient) | 0.568331 | 0.994 | 0.712741 | 0.99 |

| Kernel Function | RMSE | MAE |
|---|---|---|
| Quadratic | 0.897 | 0.6504 |
| Squared Exponential | 0.8235 | 0.5544 |
| Exponential | 0.9141 | 0.6672 |
| Matern 5/2 | 0.8646 | 0.5923 |
| Matern 3/2 | 0.8616 | 0.5928 |
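The kernel comparison above can be illustrated with a small Gaussian process regression written directly in NumPy, using the standard stationary covariance functions described by Rasmussen and Williams [43]. This is a sketch under stated assumptions, not the study's setup: the hyperparameters (length scale, signal variance `sf2`, noise variance, and the rational-quadratic `alpha`) are fixed by hand instead of being fitted by maximizing the marginal likelihood, and the table's "Quadratic" kernel is assumed to be the rational quadratic kernel. The function names `kernel` and `gpr_predict` are hypothetical.

```python
import numpy as np


def kernel(name, A, B, length=1.0, sf2=1.0, alpha=1.0):
    """Stationary covariance between rows of A and B, using r = |a - b| / length."""
    r = np.sqrt(((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)) / length
    if name == "squared_exponential":
        return sf2 * np.exp(-0.5 * r ** 2)
    if name == "exponential":
        return sf2 * np.exp(-r)
    if name == "matern32":
        return sf2 * (1 + np.sqrt(3) * r) * np.exp(-np.sqrt(3) * r)
    if name == "matern52":
        return sf2 * (1 + np.sqrt(5) * r + 5 * r ** 2 / 3) * np.exp(-np.sqrt(5) * r)
    if name == "rational_quadratic":
        return sf2 * (1 + r ** 2 / (2 * alpha)) ** (-alpha)
    raise ValueError(f"unknown kernel: {name}")


def gpr_predict(X_train, y_train, X_test, name, noise=1e-2, **kernel_args):
    """Posterior mean of a GP whose prior mean is the training-set mean."""
    y_mean = y_train.mean()
    K = kernel(name, X_train, X_train, **kernel_args) + noise * np.eye(len(X_train))
    L = np.linalg.cholesky(K)  # K = L L^T
    # weights = K^{-1} (y - mean), solved through the Cholesky factor L
    weights = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    K_star = kernel(name, X_test, X_train, **kernel_args)
    return y_mean + K_star @ weights
```

Looping `gpr_predict` over `("rational_quadratic", "squared_exponential", "exponential", "matern52", "matern32")` on held-out data and scoring each prediction with RMSE and MAE mirrors the structure of the table; the resulting numbers depend entirely on the data and hyperparameters and are not expected to match the values reported here.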

| Models | Inputs | Training R² | Training MAE | Training RMSE | Testing R² | Testing MAE | Testing RMSE |
|---|---|---|---|---|---|---|---|
| LSTM | M2 | 0.997 | 0.02 | 0.06 | 0.997 | 0.112 | 0.173 |
| NARX | M2 | 0.996 | 0.114 | 0.26 | 0.996 | 0.28 | 0.486 |
| GPR | M2 | 0.99 | 0.24 | 0.554 | 0.99 | 0.564 | 0.812 |
| SVR | M2 | 0.99 | 0.252 | 0.558 | 0.99 | 0.561 | 0.851 |

| Models | Inputs | KGE’ | NSE | R² | MAE | RMSE |
|---|---|---|---|---|---|---|
| LSTM | M1 | 0.99 | 0.995 | 0.997 | 0.124 | 0.201 |
| LSTM | M2 | 0.99 | 0.995 | 0.997 | 0.112 | 0.173 |
| LSTM | M3 | 0.988 | 0.994 | 0.997 | 0.186 | 0.287 |
| NARX | M1 | 0.971 | 0.989 | 0.995 | 0.312 | 0.55 |
| NARX | M2 | 0.974 | 0.991 | 0.996 | 0.28 | 0.486 |
| NARX | M3 | 0.969 | 0.989 | 0.995 | 0.317 | 0.503 |
| GPR | M1 | 0.947 | 0.971 | 0.99 | 0.601 | 0.886 |
| GPR | M2 | 0.95 | 0.973 | 0.99 | 0.564 | 0.812 |
| GPR | M3 | 0.944 | 0.966 | 0.987 | 0.634 | 0.978 |
| SVR | M1 | 0.945 | 0.967 | 0.987 | 0.605 | 0.926 |
| SVR | M2 | 0.949 | 0.971 | 0.99 | 0.561 | 0.851 |
| SVR | M3 | 0.942 | 0.966 | 0.986 | 0.641 | 0.986 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Thapa, S.; Zhao, Z.; Li, B.; Lu, L.; Fu, D.; Shi, X.; Tang, B.; Qi, H. Snowmelt-Driven Streamflow Prediction Using Machine Learning Techniques (LSTM, NARX, GPR, and SVR). Water 2020, 12, 1734. https://doi.org/10.3390/w12061734
