Near-Surface Temperature Prediction Based on Dual-Attention-BiLSTM

Abstract

1. Introduction

2. Materials and Methods

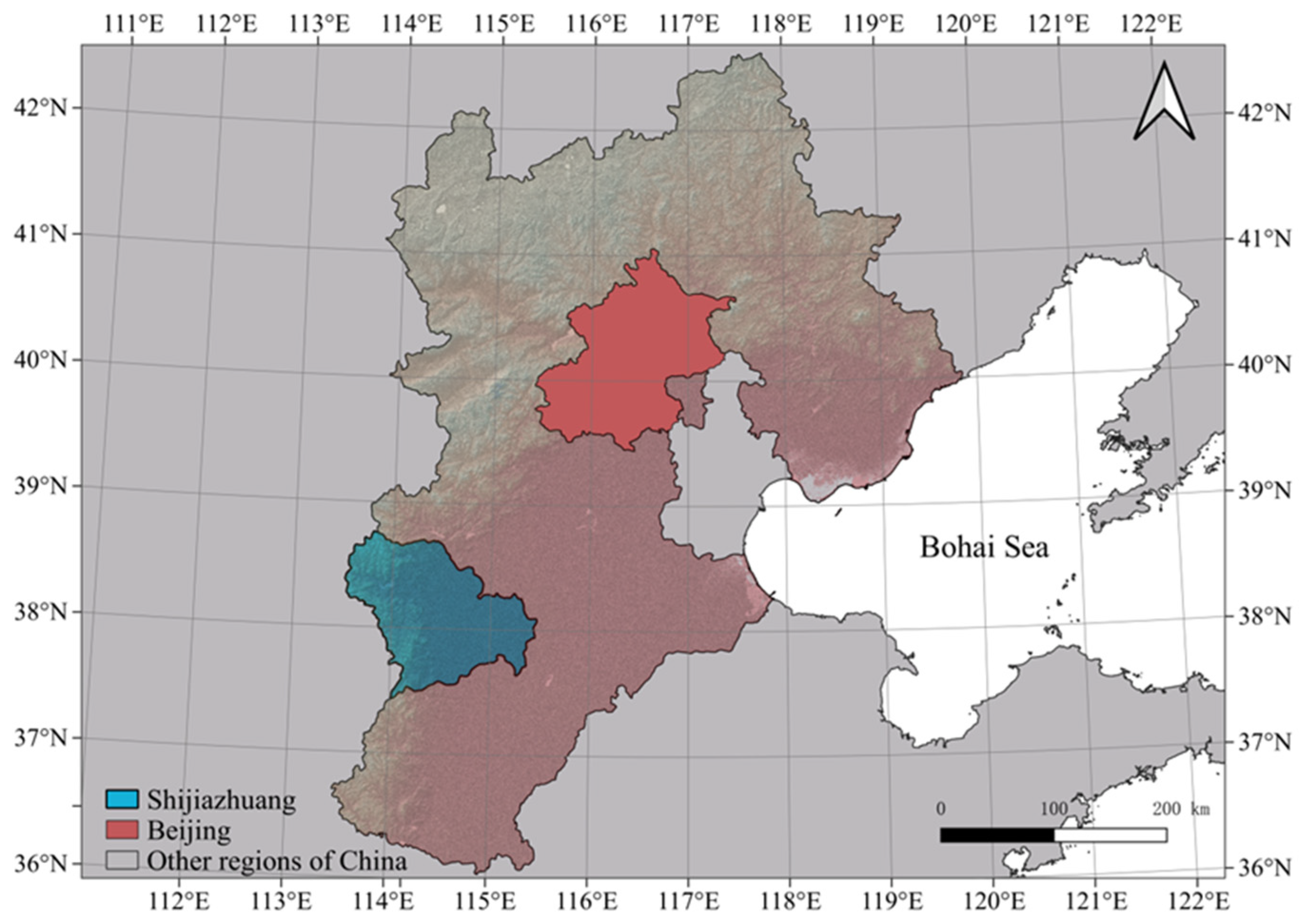

2.1. Data

2.2. Attention Mechanism Design

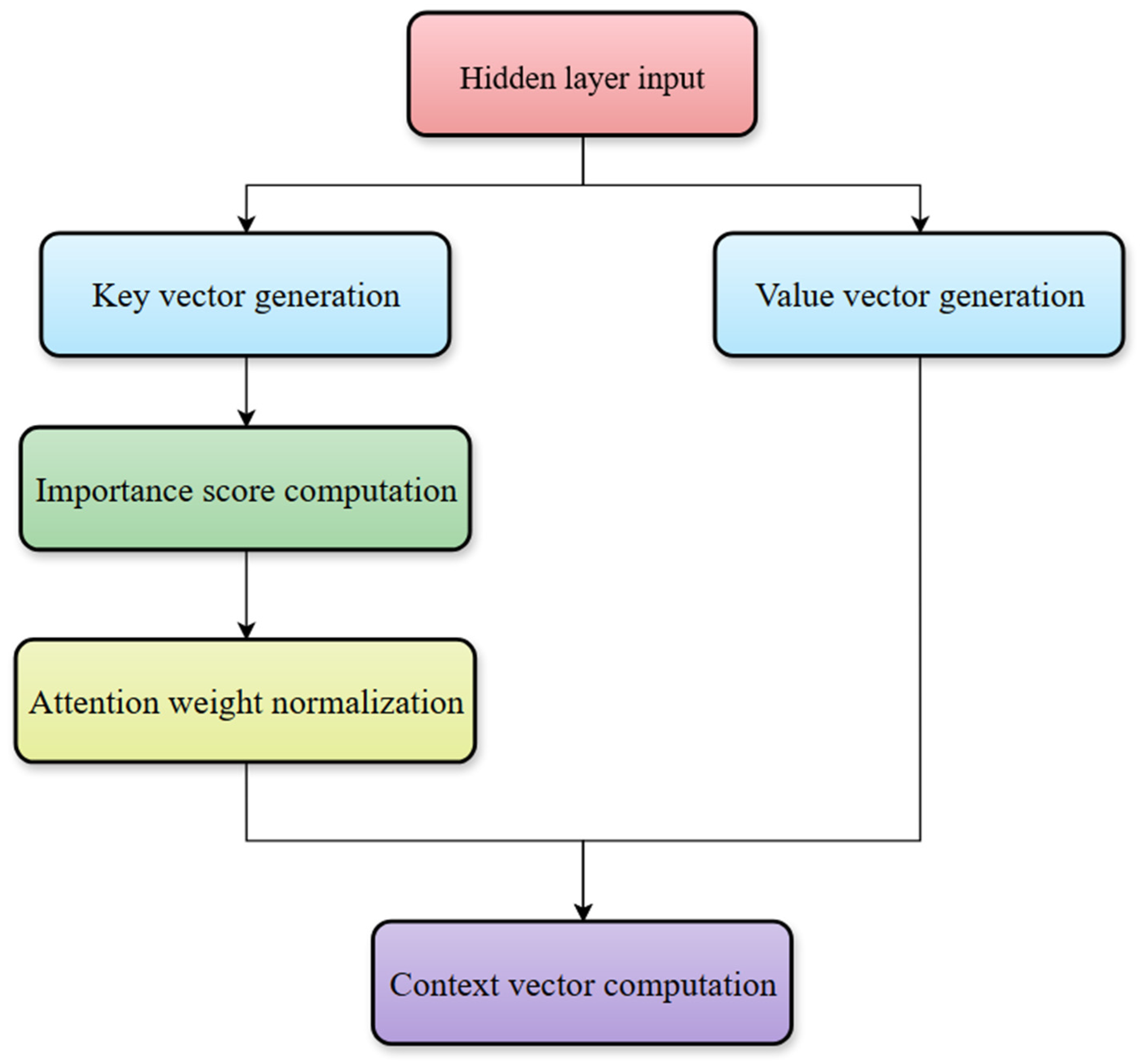

2.2.1. Key-Value Attention Mechanism

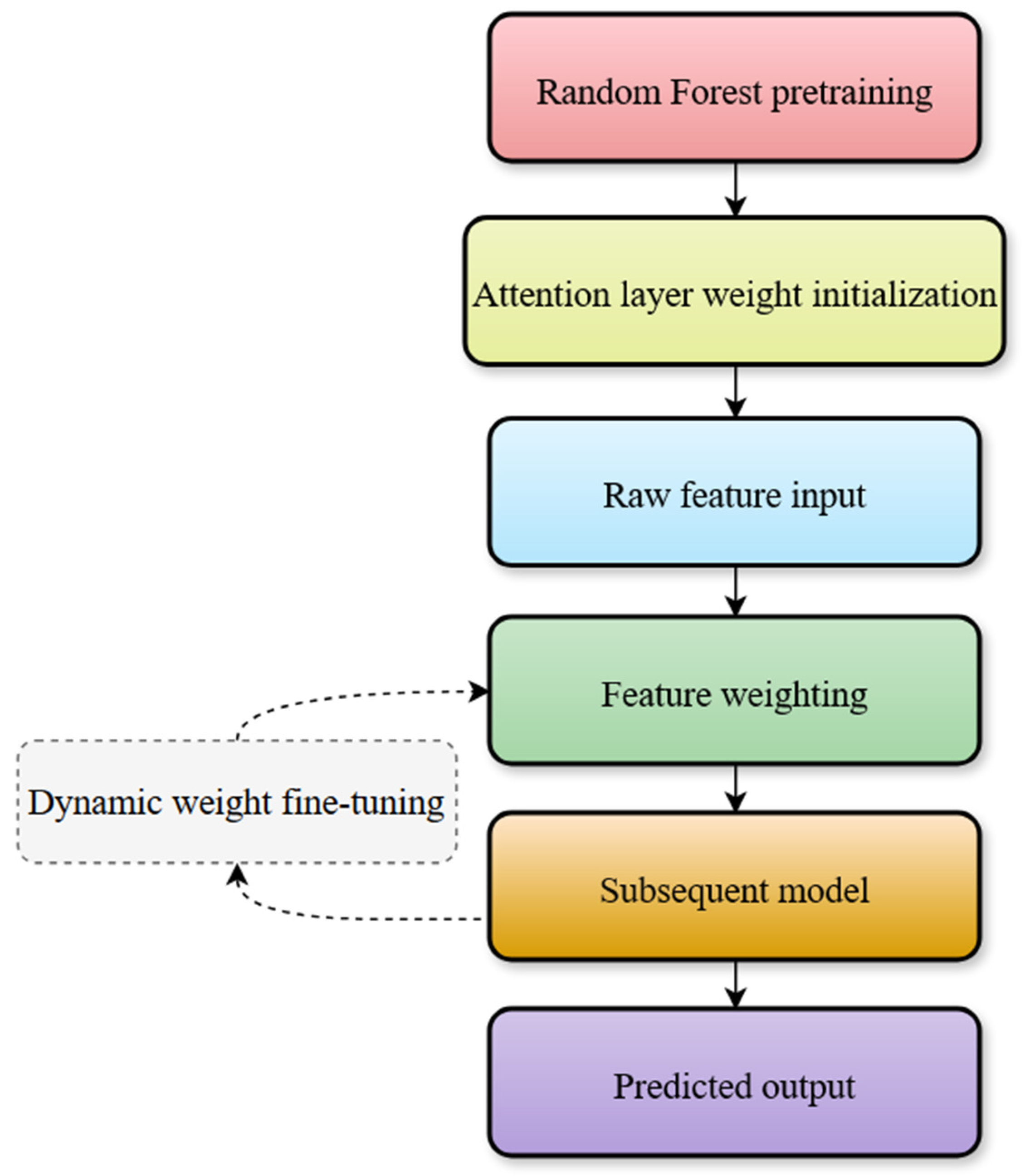

2.2.2. Feature Attention Mechanism

2.2.3. BiLSTM Model with Attention Mechanisms

2.3. Random Forest Feature Extraction

2.4. Experiment Scheme Design

2.5. Model Evaluation Metrics

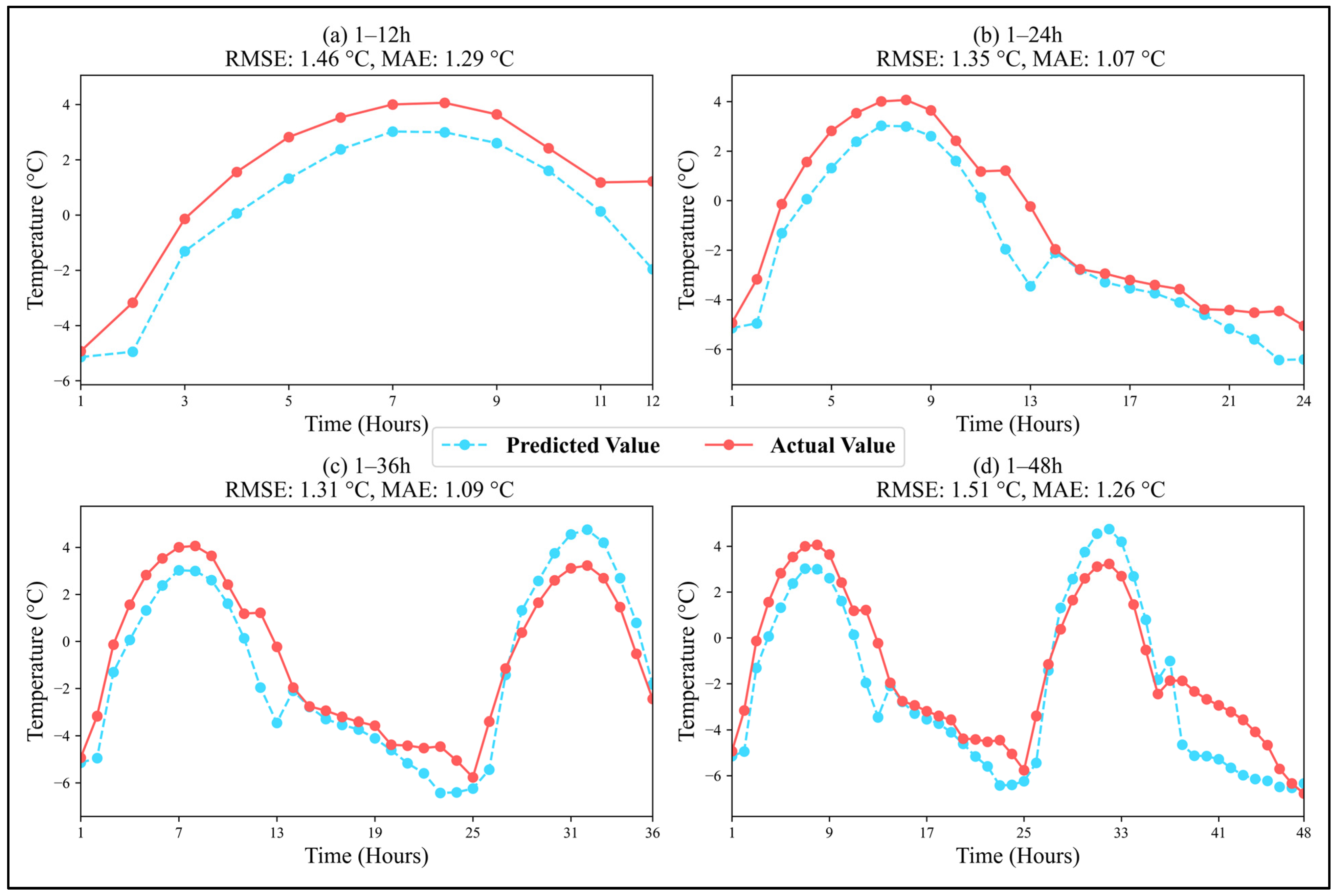

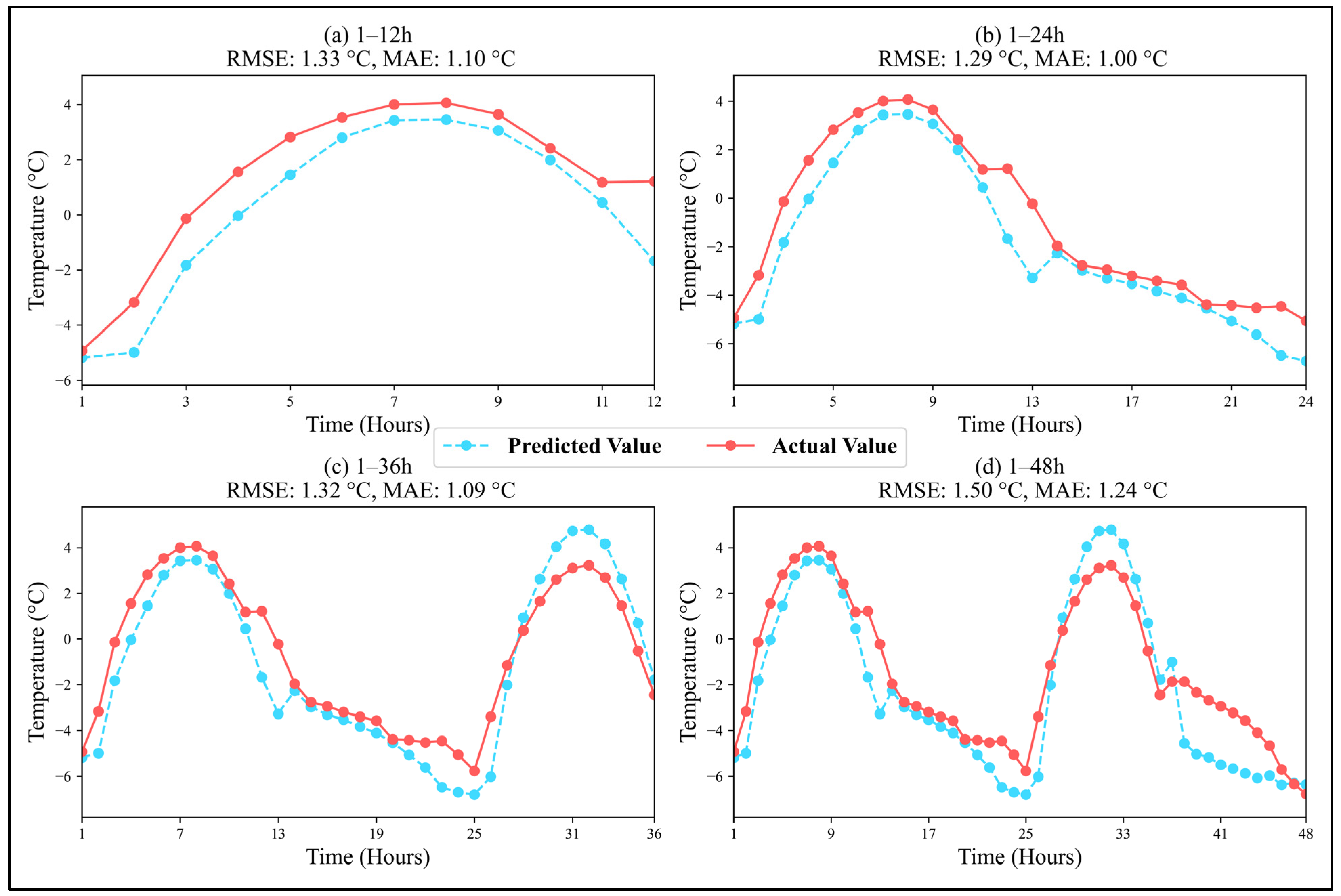

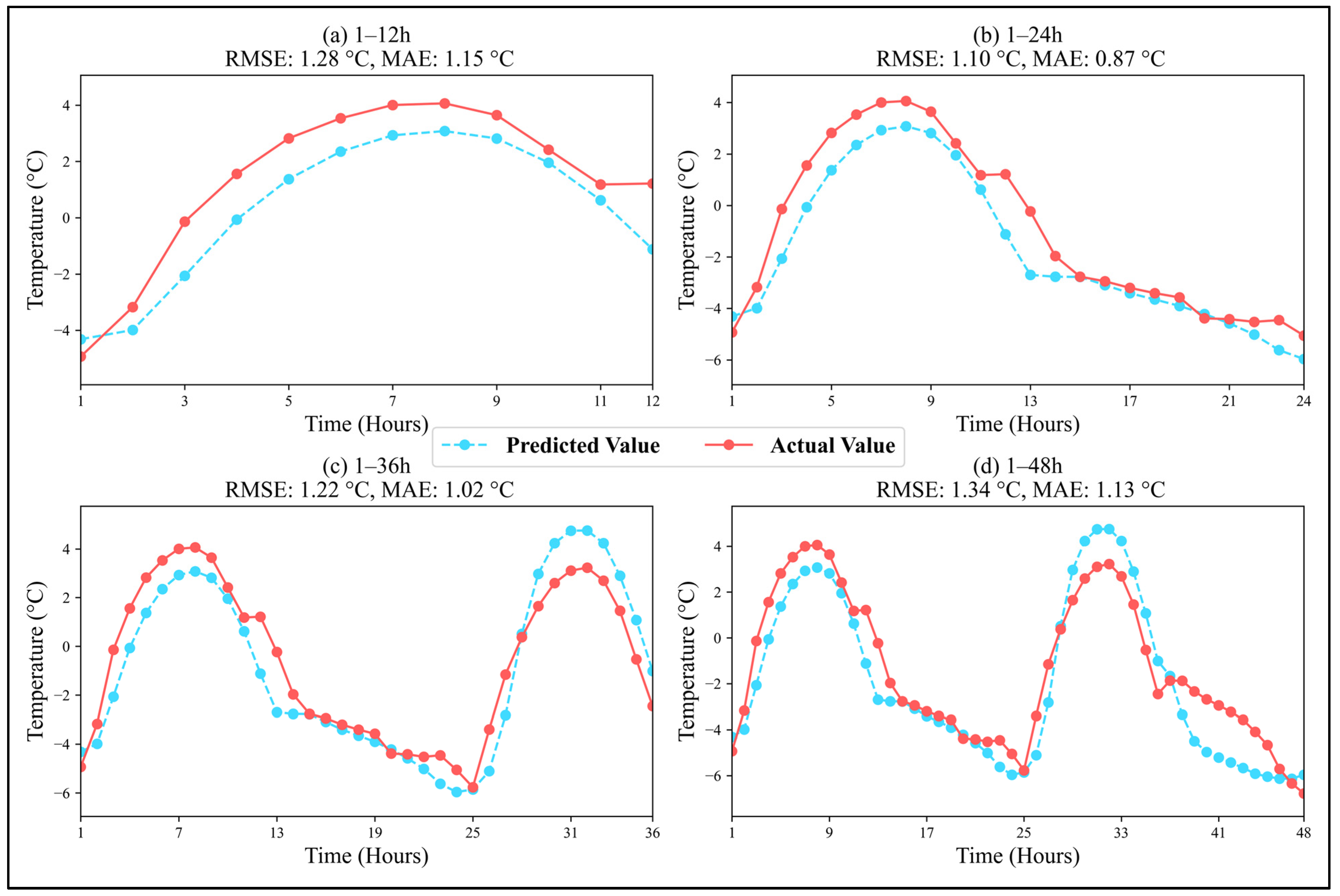

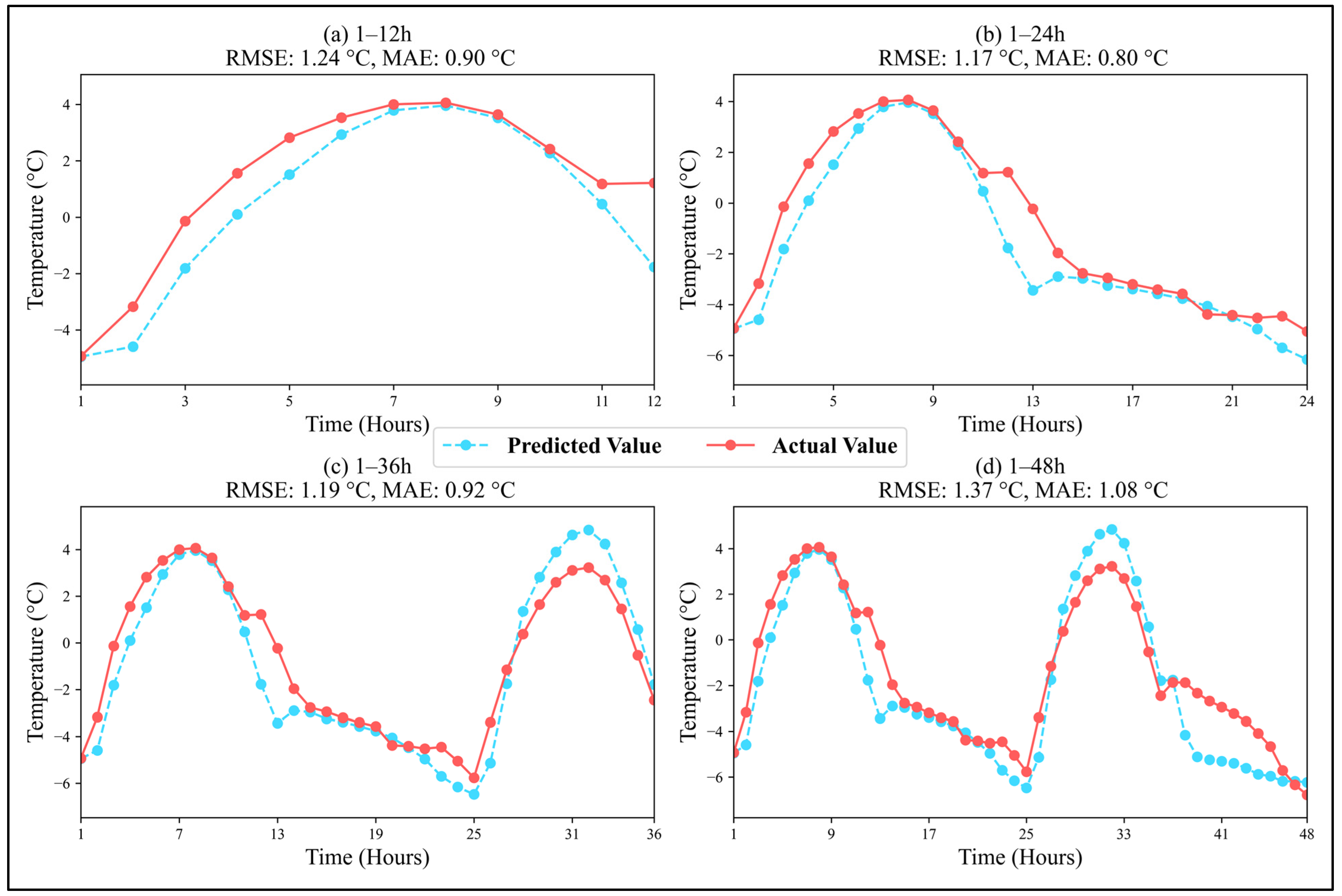

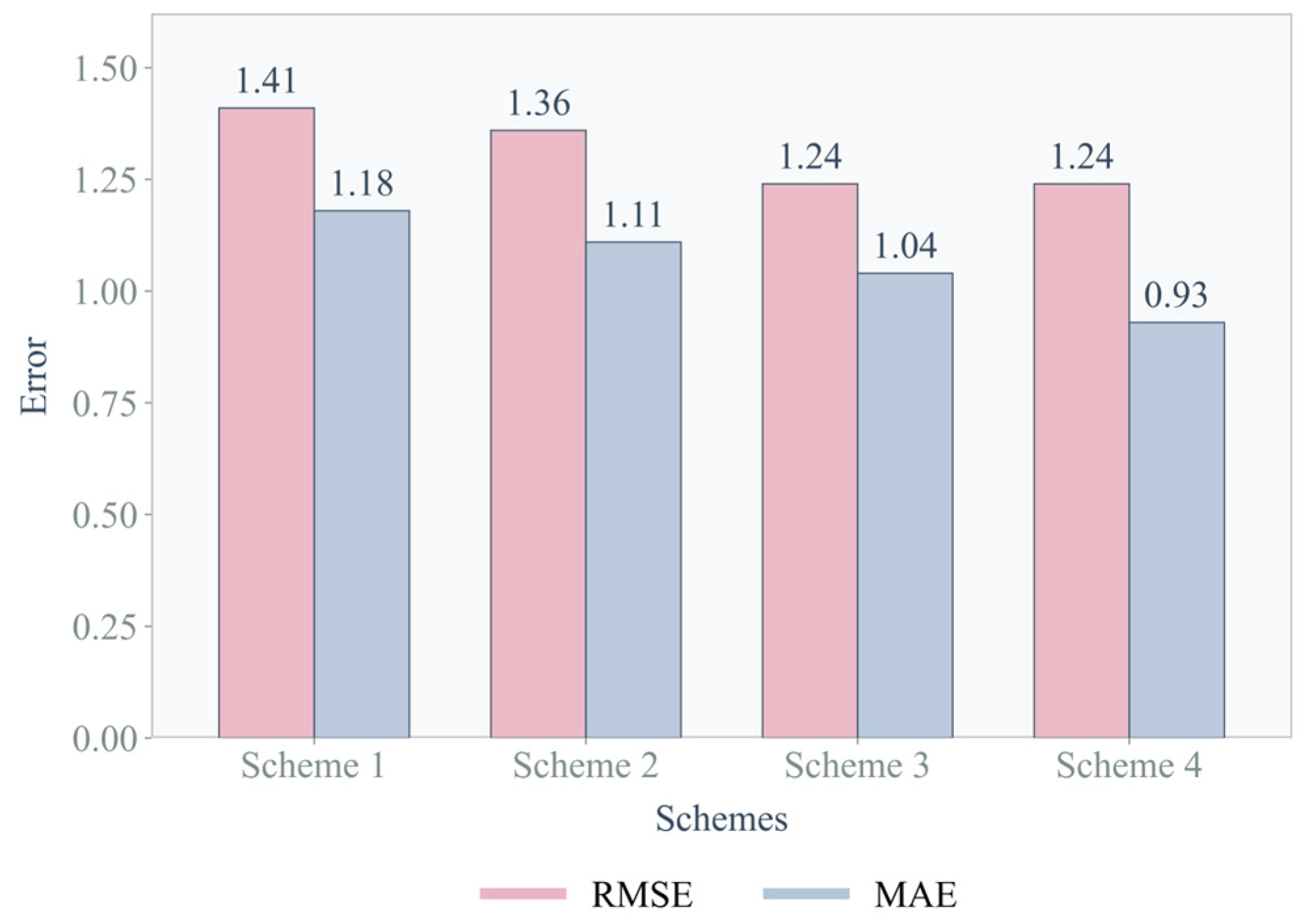

3. Results

4. Discussion

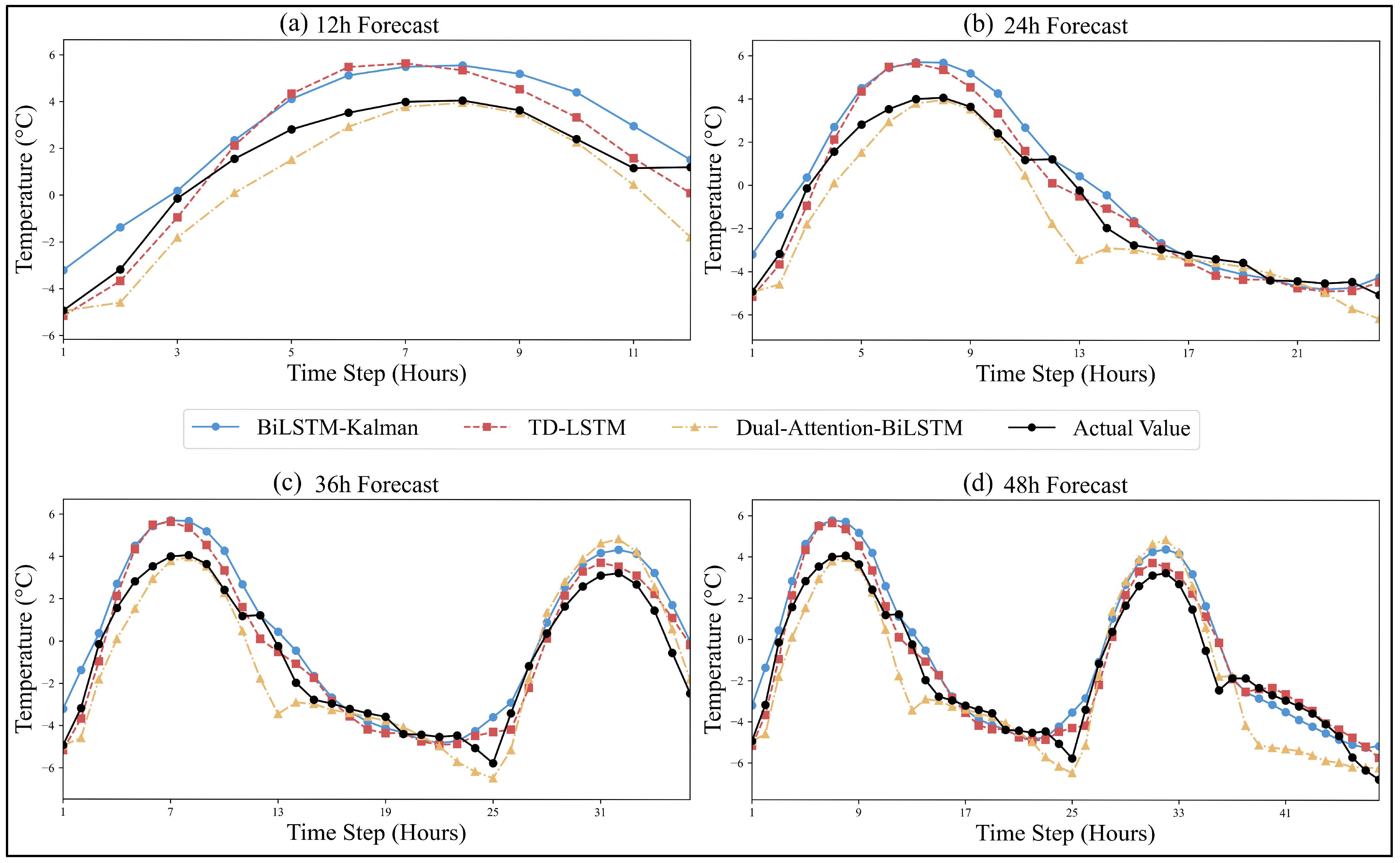

4.1. Comparison of Three Models

4.2. Limitations and Future Research

5. Conclusions

- The feature attention mechanism, integrated with the random forest algorithm, helps the model focus on key meteorological features during early training, dynamically reducing interference from redundant information and markedly improving the model’s feature selection capability.

- The key-value attention mechanism enhances the model’s ability to learn contextual information across time steps. Through the key-value mapping, the model captures important temperature-change features at critical moments, overcoming the limitation of traditional attention mechanisms, which treat features within the same time step as homogeneous.

- The comparison of the four model schemes shows that the BiLSTM model alone yields limited prediction performance. Introducing either attention mechanism improves accuracy, and combining both yields the best performance, indicating that the synergy between the two attention mechanisms markedly enhances the model’s predictive capability. However, the per-scheme results show that the model performs best for 24-h predictions, possibly because it was trained with a 24-h input window and therefore learns this time frame best. This indicates that the model’s ability to generalize across different forecast horizons still needs improvement.
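The two attention mechanisms summarized above can be illustrated with a small, dependency-free Python sketch. It is not the authors’ implementation: the BiLSTM encoder is omitted (its hidden states `h` are simply taken as given), the random-forest importances are assumed to be precomputed (for example, from scikit-learn’s `RandomForestRegressor.feature_importances_`), and the softmax prior with scale `tau` in `feature_attention`, the use of the last time step as the query, and the projection matrices `w_q`, `w_k`, `w_v` are all hypothetical choices made for illustration.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(x: Vector, w: Matrix) -> Vector:
    """Multiply row vector x (length n) by matrix w (n x m), giving length m."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def feature_attention(x: Matrix, rf_importance: Vector, tau: float = 0.1) -> Matrix:
    """Reweight the feature columns of x (T time steps x F features).

    Assumption: the weights are a softmax (scale tau) over precomputed
    random-forest importances; a trained model would add learned scores.
    """
    alpha = softmax([r / tau for r in rf_importance])
    return [[a * v for a, v in zip(alpha, row)] for row in x]


def key_value_attention(h: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> Vector:
    """Scaled dot-product attention over the time steps of h (T x d).

    Assumptions: h stands in for BiLSTM hidden states, the query is taken
    from the last time step, and w_q, w_k, w_v are hypothetical projections.
    """
    q = matvec(h[-1], w_q)
    keys = [matvec(row, w_k) for row in h]
    values = [matvec(row, w_v) for row in h]
    scale = math.sqrt(len(q))
    # One weight per time step: how relevant each step's key is to the query.
    weights = softmax([sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys])
    # Context vector: attention-weighted sum of the value vectors.
    return [sum(wt * v[j] for wt, v in zip(weights, values)) for j in range(len(values[0]))]
```

With equal importances every feature receives weight 1/F, and a larger importance shifts weight toward that feature; the key-value step returns a convex combination of the per-step value vectors. In a trained network the attention scores and projections would be learned parameters rather than fixed inputs.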

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Bochenek, B.; Ustrnul, Z. Machine Learning in Weather Prediction and Climate Analyses—Applications and Perspectives. Atmosphere 2022, 13, 180.

- Chen, H.; Wang, K.; Zhao, M.; Chen, Y.; He, Y. A CNN-LSTM-attention based seepage pressure prediction method for Earth and rock dams. Sci. Rep. 2025, 15, 12960.

- Davis, K.F.; Downs, S.; Gephart, J.A. Towards food supply chain resilience to environmental shocks. Nat. Food 2021, 2, 54–65.

- Jin, D.; Qin, Z.; Yang, M.; Chen, P. A Novel Neural Model with Lateral Interaction for Learning Tasks. Neural Comput. 2021, 33, 528–551.

- Dengfeng, Z.; Chaoyang, T.; Zhijun, F.; Yudong, Z.; Junjian, H.; Wenbin, H. Multi scale convolutional neural network combining BiLSTM and attention mechanism for bearing fault diagnosis under multiple working conditions. Sci. Rep. 2025, 15, 13035.

- Donas, A.; Galanis, G.; Pytharoulis, I.; Famelis, I.T. A Modified Kalman Filter Based on Radial Basis Function Neural Networks for the Improvement of Numerical Weather Prediction Models. Atmosphere 2025, 16, 248.

- Farhangmehr, V.; Imanian, H.; Mohammadian, A.; Cobo, J.H.; Shirkhani, H.; Payeur, P. A spatiotemporal CNN-LSTM deep learning model for predicting soil temperature in diverse large-scale regional climates. Sci. Total Environ. 2025, 968, 178901.

- Godoy-Rojas, D.F.; Leon-Medina, J.X.; Rueda, B.; Vargas, W.; Romero, J.; Pedraza, C.; Pozo, F.; Tibaduiza, D.A. Attention-Based Deep Recurrent Neural Network to Forecast the Temperature Behavior of an Electric Arc Furnace Side-Wall. Sensors 2022, 22, 1418.

- Hou, J.; Wang, Y.; Hou, B.; Zhou, J.; Tian, Q. Spatial Simulation and Prediction of Air Temperature Based on CNN-LSTM. Appl. Artif. Intell. 2023, 37, 2166235.

- Intergovernmental Panel on Climate Change (IPCC). Framing, Context, and Methods. In Climate Change 2021—The Physical Science Basis: Working Group I Contribution to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK, 2023; pp. 147–286.

- Yan, K.; Gan, J.; Sui, Y.; Liu, H.; Tian, X.; Lu, Z.; Abdo, A.M.A. An LSTM neural network prediction model of ultra-short-term transformer winding hotspot temperature. AIP Adv. 2025, 15, 035103.

- Liu, H.; Zheng, H.; Wu, L.; Deng, Y.; Chen, J.; Zhang, J. Spatiotemporal Evolution in the Thermal Environment and Impact Analysis of Drivers in the Beijing–Tianjin–Hebei Urban Agglomeration of China from 2000 to 2020. Remote Sens. 2024, 16, 2601.

- Lyu, Y.; Wang, Y.; Jiang, C.; Ding, C.; Zhai, M.; Xu, K.; Wei, L.; Wang, J. Random forest regression on joint role of meteorological variables, demographic factors, and policy response measures in COVID-19 daily cases: Global analysis in different climate zones. Environ. Sci. Pollut. Res. 2023, 30, 79512–79524.

- Miao, L.; Yu, D.; Pang, Y.; Zhai, Y. Temperature Prediction of Chinese Cities Based on GCN-BiLSTM. Appl. Sci. 2022, 12, 11833.

- Mohanty, L.K.; Panda, B.; Samantaray, S.; Dixit, A.; Bhange, S. Analyzing water level variability in Odisha: Insights from multi-year data and spatial analysis. Discov. Appl. Sci. 2024, 6, 363.

- Natras, R.; Soja, B.; Schmidt, M. Ensemble Machine Learning of Random Forest, AdaBoost and XGBoost for Vertical Total Electron Content Forecasting. Remote Sens. 2022, 14, 3547.

- Price, I.; Sanchez-Gonzalez, A.; Alet, F.; Andersson, T.R.; El-Kadi, A.; Masters, D.; Ewalds, T.; Stott, J.; Mohamed, S.; Battaglia, P.; et al. Probabilistic weather forecasting with machine learning. Nature 2025, 637, 84–90.

- Sevgin, F. Machine Learning-Based Temperature Forecasting for Sustainable Climate Change Adaptation and Mitigation. Sustainability 2025, 17, 1812.

- Tran, T.T.K.; Bateni, S.M.; Ki, S.J.; Vosoughifar, H. A Review of Neural Networks for Air Temperature Forecasting. Water 2021, 13, 1294.

- Wang, T. Improved random forest classification model combined with C5.0 algorithm for vegetation feature analysis in non-agricultural environments. Sci. Rep. 2024, 14, 10367.

- Xiao, H.; Xu, P.; Wang, L. The Unprecedented 2023 North China Heatwaves and Their S2S Predictability. Geophys. Res. Lett. 2024, 51, e2023GL107642.

- Xue, D.; Lu, J.; Leung, L.R.; Teng, H.; Song, F.; Zhou, T.; Zhang, Y. Robust projection of East Asian summer monsoon rainfall based on dynamical modes of variability. Nat. Commun. 2023, 14, 3856.

- Yuan, L.; Li, W.; Deng, W.; Sun, W.; Huang, M.; Liu, Z. Cell temperature prediction in the refrigerant direct cooling thermal management system using artificial neural network. Appl. Therm. Eng. 2024, 254, 123852.

- Zhang, H.; Chen, J.; Wang, Y.; Han, J.; Xu, Y. Improving 2 m temperature forecasts of numerical weather prediction through a machine learning-based Bayesian model. Meteorol. Atmos. Phys. 2025, 137, 9.

- Sun, D.; Huang, W.; Yang, Z.; Luo, Y.; Luo, J.; Wright, J.S.; Fu, H.; Wang, B. Deep Learning Improves GFS Wintertime Precipitation Forecast Over Southeastern China. Geophys. Res. Lett. 2023, 50, e2023GL104406.

- Patel, R.N.; Yuter, S.E.; Miller, M.A.; Rhodes, S.R.; Bain, L.; Peele, T.W. The Diurnal Cycle of Winter Season Temperature Errors in the Operational Global Forecast System (GFS). Geophys. Res. Lett. 2021, 48, e2021GL095101.

- Zhang, H.; Liu, Y.; Zhang, C.; Li, N. Machine Learning Methods for Weather Forecasting: A Survey. Atmosphere 2025, 16, 82.

- Jahangiri, M.; Asghari, M.; Niksokhan, M.H.; Nikoo, M.R. BiLSTM-Kalman framework for precipitation downscaling under multiple climate change scenarios. Sci. Rep. 2025, 15, 24354.

- Liu, J.; Zhang, T.; Han, G.; Gou, Y. TD-LSTM: Temporal Dependence-Based LSTM Networks for Marine Temperature Prediction. Sensors 2018, 18, 3797.

| Model Scheme | BiLSTM | Feature Attention Mechanism | Key-Value Attention Mechanism |
|---|---|---|---|
| Scheme 1 | √ | | |
| Scheme 2 | √ | √ | |
| Scheme 3 | √ | | √ |
| Scheme 4 | √ | √ | √ |

| Model | Error Type | 12 h | 24 h | 36 h | 48 h |
|---|---|---|---|---|---|
| Dual-Attention-BiLSTM | RMSE | 1.24 °C | 1.17 °C | 1.19 °C | 1.37 °C |
| BiLSTM-Kalman | RMSE | 1.46 °C | 1.18 °C | 1.28 °C | 1.18 °C |
| TD-LSTM | RMSE | 1.11 °C | 0.89 °C | 0.95 °C | 0.88 °C |
| Dual-Attention-BiLSTM | MAE | 0.90 °C | 0.80 °C | 0.92 °C | 1.08 °C |
| BiLSTM-Kalman | MAE | 1.35 °C | 0.97 °C | 1.07 °C | 0.98 °C |
| TD-LSTM | MAE | 0.99 °C | 0.74 °C | 0.79 °C | 0.70 °C |
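For reference, the RMSE and MAE values in the table above can be computed as in the minimal sketch below. It uses the standard textbook definitions over paired observed and predicted temperatures with every sample weighted equally; the exact formulas of Section 2.5 are not reproduced in this text, so the function names and the equal weighting are assumptions, and the example values are illustrative rather than data from the study.

```python
import math
from typing import Sequence


def _check(observed: Sequence[float], predicted: Sequence[float]) -> None:
    """Reject empty or mismatched inputs."""
    if not observed or len(observed) != len(predicted):
        raise ValueError("observed and predicted must be non-empty and equal in length")


def rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the inputs (e.g. °C)."""
    _check(observed, predicted)
    squared = [(p - o) ** 2 for o, p in zip(observed, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mae(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute error, in the units of the inputs (e.g. °C)."""
    _check(observed, predicted)
    return sum(abs(p - o) for o, p in zip(observed, predicted)) / len(observed)


# Illustrative values only (not data from the study).
obs = [20.0, 21.0, 22.0]
pred = [21.0, 21.0, 20.0]
print(f"RMSE = {rmse(obs, pred):.3f} °C, MAE = {mae(obs, pred):.3f} °C")
# prints: RMSE = 1.291 °C, MAE = 1.000 °C
```

Because RMSE squares each error before averaging, it is always at least as large as MAE and penalizes occasional large misses more heavily. Every RMSE/MAE pair in the table satisfies this, for example 1.37 °C versus 1.08 °C for Dual-Attention-BiLSTM at 48 h.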

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, W.; Du, M.; Li, C.; Du, G. Near-Surface Temperature Prediction Based on Dual-Attention-BiLSTM. Atmosphere 2025, 16, 1175. https://doi.org/10.3390/atmos16101175