Abstract

Dimensionality reduction (DR) is an essential pre-processing step for hyperspectral image processing and analysis. However, the complex relationships among sample clusters deserve attention: they reveal more intrinsic information about the samples, but they cannot be captured by a simple graph or by Euclidean distance. For this purpose, we propose a novel similarity distance-based hypergraph embedding method (SDHE) for hyperspectral image DR. Unlike conventional graph embedding-based methods that only consider the affinity between two samples, SDHE uses hypergraph embedding to describe high-order sample relationships. In addition, we propose a novel similarity distance to replace Euclidean distance for measuring the affinity between samples, because the similarity distance not only discovers complex geometrical structure information but also exploits local distribution information. Finally, based on the similarity distance, SDHE seeks the optimal projection that preserves the local distribution information of the samples in a low-dimensional subspace. Experimental results on three hyperspectral image data sets show that SDHE outperforms other state-of-the-art DR methods, improving the classification metrics by at least 2% on average.

1. Introduction

Hyperspectral remote sensing images play a significant role in earth observation and climate models. Each collected pixel is a high-dimensional sample consisting of information from a broad range of electromagnetic spectral bands [1,2]. Nevertheless, the high correlation between adjacent bands not only leads to information redundancy but also incurs tremendous time and space complexity, and the high dimensionality also makes hyperspectral image analysis challenging because of the Hughes phenomenon [3]. As Chang et al. showed in [4], there can be as much as 94% redundant spectral band information, on the premise that adequate valuable information can still be extracted for machine learning. In view of these issues, hyperspectral data dimensionality reduction (DR) is a crucial part of data processing [5,6]; it usually projects the original high-dimensional data into a low-dimensional space while maintaining as much valuable information as possible.

Supervised DR methods, such as linear discriminant analysis (LDA) [7], nonparametric weighted feature extraction (NWFE) [8], and local Fisher discriminant analysis (LFDA) [9], aim to increase between-class separability and decrease within-class divergence. LDA maintains global discriminant information according to the available labels and has been shown to work well when the samples of each class follow a Gaussian distribution. As an extension of LDA, LFDA was proposed to remove two limitations of LDA: it requires the reduced dimensionality to be less than the number of classes, and it ignores local structural information.

However, in many practical applications, labeling samples accurately is labor-intensive, expensive, and time-consuming because of the limitations of experimental conditions, especially for hyperspectral remote sensing images [10]. Consequently, many studies focus on the unsupervised case. Locality preserving projection (LPP) [11] and principal component analysis (PCA) [12] are representative unsupervised DR methods. Whereas LPP aims to preserve the local manifold structure of the data, PCA aims to maintain its global structure by maximizing sample variance.

Many studies have shown that high-dimensional data can be described by, or lie close to, a smooth manifold in a low-dimensional space [13,14,15], and several DR methods based on manifold learning have been proposed. Laplacian eigenmaps (LE) [14] maintains the local manifold structure by constructing an undirected graph that encodes the pairwise relationships of samples. Locally linear embedding (LLE) [15] reconstructs samples in a low-dimensional space while maintaining their local linear representation coefficients, under the assumption that local samples follow a linear representation within a manifold patch. Yan et al. summarized related DR approaches and proposed a general graph embedding framework [16], which covers a series of graph embedding models, including neighborhood preserving embedding (NPE) [17], LPP, and several extensions of LPP [11,18,19]. In these graph embedding-based DR models, researchers usually use Euclidean distance to construct adjacency graphs [20], where vertices represent samples and weighted edges reflect pairwise affinities between samples. Consequently, two basic problems need to be addressed.

- Conventional graph embedding-based DR methods, for example LPP, aim to preserve the local adjacency relationships of samples by constructing a weight matrix that only takes the affinity between pairs of samples into account. However, such a weight matrix fails to reflect high-order relationships among samples [21], leading to a loss of information.

- When used to calculate the similarity between two samples, the usual Euclidean distance depends only on the two samples themselves; it hardly considers the influence of their neighboring samples [22,23] and ignores the distribution of samples, which usually plays an important role in further data processing.

Accordingly, we propose a novel similarity distance-based hypergraph embedding method (SDHE) for unsupervised DR to address these two issues. Unlike conventional graph embedding-based models that only describe the affinity between two samples, SDHE is based on hypergraph embedding, which can exploit complex high-order sample relationships [24,25,26]. In addition, a novel similarity distance is defined in place of Euclidean distance to measure the affinity between samples, because it can not only discover complex geometrical structure information but also make use of the local distribution information of samples.

The remainder of this paper is organized as follows. Section 2 reviews related work, including the classic graph embedding model LPP and hypergraph embedding learning. Section 3 presents the proposed similarity distance-based hypergraph embedding method (SDHE) for dimensionality reduction in detail. In Section 4, we use three real hyperspectral images to evaluate SDHE in comparison with other related DR methods. Finally, Section 5 concludes the paper.

2. Related Work

2.1. Notations of Unsupervised Dimensionality Reduction Problem

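We focus on the unsupervised dimensionality reduction problem. The dataset is denoted as $X = [x_1, x_2, \dots, x_n] \in \mathbb{R}^{m \times n}$, where $x_i \in \mathbb{R}^{m}$ represents the $i$-th sample with $m$ feature values and $n$ denotes the total number of samples. To obtain a discriminative low-dimensional representation $y_i \in \mathbb{R}^{d}$ ($d \ll m$) for each $x_i$, an optimal projection matrix $P \in \mathbb{R}^{m \times d}$ is to be learned. We denote $y_i = P^\top x_i$ or $Y = P^\top X$, where $Y = [y_1, y_2, \dots, y_n] \in \mathbb{R}^{d \times n}$ is the data in the transformed space.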

2.2. Locality Preserving Projection (LPP)

As shown in [27], many high-dimensional observations contain low-dimensional manifold structures, which motivates solving DR problems by extracting the local metric information hidden in the low-dimensional manifold. Graph embedding has been proposed to characterize certain statistical or geometric properties of samples by constructing a graph embedding model [16]. In particular, LPP uses the K-nearest-neighbors (KNN) algorithm to construct an adjacency graph so that the local neighborhood structure in feature space is taken into account [17]. The derivation of Formulas (1)–(4) follows [17].

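LPP finds a projection matrix $P$ by solving

$$\min_{P} \frac{1}{2}\sum_{i,j}\left\|P^\top x_i - P^\top x_j\right\|^2 W_{ij} = \min_{P} \operatorname{tr}\left(P^\top X L X^\top P\right), \tag{1}$$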

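where $D$ is a diagonal matrix with diagonal entries $D_{ii} = \sum_j W_{ij}$, and $L = D - W$ is the Laplacian matrix. The symmetric weight matrix $W$ is defined on an adjacency graph, in which each entry $W_{ij}$ corresponds to a weighted edge denoting the similarity between two samples. The most popular way to define $W$ is:

$$W_{ij} = \begin{cases} \exp\left(-\dfrac{\|x_i - x_j\|^2}{t}\right), & x_i \in N_K(x_j) \text{ or } x_j \in N_K(x_i), \\ 0, & \text{otherwise}, \end{cases} \tag{2}$$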

where $t$ denotes the heat kernel parameter and $N_K(x_i)$ denotes the set of K nearest neighbors of $x_i$; $W_{ij}$ increases monotonically as the distance between $x_i$ and $x_j$ decreases.
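The equality between the pairwise objective and the trace form in Equation (1) follows from expanding the square. Writing $y_i = P^\top x_i$, $Y = P^\top X$, $D_{ii} = \sum_j W_{ij}$ and $L = D - W$, and using the symmetry of $W$ (so that $\sum_i W_{ij} = D_{jj}$), a short worked derivation is:

$$
\begin{aligned}
\frac{1}{2}\sum_{i,j}\|y_i - y_j\|^2 W_{ij}
&= \frac{1}{2}\sum_{i,j}\left(\|y_i\|^2 + \|y_j\|^2 - 2\,y_i^\top y_j\right) W_{ij} \\
&= \sum_{i} D_{ii}\,\|y_i\|^2 - \sum_{i,j} W_{ij}\, y_i^\top y_j \\
&= \operatorname{tr}\left(Y D Y^\top\right) - \operatorname{tr}\left(Y W Y^\top\right)
 = \operatorname{tr}\left(P^\top X L X^\top P\right).
\end{aligned}
$$

A large $W_{ij}$ therefore makes any separation between $y_i$ and $y_j$ expensive, which is the "heavy penalty" referred to below.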

Therefore, if samples $x_i$ and $x_j$ are K nearest neighbors of each other, the mapped samples $y_i$ and $y_j$ are also close to each other in the transformed space, because of the heavy penalty incurred by $W_{ij}$. A constraint $P^\top X D X^\top P = I$ is usually imposed to ensure a meaningful solution, where $I$ denotes the identity matrix. The final optimization problem can then be written as:

$$\min_{P} \operatorname{tr}\left(P^\top X L X^\top P\right) \quad \text{s.t.} \quad P^\top X D X^\top P = I. \tag{3}$$

The optimal projection matrix is obtained by solving the following generalized eigenvalue problem:

$$X L X^\top P = X D X^\top P \Lambda, \tag{4}$$

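where the columns of $P$ are generalized eigenvectors of the matrix pair $(X L X^\top, X D X^\top)$ and $\Lambda$ is the diagonal matrix of the corresponding eigenvalues. Because Equation (3) is a minimization, $P$ is formed by the eigenvectors associated with the $d$ smallest eigenvalues.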
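The following is a minimal NumPy/SciPy sketch of LPP as described by Equations (1)–(4), intended only as an illustration and not as a reference implementation. The function names, the symmetrization of the KNN graph by element-wise maximum, and the small ridge term `reg` added to $X D X^\top$ (to keep it positive definite when there are few samples) are our own assumptions. `scipy.linalg.eigh(A, B)` solves the symmetric generalized eigenproblem and returns eigenvalues in ascending order.

```python
import numpy as np
from scipy.linalg import eigh


def pairwise_sq_dists(X):
    """Squared Euclidean distances between the columns of X (m x n)."""
    sq = np.sum(X**2, axis=0)
    return np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)


def lpp(X, K, t, d, reg=1e-8):
    """Sketch of locality preserving projection; returns P with shape (m, d)."""
    m, n = X.shape
    dist2 = pairwise_sq_dists(X)
    W = np.zeros((n, n))
    for i in range(n):
        order = np.argsort(dist2[i])
        nbrs = order[order != i][:K]               # K nearest neighbours of x_i
        W[i, nbrs] = np.exp(-dist2[i, nbrs] / t)   # heat kernel, Equation (2)
    W = np.maximum(W, W.T)    # edge if x_i in N_K(x_j) or x_j in N_K(x_i)
    D = np.diag(W.sum(axis=1))
    L = D - W                 # graph Laplacian
    A = X @ L @ X.T
    B = X @ D @ X.T + reg * np.eye(m)   # ridge term (assumption) keeps B positive definite
    # Generalized symmetric eigenproblem A p = lambda B p, cf. Equation (4);
    # eigenvalues come back in ascending order, eigenvectors are B-orthonormal.
    _, eigvecs = eigh(A, B)
    return eigvecs[:, :d]     # eigenvectors of the d smallest eigenvalues
```

The low-dimensional features are then `Y = P.T @ X`. Because `eigh` normalizes the eigenvectors so that $P^\top B P = I$, the returned matrix satisfies the constraint of Equation (3) up to the ridge term.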

2.3. Hypergraph Embedding

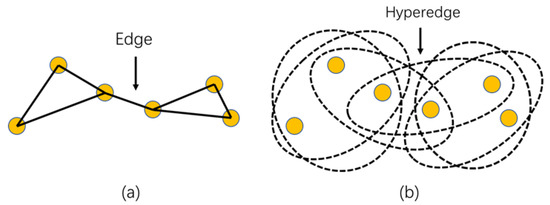

Since hypergraph theory was proposed, hypergraph learning has made promising progress in many applications; the derivation of Formulas (5)–(8) follows [26,28,29]. As an extension of the classic graph, a hypergraph represents a data structure by capturing high-order relationships among adjacent samples, which overcomes the limitation of a classic graph in which each edge only considers the affinity between a pair of samples. Unlike a classic graph, where a weighted edge links two vertices, a hyperedge consists of several vertices within a neighborhood. Figure 1 compares a classic graph and a hypergraph.

Figure 1.

(a) Classic graph built by two nearest neighbors. (b) Hypergraph built by two nearest neighbors.

The hypergraph is denoted $G = (V, E, \mathbf{w})$, where $V$ is the vertex set corresponding to the samples and $E$ is the hyperedge set, in which each hyperedge $e$ is assigned a positive weight $w(e)$. Each vertex together with its K nearest neighbors (K = 2 in Figure 1b) forms a hyperedge, and an incidence matrix $H \in \mathbb{R}^{|V| \times |E|}$ with entries $H(v, e)$ expresses the affiliation between vertices and hyperedges:

$$H(v, e) = \begin{cases} 1, & v \in e, \\ 0, & v \notin e. \end{cases} \tag{5}$$

Each hyperedge $e$ is then assigned a weight $w(e)$ computed by:

where $h$ is the Gaussian kernel parameter. Based on the incidence matrix $H$ and the hyperedge weights $w(e)$, the degree $d(v)$ of each vertex $v \in V$ is defined as

$$d(v) = \sum_{e \in E} w(e)\, H(v, e), \tag{7}$$

and the degree $\delta(e)$ of each hyperedge $e \in E$ is defined as

$$\delta(e) = \sum_{v \in V} H(v, e). \tag{8}$$

That is, $\delta(e)$ is the number of vertices that belong to hyperedge $e$.
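A minimal NumPy sketch of the KNN hypergraph of Equations (5)–(8) follows. The function name and data layout (samples as columns of `X`, hyperedge $e_i$ built around $x_i$) are illustrative choices. Because the exact expression of Equation (6) is not reproduced in this text, the hyperedge weight below assumes a Gaussian kernel summed over the neighbors, $w(e_i) = \sum_{x_j \in N_K(x_i)} \exp(-\|x_i - x_j\|^2 / h^2)$, which matches the verbal description in Section 3.1 but may differ from the authors' formula.

```python
import numpy as np


def knn_hypergraph(X, K, h):
    """Build a KNN hypergraph from the columns of X (m x n).

    Returns the incidence matrix H (vertices x hyperedges), the hyperedge
    weights w, the vertex degrees d(v) and the hyperedge degrees delta(e).
    """
    n = X.shape[1]
    sq = np.sum(X**2, axis=0)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    H = np.zeros((n, n))
    w = np.zeros(n)
    for i in range(n):
        order = np.argsort(dist2[i])
        nbrs = order[order != i][:K]   # K nearest neighbours of x_i
        H[i, i] = 1.0                  # x_i belongs to its own hyperedge e_i
        H[nbrs, i] = 1.0               # Equation (5): H(v, e_i) = 1 if v is in e_i
        # ASSUMED form of Equation (6): Gaussian affinities summed over the neighbours.
        w[i] = np.sum(np.exp(-dist2[i, nbrs] / h**2))
    vertex_degree = H @ w              # Equation (7): d(v) = sum_e w(e) H(v, e)
    edge_degree = H.sum(axis=0)        # Equation (8): delta(e) = number of vertices in e
    return H, w, vertex_degree, edge_degree
```

With K = 2, every hyperedge contains $\delta(e) = 3$ vertices, as in Figure 1b, and each vertex degree is at least the weight of the vertex's own hyperedge.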

3. Proposed Method

In this section, we propose a novel unsupervised DR method called similarity distance-based hypergraph embedding (SDHE). We first introduce a hypergraph embedding-based similarity, then construct a novel similarity distance from it, and finally propose a similarity distance-based hypergraph embedding model for DR.

3.1. Hypergraph Embedding-Based Similarity

It is reasonable to describe high-order similarity relationships with a hypergraph rather than a simple graph, because each hyperedge connects more than two vertices and these vertices share one weighted hyperedge, i.e., the samples in the same hyperedge are regarded as a whole. A hyperedge $e_i$ consists of the sample $x_i$ together with its K nearest neighbors, and the incidence matrix $H$ defined by Equation (5) represents the affiliation between vertices and hyperedges. A positive weight $w(e_i)$ is then assigned to each hyperedge according to Equation (6); it is computed by summing the affinities between $x_i$ and its nearest neighbors.

However, the hyperedge weight depends heavily on the parameter K. If K is too small, the hypergraph approaches a simple graph and cannot sufficiently depict high-order sample relationships. If K is too large, one hyperedge connects too many vertices that share a common weight, which fails to reflect each vertex's own similarity characteristics. Moreover, outliers also share hyperedge weights with other vertices, so hypergraph embedding is sensitive to outliers (usually noise). To alleviate this sensitivity, we construct a robust similarity. The similarity $s_{ij}$ between two arbitrary samples $x_i$ and $x_j$ is defined as follows:

$$s_{ij} = \sum_{e \in E} w(e)\, H(v_i, e)\, H(v_j, e), \tag{9}$$

where $v_i$ and $v_j$ are the vertices corresponding to $x_i$ and $x_j$, and $H(v, e)$ and $w(e)$ are defined in Equations (5) and (6), respectively.

According to Equation (9), the similarity between samples $x_i$ and $x_j$ is the sum of the weights of all hyperedges that contain both of them. The weight of a common hyperedge is associated with the local sample distribution, as explained next. On the one hand, each hyperedge connects K + 1 vertices, so its weight becomes larger when these vertices are distributed compactly, and vice versa. If an outlier and its nearest neighbors form a hyperedge, the hyperedge has a smaller weight because these vertices are more scattered; in other words, an outlier contributes little to the hyperedge weight, which makes the similarity measure more robust. On the other hand, each vertex can belong to several different hyperedges. When two vertices are very close to each other, they share more hyperedges and therefore, as expected, have a higher similarity. Taking the sample distribution into account lets us mine more information from a training set of a given size, based on the local structure and distribution relationships in the hypergraph, which is especially valuable for small training sets. Our experiments on different data sets support this conclusion, as shown in Section 4.
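Equation (9) can be computed for all pairs at once as $S = H\,\mathrm{diag}(\mathbf{w})\,H^\top$, because the product $H(v_i, e)\,H(v_j, e)$ equals 1 exactly when both vertices lie in $e$. The small NumPy sketch below (the function name is ours) evaluates it on a hand-built toy hypergraph.

```python
import numpy as np


def hypergraph_similarity(H, w):
    """s_ij = sum_e w(e) H(v_i, e) H(v_j, e), i.e. S = H diag(w) H^T."""
    return (H * w) @ H.T   # scaling column e of H by w(e) avoids forming diag(w)


# Toy hypergraph: 3 vertices, hyperedges e1 = {v1, v2} and e2 = {v2, v3}.
H = np.array([[1.0, 0.0],
              [1.0, 1.0],
              [0.0, 1.0]])
w = np.array([0.5, 2.0])
S = hypergraph_similarity(H, w)
```

Here $v_1$ and $v_3$ share no hyperedge, so $s_{13} = 0$; $v_1$ and $v_2$ share only $e_1$, so $s_{12} = w(e_1) = 0.5$; and $v_2$, which lies in both hyperedges, has the largest self-similarity $s_{22} = 2.5$.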

3.2. Similarity Distance Construction

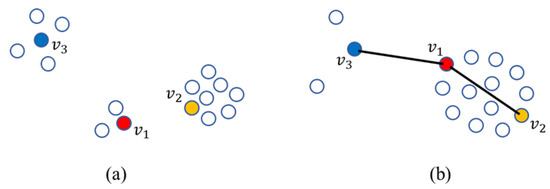

Euclidean distance is the most popular tool for measuring the similarity between samples in graph embedding-based DR methods [30]. However, it is not very accurate for analyzing hyperspectral images. For example, as depicted in Figure 2a, three samples come from three different classes, and in Euclidean distance the first sample is close to the second but far from the third. Consequently, the first two samples are likely to be misclassified into the same class if complex structure and distribution information is ignored, which can increase the classification error. As another example, depicted in Figure 2b, the Euclidean distance from one sample to a second sample equals that to a third sample. However, according to the distribution of the samples, the first two are more likely to belong to the same class, which Euclidean distance cannot reflect. This motivates us to propose a novel similarity distance to replace Euclidean distance.

Figure 2.

Diagram comparing Euclidean distance and similarity distance. (a) Three samples from three different classes; (b) three samples from two classes.

Intuitively, two samples with high similarity are likely to come from the same class, even though their exact labels are unknown in the unsupervised DR problem. That is, when two similar samples are mapped to a low-dimensional space, they ought to remain close to each other according to their similarity in the original feature space. Using the similarity directly to represent distance relationships runs into the problem of non-uniform scale, so we normalize it by defining the relative similarity:

$$\tilde{s}_{ij} = \frac{s_{ij} - s_{\min}}{s_{\max} - s_{\min}}, \tag{10}$$

where $s_{ij}$ is defined in Equation (9), and $s_{\min}$ and $s_{\max}$ denote the minimum and maximum elements of the similarity matrix $S$, respectively. Thus $s_{\min}$ maps to 0 and $s_{\max}$ maps to 1. As a normalized version of $s_{ij}$, $\tilde{s}_{ij}$ reflects how likely samples $x_i$ and $x_j$ are to belong to the same class. In addition, the relative similarity matrix with entries $\tilde{s}_{ij}$ is sparse, because the majority of entries are $\tilde{s}_{ij} = 0$, i.e., no hyperedge contains samples $x_i$ and $x_j$ simultaneously.
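Equation (10) is ordinary min-max scaling over all entries of $S$. A minimal sketch, applied to a small hand-written similarity matrix (the function name is ours, and the code assumes $s_{\max} > s_{\min}$):

```python
import numpy as np


def relative_similarity(S):
    """Min-max normalize the similarity matrix S to [0, 1]; assumes S is not constant."""
    s_min, s_max = S.min(), S.max()
    return (S - s_min) / (s_max - s_min)


S = np.array([[0.5, 0.5, 0.0],
              [0.5, 2.5, 2.0],
              [0.0, 2.0, 2.0]])
S_rel = relative_similarity(S)
```

Since pairs that share no hyperedge have $s_{ij} = 0 = s_{\min}$, they stay at 0 after scaling, which is why the relative similarity matrix remains sparse.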

Based on the relative similarity $\tilde{s}_{ij}$, a novel similarity distance $d_s(x_i, x_j)$ is defined to measure the location relationship of samples as follows:

where and . In particular, if , we define . The similarity distance is symmetric, i.e., $d_s(x_i, x_j) = d_s(x_j, x_i)$.

To give an intuitive understanding of the similarity distance, Figure 2b illustrates how it works. Although the Euclidean distances from one sample to two other samples are equal, the samples are distributed more densely around the first sample and one of the others than around the first sample and the remaining one. According to Equation (6), a denser distribution leads to larger hyperedge weights and hence a larger similarity. A larger similarity means a smaller similarity distance, so the similarity distance between the first sample and the sample in the denser region is smaller than that between the first sample and the remaining one. This result agrees with our intuitive judgment.

Euclidean distance has the advantage of being simple and easy to compute, but this simplicity also limits the amount of information it carries. Because the geometrical structure of hyperspectral data in a high-dimensional feature space is complex and hard to learn, Euclidean distance cannot effectively reflect the interactions between samples. In contrast, the similarity distance can reveal crucial information that is not directly exhibited by geometric distance, which benefits the DR of hyperspectral images.

3.3. Similarity Distance-Based Hypergraph Embedding Model

As described in the two preceding subsections, we extract similarity from the hypergraph embedding and then use it to construct the similarity distance. We now propose the similarity distance-based hypergraph embedding (SDHE) model for DR, whose basic idea is to find a projection matrix $P$ that maps the original high-dimensional data to a low-dimensional manifold space while preserving the similarity distances among samples.

Similar to LPP, a penalty factor $W_{ij}$ is defined to balance the similarity distance between samples $x_i$ and $x_j$ in the transformed space as follows:

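where $d_s(x_i, x_j)$ is formulated in Equation (11) and $t$ is a positive heat kernel parameter. The optimization problem of SDHE is then formulated as

$$\min_{P} \frac{1}{2}\sum_{i,j}\left\|P^\top x_i - P^\top x_j\right\|^2 W_{ij} = \min_{P} \operatorname{tr}\left(P^\top X L X^\top P\right), \tag{13}$$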

where $D$ is a diagonal matrix with diagonal entries $D_{ii} = \sum_j W_{ij}$, and $L = D - W$ is the Laplacian matrix.

Therefore, if samples $x_i$ and $x_j$ have a small similarity distance in the original feature space, the mapped samples $y_i$ and $y_j$ will also be close to each other in the transformed feature space, due to the heavy penalty incurred by $W_{ij}$. To avoid a degenerate solution, the final optimization problem is formulated as follows by adding a regularization term:

This is a trace-ratio problem, which can be reduced to the following generalized eigenvalue problem:

where $\lambda$ denotes a generalized eigenvalue. The optimal projection matrix $P$ is formed by the eigenvectors corresponding to the $d$ largest eigenvalues.

Algorithm 1 summarizes SDHE:

| Algorithm 1: SDHE |
| --- |
| Require: Training samples $X = [x_1, \dots, x_n]$, dimensionality $d$ of the transformed space, the number of nearest neighbors K, and the Gaussian kernel parameters h and t. |
| Ensure: The optimal projection matrix $P$. |
| Step 1: Construct the hypergraph with the K-nearest-neighbors algorithm and obtain the incidence matrix $H$ according to Equation (5). |
| Step 2: Calculate the weight $w(e)$ of each hyperedge according to Equation (6). |
| Step 3: Calculate the similarity $s_{ij}$ by Equation (9). |
| Step 4: Transform the similarity into the relative similarity $\tilde{s}_{ij}$ by Equation (10). |
| Step 5: Construct the similarity distance $d_s(x_i, x_j)$ by Equation (11). |
| Step 6: Construct the penalty factor $W_{ij}$ by Equation (12). |
| Step 7: Calculate $D$ and $L$. |
| Step 8: Solve the generalized eigenvalue problem. |
| Step 9: $P$ consists of the eigenvectors corresponding to the $d$ largest eigenvalues. |

4. Results and Discussion

In this section, we evaluate the proposed SDHE method on three hyperspectral data sets in comparison with related DR methods. DR effectiveness is measured by the classification accuracy of the nearest neighbor (NN) classifier applied after each DR method.

4.1. Hyperspectral Image Data Sets

Our experiments use the following three standard hyperspectral image data sets; the per-class sample counts are given in Section 4.3.

4.1.1. Pavia University

The Pavia University scene was acquired by the reflective optics system imaging spectrometer (ROSIS) optical sensor over Pavia, northern Italy. The image is 610 × 610 pixels; after invalid samples containing no information are removed, it contains 9 ground-truth classes with 103 spectral bands.

4.1.2. Salinas

The Salinas scene was acquired by the airborne visible/infrared imaging spectrometer (AVIRIS) sensor over Salinas Valley, California, in 1998. The area comprises 512 × 217 pixels with 224 spectral bands. After 20 water absorption bands are discarded, it contains 16 classes of observations with 204 spectral bands.

4.1.3. Kennedy Space Center

The Kennedy Space Center (KSC) data were acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) instrument over KSC, Florida, in 1996. After the uncalibrated and noisy bands covering the water absorption regions are discarded, the data contain 13 classes of observations with 176 spectral bands.

4.2. Experimental Setup

4.2.1. Training Set and Testing Set

Considering the different scales and distributions of the data sets above, we randomly choose 15, 20, or 25 samples per class from each of the Pavia University, Salinas, and KSC scenes to form the training sets; the remaining samples form the testing sets. To weaken the influence of random bias, this random partition is repeated 10 times independently.
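The per-class random partition can be sketched as follows, assuming integer class labels for the labeled pixels (unlabeled background pixels excluded). The function name and the toy label vector are hypothetical; each class must contain at least `n_per_class` samples.

```python
import numpy as np


def split_per_class(labels, n_per_class, rng):
    """Draw n_per_class training indices from every class; all other indices form the test set."""
    train = np.concatenate([
        rng.choice(np.flatnonzero(labels == c), size=n_per_class, replace=False)
        for c in np.unique(labels)
    ])
    test = np.setdiff1d(np.arange(labels.size), train)
    return np.sort(train), test


labels = np.repeat([0, 1, 2], [30, 40, 50])   # toy labels, not a real scene
rng = np.random.default_rng(0)
# 10 independent random partitions, each with 15 training samples per class.
splits = [split_per_class(labels, 15, rng) for _ in range(10)]
```

Reported accuracies are then the mean and standard deviation over the 10 partitions.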

4.2.2. Data Pre-Processing

Following Camps-Valls et al. [31], we use spatial mean filtering to enhance hyperspectral data classification. For a pixel $x_{(p,q)}$ at coordinate $(p, q)$, we denote its local pixel neighborhood as

$$N(x_{(p,q)}) = \left\{ x_{(u,v)} \;\middle|\; u \in [p - a,\, p + a],\ v \in [q - a,\, q + a] \right\}.$$

For pixels at the image border, the image is mirrored before spatial mean filtering, so that every pixel has a spatial neighborhood of $(2a+1)^2$ pixels, where $2a+1$ is the width of the spatial filtering window. Finally, each pixel is represented by

$$\bar{x}_{(p,q)} = \frac{1}{(2a+1)^2} \sum_{x_{(u,v)} \in N(x_{(p,q)})} x_{(u,v)}.$$

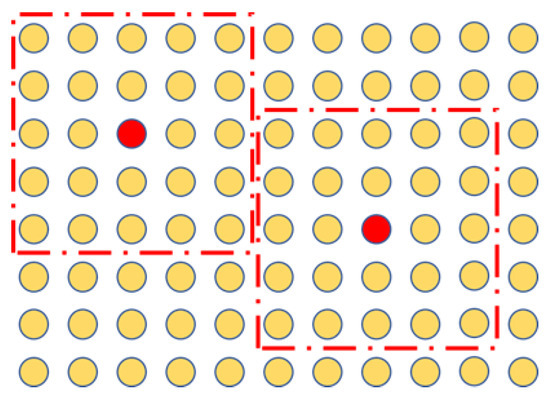

In our experiments, we set $a = 2$ for all hyperspectral images, i.e., the width of the spatial neighborhood is 5, as depicted in Figure 3. In addition, the filtered data are normalized by min-max scaling, as is common practice.

Figure 3.

Spatial neighborhood of data pre-processing. The red dashed line indicates the width of the selected pixels’ spatial neighborhood.
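The pre-processing described above can be sketched in NumPy as follows. Two details are assumptions rather than statements from the text: the mirroring scheme (NumPy's `"symmetric"` padding, which repeats the edge pixel) and per-band min-max scaling. `numpy.lib.stride_tricks.sliding_window_view` requires NumPy 1.20 or later; the function names are ours.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def spatial_mean_filter(cube, a=2):
    """Average every band over a (2a+1) x (2a+1) window; cube has shape (rows, cols, bands).

    Border pixels are handled by mirror padding ("symmetric" mode is an assumed choice).
    """
    padded = np.pad(cube, ((a, a), (a, a), (0, 0)), mode="symmetric")
    windows = sliding_window_view(padded, (2 * a + 1, 2 * a + 1), axis=(0, 1))
    return windows.mean(axis=(-2, -1))   # back to shape (rows, cols, bands)


def min_max_scale(cube):
    """Scale each band to [0, 1] (per-band scaling is an assumption)."""
    lo = cube.min(axis=(0, 1), keepdims=True)
    hi = cube.max(axis=(0, 1), keepdims=True)
    return (cube - lo) / np.where(hi > lo, hi - lo, 1.0)
```

With `a=2` the window is 5 × 5, matching the setting used in the experiments; `min_max_scale(spatial_mean_filter(cube))` gives the filtered, normalized data.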

4.2.3. Comparison and Evaluation

To evaluate the effectiveness of the different DR methods, the testing set is transformed into low-dimensional data using the optimal projection matrix learned from the training set. The proposed SDHE is compared with two classical unsupervised DR methods, PCA [12] and LPP [11], two state-of-the-art unsupervised DR methods, BH and SH [32], and two supervised DR methods, LFDA [9] and NWFE [8]. As a baseline, the raw data (RAW) are also classified directly without DR. In our experiments, the nearest neighbor (NN) classifier is used for classification, and the overall accuracy (OA), average accuracy (AA), and kappa coefficient (KC), together with their standard deviations (STD), are used to evaluate the DR methods.
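For reference, OA, AA, and KC follow standard definitions from the confusion matrix: OA is the fraction of correctly classified test samples, AA is the mean of the per-class accuracies, and KC is Cohen's kappa, which corrects OA for chance agreement. A minimal sketch (function name ours):

```python
import numpy as np


def classification_metrics(y_true, y_pred):
    """Return overall accuracy (OA), average accuracy (AA) and Cohen's kappa (KC)."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    labels = np.union1d(y_true, y_pred)
    C = np.zeros((labels.size, labels.size))
    # Confusion matrix: rows = true class, columns = predicted class.
    np.add.at(C, (np.searchsorted(labels, y_true), np.searchsorted(labels, y_pred)), 1)
    n = C.sum()
    oa = np.trace(C) / n
    row, col = C.sum(axis=1), C.sum(axis=0)
    present = row > 0                                   # classes occurring in y_true
    aa = np.mean(np.diag(C)[present] / row[present])    # mean per-class accuracy
    pe = np.sum(row * col) / n**2                       # expected chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return oa, aa, kappa
```

The mean and STD reported in the tables are then obtained with `np.mean` and `np.std` over the metrics of the repeated runs.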

4.2.4. Parameter Selection

It is essential to select appropriate parameters for the different DR methods in our experiments. The number of nearest neighbors K is selected from the given set of , and the Gaussian kernel parameters h and t are each selected from the given set of . To reduce the influence of random bias, each experiment is repeated 10 times for every combination of parameters, with randomly divided training and testing sets. The parameter combination yielding the highest mean overall accuracy (OA) is chosen as optimal.
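The selection procedure amounts to an exhaustive grid search scored by mean OA over repeated random splits. In the sketch below, `evaluate` is a hypothetical callback (not part of the original work) that would draw one random split, fit SDHE with the given (K, h, t), and return the test OA; the candidate grids are placeholders, since the text does not list them here.

```python
import itertools

import numpy as np


def grid_search(evaluate, K_grid, h_grid, t_grid, n_repeats=10, seed=0):
    """Return the (K, h, t) combination with the highest mean OA over n_repeats random splits.

    `evaluate(K, h, t, rng)` is a hypothetical callback that draws one random
    training/testing split with `rng`, fits the DR model, and returns the OA.
    """
    rng = np.random.default_rng(seed)
    best_params, best_oa = None, -np.inf
    for K, h, t in itertools.product(K_grid, h_grid, t_grid):
        mean_oa = float(np.mean([evaluate(K, h, t, rng) for _ in range(n_repeats)]))
        if mean_oa > best_oa:   # ties keep the first combination reaching the maximum
            best_params, best_oa = (K, h, t), mean_oa
    return best_params, best_oa
```

Because the grid is discrete, the selected optimum can only be as good as the best listed combination, which is relevant to the Salinas result discussed with Table 8.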

4.3. Experimental Results

For further detail on our data sets, Table 1, Table 2 and Table 3 list the ground-truth classes and the number of samples in each class for Pavia University, Salinas, and KSC, respectively.

Table 1.

Ground-truth classes and the number of samples per class for Pavia University.

Table 2.

Ground-truth classes and the number of samples per class for Salinas.

Table 3.

Ground-truth classes and the number of samples per class for KSC.

First, we randomly choose 20 samples per class as the training set and use the remaining samples as the testing set. We then learn a projection matrix from the training set and use it to reduce the dimensionality of the testing set. The reduced dimensionality is fixed at 30, at which all compared DR methods reach a relatively stable accuracy in our experiments. Finally, the NN classifier is used for classification, and the process is repeated 10 times to obtain the mean classification accuracy and its standard deviation. The experimental results are presented below in tables and figures, together with discussion.
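The evaluation step, in which a projection learned on the training set is applied to both sets before 1-NN classification, can be sketched as below. The function name is ours, `P` stands for whichever learned $m \times d$ projection is being evaluated, and samples are stored as columns, as in Section 2.1.

```python
import numpy as np


def project_and_classify(P, X_train, y_train, X_test):
    """Project both sets with P (m x d) and give each test sample the label of its nearest training sample."""
    Y_train = P.T @ X_train            # d x n_train
    Y_test = P.T @ X_test              # d x n_test
    d2 = (np.sum(Y_test**2, axis=0)[:, None]
          + np.sum(Y_train**2, axis=0)[None, :]
          - 2.0 * Y_test.T @ Y_train)  # squared distances, n_test x n_train
    return np.asarray(y_train)[np.argmin(d2, axis=1)]
```

The RAW baseline corresponds to calling the same function with `P = np.eye(m)`, i.e., classifying without DR.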

The experimental results for the three hyperspectral data sets are listed in Table 4, Table 5 and Table 6, where bold values indicate the best performance among all compared DR methods. The optimal parameters for SDHE are for Pavia University, for Salinas, and for KSC.

Table 4.

Classification accuracy (%) with 30 reduced dimensions for Pavia University, using 20 training samples per class.

Table 5.

Classification accuracy (%) with 30 reduced dimensions for Salinas, using 20 training samples per class.

Table 6.

Classification accuracy (%) with 30 reduced dimensions for KSC, using 20 training samples per class.

As listed in Table 4, Table 5 and Table 6, SDHE achieves noticeably higher OA, AA, and KC than the other DR methods. Note that BH and SH, like SDHE, are hypergraph embedding DR methods, but they perform comparatively poorly; we attribute this to their ignoring sample distribution information and relying on Euclidean distance, which cannot reveal intrinsic similarity. Moreover, the results of RAW, NWFE, and PCA are very similar, which suggests that the feature spaces obtained by NWFE or PCA do not improve classification accuracy, although they reduce the redundancy of high-dimensional data and make data processing more efficient; nevertheless, they still outperform the remaining DR methods on KSC. On Salinas, all compared DR methods except SDHE achieve very similar classification accuracy.

For individual classes, SDHE outperformed the other DR methods in 6 of 9 classes for Pavia University, 5 of 16 classes for Salinas, and 11 of 13 classes for KSC. Notably, SDHE was clearly superior in the classes with comparatively low classification accuracy, such as the 1st, 2nd and 3rd classes in Pavia University, the 8th and 15th classes in Salinas, and the 4th, 5th and 6th classes in KSC. Although SDHE was inferior to other methods in several classes, the accuracy gaps in those classes were narrow.

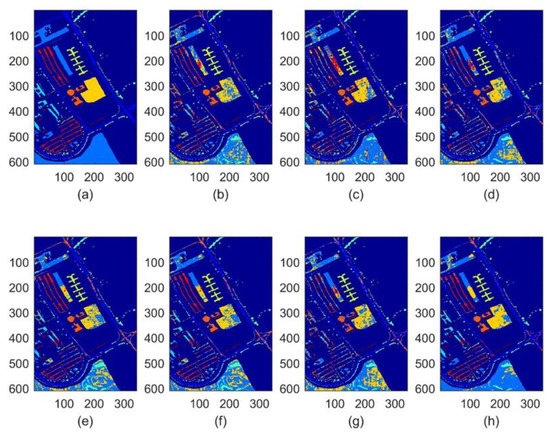

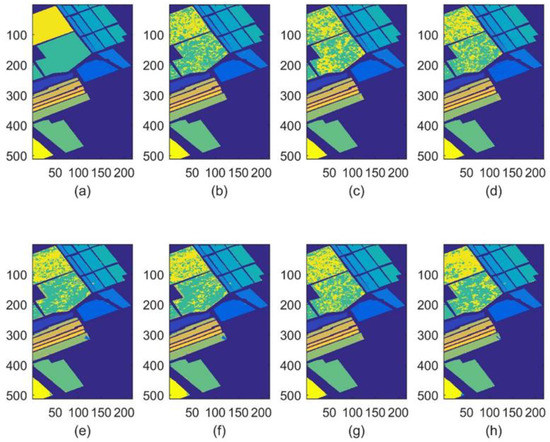

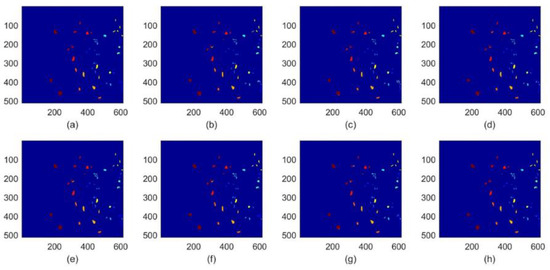

To present the classification results of the different DR methods intuitively, each test sample is assigned the label predicted by the NN classifier after the corresponding DR method is applied, and the results are shown as classification maps in Figure 4, Figure 5 and Figure 6. In each figure, subfigure (a) shows the ground truth of the original hyperspectral data set, and subfigures (b)–(h) show the results of BH, LFDA, LPP, NWFE, PCA, SH and the proposed SDHE, respectively. Higher classification accuracy corresponds to fewer misclassified samples in the corresponding subfigure, and key regions are highlighted by white circles in subfigure (h) of Figure 4 and Figure 5. SDHE visibly produces fewer misclassified samples than the other methods.

Figure 4.

Classification maps for Pavia University. (a) Ground truth, (b) BH, (c) LFDA, (d) LPP, (e) NWFE, (f) PCA, (g) SH, (h) the proposed SDHE. Different colors indicate different classes.

Figure 5.

Classification maps for Salinas. (a) Ground truth, (b) BH, (c) LFDA, (d) LPP, (e) NWFE, (f) PCA, (g) SH, (h) the proposed SDHE. Different colors indicate different classes.

Figure 6.

Classification maps for KSC. (a) Ground truth, (b) BH, (c) LFDA, (d) LPP, (e) NWFE, (f) PCA, (g) SH, (h) the proposed SDHE. Different colors indicate different classes.

To study the effect of the training set size on DR effectiveness, we randomly selected 15, 20, or 25 samples per class as the training set and used the remaining samples as the testing set. The resulting OA (%) and STD for the three hyperspectral data sets are listed in Table 7, Table 8 and Table 9.

Table 7.

Classification accuracy (%) with 30 reduced dimensions for different training set sizes on Pavia University.

Table 8.

Classification accuracy (%) with 30 reduced dimensions for different training set sizes on Salinas.

Table 9.

Classification accuracy (%) with 30 reduced dimensions for different training set sizes on KSC.

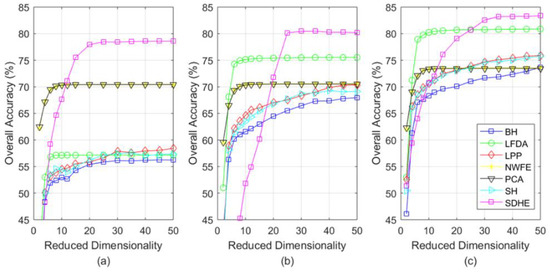

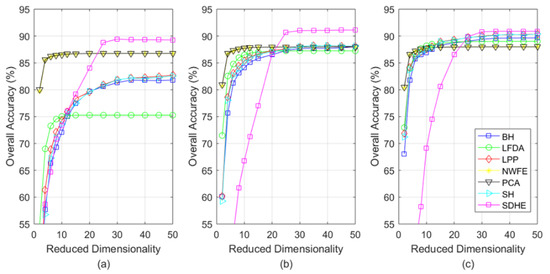

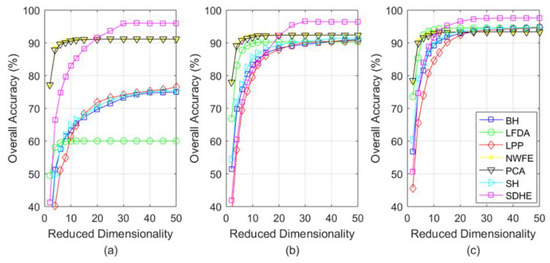

To examine the influence of the reduced dimensionality on classification accuracy, Figure 7, Figure 8 and Figure 9 plot the OA of the different DR methods against the reduced dimensionality, using the same training sets as in Table 7, Table 8 and Table 9. In each figure, subfigures (a)–(c) correspond to training sets of 15, 20, and 25 samples per class, respectively.

Figure 7.

OA (%) versus reduced dimensionality for Pavia University. (a) Training set of 15 samples per class; (b) training set of 20 samples per class; (c) training set of 25 samples per class.

Figure 8.

OA (%) versus reduced dimensionality for Salinas. (a) Training set of 15 samples per class; (b) training set of 20 samples per class; (c) training set of 25 samples per class.

Figure 9.

OA (%) versus reduced dimensionality for KSC. (a) Training set of 15 samples per class; (b) training set of 20 samples per class; (c) training set of 25 samples per class.

According to Table 7, Table 8 and Table 9, SDHE performs best among all compared DR methods for every training set size from 15 to 25 samples per class, and the smaller the training set, the larger its advantage over the other DR methods. Note that with 15 training samples per class, LFDA not only has a poor OA but also a much higher STD, which indicates that LFDA is sensitive to small training sets, likely because the local within-class scatter matrix becomes singular or ill-conditioned. However, the classification accuracy of LFDA increases rapidly as the training set grows. As listed in Table 8, the mean OA on Salinas decreases when the training set grows from 20 to 25 samples per class; we attribute this to the discreteness of the candidate parameter values, which limits the optimal accuracy the model can achieve, so this decrease is not unexpected.

According to Figure 7, Figure 8 and Figure 9, SDHE still outperforms the other DR methods for all training set sizes. As the reduced dimensionality increases, the classification accuracy rises rapidly at first and then levels off, which supports analyzing the results at 30 dimensions above. The advantage of SDHE is again more pronounced for smaller training sets, which we attribute to the hypergraph and the similarity distance helping to mine more hidden information. Empirically, SDHE shows a remarkable advantage when the reduced dimensionality exceeds 15.

5. Conclusions

The three main contributions of our work are as follows:

- A novel similarity distance is proposed via hypergraph construction. Compared with the Euclidean distance, it makes better use of the structure and distribution information of samples, because it considers not only the adjacency between pairs of samples but also the high-order mutual affinity among samples.

- The proposed similarity distance is employed in the DR objective, i.e., SDHE seeks a projection that preserves the similarity distance in a low-dimensional space. In this way, the structure and distribution information captured by the similarity distance is inherited by the transformed space.

- When applied to the classification of three different hyperspectral images, SDHE performs more effectively than the compared methods, especially when the training set is comparatively small. As shown in Table 7, Table 8 and Table 9, our method improves OA, AA and KC by at least 2% on average across the data sets.
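For reference, the three metrics named above are all computed from the confusion matrix of the test predictions. The sketch below uses the standard definitions: OA is the fraction of correctly classified test samples, AA is the mean of the per-class accuracies, and the kappa coefficient compares OA with the agreement p_e expected by chance, kappa = (OA - p_e) / (1 - p_e). The function name and the toy confusion matrix are illustrative only; multiplying by 100 gives the percentages reported in the tables.

```python
import numpy as np


def oa_aa_kappa(confusion):
    """Overall accuracy, average accuracy and Cohen's kappa from a confusion matrix.

    confusion[i, j] = number of test samples of true class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    oa = np.trace(confusion) / total                          # fraction correct
    aa = np.mean(np.diag(confusion) / confusion.sum(axis=1))  # mean per-class accuracy
    # Chance agreement: sum over classes of (true-class share) * (predicted-class share).
    p_e = (confusion.sum(axis=1) * confusion.sum(axis=0)).sum() / total**2
    kappa = (oa - p_e) / (1.0 - p_e)
    return oa, aa, kappa


cm = np.array([[45, 5, 0],
               [10, 80, 10],
               [0, 0, 50]])
oa, aa, kappa = oa_aa_kappa(cm)
print(f"OA={oa:.4f}, AA={aa:.4f}, kappa={kappa:.4f}")  # OA=0.8750, AA=0.9000, kappa=0.8058
```

Because AA weights every class equally, it can differ noticeably from OA when class sizes are unbalanced, as in the toy matrix above.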

Furthermore, our work uses a graph as a tool to mine the intrinsic geometric information of vector data. This differs from learning on graph data, which is itself a kind of structured data; for such data, there are many ways to reduce dimensionality on the graph, such as weight pruning, vertex pruning and joint weight and vertex pruning [33]. In addition, unlike graph data, in which each sample is a structure, each input sample in our setting is a vector. If the inputs were tensors, tensor decompositions would be suitable [34]. Compared with neural networks [35], which often require large-scale computation, our method resembles a single-layer neural network with a special objective function, so it can run effectively on lightweight computing resources.

In summary, we propose a similarity distance-based hypergraph embedding method (SDHE) for unsupervised dimensionality reduction. First, hypergraph embedding is employed to discover the high-order affinity among samples. Then, the affiliation between vertices and hyperedges is used to construct a similarity matrix that encodes the local distribution information of samples. Finally, based on the hypergraph embedding and the similarity matrix, a novel similarity distance is proposed as a substitute for the Euclidean distance, which better reflects the complex geometric structure of the data. Experimental results on three hyperspectral image data sets demonstrate that SDHE performs more effectively than other popular DR methods. In future work, we plan to extend the similarity distance to semi-supervised learning, which can combine discriminant analysis with structure and distribution information, and hope to contribute to remote sensing applications in climate modelling.
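To make the pipeline summarized above more concrete, the sketch below shows a generic linear hypergraph embedding. It is not the SDHE formulation: the paper's similarity distance and exact objective are defined in its method section and are not reproduced here. Under hypothetical choices (each sample and its k nearest neighbors form one hyperedge, all hyperedge weights equal 1, and a small ridge term), the incidence matrix H records the vertex-hyperedge affiliation, the normalized matrix Theta built from H plays the role of a similarity matrix, and the projection is taken from the smallest generalized eigenvectors of a hypergraph Laplacian objective, in the style of locality preserving projections. The function names knn_hyperedges and hypergraph_projection are illustrative.

```python
import numpy as np


def knn_hyperedges(X, k):
    """Incidence matrix H (n x n): hyperedge j holds sample j and its k nearest neighbors."""
    n = X.shape[0]
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)  # pairwise squared distances
    H = np.zeros((n, n))
    for j in range(n):
        members = np.argsort(d2[j])[: k + 1]  # sample j itself comes first (distance 0)
        H[members, j] = 1.0
    return H


def hypergraph_projection(X, k=5, dim=2, ridge=1e-6):
    """Hypothetical linear hypergraph embedding returning a projection P of shape (d, dim)."""
    H = knn_hyperedges(X, k)
    w = np.ones(H.shape[1])          # assumption: unit hyperedge weights
    Dv = H @ w                       # vertex degrees (>= 1, every sample is in its own hyperedge)
    De = H.sum(axis=0)               # hyperedge degrees (k + 1 each)
    Dv_isqrt = 1.0 / np.sqrt(Dv)
    # Theta = Dv^{-1/2} H W De^{-1} H^T Dv^{-1/2}: similarity from vertex-hyperedge affiliation
    Theta = (Dv_isqrt[:, None] * H) @ np.diag(w / De) @ (H.T * Dv_isqrt[None, :])
    L = np.eye(X.shape[0]) - Theta   # normalized hypergraph Laplacian
    Xc = X - X.mean(axis=0)
    A = Xc.T @ L @ Xc                # penalizes projections that separate hyperedge members
    B = Xc.T @ Xc + ridge * np.eye(X.shape[1])  # scale constraint; ridge keeps B positive definite
    # Solve A p = lambda B p via Cholesky (B = C C^T) to stay within NumPy.
    C_inv = np.linalg.inv(np.linalg.cholesky(B))
    _, U = np.linalg.eigh(C_inv @ A @ C_inv.T)  # eigenvalues in ascending order
    return C_inv.T @ U[:, :dim]      # smallest-eigenvalue directions, satisfying P^T B P = I


rng = np.random.default_rng(0)
X = rng.normal(size=(60, 10))        # 60 hypothetical samples with 10 features
P = hypergraph_projection(X, k=5, dim=3)
Y = (X - X.mean(axis=0)) @ P         # 60 x 3 low-dimensional embedding
print(Y.shape)  # (60, 3)
```

The learned map is a plain matrix product Y = Xc P, which is the sense in which such a method behaves like a single linear layer; the n x n pairwise-distance and incidence matrices limit this sketch to modest sample counts.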

Author Contributions

Methodology, S.F.; writing-original draft preparation, W.Q.; writing-review and editing, X.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Special Project for the Introduced Talents Team of Southern Marine Science and Engineering Guangdong Laboratory (Guangzhou) (GML2019ZD0603) and the Chinese Academy of Sciences (No. E1YD5906).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Tan, K.; Wang, X.; Zhu, J.; Hu, J.; Li, J. A novel active learning approach for the classification of hyperspectral imagery using quasi-Newton multinomial logistic regression. Int. J. Remote Sens. 2018, 39, 3029–3054.

2. Zhong, Z.; Li, J.; Luo, Z.; Chapman, M. Spectral–spatial residual network for hyperspectral image classification: A 3-D deep learning framework. IEEE Trans. Geosci. Remote Sens. 2017, 56, 847–858.

3. Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

4. Chang, C.-I. Hyperspectral Data Exploitation: Theory and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2007.

5. Yu, C.; Lee, L.-C.; Chang, C.-I.; Xue, B.; Song, M.; Chen, J. Band-specified virtual dimensionality for band selection: An orthogonal subspace projection approach. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2822–2832.

6. Wang, Q.; Meng, Z.; Li, X. Locality adaptive discriminant analysis for spectral–spatial classification of hyperspectral images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2077–2081.

7. Fan, Z.; Xu, Y.; Zuo, W.; Yang, J.; Tang, J.; Lai, Z.; Zhang, D. Modified principal component analysis: An integration of multiple similarity subspace models. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 1538–1552.

8. Kuo, B.-C.; Li, C.-H.; Yang, J.-M. Kernel nonparametric weighted feature extraction for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1139–1155.

9. Sugiyama, M. Dimensionality reduction of multimodal labeled data by local fisher discriminant analysis. J. Mach. Learn. Res. 2007, 8, 1027–1061.

10. Zhong, Z.; Fan, B.; Duan, J.; Wang, L.; Ding, K.; Xiang, S.; Pan, C. Discriminant tensor spectral–spatial feature extraction for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2014, 12, 1028–1032.

11. Wang, R.; Nie, F.; Hong, R.; Chang, X.; Yang, X.; Yu, W. Fast and orthogonal locality preserving projections for dimensionality reduction. IEEE Trans. Image Process. 2017, 26, 5019–5030.

12. Jolliffe, I.T.; Cadima, J. Principal component analysis: A review and recent developments. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2016, 374, 20150202.

13. Wang, Q.; Lin, J.; Yuan, Y. Salient band selection for hyperspectral image classification via manifold ranking. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1279–1289.

14. Belkin, M.; Niyogi, P. Laplacian eigenmaps for dimensionality reduction and data representation. Neural Comput. 2003, 15, 1373–1396.

15. Roweis, S.T.; Saul, L.K. Nonlinear dimensionality reduction by locally linear embedding. Science 2000, 290, 2323–2326.

16. Yan, S.; Xu, D.; Zhang, B.; Zhang, H.-J. Graph embedding: A general framework for dimensionality reduction. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 830–837.

17. He, X.; Cai, D.; Yan, S.; Zhang, H.-J. Neighborhood preserving embedding. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1, Beijing, China, 17–21 October 2005; pp. 1208–1213.

18. Zhong, F.; Zhang, J.; Li, D. Discriminant locality preserving projections based on L1-norm maximization. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 2065–2074.

19. Soldera, J.; Behaine, C.A.R.; Scharcanski, J. Customized orthogonal locality preserving projections with soft-margin maximization for face recognition. IEEE Trans. Instrum. Meas. 2015, 64, 2417–2426.

20. Goyal, P.; Ferrara, E. Graph embedding techniques, applications, and performance: A survey. Knowl.-Based Syst. 2018, 151, 78–94.

21. Yu, J.; Tao, D.; Wang, M. Adaptive hypergraph learning and its application in image classification. IEEE Trans. Image Process. 2012, 21, 3262–3272.

22. Sun, Y.; Wang, S.; Liu, Q.; Hang, R.; Liu, G. Hypergraph embedding for spatial-spectral joint feature extraction in hyperspectral images. Remote Sens. 2017, 9, 506.

23. Du, W.; Qiang, W.; Lv, M.; Hou, Q.; Zhen, L.; Jing, L. Semi-supervised dimension reduction based on hypergraph embedding for hyperspectral images. Int. J. Remote Sens. 2018, 39, 1696–1712.

24. Xiao, G.; Wang, H.; Lai, T.; Suter, D. Hypergraph modelling for geometric model fitting. Pattern Recognit. 2016, 60, 748–760.

25. Armanfard, N.; Reilly, J.P.; Komeili, M. Local feature selection for data classification. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 1217–1227.

26. Zhang, Z.; Bai, L.; Liang, Y.; Hancock, E. Joint hypergraph learning and sparse regression for feature selection. Pattern Recognit. 2017, 63, 291–309.

27. Tenenbaum, J.B.; Silva, V.D.; Langford, J.C. A global geometric framework for nonlinear dimensionality reduction. Science 2000, 290, 2319–2323.

28. Zhang, L.; Gao, Y.; Hong, C.; Feng, Y.; Zhu, J.; Cai, D. Feature correlation hypergraph: Exploiting high-order potentials for multimodal recognition. IEEE Trans. Cybern. 2013, 44, 1408–1419.

29. Du, D.; Qi, H.; Wen, L.; Tian, Q.; Huang, Q.; Lyu, S. Geometric hypergraph learning for visual tracking. IEEE Trans. Cybern. 2016, 47, 4182–4195.

30. Feng, F.; Li, W.; Du, Q.; Zhang, B. Dimensionality reduction of hyperspectral image with graph-based discriminant analysis considering spectral similarity. Remote Sens. 2017, 9, 323.

31. Camps-Valls, G.; Gomez-Chova, L.; Muñoz-Marí, J.; Vila-Francés, J.; Calpe-Maravilla, J. Composite kernels for hyperspectral image classification. IEEE Geosci. Remote Sens. Lett. 2006, 3, 93–97.

32. Yuan, H.; Tang, Y.Y. Learning with hypergraph for hyperspectral image feature extraction. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1695–1699.

33. Stanković, L.; Mandic, D.; Daković, M.; Brajović, M.; Scalzo, B.; Li, S.; Constantinides, A.G. Data analytics on graphs Part I: Graphs and spectra on graphs. Found. Trends® Mach. Learn. 2020, 13, 1–157.

34. Cichocki, A.; Mandic, D.; De Lathauwer, L.; Zhou, G.; Zhao, Q.; Caiafa, C.; Phan, H.A. Tensor decompositions for signal processing applications: From two-way to multiway component analysis. IEEE Signal Process. Mag. 2015, 32, 145–163.

35. Stanković, L.; Mandic, D.; Daković, M.; Brajović, M.; Scalzo, B.; Li, S.; Constantinides, A.G. Data analytics on graphs part III: Machine learning on graphs, from graph topology to applications. Found. Trends® Mach. Learn. 2020, 13, 332–530.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).