Abstract

Physics-guided machine learning (PGML) methods are emerging as valuable tools for modelling the constitutive relations of solids due to their ability to integrate both data and physical knowledge. While various PGML approaches have successfully modeled time-independent elasticity and plasticity, viscoelasticity remains less addressed due to its dependence on both time and loading paths. Moreover, many existing methods require large datasets from experiments or physics-based simulations to effectively predict constitutive relations, and they may struggle to model viscoelasticity accurately when experimental data are scarce. This paper aims to develop a physics-guided recurrent neural network (RNN) model to predict the viscoelastic behavior of solids at large deformations with limited experimental data. The proposed model, based on a combination of gated recurrent units (GRU) and feedforward neural networks (FNN), utilizes both time and stretch (or strain) sequences as inputs, allowing it to predict stress dependent on time and loading paths. Additionally, the paper introduces a physics-guided initialization approach for GRU–FNN parameters, using numerical stress–stretch data from the generalized Maxwell model for viscoelastic VHB polymers. This initialization is performed prior to training with experimental data, helping to overcome challenges associated with data scarcity.

1. Introduction

Machine learning has made significant strides in various fields of science and engineering, driven by the proliferation of digital data, increasing computing power, and advanced algorithms. It has achieved notable success in applications such as speech recognition [1], image classification [2], and cognitive science [3]. Recently, machine learning has emerged as a promising tool for predicting deformation patterns [4,5] by modeling the mechanical constitutive behavior of solids, particularly the relationship between mechanical stress and strain. However, modeling both time and history-dependent nonlinear constitutive relations such as viscoelasticity presents challenges for two reasons. First, many state-of-the-art machine learning techniques (e.g., feedforward/convolutional/recurrent neural networks, FNN/CNN/RNN) [6,7,8] require large datasets and often lack robustness, failing to guarantee convergence when only limited experimental stress–strain data are available from constitutive experiments, such as uniaxial tensile or bending tests. Second, modelling time and history-dependent nonlinear stress–strain relations can be difficult for many machine learning techniques, including the popular FNN, due to their limited capacity to handle sequential information. Therefore, advancing machine learning approaches to effectively model viscoelasticity in solids at large deformation is crucial for furthering the study of solid mechanics problems.

Physics-guided machine learning (PGML) methods, which integrate established physical principles with data-driven approaches, have proven beneficial in solid mechanics by simultaneously incorporating data information and physical knowledge [9,10,11]. These studies can be broadly classified into three categories: (i) discovering constitutive equations from data [12,13,14,15,16,17]; (ii) solving governing equations [18,19,20,21,22]; (iii) predicting data-driven constitutive relations [15,23,24,25,26,27,28]. Here, we focus on elaborating the third category. To predict data-driven constitutive relations, significant efforts have been made using deep neural networks, including FNN, temporal convolutional network (TCN), and RNN, among others. For example, Linka et al. [15] proposed a data-driven constitutive neural network model for predicting the mechanical constitutive behavior of hyperelastic materials. This model integrates physical laws and the symmetry of mechanical properties into the network structure, enabling the simultaneous use of information from three sources: stress–strain data, theoretical knowledge from materials science, and additional data such as microstructural or processing information; Danoun et al. [23] developed a thermodynamically consistent RNN model to simulate the mechanical response of elastoplastic materials under multi-axial and non-proportional loading conditions. Their work demonstrates that incorporating thermodynamic consistency can significantly enhance the predictive capabilities of such surrogate models; Wang et al. [24] developed a TCN model for materials with an ultra-long-history-dependent stress–strain relation. Their TCN model outperforms RNN models, including Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) models, in terms of both performance and speed for various sequential tasks. Abdolazizi et al. 
[28] proposed viscoelastic Constitutive Artificial Neural Networks (vCANNs), a novel physics-informed machine learning framework for anisotropic nonlinear viscoelasticity at finite strains. This approach is based on generalized Maxwell models, enhanced with neural networks to capture nonlinear strain- and strain rate-dependent properties. However, most of these models require a moderate to large amount of labeled data from experiments or physics-based simulations to accurately learn time-independent constitutive relations, such as those for path-independent elastic materials and path-dependent plastic materials [26,27]. They often face challenges in modelling the viscoelasticity of solids, which depends on both time and loading paths, especially when experimental data are scarce. Additionally, incorporating physical knowledge of viscoelasticity, such as viscous dissipation energy, directly into the training process is challenging. This difficulty arises because instantaneous viscous deformation and its conjugated driving force, two key components for calculating viscous dissipation energy, are difficult to obtain through experiments.

Therefore, this paper aims to develop a physics-guided machine learning model for predicting the viscoelasticity of solids at large deformation with scarce experimental data. First, we propose a surrogate model of viscoelasticity based on GRU and FNN (GRU–FNN). This model inputs both time and stretch (or strain) sequences, enabling it to predict stress based on time and loading path. Second, we implement a physics-guided initialization for the GRU–FNN parameters by training on numerical stress–stretch data derived from the generalized Maxwell model for viscoelastic solids under large deformations. This approach allows the model to predict the viscoelastic behavior of solids even with scarce experimental stress–stretch data. Unlike existing data-driven models that use residuals of the governing equations or variational forms as penalizing terms to restrict the solution space, our strategy effectively avoids the need for direct measurements of viscous deformation.

The remainder of this paper is organized as follows. In Section 2, a continuum theoretical framework for the viscoelasticity of solids at large deformation is given, and the constitutive equations, including the state and evolution equations, are derived. In Section 3, a GRU–FNN model for viscoelasticity is established. In Section 4, the uniaxial viscoelasticity of the commercially available dielectric polymer VHB4905 is modelled. Finally, conclusions are given in Section 5.

2. Thermodynamic Formulation of Constitutive Laws for Viscoelastic Solids at Large Deformation

Consider a solid $\Omega_0$ bounded by the surface $\partial\Omega_0$, defined in a fixed reference configuration, which deforms into $\Omega$ with the surface $\partial\Omega$. The deformation gradient $\mathbf{F}$ is then given by

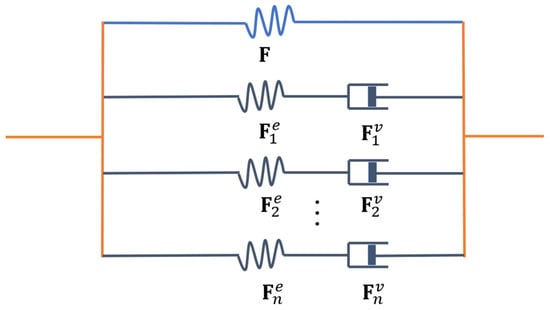

Here, $\boldsymbol{\chi}$ is a function that maps an arbitrary material point $\mathbf{X}$ inside $\Omega_0$ into a spatial point $\mathbf{x}$ inside $\Omega$, and $\nabla_{\mathbf{X}}$ represents the gradient operator with respect to the coordinates $\mathbf{X}$. To describe the viscoelasticity of solids under large deformation, a generalized Maxwell model [29,30,31,32,33] is employed, as shown in Figure 1. In this model, the solid is represented by an equilibrium spring, characterized by the deformation gradient $\mathbf{F}$, along with $n$ parallel Maxwell elements (each consisting of a spring and a dashpot in series), characterized by the elastic deformation gradient $\mathbf{F}_i^{e}$ and the viscous deformation gradient $\mathbf{F}_i^{v}$, leading to the following relation:

$$\mathbf{F} = \mathbf{F}_i^{e}\,\mathbf{F}_i^{v}, \quad 1 \le i \le n. \qquad (2)$$

Figure 1.

The generalized Maxwell model for solids under large deformation. It is assumed to be equivalent to an equilibrium spring with a deformation gradient $\mathbf{F}$ and $n$ parallel Maxwell elements, each characterized by an elastic deformation gradient $\mathbf{F}_i^{e}$ and a viscous deformation gradient $\mathbf{F}_i^{v}$ (1 ≤ i ≤ n).

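Accordingly, the right Cauchy–Green deformation tensors, $\mathbf{C} = \mathbf{F}^{\mathrm{T}}\mathbf{F}$ and $\mathbf{C}_i^{e} = (\mathbf{F}_i^{e})^{\mathrm{T}}\mathbf{F}_i^{e}$, where the superscript $\mathrm{T}$ denotes the transpose, are used to measure the deformation of the equilibrium spring and the elastic deformation of the Maxwell elements, respectively.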

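Neglecting inertial effects, the balance laws of force and moment in the current configuration are given by

$$\nabla \cdot \boldsymbol{\sigma} + \mathbf{b} = \mathbf{0}, \quad \boldsymbol{\sigma} = \boldsymbol{\sigma}^{\mathrm{T}}, \qquad (3)$$

where $\boldsymbol{\sigma}$ is the Cauchy stress tensor and $\mathbf{b}$ is the body force per unit volume in $\Omega$, which can be expressed in the reference configuration as

$$\nabla_{\mathbf{X}} \cdot \mathbf{P} + \mathbf{B} = \mathbf{0}, \quad \mathbf{P}\mathbf{F}^{\mathrm{T}} = \mathbf{F}\mathbf{P}^{\mathrm{T}}, \qquad (4)$$

where $\mathbf{P}$ denotes the first P–K stress tensor and $\mathbf{B}$ denotes the body force per unit volume in $\Omega_0$. Let $J$ denote the determinant of $\mathbf{F}$, and we have the relations $\mathbf{P} = J\boldsymbol{\sigma}\mathbf{F}^{-\mathrm{T}}$ and $\mathbf{B} = J\mathbf{b}$.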

Let $u$ and $r$ represent the internal energy density and the heat source per unit volume in $\Omega$, respectively, and $\mathbf{q}$ denote the heat flux per unit area on $\partial\Omega$. The energy balance law in the current configuration is then expressed as

whose corresponding form in the reference configuration is

where $\mathrm{D}/\mathrm{D}t$, or equivalently a superposed dot, represents the material time derivative; $U$ and $R$ denote the internal energy density and the heat source per unit volume in $\Omega_0$, respectively, while $\mathbf{Q}$ represents the heat flux per unit area on $\partial\Omega_0$. The relationships $U = Ju$, $R = Jr$, and $\mathbf{Q} = J\mathbf{F}^{-1}\mathbf{q}$ hold for the above two equations. The first term on the right-hand side represents the mechanical work, while the second and third terms account for the energy from heat flow across the surface and the heat source inside the body, respectively.

Let $s$ denote the entropy per unit volume in $\Omega$ and $T$ the absolute temperature. The entropy inequality in the current configuration is expressed locally as

which can also be written in the reference configuration as

where $S$ is the entropy per unit volume in $\Omega_0$, related to $s$ by $S = Js$.

Introducing the Helmholtz free energy density $\psi = u - Ts$ and considering the energy balance (5), the inequality (7) becomes

Similarly, by introducing the Helmholtz free energy density $\Psi = U - TS$, where $\Psi = J\psi$, and considering the energy balance (6), the inequality (8) becomes

This imposes the thermodynamic constraint on solids. For convenience, we will use the formulations provided in the reference configuration in the following sections.

Considering the thermo-viscoelastic effects in solids, the Helmholtz free energy density can be assumed to be a function of variables , i.e.,

whose material time derivative can be further written as

The first and second terms on the right-hand side of Equation (12) can be rewritten as

with

where the superscript ‘−1’ denotes the inverse of a tensor. Then, substitution of Equations (12) and (13) into Equation (10) yields

In the case where and are independent of and , the first two terms of the above inequality must vanish, leading to the following constitutive relations:

Thus, the inequality (15) reduces to

where $\mathbf{M}_i$ is the non-equilibrium Mandel stress tensor [34], defined as

More specifically, to satisfy the thermodynamic constraint imposed by inequality (18), the following constitutive equations are derived:

where is a second order tensor and is a fourth order tensor, both of which are positive-definite. Here, Equation (20) represents the Fourier heat conduction law, and Equation (21) describes the rheological viscous flow rule [30,34].

The Helmholtz free energy density is assumed to be additively decomposed as follows:

where $\Psi^{eq}$ and $\Psi^{neq}$ represent the equilibrium and non-equilibrium Helmholtz free energy densities, corresponding to the stretching of the single spring and the springs in the Maxwell elements, respectively. We adopt the Gent model [35] for both $\Psi^{eq}$ and $\Psi^{neq}$ to account for the strain-stiffening effect, where solids may stiffen sharply as the stretch approaches their extension limit [36], as follows:

where the symbol ‘tr’ denotes the trace of a tensor, $\mu^{eq}$ and $\mu_i^{neq}$ represent the equilibrium modulus of the single spring and the non-equilibrium modulus of the $i$th Maxwell element, respectively, and $J_{lim}^{eq}$ and $J_{lim,i}^{neq}$ denote the extension limits of the single spring and the $i$th Maxwell element spring, respectively. Substituting Equation (22) into Equations (16) and (19), we have

Next, consider a case that the elastic deformations of springs are incompressible, which generally applies to polymers [32]. Lagrange multipliers and are introduced to enforce these constraint conditions, modifying the free energy density as

where is the determinant of the elastic deformation gradient . Replacing with in Equations (16) and (19), we can rewrite and as

where is the second-order unit tensor. Furthermore, the Cauchy stress tensor can be obtained using the relations , and in Equation (28), as follows:

By employing and the multiplicative decomposition of from Equation (2), the condition of viscous incompressibility, or , where is the determinant of , can be deduced. From this, the fourth-order tensor in Equation (21) can be expressed as [32]

where is the fourth-order unit tensor and is the viscosity of the subnetwork. The relaxation time for the Maxwell element is then defined as [31].

The corresponding deformation gradient in tensile tests, without shear deformation, can be expressed as

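Here $\lambda_1$, $\lambda_2$, $\lambda_3$ are the principal stretches of the deformation gradient $\mathbf{F}$; $\lambda_{i1}^{e}$, $\lambda_{i2}^{e}$, $\lambda_{i3}^{e}$ are the principal stretches of the elastic deformation gradient $\mathbf{F}_i^{e}$; and $\lambda_{i1}^{v}$, $\lambda_{i2}^{v}$, $\lambda_{i3}^{v}$ are the principal stretches of the viscous deformation gradient $\mathbf{F}_i^{v}$. Additionally, considering the equal lateral stretches during the uniaxial tensile test and the incompressible deformation condition $\lambda_1\lambda_2\lambda_3 = 1$, we have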

with $\lambda$, $\lambda_i^{e}$, $\lambda_i^{v}$ being the principal stretches of the deformation gradients $\mathbf{F}$, $\mathbf{F}_i^{e}$, $\mathbf{F}_i^{v}$ along the tensile direction, respectively.

Let , denote the components of along the three principal directions, respectively. Substituting Equations (32) and (33) into Equation (28), we obtain

According to the boundary condition for uniaxial loading–unloading tests, we can further derive

and

Here, the effect of deformation incompressibility on the stress is considered, and the Lagrange multipliers are eliminated according to the boundary conditions.

Let , denote the components of along the three principal directions, respectively. Similarly, substituting Equations (32) and (33) into Equation (29), we obtain

Then, substitution of Equations (37), (32) and (31) into Equation (21) yields

This describes the viscous flow in solids subjected to uniaxial tension. It is important to note that the elastic incompressibility of the springs does not affect the viscous flow, as only the deviatoric stress, excluding the hydrostatic pressure, drives the viscous flow.

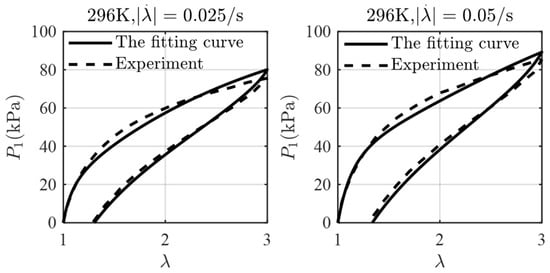

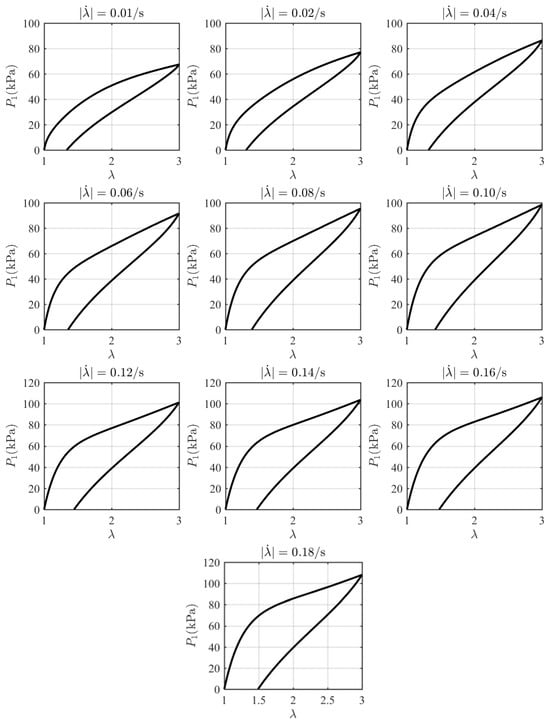

Under the initial conditions $\lambda = 1$ and $\lambda_i^{v} = 1$ at $t = 0$, the coupled Equations (36) and (38) can be solved simultaneously to predict the mechanical behavior for given material parameters. A finite difference approach is used to obtain the numerical results for the evolving stress as a function of the stretch at ten different stretching rates. The model parameters ($n = 3$ Maxwell elements, together with the associated material parameters) are obtained by fitting Equations (36) and (38) to the experimental data at 296 K. Good agreement between the experimental data and the theoretical model is shown in Figure 2. With these parameters determined, the theoretical data at different stretching rates are calculated as shown in Figure 3. The stress increases with stretch during the loading phase and decreases with stretch during the unloading phase. Each loading–unloading cycle exhibits characteristic viscoelastic behavior, with the peak stress magnitude rising as the stretching rate increases.
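The finite-difference solution described above can be sketched in a few lines. This is a minimal illustration and not the authors' implementation: it assumes a common Gent-based uniaxial form in which each incompressible spring contributes a Cauchy-type stress $\mu(\lambda^2 - \lambda^{-1})/[1 - (\lambda^2 + 2\lambda^{-1} - 3)/J_{lim}]$, the nominal stress is the total divided by $\lambda$, and each viscous stretch evolves as $\dot{\lambda}_i^{v}/\lambda_i^{v} = \text{(driving stress)}/(3\eta_i)$. The function names and all parameter values are hypothetical placeholders, not the fitted VHB values.

```python
import numpy as np

def gent_stress(mu, j_lim, lam):
    """Gent-type uniaxial stress measure of an incompressible spring at stretch lam."""
    i1 = lam**2 + 2.0 / lam  # first invariant with lateral stretches lam**-0.5
    return mu * (lam**2 - 1.0 / lam) / (1.0 - (i1 - 3.0) / j_lim)

def simulate_cycle(rate, lam_max, mu_eq, j_eq, mu_neq, j_neq, eta, n_steps=4000):
    """Forward-Euler solution of one loading-unloading cycle at a constant stretching rate.

    Returns time, stretch, and nominal (first P-K) stress arrays.
    """
    mu_neq, j_neq, eta = (np.asarray(a, dtype=float) for a in (mu_neq, j_neq, eta))
    t_load = (lam_max - 1.0) / rate
    t = np.linspace(0.0, 2.0 * t_load, n_steps + 1)
    lam = np.where(t <= t_load, 1.0 + rate * t, lam_max - rate * (t - t_load))
    dt = t[1] - t[0]
    lam_v = np.ones_like(mu_neq)  # viscous stretches start from the undeformed state
    stress = np.empty_like(t)
    for k in range(t.size):
        drive = gent_stress(mu_neq, j_neq, lam[k] / lam_v)  # one value per Maxwell element
        stress[k] = (gent_stress(mu_eq, j_eq, lam[k]) + drive.sum()) / lam[k]
        lam_v = lam_v + dt * lam_v * drive / (3.0 * eta)  # explicit viscous-flow update
    return t, lam, stress

# Hypothetical parameters (kPa and kPa*s) for n = 3 Maxwell elements
params = dict(mu_eq=20.0, j_eq=100.0, mu_neq=[40.0, 20.0, 10.0],
              j_neq=[100.0, 100.0, 100.0], eta=[40.0, 200.0, 2000.0])
_, lam_slow, p_slow = simulate_cycle(0.01, 3.0, **params)
_, lam_fast, p_fast = simulate_cycle(0.18, 3.0, **params)
```

With these placeholder values the sketch reproduces the qualitative trends noted above: a hysteresis loop in each cycle and a higher peak stress at the higher stretching rate. An explicit scheme is used only for brevity; the time step must stay small relative to the shortest relaxation time.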

Figure 2.

Fitted stress–stretch curves of the theoretical model compared with the experimental data.

Figure 3.

Theoretical stress–stretch curves at different stretching rates.

The parameters of the machine learning model introduced below are initialized by training on these theoretical results, which proves to be an effective strategy for predicting the viscoelasticity of solids with scarce experimental data. This strategy avoids directly imposing physical constraints on the training procedure, since the specific governing equations are usually uncertain and physical quantities such as the viscous deformation and its conjugated driving force are hard to obtain via experiments. In the next section, a machine learning model for predicting viscoelasticity will be introduced.

3. Machine Learning Method for Predicting Viscoelasticity

As mentioned, the FNN architecture is not well suited for handling history-dependent behaviors because there is no direct relationship between the network inputs at the current time step and the outputs from previous time steps. To address this issue, the RNN architecture is introduced as a reliable model for managing this history dependency. RNN training utilizes the Backpropagation Through Time (BPTT) algorithm, which applies backpropagation [37] to a time sequence. However, when dealing with long input sequences, RNN training may suffer from the common problems of vanishing and exploding gradients during backpropagation. This occurs because an RNN builds a very deep computational graph by repeatedly applying the same operation at each time step of a long sequence. The LSTM and GRU are specialized recurrent neural network architectures designed to tackle these vanishing and exploding gradient issues. Memory cells in LSTM and GRU networks are equipped with gated units that dynamically control the flow of information, allowing the networks to “forget” old and unnecessary information and avoid the problems associated with multiplying long sequences of numbers during temporal backpropagation. Compared to LSTMs, GRUs have the advantage of reducing the risk of overfitting and the training time, as a GRU with two gates has significantly fewer parameters than an LSTM with four gates.

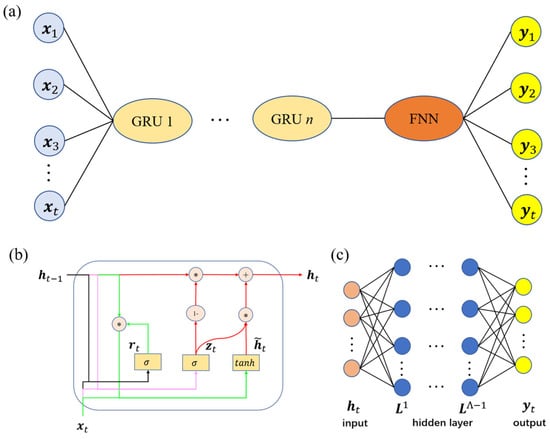

To predict the time-dependent stress of viscoelastic materials, a surrogate model based on GRU and FNN (GRU–FNN) is employed, as illustrated in Figure 4a. At each time step $t$, the GRU cell manages the information flow using a reset gate $\mathbf{r}_t$, which regulates the integration of new input with the previous memory; an update gate $\mathbf{z}_t$, which determines how much of the previous memory should be retained; and a hidden state $\mathbf{h}_t$, which transfers information from the candidate hidden state $\tilde{\mathbf{h}}_t$ forward, as depicted in Figure 4b. These two gates control the information flow between the long-term hidden state and the predictions at each time step. The FNN then processes the final hidden state of the GRU as unidirectional flow signals, moving from the input layer through hidden layers to the output layer, as shown in Figure 4c. A detailed introduction to GRU and FNN follows.

Figure 4.

(a) GRU–FNN architecture; (b) details of GRU; (c) details of FNN.

3.1. Gated Recurrent Unit

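The reset gate output is [8,38]

$$\mathbf{r}_t = \sigma\left(\mathbf{W}_r \mathbf{x}_t + \mathbf{U}_r \mathbf{h}_{t-1} + \mathbf{b}_r\right). \qquad (39)$$

Here, the weight matrix $\mathbf{W}_r$ connects the input $\mathbf{x}_t$ at a specific time $t$ to the current hidden layer within the reset gate, while the weight matrix $\mathbf{U}_r$ represents the recurrent connection between the previous and current hidden layers within the reset gate. The initial hidden state $\mathbf{h}_0$ is initialized as the null vector $\mathbf{0}$. $\mathbf{b}_r$ denotes the bias vector, and $\sigma$ refers to the activation function.

The update gate output is

$$\mathbf{z}_t = \sigma\left(\mathbf{W}_z \mathbf{x}_t + \mathbf{U}_z \mathbf{h}_{t-1} + \mathbf{b}_z\right), \qquad (40)$$

where $\mathbf{W}_z$, $\mathbf{U}_z$, and $\mathbf{b}_z$ are respectively two weight matrices and a bias vector regulating the update mechanism in this gate.

The candidate hidden state is

$$\tilde{\mathbf{h}}_t = \tanh\left(\mathbf{W}_h \mathbf{x}_t + \mathbf{U}_h\left(\mathbf{r}_t \odot \mathbf{h}_{t-1}\right) + \mathbf{b}_h\right), \qquad (41)$$

where $\mathbf{W}_h$ and $\mathbf{U}_h$ are two different weight matrices, $\mathbf{b}_h$ is a bias vector, the symbol $\odot$ denotes the Hadamard product of two vectors with identical dimensions, and $\tanh$ is the hyperbolic tangent function.

The GRU output, i.e., the current hidden state, is

$$\mathbf{h}_t = \mathbf{z}_t \odot \mathbf{h}_{t-1} + \left(\mathbf{1} - \mathbf{z}_t\right) \odot \tilde{\mathbf{h}}_t, \qquad (42)$$

where $\mathbf{1}$ is a unit vector, i.e., a vector filled with ones.

The total number of parameters in a GRU cell is related to the dimension $n_x$ of the input vector and the number $n_h$ of neurons in the hidden layers of the GRU cell, given by

$$N_{GRU} = 3\,n_h\left(n_x + n_h + 2\right). \qquad (43)$$

Note that each bias vector above is considered to consist of two different bias vectors related to the two different weight matrices, respectively.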
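As a rough illustration of the gate mechanics described in this subsection, the following NumPy sketch performs one GRU step and evaluates the parameter count. It assumes a sigmoid gate activation, a tanh candidate activation, and the convention that the update gate weights the previous hidden state, consistent with the description of the update gate as controlling how much previous memory is retained. The dictionary keys and function names are hypothetical, and each `b_*` entry stands for the sum of the two bias vectors that the count treats separately.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, p):
    """One GRU time step; p maps hypothetical names to weight matrices and bias vectors."""
    r = sigmoid(p["W_r"] @ x_t + p["U_r"] @ h_prev + p["b_r"])             # reset gate
    z = sigmoid(p["W_z"] @ x_t + p["U_z"] @ h_prev + p["b_z"])             # update gate
    h_cand = np.tanh(p["W_h"] @ x_t + p["U_h"] @ (r * h_prev) + p["b_h"])  # candidate state
    return z * h_prev + (1.0 - z) * h_cand                                 # new hidden state

def gru_param_count(n_x, n_h):
    """Parameters of one GRU layer with two bias vectors per gate: 3 * n_h * (n_x + n_h + 2)."""
    return 3 * n_h * (n_x + n_h + 2)

def run_sequence(xs, p, n_h):
    """Unroll the cell over a sequence, starting from a zero hidden state."""
    h = np.zeros(n_h)
    states = []
    for x_t in xs:
        h = gru_step(x_t, h, p)
        states.append(h)
    return np.stack(states)
```

For a two-component input (time and stretch) and 100 hidden units, `gru_param_count(2, 100)` evaluates to 31,200, and a stacked layer fed by 100 hidden units gives `gru_param_count(100, 100)`, i.e., 60,600.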

3.2. Feedforward Neural Network

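The FNN can be described by the following compositional function [39]:

$$\hat{\mathbf{y}} = \left(f^{(L)} \circ f^{(L-1)} \circ \cdots \circ f^{(1)}\right)\left(\mathbf{a}^{(0)}\right), \qquad (44)$$

where the symbol $\circ$ denotes the composition operator, the superscript $L$ represents the total number of layers of the neural network, and $f^{(l)}$ is the activation function with the following form:

$$\mathbf{a}^{(l)} = f^{(l)}\left(\mathbf{W}^{(l)} \mathbf{a}^{(l-1)} + \mathbf{b}^{(l)}\right). \qquad (45)$$

Here, the superscript $l$ denotes the layer number of the neural network, $\mathbf{a}^{(l-1)}$ the input vector from the $(l-1)$th layer (specially, $\mathbf{a}^{(0)} = \mathbf{h}_t$ and $\mathbf{a}^{(L)} = \hat{\mathbf{y}}$), and $\mathbf{W}^{(l)}$ and $\mathbf{b}^{(l)}$ respectively the weight matrix and bias vector of the $l$th layer. Note that all hidden states of the last GRU are input into the same FNN in turn for training. The total number of parameters in the FNN can be calculated as

$$N_{FNN} = \sum_{l=1}^{L} n_l\left(n_{l-1} + 1\right), \qquad (46)$$

where $n_l$ is the number of neurons of the $l$th layer.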

3.3. Training Procedure

The loss function, defined as the mean absolute error (MAE), is expressed as

$$\mathcal{L}(\boldsymbol{\theta}) = \frac{1}{N}\sum_{k=1}^{N}\left|y_k - \hat{y}_k\right|, \qquad (47)$$

where $y_k$ and $\hat{y}_k$ denote the ground-truth and predicted outputs at the $k$th point of the time sequence, $N$ is the total number of datapoints in the training dataset, and $\boldsymbol{\theta}$ represents the parameters of the neural networks. These parameters are updated by minimizing the loss function, as follows:

$$\boldsymbol{\theta}^{*} = \arg\min_{\boldsymbol{\theta}} \mathcal{L}(\boldsymbol{\theta}), \qquad (48)$$

where $\boldsymbol{\theta}^{*}$ represents the values of $\boldsymbol{\theta}$ that minimize $\mathcal{L}$. Common optimization methods for minimizing the loss function include gradient descent techniques (such as Adam) and quasi-Newton methods (such as L-BFGS). In this work, the Adam optimizer [40] is employed to update the parameters. The Backpropagation (BP) technique and the Backpropagation Through Time (BPTT) technique are used to compute the gradients of the loss function with respect to the parameters of the FNN and GRU, respectively. In the following sections, the GRU–FNN model consists of three stacked GRU layers with 100 units each and a single time-distributed dense layer with 1 neuron. This configuration, found through trial and error, balances computational cost against prediction error. The leaky rectified linear unit (LeakyReLU) is used as the activation function for the GRU, while the linear function is used for the FNN.

4. Results and Discussions

4.1. Initialization of GRU-FNN Parameters by Training Theoretical Data

The inputs and outputs are unrolled through $m$ time steps, i.e., $\mathbf{x} = (\mathbf{x}_1, \dots, \mathbf{x}_m)$ and $\mathbf{y} = (y_1, \dots, y_m)$ with $m = 100$. Let $t_k$, $\lambda_k$ and $P_k$ denote the loading time, the stretch and the first P–K stress at the $k$th time step, respectively. $t_k$ is calculated by the following formula:

where $\lambda_{\max}$ is the maximum of $\lambda_k$ during loading tests. The inputs $\mathbf{x}_k = (t_k, \lambda_k)$ and the outputs $y_k = P_k$ are used for the uniaxial loading–unloading viscoelasticity. Ten samples are generated by capturing 100 points per curve in Figure 3 and then split into training data (80% of total samples) and testing data (20% of total samples). The training data, with a batch size of 2, are used during the model’s training process, while the testing data are used to validate the model’s generalization capabilities.
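A possible way to assemble the two-channel input sequence is sketched below. The time formula itself is not reproduced here, so the sketch assumes a constant stretching rate, for which the elapsed time is $(\lambda_k - 1)/\dot{\lambda}$ during loading and $(2\lambda_{\max} - \lambda_k - 1)/\dot{\lambda}$ during unloading; this is one reading consistent with the description, not necessarily the authors' expression. The function name `make_inputs` is hypothetical.

```python
import numpy as np

def make_inputs(stretch, rate):
    """Build an (m, 2) array of (time, stretch) pairs for one loading-unloading cycle.

    Assumes the stretch rises at a constant rate to its maximum and then
    decreases at the same constant rate.
    """
    stretch = np.asarray(stretch, dtype=float)
    k_max = int(np.argmax(stretch))              # index where loading switches to unloading
    lam_max = stretch[k_max]
    k = np.arange(stretch.size)
    t_load = (stretch - 1.0) / rate
    t_unload = (2.0 * lam_max - stretch - 1.0) / rate
    time = np.where(k <= k_max, t_load, t_unload)
    return np.stack([time, stretch], axis=-1)

# Tiny example: five points of a cycle up to a stretch of 3 at a rate of 0.5/s
x = make_inputs([1.0, 2.0, 3.0, 2.0, 1.0], rate=0.5)
```

In the workflow described above, each sample would hold $m = 100$ such rows, and ten samples would be stacked into an array of shape (10, 100, 2) before the 80%/20% split.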

The GRU–FNN model is implemented in the software library Keras 2.10.0 and its information is listed in Table 1. In the output shape of layers, the first None refers to the batch, the second number the time steps and the third number the units (i.e., the dimension of the hidden state) of GRU or the dimension of the outputs of the dense layer. According to Equation (43), the numbers of parameters of GRU 1 and GRU 2 (GRU 3) layers are calculated respectively as 3 × 100 × (2 + 2 + 100) = 31,200 and 3 × 100 × (2 + 100 + 100) = 60,600. According to Equation (46), the number of parameters of the dense layer is calculated as 1 × (100 + 1) = 101.
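The architecture and parameter counts above can be checked with a short Keras sketch. This is an illustrative reconstruction rather than the authors' script: it assumes TensorFlow 2.x with the default `reset_after=True` GRU variant (two bias vectors per gate, which matches the counts quoted above), and it uses `tf.nn.leaky_relu` as a stand-in for the LeakyReLU activation, whose slope is not stated in the text. The function name `build_gru_fnn` is hypothetical.

```python
import tensorflow as tf

def build_gru_fnn(time_steps=100, n_features=2, units=100):
    """Three stacked GRU layers followed by a time-distributed dense layer with one output."""
    layers = tf.keras.layers
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(time_steps, n_features)),   # (time, stretch) per time step
        layers.GRU(units, activation=tf.nn.leaky_relu, return_sequences=True),
        layers.GRU(units, activation=tf.nn.leaky_relu, return_sequences=True),
        layers.GRU(units, activation=tf.nn.leaky_relu, return_sequences=True),
        layers.TimeDistributed(layers.Dense(1, activation="linear")),
    ])
    model.compile(optimizer="adam", loss="mae")           # Adam optimizer with MAE loss
    return model

model = build_gru_fnn()
total_params = model.count_params()  # 31,200 + 2 * 60,600 + 101
```

Calling `model.summary()` prints the per-layer output shapes and parameter counts in the same form as Table 1.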

Table 1.

Information of the GRU-FNN model.

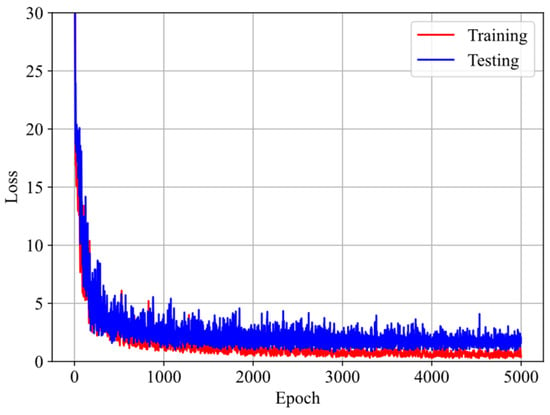

Figure 5 illustrates the evolution of loss with respect to epoch during training and testing. It is observed that the loss decreases dramatically at first and then stabilizes at a steady state. Additionally, the testing loss is slightly higher than the training loss. These observations suggest that the model does not suffer from overfitting or underfitting issues.

Figure 5.

Evolution of the loss during training and testing with respect to epochs.

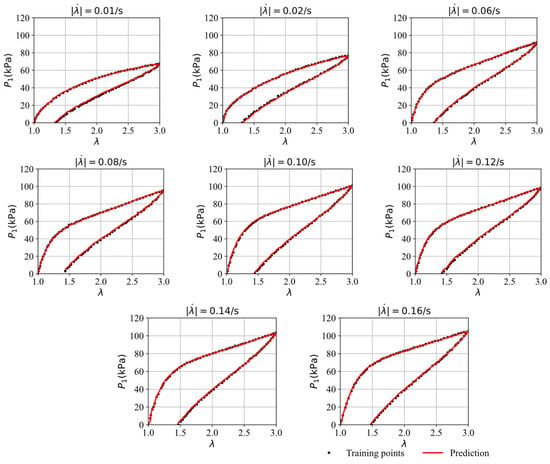

Figure 6 compares the stress–stretch curves from the training data with the model’s predictions at different stretching rates after 5000 epochs. Through trial and error, the loss value of the training data was observed to stabilize around 0.50 after approximately 5000 epochs; therefore, we trained the model for 5000 epochs. To evaluate the model’s accuracy under various conditions, the root mean square error (RMSE) is employed, defined as $\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{k=1}^{N}\left(y_k - \hat{y}_k\right)^2}$, where $y_k$ and $\hat{y}_k$ represent the ground truth and the predicted values. The RMSE quantifies the discrepancy between the predicted results and the training or testing data. RMSE values for all cases in this work are listed in Table 2. For the stretching rates of $\dot{\lambda}$ = 0.01/s, 0.02/s, 0.06/s, 0.08/s, 0.10/s, 0.12/s, 0.14/s, and 0.16/s, the corresponding RMSE values are 0.76, 0.56, 0.53, 0.71, 0.85, 0.83, 0.73, and 0.63 kPa, respectively. The predictions from the GRU–FNN model are highly consistent with the training data, and the hysteresis curves exhibit typical viscoelasticity.
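For reference, the two error measures used in this work (MAE as the training loss, RMSE as the evaluation metric) can be computed as in the following minimal NumPy sketch. The function names are illustrative, and the numbers in the example are arbitrary, not values from the paper.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error between ground-truth and predicted sequences."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))

def rmse(y_true, y_pred):
    """Root mean square error between ground-truth and predicted sequences."""
    y_true, y_pred = np.asarray(y_true, dtype=float), np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

# Arbitrary example: a single large deviation affects RMSE more strongly than MAE
example_mae = mae([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
example_rmse = rmse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0])
```

Because the RMSE squares the residuals before averaging, it penalizes isolated large errors more heavily than the MAE.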

Figure 6.

The comparison of the stress–strain curves from the training data and the model’s prediction after 5000 epochs.

Figure 7 compares the stress–stretch curves from the testing data with the model’s predictions at two different stretching rates after 5000 epochs. For the stretching rates of $\dot{\lambda}$ = 0.04/s and 0.18/s, the corresponding RMSE values are 3.41 and 1.52 kPa, respectively. The testing data, which were not used during the training process, are accurately predicted by the trained model, demonstrating the GRU–FNN model’s strong generalization capabilities in predicting viscoelastic behaviors. The parameter values (weights and biases) obtained after 5000 epochs are saved for use as initial parameters in training the GRU–FNN model on experimental data.

Figure 7.

The comparison of the stress–strain curves from testing data and model’s prediction after 5000 epochs.

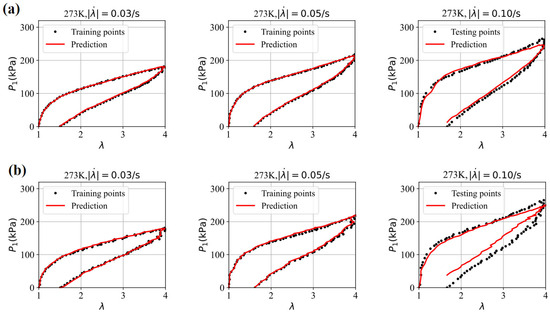

4.2. Prediction of Viscoelasticity of VHB4905 with Scarce Data from Experiments

In the thermo-viscoelastic experiments of Liao and Hossain [41], VHB4905 samples with dimensions of 100 mm × 10 mm × 0.5 mm are used for cyclic loading–unloading tests to investigate the time- and stretching rate-dependent viscoelasticity of VHB polymers. Three datasets, selected from the experimental stress–stretch curves at 273 K and three different stretching rates, i.e., 0.03/s, 0.05/s, and 0.10/s, are split into two training datasets for learning the viscoelastic behaviors and one testing dataset for checking the GRU–FNN model’s capabilities in predicting the viscoelasticity of materials with scarce training data.

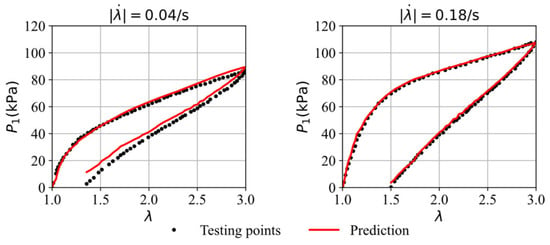

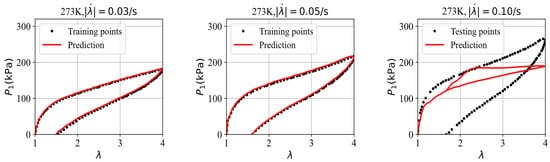

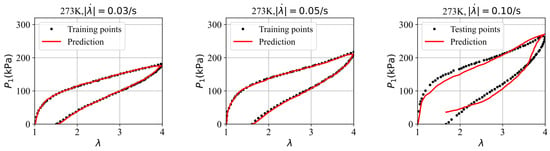

First, the three datasets are used with the GRU–FNN model initialized with default parameter values (weights and biases), and the predicted stress is obtained after 5300 epochs. Figure 8 shows the evolution of the loss with respect to epochs during training and testing. It is observed that the loss for the testing data is significantly higher than that for the training data after several epochs and fluctuates at a high level, indicating model overfitting. Figure 9 compares the stress–stretch curves from the experimental data with the model’s predictions at 273 K after 5300 epochs. For the stretching rates of $\dot{\lambda}$ = 0.03/s, 0.05/s, and 0.10/s, the corresponding RMSE values are 2.84, 3.07, and 62.49 kPa, respectively. The training data from cyclic experiments at $\dot{\lambda}$ = 0.03/s and $\dot{\lambda}$ = 0.05/s are predicted accurately. However, the testing data from cyclic experiments at $\dot{\lambda}$ = 0.10/s diverge substantially from the predicted results, further demonstrating the poor generalization capabilities of the default-initialized model when training data are scarce.

Figure 8.

Evolution of loss with respect to epochs during training and testing at 273 K.

Figure 9.

The comparison of the stress–stretch curves from the experimental data and the model’s prediction at 273 K after 5300 epochs.

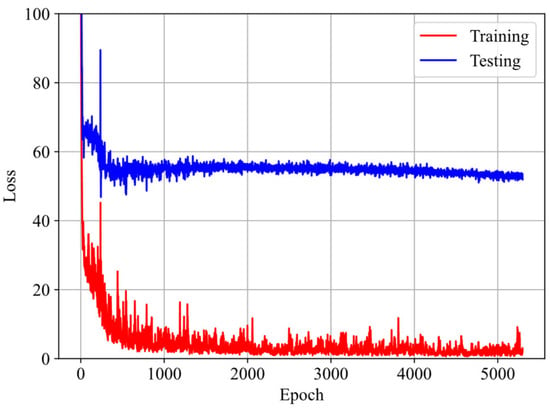

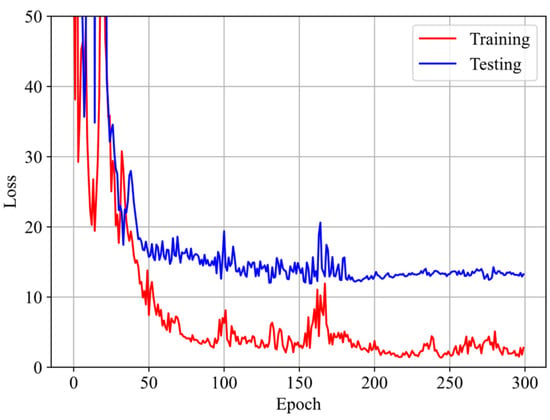

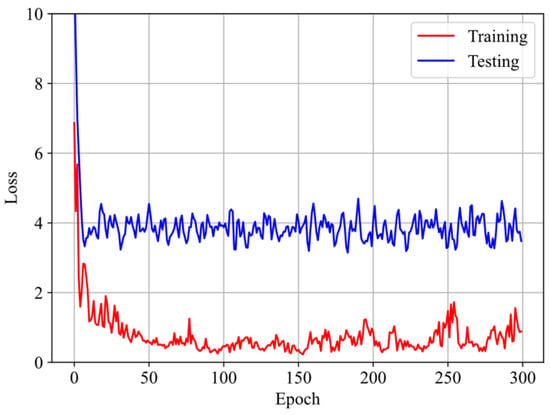

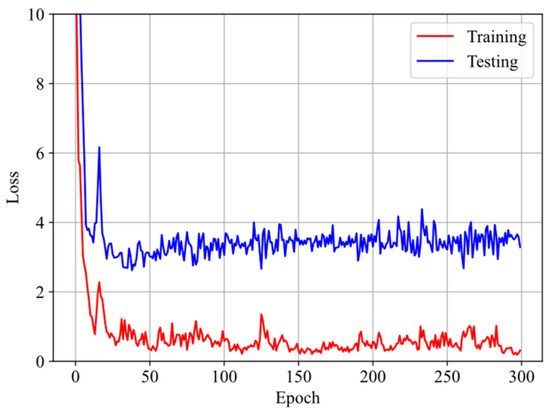

To address the overfitting issues associated with scarce data, the parameter values from training the GRU–FNN model with theoretical data are used as the initialization for training on the experimental data. Figure 10 shows the evolution of the loss with respect to epochs during both training and testing. It is evident that the gap between the loss for training and testing data has significantly narrowed compared to Figure 8. Given that the model was trained on the theoretical data for 5000 epochs, it was trained on the experimental data for 300 epochs to keep the total training duration (5300 epochs) consistent. Through trial and error, it was also observed that the loss values of the training data and testing data stabilize after approximately 300 epochs. Figure 11 compares the stress–stretch curves from the experimental data with the model’s predictions at 273 K after 300 epochs. For the stretching rates of $\dot{\lambda}$ = 0.03/s, 0.05/s, and 0.10/s, the corresponding RMSE values are 1.90, 2.62, and 15.70 kPa, respectively. The high degree of consistency between the predicted and experimental stress–stretch curves indicates that our approach has effectively alleviated the overfitting issues.
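The two-stage procedure (pre-training on theoretical data, then continuing on scarce experimental data) can be sketched as follows. This is a schematic illustration under stated assumptions, not the authors' code: the arrays are random placeholders standing in for the theoretical and experimental sequences, the network is shrunk to one GRU layer with 16 units and trained for a single epoch per stage so the example runs quickly, and the parameters are transferred in memory with `get_weights`/`set_weights` rather than through a saved file.

```python
import numpy as np
import tensorflow as tf

def build_model(time_steps=100, n_features=2, units=16):
    """Small GRU-FNN stand-in (16 units instead of 100 to keep the demo fast)."""
    layers = tf.keras.layers
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(time_steps, n_features)),
        layers.GRU(units, return_sequences=True),
        layers.TimeDistributed(layers.Dense(1)),
    ])
    model.compile(optimizer="adam", loss="mae")
    return model

rng = np.random.default_rng(0)
# Placeholder data: 8 "theoretical" curves and 2 "experimental" curves, 100 steps each
x_theory, y_theory = rng.random((8, 100, 2)), rng.random((8, 100, 1))
x_exp, y_exp = rng.random((2, 100, 2)), rng.random((2, 100, 1))

# Stage 1: pre-train on theoretical data (5000 epochs in the text, 1 epoch here)
pretrained = build_model()
pretrained.fit(x_theory, y_theory, epochs=1, batch_size=2, verbose=0)

# Stage 2: start a fresh model from the pre-trained parameters
finetuned = build_model()
finetuned.set_weights(pretrained.get_weights())
weights_match = all(np.array_equal(a, b) for a, b in
                    zip(finetuned.get_weights(), pretrained.get_weights()))

# Continue training on the scarce experimental data (300 epochs in the text, 1 epoch here)
finetuned.fit(x_exp, y_exp, epochs=1, batch_size=2, verbose=0)
prediction = finetuned.predict(x_exp, verbose=0)
```

The only difference from default training is the starting point of the optimizer: the second stage begins from parameters that already encode the model-generated loading–unloading response instead of from a random initialization.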

Figure 10.

Evolution of the loss with respect to epochs during training and testing at 273 K.

Figure 11.

The comparison of the stress–strain curves from the experimental data and the model’s prediction at 273 K.

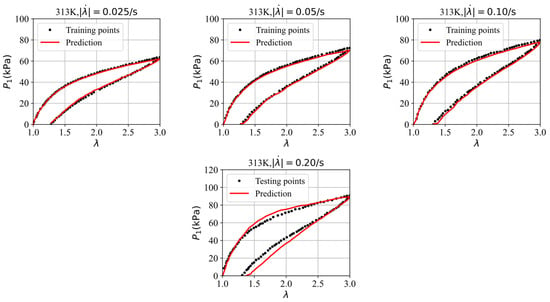

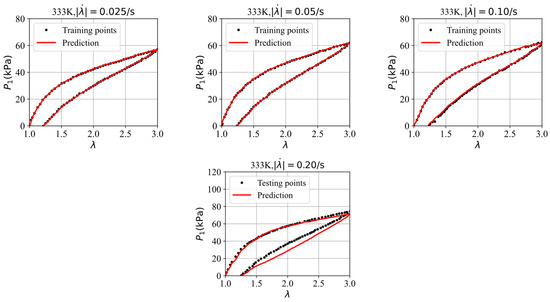

Finally, we split four datasets, selected from the stress–stretch curves at four different stretching rates, i.e., 0.025/s, 0.05/s, 0.10/s, and 0.20/s, in the experiments on VHB4905 samples with dimensions of 130 mm × 10 mm × 0.5 mm [42], into three training datasets and one testing dataset to further assess the GRU–FNN model’s capabilities in predicting the viscoelasticity of materials across different temperatures. The saved parameter values from training with theoretical data are used for initialization. Figure 12 shows the evolution of the loss with respect to epochs during training and testing at 313 K. It can be seen that the gap between the training and testing losses is smaller than that observed in Figure 10 due to the inclusion of an additional training dataset. Figure 13 compares the stress–stretch curves from the experimental data and the model’s predictions at 313 K after 300 epochs. For the stretching rates of $\dot{\lambda}$ = 0.025/s, 0.05/s, 0.10/s, and 0.20/s at 313 K, the corresponding RMSE values are 0.81, 1.32, 1.72, and 4.55 kPa, respectively. Figure 14 shows the evolution of the loss with respect to epochs during training and testing at 333 K. The evolution trend is similar to that in Figure 12. Figure 15 compares the stress–stretch curves from the experimental data and the model’s predictions at 333 K after 300 epochs. At 333 K, for the same stretching rates, the RMSE values are 0.24, 0.31, 0.53, and 4.27 kPa, respectively. The model accurately predicts the training data and provides reasonable predictions for the testing data, demonstrating improved generalization capabilities.

Figure 12.

Evolution of loss with respect to epochs during training and testing at 313 K.

Figure 13.

The comparison of the stress–strain curves from the experimental data and the model’s prediction at 313 K.

Figure 14.

Evolution of loss with respect to epochs during training and testing at 333 K.

Figure 15.

The comparison of the stress–strain curves from the experimental data and the model’s prediction at 333 K.

Table 2.

Statistics of RMSE values.


| Cases | Stretching Rates (/s) | RMSE Values (kPa) |
|---|---|---|
| Figure 6 | (0.01, 0.02, 0.06, 0.08, 0.10, 0.12, 0.14, 0.16) | (0.76, 0.56, 0.53, 0.71, 0.85, 0.83, 0.73, 0.63) |
| Figure 7 | (0.04, 0.18) | (3.41, 1.52) |
| Figure 9 | (0.03, 0.05, 0.10) | (2.84, 3.07, 62.49) |
| Figure 11 | (0.03, 0.05, 0.10) | (1.90, 2.62, 15.70) |
| Figure 13 | (0.025, 0.05, 0.10, 0.20) | (0.81, 1.32, 1.72, 4.55) |
| Figure 15 | (0.025, 0.05, 0.10, 0.20) | (0.24, 0.31, 0.53, 4.27) |
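For readers who wish to reproduce error metrics of the kind listed in Table 2, the following minimal sketch computes an RMSE between a measured and a predicted stress sequence. It assumes the standard root-mean-square definition evaluated point by point on sequences of equal length; the function name `rmse` and the sample stress values are hypothetical and are not taken from the experiments.

```python
import math


def rmse(measured, predicted):
    """Root-mean-square error between two equally long stress sequences (kPa)."""
    if len(measured) != len(predicted):
        raise ValueError("sequences must have the same length")
    if not measured:
        raise ValueError("sequences must be non-empty")
    squared_errors = [(m - p) ** 2 for m, p in zip(measured, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical stress values in kPa, for illustration only (not experimental data).
measured = [0.0, 10.0, 20.0, 30.0]
predicted = [0.0, 11.0, 19.0, 32.0]
error = rmse(measured, predicted)
```

Because the error is averaged over the points of one curve, the RMSE carries the same unit as the stress, which is consistent with the kPa unit used in Table 2.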

4.3. Analyzing the Sensitivity of the GRU–FNN Model

To test the sensitivity of the GRU–FNN model to noise in the input data, a random number is generated from a standard normal distribution (mean = 0, standard deviation = 1). This random number is multiplied by 0.5% or 1.0% of the original stretch value, giving a perturbation that is proportional to the magnitude of the original data, and the perturbation is then added to the original data to simulate measurement noise. Since the time sequence can be calculated from the stretch and the stretching rate, noise is applied only to the stretch sequence. The predictions for the dataset at 273 K with added noise are shown in Figure 16. The model is resilient to small amounts of noise, but the prediction error grows as the noise level increases. When the noise exceeds the difference between adjacent data points, the predicted curve loses its smoothness and may even change direction, leading to a complete failure of the prediction. The same pattern was observed in the predictions for the other two datasets at 313 K and 333 K; these curves are not shown here, but the corresponding RMSE values are listed in Table 3.
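The noise procedure described above can be sketched as follows. This is an illustrative reading rather than the exact implementation used in this work: it assumes that an independent standard normal number is drawn for every point of the stretch sequence, and the names `add_proportional_noise` and `count_reversals` as well as the sample stretch sequence are hypothetical.

```python
import random


def add_proportional_noise(stretch, level, seed=None):
    """Add zero-mean Gaussian noise scaled by `level` times each stretch value.

    `level` is a fraction, e.g. 0.005 for 0.5% and 0.010 for 1.0%.
    Assumption: one independent draw per data point.
    """
    rng = random.Random(seed)
    return [s + level * s * rng.gauss(0.0, 1.0) for s in stretch]


def count_reversals(sequence):
    """Count the adjacent pairs in which the sequence decreases."""
    return sum(1 for a, b in zip(sequence, sequence[1:]) if b < a)


# Hypothetical monotonic stretch sequence from 1.0 to 2.0 in steps of 0.01.
stretch = [1.0 + 0.01 * i for i in range(101)]
noisy_low = add_proportional_noise(stretch, 0.005, seed=0)
noisy_high = add_proportional_noise(stretch, 0.010, seed=0)
reversals_low = count_reversals(noisy_low)
reversals_high = count_reversals(noisy_high)
```

Counting reversals gives a simple check of when the perturbation becomes comparable to the spacing between adjacent points, which is the regime in which the perturbed stretch sequence is no longer monotonic.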

Figure 16.

The comparison of the stress–strain curves from the model’s prediction at 273 K and the experimental data with (a) 0.5% noise and (b) 1.0% noise.

Table 3.

Statistics of RMSE values.

5. Conclusions

In this paper, a physics-guided GRU–FNN model for predicting the viscoelasticity of solids at large deformation is proposed and validated by comparing the stress–stretch curves predicted by the model with those from uniaxial experiments on VHB4905. The major novelty of the present work lies in two aspects. First, the time- and loading-path-dependent stress can be predicted by using both time and stretch (or strain) sequences as inputs of the proposed GRU–FNN model. Second, the parameter values saved after training on theoretical data are used to initialize the GRU–FNN model before it learns the viscoelastic behavior of solids from scarce experimental data, which significantly alleviates the overfitting that arises when data are scarce. The results show that the GRU–FNN model can reduce the RMSE severalfold, significantly improving its generalization ability in scarce-data scenarios. The physics-guided initialization of parameters in this work offers a new way to incorporate physical knowledge into a machine learning model when the governing equations describing the physical phenomena in a material cannot be specified. The model can be applied in a similar manner to predict multiaxial stress–strain relations of viscoelastic solids once experimental data from multiaxial tests are available, which will be the subject of our future work. This study facilitates the rapid prediction of the nonlinear viscoelastic properties of materials and lays a theoretical foundation for future real-time monitoring and performance prediction of polymer-based devices such as electronic artificial muscles and dielectric elastomer actuators.

Author Contributions

Conceptualization, B.Q. and Z.Z.; Methodology, B.Q.; Writing—original draft, B.Q.; Writing—review & editing, Z.Z.; Supervision, Z.Z.; Funding acquisition, B.Q. and Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Guangdong Basic and Applied Basic Research Foundation (Grant No. 2023A1515111166), the Development and Reform Commission of Shenzhen (No. XMHT20220103004), the Shenzhen Natural Science Fund (No. GXWD20231130100351002), and the National Natural Science Foundation of China (Grant No. 11932005).

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Nassif, A.B.; Shahin, I.; Attili, I.; Azzeh, M.; Shaalan, K. Speech Recognition Using Deep Neural Networks: A Systematic Review. IEEE Access 2019, 7, 19143–19165.

- Zhu, Y.; Zhuang, F.; Wang, J.; Ke, G.; Chen, J.; Bian, J.; Xiong, H.; He, Q. Deep Subdomain Adaptation Network for Image Classification. IEEE Trans. Neural Networks Learn. Syst. 2021, 32, 1713–1722.

- Lake, B.M.; Salakhutdinov, R.; Tenenbaum, J.B. Human-level concept learning through probabilistic program induction. Science 2015, 350, 1332–1338.

- Wang, C.; Wang, Z.; Zhang, S.; Liu, X.; Tan, J. Reinforced quantum-behaved particle swarm-optimized neural network for cross-sectional distortion prediction of novel variable-diameter-die-formed metal bent tubes. J. Comput. Des. Eng. 2023, 10, 1060–1079.

- Yang, C.; Li, Z.; Xu, P.; Huang, H. Recognition and optimisation method of impact deformation patterns based on point cloud and deep clustering: Applied to thin-walled tubes. J. Ind. Inf. Integr. 2024, 40, 100607.

- Masi, F.; Stefanou, I.; Vannucci, P.; Maffi-Berthier, V. Thermodynamics-based Artificial Neural Networks for constitutive modeling. J. Mech. Phys. Solids 2021, 147, 104277.

- Zhang, P.; Yin, Z.-Y. A novel deep learning-based modelling strategy from image of particles to mechanical properties for granular materials with CNN and BiLSTM. Comput. Methods Appl. Mech. Eng. 2021, 382, 113858.

- Tancogne-Dejean, T.; Gorji, M.B.; Zhu, J.; Mohr, D. Recurrent neural network modeling of the large deformation of lithium-ion battery cells. Int. J. Plast. 2021, 146, 103072.

- Jiao, S.; Li, W.; Li, Z.; Gai, J.; Zou, L.; Su, Y. Hybrid physics-machine learning models for predicting rate of penetration in the Halahatang oil field, Tarim Basin. Sci. Rep. 2024, 14, 5957.

- Hu, H.; Qi, L.; Chao, X. Physics-informed Neural Networks (PINN) for computational solid mechanics: Numerical frameworks and applications. Thin-Walled Struct. 2024, 205, 112495.

- Herrmann, L.; Kollmannsberger, S. Deep learning in computational mechanics: A review. Comput. Mech. 2024, 74, 281–331.

- Linka, K.; Kuhl, E. A new family of Constitutive Artificial Neural Networks towards automated model discovery. Comput. Methods Appl. Mech. Eng. 2023, 403, 115731.

- Eggersmann, R.; Kirchdoerfer, T.; Reese, S.; Stainier, L.; Ortiz, M. Model-free data-driven inelasticity. Comput. Methods Appl. Mech. Eng. 2019, 350, 81–99.

- Linka, K.; Tepole, A.B.; Holzapfel, G.A.; Kuhl, E. Automated model discovery for skin: Discovering the best model, data, and experiment. Comput. Methods Appl. Mech. Eng. 2023, 410, 116007.

- Linka, K.; Hillgärtner, M.; Abdolazizi, K.P.; Aydin, R.C.; Itskov, M.; Cyron, C.J. Constitutive artificial neural networks: A fast and general approach to predictive data-driven constitutive modeling by deep learning. J. Comput. Phys. 2021, 429, 110010.

- Fuhg, J.N.; Bouklas, N. On physics-informed data-driven isotropic and anisotropic constitutive models through probabilistic machine learning and space-filling sampling. Comput. Methods Appl. Mech. Eng. 2022, 394, 114915.

- Eghtesad, A.; Tan, J.; Fuhg, J.N.; Bouklas, N. NN-EVP: A physics informed neural network-based elasto-viscoplastic framework for predictions of grain size-aware flow response. Int. J. Plast. 2024, 181, 104072.

- Abueidda, D.W.; Lu, Q.; Koric, S. Meshless physics-informed deep learning method for three-dimensional solid mechanics. Int. J. Numer. Methods Eng. 2021, 122, 7182–7201.

- Abueidda, D.W.; Koric, S.; Abu Al-Rub, R.; Parrott, C.M.; James, K.A.; Sobh, N.A. A deep learning energy method for hyperelasticity and viscoelasticity. Eur. J. Mech. A/Solids 2022, 95, 104639.

- Abueidda, D.W.; Koric, S.; Sobh, N.A.; Sehitoglu, H. Deep learning for plasticity and thermo-viscoplasticity. Int. J. Plast. 2021, 136, 102852.

- As’ad, F.; Avery, P.; Farhat, C. A mechanics-informed artificial neural network approach in data-driven constitutive modeling. Int. J. Numer. Methods Eng. 2022, 123, 2738–2759.

- Cen, J.; Zou, Q. Deep finite volume method for partial differential equations. J. Comput. Phys. 2024, 517, 113307.

- Danoun, A.; Prulière, E.; Chemisky, Y. Thermodynamically consistent Recurrent Neural Networks to predict non linear behaviors of dissipative materials subjected to non-proportional loading paths. Mech. Mater. 2022, 173, 104436.

- Wang, J.-J.; Wang, C.; Fan, J.-S.; Mo, Y. A deep learning framework for constitutive modeling based on temporal convolutional network. J. Comput. Phys. 2022, 449, 110784.

- Benabou, L. Development of LSTM networks for predicting viscoplasticity with effects of deformation, strain rate and temperature history. J. Appl. Mech. 2021, 88, 071008.

- Gorji, M.B.; Mozaffar, M.; Heidenreich, J.N.; Cao, J.; Mohr, D. On the potential of recurrent neural networks for modeling path dependent plasticity. J. Mech. Phys. Solids 2020, 143, 103972.

- Mozaffar, M.; Bostanabad, R.; Chen, W.; Ehmann, K.; Cao, J.; Bessa, M.A. Deep learning predicts path-dependent plasticity. Proc. Natl. Acad. Sci. USA 2019, 116, 26414–26420.

- Abdolazizi, K.P.; Linka, K.; Cyron, C.J. Viscoelastic constitutive artificial neural networks (vCANNs)–A framework for data-driven anisotropic nonlinear finite viscoelasticity. J. Comput. Phys. 2024, 499, 112704.

- Banks, H.T.; Hu, S.; Kenz, Z.R. A brief review of elasticity and viscoelasticity for solids. Adv. Appl. Math. Mech. 2015, 3, 1–51.

- Hong, W. Modeling viscoelastic dielectrics. J. Mech. Phys. Solids 2011, 59, 637–650.

- Reese, S.; Govindjee, S. A theory of finite viscoelasticity and numerical aspects. Int. J. Solids Struct. 1998, 35, 3455–3482.

- Zhou, J.; Jiang, L.; Khayat, R.E. A micro–macro constitutive model for finite-deformation viscoelasticity of elastomers with nonlinear viscosity. J. Mech. Phys. Solids 2018, 110, 137–154.

- Su, X.; Peng, X. A 3D finite strain viscoelastic constitutive model for thermally induced shape memory polymers based on energy decomposition. Int. J. Plast. 2018, 110, 166–182.

- Qin, B.; Zhong, Z.; Zhang, T.-Y. A thermodynamically consistent model for chemically induced viscoelasticity in covalent adaptive network polymers. Int. J. Solids Struct. 2022, 256, 111953.

- Gent, A.N. A new constitutive relation for rubber. Rubber Chem. Technol. 1996, 69, 59–61.

- Arruda, E.M.; Boyce, M.C. A three-dimensional constitutive model for the large stretch behaviour of rubber elastic materials. J. Mech. Phys. Solids 1993, 41, 389–412.

- LeCun, Y. A Theoretical Framework for Back-Propagation. In Artificial Neural Networks; Mehra, P., Wah, B., Eds.; IEEE Computer Society Press: Piscataway, NJ, USA, 1992.

- Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bougares, F.; Schwenk, H.; Bahdanau, D.; Bengio, Y. Learning phrase representations using RNN encoder–decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078v3.

- Amini Niaki, S.; Haghighat, E.; Campbell, T.; Poursartip, A.; Vaziri, R. Physics-informed neural network for modelling the thermochemical curing process of composite-tool systems during manufacture. Comput. Methods Appl. Mech. Eng. 2021, 384, 113959.

- Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. arXiv 2015, arXiv:1412.6980v9.

- Liao, Z.; Hossain, M.; Yao, X.; Mehnert, M.; Steinmann, P. On thermo-viscoelastic experimental characterization and numerical modelling of VHB polymer. Int. J. Non-Linear Mech. 2020, 118, 103263.

- Mehnert, M.; Hossain, M.; Steinmann, P. A complete thermo–electro–viscoelastic characterization of dielectric elastomers, Part I: Experimental investigations. J. Mech. Phys. Solids 2021, 157, 104603.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).