Abstract

Machine learning techniques have become a popular solution to prediction problems. These approaches show excellent performance without being explicitly programmed. In this paper, 448 sets of data were collected to predict the neutralization depth of concrete bridges in China. Random forest was used for parameter selection. In addition, four machine learning methods, namely support vector machine (SVM), k-nearest neighbor (KNN), AdaBoost and XGBoost, were adopted to develop models. The results show that the machine learning models obtain a high accuracy (>80%) and an acceptable macro recall rate (>80%) even with only four parameters. For SVM models, the radial basis function performs better than the other kernel functions. The radial basis kernel SVM method has the highest verification accuracy (91%) and the highest macro recall rate (86%). The preferences of the different methods are also revealed in this study.

1. Introduction

The neutralization of concrete is a major factor that influences the service life of R.C. bridges. The alkaline environment around steel bars will be impaired by carbon dioxide and other acid materials, such as acid rain [1,2]. Subsequently, steel bars are likely to be oxidized, especially with the effect of chloride ions and moisture in the concrete. Once the steel bars are corroded, the bearing capacity of bridges will be impaired [3,4,5].

Currently, the total number of bridges in China is nearly one million, and many bridges have been in service for more than ten years. It is therefore necessary to provide a solution for predicting the neutralization depth of existing bridges. In real engineering, however, the influencing factors are coupled, which makes it difficult to estimate the neutralization status of concrete. Another difficulty for existing bridges is the loss of original construction information, although some of this information (e.g., water-cement ratio, maximum nominal aggregate size, and cement content) is often considered necessary for the prediction of neutralization depth. Predicting the neutralization of concrete in inland river bridges is also of interest because of the special service environment of these concrete components. The influences of the river, wind, traffic load and some unknown factors are significant, but accurately quantifying their effects with formulas is difficult.

Machine learning (ML) is proving to be an efficient approach to solving the above problems. ML refers to the capability of computers to obtain knowledge from datasets without being explicitly programmed [6]. It includes many powerful methods, such as support vector machine (SVM), decision tree, k-means, AdaBoost and k-nearest neighbor (KNN). An ML method mainly consists of two parts: the decision function and the objective function. For a new data point, the decision function is used to predict its category. The decision function contains some undetermined parameters that must be determined by optimizing the objective function. The objective function contains at least a loss function and a regularization term. The loss function describes the gap between the true values and the predicted values; the regularization term is used to avoid model overfitting. ML methods have been widely used in civil engineering. The first application of ML in this field was aimed at improving structural safety [7]. Nowadays, ML is used in structural health monitoring [8,9,10,11], reliability analysis [12,13], and earthquake engineering [14,15,16].

In addition, machine learning techniques show great potential in the concrete industry. The complexity of concrete makes it difficult to develop prediction models. However, models developed by ML methods often achieve a high accuracy [17,18,19,20]. Topçu et al. [21] proposed an artificial neural network (ANN) model to evaluate the effect of fly ash on the compressive strength of concrete. The results show that the root-mean-squared error (RMSE) of the ANN model is less than 3.0. Bilim et al. [22] constructed an ANN model to predict the compressive strength of ground granulated blast furnace slag (GGBFS) concrete. Sarıdemir et al. [23] used ANN and a fuzzy logic method to predict the long-term effects of GGBFS on the compressive strength of concrete. Their results show that the fuzzy logic model has a low RMSE (3.379); however, the ANN model’s RMSE (2.511) is lower. Golafshani et al. [24] used the grey wolf optimizer to improve the performance of an ANN model and an adaptive neuro-fuzzy inference system model in predicting the compressive strength of concrete. Kandiri et al. [25] developed ANN models with a salp swarm algorithm to estimate the compressive strength of concrete. The results show that this algorithm can reduce the RMSE of ANN models. Machine learning methods can be used for classification problems, regression problems, feature selection and data mining. Compared with conventional models, machine learning models are good at extracting information from data.

Machine learning can select a few effective parameters for developing models. Han et al. [26] measured the importance of parameters based on the random forest method, and then used this approach to establish prediction models. Their results show that the performance of models can be obviously improved by parameter selection. Random forest is an effective feature selection method [27]. It is widely used in bioscience [28,29], computer science [30], environmental sciences [31], and many other fields. Zhang et al. [32] used random forest to select important features from a building energy consumption dataset. Yuan et al. [33] employed random forest to rank the features of house coal consumption.

In this paper, random forest was adopted for parameter selection. SVM, KNN, AdaBoost and XGBoost were used to develop prediction models. These ML methods have been successfully used in many fields [34,35,36,37]. A comparison among these ML models was also conducted to reveal the preference for different methods in the prediction of neutralization depth.

2. Dataset Description and Analysis

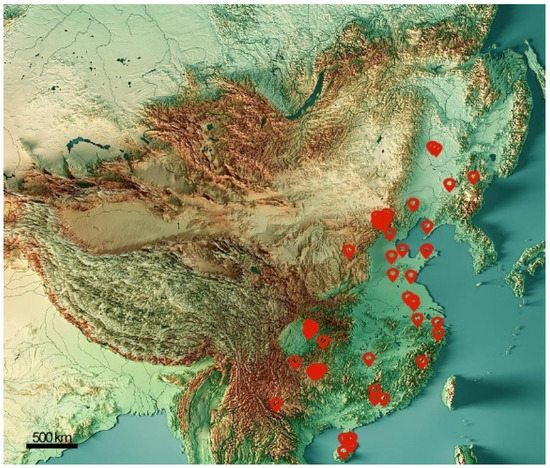

The dataset, which focuses on the neutralization depth of R.C. bridges in China, includes 448 samples. Parameters such as service time, concrete strength, bridge load class and environmental conditions were considered in this study. Figure 1 shows the distribution of these bridges. The full dataset is given in Appendix A. The dataset was collected from references [38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68], and the meteorological data of the cities were collected from the environmental meteorological data center of China. These references are included in two professional Chinese document databases: CNKI and WANFANG DATA. All the samples included in Appendix A are detection data of existing bridges.

Figure 1.

The distribution of the bridges involved in this study.

The neutralization depth of concrete was tested with phenolphthalein, and the compressive strength was measured with a rebound hammer and calculated according to the Technical Specification for Inspecting of Concrete Compressive Strength by Rebound Method (JGJ/T 23-2011). The vehicle load of bridges was divided into two levels, according to the General Specifications for Design of Highway Bridges and Culverts (JTG D60-2015). There are no missing data in the dataset; any samples containing missing values were discarded during collection. Table 1 gives a detailed description of the dataset. The climatic division used in Table 1 is derived from the climatic division map of the geographic atlas of China (Peking University) [69].

Table 1.

Detailed information of the dataset.

The reliability of the ML models relies on the quality of the dataset. Generally, ML models have an excellent performance within the scope of the training dataset. However, predicting the neutralization depth of a new sample outside the range of the training dataset is difficult for ML models. Therefore, a dataset with a wide scope is necessary for the reliability of ML models. Table 1 shows the range of this dataset. The service time, compressive strength, and load level of samples in the dataset can cover the main status of existing bridges. The temperature, humidity, acid rain, and climate status can cover the majority of service environments of existing bridges in China. The distribution of samples shown in Figure 1 also shows that the dataset has a large scope. Besides this, the histograms in Table 1 show that the values of samples have good continuity. Therefore, it is believed that this dataset is effective for developing ML models.

The imbalanced distribution of temperature and RH is also revealed in the histograms. The RH of most of the samples is around 72.5–82.5%, and the temperature of most of the samples is around 15 °C. The parts that have few points will receive less attention from the ML models because of the imbalance in the dataset. However, this negative effect caused by imbalances can be alleviated through increasing the penalty applied to the misclassification of the samples in these parts.

3. Parameter Evaluation and Selection

This study aims to develop diagnosis models for existing R.C. bridges, so parameter selection is important, as it alleviates the difficulty of obtaining parameters in real engineering. Random forest is widely used in feature selection [27]. It is a supervised learning method, and it does not require the dataset to follow a normal distribution [33]. This is relevant here because the dataset used in this study does not follow a normal distribution.

3.1. Random Forest for Parameter Evaluation

Random forest is a combination of decision trees. First, $n$ samples are selected from the dataset as a training set through sampling with replacement, and a decision tree is generated from these $n$ samples. Then, features are randomly selected and split at each node of the decision tree. The above process is repeated $T$ times ($T$ is the number of decision trees in a random forest), and finally a random forest model is generated.

In the process of generating decision trees, the Gini coefficient is usually used to split nodes. Random forest evaluates the importance of parameters by calculating the average change in the Gini coefficient of feature $j$ during the splitting process of the nodes. Assuming there are, in total, $d$ features in the $k$th decision tree, the probability of a sample belonging to class $c$ is $p_c$, and there are $M$ classes; then, the Gini coefficient is defined as:

$$\mathrm{Gini}(p) = \sum_{c=1}^{M} p_c (1 - p_c) = 1 - \sum_{c=1}^{M} p_c^2 \tag{1}$$

For dataset $D$, the Gini coefficient is:

$$\mathrm{Gini}(D) = 1 - \sum_{c=1}^{M} \left( \frac{|D_c|}{|D|} \right)^2 \tag{2}$$

where $D_c$ is the subset of samples belonging to class $c$ in the dataset $D$. On node $q$, feature $j$ divides dataset $D$ into two parts, $D_1$ and $D_2$, so the changes in the Gini coefficient are:

$$\Delta \mathrm{Gini}_q(D, j) = \mathrm{Gini}(D) - \frac{|D_1|}{|D|} \mathrm{Gini}(D_1) - \frac{|D_2|}{|D|} \mathrm{Gini}(D_2) \tag{3}$$

Therefore, the importance of parameter $j$ in the $k$th decision tree is:

$$I_j^{(k)} = \sum_{q=1}^{Q} \Delta \mathrm{Gini}_q(D, j) \tag{4}$$

where $Q$ is the number of nodes divided by feature $j$ on dataset $D$. Therefore, the importance of feature $j$ can be calculated by Equation (5):

$$I_j = \frac{\sum_{k=1}^{T} I_j^{(k)}}{\sum_{i=1}^{d} \sum_{k=1}^{T} I_i^{(k)}} \tag{5}$$

where $d$ is the number of characteristics, and $T$ is the number of decision trees in the random forest.
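As an illustration of the Gini coefficient and of its change when a node is split, the following minimal sketch computes both quantities for a list of class labels. It uses only the Python standard library; the function names and the toy labels are assumptions made for this example and are not taken from the study's implementation.

```python
from collections import Counter


def gini(labels):
    """Gini coefficient of a list of class labels: 1 - sum of squared class shares."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def gini_decrease(parent, left, right):
    """Drop in the Gini coefficient when `parent` is split into `left` and `right`."""
    n = len(parent)
    weighted = (len(left) / n) * gini(left) + (len(right) / n) * gini(right)
    return gini(parent) - weighted


# Hypothetical node holding 4 "slight" and 4 "medium" samples.
parent = ["slight"] * 4 + ["medium"] * 4
left = ["slight"] * 4    # a perfect split sends each class to one side
right = ["medium"] * 4
print(gini(parent))                        # 0.5
print(gini_decrease(parent, left, right))  # 0.5
```

Summing such decreases over all nodes that split on one feature, and then normalizing over all features and trees, gives an importance score of the kind used in this section.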

3.2. Results and Discussions

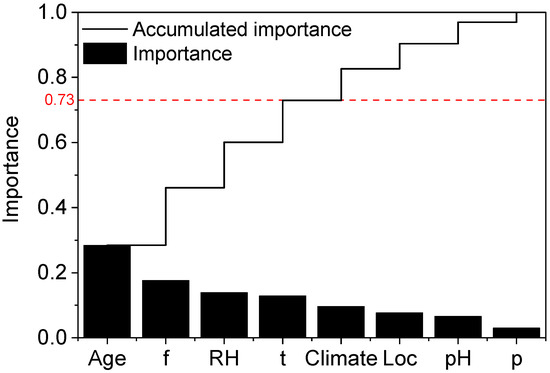

Figure 2 shows the results of parameter evaluation. Temperature, concrete strength, RH and age are more important than climate, location of components, acid rain, and load level. The cumulative importance of the top four parameters reaches 0.73. Climate, which represents the rough ambient conditions of neutralization, is often considered an important feature. However, due to its high correlation with the other environmental parameters, the results show that it is less important here. The random forest approach tends to rank some of the highly correlated features at the top and the others at the bottom.

Figure 2.

Importance of each parameter.

Further, Climate and Loc are nominal (categorical) parameters. Generally, a nominal parameter cannot be used directly to establish models. A common approach for preprocessing nominal parameters is one-hot encoding, which creates a new dummy parameter for each unique value of the nominal parameter. Therefore, the parameter Climate would generate six new parameters, and Loc would generate four. Adding so many new parameters is unnecessary because of their low importance. Therefore, Climate, Loc, pH (acid rain) and p (load level) were omitted from the subsequent modeling.
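To make the growth in the number of parameters concrete, the sketch below encodes a categorical column with one 0/1 column per unique value. It is a standard-library illustration only; the function name is hypothetical, and the location codes are the four values that appear in Appendix A.

```python
def one_hot(values):
    """Return (categories, rows): one 0/1 column per unique value, in sorted order."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


# Location codes as they appear in Appendix A.
loc = ["Ar", "Bpla", "Bpier", "Bm", "Ar"]
categories, rows = one_hot(loc)
print(categories)  # ['Ar', 'Bm', 'Bpier', 'Bpla']
print(rows[0])     # [1, 0, 0, 0]
```

A single Loc column thus becomes four columns, which is why encoding low-importance categorical parameters was avoided.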

In addition, it is important to discuss the limitations of the ML models used in this study. The validity scope of ML models depends on the range of the dataset; these models are essentially empirical models. In this study, the ML models were based on age, concrete strength, temperature and RH, so the validity scope of the models is a four-dimensional space determined by the dataset. A search algorithm can be used to determine whether a sample lies within this scope. For example, when a new sample is obtained, one can search the dataset for the neighboring points of the new sample and then use these points to judge whether the new sample is in the valid range.
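One possible form of such a neighbor search is sketched below. The paper does not specify the search algorithm or the acceptance rule, so the Euclidean distance, the number of neighbors `k`, and the threshold `max_distance` are all assumptions chosen for illustration, as are the toy samples.

```python
import math


def nearest_distances(sample, dataset, k=3):
    """Euclidean distances from `sample` to its k nearest points in `dataset`."""
    return sorted(math.dist(sample, row) for row in dataset)[:k]


def in_valid_scope(sample, dataset, k=3, max_distance=1.0):
    """Assumed rule: in scope if the k nearest neighbours all lie within max_distance."""
    return nearest_distances(sample, dataset, k)[-1] <= max_distance


# Hypothetical, already normalized samples: (age, strength, temperature, RH).
dataset = [
    (0.0, 0.0, 0.0, 0.0),
    (0.5, 0.0, 0.0, 0.0),
    (0.0, 0.5, 0.0, 0.0),
    (0.0, 0.0, 0.5, 0.5),
]
print(in_valid_scope((0.1, 0.1, 0.0, 0.0), dataset))  # True
print(in_valid_scope((5.0, 5.0, 5.0, 5.0), dataset))  # False
```

In practice the threshold would have to be calibrated against the spacing of the real dataset.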

4. Machine Learning Models

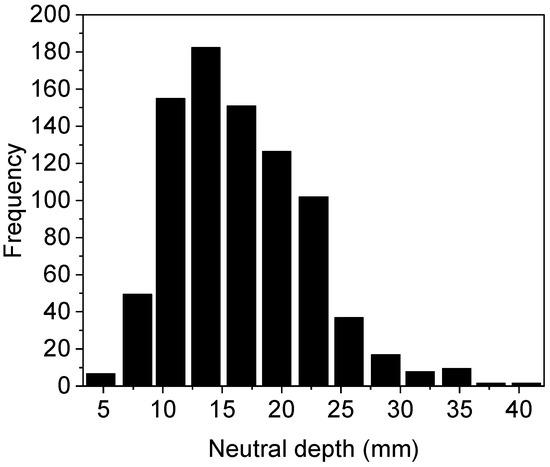

Current prediction models require a large number of input parameters and output a mean value of the neutralization depth. However, the dispersion of the neutralization depth of concrete is large. Figure 3 illustrates the histogram of the neutralization depth data of the concrete components of the Nanjing Yangtze River Bridge. All components in Figure 3 have the same service time and concrete mix proportion, yet the scatter among these components is obvious. Thus, this paper predicts the level of neutralization depth rather than its value. Table 2 shows the classification of the neutralization depth of bridge concrete. In this study, 6 mm was chosen as the boundary between the slight level and the medium level. This is because the relationship between the neutralization depth and the compressive strength of concrete becomes uncertain in the appraisal of old buildings when the neutralization depth is greater than 6 mm. According to the Technical Specification for Inspecting of Concrete Compressive Strength by Rebound Method (JGJ/T 23-2011), when the neutralization depth is greater than 6 mm, the test results cannot reflect the actual strength of the concrete. In addition, 25 mm was selected as the boundary between the medium level and the serious level, since the protective layer thickness of bridge components in China is often between 20 and 30 mm. When the neutralization depth reaches 25 mm, the neutralized zone is likely to reach the surface of the steel bars.

Figure 3.

Histogram of the neutralization depth of the concrete components of the Nanjing Yangtze River Bridge.

Table 2.

The classification of neutralization depth.
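The classification can be expressed as a small function, sketched below. The 6 mm and 25 mm boundaries come from the text; whether a depth exactly on a boundary belongs to the lower or the upper level is not stated here, so the handling of the two boundary values is an assumption, and the function name is hypothetical.

```python
def neutralization_level(depth_mm):
    """Map a neutralization depth in mm to a level using the 6 mm and 25 mm boundaries."""
    if depth_mm < 0:
        raise ValueError("depth must be non-negative")
    if depth_mm <= 6:    # assumption: exactly 6 mm still counts as slight
        return "slight"
    if depth_mm < 25:    # assumption: exactly 25 mm already counts as serious
        return "medium"
    return "serious"


# Depths taken from the first rows of Appendix A.
print(neutralization_level(2.8))    # slight
print(neutralization_level(19.72))  # medium
```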

4.1. Support Vector Machine

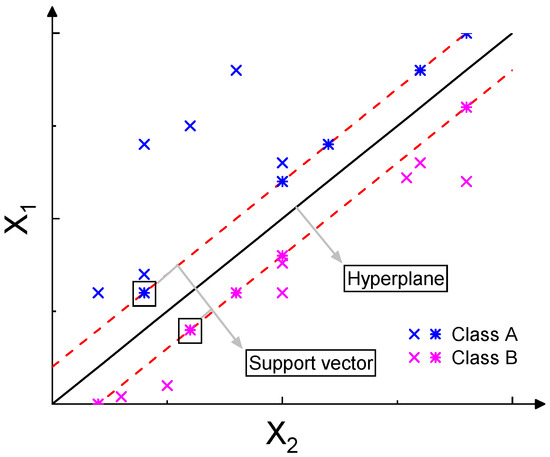

SVM is a binary classification model; its purpose is to find a hyperplane that separates samples into two classes [70]. SVM finds the hyperplane by maximizing the margin between the two classes. The margin refers to the distance between the hyperplane and the data points closest to it. Therefore, only a few points, which are called support vectors, can influence the hyperplane. Because the majority of the samples have no influence on the hyperplane, SVM offers one of the most robust and accurate algorithms among all well-known modeling methods when the dataset is not huge [37]. Considering the size of the dataset used in this study, SVM is an attractive choice. Figure 4 shows an illustration of SVM.

Figure 4.

An illustration of support vector machine (SVM).

Developing an SVM model can help in solving the following problem [70]:

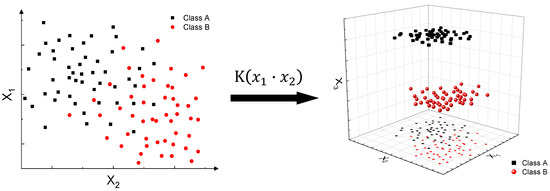

K(x·z) is the kernel function. The data points in a low-dimensional space can be transformed into the data points in a high-dimensional space through the kernel function [70]. Therefore, a nonlinear problem can turn into a linear problem. Figure 5 depicts the effects of the kernel function. Common kernel functions include polynomial kernel, radial basis kernel and hyperbolic tangent kernel. () is the sample point, and is the number of samples in the dataset. indicates the penalty for misclassification. can be obtained by solving Equation (6). Then, the decision function can finally be obtained:

Figure 5.

An illustration of the effect of kernel function.
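The role of the kernel in the decision function can be illustrated with the short sketch below. It assumes the common form exp(-gamma * ||x - z||^2) for the radial basis kernel and evaluates a kernelized decision function for given multipliers; the support vectors, multipliers, bias and `gamma` value are hypothetical and are not fitted to the dataset of this study.

```python
import math


def rbf_kernel(x, z, gamma=0.5):
    """Radial basis kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * math.dist(x, z) ** 2)


def svm_decision(x, support_vectors, labels, alphas, b, kernel=rbf_kernel):
    """Sign of sum_i alpha_i * y_i * K(x, x_i) + b for an already trained binary SVM."""
    terms = zip(alphas, labels, support_vectors)
    score = sum(a * y * kernel(x, sv) for a, y, sv in terms) + b
    return 1 if score >= 0 else -1


# Hypothetical support vectors and multipliers (illustration only).
support_vectors = [(0.0, 0.0), (2.0, 2.0)]
labels = [-1, 1]
alphas = [1.0, 1.0]
print(svm_decision((0.2, 0.1), support_vectors, labels, alphas, b=0.0))  # -1
print(svm_decision((1.9, 2.2), support_vectors, labels, alphas, b=0.0))  # 1
```

Only the support vectors enter the sum, which reflects the statement that the remaining samples do not influence the hyperplane.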

4.2. K-Nearest Neighbor

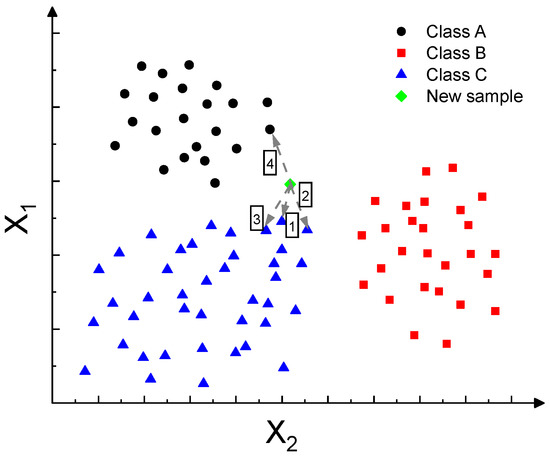

KNN is one of the most concise classification algorithms, and it is also recognized as one of the top ten data mining algorithms [37]. For a new sample, KNN finds the k samples closest to this sample in the dataset. The classification of the new sample depends on the voting results of those k samples. Figure 6 shows an illustration of KNN. In Figure 6, we suppose k = 4, and the four closest data points to the new sample are marked with numbers. Points 1, 2, and 3 belong to class C, and only point 4 belongs to class A. Therefore, this new sample should be classified into class C. Compared with other ML methods, KNN is simpler, but is also effective [71]. KNN is often used for comparison with other ML methods in some studies [71,72], as it is in this study.

Figure 6.

An illustration of K-nearest neighbor (KNN).

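The decision function of KNN can be written as follows:

$$y = \arg\max_{c_j} \sum_{x_i \in N_k(x)} I(y_i = c_j) \tag{8}$$

$c_j$ represents the class $j$. $N_k(x)$ is the range that covers the $k$ samples closest to $x$. $I$ is an indicator function; if $y_i = c_j$, then $I = 1$, otherwise, $I = 0$. Developing a KNN model mainly consists of finding the optimal number of nearest neighbors $k$.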
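The voting rule can be sketched in a few lines, as shown below. The example mirrors the situation described for Figure 6 (three of the four nearest neighbors belong to class C and one to class A); the coordinates are invented for illustration, and the Euclidean distance is an assumption since the distance metric is not stated here.

```python
import math
from collections import Counter


def knn_predict(sample, points, labels, k):
    """Classify `sample` by majority vote among its k nearest labelled points."""
    order = sorted(range(len(points)), key=lambda i: math.dist(sample, points[i]))
    votes = Counter(labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D points: the four nearest neighbours of the origin are C, C, C and A.
points = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.2), (5.0, 5.0), (6.0, 5.0)]
labels = ["C", "C", "C", "A", "A", "B"]
print(knn_predict((0.0, 0.0), points, labels, k=4))  # C
```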

4.3. AdaBoost

AdaBoost is a well-known ensemble learning algorithm and one of the most representative methods in machine learning [37]. This method develops many weak classifiers and finally combines them into a strong classifier. Therefore, the decision function can be written as follows [37]:

$$F(x) = \mathrm{sign}\left( \sum_{t=1}^{T} \alpha_t G_t(x) \right) \tag{9}$$

$G_t(x)$ is the decision function of the $t$th weak classifier, and $T$ is the number of weak classifiers. $\alpha_t$ is the weight of $G_t(x)$, and this coefficient is calculated from the accuracy of $G_t(x)$. In this study, decision tree models were used as the weak classifiers. AdaBoost first generates a weak decision tree model, gets its decision function $G_1(x)$, and updates the weights of the samples according to the performance of $G_1(x)$. If one data point is misclassified by $G_1(x)$, it will be assigned a greater weight in the next round. In general, the weights of the samples are updated in the $t$th round, and $G_{t+1}(x)$ is fitted based on these samples and their weights in the $(t+1)$th round. $\alpha_{t+1}$, the weight of $G_{t+1}(x)$, is calculated from the accuracy of $G_{t+1}(x)$.
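The re-weighting step can be illustrated with the classic binary AdaBoost update, sketched below. This is the textbook two-class formulation with labels in {-1, +1}, used here as an assumption for illustration; the multi-class variant used for the three neutralization levels may differ, and the function name and toy predictions are hypothetical.

```python
import math


def adaboost_round(weights, y_true, y_pred):
    """One round of binary AdaBoost: return (alpha, updated normalized sample weights).

    `weights` are expected to sum to 1, so `error` is the weighted error rate.
    """
    error = sum(w for w, t, p in zip(weights, y_true, y_pred) if t != p)
    if not 0.0 < error < 0.5:
        raise ValueError("weighted error must lie in (0, 0.5)")
    alpha = 0.5 * math.log((1.0 - error) / error)
    # Misclassified samples are scaled up by exp(alpha), correct ones down by exp(-alpha).
    raw = [w * math.exp(alpha if t != p else -alpha)
           for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(raw)
    return alpha, [w / total for w in raw]


weights = [0.25, 0.25, 0.25, 0.25]
y_true = [1, 1, -1, -1]
y_pred = [1, 1, -1, 1]   # only the last sample is misclassified
alpha, new_weights = adaboost_round(weights, y_true, y_pred)
print(new_weights[3] > new_weights[0])  # True
```

After the update, the single misclassified sample carries half of the total weight, so the next weak classifier is pushed to classify it correctly.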

4.4. XGBoost

XGBoost (extreme gradient boosting) was proposed in 2016, and soon became a popular method for its excellent performance in Kaggle competitions [73]. XGBoost is one of the most popular emerging ML approaches. However, its application in civil engineering is not as common as the application of other conventional ML methods, such as SVM, KNN, and ANN. Most of the applications of XGBoost in civil engineering have been undertaken in the last two years. Hu et al. [74] used XGBoost to predict the wind pressure coefficients of buildings. Pei et al. [75] developed a pavement aggregate shape classifier based on XGBoost. In this study, XGBoost is selected on behalf of the other new ML methods for the comparison with other representative ML methods.

The XGBoost method first develops a weak classifier. Then, the next weak classifier is designed to reduce the gap between the true value and the prediction value of the first weak classifier. For the $t$th training round, the decision function can be written as follows:

$$\hat{y}_i^{(t)} = \sum_{k=1}^{t} \eta_k f_k(x_i) = \hat{y}_i^{(t-1)} + \eta_t f_t(x_i) \tag{10}$$

$\eta_k$ represents the weight of weak classifier $f_k$. When the mean-squared error is chosen as the loss function of the models, the objective function requiring optimization in generating a new weak classifier can be written as follows:

$$\mathrm{Obj}^{(t)} = \sum_{i=1}^{N} \left( y_i - \hat{y}_i^{(t-1)} - \eta_t f_t(x_i) \right)^2 + \Omega(f_t) \tag{11}$$

$\Omega(f_t)$ is a regularization term, and $N$ is the number of samples. In this study, the tree model was selected as the weak classifier. The tree model is the most common weak classifier in applications of XGBoost.
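The idea that each new weak learner reduces the remaining gap between the true values and the current prediction can be illustrated with plain gradient boosting under a squared-error loss, as sketched below. This is not XGBoost itself: the regularization term and the second-order approximation are omitted, the weak learner is a one-split regression stump, and the data, function names and learning rate are assumptions made for illustration.

```python
def fit_stump(xs, residuals):
    """Best single-threshold regression stump (least squares) on 1-D inputs."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, lv, rv)
    _, threshold, lv, rv = best
    return lambda x: lv if x <= threshold else rv


def boost(xs, ys, rounds=20, learning_rate=0.5):
    """Additive model: each new stump is fitted to the residuals of the current prediction."""
    stumps = []
    preds = [0.0] * len(xs)
    for _ in range(rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
    return lambda x: sum(learning_rate * s(x) for s in stumps)


def mse(model, xs, ys):
    """Mean-squared error of `model` on the given samples."""
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical 1-D data, for illustration only.
xs = [3, 7, 19, 20, 23, 35, 49]
ys = [6.0, 2.8, 1.5, 3.5, 4.5, 3.0, 16.0]
one_round = boost(xs, ys, rounds=1)
many_rounds = boost(xs, ys, rounds=50)
print(mse(one_round, xs, ys) > mse(many_rounds, xs, ys))  # True
```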

4.5. Multi-Class Problem

Some machine learning methods (e.g., SVM) are designed for binary classification problems, but a multi-class problem was considered in this study. Therefore, a one-versus-one (OVO) strategy was adopted. OVO is a common approach for multi-class problems [76,77,78]. OVO methods generate a hyperplane between any two categories, and will therefore generate $M(M-1)/2$ hyperplanes for an $M$-class classification problem. For a new sample, all of these binary models are utilized, and the final result depends on the vote among them.
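A minimal sketch of the pairwise voting scheme is given below. For three levels it produces three binary classifiers, one per pair of classes. The binary classifiers here are hypothetical threshold rules on a single scalar feature standing in for trained models; their thresholds and the function names are assumptions.

```python
from collections import Counter
from itertools import combinations


def ovo_pairs(classes):
    """All unordered class pairs; one binary classifier is trained per pair."""
    return list(combinations(classes, 2))


def ovo_predict(sample, pair_classifiers):
    """Majority vote over the binary classifiers; each returns one of its two classes."""
    votes = Counter(clf(sample) for clf in pair_classifiers.values())
    return votes.most_common(1)[0][0]


classes = ["slight", "medium", "serious"]
pairs = ovo_pairs(classes)
print(len(pairs))  # 3

# Hypothetical threshold rules on a scalar feature x, standing in for trained models.
pair_classifiers = {
    ("slight", "medium"): lambda x: "slight" if x <= 6 else "medium",
    ("slight", "serious"): lambda x: "slight" if x <= 15 else "serious",
    ("medium", "serious"): lambda x: "medium" if x < 25 else "serious",
}
print(ovo_predict(12.0, pair_classifiers))  # medium
```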

4.6. Results and Discussions

Z-score normalization was applied to the dataset to alleviate the influence of the parameters’ different scales. In addition, in order to evaluate the reliability of the ML models, all samples were divided into two parts: T1 and T2. T1, the training dataset, is used for training the ML models, and T2, the testing dataset, is used for testing their performance. In this study, 70% of the original dataset was used as the training dataset and the remaining 30% as the testing dataset.
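These two preprocessing steps can be sketched as follows, using only the Python standard library rather than the scikit-learn routines used in the study. The function names, the choice to split each class separately so that both parts keep a similar class ratio, and the toy data are assumptions made for illustration.

```python
import random
from collections import defaultdict
from statistics import mean, pstdev


def z_score(column):
    """Rescale a column to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(column), pstdev(column)
    return [(v - mu) / sigma for v in column]


def stratified_split(labels, train_fraction=0.7, seed=0):
    """Return (train_idx, test_idx), splitting each class separately at train_fraction."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(train_fraction * len(indices))
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return sorted(train), sorted(test)


ages = [49.0, 19.0, 20.0, 35.0, 23.0]           # service times from Appendix A
print([round(v, 2) for v in z_score(ages)])

labels = ["slight"] * 10 + ["medium"] * 10      # hypothetical class labels
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))            # 14 6
```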

Training accuracy, verification accuracy and macro recall rate were used as the indicators for the optimization of the parameters of the models. Grid search was used to find the optimal values of the parameters of the models. Under-fitting and over-fitting can be detected by comparing the model’s training accuracy and verification accuracy. In addition, in order to improve the reliability of the results, the dataset was randomly divided 10 times, and each subset has the same distribution. The final results were based on the performances of these 10 models. Table 3 shows the results of grid search tuning.

Table 3.

Results of grid search tuning for SVM models.
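The tuning loop can be sketched generically as below: every combination in a parameter grid is scored by its mean performance over 10 random splits, and the best combination is kept. The parameter names `C` and `gamma`, the grid values, and the scoring function `toy_evaluate` are hypothetical; in practice the scoring function would train a model on one split and return its verification accuracy or macro recall rate.

```python
from itertools import product
from statistics import mean


def grid_search(param_grid, evaluate, n_splits=10):
    """Return (best_score, best_params), averaging each candidate over n_splits splits."""
    names = list(param_grid)
    best_score, best_params = None, None
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = mean(evaluate(params, seed) for seed in range(n_splits))
        if best_score is None or score > best_score:
            best_score, best_params = score, params
    return best_score, best_params


def toy_evaluate(params, seed):
    """Hypothetical stand-in for 'train on split `seed` and return the verification score'."""
    penalty = abs(params["C"] - 10) / 100 + abs(params["gamma"] - 0.1)
    return 0.9 - penalty + 0.001 * (seed % 2)


grid = {"C": [1, 10, 100], "gamma": [0.01, 0.1, 1.0]}
score, params = grid_search(grid, toy_evaluate)
print(params)  # {'C': 10, 'gamma': 0.1}
```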

Table 3 shows that the training accuracy of the ML models is very close to their verification accuracy, which indicates that overfitting is avoided. Both accuracy and macro recall rate were adopted for evaluating the ML models. Macro recall rate is more important than accuracy when the imbalance of the dataset is considered. For each class, recall is the ratio of the samples correctly assigned to that class to all samples that actually belong to it; the macro recall rate is the unweighted mean of these per-class recalls, so every class counts equally regardless of its size. The verification accuracy (91%) and the macro recall rate (86%) of the radial basis kernel SVM model are higher than those of the other models. The gap between the verification accuracy and the training accuracy of the radial basis kernel SVM model is 2%, so there is no obvious evidence of overfitting. The radial basis kernel and the polynomial kernel perform better than the hyperbolic tangent kernel, and the radial basis kernel is the best kernel function in this study. Compared with the other methods, KNN is also attractive. The maximum gap between the models in terms of verification accuracy is 22%, whereas for macro recall rate the maximum gap reaches 40%. This may be due to the uneven distribution of the dataset, since the macro recall rate is sensitive to imbalanced data. As an evaluation index, the macro recall rate is therefore more representative.
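The difference between the two indicators can be seen in a small worked example. The sketch below computes accuracy and macro recall rate from a confusion matrix whose rows are the actual classes and whose columns are the predicted classes; the matrix values are hypothetical and are not taken from the results of this study.

```python
def accuracy(matrix):
    """Share of all samples that lie on the diagonal of a confusion matrix."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


def macro_recall(matrix):
    """Unweighted mean of per-class recall; rows are actual classes, columns predicted."""
    recalls = [row[i] / sum(row) for i, row in enumerate(matrix)]
    return sum(recalls) / len(recalls)


# Hypothetical 3-class confusion matrix with an under-represented third class.
matrix = [
    [90, 10, 0],
    [5, 40, 5],
    [0, 5, 5],
]
print(round(accuracy(matrix), 3))      # 0.844
print(round(macro_recall(matrix), 3))  # 0.733
```

The poorly classified minority class lowers the macro recall rate far more than the accuracy, which is why the macro recall rate is the more sensitive index for an imbalanced dataset.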

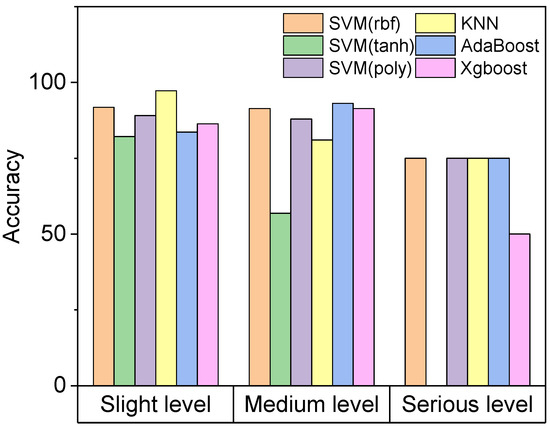

Table 4 shows the confusion matrices of the models. All models were built with scikit-learn. For Li and Lj (i, j = 1, 2, 3) in Table 4, the value in row Li and column Lj represents the number of samples that actually belong to class Li but are predicted to be class Lj by the models. The green area in Table 4 shows the number of test samples that are correctly classified, and the yellow area shows the number of test samples that are misclassified. Based on Table 4, the accuracy of the models for each neutralization level of concrete can be obtained (Figure 7).

Table 4.

The confusion matrices of the models.

Figure 7.

Accuracy of models on different neutralization levels of concrete.

Even though the radial basis kernel SVM model has the highest verification accuracy and the highest macro recall rate, Table 4 and Figure 7 show that the KNN model is better at classifying the samples with a slight level than other methods (accuracy > 97%). However, compared with other models, the KNN model only achieves a moderate performance in the prediction of medium-level samples (accuracy = 81%). The AdaBoost model is the best classifier in predicting the neutralization depth of medium-level samples (accuracy > 93%).

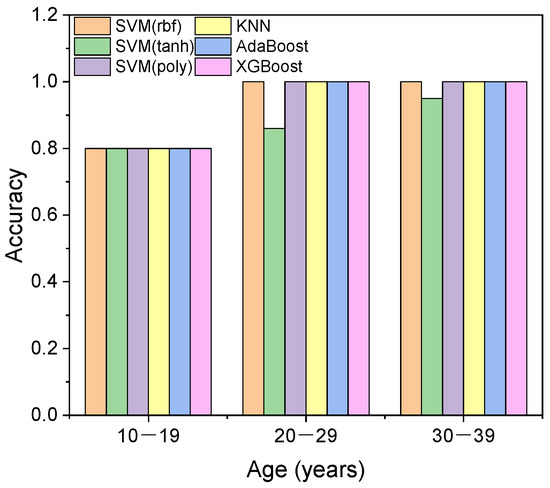

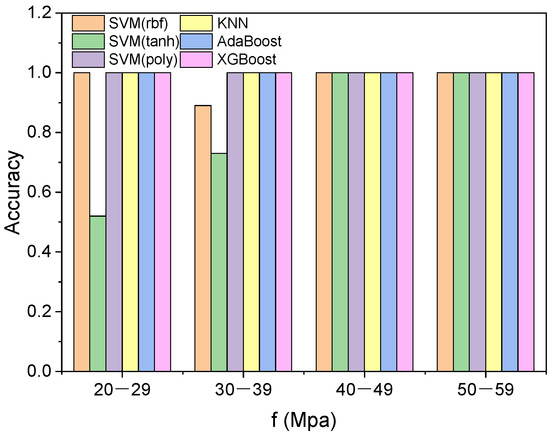

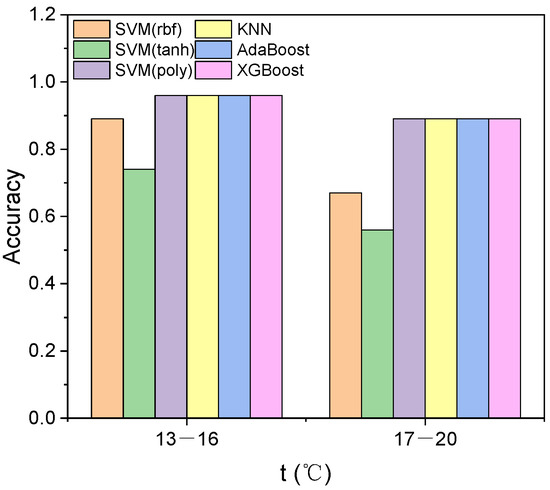

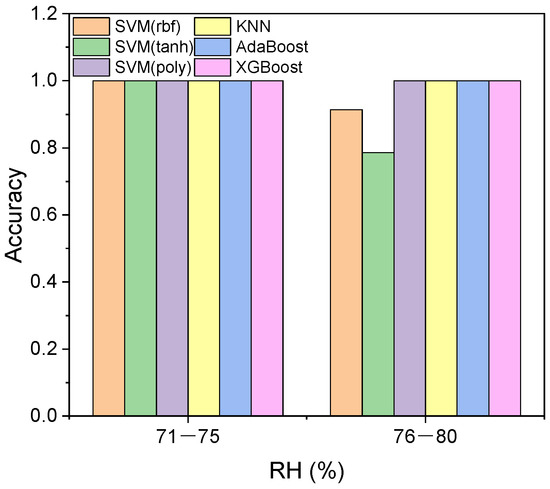

In addition, Figure 8 and Figure 9 show that most ML models reach a high accuracy in predicting the neutralization depth of concrete components with a longer service time (20–39 years) and a higher compressive strength (40–59 MPa). Figure 10 and Figure 11 show that most ML models achieve a high accuracy in predicting the neutralization depth of concrete components in a lower-temperature (13–16 °C) and lower-humidity (71–75%) environment. It is believed that the accuracy declines when the neutralization depth of concrete approaches the boundary between two levels. Therefore, a rough warning range for the neutralization depth of concrete in terms of each parameter can be obtained. For instance, for service time, the range is 10–19 years. The warning range implies that the neutralization depth of concrete is likely to reach the next level, and more attention should be paid to these bridges.

Figure 8.

The accuracy of models in terms of the service time of concrete.

Figure 9.

The accuracy of models in terms of the compressive strength of concrete.

Figure 10.

The accuracy of models in terms of the temperature of exposure situations.

Figure 11.

The accuracy of models in terms of the relative humidity of exposure situations.

5. Conclusions

In this paper, four-parameter ML models for predicting the neutralization depth levels of the concrete components of existing bridges were established. Four representative ML methods were used in this study. The following conclusions can be drawn:

- This study used SVM, KNN, AdaBoost and XGBoost to predict the neutralization depth level of the concrete of existing bridges, and the results show that the radial basis kernel SVM model has the highest validation accuracy (91%) and the highest macro recall rate (86%), with only four parameters. The radial basis kernel function is the best kernel function in this study. Compared with other models, the radial basis kernel SVM model and the KNN model achieve a better performance;

- The results reveal the preference of ML methods. KNN is good at classifying slight-level samples (accuracy > 97%), and AdaBoost is the best method for the prediction of medium-level samples (accuracy > 93%). Machine learning shows great potential in predicting the neutralization depth of concrete with very few parameters, and evaluating the durability level of existing bridges;

- Random forest was used for parameter selection. The results show that temperature, concrete strength, RH and service time are more important than climate, acid rain, location of components, and load level. The cumulative importance of these top four parameters reaches 73%. The performance of the models shows that random forest is an effective approach for parameter selection.

Author Contributions

Conceptualization, S.C. and K.D.; methodology, K.D.; data curation, K.D.; writing, K.D.; supervision, S.C., J.L. and C.X.; project administration, S.C., J.L. and C.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are openly available in Appendix A.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Detailed information of 448 sets of data.

| Age | f | t | RH | pH | p | Climate | Loc 1 | d | Reference | Age | f | t | RH | pH | p | Climate | Loc 1 | d | Reference |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 49 | 25 | 16 | 75 | 5 | 2 | north subtropics | Ar | 19.72 | self-test | 7 | 45 | 21.8 | 83 | 6 | 1 | edge tropics | Bpier | 2.8 | [56] |

| 49 | 35 | 16 | 75 | 5 | 2 | north subtropics | Ar | 11.48 | 7 | 45 | 21.8 | 83 | 6 | 1 | edge tropics | Bpier | 1.5 | ||

| 49 | 25 | 16 | 75 | 5 | 2 | north subtropics | Ar | 17.02 | 23 | 35 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 7.483 | [57] | |

| 49 | 20 | 16 | 75 | 5 | 2 | north subtropics | Ar | 20.23 | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 5.937 | ||

| 49 | 15 | 16 | 75 | 5 | 2 | north subtropics | Ar | 16.47 | 23 | 40 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 11.453 | ||

| 49 | 20 | 16 | 75 | 5 | 2 | north subtropics | Ar | 20.23 | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.917 | ||

| 49 | 25 | 16 | 75 | 5 | 2 | north subtropics | Ar | 11.11 | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.35 | ||

| 49 | 20 | 16 | 75 | 5 | 2 | north subtropics | Ar | 12.15 | 23 | 35 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.563 | ||

| 49 | 25 | 16 | 75 | 5 | 2 | north subtropics | Ar | 16.38 | 23 | 60 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4 | ||

| 49 | 15 | 16 | 75 | 5 | 2 | north subtropics | Ar | 23.45 | 23 | 60 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.577 | ||

| 49 | 20 | 16 | 75 | 5 | 2 | north subtropics | Ar | 20.62 | 23 | 55 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.673 | ||

| 49 | 15 | 16 | 75 | 5 | 2 | north subtropics | Ar | 21.57 | 23 | 55 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.12 | ||

| 49 | 30 | 16 | 75 | 5 | 2 | north subtropics | Bpla | 8.4 | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.63 | ||

| 49 | 30 | 16 | 75 | 5 | 2 | north subtropics | Bpla | 8.4 | 23 | 55 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.56 | ||

| 49 | 30 | 16 | 75 | 5 | 2 | north subtropics | Bpla | 5.43 | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.343 | ||

| 49 | 30 | 16 | 75 | 5 | 2 | north subtropics | Bpla | 5.43 | | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.853 | |

| 49 | 15 | 16 | 75 | 5 | 2 | north subtropics | Bpier | 18.55 | | 23 | 60 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.497 | |

| 49 | 15 | 16 | 75 | 5 | 2 | north subtropics | Bpier | 18.55 | | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 5.067 | |

| 19 | 55 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1 | | 23 | 60 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.3 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1.5 | | 23 | 55 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.267 | |

| 19 | 50 | 16 | 75 | 5 | 1 | north subtropics | Bm | 2 | | 23 | 55 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.453 | |

| 19 | 55 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1.5 | | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.097 | |

| 19 | 50 | 16 | 75 | 5 | 1 | north subtropics | Bm | 2 | | 23 | 55 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.47 | |

| 19 | 50 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1.5 | | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.31 | |

| 19 | 35 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1 | | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 5.18 | |

| 19 | 50 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1 | | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.7 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1 | | 23 | 20 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 14.773 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1 | | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 3.85 | |

| 19 | 40 | 16 | 75 | 5 | 1 | north subtropics | Bm | 1 | | 23 | 40 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.513 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bm | 0.5 | | 23 | 45 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 4.467 | |

| 19 | 40 | 16 | 75 | 5 | 1 | north subtropics | Bm | 0.5 | | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 9.923 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 1 | | 23 | 50 | 20.5 | 77 | 4 | 2 | south subtropics | Ar | 6.83 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 1.5 | | 23 | 30 | 20.5 | 77 | 4 | 2 | south subtropics | Bpier | 16.51 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 1.5 | | 23 | 30 | 20.5 | 77 | 4 | 2 | south subtropics | Bpier | 6.037 | |

| 19 | 50 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 1 | | 23 | 25 | 20.5 | 77 | 4 | 2 | south subtropics | Bpier | 5.857 | |

| 19 | 40 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 2 | | 23 | 20 | 20.5 | 77 | 4 | 2 | south subtropics | Bpier | 6.753 | |

| 19 | 50 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 1 | | 23 | 25 | 20.5 | 77 | 4 | 2 | south subtropics | Bpier | 16.21 | |

| 19 | 45 | 16 | 75 | 5 | 1 | north subtropics | Bpier | 0.5 | | 23 | 35 | 20.5 | 77 | 4 | 2 | south subtropics | Bpier | 11.597 | |

| 20 | 40 | 16.2 | 82 | 4 | 1 | mid-subtropics | Ar | 4 | [38] | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 6.2 | [58] |

| 20 | 45 | 16.2 | 82 | 4 | 1 | mid-subtropics | Bm | 3.5 | | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 6 | |

| 20 | 45 | 16.2 | 82 | 4 | 1 | mid-subtropics | Bm | 3.5 | | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 6 | |

| 20 | 40 | 16.2 | 82 | 4 | 1 | mid-subtropics | Bm | 3.5 | | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 6.5 | |

| 20 | 40 | 16.2 | 82 | 4 | 1 | mid-subtropics | Bm | 4 | | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 5.5 | |

| 20 | 40 | 16.2 | 82 | 4 | 1 | mid-subtropics | Bpier | 3.5 | | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 5.5 | |

| 35 | 40 | 17.4 | 80 | 4 | 1 | mid-subtropics | Ar | 3 | [39] | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 5.8 | |

| 35 | 25 | 17.4 | 80 | 4 | 1 | mid-subtropics | Ar | 2.5 | | 3 | 25 | 5.8 | 65 | 6 | 2 | mid temperate zone | Bm | 6.2 | |

| 35 | 40 | 17.4 | 80 | 4 | 1 | mid-subtropics | Bpla | 3 | | 20 | 25 | 14.4 | 72 | 6 | 1 | warm temperate | Bm | 9.7 | [59] |

| 35 | 45 | 17.4 | 80 | 4 | 1 | mid-subtropics | Bm | 1.5 | | 20 | 35 | 14.4 | 72 | 6 | 1 | warm temperate | Bpier | 11.2 | |

| 32 | 45 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.53 | [40] | 12 | 30 | 22.7 | 76 | 5 | 2 | south subtropics | Bm | 1.68 | [60] |

| 32 | 45 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.63 | | 12 | 35 | 22.7 | 76 | 5 | 2 | south subtropics | Bm | 1.46 | |

| 32 | 45 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.4 | | 12 | 30 | 22.7 | 76 | 5 | 2 | south subtropics | Bm | 1.63 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.73 | | 12 | 30 | 22.7 | 76 | 5 | 2 | south subtropics | Bm | 1.53 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.93 | | 26 | 25 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bm | 7.6 | https://wenku.baidu.com/view/f66434afce2f0066f53322d8.html?sxts=1575776593501 (accessed on 20 February 2021) |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 9.07 | | 26 | 25 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bm | 9.1 | |

| 32 | 35 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.7 | | 26 | 30 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bm | 9 | |

| 32 | 35 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 9 | | 26 | 25 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bm | 9.5 | |

| 32 | 35 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.6 | | 26 | 25 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bm | 10.2 | |

| 32 | 35 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.83 | | 26 | 20 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpla | 13.5 | |

| 32 | 35 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 9.2 | | 26 | 25 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 9.72 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 9.2 | | 26 | 20 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 8.8 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.63 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 1.9 | https://wenku.baidu.com/view/73a61a52f5335a8102d220b8.html?sxts=1575779109843 (accessed on 20 February 2021) |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.83 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.5 | |

| 32 | 35 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 9.37 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3 | |

| 32 | 45 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.47 | | 12 | 55 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.7 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.9 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 1.5 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 7.97 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3.1 | |

| 32 | 45 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.83 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3.5 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.2 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 7.93 | | 12 | 55 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.5 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 9.23 | | 12 | 55 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3.5 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.83 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.9 | |

| 32 | 40 | 16.2 | 78 | 4 | 2 | north subtropics | Ar | 8.6 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.5 | |

| 41 | 40 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 6 | [41] | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.5 | |

| 41 | 40 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 9 | | 12 | 55 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 1.6 | |

| 41 | 40 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 7 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3.8 | |

| 41 | 40 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 8 | | 12 | 50 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3.5 | |

| 41 | 35 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 6 | | 12 | 35 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 4 | |

| 41 | 35 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 5 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 3.5 | |

| 41 | 35 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 9 | | 12 | 35 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 2.5 | |

| 41 | 30 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 5 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 2.6 | |

| 41 | 30 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 5 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 3.5 | |

| 41 | 45 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 7 | | 12 | 35 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 4 | |

| 41 | 35 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 4 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 4 | |

| 41 | 35 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 6 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 3 | |

| 41 | 35 | 14.5 | 70 | 5 | 2 | warm temperate | Bm | 10 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 3.5 | |

| 16 | 20 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 4 | [42] | 12 | 35 | 17.2 | 77 | 4 | 1 | north subtropics | Bm | 3.9 | |

| 16 | 15 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 4.5 | | 12 | 30 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 3.8 | |

| 16 | 35 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 4 | | 12 | 35 | 17.2 | 77 | 4 | 1 | north subtropics | Bpier | 2.5 | |

| 16 | 20 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 5 | | 20 | 60 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | [61] |

| 16 | 20 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 4 | | 20 | 60 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 16 | 20 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 4.5 | | 20 | 55 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 16 | 30 | 19.9 | 78 | 4 | 2 | mid-subtropics | Bpier | 5.5 | | 20 | 60 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 40 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | [43] | 20 | 50 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 50 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 55 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 30 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 55 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 40 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 50 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 30 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 45 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0.8 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 60 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 40 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 40 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 35 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0.8 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 3 | | 20 | 45 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 4 | | 20 | 35 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 1.4 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 20 | 30 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 2.6 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 20 | 35 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 1.8 | |

| 20 | 45 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 40 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0.2 | |

| 20 | 40 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 20 | 55 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 20 | 40 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 1.8 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 20 | 50 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0.4 | |

| 20 | 45 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 3 | | 20 | 50 | 4.2 | 62 | 6 | 1 | mid temperate zone | Bpier | 0 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 21 | 35 | 12.8 | 55 | 6 | 1 | warm temperate | Bm | 12 | [62] |

| 20 | 40 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 1 | | 21 | 35 | 12.8 | 55 | 6 | 1 | warm temperate | Bm | 10 | |

| 20 | 45 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 21 | 35 | 12.8 | 55 | 6 | 1 | warm temperate | Bm | 9 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 21 | 25 | 12.8 | 55 | 6 | 1 | warm temperate | Bm | 10 | |

| 20 | 35 | 16.6 | 77 | 4 | 2 | mid-subtropics | Ar | 2 | | 21 | 30 | 12.8 | 55 | 6 | 1 | warm temperate | Bpier | 11 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | [44] | 21 | 35 | 12.8 | 55 | 6 | 1 | warm temperate | Bpier | 12 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | | 21 | 30 | 12.8 | 55 | 6 | 1 | warm temperate | Bpier | 14 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 1 | | 21 | 35 | 12.8 | 55 | 6 | 1 | warm temperate | Bpier | 10 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | | 21 | 45 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 13 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | | 21 | 35 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 12 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 1 | | 21 | 40 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 11 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | | 21 | 35 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 8 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 1 | | 21 | 30 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 9 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | | 21 | 25 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 12 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 1 | | 21 | 30 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 13 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 1 | | 21 | 35 | 12.8 | 55 | 6 | 2 | warm temperate | Bm | 10 | |

| 9 | 55 | 16.5 | 79 | 4 | 1 | mid-subtropics | Bm | 0.5 | | 21 | 30 | 12.8 | 55 | 6 | 2 | warm temperate | Bpier | 10 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 3.5 | [45] | 21 | 35 | 12.8 | 55 | 6 | 2 | warm temperate | Bpier | 9 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 5.5 | | 21 | 30 | 12.8 | 55 | 6 | 2 | warm temperate | Bpier | 9 | |

| 15 | 55 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 4 | | 21 | 30 | 12.8 | 55 | 6 | 2 | warm temperate | Bpier | 11 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 5.5 | | 21 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 7 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 7 | | 21 | 30 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 11 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 6.5 | | 21 | 40 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 13 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 5.5 | | 21 | 35 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 9 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 8 | | 16 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 5 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 8.5 | | 16 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 7 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 10.5 | | 16 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 4 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 12 | | 16 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 13 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 11.5 | | 16 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 9 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 8.5 | | 16 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 10 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 6.5 | | 14 | 50 | 12.4 | 57 | 6 | 1 | warm temperate | Bm | 6 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 3.5 | | 14 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bm | 7 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 5.5 | | 14 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bm | 8 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 4 | | 14 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bm | 6 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 5.5 | | 14 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bm | 7 | |

| 15 | 45 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 3.5 | | 14 | 45 | 12.4 | 57 | 6 | 1 | warm temperate | Bm | 10 | |

| 15 | 50 | 12.7 | 63 | 5 | 1 | warm temperate | Bm | 6.5 | | 14 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 11 | |

| 15 | 35 | 12.7 | 63 | 5 | 1 | warm temperate | Bpier | 5 | | 14 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 5 | |

| 15 | 35 | 12.7 | 63 | 5 | 1 | warm temperate | Bpier | 3 | | 14 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 9 | |

| 15 | 40 | 12.7 | 63 | 5 | 1 | warm temperate | Bpier | 5.5 | | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 6 | |

| 15 | 40 | 12.7 | 63 | 5 | 1 | warm temperate | Bpier | 6 | | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 7 | |

| 15 | 35 | 12.7 | 63 | 5 | 1 | warm temperate | Bpier | 3.5 | | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 8 | |

| 15 | 40 | 12.7 | 63 | 5 | 1 | warm temperate | Bpier | 3 | | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 6 | |

| 20 | 25 | 15 | 57 | 6 | 1 | warm temperate | Ar | 6 | [46] | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 9 | |

| 20 | 30 | 15 | 57 | 6 | 1 | warm temperate | Ar | 6 | | 12 | 50 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 7 | |

| 20 | 25 | 15 | 57 | 6 | 1 | warm temperate | Ar | 6 | | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 8 | |

| 31 | 45 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 11.3 | [47] | 12 | 55 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 6 | |

| 31 | 35 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 10.8 | | 9 | 55 | 12.7 | 54 | 6 | 1 | warm temperate | Bpier | 2 | |

| 31 | 40 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 10.7 | | 9 | 55 | 12.7 | 54 | 6 | 1 | warm temperate | Bpier | 1 | |

| 31 | 40 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 8.7 | | 9 | 55 | 12.7 | 54 | 6 | 1 | warm temperate | Bpier | 2 | |

| 31 | 55 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bpier | 12.3 | | 9 | 55 | 12.7 | 54 | 6 | 1 | warm temperate | Bpier | 1 | |

| 31 | 50 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bpier | 10.3 | | 9 | 55 | 12.7 | 54 | 6 | 1 | warm temperate | Bpier | 1 | |

| 31 | 25 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 13.7 | | 9 | 55 | 12.7 | 54 | 6 | 1 | warm temperate | Bpier | 1 | |

| 31 | 45 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 11.5 | | 7 | 55 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 2 | |

| 31 | 50 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 7.7 | | 7 | 50 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 3 | |

| 31 | 45 | 17.5 | 80 | 4 | 2 | mid-subtropics | Bm | 11.3 | | 7 | 55 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 2 | |

| 31 | 40 | 17.5 | 80 | 4 | 2 | mid-subtropics | Ar | 11 | | 7 | 50 | 12.7 | 56 | 6 | 2 | warm temperate | Bpier | 3 | |

| 31 | 50 | 17.5 | 80 | 4 | 2 | mid-subtropics | Ar | 11.3 | | 7 | 55 | 12.7 | 56 | 6 | 2 | warm temperate | Bpier | 3 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | [48] | 7 | 50 | 12.7 | 56 | 6 | 2 | warm temperate | Bpier | 4 | [62] |

| 19 | 20 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 8 | 60 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 2 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 8 | 60 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 3 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 8 | 60 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 2 | |

| 19 | 20 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 8 | 60 | 12.4 | 57 | 6 | 1 | warm temperate | Bpier | 3 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 6 | 55 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 5 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 6 | 55 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 4 | |

| 19 | 20 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 6 | 55 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 5 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 6 | 55 | 12.7 | 56 | 6 | 1 | warm temperate | Bpier | 5 | |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 18 | 25 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 13 | [63] |

| 19 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 18 | 30 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 12 | |

| 19 | 20 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 2 | | 18 | 30 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 15 | |

| 38 | 30 | 15.3 | 77 | 4 | 2 | north subtropics | Bm | 5 | | 18 | 15 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 16 | |

| 31 | 25 | 15.3 | 77 | 4 | 2 | north subtropics | Ar | 5.5 | | 18 | 25 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 16 | |

| 21 | 30 | 15.3 | 77 | 4 | 2 | north subtropics | Ar | 5 | | 18 | 25 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 18 | |

| 10 | 30 | 22.1 | 77 | 4 | 1 | south subtropics | Bm | 10.5 | [49] | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 17 | |

| 10 | 50 | 22.1 | 77 | 4 | 1 | south subtropics | Bm | 7.5 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 20 | |

| 28 | 30 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 7 | [50] | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 18 | |

| 28 | 35 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 11.5 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bm | 18 | |

| 28 | 30 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 14.5 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bpier | 16 | |

| 28 | 35 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 18.5 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bpier | 20 | |

| 28 | 35 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 18.5 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bpier | 20 | |

| 28 | 30 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 20 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bpier | 19 | |

| 28 | 30 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 22 | | 18 | 20 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bpier | 17 | |

| 28 | 25 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 22.5 | | 18 | 25 | 17.2 | 78 | 5 | 1 | mid-subtropics | Bpla | 16 | |

| 28 | 25 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 25 | | 15 | 50 | 13 | 71 | 5 | 1 | warm temperate | Bm | 1.96 | [64] |

| 28 | 25 | 18.1 | 79 | 4 | 2 | mid-subtropics | Ar | 26.5 | | 15 | 50 | 13 | 71 | 5 | 1 | warm temperate | Bm | 1.71 | |

| 37 | 45 | 11.3 | 65 | 5 | 2 | warm temperate | Ar | 3.6 | [51] | 15 | 45 | 13 | 71 | 5 | 1 | warm temperate | Bm | 1.51 | |

| 37 | 45 | 11.3 | 65 | 5 | 2 | warm temperate | Ar | 4.5 | | 15 | 45 | 13 | 71 | 5 | 1 | warm temperate | Bm | 1.5 | |

| 37 | 40 | 11.3 | 65 | 5 | 2 | warm temperate | Ar | 3.7 | | 15 | 50 | 13 | 71 | 5 | 1 | warm temperate | Bm | 1.39 | |

| 37 | 40 | 11.3 | 65 | 5 | 2 | warm temperate | Ar | 3.2 | | 15 | 40 | 13 | 71 | 5 | 1 | warm temperate | Bm | 1.88 | |

| 37 | 35 | 11.3 | 65 | 5 | 2 | warm temperate | Ar | 3.3 | | 35 | 25 | 9.5 | 51 | 6 | 2 | mid temperate zone | Bm | 49.87 | [65] |

| 37 | 45 | 11.3 | 65 | 5 | 2 | warm temperate | Ar | 4.4 | | 35 | 30 | 9.5 | 51 | 6 | 2 | mid temperate zone | Bm | 45.46 | |

| 10 | 30 | 15.3 | 77 | 4 | 1 | north subtropics | Bm | 6.5 | [52] | 35 | 25 | 9.5 | 51 | 6 | 2 | mid temperate zone | Bm | 45.99 | |

| 10 | 25 | 15.3 | 77 | 4 | 1 | north subtropics | Bm | 5 | | 35 | 25 | 9.5 | 51 | 6 | 2 | mid temperate zone | Bm | 49.79 | |

| 10 | 30 | 15.3 | 77 | 4 | 1 | north subtropics | Bm | 7.5 | | 13 | 25 | 24 | 78 | 5 | 1 | edge tropics | Bpier | 33.11 | [66] |

| 10 | 30 | 15.3 | 77 | 4 | 1 | north subtropics | Bm | 1 | | 13 | 40 | 23 | 80 | 5 | 1 | edge tropics | Bm | 24.75 | |

| 10 | 15 | 15.3 | 77 | 4 | 1 | north subtropics | Bm | 3 | | 12 | 50 | 23 | 80 | 5 | 1 | edge tropics | Bm | 17.02 | |

| 10 | 15 | 15.3 | 77 | 4 | 1 | north subtropics | Bpier | 6 | | 11 | 50 | 24 | 80 | 5 | 1 | edge tropics | Bm | 15.2 | |

| 10 | 35 | 15.3 | 77 | 4 | 1 | north subtropics | Bpier | 3.5 | | 11 | 25 | 23 | 80 | 5 | 1 | edge tropics | Bpla | 21.14 | |

| 10 | 25 | 15.3 | 77 | 4 | 1 | north subtropics | Bpier | 5.5 | | 13 | 40 | 26 | 80 | 5 | 1 | edge tropics | Bm | 25.69 | |

| 10 | 40 | 15.3 | 77 | 4 | 1 | north subtropics | Bpier | 2 | | 13 | 50 | 26 | 80 | 5 | 1 | edge tropics | Bm | 21.36 | |

| 10 | 30 | 15.3 | 77 | 4 | 1 | north subtropics | Bpier | 5 | | 10 | 40 | 22 | 86 | 5 | 1 | edge tropics | Bm | 7.1 | |

| 59 | 35 | 12.7 | 62 | 6 | 2 | warm temperate | Bm | 47 | [53] | 15 | 40 | 26 | 80 | 5 | 1 | edge tropics | Bm | 21 | |

| 59 | 30 | 12.7 | 62 | 6 | 2 | warm temperate | Bm | 42 | | 9 | 30 | 26 | 80 | 5 | 1 | edge tropics | Bpier | 31 | |

| 59 | 30 | 12.7 | 62 | 6 | 2 | warm temperate | Bm | 35 | | 14 | 30 | 26 | 82 | 5 | 1 | edge tropics | Bpier | 15.7 | |

| 15 | 15 | 25.9 | 78 | 5 | 1 | edge tropics | Bm | 7 | [54] | 11 | 25 | 26 | 82 | 5 | 1 | edge tropics | Bpier | 17 | |

| 15 | 20 | 25.9 | 78 | 5 | 1 | edge tropics | Bm | 7.5 | | 13 | 30 | 26 | 82 | 5 | 1 | edge tropics | Bpier | 11.2 | |

| 15 | 25 | 25.9 | 78 | 5 | 1 | edge tropics | Bm | 6 | | 1 | 30 | 22 | 82 | 5 | 1 | edge tropics | Bpier | 3 | |

| 11 | 25 | 13 | 65 | 6 | 2 | warm temperate | Bm | 9.7 | [55] | 13 | 30 | 26 | 78 | 5 | 1 | edge tropics | Bpier | 35.6 | |

| 7 | 55 | 21.8 | 83 | 6 | 1 | edge tropics | Bm | 1.32 | [56] | 30 | 20 | 13 | 71 | 5 | 1 | warm temperate | Bm | 10 | [67] |

| 7 | 50 | 21.8 | 83 | 6 | 1 | edge tropics | Bm | 3.18 | | 30 | 15 | 13 | 71 | 5 | 1 | warm temperate | Bm | 34 | |

| 7 | 55 | 21.8 | 83 | 6 | 1 | edge tropics | Bm | 1.39 | | 30 | 15 | 13 | 71 | 5 | 1 | warm temperate | Bm | 22 | |

| 7 | 50 | 21.8 | 83 | 6 | 1 | edge tropics | Bm | 0.62 | | 28 | 55 | 16.1 | 71 | 5 | 1 | north subtropics | Bm | 7 | [68] |

| 7 | 40 | 21.8 | 83 | 6 | 1 | edge tropics | Bm | 2.19 | | 28 | 60 | 16.1 | 71 | 5 | 1 | north subtropics | Bm | 7.5 | |

| 7 | 40 | 21.8 | 83 | 6 | 1 | edge tropics | Bm | 1.01 | | 28 | 35 | 16.1 | 71 | 5 | 1 | north subtropics | Bpier | 23 | |

1 “Ar” represents arch ring; “Bm” represents beam; “Bpier” represents bridge pier; “Bpla” represents bridge platform.

References

- Ahmad, S. Reinforcement corrosion in concrete structures, its monitoring and service life prediction—A review. Cem. Concr. Compos. 2003, 25, 459–471.

- Liu, B.; Qin, J.; Shi, J.; Jiang, J.; Wu, X.; He, Z. New perspectives on utilization of CO2 sequestration technologies in cement-based materials. Constr. Build. Mater. 2020, 121660.

- Papadakis, V.G.; Vayenas, C.G. Experimental investigation and mathematical modeling of the concrete carbonation problem. Chem. Eng. Sci. 1991, 46, 1333–1338.

- Saetta, A.V. The carbonation of concrete and the mechanisms of moisture, heat and carbon dioxide flow through porous materials. Cem. Concr. Res. 1993, 23, 761–772.

- Ta, V.L.; Bonnet, S.; Kiesse, T.S.; Ventura, A. A new meta-model to calculate carbonation front depth within concrete structures. Constr. Build. Mater. 2016, 129, 172–181.

- Salehi, H.; Burgueno, R. Emerging artificial intelligence methods in structural engineering. Eng. Struct. 2018, 171, 170–189.

- Stone, J.; Blockley, D.; Pilsworth, B. Towards machine learning from case histories. Civ. Eng. Syst. 1989, 6, 129–135.

- Liu, C.; Liu, J.; Liu, L. Study on the damage identification of long-span cable-stayed bridge based on support vector machine. In Proceedings of the International Conference on Information Engineering and Computer Science, Wuhan, China, 19–20 December 2009.

- Figueiredo, E.; Park, G.; Farrar, C.R.; Worden, K.; Figueiras, J. Machine learning algorithms for damage detection under operational and environmental variability. Struct. Health Monit. 2011, 10, 559–572.

- Bartram, G.; Mahadevan, S. System Modeling for SHM Using Dynamic Bayesian Networks; Infotech Aerospace: Garden Grove, CA, USA, 2012.

- Son, H.; Kim, C. Automated color model–based concrete detection in construction-site images by using machine learning algorithms. J. Comput. Civ. Eng. 2011, 26, 421–433.

- Dai, H.; Zhao, W.; Wang, W.; Cao, Z. An improved radial basis function network for structural reliability analysis. J. Mech. Sci. Technol. 2011, 25, 2151–2159.

- Lu, N.; Noori, M.; Liu, Y. Fatigue reliability assessment of welded steel bridge decks under stochastic truck loads via machine learning. J. Bridge Eng. 2016, 22, 1–12.

- Oh, C.K. Bayesian Learning for Earthquake Engineering Applications and Structural Health Monitoring. Ph.D. Thesis, California Institute of Technology, Pasadena, CA, USA, 2008.

- Alimoradi, A.; Beck, J.L. Machine-learning methods for earthquake ground motion analysis and simulation. J. Eng. Mech. 2015, 141, 04014147.

- Rafiei, M.H.; Adeli, H. NEEWS: A novel earthquake early warning model using neural dynamic classification and neural dynamic optimization. Soil Dyn. Earthq. Eng. 2017, 100, 417–427.

- Xu, C.; Yun, S.; Shu, Y. Concrete strength inspection conversion model based on SVM. J. Luoyang Inst. Sci. Technol. Nat. Sci. Ed. 2008, 2, 84–86. (In Chinese)

- Chen, B.; Guo, X.; Liu, G. Prediction of concrete properties based on rough sets and support vector machine method. J. Hydroelectr. Eng. 2011, 6, 251–257.

- Chen, B.T.; Chang, T.P.; Shih, J.Y.; Wang, J.J. Estimation of exposed temperature for fire-damaged concrete using support vector machine. Comput. Mater. Sci. 2009, 44, 913–920.

- Aiyer, B.G.; Kim, D.; Karingattikkal, N.; Samui, P.; Rao, P.R. Prediction of compressive strength of self-compacting concrete using least square support vector machine and relevance vector machine. KSCE J. Civ. Eng. 2014, 18, 1753–1758.

- Topçu, İ.B.; Sarıdemir, M. Prediction of compressive strength of concrete containing fly ash using artificial neural networks and fuzzy logic. Comput. Mater. Sci. 2008, 41, 305–311.

- Bilim, C.; Atiş, C.D.; Tanyildizi, H.; Karahan, O. Predicting the compressive strength of ground granulated blast furnace slag concrete using artificial neural network. Adv. Eng. Softw. 2009, 40, 334–340.

- Sarıdemir, M.; Topçu, İ.B.; Özcan, F.; Severcan, M.H. Prediction of long-term effects of GGBFS on compressive strength of concrete by artificial neural networks and fuzzy logic. Constr. Build. Mater. 2009, 23, 1279–1286.

- Golafshani, E.M.; Behnood, A.; Arashpour, M. Predicting the compressive strength of normal and High-Performance Concretes using ANN and ANFIS hybridized with Grey Wolf Optimizer. Constr. Build. Mater. 2020, 232, 117266.

- Kandiri, A.; Golafshani, E.M.; Behnood, A. Estimation of the compressive strength of concretes containing ground granulated blast furnace slag using hybridized multi-objective ANN and salp swarm algorithm. Constr. Build. Mater. 2020, 248, 118676.

- Han, Q.; Gui, C.; Xu, J.; Lacidogna, G. A generalized method to predict the compressive strength of high-performance concrete by improved random forest algorithm. Constr. Build. Mater. 2019, 226, 734–742.

- Čehovin, L.; Bosnić, Z. Empirical evaluation of feature selection methods in classification. Intell. Data Anal. 2010, 14, 265–281.

- Lunetta, K.L.; Hayward, L.B.; Segal, J.; Van Eerdewegh, P. Screening large-scale association study data: Exploiting interactions using random forests. BMC Genet. 2004, 5, 1–13.

- Ma, J.; Li, S.; Qin, H.; Hao, A. Adaptive appearance modeling via hierarchical entropy analysis over multi-type features. Pattern Recognit. 2019, 98, 107059.

- Domínguez-Jiménez, J.A.; Campo-Landines, K.C.; Martínez-Santos, J.C.; Delahoz, E.J.; Contreras-Ortiz, S.H. A machine learning model for emotion recognition from physiological signals. Biomed. Signal Process. Control 2020, 65, 101646.

- Choubin, B.; Abdolshahnejad, M.; Moradi, E.; Querol, X.; Mosavi, A.; Shamshirband, S.; Ghamisi, P. Spatial hazard assessment of the PM10 using machine learning models in Barcelona. Sci. Total Environ. 2020, 701, 134474.

- Zhang, C.; Cao, L.; Romagnoli, A. On the feature engineering of building energy data mining. Sustain. Cities Soc. 2018, 39, 508–518.

- Yuan, P.; Lin, D.; Wang, Z. Coal consumption prediction model of space heating with feature selection for rural residences in severe cold area in China. Sustain. Cities Soc. 2019, 50, 101643.

- Zheng, L.; Cheng, H.; Huo, L.; Song, G. Monitor concrete moisture level using percussion and machine learning. Constr. Build. Mater. 2019, 229, 117077.

- Azimi-Pour, M.; Eskandari-Naddaf, H.; Pakzad, A. Linear and non-linear SVM prediction for fresh properties and compressive strength of high volume fly ash self-compacting concrete. Constr. Build. Mater. 2020, 230, 117021.

- Feng, D.C.; Liu, Z.T.; Wang, X.D.; Chen, Y.; Chang, J.Q.; Wei, D.F.; Jiang, Z.M. Machine learning-based compressive strength prediction for concrete: An adaptive boosting approach. Constr. Build. Mater. 2019, 230, 117000.

- Wu, X.; Kumar, V.; Quinlan, J.R.; Ghosh, J.; Yang, Q.; Motoda, H.; McLachlan, G.J.; Ng, A.; Liu, B.; Philip, S.Y.; et al. Top 10 algorithms in data mining. Knowl. Inf. Syst. 2008, 14, 1–37.

- Hu, T. Inspection and Evaluation of Reinforced Concrete Arch Bridges. Master’s Thesis, Southwest Jiaotong University, Chengdu, China, 2012. (In Chinese).

- Li, C. Research on Inspection, Evaluation and Reinforcement Technology of Existing Reinforced Concrete Arch Bridges. Master’s Thesis, Southwest Jiaotong University, Chengdu, China, 2011. (In Chinese).

- Zhang, J. Study on Durability Evaluation Technology of In-Service Hyperbolic Arch Bridge. Master’s Thesis, Shandong University of Science and Technology, Qingdao, China, 2007. (In Chinese).

- Hu, J.; Wu, J.; Tang, J. Inspection and strengthening of a reinforced concrete truss arch bridge. J. Suzhou Univ. Sci. Technol. 2017, 30, 66–68. (In Chinese).

- Zhang, J. Research on Health Inspection and Reinforcement Technology of Existing Concrete Bridges. Master’s Thesis, Southwest Jiaotong University, Chengdu, China, 2013. (In Chinese).

- Yu, H. Inspection and Evaluation of Existing Bridges. Master’s Thesis, Southwest Jiaotong University, Chengdu, China, 2010. (In Chinese).

- Lu, X. Detection and Evaluation of Bearing Capacity of Existing Concrete Bridges. Master’s Thesis, Southwest Jiaotong University, Chengdu, China, 2016. (In Chinese).

- Ding, Y. Durability Evaluation of Qilin High Speed Concrete Bridges in Service and Analysis of Seismic Capability after Deterioration. Master’s Thesis, Xian University of Architecture and Technology, Xian, China, 2017.

- Liu, D. Analysis of Bearing Capacity of Existing Reinforced Concrete Arch Bridges. Master’s Thesis, Harbin Institute of Technology, Harbin, China, 2016. (In Chinese).

- Hou, H. Performance Assessment of Existing Concrete Bridge. Master’s Thesis, Chongqing Jiaotong University, Chongqing, China, 2013. (In Chinese).

- Sun, T. Study on Detection and Reinforcement of Old Bridges in Guiyang city. Master’s Thesis, Tianjin University, Tianjin, China, 2004. (In Chinese).

- Liu, J. Durability Assessment and Prediction of Concrete Bridges. Master’s Thesis, Hunan University, Changsha, China, 2014. (In Chinese).

- Zhong, H. Research on Durability Test and Reliability Evaluation of Existing Reinforced Concrete Bridge Members. Master’s Thesis, Changsha University of Science and Technology, Changsha, China, 2004. (In Chinese).

- Han, L. Study on Durability Evaluation and Maintenance Scheme of Reinforced Concrete Bridge. Master’s Thesis, Dalian University of Technology, Dalian, China, 2007. (In Chinese).

- An, Z. Research and Application of Bridge Inspection and Evaluation. Master’s Thesis, Guizhou University, Guiyang, China, 2009. (In Chinese).

- Zhang, J. Reliability Analysis and Residual Life of RC Bridges in Service. Master’s Thesis, Hebei University of Technology, Tianjin, China, 2014. (In Chinese).

- Xie, L. Durability Evaluation and Residual Life Prediction of In-Service Reinforced Concrete Bridges. Master’s Thesis, Wuhan University of Technology, Wuhan, China, 2009. (In Chinese).

- Ren, F. Study on Durability Evaluation of Reinforced Concrete Bridges. Master’s Thesis, Shandong Polytechnic University, Jinan, China, 2000. (In Chinese).

- Gao, X. Evaluation and Improvement of Carbonation Durability for Concrete Bridges. Master’s Thesis, Dalian University of Technology, Dalian, China, 2018. (In Chinese).

- Jiang, Y. Study on Structural Performance Evaluation and Reinforcement Technology of a Highway Hyperbolic Arch Bridge. Master’s Thesis, South China University of Technology, Guangzhou, China, 2009. (In Chinese).

- Zhang, B. Research on the Durability Evaluation Method of Reinforced Concrete Girder Structure Based on Fuzzy Theory. Master’s Thesis, Jilin University, Changchun, China, 2015. (In Chinese).

- Cai, E. Study on a New Comprehensive Evaluation Method for Durability of Existing Reinforced Concrete Bridges. Master’s Thesis, Tianjin University, Tianjin, China, 2007. (In Chinese).

- Dong, C. Fuzzy Comprehensive Evaluation of Concrete Bridge Durability. Master’s Thesis, Wuhan University of Technology, Wuhan, China, 2004. (In Chinese).

- Zhang, B. Technical Research on Inspection and Evaluation of Qinghong Bridge. Master’s Thesis, Liaoning Technical University, Fuxin, China, 2005. (In Chinese).

- Bao, Q. Research on the Durability of Reinforced Concrete Bridges in Beijing. Master’s Thesis, Beijing University of Technology, Beijing, China, 2003. (In Chinese).

- Guo, D. Prediction of Performance Degradation and Residual Service Life of In-Service R.C. Bridges in Coastal Areas. Master’s Thesis, Zhejiang University, Zhejiang, China, 2014. (In Chinese).

- Li, F. Analysis on the Present Situation of Prestressed Concrete Bridge Structure in Service and Evaluation of Residual Bearing Capacity. Master’s Thesis, Qingdao Technological University, Qingdao, China, 2010. (In Chinese).

- Zhang, C. Study on Durability Test of Hongtu Bridge. Master’s Thesis, Northeastern University, Shenyang, China, 2005. (In Chinese).

- Dai, Y. Damage Analysis and Reinforcement Technology of Small and Medium-Sized Bridges in Hainan Province. Master’s Thesis, Southeast University, Nanjing, China, 2012. (In Chinese).

- Zhao, L. Detection and Evaluation of Durability of R.C. Bridge under Marine Environment. Master’s Thesis, Qingdao Technological University, Qingdao, China, 2010. (In Chinese).

- Su, Y. Reinforcement Based on Diseases Detection with Analysis in Bridge of Huaihe River Bridge. Master’s Thesis, AnHui University of Science and Technology, Huainan, China, 2013. (In Chinese).

- College of Urban and Environmental Science, Peking University. Climatic zoning map of China; Geographic Data Sharing Infrastructure. Available online: http://geodata.pku.edu.cn (accessed on 20 February 2021).

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Poul, A.K.; Shourian, M.; Ebrahimi, H. A Comparative Study of MLR, KNN, ANN and ANFIS Models with Wavelet Transform in Monthly Stream Flow Prediction. Water Resour. Manag. 2019, 33, 2907–2923.

- Rafiei, M.H.; Adeli, H. A novel machine learning-based algorithm to detect damage in high-rise building structures. Struct. Des. Tall Spec. Build. 2017, 26, e1400.

- Chen, T.; Guestrin, C. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

- Hu, G.; Liu, L.; Tao, D.; Song, J.; Tse, K.T.; Kwok, K.C.S. Deep learning-based investigation of wind pressures on tall building under interference effects. J. Wind Eng. Ind. Aerodyn. 2020, 201, 104138.

- Pei, L.; Sun, Z.; Yu, T.; Li, W.; Hao, X.; Hu, Y.; Yang, C. Pavement aggregate shape classification based on extreme gradient boosting. Constr. Build. Mater. 2020, 256, 119356.

- Dong, E.; Li, C.; Li, L.; Du, S.; Belkacem, A.N.; Chen, C. Classification of multi-class motor imagery with a novel hierarchical SVM algorithm for brain-computer interfaces. Med. Biol. Eng. Comput. 2017, 55, 1809–1818.

- López, J.; Maldonado, S. Multi-class second-order cone programming support vector machines. Inf. Sci. 2016, 330, 328–341.

- Zhang, R.; Huang, G.B.; Sundararajan, N.; Saratchandran, P. Multicategory Classification Using an Extreme Learning Machine for Microarray Gene Expression Cancer Diagnosis. IEEE/ACM Trans. Comput. Biol. Bioinform. 2007, 4, 485–495.

- Burges, C.J.C. A tutorial on support vector machines for pattern recognition. Data Min. Knowl. Discov. 1998, 2, 121–167.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).