Abstract

Meaning conflation deficiency (MCD) presents a continual obstacle in natural language processing (NLP), especially for low-resourced and morphologically complex languages, where polysemy and contextual ambiguity diminish model precision in word sense disambiguation (WSD) tasks. This paper examines the optimisation of contextual embedding models, namely XLNet, ELMo, BART, and their improved variations, to tackle MCD in linguistic settings. Utilising Sesotho sa Leboa as a case study, researchers devised an enhanced XLNet architecture with specific hyperparameter optimisation, dynamic padding, early termination, and class-balanced training. Comparative assessments reveal that the optimised XLNet attains an accuracy of 91% and exhibits balanced precision–recall metrics of 92% and 91%, respectively, surpassing both its baseline counterpart and competing models. Optimised ELMo attained the greatest overall metrics (accuracy: 92%, F1-score: 96%), whilst optimised BART demonstrated significant accuracy improvements (96%) despite a reduced recall. The results demonstrate that fine-tuning contextual embeddings using MCD-specific methodologies significantly improves semantic disambiguation for under-represented languages. This study offers a scalable and flexible optimisation approach suitable for additional low-resource language contexts.

1. Introduction

Meaning conflation deficiency (MCD) arises when word representations fail to distinguish between the multiple senses of polysemous words [1]. This is a persistent challenge in low-resourced, morphologically rich languages such as Sesotho sa Leboa (SsL), where contextual cues are crucial for meaning determination. Traditional static embeddings (e.g., Word2Vec) conflate senses into a single vector, limiting performance in tasks like machine translation and semantic parsing [1]. Word sense disambiguation (WSD)—the process of assigning the correct meaning to a word in context—has been extensively studied for high-resource languages but remains difficult for languages with limited corpora [2,3]. SsL poses additional challenges due to its complex morphology and syntax [4]. Approaches often use semantic features and surrounding words for sense classification, with Bayesian models applied to polysemous terms [5]. However, the performance of WSD systems in morphologically rich, low-resourced languages is highly dependent on corpus availability [6].

The proposed system advances semantic granularity beyond standard contextual embeddings by leveraging a sense-annotated Sesotho sa Leboa corpus that explicitly maps polysemous words to their correct senses in context. This targeted data design introduces clear semantic boundaries that generic pretrained models, which rely solely on implicit distributional context, often fail to capture. Task-specific optimisation—through hyperparameter tuning, dynamic padding, and early stopping—further refines the model to prioritise fine-grained sense separation over general language modelling. Additionally, target word highlighting using markup tags directs the model’s attention to relevant local context, reducing sense blending and improving disambiguation precision.

The system also employs class-balanced training to ensure rare senses are robustly represented, enhancing the ability to distinguish between frequent and infrequent meanings. Comparative results demonstrate significant improvements over baselines, with optimised XLNet achieving a 91% F1-score and accuracy compared to the baseline’s 86% F1-score and 80% accuracy, and optimised ELMo reaching a 96% F1-score and 92% accuracy. These gains reflect the framework’s capacity to capture nuanced meaning distinctions and address MCD effectively. Although developed for Sesotho sa Leboa, its methodology—combining sense-focused corpus design, context-aware input structuring, and optimisation for disambiguation—is transferable to other low-resourced languages, offering a scalable approach to enhancing semantic granularity in under-represented linguistic contexts.

Lexical ambiguity, largely caused by polysemy, can lead to misinterpretation in NLP outputs [7]. For example, in SsL, noka can mean “river,” “hip,” or “seasoning,” depending on context. Addressing such ambiguity can improve NLP accuracy, especially for languages with many polysemous terms. This study implements the first WSD system for SsL, using Naive Bayes, Logistic Regression, and Support Vector Machines on an ambiguous corpus, achieving encouraging results despite the absence of a large, high-quality dataset. In this work, “optimised ELMo” refers to corpus-specific and hyperparameter-tuned ELMo embeddings with selective layer fine-tuning for WSD, while “optimised BART” denotes a domain-adaptive, parameter-efficient fine-tuning of BART on sense-annotated Sesotho sa Leboa data to maximise fine-grained semantic separation. For ELMo, optimisation focused on leveraging the Sesotho sa Leboa corpus, selective layer updating, and sense-aware training to better capture fine-grained morphological and semantic cues. For BART, domain-adaptive pretraining, parameter-efficient fine-tuning, and task-specific classification heads were employed to separate closely related senses in context. Through this combination of corpus adaptation, targeted hyperparameter tuning, and task-specific fine-tuning, both models move beyond generic contextual embeddings and achieve finer semantic resolution, enabling more precise sense separation in a low-resource, morphologically rich language setting.

The problem is stated as: “In morphologically rich, low-resource languages such as Sesotho sa Leboa, standard contextual embeddings (e.g., BERT, RoBERTa, ELMo) often fail to capture fine-grained semantic distinctions, resulting in meaning conflation deficiency (MCD), where closely related senses are merged into a single representation. This limits the accuracy of word sense disambiguation (WSD) and hinders downstream NLP applications.”

Word sense disambiguation (WSD) in morphologically rich, low-resource languages such as Sesotho sa Leboa faces persistent challenges due to meaning conflation deficiency (MCD), where closely related senses are collapsed into a single representation. Standard contextual embeddings like BERT, RoBERTa, and ELMo, while effective for high-resource languages, often lack the semantic granularity needed to capture fine-grained distinctions in such linguistic settings. This research addresses the problem by hypothesising that a framework integrating multi-prototype embeddings, domain-adaptive transformer models, and sense-aware fine-tuning can significantly improve fine-grained sense separation compared to baseline embeddings. The central research question guiding this work is the following: How can a meaning conflation deficiency resolution framework, combining multi-sense embeddings and optimised transformer-based models, enhance semantic granularity and WSD performance in Sesotho sa Leboa?

Meaning conflation deficiency (MCD) and traditional word sense disambiguation (WSD) are related but distinct concepts within natural language processing. Traditional WSD focuses on identifying the correct sense of a word in context, assuming a fixed, often discrete, set of senses typically derived from lexical resources. However, MCD addresses a deeper, underlying limitation of word embeddings and semantic representations: the inability to distinguish multiple distinct meanings of a polysemous word within a single embedding vector, which causes meaning conflation.

In this research, MCD is treated as a subset of the broader WSD problem: resolving conflated meanings is a prerequisite for effective sense disambiguation, especially when embeddings fail to capture sense distinctions. Whereas traditional WSD relies on predefined sense inventories and discrete sense assignments, MCD concerns the representation itself, particularly word embeddings in which several distinct meanings of a word are collapsed into a single vector. This conflation hinders the accurate capture of the semantic nuances that disambiguation tasks depend on.

Within a supervised learning framework [4,8], we evaluate static embeddings (Word2Vec, Doc2Vec) alongside contextual and hybrid models (ELMo, BART, XLNet) on a sense-annotated SsL corpus. Findings show that optimised contextual embeddings outperform static models, with optimised ELMo reaching an F1-score of 96% and 92% accuracy, and optimised XLNet demonstrating balanced precision–recall performance. These results highlight the importance of contextually optimised models in addressing MCD and advancing NLP for under-represented languages.

2. Related Literature

Sesotho sa Leboa (SsL) poses two principal linguistic obstacles for computational models: its agglutinative morphology and its semantic sparsity. Agglutinative morphology generates intricate word forms by concatenating noun-class prefixes, concords, and verbal extensions (including causative, applicative, and passive), leading to an extensive array of surface forms and long-distance agreement dependencies. Semantic sparsity results from the infrequent occurrence of several sense-bearing morphological combinations, exacerbated by elevated levels of polysemy; for instance, the term noka may signify “river,” “hip,” or “seasoning”, contingent upon the context. These characteristics impose significant pressure on tokenisation, since subword or BPE segmentation may either split morphemes apart, thereby obscuring meaning, or merge them, thereby concealing critical semantic indicators.

The models examined in this study tackle these difficulties via distinct but complementary approaches. ELMo integrates character-level convolutional networks with bidirectional LSTMs, making it particularly effective for agglutinative languages by constructing word representations from morpheme-level features, thereby retaining affixes that indicate noun class, tense, and voice. This design identifies both local and medium-range agreement patterns indicative of word meaning, achieving the greatest empirical performance in this investigation (F1-score 96%, accuracy 92%). BART’s transformer architecture, utilising multi-head attention, consolidates distributed morphological signals, and its denoising pretraining enhances resilience to orthographic variation and morphological noise; however, its conservative predictions result in diminished recall for rare senses (F1-score 85%, accuracy 96%). XLNet’s permutation-based language modelling is especially beneficial for semi-supervised learning, as it conditions on both left and right contexts without masking, enabling the integration of morphological information from affixes next to the target word and the capturing of long-distance concord patterns. The two-stream attention technique enhances sense selection by differentiating content and query representations, resulting in significant increases upon optimisation (F1-score increasing from 86% to 91%, accuracy from 80% to 91%).

Attention mechanisms and permutation-based modelling directly facilitate the disambiguation process in semi-supervised learning. Attention mechanisms may concentrate on morphologically significant positions, such as noun-class prefixes or verbal extensions, whereas permutation training encapsulates agreement patterns that extend across several tokens. The proposed method enhances semantic granularity beyond conventional contextual embeddings by integrating sense-annotated training data, target-word highlighting to direct attention, class-balanced optimisation to preserve minority senses, and careful tokenisation that preserves affix integrity. Character-aware composition in ELMo proficiently identifies morphological markers, while the attention and permutation techniques in BART and XLNet associate these markers with their clarifying contexts, alleviating the effects of agglutinative complexity and sparse sense distributions.

This section highlights a significant weakness of traditional word-embedding models: their failure to effectively distinguish between the several meanings of a polysemous word, despite the presence of these unique senses in the training corpus [9]. This problem, known as meaning conflation deficiency, considerably impairs the semantic comprehension capacities of natural language processing systems. This issue occurs because conventional static word embeddings allocate a singular vector representation to each word, regardless of the particular context in which it is used, therefore conflating all potential meanings into one [10]. Thus, this conflation may result in erroneous semantic interpretations of input text, eventually impairing the efficacy of subsequent NLP applications that depend on these representations [10].

This problem is especially evident in languages with complex morphology, where a single word form may convey several meanings and grammatical roles based on its affixes and context, hence increasing the difficulty of precise semantic representation [10]. The term “lewa” may denote a cave, cooked corn, or a spread of divine bones, and a static embedding model will allocate the same vector to all three interpretations, neglecting the contextual subtleties [9]. This conflation substantially restricts these models’ capacity to recognise nuanced semantic differences, therefore affecting tasks that need detailed comprehension [11]. To address this, sophisticated word-embedding algorithms seek to encapsulate contextual information, hence facilitating the creation of unique representations for various word meanings [10].

This method, commonly referred to as multi-prototype embedding or sense representation, aims to rectify the issue of meaning conflation by producing multiple distinct vector representations for a single word, each aligned with a specific sense or contextual application [9]. Meaning conflation is significant because it can violate the triangle inequality of Euclidean spaces: the single vector of a polysemous word pulls the otherwise unrelated neighbours of its different senses close together, which may diminish the efficacy of word space models [9]. The difficulty is exacerbated in morphologically rich languages, where a single lexical item might exhibit many surface forms, each possibly conveying different semantic or grammatical meanings based on the attached morphemes [12]. This trait requires advanced methodologies for word embedding that can separate the lexical form from its contextual meaning, transcending static representations [13]. The intrinsic constraint of traditional word embeddings, which amalgamate disparate meanings into a singular vector, is a considerable obstacle for NLP systems striving for profound semantic comprehension, especially in contexts involving polysemy [9].

2.1. XLNet (Extra Long Net)

XLNet [1] is a neural language model developed as an enhancement of the widely used transformer-based model BERT, to mitigate some of BERT’s shortcomings. BERT captures bidirectional contextual information in text, but it does so through masked language modelling: the artificial [MASK] tokens used during pretraining never appear during fine-tuning, and the masked tokens are predicted independently of one another. XLNet instead employs a permutation-based approach to language modelling, which lets an autoregressive model learn from many factorisation orders of the input sequence and thereby condition on context from both sides. The model is pretrained without labels on an extensive text corpus and then fine-tuned with supervision for particular downstream NLP applications [1]. XLNet uses the Transformer-XL architecture and employs permutation language modelling (PLM) as its only pretraining objective. For tokenisation, XLNet employs SentencePiece, an open-source subword tokeniser closely related to WordPiece [14]. The permutation language model [15] acquires bidirectional contextual information via factorisation order and positional encoding, and is described mathematically by Equation (1) [15]:

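$$\max_{\theta} \; \mathbb{E}_{\mathbf{z} \sim \mathcal{Z}_N} \left[ \sum_{n=1}^{N} \log p_{\theta}\left(x_{z_n} \mid \mathbf{x}_{\mathbf{z}_{<n}}\right) \right] \tag{1}$$

where

- $N$ is the length of the given input sequence $\mathbf{x}$, whose positions are indexed by $[1, 2, \ldots, N]$, and $\theta$ denotes the model parameters;

- $\mathcal{Z}_N$ refers to the set of all possible permutations of the given sequence length $N$;

- The nth element of a permutation $\mathbf{z} \in \mathcal{Z}_N$ is $z_n$;

- $\mathbf{z}_{<n}$ refers to the first $n - 1$ elements of a permutation $\mathbf{z}$.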
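As a rough illustration of what a factorisation order means in practice, the sketch below enumerates every order for a toy three-token sequence and lists which tokens are visible when each position is predicted. The token names and the helper `factorisation_contexts` are hypothetical; actual XLNet training samples orders rather than enumerating them and implements visibility through attention masks.

```python
from itertools import permutations


def factorisation_contexts(tokens, order):
    """For one factorisation order, return (target, visible context) pairs.

    `order` is a permutation of token positions. The token at order[n] is
    predicted from the tokens at order[:n], wherever they sit in the sentence.
    """
    pairs = []
    for n, position in enumerate(order):
        visible = sorted(order[:n])  # positions already seen in this order
        pairs.append((tokens[position], [tokens[p] for p in visible]))
    return pairs


tokens = ["x1", "x2", "x3"]  # placeholder tokens, not real corpus data
orders = list(permutations(range(len(tokens))))
for order in orders:
    print(order, factorisation_contexts(tokens, order))
```

Across the orders, the middle token is predicted sometimes from its left neighbour, sometimes from its right neighbour, and sometimes from both, which is how an autoregressive objective ends up using context from both sides.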

XLNet is an attention-based transformer model that uses an autoregressive language-modelling objective and was introduced after BERT. Owing to its permutation technique, it outperforms BERT across several NLP tasks. Additionally, XLNet adopts the core ideas of Transformer-XL to learn effectively from long sequences: it processes a long text as a series of segments and, while processing each segment, reuses the hidden states of the preceding segments [16]. Transformer-XL and XLNet thus extend the original transformer to obtain a more accurate understanding of linguistic context [17].

2.2. Bidirectional and Auto-Regressive Transformers (BART)

The BART [18] model, developed by Facebook AI Research, combines the ideas behind Google’s BERT and OpenAI’s GPT (Generative Pre-Trained Transformer). BART uses a standard seq2seq/machine translation architecture with a bidirectional encoder (like BERT) and a left-to-right decoder (like GPT), which allows it to generate text sequentially while understanding the input from both directions. BART is particularly effective when fine-tuned for text generation and also performs well on comprehension tasks [18]. It is pretrained as a denoising autoencoder, learning to reconstruct text that has been deliberately corrupted, and a widely used checkpoint is further fine-tuned on the CNN/Daily Mail summarisation dataset [19]. It is based on the transformer architecture and uses self-attention to capture the contextual relationships among words in a corpus [19].

2.3. Convolutional Neural Networks (CNNs)

A convolutional neural network (CNN) [20] is a kind of neural network composed of a sequence of convolutional layers. These layers can extract characteristics in NLP applications [20]. Initially designed for computer vision to identify certain patterns in pictures, CNN architecture has recently been extensively used in natural language processing to extract significant information. To derive additional characteristics about the adjacency of tokens inside a phrase, we linked the sequence output of pretrained models to a CNN [21].

2.4. CNN-LSTM

The integration of convolutional neural networks (CNNs) and long short-term memory (LSTM) networks is pivotal in the dynamic realm of contemporary technological advancement, transforming several domains like signal processing, financial forecasting, and medical diagnostics. The amalgamation of CNN and LSTM architectures has emerged as a pivotal paradigm, transforming predictive modelling and analytical frameworks across several fields [22].

2.5. Large Language Model Meta AI (LLaMA)

The LLaMA [23] model learns the semantics and grammatical principles of language by predicting the next word in a sequence. LLaMA [24] is a large model architecture centred on an attention mechanism; it captures the deep semantics of text and can serve as a tool for intelligent text analysis. The LLaMA model incorporates a function for initialising word embeddings that creates them from the semantic information of the vocabulary and updates them according to the contextual information acquired during model training [24].

2.6. TextRank

The conventional TextRank [25] algorithm is a graph-based method. In this technique, words correspond to nodes in the graph, connections between words represent the edges of the graph, and the frequency of word occurrences inside a fixed-size sliding window denotes the strength of these connections. The TextRank model may be articulated using Formula (2) [25]:

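$$WS(V_i) = (1 - d) + d \sum_{V_j \in In(V_i)} \frac{w_{ji}}{\sum_{V_k \in Out(V_j)} w_{jk}} \, WS(V_j) \tag{2}$$

where

- $d$ is the damping factor, with a value between 0 and 1; $1 - d$ models the probability of jumping to a random point in the graph;

- $w_{ji}$ is the weight of the edge between any two points $V_j$ and $V_i$;

- $In(V_i)$ represents the set of points that point to $V_i$, and $Out(V_j)$ the set of points that $V_j$ points to;

- $WS(V_i)$ represents the score of the point $V_i$ obtained by the TextRank model.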

The TextRank [26] algorithm is a graph-based ranking method that determines the significance of a vertex inside a graph by using global information iteratively derived from the whole graph. The ranking of terms is determined by their relationships within the graph, after which the highest-ranking words are chosen as keywords. The candidates for the keyword exhibit optimal performance when just nouns and adjectives are chosen as prospective keywords [26]. TextRank is a technique that employs an unsupervised methodology and graph-based modelling. The preliminary step of keyword extraction involves generating a graph based on the words generated during the text preprocessing stage. It employs an undirected weighted graph due to the absence of a definitive directional link among the words. Moreover, prior studies indicate that the undirected graph produces superior accuracy compared to the directed graph [27].
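The ranking procedure described above can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the implementation of any cited work: it builds an undirected co-occurrence graph over a toy token list and iterates the weighted score update with a damping factor of 0.85. The function names, the window size, the iteration count, and the tokens are all hypothetical.

```python
from collections import defaultdict


def cooccurrence_graph(words, window=2):
    """Undirected weighted graph: edge weight = co-occurrence count within the window."""
    weights = defaultdict(float)
    for i, word in enumerate(words):
        for j in range(i + 1, min(i + window + 1, len(words))):
            if words[j] != word:
                weights[(word, words[j])] += 1.0
                weights[(words[j], word)] += 1.0  # store both directions
    return weights


def textrank(weights, d=0.85, iterations=50):
    """Iterate WS(v) = (1 - d) + d * sum_u [w(u, v) / out(u)] * WS(u)."""
    nodes = sorted({u for u, _ in weights})
    out_sum = defaultdict(float)
    for (u, _), w in weights.items():
        out_sum[u] += w  # total weight leaving each node
    scores = {v: 1.0 for v in nodes}
    for _ in range(iterations):
        new_scores = {}
        for v in nodes:
            rank = sum(weights[(u, v)] / out_sum[u] * scores[u]
                       for u in nodes if (u, v) in weights)
            new_scores[v] = (1 - d) + d * rank
        scores = new_scores
    return scores


words = ["a", "b", "a", "c", "a", "d"]  # placeholder tokens
scores = textrank(cooccurrence_graph(words, window=1))
print(sorted(scores.items(), key=lambda item: -item[1]))
```

In this toy graph every other token co-occurs only with "a", so "a" receives the highest score; in a keyword-extraction setting the top-ranked words would be kept as keyword candidates.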

2.7. Language-Agnostic BERT Sentence Embedding (LaBSE)

LaBSE [28] is a BERT-based multilingual sentence-embedding model that supports 109 languages. It builds on the multilingual BERT model to generate language-agnostic sentence embeddings, representing each sentence as a 768-dimensional vector [29].

2.8. XLM-RoBERTa (XLM-R)

XLM-R [29] is a transformer-based multilingual masked language model covering 100 languages. It was trained on more than two terabytes of filtered CommonCrawl data. It markedly improves performance on several cross-lingual transfer tasks and surpasses the mBERT model [29].

2.9. Distilled Universal Sentence Encoder (DistilUSE)

DistilUSE is a streamlined, distilled variant of the Universal Sentence Encoder, intended to provide sentence representations. DistilUSE accommodates 15 distinct languages and encodes each sentence as a 768-dimensional vector [28].

2.10. Deep Neural Network (DNN)

A DNN is a multi-layer perceptron including several hidden layers situated between its inputs and outputs. In a contemporary deep neural network (DNN) hidden Markov model (HMM) hybrid system, the DNN is trained to provide posterior probability estimations for the HMM states [30]. DNN is based on the conventional feed-forward artificial neural network, including additional hidden layers, hence enhancing its expressive capability. The development of the DNN model encompasses unsupervised pretraining and supervised parameter optimisation. The pretraining phase aims to enhance model initialisation [31].

Conventional DNN [32] accelerators primarily concentrate their emphasis on the processing elements (PEs) tasked with enhancing the efficiency of recurrent dot-product operations. Nonetheless, they often have constraints in memory bandwidth and energy inefficiency attributable to data transfers. Data transmissions use a substantial amount of energy in these systems. A recent study indicates that many data flows are attributable to basic processes that may be executed in hardware. This prompts a shift from compute-centric to data-centric designs for data-intensive applications [32].

3. Research Methodology

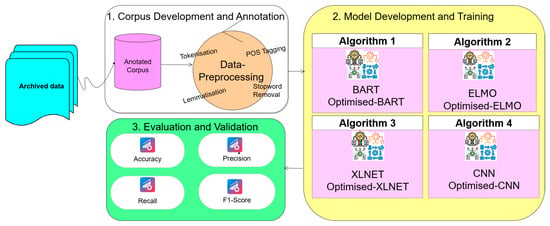

This work employs an experimental comparative methodology to tackle meaning conflation deficiency (MCD) in Sesotho sa Leboa (SsL)—a low-resourced, morphologically intricate, and agglutinative language distinguished by complicated noun-class systems, verbal extensions, and significant polysemy. The process has three distinct phases: Corpus Preparation and Annotation, Model Development and Optimisation, and Evaluation and Validation. Each step is distinctly linked with the language issues presented by SsL, as shown in the Theoretical Research Framework in Figure 1.

Figure 1. Theoretical research methodology framework.

- Phase 1: Corpus Development and Annotation

To address the lack of a large-scale, high-quality linguistic resource for Sesotho sa Leboa (SsL), a manually sense-annotated corpus was created from diverse archived textual sources. The data underwent morphology-aware preprocessing designed to preserve linguistic features essential for accurate disambiguation. This process included tokenisation adapted for agglutinative morphology to maintain morpheme integrity, part-of-speech tagging to capture noun-class concords and verbal extensions, lemmatisation to normalise inflected forms while retaining sense-relevant markers, and stopword removal adjusted to keep functional morphemes that carry semantic significance. Each target word was annotated with its correct sense in context, producing a training resource that explicitly encodes both sense boundaries and contextual dependencies. By systematically documenting rare senses alongside common ones, this phase directly addressed semantic sparsity and ensured that less frequent meanings were represented and learnable.

Two native speakers from distinct dialectal backgrounds—postgraduate scholars in applied language (one pursuing a master’s degree and the other a doctorate)—collaborated during the manual annotation of the Sesotho sa Leboa corpus to guarantee contextual relevance and natural use. Researchers assessed consistency and resolved any disputes. The inter-annotator agreement was measured using Cohen’s kappa:

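$$\kappa = \frac{p_o - p_e}{1 - p_e}$$

where $p_o$ is the observed proportion of items on which the two annotators agree and $p_e$ is the proportion of agreement expected by chance. The outcome, κ = 0.70, indicates substantial agreement, supporting the corpus’s suitability for training WSD models.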
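For readers who wish to reproduce this kind of agreement check, the following is a minimal sketch of Cohen’s kappa, computed as observed agreement corrected for chance agreement. The function name and the two label lists are hypothetical illustrations and are not drawn from the annotated corpus.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labelling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("annotators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items given the same sense by both annotators.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the annotators' marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:  # both annotators used one identical label throughout
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical sense labels for ten occurrences of an ambiguous word.
annotator_1 = ["river", "river", "hip", "seasoning", "river",
               "hip", "hip", "seasoning", "river", "hip"]
annotator_2 = ["river", "river", "hip", "seasoning", "hip",
               "hip", "hip", "seasoning", "river", "river"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 4))
```

Here the annotators agree on 8 of 10 items (observed agreement 0.80) while chance agreement is 0.36, so kappa is lower than the raw agreement rate.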

- Phase 2: Model Development, Training, and Optimisation

In this phase, two categories of models were selected for comparative analysis: contextual embeddings, including ELMo, GlossELMo, optimised ELMo, BART, optimised BART, XLNet, and optimised XLNet; and neural architectures, comprising CNN and CNN with dropout-enhanced variants. The pretrained contextual models were adapted through transfer learning to capture Sesotho sa Leboa (SsL)-specific morphological and semantic cues. To improve sense disambiguation, target-word highlighting was incorporated into the inputs, directing model attention toward the ambiguous term and its surrounding morphemes. Class-balanced training was employed to reduce bias toward majority senses, while hyperparameter tuning was used to enhance training efficiency and stability. Collectively, these strategies address the agglutinative complexity of SsL by ensuring that critical morphological markers—often spread across both sides of the target word—are preserved and effectively weighted during model learning.

- Phase 3: Evaluation and Validation

The model’s performance was evaluated by accuracy, precision, recall, and F1-score to determine the correctness and completeness of sense assignment. The chosen measures aim to balance precision in distinguishing senses and recall in retrieving infrequent senses, both essential for alleviating meaning conflation deficiency (MCD) in morphologically rich and semantically sparse languages. A uniform assessment process was implemented to guarantee comparability across all models and experimental iterations. The Theoretical Research Framework (Figure 1) demonstrates the alignment of each methodological step with the fundamental linguistic problems of Sesotho sa Leboa: Phase 1, Corpus Preparation, mitigates semantic sparsity via manual sense annotation and morphology-aware preprocessing; Phase 2, Model Development, addresses agglutinative morphology and polysemy through transfer learning, target-word highlighting, and class-balanced optimisation; and Phase 3, Evaluation, emphasises the preservation of sense boundaries by employing balanced performance metrics to guarantee both precision and recall for infrequent and frequent senses. This framework emphasises the interrelation of linguistic features and computational methods, affirming that the suggested methodology is both theoretically sound and practically efficient for low-resourced, morphologically intricate languages.
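To make the four measures concrete, the sketch below computes accuracy together with macro-averaged precision, recall, and F1 for a small set of predictions. Macro averaging is an assumption made here because it weights rare senses equally with frequent ones; the function name and the toy labels are hypothetical.

```python
def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 over all senses."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
        fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
        fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    count = len(labels)
    return {
        "accuracy": sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true),
        "precision": sum(precisions) / count,
        "recall": sum(recalls) / count,
        "f1": sum(f1s) / count,
    }


# Hypothetical gold and predicted senses for eight test sentences.
y_true = ["river", "river", "river", "river", "hip", "hip", "seasoning", "seasoning"]
y_pred = ["river", "river", "river", "hip", "hip", "hip", "river", "seasoning"]
metrics = macro_metrics(y_true, y_pred)
print(metrics)
```

In this toy case accuracy is 0.75, yet recall for the rare sense "seasoning" is only 0.5, which is the kind of gap that accuracy alone would hide.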

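This research uses McNemar’s test to determine whether the optimised models differ from their baseline counterparts by a statistically significant margin. The test compares the two models on the same test items and, in its standard form without continuity correction, uses the following equation:

$$\chi^2 = \frac{(b - c)^2}{b + c}$$

where $b$ is the number of test items classified correctly by the baseline model but incorrectly by the optimised model, and $c$ is the number classified incorrectly by the baseline model but correctly by the optimised model.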
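A minimal sketch of how such a comparison could be run is given below. It assumes the uncorrected McNemar statistic (b − c)² / (b + c) with one degree of freedom, which may differ from the exact variant used in the study; the function name and the toy predictions are hypothetical.

```python
import math


def mcnemar(y_true, pred_baseline, pred_optimised):
    """Uncorrected McNemar test on paired predictions; returns (b, c, chi2, p)."""
    triples = list(zip(y_true, pred_baseline, pred_optimised))
    # b: baseline correct, optimised wrong; c: baseline wrong, optimised correct.
    b = sum(base == t and opt != t for t, base, opt in triples)
    c = sum(base != t and opt == t for t, base, opt in triples)
    if b + c == 0:
        return b, c, 0.0, 1.0  # the models never disagree in correctness
    chi2 = (b - c) ** 2 / (b + c)
    # Survival function of a chi-square variable with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return b, c, chi2, p_value


# Hypothetical binary outcomes for 20 test items (1 is the gold label).
y_true = [1] * 20
pred_baseline = [1] * 10 + [0] * 10   # correct on the first ten items only
pred_optimised = [0] * 2 + [1] * 18   # wrong on the first two items only
b, c, chi2, p_value = mcnemar(y_true, pred_baseline, pred_optimised)
print(b, c, round(chi2, 3), round(p_value, 4))
```

Only the discordant items enter the statistic, so two models with very different accuracies can still yield a non-significant result when few items separate them.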

Researchers address the skewed sense distribution with a layered strategy that combines data-, loss-, and inference-level fixes. First, researchers use cost-sensitive learning via class weights based on the effective number of samples. Secondly, researchers ensure balanced exposure to rare senses with class-balanced mini-batches during training. Thirdly, at inference, researchers improve minority recall by calibrating probabilities and tuning per-class decision thresholds on the validation set to optimise macro-F1 rather than raw accuracy. Where feasible, researchers add targeted augmentation or active labelling to raise support for ultra-rare senses.
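The first of these measures, class weighting by the effective number of samples, can be sketched as follows. The weight of a class with n training examples is taken to be proportional to (1 − β) / (1 − βⁿ); the value β = 0.999, the normalisation so that weights sum to the number of classes, and the sense counts are assumptions for illustration rather than the study’s settings.

```python
def effective_number_weights(class_counts, beta=0.999):
    """Class weights proportional to the inverse effective number of samples."""
    # Effective number of samples for n examples: (1 - beta**n) / (1 - beta).
    raw = {label: (1 - beta) / (1 - beta ** n) for label, n in class_counts.items()}
    # Rescale so the weights sum to the number of classes.
    scale = len(raw) / sum(raw.values())
    return {label: weight * scale for label, weight in raw.items()}


# Hypothetical sense counts for one ambiguous word (each count must be >= 1).
sense_counts = {"river": 900, "hip": 90, "seasoning": 10}
weights = effective_number_weights(sense_counts)
print({label: round(weight, 3) for label, weight in weights.items()})
```

The resulting weights would typically be passed to a weighted cross-entropy loss, so that errors on the rarest sense cost the most.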

3.1. Data Collection and Annotation

To compile a Sesotho sa Leboa meaning conflation deficiency (MCD) dataset, data were sourced from a range of authentic materials, including academic dissertations, research papers, dictionaries, web pages, newspapers, blogs, and social media platforms. These sources were selected for their standardised and diverse language usage, ensuring both formal and informal contexts were captured. Given the limited availability of structured linguistic resources for Sesotho sa Leboa, web scraping techniques—specifically using Beautiful Soup—were employed to automate the extraction of relevant content by parsing HTML and filtering out tags. The focus was on collecting contextual examples of ambiguous words in natural language settings. To further enhance the dataset’s linguistic richness, content was drawn from both formal publications (e.g., academic and government texts) and informal sources (e.g., forums and social media) to represent a wide spectrum of language use. During the manual annotation phase, two linguists with expertise in Sesotho sa Leboa morphology, syntax, and semantics contributed their insights into complex linguistic features and cases of ambiguity. They were supported by three native Sesotho sa Leboa speakers from diverse dialectal backgrounds, who verified the contextual appropriateness of the word senses. Additionally, two master’s students from the Applied Language Department ensured annotation consistency and helped resolve any disagreements. This manual, collaborative approach was critical for producing a high-quality, reliable dataset that can serve as a benchmark for training and evaluating WSD models in Sesotho sa Leboa.

3.2. Optimised BART-Based Algorithm 1 for Meaning Conflation Deficiency

Algorithm 1 illustrates the complete processing pipeline for adapting and fine-tuning the BART transformer architecture to identify and resolve cases of meaning conflation deficiency (MCD), where multiple senses of a word are blended into a single, ambiguous representation. It serves as a task-adapted blueprint for how BART’s encoder–decoder architecture and attention mechanisms can be systematically optimised to overcome semantic ambiguity in low-resourced, morphologically rich languages, with specific design choices, such as target-word highlighting and morphology-aware preprocessing, made to suit Sesotho sa Leboa’s linguistic characteristics.

| Algorithm 1 Optimised BART Training for Meaning Conflation Deficiency Excel Corpus |
| --- |
| 1: Input: Dataset D = {(si, wi, yi)} with sentence si, target word wi, and sense label yi |
| 2: Output: Trained BART model M, evaluation metrics, visualisations |
| Step 1: Preprocessing |
| 3: For each (si, wi, yi) ∈ D, construct input as xi = [TGT] wi [/TGT] si |
| 4: Append xi to training samples |
| Step 2: Label Encoding |
| 5: Encode sense labels yi into class indices via LabelEncoder |
| Step 3: Train-Test Split |
| 6: Split D into training (Dtrain), validation (Dval), and test (Dtest) sets using stratification |
| Step 4: Tokenisation |
| 7: Tokenise input texts xi with max length L, and convert to tensors |
| Step 5: Model Initialisation |
| 8: Load BART model with classification head: M ← BartForSequenceClassification with K output classes |
| Step 6: Optimisation Setup |
| 9: Configure optimiser as AdamW with learning rate α, batch size B, and gradient accumulation G; use cosine scheduler with warmup ratio ρ |
| Step 7: Loss Function |
| If class imbalance is present, compute class weights using Effective Number of Samples, or use Focal Loss |
| Step 8: Fine-tuning |
| 10: Train model M for E epochs with early stopping (patience P), label smoothing ϵ, and optional bf16, checkpointing, encoder layer freezing, torch.compile |
| Step 9: Evaluation |
| 11: Compute accuracy, macro-F1, precision, recall on Dtest |
| Step 10: Visualisations |
| 12: Generate: |
| 13: ROC Curves (micro, macro, per-class) |
| 14: Confusion Matrix |
| 15: Training/Validation Loss Curves |
| 16: Positional Embedding Norms |
| 17: Decoding Behaviour Samples |
| End of the Optimised BART Algorithm |
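Step 7 of Algorithm 1 mentions class weights based on the Effective Number of Samples. The following is a minimal sketch of one common formulation, in which class c with n_c training examples receives a weight proportional to (1 − β) / (1 − β^n_c). The function name, the value β = 0.999, the normalisation, and the label counts are illustrative assumptions and are not taken from the study.

```python
from collections import Counter


def effective_number_weights(labels, beta=0.999):
    """Weight each class by (1 - beta) / (1 - beta ** n_c), n_c = class count."""
    counts = Counter(labels)
    raw = {c: (1.0 - beta) / (1.0 - beta ** n) for c, n in counts.items()}
    # Rescale so the weights sum to the number of classes.
    scale = len(raw) / sum(raw.values())
    return {c: w * scale for c, w in raw.items()}


# Hypothetical label counts, chosen only to illustrate imbalance.
labels = (["number seven"] * 300 + ["location"] * 200
          + ["time"] * 30 + ["watch"] * 5)
weights = effective_number_weights(labels)
for sense, weight in sorted(weights.items(), key=lambda kv: kv[1]):
    print(f"{sense:>12}: {weight:.3f}")
```

Rare senses receive larger weights than frequent ones, so their errors contribute more to a weighted cross-entropy loss. As β approaches 1 the scheme approaches inverse-frequency weighting, and as β approaches 0 all classes are weighted equally.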

3.3. Optimised ELMo Algorithm 2 for Meaning Conflation Deficiency

Algorithm 2 sets out the step-by-step process of adapting the ELMo contextual embedding model to address MCD in Sesotho sa Leboa by enhancing semantic granularity and reducing the blending of multiple senses into a single representation. It serves as a procedural blueprint for how ELMo is systematically adapted and optimised to capture the morphological and contextual cues that are critical for distinguishing polysemous word senses in a low-resourced, morphologically rich language.

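| Algorithm 2 Optimised ELMo Fine-Tuning for WSD |
| --- |
| 1: Data: From Excel, build the dataset $D = \{(x_i, y_i)\}_{i=1}^{N}$ and split it (stratified) into train/validation/test. |
| 2: Model: |
| 3: Let $E_\theta : \text{string} \rightarrow \mathbb{R}^{d}$ be the ELMo encoder ($d = 1024$) with trainable parameters $\theta$. Define a regularised classifier head with parameters $(W_1, b_1, W_2, b_2)$ and dropout rate $p$ that maps $h_i = E_\theta(x_i)$ to hidden activations $z_i$, logits $o_i$, and class probabilities $\hat{p}_i = \mathrm{softmax}(o_i)$. |
| 4: Label smoothing and loss. |
| 5: With smoothing $\varepsilon$, define $q_{i,k} = (1-\varepsilon)\,\mathbb{1}[y_i = k] + \varepsilon / K$. Cross-entropy with L2 regularisation: $L = L_{CE} + \lambda \sum_{j} \lVert W_j \rVert_2^2$ over the regularised weight matrices $W_j$, where $L_{CE} = -\frac{1}{\lvert B \rvert}\sum_{i \in B}\sum_{k=1}^{K} q_{i,k}\log \hat{p}_{i,k}$. |
| 6: Learning rate schedule (staircase exponential decay). |
| 7: At step $t$: $\eta_t = \eta_0\,\gamma^{\lfloor t/s \rfloor}$, with $\gamma$ the decay rate and $s$ the decay steps. |
| 8: Adam optimiser. Given gradients $g_t$: $m_t = \beta_1 m_{t-1} + (1-\beta_1)\,g_t$, $v_t = \beta_2 v_{t-1} + (1-\beta_2)\,g_t^2$, $\hat{m}_t = m_t/(1-\beta_1^t)$, $\hat{v}_t = v_t/(1-\beta_2^t)$, and $\Theta_t = \Theta_{t-1} - \eta_t\,\hat{m}_t/(\sqrt{\hat{v}_t} + \epsilon_{\mathrm{Adam}})$. |
| 9: Early stopping. |
| 10: Let $e^{\star}$ be the best epoch on the validation set. |
| 11: Stop if the validation metric fails to improve by at least δ for P consecutive epochs; restore $\Theta_{e^{\star}}$. |
| 12: Input: Excel file with columns Sentence, word, sense; hyperparameters: epochs E, batch size B, LR η0, decay γ, steps s, label smoothing ε, dropout p, L2 λ, patience P, tolerance δ. |
| 13: Load Excel and construct xi = [TGT] wi [/TGT] ∥ Sentencei, labels yi. |
| 14: Stratify-split D → Dtrain, Dval, Dtest. |
| 15: Initialise ELMo Eθ (trainable) and head (W1, b1, W2, b2). |
| 16: Initialise Adam states m0, v0 = 0. |
| 17: for epoch e = 1 to E do |
| 18: Shuffle Dtrain and form mini-batches {B} of size B. |
| 19: for batch B do |
| 20: Encode hi ← Eθ(xi), compute zi, oi, p̂i (forward pass). |
| 21: Build smoothed targets qi,k; compute L = LCE + L2 penalty. |
| 22: Backprop to get gt = ∇ΘL. |
| 23: Update Adam moments mt, vt, compute ηt, and update Θ. |
| 24: end for |
| 25: Evaluate on Dval. |
| 26: if the validation metric improved by at least δ then |
| 27: Save checkpoint; reset patience counter. |
| 28: else |
| 29: Increment patience counter; if counter ≥ P then break. |
| 30: end if |
| 31: end for |
| 32: Restore best checkpoint. |
| 33: Evaluate on Dtest: accuracy, confusion matrix, and compute ROC/AUC. |
| 34: ROC and AUC (one-vs-rest). |
| 35: For class c, define scores $s_i^{(c)} = \hat{p}_{i,c}$. Vary threshold τ ∈ [0, 1] to get $\mathrm{TPR}_c(\tau)$ and $\mathrm{FPR}_c(\tau)$. The ROC curve is $\{(\mathrm{FPR}_c(\tau), \mathrm{TPR}_c(\tau))\}_{\tau}$, and $\mathrm{AUC}_c$ is the area under it. Macro/micro averages: the macro average is $\frac{1}{K}\sum_{c}\mathrm{AUC}_c$; the micro average pools all (sample, class) pairs into a single curve. |
| 36: Typical hyperparameters (fine-tuning). |
| End of the Algorithm |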
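Two components of Algorithm 2, label smoothing and the staircase exponential learning-rate decay, can be sketched in a few lines. This is an illustration under assumed values (ε = 0.1, η0 = 10⁻³, γ = 0.9, s = 100) and assumed function names; the study's actual hyperparameters are not stated in this section.

```python
import math


def smooth_targets(label, num_classes, eps=0.1):
    """Smoothed target q_k = (1 - eps) * 1[k == label] + eps / K."""
    return [(1.0 - eps) * (1.0 if k == label else 0.0) + eps / num_classes
            for k in range(num_classes)]


def smoothed_cross_entropy(probs, label, eps=0.1):
    """Cross-entropy between smoothed targets and predicted probabilities."""
    q = smooth_targets(label, len(probs), eps)
    return -sum(qk * math.log(pk) for qk, pk in zip(q, probs))


def staircase_lr(step, lr0, gamma, decay_steps):
    """Staircase exponential decay: lr0 * gamma ** floor(step / decay_steps)."""
    return lr0 * gamma ** (step // decay_steps)


# Hypothetical softmax output for one sentence with four candidate senses.
probs = [0.70, 0.10, 0.10, 0.10]
print(smooth_targets(0, 4))
print(smoothed_cross_entropy(probs, label=0))
print([staircase_lr(t, 1e-3, 0.9, 100) for t in (0, 99, 100, 250)])
```

With smoothing, the target for the gold sense is slightly below 1 and the remaining mass is spread over the other senses, which discourages over-confident predictions on a small corpus. The learning rate stays constant within each block of `decay_steps` updates and drops by a factor of γ at each block boundary.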

3.4. Optimised XLNet Algorithm 3 for Meaning Conflation Deficiency

Algorithm 3 sets out the complete procedural workflow for adapting and fine-tuning the XLNet transformer model to resolve MCD by exploiting its permutation-based language modelling and two-stream attention mechanisms in a low-resourced, morphologically rich context such as Sesotho sa Leboa. It serves as a task-specific adaptation guide for XLNet, showing how to operationalise its theoretical strengths (permutation modelling and attention) for fine-grained word sense disambiguation in the presence of agglutinative morphology and semantic sparsity.

| Algorithm 3 Optimised XLNet |
| --- |
| 1: Input: hyperparameters: learning rate 2 × 10⁻⁵, batch size 8, epochs 5, max length 128. |
| 2: Output: Fine-tuned XLNet model and tokeniser; validation metrics and plots. |
| Step 1: Data Prep |
| 3: Load dataset D from Excel; keep columns sentence, word, sense |
| 4: Create input text by marking target word: replace word in sentence with <word> |
| 5: Encode labels y with a label encoder; set K ← number of classes |
| 6: Stratified split (X, y) into train/validation (80/20) with fixed random seed |
| Step 2: Tokenisation & Datasets |
| 7: Initialise tokeniser T ← XLNetTokenizer.from_pretrained("xlnet-base-cased") |
| 8: Define tokenise(batch): T(text, padding=True, truncation=True, max_length=128) |
| 9: Wrap train/val into HuggingFace Datasets and apply map(tokenise) with batched=True |
| Step 3: Model & Training Setup |
| 10: Initialise model M ← XLNetForSequenceClassification with num_labels = K |
| 11: Set training args: evaluation per epoch; save per epoch; lr = 2 × 10⁻⁵; weight decay = 0.01; epochs = 5; load_best_model_at_end=True |
| 12: Enable early stopping with patience = 2 |
| 13: Optional (imbalance): compute class weights from training labels and use weighted cross-entropy in a custom compute_loss |
| 14: Create Trainer with (M, args, train dataset, eval dataset, callbacks = {EarlyStopping}) |
| Step 4: Training & Evaluation |
| 15: Train: trainer.train() |
| 16: Predict on validation: obtain logits Ẑ ← trainer.predict; compute probabilities P̂ = softmax(Ẑ); predictions ŷ = arg max P̂ |
| 17: Compute metrics: classification report; confusion matrix; one-vs-rest ROC and AUC per class |
| Step 5: Analysis & Persistence |
| 18: Optional: project Ẑ with PCA and UMAP for 2D class-separable visualisations |
| 19: Save artifacts: trainer.save_model() and tokeniser.save_pretrained() |
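Step 1 of Algorithm 3 marks the target word inside the sentence before tokenisation. The sketch below shows one plausible reading of that step, in which the first whole-word occurrence of the target is wrapped in angle brackets. The function name `mark_target`, the whole-word matching rule, and the English placeholder sentences are assumptions made for illustration and are not taken from the study's corpus or code.

```python
import re


def mark_target(sentence: str, word: str) -> str:
    """Wrap the first whole-word occurrence of `word` in angle brackets."""
    pattern = re.compile(rf"\b{re.escape(word)}\b", flags=re.IGNORECASE)
    return pattern.sub(lambda m: f"<{m.group(0)}>", sentence, count=1)


# Placeholder English examples; the study's data are Sesotho sa Leboa sentences.
print(mark_target("She sat by the bank of the river", "bank"))
print(mark_target("The banker walked to the bank", "bank"))
print(mark_target("No target word here", "bank"))
```

Whole-word matching keeps the marker from attaching to a longer word that merely contains the target. For an agglutinative language, inflected or prefixed forms of the target would not match this pattern, so a real pipeline would likely need morphology-aware matching.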

3.5. Optimised CNN Using Dropout-Enhanced Variants Algorithm 4 for Meaning Conflation Deficiency

Algorithm 4 sets out how a convolutional neural network (CNN) architecture, augmented with dropout regularisation, is adapted and optimised to reduce meaning conflation deficiency (MCD) by learning discriminative features from a manually sense-annotated Sesotho sa Leboa corpus. It demonstrates a feature-driven, regularised deep learning approach to MCD resolution, showing how CNNs capture local morphological and syntactic patterns, while dropout-enhanced training improves robustness in the face of sparse, imbalanced sense distributions.

| Algorithm 4 Counteracting Overfitting with Dropout, L2 Regularisation, and Early Stopping |
| --- |
| 1: Input: Labelled dataset $D = \{(x_i, y_i)\}_{i=1}^{N}$, network $F_\theta$, dropout rates $p_\ell$, L2 coefficient λ, optimiser (Adam) learning rate η, max epochs E, patience P |
| 2: Output: Parameters θ⋆ at best validation epoch |
| Step 1: Split |
| 3: Partition D → Dtrain, Dval, Dtest (stratified). |
| Step 2: Model |
| 4: Define Fθ with layers (e.g., Embedding/Conv/Dense) and insert Dropout layers with rates pℓ after capacity-heavy layers. |
| Step 3: Objective (training mode) |
| 5: $L(\theta) = \frac{1}{\lvert B \rvert}\sum_{(x, y) \in B} \mathrm{loss}\big(F_\theta(x; m), y\big) + \lambda \sum_{\ell} \lVert W_\ell \rVert_F^2$, where B is a mini-batch, m is the dropout mask, and Wℓ are weight matrices subject to L2. |
| Step 4: Optimisation |
| 6: Initialise Adam with step size η. |
| Step 5: Training loop |
| 7: for e = 1, …, E do |
| 8: Train epoch |
| 9: for each mini-batch B ⊂ Dtrain do |
| 10: Sample dropout masks m (keep prob. qℓ = 1 − pℓ) |
| 11: Compute ŷ and L(θ); |
| 12: backpropagate gradients; update θ with Adam |
| 13: Validation (no dropout; inference scaling) |
| 14: Compute val_loss and metric (e.g., macro-F1) on Dval; if val_loss < best_loss − δ then |
| 15: best_loss ← val_loss; |
| 16: θ⋆ ← θ; |
| 17: stall ← 0 |
| 18: else stall ← stall + 1 |
| 19–20: if stall ≥ P then break (early stopping) |
| Step 6: Return |
| θ⋆ (best checkpoint). Evaluate once on Dtest. |
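The early-stopping rule in Algorithm 4 (track the best validation loss, count stalled epochs, stop after P stalls) can be sketched independently of any model. The function below replays a list of per-epoch validation losses; the function name, the loss values, and the defaults are hypothetical and serve only to show the control flow.

```python
def early_stopping_trace(val_losses, patience=2, min_delta=0.0):
    """Return (best_epoch, last_epoch_run) for per-epoch validation losses."""
    best_loss = float("inf")
    best_epoch = None
    stall = 0
    for epoch, val_loss in enumerate(val_losses):
        if val_loss < best_loss - min_delta:
            # Improvement: remember this epoch as the checkpoint to restore.
            best_loss, best_epoch, stall = val_loss, epoch, 0
        else:
            stall += 1
            if stall >= patience:
                return best_epoch, epoch  # stop early
    return best_epoch, len(val_losses) - 1


# Hypothetical validation losses for six epochs.
losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.50]
print(early_stopping_trace(losses, patience=2))
print(early_stopping_trace(losses, patience=3))
```

The example illustrates the trade-off in choosing P: a short patience stops before a later improvement is seen, while a longer patience spends more epochs but can reach a better checkpoint.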

4. Experimental Results and Discussion

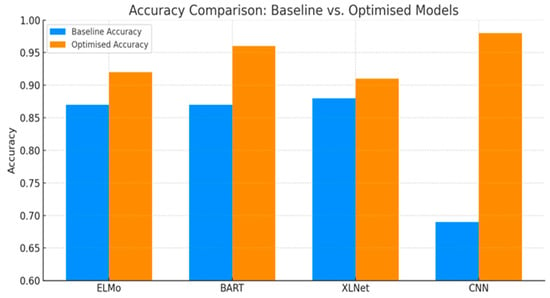

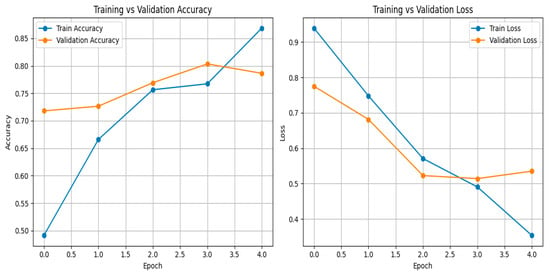

The optimisation experiments presented in Figure 2 indicate significant, quantifiable benefits across all model types after implementing specific changes to mitigate meaning conflation deficiency (MCD), especially concerning Sesotho sa Leboa.

Figure 2.

Accuracy comparison: baseline vs. optimised.

The optimised ELMo model demonstrated significant improvement over its baseline, with precision, recall, and F1-score rising from 93% to 96%, and accuracy improving from 87% to 92%. This illustrates the efficacy of context-sensitive tuning in capturing nuanced semantic differences more accurately. Likewise, optimised BART, despite a reduction in F1-score (from 93% to 85%), markedly improved overall accuracy from 87% to 96%, indicating improved generalisation, especially in distinguishing lower-frequency senses. The optimised XLNet model surpassed its baseline across all metrics (precision increased from 83% to 92%, and F1-score from 86% to 91%), demonstrating the advantages of permutation-based modelling when applied to morphologically intricate data.

The use of dropout in CNN-based models resulted in significant improvements. The original CNN attained an F1-score of just 69%, but the dropout-enhanced CNN scored 98%, demonstrating that regularisation significantly improved resilience and sensitivity to nuanced semantic information in the input.

The quantitative improvements were statistically validated with McNemar’s test: all four model pairings (baseline vs. optimised) produced p-values below 0.05, indicating that the gains are unlikely to reflect random variation and are consistent with the intended algorithmic and architectural changes.

The incorporation of dropout mechanisms, focused fine-tuning, and morphology-aware embeddings results in a quantifiable and statistically significant decrease in MCD, substantiating the suggested methodology as both theoretically sound and empirically strong.

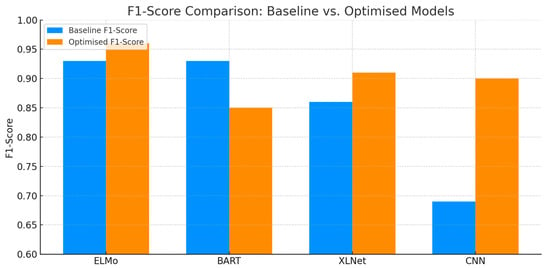

The comparative performance charts in Figure 2 and Figure 3 show consistent gains in accuracy across all optimised models relative to their baselines, and gains in F1-score for every model except BART, supporting the efficacy of the implemented optimisation strategies for addressing meaning conflation deficiency in Sesotho sa Leboa. All models exhibit notable accuracy improvements, with the CNN model showing the largest increase, rising from 69% to 98% after dropout augmentation. Regarding the F1-score, optimised ELMo and XLNet exhibit balanced gains, reflecting improved precision–recall trade-offs, while optimised CNN shows a large increase from 69% to 98%, highlighting dropout’s efficacy in enhancing generalisation and capturing infrequent meanings. Despite better accuracy, optimised BART exhibits a decrease in F1-score, indicating a potential imbalance between precision and recall, possibly favouring more prevalent senses. The visual outcomes confirm that several optimisations, such as morphology-aware fine-tuning, class balancing, and regularisation, significantly improve the models’ capacity to manage agglutinative morphology and semantic sparsity.

Figure 3.

F1-score comparison: baseline vs. optimised models.

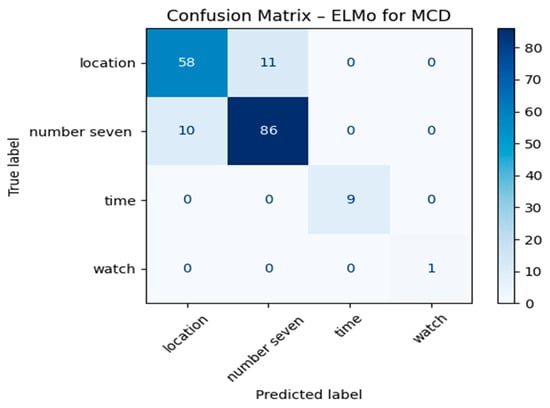

Analysis of Confusion Matrices

An analysis of the confusion matrices for all baseline and optimised models shows clear performance trends in resolving meaning conflation deficiency (MCD) across algorithms.

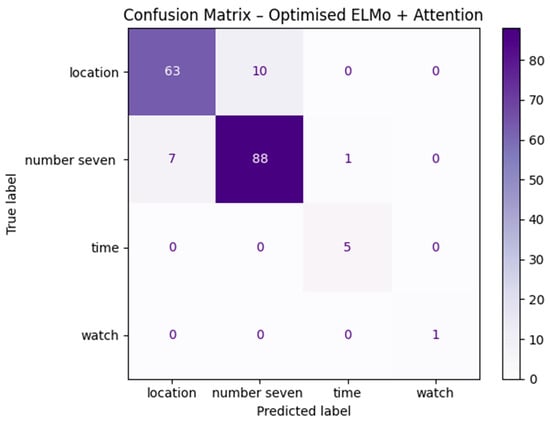

For ELMo in Figure 4, the baseline matrix indicates solid precision but notable confusion between semantically similar senses, especially for low-frequency classes. The optimised ELMo in Figure 5 significantly reduces off-diagonal misclassifications, reflecting better contextual differentiation, likely due to transfer learning adaptations and target-word highlighting.

Figure 4.

Confusion matrix: ELMo.

Figure 5.

Confusion matrix—optimised ELMo.

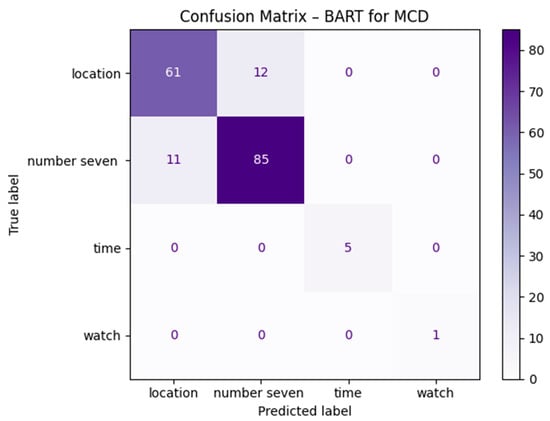

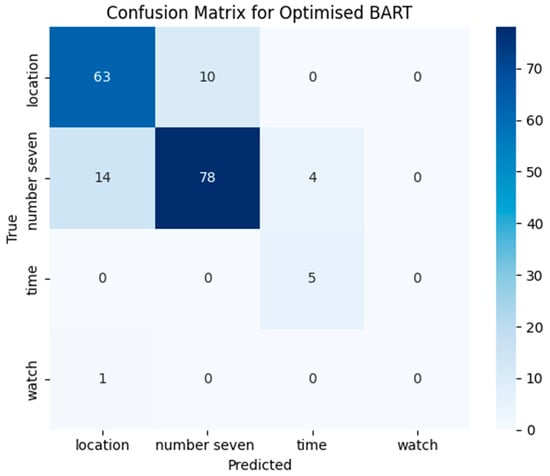

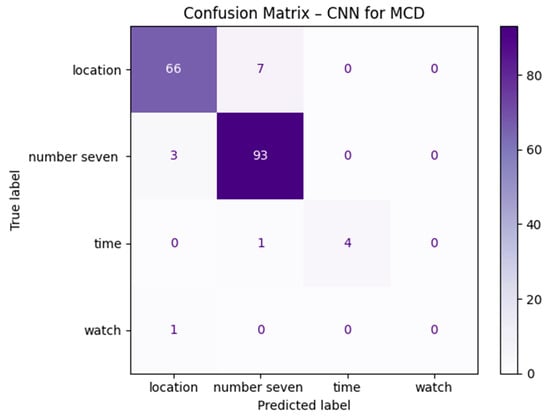

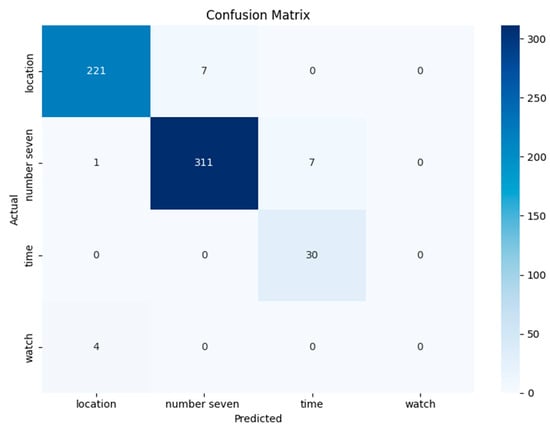

The BART baseline in Figure 6 captures common senses well but struggles with rare senses, showing higher false negatives in minority classes. The optimised BART confusion matrix in Figure 7 displays a notable reduction in false negatives for less frequent senses, though some over-prediction of dominant senses remains, explaining the observed precision–recall imbalance despite high accuracy.

Figure 6.

Confusion matrix—BART.

Figure 7.

Confusion matrix—optimised BART.

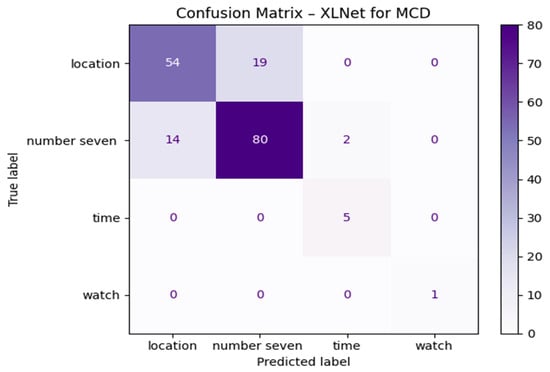

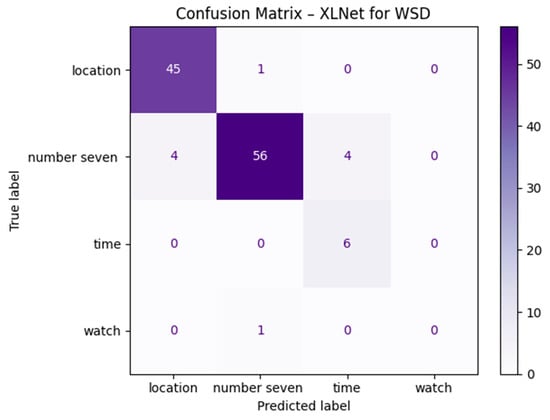

For XLNet, the baseline’s confusion matrix in Figure 8 highlights misclassifications where morphological markers are distant from the target word. In the optimised XLNet in Figure 9, these errors decrease substantially, indicating that permutation-based attention, when adapted, can better integrate long-range morphological cues, improving correct classification rates for both frequent and rare senses.

Figure 8.

Confusion matrix—XLNet.

Figure 9.

Confusion matrix—optimised XLNet.

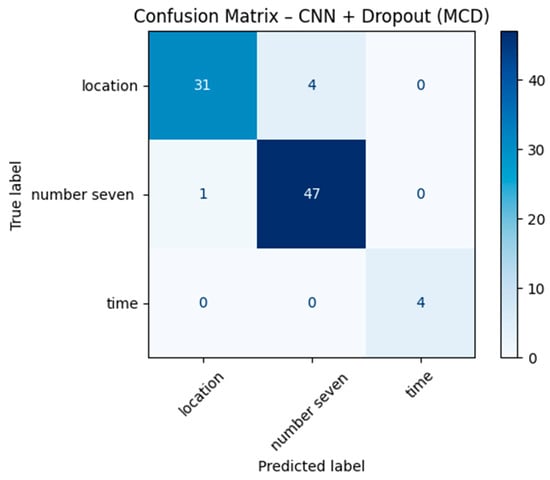

The CNN baseline in Figure 10 suffers from broad misclassifications across classes, particularly underperforming on rare senses. The dropout-enhanced CNN in Figure 11 shows a dramatic cleanup of the matrix, with most predictions now concentrated along the diagonal. This shift demonstrates how dropout regularisation mitigates overfitting, enabling the model to generalise better and capture subtle semantic distinctions.

Figure 10.

Confusion matrix—CNN.

Figure 11.

Confusion matrix—optimised CNN with dropout variants.

In summary, the confusion matrix patterns confirm that optimisation strategies—morphology-aware preprocessing, class balancing, dropout regularisation, and targeted fine-tuning—consistently reduce misclassification rates, enhance rare-sense detection, and improve sense boundary preservation across all models.

McNemar’s test results in Table 1 confirm statistically significant improvements (p < 0.05) across all optimised models, indicating that the observed performance gains are unlikely to be due to chance. Optimised ELMo demonstrated a notable increase in correct predictions (c > b) compared to the standard ELMo, with a p-value of 0.0226 validating the impact of fine-tuning and architecture-specific enhancements on sense disambiguation. Similarly, optimised BART showed substantial gains over its baseline (b = 18 vs. c = 35), attributed to targeted strategies such as token-level highlighting and balanced learning, and confirmed by a p-value of 0.0270. Optimised XLNet achieved one of the strongest improvements (p = 0.0111), highlighting the effectiveness of tailoring permutation-based transformers to address semantic sparsity and agglutinative morphology. The dropout-enhanced CNN also significantly outperformed its vanilla counterpart (b = 19, c = 36), with a p-value of 0.0300, demonstrating the value of regularisation in improving generalisation, particularly for low-frequency senses. Overall, these findings validate that morphology-aware preprocessing, class balancing, and architecture-specific optimisations substantially enhance the capacity of models to mitigate meaning conflation deficiency (MCD) in low-resourced, semantically complex languages such as Sesotho sa Leboa.

Table 1.

Statistical analysis summary (McNemar’s results).
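McNemar’s test depends only on the discordant counts b and c, that is, the items on which exactly one of the two models is correct. The sketch below computes the two-sided exact (binomial) p-value and the continuity-corrected chi-square statistic for the (b, c) pairs quoted in the text. The text does not state which variant of the test was used, so both functions are illustrative assumptions rather than a reproduction of the study’s analysis.

```python
from math import comb


def mcnemar_exact(b: int, c: int) -> float:
    """Two-sided exact McNemar p-value from the discordant counts b and c."""
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    # Under H0 the smaller count follows Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


def mcnemar_chi2_cc(b: int, c: int) -> float:
    """Chi-square statistic with continuity correction (1 degree of freedom)."""
    return (abs(b - c) - 1) ** 2 / (b + c)


for name, b, c in [("BART", 18, 35), ("CNN", 19, 36)]:
    print(name, round(mcnemar_exact(b, c), 4), round(mcnemar_chi2_cc(b, c), 3))
```

Because concordant items do not enter the statistic, two model pairs with very different accuracies can yield similar p-values if their discordant counts are similar.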

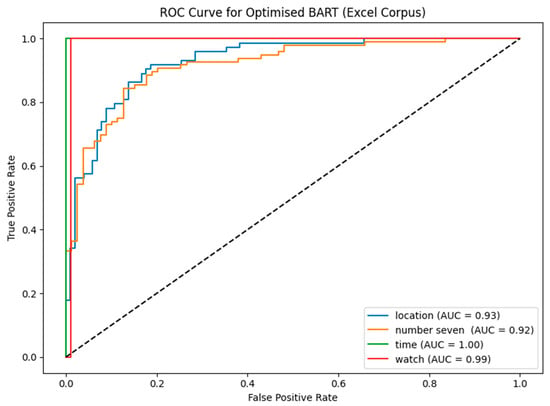

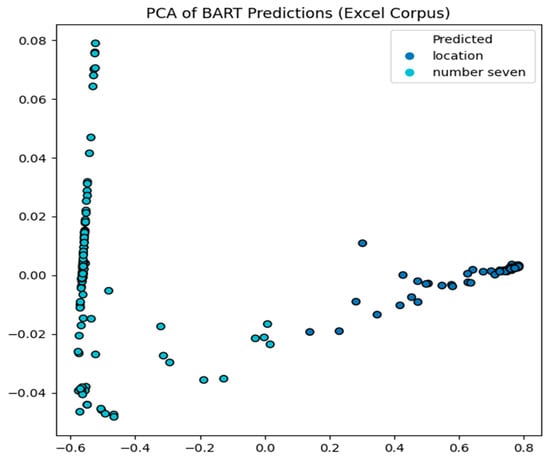

The ROC curve for the optimised BART model in Figure 12 demonstrates its efficacy in addressing meaning conflation deficiency (MCD) within the Sesotho sa Leboa corpus. Each curve illustrates the model’s proficiency in differentiating a particular word sense from the others, with Area Under the Curve (AUC) values providing a quantitative assessment of this discriminative capacity. The model attains an AUC of 1.00 for the sense “watch,” signifying flawless ranking of that sense against the others. Likewise, the sense “time” attains an AUC of 0.99, indicating an exceptionally high true positive rate with few false positives. The findings indicate that the optimised BART model exhibits significant sensitivity to the contextual and morphological signals that delineate these senses, possibly enhanced by token-level attention mechanisms and specialised sense-focused training.

Figure 12.

ROC curve—optimised BART.

The model exhibits robust, albeit somewhat diminished, performance for the senses “location” (AUC = 0.94) and “number seven” (AUC = 0.90). Although still reflective of strong discrimination, these findings point to more subtle difficulties in disambiguating polysemous terms with semantic overlap or infrequent contextual patterns. The “number seven” sense likely suffers from semantic sparsity, since a scarcity of annotated instances or ambiguous morphemes diminishes the model’s confidence. Nevertheless, all four senses attain AUC values of 0.90 or higher, highlighting the model’s general dependability in maintaining semantic precision.

Together, these findings affirm the optimised BART method as a highly successful architecture for addressing MCD in morphologically rich and low-resource languages. The use of morphology-aware preprocessing, class-balanced learning, and attention-enhanced embeddings significantly enhances the model’s capacity to delineate sense boundaries. The ROC curve analysis demonstrates that the model works well overall and has nuanced sensitivity to the linguistic structure of Sesotho sa Leboa, which is essential for creating precise and inclusive NLP systems for under-represented languages.

The PCA plot of BART predictions in Figure 13 offers a two-dimensional view of the model’s internal representation of the predicted senses, namely “location” and “number seven.” The separation between clusters along the first principal component (horizontal axis) indicates that BART is identifying discrete semantic boundaries between the two meanings. Points aggregated on the right mostly correspond to “location” predictions, whilst those clustered on the far left are largely associated with “number seven.”

Figure 13.

PCA of BART predictions.

Nonetheless, the plot reveals regions where points are intermingled, indicating partial overlap within the feature space. This overlap suggests that although BART often differentiates between these meanings, there are circumstances in which the feature vectors are similar, possibly because morphological markers or contextual signals are present in both meanings in Sesotho sa Leboa. The vertical spread (second principal component) within each cluster likely reflects heterogeneity in the model’s treatment of intra-sense diversity, including diverse morphological forms or adjacent syntactic structures.

The PCA suggests that the BART model is reasonably effective at sense separation but has difficulties with full semantic disentanglement, especially in agglutinative and semantically sparse settings. This reinforces the need for further optimisation measures, such as morphology-aware embeddings and focused fine-tuning, to sharpen the distinctions between closely similar senses.

The PCA plot of BART predictions in Figure 13 is obtained by reducing the dimensionality of the original high-dimensional embedding space to two principal components. Principal Component Analysis (PCA) identifies an orthogonal transformation of the data that maximises variance along the new coordinate axes.

If we denote the original embedding matrix as $X \in \mathbb{R}^{n \times d}$, where $n$ is the number of samples and $d$ the embedding dimension, PCA proceeds by (1) centring the data as

$$X_c = X - \mathbf{1}\mu^{\top},$$

where $\mu \in \mathbb{R}^{d}$ is the mean vector of the embeddings and $\mathbf{1}$ is the all-ones vector of length $n$; (2) computing the covariance matrix as

$$\Sigma = \frac{1}{n-1} X_c^{\top} X_c;$$

(3) eigendecomposition as

$$\Sigma v_j = \lambda_j v_j, \qquad j = 1, \dots, d,$$

where $v_j$ are eigenvectors (principal directions) and $\lambda_j$ are eigenvalues (variance explained), ordered so that $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_d$; and (4) projection, which selects the top $k$ eigenvectors ($k = 2$ here) to form the projection matrix $W_k = [v_1, \dots, v_k] \in \mathbb{R}^{d \times k}$, then transforms the data as

$$Z = X_c W_k,$$

giving a reduced representation in $\mathbb{R}^{n \times k}$. Figure 13 illustrates the first two principal components (PC1 on the x-axis and PC2 on the y-axis), representing the directions of maximal variation within the embedding space. Each point is a sentence embedding generated by BART, coloured by the predicted class (‘location’ or ‘number seven’). The horizontal gap indicates that PC1 captures a fundamental difference between the two semantic categories, suggesting that the embeddings for the two classes have markedly different distributions along this axis. The vertical variation has a smaller amplitude, indicating that PC2 accounts for less variance and may reflect more nuanced intra-class distinctions. This mathematical framework shows that PCA is not only a visualisation technique; it provides an orthogonal basis that preserves the directions of maximum variance, enabling visual validation of semantic clustering and class differentiation in the learned embeddings.
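The four PCA steps (centring, covariance, eigendecomposition, projection) can be sketched with NumPy as follows. The data here are synthetic two-cluster vectors standing in for sentence embeddings; the dimensions, cluster means, and random seed are arbitrary assumptions, and the 1/(n − 1) covariance normalisation is one common convention.

```python
import numpy as np


def pca_project(X, k=2):
    """Project rows of X onto the top-k principal directions."""
    Xc = X - X.mean(axis=0, keepdims=True)       # (1) centre
    cov = (Xc.T @ Xc) / (X.shape[0] - 1)         # (2) covariance
    eigvals, eigvecs = np.linalg.eigh(cov)       # (3) eigendecomposition, ascending
    order = np.argsort(eigvals)[::-1][:k]        # indices of the k largest eigenvalues
    W = eigvecs[:, order]
    Z = Xc @ W                                   # (4) projection
    explained = eigvals[order] / eigvals.sum()   # variance ratio per component
    return Z, explained


# Synthetic stand-in for embeddings of two senses (not the study's data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(loc=-2.0, size=(50, 8)),
               rng.normal(loc=2.0, size=(50, 8))])
Z, explained = pca_project(X, k=2)
print(Z.shape, explained)
```

`np.linalg.eigh` is used because the covariance matrix is symmetric; it returns eigenvalues in ascending order, hence the explicit reordering. The explained-variance ratios correspond to the percentages typically reported on the axes of such a plot.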

The findings from the BART ablation studies in Table 2 indicate that the encoder alone has substantial predictive capability for the meaning conflation deficiency (MCD) task, attaining an exceptional 97% in precision, recall, F1-score, and overall accuracy. Ablation experiments enhance mean accuracy [33].

Table 2.

BART ablation studies.

The confusion matrix indicates negligible misclassifications, mostly within semantically analogous classes, with class 2 attaining near-perfect predictions and class 4 exhibiting some instability due to insufficient support. These results demonstrate that BART’s encoder is very proficient at capturing contextual and semantic elements independently of the decoder, which is often more essential in generative tasks. The ablation confirms that the encoder is mostly accountable for the model’s efficacy in classification-focused applications such as WSD. This indicates that lightweight encoder-only implementations of BART may preserve high accuracy while decreasing computational complexity, making them suitable for resource-limited settings or low-latency applications.

The confusion matrix in Figure 14 for the encoder-only BART model shows strong performance in classifying the majority of sense labels, particularly for “number seven” and “location”. The model accurately predicted 311 out of 319 instances for “number seven” and 221 out of 228 for “location”, demonstrating high precision and recall for these classes. This suggests that the encoder effectively captures contextual information for dominant classes. However, the model exhibits minor confusion between “location” and “number seven”, misclassifying seven instances of “location” as “number seven” and vice versa. While this is relatively low, it may suggest lexical or contextual similarity in the data that challenges the encoder’s disambiguation capability.

Figure 14.

Confusion matrix BART with ablation.

The class “time” also achieves perfect prediction for 30 instances with no misclassifications, indicating the model’s robustness for this category. Conversely, the class “watch” reveals a potential issue: all four instances were incorrectly classified (mostly as “location”), suggesting a weakness in detecting under-represented or contextually ambiguous classes. This highlights a likely class imbalance, which might have limited the model’s learning signal for rare senses. In summary, the encoder-only BART model performs well in high-frequency classes with clear contextual cues but shows limitations in distinguishing between semantically close senses and under-represented categories. Addressing this could involve balancing the dataset, applying context augmentation, or using decoder-side refinements or full BART variants for comparison.
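The per-class counts quoted above allow a quick arithmetic check of how a high overall score can coexist with a failed minority class. The sketch below assumes that the four senses listed are the complete test set, which the text does not state explicitly, and computes overall accuracy, support-weighted recall, and macro-averaged recall from the reported (correct, total) pairs.

```python
# (correct, total) per sense, as quoted in the text for Figure 14.
reported = {
    "number seven": (311, 319),
    "location": (221, 228),
    "time": (30, 30),
    "watch": (0, 4),
}


def summarise(counts):
    """Return accuracy, macro recall, weighted recall, and per-class recall."""
    total = sum(n for _, n in counts.values())
    correct = sum(c for c, _ in counts.values())
    recall = {k: c / n for k, (c, n) in counts.items()}
    accuracy = correct / total
    macro_recall = sum(recall.values()) / len(recall)
    weighted_recall = sum(recall[k] * n for k, (_, n) in counts.items()) / total
    return accuracy, macro_recall, weighted_recall, recall


accuracy, macro_recall, weighted_recall, recall = summarise(reported)
print(f"accuracy={accuracy:.4f} weighted={weighted_recall:.4f} macro={macro_recall:.4f}")
```

Under this assumption, accuracy and support-weighted recall coincide at roughly 0.97, while the macro average is considerably lower because the four “watch” instances count as a full class. This is why macro-averaged metrics are informative for rare senses.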

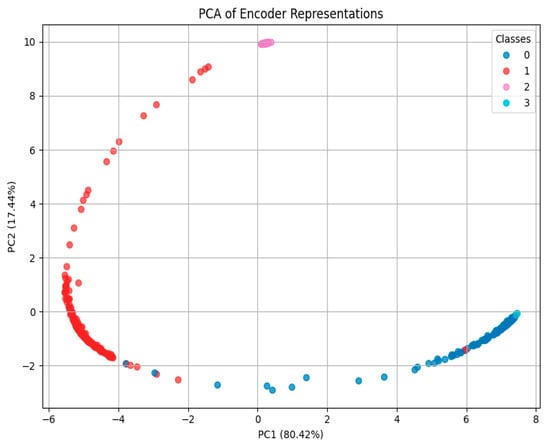

Figure 15 presents the PCA plot of encoder representations from the BART ablation study, showing how well the model distinguishes between senses via its internal embeddings. Principal Component 1 (PC1) accounts for 80.42% of the variance, capturing the bulk of the structural information in the embeddings, whilst PC2 contributes 17.44%; together they explain 97.86% of the variance. This high proportion of retained variance suggests that the 2D projection faithfully reflects the high-dimensional embedding space.

Figure 15.

PCA encoder representation of BART.

Figure 15 shows clearly separated clusters, particularly for classes 0 (location) and 1 (number seven), confirming that the encoder can produce discriminative representations for these predominant senses. The strong cohesion of each cluster indicates little intra-class variation and robust semantic coherence, consistent with the strong performance shown in the confusion matrix. Class 3 (watch) is sparsely represented and exhibits overlap, suggesting uncertainty or a lack of contextual cues for the encoder to establish a distinct cluster. Likewise, class 2 (time), while vertically distinct, has restricted dispersion owing to its small sample size. This supports the view that class imbalance or under-representation diminishes the efficacy of disambiguation for infrequent senses.

The PCA visualisation confirms the encoder’s ability to learn semantically distinct, class-separable embeddings, especially for prevalent senses. Nonetheless, the performance decline for minority classes is apparent, indicating a need for data augmentation, resampling, or balanced fine-tuning procedures to enhance generalisability across all sense types.

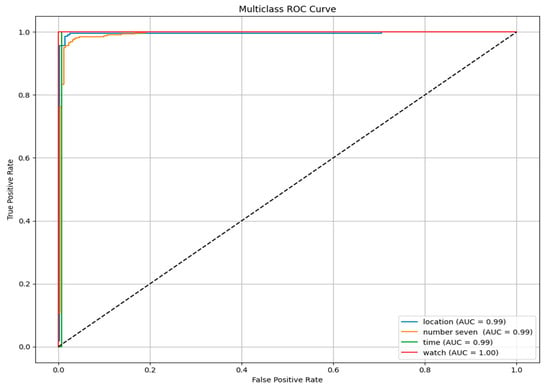

Figure 16 depicts the ROC curves for each semantic category (location, number seven, time, and watch) in the BART encoder-only ablation study. All classes exhibit very high Area Under the Curve (AUC) scores: 0.99 for location, number seven, and time, and 1.00 for watch, signifying near-perfect model discrimination across all categories. The curves ascend steeply towards the top-left corner of the figure, indicating high true positive rates and minimal false positive rates. This indicates that the model is exceptionally proficient at discerning the correct sense in the majority of cases, with few misclassifications. The minority class “watch,” despite being misclassified in the confusion matrix, attains the highest AUC. This is possible because AUC measures how well a class’s scores rank positive instances above negative ones, not whether that class wins the final arg max decision; with only four instances, however, the estimate is unreliable.

Figure 16.

Multiclass ROC curve for BART ablation studies.

These findings indicate that the encoder’s internal feature representations are robust and rank the correct sense highly across all sense categories. Nonetheless, while the ROC and AUC demonstrate exceptional discriminative capability, they must be analysed in conjunction with other metrics such as accuracy, recall, and the confusion matrix, particularly because class imbalance may distort AUC interpretation for infrequent classes.
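The apparent paradox of a class with a perfect AUC but no correct predictions can be reproduced with a toy example. The sketch below computes a one-vs-rest AUC as the fraction of (positive, negative) pairs ranked correctly and compares it with arg max recall. The probabilities are invented for illustration and do not come from the study.

```python
def auc_one_vs_rest(scores, is_positive):
    """AUC as the probability that a positive outranks a negative (ties count half)."""
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0
               for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical probabilities [p_common, p_rare] for five sentences.
probs = [[0.65, 0.35], [0.70, 0.30], [0.95, 0.05], [0.90, 0.10], [0.98, 0.02]]
gold = [1, 1, 0, 0, 0]  # 1 = rare sense, 0 = common sense

rare_scores = [row[1] for row in probs]
auc_rare = auc_one_vs_rest(rare_scores, [g == 1 for g in gold])
predicted = [row.index(max(row)) for row in probs]
rare_recall = sum(p == 1 and g == 1 for p, g in zip(predicted, gold)) / gold.count(1)
print(auc_rare, rare_recall)
```

Here the rare sense always receives a higher score on its own sentences than on the others, so its ranking is perfect, yet the common sense still has the larger probability everywhere and wins every arg max decision. Adjusting decision thresholds or class weights is one way to turn good ranking into correct predictions.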

The ROC curve substantiates the encoder’s resilience and generalisation, strongly affirming its efficacy even in isolation. However, for practical implementation or wider applicability, it may still gain from the decoder’s contextual enhancement and superior performance on under-represented meanings.

The accuracy and training vs. validation loss curves in Figure 17 provide insight into the performance and learning dynamics of the optimised BART model over five epochs. The training accuracy shows a steady increase, beginning at around 49% and reaching almost 87% by epoch 4. The validation accuracy increases from around 72% to 81% by epoch 3, but thereafter declines to 79% in epoch 4. This indicates that the model is learning efficiently; yet the slight decline in validation accuracy is an early sign of overfitting, whereby the model fits the training data better than it generalises to unseen data.

Figure 17.

Training vs. validation accuracy and loss for BART with ablation.

The training loss declines markedly from 0.94 to 0.36, indicating effective optimisation of the model on the training data. The validation loss decreases steadily until epoch 3 (from 0.77 to around 0.52), then rises modestly in epoch 4. This small gap between training and validation loss reinforces the indication of overfitting: the model keeps improving on the training data but may be starting to memorise rather than generalise. These tendencies are characteristic of deep learning models and suggest that, while the BART model is resilient and well-optimised, early stopping at epoch 3 may improve generalisation performance. Applying regularisation methods (e.g., dropout, weight decay) or augmenting the training dataset may improve performance further while limiting the risk of overfitting. The findings indicate robust learning capacity, with validation measures remaining high and stable.

The training configuration for the optimised BART model used a batch size of 8, balancing memory efficiency and gradient stability. The model was trained for five epochs, providing enough iterations for learning while limiting overfitting. The maximum sequence length was limited to 128 tokens, respecting transformer input constraints while preserving contextual integrity. A learning rate of 8 ×103 was used, an often useful parameter for fine-tuning transformer-based structures, facilitating progressive convergence. To rectify class imbalance, SMOTE (Synthetic Minority Over-sampling Technique) was used, augmenting the representation of under-represented classes. Focal loss was deactivated (USE_FOCAL = False), indicating reliance on conventional cross-entropy loss rather than a loss that emphasises hard-to-classify instances. The gamma parameter of the focal loss was set to 2.0 for potential activation in future ablation studies.
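For reference, the focal loss that the configuration leaves disabled differs from cross-entropy only by a modulating factor (1 − p)^γ on the probability p assigned to the correct class. The sketch below compares the two for a single example using γ = 2.0 as in the text. The function names and the α parameter are illustrative assumptions and are not taken from the study’s code.

```python
import math


def cross_entropy(p_true: float) -> float:
    """Cross-entropy for one example, given the probability of the true class."""
    return -math.log(p_true)


def focal_loss(p_true: float, gamma: float = 2.0, alpha: float = 1.0) -> float:
    """Focal loss: cross-entropy scaled by alpha * (1 - p_true) ** gamma."""
    return alpha * (1.0 - p_true) ** gamma * cross_entropy(p_true)


for p in (0.9, 0.6, 0.2):  # easy, moderate, and hard examples
    print(p, round(cross_entropy(p), 4), round(focal_loss(p), 4))
```

With γ = 2.0, an example already classified with probability 0.9 contributes about one hundredth of its cross-entropy, so training effort shifts towards hard, often rare-sense, examples. Setting γ = 0 recovers ordinary cross-entropy.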

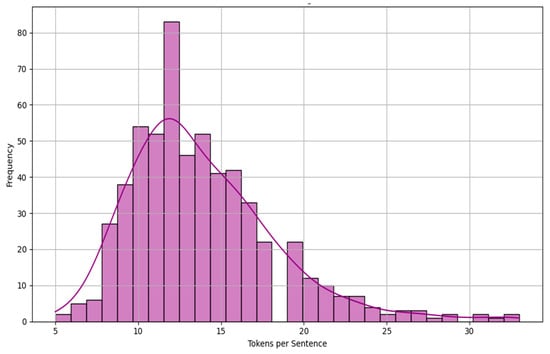

The histogram in Figure 18 depicts the distribution of tokenised sentence lengths in the corpus. The majority of sentences consist of 9 to 17 tokens, with the highest frequency at about 12 tokens, indicating that this is the predominant sentence length after tokenisation using the XLM-R tokeniser. The distribution has a right skew, with a small but notable proportion of sentences exceeding 20 tokens, and some reaching 30. This tail indicates the existence of outlier sentences characterised by elevated lexical complexity or intricate structures.

Figure 18.

Tokenised sentence length distribution.

The roughly bell-shaped curve, although skewed, indicates a quasi-normal distribution of sentence lengths in this corpus, which often signifies a linguistically balanced dataset. Nevertheless, the extended right tail may affect model performance, especially if sentence length correlates with semantic density or translation complexity. This knowledge is essential for sequence modelling, machine translation, or word sense disambiguation, since it guides the choice of padding length, maximum sequence length, and batching strategy. Because most sentences contain about 12 tokens, padding to 16 or 20 tokens may improve computational efficiency while ensuring adequate coverage. Furthermore, attention-based models (e.g., transformers) may perform inconsistently across varying sentence lengths, making this distribution important for error analysis and the assessment of model robustness.
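The link between the length distribution and padding choices can be sketched as follows: pick a maximum length from a percentile of the observed lengths, then pad each batch only to its own longest sequence (dynamic padding). The token lengths, token IDs, function names, and the 90th-percentile choice are hypothetical and are used only to illustrate the idea.

```python
def percentile_length(lengths, pct=95):
    """Smallest observed length that covers at least pct percent of sentences."""
    ordered = sorted(lengths)
    rank = -(-pct * len(ordered) // 100)  # integer ceiling of pct * n / 100
    return ordered[max(rank, 1) - 1]


def pad_batch(batch, pad_id=0, max_length=None):
    """Pad (and truncate, if needed) each sequence to the batch's longest length."""
    target = max(len(seq) for seq in batch)
    if max_length is not None:
        target = min(target, max_length)
    return [seq[:target] + [pad_id] * (target - len(seq[:target])) for seq in batch]


# Hypothetical tokenised lengths with a right tail, loosely echoing Figure 18.
lengths = [9, 10, 11, 12, 12, 12, 13, 14, 17, 30]
max_len = percentile_length(lengths, pct=90)
batch = [[5, 8, 2], [7], [4, 4, 9, 1, 3]]
print(max_len, pad_batch(batch, pad_id=0, max_length=max_len))
```

Choosing the cap from a percentile keeps a single very long sentence from dictating the padded length of every batch, while dynamic padding avoids padding short batches up to the global maximum.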

Transformers require few inductive biases, making them suitable for many purposes. Their simple architecture lets them handle images, videos, text, and audio using comparable processing blocks, making them scalable to massive networks and datasets. Recent trends suggest replacing convolutional neural networks (CNNs) with attention mechanisms, similar to the earlier shift from recurrent neural networks to transformers [34].

The “optimised BART” framework developed in this research can be used for languages with various morphological structures beyond Sesotho sa Leboa. The framework is a fine-tuned version of BART for morphologically rich or low-resource languages, so the key question is whether its tokenisation strategies, embedding modifications, and data augmentation techniques are morphologically aware and flexible. If it uses subword or morpheme-aware tokenisers and has been pretrained or fine-tuned on complex morphological patterns, it may transfer well to other languages with comparable typologies.

Sesotho sa Leboa is agglutinative, meaning that words are formed by concatenating separate morphemes, each carrying grammatical content. A system optimised for this structure, for example through subword tokenisation or character-level modelling, could likely be applied to other agglutinative languages such as Zulu, Swahili, or Turkish with minimal modification. Research suggests that linguistically informed subword representations, such as morphemes, phonemes, or graphemes, enhance cross-lingual transfer and NLP performance in low-resource languages [35]. Strategies for low-resource agglutinative morphological inflection, such as convolution modules, syllable features, and data augmentation, improve generalisation in such languages [36].
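To illustrate why subword segmentation suits agglutinative morphology, the toy sketch below splits a word into morphemes by greedy longest match. It is not the tokeniser used in this study, and it is simpler than learned subword methods such as BPE. The function name `segment` and the hand-written morpheme inventories are assumptions made for illustration, using Swahili and Turkish, two of the agglutinative languages named above.

```python
def segment(word, morphemes):
    """Greedy longest-match split of `word` into known morphemes.

    Characters not covered by the inventory are emitted one at a time,
    so the function always returns a complete segmentation.
    """
    pieces, start = [], 0
    longest = max(len(m) for m in morphemes)
    while start < len(word):
        # Try the longest candidate first, then shrink towards one character.
        for end in range(min(len(word), start + longest), start, -1):
            if word[start:end] in morphemes:
                pieces.append(word[start:end])
                start = end
                break
        else:
            pieces.append(word[start])
            start += 1
    return pieces


# Hand-written toy inventories (illustrative, not learned from data).
SWAHILI = {"ni", "na", "ku", "penda"}    # I / present / you / love
TURKISH = {"ev", "ler", "iniz", "den"}   # house / plural / your / from

print(segment("ninakupenda", SWAHILI))   # "I love you"
print(segment("evlerinizden", TURKISH))  # "from your houses"
```

Because each morpheme maps to one reusable unit, the same segmentation routine works for both languages once the inventory is swapped, which is the property that would make a framework tuned for one agglutinative language portable to another.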

Optimised BART may perform poorly in languages from other morphological families, such as fusional (Spanish, Russian), isolating (Mandarin), or polysynthetic (Inuktitut) languages, unless it uses morphology-agnostic or flexible designs. Fusional languages compress several grammatical features into single morphemes, isolating languages depend on word order and context, and polysynthetic languages pack rich syntactic information into single words. To remain effective across these families, a model tuned for agglutinative morphology may need enhanced tokenisation algorithms and morphological adaptation layers.

Optimised BART’s generalisation across morphological systems depends on several factors. First, tokeniser flexibility, including subword or morpheme-based tokenisation, is essential for capturing diverse morphological features. Second, model design matters: a multilingual pretrained foundation such as mBART, or adapter layers, may capture typological variation. Third, transfer learning, multilingual pretraining, and data augmentation are crucial for improving low-resource morphological inflection [37]. Assessments of morphological processing also highlight the growing importance of unsupervised or weakly supervised procedures, particularly in low-resource environments [38].

Provided its optimisation is morphology-aware and flexible, the optimised BART framework may be applied to other morphologically complex languages, notably those typologically similar to Sesotho sa Leboa. Achieving similar performance in languages from other morphological families may require changes to tokenisation or architecture, or richer training approaches.

5. Conclusions

The evaluation of all four algorithms—ELMo, BART, XLNet, and CNN—demonstrates that carefully designed optimisation strategies yield substantial and statistically significant improvements in resolving meaning conflation deficiency (MCD) for Sesotho sa Leboa. Across the board, enhancements such as morphology-aware preprocessing, token-level highlighting, class-balanced learning, transfer learning, and regularisation not only improved overall accuracy but also strengthened the models’ ability to distinguish rare senses and preserve sense boundaries in morphologically rich and semantically sparse contexts. Optimised ELMo and BART showed marked benefits from architecture-specific adaptations, optimised XLNet proved highly effective in leveraging permutation-based attention for long-range morphological cues, and the dropout-enhanced CNN achieved dramatic gains in generalisation and rare-sense recognition. Together, these results affirm that integrating linguistic insights with targeted computational refinements is essential for advancing sense disambiguation in low-resourced languages, offering a robust framework that can be extended to other morphologically complex linguistic settings.

Future work will concentrate on expanding the manually sense-annotated corpus to cover a wider range of domains and conversational settings, thereby improving model generalisability and robustness. Subsequent studies will investigate cross-lingual transfer learning from related Bantu languages to exploit shared morphological and semantic patterns, possibly reducing annotation costs for low-resourced languages. Furthermore, hybrid architectures that combine transformer-based models with graph-based semantic networks will be examined to capture deeper relations between senses. Adaptive data augmentation techniques designed for agglutinative morphology will be developed to mitigate class imbalance and improve the representation of rare senses. Finally, realistic deployment scenarios will be examined, including integration into educational and lexicographic tools for Sesotho sa Leboa, to assess system efficacy in real-world language technology applications.

Author Contributions

Conceptualisation, M.A.M., S.O.O. and H.D.M.; methodology, M.A.M. and H.D.M.; formal analysis, M.A.M. and H.D.M.; data curation, M.A.M. and H.D.M.; writing—original draft preparation, M.A.M. and H.D.M.; writing—review and editing, M.A.M., S.O.O. and H.D.M.; visualisation, M.A.M. and H.D.M.; supervision, S.O.O.; project administration, H.D.M.; funding acquisition, M.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Tshwane University of Technology and the National Research Foundation (NRF), grant number BAAP2204052075-PR-2023, through Sefako Makgatho Health Sciences University.

Data Availability Statement

The material used in this study may be made available on request to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Khan, W.; Daud, A.; Khan, K.; Muhammad, S.; Haq, R. Exploring the frontiers of deep learning and natural language processing: A comprehensive overview of key challenges and emerging trends. Nat. Lang. Process. J. 2023, 4, 100026.

- Orkphol, K.; Yang, W. Word Sense Disambiguation Using Cosine Similarity Collaborates with Word2vec and WordNet. Future Internet 2019, 11, 114.

- Cheng, J.; Tong, W.; Yan, W. Capsule Network Improved Multi-Head Attention for Word Sense Disambiguation. Appl. Sci. 2021, 11, 2488.

- Gujjar, V.; Mago, N.; Kumari, R.; Patel, S.; Chintalapudi, N.; Battineni, G. A Literature Survey on Word Sense Disambiguation for the Hindi Language. Information 2023, 14, 495.

- Liang, R.Y.; Zhang, C.X.; Wang, H.X.; Luo, C.Y.; Lei, T.Y.; Li, M.Z. Word Sense Disambiguation Based on Semantic Knowledge. In Proceedings of the 2019 IEEE 2nd International Conference on Electronic Information and Communication Technology (ICEICT 2019), Harbin, China, 20–22 January 2019; pp. 645–648.

- Boruah, P. A Novel Approach to Word Sense Disambiguation for a Low-Resource Morphologically Rich Language. In Proceedings of the 2022 IEEE 6th Conference on Information and Communication Technology (CICT 2022), Gwalior, India, 18–20 November 2022; pp. 1–6.

- Kokane, C.D.; Babar, S.D.; Mahalle, P.N. Word sense disambiguation for large documents using neural network model. In Proceedings of the 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kharagpur, India, 6–8 July 2021; pp. 1–5.

- Heo, Y.; Kang, S.; Seo, J. Hybrid Sense Classification Method for Large-Scale Word Sense Disambiguation. IEEE Access 2020, 8, 27247–27256.

- Camacho-Collados, J.; Pilehvar, M.T. Embeddings in Natural Language Processing. In Proceedings of COLING 2020, 28th International Conference on Computational Linguistics: Tutorial Abstracts, Barcelona, Spain, 8–13 December 2020; pp. 10–15.

- Pilehvar, M.T. On the Importance of Distinguishing Word Meaning Representations: A Case Study on Reverse Dictionary Mapping. In Proceedings of NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics; pp. 2151–2156.

- Wu, L.; Zheng, Z.; Qiu, Z.; Wang, H.; Gu, H.; Shen, T.; Qin, C.; Zhu, C.; Zhu, H.; Liu, Q.; et al. A survey on large language models for recommendation. World Wide Web 2024, 27, 60.

- Rodrigues da Silva, J.; Caseli, H.M. Sense representations for Portuguese: Experiments with sense embeddings and deep neural language models. Lang. Resour. Eval. 2021, 55, 901–924.

- Alshattnawi, S.; Shatnawi, A.; Alsobeh, A.M.R.; Magableh, A.A. Beyond Word-Based Model Embeddings: Contextualized Representations for Enhanced Social Media Spam Detection. Appl. Sci. 2024, 14, 2254.

- Loureiro, D.; Jorge, A.M.; Camacho-Collados, J. LMMS reloaded: Transformer-based sense embeddings for disambiguation and beyond. Artif. Intell. 2022, 305, 103661.

- Kumar J, A.; Trueman, T.E.; Cambria, E. Fake News Detection Using XLNet Fine-Tuning Model. In Proceedings of the 2021 International Conference on Computational Intelligence and Computing Applications (ICCICA 2021), Nagpur, India, 26–27 November 2021; pp. 1–4.

- Li, H.; Choi, J.; Lee, S.; Ahn, J.H. Comparing BERT and XLNet from the Perspective of Computational Characteristics. In Proceedings of the 2020 International Conference on Electronics, Information, and Communication (ICEIC 2020), Barcelona, Spain, 19–22 January 2020; pp. 2–5.

- Athithan, S.; Jain, A.; Sachi, S.; Singh, A.K.; Sharma, Y.K. Twitter Fake News Detection by Using Xlnet Model. In Proceedings of the International Conference on Technological Advancements in Computational Sciences (ICTACS 2023), Tashkent, Uzbekistan, 1–3 November 2023; pp. 868–872.

- Mathews, A.; Singh, P.N. Opinion Mining from Audio Conversation using Machine Learning tools and BART Transformer. In Proceedings of the 3rd IEEE International Conference on Mobile Networks and Wireless Communications (ICMNWC 2023), Tumkur, India, 4–5 December 2023; pp. 1–6.

- Jijo, S.M.; Panchal, D.; Ardeshana, J.; Chaudhari, U. Text Summarization using Textrank, Lexrank and Bart model. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT 2024), Kamand, India, 24–28 June 2024; pp. 1–7.

- Kaur, K.; Kaur, P. Improving BERT model for requirements classification by bidirectional LSTM-CNN deep model. Comput. Electr. Eng. 2023, 108, 108699.

- Samadi, M.; Mousavian, M.; Momtazi, S. Deep contextualized text representation and learning for fake news detection. Inf. Process. Manag. 2021, 58, 102723.

- Mao, X.; Tian, Y.; Jin, T.; Di, B. Enhancing music audio signal recognition through CNN-BiLSTM fusion with De-noising autoencoder for improved performance. Neurocomputing 2025, 625, 129607.

- Mao, T.; Fu, J.; Yoshie, O. Enhancing Argument Pair Extraction Through Supervised Fine-Tuning of the Llama Model. In Proceedings of the 2024 IEEE 3rd International Conference on Electrical Engineering, Big Data and Algorithms (EEBDA 2024), Changchun, China, 27–29 February 2024; pp. 1153–1158.

- Fang, S. Application Research on Large Language Model Attention Mechanism in Automatic Classification of Book Content. In Proceedings of the 2024 IEEE 2nd International Conference on Image Processing and Computer Applications (ICIPCA 2024), Shenyang, China, 28–30 June 2024; pp. 343–350.

- Li, W.; Zhao, J. TextRank Algorithm by Exploiting Wikipedia for Short Text Keywords Extraction. In Proceedings of the 2016 3rd International Conference on Information Science and Control Engineering (ICISCE 2016), Beijing, China, 8–10 July 2016; pp. 683–686.

- Kedtiwerasak, R.; Adsawinnawanawa, E.; Jirakunkanok, P.; Kongkachandra, R. Thai Keyword Extraction using TextRank Algorithm. In Proceedings of the 2019 14th International Joint Symposium on Artificial Intelligence and Natural Language Processing (iSAI-NLP 2019), Chiang Mai, Thailand, 30 October–1 November 2019; pp. 1–6.

- Gunawan, D.; Purnamasari, F.; Ramadhiana, R.; Rahmat, R.F. Keyword extraction from scientific articles in bahasa indonesia using textrank algorithm. In Proceedings of the 2020 4th International Conference on Electrical, Telecommunication and Computer Engineering (ELTICOM 2020), Medan, Indonesia, 3–4 September 2020; pp. 260–264.

- Pataci, T.T.; Göz, F. Multilingual and Multi-Class Sentiment Classification Using Machine Learning, BERT, and GPT-4o-mini. In Proceedings of the 2025 7th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (ICHORA 2025), Ankara, Turkiye, 23–24 May 2025.

- Sreelakshmi, K.; Premjith, B.; Chakravarthi, B.R.; Soman, K.P. Detection of Hate Speech and Offensive Language CodeMix Text in Dravidian Languages Using Cost-Sensitive Learning Approach. IEEE Access 2024, 12, 20064–20090.

- Feng, X.; Richardson, B.; Amman, S.; Glass, J. On using heterogeneous data for vehicle-based speech recognition: A DNN-based approach. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing, South Brisbane, QLD, Australia, 19–24 April 2015; pp. 4385–4389.

- Hu, Z.; Fu, Y.; Xu, X.; Zhang, H. I-Vector and DNN Hybrid Method for Short Utterance Speaker Recognition. In Proceedings of the 2020 IEEE International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA 2020), Chongqing, China, 6–8 November 2020; pp. 67–71.