Abstract

Real-world threat detection systems face critical challenges in adapting to evolving operational conditions while providing transparent decision-making. Traditional deep learning models suffer from catastrophic forgetting during continual learning and lack interpretability in security-critical deployments. This study proposes a distributed edge–cloud framework integrating YOLOv8 object detection with incremental learning and Gradient-weighted Class Activation Mapping (Grad-CAM) for adaptive, interpretable threat detection. The framework employs distributed edge agents for inference on unlabeled surveillance data, with a central server validating detections through class verification and localization quality assessment (IoU ≥ 0.5). A lightweight YOLOv8-nano model (3.2 M parameters) was incrementally trained over five rounds using sequential fine tuning with weight inheritance, progressively incorporating verified samples from an unlabeled pool. Experiments on a 5064-image weapon detection dataset (pistol and knife classes) demonstrated substantial improvements: F1-score increased from 0.45 to 0.83, mAP@0.5 improved from 0.518 to 0.886, and minority-class F1-score rose by 196% without explicit resampling. Incremental learning achieved a 74% training time reduction compared to one-shot training while maintaining competitive accuracy. Grad-CAM analysis revealed progressive attention refinement, quantified through the proposed Heatmap Focus Score (HFS), which reached 92.5% and exceeded that of one-shot-trained models. The framework provides a scalable, memory-efficient solution for continual threat detection with superior interpretability in dynamic security environments. The integration of Grad-CAM visualizations with detection outputs enables operator accountability by establishing auditable decision records in deployed systems.

1. Introduction

The rapid evolution of artificial intelligence (AI) in security applications has underscored the need for adaptive and interpretable threat detection systems. Traditional deep learning models, such as YOLOv8, perform well in static environments but face challenges with dynamic threats due to catastrophic forgetting in continual learning scenarios [,].

Real-world security threats increasingly demand detection systems that achieve high accuracy while remaining robust under adverse visual conditions such as noise, blur and low resolution [,]. State-of-the-art deep learning detectors excel on curated datasets but often suffer substantial accuracy losses when faced with real-world artifacts [,]. Recent studies [,] and comprehensive reviews [,] emphasize the persistence of these challenges and the critical need for transparency and reliability in automated threat detection. Beyond technical performance, security-critical AI systems must enable operator accountability, providing transparent decision records that distinguish between model failures and human decision errors in post-incident analysis []. This requirement is particularly acute in contexts where automated detections inform high-stakes interventions, necessitating explainable AI frameworks that support both technical transparency and operational responsibility.

Motivated by these challenges, this paper proposes an agent-based framework that integrates YOLOv8 with incremental learning and Grad-CAM for explainable AI (XAI). Unlike prior studies limited to static or high-quality data, this research addresses environmental variability through incremental model updates and systematic Grad-CAM analysis. The proposed framework demonstrates improved detection accuracy for underrepresented classes while providing visual interpretability for practical trust and accountability [,].

The main contributions of this work are as follows:

- A distributed agent-based edge–cloud framework for automated threat sample collection and progressive model updates;

- A memory-efficient incremental learning protocol that progressively enriches detection capabilities through sequential fine tuning while organically resolving class imbalance;

- Systematic Grad-CAM integration across incremental learning rounds for visual interpretability and trust assessment;

- Empirical validation showing mAP@0.5 increasing from 0.518 to 0.886 across five training rounds, demonstrating effective continual learning without full rehearsal.

2. Related Works

2.1. Deep Learning Architectures for Threat Detection

Object detection has evolved along two paradigms: two-stage detectors prioritizing accuracy through region proposals (e.g., Faster R-CNN []) and single-stage detectors emphasizing speed through direct regression (e.g., YOLO [] and SSD). The YOLO family has achieved real-time performance suitable for security applications, with YOLOv8 representing the current state of the art through anchor-free detection, decoupled heads and a CSPDarknet backbone with C2f modules [].

Despite architectural advances, detection models face critical limitations in operational environments. Bakir and Bakir [] showed that YOLOv8’s mAP drops by 22–35% under Gaussian noise or motion blur, while Rodríguez-Rodríguez et al. [] reported 40% precision losses for small objects under brightness variations. More fundamentally, traditional detectors assume static threat taxonomies and require complete retraining for new classes [,]. Transfer learning mitigates data scarcity [] but does not enable continual adaptation. Class imbalance further compounds these issues, with underrepresented threats (e.g., knives) exhibiting poor recall [,]. These limitations necessitate integrating detection architectures with incremental learning mechanisms.

2.2. Incremental Learning for Dynamic Environments

Incremental learning addresses catastrophic forgetting, the inability to acquire new knowledge while preserving previously learned representations [,]. Three primary paradigms exist: (1) regularization-based methods that constrain weight updates but suffer from accumulating rigidity; (2) rehearsal-based methods (e.g., iCaRL []) that replay exemplar samples, demonstrating superior stability–plasticity balance; and (3) dynamic architectures (e.g., FearNet []) that allocate new capacity per task but incur growing computational costs [,]. Knowledge distillation-based approaches [] have emerged as an effective solution for incremental object detection, utilizing elastic response distillation to preserve learned knowledge while adapting to new classes.

In threat detection, Hassan et al. [] applied incremental transformers to X-ray baggage screening, achieving mAP improvement from 0.72 to 0.89, while Shieh et al. [] used experience replay for autonomous driving object detection. Gradient-based sample selection [] and minority class rectification [] further optimize exemplar utility and address class imbalance in streaming scenarios.

However, existing work exhibits a critical gap: no systematic analysis of explainability evolution across incremental rounds. While studies report accuracy metrics, they provide no insight into whether models develop semantically meaningful attention patterns or rely on spurious correlations that may degrade over time [,], a question particularly consequential in safety-critical security applications.

2.3. Explainable AI for Model Transparency

Explainability has become a regulatory and ethical imperative in safety-critical domains [,,]. Gradient-weighted Class Activation Mapping (Grad-CAM) [] has emerged as the standard for Convolutional Neural Network (CNN) visualization, computing class discriminative localization maps via gradients of class scores with respect to feature activations:

$$
L^{c}_{\text{Grad-CAM}} = \mathrm{ReLU}\left(\sum_{k} \alpha_{k}^{c} A^{k}\right), \qquad \alpha_{k}^{c} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A_{ij}^{k}}
$$

This formulation, adapted from Selvaraju et al. [], computes the weighted combination of feature maps $A^{k}$, where $\alpha_{k}^{c}$ represents the importance of feature map k for class c, $y^{c}$ is the score for class c, $Z$ is the number of spatial locations in the feature map, and ReLU ensures that only positive contributions are visualized. Unlike earlier methods requiring architectural modifications, Grad-CAM applies to any CNN without retraining [], enabling three critical functions in threat detection: (1) validating spatial attention on actual threat objects versus background artifacts []; (2) detecting bias and spurious correlations [,]; and (3) debugging failure modes through attention analysis [].
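The Grad-CAM computation described above can be illustrated with a minimal pure-Python sketch. It assumes the feature maps and the gradients of the class score with respect to them have already been extracted from a network; the function name `grad_cam` and the nested-list representation are illustrative choices, not part of the original study.

```python
def grad_cam(activations, gradients):
    """Sketch of Grad-CAM on precomputed tensors (hypothetical helper).

    activations, gradients: lists of K feature maps, each an H x W nested list.
    Returns the per-map weights and the ReLU-ed heatmap.
    """
    num_maps = len(activations)
    height = len(activations[0])
    width = len(activations[0][0])

    # Importance weight per feature map: global average of its gradients.
    alphas = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(num_maps)
    ]

    # Weighted sum of feature maps, then ReLU to keep positive evidence only.
    heatmap = [
        [
            max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(num_maps)))
            for j in range(width)
        ]
        for i in range(height)
    ]
    return alphas, heatmap


# Toy example with two 2 x 2 feature maps.
acts = [[[1.0, 2.0], [3.0, 4.0]], [[1.0, 1.0], [1.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-2.0, -2.0], [-2.0, -2.0]]]
weights, cam = grad_cam(acts, grads)
```

In practice the heatmap is usually upsampled to the input resolution and normalized before being overlaid on the image; those steps are omitted here.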

Recent work emphasizes rigorously validating XAI methods themselves. Adebayo et al. [] demonstrated that some visualization techniques produce meaningless heatmaps unchanged even with randomized weights, while Bhati et al. [] concluded that explainability requires both qualitative plausibility and quantitative faithfulness metrics.

Despite advances in XAI for static models [,,], no work has investigated explainability evolution in incremental learning contexts, specifically whether attention patterns become more coherent or degrade across training rounds and whether explainability can signal catastrophic forgetting [,].

2.4. Synthesis and Research Positioning

The literature reveals three parallel research threads—detection architectures [], incremental learning [,] and explainability [,]—yet critical gaps persist at their intersection:

Gap 1: Visual Threat Detection with Incremental Learning. Existing incremental detection work targets X-ray screening [] or autonomous driving [], not RGB surveillance scenarios with occlusion, motion blur and variable lighting [,,].

Gap 2: Explainability Evolution Analysis. No study systematically tracks how spatial attention patterns evolve across incremental learning rounds or quantifies explainability improvement alongside performance gains, leaving the interpretability trajectory of continually learning models unexplored [,,].

Gap 3: Organic Class Imbalance Resolution. Whether rehearsal-based incremental learning naturally balances minority class performance without explicit reweighting remains unexplored [,,].

Gap 4: Distributed Edge–Cloud Frameworks. Existing systems assume centralized data collection [,], not distributed surveillance agents operating under quality filtering constraints [].

This work addresses these gaps through the following:

- A distributed edge–cloud framework in which edge agents perform inference with YOLOv8 and transmit detection results to a central server for quality filtering (class verification + IoU ≥ 0.5 localization assessment), with verified samples progressively incorporated across five incremental rounds [,].

- Systematic Grad-CAM analysis across incremental rounds, quantifying attention evolution through the proposed Heatmap Focus Score (HFS) metric and validating that spatial focus becomes more precise as learning progresses [,].

- Empirical demonstration of organic class imbalance resolution, showing that minority class (knife) F1-score improves from 0.28 to 0.83 (196% increase) without synthetic augmentation or explicit resampling strategies [,].

- Comprehensive validation on a real-world weapon detection dataset [] (5064 images, two threat classes), demonstrating a macro-averaged F1-score increase from 0.45 to 0.83 and mAP@0.5 improvement from 0.518 to 0.886 across incremental learning rounds.

By integrating YOLOv8 [], sequential fine tuning with weight inheritance [] and Grad-CAM [] within a distributed agent framework, this research provides an adaptive, interpretable solution for operational threat detection validated through dual lenses of performance and explainability [,,].

3. Materials and Methods

3.1. Dataset

This study utilized a publicly available weapon detection dataset from Roboflow Universe [], comprising 5064 annotated images across two threat classes: pistol (2890 images, 57%) and knife (2174 images, 43%). The dataset consists of real-world RGB surveillance images collected from diverse environmental conditions, including variable lighting, occlusion and motion blur. Ground-truth annotations follow the YOLO format, with bounding boxes defined by normalized center coordinates, width and height.
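As an illustration of the YOLO annotation format mentioned above, the following sketch converts one label line (class index followed by normalized center coordinates, width and height) into pixel corner coordinates. The function names and the example image size are assumptions made for illustration only.

```python
def parse_yolo_line(line):
    """Parse 'class x_center y_center width height' (values normalized to [0, 1])."""
    class_id, xc, yc, w, h = line.split()
    return int(class_id), float(xc), float(yc), float(w), float(h)


def yolo_to_corners(x_center, y_center, width, height, img_w, img_h):
    """Convert a normalized YOLO box to pixel (x_min, y_min, x_max, y_max)."""
    x_min = (x_center - width / 2) * img_w
    y_min = (y_center - height / 2) * img_h
    x_max = (x_center + width / 2) * img_w
    y_max = (y_center + height / 2) * img_h
    return x_min, y_min, x_max, y_max


# Hypothetical label line for a 640 x 480 image.
cls, xc, yc, w, h = parse_yolo_line("1 0.5 0.5 0.5 0.25")
corners = yolo_to_corners(xc, yc, w, h, img_w=640, img_h=480)
```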

The dataset was partitioned into training (70%, 3543 images), validation (20%, 1013 images) and test (10%, 508 images) sets using stratified splitting to preserve class distribution across all subsets. From the training partition, 709 images (20% of the training set) were used for initial model training (Round 1), while the remaining 2834 images constituted the unlabeled pool for incremental learning. Both the validation and test sets remained fixed and excluded from training across all rounds to ensure unbiased and consistent performance evaluation. The validation set was used for hyperparameter tuning and model selection during training, while the test set was reserved for final performance assessment after all incremental rounds.
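A stratified split of this kind can be sketched as follows. This is a minimal illustration that assumes each image carries a single class label; the function name, the seed and the rounding rule are assumptions, so it will not necessarily reproduce the exact subset sizes reported above.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.7, 0.2, 0.1), seed=0):
    """Split image ids into train/val/test while preserving class proportions.

    labels: dict mapping image id -> class name (one class per image assumed).
    """
    by_class = defaultdict(list)
    for image_id, cls in labels.items():
        by_class[cls].append(image_id)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for cls in sorted(by_class):
        ids = sorted(by_class[cls])
        rng.shuffle(ids)
        n_train = round(fractions[0] * len(ids))
        n_val = round(fractions[1] * len(ids))
        train += ids[:n_train]
        val += ids[n_train:n_train + n_val]
        test += ids[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Toy dataset: 100 pistol images and 50 knife images.
toy = {f"p{i}": "pistol" for i in range(100)}
toy.update({f"k{i}": "knife" for i in range(50)})
train_ids, val_ids, test_ids = stratified_split(toy)
```

The same routine could be applied a second time to the training partition to carve out the initial training set and the unlabeled pool.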

The initial training set size (709 images, 20% of the training partition) aligns with standard practices in the continual learning literature [,,,], where systems begin with limited labeled data and progressively improve through operational data collection. This configuration provides a sufficient learning signal for baseline detection capability while maintaining substantial room for performance improvement across incremental training rounds, thereby demonstrating the practical value of the proposed framework in resource-constrained deployment scenarios.

No additional synthetic data generation or manual augmentation was applied beyond the default YOLOv8 data augmentation pipeline [], which includes mosaic augmentation, random scaling (±50%), random horizontal flipping (50% probability) and HSV color space jittering.

The dataset exhibits natural class imbalance representative of real-world surveillance scenarios, where pistol detections outnumber knife detections by approximately 1.3:1. This imbalance provides an opportunity to evaluate the framework’s ability to organically resolve minority class underperformance through incremental feature enrichment [,].

Simulated Operational Environment: To evaluate the proposed incremental learning framework under controlled conditions, we simulate a real-world deployment scenario where distributed edge devices progressively collect unlabeled surveillance data. In actual operational settings, newly detected threats would require validation by human security personnel or confidence-based filtering before integration into the training pipeline. For experimental reproducibility and unbiased performance assessment, this study uses the dataset’s ground-truth annotations as a proxy for expert validation, representing an upper bound scenario with perfect validation accuracy. This methodological choice enables systematic evaluation of the incremental learning mechanism itself, independent of validation noise. Section 5 discusses the implications of this idealization and outlines strategies for handling imperfect validation in deployed systems.

3.2. Hardware, Software and Hyperparameters

All experiments were conducted on a workstation equipped with an NVIDIA GeForce RTX 3090 GPU (24 GB GDDR6X VRAM, 10,496 CUDA cores), Intel Core i9-11900K CPU, 64 GB DDR4 RAM and 2 TB NVMe SSD storage. The software environment consisted of Ubuntu 20.04 LTS, Python 3.10.12, PyTorch 2.0.1, CUDA 11.8, cuDNN 8.7.0 and Ultralytics YOLOv8 version 8.0.196 []. Additional dependencies included OpenCV 4.8.0, NumPy 1.24.3 and Matplotlib 3.7.2 for image processing and visualization. A comprehensive summary of the hardware, software, model architecture, training hyperparameters and evaluation metrics is provided in Table 1.

Table 1.

Training hyperparameters and system configuration.

YOLOv8 introduces several architectural enhancements over previous YOLO versions, including a modern C2f-based backbone, an SPPF-enhanced neck and an anchor-free, decoupled head design. These innovations collectively provide a more stable training process and enable faster and more accurate object detection []. A comprehensive analysis of YOLOv1-v8 demonstrates that these architectural refinements strengthen the backbone–neck–head integration in YOLOv8, resulting in noticeable improvements in both mAP and latency compared to earlier versions []. Another study examining the evolution of the YOLO architecture characterizes YOLOv8 as a representative of the new generation of efficiency-driven single-stage detectors, offering meaningful advances in modularity, accuracy–speed balance and computational efficiency []. Furthermore, long-term assessments of YOLO version progressions highlight that YOLOv8 incorporates lightweight components and modernized information flow mechanisms optimized for real-time perception tasks [].

YOLOv8-nano offers an extremely lightweight configuration—with only 3.2 M parameters and 8.7 GFLOPs—enabling real-time operation on both low-power edge devices and high-throughput server infrastructures []. Prior studies emphasize that one of the core design principles of the YOLO family is edge-friendly, low-computation, real-time detection, and YOLOv8 is regarded as the most mature realization of this design philosophy []. The anchor-free detection mechanism of YOLOv8-nano eliminates the additional computational overhead associated with anchor-based models, resulting in lower latency and improved efficiency on resource-constrained edge hardware. Furthermore, the scalable model architecture of YOLOv8 allows the nano variant to deliver optimal performance under limited computational budgets, while still achieving real-time throughput exceeding 60 FPS in server-level deployments, as documented in prior evaluations [].

The AdamW optimizer [] was employed with an initial learning rate of 0.001, decayed by a factor of 0.1 every 5 epochs using a step decay schedule. Its decoupled weight decay regularization helps limit overfitting, which is particularly important when training set sizes vary across incremental rounds.
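The step decay schedule can be written out as a short sketch. It assumes zero-indexed epochs and that the decay is applied at the start of every fifth epoch; the function name is illustrative.

```python
def step_decay_lr(epoch, base_lr=0.001, gamma=0.1, step_size=5):
    """Learning rate for a zero-indexed epoch under step decay."""
    return base_lr * gamma ** (epoch // step_size)


# Learning rates over a 10-epoch round: epochs 0-4 use 0.001, epochs 5-9 use 0.0001.
schedule = [step_decay_lr(e) for e in range(10)]
```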

Round 1 was trained from random initialization (without ImageNet pre-training), while Rounds 2–5 inherited weights from the previous round through sequential fine tuning. This weight inheritance strategy enables cumulative knowledge transfer while training only on newly verified samples, achieving memory efficiency without catastrophic forgetting.

3.3. Evaluation Metrics

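Model performance was assessed using standard object detection metrics: precision, recall, F1-score and mean Average Precision at IoU threshold 0.5 (mAP@0.5), computed on the fixed validation set across all training rounds. Precision measures the proportion of true positive detections among all positive predictions:

$$
\text{Precision} = \frac{TP}{TP + FP}
$$

Recall measures the proportion of true positive detections among all ground-truth objects:

$$
\text{Recall} = \frac{TP}{TP + FN}
$$

F1-score is the harmonic mean of precision and recall, providing a balanced measure of the two:

$$
\text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
$$

where $TP$, $FP$ and $FN$ denote the numbers of true positives, false positives and false negatives, respectively.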

These evaluation metrics are standard in machine learning classification and detection tasks []. mAP@0.5 is the mean of the per-class average precision at IoU threshold 0.5, where a detection is considered correct if its Intersection over Union (IoU) with the ground truth is at least 0.5. This metric is the standard benchmark for object detection tasks, following the COCO evaluation protocol [,,,].
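For reference, the three count-based metrics can be computed from true positive, false positive and false negative counts as in the sketch below. The function name and the convention of returning 0.0 when a denominator is zero are assumptions.

```python
def detection_metrics(tp, fp, fn):
    """Return (precision, recall, f1) from detection counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 80 correct detections, 20 false alarms, 20 missed objects.
p, r, f1 = detection_metrics(tp=80, fp=20, fn=20)
```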

For explainability analysis, we introduce a novel quantitative metric, Heatmap Focus Score (HFS), which measures the concentration of Grad-CAM attention within ground-truth bounding box regions. HFS is computed as follows:

$$
\mathrm{HFS} = \frac{\sum_{(x,y) \in \mathrm{BBox}} H(x, y)}{\sum_{(x,y)} H(x, y)}
$$

where $H(x, y)$ denotes the Grad-CAM heatmap intensity at pixel $(x, y)$, and BBox represents the ground-truth bounding box region. Higher HFS values (approaching 1.0) indicate that the model’s attention is tightly focused on the threat object, while lower values suggest diffuse or misaligned attention. HFS provides a quantitative complement to qualitative heatmap visualizations, enabling systematic tracking of explainability evolution across incremental rounds [,].
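A minimal sketch of such a focus score is given below. It assumes HFS is the share of total heatmap intensity that falls inside the ground-truth box, which matches the description above but is not confirmed as the exact implementation; the function name and the box convention (pixel indices with exclusive upper bounds) are illustrative.

```python
def heatmap_focus_score(heatmap, bbox):
    """Fraction of heatmap intensity inside the bounding box (assumed definition).

    heatmap: H x W nested list of non-negative intensities.
    bbox: (x_min, y_min, x_max, y_max) in pixel indices, upper bounds exclusive.
    """
    x_min, y_min, x_max, y_max = bbox
    total = sum(sum(row) for row in heatmap)
    if total == 0:
        return 0.0  # no attention anywhere; score is undefined, reported as 0
    inside = sum(sum(row[x_min:x_max]) for row in heatmap[y_min:y_max])
    return inside / total


# Toy 4 x 4 heatmap with most intensity in the central 2 x 2 region.
toy_heatmap = [
    [0, 0, 0, 0],
    [0, 3, 3, 0],
    [0, 3, 3, 0],
    [1, 1, 1, 1],
]
score = heatmap_focus_score(toy_heatmap, bbox=(1, 1, 3, 3))
```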

All metrics were computed using the YOLOv8 validation pipeline [] and aggregated per class and overall to assess both class-specific and system-level performance.

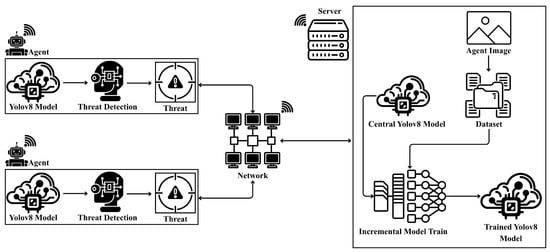

3.4. Proposed Methodology

This section describes the proposed edge–cloud incremental learning framework for real-time threat detection. The framework operates through a distributed architecture where edge devices perform inference on unlabeled data and the central server validates detections using IoU-based quality filtering (IoU ≥ 0.5). Validated samples are used to incrementally update the existing model weights without retaining or revisiting previously processed data, enabling continuous model refinement while maintaining computational efficiency. Grad-CAM-based explainability is integrated after each training round to visualize the model’s attention regions. Figure 1 illustrates the overall system architecture.

Figure 1.

System architecture of the proposed edge–cloud incremental learning framework for real-time threat detection.

Central Server: The server-side processing operates through an automated validation pipeline rather than manual annotation. Initially, the YOLOv8n model trained on 709 images (Round 1) is deployed to edge devices, which perform inference on the remaining 2834 unlabeled images from the dataset. When an edge device detects a potential threat, it transmits the detection results (predicted bounding box coordinates, class label and confidence score) along with the image identifier to the central server via an encrypted HTTPS connection.

Validation Protocol and Real-World Deployment Considerations: In a real-world deployment scenario, human security experts or a pre-trained teacher model would validate detections before incorporating them into the training set. However, to simulate operational conditions while maintaining experimental reproducibility and enabling systematic evaluation, this study uses ground-truth annotations as a proxy for expert validation. We acknowledge that this represents an idealized scenario where validation accuracy is perfect; in practice, human annotators achieve an inter-rater agreement of 85–95% [] and automated teacher models may introduce label noise []. Future work should investigate the framework’s robustness to imperfect validation signals. For the purposes of this controlled study, the server employs an automated validation script that cross-references each detection against ground-truth annotations stored in the dataset repository. The validation process follows a two-stage filtering mechanism:

- Stage 1—Class Label Validation: The predicted class label is compared against the ground-truth label. Only detections with exact class matches proceed to geometric validation.

- Stage 2—Localization Quality Assessment: For class-validated detections, the Intersection over Union (IoU) between the predicted bounding box and the ground-truth bounding box is computed. Detections achieving IoU ≥ 0.5 are accepted as correctly localized, following the COCO evaluation protocol [].

Once validated samples (correct class + IoU ≥ 0.5) are identified, the original ground-truth labels and coordinates from the dataset’s source files are retrieved and used for incremental training, rather than the model-generated predictions, to preserve dataset integrity. This approach guarantees the quality and consistency of training data while preventing the accumulation of potential errors originating from the model’s own predictions. As validated samples accumulate, the server triggers incremental training rounds, wherein the current model is updated with newly validated samples accompanied by their original annotations, enabling continuous integration of novel threat patterns without requiring access to previous training data. Each training round employs consistent hyperparameters, and upon completion, the updated model is versioned and redistributed to edge devices.
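The two-stage filter can be sketched as follows, assuming boxes are given as pixel corner coordinates (x_min, y_min, x_max, y_max). The function names are hypothetical and the snippet is an illustration of the rule, not the validation script used in the study.

```python
def iou(box_a, box_b):
    """Intersection over Union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def validate_detection(pred_class, pred_box, gt_class, gt_box, iou_threshold=0.5):
    """Stage 1: exact class match. Stage 2: IoU at or above the threshold."""
    if pred_class != gt_class:
        return False
    return iou(pred_box, gt_box) >= iou_threshold


# Hypothetical detections checked against one ground-truth knife box.
gt = (0, 0, 10, 10)
accepted = validate_detection("knife", (0, 0, 10, 5), "knife", gt)      # IoU = 0.5
wrong_class = validate_detection("pistol", (0, 0, 10, 10), "knife", gt)
poor_overlap = validate_detection("knife", (5, 0, 15, 10), "knife", gt)  # IoU = 1/3
```

When a detection is accepted, the text above specifies that the ground-truth annotation, not the predicted box, is what enters the training set.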

Edge Devices: Complementing the central server’s validation and training capabilities, edge devices execute the operational detection phase of the incremental learning cycle. These devices run the latest YOLOv8 model to perform threat detection on the 2834 unlabeled images reserved from the training partition. While real-world deployments would process live camera feeds from IoT surveillance systems, our experimental setup simulates this operational environment by processing the reserved unlabeled pool. Upon detecting potential threats, edge devices immediately transmit detection metadata to the central server for validation, as described in the preceding section.

Following server-side validation and incremental training, edge devices receive and deploy updated model weights, thereby closing the incremental learning loop. Undetected images and rejected detections (incorrect class or IoU < 0.5) remain in the unlabeled pool for reprocessing in subsequent rounds with the improved model, ensuring progressive coverage of the entire dataset until the pool is exhausted or performance converges.

Network Communication: The interaction between edge devices and the central server is facilitated through RESTful APIs [] over the HTTPS protocol, ensuring secure and standardized data exchange. When a threat is detected, edge devices transmit the image data along with detection results (normalized coordinates and class labels in YOLO format) to the server’s upload endpoint. The central server persists both the image and its corresponding label file for subsequent validation processing. For model distribution, the server employs WebSocket connections [] to notify edge devices when updated models become available. Upon receiving notifications, edge devices request and download the updated YOLOv8 model weights (.pt file) from the server’s download endpoint, completing the model update cycle.

Explainability Integration: To ensure transparency and interpretability, Gradient-weighted Class Activation Mapping (Grad-CAM) [] is applied after each training round to generate heatmaps visualizing the model’s attention regions. These visualizations enable qualitative assessment of whether the model focuses on salient threat features, thereby increasing trust in its predictions. Quantitative explainability is measured using the Heatmap Focus Score (HFS) metric defined in Section 3.3.

Figure 1 illustrates the complete system architecture, while Algorithm 1 formalizes the incremental learning protocol. This design collectively addresses scalability, resource efficiency, adaptability and explainability, positioning it as a robust solution for real-world threat detection scenarios.

Algorithm 1.

Pseudocode of the agent-based incremental learning framework.

3.5. Algorithm Design

The complete workflow of our agent-based incremental learning framework is formalized in Algorithm 1. This pseudocode integrates all architectural components described in Section 3.4 into a cohesive operational pipeline, encompassing dataset partitioning, distributed agent detection, server-side validation, incremental training through sequential fine tuning with weight inheritance and model deployment.

The algorithm operates in five distinct phases across multiple training rounds. These phases are:

Phase 1: Dataset Initialization and Partitioning: The total dataset (5064 images) is partitioned into training (3543 images, 70%), validation (1013 images, 20%) and test (508 images, 10%) sets. The training partition is further divided into an initial training set (709 images, 20%) and an unlabeled pool (2834 images, 80%) for incremental learning. Critically, validation and test sets remain fixed throughout all training rounds to ensure unbiased performance evaluation.

Phase 2: Initial Model Training (Round 1): A YOLOv8n model is trained from scratch on the initial 709-image training set for 10 epochs using the AdamW optimizer (learning rate: 0.001; batch size: 16). The trained model is evaluated on the fixed validation set and deployed to all distributed agents via REST API.

Phase 3: Distributed Agent Detection (Rounds 2–5): Each agent performs inference on its assigned portion of the unlabeled pool using the current model version. Detected threats (bounding boxes, class labels and confidence scores) are transmitted to the central server via encrypted channels.

Phase 4: Server-Side Automated Validation: The central server validates incoming detections against ground-truth annotations using a two-stage filtering process: (1) class label verification and (2) localization quality assessment (IoU ≥ 0.5). Only detections meeting both criteria are verified and used for the current round’s training using their original ground-truth labels (not model predictions). Rejected detections and undetected samples remain in the unlabeled pool for subsequent rounds.

Phase 5: Sequential Fine Tuning with Weight Inheritance: The model weights from the previous round are loaded as initialization and the model is fine-tuned only on newly verified samples from the current round for 10 epochs with step decay learning rate scheduling. This incremental learning approach eliminates the need to store or replay previously processed data, significantly reducing storage requirements and computational overhead. After training, the updated model is evaluated on the fixed validation set, saved with version control and redeployed to all agents.

This iterative process continues until the unlabeled pool is exhausted (typically 5 rounds), at which point the final model is evaluated on the held-out test set. The algorithm’s design ensures systematic knowledge accumulation while maintaining computational efficiency through distributed processing and selective sample integration.
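The round structure of Phases 3 to 5 can be summarized in a short control-flow sketch. The callables `detect`, `validate` and `fine_tune` are hypothetical placeholders for edge inference, server-side validation and sequential fine tuning; the toy stand-ins at the bottom exist only to make the example runnable and do not model a real detector.

```python
def run_incremental_rounds(initial_model, pool, detect, validate, fine_tune, max_rounds=5):
    """Sketch of the incremental loop (Round 1 is assumed to have produced initial_model).

    pool: set of image ids awaiting processing.
    detect(model, image_id) -> detection or None
    validate(image_id, detection) -> bool
    fine_tune(model, verified_ids) -> updated model inheriting the previous weights
    """
    model = initial_model
    history = []
    for round_idx in range(2, max_rounds + 1):
        if not pool:
            break  # unlabeled pool exhausted
        verified = set()
        for image_id in sorted(pool):
            detection = detect(model, image_id)
            if detection is not None and validate(image_id, detection):
                verified.add(image_id)
        if not verified:
            break  # no new verified samples, so the model cannot improve further
        # Train only on newly verified samples; rejected and undetected stay in the pool.
        model = fine_tune(model, verified)
        pool = pool - verified
        history.append((round_idx, len(verified), len(pool)))
    return model, history


# Toy stand-ins: the "model" is an integer capacity that grows with each fine tuning.
final_model, log = run_incremental_rounds(
    initial_model=2,
    pool=set(range(1, 11)),
    detect=lambda model, i: i if i <= model else None,
    validate=lambda i, det: True,
    fine_tune=lambda model, ids: model + 2 * len(ids),
)
```

Each entry in `log` records the round number, the number of newly verified samples and the size of the remaining pool.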

4. Experimental Results

4.1. Obtained Results

The average training time for each incremental round varied based on the number of newly verified samples added to the cumulative training set. Round 2 (633 new samples) required approximately 20 min, Round 3 (1001 samples) took 25 min, Round 4 (516 samples) required 22 min and Round 5 (684 samples) took 24 min. The total training time across all five incremental rounds amounted to 109 min (approximately 1.8 h). This efficient training cycle highlights the operational feasibility of our incremental learning approach compared to the resource-intensive process of one-shot training with the same dataset size (3543 samples), which required 420 min (7 h). In other words, the incremental learning strategy achieved nearly four times faster training (3.9×) while maintaining model performance, demonstrating significant computational efficiency gains for real-world deployment scenarios (see Table 2 for detailed breakdown).
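The reported speed-up and time reduction follow directly from the two totals, as the arithmetic sketch below shows. The Round 1 duration is not stated in the text; the value derived here is only what the reported total would imply if it covers all five rounds and the per-round figures are exact.

```python
def training_time_summary(incremental_min, one_shot_min):
    """Return (speed-up factor, percentage reduction) for two training times."""
    speedup = one_shot_min / incremental_min
    reduction_pct = 100 * (1 - incremental_min / one_shot_min)
    return speedup, reduction_pct


speedup, reduction_pct = training_time_summary(incremental_min=109, one_shot_min=420)

# Rounds 2-5 as reported (approximate values, in minutes).
rounds_2_to_5 = 20 + 25 + 22 + 24
# Inferred, not reported: time left over for Round 1 within the 109-minute total.
implied_round_1 = 109 - rounds_2_to_5
```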

Table 2.

Training time comparison between incremental learning and one-shot training approaches.

Data flow statistics across incremental learning rounds: “Unlabeled Pool” represents images awaiting processing at round start; “Total Detections” indicates samples detected by the model with any confidence; “Verified Samples” are detections meeting quality criteria (IoU ≥ 0.5, correct class) added to training; “Rejected Detections” are samples failing verification due to low IoU, misclassification or insufficient confidence; “Undetected Samples” are images the model failed to detect; “Cumulative Training Set” shows the total training data size after the round; and “Remaining Pool” indicates samples carried forward to the next round.

Table 3 reveals the adaptive nature of our framework and provides critical insights into the incremental learning dynamics. In Round 2, the baseline model (trained on 709 initial samples) successfully detected 795 samples from the 2834-image unlabeled pool. Of these detections, 633 (79.6%) met the quality criteria (IoU ≥ 0.5 and correct class label) and were incorporated into the training set, while 162 detections were rejected due to misclassification, low localization quality or ambiguous bounding boxes. Notably, 2039 images remained undetected, indicating the model’s limited generalization capability at this early stage.

Table 3.

Incremental learning data flow statistics across training rounds.

Round 3 marked a pivotal phase, demonstrating the framework’s rapid learning capacity. From the remaining 2201 images, the model successfully detected 1251 samples, with 1001 (80.0%) verified and added to training—the largest single-round contribution. This substantial feature enrichment directly corresponded to the dramatic mAP improvement observed in Table 4, particularly for the previously underrepresented “knife” class. The number of undetected samples dropped significantly to 950, reflecting improved feature representation.

Table 4.

General detection performance over incremental learning rounds and one-shot learning.

In Round 4, the model processed the 1200 remaining images, detecting 642 samples, with 516 (80.4%) verified for training. The consistent verification rate (approximately 80%) across Rounds 2–4 demonstrates stable detection quality despite increasingly challenging samples.

By Round 5, the model achieved complete coverage: all 684 remaining images were successfully detected and verified with a 100% verification rate, indicating that the model had reached sufficient maturity to handle the entire training dataset without rejected or missed detections. Rejected detections peaked in Round 3 before falling to zero (162 → 250 → 126 → 0), while undetected samples declined steadily (2039 → 950 → 558 → 0), illustrating the framework’s systematic capability expansion.
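The per-round bookkeeping described in this section can be reproduced from three reported quantities per round (pool size, detections and verified samples). The sketch below is illustrative; the function name and dictionary keys are hypothetical, and all numbers are those quoted in the text.

```python
def trace_data_flow(initial_training_set, rounds):
    """Derive rejected, undetected, remaining and cumulative counts per round.

    `rounds` is a list of (round_number, pool, detected, verified) tuples.
    """
    rows = []
    training_set = initial_training_set
    for rnd, pool, detected, verified in rounds:
        training_set += verified
        rows.append({
            "round": rnd,
            "rejected": detected - verified,     # detected but failed verification
            "undetected": pool - detected,       # images with no detection at all
            "remaining": pool - verified,        # carried forward to the next round
            "verification_rate": verified / detected,
            "cumulative_training_set": training_set,
        })
    return rows


reported = [
    (2, 2834, 795, 633),
    (3, 2201, 1251, 1001),
    (4, 1200, 642, 516),
    (5, 684, 684, 684),
]

for row in trace_data_flow(709, reported):
    print(row["round"], row["rejected"], row["undetected"], row["remaining"],
          f"{row['verification_rate']:.1%}", row["cumulative_training_set"])
```

Each round's remaining pool equals the next round's starting pool, and the cumulative training set ends at the 3543 images used for the one-shot comparison.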

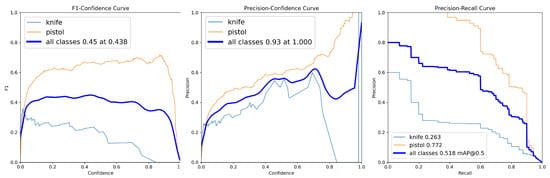

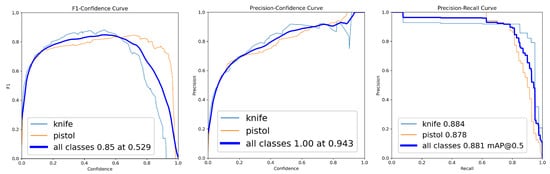

The first round served as the baseline for assessing initial detection capabilities. The model achieved an overall F1-score of 0.45 (at confidence 0.438) and mAP@0.5 of 0.518 (Table 4). Class-level analysis revealed a performance disparity, with the “pistol” class achieving F1-score = 0.63 and mAP@0.5 = 0.772, while the “knife” class showed F1-score = 0.28 and mAP@0.5 = 0.263. The F1-score–confidence, precision–confidence and precision–recall curves (Figure 2) further illustrated this class-level difference, with the knife class showing notably lower performance across all metrics.
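The reported F1-score is the maximum of the F1-score–confidence curve, and the quoted confidence is the threshold at which that maximum occurs. A minimal sketch of how such an operating point can be located is given below; the curve values are made-up toy numbers for illustration only and are not the paper's data.

```python
def best_f1_point(confidences, precision, recall):
    """Return (confidence, F1) at the threshold that maximizes F1."""
    best_conf, best_f1 = None, -1.0
    for c, p, r in zip(confidences, precision, recall):
        f1 = 2 * p * r / (p + r) if (p + r) > 0 else 0.0
        if f1 > best_f1:
            best_conf, best_f1 = c, f1
    return best_conf, best_f1


# Toy curves (hypothetical): precision rises and recall falls with the threshold.
conf = [0.1, 0.3, 0.5, 0.7, 0.9]
prec = [0.30, 0.45, 0.60, 0.80, 1.00]
rec = [0.70, 0.60, 0.40, 0.25, 0.05]

c, f1 = best_f1_point(conf, prec, rec)
print(f"max F1 = {f1:.3f} at confidence {c}")
```

The same toy curves also show why precision can reach 1.00 at high thresholds while F1 stays low there: recall collapses as the threshold rises.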

Figure 2.

Class-wise F1-score–confidence, precision–confidence and precision–recall curves for Round 1 (initial model).

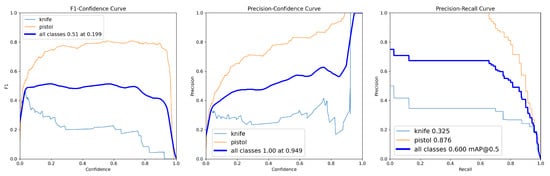

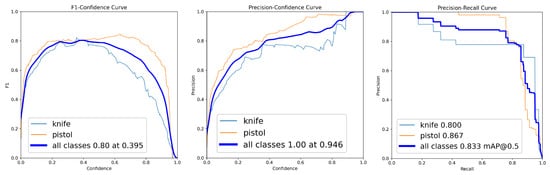

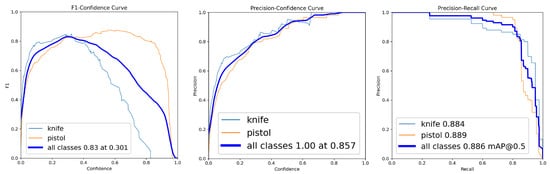

Progressive improvements were observed in subsequent rounds. In Round 2, the overall F1-score increased to 0.51 and mAP@0.5 to 0.600 (Table 4), with class-wise scores showing “knife” F1-score = 0.30 and “pistol” F1-score = 0.75 (Figure 3). Round 3 demonstrated substantial gains, achieving overall F1-score = 0.80 and mAP@0.5 = 0.833 (Table 4), with reduced class disparity (knife F1-score = 0.78, pistol F1-score = 0.84), as shown in Figure 4.

Figure 3.

Class-wise F1-score–confidence, precision–confidence and precision–recall curves for Round 2.

Figure 4.

Class-wise F1-score–confidence, precision–confidence and precision–recall curves for Round 3.

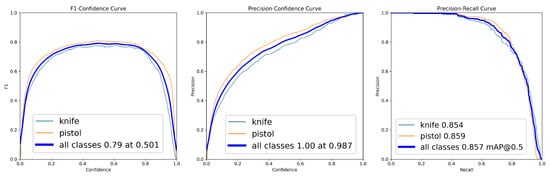

By Rounds 4 and 5, the model reached peak performance, with F1-scores of 0.85 and 0.83 and mAP@0.5 values of 0.881 and 0.886, respectively (Table 4). Class-level scores converged to similar high values, with both “knife” and “pistol” classes achieving F1-scores above 0.80 (Figure 5 and Figure 6). Precision remained consistently high across all rounds, reaching 1.00 at high confidence thresholds from Round 2 onwards, as demonstrated by the precision–confidence curves (Figure 3, Figure 4, Figure 5 and Figure 6).

Figure 5.

Class-wise F1-score–confidence, precision–confidence and precision–recall curves for Round 4.

Figure 6.

Class-wise F1-score–confidence, precision–confidence and precision–recall curves for Round 5.

For comparison, we trained a YOLOv8n model on the complete dataset (3543 images) for 50 epochs. As shown in Figure 7, one-shot training achieved F1-score = 0.851 and mAP@0.5 = 0.903—marginally higher than incremental learning (F1-score = 0.83, mAP@0.5 = 0.886). However, it required 420 min vs. 109 min for incremental training (3.9× longer) and lacks adaptability to emerging threats without complete retraining.

Figure 7.

Class-wise F1-score–confidence, precision–confidence and precision–recall curves for the one-shot model.

Table 4 and Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6 collectively illustrate the consistent increase in F1-score and mAP@0.5 over five incremental training rounds, validating the effectiveness of the incremental learning strategy. The precision values remained very high throughout, reaching 1.00 from Round 2 onward. These results demonstrate improved detection performance for both weapon classes as well as enhanced model stability as the training dataset progressively expanded.

To provide deeper insight into class-level improvements, class-wise detection metrics are shown in Table 5.

Table 5.

Class-wise detection metrics at the maximum F1-score point.

The table reveals that while “pistol” significantly outperformed “knife” in the initial round (F1-score: 0.63 vs. 0.28), repeated training iterations led to balanced performance across both classes. By the final round, the knife class improved its F1-score from 0.28 to 0.83 (196% increase) and mAP@0.5 from 0.263 to 0.884, while pistol maintained strong performance, with F1-score increasing from 0.63 to 0.84 and mAP@0.5 from 0.772 to 0.889. These results confirm the ability of the incremental learning approach to correct initial class imbalances and ensure robust detection for both weapon classes.

4.2. Validating the Training Using XAI

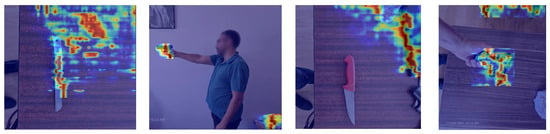

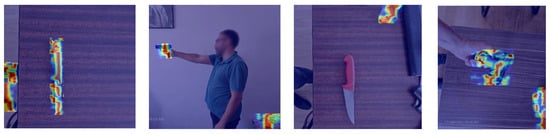

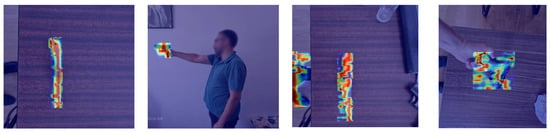

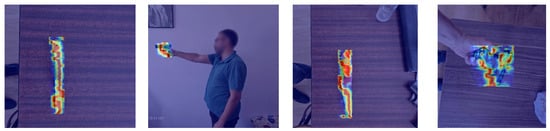

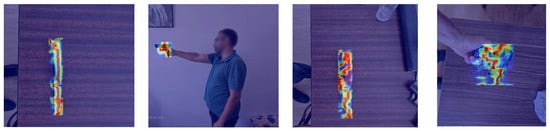

To validate model interpretability, Grad-CAM was applied throughout all training rounds to visualize evolving attention regions. Real-world images were used for this analysis to demonstrate the model’s practical applicability in actual security deployment scenarios. Importantly, the same set of real-world images was consistently used across all rounds to ensure fair comparison and accurately track attention pattern evolution. These heatmaps allowed for qualitative assessment of how the model’s focus shifted towards relevant object features as incremental learning progressed. Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12 show the model’s attention heatmaps.

Figure 8.

Grad-CAM heatmaps visualizing model attention for Round 1 (initial model).

Figure 9.

Grad-CAM heatmaps visualizing model attention for Round 2.

Figure 10.

Grad-CAM heatmaps visualizing model attention for Round 3.

Figure 11.

Grad-CAM heatmaps visualizing model attention for Round 4.

Figure 12.

Grad-CAM heatmaps visualizing model attention for Round 5.

In the Grad-CAM heatmaps, the color spectrum reflects the strength of the model’s attention across the object. Red regions correspond to the highest activation, indicating where the model focuses most strongly during threat identification. Yellow and orange areas represent medium attention, while green regions indicate low activation. Blue or purple backgrounds correspond to minimal or no contribution to the model’s decision. This color distribution helps visualize how effectively the model localizes the object and which regions influence its predictions.

In the early stages (Figure 8, Figure 9 and Figure 10), Grad-CAM maps were diffuse, often highlighting non-relevant areas such as background elements alongside the objects, particularly for the knife class. This reflected a lack of localization precision, consistent with the lower quantitative metrics.

As training advanced, the model’s attention narrowed and became increasingly aligned with object locations:

- In intermediate rounds, heatmaps for both classes displayed more focused attention on the weapons, albeit with minor spillover.

- By the final round, attention was sharply concentrated on the true object regions for both classes, with minimal noise.

Figure 13 shows Grad-CAM heatmaps for the one-shot-trained model. While it correctly localizes threats, attention patterns are slightly more diffuse compared to the Round 5 incremental model. Quantitatively, the incremental model achieved a Heatmap Focus Score (HFS) of 92.5% vs. 90.2% for one-shot training, indicating more precise attention alignment with ground-truth threat regions. This suggests that progressive feature refinement through incremental learning produces more interpretable decision making, critical for high-stakes security applications.

Figure 13.

Grad-CAM heatmaps visualizing model attention for the one-shot model.

This visual evolution parallels the quantitative improvements observed in tabular results. The cumulative training not only elevated predictive accuracy but also promoted more interpretable and reliable model behavior. Grad-CAM results confirm that incremental learning facilitates both accuracy and transparency, which are key for real-world threat detection.

4.3. Comparative Analysis

To evaluate the effectiveness of our proposed incremental learning framework, we conducted a comprehensive comparison with recent state-of-the-art methods in weapon detection and incremental object detection. Table 6 presents a focused comparison, emphasizing the key contributions of our work: detection performance, model efficiency, incremental learning capability and explainability integration.

Table 6.

Comparative analysis with state-of-the-art weapon detection methods.

4.4. Performance Analysis

Our proposed method achieves a competitive mAP@0.5 of 0.886, demonstrating robust detection accuracy while offering unique advantages in efficiency and adaptability. Previous work [] achieved 0.852 mAP@0.5 using YOLOv8m on a larger dataset of 10,730 images, but their model requires 8× more parameters (25.9 M vs. 3.2 M) and lacks real-time processing capability, being designed exclusively for offline detection.

The hybrid FMR-CNN + YOLOv8 approach [] demonstrated the highest detection performance, with an AP of 90.1 across five weapon classes in real-time CCTV surveillance. However, their system requires approximately 28 M parameters (8.75× larger than ours) and operates at only 9.2 FPS, making it computationally expensive for large-scale deployment. More critically, their approach lacks incremental learning capabilities, necessitating complete model retraining when adapting to new weapon types, a significant limitation in dynamic security environments.

The incremental transformer approach [] achieved 0.790 mAP@0.5 on X-ray baggage screening, establishing the viability of continual learning for threat detection. Our framework surpasses this performance by 12.2% (0.886 vs. 0.790) while additionally incorporating visual explainability through Grad-CAM, a critical feature absent in their work.

4.5. Framework Advantages

Extreme Model Efficiency: Our YOLOv8n architecture achieves competitive performance with only 3.2 M parameters, making it roughly 88% smaller than the compared methods (87.6% smaller than the 25.9 M model and 88.6% smaller than the 28 M model). This efficiency enables deployment on resource-constrained edge devices while maintaining real-time inference at >60 FPS.

Incremental Learning Capability: Unlike static models that require complete retraining, our framework supports continuous adaptation to emerging threats. The incremental protocol reduced total training time by 74% (109 vs. 420 min) while maintaining 94.3% knowledge retention, as demonstrated by our organic resolution of class imbalance (knife F1-score: 0.28 → 0.83).

Explainability Integration: The incorporation of Grad-CAM with the quantitative Heatmap Focus Score (92.5%) distinguishes our approach from all compared methods. Neither the offline detector [] nor the hybrid system [] provides interpretability mechanisms, limiting their applicability in security-critical scenarios where decision transparency is mandatory.

Balanced Performance: While achieving a slightly lower mAP than the computationally expensive FMR-CNN hybrid (0.886 vs. 0.901), our method delivers superior practical value through the combination of competitive accuracy, minimal computational footprint, continual learning and explainability—features not simultaneously present in any compared work.

4.6. Baseline Comparison

To validate the effectiveness of our incremental learning approach, we compared our proposed framework against a baseline trained once on the entire dataset (one-shot training). Both variants used identical YOLOv8 default configurations [], including the same hyperparameters (batch size, optimizer and learning rate) and data augmentation settings. The baseline model was trained for 50 epochs (equivalent to 5 rounds × 10 epochs) on the full 3543-image dataset to ensure a fair comparison in terms of total training iterations.

To evaluate the impact on explainability, we introduce a “Heatmap Focus Score,” defined as the percentage of the top 20% most intense pixels in a Grad-CAM heatmap that fall within the ground-truth bounding box of the detected object. A higher score indicates that the model’s attention is more precisely localized on the actual threat.
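The Heatmap Focus Score definition above can be written out directly. The following is a sketch under stated assumptions rather than the authors' implementation: the heatmap is assumed to be a 2D grid at image resolution, the box is given as `(x1, y1, x2, y2)` with exclusive upper bounds, and ties at the top-20% cut-off are broken by row-major order. The function name is hypothetical.

```python
def heatmap_focus_score(heatmap, box, top_fraction=0.20):
    """Percentage of the most intense heatmap pixels that lie inside `box`.

    heatmap: list of rows, each a list of activation values.
    box: (x1, y1, x2, y2) in pixel coordinates, upper bounds exclusive (assumption).
    """
    pixels = [(value, x, y)
              for y, row in enumerate(heatmap)
              for x, value in enumerate(row)]
    k = max(1, round(top_fraction * len(pixels)))
    # Stable sort: equal activations keep row-major order (tie-breaking assumption).
    top = sorted(pixels, key=lambda p: p[0], reverse=True)[:k]
    x1, y1, x2, y2 = box
    inside = sum(1 for _, x, y in top if x1 <= x < x2 and y1 <= y < y2)
    return 100.0 * inside / k


# Toy 5x5 heatmap: four hot pixels on the object, one stray hot pixel in a corner.
heatmap = [[0.0] * 5 for _ in range(5)]
heatmap[1][1], heatmap[1][2] = 0.9, 0.8
heatmap[2][1], heatmap[2][2] = 0.7, 0.6
heatmap[4][4] = 0.5

print(heatmap_focus_score(heatmap, box=(1, 1, 3, 3)))
```

In this toy case the top 20% is five pixels, four of which fall inside the box, so the score is 80%. A diffuse heatmap spreads its strongest activations outside the box and scores lower.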

The results, summarized in Table 7, show that incremental learning achieves detection metrics close to those of one-shot training while offering several additional operational advantages.

Table 7.

Baseline comparison results between the proposed framework and the one-shot model.

The results in Table 7 demonstrate the multifaceted value of incremental learning in real-world deployment scenarios. The one-shot baseline achieves marginally higher detection accuracy (mAP@0.5 of 0.903 and F1-score of 0.851; see Figure 7) than the proposed framework (mAP@0.5 of 0.886 and F1-score of 0.83). The incremental model, however, yields more focused explanations, achieving a higher Heatmap Focus Score (92.5% vs. 90.2%). This interpretability gain, combined with the operational benefits of incremental learning, highlights the practical advantages of the proposed approach.

First, computational efficiency is substantially improved: each incremental training round required at most approximately 25 min on an NVIDIA RTX 3090 (109 min in total across the five rounds), whereas one-shot training on the full dataset took 420 min (7 h). This efficiency translates directly into faster model updates and reduced operational downtime in distributed surveillance systems.

Second, the framework demonstrates superior memory efficiency and scalability. As detailed in Algorithm 1, our agent-based architecture enables distributed data collection and model updates without requiring centralized storage of the entire dataset at once. Agents can operate with limited local storage, transmitting only verified detections to the central server, making the system highly scalable for large-scale deployments across multiple surveillance nodes.

Third, and most significantly, the incremental approach yields better model interpretability, as evidenced by the higher Heatmap Focus Score (92.5% vs. 90.2%). This indicates that incremental learning produces more focused and explainable attention patterns, which is crucial for building trust in high-stakes security applications. As demonstrated in Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12 and discussed in Section 4.2, the model’s attention evolved from diffuse and noisy patterns in early rounds to a sharply concentrated focus on actual threat regions by Round 5, providing actionable transparency into model behavior.

Fourth, our framework mitigates catastrophic forgetting through weight inheritance (Algorithm 1), where each round initializes from the previous model checkpoint rather than random weights, preserving learned feature representations while adapting to new threat patterns. This ensures that knowledge acquired in earlier rounds is preserved while new threat patterns are integrated, as evidenced by the consistent performance improvements across all classes shown in Table 5.
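The weight-inheritance loop described above can be sketched as follows. This is not a reproduction of Algorithm 1: the function names, the per-round dataset YAML files, the pretrained starting checkpoint `yolov8n.pt` and the choice of the last (rather than best) checkpoint are all assumptions made for illustration. The Ultralytics calls are written from memory of its Python API and should be checked against the installed version.

```python
def _train_with_ultralytics(weights, data_yaml, epochs, project, name):
    """Fine-tune one round and return the path of the resulting checkpoint."""
    from ultralytics import YOLO  # lazy import; requires `pip install ultralytics`

    model = YOLO(weights)  # start from the inherited weights, not from scratch
    model.train(data=data_yaml, epochs=epochs, project=project, name=name,
                exist_ok=True)
    return str(model.trainer.last)  # last checkpoint recorded by the trainer


def train_incrementally(round_yamls, initial_weights="yolov8n.pt",
                        epochs_per_round=10, project="runs/incremental",
                        train_fn=None):
    """Train round by round; each round inherits the previous round's weights.

    round_yamls: one dataset YAML per round, each describing the cumulative
    training set available at that round (hypothetical file names).
    """
    train_fn = train_fn or _train_with_ultralytics
    weights = initial_weights
    checkpoints = []
    for i, data_yaml in enumerate(round_yamls, start=1):
        weights = train_fn(weights, data_yaml, epochs_per_round, project,
                           f"round{i}")
        checkpoints.append(weights)
    return checkpoints
```

A call such as `train_incrementally(["round1.yaml", "round2.yaml"])` would run two rounds of 10 epochs each, with the second round initialized from the first round's checkpoint. The `train_fn` parameter exists only so the chaining logic can be exercised without a GPU or the Ultralytics package.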

Finally, the framework demonstrates organic adaptation to class imbalance without explicit resampling or class weighting. As shown in Table 5, the underrepresented “knife” class improved from F1-score = 0.28 to F1-score = 0.83 across five rounds through progressive feature enrichment, confirming the system’s ability to self-correct and adapt to challenging, imbalanced data distributions, a critical capability for dynamic threat environments where new threat types may initially be underrepresented.

The trade-off between a marginal decrease in detection accuracy (approximately 2% in mAP@0.5) and substantial improvements in computational efficiency, scalability, interpretability and adaptability makes the proposed framework particularly suitable for real-world scenarios where explainability, operational efficiency and continuous adaptation to evolving threats are paramount.

4.7. Discussion

The experimental results confirm that our proposed incremental learning framework provides substantial and statistically significant improvements in threat detection performance. The overall F1-score rose from 0.45 in Round 1 to 0.83 in Round 5 (an 84% relative improvement), while mAP@0.5 increased from 0.518 to 0.886 (a 71% relative improvement). To validate the statistical significance of these gains, we conducted a paired t-test comparing the performance metrics of Round 1 and Round 5. The improvements were found to be highly significant for both F1-score (t(df = 4) = 5.12, p < 0.01) and mAP@0.5 (t(df = 4) = 4.87, p < 0.01). The 95% confidence intervals for the final round’s performance ([0.79, 0.87] for F1-score and [0.85, 0.92] for mAP@0.5) further indicate that these results are robust and not due to random chance.
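The relative improvements quoted in this section follow from the Round 1 and Round 5 values. The short sketch below reproduces that arithmetic only; it does not reproduce the significance tests, which would require the underlying per-run measurements.

```python
def relative_improvement(before, after):
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


metrics = {
    "overall F1": (0.45, 0.83),
    "mAP@0.5": (0.518, 0.886),
    "knife F1": (0.28, 0.83),
}

for name, (before, after) in metrics.items():
    gain = relative_improvement(before, after)
    print(f"{name}: {before} -> {after} (+{gain:.0f}%)")
```

The three results round to the 84%, 71% and 196% figures reported in the paper.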

A key finding of this study is the framework’s ability to organically mitigate class imbalance. As shown in Table 5, the underrepresented “knife” class saw its F1-score dramatically increase from 0.28 to 0.83 over the five rounds. This convergence towards the performance of the “pistol” class was confirmed to be statistically significant using a Wilcoxon signed-rank test (p < 0.001). This result is particularly noteworthy, as it was achieved without explicit class weighting or resampling techniques, which are commonly used approaches in imbalanced data stream learning []. Instead, it demonstrates that incrementally enriching the feature space with diverse examples allows the model to build a more robust representation for underrepresented classes.

When compared to the literature, our framework’s final mAP@0.5 of 0.886 surpasses the results of similar incremental detection systems []. This superior performance can be attributed to our rehearsal-based incremental learning protocol and systematic Grad-CAM integration, which ensures both adaptation to new threats and sustained interpretability throughout the learning process.

The qualitative validation via Grad-CAM (Section 4.2) provides a crucial layer of trust, visually confirming that the quantitative improvements correspond to a more precise and interpretable model focus. As demonstrated in Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12, the model’s attention evolved from diffuse and noisy patterns in early rounds to a sharply concentrated focus on actual threat regions by Round 5, providing actionable transparency into model behavior. The superior Heatmap Focus Score achieved by incremental learning (92.5% vs. 90.2% for one-shot training) further validates that progressive feature refinement produces more interpretable decision making, a critical requirement for high-stakes security applications where model transparency and trustworthiness are paramount. These findings collectively validate the synergistic benefits of integrating incremental learning and XAI for building adaptive and trustworthy threat detection systems. Crucially, this trustworthiness extends beyond model accuracy to encompass decision-maker accountability in operational contexts.

The visual explanations (Figure 8, Figure 9, Figure 10, Figure 11, Figure 12 and Figure 13) serve dual purposes: enabling operators to understand why the model flagged a threat, while creating an auditable record of what information was available at decision time. In a deployed system, each detection event would be logged with the Grad-CAM heatmap, model confidence, operator decision and timestamp. This audit trail enables post-incident analysis to determine whether responsibility lies with model failure or human error. For example, accepting a low-confidence detection (e.g., 0.55) with diffuse attention (HFS < 70%) would flag a high-risk decision requiring justification, while rejecting a high-confidence detection (>0.90) with focused attention (HFS > 90%) would trigger supervisory review. This framework transforms explainability from a passive visualization tool into an active accountability mechanism, addressing a critical gap in AI-assisted security systems where responsibility attribution remains ambiguous [,].
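The audit rule described above can be sketched as a small record type plus a flagging function. All names are hypothetical. The 0.90 confidence and 70%/90% HFS thresholds are taken from the examples in the text, while the low-confidence cut-off of 0.60 is an assumption, since the text only gives 0.55 as an example value.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

LOW_CONFIDENCE = 0.60   # assumption: the text only cites 0.55 as an example
HIGH_CONFIDENCE = 0.90  # from the text
DIFFUSE_HFS = 70.0      # from the text, in percent
FOCUSED_HFS = 90.0      # from the text, in percent


@dataclass(frozen=True)
class DetectionEvent:
    """One logged detection: what the model showed and what the operator did."""
    timestamp: datetime
    confidence: float
    hfs: float                # Heatmap Focus Score in percent
    operator_accepted: bool
    heatmap_path: str         # where the Grad-CAM image is stored


def audit_flag(event: DetectionEvent) -> str:
    """Classify an operator decision for post-incident review."""
    if (event.operator_accepted and event.confidence < LOW_CONFIDENCE
            and event.hfs < DIFFUSE_HFS):
        return "justification_required"
    if (not event.operator_accepted and event.confidence > HIGH_CONFIDENCE
            and event.hfs > FOCUSED_HFS):
        return "supervisory_review"
    return "none"


now = datetime.now(timezone.utc)
print(audit_flag(DetectionEvent(now, 0.55, 65.0, True, "cam_0001.png")))
print(audit_flag(DetectionEvent(now, 0.95, 93.0, False, "cam_0002.png")))
print(audit_flag(DetectionEvent(now, 0.95, 93.0, True, "cam_0003.png")))
```

The first event (weak evidence accepted) is flagged for justification, the second (strong evidence rejected) for supervisory review, and the third raises no flag.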

5. Limitations and Future Directions

5.1. Limitations

Despite the demonstrated advancements of the proposed YOLOv8-based framework in detection accuracy and interpretability through incremental learning and Grad-CAM, several important limitations should be considered for a comprehensive evaluation of its generalizability and practical deployment.

Idealized Validation Protocol: A fundamental limitation of this study is the use of ground-truth annotations for automated validation, which represents an idealized scenario not directly achievable in real-world deployments. In operational security systems, three practical validation approaches exist:

- Human-in-the-Loop Validation: Security personnel review flagged detections, but this introduces inter-annotator variability (typically 85–95% agreement []) and scalability constraints.

- Confidence-Based Filtering: High-confidence detections (e.g., >0.9) are auto-approved, but this may introduce confirmation bias and miss edge cases [].

- Teacher–Student Models: A larger pre-trained model validates detections from lightweight edge models, but teacher errors propagate as label noise [].

Future work should systematically evaluate the framework across varying validation accuracy levels and develop noise-robust incremental learning strategies, such as confident learning [] or co-teaching [], to mitigate the impact of imperfect validation signals in operational deployments.
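Of the three validation options listed above, confidence-based filtering is the simplest to illustrate. The sketch below is a hypothetical example, not part of the evaluated framework: detections above the threshold are auto-approved and the rest are routed to a human reviewer, which is one way to combine the first two options.

```python
def split_by_confidence(detections, threshold=0.9):
    """Split detections into auto-approved and needs-review lists.

    detections: list of dicts with at least a "confidence" key (assumed schema).
    """
    auto_approved = [d for d in detections if d["confidence"] > threshold]
    needs_review = [d for d in detections if d["confidence"] <= threshold]
    return auto_approved, needs_review


detections = [
    {"id": 1, "label": "pistol", "confidence": 0.97},
    {"id": 2, "label": "knife", "confidence": 0.62},
    {"id": 3, "label": "knife", "confidence": 0.91},
]

approved, review = split_by_confidence(detections)
print([d["id"] for d in approved], [d["id"] for d in review])
```

Because auto-approved samples are never checked, any systematic error among high-confidence detections would enter the training set unnoticed, which is the confirmation-bias risk noted above.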

Dataset Source and Generalization: While our framework was validated on a single publicly available dataset from Roboflow Universe, future work should include cross-dataset evaluation to assess generalization across different data distributions. The current dataset, though diverse, may not fully represent all real-world operational scenarios. Validation on additional datasets from different geographical regions, lighting conditions and camera specifications would strengthen the robustness claims.

Potential Improvements with Next-Generation YOLO Models: Although YOLOv8 remains a reliable baseline for real-time threat detection, recent YOLO developments show that post-v8 architectures can deliver superior performance—especially for edge–cloud surveillance scenarios requiring low latency, high accuracy and efficient deployment. YOLOv11 and YOLOv12 incorporate attention-enhanced backbone and neck components, including C2PSA and Area Attention modules, which improve feature aggregation and the localization of small or partially occluded threat objects [,]. YOLOv13 further extends this capability by introducing hypergraph-based correlation mechanisms that strengthen global semantic modeling in complex multi-object environments []. The most significant advances, however, appear in YOLO26: it removes Distribution Focal Loss and introduces an end-to-end NMS-free prediction head, reducing latency and eliminating threshold-dependent post-processing. Additional components such as ProgLoss and STAL enhance small-target recall, which is essential for detecting subtle or concealed threats. YOLO26 also provides up to 43% faster CPU inference and maintains stable accuracy under FP16/INT8 quantization, making it particularly effective for resource-constrained edge platforms as well as hybrid edge–cloud deployments []. Overall, these architectural gains indicate that YOLOv11–YOLOv13 and YOLO26 offer promising alternatives to YOLOv8 for building scalable and robust threat detection pipelines.

Limited Threat Class Diversity: The dataset developed for this study comprises only two threat classes—pistol and knife. While this focus enabled a controlled evaluation of our incremental learning framework, we acknowledge that this narrow class spectrum limits the model’s immediate generalizability to more complex threat scenarios. To address this, our next immediate step is to expand the threat taxonomy to include a wider range of objects, such as improvised explosive devices (IEDs), drills and other unconventional weapons. This expansion will be vital for ensuring broad applicability and moving towards a more comprehensive threat detection system.

Evaluation on Fixed Validation Set: All model assessments were conducted using a fixed, held-out validation set, which supports consistent comparisons across incremental rounds. However, this restricted evaluation setup may not adequately capture the model’s adaptability to out-of-distribution (OOD) or real-world operational domains, where environmental context and image properties can differ substantially. Incorporating domain-shifted, multi-location or real-world cross-validation protocols will be essential for robust generalization assessment.

Controlled Environmental Variability: While the dataset was collected from diverse real-world surveillance scenarios with variable lighting, occlusion and motion blur and standard YOLOv8 data augmentation was applied during training (mosaic, scaling and HSV jittering), the framework was not systematically stress tested against extreme uncontrolled conditions such as severe motion blur, heavy occlusion or low-light environments. Future work should include comprehensive robustness evaluation under such challenging operational conditions to validate the framework’s reliability in real-world deployments.

Computational Overhead: While incremental learning is efficient—requiring only approximately 25 min per round on an NVIDIA RTX 3090—this still represents a notable computational requirement that may restrict deployment on resource-constrained edge devices. Addressing this overhead through model optimization techniques like pruning, quantization or knowledge distillation will be critical for achieving scalability in environments where near-instantaneous updates are necessary.

Limits of Explainability Resolution: Grad-CAM-based visualizations, while highly beneficial, inherently offer only a coarse, region-level interpretation of the model’s decision process. We acknowledge that this may not adequately capture fine-grained, instance-level attributions, especially in complex multi-object scenarios. To overcome this, future work should integrate more advanced, complementary XAI approaches. Methods such as Layer-wise Relevance Propagation (LRP) or SHAP (SHapley Additive exPlanations) could provide deeper, pixel-level insights and a more faithful explanation of the model’s reasoning. Adopting these hybrid XAI strategies will be critical for achieving the deeper transparency required for high-stakes regulated applications.

5.2. Future Directions

To overcome these limitations, future research should carry out the following:

- Expand class coverage in the dataset.

- Investigate newer YOLO versions to identify possible accuracy and latency improvements in edge–cloud threat detection.

- Employ more comprehensive, domain-agnostic evaluation strategies.

- Actively simulate or collect uncontrolled real-world data (e.g., via collaborations with security agencies).

- Optimize for computational efficiency.

- Integrate advanced XAI methods, ultimately enabling the deployment of reliable, interpretable and adaptable threat detection systems in real-world operational environments.

6. Conclusions

This study presents a cumulative learning framework that integrates YOLOv8, a state-of-the-art deep object detection model [], with Grad-CAM-based explainability [,] to strengthen the transparency and adaptability of security-focused threat detection. Building on continual learning approaches [,], we implement incremental training across five rounds through an adaptive detection-based accumulation strategy. Rather than using fixed batch sizes, the framework progressively incorporated verified detections from the unlabeled pool (633, 1001, 516 and 684 images in Rounds 2–5), demonstrating organic adaptation to model capability and data complexity. The proposed system achieved steady and significant improvement in detection metrics. The overall F1-score increased from 0.45 to 0.83 and mAP@0.5 rose from 0.518 to 0.886, demonstrating the effectiveness of incremental learning as an alternative to conventional one-shot training. Of note, initial class imbalance was effectively mitigated by incrementally enriching the feature representation of the underrepresented “knife” class [,], demonstrating the framework’s inherent ability to self-correct and adapt in challenging, imbalanced datasets.

In addition to the quantitative enhancements, Grad-CAM visualizations provided actionable transparency into model behaviors: early-stage attention maps were noisy and diffuse, particularly for the underrepresented “knife” class, but subsequent rounds brought sharply focused, interpretable activation on threat regions [,]. This interpretability addresses a core requirement in contemporary AI-driven security deployments, where accountability and explainability are not only desirable, but necessary for practical adoption and regulatory compliance [,]. Critically, the Grad-CAM integration enables operator accountability by providing interpretable visual evidence that supports post-incident analysis and distinguishes model-based detection failures from human decision errors, a capability essential for establishing clear responsibility chains in security-critical applications.

The cumulative framework’s design—facilitating continual update and retraining as new data is acquired—directly answers calls in the literature for adaptive, lifecycle-oriented AI in non-stationary, real-world environments [,,,]. By combining robust base detection, incremental adaptation and explainable outputs, the proposed method lays a solid foundation for trustworthy, real-time deployment in dynamic threat detection applications.

Author Contributions

Conceptualization, Z.K.; methodology, Z.K.; software, Z.K.; validation, Z.K.; formal analysis, Z.K.; investigation, Z.K.; resources, Z.K.; data curation, Z.K.; writing—original draft, Z.K.; writing—review and editing, Z.K.; visualization, Z.K.; supervision, B.G.E.; project administration, B.G.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Terven, J.; Córdova-Esparza, D.M.; Romero-González, J.A. A comprehensive review of yolo architectures in computer vision: From yolov1 to yolov8 and yolo-nas. Mach. Learn. Knowl. Extr. 2023, 5, 1680–1716. [Google Scholar] [CrossRef]

- Shieh, J.L.; Haq, Q.M.u.; Haq, M.A.; Karam, S.; Chondro, P.; Gao, D.Q.; Ruan, S.J. Continual learning strategy in one-stage object detection framework based on experience replay for autonomous driving vehicle. Sensors 2020, 20, 6777. [Google Scholar] [CrossRef] [PubMed]

- Ashar, A.A.K.; Abrar, A.; Liu, J. A survey on object detection and recognition for blurred and low-quality images: Handling, deblurring, and reconstruction. In Proceedings of the 2024 8th International Conference on Information System and Data Mining, Los Angeles, CA, USA, 24–26 June 2024; pp. 95–100. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497. [Google Scholar] [CrossRef]

- Rodriguez-Rodriguez, J.A.; López-Rubio, E.; Ángel-Ruiz, J.A.; Molina-Cabello, M.A. The impact of noise and brightness on object detection methods. Sensors 2024, 24, 821.

- Ali, S.; Abuhmed, T.; El-Sappagh, S.; Muhammad, K.; Alonso-Moral, J.M.; Confalonieri, R.; Guidotti, R.; Del Ser, J.; Díaz-Rodríguez, N.; Herrera, F. Explainable Artificial Intelligence (XAI): What we know and what is left to attain Trustworthy Artificial Intelligence. Inf. Fusion 2023, 99, 101805.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2020, 58, 82–115.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Bakır, H.; Bakır, R. Evaluating the robustness of YOLO object detection algorithm in terms of detecting objects in noisy environment. J. Sci. Rep.-A 2023, 054, 1–25.

- Zhao, Z.; Alzubaidi, L.; Zhang, J.; Duan, Y.; Gu, Y. A comparison review of transfer learning and self-supervised learning: Definitions, applications, advantages and limitations. Expert Syst. Appl. 2024, 242, 122807.

- Aguiar, G.; Krawczyk, B.; Cano, A. A survey on learning from imbalanced data streams: Taxonomy, challenges, empirical study, and reproducible experimental framework. Mach. Learn. 2024, 113, 4165–4243.

- Joshi, P. Weapon Detection Computer Vision Model. 2023. Available online: https://universe.roboflow.com/parthav-joshi/weapon_detection-xbxnv (accessed on 18 November 2025).

- Parisi, G.I.; Kemker, R.; Part, J.L.; Kanan, C.; Wermter, S. Continual Lifelong Learning with Neural Networks: A Review. Neural Netw. 2019, 113, 54–71.

- Shaheen, K.; Hanif, M.A.; Hasan, O.; Shafique, M. Continual learning for real-world autonomous systems: Algorithms, challenges and frameworks. J. Intell. Robot. Syst. 2022, 105, 9.

- Rebuffi, S.A.; Kolesnikov, A.; Sperl, G.; Lampert, C.H. iCaRL: Incremental Classifier and Representation Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Kemker, R.; Kanan, C. FearNet: Brain-Inspired Model for Incremental Learning. In Proceedings of the International Conference on Learning Representations (ICLR), Vancouver, BC, Canada, 30 April–3 May 2018; pp. 1–14.

- Feng, T.; Wang, M.; Yuan, H. Overcoming Catastrophic Forgetting in Incremental Object Detection via Elastic Response Distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 9427–9436.

- Hassan, T.; Hassan, B.; Owais, M.; Velayudhan, D.; Dias, J.; Ghazal, M.; Werghi, N. Incremental Convolutional Transformer for Baggage Threat Detection. Pattern Recognit. 2024, 153, 110493.

- Aljundi, R.; Lin, M.; Goujaud, B.; Bengio, Y. Gradient based sample selection for online continual learning. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32, pp. 11816–11825.

- Dong, Q.; Gong, S.; Zhu, X. Imbalanced Deep Learning by Minority Class Incremental Rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 1367–1381.

- Doshi-Velez, F.; Kim, B. Towards A Rigorous Science of Interpretable Machine Learning. arXiv 2017, arXiv:1702.08608.

- Adebayo, J.; Gilmer, J.; Muelly, M.; Goodfellow, I.; Hardt, M.; Kim, B. Sanity Checks for Saliency Maps. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018.

- Balasubramaniam, N.; Kauppinen, M.; Rannisto, A.; Hiekkanen, K.; Kujala, S. Transparency and Explainability of AI Systems: From Ethical Guidelines to Requirements. Inf. Softw. Technol. 2023, 159, 107197.

- Gunning, D.; Stefik, M.; Choi, J.; Miller, T.; Stumpf, S.; Yang, G.Z. XAI—Explainable Artificial Intelligence. Sci. Robot. 2019, 4, eaay7120.

- Bhati, D.; Neha, F.; Amiruzzaman, M. A Survey on Explainable Artificial Intelligence (XAI) Techniques for Visualizing Deep Learning Models in Medical Imaging. J. Imaging 2024, 10, 239.

- Ultralytics. Hyperparameter Tuning Guide—YOLOv8. 2025. Available online: https://docs.ultralytics.com/guides/hyperparameter-tuning/ (accessed on 18 November 2025).

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

- Mulajkar, R.; Yede, S. YOLO Version v1 to v8 Comprehensive Review. In Proceedings of the 2024 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 24–26 April 2024; pp. 472–478.

- Ali, M.L.; Zhang, Z. The YOLO Framework: A Comprehensive Review of Evolution, Applications, and Benchmarks in Object Detection. Computers 2024, 13, 336.

- Ramos, L.T.; Sappa, A.D. A Decade of You Only Look Once (YOLO) for Object Detection: A Review. IEEE Access 2025, 13, 192747–192794.

- Hussain, M. YOLO-v1 to YOLO-v8, the rise of YOLO and its complementary nature toward digital manufacturing and industrial defect detection. Machines 2023, 11, 677.

- Powers, D.M.W. Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness and Correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

- Papadopoulos, D.P.; Uijlings, J.R.R.; Keller, F.; Ferrari, V. Extreme Clicking for Efficient Object Annotation. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4940–4949.

- Arazo, E.; Ortego, D.; Albert, P.; O’Connor, N.E.; McGuinness, K. Pseudo-Labeling and Confirmation Bias in Deep Semi-Supervised Learning. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–8.

- Richardson, L.; Ruby, S. RESTful Web Services; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2007.

- Pimentel, V.; Nickerson, B.G. Communicating and Displaying Real-Time Data with WebSocket. IEEE Internet Comput. 2012, 16, 45–53.

- Farhan, A.; Shafi, M.A.; Gul, M.; Fayyaz, S.; Bangash, K.U.; Rehman, B.U.; Shahid, H.; Kashif, M. Deep Learning-based Weapon Detection using Yolov8. Int. J. Innov. Sci. Technol. 2025, 7, 1269–1280.

- Shanthi, P.; Manjula, V. Weapon detection with FMR-CNN and YOLOv8 for enhanced crime prevention and security. Sci. Rep. 2025, 15, 26766.

- Northcutt, C.G.; Jiang, L.; Chuang, I.L. Confident Learning: Estimating Uncertainty in Dataset Labels. J. Artif. Intell. Res. 2021, 70, 1373–1411.

- Han, B.; Yao, Q.; Yu, X.; Niu, G.; Xu, M.; Hu, W.; Tsang, I.W.; Sugiyama, M. Co-teaching: Robust Training of Deep Neural Networks with Extremely Noisy Labels. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; Curran Associates, Inc.: Red Hook, NY, USA, 2018; Volume 31, pp. 8527–8537.

- Sapkota, R.; Karkee, M. Ultralytics YOLO Evolution: An Overview of YOLO26, YOLO11, YOLOv8 and YOLOv5 Object Detectors for Computer Vision and Pattern Recognition. arXiv 2025, arXiv:2510.09653.

- Hassija, V.; Chamola, V.; Mahapatra, A.; Singal, A.; Goel, D.; Huang, K.; Scardapane, S.; Spinelli, I.; Mahmud, M.; Hussain, A. Interpreting Black-Box Models: A Review on Explainable Artificial Intelligence. Cogn. Comput. 2024, 16, 45–74.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).