Abstract

This paper presents Multi_mil, a multilingual annotated corpus for the analysis of information operations in military discourse. The corpus consists of 1000 texts in Russian, Kazakh, and English, collected from social media and news platforms and covering military and geopolitical narratives. A multi-level annotation scheme was developed that combines entity categories (e.g., military terms, geographical references, sources) with pragmatic features such as information operation type, emotional tone, author intent, and fake claim indicators. Annotation was performed manually in Label Studio with high inter-annotator agreement (κ = 0.82). To demonstrate practical applicability, several baseline models and the proposed Onto-IO-BERT architecture were evaluated; Onto-IO-BERT achieved the best performance (macro-F1 = 0.81). The corpus enables the identification of manipulation strategies, rhetorical patterns, and cognitive influence in multilingual contexts. Multi_mil contributes to advancing NLP methods for detecting disinformation, propaganda, and psychological operations.

1. Introduction

Modern armed conflicts increasingly exhibit a hybrid nature, where traditional military operations are supplemented by information operations [1]. Within this dynamic, digital media, particularly social networks, play a crucial role both as channels for disseminating official information and as battlegrounds for overt and covert information-psychological influence. These influences include targeted disinformation, demoralization of civilians and military personnel [2], undermining trust in governmental and international institutions [3], inducing panic [4], stigmatization of specific groups [5], and the legitimization of violence. The content circulated is often fragmented, emotionally charged, and manipulative in nature [6], relying on propaganda techniques [7] and terminology specific to military discourse.

In this context, automated analysis of texts containing elements of informational influence becomes an essential tool for assessing the nature, intensity, and direction of digital threats. However, the effectiveness of such solutions depends heavily on the quality and relevance of training data [8]. Annotated corpora used to train Natural Language Processing (NLP) models must be not only linguistically grounded but also conceptually tailored to the specific characteristics of information operations in conflict settings. While open-access resources exist for Named Entity Recognition (NER) tasks [9], the majority are focused on news and encyclopedic genres, within stable domains (e.g., economics, healthcare), and are mostly limited to English-language material [10]. Crucially, such corpora often lack annotation categories essential for analyzing information operations, such as the type of influence, target audience, authorial intent [11], and indicators of falsehood.

Another methodological limitation lies in the annotation level. Many existing studies [12] rely on document-level or source-level labeling (e.g., classifying news outlets), which leads to overgeneralization and reduced model precision during testing. As Horn et al. [13] note, even known propaganda outlets may occasionally publish neutral or objective content, while reputable media may resort to propagandistic rhetoric in specific articles [14]. This underscores the need for a more fine-grained, context-sensitive annotation approach, based not on the source’s reputation but on semantic analysis of the text itself.

Despite considerable advances, existing resources are inadequate for multilingual, pragmatically oriented analysis of military information operations (IO) on social media. Domain-specific military corpora (e.g., MilTAC, DiPLoMAT) emphasise terminology or rhetoric but lack the IO-specific pragmatic layers (such as IO_TYPE, AUTHOR_INTENT, TARGET_AUDIENCE, FAKE_CLAIM) and the platform/time associations needed to analyse real-world manipulative tactics. Event extraction datasets (e.g., CMNEE, a document-level framework for Chinese military news) advance event semantics but are monolingual and not designed for IO pragmatics on social media. Propaganda corpora (e.g., corpora of propaganda techniques and the related SemEval tasks) have advanced the annotation of persuasive techniques in multiple languages, but they do not integrate IO-specific constructs (e.g., intimidation/demoralization types, engagement metrics, explicit source → text → target audience → platform links). Consequently, there is a need for a multilingual (Kazakh–Russian–English), pragmatically oriented resource focused on social media that integrates entities, context/events, and IO pragmatics into a single framework. The Multi_mil corpus was designed to address this gap.

Research objectives. This paper addresses the following objectives:

- To create a multilingual military-related corpus in Kazakh, Russian and English from social media and news sources.

- To develop a multi-level annotation scheme that jointly covers entities/context and IO pragmatics (IO_TYPE, TARGET_AUDIENCE, AUTHOR_INTENT, FAKE_CLAIM, EMO_EVAL, platform/time/engagement).

- To benchmark baseline models and propose Onto-IO-BERT, demonstrating the practical utility of the corpus for IO detection tasks.

The study’s primary contributions are as follows:

- Multi_mil corpus: 1000 texts in Kazakh, Russian and English collected from military-relevant social media and news feeds.

- A pragmatic IO framework with integrated layers that link source → text → target audience/IO type → platform/time → engagement/emotion, enabling the exploration of manipulation strategies beyond standard NER/event labels.

- Controlled, high-quality manual annotation in Label Studio with high inter-annotator agreement (κ = 0.82) and open export formats (CSV/JSON/CoNLL, etc.).

- A comparison of baseline approaches (logistic regression, support vector machines, BERT, and XLM-R) with Onto-IO-BERT, which embeds features derived from the IO ontology into the transformer. Onto-IO-BERT obtains the highest macro-F1 on the IO tasks and facilitates the extraction of relations between IO entities.

- Support for real-time threat signals, social media analytics, and cross-lingual modelling for the low-resource Kazakh language. The resource complements existing event and propaganda datasets and offers a reproducible infrastructure for extensions (new languages/platforms, finer-grained IO subtypes).

To justify the choice of architecture and situate the proposed Onto-IO-BERT model within current research trends, we draw on research on attention mechanisms in transformers. In particular, work on the Vision Transformer (ViT) has shown the effectiveness of hierarchical feature extraction and hybrid encoders; these techniques have driven significant progress in computer vision and are gradually being applied to complex, multi-level information extraction in NLP. A recent review by Neha & Bansal [15] systematizes architectural solutions for structured attention and multi-level feature aggregation. In our work, similar principles are used to integrate ontological features into a transformer architecture, allowing the model to account not only for linguistic but also for conceptual and semantic relations relevant to military cognitive discourse.

2. Related Works

The study of information operations in military discourse constitutes a multidisciplinary field encompassing corpus linguistics, critical discourse analysis [16], cognitive warfare, and strategic communication. While annotated corpora are widely used in general linguistics [17], specialized resources focused on military information operations remain underdeveloped, and resources covering information-psychological operations are particularly scarce.

Early applications of corpus methods in military contexts can be found in studies such as A. Al-Rawi’s [18], which analyzes military and defense discourse in terms of keyword frequency. Similarly, C. Dandeker’s work on Effects-Based Operations (EBO) [19] emphasizes the strategic nature of military language and its role in shaping operational doctrine [20]. However, these studies lack fine-grained entity and pragmatic annotation, limiting their usefulness for automatic processing tasks.

Recent research increasingly adopts a cognitive perspective. For example, P. Röttger et al. [21] demonstrate the potential of Large Language Models (LLMs) for analyzing tactics and narratives used in social media information operations, emphasizing the need for manual annotation to validate automated outputs. In parallel, the concept of cognitive warfare is gaining traction, focusing on the manipulation of the perception and behavior of target audiences.

Official doctrines such as JP 3–13 [22] and ATP 3–13.1 [23] define information operations as the coordinated use of influence, electronic warfare, and cyber operations. These documents provide typologies of activities, including psychological operations (PSYOP), military deception (MILDEC), and operations security (OPSEC). While useful for defining categorical schemas, these doctrines are not adapted to corpus-based or NLP-oriented methodologies.

International studies such as the STRATCOM COE report [24] analyze the convergence of Chinese and Russian information operations within NATO contexts, identifying common rhetorical strategies such as opponent demonization, legitimization, and nationalistic mobilization. These insights are particularly valuable for annotating corpora across cultural and political contexts.

Further methodological contributions come from adjacent fields. For instance, Liu et al. [25] constructed a corpus for analyzing AI policy discourse using keyword extraction and collocation analysis—a strategy that could be adapted to the military domain to detect persistent structures such as accusations, emotional markers, and moral appeals typical of influence operations.

The WIKIBIAS project [26] presents a parallel corpus of 4099 sentence pairs (pre- and post-editing), annotated for subjective bias types: framing, epistemological, and demographic. Using a two-stage annotation strategy, biased fragments are identified at the phrase level and labeled accordingly. While WIKIBIAS demonstrates how lexical and syntactic cues can reveal ideological bias, it does not cover military-specific content such as goals, targets, or operational entities.

Šeleng et al. [27] developed a SlovakBERT-based NER model for short messages about fire-related incidents in Slovak news and social media, using data annotated in the Doccano platform across seven classes (including location, equipment, keywords, and time). Though thematically different, this work illustrates the utility of annotated corpora for crisis response, highlighting methods transferable to military applications.

In parallel, event extraction has gained traction as a way to conduct deeper semantic text analysis. Sentence-level datasets such as ACE2005 [28], MAVEN [29], and DuEE [30] focus on general or financial domains, while document-level datasets like RAMS [31], WikiEvents [32], and DocEE [33] consider broader discourse context. However, these resources lack coverage of military-related events.

Notable advances have been made in developing military-specific resources. The MNEE corpus [34] provides annotated Chinese texts at the sentence level, while the more recent CMNEE project [35] offers a full corpus of 17,000 documents and over 29,000 events across eight types (e.g., Conflict, Deploy, Experiment) and eleven argument roles. CMNEE highlights the unique challenges of military corpora, including dense event structures, terminological diversity, and complex syntax.

The MilTAC (Military Text Analysis Corpus) is one of the first dedicated corpora for analyzing military discourse [36]. It contains a range of texts, including operational reports, analytical briefings, and official statements, categorized thematically and enriched with key military terminology. However, its annotation is largely lexico-semantic, lacking pragmatic features such as communicative intent, emotional tone, or disinformation indicators.

The DiPLoMAT (Discourse Processing for Military and Tactical Applications) corpus focuses on speech acts and argumentation in military and political communication [37]. It includes negotiations, press releases, and official statements annotated for rhetorical structures, causal links, and argumentative strategies. While valuable for discourse analysis, it does not address emotional manipulation or fake content, which limits its applicability in the study of information operations.

A review of the literature on military discourse and information operations reveals three groups of resources (see Table 1):

- military-domain corpora focused on terminology or events, such as MilTAC, DiPLoMAT and CMNEE;

- propaganda and disinformation resources that map persuasion techniques, framing, and bias;

- general event extraction datasets that can serve as infrastructure.

However, these resources rarely combine multilingual coverage (KK-RU-EN) with the multi-layered, pragmatically oriented annotation needed for military IO analysis, that is, explicit relations of the form source → text → target audience/IO type → platform/time → EMO_EVAL/FAKE_CLAIM/AUTHOR_INTENT. The Multi_mil corpus addresses this gap by integrating entity, context/event, and pragmatic layers on real-world data from social media and news sources.

Table 1.

Overview of Corpus Resources for Military Discourse Analysis.


| No. | Criterion | MilTAC | DiPLoMAT | CMNEE | Multi_mil |
|---|---|---|---|---|---|
| 1. | Objective | Study of tactical military lexicon and command structures | Analysis of rhetorical and pragmatic strategies in military discourse | Event extraction from Chinese military news at the document level | Automatic identification and classification of information operations in social media and news |
| 2. | Language | English | English | Chinese | Kazakh, Russian, English |
| 3. | Annotation Type | Entity-based (NER), syntactic | Discourse, rhetorical, pragmatic | Event-based | Entity + classification + interpretative (multi-level) |
| 4. | Annotation Categories | MIL_ENTITY, UNIT, TIME, LOCATION | CLAIM, RHETORICAL DEVICE, POLITENESS STRATEGY | Conflict, Deploy, Exhibit, Support, Accident, Manoeuvre, Injure, Experiment | MIL_TERM, GEO_LOC, TIME_REF, SOURCE, TARGET_ENTITY, AUTHOR_INTENT, IO_TYPE, EMO_EVAL, FAKE_CLAIM |
| 5. | Corpus Size | ~30,000 messages | ~5000 documents | 17,000 documents | 1000 texts |
| 6. | Data Format | XML, CoNLL | JSON, XML | JSON | CSV, JSON, TCB, CoNLL |
| 7. | Annotation Tools | BRAT | Programmatic annotation + expert revision | Two-stage annotation | Label Studio, expert annotation |
| 8. | Focus | Tactical terminology | Diplomatic and military discourse | Event-level information in military texts | Information-psychological operations (IO), propaganda, disinformation |
| 9. | Notable Features | Formalized military lexicon | Analysis of persuasive and rhetorical strategies | Annotation complexity: overlapping events, long arguments, co-reference | Multi-level annotation combining entities, pragmatics, and emotional tone |
| 10. | Data Sources | Military reports, briefings | Negotiations, press releases | Military news (China) | Telegram, Instagram, news aggregators |
| 11. | Limitations / Distinguishing Features | No IO pragmatics (IO_TYPE, AUTHOR_INTENT, EMO_EVAL, FAKE_CLAIM); monolingual; no clear source-audience-platform chains | Focus on rhetoric; lack of emotion/fake indicators; poor applicability to social media; no end-to-end IO layers | Event summary without IO pragmatics; monolingual; social media not covered | Multi-layered IO scheme; high inter-annotator agreement; suitability for IO detection and relation extraction; baselines + Onto-IO-BERT |
| 12. | Accessibility | Partially open | Partially open | Fully open | Partially open |

To avoid excessive generality, we draw on three lines of work directly relevant to our scope:

- propaganda and framing detection, where tagging is performed at the span level;

- event-centric military corpora (e.g., CMNEE) with document-level and argumentation roles;

- military-specific terminology resources (e.g., MilTAC).

Against this backdrop, Multi_mil adds:

- IO pragmatics (IO_TYPE, AUTHOR_INTENT, TARGET_AUDIENCE, FAKE_CLAIM, EMO_EVAL);

- multilingual coverage of KK-RU-EN with social media sources;

- explicit source → text → audience/platform/time → engagement/emotion links, which enables IO detection and relation extraction beyond standard NER/events.

In summary, existing resources focus on events (CMNEE), terminology (MilTAC), or persuasive techniques (propaganda corpora), but none of them simultaneously provides multilingual KK-RU-EN coverage and the IO pragmatics needed for applied analysis. Multi_mil bridges this gap and serves as the basis for our baseline experiments and the Onto-IO-BERT model.

3. Methods

The annotated corpus of military information operations was built using a multi-level approach involving manual annotation of social media texts according to a well-defined annotation scheme. The entire annotation process was carried out in the Label Studio environment [38] without automatic pre-annotation, which allowed precise and flexible interpretation of complex contexts.

A sample was compiled from open social networks (Telegram, Instagram) and public news aggregators and filtered using a controlled list of keywords derived from a military thesaurus and IO typology (e.g., disinformation, demoralization, delegitimization, provocation, intimidation, panic induction) to ensure thematic relevance to military/geopolitical discourse. Duplicates and cross-posts were excluded, and only texts with sufficient linguistic signal for IO analysis (i.e., emotionally charged speech, explicit military references, mentions of geopolitical entities/events) were retained. To ensure representativeness of Kazakh, Russian, and English, stratified sampling by language and platform was used, with a cap on the number of posts from any one source per day to avoid redundancy. The pilot set includes 1000 texts that meet three criteria:

- Thematic relevance to military and geopolitical topics;

- Uniqueness, with no duplicates or cross-posted content;

- Linguistic saturation with features of information influence.
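The selection steps above (keyword filtering, removal of duplicates and cross-posts, a per-source daily cap, and stratified sampling by language and platform) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the keyword set is a hypothetical English-only stand-in for the thesaurus-derived multilingual list, exact hashing of normalized text is only one simple way to detect cross-posts, and the cap value and the field names `text`, `sender_id`, `date`, `lang`, `platform` are assumptions.

```python
import hashlib
import random
import re
from collections import defaultdict

# Hypothetical keyword list; the real list is thesaurus-derived and multilingual.
IO_KEYWORDS = {"disinformation", "demoralization", "delegitimization",
               "provocation", "intimidation", "panic"}


def normalize(text):
    """Lowercase and collapse whitespace so trivially re-posted copies hash identically."""
    return re.sub(r"\s+", " ", text.lower()).strip()


def is_relevant(text, keywords=IO_KEYWORDS):
    """Keep a post only if at least one word token is in the keyword list."""
    return bool(set(re.findall(r"\w+", text.lower())) & keywords)


def filter_posts(posts, max_per_source_per_day=3):
    """Drop irrelevant posts, exact duplicates/cross-posts, and posts over the daily source cap."""
    seen_hashes = set()
    per_source_day = defaultdict(int)
    kept = []
    for post in posts:
        if not is_relevant(post["text"]):
            continue
        digest = hashlib.sha1(normalize(post["text"]).encode("utf-8")).hexdigest()
        if digest in seen_hashes:
            continue  # duplicate or cross-post
        key = (post["sender_id"], post["date"][:10])  # source + calendar day (ISO date prefix)
        if per_source_day[key] >= max_per_source_per_day:
            continue
        seen_hashes.add(digest)
        per_source_day[key] += 1
        kept.append(post)
    return kept


def stratified_sample(posts, quota_per_stratum, seed=0):
    """Draw up to `quota_per_stratum` posts from every (language, platform) stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for post in posts:
        strata[(post["lang"], post["platform"])].append(post)
    sample = []
    for key in sorted(strata):
        group = strata[key]
        sample.extend(rng.sample(group, min(quota_per_stratum, len(group))))
    return sample
```

Hashing normalized text catches verbatim cross-posts only; near-duplicate detection (e.g., shingling) would be a natural extension if paraphrased re-posts are common.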

A multi-step process was employed in Label Studio.

Pass 1 (initial annotation). Subject matter experts annotated message entities and pragmatics using the finalised label set (BIO entities and document-level categories). Annotations were exported in JSON, CSV and CoNLL formats for subsequent NER and classification tasks.

Pass 2 (peer review). A second annotator reviewed a stratified subsample for each language and platform in order to check consistency and identify complex cases (nested entities, borderline pragmatic features).

Disagreements were resolved by an adjudicator experienced in information operations (IO), based on the written criteria in the annotation guide. Disputed examples were added to the guide as case studies to prevent recurrence.
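The two-pass review with adjudication can be illustrated for a single document-level label such as IO_TYPE. In this hypothetical sketch, `labels_pass1` holds Pass 1 labels for all texts, `labels_pass2` holds the reviewer's labels for the stratified subsample, and the `decide` callback stands in for the human adjudicator; the function names and data shapes are assumptions, not part of the described tooling.

```python
def merge_annotations(labels_pass1, labels_pass2):
    """Accept Pass 1 labels that were not reviewed or that the reviewer confirmed;
    collect (text_id, pass1_label, pass2_label) triples that need adjudication."""
    agreed, disputed = {}, []
    for text_id in sorted(labels_pass1):
        first = labels_pass1[text_id]
        if text_id not in labels_pass2 or labels_pass2[text_id] == first:
            agreed[text_id] = first
        else:
            disputed.append((text_id, first, labels_pass2[text_id]))
    return agreed, disputed


def apply_adjudication(agreed, disputed, decide, guide_cases):
    """Resolve each dispute with `decide` and log it as a case study for the guide."""
    final = dict(agreed)
    for text_id, label_a, label_b in disputed:
        decision = decide(text_id, label_a, label_b)
        final[text_id] = decision
        guide_cases.append({"id": text_id, "options": (label_a, label_b), "decision": decision})
    return final
```

Logging each resolved case alongside both candidate labels mirrors the practice of adding disputed examples to the guide.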

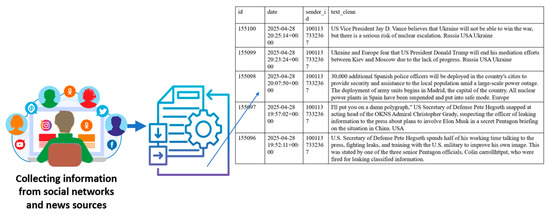

Figure 1 presents a fragment of the original news.csv file containing the cleaned text data. Each row in this dataset includes the following fields:

- id—a unique identifier for each record;

- date—the publication timestamp in ISO format;

- sender_id—an identifier for the source of the publication (e.g., a Telegram channel);

- text_clean—a cleaned version of the message text that has undergone preprocessing, including the removal of noise, hyperlinks, emojis, and other irrelevant symbols.
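A preprocessing step that produces `text_clean` from the raw message might look like the following sketch. The regular expressions are assumptions: the emoji pattern covers common pictograph, symbol, and flag blocks rather than the full Unicode emoji set, and the exact noise-removal rules used for the corpus are not specified in the text. Cyrillic (Russian and Kazakh) letters are left untouched.

```python
import re

# Hyperlinks with or without a scheme.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
# Approximate emoji coverage: pictographs, misc. symbols/dingbats, regional flags, variation selector.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\U00002600-\U000027BF\U0001F1E6-\U0001F1FF\uFE0F]")


def clean_text(raw):
    """Remove hyperlinks and emojis, then collapse whitespace."""
    text = URL_RE.sub(" ", raw)
    text = EMOJI_RE.sub(" ", text)
    return re.sub(r"\s+", " ", text).strip()
```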

Figure 1.

Structure of textual data prior to annotation.

This dataset served as the foundation for further manual annotation. The full volume of the analyzed corpus exceeds 75,000 tokens extracted from the texts. The average length of a message ranges from 15 to 20 words, with each message containing approximately 5 to 10 annotated entities, indicating a high density of semantically relevant content.

A structured annotation guide was developed specifically for the manual linguistic annotation process. It covers two levels: (a) entity-level categories, i.e., named entities annotated within text fragments (e.g., locations, military terminology, sources); and (b) document-level classification categories, such as the type of information operation, emotional tone, indicators of disinformation, author intent, and target audience.

Each annotation category is accompanied by formal assignment criteria, illustrative examples, and notes on potential exceptions. The scheme aims to formally represent the information relevant to the analysis of military information operations, capturing the key structural and content elements of the messages. The choice of tags is based on international annotation standards, doctrines of information operations, and recommendations from linguistics and propaganda analysis.

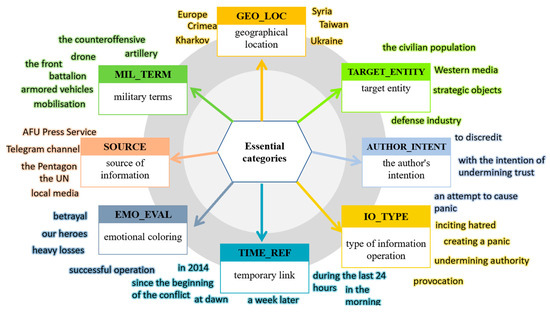

Figure 2 illustrates the taxonomy of entity categories used in annotating the corpus of texts related to military information operations. At the center of the diagram is the umbrella term Entity Categories, from which eight main classes branch out, each accompanied by examples of concrete expressions drawn from the corpus.

Figure 2.

Classification and Mention Examples.

The label MIL_TERM (military term) is grounded in standardized military terminology used in defense ministry documents worldwide, as well as in ISO TC 37 standards [36] and ACE (Automatic Content Extraction) annotation protocols, which recognize military actions and entities as a distinct class of named entities.

GEO_LOC (geographical location) and TIME_REF (temporal reference) are standard NER categories widely adopted in corpus linguistics (ISO 24617-1 [39], ISO 24617-6 [40]) and ACE programs, ensuring compatibility with established semantic frameworks.

The SOURCE label identifies the origin of information and is crucial for assessing the credibility and provenance of content. Its inclusion is supported by research in disinformation studies [41], ISO/TC 37 [42], and military doctrine practices focused on source validation.

TARGET_ENTITY captures the specific target of the information operation—a component directly aligned with military information operations structures (e.g., JP 3–13 doctrine, Kazakhstan’s national cybersecurity doctrine [43]), where identifying the threat target is fundamental to risk assessment.

AUTHOR_INTENT represents the communicative strategy underpinning the message, based on psycholinguistic and propaganda analysis frameworks (e.g., “loaded language,” “labeling”). Its inclusion enables interpretation of manipulative intent.

The label IO_TYPE (type of information operation) classifies messages by the nature of influence, such as disinformation, demoralization, or intimidation, and is rooted in military and international doctrinal sources (including the Tallinn Manual 2.0 [44] and DoD guidelines [45]). IO_TYPE includes eight categories, detailed in Table 2.

Table 2.

IO_TYPE (Type of Information Operation).

Within the annotation framework, an emotional evaluation feature (EMO_EVAL) was introduced to capture the tone of a statement and its potential psychological impact on the target audience. This classification enables the identification of rhetorical strategies and the degree of emotional intensity present in the texts (see Table 3).

Table 3.

EMO_EVAL (Emotional Evaluation).

The corpus uses a binary FAKE_CLAIM label that reflects the presence or absence of indicators of fake information (see Table 4). The True value was assigned when a statement exhibited signs of falsification or deliberate manipulation, or clearly contradicted verified facts (e.g., RU: президент отдал приказ уничтожить всех гражданских, EN: The president gave the order to eliminate all civilians). Conversely, the False value was assigned to messages containing verified and credible information without signs of fabrication (e.g., RU: в ходе операции проводилась эвакуация населения, EN: The operation included the evacuation of civilians). Including this label enables automated disinformation filtering and supports analysis of the structural patterns of fake narratives in the information space.

Table 4.

FAKE_CLAIM (Features of Fake Information).

Thus, the proposed set of labels aligns with international annotation standards, is adapted to the military context, and allows for effective structuring of data for the subsequent analysis of information operations in media environments.

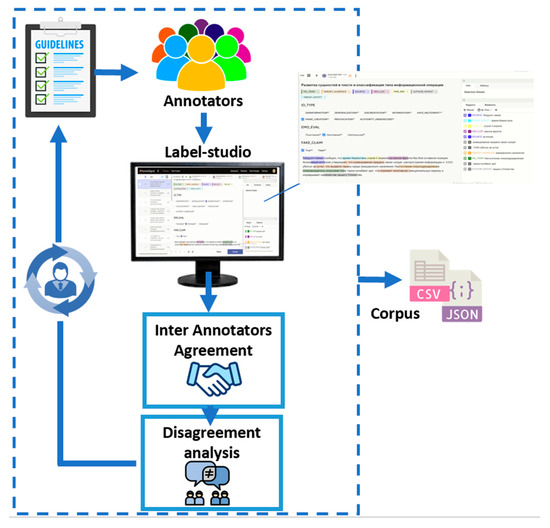

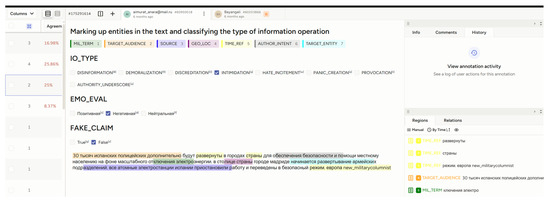

The annotation of the corpus was carried out by a team of four annotators. All team members had access to the platform, where they annotated texts related to information operations in accordance with the developed guideline (Appendix A).

To optimize the process and ensure consistency among team members, the Label Studio interface was configured with a multi-user collaboration setup (see Figure 3). This approach enabled parallel annotation of the same corpus by multiple annotators, which not only accelerated the annotation workflow but also improved overall quality through regular validation and internal feedback mechanisms within the team. By fostering continuous dialogue and comparison of annotation decisions, this setup helped to maintain high inter-annotator agreement and resolve ambiguities in real time.

Figure 3.

Annotation process of the corpus.

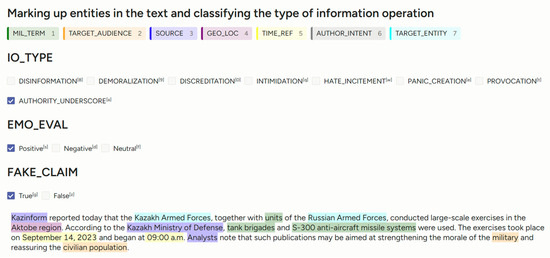

Figure 4 illustrates an example of text annotation for the automatic detection and classification of information operations. The annotation scheme covers key entities and categories (see Table 5) that correspond to various aspects of destructive informational influence.

Figure 4.

The entity annotation process.

Table 5.

Examples of entity annotation in the information operations corpus.

Annotation was carried out with attention to the semantics of each utterance and its potential role in information manipulation. The tagging system aims to formalize the text structure and prepare a training corpus for subsequent use in information operations analysis, automatic classification, and threat detection in the media environment.

Since the annotation is done manually, the primary limiting factor is the subjectivity involved when interpreting texts with complex pragmatics. Additionally, the AUTHOR_INTENT label was noted to be particularly ambiguous, requiring deep contextual analysis and possibly the integration of interpretative models.

Nevertheless, manual annotation enabled the accurate identification of key patterns of information influence and laid the groundwork for future automation.

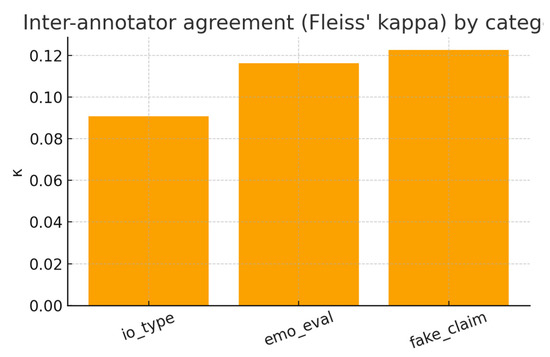

Inter-annotator agreement (IAA) [46] was evaluated on a stratified development subset (10% of the corpus, balanced by language and platform). Cohen’s kappa was calculated for message-level categories (IO_TYPE, EMO_EVAL, FAKE_CLAIM), and span-level F1 under strict span matching was calculated for entities. The results reflect the degree of labelling agreement between the experts.
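Both agreement measures can be computed in a few lines of Python. This sketch assumes two annotators, equal-length lists of message-level labels for kappa, and entity spans represented as hypothetical `(start, end, label)` tuples for span-level F1, where a span counts as matching only if its boundaries and label coincide (our reading of "strict"). It is an illustration, not the evaluation script behind the reported figures.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa: (p_o - p_e) / (1 - p_e) for two raters over the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n  # observed agreement
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement from each rater's marginal label distribution.
    p_e = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one identical label everywhere; kappa is undefined, report 1
    return (p_o - p_e) / (1 - p_e)


def strict_span_f1(reference_spans, other_spans):
    """F1 over exact (start, end, label) matches, treating one annotator as reference."""
    ref, other = set(reference_spans), set(other_spans)
    if not ref and not other:
        return 1.0
    tp = len(ref & other)
    precision = tp / len(other) if other else 0.0
    recall = tp / len(ref) if ref else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Because F1 is symmetric in precision and recall, swapping which annotator serves as the reference does not change the score.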

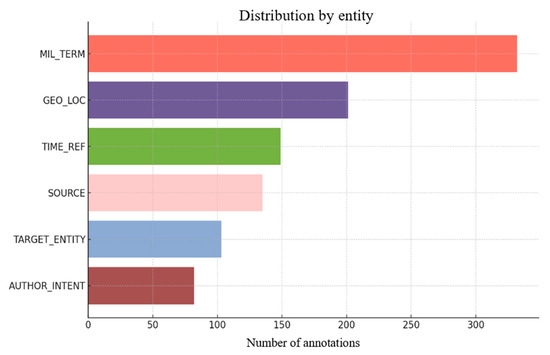

During the annotation process, over 75,000 entities were labeled across six key categories; their distribution is shown in Figure 5.

Figure 5.

Entity Distribution.

This structure enables comprehensive analysis of texts not only in terms of content but also from the perspective of pragmatics, intentionality, and targeted impact. The annotation was conducted by domain experts, and the resulting data was structured in JSON format, exported from Label Studio. This ensures compatibility with leading NLP frameworks such as spaCy, HuggingFace Transformers, Flair, and others. Additionally, export options to CoNLL and CSV formats were implemented to support sequence labeling and classification tasks.
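Converting a Label Studio JSON export into CoNLL-style BIO lines can be sketched as below. The sketch assumes the standard export shape for text-labeling projects (a list of tasks with `data.text` and `annotations[].result[]` items whose `value` carries `start`, `end`, and `labels`, or `choices` for classification controls). It uses only the first annotation per task, tokenizes on whitespace, and flattens nested spans by keeping the first overlapping span. The `from_name` keys returned for document-level labels depend on the project's labeling config, and the function names are hypothetical.

```python
import json
import re


def first_result(task):
    """Result items of the first annotation (assumes one annotation per task)."""
    annotations = task.get("annotations") or []
    return annotations[0]["result"] if annotations else []


def entity_spans(task):
    """(start, end, label) character spans from span-labeling results."""
    return [(item["value"]["start"], item["value"]["end"], item["value"]["labels"][0])
            for item in first_result(task) if item.get("type") == "labels"]


def doc_labels(task):
    """Document-level choices keyed by the control name defined in the labeling config."""
    return {item["from_name"]: item["value"]["choices"]
            for item in first_result(task) if item.get("type") == "choices"}


def to_bio(text, spans):
    """Whitespace-tokenize `text` and tag each token by character overlap with the spans."""
    tagged = []
    for match in re.finditer(r"\S+", text):
        tok_start, tok_end = match.span()
        tag = "O"
        for start, end, label in spans:
            if tok_start < end and tok_end > start:  # token overlaps this span
                tag = ("B-" if tok_start <= start else "I-") + label
                break  # nested/overlapping spans are flattened: first match wins
        tagged.append((match.group(), tag))
    return tagged


def task_to_conll(task):
    pairs = to_bio(task["data"]["text"], entity_spans(task))
    return "\n".join(f"{token}\t{tag}" for token, tag in pairs)


def export_to_conll(path):
    """Read a Label Studio JSON export and return CoNLL text, one blank line between tasks."""
    with open(path, encoding="utf-8") as f:
        tasks = json.load(f)
    return "\n\n".join(task_to_conll(task) for task in tasks)
```

Whitespace tokenization keeps attached punctuation inside tokens; a language-aware tokenizer would be a better fit for Kazakh and Russian text in practice.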

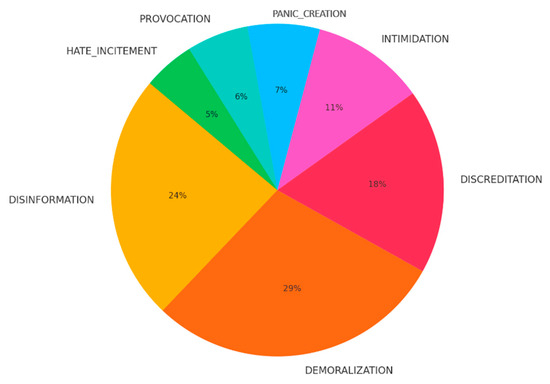

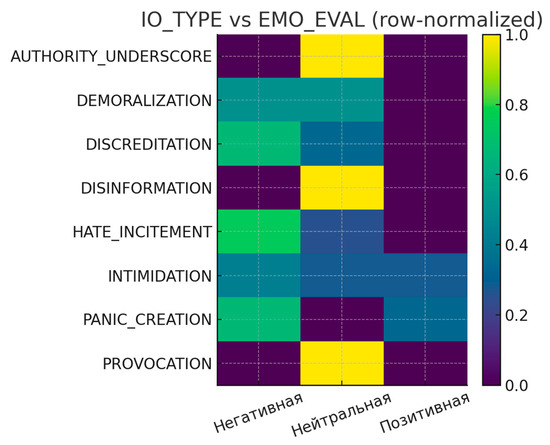

Furthermore, the corpus includes annotation of information operation types (IO_TYPE), capturing a range of rhetorical strategies commonly used in influence campaigns. Figure 6 illustrates the distribution of these categories and provides representative examples of lexical patterns frequently observed in the annotated messages.

Figure 6.

Distribution of information operation types.

Such classification enables both semantic and pragmatic analysis of messages in the context of their roles within information campaigns aimed at demoralization, intimidation, discreditation, and other forms of manipulative influence.

In this study, an entity is defined as a word or phrase that belongs to one of the predefined classes, reflecting specific features of military discourse. Classification refers to the assignment of one or more categories to a message or its segment, indicating the type of informational impact it conveys.

Let $x_i$ denote a token (word or punctuation mark) of the text, and let $X = (x_1, x_2, \ldots, x_n)$ be the sequence of all tokens in the message. The task is to assign each token $x_i$ a label $y_i$ from a predefined set:

$$y_i \in \{\text{B-}cat,\ \text{I-}cat,\ \text{O}\}, \quad i = 1, \ldots, n$$

Here:

B-cat marks the beginning of a fragment belonging to an entity category (e.g., MIL_TERM, GEO_LOC, TARGET_ENTITY), I-cat marks the continuation of the same entity, and O marks a token that does not belong to any entity.
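The BIO constraints described above (an I-cat tag may only continue a B-cat or I-cat of the same category) can be checked, and a tag sequence decoded back into entity spans, with a short helper. The function names and the example sentence are illustrative.

```python
def is_valid_bio(tags):
    """Return True if every I-cat continues a B-cat or I-cat of the same category."""
    previous = "O"
    for tag in tags:
        if tag.startswith("I-"):
            category = tag[2:]
            if previous not in (f"B-{category}", f"I-{category}"):
                return False
        elif tag != "O" and not tag.startswith("B-"):
            return False  # unknown tag shape
        previous = tag
    return True


def bio_to_spans(tags):
    """Decode a valid BIO sequence into (start, end, category) token spans, end exclusive."""
    spans, start, category = [], None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel O closes a trailing span
        if tag == "O" or tag.startswith("B-"):
            if start is not None:
                spans.append((start, i, category))
                start = None
            if tag.startswith("B-"):
                start, category = i, tag[2:]
    return spans


tokens = ["Troops", "deployed", "near", "Astana", "today"]
tags = ["B-MIL_TERM", "O", "O", "B-GEO_LOC", "B-TIME_REF"]
print(bio_to_spans(tags))  # → [(0, 1, 'MIL_TERM'), (3, 4, 'GEO_LOC'), (4, 5, 'TIME_REF')]
```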

Additionally, each annotated text unit (i.e., the entire message or paragraph) can be assigned a vector of category tags from the following sets:

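$$T_{\mathrm{IO}} = \{\text{DISINFORMATION},\ \text{DEMORALIZATION},\ \text{INTIMIDATION},\ \ldots,\ \text{AUTHORITY\_UNDERSCORE}\}$$

$$T_{\mathrm{EMO}} = \{\text{POSITIVE},\ \text{NEGATIVE},\ \text{NEUTRAL}\}$$

$$T_{\mathrm{FAKE}} = \{\text{TRUE},\ \text{FALSE}\}$$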

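Formally, the annotation task reduces to constructing a function

$$\varphi : X \mapsto \big( (y_1, \ldots, y_n),\ t_{\mathrm{IO}},\ t_{\mathrm{EMO}},\ t_{\mathrm{FAKE}} \big), \quad t_{\mathrm{IO}} \in T_{\mathrm{IO}},\ t_{\mathrm{EMO}} \in T_{\mathrm{EMO}},\ t_{\mathrm{FAKE}} \in T_{\mathrm{FAKE}},$$

where $X = (x_1, \ldots, x_n)$ is the token sequence of a message and $y_i$ is the BIO label of token $x_i$. Here φ denotes a classifier that maps the input text (a sequence of tokens) to a sequence of entity labels and to categorical features at the message level.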

The model is trained on a manually labelled corpus in which each unit is assigned:

- a sequence of BIO tags;

- the type of influence (IO_TYPE);

- the emotional tone (EMO_EVAL);

- the presence of indicators of falsified information (FAKE_CLAIM).

Where required, tags indicating the author’s intent (AUTHOR_INTENT) and the target audience (TARGET_AUDIENCE) can also be added.
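One way to hold a single training unit with these fields is a small dataclass that validates its labels on construction. The class and field names are hypothetical. The IO_TYPE set contains only the categories named in the text, since the remaining values are elided there (the full list is in Table 2), and FAKE_CLAIM is modelled as a boolean mirroring {TRUE, FALSE}.

```python
from dataclasses import dataclass
from typing import List, Optional

# Only the IO_TYPE values named in the text; the rest are elided there (see Table 2).
IO_TYPES = {"DISINFORMATION", "DEMORALIZATION", "INTIMIDATION", "AUTHORITY_UNDERSCORE"}
EMO_VALUES = {"POSITIVE", "NEGATIVE", "NEUTRAL"}


@dataclass
class AnnotatedUnit:
    tokens: List[str]
    bio_tags: List[str]          # one BIO tag per token
    io_type: str                 # IO_TYPE
    emo_eval: str                # EMO_EVAL
    fake_claim: bool             # FAKE_CLAIM: True = indicators of fake information
    author_intent: Optional[str] = None    # optional AUTHOR_INTENT
    target_audience: Optional[str] = None  # optional TARGET_AUDIENCE

    def __post_init__(self):
        if len(self.tokens) != len(self.bio_tags):
            raise ValueError("tokens and bio_tags must align one-to-one")
        if self.io_type not in IO_TYPES:
            raise ValueError(f"unknown IO_TYPE: {self.io_type}")
        if self.emo_eval not in EMO_VALUES:
            raise ValueError(f"unknown EMO_EVAL: {self.emo_eval}")
```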

3.1. Baseline Methods

This section compares the efficiency of various models on entity extraction and on the classification of types of information impact. To assess the applicability of the Multi_mil corpus, both traditional algorithms and modern transformer architectures were tested, including the proposed Onto-IO-BERT model enriched with ontological features. Onto-IO-BERT is a transformer classifier that incorporates features derived from the OWL ontology of information operations into its architecture. Specifically, we perform logical inference over the IO ontology to extract semantic attributes (author intent, target type, source, target audience, etc.) and concatenate or “gate” these features into BERT latent representations at the encoder or classifier level. This distinguishes our model from standard BERT-based models (mBERT/XLM-R), which rely solely on textual context without external semantic priors. All models were trained with the same hyperparameters: epochs = 3, learning rate = 2 × 10⁻⁵, batch size = 16, max_length = 256, and a 700/150/150 train/validation/test split, with all texts annotated for IO_TYPE.
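The two fusion options described above can be illustrated with a NumPy sketch. This is an assumption about one plausible form of the gate, not the Onto-IO-BERT implementation: a text vector `h` (e.g., a [CLS] representation) and an ontology feature vector `o` are mixed per dimension through a learned sigmoid gate, with plain concatenation shown for comparison. The weights in the demo are random placeholders, and the hyperparameter dictionary only restates the values given in the text (its key names are illustrative).

```python
import numpy as np

# Training settings stated in the text; key names are illustrative.
HPARAMS = {"epochs": 3, "learning_rate": 2e-5, "batch_size": 16,
           "max_length": 256, "split": (700, 150, 150)}


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def concat_fusion(h, o):
    """Simplest option: append ontology features to the text representation."""
    return np.concatenate([h, o])


def gated_fusion(h, o, W_o, W_g, b_g):
    """Hypothetical gate mixing a text vector h (d,) with ontology features o (k,).

    W_o: (d, k) projection of ontology features into the text space.
    W_g: (d, 2d) and b_g: (d,) parameters of the per-dimension gate.
    """
    o_proj = np.tanh(W_o @ o)                             # ontology features in text space
    g = sigmoid(W_g @ np.concatenate([h, o_proj]) + b_g)  # gate values in (0, 1)
    return g * h + (1.0 - g) * o_proj                     # per-dimension convex mix


def split_indices(n, sizes=HPARAMS["split"], seed=0):
    """Shuffle indices and cut them into train/val/test blocks of the given sizes."""
    if sum(sizes) != n:
        raise ValueError("split sizes must sum to n")
    idx = np.random.default_rng(seed).permutation(n)
    train_end, val_end = sizes[0], sizes[0] + sizes[1]
    return idx[:train_end], idx[train_end:val_end], idx[val_end:]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, k = 8, 5  # toy sizes; BERT-base hidden size would be 768
    h, o = rng.normal(size=d), rng.normal(size=k)
    fused = gated_fusion(h, o, rng.normal(size=(d, k)), rng.normal(size=(d, 2 * d)), np.zeros(d))
    print(fused.shape)
```

The gate lets the model learn, per hidden dimension, how much to trust ontology-derived priors versus textual context; with the gate weights at zero it reduces to an equal-weight average.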

Onto-IO-BERT achieved the highest macro-F1 (approximately 0.81), supporting the efficacy of ontology-guided semantic enrichment for multilingual IO detection compared with text-only BERT baselines.

3.1.1. Evaluation of NER Tools

In a preliminary investigation of existing Named Entity Recognition (NER) tools, several popular solutions were tested. The selected tools, Natasha, spaCy, and Stanza, are widely used in information extraction tasks and are available as ready-to-use Python libraries (Python 3.8.3).

The Natasha model is a compact BERT-like architecture additionally trained on synthetic corpora, whereas spaCy and Stanza use neural sequence-labelling models (a CNN-based token encoder with a transition-based NER component in spaCy, and a BiLSTM-CRF tagger in Stanza). All three tools perform flat entity extraction (flat NER) and support only basic entity types such as PERSON, ORGANIZATION, and LOCATION.

Our task, however, requires considerably deeper, multi-layered annotation. First, the annotated corpus includes pragmatic and manipulative categories absent from conventional NER schemes: the type of information influence (IO_TYPE), the target audience (TARGET_AUDIENCE), the author’s intent (AUTHOR_INTENT), emotional colouring (EMO_EVAL), geographic reference (GEO_LOC), time reference (TIME_REF), message source (SOURCE), and fakeness attributes (FAKE_CLAIM). Second, messages in the corpus frequently contain nested entities that contain one another or overlap by tokens, which standard tools do not handle.

To enable comparison, the test sample was adapted by aggregating nested categories (e.g., COUNTRY, CITY, and STATE into LOCATION). Even so, the tools identified basic entities in military and propaganda discourse with low accuracy. Natasha performed marginally better than spaCy and Stanza, particularly for PERSON and ORGANIZATION, but none of the models could extract entities specific to information operations.
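The category aggregation used for this comparison can be sketched as a label map plus a flattening step. Only the COUNTRY/CITY/STATE → LOCATION mapping comes from the text; resolving nested spans by keeping the outermost one, and the function name `to_flat_ner`, are assumptions about how corpus spans would be aligned with flat NER output.

```python
from typing import Dict, List, Tuple

# Fine-grained -> coarse labels, as described in the text.
COARSE_MAP: Dict[str, str] = {"COUNTRY": "LOCATION", "CITY": "LOCATION", "STATE": "LOCATION"}

Span = Tuple[int, int, str]  # (start_token, end_token_exclusive, label)


def to_flat_ner(spans: List[Span],
                keep: Tuple[str, ...] = ("PERSON", "ORGANIZATION", "LOCATION")) -> List[Span]:
    """Map labels to coarse types, drop labels flat NER tools cannot produce,
    and resolve nesting by keeping the outermost span (an assumed policy)."""
    mapped = [(s, e, COARSE_MAP.get(lab, lab)) for s, e, lab in spans]
    mapped = [sp for sp in mapped if sp[2] in keep]
    # Sort by start, longest span first, so inner (nested) spans come later.
    mapped.sort(key=lambda sp: (sp[0], -(sp[1] - sp[0])))
    flat: List[Span] = []
    for s, e, lab in mapped:
        if flat and s < flat[-1][1]:
            continue  # overlaps the previously kept span
        flat.append((s, e, lab))
    return flat
```

IO-specific labels such as MIL_TERM simply disappear in this projection, which is exactly why the flat tools cannot be scored on them.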

The experiment thus confirms that existing NER tools are not suited to extracting the semantic and pragmatic components of messages with signs of information influence. This underscores the need for bespoke methods that account for military terminology, cognitive effects, and ontological frameworks. Note also that these tools do not address classification; they are designed solely for extracting standard entities.

To enrich the model semantically, the Onto-IO-BERT.owl information operations ontology is used; it is implemented in OWL and processed with the owlready2 and rdflib libraries. An inference engine (HermiT or owlready2.reasoning) materialises hierarchical relationships, including subclasses, equivalences, and object properties, before the feature export stage. This augments the text with both generalised and relational knowledge.

- Linking Text Fragments to the Ontology

The process of matching annotated entities to ontology concepts comprises several stages:

Span extraction. Surface forms of entities are extracted from the annotated NER layer (e.g., MIL_TERM, GEO_LOC, TIME_REF, SOURCE, TARGET_ENTITY).

Normalization. Candidates are normalised by lowercasing, removing punctuation, and applying language-specific lemmatisation (RU, KZ, EN), with transliteration where necessary.
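A minimal normalisation routine in the spirit of this step might look as follows. It assumes Unicode NFKC normalisation and case folding; `normalize_span` is a hypothetical name, its `lemmatize` argument is a placeholder hook for a language-specific lemmatiser, and transliteration is omitted.

```python
import unicodedata
from typing import Callable, Optional


def normalize_span(text: str, lemmatize: Optional[Callable[[str], str]] = None) -> str:
    """Case-fold, strip punctuation, collapse whitespace, optionally lemmatise.

    `lemmatize` stands in for a RU/KZ/EN lemmatiser; none is bundled here.
    """
    text = unicodedata.normalize("NFKC", text).casefold()
    # Replace every Unicode punctuation character (categories P*) with a space,
    # which also covers guillemets and dashes common in Russian text.
    text = "".join(" " if unicodedata.category(ch).startswith("P") else ch for ch in text)
    tokens = text.split()
    if lemmatize is not None:
        tokens = [lemmatize(tok) for tok in tokens]
    return " ".join(tokens)
```

Using Unicode categories instead of `string.punctuation` matters here because ASCII-only punctuation lists miss «», „“ and other marks frequent in Cyrillic-script sources.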

Lexical matching and synonymy. A gazetteer is built from ontology labels and skos:altLabel synonyms. Candidates are ranked by the following criteria:

- exact match > lemmatized match > normalized edit distance (≤ 0.2);

- an additional contextual similarity: the cosine similarity of fastText vectors within a ±20-token window.

If several candidates remain, the concept with the highest score is selected:

where prior(c) favours IO concepts of higher degree (empirically, γ = 0.1). Spans with a score below τ = 0.55 are left unlinked.
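Because the scoring function itself is not reproduced here, the sketch below uses a hypothetical linear combination: a tiered lexical score (exact > lemmatised > normalised edit distance ≤ 0.2), plus β times a precomputed contextual cosine similarity, plus γ · prior(c). Only γ = 0.1 and τ = 0.55 come from the text; the tier values, β = 0.2, and all function names are placeholders.

```python
from typing import Dict, List, Optional


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def lexical_score(surface: str, lemma: str, label: str) -> float:
    """Tiered match: exact > lemmatised > normalised edit distance <= 0.2.
    The tier values (1.0, 0.9, at most 0.8) are assumptions."""
    if surface == label:
        return 1.0
    if lemma == label:
        return 0.9
    dist = levenshtein(lemma, label) / max(len(lemma), len(label), 1)
    return 0.8 * (1.0 - dist) if dist <= 0.2 else 0.0


def link_span(surface: str, lemma: str,
              context_sim: Dict[str, float],      # precomputed fastText cosines per concept
              gazetteer: Dict[str, List[str]],    # concept -> labels and altLabels
              prior: Dict[str, float],
              gamma: float = 0.1, tau: float = 0.55, beta: float = 0.2) -> Optional[str]:
    """Return the best-scoring concept, or None if its score falls below tau."""
    best, best_score = None, float("-inf")
    for concept, labels in gazetteer.items():
        lex = max((lexical_score(surface, lemma, lab) for lab in labels), default=0.0)
        if lex == 0.0:
            continue  # no lexical evidence at all: not a candidate
        score = lex + beta * context_sim.get(concept, 0.0) + gamma * prior.get(concept, 0.0)
        if score > best_score:
            best, best_score = concept, score
    return best if best_score >= tau else None
```

Requiring some lexical evidence before context and prior can contribute keeps the prior from linking spans to popular concepts on its own.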

- Logical Inference and Document Feature Generation

For each linked concept c, additional features are inferred from the ontological structure:

Hierarchical ancestors: the transitive closure of rdfs:subClassOf up to depth 3 (e.g., INTIMIDATION ⊑ IO_TYPE).

Role (object property) neighbours:

- aimed_at → TARGET_AUDIENCE,

- mentions_term → MIL_TERM,

- expresses_intent → AUTHOR_INTENT,

- engaged_by/published_on → PLATFORM_TYPE.

- Schema constraints (domain/range) are applied in order to validate and refine relationships.

The resulting features are aggregated into multidimensional document-level vectors encompassing (a) IO superclasses, (b) relation-induced features (e.g., INTIMIDATION → negative sentiment), and (c) prior knowledge about the target audience or platform.
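Feature type (a) can be sketched as a depth-limited closure over rdfs:subClassOf followed by a multi-hot document vector. The hierarchy below is a toy fragment (only INTIMIDATION ⊑ IO_TYPE comes from the text; NUCLEAR_THREAT, IO_CONCEPT, and THING are hypothetical), and the multi-hot encoding is one plausible reading of the document-level vectors. In practice the hierarchy would be read from the reasoned OWL file.

```python
from typing import Dict, List, Set

# Toy subclass hierarchy (child -> direct parents); mostly hypothetical names.
SUBCLASS_OF: Dict[str, List[str]] = {
    "NUCLEAR_THREAT": ["INTIMIDATION"],
    "INTIMIDATION": ["IO_TYPE"],
    "IO_TYPE": ["IO_CONCEPT"],
    "IO_CONCEPT": ["THING"],
}


def ancestors(concept: str, max_depth: int = 3) -> Set[str]:
    """Transitive closure of rdfs:subClassOf, truncated at max_depth hops."""
    found: Set[str] = set()
    frontier = [concept]
    for _ in range(max_depth):
        nxt: List[str] = []
        for c in frontier:
            for parent in SUBCLASS_OF.get(c, []):
                if parent not in found:
                    found.add(parent)
                    nxt.append(parent)
        frontier = nxt
    return found


def doc_vector(linked: List[str], vocab: List[str]) -> List[int]:
    """Multi-hot vector over `vocab`: 1 if a linked concept or one of its
    ancestors (depth <= 3) is present in the document."""
    active: Set[str] = set()
    for c in linked:
        active.add(c)
        active |= ancestors(c)
    return [1 if v in active else 0 for v in vocab]
```

Truncating at depth 3 keeps very generic top-level classes (THING in the toy example) from firing for every document.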

- Token-Level Ontology Embeddings

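Each token belonging to a linked span is assigned a one-hot vector $\mathbf{e}_{c(t)}$ identifying its concept, which is then projected into a dense feature space:

$$\mathbf{o}_t = W_E\,\mathbf{e}_{c(t)}, \qquad W_E \in \mathbb{R}^{d_0 \times |\mathcal{C}|},$$

where $c(t)$ is the concept linked to token $t$, $\mathcal{C}$ is the set of ontology concepts, and $d_0 = 64$. Tokens that are not linked receive a learned UNK-onto vector.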
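Multiplying $W_E$ by a one-hot vector simply selects one column, which is why implementations usually use an embedding lookup instead of materialising one-hot vectors. In this NumPy sketch the concept vocabulary size is a made-up value, the weights are random stand-ins for trained parameters, and `token_onto_vectors` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(0)
num_concepts, d0 = 500, 64  # |C| is an assumed size; d0 = 64 as in the text
W_E = rng.normal(scale=0.02, size=(d0, num_concepts))  # trainable projection (random stand-in)
unk_onto = rng.normal(scale=0.02, size=d0)             # learned UNK-onto vector (random stand-in)


def token_onto_vectors(concept_ids):
    """concept_ids[t] is the linked concept index of token t, or None if unlinked.
    Returns an array of shape (len(concept_ids), d0)."""
    rows = []
    for cid in concept_ids:
        if cid is None:
            rows.append(unk_onto)
        else:
            one_hot = np.zeros(num_concepts)
            one_hot[cid] = 1.0
            rows.append(W_E @ one_hot)  # equivalent to the column W_E[:, cid]
    return np.stack(rows)
```

The share of `None` entries across a batch is also a direct measure of the linking rate monitored in the quality-control step.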

Following ViT principles, we treat text spans and discourse segments as “textual patches,” enabling a hierarchical attention pipeline: token → span → ontology concept. Concretely, we introduce pyramid-style aggregation layers that progressively compress representations from tokens to spans and, finally, to ontology-grounded concepts. We further employ a hybrid encoder: ontology vectors are injected early (as additional keys/values in self-attention) and late (via residual fusion at the classifier level). This design aims to improve the capture of long-range dependencies without hard inductive assumptions, mirroring hybrid CNN/Transformer variants in CV while remaining architecture-neutral on the NLP side.

- Integrating Ontological Features into BERT (Fusion Mechanism)

Ontological features were integrated into the transformer architecture in three variants. The primary model, Onto-IO-BERT, uses a gated fusion mechanism that adaptively weights textual and ontological signals.

- Concatenation at the [CLS] level (baseline fusion). In the first variant, the latent representation of the [CLS] token is concatenated with a projection of the document-level ontology features obtained via a multilayer perceptron (MLP):

$$\mathbf{z} = \big[\mathbf{h}_{[\mathrm{CLS}]};\ \mathrm{MLP}(\mathbf{f}_{\mathrm{doc}})\big],$$

where $\mathbf{h}_{[\mathrm{CLS}]} \in \mathbb{R}^{d_h}$ is the [CLS] representation and $\mathbf{f}_{\mathrm{doc}}$ is the document-level ontology feature vector. The vector $\mathbf{z}$ is then fed into a linear classifier. This baseline combines text and ontological features by simple concatenation, without dynamic weighting.
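The concatenation baseline can be sketched in NumPy as follows. The two-layer ReLU MLP, its output width (set to $d_0 = 64$), the length of $\mathbf{f}_{\mathrm{doc}}$, and the number of IO_TYPE labels are all assumptions; the text specifies only that an MLP projection is concatenated with the [CLS] vector and passed to a linear classifier.

```python
import numpy as np

d_h, d0 = 768, 64
n_doc_features = 120  # hypothetical length of f_doc
n_labels = 8          # hypothetical number of IO_TYPE labels
rng = np.random.default_rng(0)

# Random stand-ins for trained weights of an assumed two-layer ReLU MLP.
W1, b1 = rng.normal(scale=0.02, size=(d0, n_doc_features)), np.zeros(d0)
W2, b2 = rng.normal(scale=0.02, size=(d0, d0)), np.zeros(d0)
# Linear classifier over the concatenated vector z.
W_cls, b_cls = rng.normal(scale=0.02, size=(n_labels, d_h + d0)), np.zeros(n_labels)


def mlp(f_doc: np.ndarray) -> np.ndarray:
    return W2 @ np.maximum(W1 @ f_doc + b1, 0.0) + b2


def concat_fusion(h_cls: np.ndarray, f_doc: np.ndarray) -> np.ndarray:
    """z = [h_CLS ; MLP(f_doc)], of length d_h + d0."""
    return np.concatenate([h_cls, mlp(f_doc)])


def classify(z: np.ndarray) -> np.ndarray:
    """Per-label logits from the linear classifier."""
    return W_cls @ z + b_cls
```

Because the two parts of z are simply stacked, the classifier learns one fixed weighting of text versus ontology for all inputs; the gated variant below removes that restriction.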

- Feature concatenation at the token level

In the second variant, for each token t, the ontological vector of its linked concept is added to the token’s text embedding:

Ontological features thus enter the model before the BERT encoder, allowing semantic relationships to be transferred at the level of individual tokens.
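The text does not say how the 768-dimensional token embeddings and the 64-dimensional ontology vectors are reconciled. One plausible reading, sketched below as an assumption, concatenates them and projects the result back to $d_h$ so the BERT encoder still receives inputs of its native width. An additive variant with a $d_0 \to d_h$ projection would be an equally valid interpretation. `W_in` and `fuse_token_inputs` are hypothetical.

```python
import numpy as np

d_h, d0 = 768, 64
rng = np.random.default_rng(0)
# Hypothetical input projection (d_h + d0 -> d_h); random stand-in for trained weights.
W_in = rng.normal(scale=0.02, size=(d_h, d_h + d0))


def fuse_token_inputs(x: np.ndarray, o: np.ndarray) -> np.ndarray:
    """x: (seq_len, d_h) token embeddings; o: (seq_len, d0) ontology vectors
    (UNK-onto rows for unlinked tokens). Returns (seq_len, d_h) encoder inputs."""
    stacked = np.concatenate([x, o], axis=1)  # (seq_len, d_h + d0)
    return stacked @ W_in.T                   # back to the encoder width
```

Projecting back to $d_h$ lets the pretrained encoder weights be reused unchanged, at the cost of one extra trainable matrix.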

- Adaptive fusion (gated fusion; core Onto-IO-BERT model). The third variant, the core fusion mechanism, uses adaptive, attention-style gating. For the [CLS] token, a weight vector g is computed that determines how much the model relies on the textual representation versus the ontological features:

The resulting fused vector is then fed into the classifier. This mechanism lets the model attenuate textual features when ontological priors are explicit and, conversely, emphasise textual information when ontological confidence is weak. This is expected to make learning more robust and to improve generalisation on limited datasets.
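Since the gating equation is not reproduced here, the sketch below uses a common gated-fusion formulation as a stand-in. The ontology vector is projected to $d_h$, a sigmoid gate g is computed from the concatenated [CLS] and ontology vectors, and the output is g ⊙ h + (1 − g) ⊙ o. The projection `W_o`, the gate parameters, and the function name are hypothetical.

```python
import numpy as np

d_h, d0 = 768, 64
rng = np.random.default_rng(0)
W_o = rng.normal(scale=0.02, size=(d_h, d0))       # hypothetical ontology projection
W_g = rng.normal(scale=0.02, size=(d_h, 2 * d_h))  # hypothetical gate weights
b_g = np.zeros(d_h)


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gated_fusion(h_cls: np.ndarray, f_onto: np.ndarray, W_g=W_g, b_g=b_g):
    """g = sigmoid(W_g [h; o] + b_g); fused = g * h + (1 - g) * o.

    g close to 1 keeps the textual [CLS] vector; g close to 0 favours the
    projected ontology vector. Returns (fused, g).
    """
    o = W_o @ f_onto
    g = sigmoid(W_g @ np.concatenate([h_cls, o]) + b_g)
    return g * h_cls + (1.0 - g) * o, g
```

Because g is a vector rather than a scalar, the model can trust ontology features in some dimensions while relying on text in others.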

- Training Parameters

Architecture: mBERT or XLM-R (base variants).

Dimensions: $d_h = 768$, $d_0 = 64$.

Optimization: learning rate 2 × 10⁻⁵, batch size 16, maximum sequence length (max_len) 256, and 3 training epochs.

Data split: 700 training, 150 validation, and 150 test samples.

End-to-end training: the parameters of $W_E$, the MLP, and the gating layer are trainable, whereas the ontology graph structure remains fixed.

- Quality Control and Ablation Experiments

To assess robustness, the proportion of successfully linked spans is monitored; spans that are not linked are mapped to the UNK-onto vector. Ablations were then performed, comparing a text-only BERT model with variants that add document-level features, token-level features, and the gated fusion mechanism. The variant with gated fusion achieves the highest macro-F1 on the IO_TYPE, FAKE_CLAIM, and EMO_EVAL tasks, supporting the efficacy of inferred ontology priors.

Although linking errors and ontology incompleteness may introduce noise, the gating mechanism proves robust, allowing the model to dynamically attenuate the influence of low-information features.

3.1.2. Baseline Models for Information Operations Classification

To assess the applicability of the corpus to the automatic detection of information operations, a series of experiments was run with baseline machine learning models. The goal of this stage was to establish a performance baseline for classification by influence type (IO_TYPE) and then to test the proposed Onto-IO-BERT architecture, which integrates ontological knowledge.

Five models were evaluated, differing in architectural complexity and in the extent to which they account for context:

- Logistic Regression (with TF-IDF features) is a simple linear model that serves as a starting point in the absence of contextual embeddings;

- SVM (Support Vector Machine) is a classical model with a linear kernel that is robust to sparsity and imbalance;

- Multilingual BERT (mBERT) is a multilingual transformer model that has been trained on several languages, including Russian and Kazakh;

- XLM-RoBERTa is a multilingual transformer (RoBERTa architecture) pretrained on CommonCrawl data in 100 languages, offering broader language coverage;

- Onto-IO-BERT, the model proposed in this study: a transformer architecture enriched with ontological features extracted from the OWL ontology of information operations.

All models used the corpus of 1000 texts manually annotated with IO_TYPE labels. The dataset was first cleaned, and the message texts were tokenized with their context preserved. Training used identical hyperparameters: 3 epochs, learning rate 2 × 10⁻⁵, batch size 16, and a maximum length of 256 tokens.

After training, each model outputs a probability for every information influence label (IO_TYPE). The probabilities are obtained by applying a sigmoid to the model’s logits, and the binary decision uses a fixed threshold τ = 0.5 selected on the validation set:

$$\hat{p}_k = \sigma(z_k) = \frac{1}{1 + e^{-z_k}},$$

where

- $\hat{p}_k$ is the predicted probability that the text belongs to category $k$;

- $z_k$ is the logit output by the model for label $k$;

- σ is the sigmoid activation function.

The binary decision is

$$\hat{y}_k = \begin{cases} 1, & \hat{p}_k \ge \tau, \\ 0, & \text{otherwise,} \end{cases}$$

where the threshold τ = 0.5 is selected on the validation sample.
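The two equations above translate directly into a per-label decision rule. The sketch uses a numerically stable sigmoid; the label names come from the text, while `sigmoid` and `predict_labels` are illustrative names.

```python
import math
from typing import Dict, List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function (avoids overflow in math.exp)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_labels(logits: Dict[str, float], tau: float = 0.5) -> List[str]:
    """Multi-label IO_TYPE decision: keep every label k with sigmoid(z_k) >= tau."""
    return sorted(k for k, z in logits.items() if sigmoid(z) >= tau)
```

With τ = 0.5 the rule is equivalent to keeping labels with a non-negative logit; the sigmoid only matters once τ is tuned away from 0.5.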

The quality of classification was then assessed using standard metrics, including accuracy, precision, recall and macro-averaged F1-measure (see Table 6).
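Macro-averaged F1 weights every IO_TYPE label equally regardless of its frequency, which matters for the rare classes noted in the Discussion. The standard-library sketch below computes it for multi-label predictions; scikit-learn's `f1_score(..., average="macro")` serves the same purpose, although its handling of labels with no instances may differ from the convention chosen here.

```python
from typing import List, Set


def macro_f1(gold: List[Set[str]], pred: List[Set[str]], labels: List[str]) -> float:
    """Unweighted mean of per-label F1 = 2TP / (2TP + FP + FN).

    A label with no gold and no predicted instances scores 0 here (a convention).
    """
    scores = []
    for lab in labels:
        tp = sum(lab in g and lab in p for g, p in zip(gold, pred))
        fp = sum(lab not in g and lab in p for g, p in zip(gold, pred))
        fn = sum(lab in g and lab not in p for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

For example, with gold sets [{A}, {B}, {A, B}] and predictions [{A}, {A}, {B}], label A scores 0.5 and label B scores 2/3, giving a macro-F1 of 7/12.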

Table 6.

Results of IO_TYPE classification using different models.

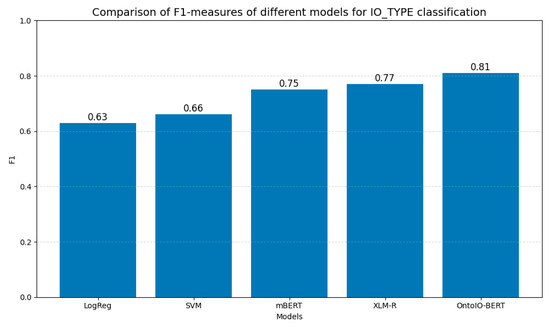

Analysis of the results (see Figure 7) indicates that:

- The classical models (logistic regression and SVM) show limited accuracy, which can be attributed to their inability to capture the contextual and semantic features of the text: they pick up superficial statistical patterns but cannot recognise subtle pragmatic differences between types of influence.

- Multilingual transformer models (mBERT and XLM-RoBERTa) substantially improve classification quality, owing to their context-dependent representations of words and sentences.

- Onto-IO-BERT, which additionally uses ontological knowledge, achieves the highest result. Semantic features such as author intent, target type, source, and target audience were extracted by logical inference (reasoning) over the ontological schema and integrated into the model representations at the encoding or classification stage.

Figure 7.

Comparative evaluation of machine learning models.

Thus, the proposed Onto-IO-BERT architecture achieves the best macro-averaged F1 (0.81), exceeding both traditional and modern baseline models. This suggests that semantic enrichment of input features through ontological connections is an effective approach for analysing complex multilingual texts containing features of information influence.

3.1.3. Relation Extraction Based on the Onto-IO-BERT Model

Beyond classifying the influence type, we explore extracting intra-textual relations between entities with the Onto-IO-BERT model, which deepens the analysis and makes it more interpretable.

A relation extraction module was implemented to identify semantic relations between entities previously annotated in the Multi_mil corpus. Unlike approaches that prioritise text classification, it models interactions between the key components of information influence, including source (SOURCE), target audience (TARGET_AUDIENCE), influence type (IO_TYPE), geolocation (GEO_LOC), time reference (TIME_REF), and others.

For relation extraction, the Onto-IO-BERT architecture was extended with attention over entity pairs. The model takes the text context with entity markup as input and, at the classification layer, predicts whether each pair of annotated fragments is related and, if so, how. Separate classifiers are trained for a binary task (presence of a relation) and a multi-class task (relation type, e.g., AUTHOR_INTENT → TARGET_ENTITY, SOURCE → IO_TYPE, TIME_REF → IO_TYPE).
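Entity markup for a candidate pair is commonly implemented by wrapping the two spans in marker tokens before encoding. The sketch below illustrates that general technique; the marker strings `[E1]`, `[/E1]`, `[E2]`, `[/E2]`, the function name `mark_pair`, and the bracketing rules for nested spans are assumptions, not details given in the text.

```python
from typing import List, Tuple

Span = Tuple[int, int]  # (start, end_exclusive) token offsets


def mark_pair(tokens: List[str], head: Span, tail: Span) -> List[str]:
    """Insert marker tokens around a head (E1) and a tail (E2) entity span."""
    events: List[Tuple[int, int, int, int, str]] = []
    for idx, ((start, end), name) in enumerate(((head, "E1"), (tail, "E2"))):
        # Openings sort after closings at the same position; longer spans open first.
        events.append((start, 1, -end, idx, f"[{name}]"))
        # Among closings at one position, the span opened later closes first.
        events.append((end, 0, -start, -idx, f"[/{name}]"))
    events.sort()
    out: List[str] = []
    k = 0
    for i in range(len(tokens) + 1):
        while k < len(events) and events[k][0] == i:
            out.append(events[k][4])
            k += 1
        if i < len(tokens):
            out.append(tokens[i])
    return out
```

The ordering rules keep nested spans, which are frequent in this corpus, properly bracketed; partially crossing spans cannot be bracketed by any scheme of this kind.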

A manually annotated subsample of the corpus containing more than 1500 pairwise relations between entities served as training data. Training used the same transformer base (XLM-RoBERTa), augmented with features extracted from the ontology: the entity’s role in the message, the type of the object of influence, semantic compatibility, and logical consistency.

Unlike existing relation extraction models such as BERT+SPN [47] or GraphRel [48], which focus on flat, symmetrical relations between entities, the proposed Onto-IO-BERT architecture targets ontologically grounded and cognitively meaningful interactions. It accounts for the logical compatibility of entities (e.g., whether a SOURCE → IO_TYPE relation is admissible) as well as the pragmatic context typical of military discourse.

In addition, ontology-based reasoning helps identify nested and cross-cutting relations that standard RE models often cannot process. This is particularly important for analysing hybrid information attacks, where a single text may combine several intersecting influence strategies.

During training, the model receives pairs of entities annotated within a single message together with the corresponding relation labels. The goal is twofold: first, to determine whether a relation exists between the entities and, second, to determine its type. Relation extraction is thus reduced to multi-class classification of entity pairs, conditioned on the message context and ontological features.

Formally, relation extraction can be written as

$$\hat{r} = \arg\max_{r \in R} P\big(r \mid e_i, e_j, T\big),$$

where

- $(e_i, e_j)$ is a pair of entities in the text $T$;

- $R$ is the set of possible relation types;

- $\hat{r}$ is the predicted relation between the entities.
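Candidate generation and the two-stage decision described above (binary presence, then relation type) can be sketched as below. Filtering ordered pairs by an `allowed` set of type combinations is a hypothetical stand-in for the ontology's compatibility check, and the 0.5 presence threshold and both function names are assumptions.

```python
from itertools import permutations
from typing import Dict, List, Optional, Set, Tuple


def candidate_pairs(types: List[str], allowed: Set[Tuple[str, str]]) -> List[Tuple[int, int]]:
    """Ordered (head, tail) index pairs whose type combination is admissible.
    Pairs are ordered because relations such as SOURCE -> IO_TYPE are directed."""
    return [(i, j) for i, j in permutations(range(len(types)), 2)
            if (types[i], types[j]) in allowed]


def predict_relation(p_exists: float, type_probs: Dict[str, float],
                     threshold: float = 0.5) -> Optional[str]:
    """The binary head decides whether a relation exists; if so, argmax over R."""
    if p_exists < threshold:
        return None
    return max(type_probs, key=type_probs.__getitem__)
```

Pruning inadmissible type pairs before classification shrinks the number of candidates quadratically in message length, which helps with the rare relation types.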

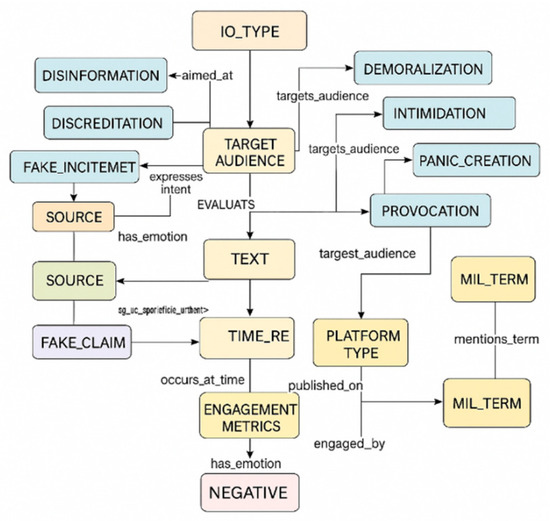

Figure 8 shows the ontological model of relations between corpus entities. It illustrates the key semantic links taken into account when training the relation extraction module: from source (SOURCE) to influence type (IO_TYPE), from time markers (TIME_REF) to influence type, from author’s intent (AUTHOR_INTENT) to target entity (TARGET_ENTITY), and so forth.

Figure 8.

Ontological model of relationships between corpus entities.

These relations were extracted from the annotated texts and used as training labels for multi-class classification of entity pairs. Incorporating ontological information enhances the interpretability of the model, facilitating the identification of pragmatic, cause-and-effect, and rhetorical patterns characteristic of military information operations.

The model was trained on a manually annotated subset of over 1500 entity pairs and then tested on five main relation types. The resulting metrics are presented in Table 7.

Table 7.

Relation Extraction Between Entities.

These results confirm the applicability of the proposed approach for extracting semantic links between key components of information influence. A salient feature of Onto-IO-BERT is its ability to process nested and overlapping entities and to consider semantic context when interpreting intricate narratives. For instance, the same source can be associated with several influence types depending on time, circumstances, addressee, or rhetorical goal. This supports the development of more accurate systems for situational analysis and forecasting of cognitive influence in the media space.

4. Results

After annotation, a specialised corpus of 1000 texts (over 75,000 tokens) was formed. The texts were collected from open sources, principally Telegram news channels and media resources on military topics.

The corpus contains a total of 8000 annotated entities and categorical features, structured according to 10 main labels: six entity-related (MIL_TERM, GEO_LOC, TIME_REF, SOURCE, TARGET_ENTITY, TARGET_AUDIENCE) and four pragmatic (IO_TYPE, AUTHOR_INTENT, EMO_EVAL, FAKE_CLAIM). This scheme supports a multi-level linguistic-pragmatic analysis covering both the extraction of named entities and the interpretation of the goals and rhetorical strategies behind attempts at informational influence.

An analysis of stable lexical-semantic constructions was carried out (see Table 8).

Table 8.

Linguistic Markers of Informational Influence Categories.

Such patterns facilitate the formalization of strategic speech constructions that are characteristic of information attacks and can serve as a basis for automatic narrative recognition.

Stable correlations between labels were also identified and confirmed empirically (see Figure 9). For example, FAKE_CLAIM co-occurs with DISINFORMATION in 48 cases and with PANIC_CREATION in 39 cases. AUTHOR_INTENT is most frequent in cross-annotated messages, i.e., those carrying two or more IO_TYPE categories, which reflects the complex, hybrid nature of information attacks. TARGET_ENTITY correlates strongly with DISCREDITATION, while TARGET_AUDIENCE is notably associated with DEMORALIZATION (see Figure 10), suggesting that the choice of target is a pivotal element of a message’s rhetorical composition. Together, these patterns point to an internal semantic architecture of aggressive information messages (see Table 8).
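Co-occurrence counts of the kind reported above can be computed with a pairwise tally per message. The sketch is illustrative: `label_cooccurrence` is a hypothetical name, and the usage check runs on toy label sets, not corpus data.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, Set


def label_cooccurrence(messages: Iterable[Set[str]]) -> Counter:
    """For every unordered label pair, count the messages that carry both labels.
    Pairs are stored in sorted order so (A, B) and (B, A) share one key."""
    counts: Counter = Counter()
    for labels in messages:
        for a, b in combinations(sorted(labels), 2):
            counts[(a, b)] += 1
    return counts
```

Normalising each row of such a table by its total gives row-normalised heatmaps of the kind shown in Figure 10.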

To assess the reliability and reproducibility of the annotated corpus, inter-annotator agreement was evaluated. Three annotators independently tagged the corpus of 1000 texts. Agreement was evaluated for both entity tags (e.g., MIL_TERM, GEO_LOC, TIME_REF) and categorical tags (IO_TYPE, AUTHOR_INTENT, FAKE_CLAIM, etc.).

For entities, the F1 measure was used, counting either exact matches or partial overlaps of spans. For categorical labels, Cohen’s kappa (κ) was used, which corrects for agreement expected by chance (see Table 9). The analysis was conducted in two modes:

- Partial Overlap: Allowed: partially overlapping spans count as a match.

- Partial Overlap: Excluded: annotations must coincide exactly.
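Both agreement measures can be sketched in a few lines. `cohen_kappa` implements Cohen's two-rater formula (p_o − p_e) / (1 − p_e); with three annotators it would be averaged over annotator pairs or replaced by Fleiss' κ (the statistic named in the Figure 9 caption). `span_f1` treats a match as any token overlap or as identical boundaries with the same label; the exact matching rules used for the corpus are not specified, so these are assumptions.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def cohen_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Cohen's kappa for two annotators labelling the same items."""
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n           # observed agreement
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[k] * cb[k] for k in ca) / (n * n)         # chance agreement
    # If both annotators use a single identical label, kappa is undefined;
    # returning 1.0 here is a convention, not a standard.
    return (p_o - p_e) / (1 - p_e) if p_e < 1 else 1.0


Span = Tuple[int, int, str]  # (start, end_exclusive, label)


def span_f1(ref: List[Span], hyp: List[Span], partial: bool) -> float:
    """Span-level F1 between two annotators, with partial or exact matching."""
    def match(r: Span, h: Span) -> bool:
        if r[2] != h[2]:
            return False
        if partial:
            return r[0] < h[1] and h[0] < r[1]  # any token overlap
        return r[:2] == h[:2]                    # identical boundaries
    tp_ref = sum(any(match(r, h) for h in hyp) for r in ref)
    tp_hyp = sum(any(match(r, h) for r in ref) for h in hyp)
    precision = tp_hyp / len(hyp) if hyp else 0.0
    recall = tp_ref / len(ref) if ref else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0
```

The gap between the two `span_f1` modes mirrors the gap between the two columns of Table 9: boundary disagreements lower exact-match scores without affecting overlap-based ones.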

Table 9.

Inter-annotator Agreement Matrix between Three Annotators.

| Category | Partial Overlap: Allowed | Partial Overlap: Excluded |
|---|---|---|
| MIL_TERM (F1) | 0.91 | 0.84 |
| GEO_LOC (F1) | 0.88 | 0.79 |
| TIME_REF (F1) | 0.86 | 0.80 |
| AUTHOR_INTENT (κ) | 0.72 | 0.68 |
| TARGET_AUDIENCE (κ) | 0.76 | 0.73 |
| TARGET_ENTITY (κ) | 0.79 | 0.75 |
| IO_TYPE (κ) | 0.82 | 0.80 |
| EMO_EVAL (κ) | 0.85 | 0.83 |
| FAKE_CLAIM (κ) | 0.88 | 0.87 |

The analysis shows a high degree of agreement among annotators for most labels, particularly when partial overlap is allowed. In that mode, MIL_TERM, GEO_LOC, and TIME_REF reach F1 scores above 0.85, indicating that annotators identify key entities consistently. Among the categorical labels, FAKE_CLAIM and IO_TYPE show substantial agreement (κ ≥ 0.80 in both modes), suggesting that the annotation criteria were clear. The lowest κ was recorded for AUTHOR_INTENT, reflecting the difficulty of determining the pragmatic orientation of a message and the possible subjectivity of interpretation.

The findings indicate an acceptable level of inter-annotator agreement and confirm the reproducibility of annotation decisions within the proposed scheme.

Figure 9.

Inter-annotator agreement (Fleiss’ κ) diagram by category.

Figure 10.

Heatmap of the relationship between IO_TYPE and EMO_EVAL (row-normalized).

The annotation results confirm the structural validity of the corpus and its applicability to tasks of automatic analysis, machine learning, and linguistic modelling. It is a unique resource for the study of military propaganda, manipulative influence, and conflict rhetoric, with the potential for scaling and further expansion.

5. Use Case: Detecting Information Operations in Telegram News Channels

To demonstrate the practical applicability of the annotated corpus, a pilot analysis was conducted of the information flow in a selected Telegram news channel that frequently publishes military-related content. The messages were pre-processed and annotated with the developed tagging scheme. The goal was to identify manipulation strategies, including disinformation, demoralisation, and emotional pressure, based on the IO_TYPE and AUTHOR_INTENT labels.

A sample of 100 recent messages was analysed with semi-automated annotation based on the Onto-IO-BERT model retrained on the main corpus. Experts then verified the predictions manually, combining the accuracy of machine classification with the annotators’ contextual interpretation. Figure 11 shows an example message assigned five labels, including AUTHOR_INTENT: INTIMIDATION and FAKE_CLAIM: TRUTH, indicating a high-risk narrative designed to instil panic among the civilian population.

Figure 11.

Example of an annotated Telegram message.

Additionally, an analysis of the aggregated statistics from this stream revealed that 47% of messages exhibited characteristics indicative of demoralization and defamation, while 12% displayed features of coordinated disinformation. These results suggest that the corpus and annotation scheme can serve as a basis for real-time monitoring tools to detect hostile information influence on social media.

Subject matter experts also used the annotated messages for qualitative threat assessment. For instance, a series of emotionally charged posts with elevated EMO_EVAL: NEGATIVE scores and repeated TARGET_ENTITY: Government mentions was identified as a defamation campaign, consistent with established patterns of information operations during periods of active conflict.

Beyond military IO, the corpus design, ontology-driven modelling, and evaluation protocol can be transferred to other influence-sensitive domains, including health, elections, crises, corporate risk, cyberthreats, extremism, and financial fraud. Such an extension replaces the IO_TYPE subtree with domain-specific tactics while preserving the universal layers (entities, audience, platform/time, emotion, and false claims). With the same multi-step annotation, imbalance handling, and gated ontology fusion, models can be trained and evaluated using macro-F1/AUC-PR and operational metrics (e.g., detection time, subgroup fairness). This offers a pragmatic route to multilingual monitoring and analytics without changes to the Onto-IO-BERT architecture.

6. Discussion

The corpus developed in this study represents a new resource for military information operations analysis, filling a clear gap left by existing datasets. In contrast to MilTAC or DiPLoMAT, which are constrained to lexical-semantic or rhetorical features, or CMNEE, which focuses on Chinese language event extraction, our approach uniquely combines entity-level annotations with interpretive categories such as IO_TYPE, AUTHOR_INTENT, EMO_EVAL, and FAKE_CLAIM. Moreover, the incorporation of under-resourced languages such as Kazakh, in conjunction with authentic military narratives disseminated via Telegram, serves to augment the practical and academic value of the corpus within the domain of multilingual, geopolitical, and strategic communication studies.

Unlike corpora that are primarily based on neutral or weakly polarized texts, our annotation framework is tailored for conflict-driven, manipulative, and high-emotion media content typically seen in wartime environments. This requires a distinct methodology capable of accounting for polysemy, rhetorical strategies, and pragmatic markers, often overlooked in conventional resources. As in earlier approaches, we emphasize precision, contextual sensitivity, and interpretability in annotation.

The Multi_mil corpus is designed for practical information operations monitoring and analytics scenarios:

- Disinformation Monitoring and Early Warning. Multilingual models trained on the corpus can automatically label messages with FAKE_CLAIM / DISINFORMATION attributes, identify IO_TYPE (demoralization, intimidation, delegitimization, etc.), and prioritise cases for analysts by creating review queues and topical digests by platform, region, and time.

- Support for Multi-Task Multilingual Models. The layered design (entities → context/events → pragmatics: AUTHOR_INTENT, TARGET_AUDIENCE, EMO_EVAL, FAKE_CLAIM) enables the training of multi-task models (NER + document-level classification + RE), improving portability across languages (KZ, RU, EN) and domains (social media, news).

- Impact and Tactics Analytics. Linking source, text, audience/platform/time, and emotion/falsehood provides a comprehensive overview of IO patterns, including which techniques and emotions are statistically more prevalent on specific platforms during particular periods and how EMO_EVAL relates to IO_TYPE.

- System Resilience Assessment. The corpus can serve as a testbed for evaluating disinformation detectors, particularly under stress conditions (domain shift, code switching, sarcasm/irony), and for measuring quality biases across languages and genres.

- Application Interfaces. Because the annotations are compatible with Label Studio, JSON, and CoNLL, a “data → model → dashboard” workflow is straightforward to set up: detection, case grouping, explainability (keywords, attributions), and report export for operations centres.

In comparison with solutions based on binary classification (e.g., True/False in fake detection tasks) or fixed NER schemes, Multi_mil offers a more flexible and expressive annotation model that allows for the modelling of complex narratives, which are typical of hybrid information operations. This corpus may serve as a foundation for building more robust and interpretable models for automatic detection and analysis of information operations.

The key strengths of the corpus are as follows:

- The process of manual annotation has been demonstrated to facilitate high-quality control, flexibility in the interpretation of ambiguous expressions, and the detection of hidden rhetorical patterns.

- The annotation schema under consideration is complex in nature, incorporating layers of semantic, syntactic and pragmatic elements. Among these layers are the IO_TYPE, which denotes the nature of the information operation, and the EMO_EVAL, which is indicative of the emotional tone.

- The annotation is implemented modularly within a collaborative interface (Label Studio), allowing clear visualisation and export to multiple formats.

- Pilot validation yielded high inter-annotator agreement (κ = 0.82), indicating that the annotation guidelines are consistent and reliable.
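Agreement of this kind is commonly measured with Cohen’s kappa, κ = (p_o - p_e)/(1 - p_e), where p_o is the observed agreement and p_e is the agreement expected by chance from each annotator’s label frequencies. The sketch below assumes two annotators and message-level labels such as EMO_EVAL; which kappa variant underlies the reported κ = 0.82 is an assumption here (with more than two annotators, Fleiss’ kappa would be the usual choice), and the toy labels are invented.

```python
from collections import Counter


def cohen_kappa(a, b):
    """Cohen's kappa for two annotators who labelled the same items in the same order."""
    if len(a) != len(b) or not a:
        raise ValueError("annotations must be non-empty and aligned")
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n  # observed agreement
    count_a, count_b = Counter(a), Counter(b)
    # Chance agreement: probability that both annotators independently pick the same label.
    p_e = sum(count_a[k] * count_b[k] for k in count_a.keys() | count_b.keys()) / (n * n)
    if p_e == 1.0:
        return 1.0  # degenerate case: both used one identical label throughout
    return (p_o - p_e) / (1 - p_e)


# Toy EMO_EVAL labels from two annotators; they disagree on the fifth item only.
ann_1 = ["Neg", "Neg", "Neu", "Pos", "Neg", "Neu", "Neg", "Pos", "Neu", "Neg"]
ann_2 = ["Neg", "Neg", "Neu", "Pos", "Neu", "Neu", "Neg", "Pos", "Neu", "Neg"]
print(round(cohen_kappa(ann_1, ann_2), 3))  # 0.844
```

Here p_o = 0.9 and p_e = 0.36, so κ = 0.54/0.64 ≈ 0.844: a single disagreement costs more than 0.1 in κ because part of the raw agreement is attributed to chance.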

As with any empirical study, certain limitations must be acknowledged.

- Language and domain balance. The initial version of the corpus is imbalanced across languages and platforms, with Russian-language social media predominating. This affects the portability of models and the reliability of evaluation metrics.

- Subjectivity of pragmatic labels. The AUTHOR_INTENT and TARGET_AUDIENCE categories are inherently implicit; even with detailed guidelines, some disagreement is inevitable, since interpretation depends on context, irony, and memes.

- Rare and overlapping labels. Certain IO_TYPE classes and relationships between labels are uncommon, which impedes the training of complex models and reduces the stability of evaluations.

- Source bias. The selected sources and time windows may have introduced platform-specific and regional biases.
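The imbalance and rare-label issues listed above can be monitored with simple frequency statistics. The sketch below reports each language’s share of the data, per-language IO_TYPE counts, and the labels that fall below a minimum count; the field names, the toy records, and the threshold are assumptions chosen for illustration.

```python
from collections import Counter, defaultdict


def label_distribution(records, group_key="lang", label_key="io_type"):
    """Per-group label counts, e.g. IO_TYPE frequencies for each language."""
    dist = defaultdict(Counter)
    for r in records:
        dist[r[group_key]][r[label_key]] += 1
    return dist


def rare_labels(records, label_key="io_type", min_count=30):
    """Labels whose total frequency is below `min_count` (an arbitrary cut-off)."""
    totals = Counter(r[label_key] for r in records)
    return sorted(label for label, n in totals.items() if n < min_count)


records = [
    {"lang": "ru", "io_type": "demoralization"},
    {"lang": "ru", "io_type": "demoralization"},
    {"lang": "ru", "io_type": "discreditation"},
    {"lang": "ru", "io_type": "discreditation"},
    {"lang": "kz", "io_type": "intimidation"},
    {"lang": "en", "io_type": "demoralization"},
]

lang_share = {lang: n / len(records) for lang, n in Counter(r["lang"] for r in records).items()}
print(lang_share)                         # Russian dominates the toy sample
print(dict(label_distribution(records)))
print(rare_labels(records, min_count=2))  # ['intimidation']
```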

Notwithstanding these limitations, the findings illustrate the resilience and practical relevance of the proposed methodology. The annotation scheme demonstrated high consistency, and incorporating pragmatic and rhetorical features enhanced the interpretability of the model. Future work on the Multi_mil corpus and the Onto-IO-BERT model will concentrate on five primary directions, aimed at improving language balance, methodological rigour, and model interpretability.

The obtained results support the transfer of ViT-inspired principles (hierarchical aggregation and lightweight hybridization) to ontology-aware NLP. Promising avenues include multi-scale processing and sparse attention, which could reduce computational cost while preserving long-range dependencies. A dedicated comparison of early versus late fusion of ontological representations, analogous to analyses of hybrid encoders in computer vision, is also warranted.

Data Sampling and Stratification. The next stage of the project will expand the Kazakh and English material, add further social platforms and news channels, and apply stratified and adversarial sampling to improve coverage of rare information operation tactics.
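The stratified part of this plan can be sketched as drawing an equal quota from every (language, platform) stratum, so that a dominant stratum cannot swamp the others. The field names, the quota, and the toy pool are assumptions for illustration; adversarial sampling is not covered by this sketch.

```python
import random
from collections import Counter, defaultdict


def stratified_sample(records, keys=("lang", "platform"), per_stratum=2, seed=0):
    """Draw up to `per_stratum` records from every stratum defined by `keys`.

    Equal quotas limit the weight of large strata (e.g. Russian-language
    social media); strata smaller than the quota are taken whole.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible sample
    strata = defaultdict(list)
    for r in records:
        strata[tuple(r[k] for k in keys)].append(r)
    sample = []
    for stratum in sorted(strata):
        group = strata[stratum]
        sample.extend(rng.sample(group, min(per_stratum, len(group))))
    return sample


# Toy pool: 10 Russian social-media posts, 3 Kazakh news items, 1 English post.
pool = (
    [{"lang": "ru", "platform": "social", "id": i} for i in range(10)]
    + [{"lang": "kz", "platform": "news", "id": i} for i in range(10, 13)]
    + [{"lang": "en", "platform": "social", "id": 13}]
)
sample = stratified_sample(pool, per_stratum=2)
print(Counter(r["lang"] for r in sample))
```

In the toy pool Russian accounts for 10 of 14 items, but only 2 of the 5 sampled items.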

Multi-Pass Annotation and Active Learning. To improve the quality and coverage of annotations, particularly for complex categories, a multi-pass cross-validation and arbitration process will be introduced for “difficult” labels (e.g., AUTHOR_INTENT, FAKE_CLAIM). In addition, active learning will be used to supplement the corpus with complex or ambiguous examples.
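One common active-learning strategy, uncertainty sampling, sends to annotators the messages whose predicted label distribution has the highest entropy. The sketch below assumes a model that outputs class probabilities per message (for example, over AUTHOR_INTENT values); the message ids and probabilities are invented, and the project may well use a different selection criterion.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, budget):
    """Uncertainty sampling: ids of the `budget` messages with the highest entropy.

    `predictions` maps a message id to the model's class probabilities.
    """
    ranked = sorted(predictions, key=lambda mid: entropy(predictions[mid]), reverse=True)
    return ranked[:budget]


preds = {
    "m1": [0.98, 0.01, 0.01],  # confident prediction
    "m2": [0.34, 0.33, 0.33],  # near-uniform: the most ambiguous
    "m3": [0.60, 0.30, 0.10],
}
print(select_for_annotation(preds, budget=2))  # ['m2', 'm3']
```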

Cross-lingual transfer and adaptation. Subsequent experiments will explore parameter-efficient fine-tuning methods (such as LoRA and (IA)³) and contrastive alignment to optimise multilingual transfer and improve the model’s ability to generalise across Kazakh, Russian, and English.
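For reference, LoRA (low-rank adaptation) keeps a pretrained weight matrix W_0 ∈ ℝ^{d×k} frozen and learns only a low-rank update BA, scaled by α/r. The arithmetic below uses d = k = 768 (the hidden size of BERT-base-style encoders) and rank r = 8; both values are illustrative assumptions, not settings reported for Onto-IO-BERT. (IA)³, by contrast, learns vectors that rescale inner activations and trains even fewer parameters.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\text{trainable parameters per adapted matrix: } r(d + k) \text{ instead of } dk

d = k = 768,\ r = 8:\quad 8 \cdot (768 + 768) = 12\,288 \ \text{vs.}\ 768 \cdot 768 = 589\,824 \ (\approx 2.1\%)
```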

Explainability and bias auditing. This direction covers fairness and bias reports broken down by language and genre, error analysis for IO_TYPE subclasses, and ontology-based causal visualisations intended to improve model transparency and interpretability.
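A per-group report of this kind can start from macro-F1 computed separately for each language (or genre), together with the gap between the best and worst group. The sketch below is a plain-Python illustration with invented IO_TYPE predictions; it averages over the labels occurring in each group’s gold or predicted labels, so scores would change if a fixed label set were enforced instead.

```python
from collections import defaultdict


def macro_f1(gold, pred):
    """Macro-averaged F1 over the labels that occur in `gold` or `pred`."""
    labels = set(gold) | set(pred)
    scores = []
    for lab in labels:
        tp = sum(g == lab and p == lab for g, p in zip(gold, pred))
        fp = sum(g != lab and p == lab for g, p in zip(gold, pred))
        fn = sum(g == lab and p != lab for g, p in zip(gold, pred))
        denom = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def report_by_group(rows):
    """rows: (group, gold, pred) triples, where group is e.g. a language or genre.

    Returns per-group macro-F1 and the gap between the best and worst group.
    """
    by_group = defaultdict(lambda: ([], []))
    for group, g, p in rows:
        by_group[group][0].append(g)
        by_group[group][1].append(p)
    scores = {grp: macro_f1(gs, ps) for grp, (gs, ps) in by_group.items()}
    return scores, max(scores.values()) - min(scores.values())


# Toy IO_TYPE predictions (invented): perfect on "ru", one error on "kz".
rows = [
    ("ru", "demoralization", "demoralization"),
    ("ru", "intimidation", "intimidation"),
    ("kz", "demoralization", "intimidation"),
    ("kz", "intimidation", "intimidation"),
]
scores, gap = report_by_group(rows)
print(scores, round(gap, 3))
```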

Expansion of the information operations ontology. Further work will refine the tactic hierarchies by incorporating relationships with assertion types, evidence, and source reliability, and will strengthen the feature inference mechanisms within the Onto-IO-BERT architecture. Together, these directions will support a more equitable, interpretable, and linguistically inclusive system for the automatic detection and interpretation of multilingual information operations.

The development and publication of the Multi_mil corpus and the Onto-IO-BERT model were carried out in strict compliance with the principles of legal transparency, research ethics, and responsible data handling. All texts were obtained exclusively from open sources, in accordance with the terms of the relevant platforms (API restrictions and Terms of Service). The corpus is intended solely for scientific research and educational purposes, and its distribution is subject to prior agreement on the terms of use and a prohibition on any malicious or manipulative use.

Anonymisation and protection of personal data. All direct personal identifiers, including usernames, account links, and contact information, were either removed or masked. Only the text fragments essential for scientific verification were retained. The published version of the corpus does not contain data that could identify specific individuals.
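Masking of direct identifiers can be partly automated before manual review. The regex-based sketch below replaces URLs, e-mail addresses, @handles, and phone-like digit runs with placeholders; the patterns, placeholder names, and sample text are hypothetical, the paper does not describe its own tooling, and such heuristics will miss some identifiers and over-mask some numbers.

```python
import re

# Hypothetical masking rules. Order matters: URLs and e-mail addresses are
# replaced before bare @handles so that "name@host" is not half-masked.
PATTERNS = [
    ("URL", re.compile(r"https?://\S+|www\.\S+")),
    ("EMAIL", re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")),
    ("USER", re.compile(r"(?<!\w)@\w{2,}")),  # \w is Unicode-aware, so Cyrillic handles match
    ("PHONE", re.compile(r"\+?\d[\d\s()-]{8,}\d")),
]


def mask_identifiers(text: str) -> str:
    """Replace direct identifiers with bracketed placeholders such as [USER]."""
    for tag, pattern in PATTERNS:
        text = pattern.sub(f"[{tag}]", text)
    return text


sample = ("Contact @ivan_petrov or ivan@mail.example.org, "
          "see https://example.org/post/123 or call +7 701 123 45 67")
print(mask_identifiers(sample))
# Contact [USER] or [EMAIL], see [URL] or call [PHONE]
```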

Filtering of sensitive and potentially classified content. Texts containing calls to violence, incitement to hatred, proprietary or classified information, or materials that could cause harm to individuals or organisations were excluded from the corpus. Following the established protocol, such data was either removed or designated for restricted internal access during the selection process.

Responsible Use Policy. Acknowledging the potential for dual use, the authors devised and implemented a responsible use policy that prohibits using the corpus and models for automated targeting of real audiences, amplification of propaganda campaigns, or discrimination against social groups. The primary objective of this resource is to facilitate the identification, analysis, and counteraction of information operations, not their design.

Secure publication and access control. Each release of the corpus is accompanied by an official dataset card and model card describing sources, areas of applicability, limitations, and known risks. Access to the full text is granted upon request, while a lightweight, depersonalised version of the corpus with abridged text fragments is publicly available.

Ethical review and audit. Subsequent phases of the project will include an internal ethics review and regular audits of biases and risks, intended to ensure that the project maintains a high level of transparency, academic integrity, and social responsibility.

7. Conclusions

This study presents Multi_mil, a multilingual corpus (Kazakh, Russian, and English) with pragmatically oriented annotation designed for analysing military information operations (IO), together with baseline models and an improved Onto-IO-BERT architecture that integrates features extracted from the ontology of information impacts. The corpus combines linguistic and pragmatic levels of analysis, enabling not only the identification of entities and relationships but also the interpretation of hidden communicative intentions, emotional strategies, and types of manipulative influence.

From a practical standpoint, the developed resources and models support the rapid, real-time identification of digital threats, including automatic alerts based on IO_TYPE categories, false claim markers (FAKE_CLAIM), and emotional connotations (EMO_EVAL). They enable platform- and audience-specific analysis of social media content along a chain that runs from the source, through the text, to the target audience and the resulting emotional and pragmatic impact. Furthermore, Multi_mil provides a basis for multilingual multi-task learning (entity recognition, document classification, relation extraction), paving the way for early warning systems and situational awareness in the context of hybrid conflicts and cognitive threats.
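Automatic alerting of this kind could be layered on top of model outputs as a simple rule table. The sketch below maps a predicted IO_TYPE, its confidence, a FAKE_CLAIM flag, and EMO_EVAL to an alert level; the `Prediction` structure, the levels, the rules, and the 0.7 threshold are hypothetical choices for illustration, not part of the described system.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Prediction:
    msg_id: str
    io_type: Optional[str]  # predicted IO_TYPE, None if no operation was detected
    io_conf: float          # model confidence for io_type
    fake_claim: bool        # whether a FAKE_CLAIM marker was predicted
    emo_eval: str           # "Positive", "Neutral" or "Negative"


def alert_level(p: Prediction, threshold: float = 0.7) -> str:
    """Map model outputs to an alert level; rules and threshold are illustrative."""
    if p.io_type is None or p.io_conf < threshold:
        return "none"
    if p.fake_claim and p.emo_eval == "Negative":
        return "high"
    if p.fake_claim or p.emo_eval == "Negative":
        return "medium"
    return "low"


preds = [
    Prediction("m1", "demoralization", 0.91, True, "Negative"),
    Prediction("m2", "discreditation", 0.85, False, "Neutral"),
    Prediction("m3", "intimidation", 0.80, False, "Negative"),
    Prediction("m4", "intimidation", 0.55, True, "Negative"),  # below the threshold
    Prediction("m5", None, 0.0, False, "Positive"),
]
for p in preds:
    print(p.msg_id, alert_level(p))
```

Keeping the rules outside the model makes the alert policy easy to audit and adjust per platform without retraining.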

Subsequent phases of the project will scale up the corpus to achieve a better balance of Kazakh and English material by expanding the data sources and aligning the language and platform distribution. Cross-lingual transfer is planned using parameter-efficient adaptation methods (e.g., LoRA) to improve processing quality for low-resource languages. A further priority is to deepen the pragmatic and ontological layers used to describe information operations: refining the taxonomy of tactics, identifying authors’ intents, and characterising target audiences. In addition, annotation reliability will be assessed more rigorously (with the aim of increasing inter-annotator agreement), and the fairness, bias, and statistical significance of differences between models will be analysed.

The present work contributes to the scientific community in two ways. In natural language processing (NLP), it offers an open, reproducible resource and methodology for ontologically enriched modelling, focused on the pragmatic aspects of communication relevant to real-world applications. In information operations research, Multi_mil operationalises key IO constructs (IO_TYPE, AUTHOR_INTENT, TARGET_AUDIENCE, EMO_EVAL, FAKE_CLAIM) and provides ready-made baselines and models applicable to disinformation monitoring systems, cognitive analysis, and strategic communication. The Multi_mil corpus and the Onto-IO-BERT architecture form a foundation for further research on multilingual, interpretable, and pragmatically meaningful analysis of information impact, contributing to the development of intelligent security technologies and the digital humanities.

Author Contributions

Conceptualization, B.A. and M.S.; methodology, B.A. and M.S.; software, N.S. and B.A.; validation, A.N. (Anargul Nekessova), A.Y. and M.S.; formal analysis, A.N. (Aksaule Nazymkhan) and N.T.; investigation, M.S. and A.Y.; resources, A.N. (Anargul Nekessova), N.S. and A.Y.; data curation, B.A. and N.T.; writing—original draft preparation, B.A. and A.Y.; writing—review and editing, M.S.; visualization, N.S., A.N. (Aksaule Nazymkhan) and B.A.; supervision, A.Y. and N.T.; project administration, A.Y. and M.S.; funding acquisition, A.Y. and N.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been funded by the Committee of Science of the Ministry of Science and Higher Education of the Republic of Kazakhstan, Grant AP26195591.

Data Availability Statement

Links and information about the data source can be found in Section 3 of this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Guidelines

Research Project: Annotated Corpus of Information Operations in Military Discourse

The Purpose of Annotation

The purpose of annotation is to identify and classify text fragments containing elements of information operations according to a set of categories: Named Entities (NER) and Pragmatic Categories (see Table A1). Each message may contain several categories.

Before starting annotation, consider the following points:

- Read the entire text to understand the context, tone, and purpose of the message.

- Determine whether the text contains any signs of informational influence, emotional pressure, manipulation, exaggeration, discrediting, etc.

- Approach the task carefully and objectively, avoiding subjective interpretations that are not supported by the text (see Table A2).

Table A1.

Main Annotation Tags.

| Tag | Description | Examples Retrieved from the Documents |
|---|---|---|
| MIL_TERM | Words or phrases denoting military objects, actions, ranks, equipment, operations, or tactics. | RU: наступление, контратака, артиллерия, БПЛА, рота, окопы, бронетехника. EN: offensive, counterattack, artillery, UAV (drone), company, trenches, armored vehicle. |
| IO_TYPE | General classification of the text by type of information operation (see other categories). | RU: деморализация, подрыв авторитета, запугивание. EN: demoralization, discreditation, intimidation. |
| TARGET_AUDIENCE | Groups targeted by the message: civilians, military personnel, specific nations or political groups. | RU: граждане, военнослужащие, казахстанцы, жители оккупированных территорий, международное сообщество. EN: civilians, soldiers, Kazakhstani citizens, residents of occupied territories, international community. |
| EMO_EVAL | Overall emotional tone of the message, annotated at the message level. | RU: Позитивная, Нейтральная, Негативная. EN: Positive, Neutral, Negative. |