1. Introduction

Web-service providers often need to process various types of personal data from the service users to deliver their services effectively. This processing is essential for purposes such as verifying access, personalizing experiences, facilitating advertising, and conducting research. The primary service provider may share this personal data with second-layer service providers, who can pass the personal data to third-layer providers and onward, continuing in chained fashion. As a result, personal data supplied by the service user can cascade through multiple layers, creating a tree-like structure that is challenging to trace.

To safeguard the privacy of service users, proposed regulations require service providers to disclose information about the next layer of individuals or companies with whom they intend to share collected private data [1]. In theory, this transparency allows service users to recursively examine the privacy policies of all parties involved, enabling them to make informed decisions.

For instance, using a travel management company requires travelers to provide personal information, such as the duration of their stay, their age, any medical conditions, passport numbers, email addresses, room preferences, and dietary requirements. The travel management company is responsible for the overall management of the trip, including transportation, accommodation, meals, and travel insurance. This is often carried out in partnership with entities such as transportation providers, hotels, and insurance companies, which serve as secondary service providers.

Furthermore, local food companies that supply meals to hotels might also act as third-layer service providers, necessitating the knowledge of dietary preferences. In this context, when planning a trip, travelers should begin by reviewing the privacy policy of the travel management company to identify the involved hotel, travel, and insurance providers. Next, the privacy policies of these second-layer service providers would need to be examined to uncover any potential third-layer service providers (in this example, the food company). The traveler must then go through the privacy policy of each identified party and then assess their individual trustworthiness.

In practice, however, assessing the privacy implications through this recursive process is both complex and time-consuming for a service user. Assessing a service provider’s trustworthiness, that is, whether its actual practices match its declared privacy policies, poses a further challenge. Moreover, privacy policies may be updated after personal data has been collected, allowing service providers to operate on previously acquired data according to these new policies without detection.

In the other direction, service providers encounter significant expenses related to infrastructure, software migration, training, and, importantly, the ongoing audits [2] necessary to ensure regulatory compliance and avoid hefty fines. However, smaller organizations often struggle to navigate these complexities [3], along with the expertise and financial strain they impose [4,5].

This reality inadvertently gives larger enterprises a competitive edge in the market [6]. Once these advantages are secured, larger companies typically pass on these additional costs to consumers, resulting in higher product prices [7]. Even more concerning is that, despite these indirect costs, there is no guarantee of user privacy.

This is because passing an audit does not necessarily ensure ongoing enforcement of privacy policies. There have been instances where reputable organizations have still breached their own privacy policies, even after successfully completing an audit [8]. Therefore, we argue that the existing privacy practices do not fully address the concerns that arise in real-world situations.

Furthermore, service providers can also have privacy requirements. They have valid reasons for being hesitant to disclose the details or existence of the third parties who are their suppliers or partners. Such information may be considered strategic and may even be classified as a trade secret [9,10]. Service providers may also wish to protect their clients’ identities from their suppliers while forwarding the data. In our example, the travel company might want to conceal the client’s identity from the insurance provider to prevent the latter from reaching out directly to the traveler with subsidized offers.

In this research, we aim to address the practical privacy concerns faced by both service users and providers in a data-sharing environment. Our goal is to empower service users by providing enhanced control and simplicity in their data-sharing choices. We are committed to ensuring that service providers consistently uphold their declared privacy policies. At the same time, we are dedicated to preventing any unfair advantages from being granted to service users with malicious intentions. Furthermore, we ensure the protection of a service provider’s trade secrets by not mandating the disclosure of delegatee information.

Typically, the focus in designing privacy-enhancing technologies (PETs) has been on safeguarding service users from various privacy issues, with little consideration given to the challenges faced by service providers. Ironically, these providers often incur added costs and complexities when they implement PETs for the benefit of users. This situation raises an important practical question: Why should a service provider choose to adopt newly proposed PETs? Interestingly, this question has not been sufficiently explored in the existing literature on PETs.

The novelty of our framework is its focus on the costs and motivational factors affecting service providers. Our framework ensures key privacy requirements for service users while simultaneously lowering expenses and complexities for service providers. Additionally, for service providers, this framework introduces essential privacy features that are currently unattainable. We hope that this combination of bidirectional privacy preservation (Appendix A has more example scenarios where we need bidirectional privacy) and cost savings will motivate service providers to adopt our proposed framework in real-world applications.

The principal contributions of this paper are as follows:

Creation of a framework for Bidirectional Privacy-Preservation for Multi-Layer Data Sharing in Web Services (BPPM) which

- (a) Provides essential privacy guarantees to the service users.

- (b) For service providers, reduces the cost associated with privacy preservation.

- (c) Presents opportunities for service providers to achieve privacy benefits that were previously unattainable.

We prove the security and privacy properties of BPPM within a UC framework.

We implemented the framework and measured and compared its different performance metrics to demonstrate its practicality.

This paper is organized into several sections. An overview of BPPM is presented in Section 2. Section 3 deals with the details of our protocol design. In Section 4, the privacy and security aspects of BPPM are proved within a formal UC framework. Implementation details and related performance analysis are described in Section 5. In Section 6, the costs and benefits of BPPM are analyzed. Related works are reviewed in Section 7. Finally, Section 8 concludes this paper.

2. BPPM Overview

The service user (or the data owner) obtains services from the primary service provider, who relies on secondary sub-service providers. This process can continue recursively, creating a tree-like hierarchical structure of service providers (or the data users).

BPPM effectively obscures the visibility of the entire tree structure from any individual party involved. Each service provider is only aware of its immediate parent and child providers. Likewise, the primary service provider is the sole entity that knows the identity of the service user. This concealment feature allows service providers at any level to safeguard their trade secrets. In this paper, the terms service provider and data user are used interchangeably as are service user and data owner.

The data user at the layer is denoted as . To deliver any requested sub-services to its parent node, may require various individual data items (such as passport number or date of birth) from an upstream party, which will be processed in specific ways. specifies these data-item requirements and the associated processing details in a document known as the Personal Data processing Statement (), which is included in its privacy policy.

Because of the trade secret protection feature, the data owner () is unaware of any other data users besides the primary data user. However, BPPM ensures that all data processing statements () remain accessible to the data owner. This transparency allows the data owner to assess the privacy implications of sharing their personal data and to make a well-informed decision.

To achieve this, each data user collects structures from the privacy policy documents of their child nodes. They then append their own and send the consolidated information to their parent. In a recursive manner, the primary data user compiles the details for the entire tree in and places that in its own privacy policy document.
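The recursive collection described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `DataUser` class, its field names, and the example statements are hypothetical, and statements are plain strings rather than the signed structures the paper defines later.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DataUser:
    """Hypothetical node in the data usage tree."""
    name: str                      # known only to its parent and children
    own_statements: List[str]      # this node's own processing statements
    children: List["DataUser"] = field(default_factory=list)

    def aggregate(self) -> List[str]:
        """Collect the statements of all children, then append our own.

        Only statements travel upward; no provider names are included,
        so the data owner sees what is processed but not by whom.
        """
        collected: List[str] = []
        for child in self.children:
            collected.extend(child.aggregate())
        collected.extend(self.own_statements)
        return collected


# Hypothetical tree based on the travel example from the introduction.
food = DataUser("food supplier", ["dietary needs -> meal planning"])
hotel = DataUser("hotel", ["room preference -> room allocation"], [food])
insurer = DataUser("insurer", ["age -> premium calculation"])
agency = DataUser("travel agency", ["email -> booking confirmation"], [hotel, insurer])

consolidated = agency.aggregate()
```

In this sketch the primary data user ends up with one flat list covering the whole tree, which is what the data owner would review in the primary data user's privacy policy.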

The data owner reviews this and provides consent for some or all of the data processing statements (i.e., ). Subsequently, the data owner gathers the required personal data items in the structure, . Both and are transmitted to .

To ensure both authenticity and confidentiality, the data owner signs and then encrypts both and before their transmission. Upon receipt, the primary data user processes locally. When necessary, relevant subsets can be passed on to child nodes. This forwarding can occur recursively, allowing personal data from the data owner to traverse the data usage tree.

BPPM facilitates seamless data sharing while simultaneously reinforcing data owners’ control over their personal information. Importantly, our protocol guarantees that personal data are never exposed in plaintext, even during their computation. This ensures that the personal data can never be leaked. Moreover, our protocol also ensures that only the data owner-approved processing can be performed on the data and nothing else.

In BPPM, we accomplish this by processing the data and consent pair within a trusted execution environment (TEE) located at each data user’s site. A TEE provides two key security assurances. First, a TEE ensures that only a known program is executed. Second, it provides a secure sandbox environment, an enclave, which maintains the confidentiality of the program’s data and state during computation (for further details, see Appendix B).

Executing code naively within a TEE environment is not a foolproof solution. There can be a mismatch—whether intentional or not—between what a data processing statement claims and the actual actions carried out by the executing code. To remove this risk, BPPM limits execution to only those code components that have received explicit approval from a privacy auditor.

The notations and acronyms used in this paper are summarized in Table 1.

2.1. BPPM Workflow

Each data user, (), begins by setting up an enclave (Stage 1 in Figure 1) with a predefined secure base code () on a TEE-enabled platform. Next, the data user loads pre-audited, privacy-leakage-free additional code components that correspond to the personal data-processing statements outlined in into the newly created enclave. These code components are received from a trusted code provider () (Stage 1.1).

The primary data user, , completes its setup process (Stage 1 for ) and aggregates the structure of the entire data usage tree into . Following this, the data owner can review from the privacy policy document of the primary data user. The data owner may then choose to opt out of specific proposed data processing statements while accepting a subset, . Subsequently, the data owner sends , along with the relevant personal data items (), securely to the primary data user’s enclave (Stage 2 in Figure 1).

It is to be noted that, although contains data processing statements for the entire tree, only a subset of it, , is processed by , and the child nodes process the rest. After receiving the personal data and processing consent (in Stage 2), may, when necessary, request its own enclave to compute results according to a specific processing statement, (Stage 3 for ).

Whenever necessary, requests its enclave to forward the required subset, to , along with the corresponding subset of (Stage 4 for ). After receiving, may subsequently process or forward them to its own children. In this manner, the ’s personal data may propagate to .

BPPM verifies the authenticity of the data owner’s consent by checking the signature on the . However, a standard signature scheme falls short in this scenario because only part of the original consent is transmitted to a child node during the forwarding process, making it impossible for the child node to validate the signature.

While a redactable-signature scheme (RSS) [11] might seem like a viable option, it incurs substantial computational costs. Instead, we harness the capabilities of the TEE within BPPM to achieve results akin to a redactable signature scheme while sidestepping these associated expenses.

2.2. Privacy and Security Goals

BPPM works on a zero-trust assumption. Data users as well as data owners are permitted to act with complete malice. Both can observe, record, and replay messages, as well as deviate from established protocols. Although data users must possess a TEE, they can still control (modify or view) everything outside the TEE, including the operating system, communication channels, RAM, and more. Data users may also send malicious inputs when invoking the enclave’s entry points; they can even disable their TEE platform to gain an advantage.

Consequently, our protocol must be equipped to handle all these scenarios and ensure the following security and privacy goals are met:

- 1. is required to provide a Guarantee of Privacy Preservation (see Section 3.1) to . must obtain this guarantee on behalf of the entire , without learning any of the data users in . The sub-tree of the entire data usage tree, rooted at , is denoted by , while refers to the parent node of . Recursively, must provide a guarantee of privacy preservation to the on behalf of the entire data usage , without revealing any other members in .

- 2. must only be aware of as the source of the received data and as the next tier of data users. Moreover, must remain unaware of the identity/existence of its siblings. Consequently, only should be aware of the true end service user (or the ).

- 3. A malicious must not be able to feed fake data (or replayed data) to gain access to unauthorized services.

- 4. The must be able to approve a single consolidated list, , without learning which processing is carried out locally and which is outsourced. Hence, the distribution of the data processing statements between (i.e., ) and its (i.e., ) must remain unknown to the .

- 5. No party other than the and the may be aware of . The is considered trusted and does not share the details of , which could reveal ’s business strategy.

- 6. While forwarding data to , must reduce the received to and to . contains only the agreed subset of personal data-processing statements required by , and is the set of associated personal data. This reduction ensures that an honest but curious cannot gain access to any additional information or insights regarding the processing activities conducted by its parent or siblings.

- 7. Although and are the reduced versions of the originals sent by the , needs assurance of their authenticity. In other words, BPPM should provide a guarantee to that cannot add or modify any content in or , although content can be removed/redacted.

- 8. Source code that respects privacy and complies with the stated data processing statement needs to be audited, but this process can be expensive and time-consuming. Thus, pre-audited code should be reusable in a controlled way, while such privacy-preserving source code is protected against piracy.

3. Protocol Design Details

We now describe the detailed design of BPPM. First, we define what we mean by a guarantee of privacy preservation. Next, we show how a piracy-free code-sharing ecosystem can be constructed. The required data structures used in BPPM are then described, along with the details of our protocol.

3.1. Guarantee of Privacy Preservation

This guarantee encompasses three key aspects. First, personal data is never disclosed in plaintext; the untrusted data user can only access the results of computations performed on that data. This is accomplished by processing personal data within an enclave, which accepts encrypted data as input and produces plaintext output. Second, no other computations on personal data are allowed beyond those explicitly authorized by the . Our protocol ensures that the enclave verifies the ’s explicit permission for any requested processing. Lastly, the output of these computations does not compromise privacy as it is ensured that only audited code can execute on the ’s personal data.

3.2. Piracy-Free Source-Code Sharing

The privacy of the may be compromised if the code operating within the enclave inadvertently or deliberately leaks confidential information (e.g., a malicious code may reveal the decryption of the received inputs). Furthermore, the code executed within the enclave must align with the specified data processing statement. For instance, if the data processing statement indicates that the date of birth is utilized solely to verify age, the code should not use this information for any alternative purpose, such as sending promotional offers. These two aspects must be assessed during the auditing process (e.g., SOC audits) and validated by a trusted auditor. However, this process can be both time-consuming and costly [2]. Additionally, except in exceptional circumstances, only standard processing is typically required for personal data (e.g., using date of birth to verify age). Therefore, whenever possible, it may be advantageous to reuse pre-audited code.

We therefore assume the presence of a code provider, who develops source code that facilitates various standard operations on diverse personal data items and has it audited by a trusted auditor. The maintains a database of this pre-audited code, which can be purchased by various users () as needed. However, there is a risk that a malicious user may access the code in plaintext, allowing them to steal intellectual property or secretly distribute the code to others. Piracy protection is thus required.

BPPM leverages the presence of the TEE on each data user’s location to protect against piracy as well. Instead of sending the plaintext code, the code is encrypted using the public key specific to the recipient’s enclave. Thus, only the destined and trusted enclave, with access to the corresponding secret key, can recover and execute the code.

However, a pre-existing code might not always be available for every required data usage statement (). In such cases, would indeed need to develop the corresponding code and subject it to auditing before use. In this case, acts as its own . While this approach may be more expensive, can update the availability of the newly developed code through a public bulletin board and become a code provider. Other data users seeking similar functionality can now purchase this newly developed and audited code, allowing to recover some incurred costs (i.e., to offset expenses related to development and auditing).

Indeed, different parties (e.g., other data users or even dedicated software developers) could play the role of a . The entire code-reuse scenario is depicted in Figure 2. It is to be noted that playing the role of a may provide some financial benefit for , though it is not a mandatory requirement for BPPM. Indeed, a code developer might not want others to use their code, either inside or outside of a black-box environment (e.g., if the developed code gives the developer a strategic or commercial advantage).

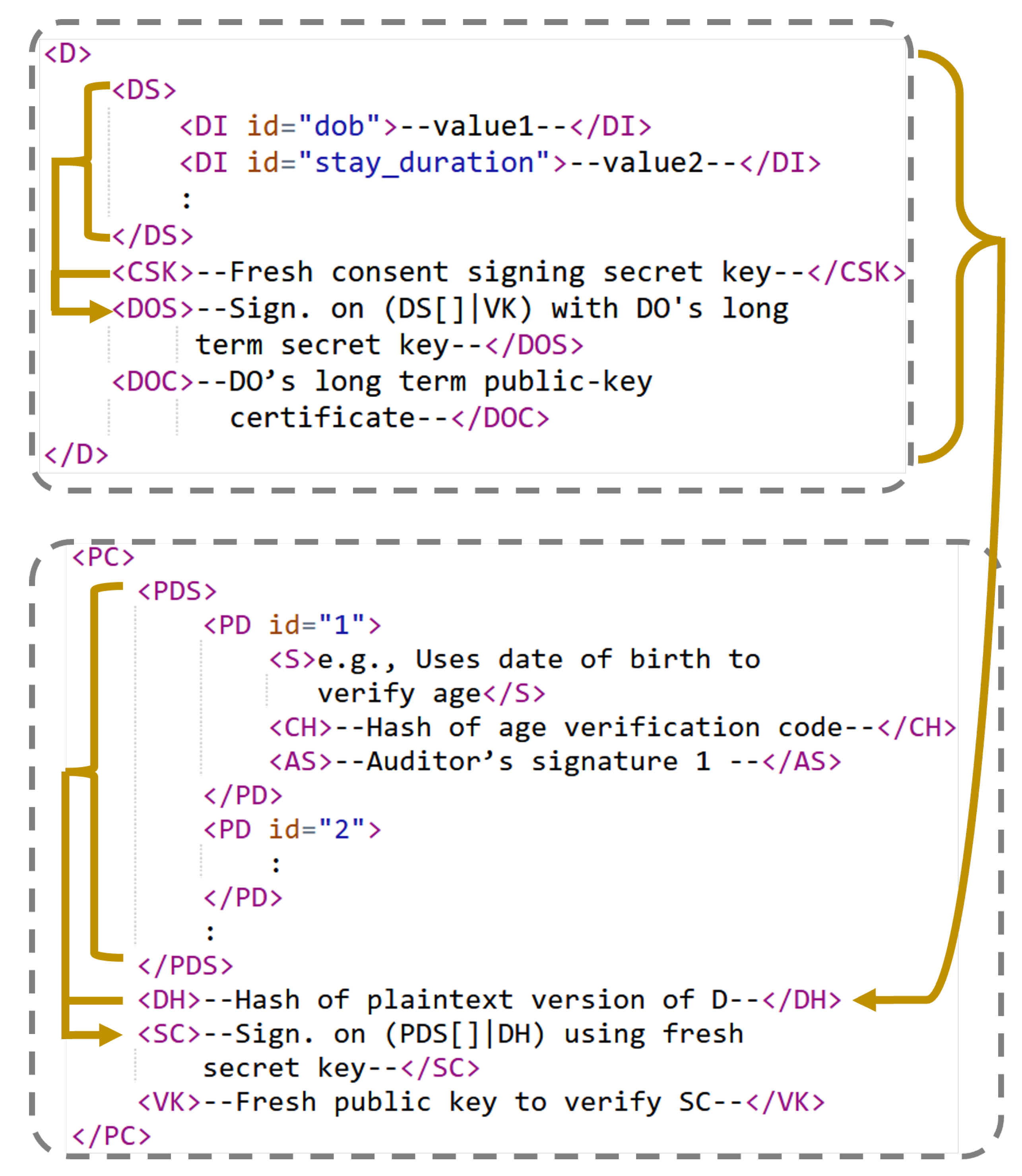

3.3. Data Structures

Iyilade et al. designed “Purpose-to-Use” (P2U) [12], a privacy policy specification language for secondary data-sharing. Inspired by this work, we designed two simple XML-like data structures (Figure 3) that can transfer data and the accompanying data processing consent in BPPM. Unlike P2U, our data structures do not require the identity of any data user to be revealed while still providing privacy guarantees to the data owners as well as to the data users. Our structures also ensure the authenticity of the data and consent.

3.3.1. Personal Data Structure (D)

The provides their personal data through the personal Data structure, D, but in encrypted format. Only the enclave can decrypt and access its elements. The array contains the actual Data Set, comprising a collection of data items (e.g., ). To manage the processing consent, the generates a new key pair (, ). The secret key is utilized to sign the agreed-upon list of data processing statements, and this Consent Signing secret Key is stored in . During the forwarding phase, a trusted enclave can use this secret key to create a signature on the reduced version of the original consent.

This ephemeral key pair alone does not guarantee authenticity. Therefore, the also possesses a long-term key pair (, ) and signs the ephemeral key with . A PKI-verifiable public key certificate, (stored in ), certifies .

Since is not disclosed outside the enclave, the identity of the , which is also included within , remains protected. Finally, holds the Data Owner’s Signature (generated with ) on the concatenation of and . The enclave, having access to , is able to verify the authenticity of .

3.3.2. Personal Data Processing Consent (PC)

The entire structure acts as the details of the ’s personal data Processing Consent. It contains the details regarding the ’s agreed-upon list of processing statements (referred to as and described in greater detail in Section 3.3.3) along with additional elements. Data users may access this structure in plaintext format, outside of their enclave environment.

contains the plaintext D’s Hash. The Signature on the Consent, , stores the signature on the concatenation of and . This signature is generated using and can be verified with the consent Verification Key (), which is outlined in .

3.3.3. Personal Data Processing Details Set (PDS)

The personal data Processing Details Set is a sub-structure of . It includes a list of Processing Details (), which comprises three sub-elements: , , and . contains the data processing Statement in a human-readable format. It contains the details of what personal data item is required, why it is required, how it will be processed, and what will happen if the service user does not consent to this (e.g., if the service user opts out from providing their date of birth, then the senior citizens’ discount cannot be claimed). holds the hash value of the corresponding audited source code, and the Auditor’s Signature is stored in . During signing, the auditor combines and to ensure that processing can be performed solely for the stated purpose.
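The binding between a statement, its audited code, and the auditor's approval can be sketched as follows. This is an illustrative sketch only: the names `ProcessingDetails`, `statement`, `code_hash`, and `auditor_sig` are hypothetical (the original field names are not reproduced here), SHA-256 is an assumed choice of hash, and an HMAC tag stands in for the auditor's digital signature purely so that the example runs with the Python standard library. A real deployment would use an asymmetric signature scheme, as the paper specifies.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessingDetails:
    statement: str      # human-readable data processing statement
    code_hash: bytes    # digest of the audited source code
    auditor_sig: bytes  # auditor's tag over statement and code hash


def audit(statement: str, code: bytes, auditor_key: bytes) -> ProcessingDetails:
    """Bind a statement to one specific piece of code (stand-in signing)."""
    code_hash = hashlib.sha256(code).digest()
    encoded = statement.encode()
    # Length-prefix the statement so the two fields cannot be confused.
    msg = len(encoded).to_bytes(4, "big") + encoded + code_hash
    tag = hmac.new(auditor_key, msg, hashlib.sha256).digest()
    return ProcessingDetails(statement, code_hash, tag)


def is_approved(pd: ProcessingDetails, code: bytes, auditor_key: bytes) -> bool:
    """Check that `code` is exactly what the auditor approved for `pd.statement`."""
    expected = audit(pd.statement, code, auditor_key)
    return (hmac.compare_digest(expected.code_hash, pd.code_hash)
            and hmac.compare_digest(expected.auditor_sig, pd.auditor_sig))


# Hypothetical usage: the key and code below are placeholders.
key = b"demo-auditor-key"
code = b"def verify_age(dob): ..."
entry = audit("Date of birth is used only to verify age", code, key)
ok_same_code = is_approved(entry, code, key)
ok_changed_code = is_approved(entry, code + b"  # extra behaviour", key)
```

Because the tag covers both the statement and the code hash, swapping in different code or attaching the approval to a different statement makes the check fail in this sketch.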

3.4. Detailed Protocol

A portion of the BPPM protocol is executed through a secure enclave program, while other parties handle the remaining aspects.

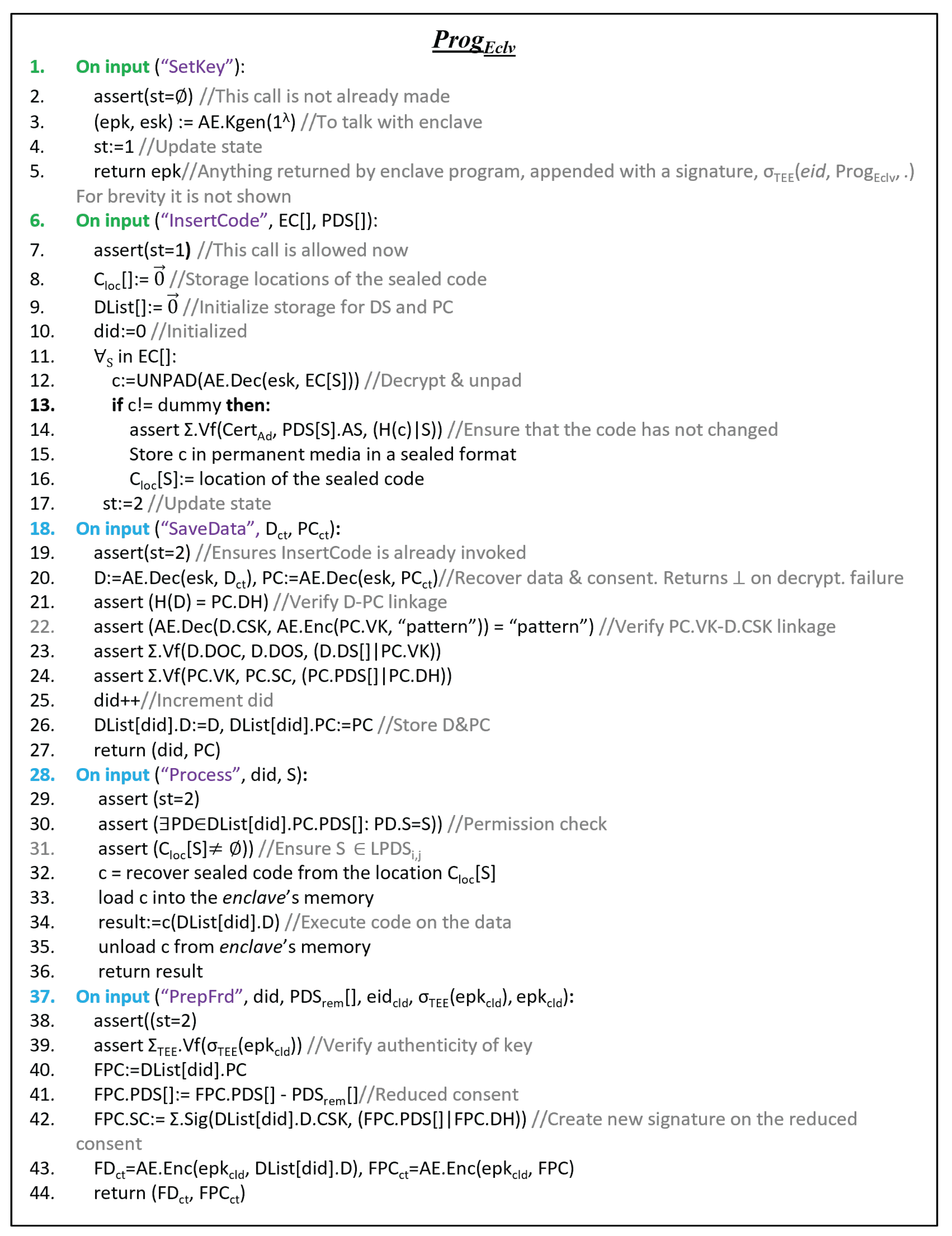

Figure 4 presents a formal description of the protocol executed by the participating parties (), while Figure 5 illustrates the enclave program (). These representations are grounded in the established ideal functionality of attested execution, denoted as [13].

The protocol () interacts with the enclave program () at specific entry points, by issuing a “resume” command to (i.e., lines 5, 10, 13, 16, and 19 in Figure 4). In , the , all data users () and the each have distinct roles. The entry points are coded using colors: green entry points may only be invoked once, with any subsequent attempts resulting in a failure (⊥), while cyan entry points can be invoked multiple times. The shaded boxes represent the internal machines in and are not exposed to the external environment, . As a result, these entry points can only be controlled indirectly. In contrast, all other entry points of are directly accessible by .

BPPM utilizes two arrays, and , both indexed by the personal data processing statements, S. The array contains the plaintext source code corresponding to each data processing statement, S (represented as a string variable). There is a cost involved in producing and auditing it, which may necessitate that data users compensate the for using . The array maintains a list of data users who have been granted permission to execute the code .

Two different EUF-CMA-secure digital signature schemes are used in BPPM. One is , which is used for verifying the authenticity of , , and . The other, , is used by TEEs to attest to the legitimacy of their output. An IND-CPA-secure asymmetric encryption scheme is used to communicate with the enclave securely. A second pre-image resistant hash function (H) is used to produce the digest for and D.

3.4.1. Setup Phase

Initially, each data user enters the setup phase. During this phase, the data user initializes a new enclave and launches it to execute the trusted base code, . This base code securely generates an asymmetric key pair and discloses the public portion of the key. Following this, the code provider sends the pre-audited code components directly to the enclave, enhancing its processing capabilities.

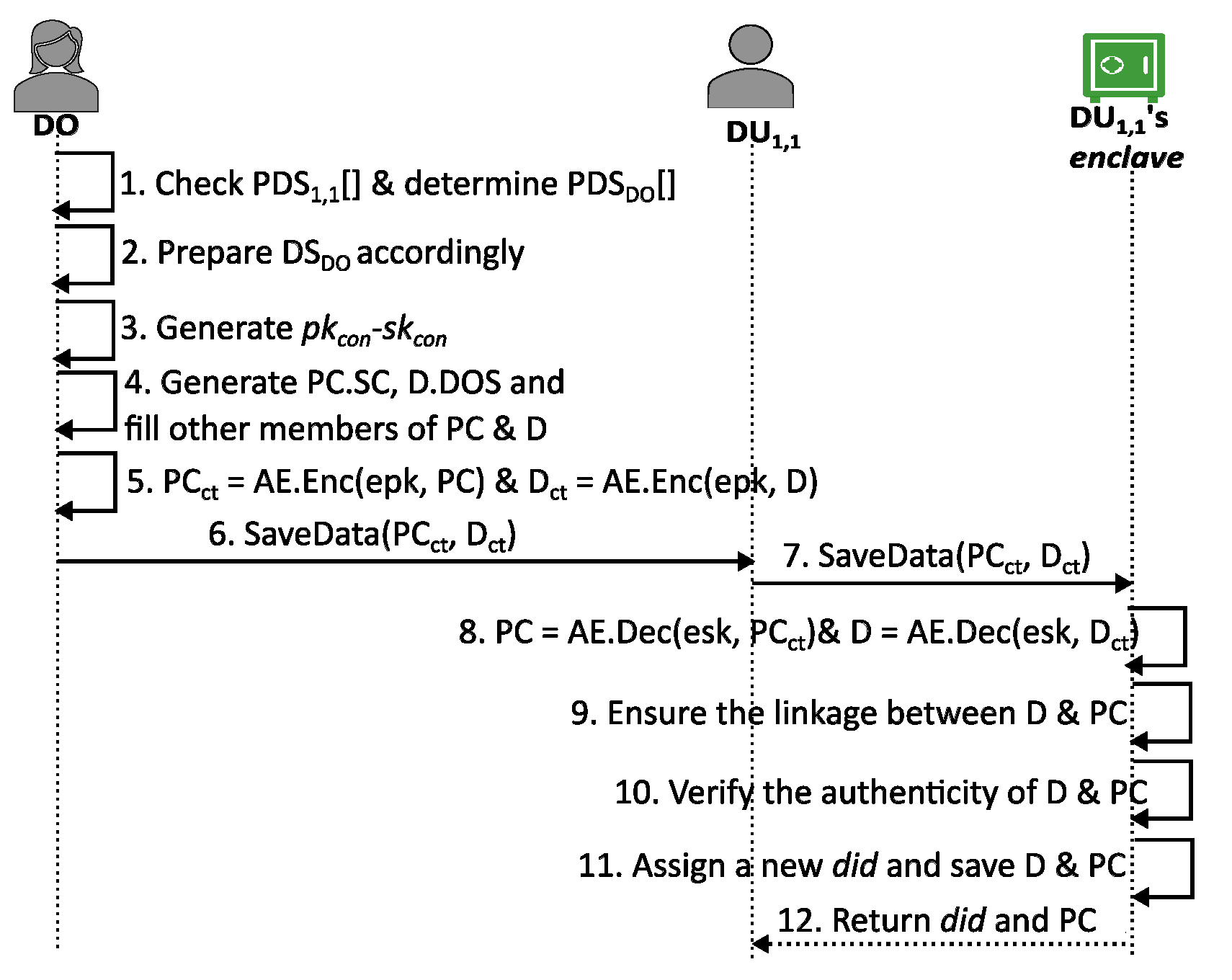

3.4.2. SendOrgData Phase

During the SendOrgData phase, the data owner securely transmits their personal data along with the approved processing consent to the primary data user’s enclave. Upon receipt, the enclave verifies the authenticity of this information and stores the personal data in an encrypted and sealed format [14] for future use. However, the enclave does disclose the specifics of the approved processing consent, allowing the data user to clearly understand the services that can be offered based on those consents and the business logic.

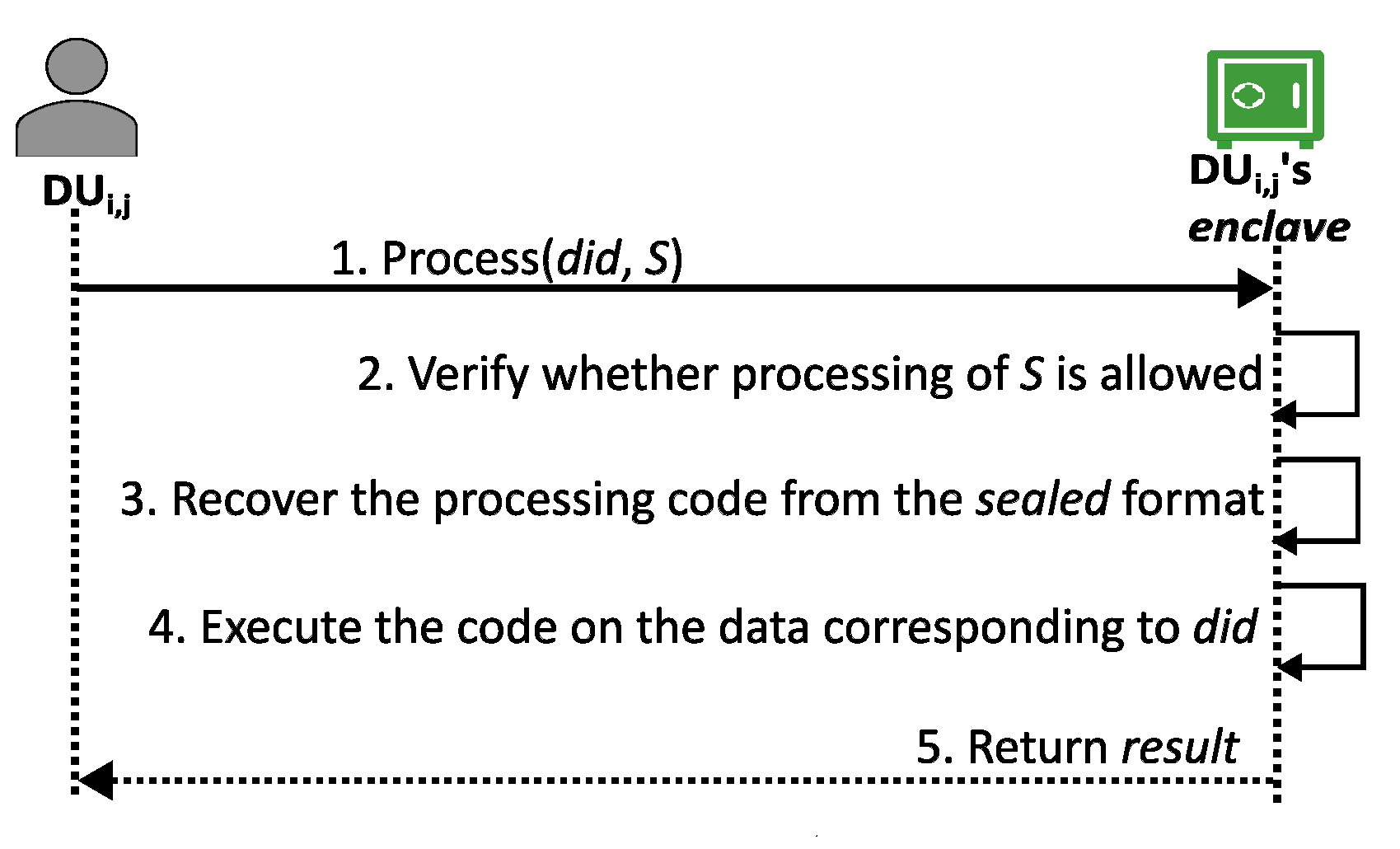

3.4.3. Process Phase

When the data user intends to process the received personal data, they request specific processing from their enclave. Upon receiving the request, the enclave first verifies whether the data owner granted permission for that processing. If the permission is confirmed, the enclave executes the processing code on the private data within a secure sandbox environment and subsequently reveals the computation result to the data user.
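The consent check that precedes processing can be sketched as follows. This is a simplified model under stated assumptions: `EnclaveSketch`, `load_code`, `process`, and `is_adult` are hypothetical names, the class is ordinary Python rather than code running in a real TEE, and encryption, sealing, and signature verification are omitted.

```python
from datetime import date
from typing import Any, Callable, Dict, Set


class EnclaveSketch:
    """Hypothetical stand-in for the enclave's processing entry point."""

    def __init__(self, data: Dict[str, Any], consent: Set[str]) -> None:
        self._data = data          # personal data, never returned directly
        self._consent = consent    # statements approved by the data owner
        self._code: Dict[str, Callable[[Dict[str, Any]], Any]] = {}

    def load_code(self, statement: str, fn: Callable[[Dict[str, Any]], Any]) -> None:
        """Register the (assumed pre-audited) code for one statement."""
        self._code[statement] = fn

    def process(self, statement: str) -> Any:
        """Run the code for `statement` only if the owner consented to it."""
        if statement not in self._consent:
            raise PermissionError("no consent for: " + statement)
        if statement not in self._code:
            raise LookupError("no audited code loaded for: " + statement)
        return self._code[statement](self._data)


def is_adult(data: Dict[str, Any]) -> bool:
    """Example processing: reveal only whether the owner is 18 or older."""
    dob: date = data["date_of_birth"]
    today = date(2025, 1, 1)  # fixed date so the example is reproducible
    age = today.year - dob.year - ((today.month, today.day) < (dob.month, dob.day))
    return age >= 18


enclave = EnclaveSketch(
    data={"date_of_birth": date(1990, 5, 17), "passport": "X1234567"},
    consent={"verify age"},
)
enclave.load_code("verify age", is_adult)
enclave.load_code("read passport", lambda d: d["passport"])
```

Here the data user learns only the boolean result of the age check; a request for the passport number is refused because the data owner did not consent to that statement.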

Given that TEEs can offer stateful obfuscation [13], our solution is also capable of delivering differential privacy [15]. To achieve this, the enclave may optionally introduce some noise before outputting the final result. Additionally, the enclave may track the privacy budget, and once this budget is depleted, it can refrain from disclosing the result.
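The optional noise and budget tracking might look like the sketch below. It assumes the Laplace mechanism and simple sequential composition of the privacy parameter epsilon, neither of which is prescribed by the paper; `BudgetedRelease` and its methods are hypothetical names. The seeded pseudo-random generator is used only to keep the example reproducible and is not suitable for real deployments.

```python
import random


class BudgetedRelease:
    """Hypothetical enclave-side wrapper that adds Laplace noise per query."""

    def __init__(self, total_epsilon: float, seed: int = 0) -> None:
        self._remaining = total_epsilon
        # Assumption: a seeded, non-cryptographic RNG for reproducibility only.
        self._rng = random.Random(seed)

    def _laplace(self, scale: float) -> float:
        # The difference of two i.i.d. exponentials is Laplace-distributed.
        rate = 1.0 / scale
        return self._rng.expovariate(rate) - self._rng.expovariate(rate)

    def release(self, true_value: float, sensitivity: float, epsilon: float) -> float:
        """Return a noisy value, or refuse once the budget is used up."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self._remaining + 1e-12:
            raise RuntimeError("privacy budget exhausted")
        self._remaining -= epsilon
        return true_value + self._laplace(sensitivity / epsilon)

    @property
    def remaining(self) -> float:
        """Budget still available, clamped at zero."""
        return max(self._remaining, 0.0)


budget = BudgetedRelease(total_epsilon=1.0)
first = budget.release(true_value=10.0, sensitivity=1.0, epsilon=0.5)
second = budget.release(true_value=10.0, sensitivity=1.0, epsilon=0.5)
```

After the two queries above the budget is spent, so a third call to `release` raises an error instead of disclosing another result. Because the counter lives inside the enclave's state, the data user cannot reset it in this model.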

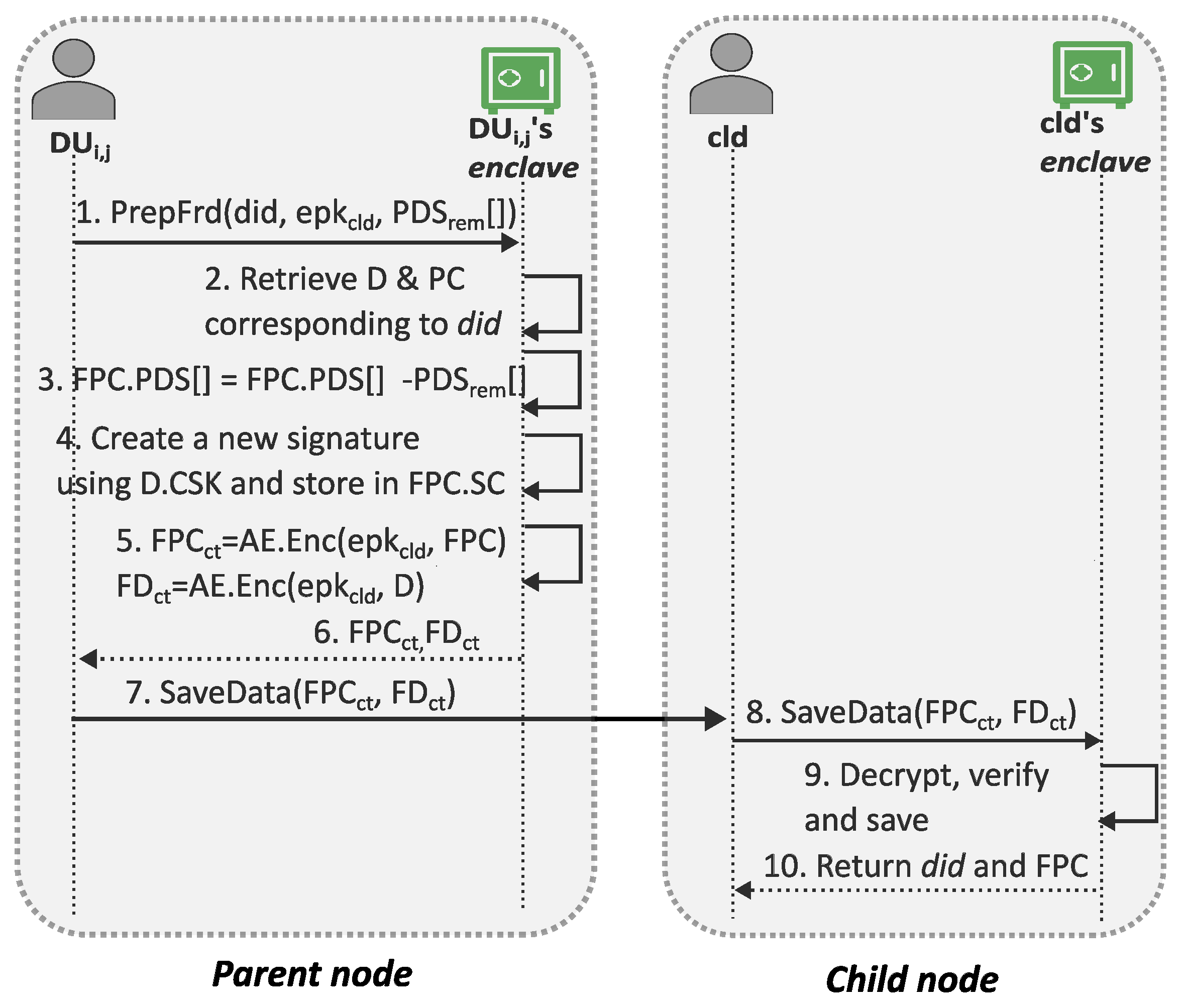

3.4.4. Forward Phase

When a data user intends to share a portion of the personal data they have received, they submit a request to their enclave. In this request, the data user specifies which processing capabilities should be removed during the forwarding process to prevent the child node from gaining any undue processing advantages. In response, the enclave prepares the personal data with the reduced processing consent. Subsequently, on behalf of the data owner, the enclave securely generates a signature for this new consent, enabling the receiving enclave to verify the trustworthiness of any forwarded information.
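The redact-then-re-sign step can be sketched as follows. This is an illustration under stated assumptions, not the protocol itself: all function names are hypothetical, the tag is assumed to cover the consent list together with a hash of the data, and an HMAC stands in for the consent signature so that the example needs only the Python standard library. In the sketch, the key is assumed to be available only inside the two enclaves.

```python
import hashlib
import hmac
from typing import Dict, List, Set, Tuple


def hash_data(data: Dict[str, str]) -> bytes:
    """Digest of the data items in a canonical (sorted) order."""
    h = hashlib.sha256()
    for k in sorted(data):
        for part in (k, data[k]):
            encoded = part.encode()
            h.update(len(encoded).to_bytes(4, "big") + encoded)
    return h.digest()


def sign_consent(statements: List[str], data_hash: bytes, key: bytes) -> bytes:
    """Stand-in tag over the consent list and the hash of the data."""
    h = hmac.new(key, digestmod=hashlib.sha256)
    for s in statements:
        encoded = s.encode()
        h.update(len(encoded).to_bytes(4, "big") + encoded)
    h.update(data_hash)
    return h.digest()


def forward(data: Dict[str, str], statements: List[str],
            drop_statements: Set[str], keep_items: Set[str],
            key: bytes) -> Tuple[Dict[str, str], List[str], bytes]:
    """Inside the parent's enclave: only remove content, then re-sign."""
    reduced_statements = [s for s in statements if s not in drop_statements]
    reduced_data = {k: v for k, v in data.items() if k in keep_items}
    tag = sign_consent(reduced_statements, hash_data(reduced_data), key)
    return reduced_data, reduced_statements, tag


def child_accepts(data: Dict[str, str], statements: List[str],
                  tag: bytes, key: bytes) -> bool:
    """Inside the child's enclave: recompute the tag and compare."""
    expected = sign_consent(statements, hash_data(data), key)
    return hmac.compare_digest(expected, tag)


# Hypothetical example values.
key = b"consent-signing-key-held-in-enclaves"
data = {"dob": "1990-05-17", "diet": "vegan", "passport": "X1"}
statements = ["verify age", "plan meals", "check passport"]
fwd_data, fwd_statements, fwd_tag = forward(
    data, statements, {"verify age", "check passport"}, {"diet"}, key)
```

Since `forward` can only filter the original lists and the untrusted data user never sees the key, anything the data user adds to the forwarded consent outside the enclave fails the child's check in this model.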

For further step-by-step details of our protocol, readers may refer to Appendix C.

4. Privacy and Security Proof

The proof of the privacy and security properties of BPPM unfolds in multiple stages. Initially, the threat model and necessary assumptions are addressed. Subsequently, the ideal functionality for BPPM, , is defined. Finally, is utilized to demonstrate BPPM’s privacy and security within a UC framework.

4.1. Assumptions and Threat Model

Our threat model assumes the presence of a Byzantine adversary. In the context of BPPM, this implies that the data owner and data users, at any layer, may engage in malicious behavior. The sole requirement for data users in BPPM is that they must possess a TEE-enabled platform, whether locally or in the cloud. BPPM can detect whether a data user is using a TEE and, if not, excludes that data user from the protocol. We assume is honest and keeps the details of the contract () made with confidential.

BPPM utilizes typical (and insecure) public communication channels (e.g., a conventional TCP channel over the internet) for communication. Consequently, BPPM addresses the resulting concerns by employing the required strategies (e.g., using an IND-CPA-secure encryption scheme) within the protocol execution. However, if enhanced security/privacy is needed (e.g., the ’s identity is required to be hidden from ), BPPM supports alternative anonymous communication channels (e.g., Tor and mixnets) as add-ons without requiring any modifications to our protocol. In this paper, we do not consider denial-of-service (DoS) attacks as part of the adversary’s capabilities.

BPPM requires the to possess a long-term, PKI-verifiable public-key certificate, . The is assumed not to share the corresponding secret key, , with anyone. Auditing source code for security and privacy vulnerabilities is not a part of BPPM. However, BPPM verifies the auditor’s signature before executing any code. It is assumed that the will possess all the audited code () before taking part in BPPM.

In designing BPPM, we took into account the fact that support for TEEs on client-side platforms, such as desktop processors, has either slowed down or come to a standstill [16]. Our solution only necessitates TEE functionality on the server side. We believe this requirement is quite reasonable, considering the industry is poised to invest heavily in this technology [17]. This trend is reflected in the ongoing efforts to standardize TEE-based infrastructures and protocols [18].

We acknowledge that, in some situations, side-channel attacks have previously been identified as vulnerabilities for TEEs. Nevertheless, this technology is continuously evolving, and substantial advancements have been made to enhance the security of TEEs [19]. In this work, we assume that TEE hardware securely upholds its security properties.

Specifically, once execution starts, the contents of the enclave become inaccessible, and the internal code remains unchanged. Beyond this, we do not make any assumptions about security. For instance, the adversary still has the ability to control or alter everything outside the enclave, such as the operating system, communication channels, and RAM content. They can also send in malicious inputs when calling the enclave’s entry points. In designing the BPPM protocol, we considered the possibility that an adversary might try to disable or bypass the TEE, and we designed our protocol to effectively tackle these situations.

4.2. Ideal Functionality

Based on the desired privacy and security goals (Section 2.2) and the specified threat model (Section 4.1), we define an ideal functionality, (Figure 6). As mentioned earlier, it has access to and . As a fundamental construct of a UC framework [20], the caller of is either an honest party or the simulator, . If sends a message, it first goes to the adversary, . If allows, then the message is forwarded on to the proper recipient. Since BPPM uses typical insecure communication channels, the identity of the sender/receiver and the message remain visible to . The following sections describe the design of each entry point of .

4.2.1. Setup Entry Point of

In the real world, during the setup call, the fact that is currently executing setup is revealed to due to the observability of the multiple SendCode calls directed to the . To capture this, notifies the 3-tuple (setup, , ). then initializes the required storage area specific to the invoking data user and stores the input . This will be used later during the data processing and forwarding phase. Since in the real world, publishes at the end of the setup call, reveals as a public output. Notably, the does not take part in as it serves solely as an internal machine within .

4.2.2. SendOrigData Entry Point of

After invocation of the SendOrigData entry point, prepares D and in a manner that mirrors the operations of the in the real world. In the real world, the executes the remote call (SaveData…), which allows to infer that the is now engaged in the SendOrigData phase.

Hence, notifies a 5-tuple consisting of (SendOrigData, , , , ) to . uses the input , while creating the ciphertexts, and . If permits, then carries out the subsequent operations and stores D and for future use. Finally, discloses the plaintext version of and the newly generated to .

4.2.3. ProcessData Entry Point of

During the data processing, first ensures that the has the required code execution permission privileges. Furthermore, it ensures that the consent structure corresponding to allows the processing of S. If both the conditions are met, the corresponding processing is performed on the personal data, and the computation result is returned.
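The two checks described above can be sketched as follows. This is a minimal illustration in Python, not the authors' C implementation. The names `IdealStore` and `process_data`, and the representation of a consent as a set of permitted statement identifiers, are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class IdealStore:
    """Hypothetical per-data-user storage kept by the ideal functionality."""
    # Statement ids whose audited code this data user may execute.
    code_permissions: Set[str] = field(default_factory=set)
    # Data handle -> stored personal data items.
    data: Dict[str, List[int]] = field(default_factory=dict)
    # Data handle -> statement ids covered by the service user's consent.
    consents: Dict[str, Set[str]] = field(default_factory=dict)


def process_data(store: IdealStore, handle: str, statement: str,
                 code: Callable[[List[int]], int]) -> int:
    """Run `code` on the stored data only if both checks pass."""
    # Check 1: the data user holds execution permission for this code.
    if statement not in store.code_permissions:
        raise PermissionError("no execution permission for " + statement)
    # Check 2: the consent attached to this data allows the statement.
    if statement not in store.consents.get(handle, set()):
        raise PermissionError("consent does not cover " + statement)
    # Both conditions are met: compute and return only the result.
    return code(store.data[handle])
```

Only the computation result leaves the function; the stored personal data itself is never returned to the caller.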

4.2.4. ForwardData Entry Point of

While forwarding, first retrieves D and corresponding to the requested and, by following the steps of , prepares the forwarded version of the consent, . After that, to correspond with the real world, sends the following 5-tuple (ForwardData, , , , ) to . If allows the communication, performs its normal operation, creates a new for the and stores the D and within the storage specific to the child. Finally, outputs the plaintext version of FPC and the newly generated to the .

4.3. UC-Proof

Theorem 1. If and

are EU-CMA-secure (randomized) signature schemes, H is second-preimage-resistant, and is IND-CPA-secure, then “UC-realizes” in the -hybrid model.

Proof. Let

be an environment and

be a dummy adversary [

20] who simply relays messages between

and other parties. To show that

“UC-realizes”

, we must show that there exists a simulator,

, such that for any environment, all interactions between

and

are indistinguishable from all interactions between

and the

construct. In other words, the following must be satisfied:
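In standard UC notation, this requirement is usually written as the computational indistinguishability below. The symbol names are assumptions chosen for this illustration: $\pi$ for the real BPPM protocol running in the hybrid model, $\mathcal{F}$ for the ideal functionality, $\mathcal{A}$ for the dummy adversary, $\mathcal{S}$ for the simulator, and $\mathcal{Z}$ for the environment.

```latex
\forall \mathcal{Z}: \quad
\mathrm{EXEC}_{\pi,\,\mathcal{A},\,\mathcal{Z}}
\;\approx_{c}\;
\mathrm{EXEC}_{\mathcal{F},\,\mathcal{S},\,\mathcal{Z}}
```

Here $\mathrm{EXEC}$ denotes the output distribution of the environment after interacting with the respective execution.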

takes part solely in the ideal world. In the ideal world, an honest party does not engage in any actual work. Instead, after receiving inputs from

, it communicates with

.

can also communicate with

. To achieve indistinguishability between the ideal and real world,

emulates the transcripts of the real world using information obtained from

.

In the case of corrupted parties, extracts their inputs and interacts with . Once provides a response, emulates the transcripts for the corrupted party, which remain indistinguishable from the real world. Here, the term corrupted party refers to the party under the adversary’s control. Unless otherwise specified, any communication between and or between and is simply forwarded by .

In this proof, we demonstrate the existence and design of

and establish that its interactions are indistinguishable from the real world across the four fundamental design stages previously listed: setup, SendOrigData, ProcessData, and ForwardData. We adhere to the general strategies of UC security proofs found in existing TEE-based solutions [13,21,22] while designing the simulator for BPPM. The following sections detail the design of the simulator and present the argument for indistinguishability, covering all possible combinations of corrupt and honest parties. □

4.3.1. Design—Setup

Our threat model assumes that is honest, but the can be either honest or malicious. Hence, there are two possible combinations.

A. When is honest: generates a key pair () for and publishes . When the obtains a notification (setup, , ) from , it emulates the real-world interaction between and . To do that

1. chooses random and generates with .

2. parses the received from and generates network messages of the format (SendCode, , , , ).

3. Only after receiving replies from , instructs to continue. Note: Since cannot prove its identity as , will reply with an encrypted dummy code in all the responses.

Hybrid 1 works similarly to the real-world scenario, except that is replaced with a random value and with the public part of a randomly generated key pair. Since an enclave also generates and in the same random fashion, Hybrid 1 is indistinguishable from the real world.

Hybrid 2 is similar to Hybrid 1, except that is replaced with . Since is EU-CMA-secure, Hybrid 2 is indistinguishable from Hybrid 1.

Hybrid 3 is similar to Hybrid 2, except that all the replies from are replaced with encryptions of dummy messages of the same length. Since is IND-CPA-secure, Hybrid 3 is indistinguishable from Hybrid 2. Note that Hybrid 3 is exactly the same as the ideal world.

B. When is corrupt: In this case, nothing will be performed by the ideal functionality. Hence, there is nothing to simulate in this case.

4.3.2. Design—SendOrigData

A. When both and are honest: As both parties are honest, can only see and tamper with the network messages, which are all that needs to emulate. To do that

1. starts after notifies with (SendOrigData, , , , ).

2. forwards that to .

3. If does not tamper with the network communication, then instructs to continue normally. On the other hand, if tampers with the communication, then in the real world, the enclave identifies these and aborts. Hence, our designed also returns an abort signal in that case.

In the real world, the enclave can detect any alteration of or and notifies with abort. Step 3 of the ensures the same behavior in the ideal world as well. Hence, the real world and ideal world remain indistinguishable, without requiring any further hybrid arguments.

B. When is honest but is corrupted: For this case, mainly records ’s messages and faithfully emulates ’s behavior. Hence

1. starts after receiving notification from and intercepts the communication between and .

2. If alters the ciphertexts (, ) while calling the SaveData entry point of , returns abort.

3. Otherwise, instructs to continue normally. In this case, delivers the -returned and to (instead of -returned replies).

4. Moreover, by utilizing the equivocation method [13], produces a valid attestation for and and delivers them to .

Hybrid 1 works similarly to the real-world scenario, except that the real attestation is replaced with the attestation obtained using the equivocation method. Since is EU-CMA-secure, Hybrid 1 remains indistinguishable from the real world. Hybrid 1 is the same as the ideal world.

C. When is corrupted but is honest: Since, by the end of this phase, learns nothing, there is nothing to simulate for . only ensures that the effect of ’s possible malicious action remains indistinguishable in both the ideal and real world. Hence,

1. observes what the performs before executing the remote call.

2. If faithfully follows , calls with the actual inputs of .

3. When notifies back, instructs to continue normally.

4. On the other hand, if deviates from , aborts without calling .

D. When both and are corrupt: As both the parties are corrupt, the ideal functionality will not be used by either of them, requiring no simulation.

4.3.3. Design—Process

Since only is involved in this phase, it could have only two combinations:

A. When is honest: In this case, obtains proper output by consulting . Since all the steps of this phase are executed locally, the external cannot know that has contacted instead of executing the actual protocol, . Therefore, will not notice any difference. Thus, the situation is already indistinguishable.

B. When is corrupt: Since is corrupt, will not be used. Thus, there is nothing to simulate in this case.

4.3.4. Design—ForwardData

Four combinations are possible due to the involvement of two different parties.

A. When both and the child are honest: Since both parties are honest, only emulates the network transcripts. The simulation steps remain similar to the steps mentioned in Case A of Section 4.3.2. Specifically,

1. starts after notifies with (ForwardData, , , , ).

2. If does not tamper with the network communication, then instructs to continue normally. Otherwise aborts, without invoking .

Step 2 of the ensures the indistinguishability between the real and ideal world.

B. When is honest but the child is corrupt: In this context, the ’s role is analogous to of Case B of Section 4.3.2. Therefore, acts in an identical manner, with only one distinction. starts once it obtains the notification (ForwardData, …) from , rather than (SendOrigData, …).

C. When is corrupt but the child is honest: Here, mainly records ’s messages and faithfully emulates ’s behavior. To do so,

1. intercepts the communication between and .

2. If invokes the PrepFwd entry point of , intercepts that call and invokes the ForwardData entry point of with those parameters.

3. After this, notifies back with (ForwardData, , , , ).

4. Then, by utilizing the equivocation, generates the attestation on , .

5. delivers -returned and to , along with the generated attestation.

6. Then notices ’s subsequent actions.

7. If issues a -remote call, verifies whether passed parameters in that remote call match the delivered values in step 5 or not. If they match, instructs to continue normally by replying “OK”.

8. On the other hand, if observes that inputs something different in the remote call, then returns “NOT-OK” to . In that case, does not deliver anything to the .

Hybrid 1 works similarly to the real-world scenario, except that the real attestation is replaced with the attestation obtained using the equivocation method. Since is EU-CMA-secure, Hybrid 1 remains indistinguishable from the real world, and Hybrid 1 is the same as the ideal world.

D. When both and the child are corrupt: As both parties are corrupt, the ideal functionality will not be used here, requiring no simulation.

5. Implementation Details and Performance Analysis

We now describe the critical details of our BPPM implementation. Following this, we will compare the performance of BPPM with that of a non-privacy-preserving scenario, focusing on computation time and communication bandwidth.

5.1. Implementation Details

We implemented a basic proof of concept of BPPM in C, and it is available online [23]. Intel SGX is used as the underlying TEE technology because of its wide availability, but other TEE technologies are also compatible with BPPM. Our framework is generic and does not use any special feature of Intel SGX.

We used the Ubuntu 20.04 Operating System for all our development and experimentation tasks. To prepare the enclave-side code (

), we employ the Gramine shim library [24,25], which comprises approximately 2.1 KLOC. For cryptographic primitives and network operations, we rely on the Mbed TLS library [26].

To improve efficiency, instead of encrypting the entire data using the recipient enclave’s public key, we use hybrid encryption (i.e., the sender generates a fresh symmetric key to encrypt the plaintext and that symmetric key is encrypted with the recipient’s public key) to send data or code confidentially to an enclave. Specifically, we use an attested-TLS channel [27]. As with a normal TLS channel, attested-TLS provides both confidentiality and integrity of the network message. Furthermore, it guarantees that the server side of the established TLS channel is an enclave, executing a specific source code on a genuine TEE platform.
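The hybrid pattern described above can be sketched as follows. This is a toy illustration only: it uses textbook RSA over two known Mersenne primes and a SHA-256-based XOR keystream so that it runs with the Python standard library alone, and neither primitive is secure. It is not the attested-TLS channel used by the implementation, and the names `keystream_xor`, `hybrid_encrypt`, and `hybrid_decrypt` are hypothetical.

```python
import hashlib
import secrets
from typing import Tuple

# Toy RSA key pair built from two Mersenne primes. Illustration only:
# unpadded RSA with a 216-bit modulus is NOT secure.
P, Q = 2**127 - 1, 2**89 - 1
N, E = P * Q, 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy symmetric cipher: XOR data with a SHA-256 counter-mode keystream."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        chunk = data[offset:offset + 32]
        out.extend(b ^ p for b, p in zip(chunk, pad))
    return bytes(out)


def hybrid_encrypt(plaintext: bytes, n: int, e: int) -> Tuple[int, bytes]:
    """Encrypt bulk data with a fresh symmetric key, then wrap that key."""
    sym_key = secrets.token_bytes(16)                    # fresh key per message
    body = keystream_xor(sym_key, plaintext)             # bulk data, symmetric
    wrapped = pow(int.from_bytes(sym_key, "big"), e, n)  # key, under public key
    return wrapped, body


def hybrid_decrypt(wrapped: int, body: bytes, n: int, d: int) -> bytes:
    """Unwrap the symmetric key with the private key, then decrypt the body."""
    sym_key = pow(wrapped, d, n).to_bytes(16, "big")
    return keystream_xor(sym_key, body)
```

The asymmetric operation touches only the 16-byte key, so its cost stays constant regardless of how large the transferred data or code is.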

During the setup phase, it is essential to enhance the functionality of the data user’s base enclave. Instead of the approach taken by other researchers, which involves sending a new enclave [28], we propose transferring only the necessary code components in the form of encrypted dynamic libraries. This method not only reduces storage requirements but also shortens network transfer time. Additionally, it eliminates the overhead associated with launching a new enclave. For instance, a standard 1088 KB enclave can be substituted with a binary of a dynamic library with a size of 16 KB. The loading of this dynamic library requires only ∼1.17 ms, in contrast to the ∼15.4 ms needed for enclave initialization, plus an additional ∼1.1 ms for local attestation of that new enclave.
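As a quick worked check of the figures quoted above, the following sketch derives the size reduction and the start-up speedup. The constants are the approximate measurements from the text; the variable names are chosen here for illustration.

```python
# Approximate figures quoted in the text above.
ENCLAVE_SIZE_KB = 1088
LIBRARY_SIZE_KB = 16
LIBRARY_LOAD_MS = 1.17
ENCLAVE_INIT_MS = 15.4
LOCAL_ATTESTATION_MS = 1.1

# How much smaller the transferred binary becomes.
size_ratio = ENCLAVE_SIZE_KB / LIBRARY_SIZE_KB

# Launching a new enclave costs initialization plus local attestation.
new_enclave_ms = ENCLAVE_INIT_MS + LOCAL_ATTESTATION_MS
speedup = new_enclave_ms / LIBRARY_LOAD_MS

print(f"size reduction: {size_ratio:.0f}x")       # 68x
print(f"new enclave: {new_enclave_ms:.1f} ms")    # 16.5 ms
print(f"start-up speedup: {speedup:.1f}x")        # 14.1x
```

Under these numbers, shipping a dynamic library transfers roughly 68× less data and becomes usable roughly 14× sooner than launching and attesting a fresh enclave.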

Another advantage is that, to save space in the base enclave, these libraries can be unloaded and stored on permanent media when not in use. This approach helps address the enclave’s limited size. To guard against piracy, unloaded library binaries are saved in a sealed format [14], ensuring that only the same instance of the enclave can load and execute those binaries in the future. As the binaries are never stored in plaintext outside the enclave, reverse engineering becomes impossible as well.

5.2. Performance Analysis

We utilized an SGX-enabled VM instance, DC4SV3 [29], provided by Microsoft Azure as our hardware setup during performance measurement. This instance features a quad-core processor and is equipped with 32 GB of RAM. We gathered performance metrics for BPPM as well as other candidates by running all tests in this identical environment. We utilized the local loopback interface during the experiment. Thus, instead of focusing on actual network latency, we concentrated on measuring the volume of data transferred over the network.

We evaluated the performance of BPPM by conducting each experiment 100 times and calculating the average result. Currently, a typical web service collects and processes around 20 to 30 personal data items [30]. Given the anticipated increase in personal data requirements in the future, we examine how performance is affected as this number ranges from 20 to 100. In our analysis, we assume that, on average, each personal data item (e.g., age or passport number) is represented by a 64-bit unsigned integer.

To our knowledge, no existing solution encompasses all the features offered by BPPM. Nonetheless, we compare BPPM with other alternatives that offer a partial set of these features. Regarding data privacy, computation on encrypted data (COED) is a newly defined umbrella term [31]. It comprises three technologies: homomorphic encryption (HE), multi-party computation (MPC), and functional encryption (FE).

We selected FE as a comparison candidate because it could be partially applicable in web service scenarios for privacy-preserving computation and policy enforcement. Although MPC is primarily used in different contexts, we also included it in our comparison due to its capability for privacy-preserving calculations in shared environments. We chose to exclude HE from the comparison because it operates on ciphertexts and also yields its results in ciphertext form, which makes a direct comparison difficult. Therefore, we compare BPPM against a representative MPC implementation, ABY [32], and an FE implementation, CiFEr [33].

A CiFEr ciphertext ends up being 64× larger than the corresponding plaintext, which increases the required communication cost. In comparison, the communication cost of BPPM is 3 to 14× smaller. ABY incurs around 7500–9000× the communication cost of BPPM, mainly due to the underlying oblivious transfers.

Figure 7 compares the communication costs for performing a single data-processing operation.

We also analyze the overhead BPPM imposes over a non-privacy-preserving situation. For that purpose, we define a typical secure but non-privacy-preserving web service, “non-priv”, as follows: After agreeing with the privacy policy, the service user sends their personal data to the service provider over a server-authenticated TLS channel. The service provider verifies the authenticity of the received personal data and then stores it, in encrypted form, in a database. Whenever the service provider needs to perform computations on the data, it fetches the data ciphertext, decrypts it, and performs the required computations.

In BPPM, when personal data is transferred, the corresponding structure (occupying ∼3.5 KB in a typical scenario) is also included in the transfer. Additionally, a remote attestation process occurs with each transfer. In an SGX environment, this remote attestation usually incurs a communication cost of ∼7 KB. Consequently, compared to a non-priv transfer, BPPM involves additional communication cost of about 10.5 KB (7 KB for remote attestation plus 3.5 KB for the structure) when transferring personal data.

Figure 7 shows the comparison when there is just one computation on the data user’s side (i.e.,

). It is important to note that BPPM only needs one round of communication, no matter how many data-processing operations the data user performs or how many times it performs them. All processing parameters can be specified through the single

structure (

Figure 3). Although its size slightly increases with the number of processing statements (requiring ∼1 KB per processing statement), only a single transfer is enough.

In contrast, both FE and MPC require the entire network transfer process to be repeated for each processing operation, even on the same data. For them, the communication costs should be multiplied by the number of data processing statements (i.e.,

), as shown in

Figure 7.

Network latency can vary significantly with the current network conditions. All our experiments were conducted on a local loopback interface, so we did not include any measurements related to network latency in this analysis. Nevertheless, it is reasonable to assume that, under any given network conditions, the network latency for BPPM and its comparison candidates will follow the trends shown in Figure 7. Therefore, on a relative scale, BPPM will maintain the same standing as illustrated in Figure 7.

To compare the data processing time (i.e., the processing latency), we used the vector inner product as a representative processing function, as this calculation is supported by all four comparison candidates. In our experiment, each vector consisted of a set of private data items, and we varied the number of data items from 20 to 100, recording the time required. BPPM is nearly twice as slow as the non-priv mode, which aligns with the anticipated performance decline associated with the enclave environment [34]. Nevertheless, BPPM is 1600–3000× faster than CiFEr and 600–750× faster than ABY (see Figure 8).
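The benchmark workload can be sketched as follows. This is a plain, non-enclave illustration of the inner-product computation and of averaging over repeated runs, not the authors' measurement code. The function names, the test vectors, and the choice to emulate 64-bit unsigned wrap-around with a mask are assumptions made for this example.

```python
import time
from typing import List

MASK64 = (1 << 64) - 1  # emulate 64-bit unsigned arithmetic (assumption)


def inner_product(xs: List[int], ys: List[int]) -> int:
    """Inner product of two equal-length vectors of 64-bit unsigned items."""
    if len(xs) != len(ys):
        raise ValueError("vectors must have the same length")
    acc = 0
    for x, y in zip(xs, ys):
        acc = (acc + x * y) & MASK64
    return acc


def average_seconds(n_items: int, repetitions: int = 100) -> float:
    """Average wall-clock time of one inner product over `repetitions` runs."""
    xs = list(range(1, n_items + 1))
    ys = list(range(n_items, 0, -1))
    start = time.perf_counter()
    for _ in range(repetitions):
        inner_product(xs, ys)
    return (time.perf_counter() - start) / repetitions


if __name__ == "__main__":
    # Vary the number of personal data items from 20 to 100, as in the text.
    for n in range(20, 101, 20):
        print(n, average_seconds(n))
```

Absolute timings from such a sketch are not comparable with the figures reported above, which were measured on the C implementation inside and outside an SGX enclave.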

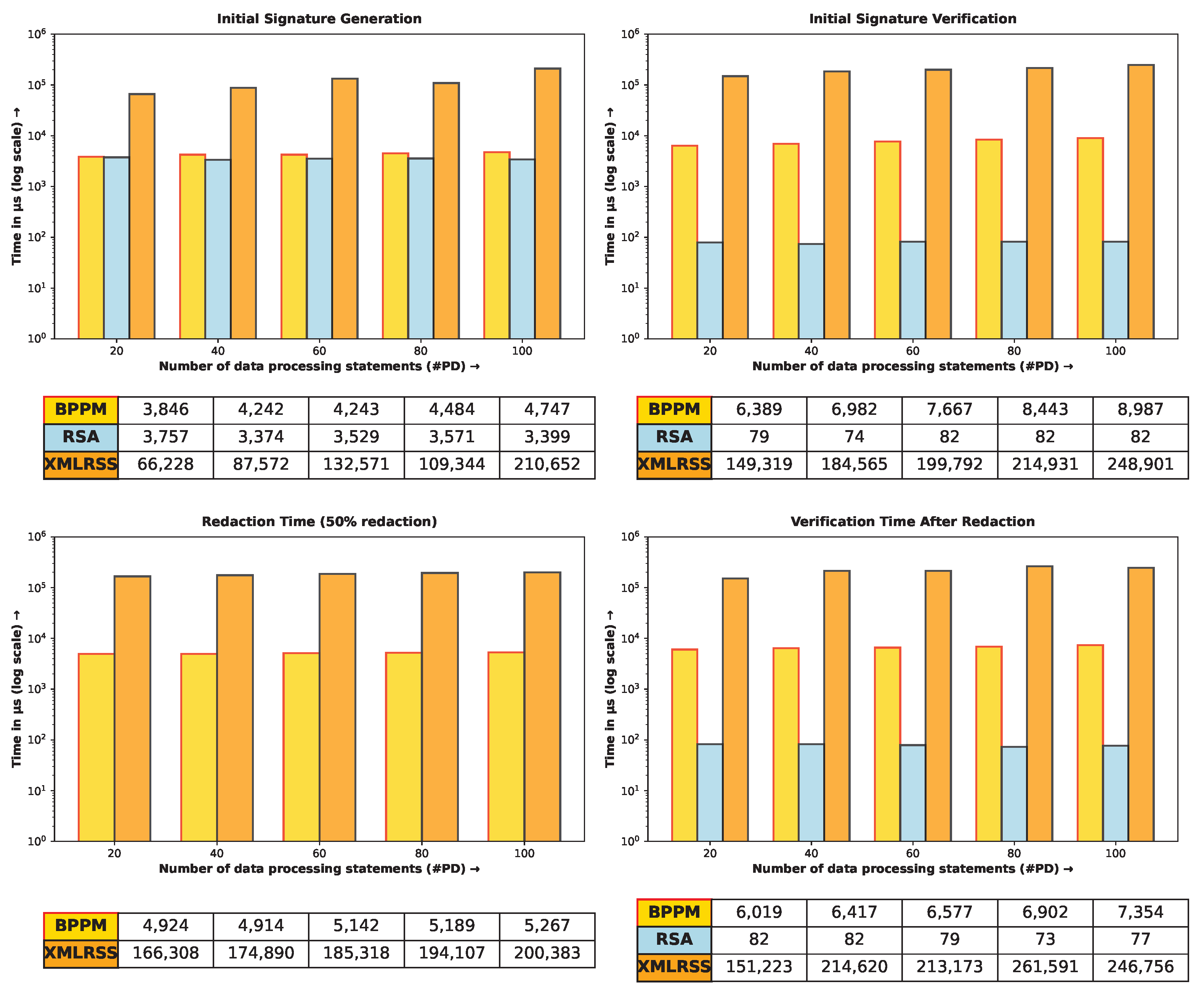

During forwarding, BPPM produces a reduced version of the

structure while maintaining the anonymity of the

. This could be accomplished through a specific type of RSS known as a signer-anonymous designated-verifier redactable signature scheme (AD-RS) [35]. However, RSS schemes are often slow, relying on computation-intensive cryptographic techniques, such as zero-knowledge proofs.

In BPPM, we achieve the same effect as AD-RS by utilizing the confidentiality and attested execution properties of the TEE technology. We compare the performance of BPPM with an existing RSS implementation, XMLRSS [36]. We also measure how much overhead BPPM introduces in comparison with a normal (i.e., non-redactable) RSA-2048 signature scheme, which serves as a non-privacy-preserving authentication baseline. Unsurprisingly, BPPM is not as fast as RSA-2048, but it demonstrates a significant performance advantage over XMLRSS. BPPM generates initial signatures more than 15× faster, verifies signatures over 24× faster, and redacts data more than 34× faster (see Figure 9).

Therefore, like other PETs, BPPM offers privacy preservation at the cost of computation and communication overhead. In summary, BPPM roughly doubles the processing latency and adds about 10.5 KB of communication to each personal data transfer. However, when compared to existing PETs, BPPM is considerably more efficient and offers a unique, essential feature set. We are hopeful that this combination makes BPPM a practical choice for web services.

6. Cost–Benefit Analysis

In this section, we discuss the costs and benefits incurred by the parties involved in BPPM. While this framework has not yet been implemented in the real world, we argue for its usefulness and benefits if applied.

6.1. Service Users

In addition to the previously discussed processing latency and network bandwidth considerations, implementing BPPM incurs no costs for service users. More importantly, it offers several significant privacy benefits. By utilizing the property of attested execution, BPPM guarantees that privacy policies are enforced continuously. It simplifies data-sharing decisions for users by providing a single list of all data-processing statements, eliminating the need to recursively navigate through privacy policies of all the involved parties.

This approach aligns with efforts to improve transparency and control in data-sharing practices [37]. By allowing service users to select specific subsets from a consolidated list, it facilitates privacy negotiation beyond just the primary data user. Furthermore, this unified list enables the seamless integration of existing automated privacy negotiation tools, such as P3P [38] and Privacy Bird [39], thereby reducing the burden on users.

Studies show that identical privacy policies can lead to different code implementations [40,41]. Furthermore, the privacy policy or the corresponding processing code may change after personal data has been collected, often without notifying the service users [42]. This situation creates a potential loophole for service providers. In the BPPM framework, service users not only agree to the data processing terms outlined in the privacy policy but also to the auditor-approved specific processing code, which effectively tackles these concerns.

6.2. Service Providers

In today’s environment, service providers must allocate substantial resources to comply with various privacy regulations such as GDPR [43], PIPEDA [44], and CCPA [45]. Table 2 offers an estimate of the costs that a mid-sized organization (with 50 to 250 employees) might incur to adhere to these three key privacy regulations.

Addressing the intricacies of compliance necessitates substantial expertise in privacy-related matters, a challenge that is especially impactful for smaller organizations [4]. However, research suggests that specific personal data is predominantly utilized for a limited range of purposes [42,46]. This insight promotes the reuse of privacy-related software, ultimately resulting in significant cost savings.

Acquiring processing code from a provider does involve some costs. While we have yet to implement BPPM in a real-world scenario, we believe that if it were applied, these costs would be significantly lower than those presented in Table 2. Crucially, service providers can now delegate auditing and privacy responsibilities to their code providers, thereby reducing complexity and minimizing the delays typically associated with software development.

Table 2. Current compliance cost comparison across regulations.

| Cost Category | GDPR | CCPA | PIPEDA |
|---|---|---|---|
| Initial incorporation of privacy features in existing software | USD > 100 K [47,48] | USD > 100 K [49,50] | USD > 30 K [51] |
| Annual software maintenance | USD > 50 K [47,48] | Not found | USD > 15 K [51] |
| Privacy officers’ annual salary | USD > 200 K [47,52,53] | USD > 200 K [47,52,53] | USD > 200 K [47,52,53] |
| Annual privacy consultant | USD > 10 K [47,54] | USD > 10 K [47] | USD > 10 K [47] |
| Annual privacy-specific audit | USD > 30 K [48,54] | USD > 20 K [50] | Not found |
| Total first-year cost (initial plus annual) | USD > 390 K | USD > 330 K | USD > 255 K |
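The totals in the last row of Table 2 can be reproduced by summing the lower-bound figures of all rows above it in each column, which means the one-time initial cost is included. The sketch below does this, treating “Not found” entries as zero (an assumption made here); the dictionary keys are shorthand labels introduced for this example.

```python
NOT_FOUND = 0  # assumption: "Not found" entries add nothing to the lower bound

# Lower-bound costs in thousands of USD, copied from Table 2.
costs_k_usd = {
    "GDPR":   {"initial": 100, "maintenance": 50, "officer": 200,
               "consultant": 10, "audit": 30},
    "CCPA":   {"initial": 100, "maintenance": NOT_FOUND, "officer": 200,
               "consultant": 10, "audit": 20},
    "PIPEDA": {"initial": 30, "maintenance": 15, "officer": 200,
               "consultant": 10, "audit": NOT_FOUND},
}

# Sum every row per regulation, including the one-time initial cost.
totals = {reg: sum(rows.values()) for reg, rows in costs_k_usd.items()}
print(totals)  # {'GDPR': 390, 'CCPA': 330, 'PIPEDA': 255}
```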

From the perspective of code providers, the potential for extensive reuse of privacy-preserving processing code allows them to continuously develop and expand their libraries. The piracy-free distribution model of BPPM not only motivates code providers but also promotes a sustainable ecosystem.

At present, there is a shortage of privacy auditors [55], and the auditing process requires considerable time, which affects business operations. BPPM effectively tackles these practical challenges by allowing the same data processing statement and its corresponding data processing code to be audited only once at the code provider’s location. This approach not only reduces ambiguity surrounding personal data processing [41,56] but also encourages the standardization of personal data processing across various organizations worldwide.

BPPM effectively minimizes the scope of auditing. In the current landscape, auditors are entrusted with the critical task of meticulously overseeing the storage and retention of personal data. They must also conduct a comprehensive analysis of all software flows associated with that data. Importantly, these flows can evolve over time, necessitating re-audits.

Since BPPM does not expose personal data in plaintext, many of the data handling and auditing requirements related to data storage are significantly lessened. Furthermore, other than the code provided by the code provider, no additional components of the service provider’s software are capable of processing personal data, thereby eliminating the need for privacy inspections across the remainder of their codebase.

7. Related Work

Numerous studies have identified solutions to address the privacy concerns of data owners. However, there is a lack of research focused on designing PETs that take into account why the data users would want to deploy them. To the best of our knowledge, the concept of bidirectional privacy has not been fully explored, and there has been no direct work on it. Still, some studies have examined various related privacy aspects of using web services, which we will discuss next.

Agrawal et al. propose the concept of Hippocratic Databases [57]. The authors define ten privacy principles for data access that preserve the privacy of the data owner (e.g., limited disclosure, consented use, and compliance). LeFevre et al. enhance this concept at the cell-level granularity of the database [58]. However, there is still a requirement for an external trusted third party, like an auditor. Essentially, the auditor’s job is to check whether everything functions according to these principles. In practice, this may be quite complex and prone to errors. The auditor must check all code, all data flows, etc., to ensure that privacy policies have been implemented properly.

There is currently a shortage of auditors [55]. Hence, to simplify the auditing process, XACML [59] can be used, where data-access rules are specified in a machine-readable XML format. A data user submits the data-access request to a policy enforcement point (PEP), which forwards it to another module called a policy decision point (PDP). The PDP permits data access after consulting the XACML policy. In this way, an auditor only needs to ensure that the stated privacy policy on the website and the corresponding XACML are aligned.
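The PEP/PDP request flow can be sketched as follows. This is a simplified illustration that replaces the XML policy with a Python dictionary; it does not use or reproduce the XACML schema, and the names `Policy`, `pdp_decide`, and `pep_request` are hypothetical.

```python
from typing import Dict, Set, Tuple

# Hypothetical stand-in for an XACML policy:
# (requesting role, data attribute) -> purposes for which access is permitted.
Policy = Dict[Tuple[str, str], Set[str]]


def pdp_decide(policy: Policy, role: str, attribute: str, purpose: str) -> str:
    """Policy decision point: consult the policy and return a decision."""
    allowed = policy.get((role, attribute), set())
    return "Permit" if purpose in allowed else "Deny"


def pep_request(policy: Policy, records: Dict[str, str],
                role: str, attribute: str, purpose: str) -> str:
    """Policy enforcement point: release data only on a Permit decision."""
    if pdp_decide(policy, role, attribute, purpose) != "Permit":
        raise PermissionError(f"{role} may not use {attribute} for {purpose}")
    return records[attribute]


if __name__ == "__main__":
    policy = {("hotel", "dietary_requirements"): {"meal_planning"}}
    records = {"dietary_requirements": "vegetarian"}
    print(pep_request(policy, records, "hotel",
                      "dietary_requirements", "meal_planning"))
```

Because every access passes through `pep_request`, an auditor in this model only has to compare the policy table with the published privacy policy rather than inspect each data flow.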

To automate the auditing of source code, static code analysis techniques are explored to examine information flow and detect any potential privacy leaks [60,61]. Zimmeck et al. in their works [62,63] extended this static code analysis-based compliance checking by using machine learning techniques.

The problem of ensuring compliance between a privacy policy and the corresponding source code implementation is tackled from another angle by generating the privacy policies from the source code itself. Researchers have used AI-ML techniques to generate an entire privacy policy from a codebase [64,65]. Proof-carrying code (PCC) [66] and automated theorem provers are also explored in this context. However, this approach restricts the developers to using only a specific source code language.

In fact, even after auditing and verifying the source code, there remains a risk of malicious activity from data users. For instance, they can add malicious code elements after the audit operation has been completed. To solve the trust issue involved with continuous enforcement, Liu et al. propose to distribute the personal data among multiple brokers [67] using Shamir’s secret sharing technique. Each of the brokers is responsible for enforcing the privacy policies on their share.
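Shamir’s secret sharing, the primitive mentioned above, can be sketched as follows. This is a generic textbook illustration rather than the construction of Liu et al.; the field modulus, the function names, and the encoding of a personal data item as a single integer are assumptions made for this example.

```python
import secrets
from typing import List, Tuple

PRIME = 2**127 - 1  # field modulus (a Mersenne prime); secrets must be smaller


def split_secret(secret: int, threshold: int,
                 n_shares: int) -> List[Tuple[int, int]]:
    """Split `secret` so that any `threshold` of `n_shares` can rebuild it."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    if not 1 <= threshold <= n_shares:
        raise ValueError("need 1 <= threshold <= n_shares")
    # Random polynomial of degree threshold-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, n_shares + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation modulo PRIME
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Tuple[int, int]]) -> int:
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret
```

For example, `split_secret(age, 3, 5)` would give one share to each of five brokers, and any three of them could jointly recover the value, whereas fewer than three learn nothing about it.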

If most data brokers are honest, this method faithfully enforces the privacy policy. However, it requires enough honest data brokers to be present during the data access, which might not be practical. To mitigate this concern, the trustworthiness of a blockchain is explored, and Alansari [68] leverages smart contracts [69] to enforce policies.

Private data is often shared among multiple parties. In fact, sharing various private data in different sectors, such as health or finance, can lead to numerous benefits [70,71]. Moreover, there are situations where the sharing of collected private data is an essential requirement [72]. In this context, Iyilade et al. proposed an XML-like policy language called P2U [12] to provide a data owner with more control over their privacy.

Hails [73] is a web framework that combines a declarative policy language with an access control mechanism to enhance the privacy of the personal data being accessed by third-party applications hosted by a website. POLICHECK [74] is built on the concept of automated data-flow checking. Here, the receiving entity is also taken into account during the verification of the data flow, making it suitable for policy compliance checking where personal data is required to be shared among multiple parties.

Tracking and control of the data-sharing activities can be exercised by capturing the details of all data-sharing activities, using a trusted entity on some tamper-proof media like a blockchain [68]. Besides tracking data-sharing, it is also important to guarantee that all the data recipients process data in a privacy-preserving manner. Zeinab et al. propose a trust- and reputation-based monitoring mechanism [75]. It works based on the data owner’s complaints about the suspected privacy violations. Their solution automatically detects and penalizes any data users who use personal data for non-consensual purposes.

Personal data must not be shared unnecessarily. Moreover, when sharing the personal data with a third party, the primary data collector must only reveal the necessary subsets of the received data. On the other hand, the authenticity and integrity of personal data are also important concerns for a third-party data user. Recently, Li et al. used a redactable signature scheme for that purpose [76]. They propose a cloud framework for sharing health data with third parties after removing the sensitive parts of that data.

If personal data is available in plaintext form, even for a short time (e.g., while computations are performed on it), it cannot be hidden again: the data can be cached and covertly used later. Several cryptographic primitives have been proposed to enable computation on encrypted data without revealing plaintext values at all. Homomorphic encryption [77] allows computations on ciphertexts and returns the results as ciphertexts as well.
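To make the idea of computing on ciphertexts concrete, the following is a toy sketch of the Paillier cryptosystem, a well-known additively homomorphic scheme. It is not claimed to be the scheme of [77]; the function names and the choice of the two Mersenne primes as key material are assumptions for illustration only, and the parameters are far too small for real use. Multiplying two ciphertexts yields an encryption of the sum of the plaintexts.

```python
import math
import secrets


def keygen(p=2**31 - 1, q=2**61 - 1):
    # Toy key generation from two known primes (insecure sizes, demo only).
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse, requires Python 3.8+
    return n, (lam, mu)


def encrypt(n, m):
    # With generator g = n + 1, g^m mod n^2 equals 1 + m*n mod n^2.
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) % n2) * pow(r, n, n2) % n2


def decrypt(n, private_key, c):
    lam, mu = private_key
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    # Homomorphic addition: the product of ciphertexts encrypts the sum.
    return c1 * c2 % (n * n)


n, private_key = keygen()
c_sum = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))
print(decrypt(n, private_key, c_sum))
```

The party performing `add_encrypted` needs only the public modulus `n`; it never sees 20, 22, or their sum in plaintext.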

We have already discussed functional encryption, which allows computation of a specific function on encrypted data [78]. Unlike homomorphic encryption, it makes the result available in plaintext, allowing others to use the result of the computation. Similarly, multi-party computation [79] is a mature cryptographic primitive in which a function is computed jointly by multiple mutually untrusted parties, none of which needs to reveal its input to any other party. Although it suffers from a performance bottleneck [80], several improvements have been made [32].
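As a small illustration of joint computation without revealing inputs, the sketch below computes a sum using additive secret sharing. It is a simplified textbook construction, not the protocol of [79] or [32]; the modulus, the function names, and the simulation of all parties inside one process are assumptions for illustration, and it only protects against honest-but-curious parties.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo this public value


def share(value, n_parties):
    # Split a value into random shares that sum to it modulo MODULUS.
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def secure_sum(private_inputs):
    n = len(private_inputs)
    # distributed[i][j] is the share that party i sends to party j.
    distributed = [share(x, n) for x in private_inputs]
    # Each party j adds the shares it received and publishes only that total.
    partial_sums = [sum(distributed[i][j] for i in range(n)) % MODULUS
                    for j in range(n)]
    # Combining the published partial sums reveals the overall sum only.
    return sum(partial_sums) % MODULUS


print(secure_sum([3, 5, 34]))
```

Any single share is uniformly random, so a party learns nothing about another party's input from the share it receives; only the final sum is disclosed.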

Brands proposed a mechanism to encode different items of personal data into a structure called a digital credential [81]. With it, certain computations can be performed without revealing the encoded data as plaintext. It has also been extended to facilitate the sharing of personal data among different parties, enabling a delegator to authorize a delegatee to show only a selected subset of the encoded personal data [82].

Existing TEE-Based Solutions

Pure cryptographic schemes that allow computations on hidden data (e.g., homomorphic encryption and multi-party computation) are often impractical. Either they are expensive in terms of computation and communication overhead, or they permit only a limited, predefined set of computations on the non-plaintext data. To augment these cryptographic methods, TEE technology [83] can be used to perform computation on personal data without revealing it in plaintext. For example, a TEE can be used to implement functional encryption [84], yielding a performance improvement of up to 750,000× over the traditional cryptographic setting. Besides hiding data, the TEE also guarantees the integrity of the computation.

PESOS [85] is one of the earliest works on trusted execution environment (TEE)-based privacy policy enforcement. However, in contrast to BPPM, PESOS requires additional infrastructural components: it relies not only on a TEE but also on a Kinetic Open Storage system. An XACML-based access control system and a TEE have also been combined to ensure continued compliance [86]. Birrell et al. propose delegated monitoring [87], in which an enclave at the data user’s location verifies the identity and other credentials of requesting applicants before granting them access to any personal data. However, simply confirming the recipient’s identity may not be enough; the nature of the computation they are conducting is also crucial.

BPPM addresses this aspect but is not the first to explore it. To tackle this concern, TEEKAP [88] proposed a data-sharing platform that allows data recipients to compute only a predefined function on the received data. BPPM builds upon this by incorporating multiple layers of data recipients and introducing the concept of bidirectional privacy.

Zhang et al. propose a trusted and privacy-preserving data trading platform [89] in which access control to personal data is enforced by an enclave on the data user’s side. If access to the personal data is allowed, then the data processing application must also run within an enclave to ensure that the personal data is never revealed in plaintext. However, executing a very large application may cause problems for TEE-based solutions because of the enclave’s size limitations.

MECT [28] addressed this issue by dynamically creating multiple enclaves and using local attestation among them, an approach that proved to be inefficient and complex. In this context, Wagner et al. proposed combining additional hardware infrastructure, specifically a trusted platform module (TPM) [90], with a TEE to support a larger code space [86]. BPPM tackles the problem differently: code components are stored as encrypted dynamic libraries and loaded on demand. This approach not only avoids the need for additional hardware but also significantly enhances efficiency.

Executing application code within an enclave is essential to ensure that personal data remains undisclosed in plaintext. However, this approach alone does not sufficiently guarantee privacy. Malicious code could be crafted to expose private information directly as the output of the computation. Therefore, it is necessary to verify the privacy preservation properties of the code prior to its execution within the enclave.

With the concept of template code [28], a data owner can specify the only code that is permitted to execute on their personal data within the enclave. Nevertheless, this method requires the data owner to review the template code and the corresponding privacy parameters, which often demands a level of expertise and time that is impractical in many situations. BPPM addresses this issue by incorporating privacy auditors. In BPPM, a privacy auditor verifies the privacy properties of the code and provides a signature. The data owner only needs to verify this signature before allowing the code to execute at the data user’s end.

In contrast, we continue to depend on human auditors for the intricate task of verifying the privacy properties of source code. However, with the ability to reuse the same code, BPPM significantly reduces the auditors’ workload. Another practical concern in this area is that code providers may hesitate to disclose their source code even while allowing others to use their software as binaries. Furthermore, these binaries should not be shared unlawfully as that could undermine the profitability and motivation of the code provider. BPPM effectively mitigates these concerns by ensuring that all code binaries always remain encrypted and are tied to a specific TEE.

8. Conclusions

In web-service scenarios, private data may be shared among a hierarchy of service providers in a “layered” manner. We propose a solution framework called BPPM, designed to address several privacy-related challenges in these contexts. With BPPM, service users can be assured that their private data will never be disclosed in plaintext and will only be used for approved purposes. Additionally, BPPM streamlines the decision-making process for service users concerning data sharing by providing them with a consolidated list of proposed data processing statements, even when services are delivered through a complex hierarchy of service providers.

In addition to addressing various privacy concerns for service users, BPPM also safeguards the trade secrets of service providers. To ensure the service users’ privacy, service providers in BPPM are not required to disclose the identities or even the existence of their downstream sub-service providers (or suppliers), nor are they required to reveal the identities of their service users to these downstream providers. Nevertheless, the authenticity of the service users’ private data can still be verified downstream.

Additionally, BPPM addresses various practical challenges and offers multiple incentives for service providers to engage with and adopt this framework. For instance, it minimizes auditing costs by introducing a mechanism that allows for the use of pre-audited code in a manner that safeguards against piracy while also helping to mitigate data breaches and their associated expenses.

We conducted a formal analysis of the claimed security and privacy features of BPPM and demonstrated that it is secure within a UC framework. Furthermore, we implemented this framework and assessed its performance metrics, which indicate that, in comparison to a standard web service without privacy protections, BPPM offers numerous privacy features (and other benefits) with minimal overhead. These performance metrics further reinforce its viability for practical adoption.