Simple Summary

Human papillomavirus (HPV) status is a key prognostic and therapeutic marker in oropharyngeal squamous cell carcinoma (OPSCC). Although p16 immunohistochemistry is widely used as a surrogate test, its limited specificity often requires additional molecular analyses, increasing diagnostic time and costs. In this study, we applied a weakly supervised deep learning (CLAM) model to predict HPV status directly from routine hematoxylin and eosin (H&E) whole-slide images, without requiring manual annotations. Using 123 slides from two independent cohorts, the model achieved good classification accuracy and consistently targeted tumor-rich regions. Importantly, in several discordant cases, the model’s predictions aligned with HPV molecular results rather than p16 staining, suggesting biological relevance. A complementary morphometric analysis at the cellular level further confirmed the distinct nuclear features associated with HPV status. Overall, this work supports the feasibility of deep learning-based HPV prediction from standard pathology slides, offering a potential complementary tool to streamline HPV testing in routine diagnosis.

Abstract

Background: Human papillomavirus (HPV) plays a crucial role in the pathogenesis of oropharyngeal squamous cell carcinomas (OPSCC). Accurate HPV status classification is essential for therapeutic stratification. While p16 immunohistochemistry (IHC) is the clinical surrogate marker, it has limited specificity. Methods: In this study, we implemented a weakly supervised deep learning approach using the Clustering-constrained Attention Multiple-Instance Learning (CLAM) framework to directly predict HPV status from hematoxylin and eosin (H&E)-stained whole-slide images (WSIs) of OPSCC. A total of 123 WSIs from two cohorts (The Cancer Genome Atlas (TCGA) cohort and OPSCC cohort from the University of Naples Federico II (OPSCC-UNINA)) were used. Results: Attention heatmaps revealed that the model predominantly focused on tumor-rich regions. Errors were primarily observed in slides with conflicting p16/in situ hybridization (ISH) status or suboptimal quality. Morphological analysis of high-attention patches confirmed that cellular features extracted from correctly classified slides align with HPV status, with a Random Forest classifier achieving 83% accuracy at the cell level. Conclusions: This work supports the feasibility of deep learning-based HPV prediction from routine H&E slides, with potential clinical implications for streamlined, cost-effective diagnostics.

Keywords:

HPV; OPSCC; deep learning; weakly supervised learning; CLAM; whole-slide imaging; digital pathology

1. Introduction

1.1. Human Papillomavirus (HPV) in Head and Neck Cancer

Oropharyngeal squamous cell carcinoma (OPSCC), a tumor that primarily affects the tonsils, base of the tongue, soft palate, and uvula, is undergoing one of the most significant etiological transformations in head and neck oncology [1]. Until a few decades ago, OPSCC was closely linked to traditional risk factors (smoking and alcohol), but today human papillomavirus (HPV) infection has become the predominant etiological agent. This rapid transition is particularly evident in Western countries, such as North America and Northern Europe, where HPV-positive OPSCC has become the prevalent form, now accounting for 60–80% of all OPSCC cases [1,2]. European epidemiological data confirm the extent of this change, documenting a three-fold increase in the incidence of HPV-positive OPSCC in males over the past twenty years [1]. Patients with HPV-positive OPSCC exhibit distinct clinical features, including higher response rates to therapy, improved progression-free survival, and better overall survival (OS) than patients with HPV-negative tumors [2,3,4,5]. Given the more favorable prognosis of HPV-positive tumors and the substantial side effects of multimodal treatments, numerous clinical trials have investigated treatment de-escalation [6,7,8,9,10]. Consequently, accurate identification of HPV status is essential for appropriate therapeutic stratification. Currently, the standard approach relies on immunohistochemical (IHC) detection of p16, a surrogate marker for HPV, as recommended by the 8th edition of the American Joint Committee on Cancer (AJCC) staging system [11]. However, although p16 IHC has high sensitivity (0.97), its specificity (0.84) remains suboptimal [12]. For this reason, p16 IHC is commonly combined with molecular assays, such as HPV DNA or RNA in situ hybridization (ISH) and PCR-based tests, to determine HPV status more accurately [13,14]. 
These techniques, while useful, remain time-consuming, expensive, and not universally available. Therefore, new, complementary strategies are needed that leverage morphological information to achieve reliable HPV identification.

1.2. Artificial Intelligence for HPV Status Prediction from H&E Slides

Artificial intelligence (AI) and computational pathology have emerged as promising tools to address these diagnostic challenges [15,16]. Several reviews have highlighted the growing number of AI-based approaches developed for HPV status prediction in head and neck cancers, emphasizing their potential to complement, or reduce reliance on, expensive and time-consuming molecular testing [17,18,19,20]. In particular, deep learning models applied to hematoxylin and eosin (H&E) whole-slide images (WSIs) have shown an ability to capture subtle morphological patterns associated with HPV-induced carcinogenesis, features that may be difficult to recognize consistently through visual assessment alone. Building on this concept, Klein et al. developed a convolutional neural network (CNN)-based model that generated an HPV prediction score directly from H&E-stained slides of OPSCC, reporting good diagnostic performance and showing that the histology-derived score stratified patient prognosis beyond conventional clinicopathological factors [21]. More recently, Wang et al. [22] proposed a deep learning algorithm for predicting HPV infection from routine histology images in a large cohort of head and neck cancers, reporting robust discrimination between HPV-positive and HPV-negative tumors. In our previous work, we addressed HPV status prediction with a handcrafted machine learning approach based on single-cell morphometric features extracted from manually annotated H&E regions of OPSCC cases. The best classifier, with an accuracy above 90%, was obtained by training on cases that were positive for both p16INK4a immunostaining and HPV DNA by ISH/INNO-LiPA® [23]. That study demonstrated that HPV infection is associated with reproducible morphological signatures observable at the single-cell level, supporting the biological plausibility of morphology-based prediction. 
Such annotation-based approaches, like the more recent fully supervised models, cope well with the high heterogeneity of a histological slide (which contains both tumor and non-tumor tissue) because they rely on detailed annotations that isolate the tumor region of interest (ROI). However, this reliance is also their main limitation: extensive manual annotation is costly in time and resources. To address this limitation, we investigated a weakly supervised deep learning approach that requires only slide-level labels. To this end, we implemented Clustering-constrained Attention Multiple-Instance Learning (CLAM) [24] to directly predict HPV status from WSIs of H&E-stained OPSCC. CLAM is a well-known weakly supervised framework that uses an attention mechanism to identify and focus on the most predictive histological areas. This mechanism not only enables classification without explicit ROI annotation but also makes the model interpretable, allowing the histological patterns that drive the prediction to be localized. In this study, we tested the feasibility of using CLAM to predict HPV status directly from H&E-stained WSIs of OPSCC cases, and we further analyzed, using an auxiliary feature-based classifier, whether high-attention regions correspond to morphologically relevant cell types.

2. Materials and Methods

2.1. Data Collection

We collected H&E-stained WSIs from two distinct cohorts. The first cohort is from The Cancer Genome Atlas-Head and Neck Squamous Cell Carcinoma (TCGA-HNSC) and includes 10 WSIs of OPSCC. HPV status for these cases was determined using both p16INK4a immunohistochemistry (IHC) and in situ hybridization (ISH), with five cases classified as HPV-positive and five as HPV-negative. The second cohort, named OPSCC-UNINA, includes 113 histological slides of OPSCC obtained from the archives of the Pathology Unit of the University “Federico II” of Naples. The HPV infection status of these specimens was determined by p16INK4a IHC. In a subset of cases, additional molecular confirmation was available via ISH/INNO-LiPA®. The detailed methodology for both techniques has been previously described [23]. However, to ensure homogeneity in labeling for training the deep learning model, HPV status determined by p16 IHC was used as ground truth for all UNINA samples. Among the OPSCC-UNINA cases, 41 were HPV-positive and 72 were HPV-negative. For external validation, we used an independent set of 35 HPV-negative WSIs from UNINA, which were entirely separate from the 113 cases included in the OPSCC-UNINA training cohort. The full lists of cases analyzed in the present study are in Supplementary File S1.

2.2. Slide Digitization

The histological slides of the OPSCC-UNINA cohort were digitized using a Leica Aperio AT2 scanner (Leica Biosystems Imaging, Vista, CA, USA) at 20× magnification. Before scanning, each slide was carefully cleaned with solvent and sterile gauze to remove any contaminants or artifacts that could compromise image quality. This ensured optimal conditions for image analysis before model training.

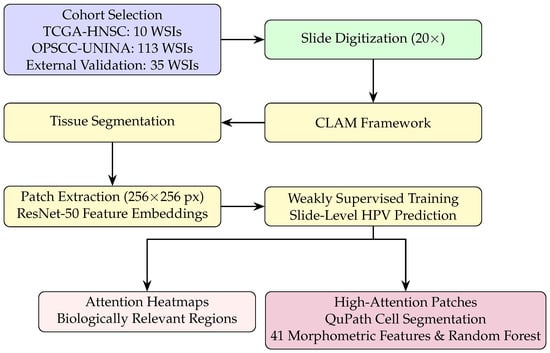

2.3. Workflow Overview

Figure 1 summarizes the full analytical pipeline, including cohort selection, WSI preprocessing, weakly supervised learning, and attention-guided morphometric validation.

Figure 1.

Workflow of the CLAM-based weakly supervised analysis with preprocessing, slide-level classification, and attention-guided morphometric validation.

2.4. Computational Framework: CLAM

2.4.1. Overview

To implement a weakly supervised learning approach for whole-slide image (WSI) classification, we adopted the Clustering-constrained Attention Multiple-Instance Learning (CLAM) framework [24]. This approach enables slide-level predictions without patch- or region-of-interest (ROI)-level annotations. We used the official implementation released by the Mahmood Lab (https://github.com/mahmoodlab/CLAM (accessed on 1 October 2024)). During preprocessing, WSIs were first subjected to tissue segmentation to exclude background areas and then subdivided into non-overlapping patches of 256 × 256 pixels. Each patch was converted into a 1024-dimensional feature vector using a ResNet-50 network pre-trained on ImageNet. During training, the model assigns each patch an attention score that determines its contribution to the slide-level representation. This representation is computed by attention pooling, i.e., a weighted sum of the patch feature vectors in which each vector is weighted by its normalized attention score. CLAM also includes an instance-level clustering component: the patches with the highest and lowest attention scores are treated as pseudo-labeled evidence and separated into distinct clusters by a patch-level classifier. The overall loss function is a weighted combination of the slide-level classification loss and this patch-level clustering loss.
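The attention-pooling step described above can be illustrated with a small NumPy sketch. It assumes the gated-attention form of CLAM's single-branch model, a hidden size of 256, k = 8 top- and bottom-attended patches for the clustering loss, and a slide-loss weight of 0.7; these values are our assumptions (modelled on what we understand to be the repository defaults), not settings reported in this study. The random matrices stand in for learned weights, and the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def gated_attention_pool(h, V, U, w):
    """Gated attention pooling over N patch embeddings.

    h: (N, D) patch features; V, U: (D, L) projections; w: (L,) attention vector.
    Returns the (D,) slide embedding and the (N,) softmax attention weights.
    """
    gate = np.tanh(h @ V) * (1.0 / (1.0 + np.exp(-(h @ U))))  # tanh branch * sigmoid gate
    scores = gate @ w                                          # raw attention score per patch
    scores = scores - scores.max()                             # numerical stability
    a = np.exp(scores) / np.exp(scores).sum()                  # softmax over patches
    return a @ h, a                                            # attention-weighted sum

def top_bottom_k(a, k):
    """Indices of the k most- and k least-attended patches (pseudo-labeled evidence)."""
    order = np.argsort(a)
    return order[-k:][::-1], order[:k]

def total_loss(slide_loss, instance_loss, bag_weight=0.7):
    """Weighted sum of the slide-level loss and the patch-level clustering loss."""
    return bag_weight * slide_loss + (1.0 - bag_weight) * instance_loss

# Toy slide: 500 random 1024-d "patch features" and random (untrained) weights.
N, D, L = 500, 1024, 256
h = rng.normal(size=(N, D))
V = rng.normal(scale=0.01, size=(D, L))
U = rng.normal(scale=0.01, size=(D, L))
w = rng.normal(scale=0.1, size=L)
slide_emb, att = gated_attention_pool(h, V, U, w)
top, bottom = top_bottom_k(att, k=8)
```

In the actual framework these weights are trained end to end in PyTorch; the sketch only shows how per-patch scores collapse into a single 1024-dimensional slide embedding and which patches would feed the clustering loss.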

2.4.2. Performance Evaluation and Interpretability

Training was conducted with a 10-fold Monte Carlo cross-validation scheme: for each fold, the dataset was randomly split into 80% training, 10% validation, and 10% testing, stratified by class. Optimization was performed using the Adam algorithm with a learning rate of and early stopping after 20 consecutive epochs without improvement in the validation loss, up to a maximum of 200 epochs. A batch size of 1 (one slide per batch) was used, and no data augmentation was applied. At the end of each fold, model performance was evaluated in terms of accuracy (ACC) and area under the ROC curve (AUC) on both the validation and test sets, using the official scripts provided by the CLAM framework. Finally, to improve interpretability, attention heatmaps were generated to highlight the regions of the slide that most influenced the prediction.
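The split and stopping rules above can be written out in plain Python. This is a hedged sketch rather than the CLAM code (whose own split-creation and early-stopping utilities differ in detail); the function names and the seed are hypothetical. The usage example uses the pooled class counts implied by Section 2.1 (46 HPV-positive and 77 HPV-negative slides).

```python
import random
from collections import defaultdict

def monte_carlo_splits(labels, n_folds=10, frac=(0.8, 0.1, 0.1), seed=1):
    """Yield (train, val, test) index lists. Each fold is an independent,
    class-stratified random split, so test sets may overlap across folds."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    rng = random.Random(seed)
    for _ in range(n_folds):
        train, val, test = [], [], []
        for members in by_class.values():
            idx = members[:]            # copy before shuffling
            rng.shuffle(idx)
            n_val = round(frac[1] * len(idx))
            n_test = round(frac[2] * len(idx))
            val += idx[:n_val]
            test += idx[n_val:n_val + n_test]
            train += idx[n_val + n_test:]
        yield train, val, test

def early_stop_epoch(val_losses, patience=20, max_epochs=200):
    """Return the 0-based epoch at which training stops: after `patience`
    epochs without improvement in validation loss, or at the epoch cap."""
    best, wait = float("inf"), 0
    for epoch, loss in enumerate(val_losses[:max_epochs]):
        if loss < best:
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return min(len(val_losses), max_epochs) - 1

labels = [1] * 46 + [0] * 77          # 1 = HPV-positive, 0 = HPV-negative
folds = list(monte_carlo_splits(labels))
train, val, test = folds[0]
```

Because each fold is drawn independently, a slide can land in the test set of several folds while others are never tested, and in this sketch each test split holds only 13 slides, which illustrates why fold-level metrics can fluctuate strongly.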

2.5. Feature Evaluation

To assess whether high-attention regions reflected morphologically significant differences, we conducted a supervised cell-level analysis on high-attention patches from slides that the CLAM model classified correctly (true positives and true negatives). A total of 1230 high-attention patches were selected and analyzed using QuPath (v0.6.0-rc3) [25]. Each patch inherited the slide-level HPV label, and cells were detected using QuPath’s cell detection module with adjusted parameters. A total of 133,125 cells were segmented and characterized by 41 morphological and staining-related features.
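A minimal sketch of the patch-selection and label-inheritance step follows. The per-slide record layout and the fixed number of patches per slide (k) are hypothetical assumptions for illustration; the study does not specify how many patches were taken from each slide.

```python
def select_high_attention(slides, k=10):
    """slides: dict slide_id -> {"label": str, "correct": bool,
    "patches": list of (patch_id, attention_score)}.
    Returns (slide_id, patch_id, label) for the k highest-attention patches of
    each correctly classified slide; every patch inherits its slide's label."""
    selected = []
    for sid, s in slides.items():
        if not s["correct"]:
            continue  # keep only true-positive / true-negative slides
        top = sorted(s["patches"], key=lambda p: p[1], reverse=True)[:k]
        selected += [(sid, pid, s["label"]) for pid, _ in top]
    return selected

# Hypothetical toy records.
toy = {
    "S1": {"label": "HPV+", "correct": True,
           "patches": [("p1", 0.1), ("p2", 0.7), ("p3", 0.2)]},
    "S2": {"label": "HPV-", "correct": False, "patches": [("q1", 0.9)]},
}
selected = select_high_attention(toy, k=2)
```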

2.5.1. Morphological Feature-Based Classification

We trained a Random Forest classifier on the extracted features to distinguish HPV-positive from HPV-negative cells. The model, implemented in Python 3.10 with the scikit-learn library, used 500 trees and otherwise default hyperparameters. The analysis followed the approach previously described and validated by Varricchio et al. [23]. Source code was adapted from the official scikit-learn documentation: https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html (accessed on 1 October 2024).
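The classifier configuration above (500 trees, otherwise scikit-learn defaults) can be sketched on synthetic data. The random 41-feature table, the injected class signal, and the 80/20 split are illustrative assumptions, not the study's data or evaluation protocol.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the cell table: 41 features per cell (random values).
rng = np.random.default_rng(0)
n_cells, n_features = 2000, 41
X = rng.normal(size=(n_cells, n_features))
y = rng.integers(0, 2, size=n_cells)   # 0 = HPV-negative, 1 = HPV-positive
X[y == 1, :3] += 1.5                   # synthetic class signal in features 0-2 only

X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

# 500 trees; all other hyperparameters left at scikit-learn defaults.
clf = RandomForestClassifier(n_estimators=500, random_state=0)
clf.fit(X_tr, y_tr)
print(classification_report(y_te, clf.predict(X_te),
                            target_names=["HPV-negative", "HPV-positive"]))

# Impurity-based importances, highest first (cf. the ranking in Figure 6).
ranking = np.argsort(clf.feature_importances_)[::-1]
print("most important feature indices:", ranking[:5])
```

A random cell-level split lets cells from the same slide appear in both the training and test sets; scikit-learn's `GroupShuffleSplit`, with slide IDs as groups, is one way to keep each slide on a single side of the split.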

2.5.2. Computational Environment

All experiments were conducted on a Windows workstation equipped with an NVIDIA RTX A2000 GPU (12 GB VRAM), Intel(R) Xeon(R) W5-3465X CPU, and 64 GB of RAM.

3. Results

3.1. CLAM Model Performance on Internal Cross-Validation

We trained a CLAM model on 123 whole-slide images (WSIs) from two cohorts: OPSCC-UNINA (n = 113) and TCGA (n = 10). In the 10-fold Monte Carlo cross-validation, the CLAM model showed modest performance with substantial variability across folds (Table 1). The average test area under the curve (AUC) was 0.5324 and the average test accuracy was 56.5%, whereas validation performance was consistently higher, with an average AUC of 0.7178 and an average accuracy of 78.2%. Fold 6 achieved a perfect test AUC of 1.0 and the highest test accuracy (80.0%), indicating complete class separation within that particular test split (Table 1).

Table 1.

Performance metrics of the CLAM model across the 10-fold cross-validation. For each fold, the table reports the test and validation AUC, along with the corresponding test and validation accuracy values. The last row summarizes the average performance across all folds.

3.2. Global Classification and Probability Analysis

For each WSI, the model outputs the predicted class together with the associated probabilities for the HPV-positive and HPV-negative labels. Overall, 78.9% of WSIs were correctly classified (Table 2), most of them with high confidence. The remaining 21% (26 of 123 WSIs; 23 from the OPSCC-UNINA dataset and 3 from TCGA) were misclassified (Figure 2) (Supplementary File S2).
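As an arithmetic check, the overall figure follows directly from the misclassification counts given above (23 of 113 OPSCC-UNINA slides and 3 of 10 TCGA slides). The per-cohort percentages below are derived from those counts, not copied from Table 2.

```python
# (total WSIs, misclassified WSIs) per cohort, as reported in the text
cohorts = {"OPSCC-UNINA": (113, 23), "TCGA": (10, 3)}

accuracy = {}
for name, (n, wrong) in cohorts.items():
    accuracy[name] = (n - wrong) / n
    print(f"{name}: {n - wrong}/{n} correct = {100 * accuracy[name]:.1f}%")

n_total = sum(n for n, _ in cohorts.values())
n_wrong = sum(w for _, w in cohorts.values())
overall = (n_total - n_wrong) / n_total
print(f"Overall: {n_total - n_wrong}/{n_total} correct = {100 * overall:.1f}%")
# OPSCC-UNINA: 90/113 correct = 79.6%
# TCGA: 7/10 correct = 70.0%
# Overall: 97/123 correct = 78.9%
```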

Table 2.

Summary of classification results on the two datasets. The table reports, for each cohort, the total number of WSIs, the number correctly classified by the CLAM model, the number of misclassified slides, and the corresponding classification accuracy. The final row provides the aggregated results across both datasets.

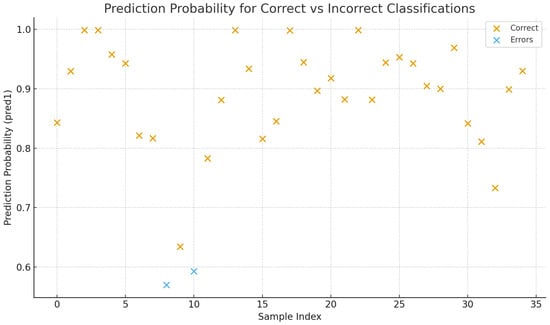

Figure 2.

Scatter plot showing the model’s prediction probabilities for the external test set. Each point represents one of the 35 HPV-negative WSIs. Orange markers indicate slides correctly classified as HPV-negative, while blue markers highlight the slides misclassified as HPV-positive. The misclassified slides display borderline confidence values (0.593 and 0.570), consistent with predictions near the decision threshold. One of these slides contained a large air bubble.

Among the 26 misclassified WSIs, several recurring patterns were identified. In four WSIs, the predicted probabilities were close to the classification threshold, indicating uncertainty in the decision; these borderline cases showed nearly equal probabilities for the HPV-positive and HPV-negative classes (e.g., 0.512 vs. 0.487). In four other WSIs, the model predictions were discordant with the IHC-based labels but concordant with INNO-LiPA results. These included samples labeled HPV-positive by IHC but HPV-negative by INNO-LiPA, which the model also predicted as negative. This observation suggests that p16 IHC, while commonly used as a surrogate marker, may occasionally yield false-positive classifications, and that computational models could help flag ambiguous or potentially mislabeled cases when a single biomarker is used. Six additional WSIs had significant artifacts, such as tissue folds, tears, or glass markers, which likely impaired the model’s ability to extract relevant histological features. Notably, all three misclassified slides from the TCGA cohort fell into this category, suggesting a link between technical quality and misclassification.
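Borderline cases like these could be flagged automatically for review. The sketch below assumes a 0.5 decision threshold and a hypothetical review margin; neither the margin nor the helper is part of the study, and the slide IDs are invented.

```python
def flag_borderline(prob_pos, threshold=0.5, margin=0.05):
    """Return sorted IDs of slides whose HPV-positive probability lies within
    `margin` of the decision threshold, i.e. candidates for manual review."""
    return sorted(sid for sid, p in prob_pos.items() if abs(p - threshold) < margin)

# Hypothetical slide IDs; 0.512 mirrors the borderline example in the text,
# 0.593 and 0.570 mirror the two misclassified external slides.
probs = {"A": 0.512, "B": 0.93, "C": 0.08, "D": 0.593, "E": 0.570}
print(flag_borderline(probs))               # ['A']
print(flag_borderline(probs, margin=0.10))  # ['A', 'D', 'E']
```

The margin trades review workload against missed uncertain cases: with a 0.05 margin, the two misclassified external slides would not have been flagged, whereas a 0.10 margin captures them.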

3.3. External Test Set Performance

The trained model was evaluated on an independent test set of 35 HPV-negative WSIs. The model correctly classified 33 out of 35 cases, yielding an accuracy of 94.3%. Most correctly classified slides had strong negative probabilities (80–90%). The two misclassified slides were predicted as HPV-positive with probabilities of 0.593 and 0.570, both of which are close to the classification threshold. One of these slides contained a large air bubble, likely compromising the feature extraction process (Supplementary File S3).

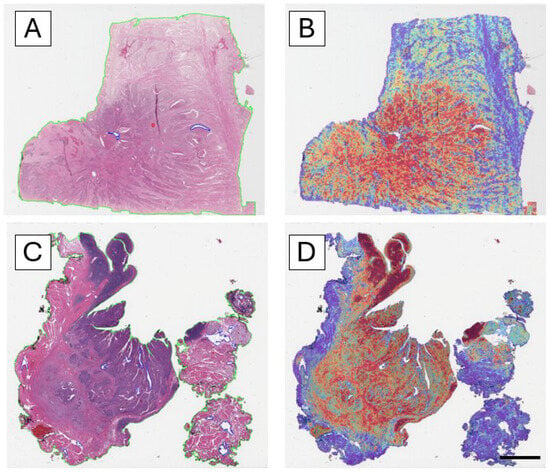

3.4. Interpretability Through Attention Maps

Analysis of the attention heatmaps showed that the model consistently focused on tumor-rich regions, particularly in correctly classified cases (Figure 3). This behavior was observed in both the training and external datasets, consistent with the hypothesis that the model learns biologically relevant features for HPV status classification.
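For readers unfamiliar with how such maps are built, the sketch below places per-patch attention scores on a coarse grid and rank-normalizes them to [0, 1]. It is a simplified illustration under stated assumptions (non-overlapping 256-pixel patches, top-left patch coordinates, percentile normalization), not the heatmap code of the CLAM repository, which offers further options such as overlapping patches and blending with the H&E image.

```python
import numpy as np

def attention_heatmap(coords, scores, patch_size=256):
    """Return a 2-D grid with one cell per patch position.

    coords: (N, 2) top-left (x, y) pixel coordinates of each patch.
    scores: (N,) raw attention scores, converted to percentile ranks in [0, 1].
    Grid cells without a patch (background) are NaN.
    """
    cells = np.asarray(coords) // patch_size       # patch-grid indices
    ranks = np.argsort(np.argsort(scores))         # rank of each score
    pct = ranks / max(len(scores) - 1, 1)          # 0 = lowest, 1 = highest
    grid = np.full((cells[:, 1].max() + 1, cells[:, 0].max() + 1), np.nan)
    grid[cells[:, 1], cells[:, 0]] = pct           # rows = y, columns = x
    return grid

grid = attention_heatmap([(0, 0), (256, 0), (0, 256)], [0.2, 0.9, 0.1])
print(grid)
```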

Figure 3.

Interpretability of the model through attention heatmaps. Attention maps use a color scale ranging from blue (low attention/probability) to red (high attention/probability), where red highlights the regions that most strongly contribute to the model’s prediction. (A) H&E whole-slide image of a correctly classified HPV-negative case. (B) Corresponding attention heatmap showing high-attention areas (red–yellow) concentrated in tumor-rich regions. (C) H&E whole-slide image of a correctly classified HPV-positive case. (D) Corresponding attention heatmap, again highlighting strong attention over tumor regions. Across both negative and positive cases, the model consistently focuses on tumor-rich regions, indicating that it learns morphologically relevant patterns for HPV status prediction (scale bar: 50 pixel size).

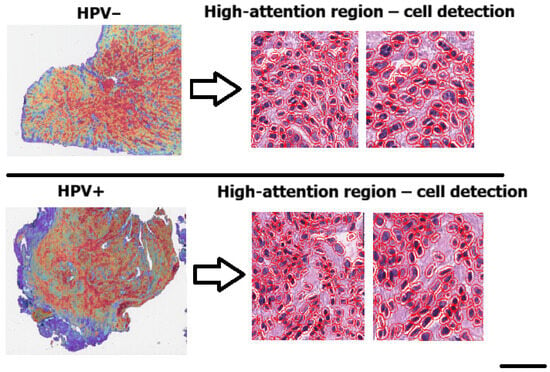

3.5. Cell-Level Analysis

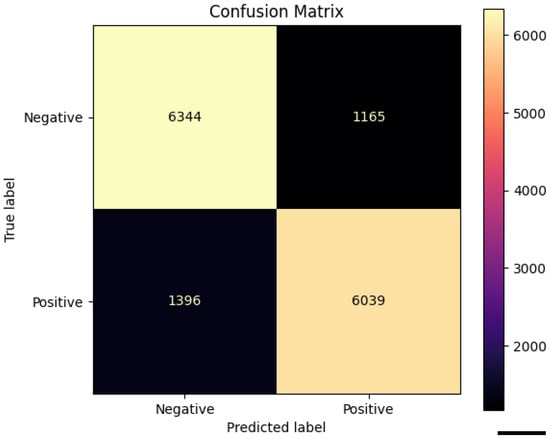

To further evaluate whether the CLAM model was focusing on biologically meaningful regions, we performed a cell-level morphometric analysis restricted to the high-attention patches. Specifically, we extracted only the patches corresponding to the highest-attention areas (red regions in the CLAM heatmaps) and applied QuPath v0.6.0 to detect cells within these regions (Figure 4). For each detected cell, QuPath provided 41 morphological and staining-related features, and each cell inherited the HPV label of the high-attention patch from which it was extracted. We trained a Random Forest classifier on a class-balanced dataset of 74,718 cells (37,359 per class). The model achieved an overall accuracy of 82.9%, with a precision of 84%, a recall of 81%, and an F1-score of 0.83 for the HPV-positive class (Table 3). The confusion matrix (Figure 5) shows comparable performance for the HPV-positive and HPV-negative classes, supporting the presence of robust morphometric signals that differentiate HPV-related tumors.
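Two steps in this paragraph can be made concrete: balancing the classes by random undersampling (our assumption about how balance was achieved, since the text does not say) and deriving precision, recall, and F1 from confusion-matrix counts. The true-negative and true-positive counts come from Figure 5; the false-positive and false-negative counts are hypothetical, obtained by assuming 7,472 held-out cells per class. Under that assumption the counts are consistent with the reported metrics, but this is a consistency check, not a reported result.

```python
import random

def undersample(labels, seed=0):
    """Return sorted indices of a class-balanced subset in which every class
    is randomly reduced to the size of the smallest class."""
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    n_min = min(len(idx) for idx in by_class.values())
    rng = random.Random(seed)
    keep = []
    for idx in by_class.values():
        keep.extend(rng.sample(idx, n_min))
    return sorted(keep)

def binary_metrics(tp, fp, fn, tn):
    """Accuracy plus precision, recall, and F1 for the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return accuracy, precision, recall, f1

# TN = 6344 and TP = 6039 are from Figure 5. FP and FN are HYPOTHETICAL: they
# assume 7,472 held-out cells per class, which the study does not report.
acc, prec, rec, f1 = binary_metrics(tp=6039, fp=7472 - 6344, fn=7472 - 6039, tn=6344)
print(f"accuracy={acc:.3f} precision={prec:.2f} recall={rec:.2f} F1={f1:.2f}")
# accuracy=0.829 precision=0.84 recall=0.81 F1=0.83
```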

Figure 4.

Cell detection performed with QuPath within the high-attention patches (red regions in the CLAM heatmaps). These regions were used to extract single-cell morphometric features for the Random Forest analysis (scale bar: 50 pixel size).

Table 3.

Classification performance of the Random Forest model at cell level. Summary of the classification metrics computed for the Random Forest model. The table reports precision, recall, and F1-score for each class (HPV-negative and HPV-positive), as well as macro and weighted averages. The overall accuracy of the model is indicated below the table.

Figure 5.

Confusion matrix of the Random Forest classifier trained on 41 cell-level morphometric features extracted from high-attention regions selected by CLAM. The model shows comparable performance across classes (6344 true negatives and 6039 true positives), supporting the presence of consistent cell-level morphological signatures underlying the weakly supervised model’s predictions.

4. Discussion

Predicting HPV status directly from H&E-stained slides using deep learning is a promising frontier in computational pathology. Building on our previous work with handcrafted morphological features, which distinguished HPV-positive from HPV-negative OPSCC with high accuracy and highlighted the complementary nature of p16 IHC and molecular testing, we sought to explore a more scalable, annotation-free approach. In this study, we employed the CLAM framework, a weakly supervised deep learning model, to classify HPV status using only slide-level labels. The model achieved an overall classification accuracy of 78.9% on the internal dataset and correctly classified 94.3% of an independent set of HPV-negative slides. These findings support the feasibility of extracting biologically relevant features from routine H&E slides without manual region-of-interest annotation.

The attention maps generated by the CLAM model consistently highlighted tumor-rich epithelial regions, indicating that these areas contributed most to the slide-level HPV prediction. High-attention zones corresponded to morphologically distinctive regions, often characterized by cohesive tumor nests with atypical nuclei and reduced keratinization—features typically associated with HPV-related OPSCC. This spatial localization suggests that the model prioritizes biologically relevant structures rather than stromal or artefactual components, providing a visual representation of the regions driving the classification.

Despite these encouraging results, cross-validation performance was variable (mean test AUC: 0.5324), with one fold achieving perfect discrimination (AUC = 1.0; accuracy = 80%). In particular, validation and test performance diverged markedly (mean validation AUC: 0.7178 vs. mean test AUC: 0.5324), and test AUC values ranged widely across folds (0.18–1.00, Table 1). This variability is likely due to the limited number of slides per fold (with a 10% test split, each fold is tested on only about 12 of the 123 slides), the intrinsic heterogeneity of OPSCC, and occasional image artefacts, all of which may have affected model performance.

Moreover, our cross-validation strategy relied on random slide-level splits, which may have further amplified these fluctuations. Label noise may also have contributed: HPV status was assigned mainly by p16 IHC, so occasional p16-positive but truly HPV-negative tumours may have been mislabelled, increasing performance variability. In this context, the perfect AUC observed in fold 6 most likely reflects a particularly favourable distribution of clearly positive and clearly negative cases rather than a genuinely superior model. Overall, these findings highlight the need for larger, multi-institutional cohorts and more specific, harmonised ground-truth definitions to obtain more stable estimates of weakly supervised model performance. Recent studies have reported higher performance, including Klein et al. (AUC = 0.80) [26], Wang et al. (AUC = 0.8371) [22], and Adachi et al. (AUC = 0.905) [27]; comparative tables with previously published works are provided in Supplementary File S4. However, direct comparison with these studies is not straightforward, as they differ substantially in cohort size, population structure, and evaluation strategy. While these studies often rely on larger or more homogeneous datasets and standardized workflows, our contribution is to show that weak supervision remains feasible in the absence of manual annotations, producing interpretable attention maps and identifying cases that may be misclassified by p16 IHC under real-world conditions.

Indeed, in four misclassified cases, model predictions were discordant with p16 status but aligned with the INNO-LiPA results, suggesting that the model may detect histological cues more closely associated with actual HPV infection. Such findings reinforce the notion that computational models can serve as a second opinion or flag potentially misclassified cases when relying solely on p16. Technical artifacts also contributed to misclassification. Slides with tissue folds, air bubbles, or annotation markers often impaired model performance, underscoring the need for quality control as an integral component of digital pathology workflows. To enhance model interpretability, attention heatmaps were generated, which consistently localized to tumor-rich areas.

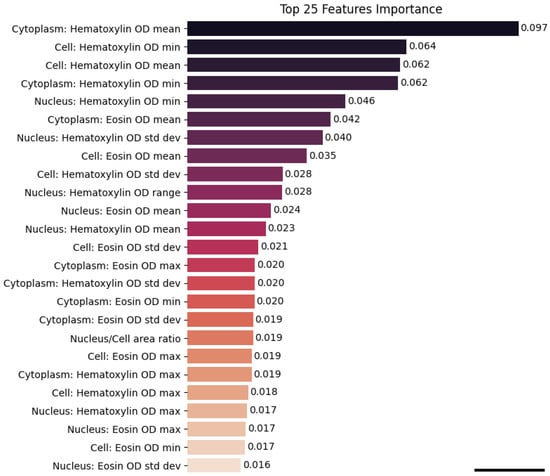

To further investigate the nature of these regions, we extracted 1230 high-attention patches and performed a supervised cell-level morphological analysis using QuPath. The Random Forest classifier, trained on a class-balanced subset (74,718 cells) of the approximately 133,000 segmented cells, achieved an accuracy of 82.9%. Feature importance analysis revealed that the most influential variables were colorimetric, particularly haematoxylin optical density metrics associated with nuclear morphology and chromatin texture (Figure 6). Notably, these same features were also top contributors in our prior feature-based machine learning model, which was trained on manually annotated cells, suggesting strong biological consistency between the traditional and deep learning approaches [23].

Figure 6.

Feature importance ranking from the Random Forest classifier trained on 41 cell-level morphometric and staining-related features. The plot displays the top 25 features, highlighting their relative contribution to the classification of HPV-positive and HPV-negative cells. Notably, haematoxylin-related nuclear metrics emerge as the most influential predictors.

Nuclear chromatin structure and hematoxylin optical density, the morphometric characteristics that our analysis found to be most discriminative, are consistent with the known biological differences between HPV-positive and HPV-negative OPSCC [28,29,30,31]. The fact that these biologically grounded nuclear features were consistently ranked as the most important predictors in the Random Forest model, and were concentrated within the high-attention CLAM regions, supports the interpretation that the weakly supervised classifier captures genuine HPV-associated nuclear phenotypes rather than spurious visual patterns. This congruence between known disease biology and computational features increases the model’s interpretability in a clinical setting and supports the biological plausibility of its predictions.

Overall, our findings support the potential of weakly supervised deep learning to deliver accurate and interpretable predictions of HPV status in OPSCC. The reproducibility of key morphometric features, the alignment with molecular results in ambiguous cases, and the localization of model attention to biologically plausible regions are all encouraging indicators of a robust pipeline.

The introduction of weakly supervised deep learning models into HPV diagnostic workflows also requires careful recalibration of diagnostic cut-off thresholds. Traditional thresholds optimized for p16 IHC or molecular assays may not fully capture the confidence distributions generated by AI-based classifiers. Establishing method-specific and biologically informed cut-offs will therefore be essential to ensure reliable integration of these models into routine OPSCC diagnostics [32,33]. For clinical adoption, however, seamless integration into laboratory workflows remains essential. The recent framework proposed by Angeloni et al. [34], which enables HL7-based communication between AI systems and laboratory information systems (LIS), represents a critical step toward deployment. Equally important is integrating predictive outputs—such as heatmaps or confidence scores—into widely used platforms like QuPath to support daily diagnostic utility.
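One generic, widely used way to set a method-specific cut-off is to maximize Youden's J statistic (sensitivity + specificity − 1) on a labeled validation set. The sketch below illustrates it with hypothetical labels and scores; it is offered as one example of threshold recalibration, not as the procedure proposed in [32,33] or used in this study.

```python
def youden_threshold(y_true, p_pos):
    """Return (cut-off, J) maximizing Youden's J = sensitivity + specificity - 1,
    predicting positive when p >= cut-off. Candidates are the observed scores."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    best_t, best_j = 0.5, -1.0
    for t in sorted(set(p_pos)):
        tp = sum(1 for y, p in zip(y_true, p_pos) if y == 1 and p >= t)
        tn = sum(1 for y, p in zip(y_true, p_pos) if y == 0 and p < t)
        j = tp / n_pos + tn / n_neg - 1
        if j > best_j:
            best_t, best_j = t, j
    return best_t, best_j

# Hypothetical validation labels (1 = HPV-positive) and model probabilities.
y = [0, 0, 0, 1, 1, 1]
p = [0.10, 0.30, 0.55, 0.60, 0.80, 0.90]
print(youden_threshold(y, p))  # (0.6, 1.0)
```

Youden's J weights sensitivity and specificity equally; a clinical deployment might instead fix a minimum sensitivity, given the cost of missing HPV-positive tumors.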

5. Limitations and Future Directions

The main limitation of the present study is that the cohort size (123 WSIs), although comparable to previous reports, remains relatively small and contributed to cross-validation variability. Moreover, ground-truth definition was largely based on p16 IHC, which, while widely used, may misclassify some HPV-negative tumours as positive, introducing label noise that affects model stability. External validation was limited to HPV-negative cases, preventing a full assessment of generalizability. Despite these limitations, the model produced biologically plausible attention maps and, in discordant cases, its predictions aligned with molecular INNO-LiPA results rather than p16, suggesting that weakly supervised approaches can capture true HPV-related morphology. Future work will include expanding to a multi-institutional dataset, applying stricter quality control to mitigate artefacts, and refining ground-truth labels by integrating molecular HPV detection alongside p16.

6. Conclusions

In summary, we demonstrate that weakly supervised deep learning can predict HPV status directly from routine H&E-stained OPSCC slides using only slide-level labels. Although cross-validation performance was mixed, the model achieved good overall accuracy on the internal cohort and high specificity on an independent set of HPV-negative slides. Attention heatmaps consistently highlighted tumor-rich regions, and a complementary cell-level analysis confirmed that high-attention areas exhibit reproducible morphometric signatures that differ between HPV-positive and HPV-negative tumors. These results suggest that weakly supervised models such as CLAM can recover biologically meaningful patterns without manual region-of-interest annotation and could potentially complement p16 IHC and molecular testing.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/cancers17243938/s1, Supplementary File S1—Table S1: Summary of patient characteristics and biomarker results across datasets; Supplementary File S2—Table S2: Case-level predictions and discordant results from CLAM and Random Forest models; Supplementary File S3—Table S3: Slide-level predictions with class probabilities and notes; Supplementary File S4—Table S4: Comparison of model strategies across the four studies. Table S5: Dataset characteristics across the four studies. Table S6: Ground truth definitions used in the four studies. Table S7: Model performance comparison across all studies. Table S8: Summary of strengths and limitations.

Author Contributions

Conceptualization: F.M.; methodology: F.M., G.I., S.V., A.M. and D.C.; software: A.C. and F.M.; formal analysis: F.M., A.C. and G.I.; writing (original draft): A.C., F.M. and G.I.; writing (review and editing): S.S., R.M.D.C. and D.R.; funding acquisition: S.S., F.M. and G.I. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the project “Rare cancers of the head and neck: a comprehensive approach combining genomic, immunophenotypic and computational aspects to improve patient prognosis and establish innovative preclinical models–RENASCENCE” (project code PNRR-TR1-2023-12377661).

Institutional Review Board Statement

This study was performed in accordance with the Declaration of Helsinki and with Italian law for studies based only on retrospective analyses of routine archival FFPE tissue. Written informed consent was obtained from living patients at the time of surgery. Consent followed the provisions of Italian Legislative Decree No. 196/03 (Privacy Code), as amended by Regulation (EU) 2016/679 of the European Parliament and of the Council.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data used in this study are available partly from public repositories and partly from institutional archives. The TCGA-HNSC whole-slide images and associated metadata are publicly accessible via the GDC Data Portal: https://portal.gdc.cancer.gov/projects/TCGA-HNSC (accessed on 1 March 2025). Data from the OPSCC-UNINA cohort contain potentially identifiable patient information and are not publicly available owing to privacy and ethical restrictions; full case lists are provided in Supplementary File S1. The CLAM framework source code is openly available at https://github.com/mahmoodlab/CLAM (accessed on 1 March 2025). The Random Forest implementation was adapted from the official scikit-learn documentation.

Acknowledgments

The authors used Google Gemini 1.5 Pro to revise and translate the text and to improve its grammar and English. The authors then critically reviewed and revised the output and take full responsibility for the content.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACC | Accuracy |
| AI | Artificial intelligence |
| AJCC | American Joint Committee on Cancer |
| AUC | Area under the receiver operating characteristic (ROC) curve |
| CLAM | Clustering-constrained Attention Multiple-Instance Learning |
| DL | Deep learning |
| FFPE | Formalin-fixed paraffin-embedded |
| H&E | Hematoxylin and eosin |
| HPV | Human papillomavirus |
| IHC | Immunohistochemistry |
| ISH | In situ hybridization |
| ML | Machine learning |
| OPSCC | Oropharyngeal squamous cell carcinoma |
| OS | Overall survival |
| RF | Random Forest |
| ROI | Region of interest |
| TCGA | The Cancer Genome Atlas |
| WSI | Whole-slide image |

References

- Lechner, M.; Liu, J.; Masterson, L.; Fenton, T.R. HPV-associated oropharyngeal cancer: Epidemiology, molecular biology and clinical management. Nat. Rev. Clin. Oncol. 2022, 19, 306–327.

- Ang, K.K.; Harris, J.; Wheeler, R.; Weber, R.; Rosenthal, D.I.; Nguyen-Tân, P.F.; Westra, W.H.; Chung, C.H.; Jordan, R.C.; Lu, C.; et al. Human papillomavirus and survival of patients with oropharyngeal cancer. N. Engl. J. Med. 2010, 363, 24–35.

- Fakhry, C.; Westra, W.H.; Li, S.; Cmelak, A.; Ridge, J.A.; Pinto, H.; Forastiere, A.; Gillison, M.L. Improved survival of patients with human papillomavirus–positive head and neck squamous cell carcinoma in a prospective clinical trial. J. Natl. Cancer Inst. 2008, 100, 261–269.

- Rischin, D.; Young, R.J.; Fisher, R.; Fox, S.B.; Le, Q.T.; Peters, L.J.; Solomon, B.; Choi, J.; O’Sullivan, B.; Kenny, L.M.; et al. Prognostic significance of p16INK4A and human papillomavirus in patients with oropharyngeal cancer treated on TROG 02.02 phase III trial. J. Clin. Oncol. 2010, 28, 4142–4148.

- Posner, M.; Lorch, J.; Goloubeva, O.; Tan, M.; Schumaker, L.; Sarlis, N.; Haddad, R.; Cullen, K. Survival and human papillomavirus in oropharynx cancer in TAX 324: A subset analysis from an international phase III trial. Ann. Oncol. 2011, 22, 1071–1077.

- Perri, F.; Longo, F.; Caponigro, F.; Sandomenico, F.; Guida, A.; Della Vittoria Scarpati, G.; Ottaiano, A.; Muto, P.; Ionna, F. Management of HPV-related squamous cell carcinoma of the head and neck: Pitfalls and caveat. Cancers 2020, 12, 975.

- Rosenberg, A.J.; Vokes, E.E. Optimizing treatment de-escalation in head and neck cancer: Current and future perspectives. Oncologist 2021, 26, 40–48.

- Cmelak, A.; Li, S.; Marur, S.; Zhao, W.; Westra, W.H.; Chung, C.H.; Gillison, M.L.; Gilbert, J.; Bauman, J.E.; Wagner, L.I.; et al. E1308: Reduced-dose IMRT in human papilloma virus (HPV)-associated resectable oropharyngeal squamous carcinomas (OPSCC) after clinical complete response (cCR) to induction chemotherapy (IC). J. Clin. Oncol. 2014, 32, LBA6006.

- Chera, B.S.; Amdur, R.J.; Tepper, J.E.; Tan, X.; Weiss, J.; Grilley-Olson, J.E.; Hayes, D.N.; Zanation, A.; Hackman, T.G.; Patel, S.; et al. Mature results of a prospective study of deintensified chemoradiotherapy for low-risk human papillomavirus-associated oropharyngeal squamous cell carcinoma. Cancer 2018, 124, 2347–2354.

- Yom, S.; Harris, J.; Caudell, J.; Geiger, J.; Waldron, J.; Gillison, M.; Subramaniam, R.; Yao, M.; Xiao, C.; Kovalchuk, N.; et al. Interim futility results of NRG-HN005, A randomized, phase II/III non-inferiority trial for non-smoking p16+ oropharyngeal cancer patients. Int. J. Radiat. Oncol. Biol. Phys. 2024, 120, S2–S3.

- Craig, S.G.; Anderson, L.A.; Schache, A.G.; Moran, M.; Graham, L.; Currie, K.; Rooney, K.; Robinson, M.; Upile, N.S.; Brooker, R.; et al. Recommendations for determining HPV status in patients with oropharyngeal cancers under TNM8 guidelines: A two-tier approach. Br. J. Cancer 2019, 120, 827–833.

- Jordan, R.C.; Lingen, M.W.; Perez-Ordonez, B.; He, X.; Pickard, R.; Koluder, M.; Jiang, B.; Wakely, P.; Xiao, W.; Gillison, M.L. Validation of methods for oropharyngeal cancer HPV status determination in US cooperative group trials. Am. J. Surg. Pathol. 2012, 36, 945–954.

- Singhi, A.D.; Westra, W.H. Comparison of human papillomavirus in situ hybridization and p16 immunohistochemistry in the detection of human papillomavirus-associated head and neck cancer based on a prospective clinical experience. Cancer 2010, 116, 2166–2173.

- Wang, H.; Zhang, Y.; Bai, W.; Wang, B.; Wei, J.; Ji, R.; Xin, Y.; Dong, L.; Jiang, X. Feasibility of immunohistochemical p16 staining in the diagnosis of human papillomavirus infection in patients with squamous cell carcinoma of the head and neck: A systematic review and meta-analysis. Front. Oncol. 2020, 10, 524928.

- Cui, M.; Zhang, D.Y. Artificial intelligence and computational pathology. Lab. Investig. 2021, 101, 412–422.

- Tizhoosh, H.R.; Pantanowitz, L. Artificial intelligence and digital pathology: Challenges and opportunities. J. Pathol. Inform. 2018, 9, 38.

- Song, C.; Chen, X.; Tang, C.; Xue, P.; Jiang, Y.; Qiao, Y. Artificial intelligence for HPV status prediction based on disease-specific images in head and neck cancer: A systematic review and meta-analysis. J. Med. Virol. 2023, 95, e29080.

- Broggi, G.; Maniaci, A.; Lentini, M.; Palicelli, A.; Zanelli, M.; Zizzo, M.; Koufopoulos, N.; Salzano, S.; Mazzucchelli, M.; Caltabiano, R. Artificial intelligence in head and neck cancer diagnosis: A comprehensive review with emphasis on radiomics, histopathological, and molecular applications. Cancers 2024, 16, 3623.

- Migliorelli, A.; Manuelli, M.; Ciorba, A.; Stomeo, F.; Pelucchi, S.; Bianchini, C. Role of Artificial Intelligence in Human Papillomavirus Status Prediction for Oropharyngeal Cancer: A Scoping Review. Cancers 2024, 16, 4040.

- Yao, H.; Zhang, X. A comprehensive review for machine learning based human papillomavirus detection in forensic identification with multiple medical samples. Front. Microbiol. 2023, 14, 1232295.

- Klein, S.; Quaas, A.; Quantius, J.; Löser, H.; Meinel, J.; Peifer, M.; Wagner, S.; Gattenlöhner, S.; Wittekindt, C.; von Knebel Doeberitz, M.; et al. Deep learning predicts HPV association in oropharyngeal squamous cell carcinomas and identifies patients with a favorable prognosis using regular H&E stains. Clin. Cancer Res. 2021, 27, 1131–1138.

- Wang, R.; Khurram, S.A.; Walsh, H.; Young, L.S.; Rajpoot, N. A novel deep learning algorithm for human papillomavirus infection prediction in head and neck cancers using routine histology images. Mod. Pathol. 2023, 36, 100320.

- Varricchio, S.; Ilardi, G.; Crispino, A.; D’Angelo, M.P.; Russo, D.; Di Crescenzo, R.M.; Staibano, S.; Merolla, F. A machine learning approach to predict HPV positivity of oropharyngeal squamous cell carcinoma. Pathol.-J. Ital. Soc. Anat. Pathol. Diagn. Cytopathol. 2024, 116.

- Lu, M.Y.; Williamson, D.F.; Chen, T.Y.; Chen, R.J.; Barbieri, M.; Mahmood, F. Data-efficient and weakly supervised computational pathology on whole-slide images. Nat. Biomed. Eng. 2021, 5, 555–570.

- Bankhead, P.; Loughrey, M.B.; Fernández, J.A.; Dombrowski, Y.; McArt, D.G.; Dunne, P.D.; McQuaid, S.; Gray, R.T.; Murray, L.J.; Coleman, H.G.; et al. QuPath: Open source software for digital pathology image analysis. Sci. Rep. 2017, 7, 16878.

- Klein, S.; Wuerdemann, N.; Demers, I.; Kopp, C.; Quantius, J.; Charpentier, A.; Tolkach, Y.; Brinker, K.; Sharma, S.J.; George, J.; et al. Predicting HPV association using deep learning and regular H&E stains allows granular stratification of oropharyngeal cancer patients. npj Digit. Med. 2023, 6, 152.

- Adachi, M.; Taki, T.; Sakamoto, N.; Kojima, M.; Hirao, A.; Matsuura, K.; Hayashi, R.; Tabuchi, K.; Ishikawa, S.; Ishii, G.; et al. Extracting interpretable features for pathologists using weakly supervised learning to predict p16 expression in oropharyngeal cancer. Sci. Rep. 2024, 14, 4506.

- Lewis, J.S. Morphologic diversity in human papillomavirus-related oropharyngeal squamous cell carcinoma: Catch Me If You Can! Mod. Pathol. 2017, 30, S44–S53.

- Chernock, R.D.; El-Mofty, S.K.; Thorstad, W.L.; Parvin, C.A.; Lewis, J.S. HPV-Related Nonkeratinizing Squamous Cell Carcinoma of the Oropharynx: Utility of Microscopic Features in Predicting Patient Outcome. Head Neck Pathol. 2009, 3, 186–194.

- Lim, Y.X.; Mierzwa, M.L.; Sartor, M.A.; D’Silva, N.J. Clinical, morphologic and molecular heterogeneity of HPV-associated oropharyngeal cancer. Oncogene 2023, 42, 2939–2955.

- Molony, P.; Werner, R.; Martin, C.; Callanan, D.; Nauta, I.; Heideman, D.; Sheahan, P.; Heffron, C.; Feeley, L. The role of tumour morphology in assigning HPV status in oropharyngeal squamous cell carcinoma. Oral Oncol. 2020, 105, 104670.

- Skjervold, A.H.; Pettersen, H.S.; Valla, M.; Opdahl, S.; Bofin, A.M. Visual and digital assessment of Ki-67 in breast cancer tissue—A comparison of methods. Diagn. Pathol. 2022, 17, 45.

- Martino, F.; Varricchio, S.; Russo, D.; Merolla, F.; Ilardi, G.; Mascolo, M.; dell’Aversana, G.O.; Califano, L.; Toscano, G.; De Pietro, G.; et al. A Machine-learning Approach for the Assessment of the Proliferative Compartment of Solid Tumors on Hematoxylin-Eosin-Stained Sections. Cancers 2020, 12, 1344.

- Angeloni, M.; Rizzi, D.; Schoen, S.; Caputo, A.; Merolla, F.; Hartmann, A.; Ferrazzi, F.; Fraggetta, F. Closing the gap in the clinical adoption of computational pathology: A standardized, open-source framework to integrate deep-learning models into the laboratory information system. Genome Med. 2025, 17, 60.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).