Abstract

In order to improve the positioning accuracy of a micromanipulation system, a comprehensive error model is first established that accounts for the nonlinear imaging distortion of the microscope, the camera installation error, and the mechanical displacement error of the motorized stage. A novel error compensation method is then proposed in which the distortion compensation coefficients are obtained by the Levenberg–Marquardt optimization algorithm combined with the derived nonlinear imaging model. The compensation coefficients for the camera installation error and the mechanical displacement error are derived using the rigid-body translation technique and an image stitching algorithm. To validate the error compensation model, single shot and cumulative error tests were designed. The experimental results show that, after error compensation, the displacement error was kept within 0.25 μm for moves in a single direction and grew by no more than 0.02 μm per 1000 μm of travel for moves in multiple directions.

1. Introduction

With the rapid development of modern biomedical technology, micromanipulation techniques have found widespread application in nucleus transfer, microinjection, microdissection, embryo transfer, and other procedures. Accurate positioning plays a vital role in micromanipulation [1,2,3]. Micropositioning technology uses image processing algorithms to visually locate cells and microorganisms, converts image coordinates into object coordinates, and sends the converted coordinates to the end effector, which performs the corresponding positioning [4]. It is therefore important to study the systematic errors of microscopic vision systems and methods for compensating them. To improve micropositioning precision, digital image correlation (DIC) is usually used to compensate for the coordinate conversion error between the vision module of the micromanipulation system and the end effector [5,6,7,8,9,10]. For example, Lee et al. [11] proposed a vision-based, high-precision self-calibration method that uses a purpose-designed chessboard to determine the positional relationship between the end effector and the camera without operator intervention. At high magnifications, however, optical microscope imaging does not strictly follow the pinhole imaging model because of lens distortion, which degrades DIC measurements [12,13,14]. The rigid-body translation technique is commonly used to eliminate the distortion of the microscope camera [15,16,17,18,19]. Koide and Menegatti [20] described a hand–eye calibration technique based on reprojection error minimization that works directly on images of the calibration pattern. Malti [21] optimized the distortion parameters, the camera intrinsics, and the hand–eye transform using epipolar constraints. Although these methods effectively solve the camera distortion problem, they ignore mechanical errors such as the camera installation error and the mechanical displacement error.
Thus, neither the rigid-body translation method nor the DIC method can eliminate camera distortion errors and mechanical errors at the same time, and current research offers no comprehensive method for compensating these errors simultaneously.

The research object of this paper is a machine-vision-based micromanipulation system in which the camera and the micromanipulation platform are the key positioning components. Improving positioning accuracy requires considering not only the camera distortion error but also mechanical errors such as the camera installation error and the mechanical displacement error of the micromanipulation platform. However, previous work has rarely discussed these errors together. This paper therefore proposes a novel error compensation model that unites camera distortion error compensation with mechanical error compensation. The influences and derivation of the above errors are studied first. Then, the error compensation model is established to account for the nonlinear imaging distortion of the microscope, the camera installation error, and the mechanical displacement error. Finally, single shot error tests and cumulative error tests are designed to validate the model. The experimental results show that the proposed method is easy to implement and yields accurate positioning.

2. System Overview

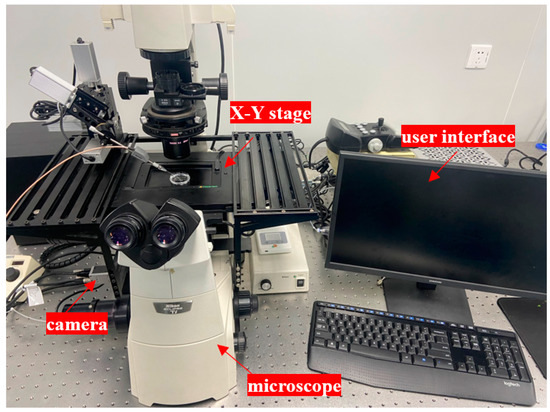

As shown in Figure 1, the micromanipulation system consists of a camera (scA1300-32 gm, Basler, Inc., Lubeck, Germany) that captures images in real time at 30 Hz, a standard inverted microscope (Nikon Ti, Nikon, Inc., Tokyo, Japan), and a micromanipulation platform with a motorized X-Y stage (travel range of 75 mm along both axes, MLS-1740, Micro-Nano Automation Institute Co., Ltd., Suzhou City, China).

Figure 1.

Hardware of the micromanipulation system.

3. Derivation of System Errors

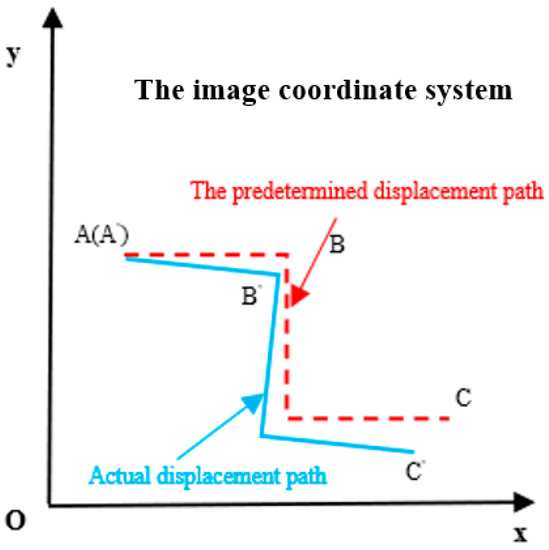

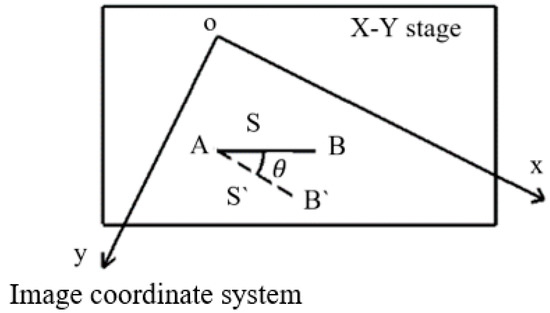

As the operating time of the micromanipulation system increases, the cumulative system error increasingly degrades micropositioning accuracy and can even cause positioning to fail. As shown in Figure 2, when the motorized stage is moved from point A to point C, the system errors cause the actual displacement path of the platform to deviate from the predetermined path.

Figure 2.

Deviation of the displacement path caused by system errors. The predetermined displacement path runs from point A to point C via point B, while the actual path of the platform runs from point A′ to point C′ via B′, deviating from the predetermined path because of the system errors.

3.1. Image Model Error

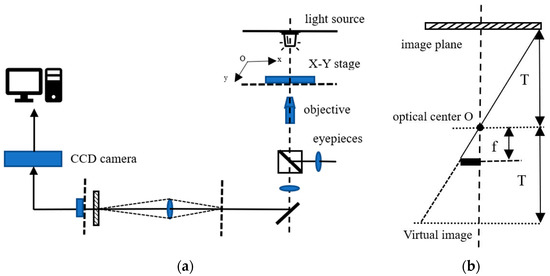

The microscope imaging model affects positioning accuracy. The most commonly used imaging models are the pinhole model and the parallel projection model. Both assume a linear mapping, but the lens group of a microscope is sensitive to handling, vibration, and other factors that introduce distortion and nonlinearity. Image distortion arises from manufacturing and assembly errors in the optical system and causes errors when converting between image coordinates and physical coordinates during micromanipulation [22]. The microscope used in this paper is equipped with a standard camera port and has a dedicated internal optical path for imaging; Figure 3a shows the composition of the vision system. For a micro-vision system with an infinity-corrected optical path, the basic geometric imaging model can be simplified as shown in Figure 3b.

Figure 3.

The vision system and simplified imaging model of the micromanipulation system. (a) The diagram of the vision system, (b) The simplified imaging model. T is the working distance of the microscope and f is the focal length of the objective lens.

Assume that a point in the physical coordinate system has coordinates $\mathbf{P} = (X, Y, 0)^T$ and that, after microscope imaging, its corresponding coordinates in the image coordinate system are $\mathbf{p} = (u, v)^T$. If image distortion is ignored, the relationship between P and p follows from the geometry of planar (2D) projection:

$$\lambda \tilde{\mathbf{p}} = \mathbf{H}\tilde{\mathbf{P}} = \mathbf{A}\left[\mathbf{R}_{12} \;\; \mathbf{t}\right]\tilde{\mathbf{P}} \tag{1}$$

where $\lambda$ is an arbitrary scale factor.

$\mathbf{H}$ represents the homography matrix of the imaging model.

$\tilde{\mathbf{P}} = (X, Y, 1)^T$ and $\tilde{\mathbf{p}} = (u, v, 1)^T$ represent the homogeneous coordinates in the physical coordinate system (without the Z component) and in the image coordinate system, respectively.

$\mathbf{A}$ represents the 3 × 3 internal (intrinsic) parameter matrix.

$\mathbf{R}_{12} = [\mathbf{r}_1 \;\; \mathbf{r}_2]$ represents the first two columns of the 3 × 3 external parameter rotation matrix $\mathbf{R}$.

$\mathbf{t} = (t_x, t_y, t_z)^T$ represents the translation vector in the external parameter matrix.
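As a minimal numerical illustration of the distortion-free model, the following Python sketch builds a homography from an intrinsic matrix, a rotation, and a translation, assuming the standard factorization H = A[r1 r2 t] for points on the Z = 0 plane, and projects a physical point into the image. The function names and all parameter values are hypothetical and chosen only for illustration.

```python
import numpy as np

def homography_from_params(A, R, t):
    """H = A [r1 r2 t]: the Z = 0 plane case of the pinhole model."""
    return A @ np.column_stack((R[:, 0], R[:, 1], t))

def project(H, X, Y):
    """Map the physical point (X, Y, 0) to image coordinates (u, v)."""
    p = H @ np.array([X, Y, 1.0])   # equals lambda * (u, v, 1)
    return p[:2] / p[2]             # divide out the arbitrary scale factor

# Illustrative (made-up) parameters
A = np.array([[2000.0, 0.0, 600.0],
              [0.0, 2000.0, 450.0],
              [0.0, 0.0, 1.0]])
R = np.eye(3)
t = np.array([0.0, 0.0, 10.0])
H = homography_from_params(A, R, t)
print(project(H, 1.0, 2.0))   # [800. 850.]
```

Dividing by the third homogeneous component is what removes the scale factor; with these made-up values the physical origin lands on the principal point (600, 450).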

Let $\mathbf{L}$ and $\mathbf{l}$ represent a corresponding pair of lines in the physical coordinate system and the image coordinate system, respectively. Because lines map contravariantly to points, their homogeneous coordinates are related by

$$\mu\,\mathbf{l} = \mathbf{H}^{-T}\mathbf{L} \tag{2}$$

where $\mu$ is an arbitrary scale factor.

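However, because of lens distortion, the linear model in Equations (1) and (2) no longer holds exactly. The relationship between the theoretical projection coordinates $(u, v)^T$ and the actual (distorted) projection coordinates $(u_d, v_d)^T$ in the image coordinate system is nonlinear and can be described as

$$u = u_d + k\,(u_d - U_0)\,r_d^2, \qquad v = v_d + k\,(v_d - V_0)\,r_d^2, \qquad r_d^2 = (u_d - U_0)^2 + (v_d - V_0)^2 \tag{3}$$

where k is a second-order distortion factor and $(U_0, V_0)^T$ is the principal point.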
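The single-coefficient distortion correction can be sketched as follows. This assumes a radial model in which the offset of a distorted point from the principal point (U0, V0) is scaled by 1 + k·r², with r measured in pixels from (U0, V0); the function name and the numbers in the example are illustrative only.

```python
import numpy as np

def undistort(ud, vd, k, U0, V0):
    """Map distorted image coordinates to theoretical (undistorted) ones.

    Assumed model: u = ud + k (ud - U0) r^2, v = vd + k (vd - V0) r^2,
    where r^2 = (ud - U0)^2 + (vd - V0)^2 is measured in pixels.
    """
    du = np.asarray(ud, dtype=float) - U0
    dv = np.asarray(vd, dtype=float) - V0
    factor = 1.0 + k * (du**2 + dv**2)
    return U0 + du * factor, V0 + dv * factor

# A point 100 px right of the principal point moves outward by about 0.1 px
u, v = undistort(700.0, 450.0, k=1e-7, U0=600.0, V0=450.0)
```

Points on the principal point are left unchanged, and the correction grows with the cube of the radial distance, which is why distortion matters most near the image borders.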

The nonlinear imaging model formula can be obtained as:

The translation vector in Equation (1) needs further correction. The actual microscope magnification M and the corrected translation vector are given by:

where $M_u$ and $M_v$ represent the magnification of the microscope along the u axis and the v axis, respectively.

$d_u$ and $d_v$ represent the physical side lengths of a camera pixel along the u axis and the v axis, respectively.
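For reference, under a parallel-projection approximation (an assumption suited to an infinity-corrected microscope at fixed focus), an image offset in pixels converts to an object-plane offset by multiplying by the pixel side length and dividing by the magnification along each axis. The function name is hypothetical, and the 3.75 μm pixel size and 20× magnification in the example are illustrative values, not calibration results from this paper.

```python
def pixels_to_micrometres(du_px, dv_px, d_u, d_v, M_u, M_v):
    """Convert an image-plane offset (pixels) to an object-plane offset (um).

    d_u, d_v: physical side length of one camera pixel along u and v (um).
    M_u, M_v: microscope magnification along u and v.
    Assumes a parallel-projection (telecentric) approximation.
    """
    return du_px * d_u / M_u, dv_px * d_v / M_v

# Illustrative values: 3.75 um pixels behind a 20x objective
print(pixels_to_micrometres(100, 40, 3.75, 3.75, 20.0, 20.0))   # (18.75, 7.5)
```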

The transformation matrix caused by the lens distortion is:

where $\eta$ is the ratio of the actual magnification to the theoretical magnification.

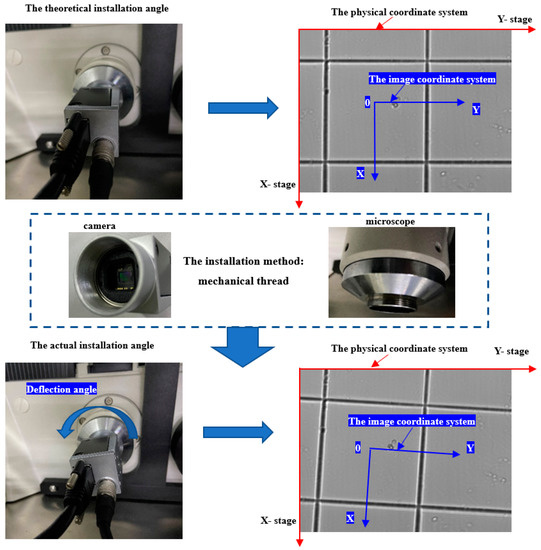

3.2. Camera Installation Error

The camera of the micromanipulation system is fixed to the microscope by a screw connection. However, any mechanical fixing, whether a thread or another high-precision connection, inevitably introduces a small deflection angle. After high magnification by the microscope, this angle appears as a clear deviation between the image coordinate system and the physical coordinate system, as shown in Figure 4.

Figure 4.

Deviation of the image coordinates caused by the camera deflection angle. The camera is connected to the microscope by a mechanical thread. This installation method readily introduces a deflection angle, which appears as a clear deviation between the image coordinate system and the physical coordinate system.

The coordinate transformation caused by the deflection of the camera can be written as:

where $c_x$ and $c_y$ are the conversion factors for the rotational deflection angles about the X-axis and Y-axis of the physical coordinate system, respectively.
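If the camera deflection is modeled as a pure in-plane rotation by an angle θ (an assumption made for this sketch), compensating it amounts to rotating image-frame offsets back by −θ before converting them to stage commands. A minimal sketch with hypothetical function names:

```python
import numpy as np

def rotation(theta):
    """2-D rotation matrix for an in-plane angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def image_to_stage_axes(offset_img, theta):
    """Undo a camera deflection of theta by rotating the offset through -theta."""
    return rotation(-theta) @ np.asarray(offset_img, dtype=float)

theta = 0.01                                     # illustrative deflection, rad
seen = rotation(theta) @ np.array([100.0, 0.0])  # a pure X move as the camera sees it
print(image_to_stage_axes(seen, theta))          # approximately [100, 0]
```

Even a 0.01 rad deflection turns a 100 μm X move into an apparent Y offset of about 1 μm, which is far larger than the sub-micrometer tolerances discussed later.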

3.3. Mechanical Displacement Error

The X-Y stage of the micromanipulation system produces displacement errors due to its mechanical drive, and these errors accumulate as the displacement increases. The mechanical displacement error of the system therefore needs to be compensated. The corresponding homogeneous coordinate transformation matrix can be written as:

where $\Delta x$ and $\Delta y$ are the mechanical displacement errors in the X-direction and the Y-direction, respectively.

4. Establishment of Error Compensation Model

As the previous section shows, the error of the micromanipulation platform consists mainly of three parts: the image distortion error, the deflection angle error between the image coordinate system and the physical coordinate system, and the mechanical displacement error. Systematic error compensation therefore targets these three parts.

4.1. The Compensation Principles

A. The compensation principle of the image distortion error

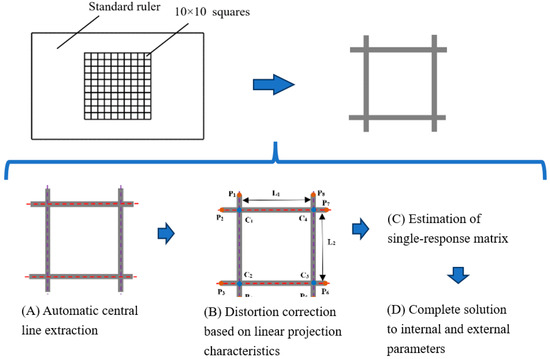

This article calculates the image distortion error using the standard ruler shown in Figure 5. The parameters required by the image distortion error model are obtained through image processing. Image distortion error compensation is implemented in four main steps: automatic center line extraction, distortion correction based on the linearity of the projection, estimation of the homography matrix, and the complete solution of the internal and external parameters. The specific process and implementation are shown in Figure 5.

Figure 5.

The process of image distortion error compensation.
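The text does not specify how the center lines are extracted. One common choice, assumed here, is the gray-level (intensity-weighted) centroid: for a dark, roughly horizontal ruler line, each column's sub-pixel center is the darkness-weighted mean row inside a search band. The function name and band convention are hypothetical; vertical lines can be handled by transposing the image.

```python
import numpy as np

def horizontal_centerline(img, band):
    """Sub-pixel centre of a dark, roughly horizontal line, one point per column.

    img:  2-D grayscale array (bright background, dark line).
    band: (row_start, row_stop) window that contains the line.
    Returns (u, v): column indices and sub-pixel row positions (NaN where the
    window is uniform and no line is visible).
    """
    r0, r1 = band
    window = img[r0:r1, :].astype(float)
    weights = window.max() - window               # darker pixels weigh more
    rows = np.arange(r0, r1, dtype=float)[:, None]
    total = weights.sum(axis=0)
    with np.errstate(invalid="ignore", divide="ignore"):
        v = np.where(total > 0, (weights * rows).sum(axis=0) / total, np.nan)
    u = np.arange(img.shape[1], dtype=float)
    return u, v

# Synthetic test image: a two-pixel-thick dark line on rows 20 and 21
img = np.full((50, 40), 255.0)
img[20:22, :] = 0.0
u, v = horizontal_centerline(img, (10, 30))
print(v[:3])   # [20.5 20.5 20.5]
```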

Image distortion causes projected straight lines to appear curved, so solving for the parameters of the distorted projection model is a nonlinear optimization problem. Suppose there are n straight lines in the physical coordinate system. After imaging by the digital camera, each center line is sampled as a set of discrete points. The distorted coordinates $(u_d, v_d)^T$ of these points are mapped through the distortion model in Equation (3) to obtain the corresponding theoretical point coordinates. The following nonlinear optimization objective function can then be established:

$$F = \sum_{j=1}^{n}\sum_{i=1}^{m_j}\left(u_{ij}\sin\theta_j - v_{ij}\cos\theta_j + \rho_j\right)^2 \tag{9}$$

where

$m_j$ represents the number of discrete points on the j-th theoretical center line.

$u_{ij}$ and $v_{ij}$ represent the theoretical coordinates of the i-th point on the j-th theoretical center line.

$\theta_j$ and $\rho_j$ represent the tilt angle and intercept (signed offset from the origin) of the j-th theoretical center line, respectively.

Using the objective function in Equation (9) and the center line coordinates extracted in the previous step, the distortion coefficient k, the principal point coordinates $(U_0, V_0)^T$, and the tilt angles and intercepts of the center lines can be obtained with the Levenberg–Marquardt optimization algorithm.
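The Levenberg–Marquardt step can be sketched with SciPy's `scipy.optimize.least_squares(..., method="lm")`. This is a sketch under stated assumptions, not the authors' implementation: it assumes the one-coefficient radial model about the principal point, writes each center line as u·sinθ − v·cosθ + ρ = 0 so that each residual is a perpendicular point-to-line distance, divides pixel coordinates by a hypothetical `scale` so that k is dimensionless and well conditioned, and initializes each line with a total-least-squares fit. All function names are illustrative.

```python
import numpy as np
from scipy.optimize import least_squares

def residuals(params, lines):
    """Point-to-line distances of undistorted points (objective of Eq. (9)).

    params = [k, cx, cy, theta_1, rho_1, ..., theta_n, rho_n] in normalised units.
    lines  = list of (x, y) arrays of distorted, normalised centre-line points.
    """
    k, cx, cy = params[:3]
    res = []
    for j, (x, y) in enumerate(lines):
        theta, rho = params[3 + 2 * j], params[4 + 2 * j]
        dx, dy = x - cx, y - cy
        f = 1.0 + k * (dx**2 + dy**2)          # one-coefficient radial model
        xu, yu = cx + dx * f, cy + dy * f      # theoretical (undistorted) points
        res.append(xu * np.sin(theta) - yu * np.cos(theta) + rho)
    return np.concatenate(res)

def initial_line(x, y):
    """Total-least-squares line (tilt angle, intercept) through the raw points."""
    pts = np.column_stack((x, y))
    mean = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - mean)
    theta = np.arctan2(vt[0, 1], vt[0, 0])     # direction of the fitted line
    rho = -(mean[0] * np.sin(theta) - mean[1] * np.cos(theta))
    return theta, rho

def fit_distortion(lines_px, U0_guess, V0_guess, scale):
    """Estimate k (normalised units) and the principal point (pixels) by LM."""
    lines = [(np.asarray(u, float) / scale, np.asarray(v, float) / scale)
             for u, v in lines_px]
    x0 = [0.0, U0_guess / scale, V0_guess / scale]
    for x, y in lines:
        x0.extend(initial_line(x, y))
    sol = least_squares(residuals, np.array(x0), args=(lines,), method="lm")
    k, cx, cy = sol.x[:3]
    return k, cx * scale, cy * scale

# Synthetic check: distort straight grid lines with a known k, then refit.
scale, U0, V0, k_true = 600.0, 600.0, 450.0, 0.05
t = np.linspace(0.0, 1.0, 40)
ideal = [(100 + 1000 * t, np.full_like(t, c)) for c in (150.0, 300.0, 600.0, 750.0)]
ideal += [(np.full_like(t, c), 100 + 700 * t) for c in (200.0, 400.0, 900.0, 1050.0)]
lines_px = []
for u, v in ideal:
    ud, vd = u.copy(), v.copy()
    for _ in range(200):                       # fixed-point inversion of the model
        f = 1.0 + k_true * ((ud - U0)**2 + (vd - V0)**2) / scale**2
        ud, vd = U0 + (u - U0) / f, V0 + (v - V0) / f
    lines_px.append((ud, vd))
print(fit_distortion(lines_px, 605.0, 445.0, scale))
```

On noise-free synthetic lines the fit should return the k and principal point used to generate the data. With real center lines, noise and the spread of lines across the field of view limit how well the principal point is determined.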

Since the projection is a 2D projection, the homography matrix H is a 3 × 3 matrix. For the intersecting-line pattern, the above steps yield 4 pairs of parallel straight lines and 4 pairs of intersection points, which allows a complete estimate of the homography matrix. Let $(\tilde{\mathbf{p}}_i, \tilde{\mathbf{P}}_i)$ and $(\mathbf{l}_i, \mathbf{L}_i)$ denote the corresponding point pairs and line pairs after distortion correction. Then, from Equations (1) and (2), the following can be obtained:

$$\tilde{\mathbf{p}}_i \times \left(\mathbf{H}\tilde{\mathbf{P}}_i\right) = \mathbf{0}, \qquad \mathbf{L}_i \times \left(\mathbf{H}^{T}\mathbf{l}_i\right) = \mathbf{0} \tag{10}$$

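Stacking these constraints gives a homogeneous linear system in the entries of H, which is solved by singular value decomposition (SVD) to obtain the homography matrix H. The matrices $\mathbf{A}$, $\mathbf{R}_{12}$, and $\mathbf{t}$ are then obtained by decomposing H.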
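The SVD step can be illustrated with the standard direct linear transform (DLT) for point correspondences. This sketch uses only point pairs; line pairs would contribute analogous rows derived from the line-mapping relation. In practice, normalizing the coordinates before the SVD (Hartley normalization) improves conditioning; it is omitted here for brevity. The function name and test values are illustrative.

```python
import numpy as np

def estimate_homography(P, p):
    """DLT estimate of H with lambda * (u, v, 1) = H @ (X, Y, 1), from >= 4 pairs.

    P: (n, 2) physical points (X, Y); p: (n, 2) distortion-corrected image points.
    Each pair gives two rows of A h = 0 (h = H stacked row by row); h is the
    right singular vector belonging to the smallest singular value of A.
    """
    rows = []
    for (X, Y), (u, v) in zip(P, p):
        rows.append([X, Y, 1.0, 0.0, 0.0, 0.0, -u * X, -u * Y, -u])
        rows.append([0.0, 0.0, 0.0, X, Y, 1.0, -v * X, -v * Y, -v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]                         # remove the arbitrary scale

# Synthetic check with a known homography
H_true = np.array([[200.0, 5.0, 600.0],
                   [-3.0, 198.0, 450.0],
                   [0.001, 0.002, 1.0]])
P = np.array([[0, 0], [1, 0], [0, 1], [1, 1], [0.5, 0.25], [0.2, 0.8]], dtype=float)
ph = (H_true @ np.column_stack((P, np.ones(len(P)))).T).T
p = ph[:, :2] / ph[:, 2:]
print(np.round(estimate_homography(P, p), 6))
```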

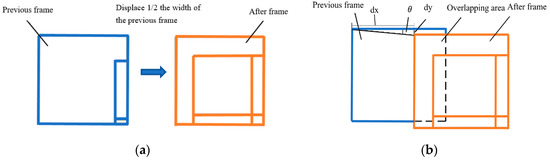

B. The compensation principle of the deflection error between image coordinate system and physical coordinate system

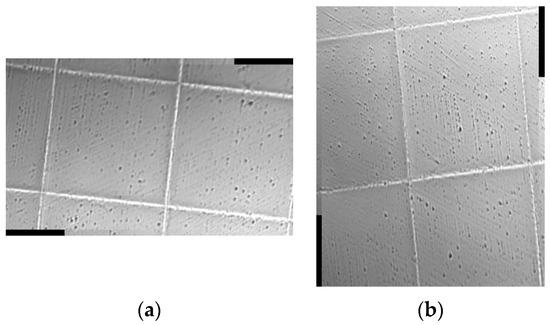

The deflection angle error between the image coordinate system and the physical coordinate system is caused primarily by the camera installation error. To calculate this deflection angle, a series of frames of the standard ruler shown in Figure 5 is captured such that each pair of consecutive frames overlaps by half the image width (when the stage moves along the X-direction) or half the image height (when it moves along the Y-direction). Taking the X-direction displacement as an example, two adjacent, partially overlapping frames, as shown in Figure 6a, are selected and stitched by computer. In this process, the SIFT algorithm [23] is used for image feature extraction, the RANSAC algorithm [24] is applied during feature matching to eliminate outliers, and the remaining key matching points are used for image stitching. Finally, the displacement components dx and dy between the two frames are calculated from the stitched image, as shown in Figure 6b. The deflection angle θ of the camera installation, i.e., the deflection angle error between the image coordinate system and the physical coordinate system, is then

$$\theta = \arctan\left(\frac{dy}{dx}\right) \tag{11}$$

Figure 6.

Image stitching schematic diagram. (a) Two adjacent images, (b) Image stitching.
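Once SIFT matches between two overlapping frames are available, the stitching offset and the deflection angle can be estimated robustly. The sketch below is a simplification under stated assumptions: it models the inter-frame motion as a pure translation, uses a one-point RANSAC (each hypothesis is the shift of a single match), and takes the deflection angle as arctan(dy/dx) for a move along the stage X-direction. The matched keypoint arrays are assumed to come from an upstream SIFT matcher; the function names are hypothetical.

```python
import numpy as np

def ransac_translation(src, dst, thresh=1.0, iters=200, seed=0):
    """Robust shift (dx, dy) between matched keypoints src -> dst (pixels).

    One-point RANSAC: each hypothesis is the shift of one random match, the
    hypothesis with the most matches within `thresh` pixels wins, and the
    final estimate is the mean shift of that consensus set.
    """
    rng = np.random.default_rng(seed)
    shifts = np.asarray(dst, dtype=float) - np.asarray(src, dtype=float)
    best = np.zeros(len(shifts), dtype=bool)
    for _ in range(iters):
        cand = shifts[rng.integers(len(shifts))]
        inliers = np.linalg.norm(shifts - cand, axis=1) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return shifts[best].mean(axis=0)

def deflection_angle(dx, dy):
    """Camera deflection (rad) from the stitched offset of an X-direction move."""
    return np.arctan2(dy, dx)

# Synthetic matches: true shift (600, 3) px plus 10 gross outliers
rng = np.random.default_rng(1)
src = rng.uniform(0, 1200, size=(50, 2))
dst = src + np.array([600.0, 3.0])
dst[:10] += 50.0
dx, dy = ransac_translation(src, dst)
print(dx, dy, deflection_angle(dx, dy))
```

A translation-only model is reasonable when the deflection is small, since the rotation between two frames taken by the same camera is zero; the angle shows up only as the ratio of the off-axis to the on-axis component of the stage move.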

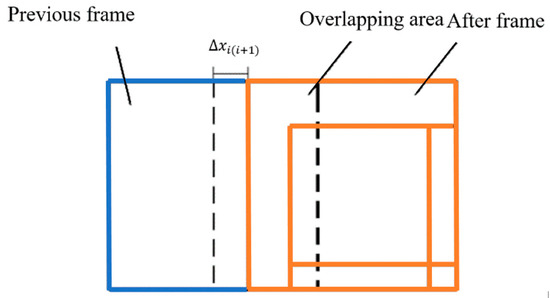

C. The compensation principle of the mechanical displacement error

In a given displacement direction, once the deflection angle error has been compensated, the difference between the theoretical and actual displacement of the platform is the displacement error in that direction. The platform was therefore moved several times with a fixed step along the positive and negative X-direction and Y-direction, and the camera captured an image after each displacement. The image stitching algorithm described in Section 4.1B was then used to obtain the average displacement errors of the platform along the positive X-, negative X-, positive Y-, and negative Y-directions, denoted $\Delta x^{+}$, $\Delta x^{-}$, $\Delta y^{+}$, and $\Delta y^{-}$, respectively.

Taking the X-direction as an example, Figure 7 shows a schematic diagram of the mechanical displacement error calculation after the deflection angle error is compensated. $\Delta x^{+}$ is calculated as

$$\Delta x^{+} = \frac{1}{n}\sum_{i=1}^{n} e_i \tag{12}$$

where $e_1$ is the displacement error obtained by stitching the first and second images in the positive X-direction, $e_2$ is that of the second and third images, and, similarly, $e_n$ is that of the n-th and (n+1)-th images.

Figure 7.

Schematic diagram of mechanical displacement error calculation.

By symmetry, $\Delta x^{-}$, $\Delta y^{+}$, and $\Delta y^{-}$ are calculated in the same way as $\Delta x^{+}$.

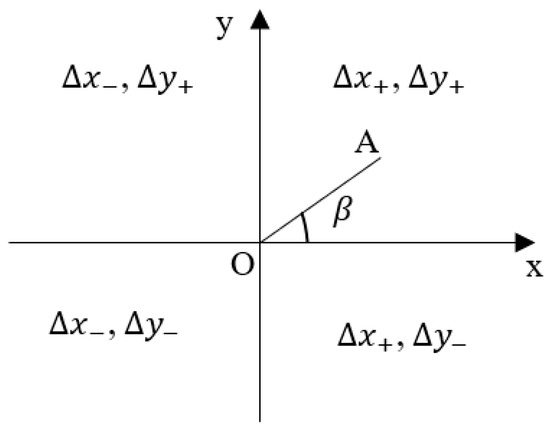

When the platform moves in a non-axial direction, the system displacement error is a combination of the X-direction and Y-direction systematic errors. For example, as shown in Figure 8, when the platform moves from point O to point A in the physical coordinate system, the mechanical displacement error consists of the corresponding X-direction and Y-direction components. Figure 8 also shows the distribution of the mechanical displacement errors for the other quadrants.

Figure 8.

The distribution of mechanical displacement error.

Thus, the formula for calculating the compensation coefficient of mechanical displacement error is modeled as:

where $E$ is the mechanical error compensation coefficient of the micromanipulation platform and $\alpha$ is the positive angle between the displacement direction and the X-direction. The four cases of the formula correspond to $\alpha$ lying in the first, second, third, and fourth quadrants, respectively.
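A hypothetical reading of the quadrant rule, sketched below, is that a move at angle α picks up the +X or −X average error according to the sign of cos α and the +Y or −Y average error according to the sign of sin α, each weighted by the share of the move along that axis. This is an illustrative assumption, not a reconstruction of the authors' formula; the per-direction averages are the arithmetic means of the stitched step errors described above. Function names and numbers are made up.

```python
import numpy as np

def mean_step_error(step_errors):
    """Average of the stitched step errors e_1..e_n for one axis direction."""
    return float(np.mean(step_errors))

def mechanical_error(alpha, ex_pos, ex_neg, ey_pos, ey_neg):
    """Hypothetical X and Y error components (per unit step) for a move at alpha.

    alpha is measured counter-clockwise from the +X direction, in radians.
    The sign of cos(alpha) / sin(alpha) selects the +/- coefficient (i.e. the
    quadrant); |cos|, |sin| weight it by the share of travel along each axis.
    """
    c, s = np.cos(alpha), np.sin(alpha)
    ex = ex_pos if c >= 0 else ex_neg
    ey = ey_pos if s >= 0 else ey_neg
    return ex * abs(c), ey * abs(s)

ex_pos = mean_step_error([0.1, 0.2, 0.3])     # illustrative numbers only
print(mechanical_error(np.pi / 4, ex_pos, 0.25, 0.3, 0.35))
```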

4.2. Error Compensation Model

Combining Equations (6)–(8) gives the error transformation matrix:

To compensate for the system errors, an error compensation model must be established. As shown in Figure 9, when the stage is expected to move from point A to point B, the system error causes it to actually move to point B′. This can be expressed as

where $\overrightarrow{AB}$ and $\overrightarrow{AB'}$ are the vectors of the expected and actual displacement, respectively.

Figure 9.

Schematic diagram of expected and actual displacement. The expected displacement vector $\overrightarrow{AB}$ runs from point A to point B, but the actual displacement becomes the vector $\overrightarrow{AB'}$ from point A to point B′. The angle between the expected and actual displacements is $\varphi$.

Further,

where $\mathbf{a}_1$ and $\mathbf{a}_2$ are the first and second rows of the distortion transformation matrix, which represent the image distortion compensation coefficients in the X-direction and the Y-direction, respectively, and s is the magnitude of the displacement vector.

Since the mechanical error of the X-Y stage increases linearly with the moving distance, the error compensation model is as follows:

Equation (17) shows that the error model depends mainly on five compensation coefficients.
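Without committing to the exact form of Equation (17), the idea of compensation can be sketched with a linear stand-in model: if the actual displacement is predicted as G·c for a commanded displacement c, the platform is sent c = G⁻¹·d so that the actual displacement matches the desired displacement d. Here G is a hypothetical composition of a magnification-ratio scale η, an in-plane camera rotation θ, and per-axis mechanical scale errors; the direction-dependent (±) coefficients are ignored for brevity, and all names and values are illustrative.

```python
import numpy as np

def error_matrix(eta, theta, ex, ey):
    """Hypothetical linear error model G with actual = G @ commanded.

    eta: magnification ratio, theta: camera deflection (rad),
    ex, ey: mechanical error per unit travel along X and Y.
    """
    c, s = np.cos(theta), np.sin(theta)
    R = np.array([[c, -s], [s, c]])
    S = np.diag([1.0 + ex, 1.0 + ey])
    return eta * (S @ R)

def compensated_command(target, G):
    """Command whose predicted actual displacement equals `target`."""
    return np.linalg.solve(G, np.asarray(target, dtype=float))

G = error_matrix(eta=1.01, theta=0.005, ex=2e-5, ey=-3e-5)   # illustrative values
cmd = compensated_command([500.0, -200.0], G)
print(cmd, G @ cmd)   # G @ cmd reproduces the target
```

Solving the linear system rather than forming G⁻¹ explicitly is the usual numerically safer choice.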

5. Experimental Results and Discussion

5.1. Calibration of Error Compensation Model

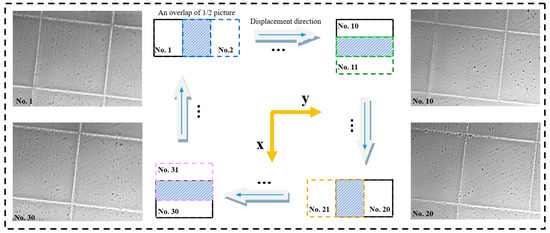

To determine the coefficients of the error compensation formula, the following calibration experiment was designed. A high-precision scale whose center consists of 20 × 20 squares with a side length of 50 μm was used as the standard ruler, and the image resolution was set to 1200 × 900 pixels. During image acquisition, a 20× objective was used and the step length of the X-Y stage was adjusted so that adjacent images overlapped by half. A total of 40 images were taken using the stepping method shown in Figure 10.

Figure 10.

Image acquisition method. A total of 40 images were collected over multiple displacements of the X-Y stage, with a step size of half the image width (when displacing along the X-direction) or half the image height (when displacing along the Y-direction). No. 1 denotes the first image, No. 2 the second image, and so on.

The image distortion compensation coefficients were obtained with the algorithm described in Section 4.1A. The results are shown in Table 1.

Table 1.

Results of the image distortion compensation coefficients, including M.

From the 40 images, 10 groups of adjacent image pairs were randomly selected and stitched to obtain the compensation coefficient for the deflection error between the image coordinate system and the platform coordinate system. The stitching results for displacements along the X-direction and the Y-direction are shown in Figure 11a and Figure 11b, respectively. The resulting deflection angle compensation coefficient is given in Table 2.

Figure 11.

Image stitching results. (a) X-direction, (b) Y-direction.

Table 2.

Results of the deflection angle error compensation coefficient.

The mechanical displacement error obtained by image stitching is shown in Table 3.

Table 3.

Results of the mechanical displacement errors along the positive and negative X- and Y-directions.

Thus, the compensation coefficient of mechanical displacement error can be obtained.

According to Equation (17), the error compensation formula of the micromanipulation platform is then obtained as follows:

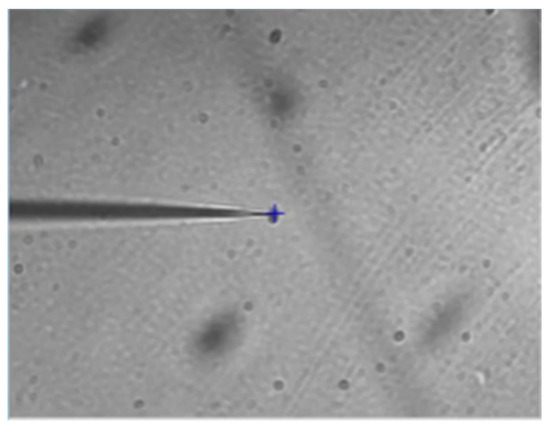

5.2. Single Shot Error Test

To further assess the accuracy of the error compensation formula of the micromanipulation platform, a single shot error test was performed, with the center of the image set as the origin. In each trial, a point of interest other than the origin was selected at random by clicking the mouse. The offset between the selected point and the origin in the image coordinate system was then calculated, and the corresponding step command was sent to the micromanipulation platform to move the point of interest to the origin. Finally, the position error between the displaced point of interest and the origin was calculated as the single shot error, i.e., the residual error of the algorithm after compensation. The single shot error test interface is shown in Figure 12.

Figure 12.

Single shot error test interface.

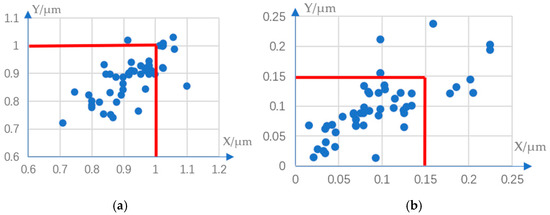

Following this procedure, 50 trials were carried out both before and after error compensation. The absolute differences in the X-direction and Y-direction between the displaced point of interest and the target position (the origin of the coordinate system in the diagram) are plotted in Figure 13.

Figure 13.

Single shot error before and after compensation. (a) before, (b) after.

Before compensation, all single shot errors exceed 0.7 μm, and some reach 1 μm. After compensation, 82% of the single shot errors are within 0.2 μm and the maximum error is within 0.25 μm, which is acceptable for biological operations.

5.3. Cumulative Error Test

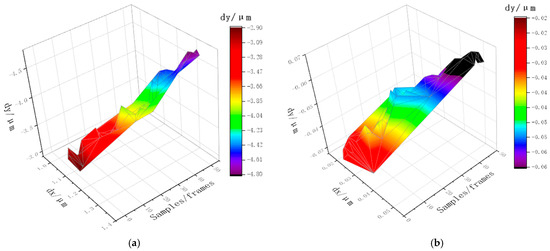

The single shot error test shows that the method in this paper largely eliminates the systematic error of the micromanipulation platform. However, the single shot error test only evaluates the compensation effect for a single displacement and cannot reflect the cumulative error after multiple displacements. To measure the cumulative error of the micromanipulation system after compensation, the following experiment was designed. First, image samples were collected through the vision module using the image acquisition method shown in Figure 10. With this method, if there were no systematic error, the first and last images of each group would in theory coincide exactly. The image stitching error between the first and last images can therefore be taken as the cumulative error.
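The paper measures the residual offset between the first and last images with SIFT/RANSAC stitching. As a lighter-weight alternative (a swapped-in technique, not the authors' method), FFT phase correlation recovers the translation between two images directly. The sketch below returns integer-pixel shifts only; the function name is hypothetical.

```python
import numpy as np

def phase_correlation_shift(img_a, img_b):
    """Integer-pixel shift (dy, dx) such that img_b ~= np.roll(img_a, (dy, dx)).

    Normalised cross-power spectrum -> inverse FFT -> peak location.
    """
    Fa = np.fft.fft2(img_a)
    Fb = np.fft.fft2(img_b)
    cross = Fb * np.conj(Fa)
    cross /= np.abs(cross) + 1e-12            # keep only the phase
    corr = np.fft.ifft2(cross).real
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = corr.shape
    if dy > h // 2:                           # wrap indices to signed shifts
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)

rng = np.random.default_rng(0)
first = rng.random((64, 64))
last = np.roll(first, (3, -5), axis=(0, 1))   # synthetic residual drift
print(phase_correlation_shift(first, last))   # (3, -5)
```

The pixel shift converts to micrometers with the pixel size and magnification. Resolving errors at the 0.02 μm level would additionally require sub-pixel peak interpolation, which this sketch does not perform.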

According to the above methods, 50 groups of image samples before and after error compensation were collected. The classification of the 50 groups of experiments is shown in Table 4.

Table 4.

Classification of the experiments.

The cumulative error distribution obtained from the image stitching errors of the samples is shown in Figure 14. Before compensation, the displacement error of the 50 groups ranged from about 1 to 1.4 μm in the X-direction and from −2.9 to −4.8 μm in the Y-direction. After error compensation, it was reduced to 0.01–0.06 μm in the X-direction and to −0.02 to −0.07 μm in the Y-direction. The five experiment classes also show that the cumulative error in both directions increases with the total step length in each direction. This growth is much smaller after compensation: before compensation, the difference between classification 5 and classification 1 in Table 4 is about 0.4 μm in the X-direction and about 1.9 μm in the Y-direction, whereas after compensation it is within 0.05 μm in the X-direction and within 0.07 μm in the Y-direction. For every 1000 μm of platform displacement, the cumulative error increases by no more than 0.02 μm. Error compensation thus significantly reduces the cumulative error of the micromanipulation platform, bringing it within the basic accuracy requirements of micromanipulation.

Figure 14.

Cumulative errors before and after compensation. (a) before, (b) after.
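The paper does not state how the per-1000 μm figure is computed. One straightforward way, sketched here as an assumption, is to fit a straight line to cumulative error versus total travel across the experiment classes and scale the slope to 1000 μm. The function name is hypothetical, and the example data are made up; they are not the values behind Table 4 or Figure 14.

```python
import numpy as np

def error_growth_per_1000um(total_travel_um, cumulative_error_um):
    """Least-squares slope of cumulative error vs. total travel, per 1000 um."""
    slope, _intercept = np.polyfit(total_travel_um, cumulative_error_um, 1)
    return slope * 1000.0

travel = np.array([1000.0, 2000.0, 3000.0, 4000.0])   # made-up example data
error = np.array([0.012, 0.021, 0.032, 0.041])
print(error_growth_per_1000um(travel, error))
```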

6. Conclusions

To reduce positioning errors in micromanipulation, modeling and compensation principles were proposed for the errors caused by nonlinear imaging distortion and by mechanical errors, namely the camera installation error and the mechanical displacement error of the micromanipulation platform. The influences and derivation of these errors were analyzed first. Then, a novel and comprehensive error compensation model was established based on the compensation principle for each error. The distortion error compensation coefficients were obtained by the Levenberg–Marquardt optimization algorithm combined with the derived nonlinear imaging model, and the mechanical error compensation coefficients were derived using the rigid-body translation technique and an image stitching algorithm. Finally, single shot and cumulative error tests were designed to validate the error compensation model. The experimental results show that the positioning accuracy of the micromanipulation system is significantly improved: the systematic error of a single displacement is kept within 0.25 μm, and the cumulative error grows by no more than 0.02 μm per 1000 μm of travel, which meets the basic positioning requirements for cells and microorganisms.

Author Contributions

Conceptualization, M.H.; software, M.H.; investigation, C.Y. and R.Z.; resources, Y.W.; writing—original draft preparation, M.H.; writing—review and editing, B.Y., Y.S. and C.D.; supervision, C.R. and Z.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (grant number 62273247) and the Natural Science Foundation of the Jiangsu Higher Education Institutions of China (grant number 20KJA460008).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sariola, V.; Jääskeläinen, M.; Zhou, Q. Hybrid Microassembly Combining Robotics and Water Droplet Self-Alignment. IEEE Trans. Robot. 2010, 26, 965–977.

2. Rodríguez, J.A.M. Microscope self-calibration based on micro laser line imaging and soft computing algorithms. Opt. Lasers Eng. 2018, 105, 75–85.

3. Gorpas, D.S.; Politopoulos, K.; Yova, D. Development of a computer vision binocular system for non-contact small animal model skin cancer tumour imaging. In Proceedings of the SPIE Diffuse Optical Imaging of Tissue, Munich, Germany, 12–17 June 2007; pp. 6629–6656.

4. Su, L.; Zhang, H.; Wei, H.; Zhang, Z.; Yu, Y.; Si, G.; Zhang, X. Macro-to-micro positioning and auto focusing for fully automated single cell microinjection. Microsyst. Technol. 2021, 27, 11–21.

5. Wang, Y.Z.; Geng, B.L.; Long, C. Contour extraction of a laser stripe located on a microscope image from a stereo light microscope. Microsc. Res. Tech. 2019, 82, 260–271.

6. Wu, H.; Zhang, X.M.; Gan, J.Q.; Li, H.; Ge, P. Displacement measurement system for inverters using computer micro-vision. Opt. Lasers Eng. 2016, 81, 113–118.

7. Sha, X.P.; Li, W.C.; Lv, X.Y.; Lv, J.T.; Li, Z.Q. Research on auto-focusing technology for micro vision system. Optik 2017, 142, 226–233.

8. Tsai, R. A versatile camera calibration technique for high-accuracy 3D machine vision metrology using off-the-shelf TV cameras and lenses. IEEE J. Robot. Autom. 1987, 3, 323–344.

9. Korpelainen, V.; Seppä, J.; Lassila, A. Design and characterization of MIKES metrological atomic force microscope. Precis. Eng. 2010, 34, 735–744.

10. Steger, C. A Comprehensive and Versatile Camera Model for Cameras with Tilt Lenses. Int. J. Comput. Vis. 2017, 123, 121–159.

11. Lee, K.H.; Kim, H.S.; Lee, S.J.; Choo, S.W.; Lee, S.M.; Nam, K.T. High precision hand-eye self-calibration for industrial robots. In Proceedings of the 2018 International Conference on Electronics, Information, and Communication (ICEIC), Honolulu, HI, USA, 24–27 January 2018.

12. Maraghechi, S.; Hoefnagels, J.P.; Peerlings, R.H.; Rokoš, O.; Geers, M.G. Correction of scanning electron microscope imaging artifacts in a novel digital image correlation framework. Exp. Mech. 2019, 59, 489–516.

13. Lapshin, R. Drift-insensitive distributed calibration of probe microscope scanner in nanometer range: Real mode. Appl. Surf. Sci. 2019, 470, 1122–1129.

14. Yothers, M.; Browder, A.; Bumm, A. Real-space post-processing correction of thermal drift and piezoelectric actuator nonlinearities in scanning tunneling microscope images. Rev. Sci. Instrum. 2017, 88, 013708.

15. Liu, X.; Li, Z.; Zhong, K.; Chao, Y.; Miraldo, P.; Shi, Y. Generic distortion model for metrology under optical microscopes. Opt. Lasers Eng. 2018, 103, 119–126.

16. Yoneyama, S.; Kitagawa, A.; Kitamura, K.; Kikuta, H. In-plane displacement measurement using digital image correlation with lens distortion correction. JSME Int. 2006, 49, 458–467.

17. Yoneyama, S.; Kikuta, H.; Kitagawa, A.; Kitamura, K. Lens distortion correction for digital image correlation by measuring rigid body displacement. Opt. Eng. 2006, 45, 023602.

18. Pan, B.; Yu, L.; Wu, D.; Tang, L. Systematic errors in two-dimensional digital image correlation due to lens distortion. Opt. Lasers Eng. 2013, 51, 140–147.

19. Tiwari, V.; Sutton, M.A.; McNeill, S.R. Assessment of high speed imaging systems for 2D and 3D deformation measurements: Methodology development and validation. Exp. Mech. 2007, 47, 561–579.

20. Koide, K.; Menegatti, E. General hand-eye calibration based on reprojection error minimization. IEEE Robot. Autom. Lett. 2019, 4, 1021–1028.

21. Malti, A. Hand–eye calibration with epipolar constraints: Application to endoscopy. Robot. Auton. Syst. 2013, 61, 161–169.

22. Hartley, R.; Kang, S.B. Parameter-free radial distortion correction with center of distortion estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1309–1321.

23. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

24. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).