Two Linear Unmixing Algorithms to Recognize Targets Using Supervised Classification and Orthogonal Rotation in Airborne Hyperspectral Images

Abstract

:1. Introduction

1.1. Data Representation and Extraction of Spectral Information

2. Problem Statement

2.1. Motivation and Research Approach

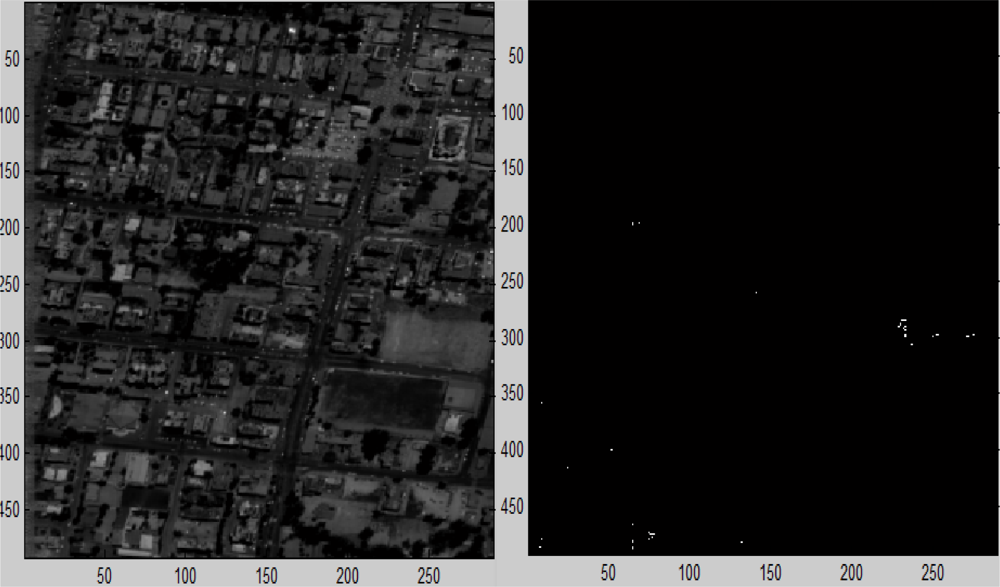

3. Related Work

- Linear approach: Under the linear mixing model, where the number of endmembers and their spectral signatures are known, hyperspectral unmixing is a linear problem, which can be addressed, for example, by the ML setup [8] and by the constrained least squares approach [9]. These methods do not supply sufficiently accurate estimates and do not reflect the physical behavior. Distinction between different material’s spectra is conditioned generally by the distinction in the behavior of the first and second derivatives and not by a trend.

- Independent component analysis (ICA) is an unsupervised source separation process that finds a linear decomposition of the observed data yielding statistically independent components [10,11]. It has been applied successfully to blind source separation, to feature extraction and to unsupervised recognition such as in [12], where the endmember signatures are treated as sources and the mixing matrix is composed by the abundance fractions. Numerous works including [13] show that ICA cannot be used to unmix hyperspectral data.

- Geometric approach: Assume a linear mixing scenario where each observed spectral vector is given by r = x+n=Mγa+n, γa = s, where r is an L-vector (L is the number of bands), M = [m1, m2, . . . mp] is the mixing matrix (mi denotes the ith endmember signature and p is the number of endmembers present in the sensed area), s ≜ γa (γ is a scale factor that models illumination variability due to a surface topography), a = [a1, a2, . . . ap]T is the abundance vector that contains the fractions of each endmember ((·)T denotes a transposed vector) and n is the system’s additive noise. Owing to physical constraints, abundance fractions are nonnegative and satisfy the so-called positivity constraint . Each pixel can be viewed as a vector in a L-dimensional Euclidean space, where each channel is assigned to one axis. Since the set {a ∈ p : , ak > 0 for all k} is a simplex, then the set Sx ≜ {x ∈ L : x = Ma, , ak > 0 for all k} is also a simplex whose vertices correspond to endmembers.Several approaches [14,15,16] exploited this geometric feature of hyperspectral mixtures. The minimum volume transform (MVT) algorithm [16] determines the simplex of a minimal volume that contains the data. The method presented in [17] is also of MVT type, but by introducing the notion of bundles, it takes into account the endmember variability that is usually present in hyperspectral mixtures.The MVT type approaches are complex from computational point of view. Usually, these algorithms first find the convex hull defined by the observed data and then fit a minimum volume simplex to it. Aiming at a lower computational complexity, some algorithms such as the pixel purity index (PPI) [15] and the N-FINDR [18] still find the maximum volume simplex that contains the data cloud. They assume the presence of at least one pure pixel of each endmember in the data. This is a strong assumption that may not be true in general. In any case, these algorithms find the set of most of the pure pixels in the data.

- Extending subspace approach: A fast unmixing algorithm, termed vertex component analysis (VCA), is described in [19]. The algorithm is unsupervised and utilizes two facts: 1. The endmembers are the vertices of a simplex; 2. The affine transformation of a simplex is also a simplex. It works with projected and unprojected data. As PPI and N-FINDR algorithms, VCA also assumes the presence of pure pixels in the data. The algorithm iteratively projects data onto a direction orthogonal to the subspace spanned by the endmembers already detected. The new endmember’s signature corresponds to the extreme projection. The algorithm iterates until all the endmembers are exhausted. VCA performs much better than PPI and better than or comparable to N-FINDR. Yet, its computational complexity is between one and two orders of magnitude lower than N-FINDR.If the image is of size approximately 300 × 2, 000 pixels, then this method, which builds linear span in each step, is too computationally expensive. In addition, it relies on “pure” spectra which are not available all the time.

3.1. Linear Classification for Threshold Optimization

4. Mathematical Preliminaries

4.1. Definitions

4.1.1. Sparse-Independency

- If W is a dependent basic subspace of y1 and y2 then dim(W) ≤ r;

- There exists a dependent basic subspace W of y1 and y2 where dim(W) = r.

- For example, sparse-independent vectors have a basis dependent rank zero and they are ɛ-sparse-ergodic independent.

- Denote by PrW (y) the orthogonal projection of the vector y ∈ n onto the subspace W ⊂ n.

- Let q be a positive value. A vector x is called q − ɛ − sparse if the cardinality of its support {i : |xi| > ɛ} is less or equal to q.

4.1.2. Classification

4.2. The Main Propositions

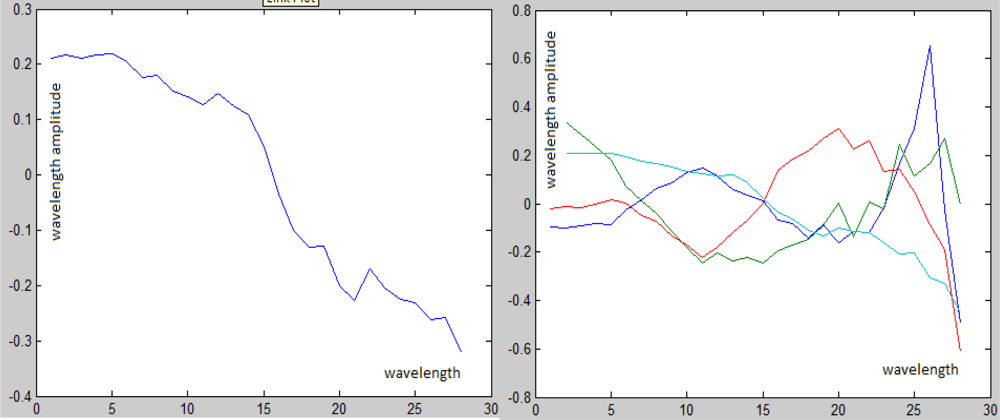

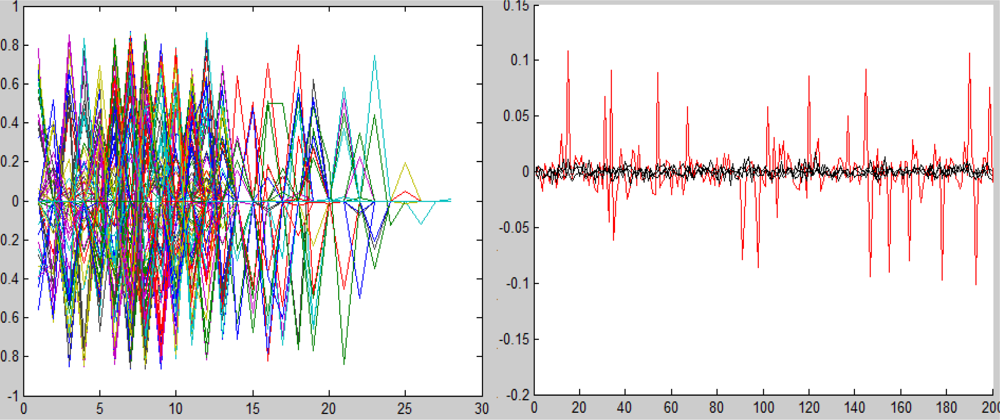

5. Random Orthogonal Transformation Algorithm for Unmixing (ROTU)

5.1. The Unmixing Algorithm

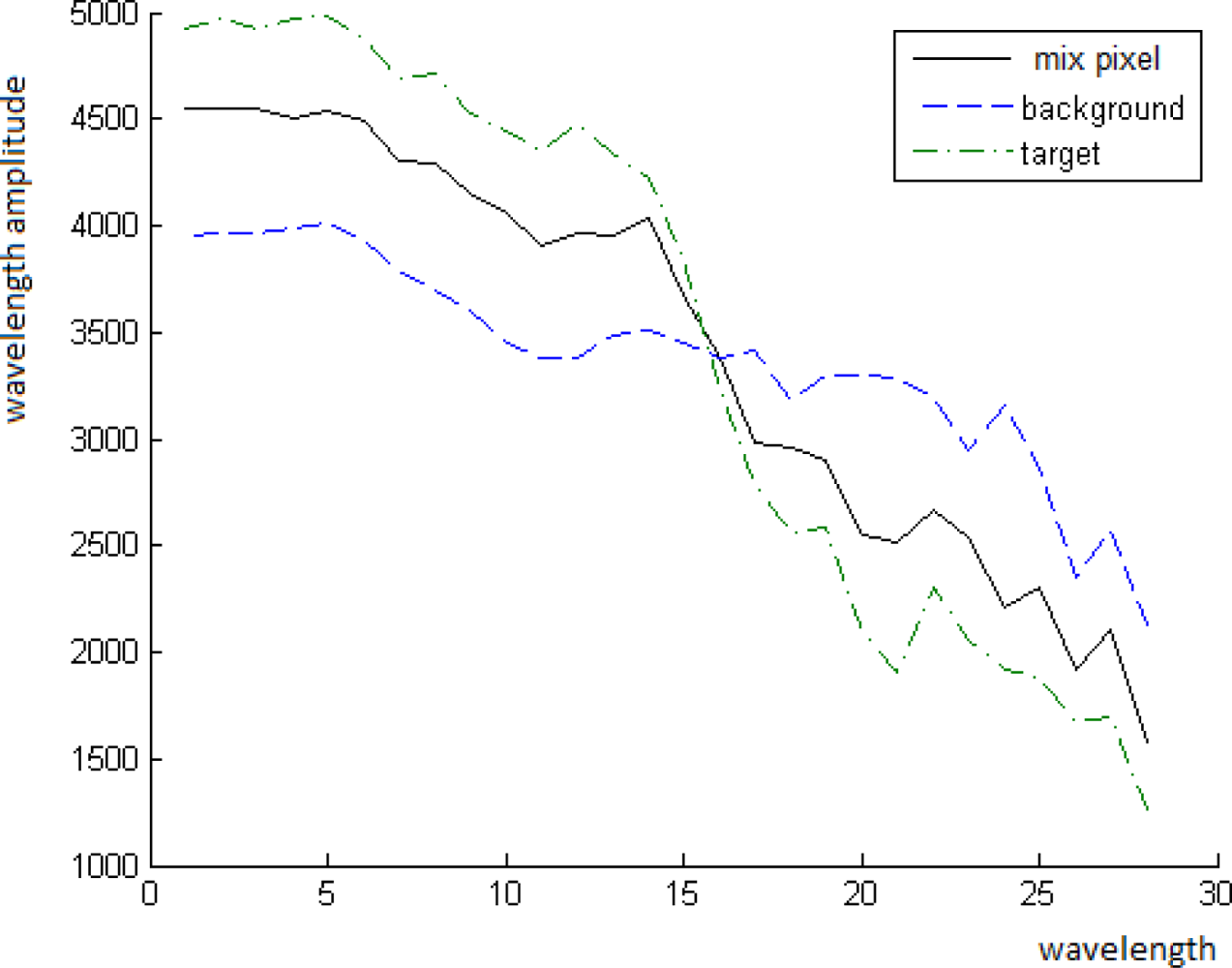

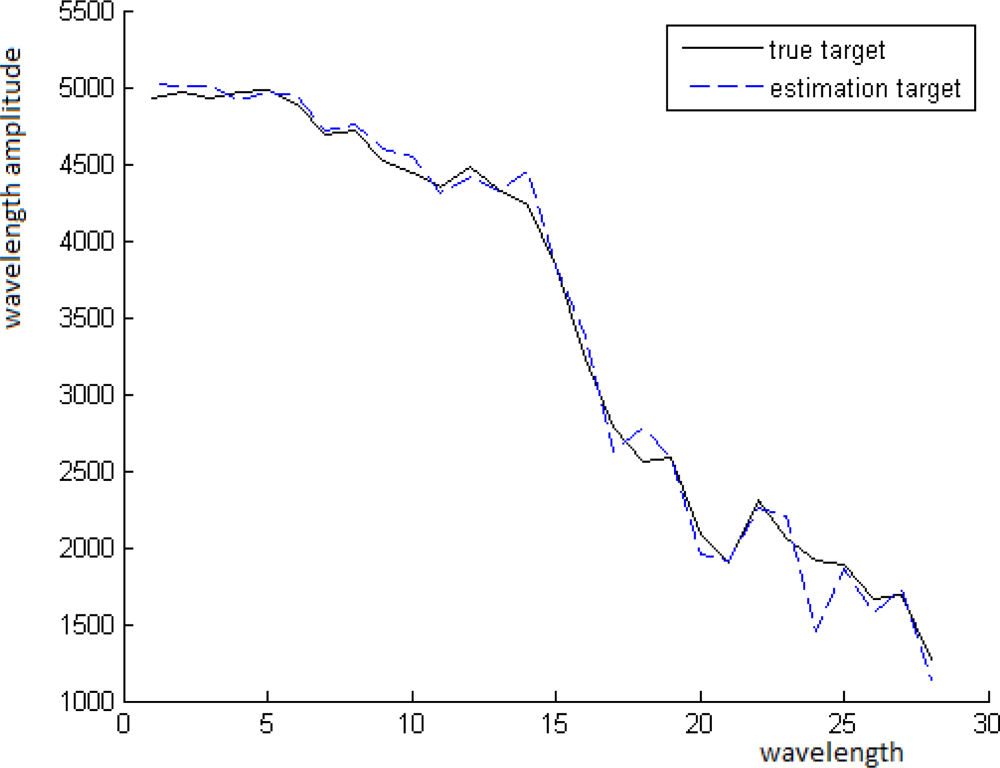

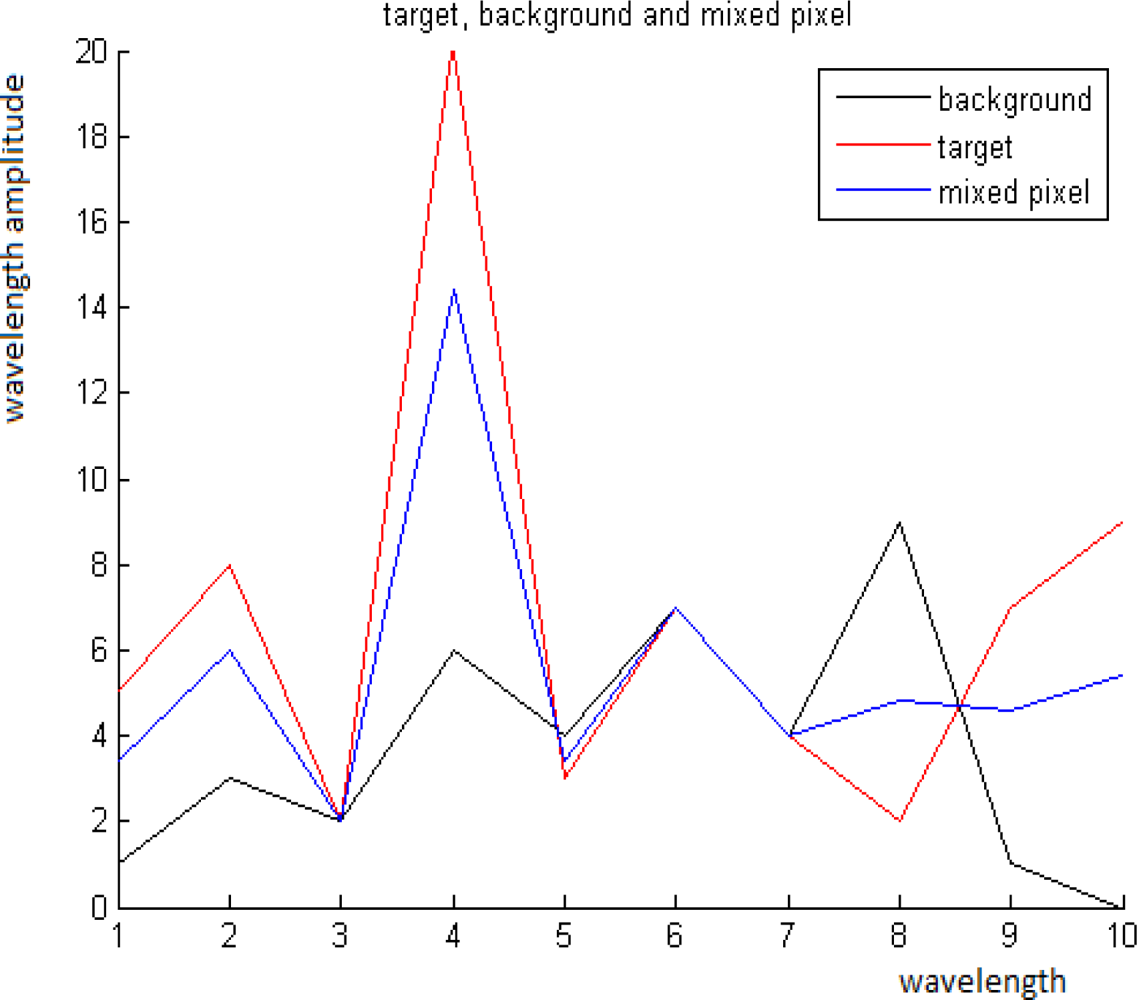

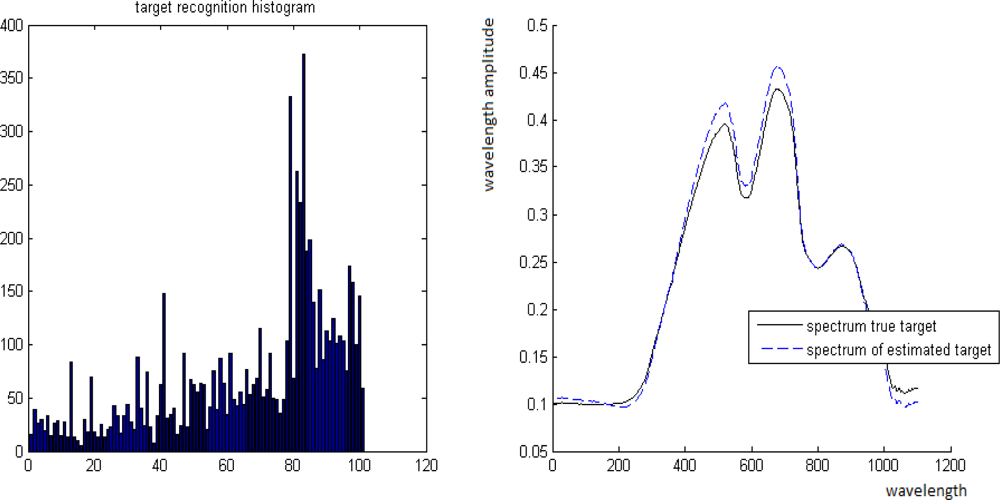

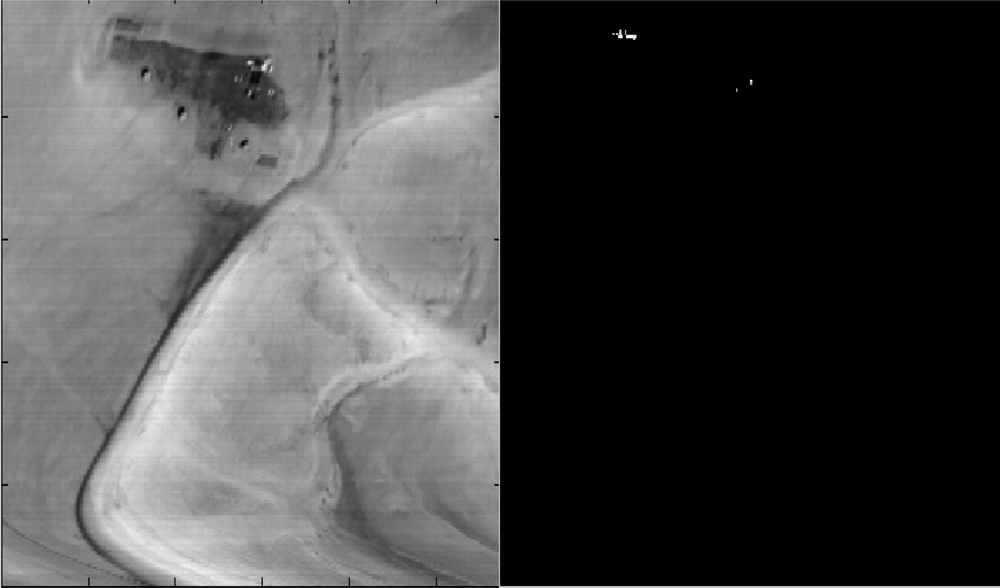

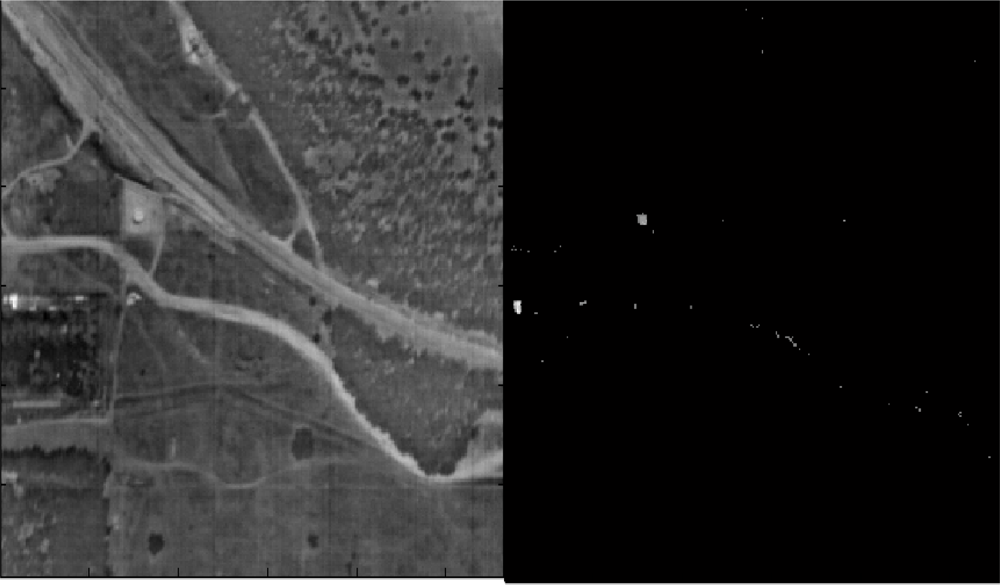

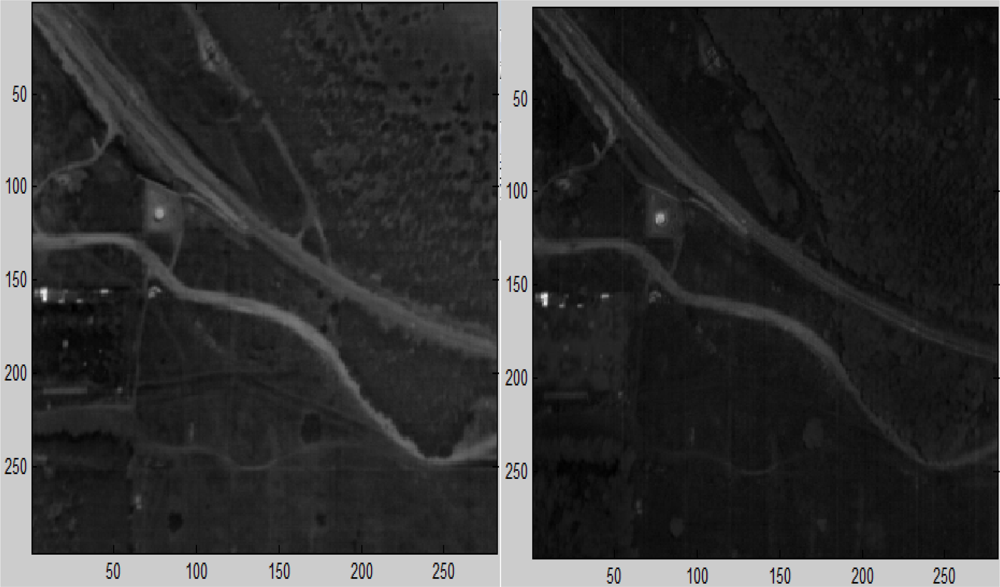

5.2. Experimental Results

6. Classification for an Unmixing (CLUN) Algorithm

- Learning phase: Finding typical background features by the application of PCA to the spectra of randomly selected multi-pixels from the scene where a multi-pixel is one pixel with all its wavelengths;

- Separation of the target’s spectra from the background via a linear operator, which is obtained from the “learning” phase;

- Construct a set of sensors which are highly sensitive to targets and insensitive to background;

- Embedding the spectra from the scene into a space generated by “good” sensors vectors and detect the points that contain the target that has a big norm value.

6.1. Sensor Building Algorithm (SBA)

- Let T be the target’s spectrum: Construct the functionsuch that ξ maximizes F (ξ) and W is a weight coefficient.

- Given a set of weak classifiers Γ: Perform s iterations to collect the s functions from Γ each of which provides a local supremum for the function F. Each function ξ from Γ is named a “weak classifier”. If s is big (for example, s = 1000), then our algorithm becomes more accurate while the computational time becomes longer.

- Construct the “strong classifier”: It is a linear operator Ξ = [ξ1, ξ2, ..., ξs], where each ξi was obtained in step 2. This operator is a “projection separator” (see Proposition 4.1).

- Construct T1 = Ξ (T) (see Definition 4.6), where T is the spectrum of the target.

- Choose an integer r > 1000 and a value for ∊ > 0.

- First iteration: Select a subset Γ̂ of r vectors from Γ via random choices.

- Assume s−1 iterations took place. We describe now the sth step. We select a subset Γ̂ of r vectors from Γ via a random choice such that for each ξi, i = 1, .., s − 1, dist(Γ̂, ξi) > ∊ holds.

- Let ξs = argmax{F (ξ)|ξ ∈ Γ̂}.

- corr(Ξ(P), T1) is close to 1.

- t = ‖Ξ(P)‖/‖T1‖ is the portion of the target in the current pixel.

6.2. Description of the CLUN Algorithm

- Apply the sensor building algorithm (SBA - Algorithm 1).

- Construct an operator that is the projection separator Ξ(P) from Definition 4.6.

- Apply the operator Ξ(P) to each pixel’s spectrum.

- The set {Y1, . . . , Yω} is separable according to Definition 3.5;

- The set {Y1, . . . , Yω} is inseparable according to Definition 3.5.

6.3. Implementations of the CLUN Algorithm

- d − support local − characteristic is the set of weak classifiers.

- d − support global − characteristic is the set of weak classifiers.

- Assume that Γ = {ξi,j} is the set of weak classifiers where 〈ξi,j, X〉 = Xi − Xj. This set of weak classifiers works well if the vectors from Φ and Ω have a low entropy. It means that many coordinates have equal values and random differences between some coordinates provide sparse and independent behavior.

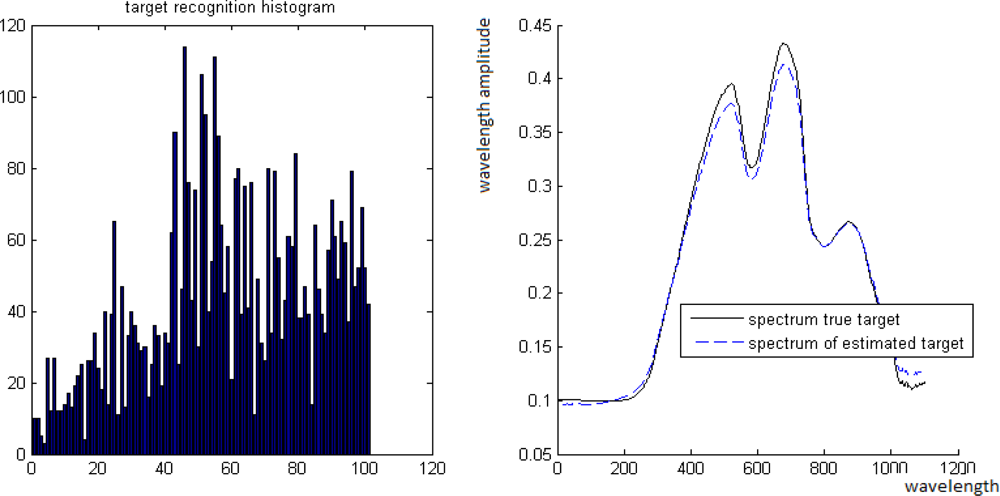

6.4. Experimental Results for Sub- and Above Pixel Target Recognition

7. Conclusions

References

- Madar, E. Literature Survey on Anomaly Detection in Hyperspectral Imaging; Research Report; Technion: Haifa, Israel, 2009. [Google Scholar]

- Averbuch, A.; Zheludev, M.; Zheludev, V. Unmix and target recognition in hyperspectral images, 2011; submitted.

- Spectral Imaging Ltd. Specim Camera; 2006. Available online: http://www.specim.fi/ (accessed on 1 February 2012).

- Bioucas-Dias, J.; Plaza, A. An Overview on Hyperspectral Unmixing: Geometrical, Statistical, and Sparse Regression Based Approaches. Proceeding of 2011 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Vancouver, BC, Canada, 24–29 July 2011; pp. 1135–1138.

- Bioucas-Dias, J.; Plaza, A. Hyperspectral unmixing: Geometrical, statistical and sparse regression-based approaches. Proc. SPIE 2010, 7830, 78300A-78300A-15. [Google Scholar]

- Manolakis, D.; Shaw, G. Detection algorithms for hyperspectral imaging applications. IEEE Signal Proc. Mag 2002, 19, 29–43. [Google Scholar]

- Manolakis, D.; Marden, D.; Shaw, G. Hyperspectral image processing for automatic target detection applications. Lincoln Lab J 2003, 14, 79–114. [Google Scholar]

- Settle, J.J. On the relationship between spectral unmixing and subspace projection. IEEE Trans. Geosci. Remote Sens 1996, 34, 1045–1046. [Google Scholar]

- Chang, C.I. Hyperspectral Imaging: Techniques for Spectral Detection and Classification; Kluwer Academic: New York, NY, USA, 2003. [Google Scholar]

- Common, P. Independent component analysis: A new concept. Signal Process 1994, 36, 287–314. [Google Scholar]

- Hyvarinen, A.; Karhunen, J.; Oja, E. Independent Component Analysis; John Wiley & Sons Inc: New York, NY, USA, 2001. [Google Scholar]

- Bayliss, J.D.; Gualtieri, J.A.; Cromp, R.F. Analysing hyperspectral data with independent component analysis. Proc. SPIE 1997, 3240, 133–143. [Google Scholar]

- Nascimento, J.M.P.; Bioucas-Dias, J.M.P. Does independent component analysis play a role in unmixing hyperspectral data? IEEE Trans. Geosci. Remote Sens 2005, 43(1), 175–187. [Google Scholar]

- Ifarraguerri, A.; Chang, C.I. Multispectral and hyper-spectral image analysis with convex cones. IEEE Trans. Geosci. Remote Sens 1999, 37, 756–770. [Google Scholar]

- Boardman, J. Automating Spectral Unmixing of AVIRIS Data Using Convex Geometry Concepts. Proceedings of the Fourth Annunl Airborne Gcoscicnce Workshop, Washington, DC, 25–29 October 1993; pp. 11–14.

- Craig, M.D. Minimum-volume transforms for remotely sensed data. IEEE Trans. Geosci. Remote Sens 1994, 32, 542–552. [Google Scholar]

- Bateson, C.; Asner, G.; Wessman, C. Endmember bundles: A new approach to incorporating endmember variability into spectral mixture analysis. IEEE Trans. Geosci. Remote Sens 2000, 38, 1083–1094. [Google Scholar]

- Winter, M.E. N-FINDR: An algorithm for fast autonomous spectral end-member determination in hyperspectral data. Proc. SPIE 1999, 3753, 266–275. [Google Scholar]

- Nascimento, M.P.; Bioucas-Dias, M. Vertex component analysis: A fast algorithm to unmix hyperspectral data. IEEE Trans. Geosci. Remote Sens 2005, 4, 898–910. [Google Scholar]

- Dobigeon, N.; Moussaoui, S.; Coulon, M.; Tourneret, J.-Y.; Hero, A.O. Joint Bayesian endmember extraction and linear unmixing for hyperspectral imagery. IEEE Trans. Signal Process 2009, 57, 4355–4368. [Google Scholar]

- Moussaoui, S.; Carteretb, C.; Briea, D.; Mohammad-Djafaric, A. Bayesian analysis of spectral mixture data using markov chain monte carlo methods. Chemometr. Intell. Lab 2006, 81, 137–148. [Google Scholar]

- Arngren, M.; Schmidt, M.N.; Larsen, J. Bayesian Nonnegative Matrix Factorization with Volume Prior for Unmixing of Hyperspectral Images. Proceedings of IEEE Workshop on Machine Learning for Signal Processing (MLSP), Grenoble, France, 2–4 September 2009; pp. 1–6.

- Cristianini, N.; Shawe-Taylor, J. Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000. [Google Scholar]

- Burges, J.C. A tutorial on Support Vector Machines for pattern recognition. Data Min. Knowl. Disc 1998, 2, 121–167. [Google Scholar]

- Duda, R.O.; Hart, P.E.; Stork, D.G. Pattern Classification; John Wiley & Sons Inc: New York, NY, USA, 2001. [Google Scholar]

Share and Cite

Averbuch, A.; Zheludev, M. Two Linear Unmixing Algorithms to Recognize Targets Using Supervised Classification and Orthogonal Rotation in Airborne Hyperspectral Images. Remote Sens. 2012, 4, 532-560. https://doi.org/10.3390/rs4020532

Averbuch A, Zheludev M. Two Linear Unmixing Algorithms to Recognize Targets Using Supervised Classification and Orthogonal Rotation in Airborne Hyperspectral Images. Remote Sensing. 2012; 4(2):532-560. https://doi.org/10.3390/rs4020532

Chicago/Turabian StyleAverbuch, Amir, and Michael Zheludev. 2012. "Two Linear Unmixing Algorithms to Recognize Targets Using Supervised Classification and Orthogonal Rotation in Airborne Hyperspectral Images" Remote Sensing 4, no. 2: 532-560. https://doi.org/10.3390/rs4020532

APA StyleAverbuch, A., & Zheludev, M. (2012). Two Linear Unmixing Algorithms to Recognize Targets Using Supervised Classification and Orthogonal Rotation in Airborne Hyperspectral Images. Remote Sensing, 4(2), 532-560. https://doi.org/10.3390/rs4020532