Highlights

What are the main findings?

- The MPIFNet integrates multiple perspectives to achieve multi-scale semantic feature extraction of complex land cover objects.

- The GLMBSA integrates global and local information, while DBHWT extracts multi-level and frequency-domain features, jointly enhancing the model’s feature representation.

What are the implications of the main findings?

- The MPIFNet provides an effective solution for semantic segmentation of complex remote sensing images, improving the recognition of objects at different scales.

- The modular design demonstrates the potential of feature enhancement, providing a basis for lightweight, efficient remote sensing segmentation models.

Abstract

Remote sensing acquires Earth surface information without physical contact through sensors operating at diverse spatial, spectral, and temporal resolutions. In high-resolution remote sensing imagery, objects often exhibit large scale variation, complex spatial distributions, and strong inter-class similarity, posing persistent challenges for accurate semantic segmentation. Existing methods still struggle to simultaneously preserve fine boundary details and model long-range spatial dependencies, and they lack explicit mechanisms to decouple low-frequency semantic context from high-frequency structural information. To address these limitations, we propose the Multi-Perspective Information Fusion Network (MPIFNet) for remote sensing semantic segmentation, motivated by the need to integrate global context, local structures, and multi-frequency information into a unified framework. MPIFNet employs a Global and Local Mamba Block Self-Attention (GLMBSA) module to capture long-range dependencies while preserving local details, and a Double-Branch Haar Wavelet Transform (DBHWT) module to separate and enhance low- and high-frequency features. By fusing spatial, hierarchical, and frequency representations, MPIFNet learns more discriminative and robust features. Ablation and comparative experiments on the Vaihingen, Potsdam, and LoveDA datasets demonstrate the strong generalization of our model, which achieves mIoU scores of 86.03%, 88.36%, and 55.76%, respectively.

1. Introduction

Remote sensing semantic segmentation plays a crucial role in a wide range of applications, including urban planning, environmental monitoring, disaster assessment, and precision agriculture [1,2,3,4]. Unlike natural images, remote sensing imagery exhibits several distinctive challenges, such as large variations in object scales, complex and irregular object boundaries, and highly heterogeneous land-cover distributions. These characteristics make it difficult to achieve accurate and robust pixel-level classification, especially in densely built-up and geographically diverse scenes.

Early remote sensing image segmentation approaches mainly relied on handcrafted features and traditional image processing techniques, such as thresholding [5] and region growing [6]. Although these methods exploited low-level cues including color, texture, and shape, they were highly sensitive to parameter settings and scene variability, and often failed to generalize to complex real-world scenarios.

With the advent of deep learning, convolutional neural networks (CNNs) significantly advanced remote sensing semantic segmentation by enabling hierarchical feature learning. Fully Convolutional Networks (FCNs) [7] pioneered end-to-end dense prediction, while U-Net [8] introduced encoder–decoder structures to recover spatial details through skip connections. Subsequent studies further enhanced performance by incorporating multiscale feature representations and pyramid structures [9,10,11]. However, due to the inherently local receptive fields of convolutions, CNN-based models often struggle to capture long-range spatial dependencies, which are critical for modeling large-scale objects and contextual relationships in remote sensing scenes. Moreover, as ground object information becomes increasingly complex and dispersed, CNNs alone are insufficient to model global context. To mitigate this limitation, attention mechanisms have been introduced [12,13,14], enabling networks to selectively focus on relevant regions and better capture long-range dependencies. Building on this idea, Transformer [15] architectures have recently emerged as powerful alternatives by leveraging self-attention mechanisms to establish global dependencies. Vision Transformer (ViT) [16] and its derivatives [17,18,19] have demonstrated strong performance in semantic segmentation by modeling long-range contextual interactions. Nevertheless, excessive reliance on global self-attention may lead to the loss of fine-grained local details and blurred object boundaries, which are particularly detrimental for remote sensing tasks that require precise delineation of complex structures such as building edges and road networks.

To balance global context modeling and local detail preservation, hybrid CNN–Transformer frameworks have been proposed. Representative methods such as FTransUNet [20] and A TransUNet [21] integrate convolutional encoders with Transformer modules to combine local texture modeling and global context reasoning. While these hybrid approaches improve performance to some extent, they still face challenges in effectively modeling multiscale structures and preserving fine boundary details under highly heterogeneous remote sensing scenarios.

To address these issues, advanced spatial sequence modeling techniques have recently gained momentum. State Space Models (SSMs) [22] and Mamba-based frameworks offer efficient long-range dependency modeling with linear complexity. Existing Mamba-based methods, such as CPSSNet [23], employ dual-branch SSM structures to separately capture spatial and channel dependencies but suffer from limited inter-branch semantic fusion. PPMamba [24], built upon SS2D, further improves spatial context modeling through directional state-space scanning; however, its modeling remains predominantly spatial and lacks mechanisms to incorporate fine texture cues or frequency-aware information. In parallel, frequency-domain methods such as Fourier operations [25] and wavelet-based approaches including SFFNet [26] decompose features into low- and high-frequency components to strengthen structural details and boundary sharpness. Nevertheless, they focus mainly on frequency representation and neglect complementary long-range spatial dependencies.

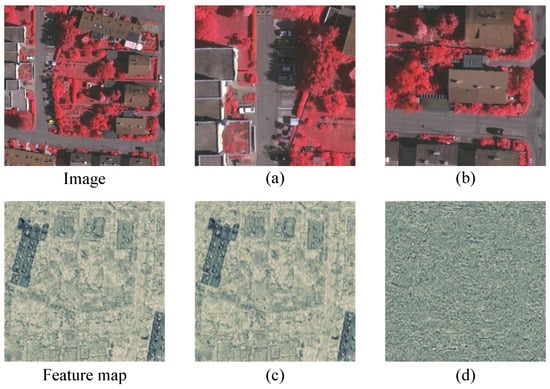

Based on these insights, we design the Multi-Perspective Information Fusion Network (MPIFNet) to explicitly model complementary feature representations from multiple viewpoints. As illustrated in Figure 1, remote sensing images exhibit distinct characteristics when analyzed at different levels. From a spatial-scale perspective, large-scale structures provide stable semantic cues, while fine-scale details preserve object boundaries and local texture patterns. From a frequency-domain perspective, low-frequency components encode global layout and category-level information, whereas high-frequency components capture edges and structural details. These observations motivate our multi-perspective modeling framework and provide the foundation for the design of MPIFNet. The primary contributions of this work are summarized as follows:

Figure 1.

Characteristic information under different perspectives. (a,b) Feature information under different scale perspectives. (c,d) Feature information under the low-frequency and high-frequency perspectives.

- We introduce MPIFNet, a network designed to integrate global and local perspectives, multi-level features, and frequency-domain information, enabling comprehensive capture of multiscale semantic cues and effective extraction of complex features.

- We propose a Global and Local Mamba Block Self-Attention mechanism that enhances multiscale feature representation by simultaneously capturing global context from large-scale regions and local details from fine-grained areas. This dual-perspective design enables the model to effectively integrate semantic cues across different object scales, resulting in more accurate and comprehensive segmentation performance.

- Block self-attention, as a part of the integrated GLMBSA mechanism, is capable of capturing long-range dependencies from global, local, and combined views. By extending attention beyond local blocks, it preserves fine details while enhancing global context, effectively balancing local precision and global understanding.

- To mitigate feature loss and enrich semantics, we design the DBHWT module, which integrates multi-level features and employs the Haar Wavelet Transform to extract both high- and low-frequency components, enhancing semantic discrimination across scales.

2. Related Work

2.1. CNN-Based Semantic Segmentation

Fully Convolutional Networks (FCNs) [7] pioneered end-to-end semantic segmentation using convolutional and deconvolutional layers, but their simplicity often leads to the loss of spatial detail. U-Net [8] addressed this with symmetric encoder–decoder structures and skip connections, preserving fine-grained features, yet it remains limited in handling large scale variations. DeepLab [9,10,11] introduced Atrous Spatial Pyramid Pooling (ASPP) to capture multiscale context via dilated convolutions. Despite these improvements, CNN-based approaches still struggle under complex backgrounds and with small or irregular objects, highlighting the need for richer semantic modeling and better global context reasoning.

2.2. Transformer-Based Semantic Segmentation

Attention mechanisms were proposed to mitigate CNNs’ limitations in capturing global dependencies. SENet [27] and DANet [28] enhanced feature representation via channel and spatial–channel attention, while ECANet [29] improved efficiency through local channel interactions. Transformers [15], leveraging self-attention, overcome spatial locality by modeling long-range interactions, with variants such as Longformer [30] and BigBird [31] improving computational efficiency. However, these models often require high computation, complex architectures, and careful hyperparameter tuning, which can limit scalability. Moreover, excessive reliance on global attention may reduce local structural accuracy, a critical factor for remote sensing tasks with fine-grained boundaries.

2.3. Mamba-Based Semantic Segmentation

Mamba [32] introduces a selective state-space mechanism for long-sequence modeling with linear complexity, offering computationally efficient alternatives to standard self-attention. Extensions such as VMamba [33] and VSSM [34] enable bidirectional and bidimensional context modeling, enhancing spatial perception. While Mamba-based methods improve efficiency and global context capture, existing approaches often lack effective fusion with local or frequency-domain cues, limiting fine-scale boundary preservation and multiscale semantic integration in remote sensing imagery.

2.4. Frequency Domain-Based Semantic Segmentation

Frequency-domain methods complement spatial modeling by capturing information at multiple scales. Wavelet Transform (WT) [35] supports multiresolution analysis, preserving both global and fine-grained details. Wavelet-CNN [36] and later works [37,38] fuse spectral cues to enhance object representation. SFFNet [26] demonstrates that Haar wavelet decomposition can improve boundary sharpness and semantic discrimination. Despite these benefits, most frequency-based approaches overlook long-range spatial dependencies, which limits their performance on complex remote sensing scenes.

2.5. Research Gap and Motivation for This Work

Although CNN-, Transformer-, Mamba-, and frequency-domain-based methods have advanced remote sensing segmentation, existing approaches typically address local structure, global context, or multiscale and frequency cues in isolation. Very few methods integrate these complementary perspectives simultaneously, although doing so is essential for accurately segmenting heterogeneous landscapes with complex object boundaries. Motivated by this gap, we propose the Multi-Perspective Information Fusion Network (MPIFNet), which unifies spatial–frequency modeling with Mamba-based global–local feature interaction. Unlike prior methods, MPIFNet fuses local and global dependencies while preserving frequency-domain cues, enabling more accurate and robust segmentation in multiscale and heterogeneous remote sensing scenes.

3. Methodology

3.1. Overall Architecture

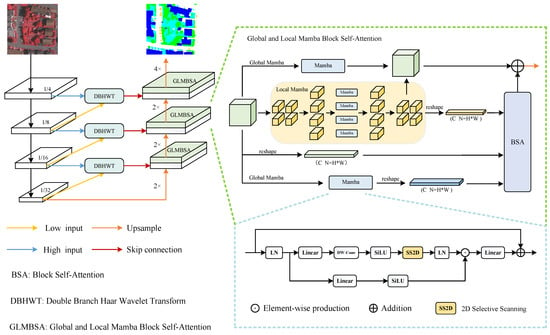

The overall framework is illustrated in Figure 2. We adopt the classic U-Net architecture [8], with ConvNeXt [39] as the encoder backbone to extract spatial features, where the number of channels increases progressively from 96 to 768. The model is trained with a hybrid loss function that combines cross-entropy and Dice losses. To enrich semantic information, we introduce a Double-Branch Haar Wavelet Transform (DBHWT) in the skip connections, enabling the extraction of multi-level and multi-frequency features. In the decoder, we design a plug-and-play Global and Local Mamba Block Self-Attention (GLMBSA) module, inserted after each upsampling layer. This module incorporates a State Space Model (SSM) based Mamba block [32] and jointly applies Global Mamba and Local Mamba mechanisms to capture both global contextual dependencies and fine-grained local details across scales. Finally, Block Self-Attention is applied to enhance both global and local semantic interactions, yielding more complete and precise feature representations for semantic segmentation.

Figure 2.

The overall structure of MPIFNet. A U-Net structure is used, with a Double-Branch Haar Wavelet Transform applied in the skip connections. Global and Local Mamba Block Self-Attention is introduced after each decoder stage.

3.2. Global and Local Mamba Block Self-Attention

We adopt the State Space Model (SSM), which is the core of the Mamba approach; the detailed computations can be found in the original paper [32].

To extend the one-dimensional SSM to two-dimensional feature maps, we adopt the 2D Selective Scanning (SS2D) formulation. Specifically, the input feature map is scanned along both the horizontal and vertical directions, and a one-dimensional SSM is applied separately to each scan. Formally, the 1D SSM is defined as:

$$h'(t) = \mathbf{A}h(t) + \mathbf{B}x(t), \qquad y(t) = \mathbf{C}h(t),$$

where $x(t)$ is the input sequence, $h(t)$ the hidden state, $y(t)$ the output, and $\mathbf{A}$, $\mathbf{B}$, and $\mathbf{C}$ are the state-transition, input, and output matrices. Mamba further introduces an input-dependent gating mechanism:

where and are projections of , and is the sigmoid function.

For the two-dimensional case, the SS2D module applies SSMs along the horizontal (W) and vertical (H) axes independently:

where and denote selective scanning along the horizontal and vertical dimensions, respectively. The final output is obtained by fusing the two directions:

This design enables SS2D to capture long-range dependencies in both spatial dimensions while preserving computational efficiency, which is particularly beneficial for dense prediction tasks such as semantic segmentation.
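The two-direction scanning above can be illustrated with a small NumPy sketch. It assumes a simplified, time-invariant diagonal SSM with fixed per-channel parameters `a`, `b`, `c` and step size `delta`, discretized by zero-order hold; actual Mamba blocks make these parameters input-dependent (selective) and add learned projections and gating. Summing the two directional outputs is also an assumption, since the fusion operator is not specified here, and the function names are illustrative only.

```python
import numpy as np

def ssm_scan_1d(x, a, b, c, delta):
    """Diagonal linear SSM scanned along the first axis of x, shape (L, C).

    Continuous form per channel: h'(t) = a*h(t) + b*x(t), y(t) = c*h(t).
    Zero-order-hold discretization: a_bar = exp(delta*a), b_bar = (a_bar - 1)/a * b.
    """
    a_bar = np.exp(delta * a)          # (C,) discrete state transition
    b_bar = (a_bar - 1.0) / a * b      # (C,) discrete input gain
    h = np.zeros(x.shape[1])
    y = np.empty(x.shape)
    for t in range(x.shape[0]):        # sequential (causal) scan
        h = a_bar * h + b_bar * x[t]
        y[t] = c * h
    return y

def ss2d_two_direction(feat, a, b, c, delta):
    """Scan a (H, W, C) feature map along W (per row) and along H (per column), then sum."""
    H, W, _ = feat.shape
    y_w = np.stack([ssm_scan_1d(feat[i], a, b, c, delta) for i in range(H)], axis=0)
    y_h = np.stack([ssm_scan_1d(feat[:, j], a, b, c, delta) for j in range(W)], axis=1)
    return y_w + y_h                   # hypothetical fusion by summation
```

Each output position depends on every earlier position along its row and column, which is how a scan with linear cost can still propagate context across the whole map.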

However, although the scanning mechanism of the adopted Mamba block captures global information across the entire feature map, it may overlook local details to some extent. We therefore propose Global and Local Mamba Block Self-Attention, as shown in Figure 2. To compensate for missing local detail, the input features are first processed globally to extract contextual information. The feature map is then partitioned along the height (H) and width (W) dimensions into four spatial subregions: top-left, top-right, bottom-left, and bottom-right. The computation is formally expressed as follows:

Each subregion is then independently processed by two layers of Mamba blocks to extract localized contextual representations:

where, , , and represent local scans in different positions.

Finally, the enhanced local features are hierarchically concatenated to reconstruct the full feature map. First, features are concatenated along the height dimension:

Then, the two halves are concatenated along the width dimension to form the final Local Mamba feature map:

This hierarchical procedure effectively integrates local features from different quadrants while preserving fine-grained details and global context.
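The quadrant partitioning and hierarchical reassembly of Local Mamba can be sketched as follows. This is a minimal NumPy illustration under stated assumptions: features use a `(C, H, W)` layout, H and W are even, and `block_fn` is a hypothetical stand-in for the two stacked Mamba blocks applied to each subregion.

```python
import numpy as np

def local_mamba(x, block_fn):
    """Split (C, H, W) features into four quadrants, process each, and reassemble."""
    _, H, W = x.shape
    h2, w2 = H // 2, W // 2
    tl, tr = x[:, :h2, :w2], x[:, :h2, w2:]   # top-left, top-right
    bl, br = x[:, h2:, :w2], x[:, h2:, w2:]   # bottom-left, bottom-right
    # Each subregion is processed independently (stand-in for two Mamba blocks)
    tl, tr, bl, br = [block_fn(q) for q in (tl, tr, bl, br)]
    # First concatenate along the height dimension ...
    left = np.concatenate([tl, bl], axis=1)
    right = np.concatenate([tr, br], axis=1)
    # ... then join the two halves along the width dimension
    return np.concatenate([left, right], axis=2)
```

With an identity `block_fn`, the output equals the input, which confirms that the height-then-width concatenation restores the original spatial layout.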

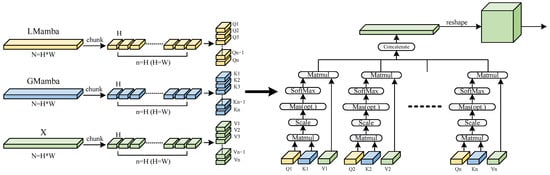

After that, to enable effective fusion of Global Mamba and Local Mamba features, a Block Self-Attention (BSA) mechanism is incorporated into the GLMBSA framework, as illustrated in Figure 3. The corresponding pseudo-code is provided in Algorithm 1. Here, Local Mamba focuses on capturing fine-grained spatial details within confined windows, preserving boundaries and small-scale structures, while Global Mamba models long-range dependencies across the entire feature map to maintain contextual consistency for large-scale objects. BSA facilitates detailed interaction between local and global features: the feature representations from the Mamba modules replace the conventional Query (Q) and Key (K), while the original input X is used as the Value (V). In this way, the locally derived Q and the globally contextualized K jointly guide the attention process, whereas the unaltered values (V) retain the original spatial details. This complementary interaction allows the network both to capture high-level contextual semantics and to refine fine-grained textures, achieving more effective feature fusion than simple concatenation or direct mixing.

Algorithm 1. Block Self-Attention in GLMBSA.

Figure 3.

Illustration of Block Self-Attention. The module first divides three components—Global Mamba, Local Mamba, and original features—into blocks. After performing self-attention computations, it aggregates all processed features.

To preserve local details while aggregating long-range context, a block-wise partitioning strategy is introduced. Specifically, the input query tensor , where , is divided into n non-overlapping spatial blocks , each of length H. The same partitioning is applied to K and V, yielding and . Attention is computed independently within each block, allowing the network to focus on both local correlations and contextual dependencies.

By integrating block-wise attention with feature fusion, the BSA module balances the contribution of local and global information. This design preserves fine-grained spatial details while aggregating long-range dependencies, thereby enhancing semantic consistency, boundary precision, and overall segmentation accuracy. The detailed computation process is illustrated as follows:

where the Chunk operation splits the tensor along the N dimension into n pieces, each of length H.

Each block is then processed with self-attention, and the feature information after self-attention encompasses the contextual information within the corresponding region. The process is as follows:

Finally, these processed blocks are concatenated to form a complete feature map with richer information. The computation is as follows:

The above approach extends computation from block-level processing to whole-area processing, thereby enabling interaction between global and local contextual information. The entire GLMBSA process for the input feature can be expressed as:

where is the global feature map, is the local feature map, and is the feature map produced by their integration.
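The block-wise attention in BSA can be sketched in NumPy as below, with Q taken from Local Mamba, K from Global Mamba, and V from the original input, as described above. Assumptions: features are already flattened to `(N, C)` token sequences, Q and K are used directly without extra learned projections, and scores use the standard scaled dot product with a `1/sqrt(C)` factor. Which spatial region each length-`block_len` chunk corresponds to depends on the flattening order, which is not specified here.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def block_self_attention(local_feat, global_feat, x, block_len):
    """Q = Local Mamba features, K = Global Mamba features, V = original input; all (N, C)."""
    N, C = x.shape
    assert N % block_len == 0, "N must split into equal-length blocks"
    n = N // block_len
    # Chunk Q, K, V along the token dimension into n non-overlapping blocks
    q_blocks = np.split(local_feat, n, axis=0)
    k_blocks = np.split(global_feat, n, axis=0)
    v_blocks = np.split(x, n, axis=0)
    out = []
    for q, k, v in zip(q_blocks, k_blocks, v_blocks):
        attn = softmax(q @ k.T / np.sqrt(C), axis=-1)   # (block_len, block_len)
        out.append(attn @ v)                            # attention within this block only
    # Concatenate processed blocks back into a full sequence
    return np.concatenate(out, axis=0)
```

Setting `block_len = N` recovers full single-block attention, while smaller blocks restrict each query to keys within its own chunk.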

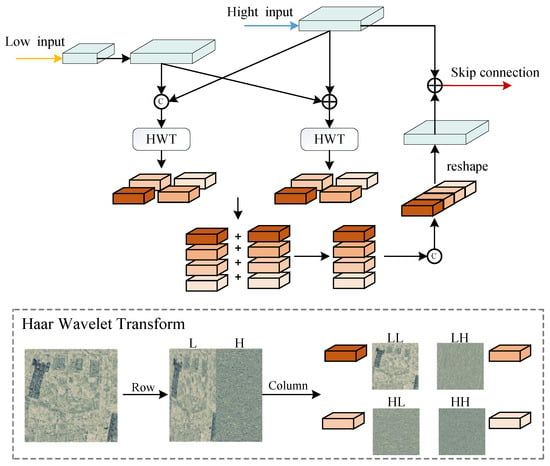

3.3. Double-Branch Haar Wavelet Transform

Traditional skip connection models typically propagate only the feature information from the current layer, which may result in the loss of critical semantic cues. To capture richer semantic information and enhance the interaction between features across different levels, we propose a Double-Branch model structure. Combining high- and low-level features, this architecture enhances the completeness and expressiveness of semantic representations. Furthermore, we incorporate the Haar Wavelet Transform to extract semantic information at varying frequency scales. By fusing semantic features from multiple perspectives, this design helps improve the model’s segmentation performance. We refer to this structure as the Double-Branch Haar Wavelet Transform (DBHWT), as illustrated in Figure 4.

Figure 4.

Illustration of Double-Branch Haar Wavelet Transform. The double-branch structure originates from different skip connection layers. Each branch processes four frequency components extracted via Haar wavelet transform, with final feature integration achieved through additive fusion followed by channel concatenation.

Although CNNs progressively abstract visual information, high-level feature maps, such as those in the skip connections of our U-Net, still retain structured spatial and frequency characteristics. The low-frequency responses primarily capture global semantic context, whereas the high-frequency responses emphasize fine-grained details including edges, boundaries, and textures. The proposed DBHWT module leverages this property by decomposing CNN-derived features into low- and high-frequency sub-bands. Through this decomposition, DBHWT explicitly separates semantic-rich low-frequency components from detail-enhanced high-frequency components, thereby strengthening multi-scale feature representation. Moreover, the double-branch design provides complementary information extraction: one branch performs element-wise addition to emphasize semantic consistency, while the other performs channel concatenation to preserve discriminative details. Both branches undergo Haar wavelet decomposition and frequency-wise fusion, resulting in multi-scale enriched feature maps that capture both global semantic context and fine-grained structural details.

To implement this double-branch fusion, the lower-layer feature map is first padded and transformed to align with the spatial and channel dimensions of the current-layer feature map. One branch performs channel concatenation, while the other performs element-wise addition, enabling complementary feature interaction. The resulting combined feature maps are then decomposed via Haar wavelet transform into four frequency components—LL for low-frequency structures, LH for vertical details, HL for horizontal details, and HH for diagonal details. These frequency-wise features are fused to produce semantically enriched, multi-scale representations, which are then delivered through the skip connections to guide feature reconstruction in the decoder.

The wavelet transform is applied first along the rows and then along the columns, decomposing high-level feature maps into low- and high-frequency components. The low-frequency component preserves global structures, while the high-frequency components emphasize edges, textures, and fine-grained details. This multi-scale decomposition facilitates effective integration of spatial context with local information, thereby enhancing the network’s representational capacity for downstream tasks. For each input channel, the wavelet transform begins with a row-wise operation, formulated as follows:

where represents low-frequency information and represents high-frequency information. The row index i varies from 1 to H (height), while the column index j spans 1 to (width) of the feature map.

The subsequent column-wise calculation is performed as follows:

where represents low-frequency information, captures vertical high-frequency features, corresponds to horizontal high-frequency features, and encodes diagonal high-frequency features. Indices i and j run from 1 to along the rows and columns, respectively.
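A minimal NumPy sketch of the row-then-column Haar decomposition is given below. The orthonormal scaling factor 1/√2 is an assumption (some implementations average with 1/2 instead), as is the `(C, H, W)` layout with even H and W; LH/HL naming conventions also differ between libraries.

```python
import numpy as np

def haar_dwt2d(x):
    """Single-level 2D Haar transform of (C, H, W) features.

    Returns LL, LH, HL, HH, each of shape (C, H/2, W/2).
    """
    s = 1.0 / np.sqrt(2.0)
    # Row-wise step: pair adjacent columns within each row (width halves)
    lo = (x[..., 0::2] + x[..., 1::2]) * s
    hi = (x[..., 0::2] - x[..., 1::2]) * s
    # Column-wise step: pair adjacent rows (height halves)
    ll = (lo[..., 0::2, :] + lo[..., 1::2, :]) * s   # low-frequency structure
    lh = (lo[..., 0::2, :] - lo[..., 1::2, :]) * s   # high frequency along the vertical axis
    hl = (hi[..., 0::2, :] + hi[..., 1::2, :]) * s   # high frequency along the horizontal axis
    hh = (hi[..., 0::2, :] - hi[..., 1::2, :]) * s   # diagonal high frequency
    return ll, lh, hl, hh
```

A constant input produces energy only in LL, and because each step is orthonormal, the total energy of the four sub-bands equals that of the input.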

The same-frequency components obtained from the wavelet transforms of the two branches are then summed, yielding four fused sub-bands. These are concatenated along the channel dimension, restored to the original spatial size by linear interpolation followed by convolution, and finally passed through a residual block to obtain a multi-information feature map that carries contextual semantic information.
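The double-branch fusion can be sketched end to end as follows. Several details are assumptions rather than the authors' exact design: the lower-layer (deeper) feature is aligned by nearest-neighbour 2× upsampling and a 1×1 projection, the concatenation branch is projected back to C channels so that same-frequency sub-bands can be summed, nearest-neighbour upsampling stands in for linear interpolation, and the residual block is reduced to a single identity shortcut. The weight matrices `w_align`, `w_cat`, and `w_out` are hypothetical 1×1 convolution weights.

```python
import numpy as np

def haar_dwt2d(x):
    """Single-level Haar transform of (C, H, W) -> LL, LH, HL, HH at (C, H/2, W/2)."""
    s = 1.0 / np.sqrt(2.0)
    lo = (x[..., 0::2] + x[..., 1::2]) * s
    hi = (x[..., 0::2] - x[..., 1::2]) * s
    return ((lo[..., 0::2, :] + lo[..., 1::2, :]) * s,
            (lo[..., 0::2, :] - lo[..., 1::2, :]) * s,
            (hi[..., 0::2, :] + hi[..., 1::2, :]) * s,
            (hi[..., 0::2, :] - hi[..., 1::2, :]) * s)

def conv1x1(w, x):
    """1x1 convolution: w is (C_out, C_in), x is (C_in, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)

def upsample2x(x):
    """Nearest-neighbour 2x upsampling (stand-in for linear interpolation)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)

def dbhwt(cur, low, w_align, w_cat, w_out):
    """cur: (C, H, W) current skip feature; low: (C_low, H/2, W/2) deeper feature."""
    low_aligned = conv1x1(w_align, upsample2x(low))                  # (C, H, W)
    branch_cat = conv1x1(w_cat, np.concatenate([cur, low_aligned]))  # concat 2C -> C
    branch_add = cur + low_aligned                                   # element-wise addition
    # Sum same-frequency sub-bands from the two branches
    fused = [a + b for a, b in zip(haar_dwt2d(branch_cat), haar_dwt2d(branch_add))]
    stacked = np.concatenate(fused, axis=0)                          # (4C, H/2, W/2)
    restored = conv1x1(w_out, upsample2x(stacked))                   # (C, H, W)
    return cur + restored                                            # residual shortcut
```

With `w_out` set to zeros, the module reduces to the identity shortcut, which is a convenient sanity check when wiring it into a U-Net skip connection.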

The DBHWT module captures semantic information at multiple levels, decomposes spatial features into high-frequency components that retain local details and low-frequency components that preserve global context, and performs cross-frequency extraction and fusion, ultimately producing comprehensive and enriched feature representations.

3.4. Loss Function

The loss function combines cross-entropy with Dice loss to guide model optimization. This hybrid loss leverages the strengths of both terms: cross-entropy focuses on pixel-wise classification accuracy, while Dice loss improves performance on imbalanced datasets by emphasizing the overlap between predicted and ground-truth regions. It is formulated as:

where N and K represent the number of samples and the number of classes. and represent the one-hot encoding of the true semantic labels and the corresponding softmax output of the network, where . Specifically, indicates the confidence that sample n belongs to class k.
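A hedged NumPy sketch of the hybrid objective is shown below. It assumes equal weighting of the two terms and a common soft Dice formulation (per-class Dice averaged over classes, with a small smoothing constant `eps`); the exact Dice variant and weighting used in the paper may differ.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def ce_dice_loss(logits, labels, eps=1e-6):
    """logits: (N, K) per-pixel class scores; labels: (N,) integer class ids."""
    _, K = logits.shape
    probs = softmax(logits)                 # softmax outputs
    onehot = np.eye(K)[labels]              # one-hot ground truth
    # Cross-entropy averaged over the N samples (pixels)
    ce = -np.mean(np.sum(onehot * np.log(np.clip(probs, eps, 1.0)), axis=1))
    # Soft Dice per class, then averaged over classes
    inter = np.sum(onehot * probs, axis=0)
    denom = np.sum(onehot, axis=0) + np.sum(probs, axis=0)
    dice = 1.0 - np.mean((2.0 * inter + eps) / (denom + eps))
    return ce + dice                        # assumed equal weighting
```

A confident, correct prediction drives both terms toward zero, whereas uniform class probabilities leave the cross-entropy at log K and the Dice term well above zero.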

4. Experiments and Analysis

4.1. Datasets

Vaihingen Dataset: This dataset was acquired by airborne sensors over Vaihingen in southern Germany and has high spatial resolution. It consists of 33 images, each containing near-infrared, red, and green bands. The images are annotated with 6 main categories: Impervious surfaces (white annotation), Buildings (blue annotation), Low vegetation (cyan annotation), Trees (green annotation), Cars (yellow annotation), and Clutter/Background (red annotation).

Potsdam Dataset: Supplied by ISPRS, this dataset contains high-resolution aerial imagery and serves as a standard benchmark for semantic segmentation in remote sensing. It covers the city of Potsdam, Germany, and is primarily used to evaluate land cover classification in urban areas. Like the Vaihingen dataset, it covers 6 main categories. In addition, it provides five-band data comprising the RGB bands, a near-infrared band, and a DHM (Digital Height Model), which supplies height information for terrain and buildings and aids object segmentation.

LoveDA Dataset: This dataset was constructed and made publicly available by remote sensing researchers at Wuhan University. Its distinctive feature is its coverage of both urban and rural scenes, which exposes models to the large differences in land cover between urban and rural areas. The images have a spatial resolution of 0.3 m per pixel, allowing fine details of surface objects to be captured. In total, the dataset includes 5987 images split into two scene types: 2522 urban images and 3465 rural images. These distinct urban and rural variations make the dataset valuable for evaluating the generalization of semantic segmentation algorithms.

4.2. Experimental Details

Experiments were conducted with PyTorch 2.3 on a single NVIDIA A40 GPU. We used the AdamW optimizer, whose decoupled weight decay regularizes the model and helps prevent overfitting. A cosine learning rate schedule was applied, with the learning rate initialized to and a weight decay of 0.01. Because the original Vaihingen and Potsdam images are too large to be fed directly into the model, we crop them into 1024 × 1024 tiles; the LoveDA images do not require this cropping. Training ran for 105 epochs on Vaihingen and Potsdam and 45 epochs on LoveDA, reflecting the characteristics of the datasets: Vaihingen and Potsdam are relatively small, whereas LoveDA contains more diverse scenes and categories. These epoch settings are consistent with most baseline studies, ensuring a fair comparison. Standard data augmentation was employed, including random scaling (0.5, 0.75, 1.0, 1.25, 1.5), horizontal and vertical flipping, and cropping to 512 × 512. During testing, test-time augmentation (TTA) with rotations and flips was applied to improve robustness and accuracy.
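Test-time augmentation of this kind can be sketched as follows: each transformed input is predicted, the prediction is mapped back with the inverse transform, and the results are averaged. The specific set of flips and 90° rotations, and averaging probabilities rather than logits, are assumptions; `predict` is a hypothetical function mapping a `(C, H, W)` image to `(K, H, W)` class probabilities.

```python
import numpy as np

# (forward, inverse) transform pairs acting on arrays shaped (channels, H, W)
TTA_TRANSFORMS = [
    (lambda a: a, lambda a: a),                                          # identity
    (lambda a: np.flip(a, axis=2), lambda a: np.flip(a, axis=2)),        # horizontal flip
    (lambda a: np.flip(a, axis=1), lambda a: np.flip(a, axis=1)),        # vertical flip
    (lambda a: np.rot90(a, 1, axes=(1, 2)), lambda a: np.rot90(a, -1, axes=(1, 2))),
    (lambda a: np.rot90(a, 2, axes=(1, 2)), lambda a: np.rot90(a, -2, axes=(1, 2))),
    (lambda a: np.rot90(a, 3, axes=(1, 2)), lambda a: np.rot90(a, -3, axes=(1, 2))),
]

def predict_with_tta(predict, image):
    """Average predictions over transformed inputs, each mapped back to the original frame."""
    outputs = [inv(predict(fwd(image))) for fwd, inv in TTA_TRANSFORMS]
    return np.mean(outputs, axis=0)
```

Applying the inverse transform to each prediction before averaging keeps all outputs pixel-aligned with the original image, including for non-square inputs where rotation swaps H and W.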

4.3. Evaluation Metrics

The proposed approach was evaluated using three widely adopted metrics in remote sensing segmentation: Mean Intersection over Union (mIoU), Overall Accuracy (OA), and the F1 score. Their definitions are as follows:

where $TP_i$, $TN_i$, $FP_i$, and $FN_i$ denote the true positives, true negatives, false positives, and false negatives for class $i$, respectively, and $N$ is the number of classes.

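Computing the F1 score first requires precision and recall, defined as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN},$$

where $TP$, $FP$, $FN$, and $TN$ represent the numbers of true positive, false positive, false negative, and true negative predictions, respectively.

The F1 score is then computed as:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$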
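These metrics can be computed from a confusion matrix, as in the NumPy sketch below. Assumptions: rows index ground-truth classes and columns index predictions, mF1 is the unweighted mean of per-class F1, OA is the fraction of correctly classified pixels, and every class appears in both prediction and ground truth (otherwise some ratios divide by zero and would need masking).

```python
import numpy as np

def confusion_matrix(pred, gt, num_classes):
    """Rows: ground-truth class, columns: predicted class."""
    idx = gt.astype(np.int64) * num_classes + pred.astype(np.int64)
    counts = np.bincount(idx.ravel(), minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def segmentation_metrics(cm):
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp          # predicted as class i but belonging to another class
    fn = cm.sum(axis=1) - tp          # belonging to class i but predicted as another class
    iou = tp / (tp + fp + fn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    oa = tp.sum() / cm.sum()          # correctly classified pixels / all pixels
    return {"mIoU": iou.mean(), "mF1": f1.mean(), "OA": oa}
```

For example, with ground truth `[0, 0, 1, 1, 2, 2]` and prediction `[0, 1, 1, 1, 2, 0]`, the per-class IoUs are 1/3, 2/3, and 1/2, giving an mIoU of 0.5 and an OA of 4/6.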

4.4. Comparative Experiments

4.4.1. Comparative Experiments on the Vaihingen Dataset

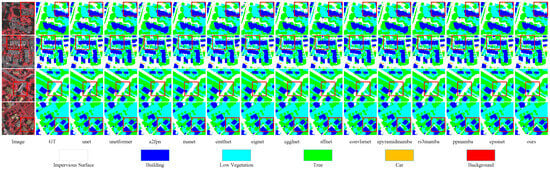

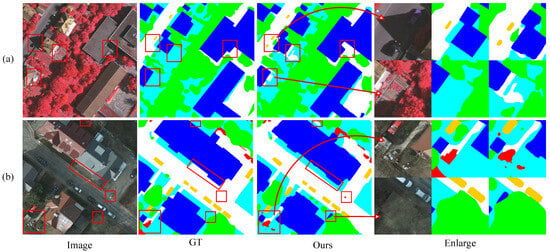

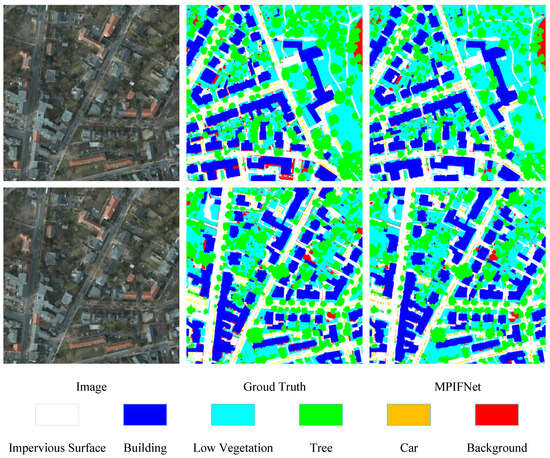

The experimental comparison on the Vaihingen dataset is summarized in Table 1: MPIFNet attains 92.32% mF1, 94.05% OA, and 86.03% mIoU. Compared with SFFNet [26], which uses the Haar wavelet transform, MPIFNet improves mF1, OA, and mIoU by 0.65%, 0.54%, and 1.12%, respectively. Compared with RS3Mamba [40], which uses the Mamba module, it achieves improvements of 1.29%, 0.63%, and 2.15%, and compared with ConvLSR-Net [41], which uses the same backbone network, improvements of 0.75%, 0.43%, and 1.27% in the same three metrics. These results show that MPIFNet outperforms the compared models in overall performance. Figure 5 shows the visualized segmentation results of the comparative experiments. To highlight the segmentation capability of our model more clearly, we also compared its predictions with the original images. As seen in Figure 6a, compared with the label maps, our model better preserves detailed structures from the original images, particularly improving the segmentation of the car and impervious surface classes, while minimizing misclassification over large homogeneous areas. This supports the view that feature information from different perspectives helps the network segment more accurately. Figure 7 presents the overall segmentation maps for ID 35 and ID 38.

Table 1.

Comparison of quantitative results on the Vaihingen dataset between our method and SOTA approaches. The best values in each column are highlighted in bold and underlined, while the second-best values are highlighted in bold. All metrics are reported as percentages (%).

Figure 5.

Visualization of segmentation results on the Vaihingen dataset, where GT represents ground truth. The red square marks the area to be compared.

Figure 6.

Comparison with the real label: (a) an image from the Vaihingen dataset; (b) an image from the Potsdam dataset. The red square marks the region for comparison, and the arrow points to its enlarged visualization. The colored blocks represent different semantic classes: white for impervious surface, blue for building, cyan for low vegetation, green for tree, yellow for car, and red for background.

Figure 7.

Overall segmentation maps for images ID 35 and ID 38.

4.4.2. Comparison of Parameter Count and Complexity

To examine model efficiency, we compared the number of parameters, computational cost (FLOPs), and inference speed of our approach with those of current state-of-the-art (SOTA) models. The results, summarized in Table 2, show that our model achieves superior segmentation performance while maintaining a reasonable balance between complexity and speed. Although MPIFNet has more parameters than some lightweight methods, it does not have the highest parameter count among the compared SOTA models, and its accuracy gains offset the added complexity. In terms of computational cost, MPIFNet remains competitive: it does not have the highest FLOPs among the compared models, and its inference time and FPS show a favorable balance between speed and accuracy. Compared with CPSSNet, which has the longest inference time (77.1 ms) and the lowest FPS (13.0), MPIFNet is considerably faster (57.6 ms, 17.4 FPS) while also achieving a higher mIoU (86.03% versus 84.73%). Lightweight models such as A2-FPN and E-PyramidMamba achieve higher FPS owing to their lower computational demand, but MPIFNet delivers substantially better segmentation performance. Overall, the increases in parameter count, FLOPs, and inference time are justified by the improvements in accuracy, indicating that MPIFNet maintains an effective balance between computational efficiency and segmentation performance.
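The reported speeds are consistent with FPS being the reciprocal of per-image latency, assuming a batch size of 1. The short check below illustrates this using the values quoted above. `fps_from_latency` is a hypothetical helper introduced only for illustration.

```python
def fps_from_latency(latency_ms: float) -> float:
    """Frames per second implied by a per-image latency in milliseconds."""
    return 1000.0 / latency_ms

# (inference time in ms, mIoU in %) as quoted in the text for Table 2.
models = {"MPIFNet": (57.6, 86.03), "CPSSNet": (77.1, 84.73)}
for name, (ms, miou) in models.items():
    print(f"{name}: {fps_from_latency(ms):.1f} FPS, mIoU {miou:.2f}%")

# Per-image speed-up of MPIFNet over CPSSNet.
speedup = models["CPSSNet"][0] / models["MPIFNet"][0]
print(f"speed-up: {speedup:.2f}x")
```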

Table 2.

Comparison of parameters and computation of different networks. The minimum values of Params, FLOPs, and Inference Time are highlighted in bold and underlined, as smaller values indicate better efficiency.

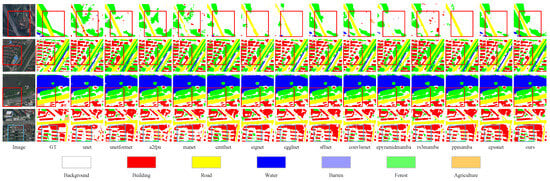

4.4.3. Comparative Experiments on the Potsdam Dataset

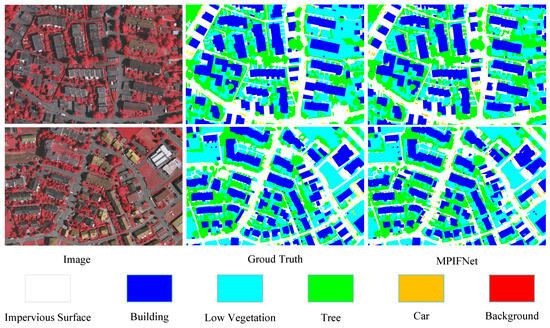

The comparative results on the ISPRS Potsdam dataset are summarized in Table 3. MPIFNet reaches 93.69% mF1, 92.53% OA, and 88.36% mIoU. As on Vaihingen, our model outperforms both the models that use the same backbone network and similar approaches, exceeding the existing SOTA methods in mF1, OA, and mIoU. Figure 8 presents the visual segmentation results of the comparative experiments, and Figure 6b compares our predictions with the ground-truth label maps for this dataset. Again, our model captures more of the detail in the original images while limiting misclassification over large areas. These observations indicate that the model generalizes robustly. Figure 9 shows the overall segmentation results for ID 3_13 and ID 3_14.

Table 3.

Comparison of quantitative results on the Potsdam dataset between our method and SOTA approaches. The best values in each column are highlighted in bold and underlined, while the second-best values are highlighted in bold. All metrics are reported as percentages (%).

Figure 8.

Visualization of segmentation results on the Potsdam dataset, where GT represents ground truth. The red square marks the area to be compared.

Figure 9.

Overall segmentation maps for ID 3_13 and ID 3_14 of the Potsdam dataset.

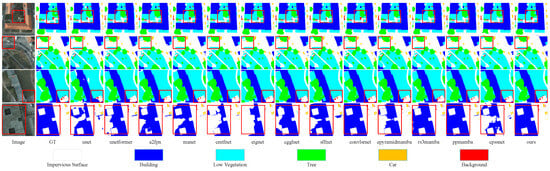

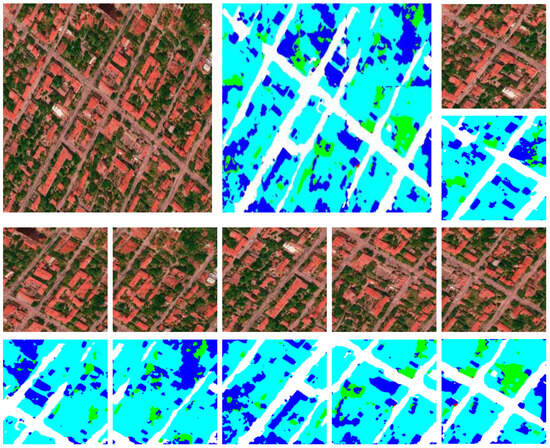

4.4.4. Comparative Experiments on the LoveDA Dataset

The performance of MPIFNet was further validated on the LoveDA dataset. As shown in Table 4, MPIFNet achieves an mIoU of 55.76%, outperforming the compared methods. Notably, the Building and Forest classes reach IoUs of 66.37% and 46.38%, respectively, and the remaining classes also yield satisfactory results. Overall, our method achieves the best average performance. Visualization results are provided in Figure 10.

Table 4.

Comparison of quantitative results on the LoveDA dataset between our method and SOTA approaches. The best values in each column are highlighted in bold and underlined, while the second-best values are highlighted in bold. All metrics are reported as percentages (%).

Figure 10.

Visualization of segmentation results on the LoveDA dataset, where GT represents ground truth. The red and blue squares mark the areas to be compared.

4.5. Ablation Experiments

4.5.1. Ablation Experiments of MPIFNet Components

To assess the effectiveness of the DBHWT and GLMBSA modules, ablation studies were performed by adding each module separately to the baseline. This yielded two configurations: baseline + DBHWT and baseline + GLMBSA. The results are presented in Table 5. Because GLMBSA is plug-and-play, it can be removed from the DBHWT-equipped network without altering the rest of the architecture. With only the DBHWT module, the model gained 0.93% (mF1), 0.49% (OA), and 1.51% (mIoU) on the Vaihingen dataset, and 0.55%, 0.60%, and 0.95%, respectively, on the Potsdam dataset. This confirms that DBHWT enhances feature representation through multi-frequency information fusion. In the second configuration, the DBHWT module was removed and replaced with standard skip connections, and GLMBSA was embedded. This yielded larger gains on most metrics: 1.00% (mF1), 0.46% (OA), and 1.62% (mIoU) on Vaihingen, and 0.96%, 1.08%, and 1.06% on Potsdam. These results show that GLMBSA substantially improves segmentation accuracy by capturing global-local contextual dependencies. Introducing both modules increases the parameter count and computational cost, but their combination yields the largest gains: 1.37% (mF1), 0.69% (OA), and 2.27% (mIoU) on Vaihingen, and 1.00%, 1.25%, and 1.75% on Potsdam. The two modules are therefore complementary, and using them together improves the model's robustness and generalization in semantic segmentation.
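The absolute scores of each ablation configuration can be recovered from the reported gains. The sketch below assumes that the baseline + DBHWT + GLMBSA configuration is the full MPIFNet reported in Table 1 (92.32% mF1, 94.05% OA, 86.03% mIoU on Vaihingen). It subtracts the combined gains to obtain the baseline and then adds each single-module gain back.

```python
# Vaihingen scores of the full model (mF1, OA, mIoU), as reported in Table 1.
full = (92.32, 94.05, 86.03)

# Gains over the baseline, as reported in the ablation text.
gains = {
    "+DBHWT": (0.93, 0.49, 1.51),
    "+GLMBSA": (1.00, 0.46, 1.62),
    "+DBHWT+GLMBSA": (1.37, 0.69, 2.27),
}

# Assumption: the "+DBHWT+GLMBSA" configuration is the full MPIFNet.
baseline = tuple(round(f - g, 2) for f, g in zip(full, gains["+DBHWT+GLMBSA"]))
rows = {"baseline": baseline}
for name, g in gains.items():
    rows[name] = tuple(round(b + d, 2) for b, d in zip(baseline, g))

for name, (mf1, oa, miou) in rows.items():
    print(f"{name:>15}: mF1 {mf1:.2f}  OA {oa:.2f}  mIoU {miou:.2f}")
```

Under this assumption, baseline + GLMBSA reaches 85.38% mIoU. This value matches the decoder-only figure reported in the GLMBSA placement study.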

Table 5.

Ablation Experiments on the Vaihingen and Potsdam datasets. Best values and ours in columns are shown in bold and underlined. All scores are expressed as a percentage (%).

4.5.2. Ablation of the GLMBSA Module

Ablation experiments were conducted to evaluate the capability of GLMBSA in capturing multiscale features. First, we removed the local feature extraction component and retained only global modeling, referred to as Global Mamba (GMamba). Second, we retained only the local perspective, denoted as Local Mamba (LMamba). Third, we removed the Block Self-Attention mechanism and directly fused global and local features from both branches, termed Global and Local Mamba (GLMamba). To assess multiscale recognition capability, we focused on segmentation performance for low vegetation (representing large-scale regions) and cars (representing small-scale objects). The results, summarized in Table 6, reveal distinct performance characteristics. As shown in the second row, LMamba improves car segmentation but degrades accuracy for larger regions like low vegetation, indicating limited global context. Conversely, the third row shows that simple fusion of global and local features (GLMamba) improves performance on large-scale categories, suggesting partial alleviation of scale-related trade-offs. Finally, the fourth row—embedding the Block Self-Attention mechanism to explicitly model interactions between global and local features—achieves the best results on both object types. This confirms that GLMBSA enhances multiscale representation and contributes to comprehensive performance gains.
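The global and local perspectives differ in how the feature map is serialized before the sequence model scans it. The sketch below contrasts a raster-order global sequence with non-overlapping, block-wise local sequences. The block size is a hypothetical parameter. The selective-scan (Mamba) operator itself is omitted, so the sketch illustrates only the token ordering and is not the GLMBSA implementation.

```python
import numpy as np

def global_sequence(x: np.ndarray) -> np.ndarray:
    """Flatten a (C, H, W) map into one raster-order sequence of shape (H*W, C)."""
    c, h, w = x.shape
    return x.reshape(c, h * w).T

def local_sequences(x: np.ndarray, block: int) -> np.ndarray:
    """Split a (C, H, W) map into non-overlapping block x block windows.

    Returns (num_blocks, block*block, C): each window becomes its own short
    sequence, so a sequence model only mixes tokens within one window.
    """
    c, h, w = x.shape
    assert h % block == 0 and w % block == 0, "H and W must be divisible by block"
    x = x.reshape(c, h // block, block, w // block, block)
    x = x.transpose(1, 3, 2, 4, 0)          # (nH, nW, block, block, C)
    return x.reshape(-1, block * block, c)

fmap = np.random.default_rng(0).standard_normal((64, 32, 32))
print(global_sequence(fmap).shape)       # (1024, 64)
print(local_sequences(fmap, 8).shape)    # (16, 64, 64)
```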

Table 6.

Effectiveness of the GLMBSA module. Best values are shown in bold and underlined.

4.5.3. Ablation of the DBHWT Module

Ablation experiments were performed by modifying the network structure to assess the effectiveness of the proposed Double-Branch Haar Wavelet Transform (DBHWT) module in feature extraction. Several structural variants were designed for comparison. When HWT is applied to a single-branch skip connection, the structure is termed One-Branch Haar Wavelet Transform (OBHWT). Conversely, when HWT is removed but the dual-branch structure is preserved, with two feature maps combined through element-wise addition, the variant is referred to as Double Branch (DB). The corresponding results are reported in Table 7. The DB configuration facilitates the integration of multi-level semantic features, enriching contextual representation and improving feature discrimination. Meanwhile, the incorporation of HWT enables frequency decomposition, allowing for more compact and informative encoding of structural details. Through the combined integration of these features, the DBHWT module captures additional semantic cues, leading to enhanced segmentation outcomes.
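DBHWT builds on the Haar wavelet transform, which splits a feature map into one low-frequency sub-band (LL) and three high-frequency sub-bands (LH, HL, HH) at half resolution. The sketch below is a generic one-level orthonormal 2D Haar transform and its inverse in NumPy. It does not reproduce how the two branches of DBHWT are fused. The sub-band naming is also an assumption, because conventions differ between libraries.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2D Haar transform of an array of shape (..., H, W).

    H and W must be even. Returns (LL, LH, HL, HH), each of shape (..., H/2, W/2).
    """
    a = x[..., 0::2, 0::2]   # top-left pixel of every 2 x 2 block
    b = x[..., 0::2, 1::2]   # top-right
    c = x[..., 1::2, 0::2]   # bottom-left
    d = x[..., 1::2, 1::2]   # bottom-right
    ll = (a + b + c + d) / 2  # local average: low-frequency content
    lh = (a + b - c - d) / 2  # top minus bottom: responds to horizontal edges
    hl = (a - b + c - d) / 2  # left minus right: responds to vertical edges
    hh = (a - b - c + d) / 2  # diagonal detail
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    shape = ll.shape[:-2] + (2 * ll.shape[-2], 2 * ll.shape[-1])
    out = np.empty(shape, dtype=np.result_type(ll, lh, hl, hh))
    out[..., 0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[..., 0::2, 1::2] = (ll + lh - hl - hh) / 2
    out[..., 1::2, 0::2] = (ll - lh + hl - hh) / 2
    out[..., 1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out
```

The transform is orthonormal and exactly invertible, so the four sub-bands together encode the input without loss. It halves the spatial resolution using only strided slicing and additions, with no learned weights.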

Table 7.

Effectiveness of the DBHWT module. Best values are shown in bold and underlined.

4.5.4. Impact of the GLMBSA Module's Embedding Position

Because the GLMBSA module is plug-and-play, we examined its effectiveness when embedded at different stages of the network: in the encoder, in the decoder, and in both. In the encoder-only setting, the module was inserted after each encoder block to evaluate its impact on high-level feature extraction, while the hybrid configuration placed the module in both the encoder and the decoder. The results are shown in Table 8. As indicated by the second and third rows, inserting GLMBSA solely in the encoder yields limited gains. This is likely because the encoder focuses on abstract representation learning, where capturing long-range dependencies is less critical. In contrast, integrating GLMBSA into the decoder improves performance markedly, as the decoder is responsible for spatial reconstruction, where global-local context modeling is more beneficial. Decoder-only placement achieves the highest mIoU (85.38%) with fewer parameters (61.7 M) and FLOPs (85.3 G). Encoder-only placement yields a lower mIoU (84.78%) at a higher computational cost (70.6 M parameters, 89.7 G FLOPs). This trade-off between segmentation performance and model complexity underscores the advantage of decoder placement. Interestingly, embedding the module in both stages leads to a slight performance drop. A possible cause is that the added complexity leads to overfitting while redundant feature modeling introduces interference. Overall, placing GLMBSA in the decoder achieves the most effective balance between accuracy and efficiency, enhancing spatial detail recovery without unnecessary overhead.

Table 8.

Impact of GLMBSA embedding placement on model performance: encoder, decoder, and combined stages. Best values are shown in bold and underlined. Check mark indicates the module is included, whereas the hyphen indicates it is excluded.

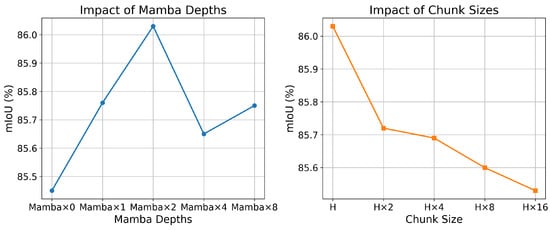

4.5.5. Ablation of Mamba Depths

To investigate how the depth of the Mamba module affects model performance, we designed five configurations (Mamba × 0, × 1, × 2, × 4, and × 8) while keeping the rest of the architecture unchanged. Experiments were conducted on the Vaihingen dataset. Table 9 and Figure 11 show that both the parameter count and the computational complexity (FLOPs) rise steadily with depth, from 59.6 M and 72.4 G for Mamba × 0 to 72.7 M and 135.9 G for Mamba × 8. Performance, however, does not improve monotonically. Mamba × 2 achieves the best results, with an mF1 of 92.32%, an OA of 94.05%, and an mIoU of 86.03%, outperforming all other depths. A plausible explanation lies in the properties of the Mamba block. Its selective state space mechanism captures long-range dependencies efficiently, but excessive stacking introduces redundant global context and makes optimization harder, which reduces generalization. Mamba × 2 provides enough depth to exploit Mamba's strength in modeling global dependencies while avoiding redundancy and overfitting, thereby striking the best balance between accuracy and efficiency.
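The two reported end points of the depth sweep imply a roughly constant cost per Mamba block. Assuming that cost grows linearly with depth, the estimate below interpolates the cost of the × 2 setting. The result (about 62.9 M parameters and 88.3 GFLOPs) agrees with the figures quoted for the default model in the chunk-size and backbone ablations. The intermediate rows of Table 9 are not reproduced here, so the other estimates are linear approximations only.

```python
# Reported end points of the depth sweep (Params in M, FLOPs in G).
params0, flops0 = 59.6, 72.4    # Mamba x 0
params8, flops8 = 72.7, 135.9   # Mamba x 8

# Assumption: every additional Mamba block adds the same cost.
dp = (params8 - params0) / 8
df = (flops8 - flops0) / 8
print(f"per block: {dp:.2f} M params, {df:.2f} GFLOPs")

for depth in (1, 2, 4):
    print(f"x{depth}: ~{params0 + depth * dp:.1f} M, ~{flops0 + depth * df:.1f} G")
```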

Table 9.

Comparison of computations for Mamba blocks of different depths. Best values are shown in bold and underlined.

Figure 11.

Effect of Mamba Depths and Chunk Sizes on Model Performance.

4.5.6. Ablation of Self-Attention Chunk Sizes

To investigate how chunk size affects Block Self-Attention, we tested five configurations (H, H × 2, H × 4, H × 8, and H × 16) with the parameter count fixed at 62.9 M. As shown in Table 10 and Figure 11, more partitions on the Vaihingen dataset slightly increase FLOPs (from 88.3 G to 96.2 G) and progressively reduce segmentation accuracy. Specifically, mF1 drops from 92.32% (H) to 92.03% (H × 16), and mIoU decreases from 86.03% to 85.53%. These results suggest that excessive chunking compromises the model's ability to capture long-range dependencies, limiting contextual representation and semantic discrimination. The H configuration achieves the best trade-off between accuracy and efficiency. This confirms that coarser partitioning, with fewer and larger chunks, better preserves global context and segmentation quality.
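The effect of chunking can be illustrated with scaled dot-product attention restricted to equal, non-overlapping chunks. Each query sees only L / num_chunks keys, so more chunks shrink the attention span. This is a minimal NumPy sketch. It assumes that the H, H × 2, H × 4, and subsequent configurations correspond to splitting the token sequence into progressively more equal chunks. The sketch illustrates only the receptive-field effect and does not model the FLOP figures in Table 10.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def chunked_self_attention(q, k, v, num_chunks):
    """Scaled dot-product attention restricted to equal, non-overlapping chunks.

    q, k, v: (L, d) arrays; L must be divisible by num_chunks.
    Each query only attends to keys in its own chunk of length L // num_chunks.
    """
    L, d = q.shape
    assert L % num_chunks == 0
    n = L // num_chunks
    qc, kc, vc = (t.reshape(num_chunks, n, -1) for t in (q, k, v))
    scores = qc @ kc.transpose(0, 2, 1) / np.sqrt(d)   # (num_chunks, n, n)
    out = softmax(scores) @ vc                          # (num_chunks, n, d_v)
    return out.reshape(L, -1)
```

With num_chunks = 1, the function reduces to ordinary full self-attention over the whole sequence.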

Table 10.

Comparison of computations with different Self-Attention chunk sizes. Best values are shown in bold and underlined.

4.5.7. Ablation of Self-Attention Computation

To further explore how different feature modeling strategies within Block Self-Attention influence semantic segmentation performance, we conducted comparative experiments. In each, Global Mamba (GMamba) or Local Mamba (LMamba) was applied to the Query (Q), Key (K), and Value (V) components, or the original features (denoted as X) were retained. The results on the Vaihingen dataset, presented in Table 11, show that the choice of modeling strategy noticeably affects performance, with mIoU ranging from 85.48% to 86.03% across configurations. The best performance (86.03% mIoU) was achieved when Q used LMamba, K used GMamba, and V remained unchanged (X). This suggests that using LMamba for Q enhances local spatial discrimination, while applying GMamba to K improves global contextual understanding. Retaining the original features in V appears to help preserve stable semantic representations. Overall, these findings indicate that an appropriate combination of local and global modeling strategies can further enhance Block Self-Attention and may guide future architectural design.
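The best Table 11 configuration assigns different perspectives to the attention inputs: Q from the local branch, K from the global branch, and V taken directly from X. The sketch below shows only this wiring. The functions `global_branch` and `local_branch` are hypothetical stand-ins: a running mean over the whole sequence, and one restarted in each block. They take the place of GMamba and LMamba, which are not reproduced here.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def global_branch(x):
    """Hypothetical stand-in for GMamba: a running mean over the whole sequence."""
    return np.cumsum(x, axis=0) / np.arange(1, len(x) + 1)[:, None]

def local_branch(x, block=4):
    """Hypothetical stand-in for LMamba: the same running mean, restarted in each block."""
    out = np.empty_like(x, dtype=float)
    for s in range(0, len(x), block):
        out[s:s + block] = global_branch(x[s:s + block])
    return out

def mixed_attention(x):
    """Best Table 11 setting: Q from the local branch, K from the global branch, V = X."""
    q, k, v = local_branch(x), global_branch(x), x
    weights = softmax(q @ k.T / np.sqrt(x.shape[1]))   # (L, L), rows sum to 1
    return weights @ v, weights
```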

Table 11.

Comparison of Self-Attention calculation for different combinations. Best values are shown in bold and underlined.

4.5.8. Ablation of Different Wavelet Bases

In the ablation study of different wavelet bases, we compared Haar with Daubechies, Symlet, Coiflet, and Biorthogonal wavelets. As shown in Table 12, overall performance is relatively close across bases, indicating that our framework is not overly sensitive to the choice of wavelet. Nevertheless, Haar achieves the best results, with an OA of 94.05% and an mIoU of 86.03%. The characteristics of the Haar wavelet plausibly explain this advantage. Its piecewise-constant basis function is highly sensitive to abrupt intensity changes, allowing it to capture the edges and sharp boundaries that are critical in remote sensing semantic segmentation. In addition, its short support avoids the excessive smoothing that often occurs with smoother wavelets such as Daubechies, Symlet, or Coiflet, thereby preserving more discriminative high-frequency detail. Finally, Haar provides information complementary to convolutional networks. Convolutional layers tend to emphasize smooth, low-frequency components, whereas Haar strengthens high-frequency edge features. Together, these properties likely explain why Haar outperforms the other wavelet bases in our experiments.

Table 12.

Comparison of computations with different Wavelet Bases. Best values are shown in bold and underlined.

In addition to the quantitative results, Figure 12 provides a qualitative comparison of the low-frequency components extracted by different wavelet bases (Daubechies, Symlet, Coiflet, Biorthogonal, and Haar). Haar preserves sharper and more distinct structural details, such as building edges and linear features, whereas the smoother wavelets produce more diffuse low-frequency patterns. This visual evidence supports the view that Haar captures critical edge information more effectively. Its low-frequency component also retains structural and texture detail, complementing convolutional feature maps that tend to emphasize smooth, low-frequency content.

Figure 12.

Visualization of Wavelet Low-Frequency Components.
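The diffusion effect visible in Figure 12 can be reproduced on a one-dimensional toy signal. The sketch below computes one level of approximation (low-pass) coefficients for an ideal step edge with the Haar and Daubechies-2 (db2) scaling filters. It illustrates the general property and is not the 2D decomposition used in MPIFNet.

```python
import numpy as np

SQRT3 = np.sqrt(3.0)
# Orthonormal low-pass (scaling) filters; both sum to sqrt(2).
HAAR = np.array([1.0, 1.0]) / np.sqrt(2.0)
DB2 = np.array([1 + SQRT3, 3 + SQRT3, 3 - SQRT3, 1 - SQRT3]) / (4 * np.sqrt(2.0))

def approximation(x, h):
    """One-level approximation coefficients: correlate with h, keep every 2nd output.

    Only windows lying fully inside the signal are used, so no boundary
    extension is involved.
    """
    n = (len(x) - len(h)) // 2 + 1
    return np.array([np.dot(h, x[2 * k:2 * k + len(h)]) for k in range(n)])

step = np.r_[np.zeros(8), np.ones(8)]   # ideal edge between samples 7 and 8
for name, h in (("haar", HAAR), ("db2", DB2)):
    ca = approximation(step, h)
    # Coefficients strictly between the two flat levels (0 and sqrt(2)) blur the edge.
    blurred = int(np.sum((ca > 1e-9) & (ca < h.sum() - 1e-9)))
    print(name, np.round(ca, 3), "intermediate coefficients:", blurred)
```

For this edge, Haar yields only the two flat levels (0 and √2), whereas db2 produces an intermediate coefficient at the edge, which is a blurred transition. The Haar result depends on the edge falling on the two-sample grid. An edge in the middle of a pair would also produce one intermediate Haar coefficient, but it still affects fewer coefficients than the four-tap db2 filter does.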

4.5.9. Ablation of Different Backbones

To evaluate the effect of the backbone architecture, we conducted experiments with the ResNet, Swin, and ConvNeXt families; the results are shown in Table 13. The lightweight ResNet18 (18.6 M/52.5 G) performs worst, with 91.45% mF1 and 84.58% mIoU. ResNet50 (31.3 M/66.6 G) improves the results to 92.07% mF1 and 85.57% mIoU, confirming the benefit of increased network depth. At comparable FLOPs, ConvNeXt-Tiny (41.2 M/66.2 G) reaches 92.14% mF1 and 85.71% mIoU, outperforming ResNet50, while Swin-T (40.9 M/68.4 G) performs on par, with 91.99% mF1 and 85.46% mIoU. Among all tested backbones, ConvNeXt-Small (62.9 M/88.3 G) delivers the best overall results, with 92.32% mF1, 94.05% OA, and 86.03% mIoU. These findings show that our framework can exploit the hierarchical features of higher-capacity modern backbones, with ConvNeXt emerging as the most competitive family under similar computational budgets.

Table 13.

Comparison of computations with different backbones. Best values are shown in bold and underlined.

4.5.10. Ablation of Partitioning Strategies in Local Mamba

To evaluate different partitioning strategies in the local Mamba branch, we compared the default block-based partitioning with several sliding-window configurations. As summarized in Table 14, sliding windows with sizes and strides of 32 × 32/16, 64 × 64/16, and 128 × 128/64 increase the computational cost, with FLOPs rising to as much as 101.8 G, without any accuracy benefit. The best sliding-window setting, 32 × 32/16, achieves an mIoU of 85.85%, which remains below the 86.03% of the block-based design. Our block-based partitioning achieves the highest overall accuracy, with an mIoU of 86.03% and an OA of 94.05%, at a lower cost of 88.3 G FLOPs. These results indicate that sliding windows do not enhance segmentation performance and reduce efficiency. The tailored GLMBSA partitioning, by contrast, offers a more favorable trade-off between local detail extraction and computational efficiency, validating our design.
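The cost of overlapping windows follows from how many tokens the local branch must scan. The sketch below counts tokens for the sliding-window settings in Table 14 on a hypothetical 256 × 256 feature map (the actual map sizes depend on the input resolution and network stage). It compares them with non-overlapping blocks, which visit every token exactly once. Because the cost of a selective scan grows linearly with sequence length, this ratio approximates the extra work of the local branch. Whole-network FLOPs grow far less (to at most 101.8 G), presumably because the local branch is only part of the model.

```python
def windows_per_axis(size: int, window: int, stride: int) -> int:
    """Number of window positions along one axis (no padding)."""
    return (size - window) // stride + 1

def tokens_scanned(size: int, window: int, stride: int) -> int:
    """Total tokens the local branch would process on a size x size map."""
    return windows_per_axis(size, window, stride) ** 2 * window * window

SIZE = 256                  # hypothetical feature-map side length
baseline = SIZE * SIZE      # non-overlapping blocks visit every token once
for window, stride in ((32, 16), (64, 16), (128, 64)):
    t = tokens_scanned(SIZE, window, stride)
    print(f"{window}x{window}/{stride}: {t} tokens ({t / baseline:.2f}x block partition)")
```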

Table 14.

Comparison of Computational Efficiency and Performance under Different Local Mamba Partitioning Strategies. Best values are shown in bold and underlined.

4.5.11. Ablation of Different Noise Levels

To further assess the robustness of MPIFNet under noisy conditions, we injected Gaussian noise of increasing intensity (σ = 0.01, 0.03, and 0.05) into the input data. Under identical settings, we compared MPIFNet with two representative baselines, ConvLSR-Net and CPSSNet. As summarized in Table 15, all models degrade as the noise level increases. Nevertheless, MPIFNet consistently outperforms the compared methods at every noise level in terms of mF1, OA, and mIoU. For instance, under strong noise (σ = 0.05), MPIFNet achieves an mIoU of 74.15%, exceeding ConvLSR-Net (71.34%) and CPSSNet (71.55%). Its relative mIoU decline of 13.8% is also smaller than those of ConvLSR-Net (15.8%) and CPSSNet (15.6%). These results indicate that the proposed multi-perspective feature integration mitigates noise-induced degradation and improves robustness in practical scenarios.
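The relative declines quoted above can be reproduced from the mIoU values, and the noise injection itself amounts to adding zero-mean Gaussian noise. In the sketch below, scaling inputs to [0, 1] and clipping after noising are assumptions. The clean scores are taken to be the Vaihingen results reported earlier in this section: 86.03% for MPIFNet, 86.03% minus 1.27%, or 84.76%, for ConvLSR-Net, and 84.73% for CPSSNet. With these values, the computed drops match the reported 13.8%, 15.8%, and 15.6%.

```python
import numpy as np

def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation sigma to an image in [0, 1]."""
    noisy = img + rng.normal(0.0, sigma, size=img.shape)
    return np.clip(noisy, 0.0, 1.0)   # assumption: keep the valid intensity range

def relative_drop(clean, noisy):
    """Relative decline in percent: 100 * (clean - noisy) / clean."""
    return 100.0 * (clean - noisy) / clean

# (clean mIoU, mIoU under sigma = 0.05) in %, taken from the text.
results = {
    "MPIFNet": (86.03, 74.15),
    "ConvLSR-Net": (84.76, 71.34),
    "CPSSNet": (84.73, 71.55),
}
for name, (clean, noisy) in results.items():
    print(f"{name}: {relative_drop(clean, noisy):.1f}% relative mIoU drop")
```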

Table 15.

Comparison of computations under different noise levels. Best values are shown in bold and underlined.

5. Discussion

From a remote sensing perspective, MPIFNet contributes to existing research by integrating context-aware and frequency-aware modeling. The GLMBSA module captures both long-range contextual relationships and localized spatial details. Mamba-based architectures, including RS3Mamba, have shown strong ability to maintain semantic consistency across large-scale structures, such as urban blocks and road networks, but may struggle with boundary localization or small, sparse objects. Conversely, ConvLSR-Net emphasizes localized spatial refinement to mitigate such issues. Our findings suggest that combining long-range contextual awareness with mechanisms that preserve local continuity achieves more stable segmentation across object scales, particularly in heterogeneous, multiscale landscapes.

Complementing this, the DBHWT module introduces explicit frequency-domain decomposition, a widely adopted approach in remote sensing for separating coarse semantic structures from fine-grained boundary and texture information. Prior studies, such as SFFNet, demonstrate that emphasizing high-frequency components enhances boundary sharpness, particularly for objects with intricate outlines. Consistent with these findings, our results indicate that distinguishing low-frequency semantic structures from high-frequency details improves the discriminability of classes with subtle spectral differences or complex boundaries, such as low vegetation and impervious surfaces. By situating MPIFNet within this context, we demonstrate that it not only aligns with established practices but also addresses limitations observed in prior models, providing a balanced approach for diverse remote sensing scenes.

In terms of class-wise segmentation performance, MPIFNet benefits categories characterized by distinct structural patterns or rich textures. Extremely small or rare classes (e.g., narrow roads, scattered vegetation patches) remain challenging due to inherent sample imbalance, a common issue in remote sensing segmentation. Even modest IoU improvements (1–3%) can matter in operational scenarios. For instance, a 1% increase in road segmentation accuracy can substantially improve topological continuity, ensuring that road junctions and narrow linear features are correctly connected. This observation is consistent with recent studies such as UX-Net [48], which emphasize the importance of feature continuity for sparse linear structures. To support reproducibility and practical applicability, all training protocols, preprocessing steps, and evaluation metrics follow widely accepted standards in the remote sensing community. The model also performs consistently across the Vaihingen, Potsdam, and LoveDA datasets, demonstrating adaptability to variations in spatial resolution, scene composition, and urban–rural environments.

Despite its strengths, several limitations remain. The relatively deep hierarchical structure and multi-branch design increase computational overhead, potentially limiting deployment on real-time or resource-constrained platforms. In addition, MPIFNet has not been thoroughly evaluated on extremely high-resolution images, and its robustness under seasonal variations and across different sensors remains unclear. Standard benchmark datasets alone are insufficient to examine these practical challenges.

To provide a preliminary assessment beyond standardized benchmarks, we tested MPIFNet on a single remote sensing image obtained from publicly available online sources, which better represents operational scenarios. By partitioning the image into subregions, we illustrate both the overall segmentation results and fine-grained local patterns, as shown in Figure 13. Because ground-truth annotations are unavailable, quantitative evaluation is not possible. Nevertheless, full-resolution inference shows that MPIFNet maintains stable boundary delineation and coherent class predictions, suggesting good robustness and generalization.

Figure 13.

MPIFNet segmentation results on a real-world remote sensing image, illustrating overall and local structure. The colored blocks represent different semantic classes: white for impervious surface, blue for building, cyan for low vegetation, green for tree, yellow for car, and red for background.

Future work will address both model and application-level limitations. On the model side, we plan to develop lightweight MPIFNet variants to reduce computational costs, incorporate class-balanced learning and synthetic data augmentation to improve performance on underrepresented categories, and explore domain adaptation and multimodal fusion to enhance cross-season and cross-sensor generalization. On the application side, we will establish evaluation pipelines combining large-area batch inference, semi-automatic annotation, and scenario-driven simulation to systematically assess runtime performance, scalability, and deployment stability, bridging the gap between benchmark evaluation and real-world operational scenarios.

6. Conclusions

In this work, we revisit the long-standing challenge of jointly capturing global contextual relations and preserving fine-grained structural details in high-resolution remote sensing semantic segmentation. Existing transformer- and CNN-based approaches tend to address these aspects separately and often struggle to achieve a balanced representation that handles large variations in object scale and subtle boundary transitions. MPIFNet addresses this by integrating spatial dependency modeling and multi-frequency feature decoupling within a unified architecture. Experimental results on the Vaihingen, Potsdam, and LoveDA datasets show that the synergy between the GLMBSA and DBHWT modules enhances both semantic coherence and structural precision. Beyond performance gains, our findings underscore the importance of jointly leveraging spatial, hierarchical, and frequency information to build a more comprehensive feature representation for complex urban scenes. The consistent improvements in both ablation studies and benchmark comparisons further support the robustness and generalizability of our design. Moreover, this study shows how state space models and wavelet-based frequency decomposition can be embedded together in modern segmentation networks. This paradigm may inspire future research on multi-perspective representations, particularly for tasks involving highly heterogeneous or visually ambiguous regions.

Author Contributions

Conceptualization, S.C. and J.L.; methodology, J.L.; software, J.L.; validation, J.L., S.C. and A.D.; resources, S.C.; data curation, J.L.; writing—original draft preparation, J.L.; visualization, J.L.; supervision, S.C.; project administration, A.D.; funding acquisition, S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Natural Science Foundation of Xinjiang Uygur Autonomous Region under Grant 2025D01C50, the National Natural Science Foundation of China under Grant 62562058, and in part by the Xinjiang University Graduate Innovation Project under Grant XJDX2025YJS193.

Data Availability Statement

The Vaihingen and Potsdam datasets are available at: https://www.isprs.org/resources/datasets/benchmarks/UrbanSemLab/default.aspx (accessed on 10 January 2025). The LoveDA dataset is available at: https://github.com/Junjue-Wang/LoveDA (accessed on 15 January 2025). To support reproducibility and further research, we have made our implementation publicly available. The repository contains detailed information on the pre-trained model, configuration files, and result reproduction. The code is located at: https://github.com/CCRG-XJU/MPIFNet (accessed on 15 January 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Blaschke, T.; Lang, S.; Lorup, E.; Strobl, J.; Zeil, P. Object-oriented image processing in an integrated GIS/remote sensing environment and perspectives for environmental applications. Environ. Inf. Plan. Politics Public 2000, 2, 555–570. [Google Scholar]

- Maktav, D.; Erbek, F.; Jürgens, C. Remote sensing of urban areas. Int. J. Remote Sens. 2005, 26, 655–659. [Google Scholar] [CrossRef]

- Weiss, M.; Jacob, F.; Duveiller, G. Remote sensing for agricultural applications: A meta-review. Remote Sens. Environ. 2020, 236, 111402. [Google Scholar] [CrossRef]

- Zheng, Z.; Zhong, Y.; Wang, J.; Ma, A.; Zhang, L. Building damage assessment for rapid disaster response with a deep object-based semantic change detection framework: From natural disasters to man-made disasters. Remote Sens. Environ. 2021, 265, 112636. [Google Scholar] [CrossRef]

- Pal, S.K.; Ghosh, A.; Shankar, B.U. Segmentation of remotely sensed images with fuzzy thresholding, and quantitative evaluation. Int. J. Remote Sens. 2000, 21, 2269–2300. [Google Scholar] [CrossRef]

- Espindola, G.; Câmara, G.; Reis, I.; Bins, L.; Monteiro, A. Parameter selection for region-growing image segmentation algorithms using spatial autocorrelation. Int. J. Remote Sens. 2006, 27, 3035–3040. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 3431–3440. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241. [Google Scholar]

- Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- Chen, L.C. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar] [CrossRef]

- Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 833–851. [Google Scholar]

- Feng, S.; Song, R.; Yang, S.; Shi, D. U-net Remote Sensing Image Segmentation Algorithm Based on Attention Mechanism Optimization. In Proceedings of the 9th International Symposium on Computer, Information Processing and Technology, Xi’an, China, 24–26 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 633–636. [Google Scholar]

- Zuo, R.; Zhang, G.; Zhang, R.; Jia, X. A deformable attention network for high-resolution remote sensing images semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2021, 60, 4406314. [Google Scholar] [CrossRef]

- Li, X.; Xu, F.; Liu, F.; Lyu, X.; Tong, Y.; Xu, Z.; Zhou, J. A synergistical attention model for semantic segmentation of remote sensing images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5400916. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

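- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.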

- Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and multiscale transformer fusion network for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 2004612.

- Zhang, Z.; Huang, X.; Li, J. DWin-HRFormer: A high-resolution transformer model with directional windows for semantic segmentation of urban construction land. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5400714.

- He, X.; Zhou, Y.; Zhao, J.; Zhang, D.; Yao, R.; Xue, Y. Swin transformer embedding UNet for remote sensing image semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4408715.

- Ma, X.; Zhang, X.; Pun, M.O.; Liu, M. A multilevel multimodal fusion transformer for remote sensing semantic segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5403215.

- Huo, Y.; Gang, S.; Dong, L.; Guan, C. An Efficient Semantic Segmentation Method for Remote-Sensing Imagery Using Improved Coordinate Attention. Appl. Sci. 2024, 14, 4075.

- Ding, H.; Xia, B.; Liu, W.; Zhang, Z.; Zhang, J.; Wang, X.; Xu, S. A novel mamba architecture with a semantic transformer for efficient real-time remote sensing semantic segmentation. Remote Sens. 2024, 16, 2620.

- Yang, Y.; Yuan, G.; Li, J. Dual-Branch Network for Spatial–Channel Stream Modeling Based on the State-Space Model for Remote Sensing Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5907719.

- Mu, J.; Zhou, S.; Sun, X. PPMamba: Enhancing Semantic Segmentation in Remote Sensing Imagery by SS2D. IEEE Geosci. Remote Sens. Lett. 2025, 22, 6001705.

- Zhang, J.; Shao, M.; Wan, Y.; Meng, L.; Cao, X.; Wang, S. Boundary-aware spatial and frequency dual-domain transformer for remote sensing urban images segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5637718.

- Yang, Y.; Yuan, G.; Li, J. SFFNet: A Wavelet-Based Spatial and Frequency Domain Fusion Network for Remote Sensing Segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 3000617.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3146–3154.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

- Beltagy, I.; Peters, M.E.; Cohan, A. Longformer: The long-document transformer. arXiv 2020, arXiv:2004.05150.

- Zaheer, M.; Guruganesh, G.; Dubey, K.A.; Ainslie, J.; Alberti, C.; Ontanon, S.; Pham, P.; Ravula, A.; Wang, Q.; Yang, L.; et al. Big Bird: Transformers for longer sequences. Proc. Adv. Neural Inf. Process. Syst. 2020, 33, 17283–17297.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Zhu, L.; Liao, B.; Zhang, Q.; Wang, X.; Liu, W.; Wang, X. Vision Mamba: Efficient visual representation learning with bidirectional state space model. arXiv 2024, arXiv:2401.09417.

- Liu, Y.; Tian, Y.; Zhao, Y.; Yu, H.; Xie, L.; Wang, Y.; Ye, Q.; Liu, Y. VMamba: Visual State Space Model. CoRR 2024, arXiv:2401.10166.

- Zhang, D.; Zhang, D. Wavelet transform. In Fundamentals of Image Data Mining: Analysis, Features, Classification and Retrieval; Springer: Cham, Switzerland, 2019; pp. 35–44.

- Habte, S.B.; Ibenthal, A.; Bekele, E.T.; Debelee, T.G. Convolution Filter Equivariance/Invariance in Convolutional Neural Networks: A Survey. In Proceedings of the Pan-African Conference on Artificial Intelligence, Addis Ababa, Ethiopia, 4–5 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 191–205.

- Wang, C.; Shi, A.Y.; Wang, X.; Wu, F.M.; Huang, F.C.; Xu, L.Z. A novel multi-scale segmentation algorithm for high resolution remote sensing images based on wavelet transform and improved JSEG algorithm. Int. J. Light Electron Opt. 2014, 125, 5588–5595.

- Li, Y.; Liu, Z.; Yang, J.; Zhang, H. Wavelet transform feature enhancement for semantic segmentation of remote sensing images. Remote Sens. 2023, 15, 5644.

- Liu, Z.; Mao, H.; Wu, C.Y.; Feichtenhofer, C.; Darrell, T.; Xie, S. A convnet for the 2020s. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11976–11986.

- Ma, X.; Zhang, X.; Pun, M.O. RS3Mamba: Visual state space model for remote sensing image semantic segmentation. IEEE Geosci. Remote Sens. Lett. 2024, 21, 6011405.

- Zhang, R.; Zhang, Q.; Zhang, G. LSRFormer: Efficient transformer supply convolutional neural networks with global information for aerial image segmentation. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5610713.

- Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

- Li, R.; Wang, L.; Zhang, C.; Duan, C.; Zheng, S. A2-FPN for semantic segmentation of fine-resolution remotely sensed images. Int. J. Remote Sens. 2022, 43, 1131–1155.

- Li, R.; Zheng, S.; Zhang, C.; Duan, C.; Su, J.; Wang, L.; Atkinson, P.M. Multiattention Network for Semantic Segmentation of Fine-Resolution Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5607713.

- Ni, Y.; Liu, J.; Cui, J.; Yang, Y.; Wang, X. Edge guidance network for semantic segmentation of high resolution remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 9382–9395.

- Ni, Y.; Liu, J.; Chi, W.; Wang, X.; Li, D. CGGLNet: Semantic Segmentation Network for Remote Sensing Images Based on Category-Guided Global-Local Feature Interaction. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5615617.

- Wang, L.; Li, D.; Dong, S.; Meng, X.; Zhang, X.; Hong, D. PyramidMamba: Rethinking Pyramid Feature Fusion with Selective Space State Model for Semantic Segmentation of Remote Sensing Imagery. arXiv 2024, arXiv:2406.10828.

- Lv, Y.; Yu, Z.; Yin, Z.; Qin, J. Remote Sensing Image Road Segmentation Based on Conditions Perceived 3D UX-Net. J. Indian Soc. Remote Sens. 2025, 53, 4005–4015.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license.