Abstract

The operational environment of UAVs poses unique challenges for object detection compared with conventional scenarios. When UAVs capture remote sensing images from elevated altitudes, objects often appear minuscule and can be easily obscured by complex backgrounds. This increases the likelihood of false positives and missed detections, thereby complicating the detection process. Furthermore, the hardware resources available on UAV platforms are typically highly constrained. To meet deployment requirements, researchers often must sacrifice some detection accuracy in favor of a more lightweight model. To address these challenges, we propose PS-YOLO, a fast and precise network specifically designed for UAV-based object detection. In the proposed network, we first design a lightweight backbone based on partial convolution. Then, we introduce a more efficient neck network called FasterBIFFPN to replace the original PAFPN, enabling more effective multi-scale feature fusion. Finally, we propose the GSCD head. GSCD employs shared convolutions to enhance the network’s ability to learn common features across objects of different scales and introduces Normalized Gaussian Wasserstein Distance Loss (NWDLoss) to improve detection accuracy. This detection head effectively increases inference speed without significantly increasing parameter counts. The proposed PS-YOLO is validated on the Visdrone2019 dataset, and the results demonstrate that, compared with the baseline model YOLOv11-s, PS-YOLO provides a 2% improvement in precision, a 0.5% improvement in recall, a 1.3% improvement in mean average precision (mAP), a 41.3% reduction in parameter count, a 6.1% reduction in computational cost, and a 26.73 FPS improvement in inference speed.

1. Introduction

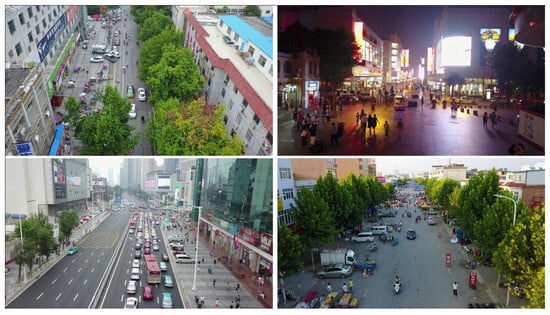

Unmanned aerial vehicles (UAVs) possess the unique capability to operate in three-dimensional spaces inaccessible to humans, significantly extending human perception and operational capacity in task execution. In recent years, UAVs have demonstrated critical utility across diverse domains, including agricultural monitoring [1], urban planning [2], transportation management [3], and post-disaster rescue [4]. The successful execution of these tasks relies on reliable object detection, a fundamental requirement for many advanced computer vision applications [5]. In recent years, neural networks have attracted considerable research attention owing to their powerful feature extraction capabilities and autonomous learning mechanisms. As a result, deep neural network-based methods have become the dominant approach for object detection. Within computer vision, UAV object detection utilizing deep neural networks has emerged as a prominent research area. However, most current object detection methods are designed for large datasets such as PASCAL VOC [6,7] and COCO [8]. These datasets typically consist of natural scenes with simple backgrounds, where objects occupy a significant portion of the images, enabling relatively high detection accuracy. In contrast, UAV aerial imagery presents unique challenges. Captured from high altitudes with wide fields of view, the objects of interest often occupy only a small percentage of the image and tend to be densely distributed. When ground objects are extremely small, extracting their texture and other distinguishing features becomes particularly challenging. Moreover, UAV aerial imagery often contains complex, dynamic backgrounds. During ground reconnaissance, objects are frequently occluded by clouds, mountainous terrain, dense vegetation, and urban structures, resulting in substantial background clutter. These factors significantly complicate data-driven object detection [9], as demonstrated in Figure 1.

Figure 1. Typical UAV image examples: (a) small objects; (b) densely distributed objects; and (c) complex background.

As shown in Figure 1, object detection in UAV imagery poses significant challenges. Direct application of mainstream object detection models to UAV-based tasks often yields suboptimal accuracy. In practical implementations, UAVs typically capture video streams while onboard computers perform real-time detection, with results subsequently transmitted to ground stations. However, UAV platforms face significant hardware limitations. Conventional convolutional neural networks require extensive multiply–accumulate operations and substantial computational resources for each network layer. Even with the deployment of lightweight models, using those that are not specifically designed for the task can lead to further declines in detection accuracy.

To overcome these limitations, we present PS-YOLO, an optimized object detection network specifically designed for UAV applications. Based on the YOLOv11 framework, PS-YOLO incorporates architectural enhancements in both convolutional operations and model structure. Our approach achieves simultaneous model compression and accuracy improvement, enabling faster and more precise object detection in UAV systems.

The primary contributions of this paper are as follows:

- We integrate partial convolution into YOLOv11’s C3k2 module and redesign the backbone network using this enhanced module. This architectural modification yields a lighter and faster model while preserving high detection accuracy.

- We develop FasterBIFFPN (Faster Bidirectional Feature Fusion Pyramid Network), a neck module that enables efficient multi-scale feature fusion. This architecture achieves robust feature integration with fewer parameters while delivering strong performance in UAV object detection.

- We propose the Gaussian-Shared Convolutional Detection (GSCD) head, an efficient detection head that improves feature extraction across multi-scale feature maps through shared convolutional operations while maintaining low computational overhead. Additionally, GSCD employs Normalized Gaussian Wasserstein Distance Loss (NWDLoss) for bounding box regression, enhancing object detection performance.

We applied the proposed series of improvement strategies to the YOLOv11-s model and conducted comparison and ablation experiments on the Visdrone2019 dataset. The experimental results show that the proposed modules effectively improve the accuracy of the model, and that PS-YOLO achieves faster detection speed and higher detection accuracy with 41.3% fewer parameters than the baseline model, making it well suited to UAV applications.

2. Related Work

2.1. UAV Object Detection

Object detection is a crucial task in computer vision, forming the basis for numerous downstream applications. In recent years, the rapid development of unmanned aerial vehicle (UAV) technology has led to its extensive application in both military and civilian fields, rendering UAV-based object detection a vital research area. UAVs usually fly at altitudes ranging from tens to hundreds of meters. From a UAV’s perspective, the field of view is generally wide, but the number of pixels representing an object is small, the resolution is low, and the available feature information is limited. These factors pose significant challenges to UAV image-based object detection. Consequently, many researchers have designed networks specifically for object detection from a UAV perspective. For example, Song et al. [10] built upon the single-stage object detector (SSD) and performed a targeted search for aspect ratios and scales of anchor boxes using k-means++. Additionally, they argued that multiple pooling operations could lead to sampling aliasing effects and introduced low-pass filters to improve the detection of small objects. TPH-YOLOv5 [11] incorporates the transformer prediction head and the CBAM module into the YOLOv5 [12] framework, significantly improving the network’s detection accuracy for small ground objects. LAI-YOLO [13] is built upon the YOLOv5 framework and significantly improves the model’s accuracy by introducing a novel feature fusion network (DFM-CPFN) and a feature extraction module (VoVNet). LAI-YOLO strikes an improved balance between detection accuracy and efficiency. UAV-YOLOv8 [14] integrates the BiFormer attention module and the FFNB feature processing module into the YOLOv8 backbone, while also introducing WIoU-v3 into the regression loss. These enhancements significantly improve the model’s detection accuracy for small objects while simultaneously reducing its parameter count. 
Drone-YOLO [15] introduces an enhanced neck component, sandwich fusion module, and RepVGG module into YOLOv8 [16], greatly boosting detection accuracy for small objects. ERGW-net [17] leverages the strengths of the ResNet, Inception Net, and YOLOv8 networks by improving backbone and neck structures, as well as designing the LGWPIoU loss function, resulting in enhanced efficiency and detection accuracy for object detection tasks. PVswin-YOLOv8-s [18] introduces innovative designs such as Swin Transformer and Soft-NMS to improve the network’s capability of detecting pedestrians and vehicles in occluded scenes.

Although the aforementioned models are remarkable, they fail to achieve an optimal balance between accuracy and computational efficiency. This situation underscores the need for more efficient network architectures or sophisticated optimization strategies to further enhance model performance.

2.2. Network Lightweighting Techniques

Neural network-based approaches have achieved remarkable success in the field of image processing. However, their large number of parameters and high computational complexity present challenges for deployment on resource-constrained devices. As a result, network lightweighting has emerged as a critical area of research. Current lightweighting techniques primarily include the design of lightweight model architectures [19], quantization [20], knowledge distillation [21], and network pruning [22]. Quantization is an optimization technique that maps high-precision model parameters in neural networks to low-precision representations, thereby reducing computational complexity and enhancing inference efficiency. Conventional neural network models typically employ 32-bit floating-point (FP32) numbers to fully capture the dynamic range of parameters and the representational capacity of features. To enable lightweight deployment, trained model parameters are often converted from FP32 to lower-precision formats such as 16-bit half-precision floating point (FP16) or 8-bit integers (INT8s), thereby reducing model sizes, storage requirements, and computational costs. Knowledge distillation is a technique that transfers the knowledge of a large, high-performing model (the teacher model) to a smaller model (the student model). Its core idea is to use the output of the teacher model to guide the training of the student model, allowing it to retain the essential information learned by the larger model. By mimicking the teacher model’s outputs, the student model can more efficiently learn the underlying features and patterns in the data. Network pruning aims to reduce the complexity of a neural network by removing redundant parameters, channels, or modules that contribute minimally to the model’s expressive capacity. Eliminating these redundancies directly decreases the model size and lowers computational overhead, typically without significantly compromising the model’s accuracy. 
Lightweight architectures aim to achieve superior accuracy while minimizing the number of parameters and computational overhead. The development of efficient convolutional neural network architectures customized for edge devices has become one of the most active research directions. As a result, many innovative network architectures have emerged. MobileNetV1 [23] pioneered depthwise separable convolution, a technique that separates standard convolutions into two stages: depthwise and pointwise convolutions. Depthwise separable convolution substantially reduces parameter count while preserving model accuracy. Unlike the simple stacking method in MobileNetV1, MobileNetV2 [24] adopts the skip connection technique from ResNet and utilizes inverted residual blocks to further enhance model performance. MobileNetV3 [25] employs neural architecture search (NAS) to design a more efficient network structure and integrates various optimization techniques. These include adding the SE (Squeeze-and-Excitation) module after depthwise convolution, replacing the swish activation function with the more efficient h-swish, and optimizing the number of channels. These enhancements significantly improve the model’s performance while preserving its lightweight architecture. ShuffleNet [26,27] significantly reduces model parameters and accelerates inference by introducing group convolution and channel shuffle operations. Group convolution decreases computational complexity, while channel shuffle enhances information flow across feature channels, ensuring efficient feature learning. GhostNet [28] uses cheap linear transformations to generate additional feature maps, enhancing feature representation while maintaining a lightweight design. This approach distinguished GhostNet from other lightweight networks of its time.
However, recent studies have shown that while group convolution and depthwise convolution reduce the number of parameters, they also lead to increased memory access costs. As a result, the actual latency of the model may not necessarily improve and, in some cases, may even increase. Therefore, Chen et al. [29] developed a novel convolutional operator named partial convolution, which reduces computational cost and memory access while maintaining minimal accuracy loss. This has made partial convolution a highly competitive solution in recent years.
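As a concrete illustration of the FP32-to-INT8 mapping described earlier in this subsection, the following is a minimal sketch of symmetric per-tensor quantization. The function names, the random weight tensor, and the choice of a single scale factor per tensor are illustrative assumptions; the cited works may use different schemes (for example, per-channel scales or calibration-based ranges).

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization: FP32 values -> INT8 values plus one scale."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map INT8 values back to approximate FP32 values."""
    return q.astype(np.float32) * scale

# Hypothetical weight tensor standing in for one trained layer.
rng = np.random.default_rng(0)
w_fp32 = rng.normal(0.0, 0.1, size=(64, 64)).astype(np.float32)

w_int8, scale = quantize_int8(w_fp32)
w_restored = dequantize(w_int8, scale)
max_error = float(np.max(np.abs(w_fp32 - w_restored)))

print("FP32 bytes:", w_fp32.nbytes, "INT8 bytes:", w_int8.nbytes)
print("max reconstruction error:", max_error)
```

The INT8 tensor needs one quarter of the storage of the FP32 tensor, and the rounding error of each weight is bounded by about half of the scale factor.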

2.3. Lightweight UAV Object Detection Model

To ensure that the designed UAV object detection model meets the requirements of practical applications, designers commonly employ the model lightweighting techniques discussed in Section 2.2 to develop efficient and lightweight UAV object detection models. For example, RTD-Net [30] integrates both a transformer module and a lightweight feature extraction module (LEM) into the backbone network, enhancing detection accuracy without substantially increasing model size. Bu et al. [31] applied INT8 scale factor quantization to the YOLOv8 model and deployed it on an unmanned aerial platform, achieving an average inference speed exceeding 100 FPS. EL-YOLO [32] employed DSConv and TensorRT quantization techniques to perform lightweight optimization across the entire network, achieving a processing speed of 24 FPS on an airborne edge computing platform. Zhang et al. [33] proposed a real-time detection and identification algorithm for UAV infrared detection, using Picodet as the base framework and incorporating an improved lightweight LCNet as the backbone network, resulting in a 31 FPS increase in detection speed and a 7% improvement in detection accuracy. Liu et al. [34] developed a lightweight real-time object detection model for UAV remote sensing, utilizing Fasternet-16 as the backbone and constructing a neck network with PConv. The model is memory efficient and demonstrates significant speed advantages on low-power hardware platforms. LUMF-YOLO [35] adopts YOLOv4 as the base framework and integrates the lightweight MobileNetV2 as the backbone network, enhancing model accuracy while reducing the number of parameters. Muzammul et al. [36] proposed a quantum-inspired ultra-small object detection model incorporating structured pruning and INT8 quantization, effectively reducing computational load and achieving an inference time of 8.1 ms. 
MINIAOD [37] employs Ghost convolution to construct a lightweight backbone network and integrates the GSConv module to build a feature-enhanced network, improving the model’s target learning capability while reducing computational complexity.

Although the aforementioned models have yielded promising results, several limitations remain. Some methods excessively prioritize real-time performance at the expense of network architecture design, leading to suboptimal performance in complex scenarios. Conversely, other approaches focus too heavily on accuracy in network architecture design, resulting in models that are initially very large, and even after quantization and pruning still retain a vast number of parameters. This suggests that the previous models do not achieve an optimal balance between accuracy and real-time performance. Therefore, greater effort is required in carefully designing models. Enhancing both the accuracy and generalization ability of the model, while maintaining its lightweight nature, has become a key research focus in lightweight UAV object detection.

3. Method

3.1. Framework of PS-YOLO

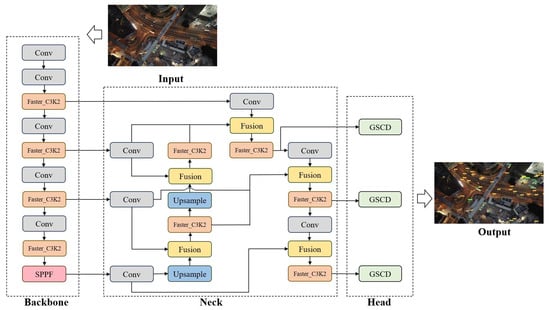

To address the challenges in UAV remote sensing object detection, including low model accuracy, the conflict between real-time performance and accuracy, and the difficulty of deployment on edge devices, this paper introduces PS-YOLO, a lightweight network tailored for UAV image object detection. The PS-YOLO model is based on the YOLOv11-s [38] framework, and we improve the backbone network, neck, and detection head to achieve faster and more accurate UAV object detection. Figure 2 presents the framework of PS-YOLO.

Figure 2. Framework of PS-YOLO.

Specifically, in the backbone network, we integrate partial convolution operators into the original C3k2 structure, introducing the Faster_C3k2 module, which reduces the number of parameters. By incorporating Faster_C3k2 into the backbone, we effectively decrease the overall model complexity. Additionally, we reevaluate the C2PSA attention module in YOLOv11. While the C2PSA module can enhance detection accuracy in UAV-based object detection tasks, it significantly increases the parameter count. This added complexity outweighs its benefits when deploying the network on UAV platforms. Therefore, we remove the original C2PSA module to enhance deployment efficiency. In the neck network, we redesign the original structure by incorporating bidirectional information flow through cross-scale connections and learnable weights. Additionally, we embed Faster_C3k2 into the neck network, introducing FasterBIFFPN. This lightweight and efficient neck network enhances information interaction between features of different scales at a minimal computational cost, improving detection accuracy while reducing parameter count. GSCD is a shared convolution-based detection head, where shared convolution enhances the multi-scale detection capability while keeping the parameter count relatively low. Additionally, we observe that the IoU-based loss function performs poorly in small object detection. To address this, we introduce NWDLoss, which improves detection accuracy without increasing the parameter count.

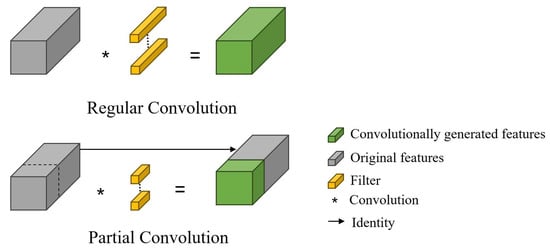

3.2. Partial Convolution and Faster_C3k2

Fasternet [29] is a novel lightweight convolutional neural network architecture that has garnered significant attention due to its simple design concept and compact network structure. The innovation of Fasternet lies in its introduction of partial convolution (PConv). Unlike previous methods, such as depthwise separable convolution and group convolution, PConv does not primarily aim for extreme parameter reduction. Instead, it focuses on minimizing both computational redundancy and memory access overhead, resulting in improved inference speed. The operation of PConv is illustrated in Figure 3. Given a set of input feature maps, PConv performs a convolution operation on a subset of the channels, leaves the remaining channels unprocessed, and then concatenates the convolved feature maps with the untouched ones. Some studies [28,39] have demonstrated that the feature maps of different channels are highly similar, i.e., the feature maps contain substantial redundancy. Therefore, PConv convolves the feature maps of only some of the channels, which reduces the computational cost while achieving comparable accuracy. For convenience, PConv usually selects a set of consecutive channels for feature extraction.

Figure 3. Regular Convolution and Partial Convolution.
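The PConv operation described above can be sketched in a few lines of NumPy. This is a simplified illustration, not the authors' implementation: the naive 3 × 3 convolution, the tensor sizes, and the choice of the first `c_p` channels as the convolved subset are assumptions made for the example.

```python
import numpy as np

def conv3x3_same(x, weight):
    """Naive 3x3 convolution, stride 1, zero padding 1.

    x: (C_in, H, W); weight: (C_out, C_in, 3, 3).
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w), dtype=x.dtype)
    for dy in range(3):
        for dx in range(3):
            patch = padded[:, dy:dy + h, dx:dx + w]  # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", weight[:, :, dy, dx], patch)
    return out

def partial_conv(x, weight, c_p):
    """Convolve the first c_p channels; pass the remaining channels through unchanged."""
    conv_part = conv3x3_same(x[:c_p], weight)
    return np.concatenate([conv_part, x[c_p:]], axis=0)

# Hypothetical sizes: 16 channels, of which 4 consecutive channels are convolved.
rng = np.random.default_rng(0)
c, h, w, c_p = 16, 8, 8, 4
x = rng.normal(size=(c, h, w))
weight = rng.normal(size=(c_p, c_p, 3, 3))

y = partial_conv(x, weight, c_p)
print(y.shape)
```

The output keeps the input shape, and only the `c_p` convolved channels differ from the input, which is why both the arithmetic and the memory traffic scale with `c_p` rather than with the full channel count.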

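For the purpose of analysis, it is assumed that the input and output feature maps have the same number of channels. For an input feature map $I \in \mathbb{R}^{c \times h \times w}$, the computational cost (FLOPs) of a standard convolution operation is given by

$$\mathrm{FLOPs}_{\mathrm{Conv}} = h \times w \times k^{2} \times c^{2} \tag{1}$$

Using PConv, the computational cost is significantly reduced to

$$\mathrm{FLOPs}_{\mathrm{PConv}} = h \times w \times k^{2} \times c_{p}^{2} \tag{2}$$

The memory access count for a standard convolution (Conv) operation is given by

$$\mathrm{MAC}_{\mathrm{Conv}} = h \times w \times 2c + k^{2} \times c^{2} \approx h \times w \times 2c \tag{3}$$

For PConv, the memory access count is significantly reduced to

$$\mathrm{MAC}_{\mathrm{PConv}} = h \times w \times 2c_{p} + k^{2} \times c_{p}^{2} \approx h \times w \times 2c_{p} \tag{4}$$

In Equations (1)–(4), $h$ denotes the height of the feature map, $w$ denotes the width of the feature map, $c$ denotes the number of channels in the input (or output) feature map, $k$ is the kernel size, and $c_p$ represents the number of channels on which the convolution operation is performed. When setting the ratio $c_p / c = 1/4$, the number of FLOPs (Floating-Point Operations) for PConv is reduced to $1/16$ of that of standard convolution, and the memory access count is reduced to approximately $1/4$ of the standard convolution’s memory access.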
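The cost comparison can be checked numerically. The sketch below assumes the cost expressions from the FasterNet analysis [29] (FLOPs of $h \cdot w \cdot k^2 \cdot c^2$ and memory access of $h \cdot w \cdot 2c + k^2 \cdot c^2$, with $c$ replaced by $c_p$ for PConv) and a hypothetical layer size; the helper names are illustrative.

```python
def conv_flops(h, w, k, c):
    """FLOPs of a standard k x k convolution with c input and c output channels."""
    return h * w * k * k * c * c

def pconv_flops(h, w, k, c_p):
    """FLOPs of PConv, which convolves only c_p channels."""
    return h * w * k * k * c_p * c_p

def conv_memory_access(h, w, k, c):
    """Feature map reads and writes plus kernel weights for a standard convolution."""
    return h * w * 2 * c + k * k * c * c

def pconv_memory_access(h, w, k, c_p):
    """Same accounting restricted to the c_p convolved channels."""
    return h * w * 2 * c_p + k * k * c_p * c_p

# Hypothetical layer: 80 x 80 feature map, 3 x 3 kernel, 128 channels, c_p = c / 4.
h, w, k, c = 80, 80, 3, 128
c_p = c // 4

flops_ratio = pconv_flops(h, w, k, c_p) / conv_flops(h, w, k, c)
mem_ratio = pconv_memory_access(h, w, k, c_p) / conv_memory_access(h, w, k, c)
print("FLOPs ratio:", flops_ratio)
print("memory access ratio:", mem_ratio)
```

With $c_p = c/4$ the FLOPs ratio is exactly 1/16, while the memory access ratio is close to, but not exactly, 1/4 because of the kernel weight term.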

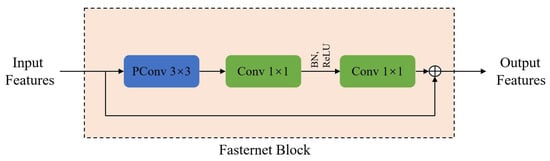

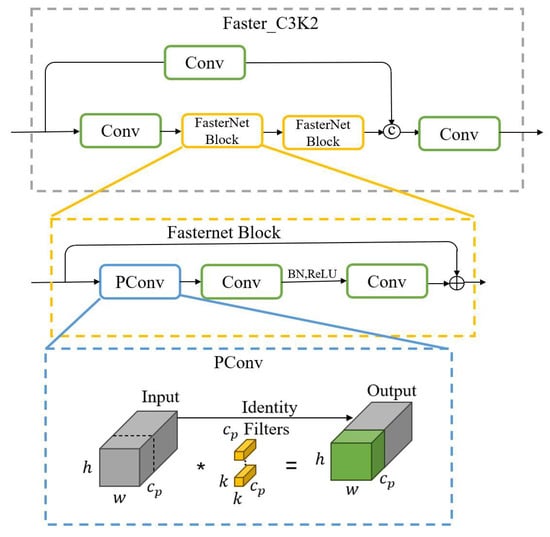

Fasternet is primarily composed of stacked Fasternet Blocks, and the structure of a Fasternet Block is shown in Figure 4. Each Fasternet Block consists of one PConv module and two pointwise convolutions (PWConvs). The first PWConv expands the channel dimension and is followed by an activation function to enhance feature representation, while the second PWConv compresses the channel dimension to retain the most important features. This structure maximizes the utilization of the original feature information while maintaining high accuracy.

Figure 4. Structure diagram of Fasternet Block.

It is worth noting that although the design of partial convolution is elegant, its accuracy is still inferior to that of conventional convolution. Partial convolution is specifically designed for lightweight models: it leaves a portion of the original features unprocessed and convolves only a subset of them to enhance real-time performance. Consequently, replacing all conventional convolutions with partial convolutions would significantly degrade accuracy. In UAV object detection, prioritizing real-time performance often compromises model accuracy, making it difficult to provide reliable detection services. On the other hand, prioritizing accuracy can overwhelm the UAV platform with a large number of parameters and high computational demands. To address this challenge and better balance accuracy with computational load, we replace the Bottleneck in C3k2 with the Fasternet Block to create the Faster_C3k2 module. We then replace the original C3k2 module in the network with Faster_C3k2. This approach reduces network complexity while maintaining sufficiently high accuracy. Figure 5 shows the structure of Faster_C3k2.

Figure 5. Structure diagram of Faster_C3k2.

3.3. Faster Bidirectional Feature Fusion Pyramid Network

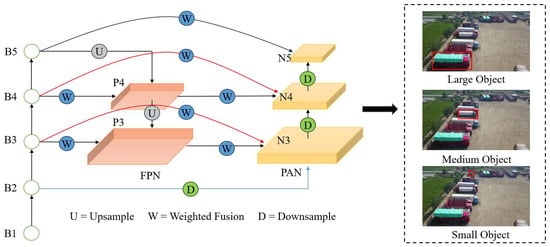

The neck network of YOLOv11 retains the classic Path Aggregation Feature Pyramid Network (PAFPN) [40]. PAFPN builds on the Feature Pyramid Network (FPN) [41] by incorporating a bottom-up path. The original top-down path uses upsampling operations to propagate deep semantic information to shallower layers, thereby enhancing the semantic representation of shallow features. In contrast, the bottom-up path utilizes convolutional operations to pass shallow detailed features to deeper layers. This bi-directional structure enhances the neck network’s ability to capture and integrate both semantic and detailed information. For small objects, detailed information from shallow layers is more important, while for large objects, semantic information from deeper layers plays a more crucial role. However, PAFPN directly fuses feature maps of different resolutions, which can result in information conflicts. This issue becomes even more pronounced in UAV imagery, where the features of small objects are often overshadowed by those of larger objects. Furthermore, the computational cost associated with the PAFPN structure is relatively high for a lightweight network, prompting the need to reconsider YOLOv11’s neck design. Drawing inspiration from BIFPN [42], we introduce cross-scale connectivity and feature-weighted fusion operations into the neck without increasing the parameter count. Additionally, we integrate the more lightweight Faster_C3k2 into the neck network, resulting in the Faster Bidirectional Feature Fusion Pyramid Network (FasterBIFFPN). Cross-scale connectivity enables feature maps from different layers to exchange information more effectively, enhancing the semantic representation of small objects and detailed information of large objects. Meanwhile, feature-weighted fusion adaptively adjusts the importance of features based on the characteristics of objects at different scales. 
This is particularly crucial for small object detection in UAV images, as the features of small objects often do not reach the deeper layers of the network, while deeper feature maps still contain residual background information. By increasing the weight of shallow features and decreasing the weight of deeper features, background clutter is effectively suppressed, thereby improving detection performance. This approach further reduces the parameter count in the neck while achieving more efficient feature fusion, all without relying on any attention mechanisms. Figure 6 illustrates the structure of FasterBIFFPN.

Figure 6. Structure diagram of FasterBIFFPN.

In the figure, B1 to B5 represent feature maps at varying resolutions within the backbone network, P3 to P4 denote feature maps at different resolutions in the FPN, and N3 to N5 indicate feature maps at distinct resolutions in the PAN. The B1 feature map exhibits the highest resolution, with the resolutions of the feature maps from B1 to B5 progressively halving. Similarly, the feature maps in the FPN and PAN follow the same resolution pattern. The B2-B4 feature maps in the backbone network are fed into FasterBIFFPN, while we disregard feature maps that have only a single input path and do not process them. This is because such feature maps, typically obtained through upsampling and downsampling, contribute less to feature fusion. In contrast, feature maps with two input paths are typically involved in feature fusion, and as such, they contribute more significantly to the fusion process. We introduce connections at the nodes where the backbone network feature maps align with the resolution of the PAN’s feature maps, enabling the fusion of richer features without the need for additional modules. The connections between B4-N4 and B3-N3 are supplementary connections, indicated by red arrows in the figure. Notably, the N3 feature map in the PAN is initially formed by stitching B3 from the backbone network with P3 from the FPN. In FasterBIFFPN, we introduce an additional downsampling path to enhance feature fusion for small object detection. This increases the proportion of original detailed features within the feature map, thereby strengthening the representation of small objects. The additional downsampling paths are indicated by blue arrows in the figure. The feature map fusion process can be represented as follows:

In Equations (5) and (6), Faster_C3k2 and Conv refer to the corresponding module operations. The weighted fusion operation multiplies the feature maps at the same resolution by their respective learnable weights and then sums them. The weight for each feature map is initially set to 1, and as training progresses, the weights are updated automatically by backpropagating the loss. Here, we adopt the fast normalized fusion method from BIFPN [42], and the process is as follows:

$$O = \sum_{i} \frac{w_{i}}{\epsilon + \sum_{j} w_{j}} \cdot I_{i} \tag{7}$$

In this process, $O$ represents the weighted feature map, and $I_i$ denotes the different feature maps of the same size. Each learnable weight $w_i$ is passed through the ReLU function to keep it non-negative, after which the weights are normalized by their sum. The constant $\epsilon$ is a small value introduced to guarantee the stability of the normalization process.
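The fast normalized fusion step can be illustrated with a short NumPy sketch. It assumes the BIFPN formulation in which each weight is passed through ReLU and divided by the sum of all weights plus a small constant; the function name, the feature map sizes, and the value `eps = 1e-4` are illustrative assumptions, and the weights here are fixed numbers rather than trained parameters.

```python
import numpy as np

def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-sized feature maps with ReLU-clipped, sum-normalized weights."""
    w = np.maximum(np.asarray(weights, dtype=np.float64), 0.0)  # ReLU keeps weights >= 0
    norm = w / (eps + w.sum())                                  # eps avoids division by zero
    return sum(n * f for n, f in zip(norm, features))

# Hypothetical shallow and deep feature maps that already share one resolution.
rng = np.random.default_rng(0)
shallow = rng.normal(size=(8, 16, 16))
deep = rng.normal(size=(8, 16, 16))

# At initialization every weight is 1, so the fusion is close to a plain average.
fused_init = fast_normalized_fusion([shallow, deep], [1.0, 1.0])

# A negative weight is clipped to zero, so only the shallow map contributes.
fused_clipped = fast_normalized_fusion([shallow, deep], [1.0, -2.0])
print(fused_init.shape)
```

Because the normalization uses a simple sum instead of a softmax, the fusion adds only one scalar parameter per input feature map and a handful of arithmetic operations.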

3.4. Gaussian-Shared Convolutional Detection Head

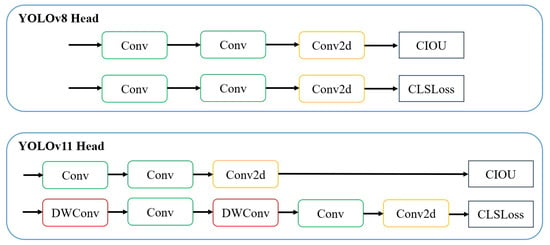

Similar to YOLOv8, YOLOv11 employs a decoupled detection head to separate localization and classification tasks. This separation minimizes interference between the two tasks, resulting in improved performance, especially in complex scenes. The detection head structures of YOLOv8 and YOLOv11 are illustrated in Figure 7.

Figure 7. YOLOv8 detection head and YOLOv11 detection head.

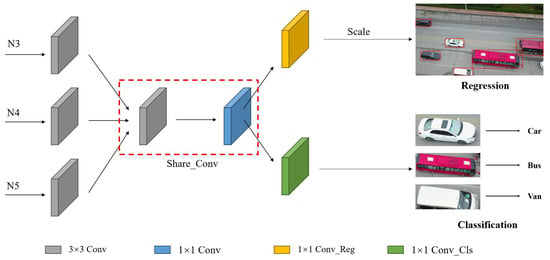

However, UAV images often contain not only complex scenes but also a large number of small objects. This combination significantly reduces both the inference speed and detection accuracy of the model, posing a considerable challenge for UAV object detection. To make matters worse, the detection head of YOLOv11 employs depthwise convolution (DWConv) to reduce parameter count. Although DWConv effectively lowers the number of parameters, it introduces a high volume of memory access operations and makes parallelization of independent channel-wise convolutions difficult. As a result, the actual inference speed is further diminished. Inspired by Faster R-CNN [43] and Normalized Gaussian Wasserstein Distance Loss (NWDLoss) [44], we designed a detection head called the Gaussian-Shared Convolutional Detection (GSCD) head. On one hand, GSCD incorporates shared convolution to enhance the model’s efficiency in feature extraction and to improve its ability to generalize across feature maps of varying sizes. This contributes to improved overall performance and increased inference speed. On the other hand, GSCD integrates NWDLoss to enhance the model’s detection capability, particularly for small objects. Figure 8 illustrates the structure of the GSCD head.

Figure 8. Structure diagram of GSCD head.

In the figure, N3 to N5 represent the three feature maps with varying resolutions produced by the neck network. The Share_Conv module consists of a 3 × 3 convolution layer and a 1 × 1 convolution layer. Both the Conv_Reg and Conv_Cls components are convolutional layers without normalization or activation functions. Initially, the three feature maps are passed through a 3 × 3 convolution to unify their channel dimensions. They are then simultaneously input into the Share_Conv module to extract shared features across different scales. Finally, the extracted features are fed into the respective regression and classification branches.

In the regression branch, to detect objects at different scales, the three features are scaled differently. Here, we introduce three learnable factors, Scale, which apply element-wise multiplication to adjust the regression predictions without changing the feature map size. The scaled regression features are concatenated with the features from the classification branch, and the final detection results are output. All the aforementioned convolutional operations are standard convolutions, which significantly enhance feature extraction efficiency without compromising accuracy. This design improves inference speed while maintaining a low parameter count, thereby ensuring efficient real-time performance in UAV object detection.
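The effect of weight sharing and per-level scaling can be sketched as follows. This is a deliberately reduced illustration, not the GSCD head itself: it uses only 1 × 1 convolutions, a ReLU between the two layers, a regression projection shared by all levels, and hypothetical channel counts and resolutions, all of which are assumptions made for the example.

```python
import numpy as np

def pointwise_conv(x, weight):
    """1x1 convolution: x is (C_in, H, W), weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)

rng = np.random.default_rng(0)
channels, reg_out = 32, 4

# Three neck outputs with different resolutions and already unified channel counts.
levels = [rng.normal(size=(channels, s, s)) for s in (80, 40, 20)]

shared_weight = rng.normal(size=(channels, channels))  # one weight set reused by every level
reg_weight = rng.normal(size=(reg_out, channels))      # regression projection (assumed shared)
scales = np.ones(3)                                    # one learnable scalar per level

outputs = []
for feat, scale in zip(levels, scales):
    shared = np.maximum(pointwise_conv(feat, shared_weight), 0.0)
    outputs.append(scale * pointwise_conv(shared, reg_weight))  # scaling keeps the map size

shared_params = shared_weight.size + reg_weight.size + scales.size
separate_params = 3 * (shared_weight.size + reg_weight.size)
print([o.shape for o in outputs], shared_params, separate_params)
```

In this toy setting the shared head stores one set of convolution weights plus three scalars, whereas three independent branches would store three full sets, which is the source of the parameter saving.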

Traditional IoU-based loss functions primarily focus on the overlap area between the predicted and ground truth bounding boxes. This makes the detection head overly sensitive to positional deviations, especially for small objects. Even a slight shift in the predicted bounding box can lead to significant fluctuations in the IoU-based loss value. Therefore, it is necessary to improve the loss function to address this limitation. NWDLoss models bounding boxes as two-dimensional (2D) Gaussian distributions and measures the similarity between them by calculating the distance between their respective distributions. Unlike IoU-based losses, NWDLoss is not significantly affected by the degree of overlap between the predicted and ground truth boxes. This property alleviates gradient optimization difficulties when the boxes do not overlap and enhances robustness in detecting small objects. Therefore, we incorporate NWDLoss into the regression loss function to improve performance, particularly for small object detection.

The principle of NWDLoss is as follows:

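For a bounding box $R = (c_x, c_y, w, h)$ with center coordinates $(c_x, c_y)$, width $w$, and height $h$, the inscribed ellipse of $R$ can be represented as follows:

$$\frac{(x - c_x)^2}{(w/2)^2} + \frac{(y - c_y)^2}{(h/2)^2} = 1. \tag{8}$$

The probability density function (PDF) of the 2D Gaussian distribution is given by

$$f(\mathbf{x} \mid \boldsymbol{\mu}, \boldsymbol{\Sigma}) = \frac{\exp\left(-\frac{1}{2}(\mathbf{x} - \boldsymbol{\mu})^{\top} \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu})\right)}{2\pi \lvert \boldsymbol{\Sigma} \rvert^{1/2}}, \tag{9}$$

where $\mathbf{x}$ represents the coordinates $(x, y)$, $\boldsymbol{\mu}$ represents the mean vector, and $\boldsymbol{\Sigma}$ represents the covariance matrix. When

$$(\mathbf{x} - \boldsymbol{\mu})^{\top} \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu}) = 1, \tag{10}$$

the ellipse in Equation (8) becomes a density contour of the 2D Gaussian distribution. The horizontal bounding box $R$ can therefore be modeled as a 2D Gaussian distribution $\mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma})$, where

$$\boldsymbol{\mu} = \begin{bmatrix} c_x \\ c_y \end{bmatrix}, \quad \boldsymbol{\Sigma} = \begin{bmatrix} \frac{w^2}{4} & 0 \\ 0 & \frac{h^2}{4} \end{bmatrix}. \tag{11}$$

For 2D Gaussian distributions $\mathcal{N}_1 = \mathcal{N}(\boldsymbol{\mu}_1, \boldsymbol{\Sigma}_1)$ and $\mathcal{N}_2 = \mathcal{N}(\boldsymbol{\mu}_2, \boldsymbol{\Sigma}_2)$, the second-order Wasserstein distance between $\mathcal{N}_1$ and $\mathcal{N}_2$ is defined as

$$W_2^2(\mathcal{N}_1, \mathcal{N}_2) = \lVert \boldsymbol{\mu}_1 - \boldsymbol{\mu}_2 \rVert_2^2 + \lVert \boldsymbol{\Sigma}_1^{1/2} - \boldsymbol{\Sigma}_2^{1/2} \rVert_F^2, \tag{12}$$

where $\lVert \cdot \rVert_F$ is the Frobenius norm. For the Gaussian distributions $\mathcal{N}_a$ and $\mathcal{N}_b$ modeled by the bounding boxes $A = (c_{x_a}, c_{y_a}, w_a, h_a)$ and $B = (c_{x_b}, c_{y_b}, w_b, h_b)$, Equation (12) can be simplified as

$$W_2^2(\mathcal{N}_a, \mathcal{N}_b) = \left\lVert \left[c_{x_a}, c_{y_a}, \frac{w_a}{2}, \frac{h_a}{2}\right]^{\top} - \left[c_{x_b}, c_{y_b}, \frac{w_b}{2}, \frac{h_b}{2}\right]^{\top} \right\rVert_2^2. \tag{13}$$

Normalization using the exponential form yields the normalized Wasserstein distance

$$NWD(\mathcal{N}_a, \mathcal{N}_b) = \exp\left(-\frac{\sqrt{W_2^2(\mathcal{N}_a, \mathcal{N}_b)}}{C}\right), \tag{14}$$

where $C$ is the average absolute size of the objects, used to normalize the Wasserstein distance, which is calculated as expressed below:

$$C = \frac{1}{N} \sum_{i=1}^{N} \sqrt{w_i h_i}, \tag{15}$$

where $N$ is the total number of objects in the dataset, and $w_i$ and $h_i$ are the width and height of the $i$-th object box, respectively.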
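As a minimal sketch of the distance described above, the code below models each box (center, width, height) as a 2D Gaussian with mean at the box center and standard deviations equal to half the width and half the height. It then evaluates the squared second-order Wasserstein distance and its exponential normalization. The normalization constant `C = 12.8` and the example boxes are arbitrary illustrative values, not figures from the paper.

```python
import math


def wasserstein2_sq(box_a, box_b):
    """Squared 2-Wasserstein distance between Gaussians fitted to (cx, cy, w, h) boxes."""
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    return ((cxa - cxb) ** 2 + (cya - cyb) ** 2
            + ((wa - wb) / 2) ** 2 + ((ha - hb) / 2) ** 2)


def nwd(box_a, box_b, c):
    """Normalized Wasserstein distance in (0, 1]; 1 means identical boxes."""
    return math.exp(-math.sqrt(wasserstein2_sq(box_a, box_b)) / c)


C = 12.8  # assumed normalization constant; the paper derives it from dataset statistics

gt = (10.0, 10.0, 6.0, 6.0)
near = (11.0, 11.0, 6.0, 6.0)   # overlapping prediction
far = (30.0, 10.0, 6.0, 6.0)    # non-overlapping prediction (IoU would be 0)

for name, box in (("identical", gt), ("near", near), ("far", far)):
    print(name, round(nwd(gt, box, C), 4))
```

Unlike IoU, the value for the non-overlapping prediction is still greater than zero and shrinks smoothly with distance, so it continues to provide a useful gradient signal.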

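We fuse the CIOU loss and NWDLoss by weighted summation to obtain the improved regression loss, which is expressed as follows:

$$L = \alpha L_{CIOU} + (1 - \alpha)\left(1 - NWD(\mathcal{N}_p, \mathcal{N}_g)\right), \tag{16}$$

where $\mathcal{N}_p$ is the Gaussian distribution of the predicted box, $\mathcal{N}_g$ is the Gaussian distribution of the ground truth box, and $\alpha$ is a manually set coefficient.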
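One plausible form of such a weighted fusion is a convex combination of the CIOU loss and an NWD-based loss, sketched below for boxes in (cx, cy, w, h) format. The combination rule, the weight `alpha = 0.5`, the constant `c = 12.8`, and the function names are assumptions made for illustration. The paper only states that the two losses are fused with a manually set coefficient.

```python
import math


def to_corners(box):
    """Convert (cx, cy, w, h) to (x1, y1, x2, y2)."""
    cx, cy, w, h = box
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def ciou(box_p, box_g, eps=1e-9):
    """Complete IoU: IoU minus a center-distance penalty and an aspect-ratio penalty."""
    px1, py1, px2, py2 = to_corners(box_p)
    gx1, gy1, gx2, gy2 = to_corners(box_g)
    inter = (max(0.0, min(px2, gx2) - max(px1, gx1))
             * max(0.0, min(py2, gy2) - max(py1, gy1)))
    union = box_p[2] * box_p[3] + box_g[2] * box_g[3] - inter
    iou = inter / (union + eps)
    # squared center distance over squared diagonal of the smallest enclosing box
    rho2 = (box_p[0] - box_g[0]) ** 2 + (box_p[1] - box_g[1]) ** 2
    c2 = (max(px2, gx2) - min(px1, gx1)) ** 2 + (max(py2, gy2) - min(py1, gy1)) ** 2 + eps
    # aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(box_g[2] / box_g[3]) - math.atan(box_p[2] / box_p[3])) ** 2
    trade_off = v / (1 - iou + v + eps)
    return iou - rho2 / c2 - trade_off * v


def nwd(box_p, box_g, c):
    """Normalized Wasserstein distance between the Gaussians of two boxes."""
    w2 = ((box_p[0] - box_g[0]) ** 2 + (box_p[1] - box_g[1]) ** 2
          + ((box_p[2] - box_g[2]) / 2) ** 2 + ((box_p[3] - box_g[3]) / 2) ** 2)
    return math.exp(-math.sqrt(w2) / c)


def fused_box_loss(box_p, box_g, alpha=0.5, c=12.8):
    """Assumed convex combination of the CIOU loss and the NWD loss."""
    loss_ciou = 1.0 - ciou(box_p, box_g)
    loss_nwd = 1.0 - nwd(box_p, box_g, c)
    return alpha * loss_ciou + (1.0 - alpha) * loss_nwd


gt = (10.0, 10.0, 6.0, 6.0)
print(fused_box_loss((11.0, 11.0, 6.0, 6.0), gt))
```

Choosing `alpha` closer to 1 makes the loss behave more like plain CIOU, while smaller values give the NWD term more influence on small objects.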

4. Experiment

4.1. Datasets

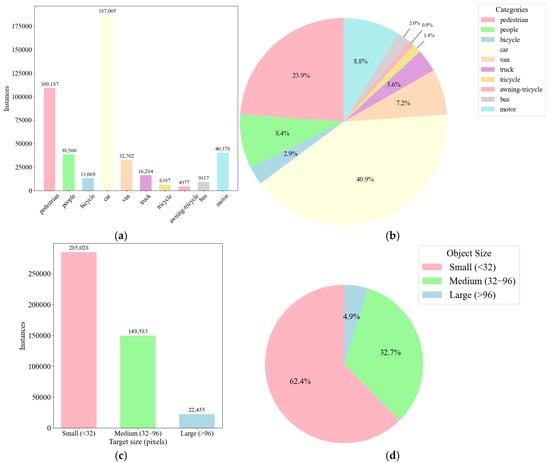

We conducted a series of comparative and ablation experiments on the widely recognized Visdrone2019 dataset [45] to evaluate the effectiveness of the proposed approach. The Visdrone2019 dataset was developed by the AISKYEYE team at the Machine Learning and Data Mining Laboratory of Tianjin University. As a large-scale benchmark dataset, it exhibits substantial diversity in terms of image resolution, camera altitude, urban and rural scenes, weather conditions, object density, and lighting variations. These rich and complex scenarios make object detection on this dataset particularly challenging. The Visdrone2019 dataset contains 6471 training images, 548 validation images, and 1610 test images. The dataset has a total of 10 categories, mainly people and common vehicles (cars, vans, motorcycles, bicycles, etc.). An overview of the Visdrone2019 dataset is provided in Figure 9.

Figure 9.

(a) Number of each category in the Visdrone2019 dataset; (b) percentage of each category in Visdrone2019 dataset; (c) number of different-sized objects in the Visdrone2019 dataset; and (d) percentage of different-sized objects in Visdrone2019 dataset.

As shown in Figure 9, the Visdrone2019 dataset exhibits a severe class imbalance. Cars constitute the largest proportion, accounting for 40.9% of all objects. Pedestrians rank second at only 23.9%, little more than half the share of cars, whereas awning tricycles make up only 0.9%. Additionally, the vast majority of objects in the dataset are small objects (defined as bounding boxes with an area smaller than 32 × 32 pixels), which account for 62.4% of all labeled objects, more than large and medium objects combined. This makes object detection on Visdrone2019 more difficult. On the one hand, small objects are easily hidden in complex backgrounds and become difficult to detect. On the other hand, when small objects overlap (e.g., a person riding a bicycle), they become even harder to identify and distinguish.
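The size statistics can be reproduced with a simple bucketing rule, sketched below. Only the 32 × 32 pixel threshold for small objects comes from the text. The 96 × 96 pixel boundary between medium and large objects follows the COCO convention and is an assumption here, as are the example boxes.

```python
def size_category(width: float, height: float) -> str:
    """Bucket a box by pixel area: small < 32^2 <= medium < 96^2 <= large."""
    area = width * height
    if area < 32 * 32:
        return "small"
    if area < 96 * 96:   # assumed COCO-style upper bound for medium objects
        return "medium"
    return "large"


boxes = [(12, 20), (31, 33), (40, 40), (120, 90)]   # hypothetical (w, h) pairs
counts = {"small": 0, "medium": 0, "large": 0}
for w, h in boxes:
    counts[size_category(w, h)] += 1

share_small = counts["small"] / len(boxes)
print(counts, f"small share = {share_small:.1%}")
```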

4.2. Evaluation Metrics

The experimental evaluation assesses the proposed method across two key dimensions: detection performance and network complexity. The evaluation metrics include Precision (P), Recall (R), Mean Average Precision (mAP), the number of parameters in millions (M), Giga Floating Point Operations (GFLOPs), and Frames Per Second (FPS).

The computational formulas for each evaluation metric are provided below:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$AP = \int_0^1 P(R)\,dR$$

$$mAP = \frac{1}{n} \sum_{i=1}^{n} AP_i$$

Here, TP (True Positive) refers to correctly predicted positive samples, FP (False Positive) refers to negative samples incorrectly predicted as positive, and FN (False Negative) refers to positive samples incorrectly predicted as negative. $P(R)$ denotes precision as a function of recall, $n$ is the number of object categories, and $AP_i$ is the average precision of the $i$-th category. The mAP is a commonly used metric for evaluating object detection performance, which becomes meaningful only when the Intersection over Union (IoU) threshold is specified. In this paper, we primarily use mAP50 and mAP50–95 as evaluation metrics. Specifically, mAP50 represents the mean average precision at an IoU threshold of 0.5, while mAP50–95 is the average of mAP scores calculated at IoU thresholds ranging from 0.5 to 0.95 in steps of 0.05.
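The definitions above translate directly into code. The sketch below computes precision and recall from TP, FP, and FN counts and averages per-threshold mAP values over the ten IoU thresholds from 0.5 to 0.95. The counts and the per-threshold mAP values are hypothetical placeholders, not results from the paper.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """Fraction of ground truth objects that are detected."""
    return tp / (tp + fn) if tp + fn else 0.0


# IoU thresholds 0.50, 0.55, ..., 0.95 (built from integers to avoid float drift)
IOU_THRESHOLDS = [t / 100 for t in range(50, 100, 5)]


def map50_95(map_at_threshold: dict) -> float:
    """Average the mAP values measured at each IoU threshold."""
    return sum(map_at_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)


print(precision(tp=80, fp=20), recall(tp=80, fn=120))

# hypothetical per-threshold values that decrease as the IoU threshold tightens
example = {t: 0.40 - 0.3 * (t - 0.5) for t in IOU_THRESHOLDS}
print(round(map50_95(example), 4))
```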

4.3. Implementation Details

The experiments in this study were conducted on Ubuntu 22.04 with PyTorch 2.1.2, Python 3.10, and CUDA 11.8. The hardware setup included an Intel Xeon Platinum 8352V CPU and two NVIDIA RTX 3080 GPUs. Each network was trained for 300 epochs using the SGD optimizer, with an initial learning rate of 0.01 that gradually decayed to $1 \times 10^{-4}$. The weight decay coefficient was set to $5 \times 10^{-4}$, the momentum to 0.937, and the batch size to 32. The images in the Visdrone2019 dataset are large: feeding them to the network at their original size would make training very slow, whereas too small an input size would sharply reduce accuracy. To balance accuracy and training speed, we uniformly resized the input images to 640 × 640 pixels. For a fair comparison, all models involved in the experiments were trained with the same input resolution and training hyperparameters, and none of them used pre-trained weights.
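For reference, the reported settings can be collected in a small configuration, as sketched below. The dictionary keys and the linear shape of the learning-rate decay are assumptions: the text only states that the rate decays gradually from 0.01 to 1 × 10−4 and does not name the schedule or the training framework.

```python
TRAIN_CONFIG = {
    "epochs": 300,
    "optimizer": "SGD",
    "lr_initial": 0.01,
    "lr_final": 1e-4,
    "weight_decay": 5e-4,
    "momentum": 0.937,
    "batch_size": 32,
    "image_size": 640,      # inputs resized to 640 x 640 pixels
    "pretrained": False,    # all models trained from scratch
}


def linear_lr(epoch: int, cfg: dict = TRAIN_CONFIG) -> float:
    """Learning rate at a 0-based epoch under an assumed linear decay."""
    progress = epoch / (cfg["epochs"] - 1)
    return cfg["lr_initial"] + progress * (cfg["lr_final"] - cfg["lr_initial"])


for epoch in (0, 150, 299):
    print(epoch, f"{linear_lr(epoch):.6f}")
```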

4.4. Comparison with the YOLOv11 Network

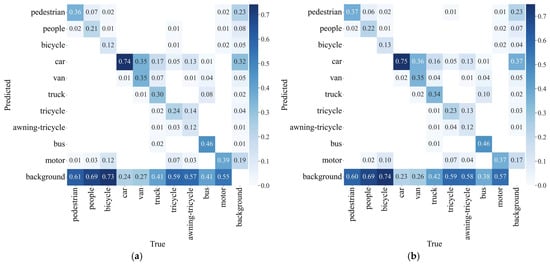

This section presents a series of comparative experiments between the proposed PS-YOLO model and the baseline YOLOv11-s on the Visdrone2019 dataset. Figure 10 illustrates the confusion matrices of both PS-YOLO and YOLOv11-s. In each confusion matrix, the vertical axis represents the predicted classes, while the horizontal axis denotes the ground truth classes. Each value along the diagonal indicates the proportion of correctly classified objects for the corresponding class, so the diagonal values are the primary focus. The comparison between the two models reveals that PS-YOLO outperforms YOLOv11-s in recognizing most object categories, which indicates stronger capability in both detecting and classifying diverse object types.
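How the diagonal ratios arise can be sketched as follows. With predicted classes on the rows and ground truth classes on the columns, dividing each column by its sum turns raw counts into the fraction of each ground truth class assigned to every predicted class. The column normalization, the three example classes, and the counts are assumptions for illustration only.

```python
def normalize_columns(matrix):
    """Divide every column by its sum so each ground truth class sums to 1."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    col_sums = [sum(matrix[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [
        [matrix[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n_cols)]
        for r in range(n_rows)
    ]


classes = ["car", "van", "background"]
# rows: predicted class, columns: ground truth class (hypothetical counts)
counts = [
    [80, 10, 5],
    [5, 60, 15],
    [15, 30, 80],
]

normalized = normalize_columns(counts)
diagonal = {name: normalized[i][i] for i, name in enumerate(classes)}
print(diagonal)
```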

Figure 10.

(a) Confusion matrix for YOLOv11-s and (b) confusion matrix for PS-YOLO.

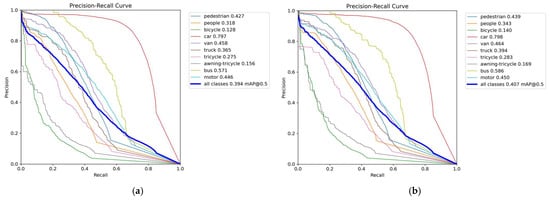

Figure 11 shows the Precision-Recall (P-R) curves of PS-YOLO and YOLOv11-s. In each subplot, the AP for each category is displayed in the top right corner. The AP metric quantifies the model’s capability to accurately detect a specific object category. The comparison shows that the AP values of PS-YOLO are higher than those of YOLOv11-s in all categories, which demonstrates that PS-YOLO detects objects better than the baseline model across all categories.
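The AP value of a category is the area under its P-R curve. The sketch below uses all-point interpolation in the PASCAL VOC style, which is an assumption: the interpolation used by the authors' evaluation toolkit is not stated. The sample curve is hypothetical.

```python
def average_precision(recalls, precisions):
    """Area under the precision envelope; inputs sorted by increasing recall."""
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # make precision monotonically non-increasing from right to left
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # sum rectangle areas between consecutive recall points
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))


def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)


ap = average_precision(recalls=[0.2, 0.4, 0.6], precisions=[1.0, 0.8, 0.6])
print(round(ap, 4))
```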

Figure 11.

(a) P-R curves of YOLOv11-s and (b) P-R curves of PS-YOLO.

To evaluate the effectiveness of the proposed approach, we applied our improvement strategy to different variants of the YOLOv11 model and conducted comparisons with their respective baseline versions. The experimental results are summarized in Table 1, with the best-performing results in each version highlighted in bold. A comprehensive analysis of the data reveals that PS-YOLO consistently outperforms the baseline models in both detection accuracy and model compactness. Regarding computational complexity, all versions of PS-YOLO demonstrate reduced complexity compared to their counterparts except for PS-YOLO-m, which exhibits slightly higher complexity than YOLOv11-m. Nevertheless, the overall enhancements introduced by our approach prove to be effective, delivering significant performance gains across multiple dimensions.

Table 1.

Performance comparison between PS-YOLO network and YOLOv11 network.

4.5. Comparison with Other Mainstream Models

To further evaluate the performance of PS-YOLO, this section combines both qualitative and quantitative approaches to conduct a comprehensive evaluation on the Visdrone2019 dataset. We first compare PS-YOLO-n with advanced lightweight networks, including YOLOX-Tiny [46], YOLOv7-Tiny [47], PP-PiCoDet-L [48], SOD-YOLO [49], GCL-YOLO [5], and the latest YOLOv12-n [50]. The results of the comparison are presented in Table 2, with the top two labeled in red and blue.

Table 2.

Comparison of PS-YOLO-n with advanced lightweight models.

As shown in Table 2, PS-YOLO-n achieves the second-highest accuracy among all compared models, with only slightly higher computational complexity than GCL-YOLO-n. Compared to lightweight YOLO models such as YOLOv7-Tiny, YOLOX-Tiny, and YOLOv12-n, PS-YOLO-n demonstrates superior accuracy with fewer parameters and lower GFLOPs, highlighting its efficiency. Compared with PP-PiCoDet-L, PS-YOLO-n is slightly less accurate but uses only approximately 42% of the parameters and 57% of the GFLOPs. When compared with SOD-YOLO-n and GCL-YOLO-n, PS-YOLO-n offers higher accuracy and lower GFLOPs than SOD-YOLO-n, albeit with a somewhat larger model size. The experimental results demonstrate that our method effectively optimizes the structure of the benchmark model. Under model size constraints, PS-YOLO, thanks to its compact design, achieves leading performance among a wide range of lightweight networks.

On this basis, we also compare PS-YOLO with various mainstream object detection models, including popular YOLO series models, Faster R-CNN [43], Cascade R-CNN [51], TPH-YOLOv5 [11], LAI-YOLOv5s [13], FFCA-YOLO [52], and Li et al. [53], among others. The comparison results are shown in Table 3, with the top two labeled in red and blue.

Table 3.

Performance of different models on Visdrone2019 dataset.

It can be seen that the classic two-stage networks (Faster R-CNN and Cascade R-CNN) are complex and computationally expensive, with relatively low accuracy. This is attributed to the reliance of two-stage algorithms on region proposals, which inherently introduces a substantial number of parameters. Furthermore, the Visdrone2019 dataset contains a significant proportion of small objects, for which the candidate regions often exhibit insufficient sensitivity. This results in increased computational complexity without a corresponding improvement in accuracy, ultimately leading to a high rate of missed detections. This suggests that such networks are not well-suited for deployment on UAVs, where both accuracy and efficiency are crucial. Compared to the two-stage networks, YOLO series networks have lower parameter counts and computational complexity, offering advantages in terms of real-time performance. However, YOLO networks are not explicitly tailored for UAV-based object detection tasks. They have not been optimized for model size, and there is still room for further optimization of their inherent feature fusion approach. Consequently, their overall performance is suboptimal compared to PS-YOLO. In comparison with other mainstream methods, PS-YOLO demonstrates superior performance in terms of precision and recall on the validation set, achieving a precision of 51.4% and a recall of 39.4%, while its mAP50 of 40.7% ranks second. On the test set, PS-YOLO achieved the highest recall (34%) and mAP50 (32.3%), while its precision was only 0.2% lower than that of the baseline model YOLOv11-s. Notably, although PS-YOLO did not achieve the highest precision on the validation set, it achieved a similar performance to that of Li et al. using only half the parameter count. Overall, PS-YOLO demonstrates strong performance across the evaluation metrics.

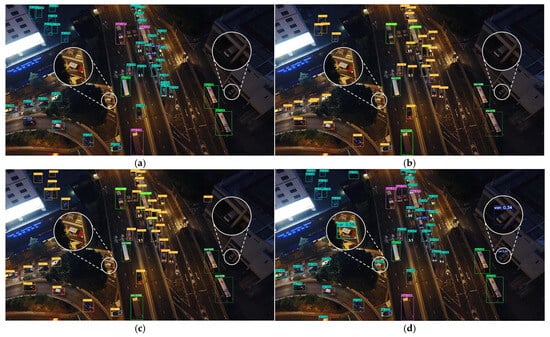

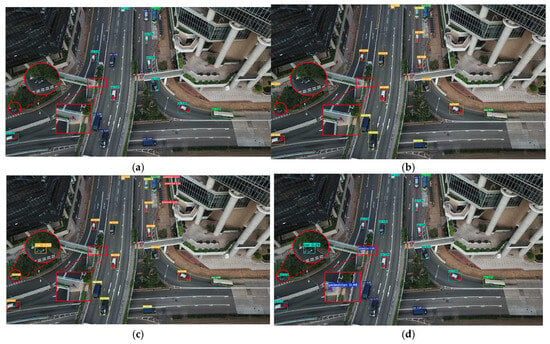

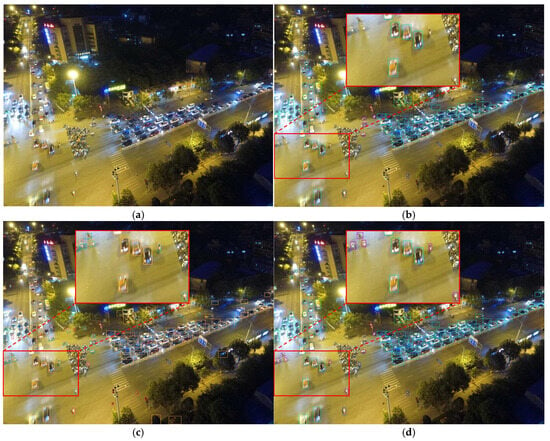

To more clearly demonstrate the superior performance of PS-YOLO, we selected several networks with strong overall performance and compared their detection capabilities in images featuring complex backgrounds and varying lighting conditions. The visualization results are presented in Figure 12 and Figure 13.

Figure 12.

Detection results in a night-time street scenario. The scene is poorly lit and some objects are partially occluded. (a) YOLOv11-s; (b) FFCA-YOLO; (c) TPH-YOLOv5; and (d) PS-YOLO.

Figure 13.

Detection results in a daytime street scenario. The scene has adequate lighting conditions and partial occlusion. (a) YOLOv11-s; (b) FFCA-YOLO; (c) TPH-YOLOv5; and (d) PS-YOLO.

Figure 12 illustrates a night-time street scenario, a typical example of a poorly lit scene. In the circled area to the left, a car is driving under an overpass; it is well concealed and its body is slightly occluded. PS-YOLO is the only method that successfully detects it. In the circled area to the right, a white van is parked in a garage, where the environment is dimly lit and the van is partially occluded. Again, PS-YOLO is the only method that detects it correctly.

Figure 13 shows a daytime street scenario, a typical example of a well-lit environment. In the circled area to the left, a black car is driving out, with most of its body blocked by trees. Only TPH-YOLOv5 and PS-YOLO can detect it, while YOLOv11-s and FFCA-YOLO fail to detect it. In the rectangular area on the left, two pedestrians are walking on a pedestrian overpass, appearing particularly small in the image. Only PS-YOLO detects one of them, while the other methods fail to detect them.

In summary, PS-YOLO performs the best in both low-light and well-lit scenarios. It also demonstrates strong robustness in handling occlusions and detecting small objects. This shows that the proposed PS-YOLO reduces the occurrence of false and missed detections and is particularly suitable for object detection from UAV viewpoints.

4.6. Extended Experiment

To validate the performance of the proposed model in challenging scenarios with complex backgrounds, dense object distribution, and small object sizes, we carefully selected 100 challenging UAV images from the validation and test sets of the Visdrone2019 dataset and constructed a small subset called complex-test to specifically test the model’s performance in extreme scenarios. Some examples are shown in Figure 14.

Figure 14.

Samples in the complex-test subset.

In this subsection, we use the baseline model and the novel RT-DETR (Real-Time Detection Transformer) model [54] to compare with the proposed method on the complex-test subset. Considering the large number of model parameters in the RT-DETR family, we use its minimal version RT-DETR-R18 to compare with the m-version of PS-YOLO on the complex-test subset as shown in Table 4, with the top two labeled in red and blue.

Table 4.

Comparison results on complex-test subsets.

As can be seen from Table 4, PS-YOLO-m achieves higher detection accuracy than the benchmark model YOLOv11-m in complex scenarios, with fewer parameters and faster inference. Compared with RT-DETR-R18, PS-YOLO-m is less accurate, but its accuracy remains similar while it uses fewer parameters. More importantly, the inference speed of PS-YOLO-m exceeds that of RT-DETR-R18 by a factor of three. This suggests that PS-YOLO is the more likely choice for practical applications in which a small loss of accuracy is acceptable and real-time performance matters.

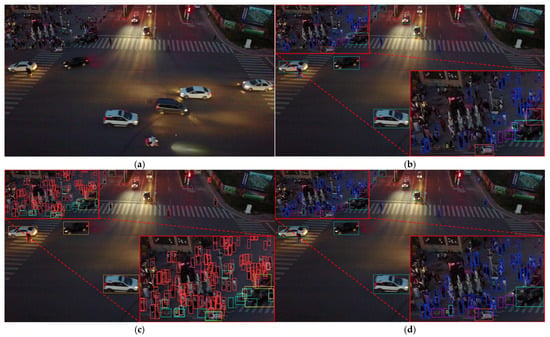

Next, we run the three models on the complex-test subset to show more intuitively the detection performance of the proposed method in extreme environments. Considering the small size and dense distribution of the objects in the images, labels and confidence scores are hidden in all detection results so that the text of the detection boxes does not obscure the objects. Some of the detection samples on the complex-test subset are shown in Figure 15 and Figure 16.

Figure 15.

Typical complex scene (top view perspective) and the detection results of different models in this scene. (a) Original image; (b) detection results of YOLOv11-m; (c) detection results of RT-DETR-R18; and (d) detection results of PS-YOLO-m.

Figure 16.

Typical complex scene (oblique viewing angle) and the detection results of different models in this scene. (a) Original image; (b) detection results of YOLOv11-m; (c) detection results of RT-DETR-R18; and (d) detection results of PS-YOLO-m.

Figure 15 was taken by the UAV looking down from a higher altitude. The illumination is inadequate, the object distribution is particularly dense, and there are a large number of small objects. On the left side of Figure 15, several people are crossing the intersection; YOLOv11-m did not detect them, while RT-DETR-R18 and PS-YOLO-m did. Figure 16 was taken by the UAV at a lower altitude and at an inclined angle. The light is much dimmer, and in the upper left corner of Figure 16 the pedestrians are particularly densely distributed and intertwined with the background. Many targets in this region cannot be detected by YOLOv11-m, which indicates its limitations in dealing with complex backgrounds and small targets. In comparison, PS-YOLO-m detects more small targets (pedestrians and motorcycles), showing that the proposed PS-YOLO ameliorates this shortcoming well. However, RT-DETR-R18 performs better in this scenario, successfully detecting more targets that were missed by PS-YOLO. This indicates that, in complex environments, DETR-based models are better equipped to handle the associated challenges.

In summary, the proposed method improves the detection performance of the baseline model in complex environments in terms of both accuracy and speed and can provide fast and reliable detection in such environments. However, compared with the advanced DETR method, our method still has limitations in terms of accuracy. DETR is a good choice when computing power is sufficient and the accuracy requirement is high, whereas PS-YOLO is the better choice when computing power is limited and some loss of accuracy is acceptable.

4.7. Ablation Experiments

To comprehensively validate the effectiveness of each module proposed for UAV object detection, we first analyzed each module individually. Table 5 shows the effect of adding GSCD to different networks.

Table 5.

The comparison results of GSCD on the Visdrone2019-Val dataset, with the best results highlighted in bold.

Integrating GSCD into YOLOv8-s improves its precision by 3.3%, mAP50 and mAP50–95 by 0.5% and 0.3%, respectively, and FPS by 17.49. Adding GSCD to YOLOv11-s improves the precision by 0.9%, the recall and mAP50 by 0.1%, the APsmall by 0.2%, and the FPS by 42.05. The experimental data show that the proposed GSCD head requires little additional computational cost and improves both the inference speed and the detection accuracy of the model, which is helpful for real-time detection on UAV platforms. On the one hand, the shared convolution enables the detection head to pay more attention to the common features of objects at different scales, improving the overall performance of the model. In addition, the shared convolution avoids the inefficient operations introduced by the depthwise separable convolution in the YOLOv11 detection head, which is why the number of parameters increases slightly but the FPS improves after adding the GSCD head. On the other hand, the introduction of NWDLoss reduces the sensitivity of the detection head to offset errors in small object boxes, which benefits the UAV object detection task.

Table 6 shows the effect of adding FasterBIFFPN to different networks. Introducing FasterBIFFPN into YOLOv8-s improved its accuracy by 3.4%, mAP50 and mAP50–95 by 1.1% and 1.9%, and APsmall, APmedium, and APlarge by 1.1%, 2.7%, and 2.7%, respectively, while reducing the parameter count by 1.46 M. Similarly, FasterBIFFPN improves the accuracy of YOLOv11-s by 2.2%, mAP50 and mAP50–95 by 1.3% and 1.6%, and APsmall, APmedium, and APlarge by 1%, 2.5%, and 0.1%, respectively, while the number of parameters decreases by 2.34 M. This shows that FasterBIFFPN effectively enhances the feature representation of different layers, which improves the detection of objects at different scales, and the detection ability for small objects is also significantly enhanced. After incorporating FasterBIFFPN, the GFLOPs increase, mainly because FasterBIFFPN adds extra cross-scale connections, which also increases the number of activation function calls. Additionally, the fusion of feature maps changes from simple concatenation to weighted fusion, which requires multiple multiplication and addition operations. These operations increase the computational load, resulting in higher GFLOPs. Overall, however, the computational cost introduced by FasterBIFFPN is small relative to the sizable performance gain, so the improvement remains worthwhile.
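The extra multiplications and additions of weighted fusion can be seen in a minimal sketch. The code below implements the fast normalized fusion used by BiFPN, in which each input is multiplied by a non-negative learnable weight and the sum is divided by the total weight. Whether FasterBIFFPN uses exactly this rule is an assumption, and the feature values and weights are hypothetical.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse equally sized feature vectors with non-negative, normalized weights."""
    w = [max(0.0, x) for x in weights]   # ReLU keeps the weights non-negative
    total = sum(w) + eps                 # eps avoids division by zero
    length = len(features[0])
    return [
        sum(w[i] * features[i][j] for i in range(len(features))) / total
        for j in range(length)
    ]


top_down = [1.0, 2.0]     # hypothetical flattened feature values
lateral = [3.0, 4.0]
fused = fast_normalized_fusion([top_down, lateral], weights=[1.0, 1.0], eps=0.0)
print(fused)
```

For two inputs, every output element costs two multiplications, one addition, and one division, whereas plain concatenation only copies values. This is consistent with the GFLOPs increase noted above.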

Table 6.

The comparison results of FasterBIFFPN on the Visdrone2019-Val dataset, with the best results highlighted in bold.

After confirming the effectiveness of GSCD and FasterBIFFPN, we conducted ablation experiments on the benchmark model (YOLOv11-s) to evaluate the impact of each improvement strategy, and Table 7 presents the experimental results. YOLOv11 adds the C2PSA module to the backbone, which was originally designed to strengthen the feature extraction capability of the backbone through a self-attention mechanism. However, this module is placed at the end of the backbone, and most objects in the Visdrone2019 dataset are small, so most small object features are submerged after being downsampled by a factor of 32. The contribution of the C2PSA module to extracting small object features is therefore minimal. In the ablation experiment, to keep the model lightweight, we removed the original C2PSA module from the YOLOv11-s backbone network. Experimental data show that the C2PSA module brings a large number of parameters (for a lightweight model) but little performance gain and, worse, significantly decreases the network’s inference speed. Therefore, removing the C2PSA module is reasonable. After removing the C2PSA module, we sequentially added Faster_C3k2, GSCD, and FasterBIFFPN to the network. When only Faster_C3k2 was added, mAP50 and mAP50–95 decreased by 0.5% and 0.4%, respectively, while the parameter count was reduced by 1.18 M. This is because Faster_C3k2 has fewer parameters than C3k2, and its feature extraction ability is slightly weaker, which inevitably costs some accuracy. On this basis, we added GSCD and FasterBIFFPN to the network, and both improved the accuracy of the network to different degrees. FasterBIFFPN brought the largest performance gain, increasing mAP50 and mAP50–95 by 1.6% and 1.4%, respectively, while further reducing the number of parameters to 5.52 M. This indicates that FasterBIFFPN’s feature fusion method is simple and efficient, effectively merging multi-scale features and alleviating the information conflict between features at different levels. Although the contribution of GSCD to accuracy is not as significant as that of FasterBIFFPN, it effectively improves the network’s FPS while enhancing accuracy. This suggests that the shared convolution in GSCD improves the network’s capability to extract common features while enabling efficient inference without relying on depthwise or group convolutions. Finally, applying all the improvement strategies to the benchmark model (Yolov11-s_noC2PSA + Faster_C3k2 + FasterBIFFPN + GSCD) yields the PS-YOLO model proposed in this paper. Compared to the benchmark model YOLOv11-s, PS-YOLO is more lightweight, with 41.3% fewer model parameters, 6.1% lower GFLOPs, and an inference speed higher by 26.73 FPS. In terms of detection performance, PS-YOLO outperforms the baseline, achieving a 1.3% improvement in mAP50 and a 0.6% improvement in mAP50–95. This result demonstrates that each of the improvement strategies applied to the baseline model is effective.

Table 7.

Experimental results of our improvement strategies. √ indicates that the module has been added, × indicates that the module has been removed.

4.8. Discussion

This section analyzes the performance characteristics of PS-YOLO for UAV object detection based on the experimental results. Our comparative evaluation against the baseline YOLOv11-s model demonstrates PS-YOLO’s superior detection capabilities, as evidenced by the confusion matrices and precision-recall curves. Furthermore, when applying the proposed improvements to various YOLOv11 model scales, we observed consistent parameter reduction alongside accuracy improvements across all model variants. We further evaluated PS-YOLO against state-of-the-art methods. When benchmarked against mainstream lightweight models, PS-YOLO-n achieved (1) the second-highest accuracy (surpassed only by PP-PiCoDet-L) and (2) the second-lowest model complexity (behind only GCL-YOLO-n). Comparisons of PS-YOLO-s with mainstream detection models showed superior performance in both model compactness and detection accuracy. Qualitative visual comparisons with top-performing models under varying illumination conditions demonstrated PS-YOLO’s robust detection capabilities. To evaluate performance in challenging environments, we constructed a specialized complex-test subset from the Visdrone2019 validation and test sets. Through qualitative and quantitative comparisons with both the baseline model and the state-of-the-art RT-DETR, we observed that PS-YOLO consistently outperformed the baseline in extreme scenarios while showing slightly lower accuracy than RT-DETR. Notably, although RT-DETR achieved superior accuracy on the complex-test subset, its inference speed was significantly slower than that of PS-YOLO. This trade-off suggests that while RT-DETR may be preferable when maximum accuracy is required, PS-YOLO is the better choice for real-time UAV applications where speed is critical. In the ablation experiment, we quantitatively analyzed the impact of each proposed improvement strategy. Among them, Faster_C3k2 effectively reduced the model’s parameter count and computational complexity but did not contribute to detection performance. GSCD improved the model’s inference speed and slightly improved its detection performance while relying solely on traditional convolution. FasterBIFFPN contributed the most to the model’s detection performance while effectively reducing the model’s parameter count.

However, we also found some limitations, such as the limited contribution of GSCD to accuracy. To keep the detection head lightweight, we may need to improve it by combining more advanced loss functions. Moreover, although the proposed model reduces both the number of parameters and the computational complexity, the extent of the reduction differs between the two. The reduction in parameters is much greater than the reduction in computational complexity, mainly because both FasterBIFFPN and GSCD increase GFLOPs. FasterBIFFPN adds cross-scale connections, which bring additional activation function calls and weighted-fusion computation. In addition, GSCD abandons the depthwise convolution used in the YOLOv11 detection head, which also increases computational complexity.
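The cost of replacing depthwise convolution with standard convolution can be estimated with a back-of-the-envelope sketch. The code below compares parameters and multiply-accumulate operations (MACs) for a standard 3 × 3 convolution and for a depthwise 3 × 3 convolution followed by a pointwise 1 × 1 convolution. The 80 × 80 feature map, the 64 channels, stride 1, the absence of biases, and the exact layer layout are illustrative assumptions, not measurements of the actual detection heads.

```python
def standard_conv_cost(h, w, c_in, c_out, k):
    """(parameters, MACs) of a standard k x k convolution with stride 1, no bias."""
    params = k * k * c_in * c_out
    return params, params * h * w


def depthwise_separable_cost(h, w, c_in, c_out, k):
    """(parameters, MACs) of a depthwise k x k conv followed by a pointwise 1x1 conv."""
    params = k * k * c_in + c_in * c_out
    return params, params * h * w


h = w = 80          # assumed feature map size for a 640 x 640 input at stride 8
channels = 64       # assumed channel width

std_params, std_macs = standard_conv_cost(h, w, channels, channels, 3)
dws_params, dws_macs = depthwise_separable_cost(h, w, channels, channels, 3)
print(f"standard:  {std_params} params, {std_macs} MACs")
print(f"separable: {dws_params} params, {dws_macs} MACs")
print(f"ratio: {std_macs / dws_macs:.2f}x")
```

Theoretical MACs do not map directly to latency. Depthwise layers tend to be memory-bound on GPUs, which is consistent with the observation that FPS can improve even when GFLOPs rise.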

5. Conclusions

In this study, we present PS-YOLO, a lighter, faster, and more efficient object detection framework developed from the YOLOv11-s baseline model. The network is designed for practical UAV applications, aiming at faster and more accurate UAV object detection. Specifically, PS-YOLO reduces the network’s parameter count and complexity by introducing Faster_C3k2, achieving significant lightweight benefits with only a small loss in accuracy. Additionally, we demonstrated through theoretical analysis and experiments that the C2PSA module is not very helpful for small objects, so we removed it to streamline the backbone network. In the neck section, we proposed a more efficient neck network called FasterBIFFPN. This network achieves more efficient multi-scale feature fusion by introducing cross-scale connections, feature-weighted fusion, and the lightweight Faster_C3k2 module. Finally, we proposed a lightweight and efficient detection head (GSCD), which introduces shared convolution and NWDLoss to simultaneously improve the inference speed and detection accuracy of the network while relying only on conventional convolution. We conducted extensive experiments on the Visdrone2019 dataset and analyzed the results both qualitatively and quantitatively. The experimental results confirmed the strong performance of PS-YOLO. Compared to other advanced methods, PS-YOLO has fewer parameters and higher detection accuracy, and strikes a better balance between precision and real-time performance.

Despite the satisfactory results of our work, there is room to further reduce the computational complexity of PS-YOLO and to improve its performance in scenes with densely distributed objects. In future research, we plan to incorporate more advanced model pruning techniques to further reduce the computational complexity of PS-YOLO, and we will explore more advanced modules and loss functions to further improve its detection performance in dense scenes.

Author Contributions

Conceptualization, H.Z. and Y.Z. (Yan Zhang); methodology, H.Z.; software, H.Z.; validation, H.Z., Z.S. and L.Z.; formal analysis, H.Z. and L.Z.; investigation, H.Z.; resources, H.Z. and Y.Z. (Yan Zhang); data curation, H.Z.; writing—original draft preparation, H.Z.; writing—review and editing, H.Z., L.Z. and Y.Z. (Yu Zhang); visualization, H.Z.; supervision, H.Z.; project administration, H.Z.; funding acquisition, Y.Z. (Yan Zhang). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 62401575).

Data Availability Statement

The Visdrone2019 dataset is available at https://github.com/VisDrone (accessed on 3 March 2025).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| UAV | Unmanned Aerial Vehicle |
| PASCAL VOC | The PASCAL Visual Object Classes |
| COCO | Common Objects in Context |
| PS-YOLO | Partial convolution and Shared convolution-based YOLO |
| YOLO | You Only Look Once |
| FasterBIFFPN | Faster Bidirectional Feature Fusion Pyramid Network |
| GSCD | Gaussian-Shared Convolutional Detection Head |
| PConv | Partial Convolution |
| PWConv | Pointwise Convolution |
| FPN | Feature Pyramid Network |
| PAN | Path Aggregation Network |
| PAFPN | Path Aggregation Feature Pyramid Network |
| BIFPN | Bidirectional Feature Pyramid Network |
| DWConv | Depthwise Convolution |
| NWDLoss | Normalized Gaussian Wasserstein Distance Loss |
| IoU | Intersection over Union |
| CIOU | Complete IOU |
| P | Precision |
| R | Recall |
| AP | Average Precision |
| mAP | Mean Average Precision |
| GFLOPs | Giga Floating Point Operations |
| FPS | Frames Per Second |
| SGD | Stochastic Gradient Descent |
| RT-DETR | Real-Time Detection Transformer |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).