Abstract

Recently, Mamba, a network based on selective state space models (SSMs), has emerged as a research focus in hyperspectral image (HSI) classification due to its linear computational complexity and strong long-range dependency modeling capability. Because it was originally designed for 1D causal sequence modeling, Mamba is difficult to apply to HSI tasks that require simultaneous awareness of spatial and spectral structures. Current Mamba-based HSI classification methods typically convert spatial structures into 1D sequences and employ various scanning patterns to capture spatial dependencies. However, these approaches inevitably disrupt spatial structures, leading to ineffective modeling of complex spatial relationships and increased computational costs due to elongated scanning paths. Moreover, because they do not exploit neighborhood spectral information, they fail to mitigate the impact of spatial variability on classification performance. To address these limitations, we propose a novel model, Dual-Aware Discriminative Fusion Mamba (DADFMamba), which is simultaneously aware of spatial-spectral structures and adaptively integrates discriminative features. Specifically, we design a Spatial-Structure-Aware Fusion Module (SSAFM) to directly establish spatial neighborhood connectivity in the state space, preserving structural integrity. Then, we introduce a Spectral-Neighbor-Group Fusion Module (SNGFM), which enhances target spectral features by leveraging neighborhood spectral information before partitioning them into multiple spectral groups to explore relations across these groups. Finally, we introduce a Feature Fusion Discriminator (FFD) to discriminate the importance of spatial and spectral features, enabling adaptive feature fusion. Extensive experiments on four benchmark HSI datasets demonstrate that DADFMamba outperforms state-of-the-art deep learning models in classification accuracy while maintaining low computational costs and parameter efficiency. 
Notably, it achieves superior performance with only 30 training samples per class, highlighting its data efficiency. Our study reveals the great potential of Mamba in HSI classification and provides valuable insights for future research.

1. Introduction

1.1. Background

In the field of remote sensing, hyperspectral imaging and synthetic aperture radar [1,2] have attracted widespread attention from researchers. With the development of hyperspectral imaging technology, the acquisition of hyperspectral images (HSIs) has become increasingly convenient and efficient [3]. Unlike ordinary visual images that only have RGB channels, HSIs capture the spatial information of the target object together with dozens or even hundreds of contiguous spectral bands. Benefiting from this rich and detailed spectral information, HSIs enable more precise object identification through comprehensive characterization of material composition, structural properties, and physical states. Owing to these advantages, HSIs are widely used in fields such as medical imaging [4,5], agriculture [6], and mineral resource exploration [7]. HSI classification, a key step in these applications, has long been a research hotspot.

HSI classification methods are generally categorized into traditional machine learning (ML) and deep learning (DL) methods. In early research, numerous ML-based methods were successfully applied to HSI classification, including support vector machines (SVMs) [8], logistic regression [9], random forests [10], and k-means clustering [11]. With the advancement of deep learning techniques, HSI classification has witnessed remarkable progress in recent years. Current mainstream DL architectures can be roughly divided into convolutional neural networks (CNNs) [12,13,14], graph convolutional networks (GCNs) [15,16], and Transformers [17]. HSI datasets acquired by optical sensors (e.g., AVIRIS, ROSIS, ITRES CASI 1500) encounter several notable challenges in land cover classification: (1) the high dimensionality of spectral data, which leads to the curse of dimensionality and redundant information; (2) spatial variability, where pixels of the same class exhibit distinct spectral responses due to varying illumination, background mixing, or environmental conditions; and (3) the scarcity of labeled samples, as pixel-level annotation in remote sensing is expensive and time-consuming. These challenges demand models that can efficiently capture both spectral and spatial dependencies while remaining robust under limited supervision. To address the above challenges, deep learning methods such as CNNs and Transformer have been widely applied to hyperspectral image classification. CNNs are effective at modeling local spatial structures but are inherently limited by their receptive fields, making it difficult to capture long-range spatial dependencies. Transformer-based methods, on the other hand, can model global contextual relationships, but they typically require large-scale labeled datasets and involve high computational complexity, which limits their applicability in low-sample settings. 
These inherent limitations of existing models hinder further improvements in HSI classification accuracy, highlighting the need for an efficient approach that can capture long-range spatial dependencies while maintaining relatively low computational complexity.

Recently, state-space models (SSMs) [18] have attracted considerable attention in the field of DL; in particular, structured state-space sequence models (S4) [19] have been widely studied for sequence modeling. Mamba [20] introduced a selection mechanism on top of S4, enabling the model to selectively retain input-dependent information while maintaining indefinite memory. By employing a hardware-aware algorithm, Mamba achieves higher computational efficiency than Transformers. Benefiting from its linear complexity in modeling long-range dependencies, Mamba has achieved remarkable success in language modeling [20]. Inspired by this success, a growing number of Mamba-based methods have been applied to HSI classification [21,22,23]. However, because an HSI combines image and spectral information, existing Mamba-based HSI classification methods still have the following limitations. On the one hand, unlike a 1D sequence, the spatial dimension of an HSI is a 2D structure. Current Mamba-based HSI classification methods [24,25,26,27] typically flatten the 2D spatial structure into a 1D sequence and then use a fixed scanning strategy to extract spatial features from it, which inevitably changes the spatial relationship between pixels, destroys the inherent contextual information in the image, and affects the classification results. On the other hand, current Mamba-based HSI classification methods [21,25] usually extract spectral features by changing the spectral scanning direction, without considering the dependency on neighboring spectral information. In addition, adding scanning directions inevitably increases the computational cost. These limitations motivate us to design a structure-aware HSI classification model capable of capturing both spatial and spectral dependencies between neighboring features in the latent state space.

1.2. Related Work

HSI classification methods in early research primarily focused on spectral feature extraction from HSIs. Traditional approaches such as principal component analysis (PCA) [28,29], independent component analysis (ICA) [30], and linear discriminant analysis (LDA) [31] were commonly employed for HSI classification. However, these conventional methods exhibited limited generalization capability and weak representational capacity for the extracted features, resulting in unsatisfactory classification performance. In contrast, DL techniques not only demonstrate superior generalization ability but can also adaptively learn high-level semantic features. These advantages have led to their widespread application across various research domains, particularly in image classification [32,33,34], object detection [35,36,37,38], and semantic segmentation [39].

Among the prevalent backbone networks in DL, CNNs and their variants have been widely adopted for HSI classification. This is because they can effectively extract high-level semantic features and conveniently capture both spectral and spatial characteristics of HSIs. Hu et al. [40] treated spectral information as objects and employed 1D-CNN to extract spectral-dimensional features for HSI classification. To incorporate spatial information, Makantasis et al. [41] first reduced HSI dimensionality through R-PCA, then used patches of neighboring pixels around central pixels as training samples for 2D-CNN-based classification. However, using 2D-CNN alone cannot simultaneously capture joint spectral-spatial features. Hamida et al. [42] segmented HSIs into 3D cubes suitable for 3D-CNN processing, stacking multiple 3D-CNN layers for classification. Roy et al. [43] proposed a HybridSN network, combining 2D-CNN and 3D-CNN strategies, where 3D-CNN first extracted joint spectral-spatial features, followed by 2D-CNN learning more abstract spatial features. As network depth increases, models may encounter the gradient vanishing issue during training. To address this, He et al. [44] introduced residual connections. Zhong et al. [45] developed an end-to-end spectral-spatial residual network (SSRN) that complements feature information between each 3D convolutional layer and subsequent layers through residual blocks, achieving cross-layer feature enhancement and significantly improving classification performance. Zhang et al. [46] proposed a CNN-based spectral partitioning residual network (SPRN) that divides input spectra into multiple sub-bands and employs parallel improved residual blocks for feature extraction, effectively enhancing spectral-spatial feature representation. However, these CNN-based methods are fundamentally limited by their kernel sizes, resulting in insufficient understanding of global HSI structures. 
Furthermore, CNNs cannot establish long-range dependencies, making them ineffective for comprehensive spectral information extraction.

In recent years, Transformers have emerged as another mainstream framework for HSI classification due to their powerful long-range modeling capability and global spatial feature extraction ability. Dosovitskiy et al. [47] pioneered the Vision Transformer (ViT), marking a significant attempt to apply Transformer in the visual domain by directly processing sequences of image patches. Subsequently, numerous ViT variants have been adapted for HSI classification. Hong et al. [48] proposed a pure Transformer-based network named SpectralFormer for sequential information extraction in HSI classification. However, SpectralFormer underutilizes spatial positional information, resulting in suboptimal classification performance. To address this limitation, Sun et al. [49] developed an SSFTT network, which first converts shallow spectral-spatial features extracted by convolutional layers into deep semantic tokens, then employs Transformer for semantic modeling. This CNN–Transformer hybrid architecture effectively captures high-level semantic features to improve classification accuracy. Roy et al. [50] introduced a novel model named MorphFormer that integrates learnable spectral and spatial morphological convolutions with Transformer to enhance interactions between image tokens and class tokens regarding structural and shape information in HSI. Ma et al. [51] proposed LSGA-ViT, incorporating a light self-Gaussian-attention (LSGA) mechanism with Gaussian absolute position bias to better simulate HSI data distribution, making attention weights more concentrated around central query patches. Zhao et al. [52] observed that the inherent global correlation modeling in Transformer overlooks effective representation of local spatial-spectral features. To address this, they developed GSC-ViT, which specifically enhances the extraction of local spectral-spatial information. 
However, the self-attention mechanism [17] presents computational challenges due to its quadratic complexity, which not only increases computational costs but also limits the model’s capacity for long-range dependency modeling [53].

Recently, a new DL architecture, SSMs, has attracted widespread attention in the academic community. SSMs are good at capturing long-range dependencies and can be efficiently parallelized [53], positioning them as a strong competitor to CNNs and Transformers. Mamba is a typical representative of SSMs, with excellent computational efficiency and powerful feature extraction capability. In computer vision, Zhu et al. [53] designed a general vision backbone network named Vision Mamba (Vim) based on the location sensitivity of visual data and the global context requirements of visual understanding. The network uses a bidirectional Mamba structure to replace the self-attention mechanism. Liu et al. [54] proposed the VMamba network, which introduced the Cross-Scan Module (CSM) to traverse the spatial domain and transform any non-causal visual image into an ordered patch sequence, which helps to narrow the gap between the ordered nature of 1D selective scanning and the non-sequential structure of 2D visual data. In the field of remote sensing, Chen et al. [55] proposed RSMamba for remote sensing image classification. The model uses a dynamic multi-path activation mechanism to enhance Mamba’s ability to model non-causal data. Cao et al. [56] proposed a model called M3amba, a new end-to-end CLIP-driven Mamba model for multimodal fusion, and designed a multimodal Mamba fusion architecture with linear complexity and a cross-attention module Cross-SS 2D for full and effective information fusion. In HSI classification, Sun et al. [26] proposed the DBMamba model, which first uses a CNN to extract shallow spectral-spatial features and then uses bidirectional Mamba to extract higher-level semantic features from the shallow features to achieve classification. Huang et al. [23] proposed the SS-Mamba model, which first converts the HSI cube to spatial and spectral token sequences through a token generation module. 
The sequences processed by Mamba blocks are then fed into a feature enhancement module for final classification. Lu et al. [57] developed the SSUM model, comprising the Spectral Mamba branch and the Spatial Mamba branch, where the features output from both branches are combined to produce classification results. Wang et al. [25] proposed an LE-Mamba model for HSI classification, using a multi-directional local spatial scanning mechanism in the spatial dimension to improve the extraction of non-causal local features and a bidirectional scanning mechanism in the spectral dimension to capture fine spectral details. He et al. [22] proposed a new 3DSS-Mamba framework. In order to overcome the limitations of the traditional Mamba algorithm that can only model causal sequences and is not suitable for high-dimensional scenes, an algorithm based on the 3D-Spectral-Spatial Selective Scanning (3DSS) mechanism was proposed, and five scanning paths were constructed to examine the impact of dimensional priority [22]. Existing Mamba-based HSI classification methods attempt to solve the problem of aligning HSI spatial structure with sequential Mamba by continuously adjusting the scanning strategy, but there are limitations in effectiveness and efficiency. In addition, when processing HSI spectral information, only considering the spectral scanning direction cannot fully extract the intrinsic information of the spectrum.

1.3. Motivation and Contribution

HSI classification assigns each pixel to a category, and a single pixel is correlated with its spatially close pixels. Making full use of spatial structural information is therefore conducive to improving the classification results. Conventional classification methods use image patches centered on the classified pixels as the input of the model. However, Mamba-based models usually convert 2D image patches into 1D sequences for spatial feature scanning. Although existing scanning strategies partially solve the problem of insufficient feature extraction after the spatial structure is converted into a sequence structure, they have certain limitations in terms of effectiveness and efficiency. On the one hand, directional scanning inevitably changes the spatial relationship between pixels, thereby destroying the original semantic information. For example, in a row-wise flattened sequence, the distance between a pixel and its left or right (horizontal) neighbor is 1, while the distance to its upper or lower (vertical) neighbor is equal to the width of the 2D image patch, which hinders the model from understanding the spatial relationship in the original HSI 2D image patch. On the other hand, fixed scanning paths [24,25,26,27], such as four-directional scanning [25], cannot effectively capture the complex spatial relationship in the 2D image patch, and the introduction of more scanning directions requires additional calculations. In addition, HSI has spatial variability [58]; that is, pixels of the same category present different spectral features in space, which increases the difficulty of the model in extracting spectral features and thus affects the overall classification performance.

In order to overcome the limitations of existing scanning strategies and mitigate the impact of spatial variability, this paper proposes a new HSI classification framework named Dual-Aware Discriminative Fusion Mamba (DADFMamba). DADFMamba not only captures the spatial dependency of HSI neighboring features from the latent state space, but also uses the spectral features of the target pixel’s neighbors to reduce spatial variability, thereby improving the classification performance. DADFMamba consists of the Spatial-Structure-Aware Fusion Module (SSAFM), the Spectral-Neighbor-Group Fusion Module (SNGFM) and the Feature Fusion Discriminator (FFD). In SSAFM, a new structure-aware state fusion (SASF) equation is introduced into the original Mamba to effectively capture the HSI spatial features. In SNGFM, a neighbor spectrum enhancement (NSE) strategy is proposed to overcome the interference caused by spatial variability. On this basis, a new scanning mechanism, grouped spectrum scanning (GSS), is proposed, which divides the enhanced spectral features into several small groups to better distinguish the subtle differences in spectral information. At the same time, this mechanism makes the model computationally friendly. Finally, FFD with a residual structure is used to implement HSI classification.

The main contributions of this paper are summarized as follows:

- A new Mamba-based HSI classification method named Dual-Aware Discriminative Fusion Mamba (DADFMamba) is proposed. It achieves dual awareness of HSI spatial and spectral structures by modeling spatial structure context and spectral neighborhood correlation in a unified framework.

- In SSAFM, to address the challenge of spatial structure loss in existing Mamba-based HSI classification methods, we introduce a novel structure-aware state fusion (SASF) equation. This equation not only enables effective skip connections between non-sequential elements in the sequence but also enhances the model’s ability to capture spatial relationships in HSIs.

- In SNGFM, in order to overcome the interference caused by spatial variability, a new NSE strategy is proposed. In addition, in order to better distinguish the subtle differences in spectral features, a new GSS mechanism is developed. Through the organic combination of the two, both the spectral feature extraction capability and computational efficiency are improved.

The remainder of this paper is organized as follows. Section 2 introduces the State Space Model and presents the detailed architecture of the proposed DADFMamba. Section 3 comprehensively describes the experimental process, including dataset selection, implementation details, comparative experiments, parameter analysis, and ablation studies. Finally, Section 4 concludes this work and provides suggestions for future research directions.

2. Material and Methods

2.1. Preliminaries

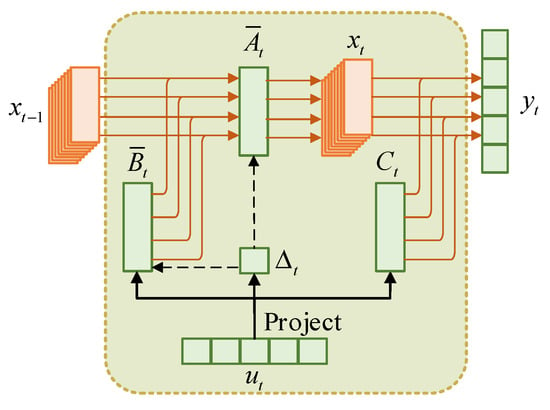

Recently, structured state space models (S4), which are inspired by RNNs, CNNs, and the classical state space model, have shown great potential in sequence modeling. Equations (1)–(3) follow the classical Kalman filter formulation for linear dynamical systems, originally proposed in [59], and later introduced into the deep learning context by Mamba [20]. A one-dimensional function or sequence $u(t) \in \mathbb{R}$ is used as input and mapped to the output $y(t) \in \mathbb{R}$ through a state variable $x(t) \in \mathbb{R}^{N}$. This process can be expressed by the linear ordinary differential Equation (1), using $\mathbf{A}$ as the state transfer matrix and $\mathbf{B}$ and $\mathbf{C}$ as the projection parameters, with $\mathbf{D}$ implemented as a skip connection.

$$x'(t) = \mathbf{A}\,x(t) + \mathbf{B}\,u(t), \qquad y(t) = \mathbf{C}\,x(t) + \mathbf{D}\,u(t) \tag{1}$$

To extend the continuous system to DL settings that process discrete data such as images and natural language, the continuous system needs to be discretized. Usually the zero-order hold (ZOH) rule is used for discretization: the time scale parameter $\Delta$ is introduced to represent the interval between discrete time steps, and the continuous parameters $\mathbf{A}$ and $\mathbf{B}$ are transformed into discrete parameters $\bar{\mathbf{A}}$ and $\bar{\mathbf{B}}$. This can be obtained by Formula (2).

$$\bar{\mathbf{A}} = \exp(\Delta \mathbf{A}), \qquad \bar{\mathbf{B}} = (\Delta \mathbf{A})^{-1}\left(\exp(\Delta \mathbf{A}) - \mathbf{I}\right)\Delta \mathbf{B} \tag{2}$$

Although traditional state space models (SSMs) achieve linear time complexity, they are time-invariant systems: parameters $\mathbf{B}$, $\mathbf{C}$, and $\Delta$ cannot be flexibly adjusted according to changes in the data at different time steps, so they cannot accurately describe real-world processes that change over time. To overcome this limitation, Mamba improves the SSM through a selection mechanism that makes the model parameters input-dependent, thus turning it into a time-varying system. This allows the model to pay more attention to relevant information and provides a more accurate and realistic representation of dynamic systems. Specifically, by modifying parameters $\mathbf{B}$, $\mathbf{C}$, and $\Delta$ to be simple functions of the input sequence $u$, input-dependent parameters $\mathbf{B}_t$, $\mathbf{C}_t$, and $\Delta_t$ are obtained. Then the input-dependent discrete parameters $\bar{\mathbf{A}}_t$ and $\bar{\mathbf{B}}_t$ are calculated accordingly. The discrete state transition and observation equations are given by Equation (3).

$$x_t = \bar{\mathbf{A}}_t\,x_{t-1} + \bar{\mathbf{B}}_t\,u_t, \qquad y_t = \mathbf{C}_t\,x_t + \mathbf{D}\,u_t \tag{3}$$

The process described above can be briefly summarized in Figure 1.

Figure 1. SSM in traditional Mamba.

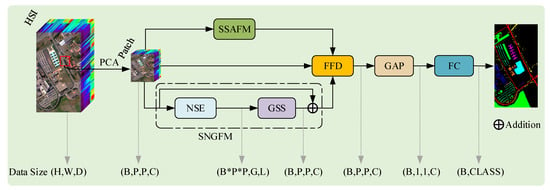
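As a rough illustration of the discretize-then-recur process summarized above, the following NumPy sketch applies zero-order-hold discretization to a diagonal state matrix and runs the resulting recurrence over a short input. The function names `zoh_discretize` and `ssm_scan`, the diagonal form of the state matrix, and the fixed (input-independent) parameters are assumptions made for brevity; Mamba's selection mechanism would instead recompute the step size and projection parameters at every time step.

```python
import numpy as np


def zoh_discretize(A, B, delta):
    """Zero-order-hold discretization for a diagonal state matrix.

    A: (N,) nonzero diagonal entries of the continuous state matrix.
    B: (N,) continuous input projection.
    delta: positive scalar step size.
    """
    A_bar = np.exp(delta * A)
    # For diagonal A, (delta*A)^-1 (exp(delta*A) - I) delta*B reduces to this.
    B_bar = (A_bar - 1.0) / A * B
    return A_bar, B_bar


def ssm_scan(u, A_bar, B_bar, C, D):
    """Run x_t = A_bar * x_{t-1} + B_bar * u_t and y_t = C . x_t + D * u_t."""
    x = np.zeros_like(A_bar)
    y = np.empty(len(u))
    for t, u_t in enumerate(u):
        x = A_bar * x + B_bar * u_t   # state transition
        y[t] = C @ x + D * u_t        # observation with skip connection
    return y
```

The loop costs one state update per time step, which is where the linear complexity in sequence length comes from.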

2.2. Overview

This paper proposes a Dual-Aware Discriminative Fusion Mamba (DADFMamba) model, which is specifically designed to capture the spatial correlation of neighboring pixel features in HSI and discriminate the spectral details in HSI. The overall architecture is shown in Figure 2 and can be divided into three key components: Spatial-Structure-Aware Fusion Module (SSAFM), Spectral-Neighbor-Group Fusion Module (SNGFM), and Feature Fusion Discriminator (FFD).

Figure 2. Overview of the DADFMamba framework.

Specifically, let the original HSI data be $X \in \mathbb{R}^{H \times W \times B}$, where $H$, $W$, and $B$ represent the height, width, and number of spectral channels, respectively, and let the label corresponding to each pixel be $Y \in \mathbb{R}^{H \times W}$. To retain the main spectral information and remove redundant information, principal component analysis (PCA) dimensionality reduction is first performed to obtain $X_{pca} \in \mathbb{R}^{H \times W \times C}$, where $C$ represents the reduced spectral dimension. For patch-level HSI classification, to avoid losing edge information, the edges of $X_{pca}$ are padded and 3D patches $X_{p} \in \mathbb{R}^{P \times P \times C}$ are then extracted, where $P$ is the size of the patch. Finally, the processed data are randomly divided into training and test sets and input into the model.
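The preprocessing steps above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: the helper names `pca_reduce` and `extract_patches`, the SVD-based PCA, the zero padding mode, and the assumption of an odd patch size are all choices made here for the example.

```python
import numpy as np


def pca_reduce(cube, n_components):
    """Project an (H, W, B) cube onto its first n_components principal axes."""
    H, W, B = cube.shape
    flat = cube.reshape(-1, B).astype(float)   # astype returns a copy
    flat -= flat.mean(axis=0)                  # center each band
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(flat, full_matrices=False)
    return (flat @ vt[:n_components].T).reshape(H, W, n_components)


def extract_patches(cube, patch_size):
    """Return one (patch_size, patch_size, C) patch centered on every pixel.

    Assumes an odd patch_size; borders are zero-padded (an assumption here).
    """
    pad = patch_size // 2
    padded = np.pad(cube, ((pad, pad), (pad, pad), (0, 0)))
    H, W, C = cube.shape
    patches = np.empty((H * W, patch_size, patch_size, C))
    for r in range(H):
        for c in range(W):
            patches[r * W + c] = padded[r:r + patch_size, c:c + patch_size]
    return patches
```

Each patch keeps the classified pixel at its center, so the label of patch `r * W + c` is the label of pixel `(r, c)`.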

Spatial autocorrelation is a fundamental property in geographic information science and remote sensing, rooted in the principle of spatial proximity, namely, spatially adjacent units tend to exhibit higher similarity [60]. In HSI, this phenomenon is particularly prominent, as neighboring pixels typically belong to the same land-cover class and present similar spectral responses. Inspired by this observation, leveraging both spatial structural information and spectral characteristics from neighboring pixels can significantly enhance the classifier’s discriminative capability for the center pixel. To this end, we propose an SSAFM and an NSE strategy, which aim to improve feature representation by modeling local spatial structures and reinforcing spectral consistency. These designs contribute to improved classification accuracy and robustness in HSI classification tasks. In the proposed model, the SSAFM is first introduced to maximally utilize HSI spatial neighborhood information during feature extraction. This module directly establishes connectivity between neighboring HSI pixels in the state space by applying linear weighting to neighboring state variables within each neighborhood, thereby extracting features that incorporate HSI spatial structures. This operation enhances the model’s sensitivity to land-cover boundaries and spatial adjacency relationships. Concurrently, the SNGFM incorporates spectral information from neighboring pixels to augment the target pixel’s spectral characteristics. The enhanced features are then partitioned into small-range patches to extract intrinsic physical properties of land-cover categories, effectively reducing the impact of spatial variability on classification results. Subsequently, the FFD serves as a discriminator to assess the relative importance of spatial and spectral features. It adaptively assigns weights to the spatial features from SSAFM and spectral features from SNGFM, enabling optimal spatial-spectral feature fusion. 
The fused feature maps then undergo a Global Average Pooling (GAP) operation, reducing their spatial dimensions to 1 × 1. Finally, the pooled features are flattened into a 1D vector and fed into a fully connected classifier to produce the final classification results.

2.3. Spatial-Structure-Aware Fusion Module (SSAFM)

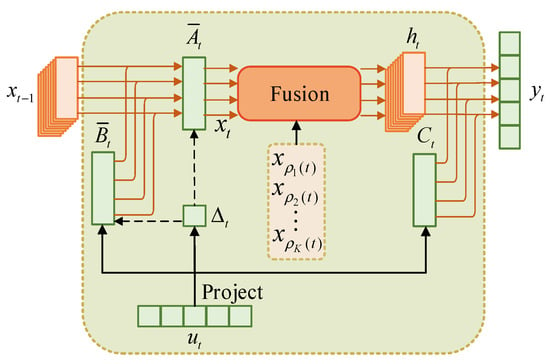

In order to make full use of spatial structural information for HSI classification, image patches centered on the classified pixels are conventionally used as input. However, the Mamba model typically converts 2D image patches into 1D sequence data and performs various scanning strategies on the 1D sequence data. This will destroy the original spatial structure of HSI and affect the classification results. In order to solve this problem and maintain the spatial structural relationship between pixels in HSIs, SSAFM is proposed. Different from the existing methods of scanning HSI from multiple directions [24,25,26], this module introduces a new structure-aware state fusion (SASF) equation to the original Mamba, which can be described by Equation (4). The spatial dependency of neighboring features of HSI is captured from the latent state space.

$$h_t = \sum_{k \in \Omega} \alpha_k\, x_{\rho_k(t)} \tag{4}$$

where $x_t$ is the original state variable, $h_t$ is the structure-aware state variable, $\Omega$ is the neighbor set, $\rho_k(t)$ is the index of the $k$th neighbor of position $t$, and $\alpha_k$ is the corresponding fusion weight. Figure 3 illustrates the SSM flow in SSAFM. Compared with the original Mamba in Figure 1, the original state variable $x_t$ is directly affected only by its previous state $x_{t-1}$, while the structure-aware state variable $h_t$ combines the additional neighboring state variables $x_{\rho_1(t)}, \ldots, x_{\rho_K(t)}$ through a fusion mechanism, where $K$ represents the size of the neighbor set. By considering both spatial and temporal information, the fused variable obtains richer semantics, thereby improving adaptability and providing a more comprehensive understanding of HSI.

Figure 3. SSM in SSAFM; ‘fusion’ refers to our proposed structure-aware state fusion (SASF) equation.

The details of the SSAFM are shown in Figure 4. The HSI data input to the model is first flattened along the spatial dimension into a 1D sequence, and the state $x_t$ is calculated using the state transition equation $x_t = \bar{\mathbf{A}}_t\,x_{t-1} + \bar{\mathbf{B}}_t\,u_t$. The calculated state is reshaped into a 2D format. To enable each state to perceive its neighboring states in the 2D space, the SASF equation is introduced. For the state variable $x_t$, the weight $\alpha_k$ is used to apply linear weighting to the neighboring states in its neighborhood set $\Omega$, integrating the local dependency information into the new state $h_t$ and reducing the dependence on the information of a single state, thereby improving the prediction performance over the overall context. Finally, the output $y_t$ is obtained from $h_t$ through the observation equation $y_t = \mathbf{C}_t\,h_t + \mathbf{D}\,u_t$. The process can be described by Equation (5).

$$\begin{aligned} x &= \mathrm{Transition}\big(\mathrm{Flatten}(X_p)\big), \\ h &= \mathrm{SASF}\big(\mathrm{Reshape}(x)\big), \\ y &= \mathrm{Observation}(h), \end{aligned} \tag{5}$$

where $P$ and $C$ represent the spatial dimension size and number of channels of the data patch $X_p \in \mathbb{R}^{P \times P \times C}$ input to the model, respectively. Flatten and Reshape refer to flattening the spatial dimensions into a sequence of length $P^2$ and reshaping the states back into a $P \times P$ visual format, respectively. Transition and Observation represent the state transition equation and observation equation, respectively. The SASF equation not only captures the local features of the image but also retains, to a certain extent, the global context modeling ability of the original Mamba model, thereby achieving a more comprehensive visual representation.

Figure 4. Structure of SSAFM.

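In practice, three 3 × 3 depth-wise kernels with dilation factors d = 1, 3, and 5 are used to construct the HSI feature neighbor set $\Omega$, and the SASF equation can be rewritten as Equation (6).

$$h_t = \sum_{d \in \{1,3,5\}} \; \sum_{i=-1}^{1} \; \sum_{j=-1}^{1} k^{d}_{ij}\, x_{t + d\,(i\,w + j)} \tag{6}$$

where $k^{d}_{ij}$ represents the kernel weight of the dilation factor d at position $(i, j)$, $x_{t + d(iw + j)}$ represents the neighbor of state $x_t$ at position $(i, j)$, and $w$ represents the width of the HSI patch.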
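To make the dilated neighbor fusion concrete, the sketch below applies three 3 × 3 depth-wise kernels with dilations 1, 3, and 5 to a grid of state vectors and sums the results. It is a hedged illustration rather than the paper's implementation: the function name `sasf`, the `(dilation, 3, 3, channels)` kernel layout, and zero padding at the patch border are assumptions, and the states are indexed on the 2D grid directly instead of through the flattened index.

```python
import numpy as np


def sasf(states, kernels, dilations=(1, 3, 5)):
    """Fuse each state with its dilated 3x3 neighbors (depth-wise).

    states:  (H, W, N) grid of state vectors, already reshaped to 2D.
    kernels: (len(dilations), 3, 3, N) per-channel weights (hypothetical layout).
    """
    H, W, _ = states.shape
    fused = np.zeros(states.shape, dtype=float)
    for k_d, d in zip(kernels, dilations):
        # Zero-pad so that neighbors outside the patch contribute nothing.
        padded = np.pad(states, ((d, d), (d, d), (0, 0)))
        for i in (-1, 0, 1):          # row offset, scaled by the dilation
            for j in (-1, 0, 1):      # column offset, scaled by the dilation
                r0, c0 = d + i * d, d + j * d
                shifted = padded[r0:r0 + H, c0:c0 + W, :]
                fused += k_d[i + 1, j + 1] * shifted
    return fused
```

With only the center weight of the dilation-1 kernel set to one, `sasf` returns the original states, so the fused state can fall back to the plain Mamba state when neighbors are uninformative.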

2.4. Spectral-Neighbor-Group Fusion Module (SNGFM)

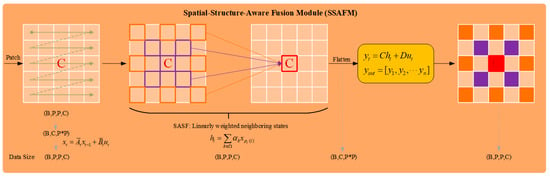

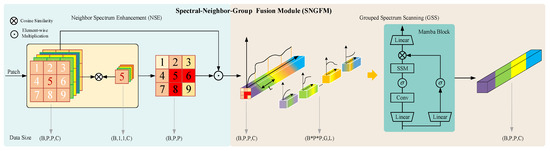

In addition to spatial structural information, HSIs also carry a large amount of spectral information. Reasonable use of spectral information can complement spatial information and improve the model’s ability to distinguish fine-grained information. The existing Mamba-based methods [25,26] directly use Mamba or Mamba with a bidirectional scanning mechanism to process the entire spectral data and do not use the spectral information of the target pixel’s spatial neighborhood. Since HSI has spatial variability, this will affect the model’s ability to identify substances. In addition, the spectral information of HSI contains a lot of redundant information, which will greatly increase the computational cost. Based on the above considerations, we designed SNGFM. First, the spectral information of the spatial neighborhood is used to enhance the spectral features of the target pixel. Then, in order to reduce data redundancy in HSI and facilitate the SSM to focus on subtle differences in the spectral domain, the enhanced spectral features are partitioned into groups, enabling efficient spectral information extraction through grouped scanning, as shown in Figure 5.

Figure 5. Structure of SNGFM, including neighbor spectrum enhancement (NSE) and grouped spectrum scanning (GSS).

2.4.1. Neighbor Spectrum Enhancement (NSE)

In order to alleviate the phenomenon that the same substance exhibits different spectral characteristics due to spatial variability, it is very important to use neighboring spectrum information to enhance the spectral characteristics of the target pixel. The patch obtained after PCA dimensionality reduction is input into the module, where and are the size of the patch and the dimension of the spectrum after PCA dimensionality reduction, respectively. Cosine similarity [61] is widely used to measure the angular similarity between high-dimensional vectors. In the context of HSI, each pixel can be viewed as a high-dimensional spectral vector. By computing the cosine similarity between a target pixel and its neighboring pixels, the method effectively captures spectral consistency, which is crucial for preserving material identity across spatial regions. This similarity measure is scale-invariant, ensuring robustness to illumination variation, a common challenge in real-world HSI acquisition. Moreover, this mechanism can be interpreted under the framework of graph signal processing, where each pixel is a node and similarity-based edge weights reflect the strength of connections. The enhanced feature representation thus approximates a spectral-aware spatial smoothing process that improves intra-class compactness and inter-class separability in the learned feature space. Considering that the information of the classified pixel plays a dominant role in the patch, the cosine similarity between the classified pixel and the spectral information of each spatial neighborhood pixel in the patch is calculated to obtain the similarity weight . The more similar the two are, the more likely they are to obtain a higher score, while the score of the noise pixel is lower. Then the input patch and are weighted to obtain the spectral feature of the classified pixel enhancement. The whole processing process is shown in the following formula:

Here, the weighting is an element-wise multiplication operation, and the index denotes the coordinate of a pixel in the patch.
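The weighting step described above can be illustrated with a minimal sketch. It assumes the patch is a small nested list of per-pixel spectral vectors and that the enhancement is the element-wise product of the patch with the cosine-similarity weights. The function names `cosine_similarity` and `neighbor_spectrum_enhancement`, the toy patch, and the zero-weight convention for all-zero spectra are illustrative assumptions, not the authors' implementation.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two spectral vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen for this sketch: all-zero spectra get weight 0
    return dot / (norm_u * norm_v)


def neighbor_spectrum_enhancement(patch):
    """Weight every pixel spectrum by its similarity to the center pixel.

    patch: square nested list, patch[row][col] is a list of band values.
    Returns (weights, weighted_patch).
    """
    size = len(patch)
    center = patch[size // 2][size // 2]
    weights = [[cosine_similarity(center, pixel) for pixel in row] for row in patch]
    weighted = [
        [[w * band for band in pixel] for w, pixel in zip(w_row, row)]
        for w_row, row in zip(weights, patch)
    ]
    return weights, weighted


# Toy 3 x 3 patch with 2 bands per pixel (hypothetical values).
patch = [
    [[2.0, 4.0], [1.0, 2.0], [2.0, -1.0]],
    [[1.0, 2.0], [1.0, 2.0], [1.0, 2.0]],
    [[0.0, 0.0], [1.0, 2.0], [3.0, 6.0]],
]
weights, weighted = neighbor_spectrum_enhancement(patch)
```

In this toy patch, a neighbor whose spectrum is a scaled copy of the center spectrum receives a weight close to 1, which reflects the scale invariance of cosine similarity, while a neighbor whose spectrum is orthogonal to the center spectrum receives a weight of 0.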

2.4.2. Grouped Spectrum Scanning (GSS)

The inherent redundancy in HSIs presents significant challenges for efficient feature extraction. To address this issue, the proposed grouped scanning method effectively mitigates spectral redundancy by partitioning spectral bands into multiple fixed-length subgroups and processing each independently using selective state space models. This decomposition transforms high-dimensional spectral data into multiple low-dimensional subproblems, which not only enhances the model’s capacity to discern subtle spectral variations but also makes the framework more computationally friendly.

Specifically, the enhanced spectral feature is divided along the spectral dimension into G groups of length L and scanned using Mamba, where C is the spectral dimension after PCA dimensionality reduction, L = C/G, and C is set to be exactly divisible by G. The extracted spectral feature can be obtained by the following formula:

Here, the output is the spectral feature extracted by Mamba, G denotes the number of groups, and L indicates the length of each group.
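The partitioning step can be sketched as follows. This is a minimal illustration that covers only the split of C spectral channels into G groups of length L = C/G. The Mamba scan itself is omitted, and the function name `split_into_groups` is a hypothetical helper. The values 40, 5, and 8 correspond to the numbers of principal components and subgroups reported in the experimental settings.

```python
def split_into_groups(spectrum, num_groups):
    """Split a length-C spectral vector into G contiguous groups of length C // G."""
    channels = len(spectrum)
    if num_groups <= 0 or channels % num_groups != 0:
        raise ValueError("C must be exactly divisible by G")
    group_len = channels // num_groups
    return [spectrum[g * group_len:(g + 1) * group_len] for g in range(num_groups)]


# Stand-in for one pixel's 40 PCA components (hypothetical values).
spectrum = list(range(40))
groups_5 = split_into_groups(spectrum, 5)  # 5 groups of length 8
groups_8 = split_into_groups(spectrum, 8)  # 8 groups of length 5
```

Each group then forms a shorter sequence for the state space model, which is why a larger G shortens the per-group length for a fixed C.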

2.5. Feature Fusion Discriminator (FFD)

In this paper, SSAFM and SNGFM extract the spatial and spectral features of HSI, respectively. SSAFM captures neighborhood spatial features from the state space, and SNGFM extracts spectral features in groups after introducing neighborhood spectral information. To effectively integrate spectral and spatial features for classification, we adopt a commonly used weighted feature fusion strategy, similar to that in [62]. Specifically, we introduce a Feature Fusion Discriminator (FFD) module, which adaptively evaluates the contribution of spatial and spectral features to the classification outcome and guides the feature fusion by assigning appropriate weights. Furthermore, considering the demand for sample-efficient models in HSI classification, we incorporate residual learning to mitigate potential overfitting during training. The fusion process can be formally expressed as follows:

Here, the output denotes the fused spatial-spectral features, and two learnable weights serve as the fusion weights for the spatial and spectral features, respectively. These weights are randomly initialized and ultimately determined through backpropagation during the training process.
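A minimal sketch of weighted feature fusion is given here for illustration. It assumes that the fused feature is a weighted sum of the spatial and spectral features and treats the two weights as plain numbers; in training they would be learnable parameters. The residual connection mentioned above is omitted because its exact placement is not specified in this sketch. The names `weighted_fusion`, `sum_fusion`, `w_spatial`, and `w_spectral` are hypothetical.

```python
def weighted_fusion(spatial, spectral, w_spatial, w_spectral):
    """Fuse two equally sized feature vectors with scalar weights."""
    if len(spatial) != len(spectral):
        raise ValueError("spatial and spectral features must have the same length")
    return [w_spatial * a + w_spectral * b for a, b in zip(spatial, spectral)]


def sum_fusion(spatial, spectral):
    """Direct summation: the special case in which both weights equal 1."""
    return weighted_fusion(spatial, spectral, 1.0, 1.0)


# Toy features (hypothetical values).
spatial_feat = [1.0, 2.0]
spectral_feat = [3.0, 4.0]
fused = weighted_fusion(spatial_feat, spectral_feat, 0.5, 0.5)
summed = sum_fusion(spatial_feat, spectral_feat)
```

Direct summation is the special case with both weights fixed to 1, whereas learnable weights let training decide how much each branch contributes.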

Following the FFD, a Global Average Pooling (GAP) layer is applied, which averages the fused features over their spatial dimensions to obtain a feature vector that is subsequently fed into a fully connected layer to produce the final classification results. The DADFMamba network is trained using a cross-entropy loss function [63], defined as follows:

Here, the loss is computed from the ground truth labels and the predicted outputs of DADFMamba over the total number of training samples.
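As a worked illustration of the loss, the following sketch assumes the standard mean cross-entropy over softmax outputs with integer class labels. Whether the paper's formula uses exactly this normalization is an assumption, and the function names and toy logits are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of class scores."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits_batch, labels):
    """Mean negative log-probability assigned to the true class."""
    loss = 0.0
    for logits, label in zip(logits_batch, labels):
        probs = softmax(logits)
        loss -= math.log(probs[label])
    return loss / len(labels)


# Two toy samples with three classes each (hypothetical values).
logits_batch = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
labels = [0, 1]
loss = cross_entropy(logits_batch, labels)
```

A uniform prediction over K classes contributes log K to the loss, and the contribution shrinks toward zero as the predicted probability of the true class approaches 1.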

2.6. Datasets Description

To comprehensively evaluate the effectiveness of the proposed model, four benchmark datasets were selected: Indian Pines, Pavia University, Salinas, and Houston. We selected these four datasets because they are widely used in remote sensing and cover real-world application scenarios such as agricultural monitoring, land cover classification, and urban analysis. These datasets differ significantly in terms of the number of classes, scene structure, and spectral–spatial characteristics, making them suitable for comprehensively evaluating the generalization ability and robustness of the proposed model under diverse and challenging remote sensing conditions. For each dataset, 30 samples per class were randomly chosen for training, with the remaining samples used for testing.
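The per-class sampling protocol can be sketched as follows. This is a minimal illustration that assumes labels are stored as a flat list of integers and that one label value marks unlabeled pixels. The function name `split_per_class`, the `ignore_label` parameter, and the fixed seed are hypothetical. The sketch simply takes all available samples when a class has fewer than the requested number, which may differ from the handling used in the paper.

```python
import random
from collections import defaultdict


def split_per_class(labels, per_class=30, seed=0, ignore_label=0):
    """Randomly pick `per_class` training indices per class; the rest go to the test set."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        if label != ignore_label:  # skip unlabeled pixels
            by_class[label].append(idx)
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label][:]
        rng.shuffle(indices)
        train.extend(indices[:per_class])
        test.extend(indices[per_class:])
    return sorted(train), sorted(test)


# Toy label map: 50 pixels of class 1, 40 of class 2, 10 unlabeled (hypothetical).
labels = [1] * 50 + [2] * 40 + [0] * 10
train_idx, test_idx = split_per_class(labels, per_class=30, seed=0)
```

Because the number of training samples is fixed per class, the training set is balanced while the test set keeps the original class distribution.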

The HSI datasets and sensor descriptions used in this study were obtained from publicly available sources. Except for the removal of certain bands severely affected by water absorption, as recommended by the dataset providers, no additional spectral band selection was performed. This preprocessing approach is consistent with common practices in the literature and ensures the reproducibility and representativeness of the experimental results. The datasets mentioned above are as follows:

- (1)

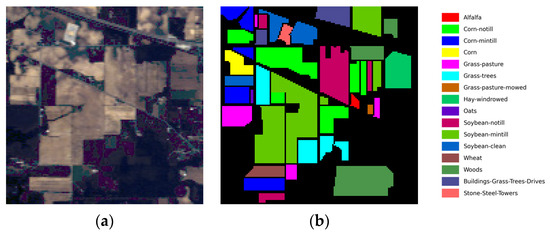

- Indian Pines: The dataset was collected by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor at the Indian Pine Test Site in northwestern Indiana in 1992 and consists of 224 spectral reflectance bands with a wavelength range of 0.4 to 2.5 μm. The image consists of 145 × 145 pixels and 16 land cover classes. In this experiment, a total of 200 bands were selected, and the noise bands (104–108), (150–163), and 220 were discarded. The pseudo-color image and the ground truth map are shown in Figure 6a,b, respectively. The number of training and test samples is described in detail in Table 1.

Figure 6. Indian Pines dataset. (a) False-color image, (b) ground truth map.

Figure 6. Indian Pines dataset. (a) False-color image, (b) ground truth map. Table 1. The numbers of samples in the Indian Pines dataset.

Table 1. The numbers of samples in the Indian Pines dataset.

- (2)

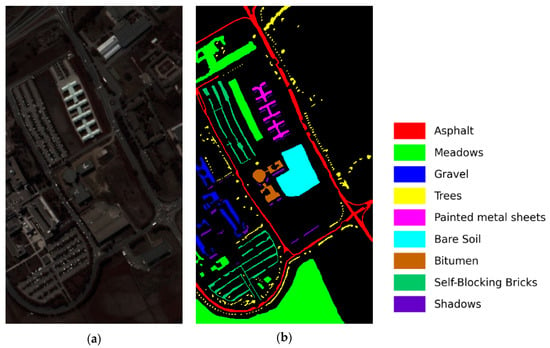

- Pavia University: This dataset was collected in 2001 at the University of Pavia in northern Italy using the Reflection Optical System Imaging Spectrometer (ROSIS) sensor. It consists of 103 bands with a wavelength range from 0.43 to 0.86 μm. The image includes 610 × 340 pixels and 9 land cover classes with a spatial resolution of 1.3 m. The pseudo-color image and the ground truth classification map are shown in Figure 7a,b, respectively. The number of training and test samples is described in detail in Table 2.

Figure 7. Pavia University dataset. (a) False-color image, (b) ground truth map.

Figure 7. Pavia University dataset. (a) False-color image, (b) ground truth map. Table 2. The numbers of samples in the Pavia University dataset.

Table 2. The numbers of samples in the Pavia University dataset.

- (3)

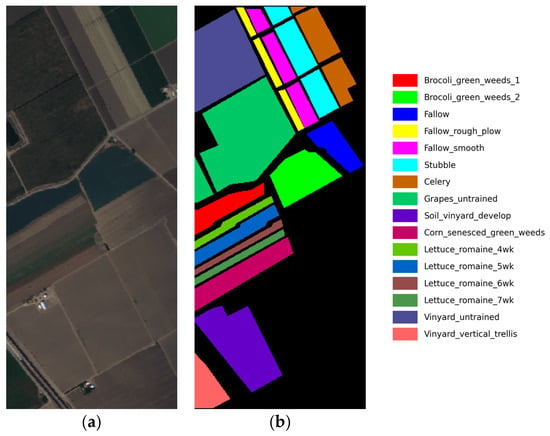

- Salinas: This dataset was collected by the 224-band AVIRIS sensor in the Salinas Valley, California, USA. The image consists of 512 × 217 pixels, and 20 water absorption bands are excluded, namely (108–112), (154–167), and 224 bands. The data only provides sensor radiance values, including landforms such as vegetables, bare soil, and vineyards. The ground truth labels of the Salinas dataset contain a total of 16 categories with a spatial resolution of 3.7 m. The pseudo-color image and the ground truth classification map are shown in Figure 8a,b, respectively. The number of samples in the training set and test set is detailed in Table 3.

Figure 8. Salinas dataset. (a) False-color image, (b) ground truth map.

Figure 8. Salinas dataset. (a) False-color image, (b) ground truth map. Table 3. The numbers of samples in the Salinas dataset.

Table 3. The numbers of samples in the Salinas dataset.

- (4)

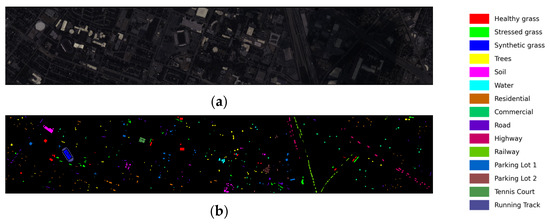

- Houston: The image was collected by the ITRES CASI 1500 HS imager on the campus of the University of Houston and its neighboring areas, with 144 spectral bands and an image size of 349 × 1905. It was provided by the 2013 IEEE Geoscience and Remote Sensing Society (GRSS) Data Fusion Competition. The dataset contains 15 classes. The pseudo-color image and the ground truth classification map are shown in Figure 9a,b, respectively. The number of training and test samples is described in detail in Table 4.

Figure 9. Houston dataset. (a) False-color image, (b) ground truth map.

Figure 9. Houston dataset. (a) False-color image, (b) ground truth map. Table 4. The numbers of samples in the Houston dataset.

Table 4. The numbers of samples in the Houston dataset.

3. Results

In this section, we first introduce the experimental details. Subsequently, we compare the classification performance with several state-of-the-art (SOTA) network models. Next, we analyze the model’s performance under different key parameter configurations, including batch size, patch size, and the number of PCA components together with the number of spectral groups. Following this, ablation studies and a computational complexity analysis are conducted. Finally, we investigate the impact of varying numbers of training samples on classification accuracy.

3.1. Experiment Details

The experimental configuration is implemented under the following settings:

- The proposed method was implemented using PyTorch 2.0.1, and its performance was evaluated on an NVIDIA GeForce RTX 3080 GPU (NVIDIA, Santa Clara, CA, USA).

- This paper uses three commonly used evaluation indicators: overall accuracy (OA), average accuracy (AA), and Kappa coefficient (κ × 100) to compare the performance of various methods.

- To ensure the fairness of the experiment, all compared methods follow the optimal parameter configurations reported in their respective papers. The proposed DADFMamba is configured as follows. For the Indian Pines dataset, the batch size is set to 64, the patch size is set to 9 × 9, 40 principal components are retained, and they are divided into 5 subgroups for feature extraction. For the Pavia University dataset, the batch size is set to 32, the patch size is set to 9 × 9, 40 principal components are retained, and they are divided into 5 subgroups for feature extraction. For the Salinas dataset, the batch size is set to 128, the patch size is set to 13 × 13, 40 principal components are retained, and they are divided into 8 subgroups for feature extraction. For the Houston dataset, the batch size is set to 256, the patch size is set to 9 × 9, 40 principal components are retained, and they are divided into 5 subgroups for feature extraction. Finally, the network is trained using the Adam optimizer with the weight decay set to 0.001, the learning rate set to 0.01, and the number of epochs set to 100.
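The three evaluation indicators listed above can be computed from a confusion matrix, as in the following sketch. It assumes rows index the true class and columns index the predicted class, and it uses the standard definitions of OA, AA, and Cohen's kappa. The function name `classification_metrics` and the toy matrix are illustrative.

```python
def classification_metrics(confusion):
    """Return (OA, AA, kappa) from a square confusion matrix (rows: true, cols: predicted)."""
    k = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(k))
    oa = correct / total

    # AA: mean of per-class recall, skipping classes with no test samples.
    recalls = [confusion[i][i] / sum(confusion[i]) for i in range(k) if sum(confusion[i]) > 0]
    aa = sum(recalls) / len(recalls)

    # Kappa: agreement corrected for the agreement expected by chance.
    row_totals = [sum(confusion[i]) for i in range(k)]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    kappa = (oa - expected) / (1.0 - expected)
    return oa, aa, kappa


# Toy two-class confusion matrix (hypothetical counts).
confusion = [[45, 5], [10, 40]]
oa, aa, kappa = classification_metrics(confusion)
```

For this toy matrix, OA is 0.85, AA is 0.85, and kappa is 0.70; the tables in this paper report kappa multiplied by 100.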

3.2. Quantitative Evaluation

To further verify the effectiveness of DADFMamba, this section quantitatively compares it with state-of-the-art HSI classification methods based on CNN, Transformer, and Mamba architectures, as follows:

- 2DCNN [41]: This model reduces the dimensionality of the original data through R-PCA, passes it into two convolutional layers for feature extraction, and finally implements classification through a fully connected layer.

- 3DCNN [42]: The model consists of a 3D convolution layer, a 3D pooling layer, a 1D convolution layer, and a fully connected layer for classification.

- HybridSN [43]: The model is a mixture of 2D and 3D CNN models, including three 3D convolutional layers, one 2D convolutional layer, and three fully connected layers, and classification is finally achieved through softmax.

- SPRN [46]: The model mainly consists of a padding layer, two grouped convolutional layers, two residual modules, a fully connected layer, and softmax is used for classification after the global pooling layer.

- SpectralFormer [48]: The model includes a layer for processing spectral information, a patch embedding layer, a transformer layer and an MLP layer for classification.

- SSFTT [49]: The model consists of a 3D convolutional layer, a 2D convolutional layer, a transformer layer with positional encoding, and a linear layer for classification.

- MorphFormer [50]: The model consists of a convolutional layer, a morphology-based self-attention module operating jointly in the spectral-spatial domain, and a linear layer for classification.

- GSC-ViT [52]: The model consists of grouped point-wise convolutional layers, two feature extraction modules consisting of convolutional layers and transformer layers, and a fully connected layer for classification.

- 3DSS-Mamba [22]: The model consists of a 3D convolutional layer, multiple 3D-Spectral-Spatial Mamba blocks, and finally a linear layer for classification.

- SS-Mamba [23]: The model comprises a spectral-spatial token generation module followed by multiple stacked spectral-spatial Mamba blocks. After spectral-spatial feature enhancement, classification is achieved through a linear layer.

- SSUM [57]: The model is composed of a Spectral Mamba branch and a Spatial Mamba branch, with classification ultimately performed by fusing the features from both branches.

Table 5, Table 6, Table 7 and Table 8 show the results of the quantitative comparison of the four datasets, including the accuracy of each category, overall accuracy (OA), average accuracy (AA), and Kappa coefficient (κ). The comparison methods can be roughly categorized into three groups: CNN-based, Transformer-based, and Mamba-based.

Table 5. Classification performance obtained by different methods for the Indian Pines dataset (the best OA, AA, and κ × 100 values are bolded; no. 1–16 represents the accuracy of each category).

Table 6. Classification performance obtained by different methods for the Pavia University dataset (the best OA, AA, and κ × 100 values are bolded; no. 1–9 represents the accuracy of each category).

Table 7. Classification performance obtained by different methods for the Salinas dataset (the best OA, AA, and κ × 100 values are bolded; no. 1–16 represents the accuracy of each category).

Table 8. Classification performance obtained by different methods for the Houston dataset (the best OA, AA, and κ × 100 values are bolded; no. 1–15 represents the accuracy of each category).

Among the selected comparative methods, 2D-CNN, 3D-CNN, and HybridSN do not achieve satisfactory classification accuracy on the four datasets. This can be attributed to the limited receptive fields of traditional convolutional kernels, which restrict their ability to capture long-range dependencies. In contrast, SPRN segments the input spectral bands into several non-overlapping contiguous sub-bands and applies equivalent grouped convolutions to extract features. The experimental results show that SPRN achieves better performance, as the segmented spectral information helps compensate for the receptive field limitations of standard CNNs. Although SpectralFormer is built upon a Transformer architecture and theoretically has strong long-range modeling capabilities, it relies solely on spectral features for classification and lacks spatial information modeling, which leads to suboptimal performance across all datasets. SSFTT combines 3D and 2D convolutions to extract shallow spectral-spatial features and then leverages the Transformer’s ability to model high-level semantic features over long distances. By integrating the strengths of both CNNs and Transformers, it achieves relatively strong classification performance on all four datasets. GSC-ViT, a recently proposed ViT-based method, introduces a Groupwise Separable Convolution module composed of grouped pointwise and group convolution. Additionally, it replaces the conventional Multi-head Self-Attention (MSA) in ViT with a Groupwise Separable Self-Attention module to better capture both global and local spatial features. As a result, GSC-ViT also achieves competitive performance across all four datasets. 3DSS-Mamba, SS-Mamba, and SSUM represent novel applications of Mamba backbone networks in HSI classification, offering new research perspectives for this field. 
However, 3DSS-Mamba exhibits clear limitations; it demonstrates poor classification performance across all four datasets when training sample sizes are limited. In contrast, both SS-Mamba and SSUM achieve satisfactory results on all four datasets. Specifically, SSUM calculates the spectral average of all neighboring pixels around the target pixel and uses this average to generate enhanced spectral features. While this approach can partially mitigate spatial variability, the spectral averaging process cannot differentiate importance levels among pixels, potentially blurring fine boundaries of small ground objects and leading to suboptimal classification. Compared to SSUM, DADFMamba employs cosine similarity measurements between the center pixel and its neighbors, assigning higher weights to more similar pixels, which more effectively suppresses spatial variability effects. Additionally, SSAFM better preserves the original spatial structural information of HSIs. Consequently, DADFMamba achieves the best classification performance.

Although most of the aforementioned methods achieve satisfactory classification performance, the proposed DADFMamba still outperforms them across all four datasets. Specifically, on the Indian Pines dataset, compared with the best-performing baseline, SPRN, DADFMamba achieves improvements of 2.93%, 1.31%, and 3.33% in OA, AA, and κ × 100, respectively. On the Pavia University dataset, DADFMamba outperforms SPRN by 1.14%, 0.26%, and 0.57% in OA, AA, and κ × 100, respectively. On the Salinas dataset, DADFMamba achieves improvements of 0.28%, 0.04%, and 0.32% over SSFTT in OA, AA, and κ × 100, respectively. On the Houston dataset, DADFMamba surpasses SS-Mamba by 0.97%, 0.69%, and 1.05% in OA, AA, and κ × 100, respectively. These experimental results demonstrate the superiority of DADFMamba in classification performance.

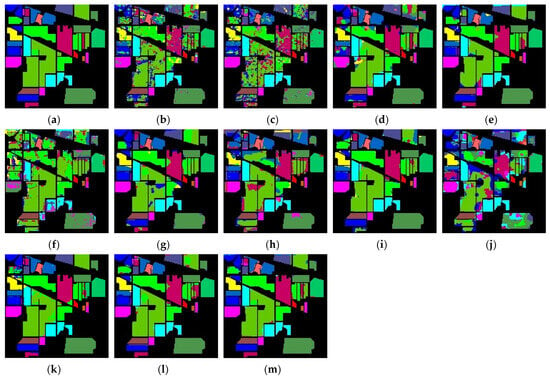

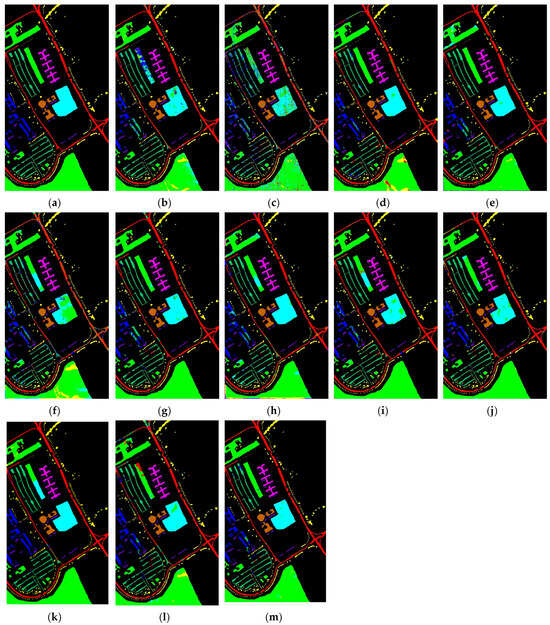

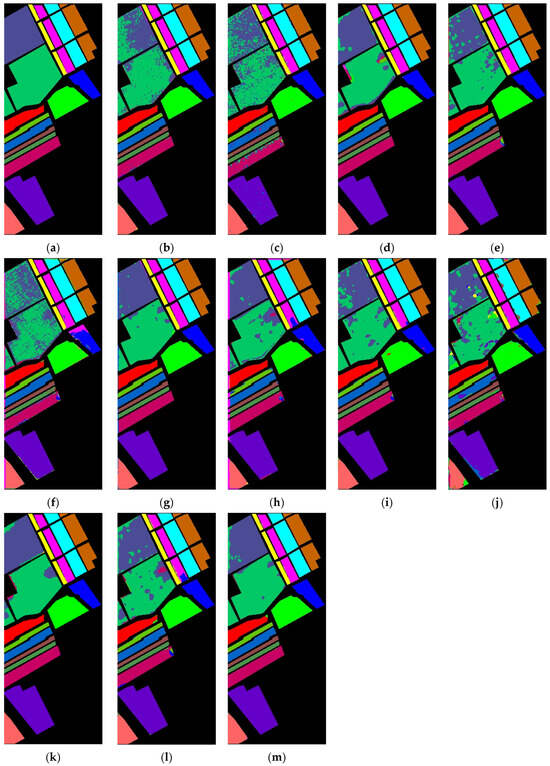

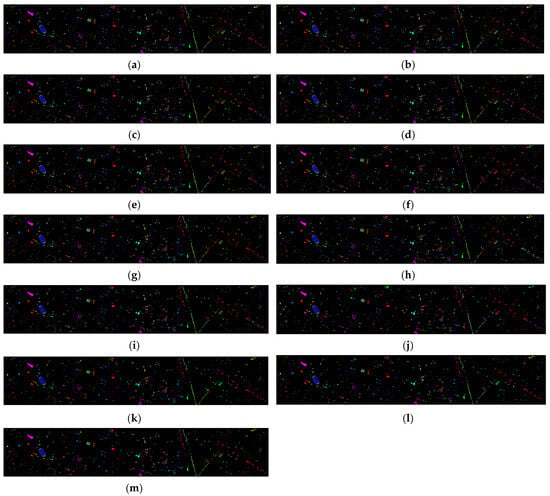

3.3. Comparison of Classification Maps

To more intuitively present the results of the quantitative experiments, this section visualizes the classification maps of all methods across different datasets, as shown in Figure 10, Figure 11, Figure 12 and Figure 13. Among the CNN-based methods, it is evident that the local receptive field of CNNs leads to category confusion between neighboring classes. This issue is particularly noticeable in the Salinas dataset, where the classes ‘Grapes_untrained’ (bright green) and ‘Vinyard_untrained’ (dark blue–purple) are adjacent in spatial location, resulting in frequent misclassifications between the two in the classification maps. Furthermore, due to the reliance on small patches for classification, CNNs tend to overemphasize local features while ignoring global consistency, which leads to salt-and-pepper noise in the classification results. This phenomenon is especially prominent in the Pavia University dataset. In addition, CNN-based methods show unsatisfactory visualization results on the Indian Pines dataset. For Transformer-based methods, SpectralFormer suffers from insufficient utilization of spatial information, resulting in noisy classification maps, particularly on the Indian Pines and Houston datasets. In contrast, both SSFTT and GSC-ViT produce more desirable and accurate classification results.

Figure 10. Classification maps produced by various methods applied to the Indian Pines dataset: (a) ground truth; (b) 2DCNN; (c) 3DCNN; (d) HybridSN; (e) SPRN; (f) SpectralFormer; (g) SSFTT; (h) MorphFormer; (i) GSC-ViT; (j) 3DSS-Mamba; (k) SS-Mamba; (l) SSUM; (m) DADFMamba.

Figure 11. Classification maps produced by various methods applied to the Pavia University dataset: (a) ground truth; (b) 2DCNN; (c) 3DCNN; (d) HybridSN; (e) SPRN; (f) SpectralFormer; (g) SSFTT; (h) MorphFormer; (i) GSC-ViT; (j) 3DSS-Mamba; (k) SS-Mamba; (l) SSUM; (m) DADFMamba.

Figure 12. Classification maps produced by various methods applied to the Salinas dataset: (a) ground truth; (b) 2DCNN; (c) 3DCNN; (d) HybridSN; (e) SPRN; (f) SpectralFormer; (g) SSFTT; (h) MorphFormer; (i) GSC-ViT; (j) 3DSS-Mamba; (k) SS-Mamba; (l) SSUM; (m) DADFMamba.

Figure 13. Classification maps produced by various methods applied to the Houston dataset: (a) ground truth; (b) 2DCNN; (c) 3DCNN; (d) HybridSN; (e) SPRN; (f) SpectralFormer; (g) SSFTT; (h) MorphFormer; (i) GSC-ViT; (j) 3DSS-Mamba; (k) SS-Mamba; (l) SSUM; (m) DADFMamba.

The proposed DADFMamba produces classification maps that most closely resemble the ground truth across all four public datasets. For the Indian Pines dataset, DADFMamba outperforms GSC-ViT in the lower-left region for the class ‘Soybean-notill’ (deep pink) and also demonstrates superior classification performance in the upper-left region compared to the best-performing baseline, SPRN. On the Pavia University dataset, although the overall results are highly comparable to those of SPRN, DADFMamba achieves slightly better accuracy in the lower bright green region and the central bright cyan region. For the Salinas dataset, DADFMamba shows fewer misclassifications between the spatially adjacent classes ‘Grapes_untrained’ (bright green) and ‘Vinyard_untrained’ (dark blue–purple) than the strongest baseline method, SSFTT. These results collectively demonstrate the superior fine-grained land-cover classification capability of the proposed method.

3.4. Parameter Analysis

Experiments have shown that in HSI classification, batch size, patch size, and the number of PCA components together with the number of spectral groups all have a significant impact on classification performance. This section provides an analysis of these parameters.

3.4.1. Batch Size

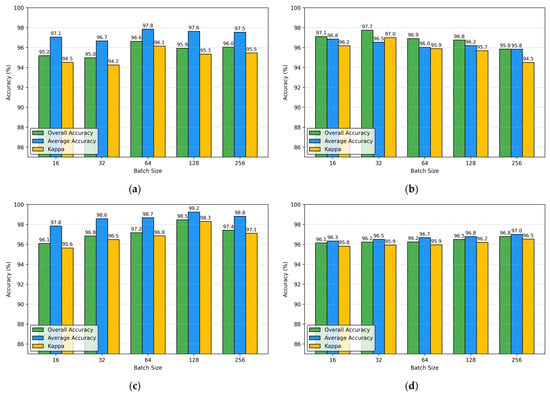

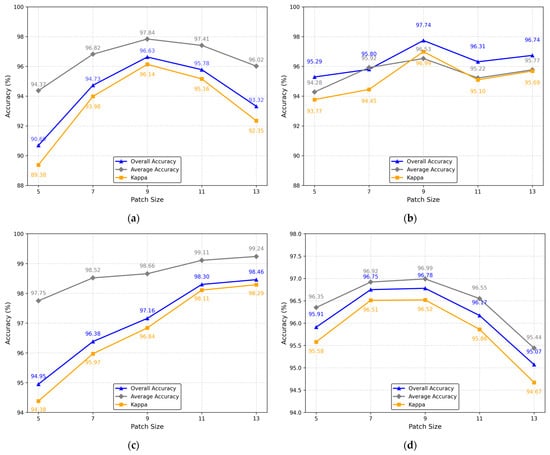

To investigate the impact of batch size on DADFMamba’s performance metrics (OA, AA, and κ) across all four datasets while keeping other parameters fixed, we conducted experiments with batch sizes selected from {16, 32, 64, 128, 256}. The experimental results are presented in Figure 14.

Figure 14. The impact of different batch sizes on OA, AA, and κ obtained by the proposed DADFMamba for the four datasets: (a) Indian Pines; (b) Pavia University; (c) Salinas; (d) Houston.

3.4.2. Patch Size

Patch size not only affects the classification results but is also one of the key parameters that affect memory consumption and computational load. In Figure 15, the patch size is quantitatively analyzed. It can be seen from Figure 15a,b,d that as the patch size increases, OA, AA, and κ first increase and then decrease, reaching their maximum when the patch size is 9. However, for the Salinas dataset, OA, AA, and κ continue to increase as the patch size increases. Considering all factors, the patch size is set to 13 for the Salinas dataset and to 9 for the other three datasets.

Figure 15. The impact of different patch sizes on OA, AA, and κ obtained by the proposed DADFMamba for the four datasets: (a) Indian Pines; (b) Pavia University; (c) Salinas; (d) Houston.

3.4.3. Varied PCA Bands with Spectral Groups

The number of bands retained after PCA-based dimensionality reduction affects not only the classification performance of the model but also its parameter size and computational cost. In addition, the number of groups in the GSS is usually determined based on the reduced band count. Therefore, extensive experiments were conducted on the four publicly available datasets to explore the joint effects of these two settings on OA, model parameters, and FLOPs. The experimental results are shown in Table 9, Table 10, Table 11 and Table 12. From the tables, it can be observed that as the number of reduced bands increases, both the number of parameters and the FLOPs of the model increase. However, when the number of reduced bands is fixed, increasing the number of groups leads to a gradual decrease in the model’s parameter count and FLOPs. Moreover, the OA reaches its peak when the group count is set to a moderate level. This indicates that a moderate group count balances computational cost and classification performance, further demonstrating the effectiveness of GSS. Specifically, for all four datasets, the number of bands after dimensionality reduction is set to 40. For the Indian Pines, Pavia University, and Houston datasets, the number of groups is set to 5, while for the Salinas dataset, it is set to 8.

Table 9. Performance of Indian Pines with varied PCA bands and spectral groups.

Table 10. Performance of Pavia University with varied PCA bands and spectral groups.

Table 11. Performance of Salinas with varied PCA bands and spectral groups.

Table 12. Performance of Houston with varied PCA bands and spectral groups.

3.5. Ablation Study

This section evaluates the effectiveness of SASF, NSE, GSS and FFD by using Case 1 (direct patch input to the Mamba model for classification) as the baseline. The “Sum” method denotes direct summation of spatial and spectral features. Experimental results are shown in Table 13.

Table 13. Results of the ablation study.

Case 2 presents the classification results after incorporating the SASF module. SASF captures the spatial dependencies of neighboring features in HSI by performing linear weighting on neighboring pixels while also preserving the spatial structural information of the HSI. It can be observed that all evaluation metrics across the four datasets show improvement.

Case 3 presents the classification results after combining SASF with the NSE module. NSE enhances spectral features by computing the cosine similarity between the central pixel and its neighboring pixels, aiming to mitigate the impact of spatial variability in HSI. The evaluation metrics on all four datasets show varying degrees of improvement, demonstrating the effectiveness of the NSE module.

Case 4 presents the classification results after integrating SASF with the GSS module. GSS divides the spectral information into multiple smaller groups for feature extraction by the Mamba. Its group-wise scanning mechanism enables the model to focus on subtle spectral differences while maintaining computational efficiency. When combined with SASF, the results are comparable to those in Case 3. However, compared to the baseline model, significant improvements are observed across all four datasets.

Case 5 presents the classification results obtained by combining NSE and GSS. The spectral information is first enhanced by NSE and then processed by the GSS mechanism to capture subtle spectral differences. Compared to the baseline model, this combination achieves competitive results, demonstrating the effectiveness of both modules.

Case 6 shows the results of directly adding features after combining the three strategies. In contrast, Case 7 integrates all four strategies, enabling the model to capture both the spatial dependencies of neighboring features and the subtle spectral variations in HSI. As a result, it achieves the highest classification performance across all four datasets. This confirms the effectiveness of the SASF module in enhancing neighboring pixel features, the NSE module in mitigating spatial variability, the GSS module in capturing spectral details, and the FFD module in feature fusion.

3.6. Complexity Analysis

We compared the number of parameters, floating point operations (FLOPs), and running time with several SOTA methods on the Indian Pines dataset. The comparison results are listed in Table 14, and the top three values of each indicator are bolded. The 2D-CNN and 3D-CNN methods have relatively simple structures, whereas the other compared models use convolution together with additional components, so it is expected that these two methods achieve the best results on the four indicators. In terms of parameters, 3DSS-Mamba, which is also a Mamba-based method, achieved the best result; the larger parameter count of our method may be due to the dilated convolutions used to implement the SASF equation. In terms of FLOPs, the value of our method is smaller than that of the other methods. In terms of training time and test time, our method is at the upper-middle level. Overall, our method achieved competitive results, which also demonstrates the effectiveness of the DADFMamba method.

Table 14. Comparison of parameters, FLOPs, and running time on the Indian Pines dataset (top 3 metrics bolded; Ttr: training time, Tte: testing time).

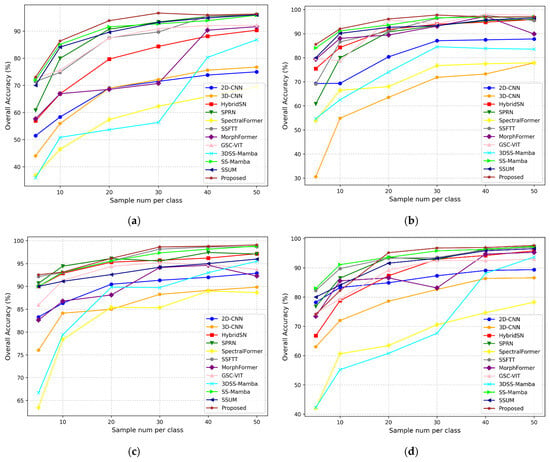

3.7. Comparison Under Different Numbers of Training Samples

In this section, we use OA as the evaluation metric to conduct experiments with varying numbers of training samples (5, 10, 20, 30, 40, and 50 samples per class) for both the proposed method and the comparison methods on the Indian Pines, Pavia University, Salinas, and Houston datasets. The experimental results are shown in Figure 16. On Indian Pines, our model achieves over 80% accuracy with just 10 samples per class and continues to improve steadily, outperforming all baselines at each sample level. The accuracy curve flattens beyond 30 samples, indicating a performance saturation point where additional data yields marginal gains. On Pavia University, the model achieves above 90% accuracy with only 10 samples per class, showing remarkable data efficiency. Compared with traditional CNNs and Transformer-based models (e.g., SpectralFormer), our method converges faster and saturates earlier, which is desirable in low-label scenarios. On Salinas, a relatively less challenging dataset due to strong spectral separability, our method reaches high accuracy early (about 95% accuracy at 20 samples), demonstrating early convergence and efficient feature learning. On the Houston dataset, as the number of training samples per class increases from 5 to 20, the proposed model demonstrates a steady improvement in classification accuracy, reflecting high data efficiency. Beyond 20 samples, the performance gradually stabilizes, highlighting the model’s robustness in complex urban hyperspectral scenarios. As the number of training samples increases, most methods show gradual improvement in classification performance. However, on the Pavia University dataset, GSC-ViT exhibits some fluctuations, and similar behavior is observed for MorphFormer on the Houston dataset. Although 3DSS-Mamba performs relatively poorly across all four datasets, its OA improves rapidly with increasing training samples. 
Due to its smaller parameter size, the proposed method reaches a performance plateau when the training samples exceed a certain number. Notably, our method demonstrates competitive performance across all four datasets under different training sample sizes, further validating the effectiveness of the algorithm.

Figure 16. Overall classification accuracy of various algorithms under different training sample sizes: (a) Indian Pines; (b) Pavia University; (c) Salinas; (d) Houston.

To further evaluate the performance of the proposed method under extremely low-sample conditions, we conduct five-shot classification experiments on three representative HSI datasets: Indian Pines, Pavia University, and Salinas. Specifically, only 5 labeled samples per class are used for training, while the remaining samples are used for testing. We compare our model with several representative few-shot learning methods, including RN-FSC [64], DCFSL [65], and SSDA [66]. As shown in Table 15, the proposed method achieves the highest overall classification accuracy on all three datasets, demonstrating superior performance and strong generalization capability in few-shot learning scenarios.

Table 15. Overall accuracy (%) under 5-shot setting (5 samples per class).

4. Discussion

4.1. Dataset Diversity and Its Impact on Generalization

The four HSI datasets selected in this study are widely used and representative in the field of hyperspectral image classification. These datasets differ significantly in terms of scene types, spectral-spatial complexity, number of classes, and degree of class imbalance, thus providing a comprehensive benchmark to evaluate the generalization ability of the proposed method.

The Indian Pines and Salinas datasets mainly represent agricultural scenes and contain numerous crop types with similar spectral characteristics but different semantic labels. For example, the Indian Pines dataset includes multiple soybean-related categories such as Soybean-Notill, Soybean-Mintill, and Soybean-Clean, while the Salinas dataset contains romaine lettuce classes at different growth stages, such as Lettuce_romaine_4wk, 5wk, 6wk, and 7wk. These categories are spectrally similar and semantically close, which significantly increases the classification difficulty and can easily lead to misclassification. Nevertheless, the proposed model achieves superior classification accuracy compared to other methods, even under such challenging conditions. This demonstrates that the NSE strategy can effectively leverage neighborhood spectral information to enhance feature representation, thereby improving the model’s ability to distinguish fine-grained semantic categories.

In contrast, the Pavia University and Houston datasets represent typical urban land cover scenes. As the number of classes increases from 9 (Pavia) to 15 (Houston), the classification accuracy drops from 97.74% to 96.78%. This decrease may be attributed to the increased semantic granularity and the more prominent class imbalance present in the Houston dataset. Although each class was limited to 30 training samples to ensure a fair comparison, the distribution of test samples remains imbalanced. As a result, the model may tend to favor classes with higher sample frequencies during prediction, which can negatively impact overall performance.
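To make the effect of an imbalanced test distribution concrete, the sketch below computes OA, AA, and the Kappa coefficient from a confusion matrix. The confusion matrix is hypothetical and is not taken from the reported experiments, and the function name `classification_metrics` is an illustrative choice. The code assumes that every class has at least one test sample.

```python
def classification_metrics(confusion):
    """Compute OA, AA, and Cohen's kappa from a square confusion matrix.

    confusion[i][j] is the number of test samples of true class i predicted as class j.
    Assumes every class has at least one test sample.
    """
    n_classes = len(confusion)
    row_sums = [sum(row) for row in confusion]
    col_sums = [sum(confusion[i][j] for i in range(n_classes)) for j in range(n_classes)]
    total = sum(row_sums)

    # Overall accuracy: fraction of all test samples that are classified correctly.
    oa = sum(confusion[i][i] for i in range(n_classes)) / total
    # Average accuracy: unweighted mean of the per-class accuracies.
    aa = sum(confusion[i][i] / row_sums[i] for i in range(n_classes)) / n_classes
    # Kappa: agreement corrected for the agreement expected by chance.
    expected = sum(row_sums[i] * col_sums[i] for i in range(n_classes)) / (total * total)
    kappa = (oa - expected) / (1 - expected)
    return oa, aa, kappa


# Hypothetical imbalanced test set: 900 samples of class 0 and 100 samples of class 1.
confusion = [[890, 10],
             [40, 60]]
oa, aa, kappa = classification_metrics(confusion)
print(f"OA={oa:.4f} AA={aa:.4f} Kappa={kappa:.4f}")  # OA=0.9500 AA=0.7944 Kappa=0.6795
```

In this toy case the frequent class dominates OA, whereas AA and Kappa expose the weak accuracy on the rare class. This is why OA alone can hide per-class errors when the test distribution is imbalanced.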

In summary, the number of classes, sample distribution, and semantic similarity are key factors influencing model generalization. The experimental results on these four representative datasets demonstrate that the proposed DADFMamba model exhibits strong robustness and generalization ability, maintaining high performance across various scene types and complex class semantics.

4.2. Practical Significance in Remote Sensing Applications

Although classification accuracy is an important metric for evaluating the performance of models, real-world applications often face more complex challenges, including data heterogeneity, semantic overlap between classes, class imbalance, limited labeled samples, and environmental interference. Therefore, assessing a model’s adaptability and practicality in real-world scenarios is equally crucial.

The proposed DADFMamba model achieves outstanding classification performance on multiple benchmark datasets and, more importantly, its NSE strategy demonstrates strong transferability and practical applicability. For example, in agricultural remote sensing tasks, datasets like Indian Pines and Salinas contain many crop types with highly similar spectral characteristics, making them difficult to distinguish using conventional models. DADFMamba effectively utilizes neighborhood contextual information to enhance semantic discrimination, showing great potential for applications such as fine-grained crop classification, pest and disease detection, and crop type estimation.

Moreover, urban remote sensing datasets like Pavia University and Houston typically involve challenges such as mixed pixels, shadow interference, and class imbalance. The proposed method integrates the Spatial-Structure-Aware Fusion Module (SSAFM) and the SNGFM, which provide robust feature extraction capabilities. This enables the model to better identify fine-grained urban land-cover types, making it applicable to tasks such as urban land-use classification, smart city planning, and emergency response management.

Furthermore, DADFMamba demonstrates strong generalization even under the constraint of only 30 training samples per class, which is particularly valuable for addressing the challenge of limited labeled data in real-world remote sensing applications. Future work could further explore its transferability across regions and time, thereby promoting the development of hyperspectral classification models toward practicality and generalization.

4.3. Outlook and Future Work

Although the proposed model achieves strong performance across several representative HSI classification benchmarks, there remain several promising directions for future research. First, real-world remote sensing scenarios often involve challenges such as class imbalance and label noise, which may adversely affect the model’s generalization and robustness. Future work could consider incorporating class-aware loss functions (e.g., focal loss, class-balanced loss), dynamic sampling strategies, or noise-robust training methods (e.g., noise-aware learning) to improve the model’s adaptability in complex annotation environments. Additionally, integrating the proposed approach with semi-supervised learning or transfer learning paradigms offers potential to further enhance its applicability in real-world, low-resource settings.
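The class-aware losses mentioned above can be illustrated with a short sketch. The snippet below implements the standard focal loss for a single sample and the "effective number of samples" weighting used by the class-balanced loss. It is a minimal illustration, not part of the proposed model: the function names, the logits, and the per-class sample counts are hypothetical, and the values of `gamma` and `beta` are common defaults rather than tuned settings.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def focal_loss(logits, target, gamma=2.0, class_weights=None):
    """Focal loss for one sample: -w_t * (1 - p_t)^gamma * log(p_t).

    With gamma = 0 and no class weights this reduces to the cross-entropy loss.
    """
    p_t = softmax(logits)[target]
    weight = 1.0 if class_weights is None else class_weights[target]
    # Clamp p_t to avoid log(0) for extremely confident wrong predictions.
    return -weight * (1.0 - p_t) ** gamma * math.log(max(p_t, 1e-12))


def class_balanced_weights(samples_per_class, beta=0.999):
    """Weights proportional to (1 - beta) / (1 - beta^n_c), scaled to sum to the class count."""
    raw = [(1.0 - beta) / (1.0 - beta ** n) for n in samples_per_class]
    scale = len(raw) / sum(raw)
    return [w * scale for w in raw]


# Hypothetical example: class 0 is predicted confidently, class 2 is a rare class.
logits = [2.0, 0.5, -1.0]
weights = class_balanced_weights([2000, 200, 20])
easy = focal_loss(logits, target=0, class_weights=weights)
hard = focal_loss(logits, target=2, class_weights=weights)
print(easy < hard)  # True: the poorly predicted rare-class sample dominates the loss
```

The modulating factor (1 - p_t)^gamma down-weights samples that are already classified well, while the class-balanced weights increase the contribution of rare classes. The two can be combined, as in the example, or used separately.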

Second, beyond hyperspectral imagery, alternative remote sensing data sources such as LiDAR and Synthetic Aperture Radar (SAR) provide unique advantages in certain scenarios, including better penetration capability and geometric information extraction. Although the current model is designed for optical hyperspectral data, its structure-aware fusion mechanism and feature enhancement strategies are inherently generalizable. Future research could explore adapting the method to multi-source or cross-modal remote sensing tasks, thereby evaluating its robustness and effectiveness under more diverse data conditions.

In summary, addressing practical challenges and extending the model across sensing modalities are valuable future directions that could further improve its applicability and engineering value in real-world remote sensing applications.

5. Conclusions

In this paper, we investigate the limitations of current Mamba-based methods in HSI classification. On one hand, Mamba is inherently constrained by the input data format: it typically reshapes the 2D spatial dimensions of HSIs into 1D sequential data and employs various scanning patterns to capture local spatial dependencies. However, adding scanning directions offers only limited help with complex spatial structures, and longer scanning paths increase computational cost. On the other hand, existing Mamba-based methods have not sufficiently addressed the spatial variability of HSIs, resulting in suboptimal classification performance. To overcome these limitations, we propose DADFMamba, a novel framework that jointly captures spatial and spectral structures while adaptively integrating both types of features. In the SSAFM, we introduce a structure-aware state fusion (SASF) equation that utilizes dilated convolutions to capture spatial structural dependencies. To alleviate the impact of spatial variability, the SNGFM introduces the NSE strategy and the GSS mechanism: NSE enhances target spectral features using neighboring spectral information, and GSS divides the enhanced spectral features into multiple groups to explore inter-group relationships and extract fine-grained spectral features. Finally, the FFD performs adaptive feature fusion. Extensive experiments on four benchmark HSI datasets verify the effectiveness of the proposed method: our approach achieves higher classification accuracy than existing methods on all datasets, with OA improvements of 2.93%, 1.14%, 0.28%, and 0.97%, respectively. These results indicate that the proposed method exhibits strong robustness and cross-domain generalization capability. Moreover, the model has a small number of parameters and low memory consumption, making it well-suited for real-world remote sensing applications.
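The two ideas summarized above for the SNGFM, enhancing the target spectrum with its neighbors and then splitting it into spectral groups, can be illustrated with a simplified stand-in. The sketch below is not the NSE or GSS formulation of the proposed model. It assumes a cosine-similarity-weighted average over the neighborhood and a split into contiguous band groups, and all function names and spectra are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two spectra (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def enhance_center_spectrum(center, neighbors):
    """Assumed stand-in for neighbor-based enhancement: softmax-weighted spectral average.

    Neighbors that are spectrally similar to the center pixel receive larger weights.
    """
    spectra = [center] + neighbors
    sims = [cosine(center, s) for s in spectra]
    m = max(sims)
    weights = [math.exp(s - m) for s in sims]
    total = sum(weights)
    weights = [w / total for w in weights]
    return [sum(w * s[b] for w, s in zip(weights, spectra)) for b in range(len(center))]


def split_into_groups(spectrum, n_groups):
    """Assumed stand-in for spectral grouping: contiguous, near-equal band groups."""
    base, extra = divmod(len(spectrum), n_groups)
    groups, start = [], 0
    for g in range(n_groups):
        size = base + (1 if g < extra else 0)
        groups.append(spectrum[start:start + size])
        start += size
    return groups


# Hypothetical 8-band spectra: two neighbors resemble the center, one does not.
center = [0.2, 0.4, 0.6, 0.8, 0.6, 0.4, 0.2, 0.1]
neighbors = [
    [0.2, 0.5, 0.6, 0.7, 0.6, 0.4, 0.2, 0.1],
    [0.3, 0.4, 0.5, 0.8, 0.6, 0.5, 0.2, 0.1],
    [0.9, 0.1, 0.1, 0.1, 0.2, 0.8, 0.9, 0.9],
]
enhanced = enhance_center_spectrum(center, neighbors)
groups = split_into_groups(enhanced, n_groups=4)
print([len(g) for g in groups])  # [2, 2, 2, 2]
```

In this simplified view, each group would then be treated as one token of a short spectral sequence, so that relations across groups can be modeled by a sequence model.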

However, the proposed method also has some limitations. It does not consider extreme few-shot classification scenarios (e.g., one-shot). Furthermore, real-world remote sensing scenes often suffer from class imbalance and label noise, which require further investigation. In future work, we plan to explore the application of Mamba-based methods under few-shot learning settings and more comprehensively address the challenges in realistic remote sensing environments. For example, incorporating LiDAR data, which is less affected by cloud cover, may help further enhance classification performance.

Author Contributions

Conceptualization, J.Z. and M.S.; formal analysis, M.S.; methodology, J.Z.; software, J.Z.; resources, J.Z. and S.C.; writing—original draft preparation, J.Z.; writing—review and editing, S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Provincial Natural Science Foundation of China (No. LH2019F038), the Basic Research Fund for the Provincial Universities in Heilongjiang Province (No. 145409323), and the Postgraduate Innovative Research Project of Qiqihar University (No. QUZLTS_CX2024049).

Data Availability Statement

The Indian Pines, Pavia University, and Salinas datasets are available at http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes (accessed on 1 September 2023). The Houston dataset is available at https://technical-community-spotlight.ieee.org/ieee-grss-announces-plans-for-2013-data-fusion-contest/ (accessed on 1 September 2020).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| HSI | hyperspectral image |
| SSM | state space model |
| DADFMamba | Dual-Aware Discriminative Fusion Mamba |
| SSAFM | Spatial-Structure-Aware Fusion Module |
| SNGFM | Spectral-Neighbor-Group Fusion Module |
| FFD | Feature Fusion Discriminator |
| SASF | structure-aware state fusion |
| NSE | neighbor spectrum enhancement |
| GSS | grouped spectrum scanning |
| GAP | global average pooling |
| ML | machine learning |
| DL | deep learning |
| SVM | support vector machine |
| CNN | convolutional neural network |
| GCN | graph convolutional network |
| PCA | principal component analysis |
| ICA | independent component analysis |
| SOTA | state-of-the-art |
| OA | overall accuracy |
| AA | average accuracy |
| Kappa | Kappa coefficient |
| Adam | adaptive moment estimation |
| FLOPs | floating point operations |

References

- Deng, Y.; Tang, S.; Chang, S.; Zhang, H.; Liu, D.; Wang, W. A novel scheme for range ambiguity suppression of spaceborne SAR based on underdetermined blind source separation. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5207915.

- Chang, S.; Deng, Y.; Zhang, Y.; Zhao, Q.; Wang, R.; Zhang, K. An advanced scheme for range ambiguity suppression of spaceborne SAR based on blind source separation. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5230112.

- Zhu, Q.; Deng, W.; Zheng, Z.; Zhong, Y.; Guan, Q.; Lin, W.; Zhang, L.; Li, D. A spectral-spatial-dependent global learning framework for insufficient and imbalanced hyperspectral image classification. IEEE Trans. Cybern. 2021, 52, 11709–11723.

- Siddique, N.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V. U-Net and Its Variants for Medical Image Segmentation: A Review of Theory and Applications. IEEE Access 2021, 9, 82031–82057.

- Khan, U.; Paheding, S.; Elkin, C.P.; Devabhaktuni, V.K. Trends in Deep Learning for Medical Hyperspectral Image Analysis. IEEE Access 2021, 9, 79534–79548.

- Yang, G.; Huang, K.; Sun, W.; Meng, X.; Mao, D.; Ge, Y. Enhanced mangrove vegetation index based on hyperspectral images for mapping mangrove. ISPRS J. Photogramm. Remote Sens. 2022, 189, 236–254.

- Peyghambari, S.; Zhang, Y. Hyperspectral remote sensing in lithological mapping, mineral exploration, and environmental geology: An updated review. J. Appl. Remote Sens. 2021, 15, 031501.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Haut, J.; Paoletti, M.; Paz-Gallardo, A. Cloud implementation of logistic regression for hyperspectral image classification. In Proceedings of the 17th International Conference Computational and Mathematical Methods in Science and Engineering, Rota, Spain, 4–8 July 2017; pp. 1063–2321.

- Ham, J.; Chen, Y.; Crawford, M.M. Investigation of the random forest framework for classification of hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2005, 43, 492–501.

- Haut, J.M.; Paoletti, M.; Plaza, J. Cloud implementation of the K-means algorithm for hyperspectral image analysis. J. Supercomput. 2017, 73, 514–529.

- Li, Z.; Liu, F.; Yang, W. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

- Krichen, M. Convolutional neural networks: A survey. Computers 2023, 12, 151.

- Taye, M.M. Theoretical understanding of convolutional neural network: Concepts, architectures, applications, future directions. Computation 2023, 11, 52.

- Zhang, S.; Tong, H.; Xu, J. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 11.

- Wu, F.; Souza, A.; Zhang, T.; Fifty, C.; Yu, T.; Weinberger, K. Simplifying graph convolutional networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6861–6871.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30, pp. 1–11.

- Gu, A. Modeling Sequences with Structured State Spaces; Stanford University Press: Stanford, CA, USA, 2023.

- Gu, A.; Goel, K.; Ré, C. Efficiently modeling long sequences with structured state spaces. arXiv 2021, arXiv:2111.00396.

- Gu, A.; Dao, T. Mamba: Linear-time sequence modeling with selective state spaces. arXiv 2023, arXiv:2312.00752.

- Yao, J.; Hong, D.; Li, C.; Chanussot, J. SpectralMamba: Efficient mamba for hyperspectral image classification. arXiv 2024, arXiv:2404.08489.

- He, Y.; Tu, B.; Liu, B.; Li, J.; Plaza, A. 3DSS-Mamba: 3D-spectral-spatial mamba for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5534216.