Abstract

Remote sensing (RS) data can be attained from different sources, such as drones, satellites, aerial platforms, or street-level cameras. Each source has its own characteristics, including the spectral bands, spatial resolution, and temporal coverage, which may affect the performance of the vehicle detection algorithm. Vehicle detection for urban applications using remote sensing imagery (RSI) is a difficult but significant task with many real-time applications. Due to its potential in different sectors, including traffic management, urban planning, environmental monitoring, and defense, the detection of vehicles from RS data, such as aerial or satellite imagery, has received greater emphasis. Machine learning (ML), especially deep learning (DL), has proven to be effective in vehicle detection tasks. A convolutional neural network (CNN) is widely utilized to detect vehicles and automatically learn features from the input images. This study develops the Improved Deep Learning-Based Vehicle Detection for Urban Applications using Remote Sensing Imagery (IDLVD-UARSI) technique. The major aim of the IDLVD-UARSI method emphasizes the recognition and classification of vehicle targets on RSI using a hyperparameter-tuned DL model. To achieve this, the IDLVD-UARSI algorithm utilizes an improved RefineDet model for the vehicle detection and classification process. Once the vehicles are detected, the classification process takes place using the convolutional autoencoder (CAE) model. Finally, a Quantum-Based Dwarf Mongoose Optimization (QDMO) algorithm is applied to ensure an optimal hyperparameter tuning process, demonstrating the novelty of the work. The simulation results of the IDLVD-UARSI technique are obtained on a benchmark vehicle database. The simulation values indicate that the IDLVD-UARSI technique outperforms the other recent DL models, with maximum accuracy of 97.89% and 98.69% on the VEDAI and ISPRS Potsdam databases, respectively.

1. Introduction

Driven by the challenges of rapid urbanization, urban cities are determined to apply modern sociotechnical transformations and change into smart cities. However, the success of these transformations depends heavily on detailed knowledge of spatial–temporal dynamics [1]. There has been much research discussion in the of science and engineering communities about the application of machine learning (ML) and artificial intelligence (AI) towards big data analytics and digitization, owing to the expansion of computer vision (CV) techniques [2]. Computer technology, used to perform tasks such as segmentation, detection, and the classification of remote sensing imagery (RSI), has become one of the major research topics. Among them, vehicle detection in RSI plays a major role in defense, urban vehicle supervision, safety-assisted driving, traffic planning, and so on. Fast and accurate detection approaches are essential for such tasks [3]. Compared with other detection targets in RSI (aeroplanes, buildings, and ships), the tasks of target detection become increasingly complex because there exist fewer target pixels, complex background interference data, and an irregular distribution of vehicle targets [4]. The detection of vehicles in RSI has been investigated for many years and has attained promising outcomes.

The deep learning (DL) methods and the traditional ML approaches are the two classes of target detection techniques using aerial images. The low-level image features, including color, corner, edge, shape, and texture, are extracted for classification and training in the ML approaches [5]. Researchers have introduced a target detection architecture, which uses a local binary pattern (LBP) in combination with histogram of gradients (HOG), for the detection of vehicle targets. The color difference is used for the recognition of blob-like areas extracted from grayscale and color features [6]. Moreover, there are CV technologies that use the optical flow and frame difference for the detection of moving vehicles. Vehicle detection aims to identify each instance of the vehicle in RSI. In former approaches, researchers have developed and extracted features and later categorized them to accomplish target detection [7]. The basic concept is to extract vehicle features and utilize ML methods for classification. RSI has been extensively utilized in several domains, namely urban and rural planning, disaster monitoring, national defense construction, agricultural management, and so on [8]. There also exists a huge gap between the detection performance and accuracy compared to the tremendous growth of DL techniques. Network models based on DL approaches have been prominently employed for large computer vision domains and have shown effective results [9]. Two categories of detection networks have been formed and continuously enhanced, single-phase networks and two-phase networks, with the progression of hardware technology and big data [10]. This study develops the Improved Deep Learning-Based Vehicle Detection for Urban Applications using Remote Sensing Imagery (IDLVD-UARSI) technique. The major aim of the IDLVD-UARSI method emphasizes the recognition and classification of vehicles in RSI. To achieve this, the IDLVD-UARSI method utilizes an improved RefineDet model for the vehicle detection and classification process. Once the vehicles are detected, the classification process takes place using the convolutional autoencoder (CAE) model. Finally, a Quantum-Based Dwarf Mongoose Optimization (QDMO) algorithm is applied to ensure an optimal hyperparameter tuning process and it helps in achieving improved performance. The simulation results of the IDLVD-UARSI technique are obtained on benchmark databases. The major contributions of the paper are given as follows.

- The IDLVD-UARSI technique significantly advances vehicle detection in urban environments using an improved RefineDet model, CAE classification, and QDMO-based hyperparameter tuning. To the best of our knowledge, the IDLVD-UARSI technique has never existed in the literature.

- It introduces an improved RefineDet model tailored to the challenges of urban contexts, resulting in more accurate and robust vehicle detection. Thus, CAEs can effectively extract and improve the accuracy of vehicle classification.

- The application of the Quantum-Based Dwarf Mongoose Optimization (QDMO) algorithm for hyperparameter tuning is a unique and innovative contribution. QDMO fine-tunes the model’s parameters, optimizing the overall performance of the vehicle detection and classification process.

2. Related Works

Tan et al. [11] utilized a DL method to discover vehicles in high-resolution satellite RSI. Initially, the images were categorized by employing the AlexNet model, and then the vehicle target identification capability of the Faster R-CNN technique was verified. In [12], the authors developed a backbone architecture with a context data component and attention module to detect vehicles from a real image, which allowed the extraction feature network to improve the deployment of context data and prominent areas. Rafique et al. [13] presented a new vehicle recognition and segmentation technique for the monitoring of smart traffic, which employed a CNN for real image classification. Later, the identified vehicles were grouped into distinct subgroups. Eventually, detected vehicles were monitored by kernelized filter and Kalman filter (KF)-based methods.

In [14], the authors selected a single-phase DL-based target detection approach for research depending on the model’s real-time processing necessities. Moreover, the study improved the YOLOv4 architecture and introduced a novel technique. Primarily, a classification setting of the non-maximal suppression threshold was developed to improve the performance without impacting the speed. Secondarily, the authors analyzed the anchor frame allocation difficulty in YOLOv4 and developed two allocation systems. The authors [15] suggested an unsupervised domain adaptation algorithm that does not need trained data and can retain identification outcomes in the target domain at a minimal cost. The authors enhanced adversative domain adaptation by employing rehabilitation loss to enable the learning of semantic features. Xiao et al. [16] developed a bi-directional feature fusion module (BFFM) to construct a multiscale feature pyramid to detect targets at various measures. A feature decoupling model (FDM) was developed that uses the fusion of channel and spatial attention. In addition, a localization refinement module (LRM) was developed to automatically optimize the anchor box constraints to attain the spatial arrangement of anchor boxes and object regression features.

In [17], the authors recommended an innovative Multi-Scale and Occlusion Aware Network (MSOA-Net) for the classification of UAV-based vehicles. The MSFAF-Net with self-adaptive FFM was developed. In RATH-Net, a self-attention module is suggested to guide the location-sensitive sub-networks. Cao et al. [18] presented a novel OD method that makes improvements to the YOLOv5 network. The integration of RepConv, BiFPN, Transformer, and Encoder techniques into the unique YOLOv5 results in increased accuracy of detection. The C3GAM algorithm was developed by presenting the GAM attention module to overcome the issue of difficult contextual regions.

In [19], the authors focused on real-time smaller vehicle detection for UAVs from RSI and presented a depthwise-separable attention-guided network (DAGN) dependent on YOLOv3. Primarily, the authors integrated the feature concatenation and attention block to provide the model with a great capability to separate essential and irrelevant features. Afterwards, the authors enhanced the loss function and candidate integration approach from YOLOv3. Zheng et al. [20] presented a learning approach termed Vehicle Synthesis GANs (VS-GANs) for the generation of annotated vehicles in RSI. This approach rapidly creates higher-quality annotated vehicle data instances and significantly assists in the training of vehicle detectors. Yu et al. [21] examined a convolution CapsNet to detect vehicles in higher-resolution RSI. Primarily, a testing image was segmented into super-pixels to generate meaningful and non-redundant patches. Afterwards, these patches were input into a convolution CapsNet to label them as vehicles or background.

Although the employment of DL approaches for vehicle classification from RSI has demonstrated its ability, there is a lack of widespread research on automated hyperparameter tuning approaches tailored specifically to this application. Considering and developing hyperparameter optimization systems that are effective for RSI is important. Several researchers have focused on certain databases for the testing and training of DL approaches. However, there is a need for research that explores the generalized methods and hyperparameters across different RS databases. Emerging methods and hyperparameter settings, which are adjusted to distinct data sources and imaging conditions, are major problems. Resolving these research gaps will lead to more effective, correct, and adjustable DL-based vehicle classification approaches for RSI applications. It also supports the broader field of RSI by developing the combination of DL approaches and optimizer systems.

3. The Proposed Model

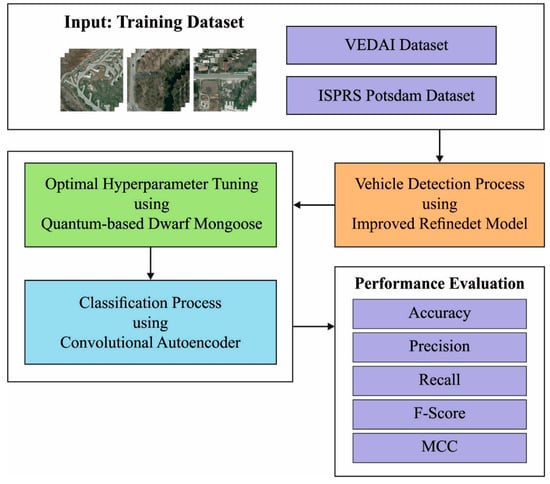

In this study, we focus on the design and development of the IDLVD-UARSI method using RSI. The major aim of the IDLVD-UARSI approach emphasizes the detection and classification of vehicles in RSI. In the IDLVD-UARSI technique, three major processes are involved, namely improved RefineDet vehicle detection, CAE-based classification, and QDMO-based hyperparameter tuning. Figure 1 describes the overall workflow of the IDLVD-UARSI method.

Figure 1.

Overall flow of IDLVD-UARSI algorithm.

3.1. Vehicle Detector: Improved RefineDet Model

The improved RefineDet approach is applied for vehicle target detection. Improved RefineDet uses the VGG16 architecture as a backbone network, which constructs a set of anchors with an aspect ratio and scale from the feature map through the anchor generative model of RPN. After two classifications and regressions [22], it attains a certain amount of object bounding boxes, along with the existence probabilities of the various classes in the bounding box. The object detection module (ODM), anchor refinement module (ARM), and transfer connection block (TCB) are the three modules of the proposed algorithm. Lastly, the final regression and classification are achieved by non-maximum suppression (NMS).

(1) The ARM module primarily includes additional convolution layers and the backbone network, VGG16. Mainly, a negative anchor filter, feature extraction, anchor generation, and anchor refinement are implemented by the ARM module. The negative anchor filter can effectively extract the negative anchor box and mitigate the sample imbalance. Then, the fused feature is transformed into lower-level features via TCBs, such that the low-level mapping feature exploited for detection has a high-level semantic dataset and enhances the outcomes of floating objects.

(2) The TCB module interconnects ODM and ARM and then transfers the ARM data to ODM. Like the architecture of FPN, the nearby TCB is connected to increase the semantic dataset of lower-level features and realize the feature fusion of high- and low-level features.

(3) The ODM module primarily includes prediction layers (classification and regression layers, viz., 3 × 3 convolution kernels) and the output of TCBs. The outcome of the prediction layer is the specific category and the coordinate offset relative to the anchor refinement boxes. The anchor refinement boxes are exploited as input for the regression and classification, and, based on NMS, the final bounding box is selected.

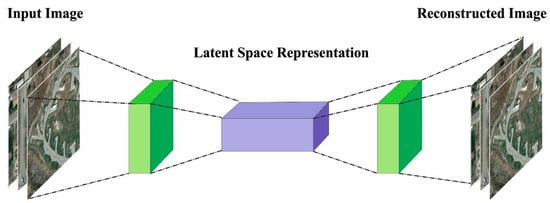

3.2. Vehicle Classification: CAE Model

In this work, the CAE model is utilized for the identification and classification of vehicles. As a variant of AE, CAE integrates the capability of autoencoder (AE) to represent the input dataset and the capability of CNNs to effectively extract image features [23]. An encoder and decoder are two NN blocks used to recreate the input. The encoded block is used to encode the input into hidden outcome , which is a compressed form of input. The dimension of the hidden outcome is smaller than that of the input . The decoder relies on the hidden output and generates the output, , to the input, . AE is trained to minimize the reconstructed loss of the network for the proper regeneration of the original input. Figure 2 displays the infrastructure of CAE.

Figure 2.

CAE structure.

AE comprises an encoder that compresses the information and decoder that reconstructs the input as follows.

where and denote the trained parameters of the encoder and decoder, correspondingly. By training AE, this parameter is set to minimize the cost function.

Here, indicates a reconstructed loss function, i.e., a binary cross-entropy or mean squared error averaged over the overall amount of samples, which are evaluated as follows:

In Equation (3), to prevent the network from learning the identity function, the regularization term is essential for the DNN, thereby increasing the generalization ability. In CAE, the pooling layer is used to prevent the overfitting of the network and enhances the generalization capability of the method. Therefore, the reconstructed loss is exploited as a cost function. The encoded CAE comprises convolution and pooling layers for the fully connected (FC) and network layers. A hidden layer is signified as an FC layer (1D layer). The decoder mainly includes a sequence of transposed convolutional layers with FC layers. However, transposed convolution tends to produce checkerboard artifacts and is replaced with the alternative layer of upsampling and convolution. Upsampling, convolution, and pooling are three major operations of CAE.

3.3. Hyperparameter Tuning: QDMO Algorithm

Lastly, the QDMO method is exploited for the optimum selection of the parameters of the CAE architecture. DMO was developed in [24] as an MH method that mimics the behavior of a DM looking for rich food. The scout group stage takes place when the new sleeping mound (SM) (i.e., food source) is found depending on the old SM. The DMO method initializes the population of mongoose () and begins with the generation of the matrix dimension x, where the problem dimension () is presented in a column, and the size of the population () is signified in rows.

where () represents the component of problem dimensions at population solution.

The value is based on Equation (7) as a uniformly distributed random integer constrained by and , the lower and upper limitations of the problem. Next, the fitness value of the solution estimates its probability, and this procedure is expressed as follows:

where , the number of mongoose, is upgraded to

In Equation (9), the number of babysitters can be represented as , and the female alpha exploits different vocalization () to communicate with others. Therefore, the DMO exploits Equation (10) to update the performance.

where represents a random integer in for each iteration. Moreover, the SM can be upgraded using Equation (11).

After calculating the average SM , the formula is represented as follows:

In the scouting phase, the new location of the candidate for food or SM is scouted, while the existing SM is ignored by the nomadic custom [25]. The movement regulating parameter , a movement vector (), a stochastic multiplier () and the existing position of the are used to navigate the scouted location for food and SMs:

The new scouted location (X) and the future movement are simulated by the success and failure of the growth of a complete solution based on the mongoose group. The mongoose movement (M) determined in Equation (14) and handled by is evaluated in Equation (15). At first, the collective–volitive parameters allow rapid exploration during the search process, but, utilizing each iteration of the work, we gradually shift towards defining a new region to exploit an effective one.

The alpha group shifts its position with a babysitter in the evening or after midday, which provides the possibility to explore the younger colony. The population size may affect the ratio of caretakers to foraging mongoose. Equation (16) is modeled to imitate the exchange process in the early evening and late afternoon.

The initial weight is set to 0 to ensure that the average weight of the alpha group is minimized. This procedure ends when the iteration count is reached and returns the best solution. In QBO, the features are considered as a quantum bit, where defines the superposition of a binary value in the range of [0, 1]. The QDMO technique is based on the quantum-based optimization (QBO) approach. A binary number represents the feature selection (1) or removed (0) [25]. The following equation is used to generate the arithmetical modelling of the .

In Equation (17), and characterize the probability values of the -bit as 0 and 1. The parameter indicates the angle of , and it can be upgraded through :

Now, the rotation angle of -bit of -solution is represented as . QBO is used to optimize the ability to balance among the exploitation and exploration of DMA while searching for better performance. At first, agents represent the population generation. Each performance has features and -bits:

The superposition of probability for the features selected or not is characterized by . The primary goal of QDMO is to upgrade the agents until they meet the final conditions:

Here, shows the random integer. The fitness selection is an important element in the QDMO method. An encoder solution is applied to measure the goodness (aptitude) of the candidate results. Here, the accuracy values are the primary condition employed in developing an FF.

Here, and signify the true and false positive values.

4. Results and Discussion

The performance of the IDLVD-UARSI method was tested using two databases: the VEDAI database [26] and the ISPRS Potsdam Database [27]. The VEDAI dataset comprises RGB tiles (1024 × 1024 px) and the associated infrared (IR) images at a 12.5 cm spatial resolution. For each tile, annotations are provided with the vehicle class, the coordinates of the center, and the four corners of the bounding polygons for all the vehicles in the image. VEDAI is used to train a CNN for vehicle classification. The ISPRS Potsdam Semantic labeling dataset is composed of 38 ortho-rectified aerial IRRGB images (6000 × 6000 px) at a 5 cm spatial resolution, taken over the city of Potsdam (Germany). A comprehensive pixel-level ground truth is provided for 24 tiles. The VEDAI database includes 3687 samples and the ISPRS Potsdam database includes 2244 samples, as defined in Table 1 and Table 2. Figure 3 demonstrates the sample visualization results offered by the proposed model. The figure illustrates that the proposed model properly classifies different classes of vehicles.

Table 1.

Description of the VEDAI database.

Table 2.

Description of ISPRS Potsdam database.

Figure 3.

Sample results. (a) Input images and (b) classified images.

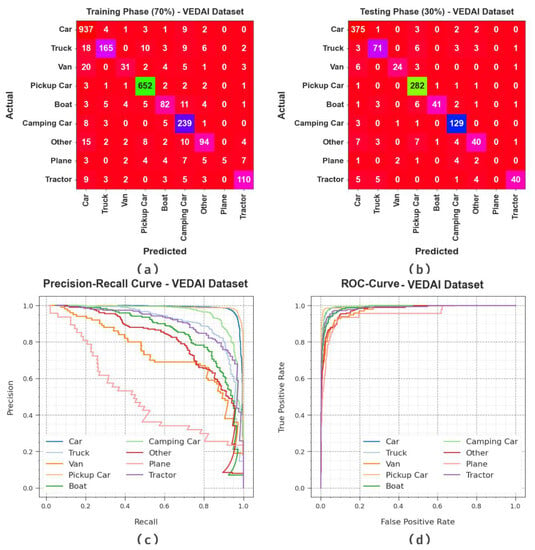

Figure 4 exhibits the classifier outcomes of the IDLVD-UARSI method on the VEDAI database. Figure 4a,b describe the confusion matrix presented by the IDLVD-UARSI technique at 70:30 of the TR set/TS set. The outcome indicates that the IDLVD-UARSI method has detected and classified all nine class labels accurately. Similarly, Figure 4c exhibits the PR examination of the IDLVD-UARSI system. The figure shows that the IDLVD-UARSI technique has obtained the highest PR outcomes in all nine classes. Finally, Figure 4d shows the ROC analysis of the IDLVD-UARSI method. It shows that the IDLVD-UARSI system has resulted in promising outcomes with high ROC performance on all nine class labels.

Figure 4.

Performances on VEDAI database. (a,b) Confusion matrices, (c) PR curve, and (d) ROC.

The vehicle recognition results of the IDLVD-UARSI technique are examined on the VEDAI database in Table 3. The outcomes show that the IDLVD-UARSI system properly recognizes the vehicles. On 70% of the TR set, the IDLVD-UARSI method gives average , , , , and MCC of 97.72%, 85.91%, 72.09%, 74.86%, and 75.26%, correspondingly. Moreover, on 30% of the TS set, the IDLVD-UARSI technique offers average , , , , and MCC of 97.89%, 78.78%, 72.86%, 75.35%, and 74.42%, correspondingly.

Table 3.

Vehicle recognition outcomes of IDLVD-UARSI technique on VEDAI database.

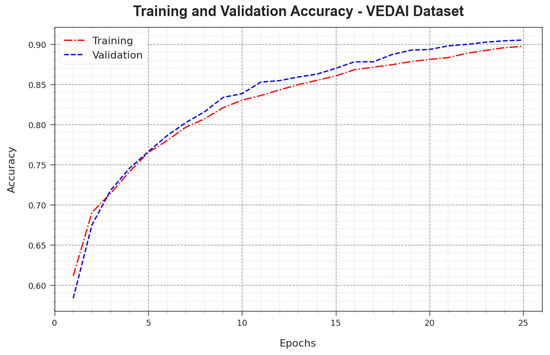

Figure 5 displays the training accuracy and of the IDLVD-UARSI technique on the VEDAI database. The is determined by the evaluation of the IDLVD-UARSI technique on the TR database, whereas the is computed by evaluating the performance on a separate testing database. The results show that and increase with an increase in epochs. Accordingly, the performance of the IDLVD-UARSI technique is improved on the TR and TS databases with the maximum number of epochs.

Figure 5.

curve of IDLVD-UARSI technique on VEDAI database.

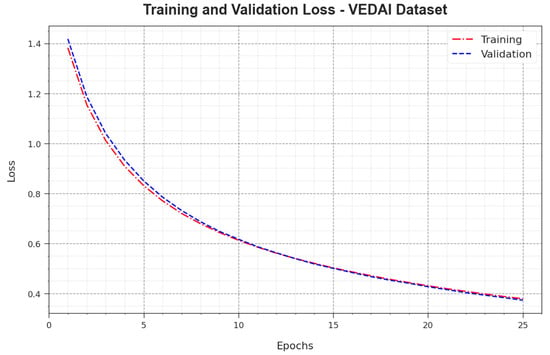

In Figure 6, the and outcomes of the IDLVD-UARSI method on the VEDAI database are shown. The defines the error among the predictive performance and original values on the TR data. The represents a measure of the performance of the IDLVD-UARSI technique on individual validation data. The results show that the and tend to decrease with rising epochs. This indicates the enhanced performance of the IDLVD-UARSI technique and its capability to generate accurate classification. The reduced values of and reveal the enhanced performance of the IDLVD-UARSI technique in capturing patterns and relationships.

Figure 6.

Loss curve of IDLVD-UARSI technique on VEDAI database.

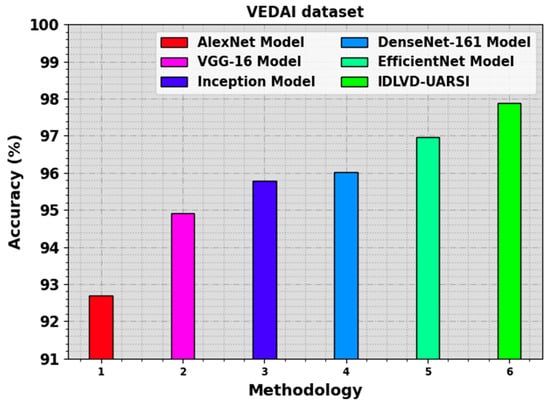

The comparison results of the IDLVD-UARSI technique on the VEDAI database are reported in Table 4 and Figure 7 [28,29]. The experimental outcomes show that the AlexNet and VGG16 techniques have poor performance. In addition, the Inception, DenseNet-161, and EfficientNet models show moderately improved results. Nevertheless, the IDLVD-UARSI technique exhibits improved results, with maximum of 97.89%.

Table 4.

outcomes of IDLVD-UARSI technique and other methods on VEDAI database.

Figure 7.

outcome of IDLVD-UARSI technique on VEDAI database.

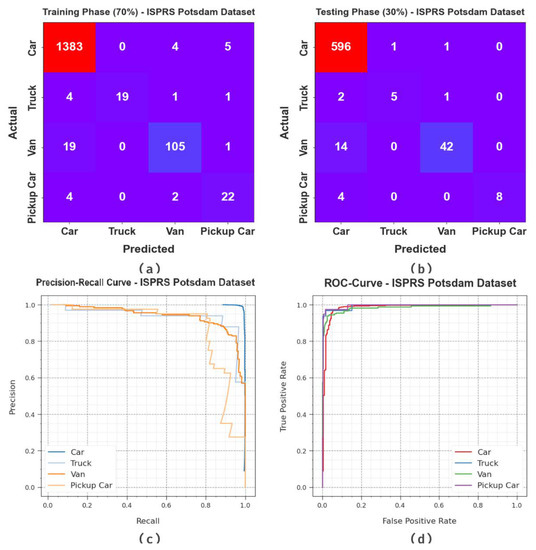

Figure 8 shows the classifier outcomes of the IDLVD-UARSI method on the ISPRS Potsdam database. Figure 8a,b describe the confusion matrix presented by the IDLVD-UARSI method at 70:30 of the TR set/TS set. The outcome indicates that the IDLVD-UARSI method has detected and classified all four class labels accurately. Similarly, Figure 8c demonstrates the PR examination of the IDLVD-UARSI system. The figure shows that the IDLVD-UARSI method has gained the maximum PR performance on all four classes. Finally, Figure 8d shows the ROC analysis of the IDLVD-UARSI method. The outcome reveals that the IDLVD-UARSI system has resulted in promising outcomes, with high ROC values on all four class labels.

Figure 8.

Performances on ISPRS Potsdam database. (a,b) Confusion matrices, (c) PR curve, and (d) ROC.

The vehicle recognition results of the IDLVD-UARSI technique are examined on the ISPRS Potsdam database in Table 5. The experimental outcomes show that the IDLVD-UARSI method properly detected the vehicles. On 70% of the TR set, the IDLVD-UARSI system shows average , , , , and MCC of 98.69%, 91.92%, 84.48%, 87.72%, and 84.96%, respectively. Moreover, on 30% of the TS set, the IDLVD-UARSI technique offers average , , , , and MCC of 98.29%, 93.89%, 75.96%, 83.40%, and 79.86%, correspondingly.

Table 5.

Vehicle recognition outcomes of IDLVD-UARSI technique on ISPRS Potsdam database.

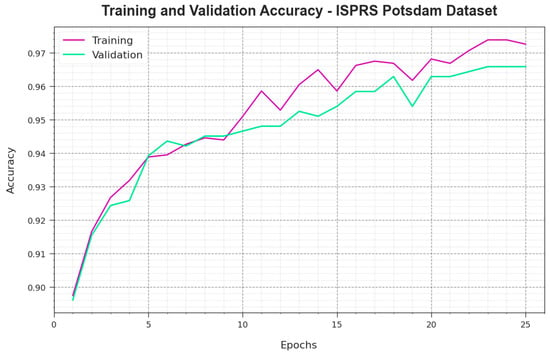

Figure 9 presents the training accuracy and of the IDLVD-UARSI technique on the ISPRS Potsdam database. The is determined by the evaluation of the IDLVD-UARSI technique on the TR database, whereas the is computed by evaluating the performance on a separate testing database. The results show that and increase with an increase in epochs. Accordingly, the performance of the IDLVD-UARSI technique is improved on the TR and TS databases with a rise in the number of epochs.

Figure 9.

curve of IDLVD-UARSI method on ISPRS Potsdam database.

In Figure 10, the and results of the IDLVD-UARSI technique on the ISPRS Potsdam database are shown. The defines the error among the predictive performance and original values on the TR data. The represents a measure of the performance of the IDLVD-UARSI method on individual validation data. The results indicate that the and tend to decrease with rising epochs. This highlights the enhanced performance of the IDLVD-UARSI technique and its capability to generate accurate classification. The reduced values of and reveal the enhanced performance of the IDLVD-UARSI technique in capturing patterns and relationships.

Figure 10.

Loss curve of IDLVD-UARSI method on ISPRS Potsdam database.

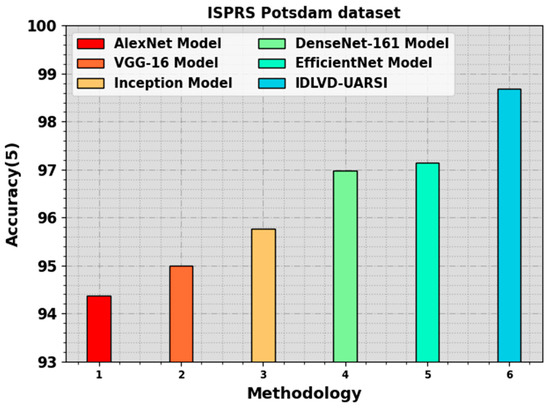

The comparison results of the IDLVD-UARSI technique on the ISPRS Potsdam database are reported in Table 6 and Figure 11. The experimental outcomes show that the AlexNet and VGG16 approaches have poor performance. In addition, the Inception, DenseNet-161, and EfficientNet models show moderately improved results. Nevertheless, the IDLVD-UARSI technique exhibits improved results, with maximum of 98.69%.

Table 6.

outcomes of IDLVD-UARSI technique and other methods on ISPRS Potsdam database.

Figure 11.

outcome of IDLVD-UARSI technique on ISPRS Potsdam database.

These performance results highlight the superior outcomes of the IDLVD-UARSI methodology compared with other methods. The improved solution of the IDLVD-UARSI algorithm is due to the employment of QDMO-based hyperparameter tuning, which appropriately chooses the optimum values for the hyperparameters of the provided CAE approach. Hyperparameters are settings that cannot be learned in the training but are supposed to be set earlier in training. They have a major impact on the model performance, and choosing an optimum solution leads to the best accuracy. By integrating QDMO-based hyperparameter tuning, the IDLVD-UARSI system obtains optimum solutions by focusing on better settings for the method. These outcomes ensure the greater solution of the IDLVD-UARSI methodology compared with other existing systems.

5. Conclusions

In this study, we focus on the design and development of the IDLVD-UARSI method using RSI. The major aim of the IDLVD-UARSI method focuses on the detection and classification of vehicle targets in RSI. In the IDLVD-UARSI technique, three major processes are involved, namely improved RefineDet vehicle detection, CAE-based classification, and QDMO-based hyperparameter tuning. The design of the QDMO technique helps in the optimal hyperparameter tuning process and it aids in achieving improved performance. The simulation results of the IDLVD-UARSI technique are obtained on a benchmark vehicle database. The simulation values indicate that the IDLVD-UARSI algorithm outperforms other recent DL models under various metrics. In the future, we aim to extend the IDLVD-UARSI technique by the use of feature fusion approaches. Moreover, the combination of multi-modal data sources such as multi-spectral images and LiDAR data offers richer data for classification. Examining systems to efficiently fuse and exploit these several data types from DL approaches will lead to better accuracy. Furthermore, the development of lightweight and effective DL techniques appropriate for utilization in resource-constrained remote sensing environments like satellites or drones is critical for real-time and on-device classification.

Author Contributions

Conceptualization, M.R. and H.A.A.; Methodology, M.R.; Software, A.M.A. and A.O.K. (Alaa O. Khadidos); Validation, A.O.K. (Adil O. Khadidos), A.M.A., K.H.A. and A.O.K. (Alaa O. Khadidos); Formal analysis, A.M.A. and M.R.; Investigation, H.A.A.; Resources, H.A.A., A.M.A. and K.H.A.; Data curation, H.A.A., A.O.K. (Adil O. Khadidos), K.H.A. and A.O.K. (Alaa O. Khadidos); Writing—original draft, M.R., A.O.K. (Alaa O. Khadidos) and H.A.A.; Writing—review & editing, K.H.A. and A.M.A.; Visualization, A.O.K. (Adil O. Khadidos) and A.O.K. (Alaa O. Khadidos); Project administration, M.R.; Funding acquisition, H.A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was funded by Institutional Fund Projects under grant no. (IFPIP: 1391-980-1443). Therefore, the authors gratefully acknowledge technical and financial support provided by the Ministry of Education and Deanship of Scientific Research (DSR), King Abdulaziz University (KAU), Jeddah, Saudi Arabia.

Data Availability Statement

Data sharing is not applicable to this article as no datasets were generated during the current study.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shi, F.; Zhang, T.; Zhang, T. Orientation-aware vehicle detection in aerial images via an anchor-free object detection approach. IEEE Trans. Geosci. Remote Sens. 2020, 59, 5221–5233. [Google Scholar] [CrossRef]

- Qiu, H.; Li, H.; Wu, Q.; Meng, F.; Ngan, K.N.; Shi, H. A2RMNet: Adaptively aspect ratio multi-scale network for object detection in remote sensing images. Remote Sens. 2019, 11, 1594. [Google Scholar] [CrossRef]

- Ming, Q.; Miao, L.; Zhou, Z.; Dong, Y. CFC-Net: A critical feature capturing network for arbitrary-oriented object detection in remote-sensing images. arXiv 2021, arXiv:2101.06849. [Google Scholar] [CrossRef]

- Chen, J.; Wan, L.; Zhu, J.; Xu, G.; Deng, M. Multi-scale spatial and channel-wise attention for improving object detection in remote sensing imagery. IEEE Geosci. Remote Sens. Lett. 2019, 17, 681–685. [Google Scholar] [CrossRef]

- Li, X.; Deng, J.; Fang, Y. Few-shot object detection on remote sensing images. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–14. [Google Scholar] [CrossRef]

- Rabbi, J.; Ray, N.; Schubert, M.; Chowdhury, S.; Chao, D. Small-object detection in remote sensing images with end-to-end edge-enhanced GAN and object detector network. Remote Sens. 2020, 12, 1432. [Google Scholar] [CrossRef]

- Shen, J.; Liu, N.; Sun, H.; Zhou, H. Vehicle detection in aerial images based on lightweight deep convolutional network and generative adversarial network. IEEE Access 2019, 7, 148119–148130. [Google Scholar] [CrossRef]

- Ragab, M. Leveraging mayfly optimization with deep learning for secure remote sensing scene image classification. Comput. Electr. Eng. 2023, 108, 108672. [Google Scholar] [CrossRef]

- Tagab, M.; Ashary, E.B.; Aljedaibi, W.H.; Alzahrani, I.R.; Kumar, A.; Gupta, D.; Mansour, R.F. A novel metaheuristic with adaptive neuro-fuzzy inference system for decision making on autonomous unmanned aerial vehicle systems. ISA Trans. 2023, 132, 16–23. [Google Scholar]

- Ma, B.; Liu, Z.; Jiang, F.; Yan, Y.; Yuan, J.; Bu, S. Vehicle detection in aerial images using rotation-invariant cascaded forest. IEEE Access 2019, 7, 59613–59623. [Google Scholar] [CrossRef]

- Tan, Q.; Ling, J.; Hu, J.; Qin, X.; Hu, J. Vehicle detection in high-resolution satellite remote sensing images based on deep learning. IEEE Access 2020, 8, 153394–153402. [Google Scholar] [CrossRef]

- Shen, J.; Liu, N.; Sun, H.; Li, D.; Zhang, Y. Lightweight deep network with context information and attention mechanism for vehicle detection in the aerial image. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5. [Google Scholar] [CrossRef]

- Rafique, A.A.; Al-Rasheed, A.; Ksibi, A.; Ayadi, M.; Jalal, A.; Alnowaiser, K.; Meshref, H.; Shorfuzzaman, M.; Gochoo, M.; Park, J. Smart Traffic Monitoring Through Pyramid Pooling Vehicle Detection and Filter-Based Tracking on Aerial Images. IEEE Access 2023, 11, 2993–3007. [Google Scholar] [CrossRef]

- Zakria, Z.; Deng, J.; Kumar, R.; Khokhar, M.S.; Cai, J.; Kumar, J. Multiscale and direction target detecting in remote sensing images via modified YOLO-v4. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 1039–1048. [Google Scholar] [CrossRef]

- Koga, Y.; Miyazaki, H.; Shibasaki, R. A method for vehicle detection in high-resolution satellite images that uses a region-based object detector and unsupervised domain adaptation. Remote Sens. 2020, 12, 575. [Google Scholar] [CrossRef]

- Xiao, J.; Yao, Y.; Zhou, J.; Guo, H.; Yu, Q.; Wang, Y.F. FDLR-Net: A feature decoupling and localization refinement network for object detection in remote sensing images. Expert Syst. Appl. 2023, 225, 120068. [Google Scholar] [CrossRef]

- Zhang, W.; Liu, C.; Chang, F.; Song, Y. Multi-scale and occlusion aware network for vehicle detection and segmentation on UAV aerial images. Remote Sens. 2020, 12, 1760. [Google Scholar] [CrossRef]

- Cao, F.; Xing, B.; Luo, J.; Li, D.; Qian, Y.; Zhang, C.; Bai, H.; Zhang, H. An Efficient Object Detection Algorithm Based on Improved YOLOv5 for High-Spatial-Resolution Remote Sensing Images. Remote Sens. 2023, 15, 3755. [Google Scholar] [CrossRef]

- Zhang, Z.; Liu, Y.; Liu, T.; Lin, Z.; Wang, S. DAGN: A real-time UAV remote sensing image vehicle detection framework. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1884–1888. [Google Scholar] [CrossRef]

- Zheng, K.; Wei, M.; Sun, G.; Anas, B.; Li, Y. Using vehicle synthesis generative adversarial networks to improve vehicle detection in remote sensing images. ISPRS Int. J. Geo-Inf. 2019, 8, 390. [Google Scholar] [CrossRef]

- Yu, Y.; Gu, T.; Guan, H.; Li, D.; Jin, S. Vehicle detection from high-resolution remote sensing imagery using convolutional capsule networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1894–1898. [Google Scholar] [CrossRef]

- Zhang, L.; Wei, Y.; Wang, H.; Shao, Y.; Shen, J. Real-time detection of river surface floating object based on improved refined. IEEE Access 2021, 9, 81147–81160. [Google Scholar] [CrossRef]

- Arumugam, D.; Kiran, R. Compact representation and identification of important regions of metal microstructures using complex-step convolutional autoencoders. Mater. Des. 2022, 223, 111236. [Google Scholar] [CrossRef]

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Dwarf mongoose optimization algorithm. Comput. Methods Appl. Mech. Eng. 2022, 391, 114570. [Google Scholar] [CrossRef]

- Elaziz, M.A.; Ewees, A.A.; Al-qaness, M.A.; Alshathri, S.; Ibrahim, R.A. Feature Selection for High Dimensional Datasets Based on Quantum-Based Dwarf Mongoose Optimization. Mathematics 2022, 10, 4565. [Google Scholar] [CrossRef]

- Razakarivony, S.; Jurie, F. Vehicle Detection in Aerial Imagery: A small target detection benchmark. J. Vis. Commun. Image Represent 2016, 34, 187–203. [Google Scholar] [CrossRef]

- Rottensteiner, F.; Sohn, G.; Jung, J.; Gerke, M.; Baillard, C.; Benitez, S.; Breitkopf, U. The ISPRS benchmark on urban object classification and 3D building reconstruction. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, 1, 293–298. [Google Scholar] [CrossRef]

- Ahmed, M.A.; Althubiti, S.A.; de Albuquerque, V.H.C.; dos Reis, M.C.; Shashidhar, C.; Murthy, T.S.; Lydia, E.L. Fuzzy wavelet neural network driven vehicle detection on remote sensing imagery. Comput. Electr. Eng. 2023, 109, 108765. [Google Scholar] [CrossRef]

- Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-detect: Vehicle detection and classification through semantic segmentation of aerial images. Remote Sens. 2017, 9, 368. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).