Abstract

Image-based displacement measurement techniques are widely used for sensing the deformation of structures and play an increasing role in structural health monitoring owing to their non-contact nature. In this study, a non-overlapping dual camera measurement model aided by a global navigation satellite system (GNSS) is proposed to sense the three-dimensional (3D) displacements of high-rise structures. Each camera of the dual camera system measures a pair of displacement components of a target point in 3D space, and its pose relative to the target is obtained by combining a built-in inclinometer with the GNSS. To eliminate the coupling of lateral and vertical displacements caused by perspective projection, a homography-based transformation is introduced to correct the inclined image planes. In contrast to stereo vision-based displacement measurement techniques, the proposed method requires neither overlapping fields of view nor calibration of the vision geometry. Both simulation and experiment demonstrate the feasibility and correctness of the proposed method, indicating its potential for remote health monitoring of high-rise buildings.

1. Introduction

With the rapid development of the global economy and construction technology, the construction of high-rise structures, such as civil buildings, industrial chimneys and towers, has maintained a rapid growth over the past ten years [1]. However, these structures may fail due to long-term load, environmental changes, and other factors [2,3]. The horizontal deformations, including shearing displacement between stories and overall bending, caused by wind, are the dominant factors for health monitoring [4,5]. Meanwhile, the overall vertical displacement of a high-rise structure is also a critical indicator to reflect the structural behavior in the service period [6]. Therefore, monitoring the 3D displacements of high-rise structures is of great significance for inspecting structural reliability and safety in structural health monitoring (SHM).

Traditionally, contact sensors are commonly used for monitoring the lateral displacement of high-rise buildings [7]. Among them, displacement meters and accelerometers are the two most widely used types. For example, accelerometers can effectively capture the acceleration of large facilities under external excitation and further allow the displacement in a specific coordinate direction to be derived [8,9,10]. With the development of the global navigation satellite system (GNSS), it has been widely used to monitor the lateral and vertical displacement of high-rise buildings and bridges, owing to its small dependence on the service environment [11,12,13,14]. The authors of [15] attempted to sense the lateral displacement of high-rise buildings subjected to wind load by installing an inclinometer at a target point on the building and operating it together with a remote sensing vibration detector. Sensors fabricated from new materials have also been used for high-rise building health monitoring, such as PZT piezoelectric ceramics [16] and nanometer materials [17].

Although most SHM systems based on these sensors are well established after decades of development, they collect data by point-contact sensing. Aside from the maintenance burden, this raises another issue: collecting the 3D deformation of multiple points simultaneously is difficult and expensive, since it requires integrating multiple sensors into the structure. To solve these problems, several non-contact measurement techniques have been established for structural health monitoring, such as laser displacement meters and phase-scanned radars, which have been applied to collect the deformation data of bridges, dams, and high-rise buildings [18,19,20,21]. Although these systems can monitor the displacement of large infrastructures without installing sensors on the structures, it is difficult for them to achieve long-term stable monitoring because of their dependence on stable reference points [11]. Image-based displacement measurement techniques have significantly advanced the health monitoring of large infrastructures owing to their advantages of long range, non-contact operation, high accuracy, and multi-point or full-field measurement [22]. A widely used image-based technique is derived from stereo vision [23,24], which is capable of retrieving the 3D displacement of targets such as masonry structures [25,26], bridges [27,28], and even underwater structures [29]. With stereo vision, a good calibration of the 3D imaging system is critical for obtaining accurate measurements. However, such calibration is challenging for long-range sensing because of the large field of view and the complex measurement environment. Some alternative techniques have accordingly been developed for easier remote monitoring. The monocular video deflectometer (MVD) is one such promising technique. Two types of MVDs have been reported to measure the deflection of bridges based on digital image correlation (DIC) [8,30]. Although both are effective in conditions with an oblique optical axis, the latter advanced the MVD by considering the variation of the pitch angle across the whole image. In addition, the motion amplification method based on monocular video is also a promising method for the monitoring of structures [31].

For image-based SHM of high-rise buildings, several related studies should be acknowledged. Jong et al. divided a high-rise building into sections and then measured the lateral deformation by accumulating the relative displacement of adjacent sections [32]. However, they did not consider the accumulated error of the relative displacement. Guo et al. proposed a stratification method based on projective rectification with lines to monitor the seismic displacement of buildings [33]. Although the method is effective in reducing the influence of camera motion, it relies heavily on line features, which might be unavailable in some situations. Recently, Ye et al. reported long-term monitoring of the displacement of an ancient tower caused by changing geotechnical conditions with a dual camera system [34]; the work shows the advantages of image-based SHM for high-rise buildings, yet does not consider the error induced by yaw angle variation. In addition, other researchers have attempted to combine GNSS with image-based methods for sensing the deformation of large-scale structures from a long distance [35]. These recent advances are promoting the development of SHM techniques and inspiring the related communities to build new methods.

This study proposes a non-overlapping dual camera model for sensing the 3D displacement of high-rise buildings from a long range by combining image tracking with GNSS and inclinometers. The remainder of the paper is organized as follows: Section 2 introduces the methodology, including the non-overlapping dual camera measurement model in Section 2.1 and the principle of GNSS-aided yaw angle determination in Section 2.2; Section 3 presents the simulation and experimental results; Section 4 discusses the influence of the GNSS positioning accuracy on the yaw angle; and Section 5 concludes.

2. Methodology

As towering structures are mainly subjected to random wind load, the lateral displacement is a critical control indicator for structural safety assessment. In addition, the vertical response may be significant even if the wind load is horizontal, implying that the vertical displacement or settlement should also be of concern. However, most high-rise structures are located in densely built-up urban areas, so it is difficult to find an appropriate workspace for setting up a stereo vision system (such as 3D-DIC) with good optical geometry to sense the lateral and vertical displacements of the structure's sections of interest. To address this predicament, this section elaborates on a 3D displacement remote sensing system that does not rely on stereo vision geometry. The proposed system can measure the 3D displacement of a towering structure with two non-overlapping cameras placed at different sides of the structure. Details are described as follows.

2.1. Three-Dimensional Displacement Sensing with Two Non-Overlapping Cameras

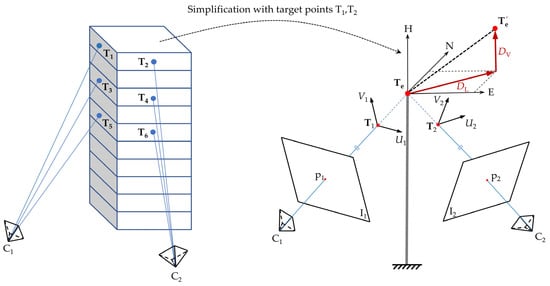

Generally, the lateral and vertical displacements characterize the overall deformation at a certain floor relative to the ground reference for high-rise structures, and the intraformational displacement of the floors is a low-order quantity compared to the overall displacement. Hence, it is reasonable to simplify the target floor to be measured to a point in 3D space. With this assumption, measuring the lateral and vertical displacements of a structure’s floor is turned into a problem of measuring 3D displacements of a space point from two non-overlapping views, as illustrated in Figure 1.

Figure 1.

3D displacement sensing with two non-overlapping cameras C1 and C2.

As shown in Figure 1, the different target floors of the high-rise building are observed by the cameras C1 and C2 from its left and front sides, respectively, forming two non-overlapping views. The lines of sight of the cameras strike different sides of the target floors, generating a set of target points, e.g., T1 to T6. For any pair of target points, such as Ti (i = 1, 2) on the top floor, the corresponding cameras can sense the displacement components, denoted by Vi and Ui, parallel to their image planes. If we follow the assumption above and represent the target level by a single 3D point, its lateral displacement, DL, and vertical settlement, DV, can be obtained from the displacement components of T1 and T2 by following the principle of vector addition. To this end, the azimuth coordinate system E-N-H, rather than the Cartesian coordinate frame, is introduced in Figure 2a to build a common coordinate frame for both cameras. With this simplification, the fundamental model for sensing lateral displacement and vertical settlement can be established as follows.

Figure 2.

Schematics for computing displacement: (a) lateral displacement; (b) vertical settlement.

The relationships between the lateral displacement and vertical settlement and the measured displacement components of T1 and T2 are shown in Figure 2. Let θ1 be the angle between U1 and the E-axis, and θ2 be the angle between U2 and the N-axis. According to Figure 2a, the lateral displacement, DL, can be computed as:

where

With the camera-measured displacements, Vi, the vertical settlement, DV, can be computed according to Figure 2b. Supposing the pitch angles of the cameras Ci are αi, if the target points Ti are selected on the lines of sight close to the optical axes, then we have

In Equations (2) and (3), Ui and Vi can be determined by scaling the corresponding pixel displacement components, which are estimated in the image domain by comparing the images before and after displacement with the well-established DIC technique [36,37]. Let ui and vi be the pixel displacement components along the two image axes. Then Ui = Aiui and Vi = Aivi, where the scale factors Ai for both cameras are determined by

where Di, the distance between the i-th camera and the target point Ti, is measured by a laser rangefinder or GNSS; fi and lps are the focal length and pixel size, respectively; (xi, yi) are the projection coordinates of Ti; and (xci, yci) are the principal point coordinates. It is worth noting that computing the scale factors with the initially measured Di will lead to a significant error when the displacement of the target point is large. To address the problem, we recommend computing the current scale factors by updating the distances Di with the initially estimated displacement components, DLE and DLN. The updated distances, Di*, related to the current position of the target Ti, are given by Equation (5). The current scale factors are then computed by replacing the initial distances, Di, in Equation (4) with the updated distances, Di*.
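The pixel-to-physical conversion described above can be illustrated with a minimal sketch. It is not the paper's Equation (4): the scale factor here is the simplified on-axis pinhole form A = D·lps/f, which ignores the off-axis terms in (xi, yi) and (xci, yci), and the pixel displacement is found by a brute-force integer-pixel search with zero-normalized cross-correlation rather than the subpixel DIC of [36,37]. The function names and the synthetic speckle image are assumptions made for illustration; the distance, focal length, and pixel size are the simulation values from Section 3.1.

```python
import numpy as np

def zncc(a, b):
    """Zero-normalized cross-correlation between two equally sized patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def pixel_displacement(ref, cur, center, half=10, search=5):
    """Integer-pixel displacement (u, v) of the subset centred at `center`.

    u is measured along image columns (x) and v along image rows (y).
    """
    r0, c0 = center
    subset = ref[r0 - half:r0 + half + 1, c0 - half:c0 + half + 1]
    best_score, best_uv = -2.0, (0, 0)
    for dv in range(-search, search + 1):
        for du in range(-search, search + 1):
            r, c = r0 + dv, c0 + du
            candidate = cur[r - half:r + half + 1, c - half:c + half + 1]
            score = zncc(subset, candidate)
            if score > best_score:
                best_score, best_uv = score, (du, dv)
    return best_uv

def on_axis_scale_factor(distance_mm, focal_mm, pixel_mm):
    """Simplified on-axis pinhole scale factor in mm per pixel (assumption)."""
    return distance_mm * pixel_mm / focal_mm

# Synthetic speckle pattern, shifted 3 px right and 2 px up (hypothetical data).
rng = np.random.default_rng(0)
ref = rng.random((64, 64))
cur = np.roll(ref, shift=(-2, 3), axis=(0, 1))

u, v = pixel_displacement(ref, cur, center=(32, 32))
A = on_axis_scale_factor(292_380.0, 200.0, 3.45e-3)  # D, f, lps in mm
U, V = A * u, A * v  # physical displacement components in mm
```

With these values each pixel corresponds to roughly 5 mm at the target, which shows why subpixel registration is needed in practice to reach millimeter-level accuracy.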

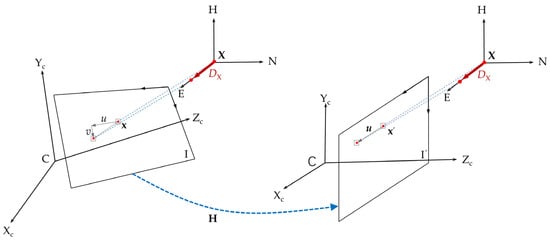

Figure 1 shows that both cameras observe the target points from a laterally oblique position, implying that the measured settlement is affected by the lateral displacement. In particular, the lateral displacement of high-rise structures under external excitation is often much larger than the vertical displacement. As a result, the vertical displacement DV, estimated by Equation (3), often contains a large error. The underlying reason is that the perspective projection does not preserve parallelism. Therefore, when the image planes are not parallel to the plane DL–DV, lateral displacement of the object will cause a change in the measured vertical displacement. To address the problem, a homography-based correction approach is introduced here to correct the vertical displacement by mapping the inclined image plane (relative to the plane spanned by DL and DV, denoted by DL–DV) into one parallel to the plane DL–DV, as shown in Figure 3. The inclined image plane I is the actual one, on which the measured lateral and vertical displacements are coupled. Our goal is to map I to the image plane I’ by following the homography transformation, denoted by a matrix H, between them. Let x and x’ be the actual and corrected projections of the 3D target point X on the images I and I’, respectively. The relationship between x and x’ can be expressed as follows [38]:

$$x' = Hx \tag{6}$$

Figure 3.

Homography-based vertical displacement correction.

According to the perspective camera model, the actual projection x is determined by

$$x = K\,[\,I \mid 0\,]\,X \tag{7}$$

where K is the intrinsic matrix of the camera and I is a 3 × 3 identity matrix. From Figure 3, the image plane I’ can be considered as the result of rotating the original camera frame about the camera center. Supposing the rotation matrix is R, the corrected projection x’ is thus determined by

$$x' = K\,[\,R \mid 0\,]\,X \tag{8}$$

Eliminating X by combining Equations (7) and (8) shows that x’ = KRK⁻¹x. According to Equation (6), the homography matrix H can be determined as:

$$H = K R K^{-1} \tag{9}$$

This implies that, for a calibrated camera, the true vertical displacement of the target can be retrieved by estimating the corrected projection x’ via Equations (6) and (9), where the rotation matrix R can be computed according to each camera pose, measured in the azimuth frame E-N-H, and the displacement direction of the equivalent target point. For camera Ci, its pose consists of the pitch angle αi, the roll angle, and the yaw angle θi. The former two angles are measured by following the methods in [29,31], while measuring the yaw angle is not trivial in long-range displacement sensing of high-rise structures; its determination is introduced in the next section.
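As an illustration of the relation x’ = KRK⁻¹x, the following minimal sketch builds a rotation-induced homography and applies it to a pixel. The intrinsic matrix uses the simulation values from Section 3 (200 mm focal length, 3.45 μm pixels, 5120 × 5120 image with the principal point assumed at the image center). The rotation is taken as a pure rotation about the camera x-axis by a hypothetical 1° angle; how R is actually composed from the measured pitch, roll, and yaw angles is not specified here, so this choice is an assumption.

```python
import numpy as np

def intrinsic_matrix(focal_mm, pixel_mm, cx, cy):
    """Pinhole intrinsic matrix with square pixels and zero skew."""
    f_px = focal_mm / pixel_mm  # focal length expressed in pixels
    return np.array([[f_px, 0.0, cx],
                     [0.0, f_px, cy],
                     [0.0, 0.0, 1.0]])

def rotation_about_x(angle_rad):
    """Rotation matrix about the camera x-axis (illustrative choice of R)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[1.0, 0.0, 0.0],
                     [0.0, c, -s],
                     [0.0, s, c]])

def rotation_homography(K, R):
    """Homography induced by rotating the camera about its center."""
    return K @ R @ np.linalg.inv(K)

def apply_homography(H, xy):
    """Map a pixel (x, y) through H and return inhomogeneous coordinates."""
    p = H @ np.array([xy[0], xy[1], 1.0])
    return p[:2] / p[2]

K = intrinsic_matrix(200.0, 3.45e-3, 2560.0, 2560.0)
H = rotation_homography(K, rotation_about_x(np.deg2rad(1.0)))
corrected = apply_homography(H, (2560.0, 2560.0))  # corrected principal point
```

Because the mapping depends only on K and R, it needs no knowledge of the scene depth, which is what makes the correction applicable to a single non-overlapping view.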

2.2. Determining the Yaw Angles of the Cameras with GNSS

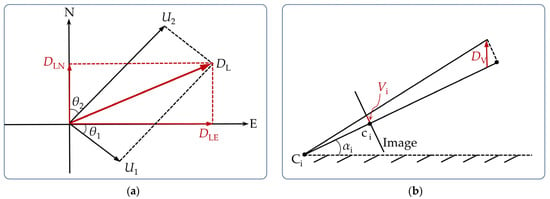

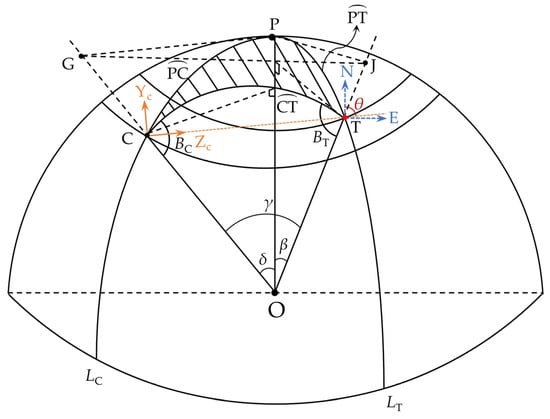

The yaw angle of each camera relative to the frame E-N-H is essential for determining the lateral and vertical displacements. Because of the long measurement range, determining the yaw angle of each camera is not easy. We therefore propose to obtain the yaw angles θ through the GNSS. The method for determining the yaw angle θ of the camera C is shown schematically in Figure 4. For brevity, the subscript is omitted in this section.

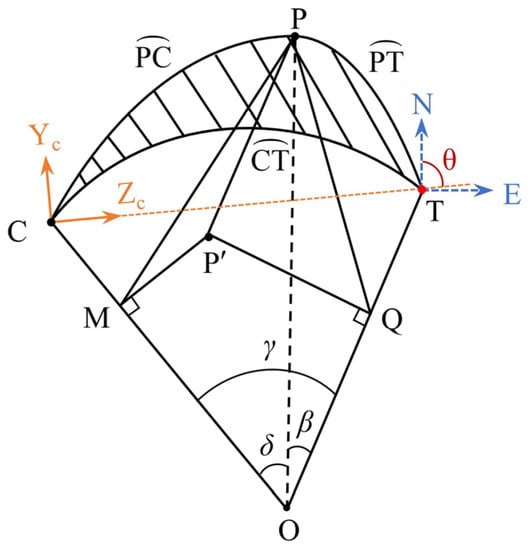

Figure 4.

Spatial geometry for determining the yaw angles θ with GNSS.

As shown in Figure 4, one camera C is set to observe a target point T. Their relative positional relationship, namely the yaw angle θ, can be described with two points on a spherical triangle [39]. To this end, two GNSS receivers are placed at the positions of the camera C and the target point T to obtain their latitude and longitude coordinates, denoted by (BC, LC) and (BT, LT), respectively. Suppose O is the center of the earth sphere and P is the apex of the northern hemisphere. (The yaw angle is measured from the north direction, and the clockwise direction is positive.) Points P, C, and T form a spherical triangle PCT, which gives the relative spatial relation for determining the yaw angle. The tangents to the arcs PC and PT at the point P intersect the lines OC and OT at the points G and J, respectively. Let δ, β and γ be the spherical center angles corresponding to the arcs PC, PT, and CT, respectively. We can obtain the following relationship according to the cosine formula of the spherical triangle:

$$\cos\gamma = \cos\delta\,\cos\beta + \sin\delta\,\sin\beta\,\cos\angle P \tag{10}$$

where ∠P = LC–LT is the dihedral angle between planes COP and TOP, δ = 90° − BC, and β = 90° − BT.

Drawing a line perpendicular to the plane COT through the apex P, the foot of the perpendicular is denoted by P’. Then, we have four right triangles, ΔOMP, ΔOQP, ΔPMP’, and ΔPQP’, as shown in Figure 5. Let ∠C be the dihedral angle between the planes COP and COT, i.e., ∠C = ∠PMP’, and ∠T be the dihedral angle between the planes TOP and COT, i.e., ∠T = ∠PQP’. With the sine formula of the spherical triangle, we obtain:

$$\frac{\sin\angle C}{\sin\beta} = \frac{\sin\angle T}{\sin\delta} = \frac{\sin\angle P}{\sin\gamma} \tag{11}$$

Figure 5.

Spatial geometry for the camera C and the target T.

According to Equation (11), the dihedral angle ∠C is determined as follows:

$$\angle C = \arcsin\!\left(\frac{\sin\beta\,\sin\angle P}{\sin\gamma}\right) \tag{12}$$

Finally, the yaw angle θ of the camera C relative to the target T can be determined from ∠C, according to the latitude and longitude coordinates of C and T, as shown in Table 1.

Table 1.

True value of the yaw angle.

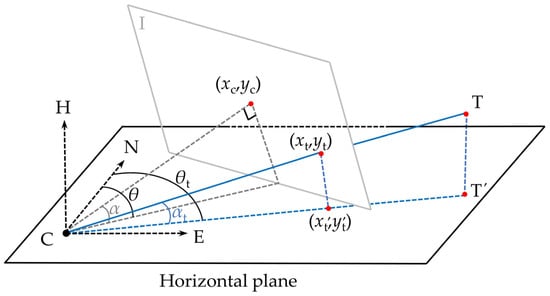
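The spherical-triangle relations above (cosine formula for γ, then sine formula for ∠C) can be sketched as follows. This is a minimal illustration on a spherical earth model: the function name is hypothetical, the magnitude of the longitude difference is used for ∠P, and the returned ∠C lies in [0°, 90°]. Converting ∠C into the yaw angle θ still requires the quadrant rules of Table 1, which are not reproduced in the code.

```python
import math

def spherical_angles(lat_c, lon_c, lat_t, lon_t):
    """Return (gamma, angle_C) in degrees for camera C and target T.

    Inputs are latitude B and longitude L in degrees. gamma is the central
    angle of arc CT; angle_C is the dihedral angle between planes COP and COT.
    """
    delta = math.radians(90.0 - lat_c)      # arc PC
    beta = math.radians(90.0 - lat_t)       # arc PT
    P = math.radians(abs(lon_c - lon_t))    # dihedral angle at the pole

    # Spherical cosine formula for the arc CT.
    cos_gamma = (math.cos(delta) * math.cos(beta)
                 + math.sin(delta) * math.sin(beta) * math.cos(P))
    gamma = math.acos(max(-1.0, min(1.0, cos_gamma)))
    if math.sin(gamma) < 1e-15:
        raise ValueError("camera and target coincide; yaw is undefined")

    # Spherical sine formula solved for the angle at C.
    sin_C = math.sin(beta) * math.sin(P) / math.sin(gamma)
    angle_C = math.asin(max(-1.0, min(1.0, sin_C)))
    return math.degrees(gamma), math.degrees(angle_C)

# Target due north of the camera: angle C is 0 degrees.
gamma_n, C_n = spherical_angles(0.0, 0.0, 10.0, 0.0)
# Target due east of the camera on the equator: angle C is 90 degrees.
gamma_e, C_e = spherical_angles(0.0, 0.0, 0.0, 10.0)
```

The clamping before `acos` and `asin` guards against floating-point round-off pushing the arguments slightly outside [-1, 1], which matters for the short baselines typical of this application.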

Table 1 gives the yaw angle, hereafter called the leading yaw angle, of each camera relative to the spatial points on the optical axis. For target points not on the optical axis (off-optical-axis), the yaw angles must be recalculated according to the leading yaw angle θ and their projections. The principle is shown in Figure 6. Given the camera center C and the image center (xc, yc) (which is the projection of the targets on the optical axis), the yaw angle θt relative to the off-optical-axis target point T can be determined as follows:

where xc and xt are the horizontal coordinates of the image center and the observed projection of T, and α is the pitch angle of the camera. As shown in Figure 6, Equation (13) is derived according to the projection of the target T on the right part of the image, I. If the projection falls on the left half of the image, the plus sign between the two terms on the right-hand side should be replaced by the minus sign. Therefore, the general expression for determining the yaw angle is given by:

Figure 6.

Geometry for determining the yaw angle of the camera C relative to the off-optical-axis targets.

It is worth noting that, because of the height difference between the camera and the target point in real applications of measuring high-rise buildings, Equation (14) actually gives the yaw angle of the projection, denoted by CT’, of the line of sight CT on the E-N plane relative to the north direction, as shown in Figure 6. This will lead to a measurement error if the observed image point (xt, yt) is directly used to compute the displacement together with the yaw angle determined by Equation (14). A reasonable approach is to use the point (xt’, yt’) on the line CT’ that corresponds to the observed projection on the line CT. This can be done by inversely rotating the point (xt, yt) using the pitch angle αt, where αt is computed according to the measured pitch angle α and the distance from the observed projection to the image center by following the method in [31]. As the pitch angle represents the height difference between the camera and the target point, the foregoing rotation operation implicitly eliminates the error caused by ignoring the height difference in determining the yaw angle. In this paper, this rotation process is implemented by applying Equation (6), because the homography matrix H is related to the rotation matrix in Equation (9), which accounts for the pitch angle.

3. Experiments and Results

Here the correctness and performance of the proposed method are verified with a simulation and an experiment in the following sections. In the simulation, the resolution of the used camera is 5120 × 5120 pixels and the pixel size is 3.45 μm; in the experiment, the resolution of both cameras is 2448 × 2050 pixels and the pixel size is also 3.45 μm. Details are given below.

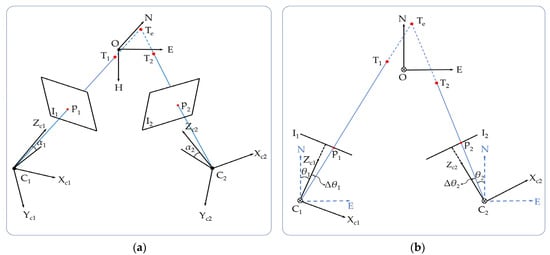

3.1. Three-Dimensional Displacement Simulation

To validate the correctness of the proposed 3D displacement sensing method, two cameras, C1 and C2, were set to measure a single target point, T, in space, as shown in Figure 7. The pitch angles, α1 and α2, of cameras C1 and C2 were identical with a value of 70°, and the corresponding yaw angles θ1 and θ2 were both 15°. The distances from C1 and C2 to the origin, O, of the azimuth coordinate system were both 292.38 m, and the distance between the two cameras was 51.76 m. The positions of C1 and C2 in the coordinate frame E-N-H were (−25,881.86, −96,592.43, 274,747.33) mm and (25,881.86, −96,592.43, 274,747.33) mm, respectively. From the H coordinates, it can be found that the height difference between each camera and the target level was 274.75 m, which is roughly equivalent to the height of an ordinary high-rise building. The focal lengths of both cameras were 200 mm. The initial positions of T1 and T2 in the coordinate frame E-N-H were (−50, 50, 0) mm and (1000, −100, 0) mm, respectively. As the projections of these initial positions were not located at the image centers, the yaw angles should be corrected according to Equation (14). Let Δθ1 and Δθ2 represent the azimuth correction angles of the target points T1 and T2, respectively. To generate lateral and vertical displacements, the target points T1 and T2 were simultaneously shifted along the E-, N- and H-axes, respectively, from their initial positions. The displacement range for each direction was −500.00 mm to 500.00 mm with an increment of 1.00 mm.
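The stated simulation geometry can be cross-checked from the camera coordinates alone. The sketch below, with a hypothetical helper name, recovers the camera-to-origin distance, the pitch angle, and the magnitude of the yaw angle from the E-N-H positions, assuming each camera looks toward the origin O and that the yaw is taken as the angle between the horizontal line of sight and the N-axis.

```python
import math

def camera_geometry(cam_mm, target_mm=(0.0, 0.0, 0.0)):
    """Return (distance in m, pitch in deg, |yaw| in deg) of target seen from camera."""
    dE = target_mm[0] - cam_mm[0]
    dN = target_mm[1] - cam_mm[1]
    dH = target_mm[2] - cam_mm[2]
    horizontal = math.hypot(dE, dN)
    distance = math.sqrt(dE * dE + dN * dN + dH * dH)
    pitch = math.degrees(math.atan2(abs(dH), horizontal))
    yaw = math.degrees(math.atan2(abs(dE), abs(dN)))  # magnitude relative to N-axis
    return distance / 1000.0, pitch, yaw

# Camera positions in the E-N-H frame, in mm, as given in the text.
C1 = (-25881.86, -96592.43, 274747.33)
C2 = (25881.86, -96592.43, 274747.33)

d1, pitch1, yaw1 = camera_geometry(C1)
d2, pitch2, yaw2 = camera_geometry(C2)
baseline_m = math.dist(C1, C2) / 1000.0  # distance between the two cameras
```

The recovered values agree with the 292.38 m distance, 70° pitch, 15° yaw, and 51.76 m camera separation quoted above, and they imply a horizontal stand-off of about 100 m for each camera.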

Figure 7.

Schematic setup of the cameras and target points in the simulation: (a) geometric relations; (b) the vertical view.

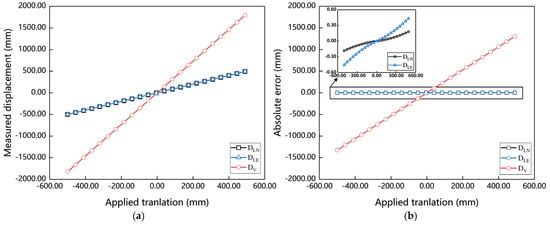

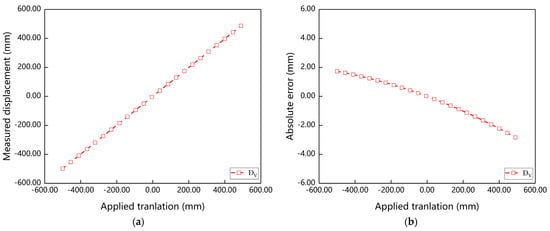

In the simulation, the displacements along the E- and N-axes are both lateral ones and were computed via Equation (1). The displacement along the H-axis can be regarded as vertical settlement and was computed by Equation (3). The calculated lateral displacements and the vertical settlement are shown in Figure 8a. To investigate the accuracy, their absolute errors were computed and are shown in Figure 8b. One can see that the lateral displacements DLE and DLN are close to the true values, with maximum absolute errors of 0.18 mm and 0.46 mm, respectively, while the error of the computed vertical settlement increases linearly with the applied displacement. The maximum error of the vertical settlement is up to 1325.82 mm, and in every load step the error is about 265% of the applied vertical displacement. This implies that, as expected, the lateral displacement computed by Equation (2) is correct, but the computed vertical settlement is unreliable. The vertical settlement was therefore corrected by following the strategy introduced in Section 2.1. Results are shown in Figure 9. One can see that the error of the vertical settlement is reduced to the same level as that of the lateral displacement, with a maximum value of about 2.89 mm, showing that the correction method is feasible and correct.

Figure 8.

Measurement results of target point displacement in simulation experiment: (a) measured displacement; (b) absolute error.

Figure 9.

Vertical displacement correction results: (a) measured displacement after correction; (b) absolute error.

3.2. Field Experiment

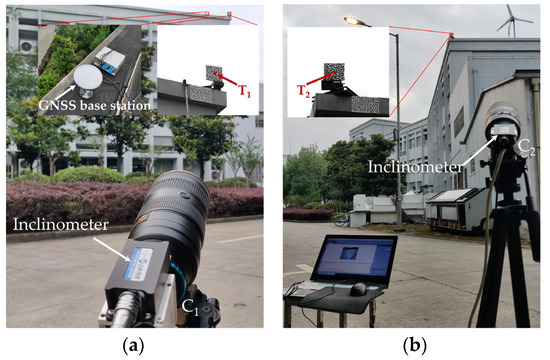

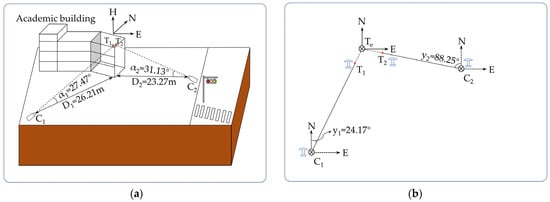

To show the potential capability of the proposed method in actual applications, an outdoor experiment was carried out. In the experiment, two square panels with speckles were fixed on a translation stage (accuracy of 0.01 mm), which was fixed on the roof of a building, as shown in Figure 10. The two panels were placed close and perpendicular to each other. Two cameras C1 and C2 (Baumer TX50, with a 200 mm Nikon lens, model af-s70-200/2.8e) were placed at different sides of the building to observe the target points T1 and T2 on the speckled panels, respectively. The distance between the two target points was about 150 mm. For each camera, a dual-axis inclinometer (MSENSOR Tech, Wuxi, China) was mounted on its upper face. The measurement range and accuracy of both inclinometers were ±90° and 0.01°, respectively. To obtain the pitch angle of each camera, the leading axis of the inclinometer was aligned parallel to the optical axis of the camera. The GNSS was a self-developed differential positioning system based on the F9P chip (made by Beitian communication). The horizontal and elevation positioning accuracies of the positioning system were 1.00 cm and 2.00 cm, respectively. Before testing, the positions of both cameras and the targets in the E-N-H system were measured using the GNSS. The overall experiment site and the relative positions between the targets and the cameras are shown in Figure 10 and Figure 11, and the positions and distances between the cameras and the corresponding target points are listed in Table 2.

Figure 10.

Experiment setups for (a) camera C1; (b) camera C2.

Figure 11.

Schematic of camera-target point arrangement: (a) spatial relations; (b) top view.

Table 2.

Positions and distances between the cameras and the corresponding target points.

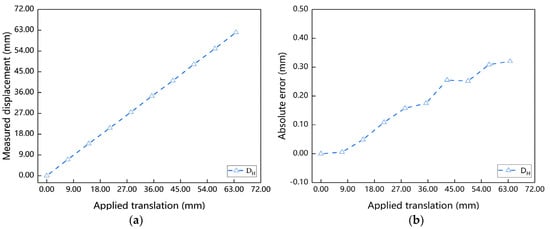

In the experiment, to simulate the lateral displacement of the building, the targets were translated along the horizontal direction by adjusting the stage. The applied displacement ranged from 0 mm to 63.63 mm with an increment of 7.07 mm. In each step, each camera captured one image of the corresponding target. Once the images of all steps were obtained, the lateral displacements were computed by applying the method introduced in Section 2. Results are shown in Figure 12a, and the absolute errors relative to the applied translations are shown in Figure 12b. Close inspection of the error curve shows that the error increases with the applied displacement, and the maximum absolute error is 0.32 mm, which is about 0.50% of the corresponding actual displacement. Limited by the range of the translation stage, Figure 12b shows the error trend only for applied displacements below 64 mm. However, considered together with the error trend starting from the initial position in Figure 8b, the measurement error of the proposed method grows slowly along a nearly linear path, implying that the error level for a given displacement range could be evaluated approximately from Figure 12b.

Figure 12.

Planar displacement results of target point displacement in the field experiment: (a) measured displacement; (b) absolute error.

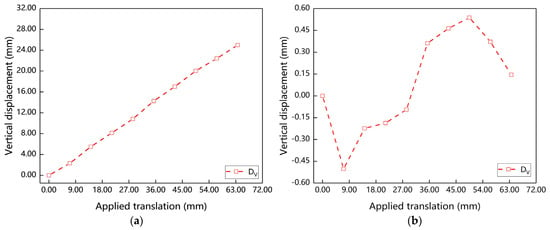

To verify the capacity of the proposed method for handling settlement, the vertical displacement values in this test were computed by Equation (3) and then corrected according to the homography-based strategy in Section 2.1. Figure 13a,b show the computed vertical displacements before and after correction, respectively. From Figure 13a, one can see that the error of the vertical displacement increases linearly as the applied lateral displacement increases, and the maximum value is up to 26.00 mm, implying that the settlement determined by Equation (3) is linearly affected by the lateral displacement. In contrast, the corrected vertical displacements in Figure 13b are much more reasonable than those in Figure 13a, and the maximum value is just 0.53 mm. The results in Figure 13 demonstrate the feasibility of the strategy for settlement computation in Section 2.1.

Figure 13.

Vertical displacement correction results: (a) measured vertical displacement before correction; (b) vertical displacement after correction.

4. Discussion

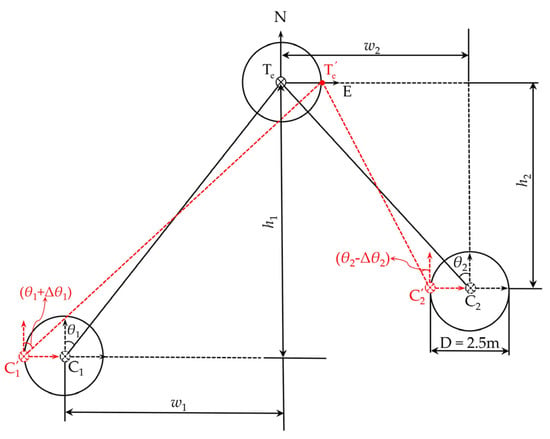

A non-overlapping camera model based on GNSS positioning is proposed in this study to sense the 3D displacement of high-rise buildings from a long range. As the yaw angles between the cameras and target points are determined by the GNSS, it is necessary to discuss the influence of the positioning accuracy on the yaw angle. The single-point positioning (SPP) diagram of a GNSS with a typical positioning error of ±2.50 m is shown in Figure 14.

Figure 14.

The yaw angle error caused by GNSS single point positioning.

In Figure 14, C1, C2 and Te represent the real positions of the two cameras and the equivalent target point in the E-N-H system, respectively. The displaced positions of the two cameras and the equivalent target point marked in Figure 14 correspond to the maximum yaw angle error under the SPP method. Let w1 and w2 be the horizontal distances of the real equivalent target point from the real positions of C1 and C2 along the E direction, and h1 and h2 be the corresponding horizontal distances along the N direction. Then, relative to the equivalent target point, the maximum yaw angle errors Δθ1 of the camera C1 and Δθ2 of the camera C2 are given as:

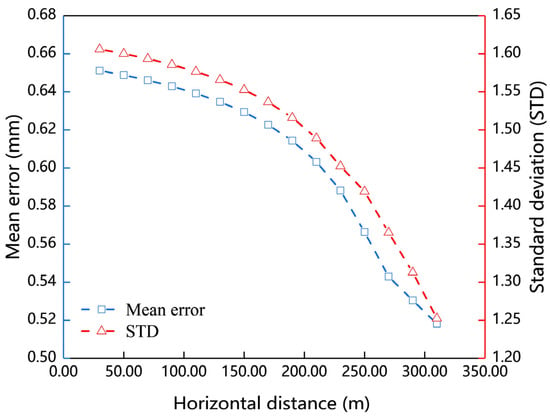

Assuming that the GNSS used in the field experiment in Section 3.2 operates in the SPP mode, the yaw angle errors Δθ1 and Δθ2 can be deduced as 4.79° and 0.21°, respectively, by Equation (15). According to Equation (2), by increasing the distances between the cameras and the target point, the mean value and standard deviation of the absolute errors of the measured lateral displacements can be evaluated, as shown in Figure 15.

Figure 15.

Mean error and standard deviation.

The horizontal distance between a camera and the corresponding target point is often greater than 30.00 m when the proposed method is applied to the monitoring of high-rise buildings. In this case, the yaw angle error caused by the SPP method adopted by the positioning system has a very limited impact on the measurement.

5. Conclusions

In this study, a non-overlapping dual camera measurement model aided by a global navigation satellite system (GNSS) is proposed to sense 3D displacements. The model aims to sense the 3D displacement of multiple target points by calibrating, in the azimuth coordinate system, the pose of each of the two non-overlapping cameras relative to its corresponding target point on the high-rise structure. As the camera projection model does not preserve parallelism, the lateral and vertical displacement components are coupled, and the vertical displacement cannot be solved directly by Equation (3). This study proposes to transform the viewing angles of the cameras with a homography matrix, which greatly reduces the influence of displacement coupling on the vertical displacement of high-rise structures. A yaw angle correction method is also proposed to improve the accuracy and precision of this model. We also found that the error in the yaw angle of the camera caused by the SPP of a GNSS has a limited impact on the measurement if the horizontal distance between a camera and the corresponding target point is greater than 30.00 m; its influence decreases as this horizontal distance increases.

In conclusion, this study proposes a new method of 3D displacement sensing for high-rise structures that does not rely on stereo vision geometry. In contrast to traditional contact techniques, the proposed method is capable of capturing the lateral and vertical displacements of multiple points simultaneously. However, it is suitable only for measuring the overall lateral displacement and settlement of high-rise buildings with a large height-to-width ratio, because the proposed measurement model ignores the intraformational deformation and torsional deformation. Given these limitations and its range of applicability, we expect that this study could provide an alternative image-based displacement measurement technique to the field of SHM for buildings whose deformation is dominated by bending deflection and vertical settlement. Based on this work, we are also pursuing more comprehensive methods to measure the full deformation of high-rise buildings.

Author Contributions

Conceptualization, D.Z. and Z.S.; methodology, Z.Y. and Z.S.; software, Z.Y. and L.D.; validation, Z.Y., H.D. and Y.X.; formal analysis, Z.Y. and H.D.; investigation, Z.Y. and L.D.; data curation, Z.Y.; writing—original draft preparation, Z.Y. and Z.S.; writing—review and editing, D.Z. and Z.S.; visualization, Y.X.; supervision, D.Z. and Q.Y.; project administration, D.Z. and Q.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC), grant numbers 11727804, 11872240, 12072184, 12002197, and 51732008; and the China Postdoctoral Science Foundation, grant number 2021M692025.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).