Transferability of the Deep Learning Mask R-CNN Model for Automated Mapping of Ice-Wedge Polygons in High-Resolution Satellite and UAV Images

Abstract

1. Introduction

2. Data and Methods

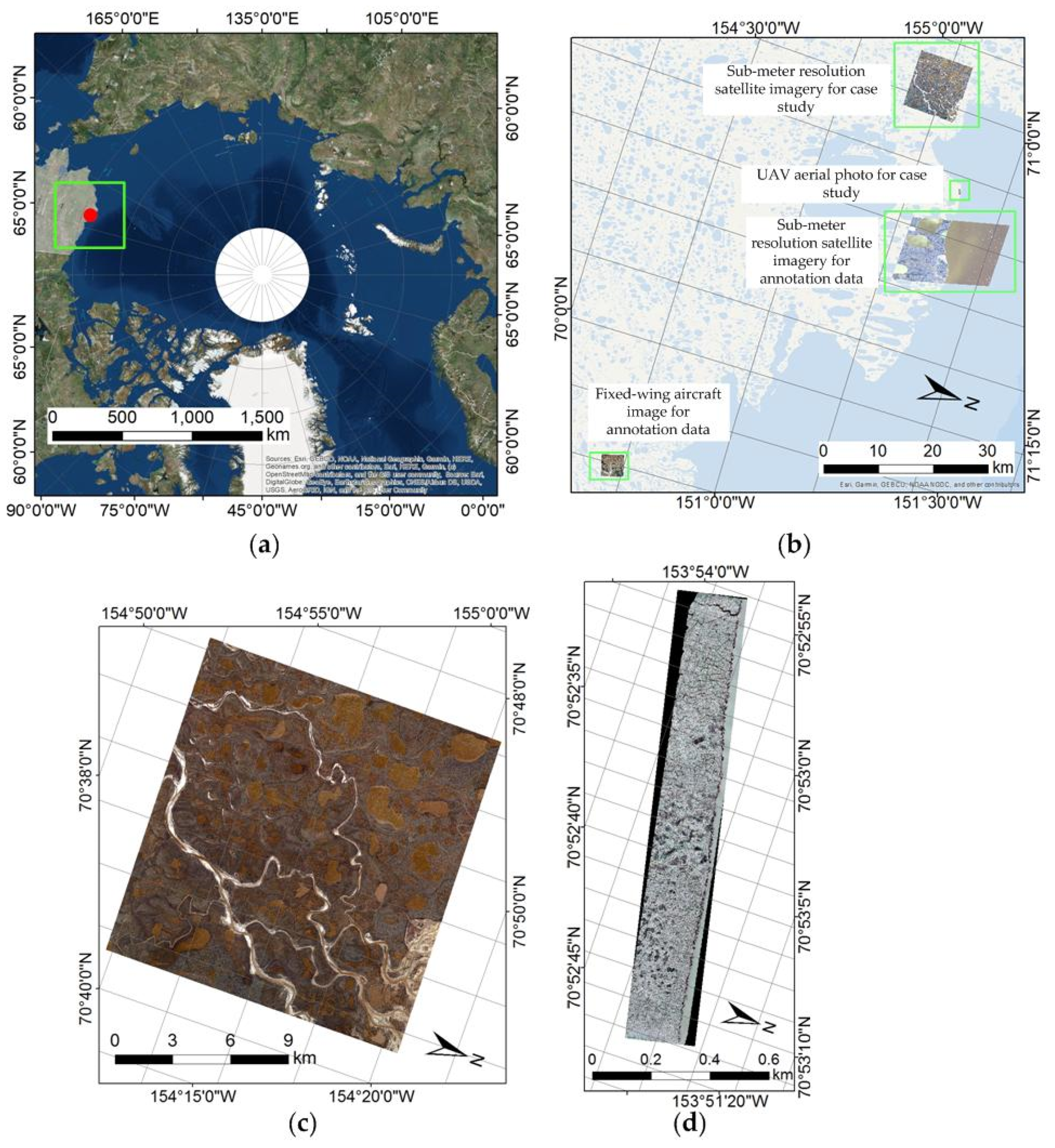

2.1. Imagery Data for Annotation

2.2. Imagery Data for Case Studies

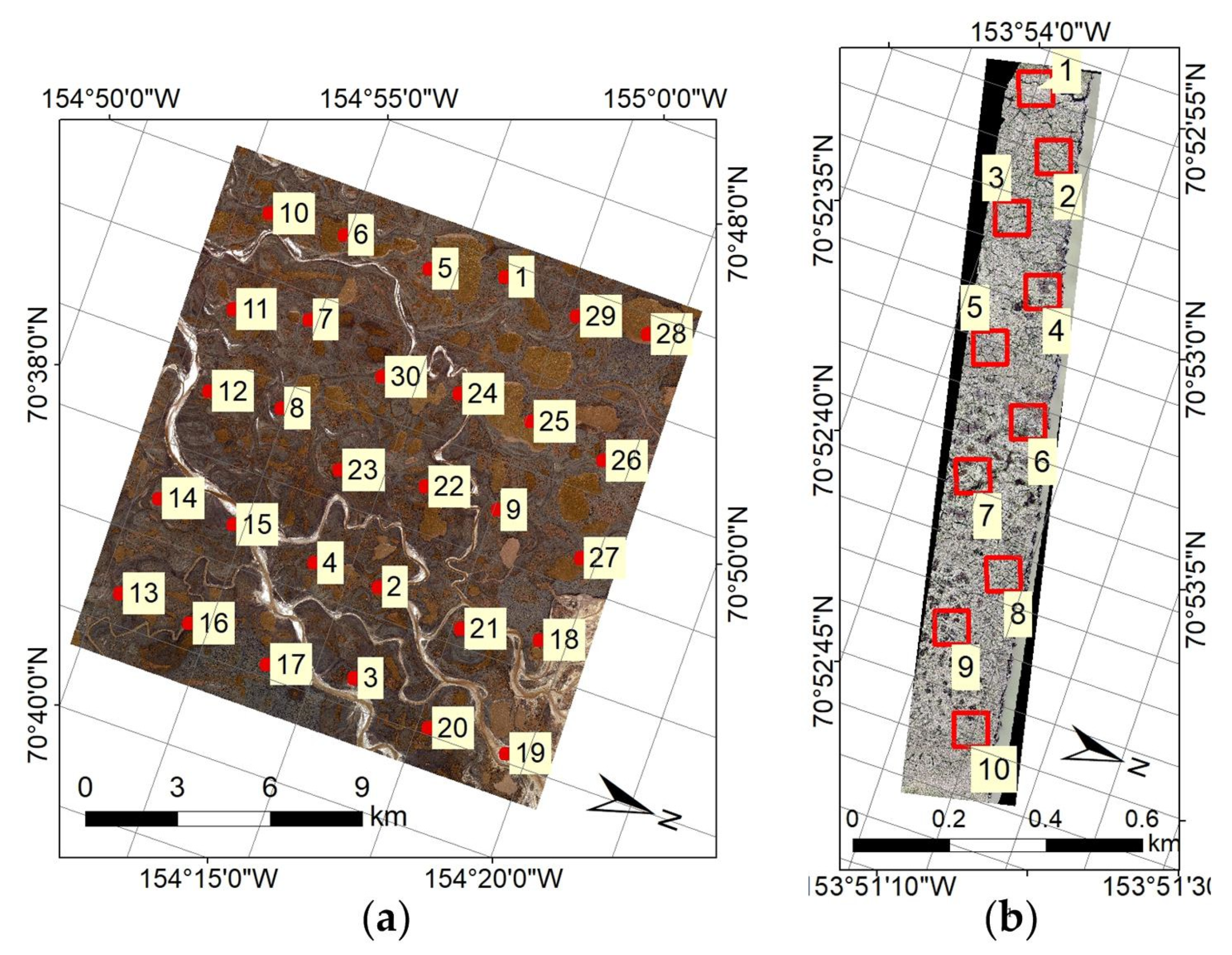

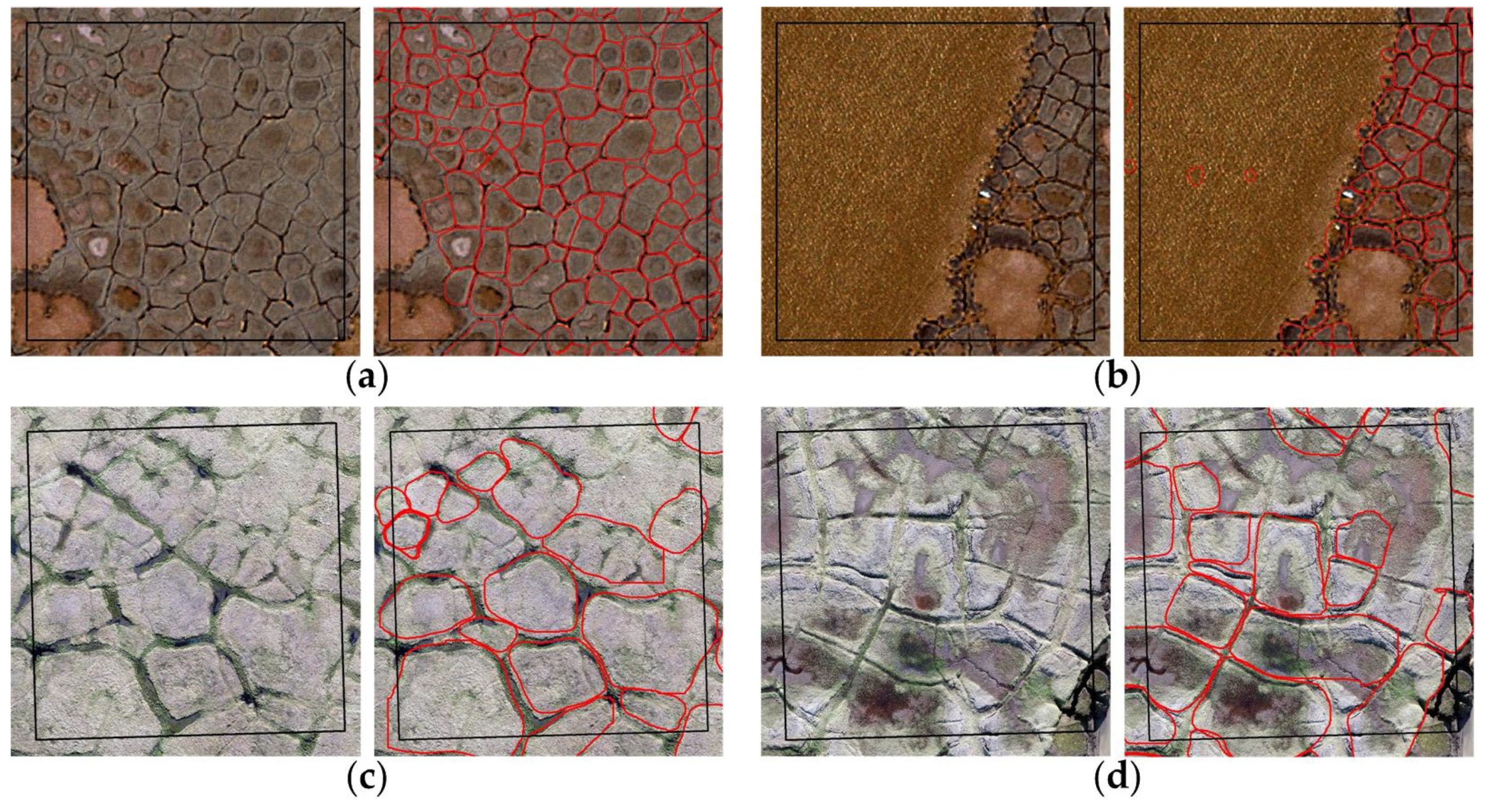

2.3. Annotated Data for the Mask R-CNN Model

2.4. Annotated Data for Case Studies

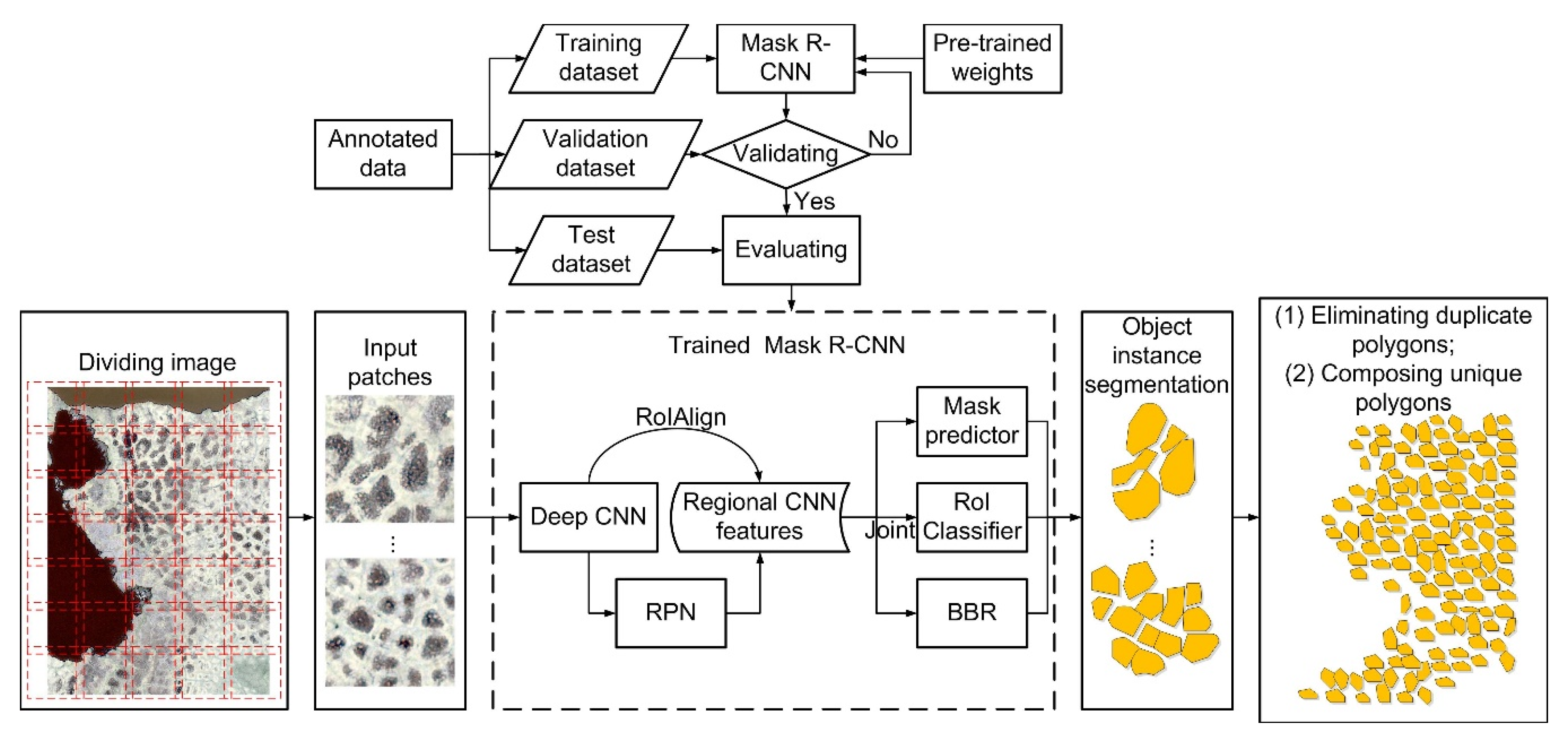

2.5. Experimental Design

- (C1) We applied the Mask R-CNN model trained only on very high spatial resolution (VHSR) fixed-wing aircraft imagery from Zhang et al. [17] to ice-wedge polygon (IWP) mapping of a high-resolution satellite image;

- (C2) We applied the Mask R-CNN model trained only on high-resolution satellite imagery to IWP mapping of another high-resolution satellite image;

- (C3) We re-trained the model from Zhang et al. [17] with high-resolution satellite imagery and applied the model to another high-resolution satellite image;

- (C4) We applied the Mask R-CNN model trained only on high-resolution satellite imagery to IWP mapping of a 3-band UAV image;

- (C5) We applied the Mask R-CNN model trained only on VHSR fixed-wing aircraft imagery from Zhang et al. [17] to IWP mapping of the 3-band UAV image; and

- (C6) We re-trained the Mask R-CNN model already trained on high-resolution satellite imagery with VHSR fixed-wing aircraft imagery from Zhang et al. [17] and applied the model to the 3-band UAV image.
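The six cases above differ only in which imagery the model sees first, whether it is then re-trained on a second source, and which image it is finally applied to. The following is a minimal sketch of that design as plain data, not the authors' code; the class name `CaseStudy`, its field names, and the helper functions are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

VHSR = "VHSR fixed-wing aircraft imagery"
SATELLITE = "high-resolution satellite imagery"


@dataclass(frozen=True)
class CaseStudy:
    """One row of the experimental design (hypothetical structure)."""
    name: str
    first_stage: str             # imagery the model is trained on first
    second_stage: Optional[str]  # imagery used for re-training, None if not re-trained
    target: str                  # image the trained model is applied to


CASES = [
    CaseStudy("C1", VHSR, None, "satellite image"),
    CaseStudy("C2", SATELLITE, None, "satellite image"),
    CaseStudy("C3", VHSR, SATELLITE, "satellite image"),
    CaseStudy("C4", SATELLITE, None, "UAV image"),
    CaseStudy("C5", VHSR, None, "UAV image"),
    CaseStudy("C6", SATELLITE, VHSR, "UAV image"),
]


def retrained_cases(cases: List[CaseStudy]) -> List[str]:
    """Names of the cases that include a re-training stage."""
    return [c.name for c in cases if c.second_stage is not None]


def cases_for_target(cases: List[CaseStudy], target: str) -> List[str]:
    """Names of the cases whose model is applied to the given target image."""
    return [c.name for c in cases if c.target == target]


if __name__ == "__main__":
    print("Re-trained:", retrained_cases(CASES))
    print("Applied to UAV image:", cases_for_target(CASES, "UAV image"))
```

Laying the cases out this way makes the pairing explicit: each target image is tested with a VHSR-only model, a satellite-only model, and a model that was re-trained across the two sources.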

2.6. Quantitative Assessment

2.7. Expert-Based Qualitative Assessment

2.8. Workflow and Implementation

3. Case Studies and Results

3.1. Quantitative Assessment Based on Model Testing Datasets

3.2. Quantitative Assessment Based on Case Testing Datasets

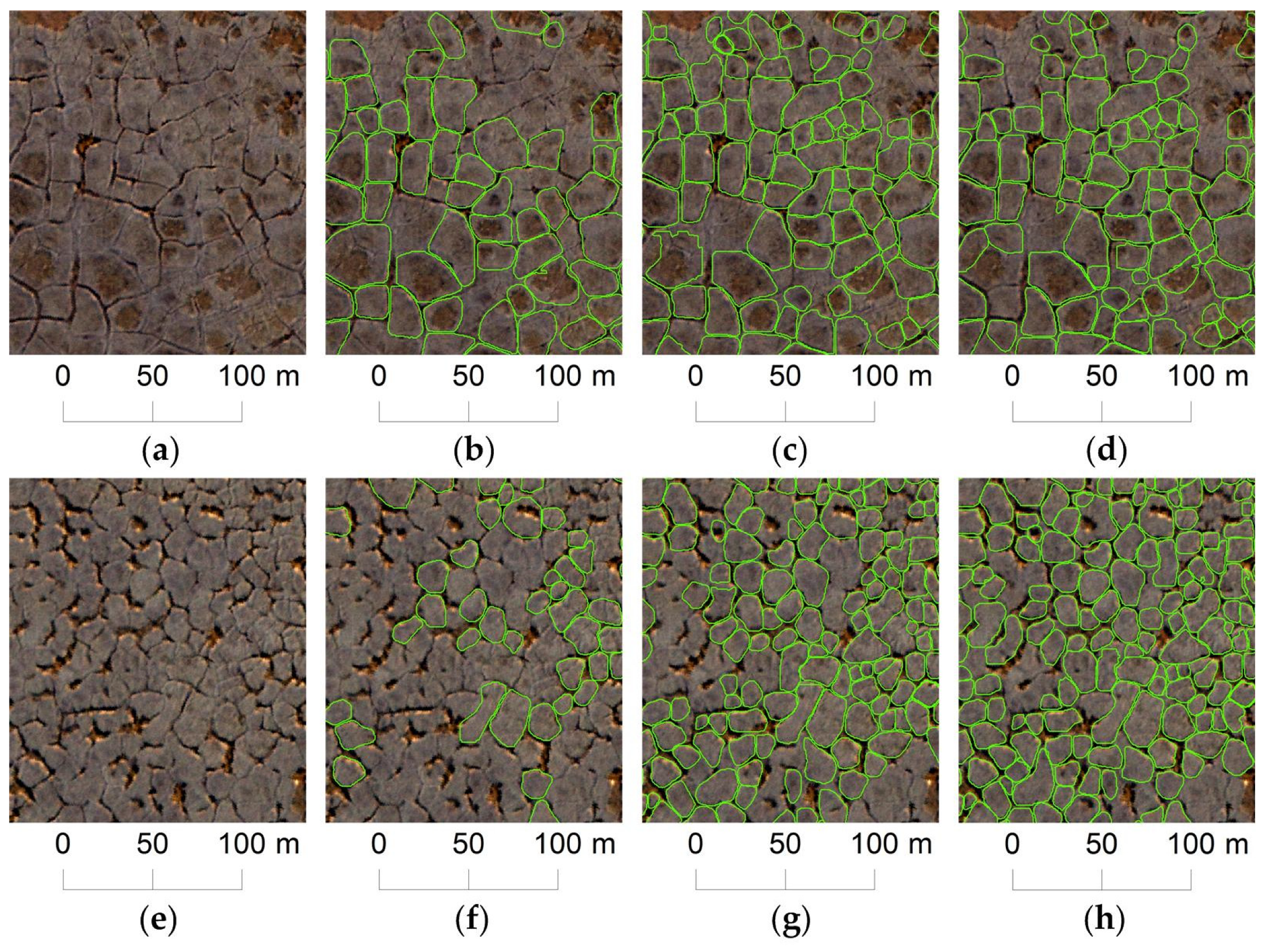

3.2.1. C1: A Mask R-CNN Model Trained Only on VHSR Fixed-Wing Aircraft Imagery Was Applied to a High-Resolution Satellite Image

3.2.2. C2: A Mask R-CNN Model Trained Only on High-Resolution Satellite Imagery Was Applied to Another High-Resolution Satellite Image

3.2.3. C3: A Mask R-CNN Model Trained on VHSR Fixed-Wing Aircraft Imagery and Re-Trained on High-Resolution Satellite Imagery Was Applied to Another High-Resolution Satellite Image

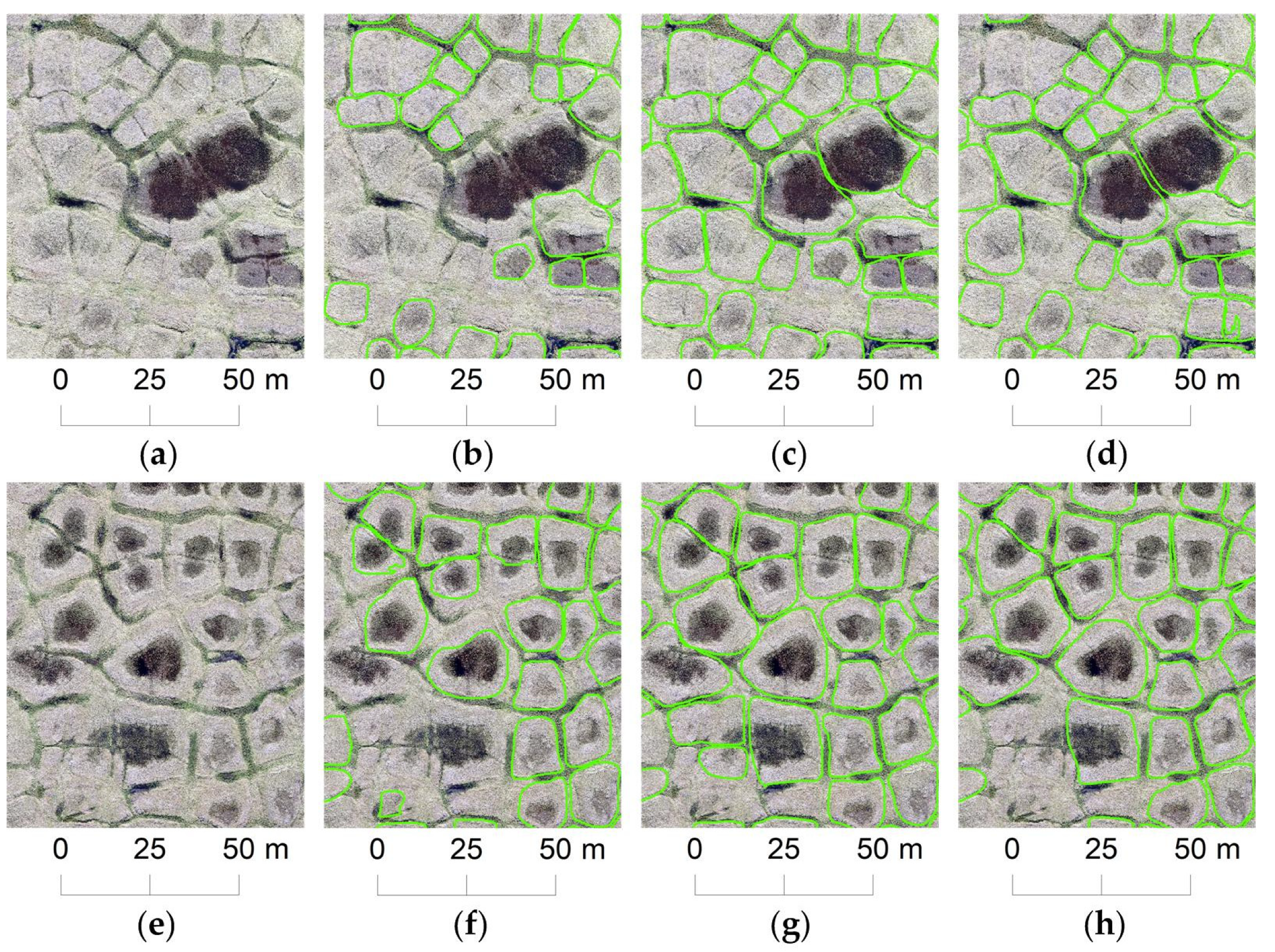

3.2.4. C4: A Mask R-CNN Model Trained Only on High-Resolution Satellite Imagery Was Applied to a 3-Band UAV Image

3.2.5. C5: A Mask R-CNN Model Trained Only on VHSR Fixed-Wing Aircraft Imagery Was Applied to a 3-Band UAV Image

3.2.6. C6: A Mask R-CNN Model Trained on High-Resolution Satellite Imagery and Re-Trained on VHSR Fixed-Wing Aircraft Imagery Was Applied to a 3-Band UAV Image

4. Discussion

4.1. Effect of Spatial Resolution of Training Data on Mask R-CNN Performance

4.2. Effect of Spectral Bands Used in Training Data on Mask R-CNN Performance

4.3. Limitations of the Mask R-CNN Model

4.4. Limitations of the Annotation Data

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Muller, S.W. Permafrost or Permanently Frozen Ground and Related Engineering Problems; J. W. Edwards Inc.: Ann Arbor, MI, USA, 1947.

- van Everdingen, R.O. Multi-Language Glossary of Permafrost and Related Ground-Ice; Arctic Institute of North America in University of Calgary: Calgary, AB, Canada, 1998.

- Jorgenson, M.T.; Kanevskiy, M.; Shur, Y.; Moskalenko, N.; Brown, D.R.N.; Wickland, K.; Striegl, R.; Koch, J. Role of ground ice dynamics and ecological feedbacks in recent ice wedge degradation and stabilization. J. Geophys. Res. Earth Surf. 2015, 120, 2280–2297.

- Leffingwell, E.D.K. Ground-Ice Wedges: The Dominant Form of Ground-Ice on the North Coast of Alaska. J. Geol. 1915, 23, 635–654.

- Lachenbruch, A.H. Mechanics of Thermal Contraction Cracks and Ice-Wedge Polygons in Permafrost; Geological Society of America: Boulder, CO, USA, 1962; Volume 70.

- Dostovalov, B.N. Polygonal Systems of Ice Wedges and Conditions of Their Development. In Proceedings of the Permafrost International Conference, Lafayette, IN, USA, 11–15 November 1963.

- Mackay, J.R. The World of Underground Ice. Ann. Assoc. Am. Geogr. 1972, 62, 1–22.

- Kanevskiy, M.Z.; Shur, Y.; Jorgenson, M.T.; Ping, C.-L.; Michaelson, G.J.; Fortier, D.; Stephani, E.; Dillon, M.; Tumskoy, V. Ground ice in the upper permafrost of the Beaufort Sea coast of Alaska. Cold Reg. Sci. Technol. 2013, 85, 56–70.

- Jorgenson, M.T.; Shur, Y.L.; Pullman, E.R. Abrupt increase in permafrost degradation in Arctic Alaska. Geophys. Res. Lett. 2006, 33.

- Liljedahl, A.K.; Boike, J.; Daanen, R.P.; Fedorov, A.N.; Frost, G.V.; Grosse, G.; Hinzman, L.D.; Iijima, Y.; Jorgenson, J.C.; Matveyeva, N.; et al. Pan-Arctic ice-wedge degradation in warming permafrost and its influence on tundra hydrology. Nat. Geosci. 2016, 9, 312–318.

- Fraser, R.H.; Kokelj, S.V.; Lantz, T.C.; McFarlane-Winchester, M.; Olthof, I.; Lacelle, D. Climate Sensitivity of High Arctic Permafrost Terrain Demonstrated by Widespread Ice-Wedge Thermokarst on Banks Island. Remote Sens. 2018, 10, 954.

- Frost, G.V.; Epstein, H.E.; Walker, D.A.; Matyshak, G.; Ermokhina, K. Seasonal and Long-Term Changes to Active-Layer Temperatures after Tall Shrubland Expansion and Succession in Arctic Tundra. Ecosystems 2018, 21, 507–520.

- Jones, B.M.; Grosse, G.; Arp, C.D.; Miller, E.; Liu, L.; Hayes, D.J.; Larsen, C.F. Recent Arctic tundra fire initiates widespread thermokarst development. Sci. Rep. 2015, 5, 15865.

- Raynolds, M.K.; Walker, D.A.; Ambrosius, K.J.; Brown, J.; Everett, K.R.; Kanevskiy, M.; Kofinas, G.P.; Romanovsky, V.E.; Shur, Y.; Webber, P.J. Cumulative geoecological effects of 62 years of infrastructure and climate change in ice-rich permafrost landscapes, Prudhoe Bay Oilfield, Alaska. Glob. Chang. Biol. 2014, 20, 1211–1224.

- Jorgenson, M.T.; Shur, Y.L.; Osterkamp, T.E. Thermokarst in Alaska. In Proceedings of the Ninth International Conference on Permafrost, Fairbanks, AK, USA, 29 June–3 July 2008; University of Alaska-Fairbanks: Fairbanks, AK, USA; pp. 121–122. Available online: https://www.researchgate.net/profile/Sergey_Marchenko3/publication/334524021_Permafrost_Characteristics_of_Alaska_Map/links/5d2f7672a6fdcc2462e86fae/Permafrost-Characteristics-of-Alaska-Map.pdf (accessed on 1 May 2019).

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202, 18–27.

- Zhang, W.; Witharana, C.; Liljedahl, A.K.; Kanevskiy, M. Deep Convolutional Neural Networks for Automated Characterization of Arctic Ice-Wedge Polygons in Very High Spatial Resolution Aerial Imagery. Remote Sens. 2018, 10, 1487.

- Abolt, C.J.; Young, M.H.; Atchley, A.L.; Wilson, C.J. Brief communication: Rapid machine-learning-based extraction and measurement of ice wedge polygons in high-resolution digital elevation models. Cryosphere 2019, 13, 237–245.

- Lara, M.J.; Chipman, M.L.; Hu, F.S. Automated detection of thermoerosion in permafrost ecosystems using temporally dense Landsat image stacks. Remote Sens. Environ. 2019, 221, 462–473.

- Cooley, S.W.; Smith, L.C.; Ryan, J.C.; Pitcher, L.H.; Pavelsky, T.M. Arctic-Boreal Lake Dynamics Revealed Using CubeSat Imagery. Geophys. Res. Lett. 2019, 46, 2111–2120.

- Nitze, I.; Grosse, G.; Jones, B.M.; Arp, C.D.; Ulrich, M.; Fedorov, A.; Veremeeva, A. Landsat-Based Trend Analysis of Lake Dynamics across Northern Permafrost Regions. Remote Sens. 2017, 9, 640.

- Nitze, I.; Grosse, G.; Jones, B.M.; Romanovsky, V.E.; Boike, J. Remote sensing quantifies widespread abundance of permafrost region disturbances across the Arctic and Subarctic. Nat. Commun. 2018, 9, 5423–5434.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Diao, W.; Sun, X.; Dou, F.; Yan, M.; Wang, H.; Fu, K. Object recognition in remote sensing images using sparse deep belief networks. Remote Sens. Lett. 2015, 6, 745–754.

- Guan, H.; Yu, Y.; Ji, Z.; Li, J.; Zhang, Q. Deep learning-based tree classification using mobile LiDAR data. Remote Sens. Lett. 2015, 6, 864–873.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2961–2969.

- Bolya, D.; Zhou, C.; Xiao, F.; Lee, Y.J. YOLACT++: Better Real-time Instance Segmentation. arXiv 2019, arXiv:1912.06218. Available online: https://arxiv.org/abs/1912.06218 (accessed on 1 March 2020).

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755. ISBN 978-3-319-10601-4.

- Dai, J.; He, K.; Sun, J. Instance-Aware Semantic Segmentation via Multi-Task Network Cascades. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 3150–3158. Available online: https://www.cv-foundation.org/openaccess/content_cvpr_2016/html/Dai_Instance-Aware_Semantic_Segmentation_CVPR_2016_paper.html (accessed on 1 March 2020).

- Li, Y.; Qi, H.; Dai, J.; Ji, X.; Wei, Y. Fully Convolutional Instance-Aware Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 2359–2367. Available online: http://openaccess.thecvf.com/content_cvpr_2017/html/Li_Fully_Convolutional_Instance-Aware_CVPR_2017_paper.html (accessed on 5 March 2020).

- Dutta, A.; Zisserman, A. The VIA Annotation Software for Images, Audio and Video. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; ACM: New York, NY, USA, 2019; pp. 2276–2279.

- Huang, L.; Luo, J.; Lin, Z.; Niu, F.; Liu, L. Using deep learning to map retrogressive thaw slumps in the Beiluhe region (Tibetan Plateau) from CubeSat images. Remote Sens. Environ. 2020, 237, 111534.

- Chen, Z.; Pasher, J.; Duffe, J.; Behnamian, A. Mapping Arctic Coastal Ecosystems with High Resolution Optical Satellite Imagery Using a Hybrid Classification Approach. Can. J. Remote Sens. 2017, 43, 513–527.

- Abdulla, W. Mask R-CNN for Object Detection and Instance Segmentation on Keras and Tensorflow. GitHub Repos. 2017. Available online: https://github.com/matterport/Mask_RCNN (accessed on 1 November 2018).

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 8759–8768. Available online: http://openaccess.thecvf.com/content_cvpr_2018/html/Liu_Path_Aggregation_Network_CVPR_2018_paper.html (accessed on 5 March 2020).

- Huang, Z.; Huang, L.; Gong, Y.; Huang, C.; Wang, X. Mask Scoring R-CNN. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 6409–6418. Available online: http://openaccess.thecvf.com/content_CVPR_2019/html/Huang_Mask_Scoring_R-CNN_CVPR_2019_paper.html (accessed on 5 March 2020).

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: High Quality Object Detection and Instance Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019.

- Chen, K.; Ouyang, W.; Loy, C.C.; Lin, D.; Pang, J.; Wang, J.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; et al. Hybrid Task Cascade for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 4969–4978. Available online: http://openaccess.thecvf.com/content_CVPR_2019/html/Chen_Hybrid_Task_Cascade_for_Instance_Segmentation_CVPR_2019_paper.html (accessed on 5 March 2020).

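| Category | Sensor Platform | Image ID | Acquisition Date | Spatial Resolution | Spectral Bands Used | Area (sq km) | Purpose | Number of Annotated IWPs |
|---|---|---|---|---|---|---|---|---|
| Imagery Data for Annotation | Fixed-wing Aircraft | n/a | 09/2013 | 0.15 × 0.15 m | near-infrared, green, and blue | 42 | Training | 6022 |
| Imagery Data for Annotation | Fixed-wing Aircraft | n/a | 09/2013 | 0.15 × 0.15 m | near-infrared, green, and blue | 42 | Validation | 668 |
| Imagery Data for Annotation | Fixed-wing Aircraft | n/a | 09/2013 | 0.15 × 0.15 m | near-infrared, green, and blue | 42 | Model Testing | 798 |
| Imagery Data for Annotation | WorldView-2 Satellite | 10300100065AFE00 | 07/29/2010 | 0.8 × 0.66 m | near-infrared, green, and blue | 535 | Training | 25,498 |
| Imagery Data for Annotation | WorldView-2 Satellite | 10300100065AFE00 | 07/29/2010 | 0.8 × 0.66 m | near-infrared, green, and blue | 535 | Validation | 3470 |
| Imagery Data for Annotation | WorldView-2 Satellite | 10300100065AFE00 | 07/29/2010 | 0.8 × 0.66 m | near-infrared, green, and blue | 535 | Model Testing | 3399 |
| Imagery Data for Case Studies | WorldView-2 Satellite | 10300100468D9100 | 07/07/2015 | 0.48 × 0.49 m | near-infrared, green, and blue | 272 | Case Testing | 760 |
| Imagery Data for Case Studies | DJI Phantom 4 UAV | n/a | 07/24/2018 | 0.02 × 0.02 m | red, green, and blue | 0.32 | Case Testing | 128 |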

| Case Study | Pretrained Weights Dataset | Retrained Weights Dataset | Target Imagery | Questions Addressed |
|---|---|---|---|---|
| C1 | None | VHSR fixed-wing aircraft imagery | WorldView-2 imagery | Q1, Q2 |
| C2 | None | WorldView-2 imagery | WorldView-2 imagery | Q1, Q3 |
| C3 | VHSR fixed-wing aircraft imagery | WorldView-2 imagery | WorldView-2 imagery | Q1, Q3 |
| C4 | None | WorldView-2 imagery | UAV photo | Q1, Q2 |
| C5 | None | VHSR fixed-wing aircraft imagery | UAV photo | Q1, Q2 |
| C6 | WorldView-2 imagery | VHSR fixed-wing aircraft imagery | UAV photo | Q1, Q3 |

| Case Study | Number of Epochs in Pre-Training | Number of Epochs in Re-Training |
|---|---|---|
| C1 | None | 8 |
| C2 | None | 70 |
| C3 | 8 | 55 |
| C4 | None | 70 |
| C5 | None | 8 |
| C6 | 70 | 3 |

| Case Study | Category | IoU | TP | FP | FN | Precision | Recall | F1 | AP |
|---|---|---|---|---|---|---|---|---|---|
| C1 and C5 | Detection | 0.5 | 596 | 132 | 202 | 0.82 | 0.75 | 78% | 0.73 |
| C1 and C5 | Detection | 0.75 | 519 | 209 | 279 | 0.71 | 0.65 | 68% | 0.60 |
| C1 and C5 | Delineation | 0.5 | 591 | 137 | 207 | 0.81 | 0.74 | 77% | 0.72 |
| C1 and C5 | Delineation | 0.75 | 591 | 137 | 207 | 0.81 | 0.74 | 77% | 0.72 |
| C2 and C4 | Detection | 0.5 | 2151 | 77 | 1248 | 0.97 | 0.63 | 76% | 0.66 |
| C2 and C4 | Detection | 0.75 | 2060 | 168 | 1339 | 0.92 | 0.61 | 73% | 0.63 |
| C2 and C4 | Delineation | 0.5 | 2151 | 77 | 1248 | 0.97 | 0.63 | 76% | 0.66 |
| C2 and C4 | Delineation | 0.75 | 2151 | 77 | 1248 | 0.97 | 0.63 | 76% | 0.66 |
| C3 | Detection | 0.5 | 2131 | 82 | 1268 | 0.96 | 0.63 | 76% | 0.66 |
| C3 | Detection | 0.75 | 2006 | 207 | 1393 | 0.91 | 0.59 | 71% | 0.61 |
| C3 | Delineation | 0.5 | 2130 | 83 | 1269 | 0.96 | 0.63 | 76% | 0.66 |
| C3 | Delineation | 0.75 | 2130 | 83 | 1269 | 0.96 | 0.63 | 76% | 0.66 |
| C6 | Detection | 0.5 | 618 | 160 | 180 | 0.79 | 0.77 | 78% | 0.73 |
| C6 | Detection | 0.75 | 534 | 244 | 264 | 0.69 | 0.67 | 68% | 0.61 |
| C6 | Delineation | 0.5 | 617 | 161 | 181 | 0.79 | 0.77 | 78% | 0.73 |
| C6 | Delineation | 0.75 | 617 | 161 | 181 | 0.79 | 0.77 | 78% | 0.73 |
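The IoU column in the table above gives the overlap threshold (0.5 or 0.75) at which a prediction counts as a true positive. As a minimal sketch, assuming axis-aligned bounding boxes given as `(x_min, y_min, x_max, y_max)`, the overlap test could look like the following. The function names `box_iou` and `is_true_positive` are hypothetical, and the same intersection-over-union ratio would apply to mask pixels rather than box areas when assessing delineation.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def box_area(box: Box) -> float:
    """Area of an axis-aligned box; zero if the box is degenerate."""
    width = max(0.0, box[2] - box[0])
    height = max(0.0, box[3] - box[1])
    return width * height


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Width and height of the overlapping rectangle (zero if disjoint).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    union = box_area(a) + box_area(b) - intersection
    return intersection / union if union > 0 else 0.0


def is_true_positive(predicted: Box, reference: Box, threshold: float) -> bool:
    """A prediction matches a reference object when IoU reaches the threshold."""
    return box_iou(predicted, reference) >= threshold


if __name__ == "__main__":
    reference = (0.0, 0.0, 10.0, 10.0)
    predicted = (2.0, 0.0, 12.0, 10.0)  # shifted 2 units to the right
    print(box_iou(predicted, reference))                 # intersection 80, union 120
    print(is_true_positive(predicted, reference, 0.5))   # passes the looser threshold
    print(is_true_positive(predicted, reference, 0.75))  # fails the stricter threshold
```

The shifted box in the example illustrates why counts can drop between the two thresholds: the same prediction is a true positive at 0.5 but becomes a false positive (and its reference object a false negative) at 0.75.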

| Case Study | Category | IoU | TP | FP | FN | Precision | Recall | F1 | AP |
|---|---|---|---|---|---|---|---|---|---|
| C1 | Detection | 0.5 | 300 | 43 | 460 | 0.87 | 0.39 | 54% | 0.34 |
| C1 | Detection | 0.75 | 243 | 100 | 517 | 0.71 | 0.32 | 44% | 0.25 |
| C1 | Delineation | 0.5 | 298 | 45 | 462 | 0.87 | 0.39 | 54% | 0.34 |
| C1 | Delineation | 0.75 | 298 | 45 | 462 | 0.87 | 0.39 | 54% | 0.34 |
| C2 | Detection | 0.5 | 583 | 269 | 177 | 0.68 | 0.77 | 72% | 0.54 |
| C2 | Detection | 0.75 | 480 | 372 | 280 | 0.56 | 0.63 | 60% | 0.41 |
| C2 | Delineation | 0.5 | 587 | 265 | 173 | 0.69 | 0.77 | 73% | 0.55 |
| C2 | Delineation | 0.75 | 587 | 265 | 173 | 0.69 | 0.77 | 73% | 0.55 |
| C3 | Detection | 0.5 | 602 | 307 | 158 | 0.66 | 0.79 | 72% | 0.54 |
| C3 | Detection | 0.75 | 507 | 402 | 253 | 0.56 | 0.67 | 61% | 0.42 |
| C3 | Delineation | 0.5 | 601 | 308 | 159 | 0.66 | 0.79 | 72% | 0.54 |
| C3 | Delineation | 0.75 | 601 | 308 | 159 | 0.66 | 0.79 | 72% | 0.54 |
| C4 | Detection | 0.5 | 70 | 30 | 58 | 0.70 | 0.55 | 61% | 0.45 |
| C4 | Detection | 0.75 | 51 | 49 | 77 | 0.51 | 0.40 | 45% | 0.26 |
| C4 | Delineation | 0.5 | 69 | 31 | 59 | 0.69 | 0.54 | 61% | 0.44 |
| C4 | Delineation | 0.75 | 69 | 31 | 59 | 0.69 | 0.54 | 61% | 0.44 |
| C5 | Detection | 0.5 | 71 | 27 | 57 | 0.72 | 0.55 | 63% | 0.49 |
| C5 | Detection | 0.75 | 61 | 37 | 67 | 0.62 | 0.48 | 54% | 0.40 |
| C5 | Delineation | 0.5 | 71 | 27 | 57 | 0.72 | 0.55 | 63% | 0.49 |
| C5 | Delineation | 0.75 | 71 | 27 | 57 | 0.72 | 0.55 | 63% | 0.49 |
| C6 | Detection | 0.5 | 87 | 34 | 41 | 0.72 | 0.68 | 70% | 0.60 |
| C6 | Detection | 0.75 | 72 | 49 | 56 | 0.60 | 0.56 | 58% | 0.48 |
| C6 | Delineation | 0.5 | 85 | 36 | 43 | 0.70 | 0.66 | 68% | 0.58 |
| C6 | Delineation | 0.75 | 85 | 36 | 43 | 0.70 | 0.66 | 68% | 0.58 |
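The Precision, Recall, and F1 columns in the table above can be recomputed from the TP, FP, and FN counts. The sketch below assumes the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN), F1 as their harmonic mean), which reproduce the tabulated values for the row checked here; the function name `detection_metrics` is hypothetical, and AP is not covered because it depends on per-prediction confidence scores that the table does not list.

```python
from typing import Tuple


def detection_metrics(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Return (precision, recall, F1) from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # C1, detection, IoU 0.5 in the case-testing table: TP = 300, FP = 43, FN = 460.
    precision, recall, f1 = detection_metrics(300, 43, 460)
    print(f"precision={precision:.2f} recall={recall:.2f} F1={f1:.0%}")
```

Note that TP + FN equals the number of annotated reference polygons for the target image (300 + 460 = 760 for the satellite case-testing image), which is a quick consistency check on any row.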

| | Average Grades of Detection | Average Grades of Delineation |
|---|---|---|
| Expert 1 | 3.7 | 4.6 |
| Expert 2 | 3.5 | 4.3 |
| Expert 3 | 2.9 | 4.6 |
| Expert 4 | 3.1 | 4.2 |
| Expert 5 | 3 | 4.6 |
| Expert 6 | 3.8 | 4.4 |
| Average grades | 3.3 (good) | 4.5 (excellent) |

| | Average Grades of Detection | Average Grades of Delineation |
|---|---|---|
| Expert 1 | 4 | 4.5 |
| Expert 2 | 4.3 | 3 |
| Expert 3 | 4.1 | 4.7 |
| Expert 4 | 4.7 | 4.4 |
| Expert 5 | 4 | 4 |
| Expert 6 | 4.3 | 4.7 |
| Average grades | 4.2 (excellent) | 4.2 (excellent) |
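The "Average grades" rows in the two expert tables above are consistent with a plain arithmetic mean of the six expert grades, rounded to one decimal place. The sketch below checks this, assuming half-up rounding (an assumption; the rounding rule is not stated here) and using `Decimal` so that a mean of exactly 4.45 rounds to 4.5 rather than depending on binary floating-point representation. The function name `average_grade` is hypothetical.

```python
from decimal import Decimal, ROUND_HALF_UP
from typing import Sequence


def average_grade(grades: Sequence[str]) -> Decimal:
    """Mean of grades given as decimal strings, rounded half-up to one decimal place."""
    total = sum((Decimal(g) for g in grades), Decimal(0))
    mean = total / Decimal(len(grades))
    return mean.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # Grades copied from the first expert table (Experts 1 to 6).
    first_detection = ["3.7", "3.5", "2.9", "3.1", "3", "3.8"]
    first_delineation = ["4.6", "4.3", "4.6", "4.2", "4.6", "4.4"]
    # Grades copied from the second expert table.
    second_detection = ["4", "4.3", "4.1", "4.7", "4", "4.3"]
    second_delineation = ["4.5", "3", "4.7", "4.4", "4", "4.7"]

    for label, grades in [
        ("first table, detection", first_detection),
        ("first table, delineation", first_delineation),
        ("second table, detection", second_detection),
        ("second table, delineation", second_delineation),
    ]:
        print(label, average_grade(grades))
```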

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, W.; Liljedahl, A.K.; Kanevskiy, M.; Epstein, H.E.; Jones, B.M.; Jorgenson, M.T.; Kent, K. Transferability of the Deep Learning Mask R-CNN Model for Automated Mapping of Ice-Wedge Polygons in High-Resolution Satellite and UAV Images. Remote Sens. 2020, 12, 1085. https://doi.org/10.3390/rs12071085
