PropNet-R: A Custom CNN Architecture for Quantitative Estimation of Propane Gas Concentration Based on Thermal Images for Sustainable Safety Monitoring

Abstract

1. Introduction

2. Materials and Methods

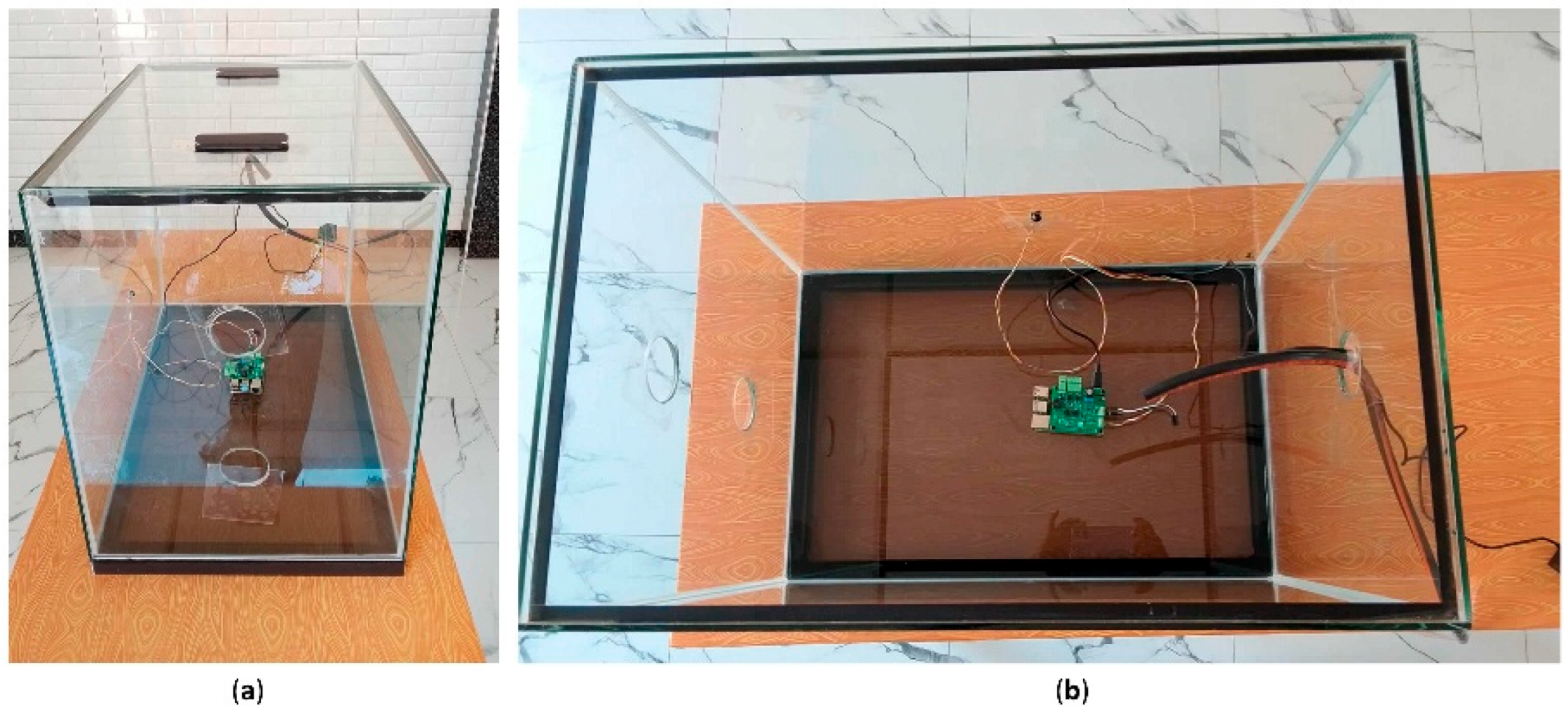

2.1. Hardware for Multi-Sensor Data Collection for Gas Leak Detection

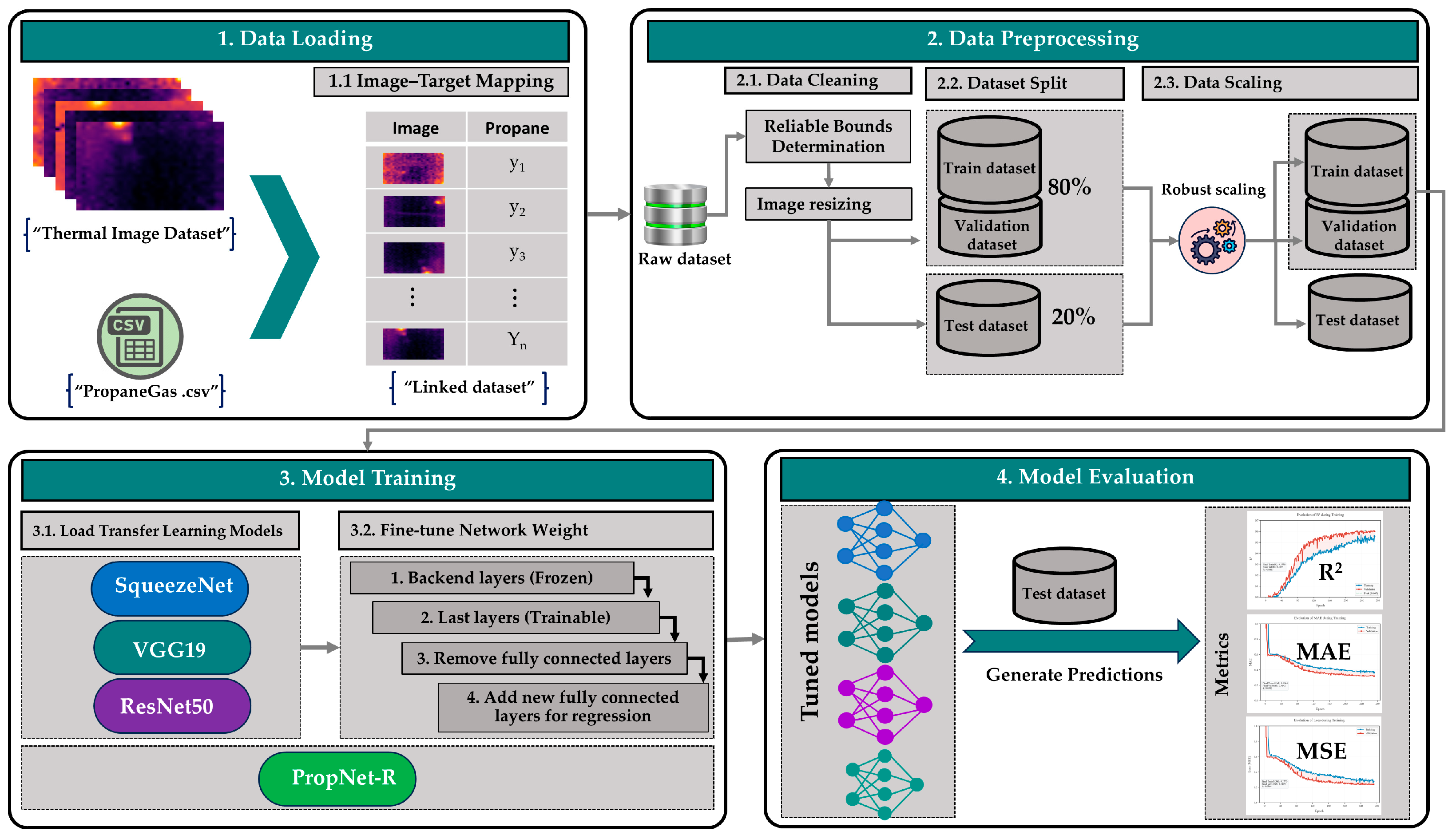

2.2. Framework for Quantitative Estimation of Propane Gas Concentration

2.2.1. Data Loading

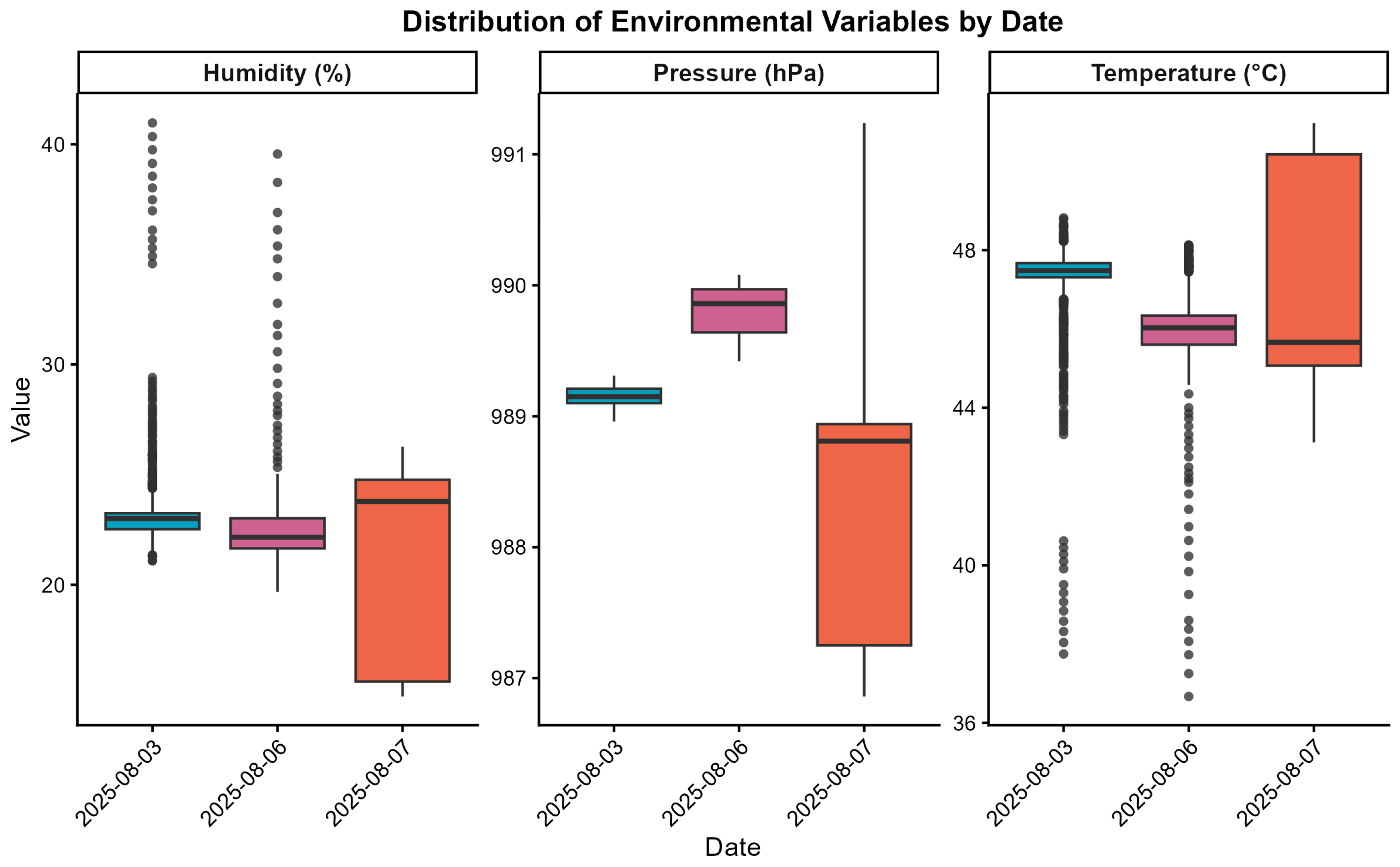

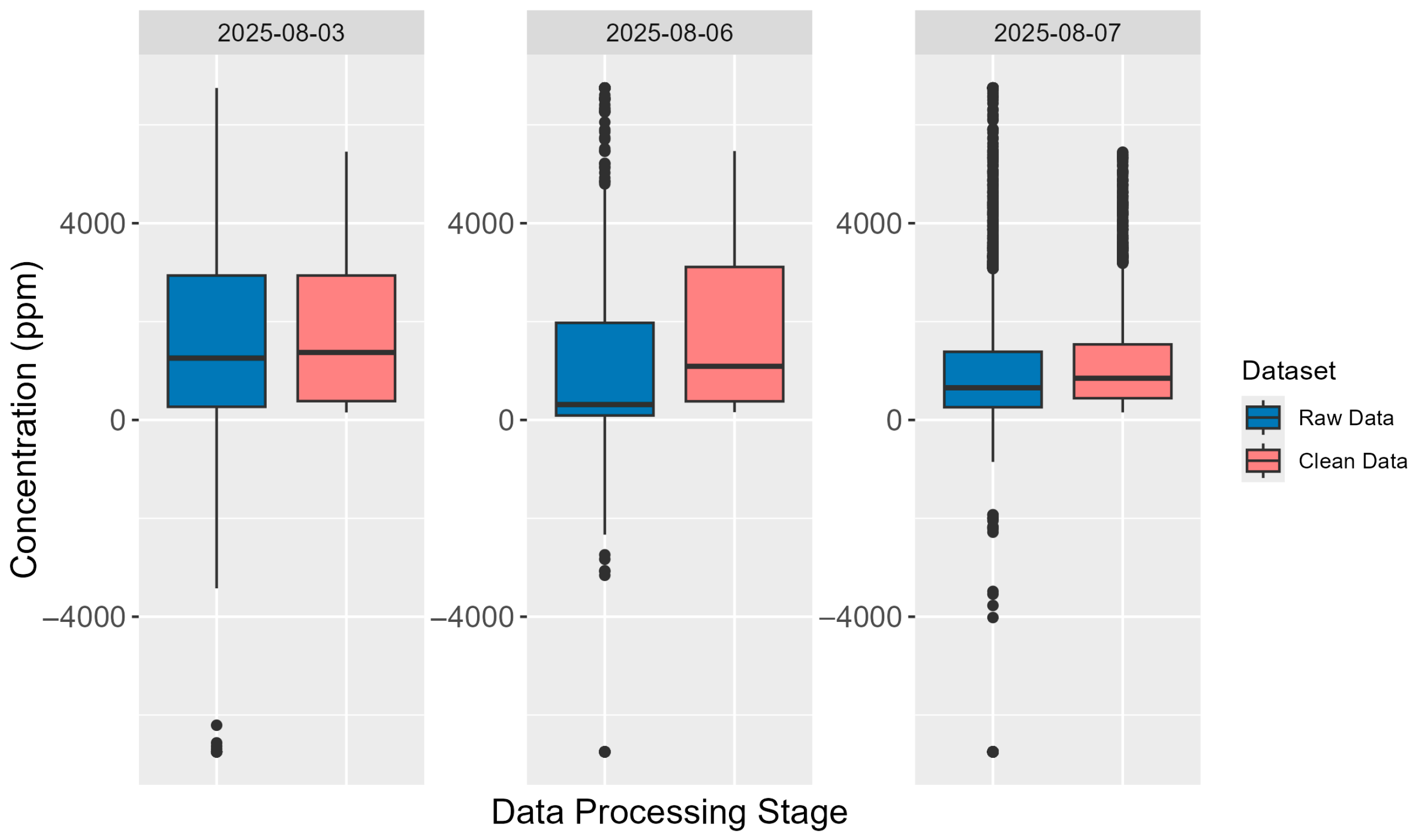

2.2.2. Data Preprocessing

Data Cleaning

Data Splitting

Data Scaling

2.2.3. Model Training

Load Transfer Learning Models

Fine-Tune Network Weight

- The SqueezeNet v1.1 implementation kept the initial convolutional layer frozen to preserve low-level feature extraction and fine-tuned all subsequent layers. After adaptation to the regression task, the final model contained 723,009 parameters, of which 721,217 were trainable and 1,792 remained frozen. Fine-tuning focused on the last ten layers, corresponding to Fire modules 2 through 9 and the final convolutional layer.

- For VGG19, a conservative fine-tuning strategy was adopted to mitigate the early overfitting identified in preliminary experiments. The original architecture comprises approximately 143.7 million parameters. After adaptation to the regression problem, the final model was reduced to approximately 23.2 million parameters, of which approximately 5.6 million were trainable and 17.7 million were kept frozen. Training was restricted to the last convolutional layer of the feature extractor and the new fully connected head designed for the regression task.
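The SqueezeNet and VGG19 parameter counts quoted above can be re-derived by hand. The following is a minimal sketch in plain Python, not the authors' code. It assumes the standard SqueezeNet v1.1 Fire-module channel widths, a 1 × 1 convolutional regression head (inferred from its 513 parameters), and a 512 × 7 × 7 feature map feeding the VGG19 head; all helper names are illustrative.

```python
def conv2d_params(c_in, c_out, k):
    """Weights plus biases of a k x k Conv2d layer."""
    return c_in * c_out * k * k + c_out


def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def fire_params(c_in, squeeze, expand1x1, expand3x3):
    """A Fire module: 1x1 squeeze conv, then parallel 1x1 and 3x3 expand convs."""
    return (conv2d_params(c_in, squeeze, 1)
            + conv2d_params(squeeze, expand1x1, 1)
            + conv2d_params(squeeze, expand3x3, 3))


# Standard SqueezeNet v1.1 Fire widths: (c_in, squeeze, expand1x1, expand3x3).
FIRE_CONFIGS = [
    (64, 16, 64, 64), (128, 16, 64, 64),
    (128, 32, 128, 128), (256, 32, 128, 128),
    (256, 48, 192, 192), (384, 48, 192, 192),
    (384, 64, 256, 256), (512, 64, 256, 256),
]

# SqueezeNet: frozen first conv (3 -> 64, 3x3), trainable Fire modules and head.
squeezenet_frozen = conv2d_params(3, 64, 3)
squeezenet_head = conv2d_params(512, 1, 1)  # assumed 1x1 conv, 512 -> 1
squeezenet_trainable = sum(fire_params(*cfg) for cfg in FIRE_CONFIGS) + squeezenet_head

# VGG19: last conv (512 -> 512, 3x3) plus the two-layer regression head.
vgg19_trainable = (conv2d_params(512, 512, 3)
                   + linear_params(512 * 7 * 7, 128)
                   + linear_params(128, 1))
vgg19_total = 23_235_905  # total reported for the adapted model
vgg19_frozen = vgg19_total - vgg19_trainable

print(squeezenet_frozen, squeezenet_trainable)
print(vgg19_trainable, vgg19_frozen)
```

Under these assumptions the sketch reproduces the 1,792 frozen and 721,217 trainable SqueezeNet parameters, and about 5.6 million trainable and 17.7 million frozen VGG19 parameters.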

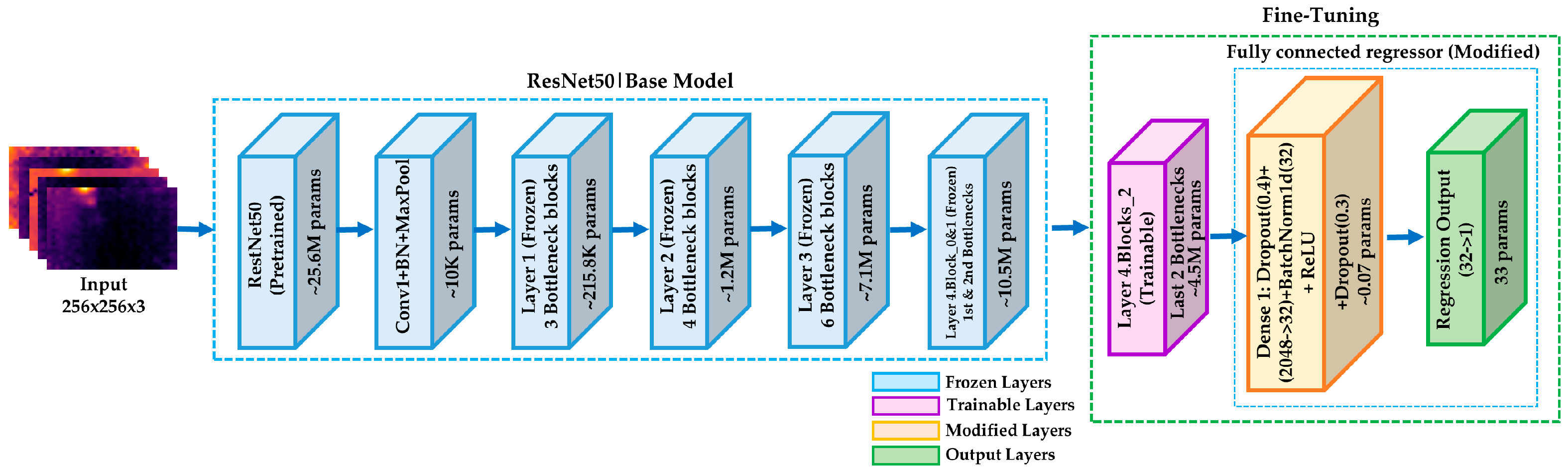

- The ResNet50 base architecture, comprising approximately 23.6 million parameters, was initially frozen in its entirety. The residual blocks were then gathered into a single list spanning the four main stages of the network, layer1 (3 blocks), layer2 (4 blocks), layer3 (6 blocks), and layer4 (3 blocks), for a total of 16 architectural components. From this structure, only the top of the model was enabled for training: the last block of layer4 (layer4.2) and the regression head. The model thus had approximately 4.5 million trainable parameters, while approximately 19.0 million remained frozen.
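The block bookkeeping and the trainable count for ResNet50 can be checked with a few lines of arithmetic. This is a sketch under stated assumptions rather than the authors' implementation: it uses the standard ResNet50 bottleneck layout (bias-free 1 × 1, 3 × 3, 1 × 1 convolutions, each followed by batch normalization) and a 2048 → 32 → 1 head with one BatchNorm1d layer; the helper names are illustrative.

```python
def conv_params(c_in, c_out, k):
    """Bias-free convolution, as used inside ResNet bottlenecks."""
    return c_in * c_out * k * k


def bn_params(channels):
    """Learnable scale and shift of a batch-normalization layer."""
    return 2 * channels


def linear_params(n_in, n_out):
    return n_in * n_out + n_out


# Unified list of residual blocks across the four stages.
blocks_per_stage = {"layer1": 3, "layer2": 4, "layer3": 6, "layer4": 3}
all_blocks = [f"{stage}.{i}" for stage, n in blocks_per_stage.items() for i in range(n)]
trainable_blocks = all_blocks[-1:]  # only the last block is unfrozen

# layer4.2 bottleneck: 2048 -> 512 (1x1) -> 512 (3x3) -> 2048 (1x1).
bottleneck = (conv_params(2048, 512, 1) + bn_params(512)
              + conv_params(512, 512, 3) + bn_params(512)
              + conv_params(512, 2048, 1) + bn_params(2048))

# Regression head: Linear(2048, 32) -> BatchNorm1d(32) -> Linear(32, 1).
head = linear_params(2048, 32) + bn_params(32) + linear_params(32, 1)

trainable = bottleneck + head
total = 23_573_697  # total reported for the adapted model
frozen = total - trainable

print(len(all_blocks), trainable_blocks, trainable, frozen)
```

With these assumptions the list holds 16 blocks, `layer4.2` is the only unfrozen one, and the trainable total is 4,528,257, leaving 19,045,440 frozen parameters.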

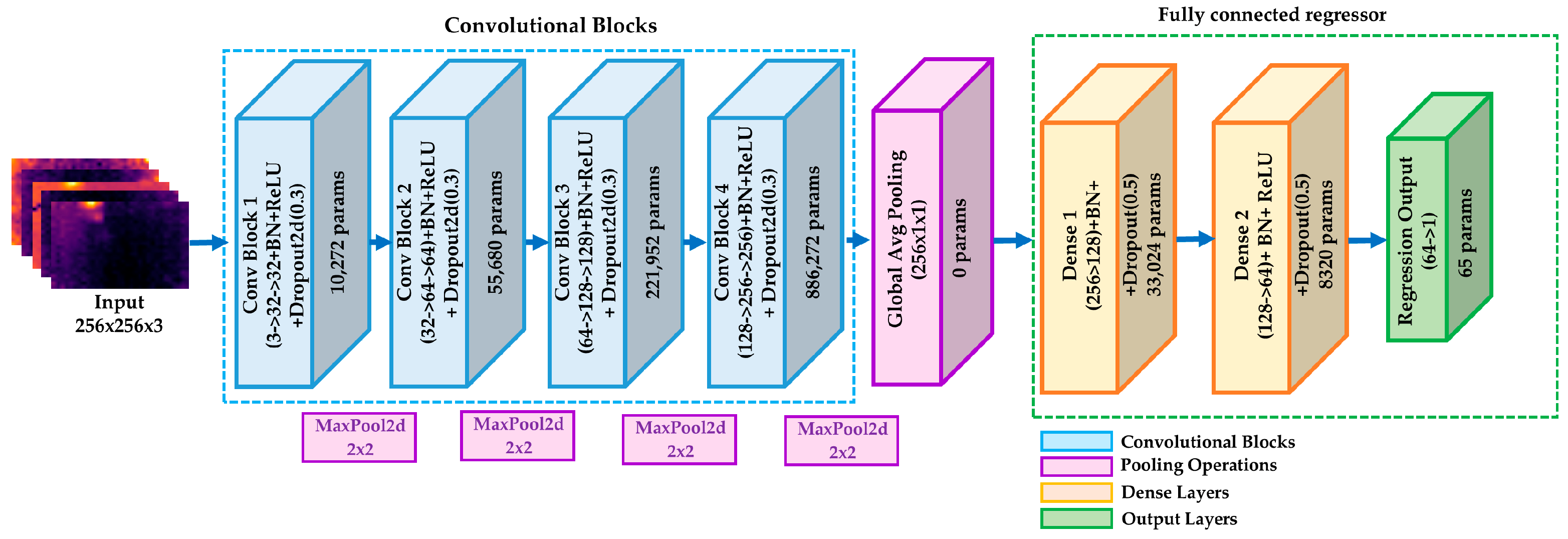

- The custom convolutional neural network architecture (PropNet-R) was designed to quantitatively estimate propane gas concentration from thermal images, predicting a single scalar value in ppm corresponding to the entire image, as recorded by the sensor at the time of capture. The network is organized into four progressive convolutional blocks that follow a systematic channel expansion pattern: 3→32→64→128→256.
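To make the 3→32→64→128→256 channel progression concrete, the sketch below counts the convolution parameters each block would contribute. The text above gives only the channel widths, so the kernel size (3 × 3), the use of bias terms, and a single convolution per block are assumptions made purely for illustration; the actual PropNet-R blocks may differ.

```python
CHANNELS = [3, 32, 64, 128, 256]  # progression stated in the text
KERNEL = 3                        # assumed kernel size, not given in the text


def conv2d_params(c_in, c_out, k):
    """Weights plus biases of a k x k Conv2d layer."""
    return c_in * c_out * k * k + c_out


# One assumed convolution per block, pairing consecutive channel widths.
per_block = [conv2d_params(c_in, c_out, KERNEL)
             for c_in, c_out in zip(CHANNELS, CHANNELS[1:])]

for i, (c_in, c_out) in enumerate(zip(CHANNELS, CHANNELS[1:]), start=1):
    print(f"block {i}: {c_in:>3} -> {c_out:>3} channels, {per_block[i - 1]:,} parameters")
print(f"sum over the four blocks: {sum(per_block):,}")
```

Each doubling of the channel width roughly quadruples the parameter count of the corresponding convolution, so under these assumptions the last block dominates the convolutional budget.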

2.2.4. Model Evaluation

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1

| Component | Model | Key Specifications | References |
|---|---|---|---|
| Processing Unit | Raspberry Pi 4 Model B | ARM Cortex-A72, 40 GPIO pins, Bluetooth 5.0, WiFi | [84] |
| Gas Sensor | TGS6810 | Propane/methane detection, linear response, low power | [85,86,87] |
| Thermal Camera | Adafruit MLX90640 | 32 × 24 IR array, −40 °C to 300 °C, 32 Hz max | [88] |
| Temperature, humidity, air pressure and air quality sensor | BME690 | Operating ranges: pressure 300 to 1100 hPa; humidity 0 to 100%; temperature −40 to 85 °C | [89] |
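The 32 × 24 array of the thermal camera means that each capture holds 768 temperature readings. As a small illustration, and assuming the camera driver delivers a frame as a flat, row-major list of 768 values (an assumption about the acquisition code, which is not described here), a frame can be rearranged into a 24-row by 32-column grid as follows. The function name and the synthetic frame are hypothetical.

```python
ROWS, COLS = 24, 32  # MLX90640 array: 32 pixels wide, 24 pixels high


def frame_to_grid(flat_frame):
    """Reshape a flat, row-major list of readings into ROWS lists of COLS values."""
    if len(flat_frame) != ROWS * COLS:
        raise ValueError(f"expected {ROWS * COLS} readings, got {len(flat_frame)}")
    return [flat_frame[r * COLS:(r + 1) * COLS] for r in range(ROWS)]


# Synthetic frame standing in for real sensor output (degrees Celsius).
fake_frame = [20.0 + 0.01 * i for i in range(ROWS * COLS)]
grid = frame_to_grid(fake_frame)
print(len(grid), len(grid[0]))
```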

Appendix A.2

Appendix A.3

| Model | Hyperparameter | Search Space | Optimal Value |
|---|---|---|---|
| SqueezeNet | lr | [0.0000: 0.002] step 0.0001 | 1.00 × 10⁻⁴ |
| | weight_decay | [0.00: 0.100] step 0.01 | 6.00 × 10⁻² |
| VGG19 | lr | [0.0000: 0.002] step 0.0001 | 3.00 × 10⁻⁴ |
| | weight_decay | [0.000: 0.050] step 0.005 | 2.50 × 10⁻² |
| ResNet50 | lr | [0.00000: 0.0002] step 0.00001 | 5.00 × 10⁻⁵ |
| | weight_decay | [0.000: 0.010] step 0.001 | 7.00 × 10⁻³ |
| PropNet-R | lr | [0.000: 0.020] step 0.001 | 1.00 × 10⁻³ |
| | weight_decay | [0.000: 0.010] step 0.001 | 1.00 × 10⁻³ |
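The search spaces in this table are stepped ranges, so each one corresponds to a finite set of candidate values. The sketch below enumerates those candidates with exact fractions and checks that every reported optimum lies on its grid. It assumes both endpoints are inclusive and makes no claim about which search procedure was used to pick the optimum; the data structure and function names are illustrative.

```python
from fractions import Fraction


def stepped_range(lo, hi, step):
    """All values lo, lo + step, ..., hi as exact fractions (endpoints assumed inclusive)."""
    lo, hi, step = Fraction(lo), Fraction(hi), Fraction(step)
    count = int((hi - lo) / step)
    return [lo + k * step for k in range(count + 1)]


# (model, hyperparameter): (low, high, step, reported optimum), copied from the table.
SEARCH_SPACE = {
    ("SqueezeNet", "lr"): ("0", "0.002", "0.0001", "0.0001"),
    ("SqueezeNet", "weight_decay"): ("0", "0.1", "0.01", "0.06"),
    ("VGG19", "lr"): ("0", "0.002", "0.0001", "0.0003"),
    ("VGG19", "weight_decay"): ("0", "0.05", "0.005", "0.025"),
    ("ResNet50", "lr"): ("0", "0.0002", "0.00001", "0.00005"),
    ("ResNet50", "weight_decay"): ("0", "0.01", "0.001", "0.007"),
    ("PropNet-R", "lr"): ("0", "0.02", "0.001", "0.001"),
    ("PropNet-R", "weight_decay"): ("0", "0.01", "0.001", "0.001"),
}

grid_sizes = {}
optimum_on_grid = {}
for key, (lo, hi, step, best) in SEARCH_SPACE.items():
    values = stepped_range(lo, hi, step)
    grid_sizes[key] = len(values)
    optimum_on_grid[key] = Fraction(best) in values

for key in SEARCH_SPACE:
    print(key, grid_sizes[key], optimum_on_grid[key])
```

Read this way, every learning-rate range contains 21 candidates and every weight-decay range contains 11, and all eight reported optima fall on their grids.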

Appendix A.4

References

1. Synák, F.; Čulík, K.; Rievaj, V.; Gaňa, J. Liquefied Petroleum Gas as an Alternative Fuel. Transp. Res. Procedia 2019, 40, 527–534.
2. Gould, C.F.; Urpelainen, J. LPG as a Clean Cooking Fuel: Adoption, Use, and Impact in Rural India. Energy Policy 2018, 122, 395–408.
3. Khanwilkar, S.; Gould, C.F.; DeFries, R.; Habib, B.; Urpelainen, J. Firewood, Forests, and Fringe Populations: Exploring the Inequitable Socioeconomic Dimensions of Liquified Petroleum Gas (LPG) Adoption in India. Energy Res. Soc. Sci. 2021, 75, 102012.
4. Zhou, L.; He, W.; Kong, Y.; Zhang, Z. Fuel Upgrading in the Kitchen: When Cognition of Biodiversity Conservation and Climate Change Facilitates Household Cooking Energy Transition in Nine Nature Reserves and Their Adjacent Regions. Energy 2025, 320, 135445.
5. Kim, D.S.; Chung, B.J.; Son, S.Y.; Lee, J. Developments of the In-Home Display Systems for Residential Energy Monitoring. In Proceedings of the 2013 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 11–14 January 2013; pp. 108–109.
6. Martins, J.; Brito, F.P. Alternative Fuels for Internal Combustion Engines. Energies 2020, 13, 4086.
7. Selim, M.Y.E.-S. Liquefied Petroleum Gas. In Alternative Fuels for Transportation; CRC Press: Boca Raton, FL, USA, 2016; pp. 203–226.
8. Hashem, G.T.; Al-Dawody, M.F.; Sarris, I.E. The Characteristics of Gasoline Engines with the Use of LPG: An Experimental and Numerical Study. Int. J. Thermofluids 2023, 18, 100316.
9. Woo, S.; Lee, J.; Lee, K. Experimental Study on the Performance of a Liquefied Petroleum Gas Engine According to the Air Fuel Ratio. Fuel 2021, 303, 121330.
10. Woo, S.; Lee, J.; Lee, K. Investigation of Injection Characteristics for Optimization of Liquefied Petroleum Gas Applied to a Direct-Injection Engine. Energy Rep. 2023, 9, 2130–2139.
11. Zahir, M.T.; Sagar, M.; Imam, Y.; Yadav, A.; Yadav, P. A Review on Gsm Based LPG Leakage Detection and Controller. In Proceedings of the 4th International Conference on Information Management & Machine Intelligence, Jaipur, India, 23–24 December 2022.
12. Bhagyashree, D.; Alkesh, G.; Sahil, M.; Ayush, T.; Abhishek, G.; Aman, N. LPG Gas Leakage Detection and Alert System. Int. J. Res. Appl. Sci. Eng. Technol. 2023, 11, 3302–3305.
13. Hu, Q.; Qian, X.; Shen, X.; Zhang, Q.; Ma, C.; Pang, L.; Liang, Y.; Feng, H.; Yuan, M. Investigations on Vapor Cloud Explosion Hazards and Critical Safe Reserves of LPG Tanks. J. Loss Prev. Process Ind. 2022, 80, 104904.
14. Terzioglu, L.; Iskender, H. Modeling the Consequences of Gas Leakage and Explosion Fire in Liquefied Petroleum Gas Storage Tank in Istanbul Technical University, Maslak Campus. Process. Saf. Prog. 2021, 40, 319–326.
15. Yang, J.; Liu, Z.; Xia, D.; Zhang, C.; Yang, Y. A Plasma Partial Oxidation Approach for Removal of Leaked LPG in Confined Spaces. Process. Saf. Environ. Prot. 2024, 188, 694–702.
16. Gabhane, L.R.; Kanidarapu, N.R. Environmental Risk Assessment Using Neural Network in Liquefied Petroleum Gas Terminal. Toxics 2023, 11, 348.
17. Karthi, S.P.; Sri, P.J.; Kumar, M.M.; Akash, S.; Lavanya, S.; Varshan, K.H. Arduino Based Crizon Gas Detector System. In Proceedings of the 2021 5th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, 2–4 December 2021; Volume 2021, pp. 383–387.
18. Duong, P.A.; Lee, J.; Kang, H. LPG Dispersion in Confined Spaces: A Comprehensive Review. Eng. Rep. 2025, 7, e70064.
19. Rahayu, N.; Tinggi, S.; Bengkulu, I.A. Early Warning Of Leaking Lpg Gas Through Short Message Service (Sms) And Loudspeaker Tool Using Arduino Uno. J. Appl. Eng. Technol. Sci. 2020, 1, 91–102.
20. Pawar, S.; Korde, M.; Bhujade, P.; Auti, P. Intelligent Life Saver System with Gas Leakage. Int. J. Adv. Res. Sci. Commun. Technol. 2023, 3, 244–248.
21. Ahmed, S.; Rahman, M.J.; Razzak, M.A. Design and Development of an IoT-Based LPG Gas Leakage Detector for Households and Industries. In Proceedings of the 2023 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 7–10 June 2023; pp. 762–767.
22. Munahar, S.; Purnomo, B.C.; Ferdiansyah, N.; Widodo, E.M.; Aman, M.; Rusdjijati, R.; Setiyo, M. Risk-Based Leak Analysis of an LPG Storage Tank: A Case Study. Indones. J. Sci. Technol. 2022, 7, 37–64.
23. Pateriya, P.K.; Munna, A.A.; Saha, A.; Biswas, H.; Ahammed, A.; Shah, A. IoT-Based LPG Gas Leakage Detection and Prevention System. SSRN Electron. J. 2021.
24. Kimura, A.; Kudou, J.; Yuzui, T.; Itoh, H.; Oka, H. Numerical Simulation on Diffusion Behavior of Leaked Fuel Gas in Machinary Room. J. JIME 2021, 56, 638–645.
25. Bariha, N.; Ojha, D.K.; Srivastava, V.C.; Mishra, I.M. Fire and Risk Analysis during Loading and Unloading Operation in Liquefied Petroleum Gas (LPG) Bottling Plant. J. Loss Prev. Process Ind. 2023, 81, 104928.
26. Naveen, P.; Teja, K.R.; Reddy, K.S.; Sam, S.M.; Kumar, M.D.; Saravanan, M. A Comprehensive Review on Gas Leakage Monitoring and Alerting System Using IoT Devices. In Proceedings of the 2022 International Conference on Computer, Power and Communications (ICCPC), Chennai, India, 14–16 December 2022; pp. 242–246.
27. CTIF. Informe Mundial de Estadísticas de Incendios n.° 29 con un análisis exhaustivo de las estadísticas de incendios de 2022 | CTIF—Asociación Internacional de Servicios de Bomberos Para una Ciudadanía Más Segura Gracias a la Capacitación de Bomberos. Available online: https://www.ctif.org/news/world-fire-statistics-report-no-29-comprehensive-analysis-fire-statistics-2022 (accessed on 27 August 2025).
28. OSINERGMIN. Informe de Resultados Consumo y Usos de Los Hidrocarburos Líquidos y GLP Encuesta Residencial de Consumo y Usos de Energía-ERCUE 2018; Osinergmin: Lima, Peru, 2020.
29. CGBVP. Estadística de Emergencias Atendidas a Nivel Nacional. Available online: https://www.bomberosperu.gob.pe/diprein/Estadisticas/po_contenido_estadisticas.asp (accessed on 27 August 2025).
30. OSINERGMIN. El Mercado Del GLP En El Perú: Problemática y Propuestas de Solución; Osinergmin: Lima, Peru, 2011.
31. Wu, S.; Zhong, X.; Qu, Z.; Wang, Y.; Li, L.; Zeng, C. Infrared Gas Detection and Concentration Inversion Based on Dual-Temperature Background Points. Photonics 2023, 10, 490.
32. Zhang, M.; Chen, G.; Lin, P.; Dong, D.; Jiao, L. Gas Imaging with Uncooled Thermal Imager. Sensors 2024, 24, 1327.
33. Wang, J.; Lin, Y.; Zhao, Q.; Luo, D.; Chen, S.; Chen, W.; Peng, X. Invisible Gas Detection: An RGB-Thermal Cross Attention Network and a New Benchmark. Comput. Vis. Image Underst. 2024, 248, 104099.
34. Mahmoud, K.A.A.; Badr, M.M.; Elmalhy, N.A.; Hamdy, R.A.; Ahmed, S.; Mordi, A.A. Transfer Learning by Fine-Tuning Pre-Trained Convolutional Neural Network Architectures for Switchgear Fault Detection Using Thermal Imaging. Alex. Eng. J. 2024, 103, 327–342.
35. Elgohary, A.A.; Badr, M.M.; Elmalhy, N.A.; Hamdy, R.A.; Ahmed, S.; Mordi, A.A. Transfer of Learning in Convolutional Neural Networks for Thermal Image Classification in Electrical Transformer Rooms. Alex. Eng. J. 2024, 105, 423–436.
36. Zhang, Z.; Wang, H.; Cao, K.; Li, Y. Using a Convolutional Neural Network and Mid-Infrared Spectral Images to Predict the Carbon Dioxide Content of Ship Exhaust. Remote Sens. 2023, 15, 2721.
37. Cao, X.; Shi, X.; Wang, Y. Infrared Fire Image Recognition Algorithm Based on ResNet50 and Transfer Learning. In Proceedings of the 2nd International Conference on Cyber Security, Artificial Intelligence and Digital Economy (CSAIDE 2023), Nanjing, China, 3–5 March 2023; Volume 12718, pp. 438–444.
38. Attallah, O.; Elhelw, A.M. Gas Leakage Recognition Using Manifold Convolutional Neural Networks and Infrared Thermal Images. In Proceedings of the 2023 Congress in Computer Science, Computer Engineering, & Applied Computing (CSCE), Las Vegas, NV, USA, 24–27 July 2023; Volume 2023, pp. 2003–2008.
39. Wang, J.; Ji, J.; Ravikumar, A.P.; Savarese, S.; Brandt, A.R. VideoGasNet: Deep Learning for Natural Gas Methane Leak Classification Using an Infrared Camera. Energy 2022, 238, 121516.
40. Bin, J.; Bahrami, Z.; Rahman, C.A.; Du, S.; Rogers, S.; Liu, Z. Foreground Fusion-Based Liquefied Natural Gas Leak Detection Framework From Surveillance Thermal Imaging. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 7, 1151–1162.
41. Wang, S.H.; Chou, T.I.; Chiu, S.W.; Tang, K.T. Using a Hybrid Deep Neural Network for Gas Classification. IEEE Sens. J. 2021, 21, 6401–6407.
42. Saleem, F.; Ahmad, Z.; Kim, J.M. Real-Time Pipeline Leak Detection: A Hybrid Deep Learning Approach Using Acoustic Emission Signals. Appl. Sci. 2024, 15, 185.
43. Spandonidis, C.; Theodoropoulos, P.; Giannopoulos, F. A Combined Semi-Supervised Deep Learning Method for Oil Leak Detection in Pipelines Using IIoT at the Edge. Sensors 2022, 22, 4105.
44. Zhang, E.; Zhang, E. Gas Pipeline Leakage Detection Based on Multiple Multimodal Deep Feature Selections and Optimized Deep Forest Classifier. Front. Environ. Sci. 2025, 13, 1569621.
45. Sharma, A.; Khullar, V.; Kansal, I.; Chhabra, G.; Arora, P.; Popli, R.; Kumar, R. Gas Detection and Classification Using Multimodal Data Based on Federated Learning. Sensors 2024, 24, 5904.
46. Attallah, O. Multitask Deep Learning-Based Pipeline for Gas Leakage Detection via E-Nose and Thermal Imaging Multimodal Fusion. Chemosensors 2023, 11, 364.
47. Zhang, E.; Zhang, E. Development of A Multimodal Deep Feature Fusion with Ensemble Learning Architecture for Real-Time Gas Leak Detection. In Proceedings of the 2024 IEEE 3rd International Conference on Computing and Machine Intelligence (ICMI), Mt Pleasant, MI, USA, 13–14 April 2024.
48. Zhang, X.; Shi, J.; Huang, X.; Xiao, F.; Yang, M.; Huang, J.; Yin, X.; Sohail Usmani, A.; Chen, G. Towards Deep Probabilistic Graph Neural Network for Natural Gas Leak Detection and Localization without Labeled Anomaly Data. Expert Syst. Appl. 2023, 231, 120542.
49. Wang, S.; Bi, Y.; Shi, J.; Wu, Q.; Zhang, C.; Huang, S.; Gao, W.; Bi, M. Deep Learning-Based Hydrogen Leakage Localization Prediction Considering Sensor Layout Optimization in Hydrogen Refueling Stations. Process. Saf. Environ. Prot. 2024, 189, 549–560.
50. Zhang, L.; Wu, Q.; Liu, M.; Chen, H.; Wang, D.; Li, X.; Ba, Q. Hydrogen Leakage Location Prediction in a Fuel Cell System of Skid-Mounted Hydrogen Refueling Stations. Energies 2025, 18, 228.
51. Yan, W.; Liu, W.; Zhang, Q.; Bi, H.; Jiang, C.; Liu, H.; Wang, T.; Dong, T.; Ye, X. Multisource Multimodal Feature Fusion for Small Leak Detection in Gas Pipelines. IEEE Sens. J. 2024, 24, 1857–1865.
52. Spandonidis, C.; Theodoropoulos, P.; Giannopoulos, F.; Galiatsatos, N.; Petsa, A. Evaluation of Deep Learning Approaches for Oil & Gas Pipeline Leak Detection Using Wireless Sensor Networks. Eng. Appl. Artif. Intell. 2022, 113, 104890.
53. Ji, H.; An, C.H.; Lee, M.; Yang, J.; Park, E. Fused Deep Neural Networks for Sustainable and Computational Management of Heat-Transfer Pipeline Diagnosis. Dev. Built Environ. 2023, 14, 100144.
54. Yao, C.; Nagao, M.; Datta-Gupta, A.; Mishra, S. An Efficient Deep Learning-Based Workflow for Real-Time CO2 Plume Visualization in Saline Aquifer Using Distributed Pressure and Temperature Measurements. Geoenergy Sci. Eng. 2024, 239, 212990.
55. Xu, S.; Wang, X.; Sun, Q.; Dong, K. MWIRGas-YOLO: Gas Leakage Detection Based on Mid-Wave Infrared Imaging. Sensors 2024, 24, 4345.
56. Narkhede, P.; Walambe, R.; Mandaokar, S.; Chandel, P.; Kotecha, K.; Ghinea, G. Gas Detection and Identification Using Multimodal Artificial Intelligence Based Sensor Fusion. Appl. Syst. Innov. 2021, 4, 3.
57. Hosny, K.M.; Magdi, A.; Salah, A.; El-Komy, O.; Lashin, N.A. Internet of Things Applications Using Raspberry-Pi: A Survey. Int. J. Electr. Comput. Eng. 2023, 13, 902–910.
58. Zekovic, A. Survey of Internet of Things Applications Using Raspberry Pi and Computer Vision. In Proceedings of the 2023 31st Telecommunications Forum (TELFOR), Belgrade, Serbia, 21–22 November 2023.
59. Prieto-Luna, J.C.; Alarcón-Sucasaca, A.; Fernández-Romero, V.; Turpo-Galeano, Y.H.; Delgado-Berrocal, Y.R.; Holgado-Apaza, L.A. Automated Monitoring System for Estrus Signs in Cattle Using Precision Livestock Farming with IoT Technology in the Peruvian Amazon. Rev. Cient. Sist. Inform. 2025, 5, e837.
60. Farrel, G.E.; Yahya, W.; Basuki, A.; Amron, K.; Siregar, R.A. Scalable Edge Computing Cluster Using a Set of Raspberry Pi: A Framework. In Proceedings of the 8th International Conference on Sustainable Information Engineering and Technology, Badung, Indonesia, 24–25 October 2023; pp. 287–296.
61. Gizinski, T.; Cao, X. Design, Implementation and Performance of an Edge Computing Prototype Using Raspberry Pis. In Proceedings of the 2022 IEEE 12th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 26–29 January 2022; Volume 2022, pp. 592–601.
62. Salka, T.D.; Hanafi, M.B.; Rahman, S.M.S.A.A.; Zulperi, D.B.M.; Omar, Z. Plant Leaf Disease Detection and Classification Using Convolution Neural Networks Model: A Review. Artif. Intell. Rev. 2025, 58, 1–66.
63. Lathuilière, S.; Mesejo, P.; Alameda-Pineda, X.; Horaud, R. A Comprehensive Analysis of Deep Regression. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2065–2081.
64. Biju, V.G.; Schmitt, A.M.; Engelmann, B. Assessing the Influence of Sensor-Induced Noise on Machine-Learning-Based Changeover Detection in CNC Machines. Sensors 2024, 24, 330.
65. Mao, Y.; Li, J.; Qi, Z.; Yuan, J.; Xu, X.; Jin, X.; Du, X. Research on Outlier Detection Methods for Dam Monitoring Data Based on Post-Data Classification. Buildings 2024, 14, 2758.
66. Zhang, W.; Belcheva, V.; Ermakova, T. Interpretable Deep Learning for Diabetic Retinopathy: A Comparative Study of CNN, ViT, and Hybrid Architectures. Computers 2025, 14, 187.
67. Cardim, G.P.; Reis Neto, C.B.; Nascimento, E.S.; Cardim, H.P.; Casaca, W.; Negri, R.G.; Cabrera, F.C.; dos Santos, R.J.; da Silva, E.A.; Dias, M.A. A Study of COVID-19 Diagnosis Applying Artificial Intelligence to X-Rays Images. Computers 2025, 14, 163.
68. Manalı, D.; Demirel, H.; Eleyan, A. Deep Learning Based Breast Cancer Detection Using Decision Fusion. Computers 2024, 13, 294.
69. Holgado-Apaza, L.A.; Isuiza-Perez, D.D.; Ulloa-Gallardo, N.J.; Vilchez-Navarro, Y.; Aragon-Navarrete, R.N.; Quispe Layme, W.; Quispe-Layme, M.; Castellon-Apaza, D.D.; Choquejahua-Acero, R.; Prieto-Luna, J.C. A Machine Learning Approach to Identifying Key Predictors of Peruvian School Principals’ Job Satisfaction. Front. Educ. 2025, 10, 1580683.
70. Talukder, M.A.; Sharmin, S.; Uddin, M.A.; Islam, M.M.; Aryal, S. MLSTL-WSN: Machine Learning-Based Intrusion Detection Using SMOTETomek in WSNs. Int. J. Inf. Secur. 2024, 23, 2139–2158.
71. Omar, A.; Abd El-Hafeez, T. Optimizing Epileptic Seizure Recognition Performance with Feature Scaling and Dropout Layers. Neural Comput. Appl. 2024, 36, 2835–2852.
72. Linkon, A.H.M.; Labib, M.M.; Hasan, T.; Hossain, M.; Jannat, M.E. Deep Learning in Prostate Cancer Diagnosis and Gleason Grading in Histopathology Images: An Extensive Study. Inform. Med. Unlocked 2021, 24, 100582.
73. Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-Level Accuracy with 50x Fewer Parameters and <0.5MB Model Size. arXiv 2016, arXiv:1602.07360.
74. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.
75. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
76. Swati, Z.N.K.; Zhao, Q.; Kabir, M.; Ali, F.; Ali, Z.; Ahmed, S.; Lu, J. Brain Tumor Classification for MR Images Using Transfer Learning and Fine-Tuning. Comput. Med. Imaging Graph. 2019, 75, 34–46.
77. Sharma, P.; Nayak, D.R.; Balabantaray, B.K.; Tanveer, M.; Nayak, R. A Survey on Cancer Detection via Convolutional Neural Networks: Current Challenges and Future Directions. Neural Netw. 2024, 169, 637–659.
78. Ahmad, M.; Mazzara, M.; Distefano, S. Regularized CNN Feature Hierarchy for Hyperspectral Image Classification. Remote Sens. 2021, 13, 2275.
79. Bera, S.; Shrivastava, V.K. Effect of Dropout on Convolutional Neural Network for Hyperspectral Image Classification. Lect. Notes Electr. Eng. 2023, 1056, 121–131.
80. Weng, W.; Zhu, X. U-Net: Convolutional Networks for Biomedical Image Segmentation. IEEE Access 2015, 9, 16591–16603.
81. Andrushia, A.D.; Anand, N.; Lublóy, É.; Arulraj, P. Deep Learning Based Thermal Crack Detection on Structural Concrete Exposed to Elevated Temperature. Adv. Struct. Eng. 2021, 24, 1896–1909.
82. Guo, S.; Yi, S.; Chen, M.; Zhang, Y. PIFRNet: A Progressive Infrared Feature-Refinement Network for Single Infrared Image Super-Resolution. Infrared Phys. Technol. 2025, 147, 105779.
83. Mao, K.; Li, R.; Cheng, J.; Huang, D.; Song, Z.; Liu, Z.K. PL-Net: Progressive Learning Network for Medical Image Segmentation. Front. Bioeng. Biotechnol. 2024, 12, 1414605.
84. Raspberry Pi Foundation. Raspberry Pi 4 Model B. Available online: https://www.raspberrypi.com/products/raspberry-pi-4-model-b/ (accessed on 28 August 2025).
85. Aishwarya, K.; Nirmala, R.; Navamathavan, R. Recent Advancements in Liquefied Petroleum Gas Sensors: A Topical Review. Sens. Int. 2021, 2, 100091.
86. Tladi, B.C.; Kroon, R.E.; Swart, H.C.; Motaung, D.E. A Holistic Review on the Recent Trends, Advances, and Challenges for High-Precision Room Temperature Liquefied Petroleum Gas Sensors. Anal. Chim. Acta 2023, 1253, 341033.
87. Figaro USA Inc. Gas Sensor (TGS6810-D00). Available online: https://www.figaro.co.jp/en/product/docs/tgs6810-d00_product%20infomation%28en%29_rev03.pdf (accessed on 28 August 2025).
88. Adafruit. MLX90640 IR Thermal Camera Breakout. 2024. Available online: https://www.adafruit.com/product/4407 (accessed on 28 August 2025).
89. BOSCH. Gas Sensor BME690 | Bosch Sensortec. Available online: https://www.bosch-sensortec.com/products/environmental-sensors/gas-sensors/bme690/ (accessed on 1 October 2025).

| Layer Block | Type | Trainable | Input Dimension * | Output Dimension * | Activation | # Parameters |
|---|---|---|---|---|---|---|
| Features.3 (Fire) | Fire | Yes | [−1, 64, 63, 63] | [−1, 128, 63, 63] | ReLU | 11,408 |
| Features.4 (Fire) | Fire | Yes | [−1, 128, 63, 63] | [−1, 128, 63, 63] | ReLU | 12,432 |
| Features.6 (Fire) | Fire | Yes | [−1, 128, 31, 31] | [−1, 256, 31, 31] | ReLU | 45,344 |
| Features.7 (Fire) | Fire | Yes | [−1, 256, 31, 31] | [−1, 256, 31, 31] | ReLU | 49,440 |
| Features.9 (Fire) | Fire | Yes | [−1, 256, 15, 15] | [−1, 384, 15, 15] | ReLU | 104,880 |
| Features.10 (Fire) | Fire | Yes | [−1, 384, 15, 15] | [−1, 384, 15, 15] | ReLU | 111,024 |
| Features.11 (Fire) | Fire | Yes | [−1, 384, 15, 15] | [−1, 512, 15, 15] | ReLU | 188,992 |
| Features.12 (Fire) | Fire | Yes | [−1, 512, 15, 15] | [−1, 512, 15, 15] | ReLU | 197,184 |
| Regression Head | Conv2d + GAP + Flatten | Yes | [−1, 512, 15, 15] | [−1, 1] | Linear | 513 |
| Total | | | | | | 721,217 |

| Layer Block | Type | Trainable | Input Dimension * | Output Dimension * | Activation | # Parameters |
|---|---|---|---|---|---|---|
| Features.35 | Conv2d | Yes | [−1, 512, 14, 14] * | [−1, 512, 14, 14] * | ReLU | 2,359,808 |
| FC Layer 1 | Fully Connected | Yes | [−1, 25,088] | [−1, 128] | ReLU | 3,211,392 |
| Regression Head | Fully Connected | Yes | [−1, 128] | [−1, 1] | Linear | 129 |
| Total | | | | | | 5,571,329 |

| Layer Block | Type | Trainable | Input Dimension | Output Dimension | Activation | # Parameters |
|---|---|---|---|---|---|---|
| layer4.2 | Residual bottleneck | Yes | [−1, 2048, 7, 7] * | [−1, 2048, 7, 7] * | ReLU | 4,462,592 |
| FC Layer 1 | Fully Connected | Yes | [−1, 2048] | [−1, 32] | ReLU | 65,568 |
| BatchNorm1d | Batch Normalization | Yes | [−1, 32] | [−1, 32] | - | 64 |
| Regression Head | Fully Connected | Yes | [−1, 32] | [−1, 1] | Linear | 33 |
| Total | | | | | | 4,528,257 |

| Parameter | SqueezeNet | VGG19 | ResNet50 |
|---|---|---|---|
| Loss function | MSE | MSE | MSE |
| Optimizer | Adam | Adam | Adam |
| Learning rate (lr) | 1.00 × 10⁻⁴ | 3.00 × 10⁻⁴ | 5.00 × 10⁻⁵ |
| Weight decay | 6.00 × 10⁻² | 2.50 × 10⁻² | 7.00 × 10⁻³ |
| Scheduler | ReduceLROnPlateau (factor = 0.5, patience = 10) | ReduceLROnPlateau (factor = 0.5, patience = 10) | ReduceLROnPlateau (factor = 0.5, patience = 10) |
| Training epochs | 300 | 300 | 300 |
| Early Stopping (patience) | 20 | 20 | 15 |

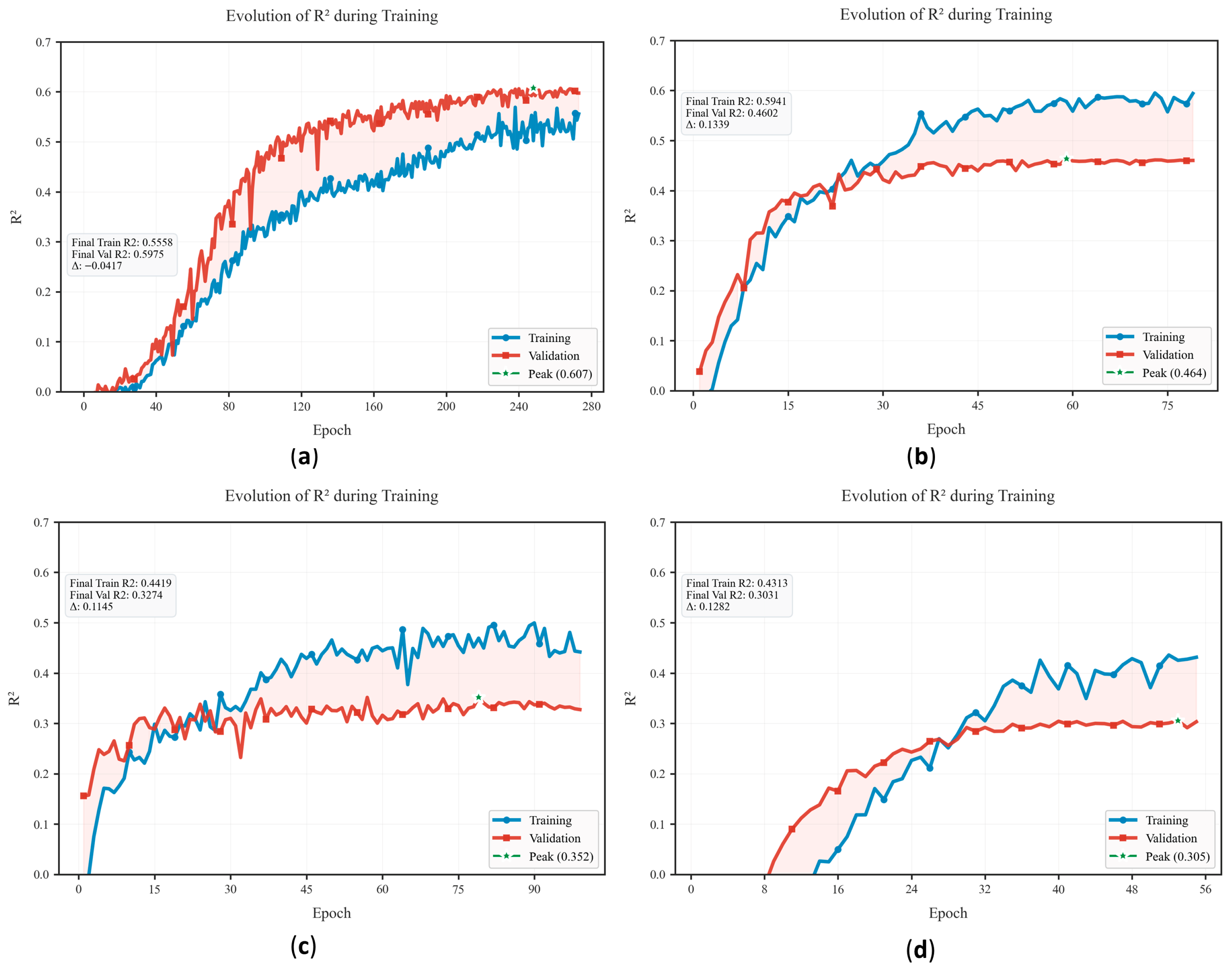

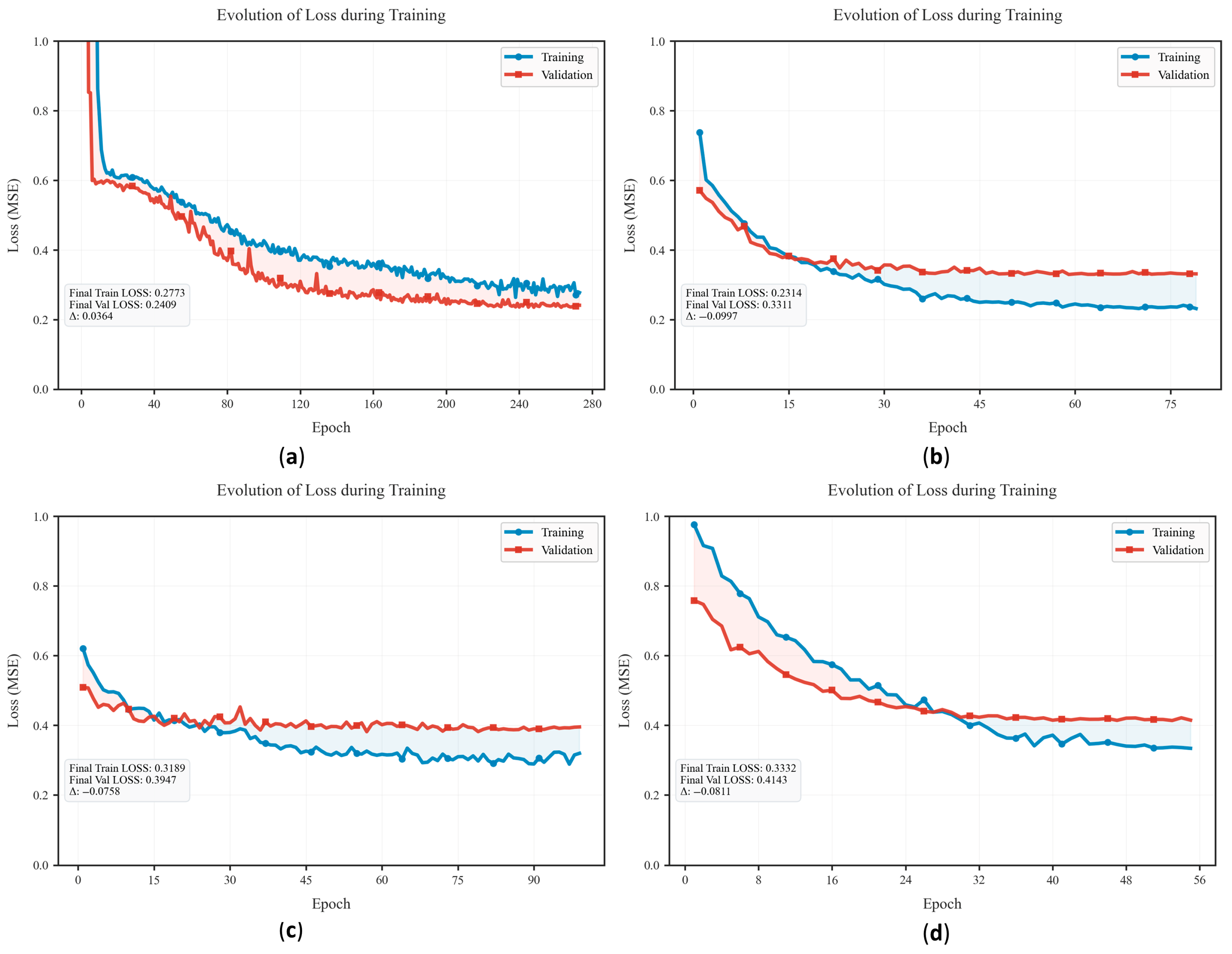

| Models | R² (Train) | R² (Test) | MAE (Train) | MAE (Test) | MSE (Train) | MSE (Test) | RMSE (Train) | RMSE (Test) | Pearson’s r (Train) | Pearson’s r (Test) |
|---|---|---|---|---|---|---|---|---|---|---|
| PropNet-R | 0.607 | 0.614 | 0.328 | 0.333 | 0.236 | 0.240 | 0.485 | 0.489 | 0.781 | 0.786 |
| SqueezeNet | 0.451 | 0.397 | 0.390 | 0.418 | 0.329 | 0.374 | 0.574 | 0.612 | 0.673 | 0.637 |
| VGG19 | 0.364 | 0.280 | 0.420 | 0.465 | 0.381 | 0.447 | 0.617 | 0.668 | 0.655 | 0.564 |
| ResNet50 | 0.311 | 0.236 | 0.447 | 0.496 | 0.413 | 0.474 | 0.643 | 0.689 | 0.583 | 0.514 |
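For reference, the five metrics in this table follow their standard definitions, which the sketch below implements in plain Python on a toy example. The function name and the toy values are illustrative and unrelated to the study's data; whether the reported values were computed on scaled or raw targets is not stated here, and the sketch makes no assumption about it.

```python
import math


def regression_metrics(y_true, y_pred):
    """Standard R2, MAE, MSE, RMSE and Pearson's r for paired observations."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    mean_pred = sum(y_pred) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    ss_pred = sum((p - mean_pred) ** 2 for p in y_pred)
    cov = sum((t - mean_true) * (p - mean_pred) for t, p in zip(y_true, y_pred))
    mse = ss_res / n
    return {
        "R2": 1 - ss_res / ss_tot,
        "MAE": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "MSE": mse,
        "RMSE": math.sqrt(mse),
        "r": cov / math.sqrt(ss_tot * ss_pred),
    }


# Toy targets and predictions, chosen only to keep the arithmetic easy to follow.
metrics = regression_metrics([1, 2, 3, 4], [2, 2, 3, 3])
print(metrics)
```

RMSE is by definition the square root of MSE, which is why the RMSE columns in the table track the MSE columns (for example, √0.240 ≈ 0.49 for PropNet-R on the test set).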

| Model | Inference Time | GPU Used (MB) | Trainable/Total Params |
|---|---|---|---|
| SqueezeNet | 0.008 | 514.51 | 721,217/723,009 |
| VGG19 | 0.009 | 426.36 | 3,211,521/23,235,905 |
| ResNet50 | 0.008 | 662.20 | 4,528,257/23,573,697 |
| PropNet-R | 0.005 | 408.53 | 1,231,969/1,231,969 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Holgado-Apaza, L.A.; Prieto-Luna, J.C.; Carpio-Vargas, E.E.; Ulloa-Gallardo, N.J.; Vilchez-Navarro, Y.; Barrón-Adame, J.M.; Aguirre-Puente, J.A.; Ramos Enciso, D.; Castellon-Apaza, D.D.; Saman-Pacamia, D.J. PropNet-R: A Custom CNN Architecture for Quantitative Estimation of Propane Gas Concentration Based on Thermal Images for Sustainable Safety Monitoring. Sustainability 2025, 17, 9801. https://doi.org/10.3390/su17219801
