Abstract

One of the most important functions of the battery management system (BMS) in battery electric vehicle (BEV) applications is to estimate the state of charge (SOC). In this study, several machine and deep learning techniques, namely linear regression, support vector regressors (SVRs), k-nearest neighbor, random forest, extra trees regressor, extreme gradient boosting, random forest combined with gradient boosting, artificial neural networks (ANNs), convolutional neural networks, and long short-term memory (LSTM) networks, are investigated to develop a modeling framework for SOC estimation. The purpose of this study is to improve overall battery performance by examining how BEV operation affects battery deterioration. By using dynamic response simulations of BEVs and their lithium-ion battery (LIB) packs, the proposed research provides realistic training data, enabling more accurate prediction of the SOC using data-driven methods, which is crucial for the safe operation of electric vehicles. The paper evaluates the performance of the machine and deep learning algorithms using several metrics: the R² score, median absolute error, mean square error, mean absolute error, and max error. All simulation tests were performed using MATLAB 2023, the Anaconda platform, and COMSOL Multiphysics.

1. Introduction

Global warming is regarded as one of the most pressing issues of our day. One of the most dangerous forms of environmental pollution in metropolitan areas is the CO2 released by internal combustion engine automobiles. To address these issues, the automobile industry is paying greater attention to electric vehicles [1], which use electric motors for propulsion. What makes battery electric vehicles (BEVs) so attractive is that they forgo combustion engines entirely and rely on a battery pack to power the car [2]. Automotive manufacturers commonly adopt battery technologies such as nickel–metal hydride (NiMH), nickel–cadmium (NiCd), lead–acid, and lithium-ion (LIB). Specifically, LIBs can deliver high energy density, depending on the chemistry. They also have a longer lifespan and are less expensive than other technologies [3,4]. The battery management system (BMS) controls how LIBs operate in BEVs. The BMS, which is fitted to the battery pack, is a combination of hardware (such as sensors, controllers, and actuators) and software that works to improve safety, shield individual cells from damage, increase battery efficiency, and prolong battery life.

2. Related Works

There are many ways to estimate a battery’s charge level, from simple methods such as tracking voltage changes to more advanced techniques using artificial intelligence. Ultimately, the BMS aims to keep the battery safe and efficient and to make it last as long as possible [5]. The BMS plays a critical role in maintaining the battery’s temperature within safe and optimal limits, because temperature significantly affects battery performance, safety, and lifespan. A variety of methods exist for temperature estimation, including electrochemical modeling, equivalent circuit modeling, machine learning, and direct impedance measurement [6]. Electric vehicle batteries rely heavily on the BMS, which constantly monitors the battery’s state of health (SOH), state of charge (SOC), and state of function (SOF). SOF, SOC, and SOH are interconnected metrics that together provide a comprehensive understanding of a battery’s performance. Estimating them is a complex task because batteries behave differently depending on various factors.

Monitoring the battery pack’s SOH and SOC and acting quickly to correct aberrant behavior are two of the BMS’s primary responsibilities. Due to the non-linearities of the processes that define the battery’s electrochemical activity, evaluating the SOC online is a difficult task [7]. Several methodologies have been investigated to identify the SOC in BEVs. These methods can be divided into several categories: traditional techniques, model-based estimation techniques, adaptive filtering techniques, nonlinear observers, and learning algorithms. Common traditional approaches include electromotive force (EMF), Coulomb counting, and internal resistance measurements [8,9,10,11,12,13,14]. Model-based estimation methods often rely on equivalent circuit models (ECM), reduced-order models (ROM), state–space models, electrochemical models (EM), and electrochemical impedance spectroscopy models (EISM). Adaptive filter algorithms, also known as Bayesian frameworks, encompass Kalman filters (KF), extended Kalman filters (EKF), unscented Kalman filters (UKF), and adaptive unscented Kalman filters (AUKF). Other approaches include nonlinear observers, bi-linear interpolation (BI) techniques, and particle filter (PF) methods [15,16,17,18,19,20,21,22]. Learning techniques for SOC estimation, including machine learning (ML), fuzzy logic (FL), and genetic algorithms (GA) [12,23,24,25,26,27,28], belong to a distinctive class since they do not require modeling the battery’s dynamic states or physical processes, let alone parameter estimation. Essentially, they use only readily available training data, including battery properties (such as temperature, voltage, and current) gathered from laboratory experiments, to learn the non-linearities of the processes taking place within a cell.

Currently, many researchers are using artificial intelligence models for battery SOC calculation. Lab experiments often use single cells or a few cells, whereas BEVs have entire battery packs with multiple modules connected in series and parallel. Such a limited setup does not reflect the complex discharge patterns of a full BEV battery. A fully charged lab battery can only run for a limited number of hours, so the driving range it represents is shorter than that of the BEVs presently available for purchase. For instance, according to estimates from the Environmental Protection Agency (EPA), the Tesla S can travel 391 miles (630 km) on a single charge [29]. Additionally, lab tests typically consider only the battery itself, ignoring other vehicle components such as the motor and powertrain and external factors such as air resistance, all of which influence battery discharge in real-world driving. Moreover, models trained using data from a specific battery chemistry cannot accurately predict the SOC of batteries with different chemistries, because the internal electrochemical processes have a major influence on the relationship between the SOC and the battery properties. These limitations call for more comprehensive data collection methods that consider real-world BEV operation and diverse battery chemistries in order to create accurate data-driven SOC estimation models. To overcome these issues, this investigation introduces a simulation framework designed to create reliable training data for the learning-based procedures used to estimate the BEV SOC. The developed BEV model computes the battery characteristics and SOC with a high degree of realism with respect to actual driving conditions.

In addition to the commonly used electric current, voltage, and temperature, power losses from aerodynamic drag and variations in the mechanical power output of the electric motor are also taken into account for SOC prediction. The technique has been applied to Tesla S models available on the BEV market to gather information for practical uses. A larger and more extensive dataset for a data-driven model can be built by simulating the distinct chemistries of the battery packs’ cells (which are made of nickel, manganese, and cobalt) as well as the many ways in which the powertrain and electric motors operate. This research tackles key challenges in improving battery performance for BEVs through more accurate SOC estimation. To develop a realistic dataset for training SOC estimation models, it simulates the following driving cycles: US06; Federal Test Procedure 75 (FTP75); the Heavy-Duty Urban Dynamometer Driving Schedule (HDUDDS); the Highway Fuel Economy Test (HWFET); Los Angeles 92 (LA92); the Supplementary Federal Test Procedure (SC03); and the Worldwide Harmonized Light Vehicles Test Procedure (WLTP). The models considered, namely linear regression, support vector regressors (SVRs), k-nearest neighbor, random forest, extra trees regressor, extreme gradient boosting, random forest combined with gradient boosting, artificial neural networks (ANNs), convolutional neural networks, and long short-term memory (LSTM) networks, are known to be effective at SOC prediction. This research offers a new approach for generating training data for accurate SOC estimation and investigates the impact of BEV operation on battery degradation, aiming to improve overall battery performance. The automotive simulations were performed using seven EPA drive cycles, with two cases used for testing and predicting the SOC in order to obtain more accurate results than other models.

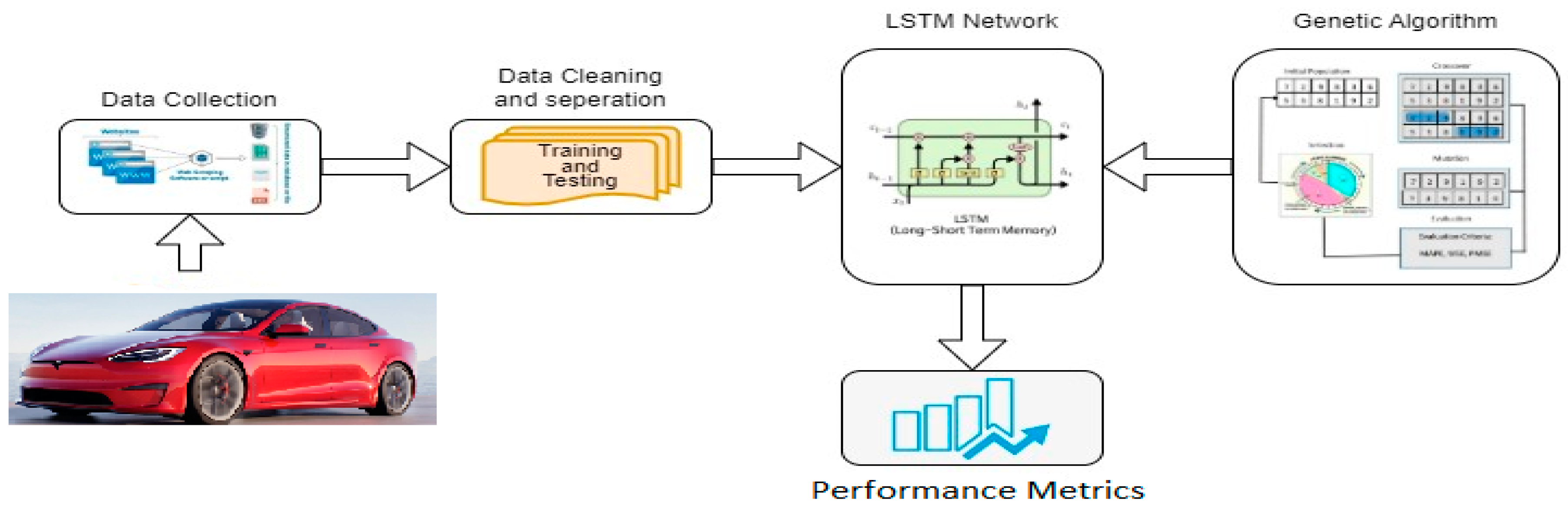

Employing machine learning and deep learning (DL) algorithms for SOC forecasting of BEVs, namely linear regression, SVRs, K-NN, RF, EXR, XGB, random forest combined with gradient boosting, ANNs, CNN, and LSTM, made the predictions in this research more accurate, with fewer errors, than the results presented in [30]. The use of several machine learning and deep learning algorithms also supports the reliability of the model relative to others. In addition, optimal parameters for the machine learning and deep learning algorithms were investigated to obtain an accurate estimation of the SOC. For example, a genetic algorithm was used to obtain the optimum parameters of the LSTM network.

The contributions of this paper are as follows: (i) we provide a modeling framework for generating accurate training data for the learning-based algorithms used in BEV SOC estimation; (ii) the battery properties and state of charge are determined under realistic driving conditions; (iii) machine and deep learning algorithms are employed for the state-of-charge forecasting of BEVs; (iv) the parameters of the machine learning and deep learning algorithms are tuned to obtain a precise estimation of the SOC; (v) a genetic algorithm is combined with LSTM to determine the optimal number of LSTM units. The article is arranged as follows. The model description is presented in Section 3. Machine and deep learning algorithms are discussed in Section 4. The modeling findings are discussed in Section 5. Lastly, Section 6 presents the conclusions of the research.

3. Model Description

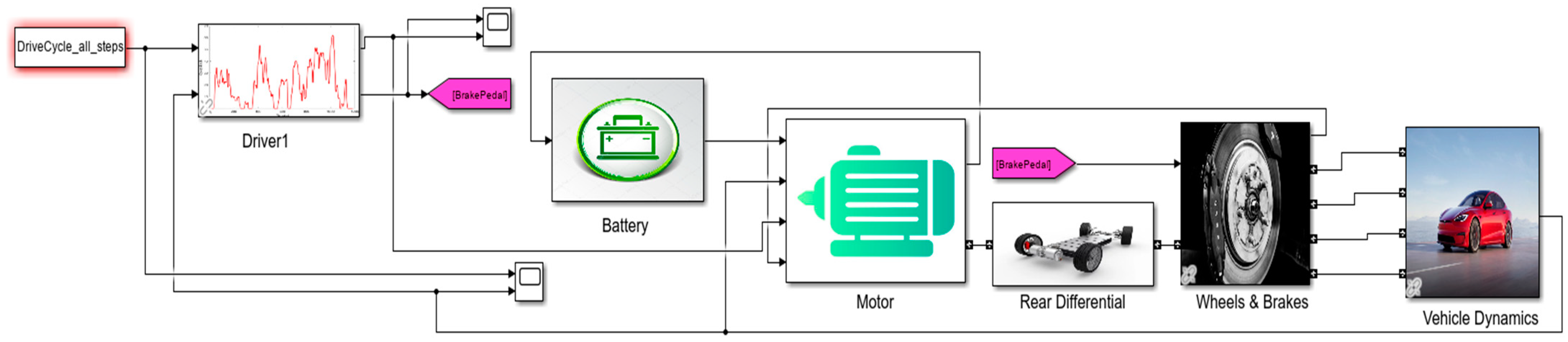

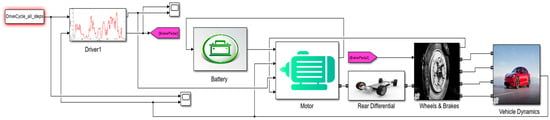

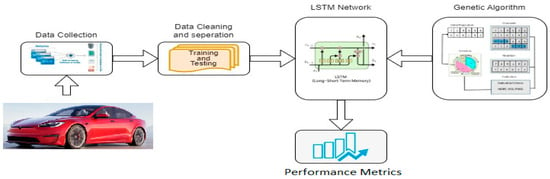

This study provides a computational method, based on deep learning and machine learning algorithms, for studying the battery SOC in BEVs. The electrochemical and thermal data of the LIB model are integrated with machine learning techniques and automotive simulations in the BEV model. The automotive simulations were executed on the MATLAB platform [31]. Data-driven models of vehicle powertrains, including gasoline, diesel, and electric systems, can be built using the Powertrain Blockset [32] in MATLAB/Simulink (2023a). The blocks in the Powertrain Blockset library allow the settings of the different parts of the vehicle to be changed by providing the appropriate look-up tables. The technical specifications of the Tesla S were used to parameterize the model’s blocks [29]. The LIB model in COMSOL Multiphysics served as the foundation for the look-up tables used to parameterize the battery block of the BEV model [33]. Then, using a dataset that included the characteristics of the BEV’s components, generated by the automotive simulations, we trained and evaluated ANN, CNN, LSTM, extreme gradient boosting, ensemble, K-NN, SVR, extra trees regressor, linear regression, and random forest models. The learning methods were developed using the TensorFlow, scikit-learn, Keras, PyTorch, and seaborn packages [34,35,36]. Figure 1 illustrates the layout of the BEV model.

Figure 1.

The layout of the BEV model created in MATLAB.

Using specific blocks from the Powertrain Blockset library, the test model replicates the dynamic behavior of the battery pack, electric motor, differential, wheels, braking system, and vehicle body. The battery pack is built using the Datasheet Battery block [33], which makes use of an ECM whose parameters are obtained from look-up tables constructed from the cell discharge curves at constant temperatures and C-rates. In particular, the electrochemical–thermal model created in COMSOL Multiphysics was used to simulate the discharge curves at C-rates of 1C, 2C, 3C, 4C, and 5C at a constant temperature of 293 K, and at temperatures of 263 K, 273 K, 29K, and 313 K at a constant rate of 1C. We considered the features of the batteries used in the Tesla S, specifically the cylindrical NMC 18650 cell with a nominal capacity of 2.86 Ah. The Datasheet Battery block is configured to account for the connections that define the Tesla S battery pack (96 cells in series, 86 in parallel). This makes it feasible to replicate the 100 kWh total energy discharge of the vehicle’s complete battery pack. The block uses the Coulomb counting method to determine the SOC. The Mapped Motor block is used to emulate the Tesla S’s permanent magnet synchronous motor (PMSM) [37]. The block regulates the output shaft torque to meet the reference torque demand.
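The Coulomb counting step mentioned above can be illustrated with a minimal Python sketch. It is not the Simulink block's implementation: the function name, the fixed time step, and the sign convention (positive current means discharge) are assumptions made for illustration, and only the 2.86 Ah cell capacity is taken from the text.

```python
def coulomb_count(soc0, currents_a, dt_s, capacity_ah):
    """Return the SOC trajectory (fractions in [0, 1]) by integrating current.

    Assumed sign convention: positive current discharges the cell,
    negative current (e.g., regenerative braking) recharges it.
    """
    capacity_as = capacity_ah * 3600.0  # ampere-hours to ampere-seconds
    soc = soc0
    trajectory = [soc]
    for current in currents_a:
        soc -= current * dt_s / capacity_as  # charge removed during this step
        soc = min(1.0, max(0.0, soc))        # clamp to the physical range
        trajectory.append(soc)
    return trajectory


# One 2.86 Ah cell discharged at 1C (2.86 A) for 1800 s, i.e. half an hour.
trajectory = coulomb_count(1.0, [2.86] * 1800, 1.0, 2.86)
```

In practice the method is sensitive to the initial SOC and to current-sensor drift, which is one reason data-driven estimators are of interest.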

The motor’s characteristic curves at a given angular speed are used to calculate the shaft torque. The reference torque includes the braking torque that recovers SOC during deceleration as well as the positive torque required for the vehicle to accelerate. To meet the demands of the driving cycle, the acceleration torque is calculated by multiplying the percentage acceleration [%] computed by the proportional integral (PI) controller by the torque read from the motor’s characteristic curve at the current angular speed. The Limited Slip Differential [38] and Longitudinal Wheel [39] blocks replicate the vehicle’s powertrain system by calculating the torque on the left and right axles as well as the net longitudinal forces applied to the front and rear wheels. The friction between the road surface and the wheels is also taken into account. The dynamics of the vehicle are simulated using the Vehicle Body 1DOF Longitudinal [40] block. The block considers the net longitudinal forces delivered to the wheels and the aerodynamic drag force acting on the vehicle, modeling the vehicle as a rigid body with one degree of freedom moving longitudinally parallel to the ground. The balance of forces acting on the vehicle’s mass gives the acceleration and, subsequently, the speed. The PI controller dynamically adjusts the command so that the feedback speed coincides with the reference speed of the applied driving cycle (DC). The MathWorks webpages devoted to the Powertrain Blockset modules offer a more thorough description of how the look-up tables and differential equations that characterize each block were constructed [32]. The driving cycle that determines the vehicle’s reference speed is fed into the model, which then simulates the dynamics of BEV driving and determines the related SOC variation.
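The force balance and PI speed tracking described above can be sketched in a few lines of Python. This is only an illustrative one-degree-of-freedom model, not the Powertrain Blockset implementation. The vehicle mass, drag coefficient, frontal area, rolling-resistance coefficient, peak force, and controller gains are placeholder assumptions rather than Tesla S data, and the anti-windup rule is a simple choice made for this sketch.

```python
def simulate_speed_tracking(ref_speeds, dt=0.1, mass=2100.0, cd=0.24,
                            frontal_area=2.34, crr=0.01, f_max=8000.0,
                            kp=0.5, ki=0.1):
    """Track a reference speed profile [m/s] with a PI controller.

    All default parameter values are illustrative placeholders.
    """
    rho_air, g = 1.2, 9.81
    v, integral = 0.0, 0.0
    speeds = []
    for v_ref in ref_speeds:
        error = v_ref - v
        # PI command in [-1, 1]: positive accelerates, negative brakes.
        u_raw = kp * error + ki * (integral + error * dt)
        u = max(-1.0, min(1.0, u_raw))
        if u == u_raw:  # simple anti-windup: integrate only when unsaturated
            integral += error * dt
        f_traction = u * f_max
        f_drag = 0.5 * rho_air * cd * frontal_area * v * v  # aerodynamic drag
        f_roll = crr * mass * g if v > 0.0 else 0.0         # rolling resistance
        accel = (f_traction - f_drag - f_roll) / mass       # Newton's second law
        v = max(0.0, v + accel * dt)
        speeds.append(v)
    return speeds


# Step to a constant 20 m/s reference held for 60 s.
speeds = simulate_speed_tracking([20.0] * 600)
```

Feeding a drive-cycle speed trace instead of the constant reference gives the traction power history from which drag, rolling-resistance, and braking power features could be derived.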

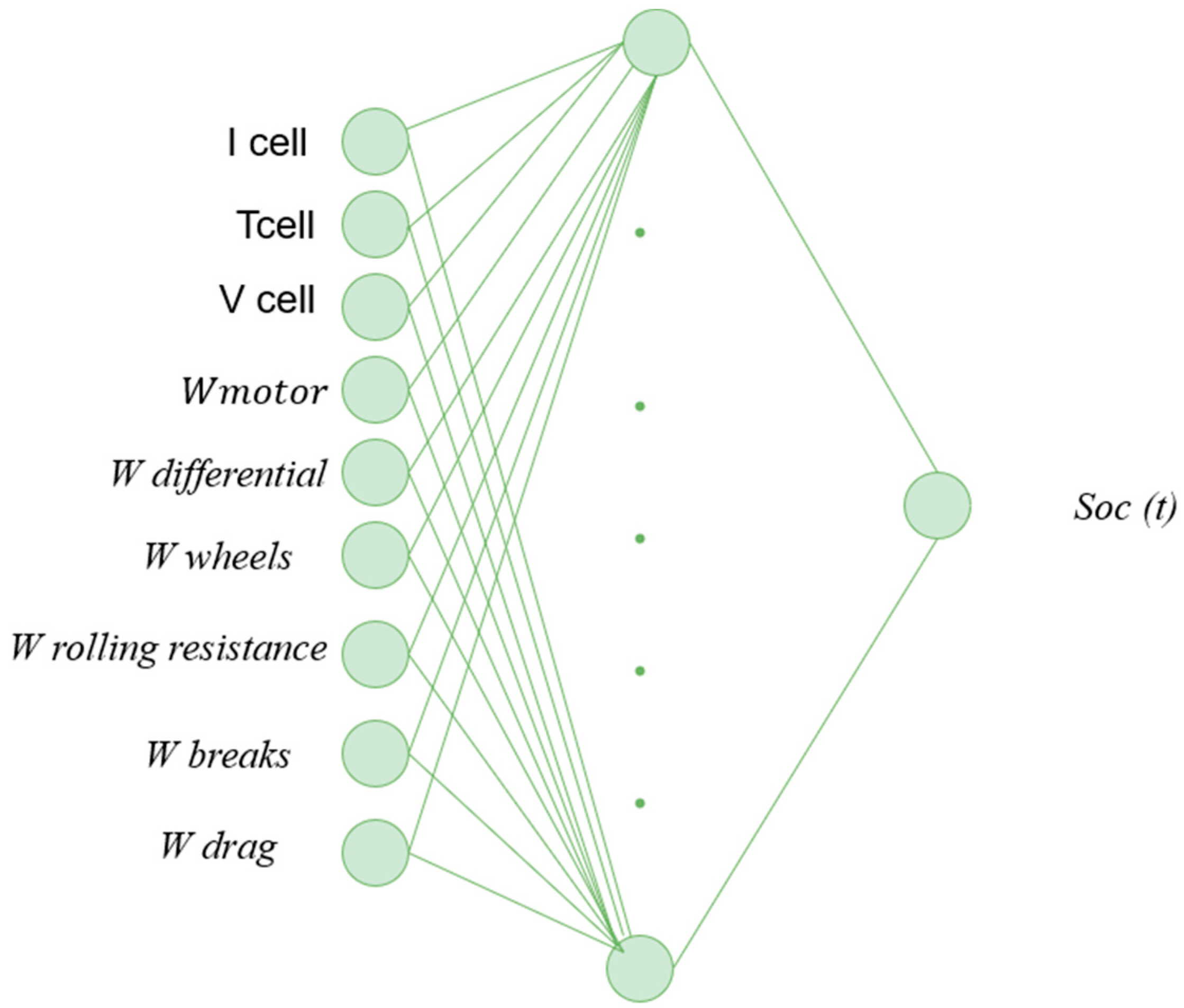

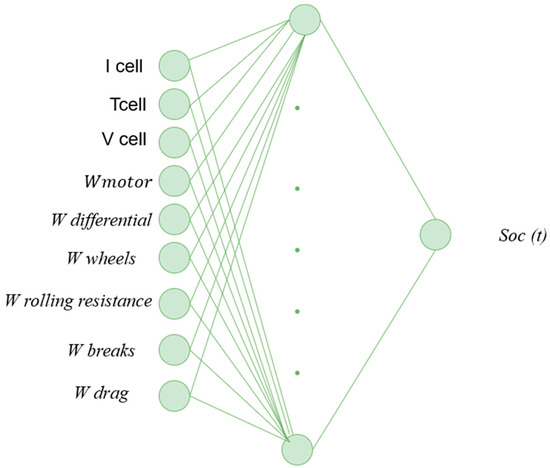

Several machine learning approaches for estimating the battery’s SOC were trained and tested with the data created using the integrated automotive and electrochemical models of BEVs and LIBs, as discussed in this section. Here, we begin by introducing the input variables (features); a summary of the selected learning models follows. The battery block of the BEV model computes the current, voltage, and SOC variation, whereas the cell’s thermal model computes the temperature profile. The dataset also covers the system’s powertrain and the mechanical power flow from the electric motor to the wheels. It contains the power that the motor produces, the power transmitted via the differential, the power delivered to the wheels, the power lost as a result of rolling resistance, and the power needed for braking. Lastly, we considered the power loss caused by aerodynamic drag. Consequently, the SOC was estimated by combining nine variables that describe the vehicle performance: $I_\text{cell}$, $V_\text{cell}$, $T_\text{cell}$, $W_\text{motor}$, $W_\text{differential}$, $W_\text{wheels}$, $W_\text{rolling resistance}$, $W_\text{brakes}$, and $W_\text{drag}$. We examined learning algorithms from the categories mentioned in Section 2: linear regression, support vector regressors (SVRs), k-nearest neighbor, random forest, extra trees regressor, extreme gradient boosting, random forest combined with gradient boosting, ANNs, CNN, and LSTM networks [41,42,43,44,45,46,47,48,49,50,51]. All models are described in the next section.

4. Machine Learning Model

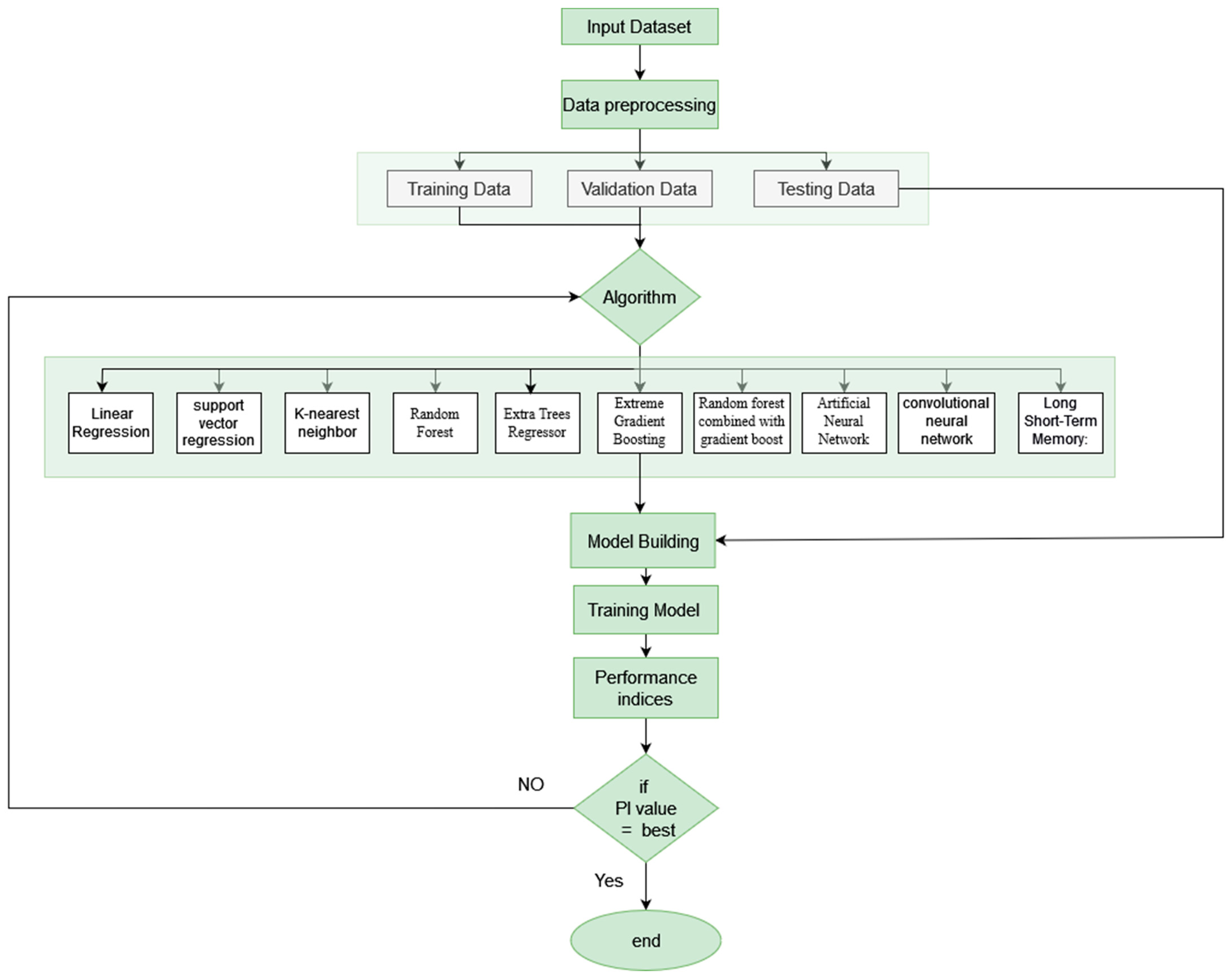

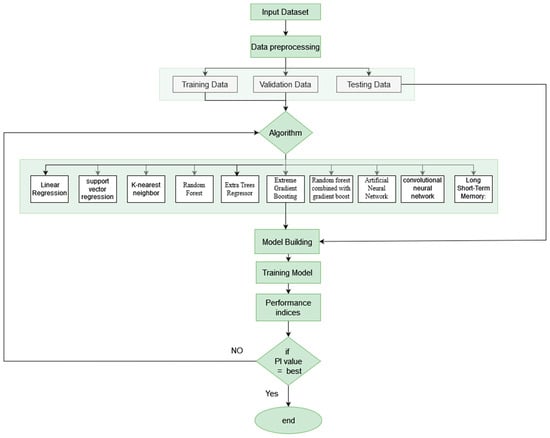

The integrated automotive and electrochemical models of BEVs and LIBs discussed in the preceding sections generated the data used to train and test numerous ML and DL models for estimating the battery’s state of charge (SOC). Here, we start by outlining the input variables, or features. Next, we provide a brief overview of the learning models chosen to estimate the SOC. The discharge current, voltage, temperature, and SOC are the quantities that characterize the battery, and the system’s powertrain is also taken into consideration in the dataset. Training and estimation are the two main stages of using machine learning (ML) to estimate the state of health (SOH). While estimation can be carried out online or offline, training is usually carried out offline. Gathering an appropriate dataset is the initial step in the training process. From the raw data, pertinent features that capture aging information are then extracted. The training dataset is made up of these features together with the true SOH values. The ML algorithms then learn by adjusting their weights and biases to fit the training data. Through this process, a nonlinear relationship is established between the input features, which reflect the SOH, and the output, which is usually the capacity or SOH. Consequently, the SOC is estimated by combining the nine variables that describe the vehicle’s performance. The proposed approach for the estimation of the SOC is divided into three modules: input parameters, feature extraction, and ML and DL algorithms. Ten different algorithms are implemented in this study, as shown in Figure 2.

Figure 2.

Flow chart for the suggested approach for estimating the battery’s state of charge.

4.1. Linear Regression

Linear regression is one of the most popular prediction models in statistics and machine learning, and an effective and practical technique for examining the relationship between two or more variables. To use it, the variables must be divided into independent input variables and a single dependent output variable. The objective is to identify the best linear function, that is, the set of coefficients that most accurately estimates the value of the dependent variable. The linear regression model is formally expressed as follows [52]:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_n X_n + \varepsilon \tag{1}$$

where $Y$ is the dependent variable, $X_1$ to $X_n$ are the independent variables, $\varepsilon$ is the error term, $\beta_0$ is the intercept, and $\beta_1$ to $\beta_n$ are the regression coefficients.

4.2. Support Vector Regression (SVR)

SVR is a machine learning approach based on statistical learning theory [17]. Support vector regression is the form of the support vector machine (SVM) used for function approximation and regression. Its main idea is to map the nonlinear input space into a higher-dimensional feature space using a nonlinear mapping and to find a hyperplane in that space. Numerous kernel functions are used in SVM models, including polynomial, exponential, radial basis function (RBF), sigmoid, and linear kernels. The kernel used for SVR forecasting in this study is given at the end of this subsection. The regression model can be formulated as presented in the following equation [53]:

$$f(\chi) = \omega^{T}\theta(\chi) + b \tag{2}$$

In this expression, $\omega$ is the weight vector, $b$ is the bias term, and $\theta(\chi)$ is a nonlinear mapping function that transforms $\chi$ into a higher-dimensional feature space. To find the weight vector $\omega$, we need to minimize the following regularized expression, subject to the constraints given in Equations (4)–(6):

$$\min_{\omega,\, b,\, \xi,\, \xi^{*}} \ \frac{1}{2}\lVert \omega \rVert^{2} + C \sum_{i=1}^{N} \left( \xi_i + \xi_i^{*} \right) \tag{3}$$

Here, $N$ is the number of training samples, and $\varepsilon$, which appears in the constraints, represents the desired level of accuracy of the function approximation on the training data. The variables $\xi_i$ and $\xi_i^{*}$ are the positive slack variables, while $C$ is a penalty parameter that controls the balance between regularization and empirical risk. The SVR is solved using Lagrange multipliers and by incorporating the following constraints, where $y_i$ is the target of the $i$-th training sample $\chi_i$:

$$y_i - \omega^{T}\theta(\chi_i) - b \le \varepsilon + \xi_i \tag{4}$$

$$\omega^{T}\theta(\chi_i) + b - y_i \le \varepsilon + \xi_i^{*} \tag{5}$$

$$\xi_i,\ \xi_i^{*} \ge 0 \tag{6}$$

Data that are not linearly separable can be handled well by kernel functions. A kernel function, which is essentially a measure of pattern similarity, is a valuable tool for solving nonlinear regression problems. The following RBF kernel is used in our study:

$$K(\chi_i, \chi_j) = \exp\left( -\gamma \lVert \chi_i - \chi_j \rVert^{2} \right) \tag{7}$$

Here, $\gamma > 0$.
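As a small illustration of the two ingredients discussed in this subsection, the sketch below evaluates an RBF kernel and the ε-insensitive error that the slack variables penalize. It assumes the common parameterization of the RBF kernel with a positive width parameter named `gamma`. The function names are hypothetical, and this is not a full SVR solver.

```python
import math


def rbf_kernel(x, z, gamma):
    """RBF similarity exp(-gamma * ||x - z||^2); gamma must be positive."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


def epsilon_insensitive_error(y_true, y_pred, epsilon):
    """Error that remains after ignoring deviations smaller than epsilon."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


same_point = rbf_kernel([0.2, 0.4], [0.2, 0.4], gamma=0.5)  # identical inputs
unit_apart = rbf_kernel([1.0, 0.0], [0.0, 0.0], gamma=1.0)  # distance of 1
inside_tube = epsilon_insensitive_error(0.50, 0.52, epsilon=0.05)
outside_tube = epsilon_insensitive_error(0.50, 0.70, epsilon=0.05)
```

Predictions that fall inside the ε-tube incur no penalty, which is what makes the SVR solution depend only on a subset of the training points.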

4.3. K-Nearest Neighbor (K-NN)

In this work, the k-NN regression technique was employed to forecast the battery SOC values from the dataset features. When k-NN is used for regression, it first uses a distance measure to determine the k nearest points, $x_1, x_2, \ldots, x_k$, of a new point $x_q$. It then computes the weighted average of their responses to predict the response of $x_q$. Given a training dataset of N points $M = \{x_1, x_2, \ldots, x_N\}$, where each point has d features, k-NN estimates the response of a new data point as follows. First, the weighted Euclidean distance between each training point $x_i$ and the testing point $x_q$ is determined, which expresses how near the two points are to each other and may be represented as in [54]:

$$D(x_q, x_i) = \sqrt{\sum_{j=1}^{d} w_j \left( x_{q,j} - x_{i,j} \right)^{2}} \tag{8}$$

where $x_{q,j}$ and $x_{i,j}$ are the j-th features of the new point $x_q$ and the training point $x_i$, respectively. In addition, $w_j$ is the weight of the j-th feature, with the weights subject to the restriction $\sum_{j=1}^{d} w_j = 1$. According to the distance $D$, the k training points $x_1, x_2, \ldots, x_k$ closest to $x_q$, arranged from nearest to furthest, are determined. These are referred to as the k nearest neighbors of $x_q$. Each neighbor is then given a weight using a kernel function (which is often based on the computed distance), and an estimate for the new sample is produced by

$$\hat{y}_q = \frac{\sum_{i=1}^{k} K(x_q, x_i)\, y_i}{\sum_{i=1}^{k} K(x_q, x_i)} \tag{9}$$

where k indicates the number of nearest neighbors and controls the smoothness of the model (the higher k, the smoother the model), $y_i$ is the known response of $x_i$, $\hat{y}_q$ is the predicted response of $x_q$, and $K(x_q, x_i)$ is the kernel function.
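The procedure above can be sketched as follows. The inverse-distance kernel and the equal default feature weights are assumptions chosen for illustration, since the text does not fix a particular kernel, and the function name is hypothetical.

```python
import math


def knn_predict(train_x, train_y, query, k, feature_weights=None):
    """Kernel-weighted k-NN regression with a weighted Euclidean distance."""
    d = len(query)
    # Assumed default: equal feature weights that sum to 1.
    weights = feature_weights or [1.0 / d] * d
    scored = []
    for x, y in zip(train_x, train_y):
        dist = math.sqrt(sum(w * (q - xj) ** 2
                             for w, q, xj in zip(weights, query, x)))
        scored.append((dist, y))
    scored.sort(key=lambda pair: pair[0])  # nearest first
    neighbours = scored[:k]
    # Assumed kernel: inverse distance (small constant avoids division by zero).
    kernel = [1.0 / (dist + 1e-9) for dist, _ in neighbours]
    weighted_sum = sum(kv * y for kv, (_, y) in zip(kernel, neighbours))
    return weighted_sum / sum(kernel)


# Toy one-feature data where the response equals the feature.
train_x = [[0.0], [1.0], [2.0], [10.0]]
train_y = [0.0, 1.0, 2.0, 10.0]
midpoint_estimate = knn_predict(train_x, train_y, [1.5], k=2)
```

With k = 2 the query at 1.5 is equidistant from its two neighbours, so both receive the same kernel weight and the estimate is their plain average.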

4.4. Random Forest

Random forest (RF) is a general-purpose machine learning approach that is used extensively for regression and classification. In this work, the regression capability of the RF model is used to predict the SOC of batteries used in electric vehicles. Random forest regression was introduced by Leo Breiman [55]. A random forest for regression is formed by a set of regression trees $\{h(X, \Theta_k),\ k = 1, \ldots, K\}$, where $X$ is the observed input vector and the $\Theta_k$ are independent and identically distributed random vectors. The outputs of random forest regression are numerical values, and $Y$ denotes the actual value of the output. The training data are assumed to be drawn independently from the joint distribution of $(X, Y)$. The RF estimate is the unweighted average of all regression tree predictions, and it may be computed as in [47]:

$$\bar{h}(X) = \frac{1}{K} \sum_{k=1}^{K} h(X, \Theta_k) \tag{10}$$

4.5. Extra Trees Regressor

Developed from the random forest (RF) model, the extra trees regressor (ETR) represents a notable advance in ensemble learning. The ETR method makes use of a collection of unpruned regression trees, each of which is grown via the traditional top-down approach. It differs from the RF model, which uses a two-step process for regression analysis that includes bootstrapping and bagging, in that the ETR model employs a randomized splitting strategy to create individual trees. At each node, RF searches for the best split among a random subset of features. ETR, on the other hand, draws a split point at random for every candidate feature before selecting the most suitable split among these options. This process can be expressed mathematically as follows [48]:

$$\text{Split}_{ETR} = \arg\min_{f,\, s} \ \text{Error}(f, s) \tag{11}$$

In this case, the chosen split in the ETR algorithm is indicated by the variable $\text{Split}_{ETR}$. The symbol $f$ represents a feature, and $s$ denotes a random split point for this feature. The function $\text{Error}(f, s)$ measures the error that results from splitting the data based on $f$ and $s$, and the technique selects the combination of $f$ and $s$ that minimizes this error. In the RF algorithm’s bagging phase, each tree in the ensemble contributes a prediction, and the final estimate is typically obtained by averaging these individual predictions. A similar approach is used by the ETR technique, but with a more varied ensemble of unpruned trees. Mathematically, the output of the final ETR model is given by Equation (12), as follows:

$$\hat{y}(X) = \frac{1}{N} \sum_{i=1}^{N} T_i(X) \tag{12}$$

where $N$ is the total number of trees in the ensemble, $T_i$ is the i-th tree in the ensemble, $X$ is the input feature vector, and $\hat{y}(X)$ is the predicted output. The ETR technique thus yields a regression approach that strikes a balance between accuracy and randomness.
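The split-selection rule described in this subsection can be sketched as below for a single node. The use of the summed squared error of the two child nodes as the error measure is an assumption made for illustration, and the function names are hypothetical. A full ETR would apply this rule recursively and average many such trees.

```python
import random


def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def extra_trees_split(X, y, rng):
    """Draw one random threshold per feature and keep the lowest-error split.

    Returns (feature index, split point, error), or None when every
    feature is constant.
    """
    best = None
    for f in range(len(X[0])):
        column = [row[f] for row in X]
        lo, hi = min(column), max(column)
        if lo == hi:
            continue  # a constant feature cannot separate the samples
        s = rng.uniform(lo, hi)  # random split point, not an optimized one
        left = [yi for xi, yi in zip(column, y) if xi <= s]
        right = [yi for xi, yi in zip(column, y) if xi > s]
        error = sse(left) + sse(right)
        if best is None or error < best[2]:
            best = (f, s, error)
    return best


X = [[0.0, 5.0], [1.0, 5.0], [2.0, 5.0], [3.0, 5.0]]
y = [0.0, 0.0, 1.0, 1.0]
feature, split_point, split_error = extra_trees_split(X, y, random.Random(0))
```

Because the thresholds are drawn at random rather than searched, individual trees are weaker but less correlated, which is the source of the variance reduction when their outputs are averaged.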

4.6. Extreme Gradient Boosting

XGBoost is an ensemble machine learning approach built on decision trees that uses the gradient boosting framework. Gradient boosting is a supervised learning approach that fits new models successively to make up for the shortcomings of the earlier models. The models are then linearly combined to create the final model. However, in the presence of noise, gradient boosting may result in overfitting. XGBoost provides the parameters γ and λ to avoid the overfitting issue caused by gradient boosting. XGBoost uses classification and regression tree (CART) models, in which each leaf node holds a single value. The XGBoost algorithm starts by initializing the model as a constant and then adds trees, so that the prediction is the sum of the outputs of the CARTs, as defined in the next equation [56]:

$$\hat{y}_i = \sum_{k=1}^{K} f_k(x_i), \quad f_k \in F$$

where $x_i$ is the TCN layer’s output, $\hat{y}_i$ is the predicted value of $y_i$, $K$ is the number of CARTs, and $F$ is the space of the regression trees. The formula that follows is the objective function that directs the training of each CART model:

$$Obj = \sum_{i} l\left( y_i, \hat{y}_i \right) + \sum_{k=1}^{K} \Omega(f_k)$$

To limit the complexity of the tree structure and avoid overfitting, the second term of the equation acts as a regularization term. The first term is the training loss, which measures the gap between the actual and predicted values. $\Omega$ is a regularization function that is used to prevent overfitting, and $l$ is the loss function computed from the desired value $y_i$ and the expected value $\hat{y}_i$. The values assigned to the leaf nodes are denoted as $w = (w_1, w_2, \ldots)$. XGBoost iteratively adds models that predict the residual parts at each step $t$, leading to a new objective function that can be expressed as an expansion. The structure of each tree and its leaf values are the variables that need to be learned, as follows:

$$Obj^{(t)} = \sum_{i} l\left( y_i,\ \hat{y}_i^{(t-1)} + f_t(x_i) \right) + \Omega(f_t)$$

4.7. Ensemble (Random Forest Combined Gradient Boost)

Ensemble learning is a powerful approach that combines the predictions of several machine learning models to enhance the precision and robustness of SOC estimates in electric vehicles. It uses the combined knowledge of several models to provide more accurate forecasts [28,55,57], as follows:

$$\hat{y}(x) = \frac{1}{N} \sum_{i=1}^{N} f_i(x)$$

where $\hat{y}(x)$ represents the ensemble forecast for input $x$, $N$ is the number of decision trees in the ensemble, and $f_i(x)$ is the estimate of the i-th decision tree. The random forest regressor and related methods, such as the gradient boosting regressor, have shown impressive performance in SOC estimation.

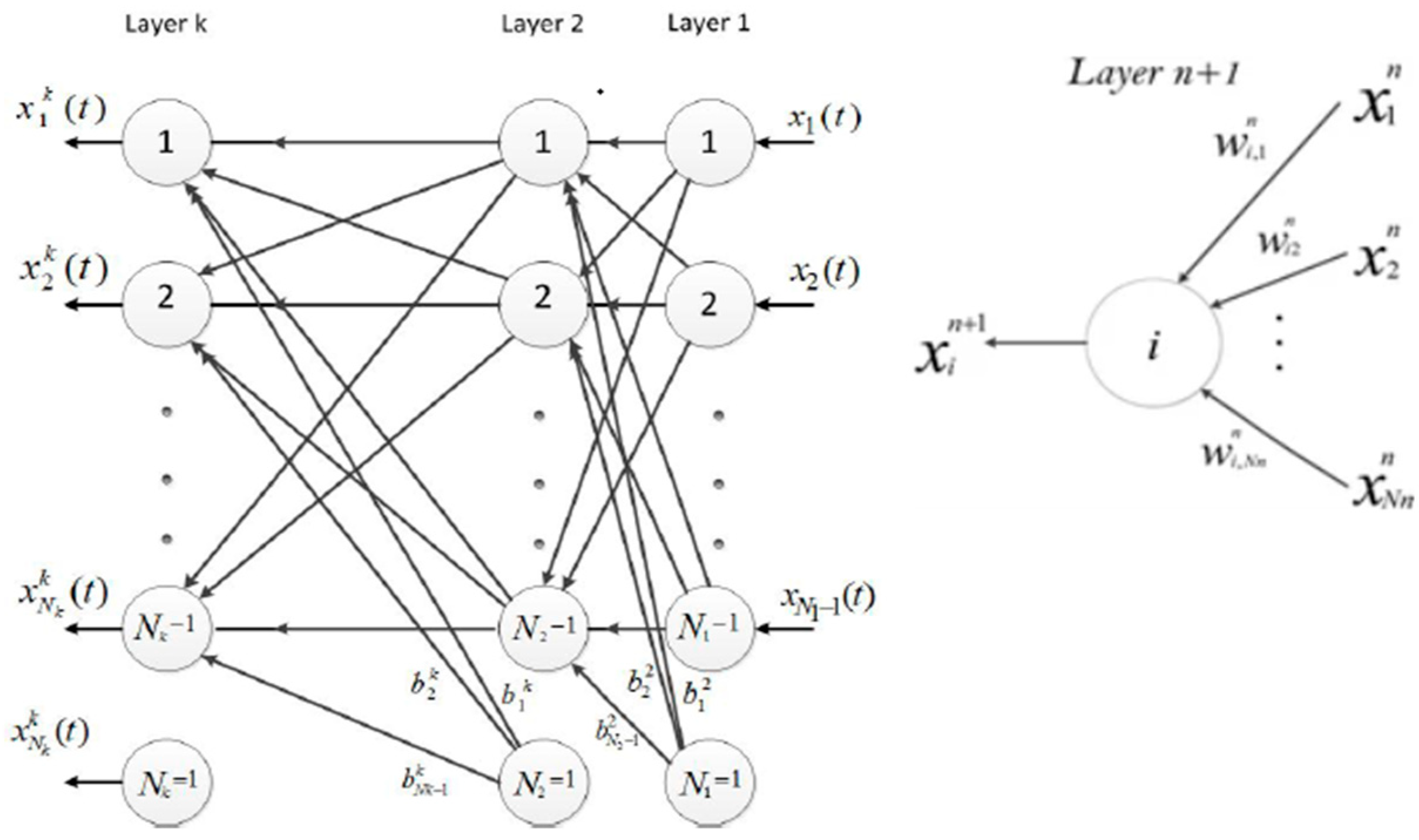

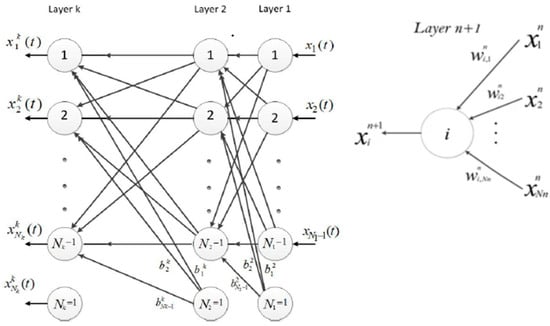

4.8. Artificial Neural Network

An artificial neural network (ANN) is formed of multiple processing units (neurons) arranged in layers, the intermediate ones being known as hidden layers; it models the correlations between the input variables and a target via a series of algebraic linear combinations. The input and output layers of the network correspond to the input variables and the target. In feed-forward networks, there is a static mapping between the input and the output: the input signal travels forward, layer by layer, from the input layer, via the hidden layers, to the output. Let k be the total number of layers, counting the input, hidden, and output layers. The ith node in the nth layer is denoted by node(n, i), and the overall number of nodes in the nth layer is Nn − 1. Figure 3 illustrates how node (n + 1, i) evaluates the following formula [58]:

Figure 3.

Diagram of a feed-forward multilayer perceptron system.

The weighted and biased mathematical functions for the ANN are produced and shown as follows:

where W represents weight and b and P represent bias and input.

The ANN used in this study has nine neurons in its input layer, which carry the values of the nine input variables at time t. The neurons in the hidden layer use the rectified linear unit (ReLU) activation function, while the output layer has just one neuron that provides the final SOC forecast, as seen in Figure 4.

Figure 4.

Artificial neural network for SOC estimation.
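A forward pass through a network of the kind described above (nine inputs, a ReLU hidden layer, and a single output neuron) can be sketched in plain Python. The hidden-layer width, the weights, and the feature values used here are placeholders chosen for illustration, not the trained values from this study.

```python
def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(weights, biases, inputs):
    """Fully connected layer: one weighted sum plus bias per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def mlp_forward(features, w_hidden, b_hidden, w_out, b_out):
    """Nine features -> ReLU hidden layer -> single SOC output."""
    hidden = relu(dense(w_hidden, b_hidden, features))
    return dense(w_out, b_out, hidden)[0]


# Placeholder network with two hidden neurons (assumed size).
features = [1.0] * 9                   # nine normalized inputs at time t
w_hidden = [[1.0] * 9, [-1.0] * 9]     # second neuron is switched off by ReLU
b_hidden = [0.0, 0.0]
w_out = [[0.1, 0.1]]
b_out = [0.05]
soc_estimate = mlp_forward(features, w_hidden, b_hidden, w_out, b_out)
```

In a trained model the weights and biases would come from fitting the simulated dataset, for instance with one of the deep learning packages named earlier.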

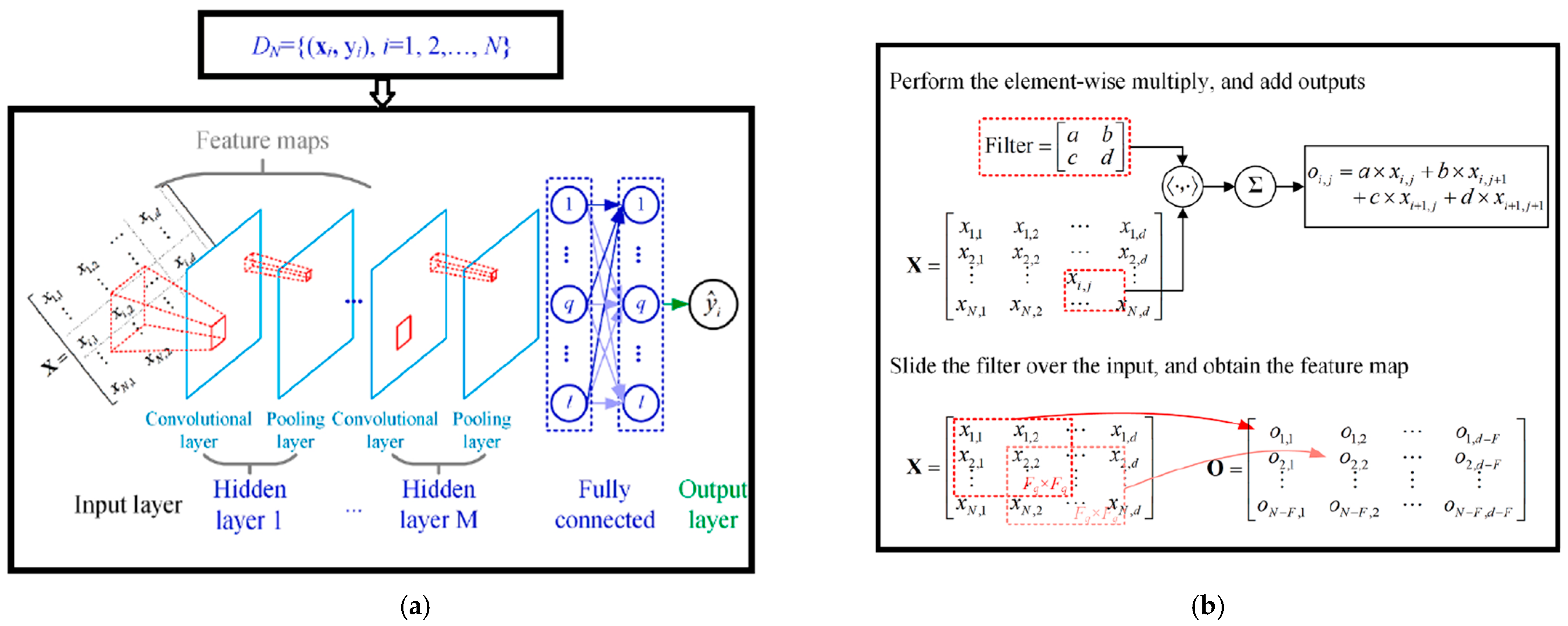

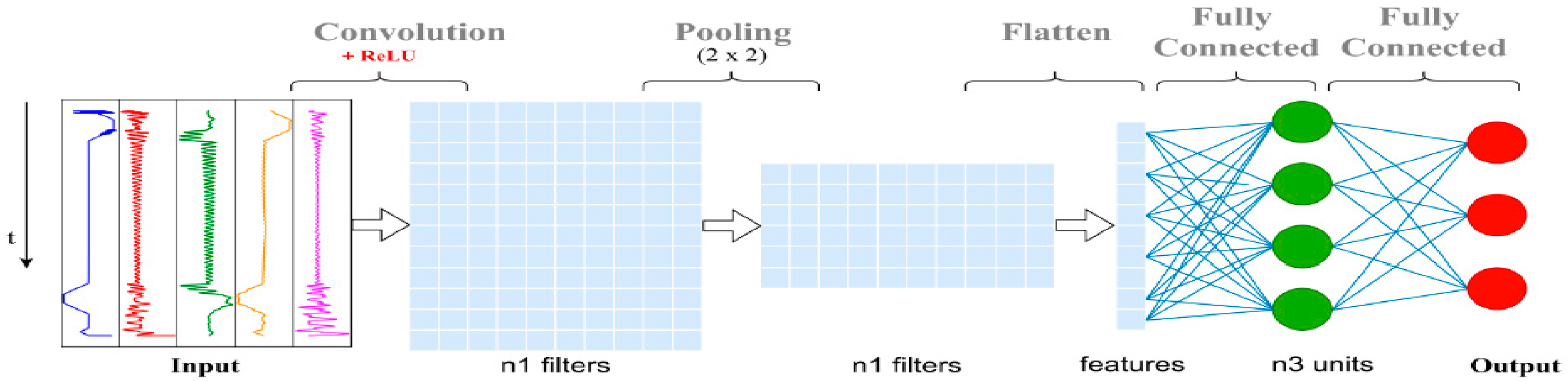

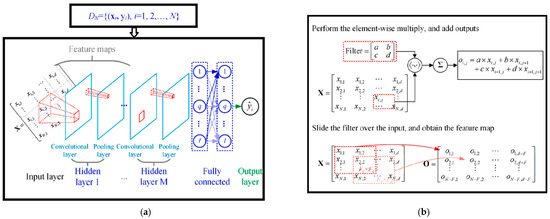

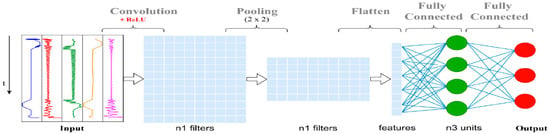

4.9. Convolutional Neural Network

Another DL method for estimating battery SOH is the CNN [54]. As can be seen in Figure 5a, a CNN with a 2D input consists of one or more stacks of convolutional and pooling layers, fully connected layers, and the output layer. Each convolutional layer output is linked to only a portion of the inputs, as opposed to being fully connected. This sparse connectivity is obtained by sliding a filter (the weights matrix) across the input space. As shown in Figure 5b, “convolution” describes the computation that occurs between a subset of the input and the filter (i.e., the weights matrix).

Figure 5.

(a) The convolutional neural networks (CNN) construction; and (b) schematic of convolution process for the feature extraction.

Because of their ability to automatically identify the significant attributes in the input data, CNNs are a type of neural network that is especially good at reducing the number of parameters in data processing. This is because CNNs extract the most significant features and search for correlations between the various input factors [59]. The CNN architecture is illustrated in Figure 6.

Figure 6.

CNN architecture.

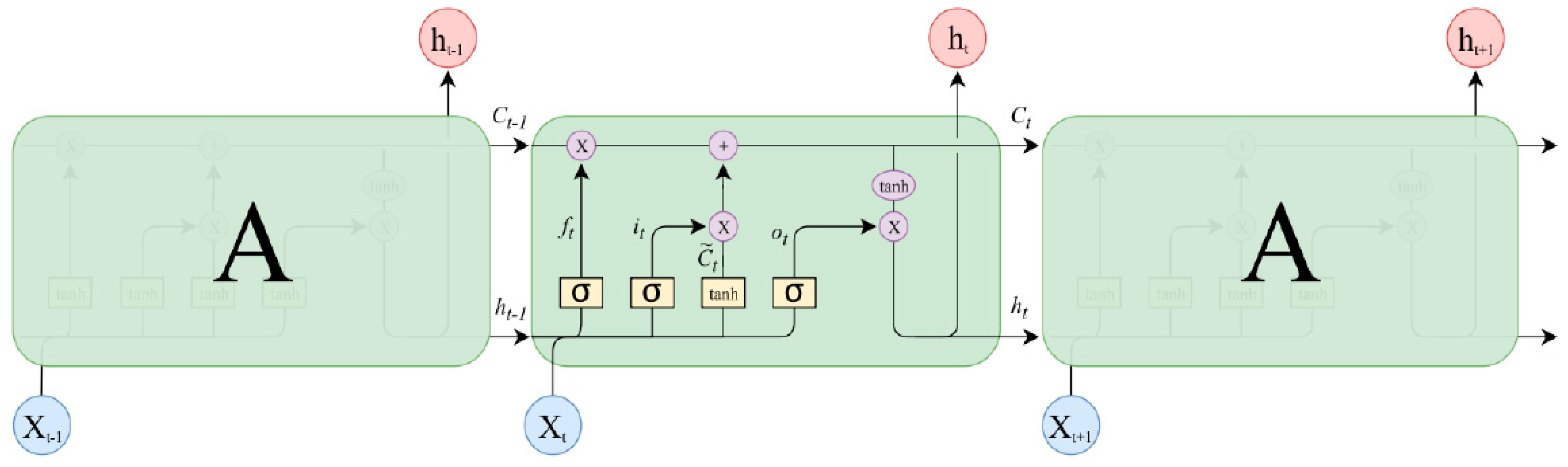

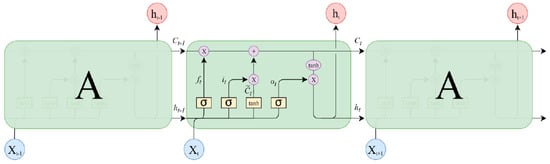

4.10. Long Short-Term Memory

To solve the RNN’s vanishing gradient issue, the LSTM [30,59,60] introduces a gated memory cell that reduces the incidence of vanishing gradients and preserves long-term dependencies. Cell memory, the core idea of the long short-term memory (LSTM), runs down the whole unrolled chain with only an affine transformation. The construction of a typical LSTM unit is shown in Figure 7, where $c_k$ represents the unit memory at time t = k.

Figure 7.

LSTM architecture.

The three types of gates that the LSTM unit uses to calculate the effects of cell memory on node output, the forgetting rate of cell memory upon current input, and the percentage of current cell memory merging into the old cell memory are the input gate I, forget gate F, and output gate O. At time k, an LSTM unit makes a forward pass in the manner described below:

where $x_k$ and $h_k$ are the unit input and the corresponding output at time k, respectively; $c_k$ indicates the hidden cell memory; w, u, and b are the weight matrices and bias vectors to be learned during training; and $\circ$ denotes the element-wise product of vectors. The activation vectors of the input gate, forget gate, and output gate are denoted by $i_k$, $f_k$, and $o_k$, respectively, while $\sigma_g$, $\sigma_c$, and $\sigma_h$ are the associated activation functions; specifically, $\sigma_g$ is a logistic sigmoid function, while $\sigma_c$ and $\sigma_h$ are both hyperbolic tangent functions.

Nine distinct input features were considered when developing the cell SOC estimation method, and the cell’s SOC was the algorithm’s output. In this work, we propose combining the LSTM with a genetic algorithm (GA) to determine the optimal number of LSTM units for battery SOC estimation, as shown in Figure 8.

Figure 8.

LSTM network optimization using genetic algorithm.
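The search loop in Figure 8 can be sketched as a small genetic algorithm over an integer number of LSTM units. This is a minimal illustration under stated assumptions, not the implementation used in this study. The function `surrogate_validation_error` is a hypothetical stand-in for training an LSTM and returning its validation error. The search bounds, population size, mutation settings, and the arbitrary optimum at 200 units are likewise assumptions.

```python
import random


def surrogate_validation_error(units):
    """Hypothetical stand-in for 'train an LSTM with `units` cells and return
    its validation MSE'. The optimum at 200 units is arbitrary."""
    return (units - 200) ** 2 / 1e4


def genetic_search(fitness, low=16, high=512, pop_size=12,
                   generations=25, mutation_rate=0.3, seed=0):
    """Return the unit count with the lowest fitness found by a simple GA."""
    rng = random.Random(seed)
    population = [rng.randint(low, high) for _ in range(pop_size)]
    best = min(population, key=fitness)

    def tournament():
        # Selection: the fitter of two random individuals becomes a parent.
        a, b = rng.sample(population, 2)
        return a if fitness(a) <= fitness(b) else b

    for _ in range(generations):
        children = [best]  # elitism: the best individual survives unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            child = (p1 + p2) // 2  # crossover: blend the two parents
            if rng.random() < mutation_rate:
                child += rng.randint(-32, 32)  # mutation: random perturbation
            children.append(min(high, max(low, child)))
        population = children
        best = min(population, key=fitness)
    return best


best_units = genetic_search(surrogate_validation_error)
print(best_units, surrogate_validation_error(best_units))
```

In a real search, each fitness evaluation would train and validate a network, so the population size and the number of generations directly set the computational cost.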

The GA is a metaheuristic stochastic optimization algorithm inspired by natural evolution [61]. GAs are often employed to find near-optimal solutions to optimization problems with large search spaces. Lastly, performance metrics commonly employed in regression problems were computed between the estimated and the true SOC to evaluate the effectiveness of the models. These metrics are the R2 score, median absolute error, mean square error, mean absolute error, and max error [62,63].
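For concreteness, the five metrics can be computed as in the following dependency-free sketch, which uses their standard definitions. The SOC values are made-up illustrative numbers, not results from this study, and the function name `regression_metrics` is an assumption.

```python
from statistics import mean, median


def regression_metrics(y_true, y_pred):
    """Return the five regression metrics used to compare estimated and true SOC."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    abs_errors = [abs(e) for e in errors]
    y_bar = mean(y_true)
    ss_res = sum(e * e for e in errors)              # residual sum of squares
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)   # total sum of squares
    return {
        "r2": 1.0 - ss_res / ss_tot,
        "median_ae": median(abs_errors),
        "mse": ss_res / len(errors),
        "mae": mean(abs_errors),
        "max_error": max(abs_errors),
    }


# Illustrative SOC values in percent (assumed, not taken from the paper).
soc_true = [100.0, 80.0, 60.0, 40.0, 20.0]
soc_pred = [98.0, 81.0, 60.0, 43.0, 20.0]

metrics = regression_metrics(soc_true, soc_pred)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

The R2 score is dimensionless, while the other four metrics carry the units of the SOC (or its square, for the mean square error).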

5. Results and Discussion

This section begins by presenting the simulated data. The simulations of the SOC deterioration brought on by BEV degradation are then shown. Finally, we present the SOC predictions produced by the machine and deep learning models, which were trained and tested on the simulated data.

5.1. Simulations of Battery Electric Vehicles and Generation of Datasets

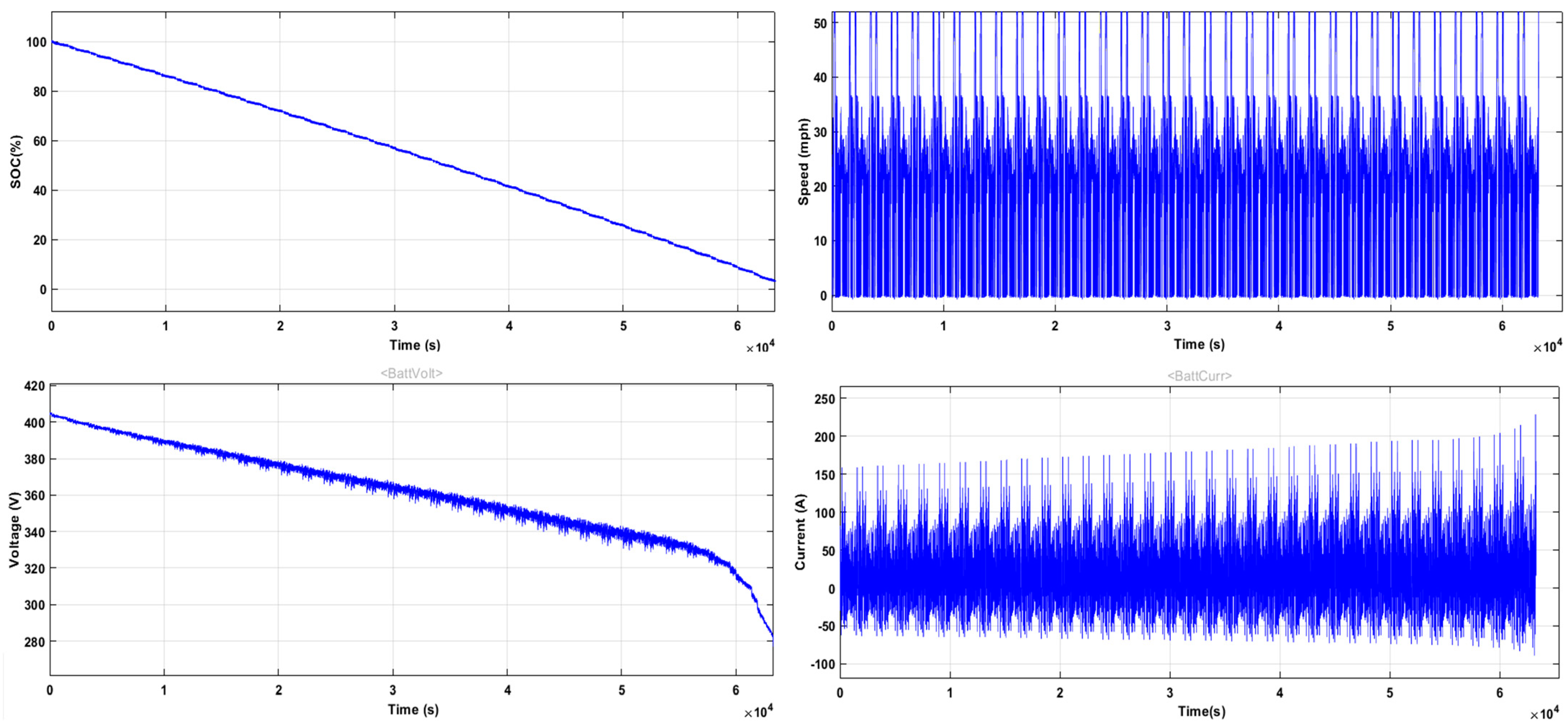

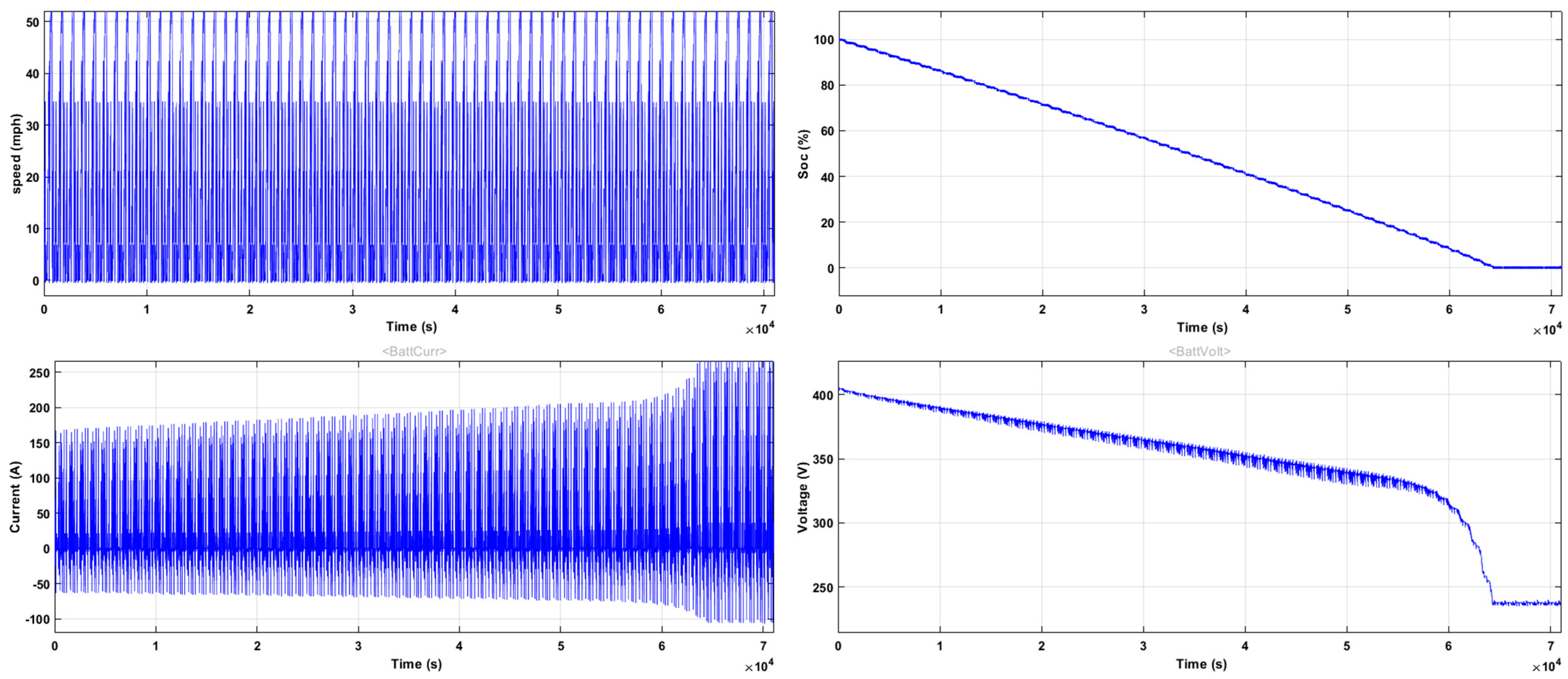

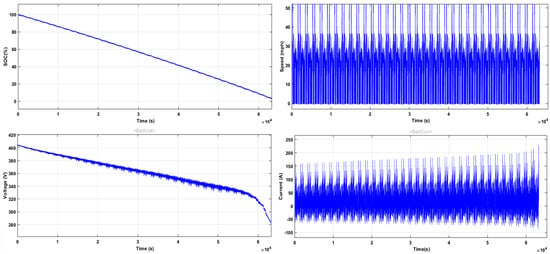

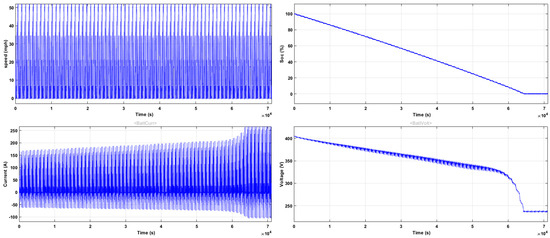

The BEV simulations consider many repetitions of seven driving cycles (DCs) for the Tesla S: US06, FTP75, HDUDDS, HWFET, SC03, WLTP, and LA92. Using several DCs lets us simulate various driving patterns and offer a wider range of conditions to a data-driven model. The US06 driving cycle represents a fast-paced, aggressive driving style characterized by sharp acceleration and deceleration, whereas FTP75 evaluates emissions in urban areas. The HWFET was created to determine the highway fuel economy rating, and the WLTP driving cycle is used to calculate light-duty vehicle emissions and fuel consumption.

The number of repetitions of each driving cycle is determined from the EPA driving range, which allows a full discharge to be simulated before the battery pack needs to be recharged. The decrease in battery SOC is used to verify the reliability of our vehicle model: the model is considered reliable if the battery is consistently empty (SOC < 10%) at the end of the simulation, when the maximum driving range is reached. The SOC fell to acceptable values for every driving cycle, so we believe that our BEV model accurately simulates the Tesla S. The computational model was run at a constant ambient temperature of 298 K (25 °C) for each condition.

The simulated discharge profiles of the Tesla S for the FTP75 and HDUDDS driving cycles are displayed in Figure 9 and Figure 10, respectively. The battery pack takes about 18 h to lose its charge under the FTP75 speed profile and about 17 h under HDUDDS. The figures also depict the shapes of the voltage and discharge-current profiles.

Figure 9.

Simulated discharge characteristics using the FTP75 driving cycle.

Figure 10.

Simulated discharge characteristics using the HDUDDS driving cycle.
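The repetition count and the reliability check described in this subsection amount to simple arithmetic, sketched below. The driving range and cycle length are placeholder inputs, not values from this study. Rounding up so that the repeated cycle covers the full range is also an assumption, as are the function names.

```python
import math


def cycle_repetitions(driving_range_km, cycle_distance_km):
    """Smallest number of back-to-back cycles that covers the driving range."""
    return math.ceil(driving_range_km / cycle_distance_km)


def model_is_reliable(final_soc_percent, threshold_percent=10.0):
    """Reliability check from the text: the pack should end up nearly empty."""
    return final_soc_percent < threshold_percent


# Placeholder inputs (assumptions, not values from the paper).
assumed_range_km = 600.0   # stand-in for the EPA driving range
assumed_cycle_km = 17.8    # stand-in for the length of one driving cycle

repetitions = cycle_repetitions(assumed_range_km, assumed_cycle_km)
print(repetitions)
print(model_is_reliable(8.5))
print(model_is_reliable(25.0))
```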

5.2. The Preparation of Data

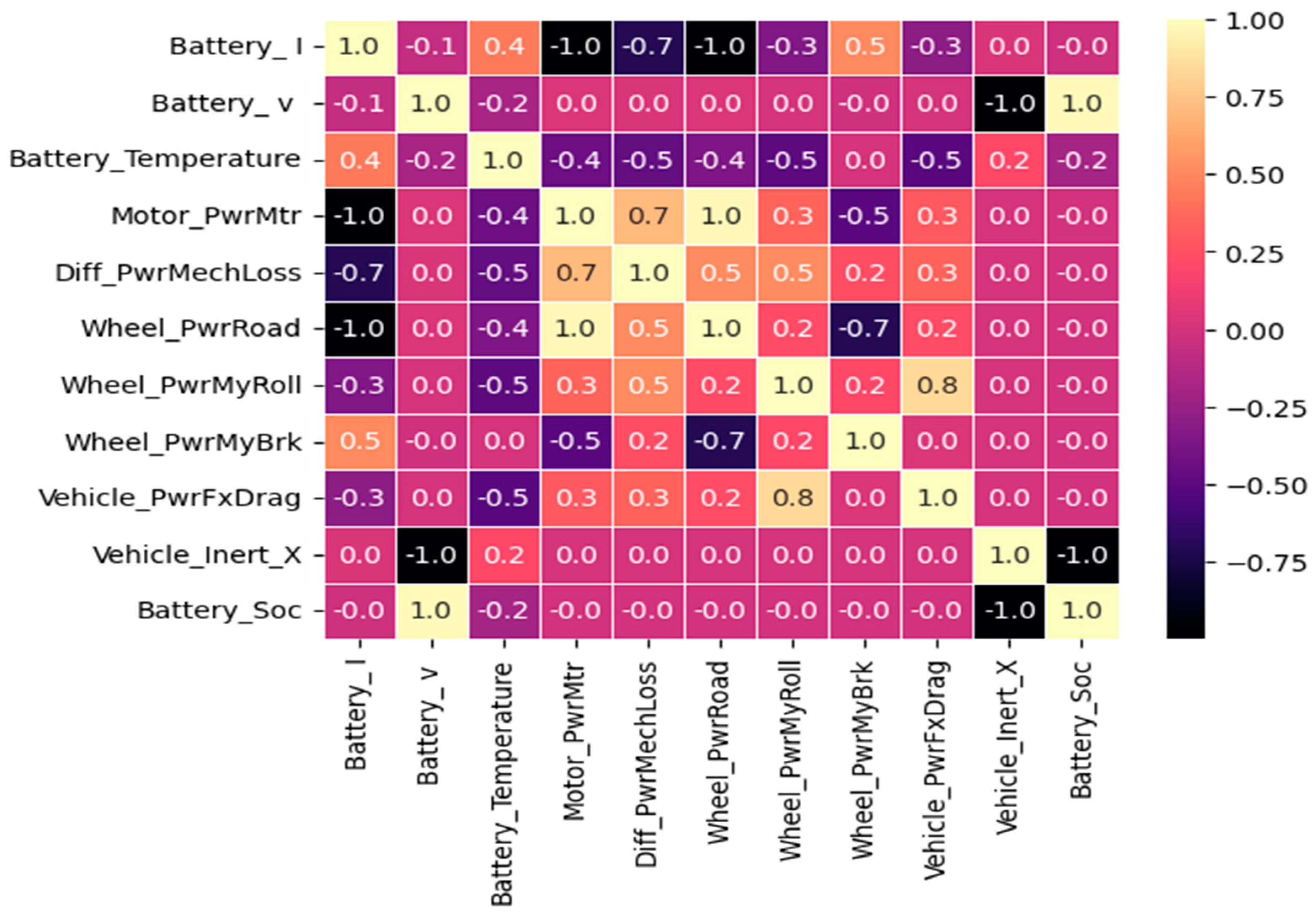

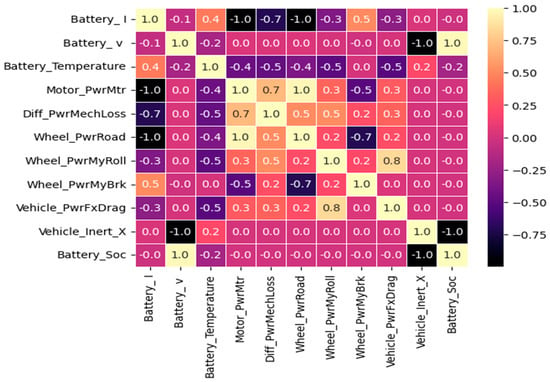

Before the simulated data generated with the modeling technique covered in the preceding section are fed into the machine learning models, they must be properly preprocessed. Data preparation starts with data collection, which is the first step of any machine learning project. The next step is to import the libraries required for the project, such as NumPy, pandas, Matplotlib, and seaborn; a library is a set of functions that can be called and used by an algorithm. Loading the data that the machine learning algorithm will use is the next crucial step, and it is the most important preprocessing step for machine learning. The data are then inspected for missing values. Scaling is not required for non-distance-based algorithms (such as the decision tree), whereas all features must be scaled for distance-based models. Lastly, the dataset is divided into sets for training, evaluation, and validation, which ends the data preparation stage. The training and testing datasets can be produced in several ways from the simulated data representing the seven driving cycles of the Tesla S. One approach is to randomly divide the datasets into 70% for training and 30% for testing, a ratio commonly used in machine learning. There are also several ways to investigate the relevance of features; as seen in Figure 11, correlations can be easily studied using correlation graphs.

Figure 11.

Correlation graph for the dataset of the FTP75 driving cycle in the BEV modelling.
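The preparation steps above (missing-value check, random 70/30 split, feature scaling, and correlation analysis) can be sketched without external dependencies as follows. The column names and the synthetic rows are hypothetical, and the helper functions are illustrative. In practice, library routines such as scikit-learn's `train_test_split` and `MinMaxScaler` or pandas' `DataFrame.corr` would typically be used instead.

```python
import random
from statistics import mean


def has_missing(rows):
    """True if any field in any row is None (the missing-value marker used here)."""
    return any(value is None for row in rows for value in row.values())


def split_rows(rows, test_fraction=0.3, seed=42):
    """Random split: 70% training and 30% testing by default."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def apply_min_max(values, lo, hi):
    """Min-max scaling with bounds fitted on the training data only."""
    return [(v - lo) / (hi - lo) for v in values]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Synthetic rows with hypothetical column names (not data from the paper).
rows = [{"voltage": 3.0 + 0.1 * k, "current": 2.0 - 0.05 * k, "soc": 10.0 * k}
        for k in range(10)]

print(has_missing(rows))
train_rows, test_rows = split_rows(rows)

# Fit the scaling bounds on the training set, then reuse them for the test set.
train_raw = [r["voltage"] for r in train_rows]
lo, hi = min(train_raw), max(train_raw)
train_voltage = apply_min_max(train_raw, lo, hi)
test_voltage = apply_min_max([r["voltage"] for r in test_rows], lo, hi)

corr_voltage_soc = pearson([r["voltage"] for r in rows], [r["soc"] for r in rows])
print(len(train_rows), len(test_rows), round(corr_voltage_soc, 6))
```

Fitting the scaling bounds on the training set alone avoids leaking information from the test set into the model inputs.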

The training and test datasets in this work are created from different DCs. In Case 1, FTP75, HDUDDS, HWFET, LA92, SC03, US06, and WLTP are each used for both training and testing, as shown in Table 1. In Case 2, FTP75, HDUDDS, and WLTP are used for training, while different driving cycles (HDUDDS, HWFET, LA92, SC03, US06, WLTP, HWFET, and SC03) are used for testing, as shown in Table 1.

Table 1.

Two different cases used to produce the training and test datasets from the thirteen simulated datasets of the Tesla S model for ten different machine and deep learning algorithms.

All the simulation tests were executed on a 2.6 GHz i7 PC with 16 GB of RAM using MATLAB 2023, Anaconda platform and COMSOL Multiphysics.

5.3. State-of-Charge Estimation Using Machine Learning Models

In this section, we present the SOC estimation results obtained by applying the proposed learning algorithms to the datasets generated by our modeling framework. The best SVR configuration was found to be a radial basis function kernel with the regularization parameter C, the polynomial kernel degree, and gamma equal to 1, 3, and scale, respectively. Similarly, the most accurate SOC estimation is obtained from the ANN constructed with two hidden layers and trained for 500 epochs with a batch size of 32 samples. The artificial neural network (ANN) used for this problem has nine neurons in the input layer, which represent the values of the nine input variables at a given time. The output layer has a single neuron that represents the final SOC prediction, whereas the neurons in the hidden layers are activated by the rectified linear unit (ReLU) activation function. The ANN model was built using the Adam optimizer and the mean squared error (MSE) loss function.
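The ANN topology described above (nine inputs, two ReLU hidden layers, one output neuron, MSE loss) can be illustrated with a minimal forward pass. The hidden-layer widths (16 and 8), the random weight initialization, the linear output activation, the input sample, and the target value are all assumptions made for illustration. Training with the Adam optimizer is omitted.

```python
import random


def relu(values):
    """Rectified linear unit applied element-wise."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Random weights and zero biases for a layer with n_in inputs and n_out outputs."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(x, layers):
    """ReLU on the hidden layers; linear activation on the single output (assumed)."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    return dense(x, *output)[0]


rng = random.Random(0)
layer_sizes = [9, 16, 8, 1]  # 9 inputs and 1 output from the text; 16 and 8 are assumed
layers = [init_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

sample = [0.5] * 9                         # one scaled input vector (hypothetical)
soc_estimate = forward(sample, layers)     # untrained network output
squared_error = (soc_estimate - 0.8) ** 2  # MSE term for a hypothetical target of 0.8
print(soc_estimate, squared_error)
```

During training, the mean of such squared errors over each batch of 32 samples would be minimized with respect to the weights and biases.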

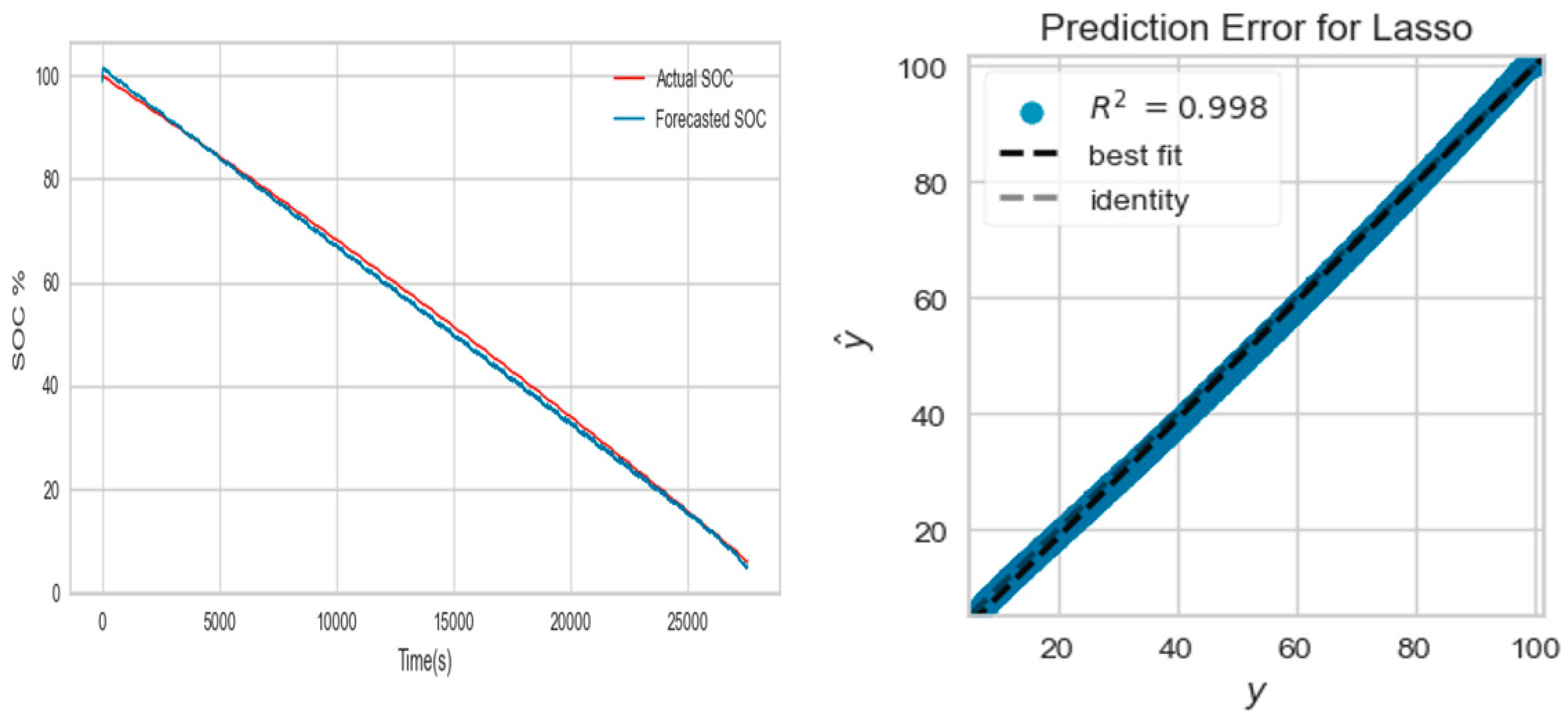

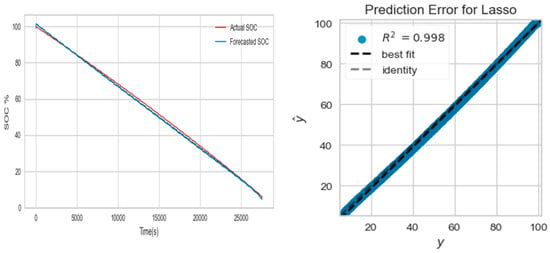

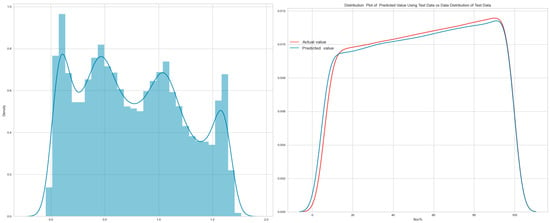

The LSTM network consists of three LSTM layers between the input and output layers. The LSTM is trained for 30 epochs with a batch size of 312, a learning rate of 0.01258, 289 neurons in layer 1 and 226 in the other layers, and a dropout of 0.2. The neurons are activated by the rectified linear unit (ReLU) activation function. The LSTM model was built using the Adam optimizer and the mean squared error (MSE) loss function, and the optimum parameters of the LSTM network were found using the GA.

The CNN model was also built using the Adam optimizer, the MSE loss function, and the ReLU activation function. It was constructed with 64 filters, a kernel size of 2, and a pool size of 2, and it was trained for 300 epochs with a batch size of 32.

The other machine learning algorithms, namely random forest, KNN, extra trees regressor, extreme gradient boosting, gradient boosting, and the ensemble (RF with GB), were also parametrized to obtain an accurate estimation of the state of charge: the number of estimators was 100, the number of neighbors was 5, and the learning rate of extreme gradient boosting was 0.1.

Figure 12 and Figure 13 show the performance analysis for the Tesla S model, including the SOC prediction, the R2 prediction, the error difference between the test and predicted values, and the distribution plot of the test and predicted values. The SOC estimation for the Tesla S using SVR was obtained by training the model on the FTP75 dataset and testing it on the HWFET dataset produced by the BEV model (Case 2).

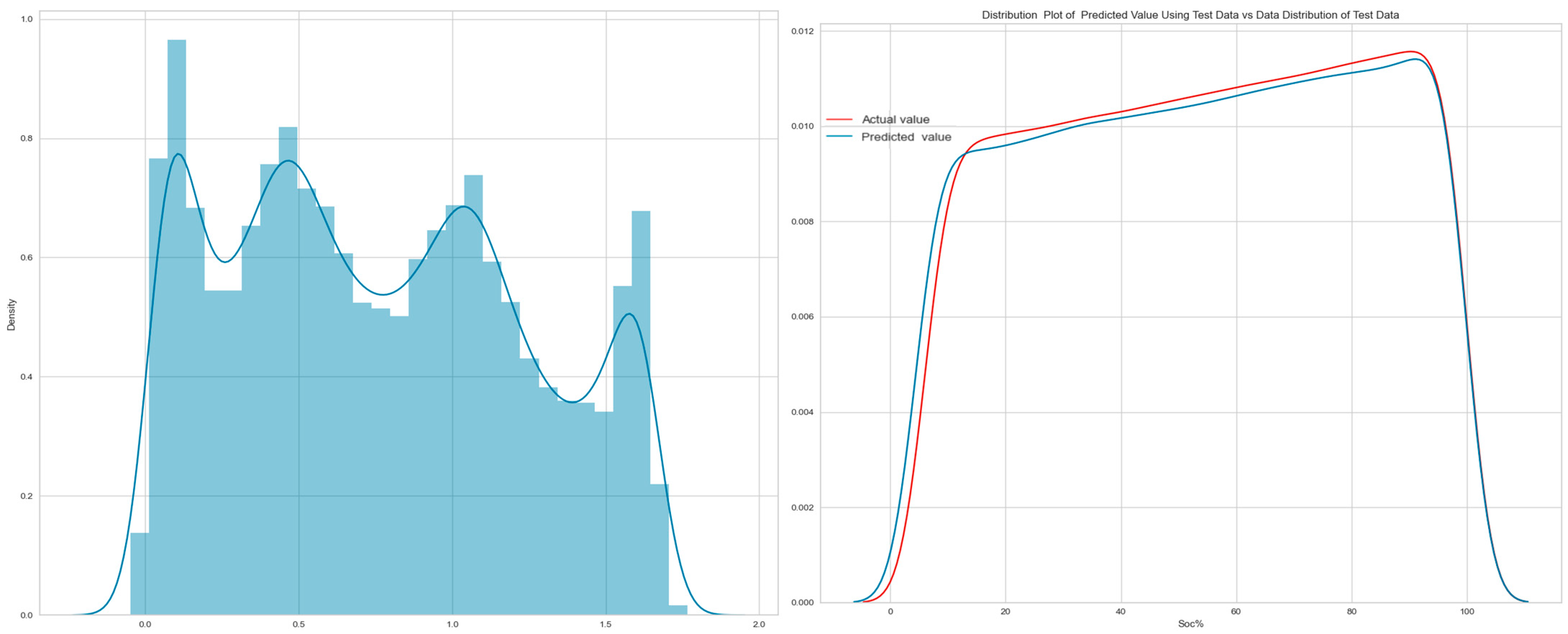

Figure 12.

SOC estimation and R2 prediction of the Tesla S model using SVR (FTP75 for training and HWFET for testing).

Figure 13.

Error difference and distribution plot between testing and prediction dataset using SVR (FTP75 for training and HWFET for testing).

For this case, the SVR estimation yields MSE = 1.2501, MAE = 1.0784, R2 = 0.9982, MAE = 1.00155, and max error = 1.8699. Table 2 reports the values of the performance indicators for the entire training dataset and the test datasets built.

Table 2.

The values of performance indicators for the entire training dataset and test datasets built for different cases.

6. Conclusions

This work investigates the integration of machine learning approaches with electrochemical and thermal data from the LIB model, together with automotive simulations from the BEV model, for estimating the SOC of batteries. Seven driving cycles (FTP75, US06, HWFET, HDUDDS, SC03, WLTP, and LA92) were simulated to reproduce the fluctuation of the discharge current flowing in the NMC cylindrical cells of the Tesla S. Two different cases were used to generate the training and test datasets from the thirteen simulated datasets of the Tesla S model for ten different machine and deep learning algorithms. Linear regression, SVR, KNN, RF, EXR, XGB, random forest combined with gradient boosting, ANN, CNN, and LSTM were trained and tested to predict the SOC using modeling data that accurately reflect the real-life behavior of BEVs. For a given algorithm, the results in the first case were mostly better than those in the second case. This is expected, because in the first case the model was trained and tested on the same drive cycle, whereas in the second case it was trained on one drive cycle and tested on another. This is one of the reasons that led us to use the second case to evaluate the models accurately. The suggested modeling framework can accommodate several BEVs with distinct battery structures. Future work will consider applications to different battery chemistries and the challenge of running computationally complex models in real-time applications.

Author Contributions

Conceptualization, E.I.E.-S. and S.K.E.; methodology, E.I.E.-S., S.K.E. and M.A.; software, E.I.E.-S. and S.K.E.; validation, E.I.E.-S. and M.A.; formal analysis, E.I.E.-S. and S.K.E.; investigation, S.K.E. and M.A.; resources, E.I.E.-S. and S.K.E.; data curation, E.I.E.-S. and S.K.E.; writing—original draft preparation, E.I.E.-S. and M.A.; writing—characteristics, E.I.E.-S. and S.K.E.; visualization, S.K.E. and M.A.; supervision, S.K.E. and M.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Taif University, Saudi Arabia, project no. (TU-DSPP-2024-70).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article. The data presented in this study are available on request from the corresponding author.

Acknowledgments

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-70).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Albrechtowicz, P. Electric vehicle impact on the environment in terms of the electric energy source—Case study. Energy Rep. 2023, 9, 3813–3821.

- Sanguesa, J.A.; Torres-Sanz, V.; Garrido, P.; Martinez, F.J.; Marquez-Barja, J.M. A Review on Electric Vehicles: Technologies and Challenges. Smart Cities 2021, 4, 372–404.

- Ralls, A.M.; Leong, K.; Clayton, J.; Fuelling, P.; Mercer, C.; Navarro, V.; Menezes, P.L. The Role of Lithium-Ion Batteries in the Growing Trend of Electric Vehicles. Materials 2023, 16, 6063.

- Liu, W.; Placke, T.; Chau, K. Overview of batteries and battery management for electric vehicles. Energy Rep. 2022, 8, 4058–4084.

- Thangavel, S.; Mohanraj, D.; Girijaprasanna, T.; Raju, S.; Dhanamjayulu, C.; Muyeen, S.M. A Comprehensive Review on Electric Vehicle: Battery Management System, Charging Station, Traction Motors. IEEE Access 2023, 11, 20994–21019.

- Kour, G.; Perveen, R. Battery Management System in Electric Vehicle. In Proceedings of the 4th International Computer Sciences And Informatics Conference (ICSIC 2022), Amman, Jordan, 28–29 June 2022.

- Halim, A.A.E.B.A.E.; Bayoumi, E.H.E.; El-Khattam, W.; Ibrahim, A.M. Implications of Lithium-Ion Cell Temperature Estimation Methods for Intelligent Battery Management and Fast Charging Systems. Bull. Pol. Acad. Sci. Tech. Sci. 2024, 72, 149171.

- Mukherjee, S.; Chowdhury, K. State of charge estimation techniques for battery management system used in electric vehicles: A review. In Energy Systems; Springer: Berlin/Heidelberg, Germany, 2023; pp. 1–44.

- Naik, M.M.; Koraddi, S.; Raju, A.B. State of Charge Estimation of Lithium-Ion Batteries for Electric Vehicle. In Proceedings of the 2023 International Conference for Advancement in Technology (ICONAT), Goa, India, 24–26 January 2023.

- Liu, Y.; Ma, R.; Pang, S.; Xu, L.; Zhao, D.; Wei, J.; Huangfu, Y.; Gao, F. A Nonlinear Observer SOC Estimation Method Based on Electrochemical Model for Lithium-Ion Battery. IEEE Trans. Ind. Appl. 2021, 57, 1094–1104.

- Waag, W.; Sauer, D.U. Adaptive estimation of the electromotive force of the lithium-ion battery after current interruption for an accurate state-of-charge and capacity determination. Appl. Energy 2013, 111, 416–427.

- Sarda, J.; Patel, H.; Popat, Y.; Hui, K.L.; Sain, M. Review of Management System and State-of-Charge Estimation Methods for Electric Vehicles. World Electr. Veh. J. 2023, 14, 325.

- Zine, B.; Marouani, K.; Becherif, M.; Yahmedi, S. Estimation of Battery Soc for Hybrid Electric Vehicle using Coulomb Counting Method. Int. J. Emerg. Electr. Power Syst. 2018, 19, 20170181.

- Pakpahan, J.F.; Dewangga, B.R.; Pratama, G.N.; Cahyadi, A.I.; Herdjunanto, S.; Wahyunggoro, O. State of Charge Estimation for Lithium Polymer Battery Using Kalman Filter under Varying Internal Resistance. In Proceedings of the 2019 International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 24–25 July 2019.

- Naseri, F.; Schaltz, E.; Stroe, D.-I.; Gismero, A.; Farjah, E. An Enhanced Equivalent Circuit Model With Real-Time Parameter Identification for Battery State-of-Charge Estimation. IEEE Trans. Ind. Electron. 2022, 69, 3743–3751.

- Jafari, S.; Byun, Y.-C. Efficient state of charge estimation in electric vehicles batteries based on the extra tree regressor: A data-driven approach. Heliyon 2024, 10, e25949.

- Lotfi, N.; Landers, R.G.; Li, J.; Park, J. Reduced-Order Electrochemical Model-Based SOC Observer With Output Model Uncertainty Estimation. IEEE Trans. Control Syst. Technol. 2017, 25, 1217–1230.

- Imran, R.M.; Li, Q.; Flaih, F.M.F. An Enhanced Lithium-Ion Battery Model for Estimating the State of Charge and Degraded Capacity Using an Optimized Extended Kalman Filter. IEEE Access 2020, 8, 208322–208336.

- Koseoglou, M.; Tsioumas, E.; Papagiannis, D.; Jabbour, N.; Mademlis, C. A Novel On-Board Electrochemical Impedance Spectroscopy System for Real-Time Battery Impedance Estimation. IEEE Trans. Power Electron. 2021, 36, 10776–10787.

- Feng, J.; Cai, F.; Yang, J.; Wang, S.; Huang, K. An Adaptive State of Charge Estimation Method of Lithium-ion Battery Based on Residual Constraint Fading Factor Unscented Kalman Filter. IEEE Access 2022, 10, 44549–44563.

- Jokic, I.; Zecevic, Z.; Krstajic, B. State-of-Charge Estimation of Lithium-Ion Batteries Using Extended Kalman Filter and Unscented Kalman Filter. In Proceedings of the 2018 23rd International Scientific-Professional Conference on Information Technology (IT), Zabljak, Montenegro, 19–24 February 2018.

- Chen, N.; Zhao, X.; Chen, J.; Xu, X.; Zhang, P.; Gui, W. Design of a Non-Linear Observer for SOC of Lithium-Ion Battery Based on Neural Network. Energies 2022, 15, 3835.

- Faisal, M.; Hannan, M.A.; Ker, P.J.; Lipu, M.S.H.; Uddin, M.N. Fuzzy-Based Charging–Discharging Controller for Lithium-Ion Battery in Microgrid Applications. IEEE Trans. Ind. Appl. 2021, 57, 4187–4195.

- Manriquez-Padilla, C.G.; Cueva-Perez, I.; Dominguez-Gonzalez, A.; Elvira-Ortiz, D.A.; Perez-Cruz, A.; Saucedo-Dorantes, J.J. State of Charge Estimation Model Based on Genetic Algorithms and Multivariate Linear Regression with Applications in Electric Vehicles. Sensors 2023, 23, 2924.

- Anushalini, T.; Revathi, B.S.; Sulthan, S.M. Role of Machine Learning Approach in the Assessment of Lithium-Ion Battery’s SOC for EV Application. In Proceedings of the 2023 IEEE International Transportation Electrification Conference (ITEC-India), Chennai, India, 12–15 December 2023.

- Lipu, M.S.H.; Miah, S.; Jamal, T.; Rahman, T.; Ansari, S.; Rahman, S.; Ashique, R.H.; Shihavuddin, A.S.M.; Shakib, M.N. Artificial Intelligence Approaches for Advanced Battery Management System in Electric Vehicle Applications: A Statistical Analysis towards Future Research Opportunities. Vehicles 2023, 6, 22–70.

- Hannan, M.A.; How, D.N.T.; Lipu, M.S.H.; Mansor, M.; Ker, P.J.; Dong, Z.Y.; Sahari, K.S.M.; Tiong, S.K.; Muttaqi, K.M.; Mahlia, T.M.I.; et al. Deep learning approach towards accurate state of charge estimation for lithium-ion batteries using self-supervised transformer model. Sci. Rep. 2021, 11, 19541.

- Khawaja, Y.; Shankar, N.; Qiqieh, I.; Alzubi, J.; Alzubi, O.; Nallakaruppan, M.; Padmanaban, S. Battery management solutions for li-ion batteries based on artificial intelligence. Ain Shams Eng. J. 2023, 14, 102213.

- Model S. Available online: https://www.tesla.com/models (accessed on 18 June 2024).

- Ragone, M.; Yurkiv, V.; Ramasubramanian, A.; Kashir, B.; Mashayek, F. Data driven estimation of electric vehicle battery state-of-charge informed by automotive simulations and multi-physics modeling. J. Power Sources 2021, 483, 229108.

- MATLAB and Simulink Release 2023a, The MathWorks, Inc. Available online: https://www.mathworks.com/products/new_products/release2023a.html (accessed on 4 April 2023).

- MATLAB and Powertrain Blockset Toolbox Release 2023a, The MathWorks, Inc. Available online: https://www.mathworks.com/products/powertrain.html (accessed on 18 April 2023).

- MATLAB and Battery Datasheet Block Release 2023a, The MathWorks, Inc. Available online: https://www.mathworks.com/help/autoblks/ref/datasheetbattery.html (accessed on 20 May 2023).

- Mohialden, Y.M.; Kadhim, R.W.; Hussien, N.M.; Hussain, S.A.K. Top Python-Based Deep Learning Packages: A Comprehensive Review. Int. J. Pap. Adv. Sci. Rev. 2024, 5, 1–9.

- Hao, J.; Ho, T.K. Machine Learning Made Easy: A Review of Scikit-learn Package in Python Programming Language. J. Educ. Behav. Stat. 2019, 44, 348–361.

- Stancin, I.; Jovic, A. An Overview and Comparison of Free Python Libraries for Data Mining and Big Data Analysis. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019.

- MATLAB and Mapped Motor Block Release 2023b, The MathWorks, Inc. Available online: https://www.mathworks.com/help/autoblks/propulsion.html (accessed on 20 September 2023).

- MATLAB and Limited Slip Differential Block Release 2023a, The MathWorks, Inc. Available online: https://www.mathworks.com/help/autoblks/transmission-and-drivetrain.html (accessed on 20 August 2023).

- MATLAB and Longitudinal Wheel Block Release 2023a, The MathWorks, Inc. Available online: https://www.mathworks.com/help/autoblks/ref/longitudinalwheel.html (accessed on 20 August 2023).

- MATLAB and Vehicle Body 1DOF Longitudinal Block Release 2023a, The MathWorks, Inc. Available online: https://www.mathworks.com/help/autoblks/ref/vehiclebody1doflongitudinal.html (accessed on 20 August 2023).

- Zhang, R.; Xia, B.; Li, B.; Cao, L.; Lai, Y.; Zheng, W.; Wang, H.; Wang, W. State of the Art of Lithium-Ion Battery SOC Estimation for Electrical Vehicles. Energies 2018, 11, 1820.

- Sundberg, N. Predicting Lithium-Ion Battery State of Health Using Linear Regression; Statistiska Institutionen, Uppsala Universitet: Uppsala, Sweden, 2024.

- Ben Youssef, M.; Jarraya, I.; Zdiri, M.A.; Ben Salem, F. Support vector regression-based state of charge estimation for batteries: Cloud vs non-cloud. Indones. J. Electr. Eng. Comput. Sci. 2024, 34, 697–710.

- Tian, H.; Li, A.; Li, X. SOC estimation of lithium-ion batteries for electric vehicles based on multimode ensemble SVR. J. Power Electron. 2021, 21, 1365–1373.

- Talluri, T.; Chung, H.T.; Shin, K. Study of Battery State-of-charge Estimation with kNN Machine Learning Method. IEIE Trans. Smart Process. Comput. 2021, 10, 496–504.

- Jafari, S.; Byun, Y.-C. XGBoost-Based Remaining Useful Life Estimation Model with Extended Kalman Particle Filter for Lithium-Ion Batteries. Sensors 2022, 22, 9522.

- Li, C.; Chen, Z.; Cui, J.; Wang, Y.; Zou, F. The Lithium-Ion Battery State-of-Charge Estimation Using Random Forest Regression. In Proceedings of the 2014 Prognostics and System Health Management Conference (PHM-2014 Hunan), Zhangjiajie, China, 24–27 August 2014.

- Jafari, S.; Shahbazi, Z.; Byun, Y.-C.; Lee, S.-J. Lithium-Ion Battery Estimation in Online Framework Using Extreme Gradient Boosting Machine Learning Approach. Mathematics 2022, 10, 888.

- Hernández, J.A.; Fernández, E.; Torres, H. Electric Vehicle NiMH Battery State of Charge Estimation Using Artificial Neural Networks of Backpropagation and Radial Basis. World Electr. Veh. J. 2023, 14, 312.

- Song, X.; Yang, F.; Wang, D.; Tsui, K.-L. Combined CNN-LSTM Network for State-of-Charge Estimation of Lithium-Ion Batteries. IEEE Access 2019, 7, 88894–88902.

- Zhao, F.; Li, P.; Li, Y.; Li, Y. The Li-Ion Battery State of Charge Prediction of Electric Vehicle Using Deep Neural Network. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019.

- Qu, K. Research on linear regression algorithm. MATEC Web Conf. 2024, 395, 01046.

- Issa, E.; Al-Gazzar, M.; Seif, M. Energy Management of Renewable Energy Sources Based on Support Vector Machine. Int. J. Renew. Energy Res. 2022, 12, 730–740.

- Sui, X.; He, S.; Vilsen, S.B.; Meng, J.; Teodorescu, R.; Stroe, D.-I. A review of non-probabilistic machine learning-based state of health estimation techniques for Lithium-ion battery. Appl. Energy 2021, 300, 117346.

- Sulaiman, M.H.; Mustaffa, Z. State of charge estimation for electric vehicles using random forest. Green Energy Intell. Transp. 2024, 3, 100177.

- Lee, J.-H.; Lee, I.-S. Hybrid Estimation Method for the State of Charge of Lithium Batteries Using a Temporal Convolutional Network and XGBoost. Batteries 2023, 9, 544.

- Mousaei, A.; Naderi, Y.; Bayram, I.S. Advancing State of Charge Management in Electric Vehicles With Machine Learning: A Technological Review. IEEE Access 2024, 12, 43255–43283.

- Ismail, M.; Dlyma, R.; Elrakaybi, A.; Ahmed, R.; Habibi, S. Battery State of Charge Estimation Using an Artificial Neural Network. In Proceedings of the 2017 IEEE Transportation Electrification Conference and Expo (ITEC), Harbin, China, 7–10 August 2017.

- Azkue, M.; Oca, L.; Iraola, U.; Lucu, M.; Martinez-Laserna, E. Li-ion Battery State-of-Charge estimation algorithm with CNN-LSTM and Transfer Learning using synthetic training data. In Proceedings of the 35th International Electric Vehicle Symposium & Exhibition, Oslo, Norway, 11–15 June 2022.

- Yang, F.; Zhang, S.; Li, W.; Miao, Q. State-of-charge estimation of lithium-ion batteries using LSTM and UKF. Energy 2020, 201, 117664.

- Anwaar, A.; Ashraf, A.; Bangyal, W.H.; Iqbal, M. Genetic Algorithms: Brief Review on Genetic Algorithms for Global Optimization Problems. In Proceedings of the 2022 Human-Centered Cognitive Systems (HCCS), Shanghai, China, 17–18 December 2022.

- Gaboitaolelwe, J.; Zungeru, A.M.; Yahya, A.; Lebekwe, C.K.; Vinod, D.N.; Salau, A.O. Machine Learning Based Solar Photovoltaic Power Forecasting: A Review and Comparison. IEEE Access 2023, 11, 40820–40845.

- “Regression Metrics”, Regression Metrics—Permetrics 2.0.0 Documentation. Available online: https://permetrics.readthedocs.io/en/latest/pages/regression.html (accessed on 2 July 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).