Abstract

Creating an interpretable model with high predictive performance is crucial in the eXplainable AI (XAI) field. In this study, we introduce an interpretable neural network-based regression model for tabular data. Our proposed model uses ordinary least squares (OLS) regression as the base-learner and updates its parameters with a neural network, which serves as the meta-learner. The meta-learner updates the regression coefficients using the confidence interval formula. We extensively compared our proposed model with other benchmark approaches on public regression datasets, and it achieved better overall predictive performance than the benchmark models. We also applied the model to synthetic data to measure its interpretability and showed that it can explain the correlation between input and output variables by approximating a local linear function for each point. In addition, we trained our model on economic data to discover how the relationship between the central bank policy rate and inflation changes over time. The results suggest that the effect of the central bank policy rate on inflation tends to strengthen during a recession and weaken during an expansion. We also analyzed CO2 emission data, where our model discovered interesting relationships between the input and target variables, such as a parabolic relationship between CO2 emissions and gross national product (GNP). Overall, these experiments show that our neural network-based interpretable model could be applied to many real-world problems where the data are tabular and explainable models are required.

1. Introduction

Tabular data are the most common data type because they cover many interesting problems across various domains. Moreover, predictive and explanatory modeling of tabular data is a non-trivial task, because such models often need to be interpretable in order to explain real-world phenomena.

Artificial intelligence (AI) has recently shown super-human performance in many domains, including image processing and natural language processing. To achieve such performance, researchers have developed increasingly complex black-box models. However, these complex deep learning models do not perform as well on tabular data, and their outputs are difficult to explain. Therefore, simple interpretable machine learning models are still widely used for tabular data. For example, ordinary least squares (OLS) regression has been extensively employed to explain a wide variety of economic relationships [1,2]. Because the statistical properties of linear regression make it trustworthy, its coefficients have been used to interpret the model by quantifying the effect of each input feature on the output [3]. Unfortunately, the predictive capacity of OLS regression is weaker than that of black-box machine learning models [4]. Deep learning models have achieved significantly higher predictive performance on data such as audio, images, video, and text, but they have not outperformed ensemble models such as LightGBM and CatBoost on tabular data [5,6].

In addition, many explainability techniques have been proposed to understand the predictions of complex machine learning (ML) models. The most popular explainable AI methods are SHapley Additive exPlanations (SHAP) and Local Interpretable Model-agnostic Explanations (LIME) [7,8]. Unfortunately, relatively little work has been done on designing explainable methods for tabular data [9].

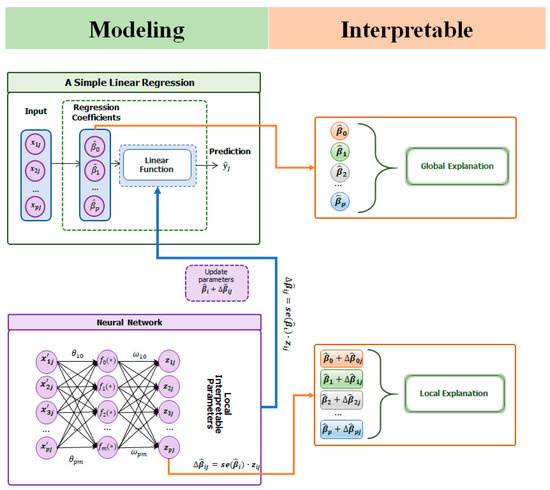

In this study, we introduce a novel neural network-based locally adaptive linear regression model that combines simple OLS regression with feed-forward neural networks to provide interpretability together with high predictive performance on tabular data. Our proposed framework is shown in Figure 1. We first estimate the linear regression coefficients with the OLS method to build our base-learner. In this phase, we do not normalize the input data ($X$), so that the regression coefficients remain meaningful and measure the actual effect of each input variable on the output. In the second phase, we train a neural network on the normalized input data ($\tilde{X}$) to update the regression coefficients for each observation. The neural network serves as our meta-learner: it adjusts the weight parameters of the base-learner, also known as the regression coefficients, for each observation to improve predictive performance. As shown in Figure 1, we update each regression coefficient using the formula for the confidence interval (CI) and then rebuild an interpretable local linear model for each observation.

Figure 1.

Summary of our proposed model, where $x_{ij}$ is the i-th variable of the j-th observation, $\tilde{x}_{ij}$ is the i-th normalized input variable of the j-th observation, $\hat{y}_j$ is the j-th predicted output, $\hat{\beta}_i$ is the estimated regression coefficient of the i-th variable, $se(\hat{\beta}_i)$ is the standard error of the i-th estimated regression coefficient, and $\hat{t}_{ij}$ is the predicted critical value for the i-th variable of the j-th observation. First, we fit the OLS estimator, and the resulting regression coefficients and standard errors are passed to the neural network model. Second, we use feed-forward neural networks to adjust each regression coefficient based on the normalized input data. Finally, we reconstruct a local linear regression model for each observation from the adjusted regression coefficients.

Because our proposed model uses the formula for a CI, the adjusted regression coefficients should lie between their lower and upper confidence limits. This helps the model avoid overfitting, and its interpretation is the same as that of OLS regression. At the same time, the model extends the predictive power of linear regression.

We evaluated the predictive performance and interpretability of our proposed approach on benchmark tabular datasets for the regression task. Our model achieved slightly higher predictive performance than the regression baselines and state-of-the-art models. Furthermore, we showed that it can measure the local effect of each input variable on the output for each observation.

The contributions of this study are listed as follows:

- (1) We introduce a neural network-based interpretable local linear regression model for tabular data.

- (2) In OLS regression, the coefficients are the same for all observations in a dataset, which limits its predictive performance. We make the regression coefficients locally adaptive within their confidence intervals using a meta-level neural network. Our model parameterizes a local linear function for each example in the data; as a result, it substantially improves on the predictive performance of OLS regression.

- (3) Our proposed model can avoid overfitting because the adapted coefficients lie between their lower and upper confidence limits.

- (4) Our proposed model can measure the local effect of each input variable on the output. Therefore, it is applicable to many real-world domains, such as economics, biology, management, and social science, where the data are tabular and interpretable models are required.

The rest of this paper is organized as follows. Section 2 discusses related research, and Section 3 introduces our neural network-based architecture. Section 4 presents the comparison and experimental results. Finally, we summarize this work and describe directions for further research.

2. Related Work

The development of locally adaptive regression approaches began much earlier [10,11,12,13]. These approaches fall into three main categories: nearest neighbor regression, weighted averaging regression, and locally weighted regression [14]. Nearest neighbor regression uses the k instances most similar to a query instance to obtain the best-fitting function for the target point [12,15]. Weighted averaging methods compute a weighted output of neighboring samples, weighted by their similarity to the target point [16,17]. Locally weighted regression (LWR) is very similar to our proposed model because it does not use a fixed set of parameters for all instances [11,12]. However, nearest neighbor-based models share the main weakness of memory-based learning algorithms: they must keep the full training data to make predictions for test data, which makes them computationally expensive on large datasets. Instead, we use a neural network as the meta-learner, which learns to adjust the parameters of the base-learner for each instance during training; therefore, our proposed model is more efficient in terms of memory usage and computation time on large datasets.
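For contrast with our approach, locally weighted regression can be sketched in a few lines. The following minimal Python sketch is illustrative and not taken from the cited works: the Gaussian kernel and the `bandwidth` parameter are assumptions. Each prediction solves a fresh weighted least-squares problem over the entire training set, which is exactly the memory and time cost discussed above.

```python
import numpy as np

def lwr_predict(X_train, y_train, x_query, bandwidth=1.0):
    """Locally weighted linear regression (Gaussian kernel) for one query point.

    Returns the prediction and the local coefficient vector [intercept, slopes...].
    The whole training set is needed for every query.
    """
    X_train = np.asarray(X_train, dtype=float)
    x_query = np.asarray(x_query, dtype=float)
    A = np.column_stack([np.ones(len(X_train)), X_train])   # design matrix with intercept
    a_q = np.concatenate([[1.0], x_query])                   # query row with intercept
    d2 = np.sum((X_train - x_query) ** 2, axis=1)            # squared distances to the query
    w = np.exp(-d2 / (2.0 * bandwidth ** 2))                 # kernel weights
    AW = A * w[:, None]
    # Weighted least squares: solve (A^T W A) beta = A^T W y.
    beta = np.linalg.lstsq(AW.T @ A, AW.T @ y_train, rcond=None)[0]
    return float(a_q @ beta), beta
```

For example, fitting $y = x^2$ on an evenly spaced grid and querying at $x = 1$ yields a local slope of 2, the derivative at that point.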

Using one neural network (the meta-learner) to generate the weight parameters of another model (the base-learner) is an established idea in the meta-learning field [18,19,20]. Based on this idea, we train a meta-learner to retrieve the best local linear model for each instance by producing the parameters of the base-learner. In meta-learning, parameters produced by a meta-learner are known as fast weights. Our proposed model is similar to Meta Networks (MetaNet) [21], which uses an additional neural network to generate fast weights for rapid generalization. This approach has been successfully applied to image, text, and audio data [22,23,24]. Unlike MetaNet, however, our architecture is interpretable and designed for tabular data.

Several similar approaches also exist for tabular data [25,26,27]. For example, Bildirici and Ersin (2009) [25] improved GARCH family models with artificial neural networks for financial time-series data. Bildirici and Ersin (2014) [26] also combined a Markov-switching ARMA-GARCH model with neural networks to predict exchange rates and stock returns. They used a neural network to predict the parameters of the ARMA and GARCH models, which is very similar to our work. More recently, Richman and Wüthrich (2021) [27] proposed the LocalGLMnet architecture, which is also very similar to our proposed architecture. LocalGLMnet improves the predictive power of generalized linear models (GLMs) by predicting their parameters with a neural network, while providing the same explainability as GLMs. Although its idea is the same as ours, its predicted parameters may not consistently explain the logical relationship between the input and output variables, because they depend heavily on the weight initialization of the neural network, which is trained with gradient descent. By contrast, the main advantage of our work is that we first perform OLS to obtain unbiased regression coefficients and then update them within their confidence intervals using a neural network.

Another related approach is the TS fuzzy model proposed by Takagi and Sugeno [28], which builds multiple models representing local input–output relations of a nonlinear system. However, because regression tasks involve many continuous variables, the TS fuzzy model often requires an enormous number of rules and does not account for model complexity [29]. Unlike TS fuzzy models, our proposed model does not rely on predefined rules; instead, the meta-learner learns the local input–output relations automatically from the data.

In addition, although state-of-the-art ML models have shown remarkable predictive performance in various domains, the inability to explain them reduces human trust. Consequently, eXplainable AI (XAI) has become a significant and active research area [30]. Many recent studies have sought to understand black-box models and increase human trust; however, most of them focus on post-hoc explainability rather than on inherently explainable models [5,8,31,32].

3. Methodology

Our proposed model has two main components: a base-learner and a meta-learner (see Figure 1). For the first component, we obtain an interpretable base-learner by fitting OLS regression on the training data. We then train a neural network meta-learner on the normalized training data to predict the change in each regression coefficient for each observation. Finally, we construct a local interpretable model from the adjusted regression coefficients to predict the target.

3.1. Ordinary Least Squares

We choose OLS regression, one of the most interpretable models, as our base-learner and present its theoretical and statistical properties in this section.

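Given a dataset of $N$ observations and $p$ independent variables ($X \in \mathbb{R}^{N \times p}$), the OLS regression model is formulated as follows:

$$y_j = \beta_0 + \sum_{i=1}^{p} \beta_i x_{ij} + \varepsilon_j, \qquad j = 1, \dots, N, \tag{1}$$

where $y_j$ is the target of the j-th observation, $x_{ij}$ is the i-th variable of the j-th observation, $\beta_0, \dots, \beta_p$ are the regression coefficients, and $\varepsilon_j$ is the random error of the j-th observation. OLS estimates the coefficients by minimizing the sum of squared residuals.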

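From Equation (1), the regression coefficients can be obtained with the following formula:

$$\hat{\boldsymbol{\beta}} = \left(X^{\top} X\right)^{-1} X^{\top} \mathbf{y}, \tag{2}$$

where $X$ is the input data matrix (with a leading column of ones for the intercept) and $\mathbf{y}$ is the target vector. However, the true values of the regression coefficients cannot be determined exactly from sample data [1]. Therefore, a confidence interval for each OLS regression coefficient is computed as follows:

$$\hat{\beta}_i - t_{\alpha/2,\,N-p-1}\, se(\hat{\beta}_i) \;\le\; \beta_i \;\le\; \hat{\beta}_i + t_{\alpha/2,\,N-p-1}\, se(\hat{\beta}_i), \tag{3}$$

where $t_{\alpha/2,\,N-p-1}$ is the critical value of the t-distribution with $N-p-1$ degrees of freedom and $se(\hat{\beta}_i)$ is the standard error of the coefficient $\hat{\beta}_i$.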
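Equations (2) and (3) can be computed directly with NumPy. This is a minimal sketch assuming a full-rank design matrix; the function names are hypothetical, and the critical value `t_crit` is supplied by the caller (for example, `scipy.stats.t.ppf(1 - alpha / 2, dof)` returns the two-sided t critical value).

```python
import numpy as np

def ols_fit(X, y):
    """Fit OLS with an intercept; return coefficients, standard errors, residual dof.

    X: (N, p) array of non-normalized inputs; y: (N,) target.
    """
    N, p = X.shape
    A = np.column_stack([np.ones(N), X])          # prepend intercept column (x_0j = 1)
    XtX_inv = np.linalg.inv(A.T @ A)
    beta_hat = XtX_inv @ A.T @ y                  # Equation (2)
    resid = y - A @ beta_hat
    dof = N - p - 1                               # residual degrees of freedom
    sigma2 = resid @ resid / dof                  # unbiased estimate of the error variance
    se = np.sqrt(np.diag(sigma2 * XtX_inv))       # standard error of each coefficient
    return beta_hat, se, dof

def confidence_interval(beta_hat, se, t_crit):
    """Equation (3): lower and upper limits beta_hat -/+ t_crit * se(beta_hat)."""
    return beta_hat - t_crit * se, beta_hat + t_crit * se
```

Using `np.linalg.inv` keeps the sketch close to Equation (2); a QR-based solver would be numerically safer for ill-conditioned data.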

3.2. Neural Networks

We use a multi-layer perceptron (MLP) as the meta-learner in our proposed architecture [33,34]. The goal of the meta-learner is to augment the base-learner to achieve better predictive performance. A simple MLP consists of input, hidden, and output layers, and each hidden layer consists of a certain number of neurons with an activation function.

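Equation (4) defines an MLP with two hidden layers of $m_1$ and $m_2$ nodes:

$$h^{(1)}_k = \sigma_h\!\left(\sum_{i=1}^{p} w^{(1)}_{ki} x_i + b^{(1)}_k\right), \quad h^{(2)}_l = \sigma_h\!\left(\sum_{k=1}^{m_1} w^{(2)}_{lk} h^{(1)}_k + b^{(2)}_l\right), \quad \hat{y}_g = \sigma_o\!\left(\sum_{l=1}^{m_2} w^{(3)}_{gl} h^{(2)}_l + b^{(3)}_g\right), \tag{4}$$

where $x_i$ is the i-th of $p$ input variables, $h^{(1)}$ and $h^{(2)}$ are the first and second hidden layers, $w^{(1)}$ and $b^{(1)}$ are the weight parameters of the first hidden layer, $w^{(2)}$ and $b^{(2)}$ are the weight parameters of the second hidden layer, $w^{(3)}$ and $b^{(3)}$ are the weight parameters of the output layer, $\hat{y}_g$ is the g-th output, and $\sigma_h$ and $\sigma_o$ are the activation functions of the hidden and output layers.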
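A forward pass through Equation (4) amounts to one matrix product and one activation per layer. The sketch below is illustrative: ReLU hidden activations, a linear output activation, and the initialization scale are assumptions, since this section does not fix them, and the function names are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def init_params(p, m1, m2, G, seed=0):
    """Random weights (scale 0.1, an arbitrary choice) and zero biases for Equation (4)."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(scale=0.1, size=(p, m1)), "b1": np.zeros(m1),
        "W2": rng.normal(scale=0.1, size=(m1, m2)), "b2": np.zeros(m2),
        "W3": rng.normal(scale=0.1, size=(m2, G)), "b3": np.zeros(G),
    }

def mlp_forward(X, params, hidden_act=relu, output_act=lambda z: z):
    """Two-hidden-layer MLP for a batch X of shape (n, p); returns shape (n, G)."""
    h1 = hidden_act(X @ params["W1"] + params["b1"])     # first hidden layer (m1 nodes)
    h2 = hidden_act(h1 @ params["W2"] + params["b2"])    # second hidden layer (m2 nodes)
    return output_act(h2 @ params["W3"] + params["b3"])  # output layer (G outputs)
```

With a linear output and $G$ equal to the number of regression coefficients, the same forward pass produces one unbounded value per coefficient, which is the role the meta-learner plays below.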

3.3. The Proposed Model

Our model receives two types of input: non-normalized ($X$) and normalized ($\tilde{X}$). The meta-learner takes the normalized input to adjust the regression coefficients of the base-learner, because normalized input data help neural network models learn faster and achieve better predictive performance [35]. In contrast, the base-learner receives the non-normalized input data to obtain an accurate explanation that is logically relevant to the real world, because data scaling can lead to misinterpretation of the model [36].

As mentioned above, the input of the meta-learner is the normalized input variables ($\tilde{X}$), and its output is the predicted critical value for each regression coefficient. Since the critical value can be any real number, we use a linear activation function in the output layer of the meta-learner. The meta-learner for the i-th variable is then defined as follows:

$$\hat{t}_i = w_{i0} + \sum_{k=1}^{K} w_{ik}\, \phi_{i,k}(\tilde{\mathbf{x}}), \tag{5}$$

where $\tilde{\mathbf{x}}$ is the normalized input, $\hat{t}_i$ is the critical value for the i-th variable, $w_i = (w_{i0}, \dots, w_{iK})$ are the output-layer parameters of the meta-learner for the i-th variable, $K$ is the number of nodes in the last hidden layer, and $\phi_i = (\phi_{i,1}, \dots, \phi_{i,K})$ represents the neural network for the i-th variable without its linear output layer.

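Furthermore, a local linear regression model can easily be rebuilt using the CI formula during the training phase. Our proposed model for the j-th observation is then:

$$y_j = \sum_{i=0}^{p} \left(\hat{\beta}_i + \hat{t}_{ij}\, se(\hat{\beta}_i)\right) x_{ij} + \varepsilon_j, \qquad \hat{t}_{ij} = \hat{t}_i(\tilde{\mathbf{x}}_j), \tag{6}$$

where $x_{ij}$ is the i-th input variable of the j-th observation (with $x_{0j} = 1$ for the intercept), $\tilde{\mathbf{x}}_j$ is the vector of normalized input variables of the j-th observation, $\hat{t}_{ij}$ is the critical value for the i-th variable, $se(\hat{\beta}_i)$ is the standard error of the i-th regression coefficient $\hat{\beta}_i$, and $\varepsilon_j$ is the error term of the j-th observation.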
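Equation (6) turns a matrix of predicted critical values into one coefficient vector per observation. The sketch below assumes the meta-learner's outputs are already available as an array `t_hat` of shape (N, p+1) with the intercept in column 0; the function names are hypothetical.

```python
import numpy as np

def local_coefficients(beta_hat, se, t_hat):
    """Per-observation coefficients beta_hat_i + t_hat_ij * se(beta_hat_i).

    beta_hat, se: (p+1,) OLS estimates incl. intercept; t_hat: (N, p+1) critical
    values predicted by the meta-learner for each observation.
    """
    return beta_hat[None, :] + t_hat * se[None, :]

def local_predict(X, beta_hat, se, t_hat):
    """Equation (6) without the error term: one linear model per row of X."""
    A = np.column_stack([np.ones(len(X)), X])   # x_0j = 1 for the intercept
    B = local_coefficients(beta_hat, se, t_hat)
    return np.sum(A * B, axis=1), B
```

With `t_hat` set to zeros, every local coefficient vector equals the OLS estimate, so the predictions reduce to plain OLS. The sketch does not bound `t_hat`; clipping it to `[-t_crit, t_crit]` would be one way to keep each coefficient strictly inside its interval.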

3.4. Model Training

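In Equation (6), $\hat{\beta}_i$ and $se(\hat{\beta}_i)$ are not learnable parameters, whereas $\hat{t}_{ij}$ contains the learnable parameters described in Equation (5). Therefore, Equation (6) can be rewritten for the j-th observation as follows:

$$y_j = \sum_{i=0}^{p} \hat{\beta}_i x_{ij} + \sum_{i=0}^{p} \hat{t}_{ij}\, se(\hat{\beta}_i)\, x_{ij} + \varepsilon_j. \tag{7}$$

From Equation (7), we can separate the part that contains only learnable parameters:

$$y_j - \sum_{i=0}^{p} \hat{\beta}_i x_{ij} = \sum_{i=0}^{p} \hat{t}_{ij}\, se(\hat{\beta}_i)\, x_{ij} + \varepsilon_j, \tag{8}$$

$$r_j = \sum_{i=0}^{p} \hat{t}_{ij}\, se(\hat{\beta}_i)\, x_{ij} + \varepsilon_j, \tag{9}$$

where $r_j = y_j - \sum_{i=0}^{p} \hat{\beta}_i x_{ij}$ is the part of the j-th observation not predicted by OLS. We can now formulate our loss function based on Equation (9):

$$\mathcal{L}(\theta) = \frac{1}{N} \sum_{j=1}^{N} \left| r_j - \sum_{i=0}^{p} \hat{t}_{ij}\, se(\hat{\beta}_i)\, x_{ij} \right|. \tag{10}$$

We use this mean absolute error loss to optimize the parameters of the meta-learner ($\theta$, i.e., the weights $w_i$ and those of $\phi_i$ for all variables).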
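Equations (8) to (10) show that the meta-learner only has to explain the OLS residual. A minimal NumPy sketch of the residual target and the loss, using the same hypothetical array layout as above (intercept in column 0, `t_hat` of shape (N, p+1)):

```python
import numpy as np

def ols_residual_target(X, y, beta_hat):
    """r_j = y_j - sum_i beta_hat_i x_ij: the part of y not explained by OLS (Equation (9))."""
    A = np.column_stack([np.ones(len(X)), X])
    return y - A @ beta_hat

def meta_mae_loss(X, r, se, t_hat):
    """Equation (10): mean over observations of |r_j - sum_i t_hat_ij * se_i * x_ij|."""
    A = np.column_stack([np.ones(len(X)), X])
    correction = np.sum(t_hat * se[None, :] * A, axis=1)
    return float(np.mean(np.abs(r - correction)))
```

Because $\hat{\beta}$ and $se(\hat{\beta})$ are fixed, the residual target `r` can be computed once before training and reused in every epoch.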

Recall that the estimated regression coefficients and their standard errors are fixed numerical inputs obtained from the OLS estimator. In addition, the meta-learner can consist of one or multiple neural networks, and the number of meta-learner outputs must equal the number of variables (including the intercept). Both architectures can easily be trained with stochastic gradient descent (SGD) and the backpropagation algorithm. The training procedure of our proposed model is shown in Algorithm 1. First, we fit the OLS estimator to obtain the regression coefficients, their standard errors, and the predictions for the training and validation sets (line 1). We then train the meta-learner with SGD and backpropagation, starting from line 3. To select the best model, we use early stopping: from line 11, we calculate the validation loss at every epoch, keep the best model (lines 12–15), and stop training once the number of epochs without improvement reaches the patience number (lines 16–19).

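| Algorithm 1. Model training for our proposed model |
| :--- |
| **Input:** training set with non-normalized input $X$, normalized input $\tilde{X}$, and target $\mathbf{y}$ |
| validation set with non-normalized input $X^{v}$, normalized input $\tilde{X}^{v}$, and target $\mathbf{y}^{v}$ |
| epoch number $E$; |
| early stopping patience number $P$; |
| learning rate $\eta$ |
| **Output:** meta-learner $f^{*}$, the estimated regression coefficients $\hat{\boldsymbol{\beta}}$ and their standard errors $se(\hat{\boldsymbol{\beta}})$ |
| **Procedure:** { |
| 1: perform OLS estimator to obtain $\hat{\boldsymbol{\beta}}$; $se(\hat{\boldsymbol{\beta}})$; $\hat{\mathbf{y}}^{OLS}$, $\hat{\mathbf{y}}^{v,OLS}$ |
| 2: $L_{best} \leftarrow \infty$; $\mathbf{r} \leftarrow \mathbf{y} - \hat{\mathbf{y}}^{OLS}$; $\mathbf{r}^{v} \leftarrow \mathbf{y}^{v} - \hat{\mathbf{y}}^{v,OLS}$; $count \leftarrow 0$ |
| 3: for ep = 0 to $E$ do //loop for epoch iterations |
| 4: for j = 1 to N do |
| 5: $\hat{\mathbf{t}}_j \leftarrow f(\tilde{\mathbf{x}}_j;\theta)$ |
| 6: $\ell_j \leftarrow \left\lvert r_j - \sum_{i=0}^{p} \hat{t}_{ij}\, se(\hat{\beta}_i)\, x_{ij} \right\rvert$ |
| 7: $\phi \leftarrow \phi - \eta \nabla_{\phi} \ell_j$ //gradient descent |
| 8: $w \leftarrow w - \eta \nabla_{w} \ell_j$ //gradient descent |
| 9: end for |
| 10: $\hat{\mathbf{t}}^{v} \leftarrow f(\tilde{X}^{v};\theta)$ |
| 11: $L_{val} \leftarrow \frac{1}{N_v} \sum_{j} \left\lvert r^{v}_j - \sum_{i=0}^{p} \hat{t}^{v}_{ij}\, se(\hat{\beta}_i)\, x^{v}_{ij} \right\rvert$ |
| 12: if $L_{val} < L_{best}$ do |
| 13: $L_{best} \leftarrow L_{val}$ |
| 14: $count \leftarrow 0$ |
| 15: $f^{*} \leftarrow f(\cdot;\theta)$ //best meta-learner so far, returned as output |
| 16: else do |
| 17: $count \leftarrow count + 1$ |
| 18: if $count \ge P$ do |
| 19: break |
| 20: end for |
| } |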
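The loop in Algorithm 1 can be sketched in NumPy as follows. To stay short and dependency-free, this sketch replaces the MLP meta-learner with a linear map `t_hat = Z @ V + c` (a hypothetical simplification, not the architecture used in our experiments), visits the training observations in shuffled order, and updates the parameters with the subgradient of the per-sample absolute error from Equation (10). The early stopping logic follows the structure of lines 10–19: the best validation loss and parameters are kept, and training stops after `patience` epochs without improvement.

```python
import numpy as np

def train_meta_learner(X, Z, r, Xv, Zv, rv, se, epochs=100, patience=10, lr=0.01, seed=0):
    """Sketch of Algorithm 1 with a linear meta-learner t_hat = Z @ V + c.

    X, Xv: non-normalized inputs (N, p); Z, Zv: normalized inputs (N, q);
    r, rv: OLS residuals y - y_hat_OLS; se: OLS standard errors (p+1,), intercept first.
    The linear meta-learner is a hypothetical stand-in for the MLP.
    """
    rng = np.random.default_rng(seed)
    A = np.column_stack([np.ones(len(X)), X])      # x_0j = 1 for the intercept
    Av = np.column_stack([np.ones(len(Xv)), Xv])
    V = rng.normal(scale=0.01, size=(Z.shape[1], A.shape[1]))
    c = np.zeros(A.shape[1])
    best_loss, best, count = np.inf, (V.copy(), c.copy()), 0

    for _ in range(epochs):
        for j in rng.permutation(len(A)):          # per-sample SGD (lines 4-9)
            t_j = Z[j] @ V + c                     # predicted critical values
            err = r[j] - np.sum(t_j * se * A[j])   # residual left after the correction
            grad_t = -np.sign(err) * se * A[j]     # subgradient of |err| w.r.t. t_j
            V -= lr * np.outer(Z[j], grad_t)
            c -= lr * grad_t
        t_v = Zv @ V + c                           # validation critical values (line 10)
        val_loss = np.mean(np.abs(rv - np.sum(t_v * se * Av, axis=1)))  # line 11
        if val_loss < best_loss:                   # keep the best model (lines 12-15)
            best_loss, best, count = val_loss, (V.copy(), c.copy()), 0
        else:                                      # patience check (lines 16-19)
            count += 1
            if count >= patience:
                break
    return best, best_loss
```

Swapping the linear map for an MLP changes only the forward pass and the gradient computation; in practice an automatic differentiation framework would compute those gradients.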

4. Experimental Result

4.1. Benchmark Datasets

Table 1 summarizes the public benchmark tabular datasets used to evaluate predictive performance on the regression task. Datasets 1 to 5 were retrieved from the UCI Machine Learning Repository [37]. The other three datasets were obtained from different sources: the California Housing dataset for predicting house prices [38], the FICO dataset for credit scoring [39], and the Bodyfat dataset for estimating body fat [40].

Table 1.

Summary of datasets used in the comparison of the predictive performance.

We also trained our model on synthetic and real-world economic datasets to demonstrate its interpretability in Section 4.5.

4.2. Baseline Models and Hyperparameters

For the regression baselines, we chose linear regression (OLS), Bayesian regression [41], lasso regression [42], and ridge regression [43] for the predictive performance comparison.

As additional baselines, we compare our results with the Neural Additive Model (NAM) [44] and TabNet [6], which are among the most popular high-performance interpretable models for tabular data. We also performed additional experiments with the LightGBM [45] and CatBoost [46] machine learning algorithms to compare their predictive performance with that of our model.

We also need to configure the architecture of our meta-learner and its training hyperparameters. We trained two types of architecture: a meta-learner consisting of multiple MLPs, with one MLP assigned to each regression coefficient (mult MLP), and a meta-learner consisting of a single MLP with multiple outputs, where the number of output neurons equals the number of variables including the intercept (single MLP). Each MLP has three hidden layers with 256 neurons each.

For the other hyperparameters, we set the maximum number of epochs to 10,000 and the learning rate to 0.01, and we used early stopping to select the best model on the validation set. We used the same configuration for all datasets and evaluated and compared the models with five-fold cross-validation.
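The evaluation protocol can be sketched as follows. This NumPy sketch fills in details the text leaves open, so they are assumptions: folds are formed from a seeded random permutation, and z-score standardization (one common choice of normalization for $\tilde{X}$) is fitted on the training fold only, so that no test-fold statistics leak into the meta-learner's input. The function names are hypothetical.

```python
import numpy as np

def kfold_indices(n, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n), k)   # k nearly equal folds
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[m] for m in range(k) if m != i])
        yield train_idx, test_idx

def standardize(X_train, X_other):
    """Z-score both arrays using statistics from the training fold only."""
    mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
    sd = np.where(sd == 0, 1.0, sd)                 # guard against constant columns
    return (X_train - mu) / sd, (X_other - mu) / sd
```

The validation set used for early stopping would be carved out of each training fold; the split ratio is not stated in the text.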

4.3. Evaluation Metrics

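Evaluation metrics for regression tasks mostly measure the error between the observed and predicted values of the target variable [47]. We use the root mean square error (RMSE) and mean absolute error (MAE) to measure model performance:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(\hat{y}_i - y_i\right)^2}, \tag{11}$$

$$\mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left|\hat{y}_i - y_i\right|, \tag{12}$$

where $\hat{y}_i$ is the i-th predicted value, $y_i$ is the i-th observed value, and $n$ is the number of observations in the test set.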
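Equations (11) and (12) translate directly into NumPy; this minimal sketch only makes the definitions concrete.

```python
import numpy as np

def rmse(y_true, y_pred):
    """Equation (11): square root of the mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_pred - y_true) ** 2)))

def mae(y_true, y_pred):
    """Equation (12): mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_pred - y_true)))
```

For example, with observed values `[3, -1, 2]` and predictions `[2.5, 0, 2]`, MAE is 0.5 and RMSE is $\sqrt{1.25/3} \approx 0.645$; RMSE is larger because squaring weights the single error of 1 more heavily.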

4.4. Comparison of Predictive Performance

This experiment aims to show how much the predictive performance of OLS regression improves after it is augmented with neural networks.

Our proposed model achieved the best RMSE on 3 of the 8 datasets (Energy Efficiency, Naval Propulsion, and Bodyfat) and comparable performance on the other datasets (see Table 2). The regression baseline models performed worse than our proposed model on all datasets. As shown in Table 2, OLS regression performs worse than lasso, ridge, and Bayesian regression, but its predictive power improves substantially after being augmented with neural networks. Table 3 reports the predictive performance on the 8 datasets in terms of MAE. Our proposed model notably outperformed the regression and state-of-the-art baseline models on four datasets (Energy Efficiency, Naval Propulsion, Protein Structure, and Bodyfat).

Table 2.

Results on benchmark datasets comparing RMSE.

Table 3.

Results on benchmark datasets comparing MAE.

Among the machine learning models, LightGBM achieved the best RMSE on three datasets (Concrete Strength, Power Plant, and California Housing), while CatBoost achieved the best RMSE on two datasets (Protein Structure and FICO). In terms of MAE, LightGBM and CatBoost each achieved the best performance on two datasets. Although the neural network-based NAM and TabNet models showed comparable results on most datasets, they did not achieve the best performance on any dataset.

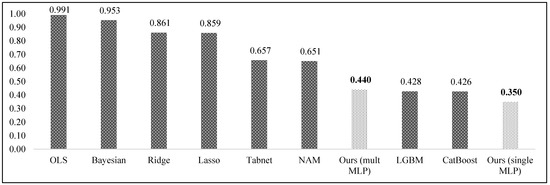

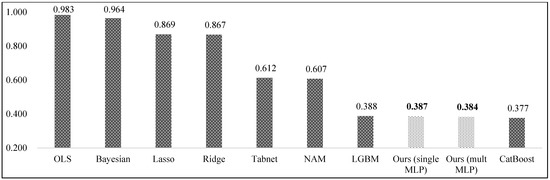

In addition, to compare the baseline models with our proposed model more clearly, we computed the normalized average RMSE and MAE over all datasets, as shown in Figure 2 and Figure 3. Our two model variants ranked first and fourth in terms of normalized average RMSE (see Figure 2). LightGBM and CatBoost showed the second and third best predictive performance, with normalized average RMSEs of 0.428 and 0.426, respectively. Figure 3 displays the normalized average MAE for all models: our proposed models with multiple and single MLPs showed the second and third best predictive performance, with normalized average MAE scores of 0.384 and 0.387. These results indicate that augmenting linear regression with neural networks achieves predictive performance competitive with state-of-the-art models without sacrificing interpretability.

Figure 2.

Normalized average RMSE for each model.

Figure 3.

Normalized average MAE for each model.

4.5. Model Interpretability

We evaluated our proposed model on synthetic and real-world datasets to demonstrate its interpretability. First, we evaluated how accurately our model predicts the known regression coefficients of synthetic datasets. We then trained our model on real-world economic data to explore the dynamic effect of the central bank policy rate (MN Policy rate) on inflation. Finally, we analyzed CO2 emission data, where our model revealed interesting relationships between the input and target variables, such as a parabolic relationship between CO2 emissions and gross national product (GNP).

4.5.1. Model Interpretability on Synthetic Data

To evaluate model interpretability, we generated synthetic datasets from linear and nonlinear functions and trained our proposed model on them to predict the known regression coefficients. The coefficients used to create the synthetic datasets were drawn from a normal distribution rather than set to constants. Based on these known coefficients, we generated the datasets using three functions: (1) a linear function, (2) a quadratic function, and (3) a summation-of-multiplication function, which sums products of input variables. The functions are as follows:

where $p$ is the number of variables, $y$ is the target variable calculated from the parameters of each function, $x_i$ is the i-th input variable, $j$ and $k$ are variable indices (these indices should be less than $p$), and $\varepsilon$ is an independent, identically distributed random error. For Equation (13), the meta-learner in our model should predict the known coefficients themselves as the model coefficients. For Equations (14) and (15), the meta-learner should predict the corresponding adaptive regression coefficients.

Based on these three functions, we generated synthetic datasets of 10,000 samples with six variables.

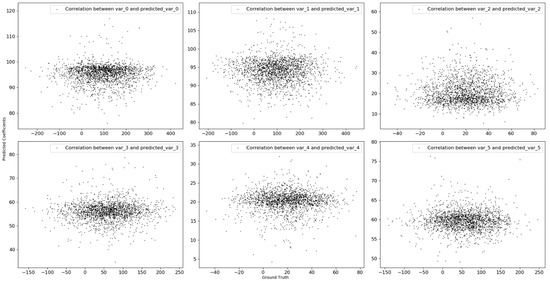

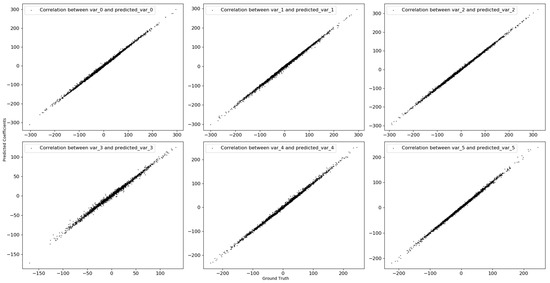

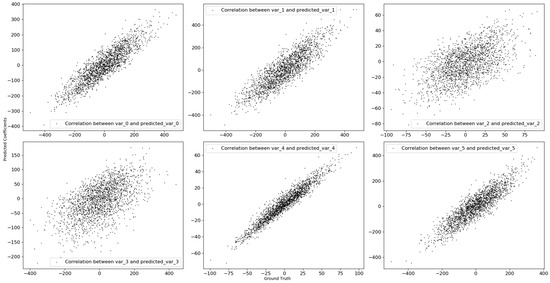

Figure 4 shows a scatter plot of the known versus predicted coefficients for the synthetic data generated with the linear function. Our proposed model cannot recover the actual coefficients, because the known coefficients were drawn randomly from a normal distribution and linear regression already predicts the target variable perfectly.

Figure 4.

Scatter plot of known versus predicted coefficients for the linear function on the test set.

Figure 5 and Figure 6 show the relationship between the known and predicted coefficients for the synthetic data generated with the quadratic and summation-of-multiplication functions. Our model accurately predicts the actual coefficients for the synthetic data generated with these nonlinear functions. Table 4 presents the correlation between the known and predicted coefficients for each synthetic dataset. There is no correlation between the actual and predicted coefficients for the dataset generated with the linear function. In contrast, for the datasets generated with the quadratic and summation-of-multiplication functions, the actual and predicted coefficients are highly correlated.

Figure 5.

Scatter plot of known versus predicted coefficients for the quadratic function on the test set.

Figure 6.

Scatter plot of known versus predicted coefficients for the summation-of-multiplication function on the test set.

Table 4.

Correlation between known and predicted coefficients for each synthetic data.

Overall, the experiments on synthetic datasets show that the meta-learner in our proposed model can explain the correlation between input and output variables by approximating a local linear function for each observation.

4.5.2. Model Interpretability on Economic Data

We then trained our proposed model on Mongolian economic data to explore how the relationship between the central bank policy rate (MN Policy rate) and inflation changes over time. We retrieved quarterly data from open sources such as the Central Bank of Mongolia, the National Statistics Office of Mongolia, and the National Bureau of Statistics of China, as shown in Table 5. The data cover the period from the 4th quarter of 2000 to the 2nd quarter of 2022. The explanatory variables used to forecast the Mongolian consumer price index (MN CPI) are China’s real gross domestic product (China RGDP, constant 2015 prices), China’s consumer price index (China CPI, 2015 = 100), the coal price (Coal price), the oil price (Oil price), Mongolia’s real gross domestic product (MN RGDP, constant 2015 prices), Mongolian budget expenditure (MN Budget Exp), the average wage (MN Average wage), the money supply (MN Money Supply M2), outstanding loans (MN Loan Outstanding), the central bank policy rate (MN Policy rate), and the terms of trade (MN Terms of trade).

Table 5.

The descriptive statistic and data source for economic data.

Before OLS estimation, the traditional Augmented Dickey-Fuller (ADF) [48,49] and KPSS [50] unit root tests should be applied to check the stationarity of all variables [51]. Table 6 shows the unit root test results. The optimal lags are selected with the Akaike information criterion (AIC), with a maximum lag of 4. The results show that the null hypothesis cannot be rejected for most variables; therefore, these variables must be transformed to achieve stationarity. Only the MN Policy rate rejects the null hypothesis, so it needs no transformation. Moreover, since this variable is expressed as a percentage, we transform the other variables to the same scale.

Table 6.

The results of ADF and KPSS unit root test before transformation.

After the transformation, the ADF and KPSS results in Table 7 show that the null hypothesis cannot be rejected for d4log(China RGDP) and d1log(MN Money Supply M2). In addition, d4log(China CPI) was highly correlated with d4log(MN RGDP), and d1log(MN Money Supply M2) was highly correlated with d1log(MN Loan Outstanding), so we excluded these variables from the regression model.
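For readers reproducing the transformations, a minimal NumPy sketch follows. Here d1log(x) is taken to be the one-quarter log difference $\ln x_t - \ln x_{t-1}$ and d4log(x) the four-quarter (year-on-year) log difference $\ln x_t - \ln x_{t-4}$; this reading of the notation, and the absence of any scaling by 100, are assumptions of the sketch. The unit root tests themselves are available in statsmodels as `statsmodels.tsa.stattools.adfuller` and `statsmodels.tsa.stattools.kpss`.

```python
import numpy as np

def dlog(x, k):
    """k-period log difference log(x_t) - log(x_{t-k}); the first k entries are NaN."""
    x = np.asarray(x, dtype=float)
    out = np.full(x.shape, np.nan)
    out[k:] = np.log(x[k:]) - np.log(x[:-k])
    return out

def d1log(x):
    """Quarter-on-quarter log change (assumed meaning of d1log)."""
    return dlog(x, 1)

def d4log(x):
    """Year-on-year log change for quarterly data (assumed meaning of d4log)."""
    return dlog(x, 4)
```

Each transformation drops the first one or four observations, so the usable sample starts later than the raw data.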

Table 7.

The results of ADF and KPSS unit root test after transformation.

The variables that passed the traditional unit root tests were considered potential drivers of inflation and were included in the OLS estimation.

A total of 78 quarters, from the 1st quarter of 2001 to the 2nd quarter of 2020, are used as the training set, and the 8 quarters from the 3rd quarter of 2020 to the 2nd quarter of 2022 form the test set.

The OLS regression results (Table 8 and Table 9) show a negative relationship between the central bank policy rate (MN Policy rate) and inflation, d1log(MN CPI). Specifically, in the Mongolian economy, inflation tends to fall by 0.0025 percent when the central bank raises the policy rate by one percentage point.

Table 8.

Estimated coefficients of OLS regression on economic data.

Table 9.

Regression diagnostics result on economic data.

Table 10 shows the predictive performance results: our proposed model reduces the error of the OLS model by an average of 0.0107 units of inflation, or 30.7 percent.

Table 10.

The predictive performance on economic test set.

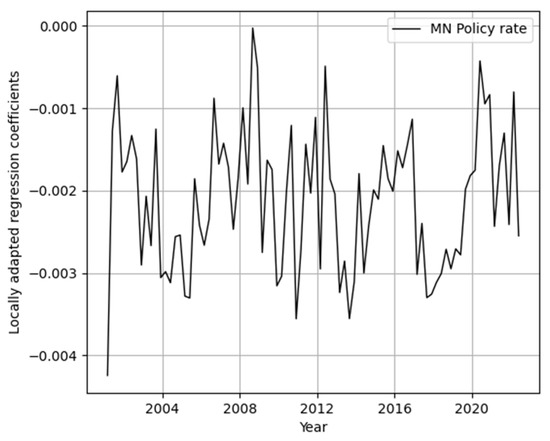

Another advantage of our proposed model is that it captures how the regression coefficients change over time. In macroeconomics, the impact of explanatory variables on inflation can change over time depending on the economic situation [52]. Therefore, our model may be more suitable than linear regression for developing economic models.

Figure 7 shows how the effect of the central bank’s policy rate on inflation changes over time. A global economic crisis occurred between 2008 and 2010, and during this period the effect of the central bank policy rate on inflation was strong: if the central bank raised the policy rate by one percentage point, inflation tended to fall by more than 0.03 percent. In contrast, between 2010 and 2012, the Oyu Tolgoi project, Mongolia’s largest copper mine, got under way and the Mongolian economy grew rapidly. During the same period, the effect of the central bank’s policy rate on inflation weakened.

Figure 7.

The impact of the central bank’s policy rate on inflation over time.

For MN Policy rate, the coefficient estimated by linear regression is consistent with economic theory. Our proposed model additionally shows how the effect of MN Policy rate on inflation changes dynamically over time while preserving this consistency.

4.5.3. Model Interpretability on CO2 Emission Data

In this section, we considered a real-world CO2 emission dataset and used our proposed model to examine the relationship between CO2 emission and gross national product (GNP) [49,50].

The dataset was obtained from the official website of Our World in Data (https://ourworldindata.org/, accessed on 1 November 2022). The data from 1990 to 2015 form the training set, and the data for 2016 form the test set (see Table 11). We also performed ADF and KPSS tests on the CO2 emission dataset (Table 12). The results show that the null hypothesis can be accepted for both variables on the logarithmic scale. Furthermore, to investigate the link between CO2 emission and GNP, we estimated two different regression equations as follows:
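The two tests have opposite null hypotheses. ADF tests the null of a unit root, and KPSS tests the null of stationarity. In practice, `statsmodels.tsa.stattools` provides both `adfuller` and `kpss`. For transparency, the sketch below computes the KPSS level-stationarity statistic from scratch in numpy, following Kwiatkowski et al. (1992). It uses a Bartlett-kernel long-run variance and their l4 lag rule, int(4 * (T / 100) ** 0.25). The series are synthetic and are not the CO2 or GNP data.

```python
import numpy as np

def kpss_level_stat(x, nlags=None):
    """KPSS statistic for the null of level stationarity."""
    x = np.asarray(x, dtype=float)
    T = x.size
    if nlags is None:
        nlags = int(4 * (T / 100) ** 0.25)   # the "l4" truncation rule
    e = x - x.mean()                          # residuals from a regression on a constant
    S = np.cumsum(e)                          # partial sums of residuals
    eta = np.sum(S ** 2) / T ** 2
    # Long-run variance: gamma_0 + 2 * sum_j w_j * gamma_j with Bartlett weights.
    s2 = np.sum(e ** 2) / T
    for j in range(1, nlags + 1):
        w = 1.0 - j / (nlags + 1.0)
        s2 += 2.0 * w * np.sum(e[j:] * e[:-j]) / T
    return eta / s2

rng = np.random.default_rng(0)
white_noise = rng.normal(size=500)              # stationary series
random_walk = np.cumsum(rng.normal(size=500))   # unit-root series

# The 5% critical value for the level-stationarity test is 0.463.
for name, series in [("white noise", white_noise), ("random walk", random_walk)]:
    stat = kpss_level_stat(series)
    print(f"{name}: KPSS = {stat:.3f}, reject stationarity at 5%: {stat > 0.463}")
```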

Table 11.

The descriptive statistic for CO2 emission data.

Table 12.

The results of ADF and KPSS unit root test for CO2 emission data.

In general, we assume a positive linear relationship and a negative parabolic relationship between CO2 emission and GNP. Theoretically, the Environmental Kuznets Curve (EKC) hypothesis postulates an inverted-U-shaped relationship between CO2 emission and GNP [53,54].

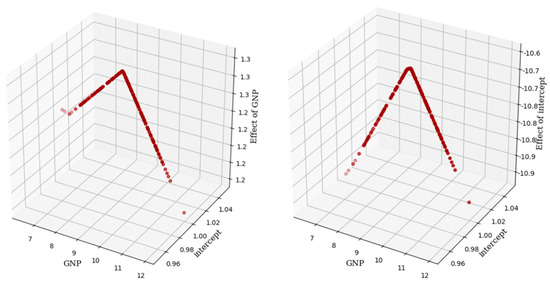
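The text does not spell out Equations (16) and (17). The sketch below assumes the quadratic specification has the standard EKC form, ln CO2 = b0 + b1 ln GNP + b2 (ln GNP)^2. Under this form, an inverted U requires b1 > 0 and b2 < 0. The peak lies where the marginal effect b1 + 2 b2 ln GNP equals zero, that is, at ln GNP = -b1 / (2 b2). The data and the true coefficients (b1 = 2.0, b2 = -0.1, so the peak is at 10) are synthetic and hypothetical.

```python
import numpy as np

# Synthetic EKC-style data (NOT the Our World in Data sample).
rng = np.random.default_rng(1)
ln_gnp = rng.uniform(6.0, 12.0, size=300)
b0, b1, b2 = -8.0, 2.0, -0.1
ln_co2 = b0 + b1 * ln_gnp + b2 * ln_gnp ** 2 + rng.normal(0.0, 0.05, size=300)

# np.polyfit returns coefficients from the highest power down: [b2, b1, b0].
c2, c1, c0 = np.polyfit(ln_gnp, ln_co2, deg=2)

# Turning point of the inverted U: where d ln CO2 / d ln GNP = 0.
turning_point = -c1 / (2.0 * c2)

def marginal_effect(x):
    """d ln CO2 / d ln GNP under the quadratic specification."""
    return c1 + 2.0 * c2 * x

print(f"b1={c1:.3f}, b2={c2:.4f}, turning point at ln GNP = {turning_point:.2f}")
print(marginal_effect(8.0), marginal_effect(11.0))
```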

The estimates of Equations (16) and (17) are reported in Table 13, and the regression diagnostics are presented in Table 14. The regression coefficients are consistent with the EKC hypothesis. We then trained our model on each of the two OLS specifications and report the prediction performance in Table 15. Our model performed slightly better than both OLS models. Finally, we used our model to examine the relationship between CO2 emission and GNP.

Table 13.

Estimated coefficients of OLS regression on CO2 emission data.

Table 14.

Regression diagnostics result on CO2 emission data.

Table 15.

The prediction performance on CO2 emission test dataset.

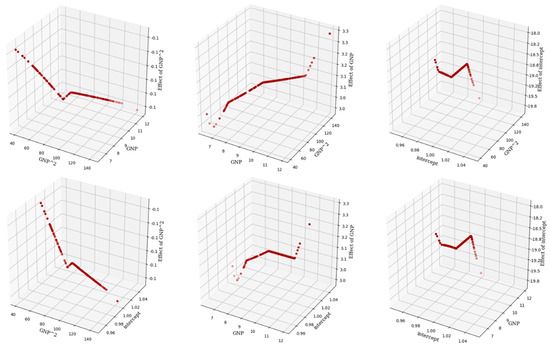

Figure 8 shows the influence of GNP (left) and the intercept (right) on CO2 emission for Equation (16). Both the GNP coefficient and the intercept have a parabolic relationship with CO2 emission. Up to a GNP of 9.16, rising GNP strongly increases CO2 emission. Once GNP exceeds 9.16, its effect on CO2 emission starts to decrease. Similarly, the intercept, which reflects the average CO2 emission level, increases with GNP up to a certain level and decreases thereafter. In Equation (17), we added the quadratic term of GNP as an explanatory variable, which improves the predictive performance of OLS. The predictive performance of our model changes little, but its interpretation shifts, as shown in Figure 9. Under Equation (17), the parabolic relationship between CO2 and GNP becomes linear. Our model can also measure how much CO2 emission changes in response to a change in GNP for each country.
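The last point can be made concrete under one assumption: both variables enter in logarithms, as the unit root discussion suggests. In that case, each country's local GNP coefficient acts as an elasticity. The sketch converts such a coefficient into the exact percentage change in CO2 implied by a given percentage change in GNP. The country labels and coefficient values are hypothetical and are not taken from Figure 8 or Figure 9.

```python
# Hypothetical per-country local GNP coefficients (illustrative values only).
local_coef = {"Country A": 1.2, "Country B": 0.5, "Country C": -0.3}

def co2_pct_change(elasticity, gnp_pct_change):
    """Exact % change in CO2 implied by a log-log coefficient for a % change in GNP."""
    return ((1.0 + gnp_pct_change / 100.0) ** elasticity - 1.0) * 100.0

for country, beta in local_coef.items():
    print(country, round(co2_pct_change(beta, 1.0), 3))
```

For small changes, the result is close to the coefficient itself. For example, a local coefficient of 0.5 implies that CO2 rises by about 0.5 percent when GNP rises by 1 percent.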

Figure 8.

The relationship between GNP, intercept and the estimated coefficients obtained by our proposed model.

Figure 9.

The relationship between GNP, the quadratic term of GNP, intercept and the estimated coefficients obtained by our model.

5. Conclusions

In this work, we aimed to create a white-box model for regression tasks on tabular data. To provide both high predictive accuracy and explainability, we proposed a novel locally adaptive interpretable regression model augmented by neural networks. The proposed model relies on two key aspects. First, the base-learner should be a simple interpretable model; in this work, we obtain our base-learner using OLS regression and its statistical properties. Second, we use neural networks as a meta-learner to re-parameterize the base-learner, producing a local interpretable linear model for each observation. We can then explain the relationship between input and output variables locally through the adapted regression coefficients. We evaluated the predictive performance and interpretability of our proposed model on several tabular datasets. Experimental results showed that augmenting OLS regression with neural networks substantially improved its predictive performance. Our model ranked first by normalized average RMSE and second by normalized average MAE.
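The architecture summarized above can be sketched in a few lines. This is a hypothetical reading of the idea and not the authors' implementation. An OLS base-learner supplies coefficients and standard errors. A small network with random, untrained weights then outputs values in (-1, 1). Each value scales the corresponding 95% confidence half-width, so every local coefficient stays inside the OLS interval, in the spirit of the abstract's "confidence interval formula". The network size, the tanh squashing, and z = 1.96 are all assumptions. Training the network, for example by minimizing the squared error of the local predictions, is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

# Base-learner: OLS on synthetic tabular data.
n, p = 100, 2
X = np.column_stack([np.ones(n), rng.normal(size=(n, p))])
y = X @ np.array([1.0, 2.0, -1.0]) + rng.normal(0.0, 0.5, size=n)
beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta_ols
sigma2 = resid @ resid / (n - X.shape[1])
se = np.sqrt(np.diag(sigma2 * np.linalg.inv(X.T @ X)))

# Hypothetical meta-learner: one hidden layer with random, untrained weights.
W1 = rng.normal(scale=0.5, size=(X.shape[1], 8))
W2 = rng.normal(scale=0.5, size=(8, X.shape[1]))

def local_coefficients(X_rows, z=1.96):
    """Per-observation coefficients kept inside beta_ols +/- z * se (assumed rule)."""
    h = np.tanh(X_rows @ W1)
    adjust = np.tanh(h @ W2)            # each entry lies in (-1, 1)
    return beta_ols + z * se * adjust   # broadcasts over rows

B = local_coefficients(X)               # shape (n, p + 1): one linear model per row
y_hat = np.sum(X * B, axis=1)           # local linear prediction x_i . beta_i
print(B.shape, y_hat.shape)
```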

In addition, to evaluate model explainability, we performed further experiments on the synthetic, economic, and CO2 emission datasets. For the synthetic data generated by non-linear functions, our proposed model explains the relationship between input and output features by approximating a local linear function for each observation. We then analyzed economic time-series data, and our model revealed the dynamic relationship between input and output variables. In particular, we observed that the impact of central bank policy rates on inflation tends to strengthen during a recession and weaken during an expansion, consistent with economic theory. Lastly, we applied our model to CO2 emission data, and it uncovered interesting relationships between input and target variables, such as a parabolic relationship between CO2 emissions and gross national product (GNP).

We believe that our proposed model is applicable to many real-world domains where the data are tabular and interpretable models are required.

Author Contributions

Conceptualization, L.M., V.H.P. and K.H.R.; methodology, L.M. and T.M.; software, L.M.; validation, V.H.P., N.T.-U. and J.-E.H.; formal analysis, L.M. and K.H.R.; investigation, L.M.; resources, K.H.R.; data curation, L.M.; writing—original draft preparation, L.M. and K.H.R.; writing—review and editing, V.H.P., N.T.-U. and J.-E.H.; visualization, L.M.; supervision, J.-E.H. and K.H.R.; project administration, K.H.R.; funding acquisition, K.H.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT, and Future Planning under Grant No. 2020R1A2B5B02001717 in Korea.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data available in a publicly accessible repository.

Acknowledgments

This work was done partially while Lkhagvadorj Munkhdalai was visiting the Biomedical Engineering Institute, Chiang Mai University.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Goldberger, A.S. Best linear unbiased prediction in the generalized linear regression model. J. Am. Stat. Assoc. 1962, 57, 369–375.

- Andrews, D.F. A Robust method for multiple linear regression. Technometrics 1974, 16, 523–531.

- Hayes, A.F.; Glynn, C.J.; Huge, M.E. Cautions regarding the interpretation of regression coefficients and hypothesis tests in linear models with interactions. Commun. Methods Meas. 2012, 6, 651415.

- Gaur, M.; Faldu, K.; Sheth, A. Semantics of the black-box: Can knowledge graphs help make deep learning systems more interpretable and explainable? IEEE Internet Comput. 2021, 25, 51–59.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 1135–1144.

- Arik, S.Ö.; Pfister, T. Tabnet: Attentive interpretable tabular learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; Volume 35, pp. 6679–6687.

- Terejanu, G.; Chowdhury, J.; Rashid, R.; Chowdhury, A. Explainable deep modeling of tabular data using TableGraphNet. arXiv 2020, arXiv:2002.05205. Available online: https://arxiv.org/abs/2002.05205 (accessed on 6 June 2022).

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the 31st Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 30–40.

- Rudin, C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 2019, 1, 206–215.

- Cleveland, W.S. Robust locally weighted regression and smoothing scatterplots. J. Am. Stat. Assoc. 1979, 74, 829–836.

- Cleveland, W.S.; Devlin, S.J. Locally weighted regression: An approach to regression analysis by local fitting. J. Am. Stat. Assoc. 1988, 83, 596–610.

- Hastie, T.; Tibshirani, R. Discriminant adaptive nearest neighbor classification. IEEE Trans. Pattern Anal. Mach. Intell. 1996, 18, 607–616.

- Fan, J.; Gijbels, I. Variable bandwidth and local linear regression smoothers. Ann. Stat. 1992, 20, 2008–2036.

- Atkeson, C.G.; Moore, A.W.; Schaal, S. Locally Weighted Learning. In Lazy Learning; Aha, D.W., Ed.; Springer: Dordrecht, The Netherlands, 1997.

- Chen, R.; Paschalidis, I. Selecting optimal decisions via distributionally robust nearest-neighbor regression. In Proceedings of the 32nd Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 30–40.

- Nadaraya, E.A. On estimating regression. Theory Probab. Its Appl. 1964, 9, 141–142.

- Nguyen, X.S.; Sellier, A.; Duprat, F.; Pons, G. Adaptive response surface method based on a double weighted regression technique. Probabilistic Eng. Mech. 2009, 24, 135–143.

- Hinton, G.E.; Plaut, D.C. Using fast weights to deblur old memories. In Proceedings of the 9th Annual Conference of the Cognitive Science Society; Psychology Press: Hillsdale, NJ, USA; Seattle, WA, USA, 1987; pp. 177–186.

- Schmidhuber, J. A neural network that embeds its own meta-levels. In Proceedings of the IEEE International Conference on Neural Networks, San Francisco, CA, USA, 28 March–1 April 1993; pp. 407–412.

- Schmidhuber, J. Learning to control fast-weight memories: An alternative to dynamic recurrent networks. Neural Comput. 1992, 4, 131–139.

- Munkhdalai, T.; Yu, H. Meta networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, NSW, Australia, 6–11 August 2017; pp. 2554–2563.

- Cao, W.; Wang, X.; Ming, Z.; Gao, J. A review on neural networks with random weights. Neurocomputing 2018, 275, 278–287.

- Munkhdalai, T.; Sordoni, A.; Wang, T.; Trischler, A. Metalearned neural memory. In Proceedings of the 32nd Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019.

- Munkhdalai, L.; Munkhdalai, T.; Park, K.H.; Amarbayasgalan, T.; Erdenebaatar, E.; Park, H.W.; Ryu, K.H. An end-to-end adaptive input selection with dynamic weights for forecasting multivariate time series. IEEE Access 2019, 7, 99099–99114.

- Bildirici, M.; Ersin, Ö. Improving forecasts of GARCH family models with the artificial neural networks: An application to the daily returns in Istanbul Stock Exchange. Expert Syst. Appl. 2009, 36, 7355–7362.

- Bildirici, M.; Ersin, Ö. Modeling markov switching ARMA-GARCH neural networks models and an application to forecasting stock returns. Sci. World J. 2014, 2014, 497941.

- Richman, R.; Wüthrich, M.V. LocalGLMnet: Interpretable deep learning for tabular data. Scand. Actuar. J. 2022, 10, 1–25.

- Takagi, T.; Sugeno, M. Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. Syst. Man Cybern. 1985, SMC-15, 116–132.

- Jin, Y. Fuzzy modeling of high-dimensional systems: Complexity reduction and interpretability improvement. IEEE Trans. Fuzzy Syst. 2000, 8, 212–221.

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; Garcia, S.; Gil-Lopez, S.; Molina, D.; Benjamins, R.; et al. Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 2019, 58, 82–115.

- Féraud, R.; Clérot, F. A methodology to explain neural network classification. Neural Netw. 2001, 15, 237–246.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-precision model-agnostic explanations. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence and Thirtieth Innovative Applications of Artificial Intelligence Conference and Eighth AAAI Symposium on Educational Advances in Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 1527–1535.

- Rosenblatt, F. The perceptron: A probabilistic model for information storage and organization in the brain. Psychol. Rev. 1958, 65, 386–408.

- Bishop, C.M. Neural Networks for Pattern Recognition; Oxford University Press: Oxford, UK, 1995.

- Munkhdalai, L.; Munkhdalai, T.; Ryu, K.H. GEV-NN: A deep neural network architecture for class imbalance problem in binary classification. Knowl. Based Syst. 2020, 194, 105534.

- Munkhdalai, L.; Munkhdalai, T.; Park, K.H.; Lee, H.G.; Li, M.; Ryu, K.H. Mixture of activation functions with extended min-max normalization for forex market prediction. IEEE Access 2019, 7, 183680–183691.

- Blake, C. UCI Repository of Machine Learning Databases. 1998. Available online: https://archive.ics.uci.edu/ml/index.php (accessed on 25 March 2022).

- Pace, R.K.; Barry, R. Sparse spatial autoregressions. Stat. Probab. Lett. 1997, 33, 291–297.

- FICO. FICO Explainable Machine Learning Challenge. 2018. Available online: https://community.fico.com/s/explainable-machine-learning-challenge (accessed on 25 March 2022).

- Johnson, R.W. Fitting percentage of body fat to simple body measurements. J. Stat. Educ. 1996, 4, 1–8.

- Gelman, A.; Goodrich, B.; Gabry, J.; Vehtari, A. R-squared for bayesian regression models. Am. Stat. 2019, 73, 307–309.

- Kim, S.-J.; Koh, K.; Lustig, M.; Boyd, S.; Gorinevsky, D. An interior-point method for large-scale ℓ1-regularized least squares. IEEE J. Sel. Top. Signal Process. 2007, 1, 606–617.

- McDonald, G.C. Ridge regression. WIREs Comput. Stat. 2009, 1, 93–100.

- Agarwal, R.; Melnick, L.; Frosst, N.; Zhang, X.; Lengerich, B.; Caruana, R.; Hinton, G.E. Neural additive models: Interpretable machine learning with neural nets. In Proceedings of the 34th Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; pp. 4699–4711.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Liu, T.Y. Lightgbm: A highly efficient gradient boosting decision tree. In Proceedings of the 30th Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 3146–3154.

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased boosting with categorical features. In Proceedings of the 31st Advances in Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; pp. 6638–6648.

- Enelow, J.M.; Mendell, N.R.; Ramesh, S. A Comparison of two distance metrics through regression diagnostics of a model of relative candidate evaluation. J. Politics 1988, 50, 1057–1071.

- Dickey, D.A.; Fuller, W.A. Distribution of the estimators for autoregressive time series with a unit root. J. Am. Stat. Assoc. 1979, 74, 427–431.

- Dickey, D.A.; Fuller, W.A. Likelihood ratio statistics for autoregressive time series with a unit root. Econom. J. Econom. Soc. 1981, 49, 1057–1072.

- Kwiatkowski, D.; Phillips, P.C.B.; Schmidt, P.; Shin, Y. Testing the null hypothesis of stationarity against the alternative of a unit root: How sure are we that economic time series have a unit root? J. Econom. 1992, 54, 159–178.

- Bildirici, M.; Alp, E.A.; Ersin, Ö.Ö. TAR-cointegration neural network model: An empirical analysis of exchange rates and stock returns. Expert Syst. Appl. 2010, 37, 2–11.

- Dangl, T.; Halling, M. Predictive regressions with time-varying coefficients. J. Financial Econ. 2012, 106, 157–181.

- Bildirici, M.; Ersin, Ö. Markov-switching vector autoregressive neural networks and sensitivity analysis of environment, economic growth and petrol prices. Environ. Sci. Pollut. Res. 2018, 25, 31630–31655.

- Holtz-Eakin, D.; Selden, T.M. Stoking the fires? CO2 emissions and economic growth. J. Public Econ. 1995, 57, 85–101.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).