Abstract

This study applies and builds on the Use and Gratification (U&G) theory to explore consumer acceptance of applied artificial intelligence (AI) in the form of Chatbots in online shopping in China. Data were gathered via an anonymous online survey from 540 respondents who self-identified as frequent online shoppers and are familiar with Chatbots. The results of the data analysis provide empirical evidence indicating that utilitarian factors such as the “authenticity of conversation” and “convenience”, as well as hedonic factors such as “perceived enjoyment”, result in users having a positive attitude towards Chatbots. However, privacy issues and the immaturity of technology have had a negative impact on acceptance. This paper provides both theoretical and practical insights into Chinese attitudes toward Chatbots and may be of interest to e-commerce researchers, practitioners, and U&G theorists.

1. Introduction

Artificial intelligence (AI) has become an important part of our lives and has been used in various applications for several years. Many of the ways that AI is used in business are not apparent to consumers or end-users, for example, its use to model complex business scenarios [1] and decision-making models [2,3]. AI is a driving force behind Industry 4.0, the most recent generation of applied technology [4,5]. As technologies have become more advanced, AI has become more user friendly and, in recent years, commonplace in many end-user applications, including real-time human interactions with computers, known as Chatbots [6]. Adam et al. [7] reported that communication with customers via real-time chat interfaces is becoming increasingly popular. The use of Chatbots has been increasing for several years, driven in part by user preference; people use language to communicate with other humans and prefer to use language in the same manner to interact with computers [8]. Chatbots are computer programs whose purpose is to interact with humans by using language in a manner that simulates interactions between two humans, to the extent that users feel that they are interacting with another human [9]. There are two main ways to communicate with computers: text communication and voice communication. Eliza, the first Chatbot, was developed in 1966. Since then, the Chatbot community has explored many interesting ideas [10]. With improvements in the underlying technology, the development of Chatbots, particularly speech-based Chatbots, has increased in recent years [11]. The primary purpose of Chatbots is to help users accomplish their goals by simplifying methods via verbal interactions.

Chatbots are versatile and have great potential. Human service workers often reach the limits of their capabilities; they may not be able to organize various resources for serving the company and may become fatigued [12]. However, Chatbots are available 24 h a day, and with scalable technology, they can be accessed without waiting times for customers. For companies, Chatbots can play a significant role in cost savings and in automating processes [13].

Some Chatbots, such as Amazon Alexa and Apple Siri, are well-known, and the use of Chatbots for various applied purposes is growing. One early example is IBM’s question-and-answer system, called Watson. The application scope of Chatbots is not limited to information technology companies but extends to all walks of life. In 2020, more than 85% of customer interactions were handled by various forms of Chatbots [14]. Their use is not limited to customer contact: “In addition to companies using Chatbots for external communication (for example, customers), businesses can also utilize Chatbots for internal communication” [12]. Chatbots are often used in large corporations for training, employee support, and recruitment; for example, Meet Frank is an anonymous Chatbot that can introduce talent to companies [15]. Communicating with customers via live chat interfaces has become an increasingly popular means of providing real-time customer service in many settings, including e-commerce. Conversational software agents, or Chatbots, frequently replace human chat service agents. Although cost- and time-saving opportunities have triggered a widespread implementation of AI-based Chatbots, they still frequently fail to meet customer expectations, potentially resulting in users being less inclined to comply with requests made by the Chatbot and less inclined to engage in the use of Chatbots [9].

Drawing on social response and commitment-consistency theory, prior research includes a study that empirically examines the customer’s experience via a randomized online experiment on how verbal anthropomorphic design cues and the foot-in-the-door technique affect user request compliance [7]. The results demonstrate that anthropomorphism and the need to stay consistent significantly increase the likelihood that users will comply with a Chatbot’s request for service feedback [16]. Moreover, those results show that social presence mediates the effect of anthropomorphic design cues on user compliance. Chatbots have shown an S-shaped innovation diffusion curve in China, and artificial intelligence has entered and become a part of the Chinese e-commerce market in the past few years [17].

Related research on the use of Chatbots in the financial services industry [18] provides a basis for this study but leaves a gap in the area of e-commerce. Similarly, recent research has explored how user characteristics affect the user acceptance of Chatbots in e-commerce using the social presence theory [19] and has applied the actual usage theory to explore Chatbot usage in the hospitality industry [20]. However, a thorough review of the existing literature on Chatbot acceptance reveals a gap in how the U&G theory can be applied to ascertain consumer acceptance in e-commerce in China.

Therefore, this article focuses on three issues. The first is the positive or negative factors that affect how often Chinese people use Chatbots. The second is the use of the U&G model to test people’s acceptance of Chatbots in China. The third, which expands on the U&G model, is the impact of group differences on the acceptance of Chatbots.

This paper seeks to make the following contributions: (1) the determination of the degree of acceptance of Chatbot experiences among Chinese e-commerce users and an analysis of the different influencing factors; (2) the use of the U&G model to understand the advantages and disadvantages of Chatbot use and to further understand the relationship between influencing factors; and (3) an extension of the U&G theory through its application to the acceptance of Chatbots in e-commerce in China.

This paper is organized as follows: Section 2 (Literature Review) provides a review of current relevant findings related to Chatbots, beginning with the initial development process of Chatbots and how Chatbots have been used by consumers and then continuing with the U&G model and examining how it is applied to Chatbot acceptance. The relationship between the influencing factors and the current acceptance of Chatbot use and perception is reviewed. Section 3 provides the conceptual framework, followed by Section 4 (Methodology), Section 5 (Analysis and Results), Section 6 (Discussion and Conclusion), and finally, Section 7 (Limitations and Suggestions for future research).

2. Literature Review

2.1. Chatbots

Chatbots are intelligent computer programs that simulate humans via conversation or text. A Chatbot features a simple text interface that allows users to access information or entertainment via an online messaging platform [21]. During interactions with Chatbots, conversation messages can be sent through a variety of media, including voice commands, text chat, graphical interfaces, or graphical widgets [22]. Conversational interfaces combine language processing, intelligent conversation, and human–computer interaction, allowing users to feel, at least temporarily, that they are engaged with another human and to approximate the experience of talking to another human. Chatbot technology has been around since the 1960s and has been used for user interface development in games since the 1980s [23]. Gaming software was the first mainstream type of application that used Chatbot user interfaces. More recently, due to the continuous development of smartphones, game software has also improved and is driving increased awareness of and exposure to Chatbots [24]. Since the advent of the Industry 4.0 era, digitalization has been a driver of several new technologies, including Big Data, cloud computing, blockchain, and various applications of AI [25], of which Chatbots are one of the most visible.

Chatbots are successful because they can use algorithms to select the correct answer from a database provided by the developer. Chatbots can understand a variety of human questions and distinguish between unique words. To have high-quality Chatbot conversations, it is necessary to have a rich person-to-person conversational vocabulary [22]. Chatbots also allow developers to add Internet buzzwords to their vocabulary. Therefore, in the case of Chatbots with chat interfaces, rich vocabulary and fast reply speeds are key reasons for their rapid development and widespread use.

2.2. U&G Model

The Use and Gratification model is well-known and recognized as a branch of media effects research [26]. Early in its evolution, the U&G model was used from the perspective of communicators to investigate whether the media had achieved the expected purpose or what impact it had on the audience. More recently, it has been applied from the perspective of the audience, explaining what meets the needs of the audience by analyzing the motivations behind audience contact with media. According to the U&G model, developed in 1973 by Katz, Gurevitch, and Haas, people’s social and psychological needs that can be gratified by media use are divided into five dimensions. These include the following:

- Cognitive needs: The need to acquire information and gain knowledge or to improve understanding;

- Affective needs: The need for aesthetic or emotional experiences;

- Integrative needs: The need to gain confidence, raise status, or improve credibility. These have both cognitive and affective components;

- Social integrative needs: The need to strengthen interpersonal relationships with friends and family;

- Tension-release needs: The need to relax and escape from pressure [27].

In recent years, “technical satisfaction” and “social satisfaction” have also been added to the U&G Model [17]. In the Internet era, technical satisfaction is particularly important as it is an antecedent of e-commerce activity. It refers to whether people can achieve their desired effect efficiently and accurately with the technology provided, which in the case of Chatbots is heavily dependent on the satisfaction of the Chatbot-to-human interaction or “authenticity”. The Use and Gratification theory has been used in surveys in many fields, for example, to test people’s acceptance of Internet information service technology [28] and the reasons people use virtual goods [29], in which it was demonstrated that technology acceptance is dependent on several factors among which privacy and security, or “risk” in the case of e-commerce, stand out. The U&G model is widely used because it overturns the passive theory of the audience experience and advocates audience initiative. The U&G theory has been shown to be a superior tool in the examination of customer motivation, providing valuable insights [30]. U&G research has demonstrated that part of the value of the Chatbot experience may be related to escapism and relaxation inherent in the dimension of hedonism. This paper applies and expands on the U&G theory to analyze people’s acceptance of Chatbots from the three dimensions of technology, hedonism, and risk in e-commerce with a higher level of specificity.

2.3. Behavior Intention

The Fishbein–Ajzen behavioral intentions model was designed to present the effect of subjective norms and attitudes on behavioral intentions. The model is applied frequently and in various contexts, and the evidence of its validity is largely based on consistently good performance in predicting behavioral intentions [31]. Behavioral intention refers to the factors that motivate and influence a specific behavior in cases where a stronger intention to perform a behavior increases the likelihood that the behavior will be performed. As such, behavioral intention is a behavioral tendency that refers to a condition of preparation before acting [31].

The user’s behavior intention in virtual environments depends, in part, on the user’s loyalty to the virtual environment [32]. From the perspective of science and technology, for virtual products, if the product can meet “technical satisfaction”, then the loyalty of users to the virtual environment will be improved; that is to say that users will have a positive behavior tendency. Technology in virtual worlds (interactivity and sociability) has a significant impact on the social and business models of virtual environments [33]. Behavioral intention, as well as the perceived need for risk mitigation, depends in part on the presence, extent, and urgency of the danger or risk [34]. Risk and behavioral intentions are often negatively correlated [34]. In a virtual environment, privacy and immature technology have been found to be factors leading to the decline of user loyalty [35,36]. Therefore, this paper will explore behavioral intentions from the three aspects of how technology, risk, and enjoyment (hedonic) can affect consumers’ loyalty to Chatbots and can reflect consumers’ behavioral intentions.

2.4. Technology Acceptance

2.4.1. Authenticity of Conversation and Behavioral Intention

Situational awareness enables software applications to adapt to the environment to better meet the needs of users [37]. Whether it is a human or a Chatbot, authenticity comes from the honesty and sincerity with which they express themselves [38]. Authenticity is a word from Latin authenticus and the Greek word authentikos, which means trustworthy and authoritative [39]. Authenticity can be applied to both a person’s quality and to a consumer’s trust in a product or service. The difference between truth and falsity is reflected in consumers’ awareness and demand for authenticity [40]. For example, whether a user can obtain useful information from a Chatbot is a critical factor for consumers in choosing the product. The result is consumers’ behavioral intention [41].

For virtual products such as Chatbots, anthropomorphic effects, that is, attributing humanoid characteristics to non-human entities, are also considered [42]. The authenticity of the conversation is still based on the level of technology that people experience when they talk to a Chatbot. We assume that if the Chatbot has a good simulation effect, it will provide objective and accurate information for people in conversation and will more closely resemble typical human communication. Interaction with users can ultimately improve the Chatbot, which will develop increased authenticity, increased user acceptance, and even increased user loyalty to the application. Authenticity is an essential factor in the user acceptance of Chatbots [43].

2.4.2. Convenience and Behavioral Intention

The convenience of use can be considered from two aspects of needs, namely, physical needs and cognitive needs [44]. For example, when shopping, physical needs mean that people can shop with a Chatbot anywhere without a location requirement. In contrast, cognitive needs mean that people can choose whatever they want to buy with a Chatbot without any kind of limitation. In particular, since the COVID-19 pandemic, online shopping has taken on a new importance, and the role of personalized interactions has become an essential factor in online shopping experiences [45]. This convenience can increase user loyalty by reducing shopping time. When users perceive a high probability of inconvenience, their behavioral intention will be negatively affected, especially during shopping [46]. In addition, Chatbots are favored in many fields because of their convenience. For example, college students tend to accept Chatbots for education purposes [47]. It is assumed that the convenience of Chatbots stems, in part, from users’ lack of time; this constraint increases users’ dependency on Chatbots and their loyalty, thus influencing their behavioral intentions.

2.5. Hedonic

Hedonic includes three factors: enjoyment, passing time, and behavior intention. Users’ positive attitude toward the product will increase their bias toward the product [48]. In many studies, users’ attitude is considered an important factor affecting their behavior and product demand [49]. Hedonism is one of the important factors in measuring a user’s attitude [50]. In a virtual environment, people may feel uneasy because of the unfamiliar, unique, and strange environment, which is the uniqueness of the virtual environment [51]. This uniqueness may reduce the user’s demand for the product in a virtual environment, meaning that users may develop negative attitudes, where hedonism plays a crucial role. In a computer environment, higher levels of mental enjoyment and pleasure help people develop positive attitudes [52]. Perceived enjoyment is a psychological result and an antecedent of consumer attitudes [9]. In addition, Brandtzaeg and Følstad [53] claim that Chatbots are a great way to spend time as a form of entertainment. The virtual world is one way that many people choose to spend at least part of their free time.

2.6. Risk

2.6.1. Privacy Concerns and Behavior Intention

Traditional mechanisms for delivering notifications and enabling choices fail to protect user privacy. Users are increasingly frustrated with complex privacy policies, inaccessible privacy settings, and numerous emerging standards for maintaining online security [54]. The existing research finds that humanoid Chatbots often suffer from information leakage, and their anthropomorphic perception-mediated recommendation compliance and lower privacy concerns have become focuses of attention [42]. People did not report a decrease in privacy concerns when using a Chatbot, although it increased the perception of social presence [55]. As Chatbots have become widely used in various fields, their use in the financial industry has intensified people’s distrust. When it comes to sharing financially sensitive information and using Chatbots for financial support, people’s trust in them is very low [55]. In addition, Baudart [56] noted that Chatbots may feed users virus-carrying content, which can raise privacy concerns; be adversarial or malicious; and cause significant financial, reputational, or legal damage to Chatbot providers. Therefore, information leakage, virus spread, and other issues must be taken into consideration when using Chatbots.

2.6.2. Immature Technology and Behavior Intention

With regard to the impact of using Chatbots, the poor quality of the Chatbot’s feedback can seriously affect the journey and make consumers dissatisfied [57]. Ironically, the combination of “world-changing technology” touted by the media and vendors pushed Chatbot use to the level of inflated expectations when, in fact, the technology is still immature [58,59]. The technology’s reputation for practicality and practical impact remains questionable [58,59]. However, the immaturity of the technology will also be an opportunity for the future development of Chatbots [34]. The immaturity of Chatbot technology is reflected in its inaccuracy in text recognition, inability to recognize voice input, and inability to provide accurate answers [60].

3. Hypothesis and Conceptual Framework Development

The existing literature in technology acceptance reveals the importance of authenticity as a factor that is likely to affect consumer behavior. Therefore, the following is put forth for testing.

Hypothesis 1 (H1).

The authenticity of conversations and behavioral intentions have a positive relationship.

The literature on the relationship between convenience and behavioral intention is abundant and consistent. Convenience has a positive relationship with behavioral intention. Likewise, Chatbots have been found to provide convenience. Therefore, the following is put forth for testing.

Hypothesis 2 (H2).

The convenience of using a Chatbot has a positive effect on users’ behavioral intentions.

The positive relationship between enjoyment, or pleasure, and consumer behavior is well established in the prior research, as is the relationship between passing time online and consumer behavior. Therefore, within the context of Chatbots’ use in e-commerce and based on the above arguments, the following hypotheses are put forth for testing.

Hypothesis 3 (H3).

Enjoyment perception is positively correlated with consumer behavioral intention.

Hypothesis 4 (H4).

Chatbots help pass the time and will have a positive impact on behavioral intention.

The risks of sharing information online are well-known and reported in the existing literature, as are users’ concerns and aversion to use, especially in the financial services industry. Therefore, the following hypothesis is put forth for testing.

Hypothesis 5 (H5).

When using Chatbots, users’ concerns regarding privacy have a negative relationship with their behavioral intentions.

As discussed in the review of existing literature, a consensus among users regarding the practicality of Chatbots at the current level of development is lacking. Therefore, based on the above review, the following hypothesis is put forth for testing.

Hypothesis 6 (H6).

The immaturity of Chatbots has negative impacts on users’ behavioral intentions.

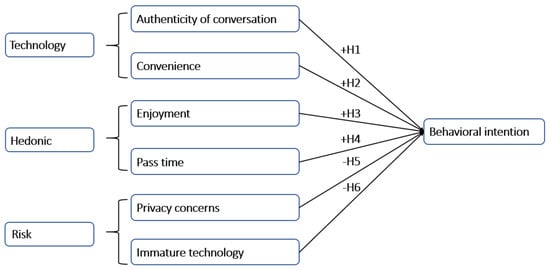

In summary, this study will test whether Technology (authenticity of conversation and convenience of using Chatbots), Hedonism (enjoyment and passing time), and Risk (privacy concerns and immature technology) have impacts on the public’s behavioral intentions. Specifically, the hypotheses are that Technology and Hedonism increase the likelihood that people will use Chatbots and that Risk is one of the factors that leads to negative public perceptions. Therefore, it is necessary to determine the relationship between these six independent variables and the dependent variable, behavioral intention, by using the U&G model to analyze the public’s acceptance of Chatbots in China. This study uses the behavioral intention of users to explore loyalty to Chatbots and, thus, acceptance, resulting in the conceptual model and six hypotheses presented in Figure 1.

Figure 1.

Conceptual framework.

4. Methodology

To measure Chinese people’s acceptance of Chatbots, this study employed quantitative research methodology to analyze data collected by an anonymous online survey. Items in the survey used for this study were adapted from previous studies and used with minor modifications for context. Based on the U&G model, the three dimensions of Technology, Hedonic, and Risk were included, and each dimension was addressed from two directions. These are the following: authenticity and convenience for Technology, enjoyment and passing time for Hedonic, and privacy concerns and immature technology for Risk. Each of the six criteria is addressed by three separate questions. The questions were adapted from the prior study “Chatbots in Retailers’ Customer Communication: How to measure their acceptance?” [30].

The questions have been tested and validated and can be used confidently. These six criteria were used to measure Chinese users’ attitudes toward Chatbots as the independent variables and users’ behavioral intentions were used as dependent variables. Linear regression is used to determine whether there is a direct relationship between them. In the survey, participants reported their attitudes on a Likert scale, ranging from “1” (strongly disagree) to “7” (strongly agree).
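The scoring and regression steps described above can be illustrated with a minimal sketch. It assumes that each construct score is the mean of its three Likert items, which the survey description does not state explicitly, and all response values are hypothetical. The function names `construct_score` and `simple_ols` are illustrative only.

```python
from statistics import mean


def construct_score(items):
    """Average one respondent's Likert items (1-7) for a single construct.

    Assumption: items are aggregated by their mean.
    """
    if any(not 1 <= value <= 7 for value in items):
        raise ValueError("Likert responses must lie between 1 and 7")
    return mean(items)


def simple_ols(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical respondents: (convenience items, behavioral-intention items).
responses = [
    ((6, 7, 6), (6, 6, 7)),
    ((3, 2, 3), (3, 4, 3)),
    ((5, 5, 4), (5, 4, 5)),
    ((7, 6, 7), (6, 7, 7)),
    ((2, 3, 2), (2, 3, 3)),
]

convenience = [construct_score(c) for c, _ in responses]
intention = [construct_score(b) for _, b in responses]

intercept, slope = simple_ols(convenience, intention)
print(f"intercept={intercept:.3f}, slope={slope:.3f}")
```

A positive slope in such a model corresponds to the positive relationship expected for the Technology and Hedonic criteria, and a negative slope to the relationship expected for the Risk criteria.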

Survey Instrument and Data Collection

An online survey as a tool for data collection offers several benefits, including broad geographic coverage, anonymity for respondents, decreased bias compared to in-person interviews, and, finally, cost saving [61]. Neutral and easily understood language was used to facilitate the participants’ comprehension of the material and ensure the accuracy of the translation.

The survey’s instrument was adapted from surveys previously used and validated in related research.

To measure the constructs of the U&G model, scales already available in the literature were used and adapted to the context of Chatbots. The constructs “perceived enjoyment” and “privacy concerns” were adapted from the scales previously used by Rauschnabel et al. [62]. The scales for “pass time” were adapted from those previously used by Papacharissi and Rubin [63]. The scales to measure gratification by “convenience” were adapted from those developed by Childers et al. [64] and Ko et al. [65]. The scales to measure gratification by “authenticity” were adapted from Rese et al. [30], as were those used to measure “immature technology”. The constructs “perceived usefulness” and “perceived ease of use” were adapted from those used by Venkatesh and Davis [66]. For the measurement of “acceptance”, the scales were adapted from Moon and Kim [67] and Venkatesh et al. [66].

The survey items were initially written in English, so the survey was translated from English to Chinese. To ensure accuracy, the translation was checked by two native Mandarin speakers with PhDs from Western universities and subsequently translated back into English. This translation and back-translation approach is a widely applied technique in cross-cultural social research for achieving translation accuracy and credibility with minimized differences [68]. The translated survey was distributed to a pilot group of 12 people for trial and adjusted based on their feedback to assure the clarity and accuracy of the translation and the ease of comprehension.

The WeChat social media platform is widely used in China for online surveys, customer feedback, and for posting product and service reviews. Due to the significant market penetration of smartphone use, and the ubiquitous use of the WeChat application, nearly all smartphone users are familiar with the app and were therefore qualified for inclusion in the survey.

The QR code to access the survey was shared through the social media platform WeChat, China’s most widely used social network application. The survey was distributed via the software application Wen Juan Xing where the data were compiled and downloaded. As is typical in reposting behavior on social media in China [69], a snowball sampling effect was observed.

The first part of the survey gathered data on demographic characteristics. The second part contains questions regarding people’s attitudes toward Chatbots from three dimensions and six directions.

A total of 540 completed surveys were obtained through convenience sampling. The surveys were completed over a 21-day period, and participation in the survey was voluntary and anonymous. After inspection, all survey responses were determined to be valid.

5. Analysis and Results

5.1. Sampling

The demographic breakdown of the respondents is shown in Table 1. Of the 540 respondents, 42.2% were male and 53.3% were female, a broadly similar split. The largest age group was respondents between 18 and 25 years old (189 people, or 35% of the sample), followed by those aged 26–35 (147 people, or 27.2% of the sample). Among the respondents, 212 (39.3%) reported being familiar with Chatbots, while 19.3% reported a high level of familiarity with Chatbots.

Table 1.

Demographics of the sample.

5.2. Reliability Analysis

As indicated in Table 2, all questions measuring the perceived authenticity factor were loaded separately. The overall reliability coefficient of the data is 0.693, and no individual factor falls below 0.7, which indicates that the data are reliable and that further analysis is justified.

Table 2.

Reliability Analysis.

Technology: Divided into convenience and the authenticity of conversation, all questions measuring these factors were loaded separately. The reliability of these factors was found to be adequate, as the reliability coefficients were 0.832 and 0.855, respectively.

Hedonic: Divided into enjoyment and passing time, all questions measuring these factors were loaded separately. The reliability of these factors was found to be adequate, as the reliability coefficients were 0.810 and 0.895, respectively.

Risk: Divided into privacy concerns and immature technology, all questions measuring the perceived risk factors were loaded separately. The reliability of these factors was found to be adequate, as the reliability coefficients were 0.866 and 0.840, respectively.

Behavior intention: All questions measuring the behavior intention factor were loaded separately. The reliability of this factor was found to be adequate, as the reliability coefficient was 0.856.
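The reliability coefficients reported in this subsection can be illustrated with a short sketch. It assumes the coefficients are Cronbach's alpha, a common choice for multi-item Likert scales that the text does not name explicitly. The function name `cronbach_alpha` and the response data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Compute Cronbach's alpha for one construct.

    rows: one list of k item scores per respondent.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("at least two items are required")
    if any(len(row) != k for row in rows):
        raise ValueError("every respondent must answer all items")
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers to the three items of one construct (7-point scale).
answers = [
    [6, 7, 6],
    [3, 2, 3],
    [5, 5, 4],
    [7, 6, 7],
    [2, 3, 2],
]
print(f"alpha={cronbach_alpha(answers):.3f}")
```

Under this assumption, values closer to 1 indicate that the three items of a construct vary together, and 0.7 is the conventional threshold that the subsection refers to.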

5.3. Correlation Analysis

5.3.1. Authenticity of Conversation and Behavioral Intention

As shown in the model summary in Table 3, the R square value is 0.227, which implies that 22.7% of the variation in behavioral intention is explained by Technology (authenticity of conversation).

Table 3.

Regression Analysis—Technology (Authenticity of Conversation).

The p-value is less than 0.01. The standardized coefficient beta value is 0.460, which shows that an increase of one standard deviation in Technology (authenticity of conversation) is associated with an increase of 0.460 standard deviations in behavioral intention. This shows that the relationship is positive. So, Technology (authenticity of conversation) has a significant positive relationship with behavioral intention. Thus, Hypothesis 1 is accepted.
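The interpretation of a standardized coefficient and of R square can be illustrated with a brief sketch. It assumes a regression with a single predictor, in which case the standardized beta equals the Pearson correlation and R square equals its square. Models with additional predictors or controls do not satisfy this identity. The function names and data are hypothetical.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def standardized_fit(x, y):
    """Return (beta, r_squared) for a one-predictor linear regression.

    With one predictor, the standardized beta is the Pearson correlation,
    and R squared is the share of variance in y explained by x.
    """
    beta = pearson_r(x, y)
    return beta, beta ** 2


# Hypothetical construct scores on a 7-point scale.
authenticity = [2.0, 3.5, 4.0, 5.5, 6.0]
intention = [2.5, 3.0, 4.5, 5.0, 6.5]

beta, r_squared = standardized_fit(authenticity, intention)
print(f"beta={beta:.3f}, R^2={r_squared:.3f}")
```

Because both variables are expressed in standard deviation units, beta is bounded between -1 and 1, and its sign gives the direction of the relationship.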

5.3.2. Convenience and Behavioral Intention

As shown in Table 4, the p-value is less than 0.01. The model’s summary table shows that the R square value is 0.191, which implies that 19.1% of the variation in behavioral intention is explained by Technology (convenience).

Table 4.

Technology (Convenience).

The standardized coefficient beta value is 0.431, which shows that an increase of one standard deviation in Technology (convenience) is associated with an increase of 0.431 standard deviations in behavioral intention. This shows that the relationship is positive. So, convenience has a significant positive relationship with behavioral intention. Thus, Hypothesis 2 is accepted.

5.3.3. Correlation of Enjoyment and Behavioral Intention

As shown in Table 5, the p-value is less than 0.01. The model summary table shows that the R square value is 0.206, which implies that a 20.6% variation in behavior intention is explained by the Hedonic (enjoyment) dimension.

Table 5.

Regression Analysis—Hedonic (Enjoyment).

The standardized coefficient beta value is 0.453, which shows that an increase of one standard deviation in the Hedonic (enjoyment) dimension is associated with an increase of 0.453 standard deviations in behavioral intention. This shows that the relationship is positive. So, the Hedonic (enjoyment) dimension has a significant positive relationship with behavioral intention. Thus, Hypothesis 3 is accepted.

5.3.4. Correlation of Passing Time and Behavioral Intention

As shown in Table 6, the regression analysis for Hedonic (passing time) shows a p-value of less than 0.01. The model summary table shows that the R square value is 0.258, which implies that a 25.8% variation in behavior intention is explained by the Hedonic (pass time) dimension.

Table 6.

Hedonic (Passing Time).

The standardized coefficient beta value is 0.485, which shows that an increase of one standard deviation in the Hedonic (passing time) dimension is associated with an increase of 0.485 standard deviations in behavioral intention. This shows that the relationship is positive. So, the Hedonic (passing time) dimension has a significant positive relationship with behavioral intention. Thus, Hypothesis 4 is accepted.

5.3.5. Correlation of Privacy Concerns and Behavioral Intention

As shown in Table 7, the regression analysis for Risk (privacy concerns) shows a p-value of less than 0.01. The model summary table shows that the R square value is 0.159, which implies that 15.9% of the variation in behavioral intention is explained by Risk (privacy concerns).

Table 7.

Regression Analysis—Risk (Privacy Concerns).

The standardized coefficient beta value is −0.404, which shows that a one-standard-deviation increase in Risk (privacy concerns) is associated with a decrease of 0.404 standard deviations in behavioral intention. The relationship is therefore negative and significant. Thus, Hypothesis 5 is accepted.

5.3.6. Correlation of Immature Technology and Behavioral Intention

As shown in Table 8, the regression analysis for Risk (immature technology) shows a p-value of less than 0.01. The model summary table shows that the R square value is 0.205, which implies that 20.5% of the variation in behavioral intention is explained by Risk (immature technology).

Table 8.

Regression Analysis—Risk (Immature Technology).

The standardized coefficient beta value is −0.473, which shows that a one-standard-deviation increase in Risk (immature technology) is associated with a decrease of 0.473 standard deviations in behavioral intention. The relationship is therefore negative and significant. Thus, Hypothesis 6 is accepted.
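As a compact recap of Sections 5.3.2 to 5.3.6, the sketch below collects the coefficients reported in the text and checks each sign against the direction of the corresponding hypothesis. The beta and R square values are copied from the text above. The class name `RegressionResult`, the helper `is_supported`, and the `expected_sign` field are hypothetical, and the hypothesized directions are inferred from the wording of the results rather than quoted from the hypotheses themselves.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegressionResult:
    hypothesis: str
    predictor: str
    beta: float          # standardized coefficient as reported in the text
    r_squared: float     # R square as reported in the text
    expected_sign: int   # assumed hypothesized direction: +1 or -1


# Values transcribed from the text accompanying Tables 4 to 8.
REPORTED = [
    RegressionResult("H2", "Technology (convenience)", 0.431, 0.191, +1),
    RegressionResult("H3", "Hedonic (enjoyment)", 0.453, 0.206, +1),
    RegressionResult("H4", "Hedonic (passing time)", 0.485, 0.258, +1),
    RegressionResult("H5", "Risk (privacy concerns)", -0.404, 0.159, -1),
    RegressionResult("H6", "Risk (immature technology)", -0.473, 0.205, -1),
]


def is_supported(result, significant=True):
    """A hypothesis counts as supported if the effect is significant and has the expected sign."""
    return significant and result.beta * result.expected_sign > 0


for r in REPORTED:
    # Every regression above is reported with p < 0.01, so `significant` keeps its default.
    print(f"{r.hypothesis}  {r.predictor:<28} beta={r.beta:+.3f}  "
          f"R2={r.r_squared:.3f}  supported={is_supported(r)}")
```

Laid out this way, the passing-time dimension has the largest reported R square and privacy concerns the smallest.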

6. Discussion

The aim of the study was to measure the acceptance of Chatbots in China and to examine what factors contribute to positive or negative attitudes towards them. Based on insights into Chatbots from previous market research surveys, the U&G model was used, and confirmatory tests were carried out, taking into account the three aspects of Technology, Hedonistic, and Risk. Via regression analysis, the relationships between convenience, authenticity of conversation, enjoyment and passing time, privacy concerns, immaturity of technology, and behavioral intention were examined in order to determine consumers’ acceptance of Chatbots.

Previous research that applies the U&G theory to Chatbots has focused on various sectors, including the financial services industry [18], the education sector [70,71], and healthcare [72,73]. Among the existing literature on Chatbots in e-commerce, none applies the U&G theoretical constructs presented in this study, namely Hedonistic, Risk, and Technology. Although the U&G theory has been applied in related contexts, including online information services [28,74] and online video entertainment content [75,76], the potential to gain insights into consumer perceptions of AI in the Chinese e-commerce market, as seen through the lens of the U&G theory, has not previously been examined.

The findings indicate that both Technology (convenience and authenticity of conversation) and Hedonism (enjoyment and passing time) have positive effects on users’ behavioral intention. This may be because technology has greatly improved the quality of people’s lives, and for Chinese users who rely heavily on smartphones, these two factors may be important in their acceptance of Chatbots. However, risk factors, including immature technology and privacy and security concerns, are found to have negative impacts on consumers’ behavioral intentions. Even though AI has reached an unprecedented level of development and incorporates strong layers of security, problems such as information leakage and data theft still emerge regularly, which makes consumers concerned about potential privacy and security issues. In addition, users are still concerned that Chatbots cannot accurately recognize speech or communicate effectively with humans, which also reduces their acceptance of Chatbots.

These findings contribute to the body of knowledge related to the U&G theory by building on prior work and extending the methodology to Chatbot acceptance in China. A further contribution is made to research on Chatbot acceptance in e-commerce and, more broadly, on user acceptance of AI, a relatively recent area of exploration of increasing relevance in which consensus is emerging but has not yet solidified. As such, this study expands the U&G theory by empirically validating and confirming the relationships between users’ satisfaction and Chatbot acceptance. The contribution of this article includes the application of an extended interpretation of the U&G theory to a relatively new area (e-commerce) and a very distinct set of circumstances (e-commerce in China). From a broader perspective, user acceptance of AI is of growing relevance, and these findings indicate that, despite some research to the contrary, end users, at least in China, are not yet willing to accept the risks associated with AI in online financial transactions. This suggests that further work on AI, and on Chatbots in particular, is needed before their potential benefits can be realized.

These findings have practical implications as well. China is the largest e-commerce market and has a very high penetration of smartphone use, so the potential for Chatbots is enormous. Overall, these findings are good news for the Chinese Chatbot product market, as they demonstrate a contextually limited acceptance of, and a positive attitude toward, Chatbot use in e-commerce. Developers can also use these findings to further explore improvements in the areas of immature technology and privacy and security in order to increase acceptance. As a practical matter, marketers can better promote products based on users’ preferences for Chatbots. The convenience of Chatbots, for example, could be a significant breakthrough for marketers. These findings shed light on possible directions for the future development of Chatbots and highlight existing issues.

7. Limitations and Future Research

There are some limitations to this study. When data were collected, the 18–35-year-old demographic was highly represented in the sample investigated, and age groups were not controlled for. In addition, the sample’s population was segmented into different groups by gender, age, and familiarity with Chatbots. Other factors not controlled for were background factors such as respondents’ income and education, which could partly determine their perception of Chatbot technology. In future studies, more attention could be paid to the attitudes of people of different occupations and incomes toward Chatbots. For example, Chatbots might be helpful for students in school, while people in the financial services industry might use them for different purposes. Chatbots are therefore used in different ways in different scenarios, which may lead to different attitudes. The impact of COVID-19 on the industry is also worth considering in future studies. Since COVID-19 first emerged, the pandemic has had an enormous impact on the economy and the development of various industries. It is an open question whether Chatbots will make people’s lives easier and whether people’s attitudes towards them will change during the pandemic. Finally, collecting data through online surveys also limits the results. More comprehensive findings may be obtained if data are collected via interviews.

Author Contributions

Formal analysis, R.K.M. and Y.Z.; Funding acquisition, Y.Z.; Investigation, Y.Z. and H.Z.; Methodology, R.K.M.; Project administration, R.K.M.; Validation, H.Z.; Writing—original draft, H.Z.; Writing—review & editing, R.K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

For data that were collected anonymously, pose no risk, received no funding, and were gathered previously, no IRB statement is required by university policy.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Yanyong, T. Action Value: An Introduction to Action Accounting. Rev. Integr. Bus. Econ. Res. 2021, 10, 62–75.

2. Bhattacharya, S.; Kumar, K. Computational Intelligence and Decision Making: A Multidisciplinary Review. Rev. Integr. Bus. Econ. Res. 2012, 1, 316–336.

3. Panwai, S. Artificial Neural Network Stock Price Prediction Model under the Influence of Big Data. Rev. Integr. Bus. Econ. Res. 2021, 10, 33–59.

4. Ikhasari, A.; Faturohman, T. Risk Management of Start-up Company. Rev. Integr. Bus. Econ. Res. 2021, 10, 237–248.

5. Yuphin, P.; Ruanchoengchum, P. Reducing the Waste in the Manufacturing of Sprockets Using Smart Value Stream Mapping to Prepare for the 4.0 Industrial Era. Rev. Integr. Bus. Econ. Res. 2020, 9, 158–174.

6. Adamopoulou, E.; Moussiades, L. Chatbots: History, technology, and applications. Mach. Learn. Appl. 2020, 2, 100006.

7. Adam, M.; Wessel, M.; Benlian, A. AI-based chatbots in customer service and their effects on user compliance. Electron. Mark. 2021, 31, 427–445.

8. Shawar, B.A.; Atwell, E. Chatbots: Are they really useful? LDV Forum 2007, 22, 29–49.

9. Hussain, S.; Ameri Sianaki, O.; Ababneh, N. A Survey on Conversational Agents/Chatbots Classification and Design Techniques. In Proceedings of the Web, Artificial Intelligence and Network Applications, Matsue, Japan, 27–29 March 2019; Barolli, L., Takizawa, M., Xhafa, F., Enokido, T., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 946–956.

10. Pereira, M.J.; Coheur, L.; Fialho, P.; Ribeiro, R. Chatbots’ Greetings to Human-Computer Communication. arXiv 2016, arXiv:1609.06479.

11. Huang, W.; Hew, K.F.; Fryer, L.K. Chatbots for language learning—Are they really useful? A systematic review of chatbot-supported language learning. J. Comput. Assist. Learn. 2022, 38, 237–257.

12. Johannsen, F.; Schaller, D.; Klus, M.F. Value propositions of chatbots to support innovation management processes. Inf. Syst. E-Bus. Manag. 2020, 19, 205–246.

13. Akhtar, M.; Neidhardt, J.; Werthner, H. The Potential of Chatbots: Analysis of Chatbot Conversations. In Proceedings of the 2019 IEEE 21st Conference on Business Informatics (CBI), Moscow, Russia, 15–17 July 2019; Volume 1, pp. 397–404.

14. Li, L.; Lee, K.Y.; Emokpae, E.; Yang, S.-B. What makes you continuously use chatbot services? Evidence from Chinese online travel agencies. Electron. Mark. 2021, 31, 575–599.

15. Cohen, T. How to leverage artificial intelligence to meet your diversity goals. Strateg. HR Rev. 2019, 18, 62–65.

16. Adams, D.A.; Nelson, R.R.; Todd, P.A. Perceived Usefulness, Ease of Use, and Usage of Information Technology: A Replication. MIS Q. 1992, 16, 227–247.

17. Wang, Y.; Zhang, N.; Zhao, X. Understanding the Determinants in the Different Government AI Adoption Stages: Evidence of Local Government Chatbots in China. Soc. Sci. Comput. Rev. 2020, 40, 534–554.

18. Alt, M.-A.; Ibolya, V. Identifying Relevant Segments of Potential Banking Chatbot Users Based on Technology Adoption Behavior. Mark.-Tržište 2021, 33, 165–183.

19. Min, F.; Fang, Z.; He, Y.; Xuan, J. Research on Users’ Trust of Chatbots Driven by AI: An Empirical Analysis Based on System Factors and User Characteristics. In Proceedings of the 2021 IEEE International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 15–17 January 2021; pp. 55–58.

20. Pillai, R.; Sivathanu, B. Adoption of AI-based chatbots for hospitality and tourism. Int. J. Contemp. Hosp. Manag. 2020, 32, 3199–3226.

21. Klopfenstein, L.C.; Delpriori, S.; Malatini, S.; Bogliolo, A. The Rise of Bots: A Survey of Conversational Interfaces, Patterns, and Paradigms. In Proceedings of the 2017 Conference on Designing Interactive Systems; Association for Computing Machinery, New York, NY, USA, 10 June 2017; pp. 555–565.

22. Ahmad, N.A.; Che, M.H.; Zainal, A.; Abd Rauf, M.F.; Adnan, Z. Review of Chatbots Design Techniques. Int. J. Comput. Appl. 2018, 181, 7–10.

23. Radziwill, N.M.; Benton, M.C. Evaluating Quality of Chatbots and Intelligent Conversational Agents. arXiv 2017, arXiv:1704.04579.

24. Tubachi, P.S.; Tubachi, B.S. Application of chatbot technology in LIS. In Proceedings of the Third International Conference on Current Trends in Engineering Science and Technology, Bangalore, India, 5–7 January 2017; pp. 1135–1138.

25. Hu, Q.; Lou, T.; Li, J.; Zuo, W.; Chen, X.; Ma, L. New Practice of E-Commerce Platform: Evidence from Two Trade-In Programs. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 875–892.

26. Ruggiero, T.E. Uses and Gratifications Theory in the 21st Century. Mass Commun. Soc. 2000, 3, 3–37.

27. Katz, E.; Blumler, J.G.; Gurevitch, M. Uses and Gratifications Research. Public Opin. Q. 1973, 37, 509–523.

28. Luo, M.M.; Remus, W.; Chea, S. Technology Acceptance of Internet-based Information Services: An Integrated Model of TAM and U&G Theory. AMCIS 2006, 153.

29. Kaur, P.; Dhir, A.; Chen, S.; Malibari, A.; Almotairi, M. Why do people purchase virtual goods? A uses and gratification (U&G) theory perspective. Telemat. Inform. 2020, 53, 101376.

30. Rese, A.; Ganster, L.; Baier, D. Chatbots in retailers’ customer communication: How to measure their acceptance? J. Retail. Consum. Serv. 2020, 56, 102176.

31. Miniard, P.W.; Cohen, J.B. An examination of the Fishbein-Ajzen behavioral-intentions model’s concepts and measures. J. Exp. Soc. Psychol. 1981, 17, 309–339.

32. Lin, H.-F. Understanding Behavioral Intention to Participate in Virtual Communities. Cyber Psychol. Behav. 2006, 9, 540–547.

33. Animesh, A.; Pinsonneault, A.; Yang, S.-B.; Oh, W. An Odyssey into Virtual Worlds: Exploring the Impacts of Technological and Spatial Environments on Intention to Purchase Virtual Products. MIS Q. 2011, 35, 789–810.

34. Lo, A.Y. Negative income effect on perception of long-term environmental risk. Ecol. Econ. 2014, 107, 51–58.

35. Anic, I.-D.; Škare, V.; Milaković, I.K. The determinants and effects of online privacy concerns in the context of e-commerce. Electron. Commer. Res. Appl. 2019, 36, 100868.

36. Barth, S.; de Jong, M.D.; Junger, M.; Hartel, P.H.; Roppelt, J.C. Putting the privacy paradox to the test: Online privacy and security behaviors among users with technical knowledge, privacy awareness, and financial resources. Telemat. Inform. 2019, 41, 55–69.

37. Lei, H. Context Awareness: A Practitioner’s Perspective. In Proceedings of the International Workshop on Ubiquitous Data Management, Tokyo, Japan, 4 April 2005; pp. 43–52.

38. Albrecht, K. Social Intelligence: The New Science of Success; John Wiley & Sons: Hoboken, NJ, USA, 2006; ISBN 978-0-7879-7938-6.

39. Cappannelli, G.; Cappannelli, S.C. Authenticity: Simple Strategies for Greater Meaning and Purpose at Work and at Home; Clerisy Press: Covington, KY, USA, 2004; ISBN 978-1-57860-148-6.

40. Gilmore, J.H.; Pine, B.J. Authenticity: What Consumers Really Want; Harvard Business Press: Boston, MA, USA, 2007; ISBN 978-1-59139-145-6.

41. Zogaj, A.; Mähner, P.M.; Tscheulin, D.K. Similarity between Human Beings and Chatbots—The Effect of Self-Congruence on Consumer Satisfaction while considering the Mediating Role of Authenticity. In Künstliche Intelligenz im Dienstleistungsmanagement: Band 2: Einsatzfelder-Akzeptanz-Kundeninteraktionen; Bruhn, M., Hadwich, K., Eds.; Springer Fachmedien: Wiesbaden, Germany, 2021; pp. 427–443. ISBN 978-3-658-34326-2.

42. Ischen, C.; Araujo, T.; Voorveld, H.; van Noort, G.; Smit, E. Privacy Concerns in Chatbot Interactions. In Proceedings of the Chatbot Research and Design, Virtual, 23–24 November 2020; Følstad, A., Araujo, T., Papadopoulos, S., Law, E.L.-C., Granmo, O.-C., Luger, E., Brandtzaeg, P.B., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 34–48.

43. Blut, M.; Wang, C.; Wünderlich, N.V.; Brock, C. Understanding anthropomorphism in service provision: A meta-analysis of physical robots, chatbots, and other AI. J. Acad. Mark. Sci. 2021, 49, 632–658.

44. Kang, A.S.; Jayaraman, K.; Soh, K.-L.; Wong, W.P. Convenience, flexible service, and commute impedance as the predictors of drivers’ intention to switch and behavioral readiness to use public transport. Transp. Res. Part F Traffic Psychol. Behav. 2019, 62, 505–519.

45. Gomes, S.; Lopes, J.M. Evolution of the Online Grocery Shopping Experience during the COVID-19 Pandemic: Empiric Study from Portugal. J. Theor. Appl. Electron. Commer. Res. 2022, 17, 909–923.

46. Moshrefjavadi, M.H.; Dolatabadi, H.R.; Nourbakhsh, M.; Poursaeedi, A.; Asadollahi, A. An Analysis of Factors Affecting on Online Shopping Behavior of Consumers. Int. J. Mark. Stud. 2012, 4, 81.

47. Malik, R.; Shrama, A.; Trivedi, S.; Mishra, R. Adoption of Chatbots for Learning among University Students: Role of Perceived Convenience and Enhanced Performance. Int. J. Emerg. Technol. Learn. 2021, 16, 200–212.

48. Ajzen, I. From Intentions to Actions: A Theory of Planned Behavior. In Action Control: From Cognition to Behavior; Kuhl, J., Beckmann, J., Eds.; SSSP Springer Series in Social Psychology; Springer: Berlin/Heidelberg, Germany, 1985; pp. 11–39. ISBN 978-3-642-69746-3.

49. Hassanein, K.; Head, M.; Ju, C. A cross-cultural comparison of the impact of Social Presence on website trust, usefulness and enjoyment. IJEB 2009, 7, 625–641.

50. Batra, R.; Ahtola, O.T. Measuring the hedonic and utilitarian sources of consumer attitudes. Mark. Lett. 1991, 2, 159–170.

51. Ha, S.; Stoel, L. Consumer e-shopping acceptance: Antecedents in a technology acceptance model. J. Bus. Res. 2009, 62, 565–571.

52. Kim, H.; Lee, U.K. Effects of Collaborative Online Shopping on Shopping Experience through Social and Relational Perspectives. Available online: https://www.sciencedirect.com/science/article/pii/S0378720613000128 (accessed on 19 December 2021).

53. Brandtzaeg, P.B.; Følstad, A. Chatbots: Changing user needs and motivations. Interactions 2018, 25, 38–43.

54. Harkous, H.; Fawaz, K.; Shin, K.G.; Aberer, K. PriBots: Conversational Privacy with Chatbots; USENIX: Berkeley, CA, USA, 2016.

55. Ng, M.; Coopamootoo, K.P.L.; Toreini, E.; Aitken, M.; Elliot, K.; van Moorsel, A. Simulating the Effects of Social Presence on Trust, Privacy Concerns & Usage Intentions in Automated Bots for Finance. In Proceedings of the 2020 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Genoa, Italy, 7–11 September 2020; pp. 190–199.

56. Baudart, G.; Dolby, J.; Duesterwald, E.; Hirzel, M.; Shinnar, A. Protecting chatbots from toxic content. In Proceedings of the 2018 ACM SIGPLAN International Symposium on New Ideas, New Paradigms, and Reflections on Programming and Software, New York, NY, USA, 7–8 November 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 99–110.

57. Nichifor, E.; Trifan, A.; Nechifor, E.M. Artificial intelligence in electronic commerce: Basic chatbots and the consumer journey. Amfiteatru Econ. 2021, 23, 87–101.

58. Zhu, Y.; Zhang, J.; Wu, J.; Liu, Y. AI is better when I’m sure: The influence of certainty of needs on consumers’ acceptance of AI chatbots. J. Bus. Res. 2022, 150, 642–652.

59. Lin, X.; Shao, B.; Wang, X. Employees’ perceptions of chatbots in B2B marketing: Affordances vs. disaffordances. Ind. Mark. Manag. 2022, 101, 45–56.

60. Han, X.; Zhou, M.; Turner, M.J.; Yeh, T. Designing effective interview chatbots: Automatic chatbot profiling and design suggestion generation for chatbot debugging. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–15.

61. Gosling, S.D.; Vazire, S.; Srivastava, S.; John, O.P. Should we trust web-based studies? A comparative analysis of six preconceptions about internet questionnaires. Am. Psychol. 2004, 59, 93.

62. Rauschnabel, P.A.; Rossmann, A.; tom Dieck, M.C. An adoption framework for mobile augmented reality games: The case of Pokémon Go. Comput. Hum. Behav. 2017, 76, 276–286.

63. Papacharissi, Z.; Rubin, A.M. Predictors of Internet Use. J. Broadcast. Electron. Media 2000, 44, 175–196.

64. Childers, T.L.; Carr, C.L.; Peck, J.; Carson, S. Hedonic and utilitarian motivations for online retail shopping behavior. J. Retail. 2001, 77, 511–535.

65. Ko, H.; Cho, C.-H.; Roberts, M.S. Internet Uses and Gratifications: A Structural Equation Model of Interactive Advertising. J. Advert. 2005, 34, 57–70.

66. Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

67. Moon, J.-W.; Kim, Y.-G. Extending the TAM for a World-Wide-Web context. Inf. Manag. 2001, 38, 217–230.

68. Brislin, R.W. Back-translation for cross-cultural research. J. Cross-Cult. Psychol. 1970, 1, 185–216.

69. Lin, Y.; Marjerison, R.K.; Kennedyd, S. Reposting Inclination of Chinese Millennials on Social Media: Consideration of Gender, Motivation, Content and Form. J. Int. Bus. Cult. Stud. 2019, 12, 20.

70. Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862.

71. Winkler, R.; Söllner, M. Unleashing the potential of chatbots in education: A state-of-the-art analysis. In Proceedings of the Academy of Management Annual Meeting (AOM), Chicago, IL, USA, 8–12 August 2018.

72. Bates, M. Health care chatbots are here to help. IEEE Pulse 2019, 10, 12–14.

73. Bhirud, N.; Tataale, S.; Randive, S.; Nahar, S. A literature review on chatbots in healthcare domain. Int. J. Sci. Technol. Res. 2019, 8, 225–231.

74. Luo, M.M.; Chea, S.; Chen, J.-S. Web-based information service adoption: A comparison of the motivational model and the uses and gratifications theory. Decis. Support Syst. 2011, 51, 21–30.

75. Nanda, A.P.; Banerjee, R. Binge watching: An exploration of the role of technology. Psychol. Mark. 2020, 37, 1212–1230.

76. Steiner, E.; Xu, K. Binge-watching motivates change: Uses and gratifications of streaming video viewers challenge traditional TV research. Convergence 2020, 26, 82–101.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).