Abstract

Incorporating substantial sustainable development issues into teaching and learning is the ultimate task of Education for Sustainable Development (ESD). The purpose of our study was to identify confused students who had failed to master the skill(s) assigned by tutors as homework in an Intelligent Tutoring System (ITS). We focused on ASSISTments, an ITS, and examined its skill-builder data using machine learning techniques. We used seven candidate models: Naïve Bayes (NB), Generalized Linear Model (GLM), Logistic Regression (LR), Deep Learning (DL), Decision Tree (DT), Random Forest (RF), and Gradient Boosted Trees (XGBoost). We trained, validated, and tested the learning algorithms, performed stratified cross-validation, and measured model performance with several metrics, i.e., ROC (Receiver Operating Characteristic), Accuracy, Precision, Recall, F-Measure, Sensitivity, and Specificity. We found that RF, GLM, XGBoost, and DL achieved the highest accuracy. However, other approaches, such as identifying unexplored features that might be related to the predicted outputs, could further improve the accuracy of the prediction model. Through machine learning methods, we identified the group of students who were confused when attempting the homework exercise, so that they can be helped to foster their knowledge and talent and play a vital role in environmental development.

1. Introduction

Intelligent tutoring systems (ITSs) and Massive Open Online Courses (MOOCs) have similar educational approaches. However, they differ in many aspects. For instance, an ITS provides instant feedback and scaffolded practice in solving pedagogical problems. While learning through web-based interfaces, students can take hints and watch videos on related topics. They also receive guidance on how to practice concepts and arrive at the right answer. A MOOC, on the other hand, provides much more interactive learning through a learning management system (LMS), in which various forms of instructive video lectures, moderated discussion boards (MDBs), and forums are available for learning with peer feedback [1].

The most effective ITSs enhance students’ learning and have proven commercially viable. However, the availability of intelligent tutors remains limited, partly because of the substantial cost of content construction [2,3].

Researchers have developed numerous ITSs for various students, for instance: the Intelligent Tutoring Tools (ITTs) project of Byzantium [4], AutoTutor, Atlas and Why2 [5], Andes [6], and ASSISTments [7].

In this study, our main focus is on ASSISTments, as we collected data from its Skill-builder 2009–2010 dataset [8].

ASSISTments (ITS)

ASSISTments (https://www.assistments.org) is an ITS that provides a free web-based platform that helps both school students and teachers assess learning [9]. Experimentation within this system showed that students who received technology-assisted feedback had higher scores than those who did not receive hints or scaffolding.

ASSISTments comprises mathematics-related problems with hints, immediate feedback, and answers. These problems are grouped into sets that teachers allocate to their students [10].

ASSISTments came into being when its authors were coaching middle school pupils, particularly in math. The objective was to follow students who had basic knowledge and recognized what they wanted to do. In previous years, students practiced each skill by hand, without computers. ASSISTments allows teachers to monitor comprehension and track the skill(s) that the students have grasped [7].

It is an environment produced by the Worcester Polytechnic Institute, USA, specially designed for middle schools. More than 50,000 students are registered and use ASSISTments for their homework to master the skill(s) before their class on the following day. This is possible due to the instant feedback that ASSISTments provides for each problem associated with skill(s) from various subjects, including Mathematics, Science, English, and Statistics. Teachers have two options for the students’ assignments: 1. Teachers compile questions with remedies, hints, and problem-related videos to master the skill(s) chosen by the student for a particular subject; 2. Teachers use built-in problem sets and broadcast the homework to students.

In the word ASSISTments, “assist” is associated with the teachers while “assessment” is related to the students. Moreover, when a student chooses the wrong answer to a given problem, the prompt feedback nudges the student to rectify it by taking a hint or trying another option. This allows teachers to log accurate results instantly, and later they can use this evidence to develop strategies for the upcoming lesson [7].

Two types of educational content are available in ASSISTments. The first is related to mathematics textbook homework, or the problems that teachers write for their students; the second is designed explicitly for skill-learning practice and mastery and is called “skill builders.” In ASSISTments, the current skill-builder data consist of more than 300 topics related to middle school mathematics. The purpose of a skill-builder is to master the skill by practicing the problems assigned by the teacher under defined standards or principles [9].

Skill-builder ensures that the student has become proficient in the topic or skill before moving on to other tasks. This is the best type of content in ASSISTments for testing and acknowledging what students learn. Each student must also answer three questions correctly in a row to demonstrate preliminary ability in the chosen topic area [7].

Many peer-reviewed journals publish articles in the context of prediction. ASSISTments has been widely used for data mining exploration [11], for employing Bayesian networks [12], and as a platform for building classifiers of students’ demonstrative state [13].

The ultimate goal of our research is to identify and predict the confusion of students while they solve their mathematics homework using mastery skill-builder learning, after they attempt the teacher-defined criterion (e.g., answering three consecutive similar math problems correctly). Even with instant feedback from the ITS, students can become confused. In terms of education for sustainable development, we identified a gap: every student wishes to nurture their talent, knowledge, and experience to become a responsible member and citizen of society during their ongoing development. The ultimate goal of sustainable development is to eliminate common issues and frustrations, which can only be achieved when people, including students and teachers, find remedies to resolve these issues by taking instant feedback, hints, and help from technology and humans. Confusion among students while using an ITS is a major hindrance to maintaining sustainable development, leading to an inability to meet the needs of the present.

Wang et al. [14] discourage manual classification because classifications generated by the human eye are imperfect and irreproducible. Thus, we used computerized classification, as our data were diverse. Using machine learning classifiers allowed us to separate students who were confused from those who were not. Machine learning algorithms work on statistical principles; for this objective we used the statistical programming language R and RapidMiner 8.1 to analyze and predict the confused students in an ITS skill-builder session and to report the results.

To accomplish this task, we considered the following research question in order to bridge the gap.

- Which machine learning algorithms are the best fit to classify mastery skill learning confusion among students using skill-builder in an intelligent tutoring system, for the chosen skills?

The remainder of the paper is structured as follows: In Section 2, we present a short overview of related work and research on the subject; in Section 3, we define the methods used and the proposed predictive methods; in Section 4, we interpret the results and discuss prediction performance; in Section 5, we leave the reader with concluding thoughts, shortcomings, and future recommendations.

2. Related Works

ITSs are computer-based programs that have been developed for different subject areas (e.g., algebra, physics, science, medicine, statistics, etc.) to help students and learners obtain domain-specific knowledge. They also model the mental and cognitive states of students and learners to provide adapted instruction through instant and prompt feedback. Such a system provides an interface that presents and receives information to communicate with learners. For instance, when learning the concepts of a subject domain (e.g., algebra), the learner can interact through the interface to solve problems while looking for hints or answering questions [15].

ITSs differentiate learning for students of varying abilities, understanding, and performance. Variations in learning style are taken into account to differentiate learning. Learning style can be predicted from independent behavior variables captured during tutoring discussions. Fuzzy trees have been induced to predict the learning style of individuals. The resulting classification is learned automatically from a collection of data [16].

Material for the mathematics course in ASSISTments comprises problems with solutions and timely hints. Furthermore, substantial assistance is readily available over the Internet for the problems that students solve online. Another type of material is designed specifically for mastery-focused skills training, named “Skill-builders”, as discussed above. At the moment, ASSISTments covers more than 300 topics related to middle school mathematics, and teachers can allocate skill-builders to pupils, allowing them to rehearse those problems while emphasizing the desired skill(s) until they reach the pre-defined standards for accuracy [9].

Nonetheless, very little research has examined the importance of ITS used for homework [17]. Many studies corroborate the significance of ITS used in class by students at school [18]. Hence, it was very encouraging that ANDES, an ITS used in this manner, reported favorable outcomes [19].

At present, ASSISTments is being used by large numbers of middle and high school students for their evening homework. The instant advice regarding homework allows students to feel comfortable and enables tutors to monitor reports specifying students’ achievements [17]. For evening homework, multilayered tutoring systems have so far not proven suitable; technology-supported instruction that poses the same questions with a fast response about the problem is more appropriate [20].

According to Singh et al., homework on web-based tools using the tutoring system is more authentic and robust for learning and mastering skill(s) than the traditional paper-based style. Their research compared the instantaneous responses from the tutoring system against the feedback students received from the tutor on the next working day, which is time-consuming and reduces learning overall. They further showed that 8th-grade math students who took part in both scenarios improved significantly, with an effect size of 0.40, when using technology-assisted homework [18].

As described by Fyfe, ITS and technology-assisted homework have gained popularity and become pervasive in schools around the globe [21]. According to Ma et al., personalized education and timely advice are the solid foundation of intelligent tutoring systems [15]. The objective of the study by Fyfe was to provide an experimental assessment, in a middle school algebra class, of how technology-based responses affected students with varying prior knowledge [21].

Generally, many studies support the notion that timely responses from ITSs usually have beneficial effects on learning outcomes compared with no response [20,21]. Lee et al., Baker et al., and Gupta and Rose all showed that confusion, both its causes and consequences, can be recognized through student performance and actions [22,23,24].

Confusion causes students to stop, reflect, and begin problem-solving to resolve their own confusion. To cope with confusion, students need in-depth knowledge of complicated matters, as resolving confusion is an intellectual activity [25,26]. On the other hand, if a healthy learning environment offers an adequate platform and timely assistance to students, and they regulate their confusion efficiently, they can achieve positive outcomes [25,26,27,28].

Moreover, many scholars have used different methods, such as “classification or knowledge engineering”, to detect affective changes in students, particularly confusion [29]. Likewise, Conati and MacLaren built a detector based on logged data and survey question groupings to predict self-reported student affect; this model was better at recognizing attentive and inquisitive students but ineffective at classifying confused students [30].

Baker et al. conducted notable research focused on educational software, e.g., ITSs, to automatically identify confusion through affect detection; they collected this information from the semantic actions of students and labeled the existing Pittsburgh Science of Learning Center (PSLC) DataShop log files. In this research, they defined confusion as a slower pattern of student actions while attempting the pre-defined teacher criterion before the start of a mastery skill-builder assignment or homework. The authors focused on this preliminary step and observed the percentage of clip actions [26]. Table 1 summarizes the illustrative methods used in previous research in the domain of ITS.

Table 1.

Summary of previous research.

3. Methods

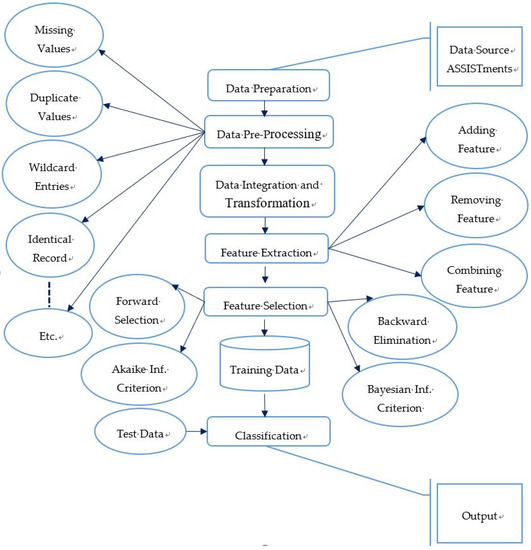

This section describes the workflow from raw data to classification via machine learning methods. Figure 1 depicts this workflow:

Figure 1.

A pictorial view of raw data for workflow classification.

3.1. Preparation of Data

In this study, we used the dataset (in the Supplementary Materials) collected from ASSISTments, Skill-builder data 2009–2010 [8].

Skill-builder problem sets have the following features:

- Questions are based on one specific skill; a question can have multiple skill tags.

- Students must answer three questions correctly in a row to complete the assignment.

- If a student uses the tutoring (“Hint” or “Break this Problem into Steps”), the question will be marked incorrect.

- Students will know immediately if they answered the question correctly.

- If a student is unable to figure out the problem on his or her own, the last hint will give the answer.

- Currently, this feature is only available for math problem sets.

The dataset contains various features relating to mastery skill-builder learning: almost 72 participating schools, 93 mastery skills in algebra mathematics, and about 28 features. We targeted school ID 73 because it had the most records, i.e., 5148 records with 20 attributes; four attributes initially had various missing values across the other school IDs. We selected the 10 mastery skills that the largest number of students had chosen, i.e., Absolute Value, Addition and Subtraction of Positive Decimals, Box and Whisker, Circle Graph, Multiplication Fractions, Ordering Fractions, Percent Of, Subtraction of Whole Numbers, Venn Diagram, and Write Linear Equation from Graph. After removing duplicate values, 166 distinct student IDs remained.

3.1.1. Measurements and Covariates

We selected the predictors (original, attempt_count, ms_first_response, correct, hint_total, overlap_time, and opportunity) from the list of features available in the dataset; their basic descriptions follow. As performance indicators for the machine learning algorithms, we measured ROC, accuracy, precision, recall, F-measure, sensitivity, and specificity.

- Original: ‘0’ denotes a scaffolding problem; any other value denotes a main problem.

- Attempt_count: Number of times a student entered an answer.

- Ms_first_response: Time between the start time and first student action.

- Correct: ‘0’ denotes incorrect on the first attempt; any other value denotes correct.

- Hint_total: Number of possible hints on the problem.

- Overlap_time: The time taken by the student to finish the problem.

- Opportunity: The number of opportunities each student has to practice on the skill.

3.1.2. Discretization of Predicted Variable

After selecting the predictors, we were interested in learning which features indicate whether a student is confused or not confused. To determine this, we used a feature extraction technique to construct the predicted variable. We combined three variables, i.e., attempt_count, correct, and overlap_time, under the conditions listed below to form a new feature called “student state”, and on that basis we categorized students as confused (‘1’) or not confused (‘0’).

- If (attempt_count) > Total mean (attempt_count)

- and (correct) < Total mean (correct)

- and (overlap_time) > Total mean (overlap_time) then (Confuse) otherwise (Not Confuse)
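The labelling rule above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' R or RapidMiner implementation: the function name `label_student_state`, the dictionary layout, and the toy rows are assumptions made here, and the thresholds are taken to be the means over all records passed in.

```python
from statistics import mean

def label_student_state(records):
    """Return 1 (confused) or 0 (not confused) for each record.

    Thresholds are the means over all supplied records, following the
    three-condition rule listed above (assumed reading of "Total mean").
    """
    mean_attempts = mean(r["attempt_count"] for r in records)
    mean_correct = mean(r["correct"] for r in records)
    mean_overlap = mean(r["overlap_time"] for r in records)

    labels = []
    for r in records:
        confused = (
            r["attempt_count"] > mean_attempts
            and r["correct"] < mean_correct
            and r["overlap_time"] > mean_overlap
        )
        labels.append(1 if confused else 0)
    return labels

# Toy rows invented for illustration; they are not ASSISTments data.
toy_records = [
    {"attempt_count": 1, "correct": 1, "overlap_time": 10000},
    {"attempt_count": 4, "correct": 0, "overlap_time": 60000},
    {"attempt_count": 1, "correct": 1, "overlap_time": 12000},
    {"attempt_count": 2, "correct": 0, "overlap_time": 8000},
]
toy_labels = label_student_state(toy_records)
```

Only the second toy row exceeds the mean attempt count and mean time while falling below the mean correctness, so it is the only one labelled as confused.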

3.1.3. Experimental Manipulations or Interventions

We divided our dataset into training (80%) and test (20%) sets and applied the 10-fold cross-validation technique, using stratified sampling because our response variable was dichotomous.
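Stratified sampling keeps the share of confused and not-confused students roughly equal in every partition. The sketch below shows one way this could be done with the Python standard library; the helper names `stratified_split` and `stratified_folds`, the seed, and the toy labels are assumptions for illustration and do not reproduce the RapidMiner operators used in the study.

```python
import random
from collections import defaultdict

def _indices_by_class(labels):
    """Group row indices by their class label."""
    by_class = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    return by_class

def stratified_split(labels, test_fraction=0.2, seed=0):
    """Return (train_idx, test_idx) with similar class proportions in both."""
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for indices in _indices_by_class(labels).values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)

def stratified_folds(labels, k=10, seed=0):
    """Deal the indices of each class round-robin into k folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for indices in _indices_by_class(labels).values():
        rng.shuffle(indices)
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds

# Toy labels: 20 confused (1) and 80 not confused (0), invented for illustration.
toy_labels = [1] * 20 + [0] * 80
train_idx, test_idx = stratified_split(toy_labels)
folds = stratified_folds(toy_labels, k=10)
```

With these toy labels, the test set and every fold keep the same 20% share of confused cases as the full data.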

3.1.4. Statistical Analysis

For statistical analysis, we used the statistical programming language R (https://cran.r-project.org/) with RStudio (https://www.rstudio.com/) to perform basic descriptive and regression analysis.

We also checked the correlation between the explanatory and response variables and identified which variables were significant and contributed information to the model, and which did not.

3.2. Pre-Processing of Data

Data extracted from databases, log files, or Microsoft Excel files require cleaning. Although our data were in good shape, cleaning them before moving ahead was an essential part of pre-processing. Data can be noisy, missing, or uneven. Machine learning algorithms perform pre-processing to some extent, but they are more robust if this step is carried out manually.

3.3. Integration and Transformation of Data

For better statistical analysis and classification, data must be integrated and transformed. To illustrate this, Table 2 shows five of the ten mastery skills as a snapshot; each skill has four attributes (stated above in Section 3.1, Preparation of Data) for each student.

Table 2.

Mastery skills and corresponding attributes.

3.4. Feature Extraction

Feature extraction is a procedure for creating new attributes from existing features. Figure 1 shows a snapshot of the feature extraction step. It is considered an essential step for classification, as the performance of the learning process depends on significant explanatory variables. In many real-world cases, we extract features cumulatively, alter them if needed, and combine them to produce one variable. The same procedure can be adopted for the selection of response variables. In this study, we selected ten mastery skills, took the associated explanatory variables, and combined them to form 40 explanatory variables for each student (four attributes for each of the ten mastery skills).
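Combining per-skill attributes into one row per student amounts to a long-to-wide pivot. The following sketch illustrates the idea under stated assumptions: the column name `user_id`, the choice of the four attributes, the `skill__attribute` naming scheme, and the toy rows are all hypothetical, since the text does not specify them.

```python
def to_wide(rows, skills, attributes):
    """Pivot one row per (student, skill) into one wide row per student.

    Each student ends up with len(skills) * len(attributes) variables,
    e.g. 10 skills x 4 attributes = 40 explanatory variables.
    """
    wide = {}
    for row in rows:
        # Create the student's record on first sight, with every slot empty.
        student = wide.setdefault(
            row["user_id"],
            {f"{skill}__{attr}": None for skill in skills for attr in attributes},
        )
        for attr in attributes:
            student[f"{row['skill']}__{attr}"] = row[attr]
    return wide

# Hypothetical attribute choice and toy rows, for illustration only.
skills = ["Absolute Value", "Venn Diagram"]
attributes = ["attempt_count", "correct", "hint_total", "overlap_time"]
rows = [
    {"user_id": 1, "skill": "Absolute Value", "attempt_count": 2,
     "correct": 0, "hint_total": 3, "overlap_time": 40000},
    {"user_id": 1, "skill": "Venn Diagram", "attempt_count": 1,
     "correct": 1, "hint_total": 2, "overlap_time": 9000},
    {"user_id": 2, "skill": "Absolute Value", "attempt_count": 1,
     "correct": 1, "hint_total": 3, "overlap_time": 7000},
]
wide = to_wide(rows, skills, attributes)
```

Skills that a student never attempted stay as `None`, which makes missing values explicit before any modelling step.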

3.5. Feature Selection

As shown in Figure 1 above, many criteria are available for feature selection, for instance: Backward Elimination, Forward Selection, AIC (Akaike Information Criterion), BIC (Bayesian Information Criterion), DIC (Deviance Information Criterion), Bayes factor, Mallows’s Cp, etc. We performed Backward Elimination using the adjusted R2 method with a cutoff p-value of 0.05 to construct our model because it is a common approach [32]. We started with the full model and eliminated one variable at a time until the parsimonious model was reached [33].
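As a rough sketch of the elimination loop described above, the code below drops the least significant variable until all remaining p-values are at or below the cutoff, and includes the standard adjusted R2 formula. The callable `p_values_for` is a hypothetical stand-in for refitting a regression model (for example in R); it is not part of any library, and the stub p-values are invented.

```python
def adjusted_r2(r2, n_samples, n_predictors):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1)."""
    return 1.0 - (1.0 - r2) * (n_samples - 1) / (n_samples - n_predictors - 1)

def backward_eliminate(variables, p_values_for, alpha=0.05):
    """Remove one variable at a time until every p-value is <= alpha.

    p_values_for(kept) must return a dict mapping each kept variable to its
    p-value in a model refitted on exactly those variables (hypothetical hook).
    """
    kept = list(variables)
    while kept:
        p_values = p_values_for(kept)
        worst = max(kept, key=lambda v: p_values[v])
        if p_values[worst] <= alpha:
            break  # all remaining variables are significant
        kept.remove(worst)
    return kept

# Stub with fixed, invented p-values; a real refit would change them each round.
stub_p = {"a": 0.01, "b": 0.30, "c": 0.04, "d": 0.08}
selected = backward_eliminate(stub_p.keys(), lambda kept: {v: stub_p[v] for v in kept})
example_adj_r2 = adjusted_r2(0.5, n_samples=11, n_predictors=2)
```

In this stub, `b` and then `d` are removed, leaving `a` and `c`. In practice the p-values would be recomputed after each removal, and the adjusted R2 of successive models could be compared to confirm that dropping a variable did not hurt the fit.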

3.6. Training of Model

Before predicting confusion amongst the students attempting algebra mastery skills homework in the ITS, we trained the machine learning algorithms to minimize the difference between actual and predicted values. For this purpose, we split the data in an 80%–20% ratio with stratified sampling, as our response variable was nominal.

3.7. Testing and Evaluation of the Model

Model evaluation is an essential part of implementing machine learning techniques. After a model is trained on known data, we evaluate it on unseen data to verify that it has learned well enough and classifies correctly. We used seven learning models in this study, described as follows:

Naïve Bayes (NB) is a fast and efficient probabilistic classifier with an extensive research record. It is frequently used because of its robustness, precision, and competence [34].

Generalized Linear Model (GLM) is an extension of traditional linear models; it is fitted to the data by maximum likelihood estimation (MLE). These models offer very fast, parallel computation and yield models with a small number of explanatory variables with non-zero coefficients [35,36].

Logistic Regression (LR) is a widely used statistical technique for classifying a binary output. It is the usual choice when predicting a nominal response variable [36,37].

Deep Learning (DL) uses a neural network that takes data as input and produces the outcome through many layers [38]. DL can tune and select the model by itself and also extracts features automatically, without human involvement, which saves considerable effort and time [39].

Decision Tree (DT) has a tree-like structure with internal and leaf nodes. It is built from training data, which consist of rows or records. Each record is formed by a set of features and an outcome label [40].

Random Forest (RF) combines multiple decision trees into a group. Each new instance to be classified is passed down the trees, and each tree states a classification [41]. RF generates many random trees on various subsets of a data set, and the resulting model is built on the voting of these trees. Because of this diversity, it is less prone to overtraining [36].

Gradient Boosted Trees (XGBoost) is related to the Gradient Boosting Machine (GBM), another boosting algorithm. It achieves good accuracy owing to its parallel computing capabilities and its efficient linear model solver. It also creates decision trees, which are individually understandable models [42].

We executed all the above-mentioned learning algorithms in RapidMiner 8.1 for our experimentation. RapidMiner has a collection of machine learning/data mining algorithms, and we evaluated the output primarily by accuracy. Furthermore, we tested these algorithms on the other performance metrics mentioned in Section 3.7.1.

3.7.1. Performance Metrics

In this study, we adopted the most common and widely used performance metrics, following [43,44], who used the area under the ROC curve (AUC) to measure the performance of prediction models.

- ROC Curve or AUC

The ROC curve shows the relationship between the true positive and false positive rates [45]. It spans several thresholds, each of which produces a 2 × 2 contingency table. We also used other performance metrics, i.e., accuracy, precision, recall, F-Measure, sensitivity, and specificity.
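All of the threshold-based metrics named above follow from the four cells of a 2 × 2 contingency table. The sketch below computes them with the usual textbook definitions; the function names and toy predictions are assumptions for illustration. Note that recall and sensitivity are the same quantity (the true positive rate).

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn

def classification_metrics(tp, fp, tn, fn):
    """Derive the standard metrics from the 2 x 2 contingency table."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0       # same as sensitivity
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f_measure = (
        2 * precision * recall / (precision + recall)
        if (precision + recall) else 0.0
    )
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "sensitivity": recall,
        "specificity": specificity,
        "f_measure": f_measure,
    }

# Toy labels invented for illustration: 1 = confused, 0 = not confused.
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0, 0, 0]
metrics = classification_metrics(*confusion_counts(y_true, y_pred))
```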

Detailed results for this present study are described in Section 4 (Results).

3.8. Classification

Plenty of machine learning/data mining tools are available. We used RapidMiner 8.1 for our investigation and testing. RapidMiner Studio is well equipped for data mining/machine learning tasks, with a state-of-the-art collection of machine learning algorithms along with data access, pre-processing, blending, cleansing, modeling, visualization, and validation operators, which provide an advanced platform for performing such tasks in an efficient and well-organized manner [46,47]. As we performed supervised learning, we used several linear and non-linear classifiers for classification, application, and verification.

3.9. Statistical Analysis and Parameters

To determine the significance of the explanatory variables for predicting students’ confusion in algebra mastery skills in the ITS, it was imperative to explore the predictors (explanatory variables) and their impact on the response variable statistically. Although machine learning algorithms intrinsically perform statistical tests and analysis of variables, it is good practice to check manually before applying any machine learning methods.

Table 3 lists the weights (ranks) of the attributes, which show the global importance of each attribute for the value of the target attribute, independent of the modeling algorithm.

Table 3.

Ranks of attributes.

We used the statistical programming language R with the standard significance cut-off (p-value 0.05). Table 4 displays the p-values of the correlation matrix with the response variable.

Table 4.

Correlation matrix with response variable.

In this statistical summary of correlation, we found nine predictor variables that were significantly related (p < 0.05) to the dichotomous response variable. Correlation measures the strength of the linear association between two numeric variables. Several types of correlation coefficient exist, e.g., Pearson, Kendall, and Spearman. We used Pearson’s correlation coefficient, as it is commonly used in linear regression. It is denoted by r (or R), and its value always lies in the range from −1 to +1, where +1 indicates a perfect positive correlation and −1 a perfect negative correlation.

After applying backward elimination, we arrived at the final model, which validates the significance of nine explanatory variables. Table 5 presents descriptive statistics; Table 6 shows the regression analysis, including the predictors’ coefficients, standard errors, p-values, etc.; and Table 7 gives the regression summary.
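For reference, Pearson's coefficient is the covariance of two variables divided by the product of their standard deviations. The sketch below is a plain standard-library version with invented toy values; it is not the R routine used in the study. With a 0/1 response variable, the same formula applies unchanged.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return cov / (spread_x * spread_y)

# Toy values invented for illustration.
attempts = [1, 2, 3, 4]
r_up = pearson_r(attempts, [2, 4, 6, 8])     # perfectly increasing relation
r_down = pearson_r(attempts, [8, 6, 4, 2])   # perfectly decreasing relation
r_binary = pearson_r(attempts, [0, 0, 1, 1]) # dichotomous response
```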

Table 5.

Descriptive statistics.

Table 6.

Regression analysis of predictors (explanatory variables).

Table 7.

Regression statistics summary.

Furthermore, Table 8 shows output from R indicating the maximum adjusted R2 value achievable with the given explanatory variables. These are the ten chosen skills with ASSISTments data attributes. The purpose of showing this table is to validate the adjusted R2 value in Table 7.

Table 8.

Maximum adjusted R2 representation.

4. Results

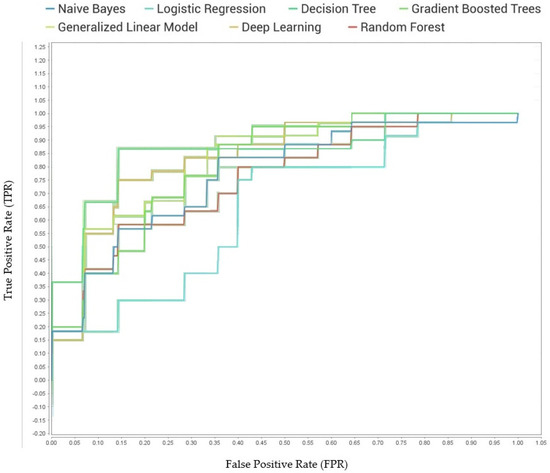

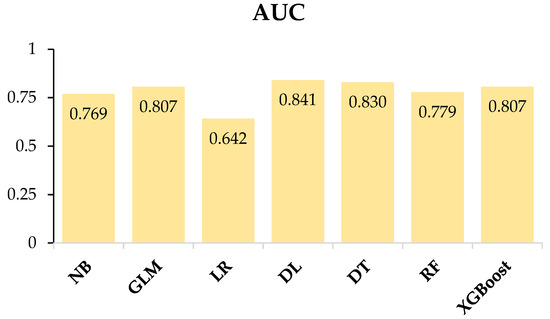

As discussed in Section 3 (Methods), ROC stands for Receiver Operating Characteristic and is also referred to as ROC AUC or simply the ROC curve. It shows the relationship between the true positive rate (TPR) and the false positive rate (FPR). It also captures the trade-off between sensitivity and specificity, as the two pull in opposite directions, i.e., when sensitivity rises, specificity declines. The closer the curve is to the top left corner, the more accurate the results; the closer it comes to the 45° diagonal, the less accurate they are. Moreover, a ROC AUC value >0.9 indicates excellent results; values between 0.8–0.9 are considered good, those between 0.7–0.8 fair, and <0.6 poor [48].

Figure 2 shows a graphical representation of ROC AUC, and Figure 3 depicts the AUC values for the seven machine learning algorithms, which predicted the confusion amongst the students attempting algebra mastery skills in the ITS. In Figure 3, the vertical axis shows percentage values between 0% and 100%.
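One way to compute the AUC without drawing the curve uses its rank interpretation: the AUC equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. The sketch below applies this definition directly; the function name and toy scores are assumptions for illustration, and the pairwise loop is meant for clarity rather than speed.

```python
def roc_auc(y_true, scores, positive=1):
    """Area under the ROC curve via pairwise comparison of scores."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("both classes must be present to compute AUC")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # ties count as half
    return wins / (len(pos) * len(neg))

# Toy scores invented for illustration: higher means "more likely confused".
y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.5, 0.1]
auc = roc_auc(y_true, scores)
```

A value of 0.5 corresponds to the diagonal (random guessing) and 1.0 to a curve that reaches the top left corner.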

Figure 2.

A symbolic view of Area under the curve (AUC) of seven selected machine learning algorithms.

Figure 3.

Graphical representation of ROC AUC of nominated machine learning models.

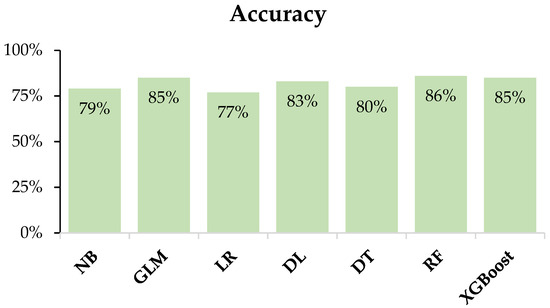

We constructed seven candidate models built on various machine learning methods. The performance achieved by each classifier is shown in Figure 4, which reports the accuracy of each model under a repeated sampling validation technique that randomly repeats the division into training and test data.

Figure 4.

Candidate models’ summary concerning the accuracy.

These results show the proportion of cases that were predicted correctly. We attained the highest accuracy with RF, GLM, XGBoost, and DL, i.e., 86.1%, 84.9%, 84.9%, and 83.1%, respectively. The other classifiers achieved DT: 79.5%, NB: 78.9%, and LR: 77.1%, as shown in Table 9.

Table 9.

Complete detail of learning algorithms along with runtime.

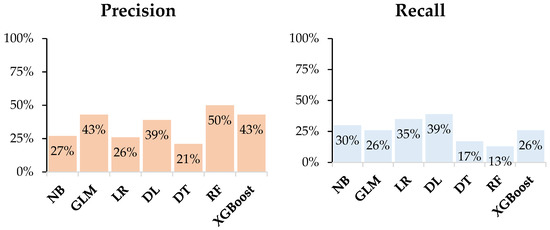

We also checked the other performance metrics discussed in Section 3. Figure 5 displays the performance of the seven machine learning algorithms regarding precision, recall, F-measure, sensitivity, and specificity.

Figure 5.

Performance of machine learning algorithms relating to performance measures.

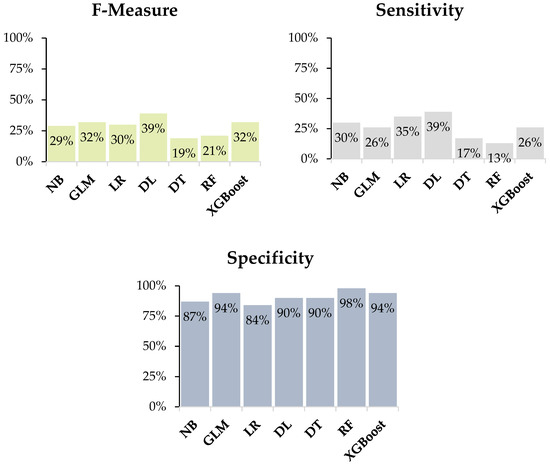

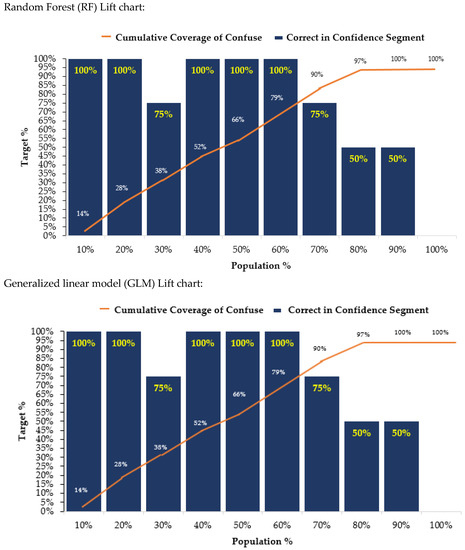

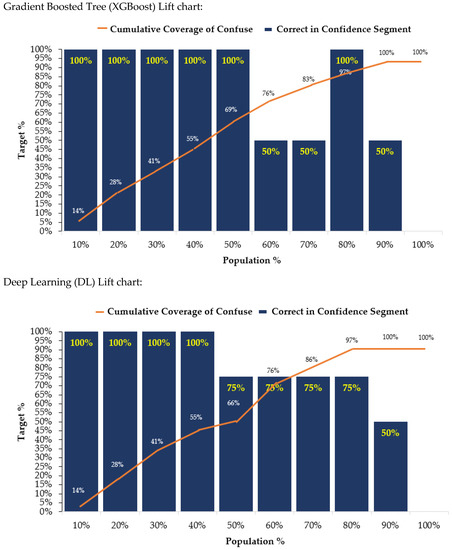

Figure 6 displays the lift charts of the high-accuracy machine learning models. A lift chart is a graphical illustration of the improvement that a model delivers compared with a random guess [49]. It shows the effectiveness of the model by measuring the ratio between the outcomes obtained “with and without a model” [36].
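The “with and without a model” ratio can be made concrete as follows: rank the cases by the model's score, take the top fraction, and divide the share of positives found there by the overall share of positives. This is a minimal sketch of that common definition; the function name, the 20% fraction, and the toy data are assumptions and need not match how RapidMiner builds its lift charts.

```python
def lift_at(y_true, scores, fraction=0.2, positive=1):
    """Lift in the top `fraction` of cases ranked by descending score."""
    base_rate = sum(1 for t in y_true if t == positive) / len(y_true)
    if base_rate == 0:
        raise ValueError("lift is undefined when there are no positive cases")
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    n_top = max(1, round(len(ranked) * fraction))
    top_rate = sum(1 for _, t in ranked[:n_top] if t == positive) / n_top
    return top_rate / base_rate

# Toy data invented for illustration: 2 confused students out of 10.
y_true = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.2, 0.3, 0.8, 0.1, 0.4, 0.35, 0.25, 0.15, 0.05]
top_lift = lift_at(y_true, scores, fraction=0.2)
```

Here the two highest-scored cases are both confused students, so the top 20% is five times richer in positives than a random selection; a lift of 1 would mean the model is no better than guessing.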

Figure 6.

High achieving accuracy models’ lift chart.

5. Discussion and Conclusions

This research investigated models for predicting confused students attempting homework using skill-builder in an ITS. Analyzing confusion is a classification task, and machine learning offers plenty of robust classification algorithms. In this study, we used machine learning methods for the experiment. Applying data mining techniques to ITS data is a tough task because multiple features are related to varying extents and the data contain much noise and many missing fields. We extracted explanatory variables (input features) and the target (output) response variable from the ITS. We then applied the machine learning models NB, GLM, LR, DL, DT, RF, and XGBoost. The results demonstrated that the RF, GLM, XGBoost, and DL models attained high accuracies of 86.1%, 84.9%, 84.9%, and 83.1%, respectively, in predicting students’ confusion in the algebra mastery skills in the ITS.

Such a result can assist school tutors in next-day classes by identifying the student groups that were confused when attempting the homework exercise in mastery skill-builder. This tool also highlights which skill(s) need more attention and further practice. Furthermore, tutors can monitor learning behaviors and student performance across various mastery skill(s), allowing them to focus only on problematic skill(s) in class the next day, which will save a lot of time and effort for both tutors and students.

Our study has several implications, both educational and practical. To the best of our knowledge, it is one of the few studies to predict confusion using machine learning methods in the context of sustainable educational development. ITS contributes to sustainable development in education, as such development focuses on the needs of the present without compromising future needs. The objective of sustainable development is to balance our environmental, economic, and social needs [50].

Sustainable development in education is an interdisciplinary learning approach that covers the combined environmental, social, and economic aspects of the formal and informal curriculum. This educational approach can help students develop their aptitudes, knowledge, and experience so that they can play a significant role in ecological development and become responsible members of society. Participatory teaching and learning methods are also required to motivate and empower learners to change their behavior and take corrective action for sustainable development. Critical thinking, envisioning the future, and decision making are among the skills that Education for Sustainable Development (ESD) promotes [51].

5.1. Shortcomings

One shortcoming of this study is that we used a limited number of variables; more attributes are available that could be used for further investigation and might yield statistically stronger results. Another shortcoming is that we did not apply rigorous optimization, such as changing the splitting criterion, pruning, or selecting a decision threshold for the machine learning models (algorithms), which could have produced better results.
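
To make the threshold-selection idea concrete, the sketch below scans candidate decision thresholds over predicted probabilities and keeps the one with the highest F-measure. It is only an illustration under assumptions: the function name `best_threshold`, the candidate grid, and the toy probabilities are hypothetical, and the choice of F-measure as the objective is ours rather than something evaluated in this study.

```python
def best_threshold(y_true, probs, candidates=None):
    """Return (threshold, f_measure) maximizing the F-measure over candidate thresholds."""
    if candidates is None:
        # Assumed grid: 0.05, 0.10, ..., 0.95.
        candidates = [i / 100 for i in range(5, 100, 5)]

    best_thr, best_f = None, -1.0
    for thr in candidates:
        # Predict "confused" (1) when the probability reaches the threshold.
        preds = [1 if p >= thr else 0 for p in probs]
        tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 1)
        fp = sum(1 for t, p in zip(y_true, preds) if t == 0 and p == 1)
        fn = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 0)
        denom = 2 * tp + fp + fn
        f_measure = 2 * tp / denom if denom else 0.0
        if f_measure > best_f:  # strict comparison keeps the lowest tied threshold
            best_thr, best_f = thr, f_measure
    return best_thr, best_f


# Hypothetical validation-set probabilities and labels (1 = confused).
probs = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
y_true = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
threshold, f_measure = best_threshold(y_true, probs)
print(f"selected threshold = {threshold:.2f}, F-measure = {f_measure:.3f}")
```

In practice, such a threshold should be chosen on validation data and then confirmed on a held-out test set, so that the reported performance is not inflated by the tuning itself.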

5.2. Future Recommendations

In future work, we will design and apply strategies to further improve our model. First, better-tuned parameters can be used to build a more accurate model. For instance, in DT we can change the criterion (i.e., gain_ratio, information_gain, gini_index, or accuracy), the maximal depth parameter, etc.; in LR we can set the criterion, the solver, the reproducible option, regularization, etc.; and in XGBoost we can alter the maximal depth, min rows, min split improvement, number of bins, etc. to optimize performance. Additionally, we will consider the Convolutional Neural Network (CNN) [52] in our experimentation, as this methodology has been reported to achieve low loss and high classification accuracy. Other approaches such as the Dynamic Bayesian Network (DBN), Gauss Cloud Model, and Cloud Reasoning Algorithm will also be considered to improve classification accuracy and precision [53,54,55].

Secondly, other kinds of classification methods and techniques can be evaluated. Although the machine learning techniques applied in this study are relatively comprehensive, there are still various unexplored methods that can be applied to prediction problems concerning students in intelligent tutoring systems. Thirdly, other structures and features in the data could be added to improve prediction accuracy. Furthermore, from the tutors’ perspective, we can identify the benefits of detecting confusion in a group of students solving mathematics homework using the skill-builder in ITS.

Supplementary Materials

The dataset is available online at http://www.mdpi.com/2071-1050/11/1/105/s1.

Author Contributions

Conceptualization, M.H. and Y.X.; methodology, M.H. and S.M.R.A.; software, S.M.R.A.; validation, M.H., Y.X., and S.M.R.A.; formal analysis, S.M.R.A.; resources, S.M.R.A.; writing—original draft preparation, S.M.R.A.; writing—review and editing, M.H.; visualization, S.M.R.A. and Y.X.; supervision, W.Z.; funding acquisition, W.Z.

Funding

This research was funded by “National Natural Science Foundation of China, grant number 91630206, and 61572434”, and “The National Key R&D Program of China, grant number 2017YFB0701501”.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Aleven, V.; Baker, R.; Blomberg, N.; Andres, J.M.; Sewall, J.; Wang, Y.; Popescu, O. Integrating MOOCs and Intelligent Tutoring Systems: EdX, GIFT, and CTAT. In Proceedings of the 5th Annual Generalized Intelligent Framework for Tutoring Users Symposium, Orlando, FL, USA, 11 May 2017; p. 11.

2. Koedinger, K.R.; Anderson, J.R.; Hadley, W.H.; Mark, M.A. Intelligent tutoring goes to school in the big city. Int. J. Artif. Intell. Educ. 1997, 8, 30–43.

3. Aleven, V.; Sewall, J.; McLaren, B.M.; Koedinger, K.R. Rapid Authoring of Intelligent Tutors for Real-World and Experimental Use. In Proceedings of the Sixth IEEE International Conference on Advanced Learning Technologies (ICALT’06), Kerkrade, The Netherlands, 5–7 July 2006; pp. 1–5.

4. Kinshuk. Computer Aided Learning for Entry Level Accountancy Students. 1996. British Library, Imaging Services North, Boston Spa, Wetherby, West Yorkshire, UK. Available online: https://core.ac.uk/download/pdf/77603309.pdf (accessed on 28 April 2018).

5. Freedman, R. Atlas: A plan manager for mixed-initiative, multimodal dialogue. In Proceedings of the AAAI-99 Workshop on Mixed-initiative Intelligence, Orlando, FL, USA, 18–19 July 1999; pp. 1–8.

6. Gertner, A.; Conati, C.; VanLehn, K. Procedural Help in Andes: Generating Hints Using a Bayesian Network Student Model; American Association for Artificial Intelligence (AAAI): Palo Alto, CA, USA, 1988; pp. 106–111.

7. Heffernan, N.T.; Heffernan, C.L. The ASSISTments ecosystem: Building a platform that brings scientists and teachers together for minimally invasive research on human learning and teaching. Int. J. Artif. Intell. Educ. 2014, 24, 470–497.

8. Heffernan, N. ASSISTmentsData. 2012. Available online: https://sites.google.com/site/assistmentsdata/home/assistment-2009-2010-data/skill-builder-data-2009-2010 (accessed on 2 February 2018).

9. Roschelle, J.; Feng, M.; Murphy, R.F.; Mason, C.A. Online Mathematics Homework Increases Student Achievement. AERA Open 2016, 2.

10. Roschelle, J.; Murphy, R.; Feng, M.; International, S.R.I.; Mason, C.; Fairman, J. Rigor and Relevance in an Efficacy Study of an Online Mathematics Homework Intervention. 2014. Available online: https://www.sri.com/newsroom/press-releases/rigorous-sri-study-shows-online-mathematics-homework-program-developed (accessed on 30 April 2018).

11. Feng, M.; Heffernan, N.; Koedinger, K. Addressing the assessment challenge with an online system that tutors as it assesses. User Model. User Adapt. Interact. 2009, 19, 243–266.

12. Pardos, Z.; Heffernan, N. Tutor Modeling vs. Student Modeling. In Proceedings of the Twenty-Fifth International Florida Artificial Intelligence Research Society Conference, Marco Island, FL, USA, 23–25 May 2012.

13. Pedro, M.O.Z.S.; Baker, R.S.J.D.; Gowda, S.M.; Heffernan, N.T. Towards an understanding of affect and knowledge from student interaction with an intelligent tutoring system. In Artificial Intelligence in Education; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7926, pp. 41–50.

14. Wang, S.; Lu, S.; Dong, Z.; Yang, J.; Yang, M.; Zhang, Y. Dual-Tree Complex Wavelet Transform and Twin Support Vector Machine for Pathological Brain Detection. Appl. Sci. 2016, 6, 169.

15. Ma, W.; Adesope, O.; Nesbit, J.C.; Liu, Q. Intelligent tutoring systems and learning outcomes: A meta-analysis. J. Educ. Psychol. 2014, 106, 901–918.

16. Crockett, K.; Latham, A.; Whitton, N. On predicting learning styles in conversational intelligent tutoring systems using fuzzy decision trees. Int. J. Hum. Comput. Stud. 2017, 97, 98–115.

17. Kelly, K.; Heffernan, N.; Heffernan, C.; Goldman, S.; Pellegrino, J.; Soffer-goldstein, D. WEB-BASED HOMEWORK. In Proceedings of the Joint Meeting of PME 38 and PME-NA 36, Vancouver, BC, Canada, 15–20 July 2014; Volume 3, pp. 417–424.

18. Singh, R.; Saleem, M.; Pradhan, P.; Heffernan, C.; Heffernan, N.T.; Razzaq, L.; Dailey, M.D.; O’Connor, C.; Mulcahy, C. Feedback during web-based homework: The role of hints. In Artificial Intelligence in Education; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6738, pp. 328–336.

19. Vanlehn, K.; Lynch, C.; Schulze, K. The Andes physics tutoring system: Lessons learned. Int. J. Artif. Intell. Educ. 2005, 15, 1–51.

20. Kelly, K.; Heffernan, N.; Heffernan, C.; Goldman, S.; Pellegrino, J.; Goldstein, D.S. Estimating the effect of web-based homework. In Artificial Intelligence in Education; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7926, pp. 824–827.

21. Fyfe, E.R. Providing feedback on computer-based algebra homework in middle-school classrooms. Comput. Hum. Behav. 2016, 63, 568–574.

22. Hattie, J.; Timperley, H. The Power of feedback. Rev. Educ. Res. 2007, 77, 81–112.

23. Alfieri, L.; Brooks, P.J.; Aldrich, N.J.; Tenenbaum, H.R. Does Discovery-Based Instruction Enhance Learning? J. Educ. Psychol. 2011, 103, 1–18.

24. Gupta, N.K.; Rose, C.P. Understanding Instructional Support Needs of Emerging Internet Users for Web-based Information Seeking. JEDM J. Educ. Data Min. 2010, 2, 38–82.

25. Lee, D.M.C.; Rodrigo, M.M.T.; Baker, R.S.J.D.; Sugay, J.O.; Coronel, A. Exploring the relationship between novice programmer confusion and achievement. In Affective Computing and Intelligent Interaction; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6974, pp. 175–184.

26. Baker, R.S.J.d.; Gowda, S.M.; Wixon, M.; Kalka, J.; Wagner, A.Z.; Salvi, A.; Aleven, V.; Kusbit, G.K.; Ocumpaugh, J.; Ocumpaugh, L. Towards Sensor-Free Affect Detection in Cognitive Tutor Algebra. In Proceedings of the 5th International Conference on Educational Data Mining, Chania, Greece, 19–21 June 2012; pp. 126–133.

27. Lehman, B.; D’Mello, S.; Graesser, A. Interventions to regulate confusion during learning. In Intelligent Tutoring Systems; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7315, pp. 576–578.

28. Pardos, Z.A.; Baker, R.S.J.D.; Pedro, M.O.C.Z.S.; Gowda, S.M.; Gowda, S.M. Affective States and State Tests: Investigating How Affect Throughout the School Year Predicts End of Year Learning Outcomes. In Proceedings of the Third International Conference on Learning Analytics and Knowledge (LAK’13), Leuven, Belgium, 8–12 April 2013; Volume 1, pp. 117–124.

29. Pekrun, R.; Goetz, T.; Titz, W.; Perry, R.P. Academic emotions in students’ self-regulated learning and achievement: A program of qualitative and quantitative research. Educ. Psychol. 2002, 37, 91–105.

30. Conati, C.; MacLaren, H. Empirically building and evaluating a probabilistic model of user affect. User Model. User Adapt. Interact. 2009, 19, 267–303.

31. D’Mello, S.; Lehman, B.; Pekrun, R.; Graesser, A. Confusion can be beneficial for learning. Learn. Instr. 2014, 29, 153–170.

32. Vu, D.H.; Muttaqi, K.M.; Agalgaonkar, A.P. A variance inflation factor and backward elimination based robust regression model for forecasting monthly electricity demand using climatic variables. Appl. Energy 2015, 140, 385–394.

33. Rundel, M.C. Linear Regression and Modeling. 2018. Available online: https://www.coursera.org/learn/linear-regression-model (accessed on 18 January 2018).

34. Smith, V.C.; Lange, A.; Huston, D.R. Predictive modeling to forecast student outcomes and drive effective interventions in online community college courses. J. Asynchronous Learn. Netw. 2012, 16, 51–61.

35. Ng, V.K.Y.; Cribbie, R.A. The gamma generalized linear model, log transformation, and the robust Yuen-Welch test for analyzing group means with skewed and heteroscedastic data. Commun. Stat. Simul. Comput. 2018, 1–18.

36. RapidMiner. RapidMiner Documentation. 2016. Available online: https://docs.rapidminer.com/latest/studio/operators/ (accessed on 29 April 2018).

37. Peng, C.; Lee, K.; Ingersoll, G.M. An introduction to logistic regression analysis and reporting. J. Educ. Res. 2002, 96, 3–14.

38. Li, W.; Gao, M.; Li, H.; Xiong, Q.; Wen, J.; Wu, Z. Dropout prediction in MOOCs using behavior features and multi-view semi-supervised learning. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 3130–3137.

39. Xing, W.; Du, D. Dropout Prediction in MOOCs: Using Deep Learning for Personalized Intervention. J. Educ. Comput. Res. 2018.

40. Kabra, R.R.; Bichkar, R.S. Performance prediction of engineering students using decision trees. Int. J. Comput. Appl. 2011, 36, 8–12.

41. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

42. Cobos, R.; Wilde, A.; Zaluska, E. Predicting attrition from massive open online courses in FutureLearn and edX. In Proceedings of the 7th International Learning Analytics and Knowledge Conference, Simon Fraser University, Vancouver, BC, Canada, 13–17 March 2017; Volume 1967, pp. 74–93.

43. Pontius, R.G.; Si, K. The total operating characteristic to measure diagnostic ability for multiple thresholds. Int. J. Geogr. Inf. Sci. 2014, 28, 570–583.

44. Wang, S.; Yang, M.; Zhang, Y.; Zhang, Y.-D. Detection of left-sided and right-sided hearing loss via fractional Fourier transform. Entropy 2016, 18, 194.

45. Hussain, M.; Zhu, W.; Zhang, W.; Abidi, S.M.R.; Ali, S. Using machine learning to predict student difficulties from learning session data. Artif. Intell. Rev. 2018, 1–27.

46. Mierswa, I.; Wurst, M.; Klinkenberg, R.; Scholz, M.; Euler, T. YALE: Rapid prototyping for complex data mining tasks. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Philadelphia, PA, USA, 20–23 August 2006; Volume 2006, pp. 935–940.

47. Godwin, K.E.; Almeda, M.V.; Petroccia, M.; Baker, R.S.; Fisher, A.V. Classroom activities and off-task behavior in elementary school children. In Proceedings of the 35th Annual Meeting of the Cognitive Science Society, Berlin, Germany, 31 July–3 August 2013; No. 2001. pp. 2428–2433.

48. Metz, C.E. Basic Principles of ROC Analysis. 2018. Available online: http://gim.unmc.edu/dxtests/ROC1.htm (accessed on 4 May 2018).

49. Microsoft. Lift Chart (Analysis Services—Data Mining). 2018. Available online: https://docs.microsoft.com/en-us/sql/analysis-services/data-mining/lift-chart-analysis-services-data-mining?view=sql-analysis-services-2017 (accessed on 7 May 2018).

50. Education for Sustainable Development|Higher Education Academy. Available online: https://www.heacademy.ac.uk/knowledge-hub/education-sustainable-development-0 (accessed on 16 November 2018).

51. The Brundtland Commission. Available online: https://www.sustainabledevelopment2015.org/AdvocacyToolkit/index.php/earth-summit-history/past-earth-summits/58-the-brundtland-commission (accessed on 13 November 2018).

52. Gao, H.; Cheng, B.; Wang, J.; Li, K.; Zhao, J.; Li, D. Object Classification Using CNN-Based Fusion of Vision and LIDAR in Autonomous Vehicle Environment. IEEE Trans. Ind. Inform. 2018, 14, 4224–4230.

53. Xie, G.; Gao, H.; Qian, L.; Huang, B.; Li, K.; Wang, J. Vehicle Trajectory Prediction by Integrating. Mech. Syst. Signal Process. 2018, 102, 1377–1380.

54. Li, D.; Gao, H. A Hardware Platform Framework for an Intelligent Vehicle Based on a Driving Brain. Engineering 2018, 4, 464–470.

55. Gao, H.; Zhang, X.; Liu, Y.; Li, D. Cloud Model Approach for Lateral Control of Intelligent Vehicle Systems. Sci. Program. 2016, 2016, 1–12.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).